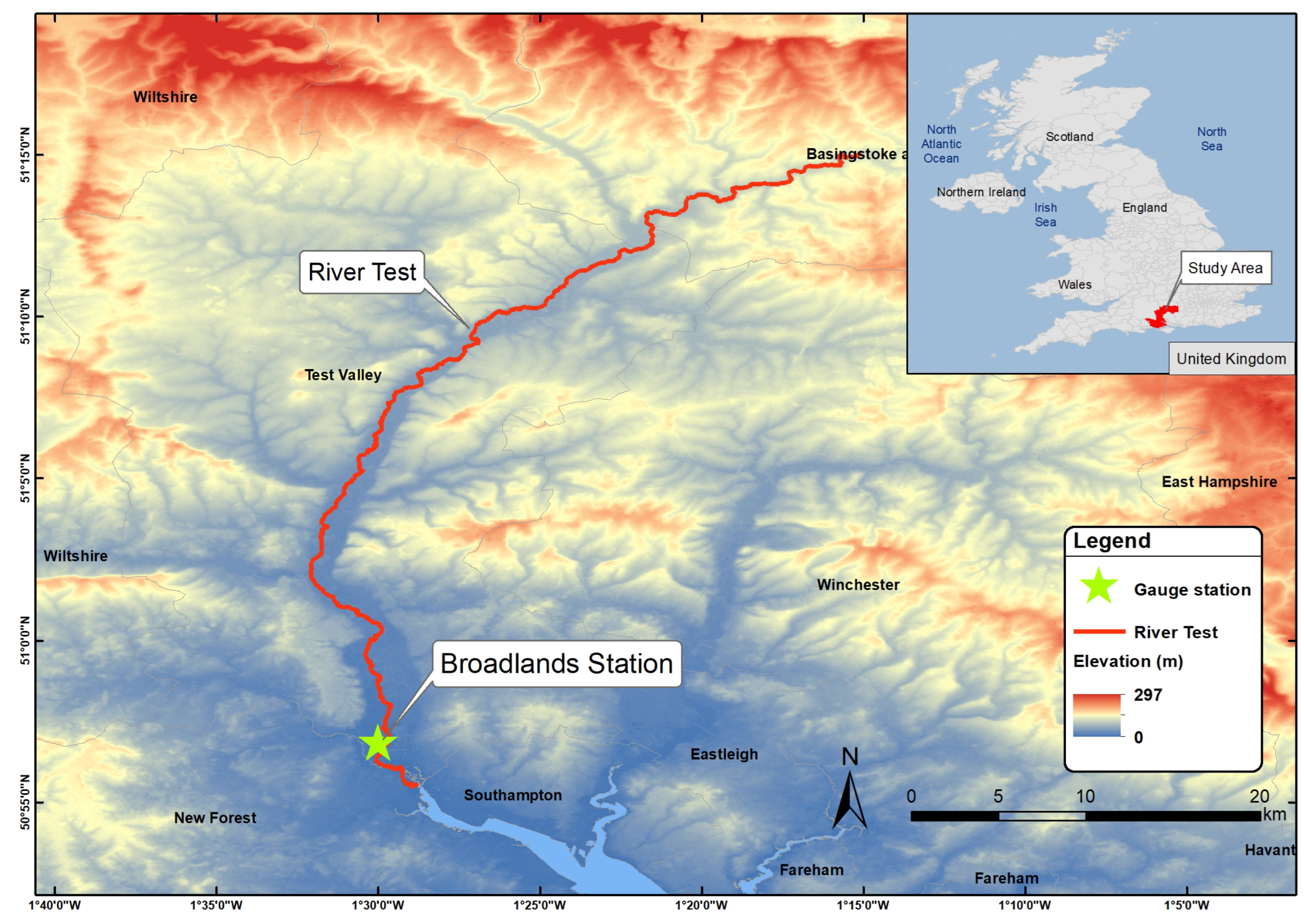

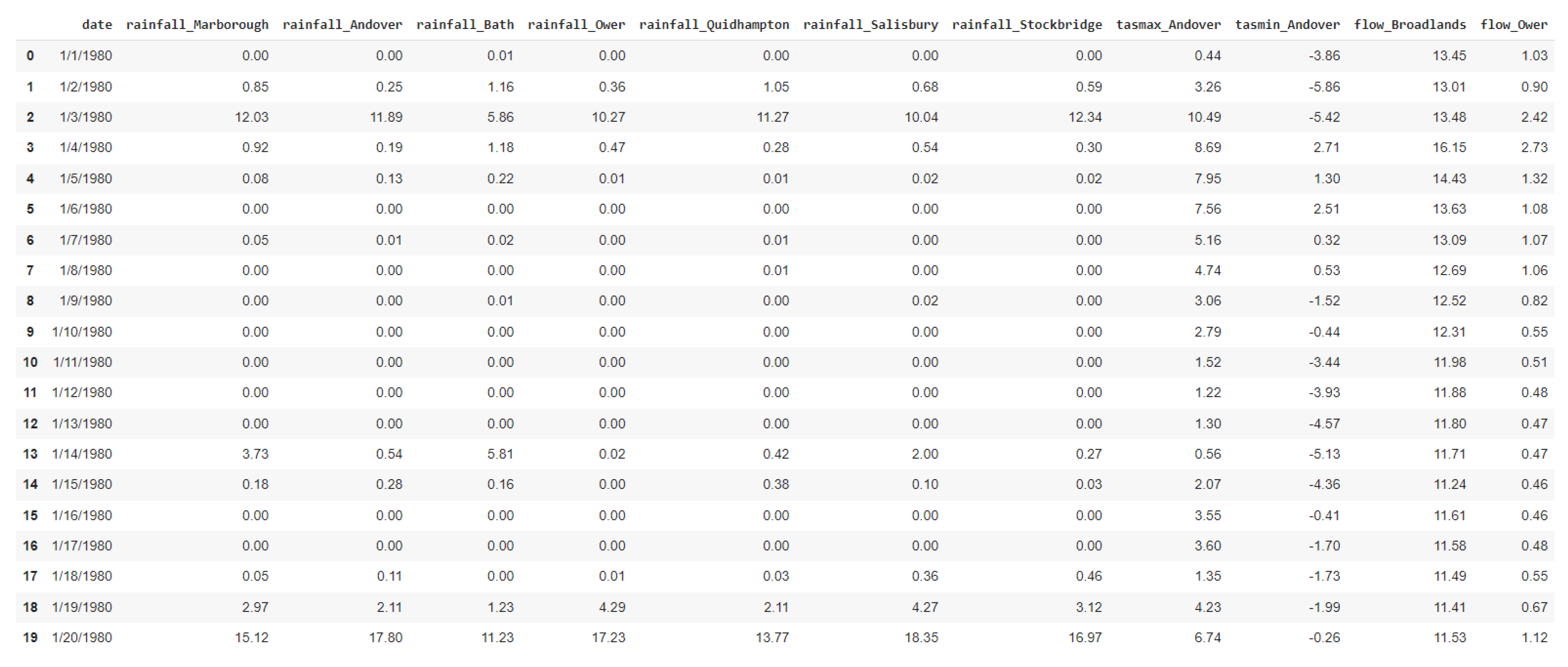

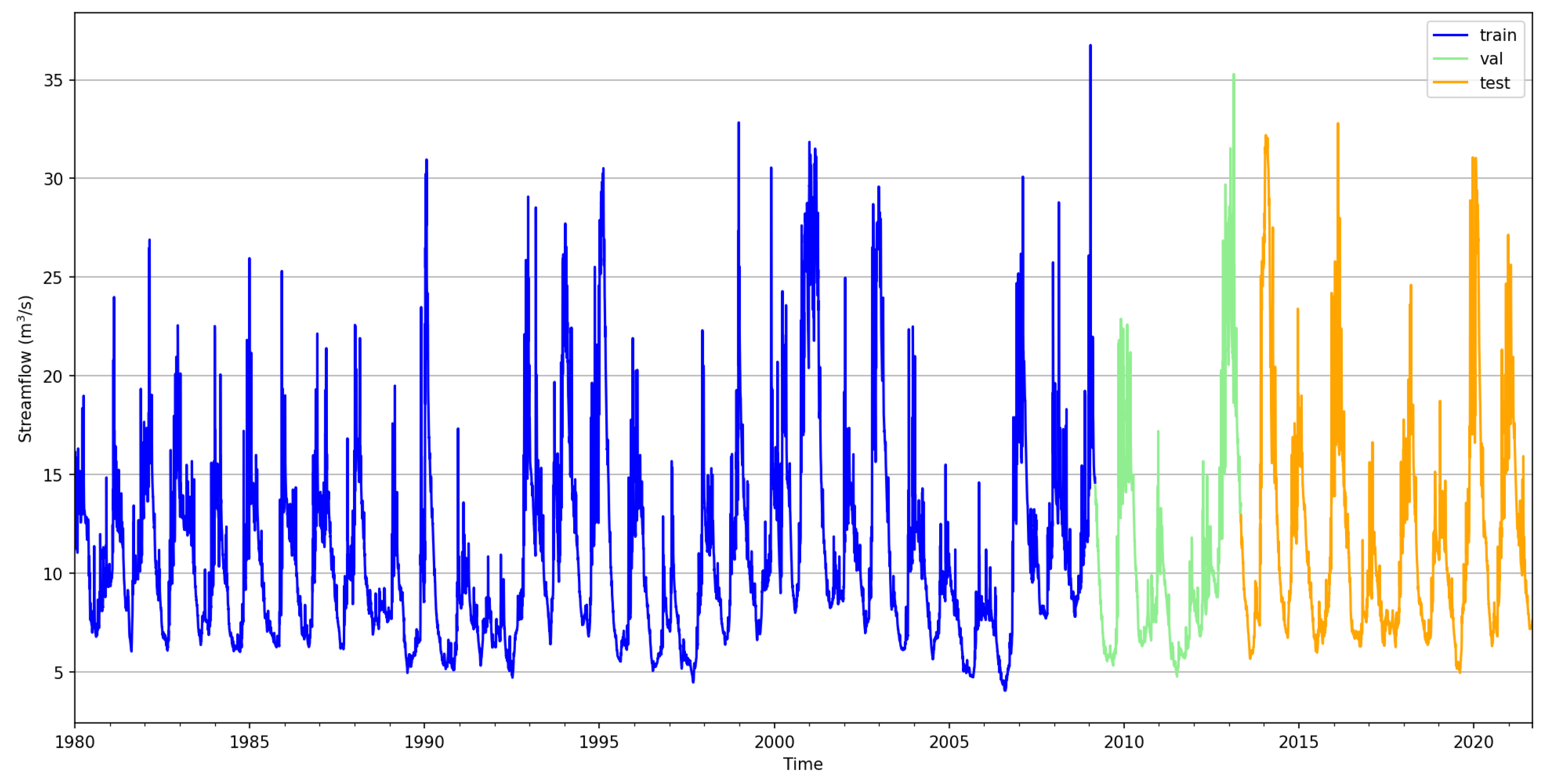

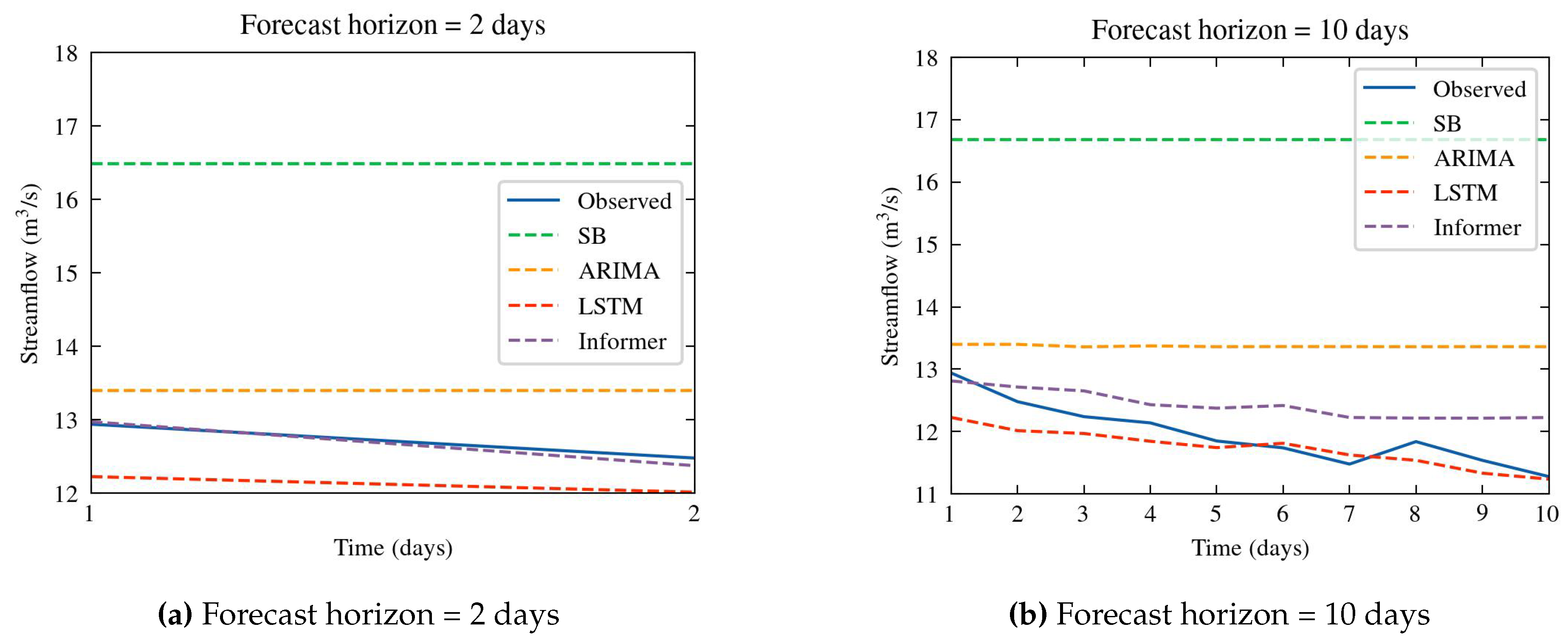

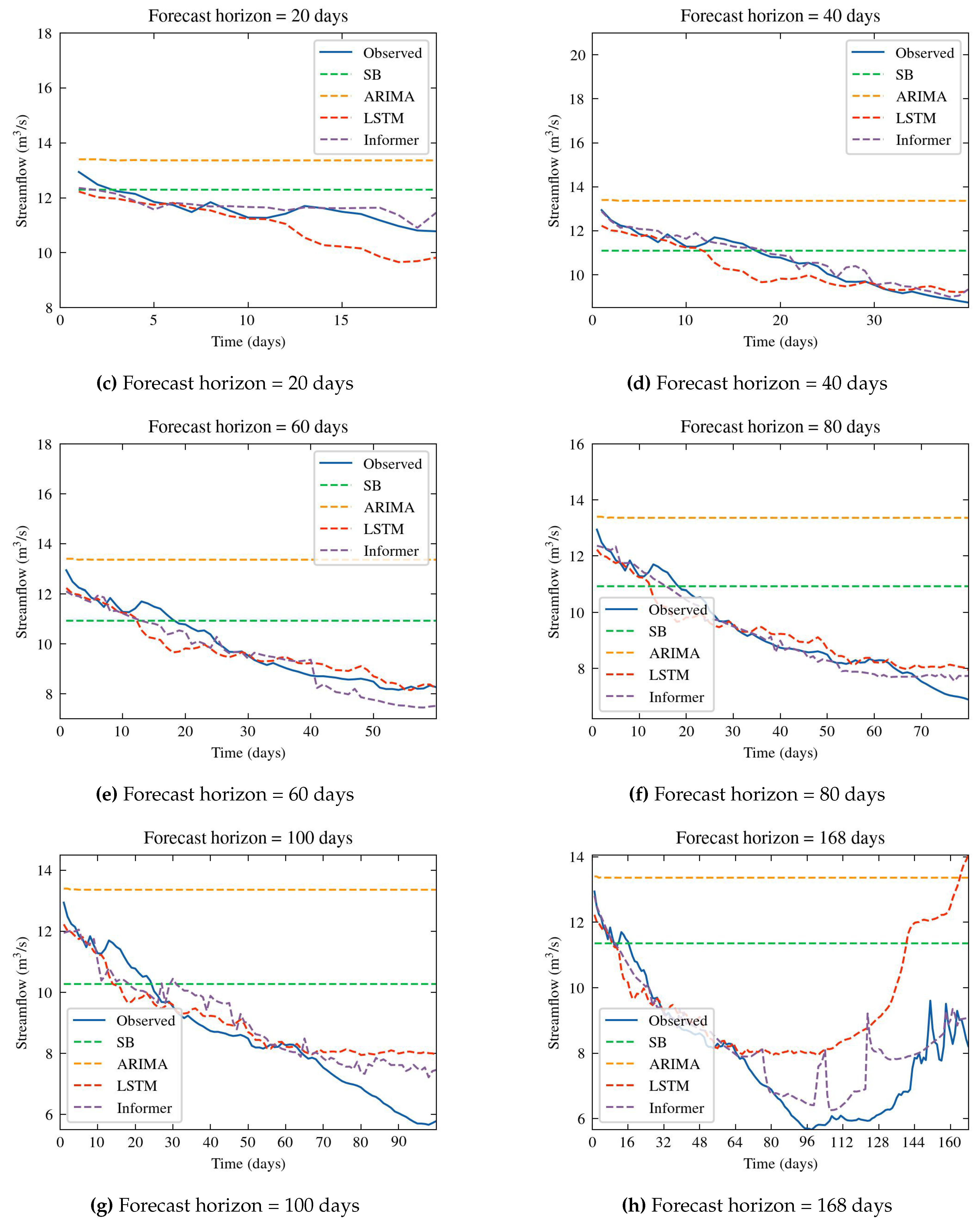

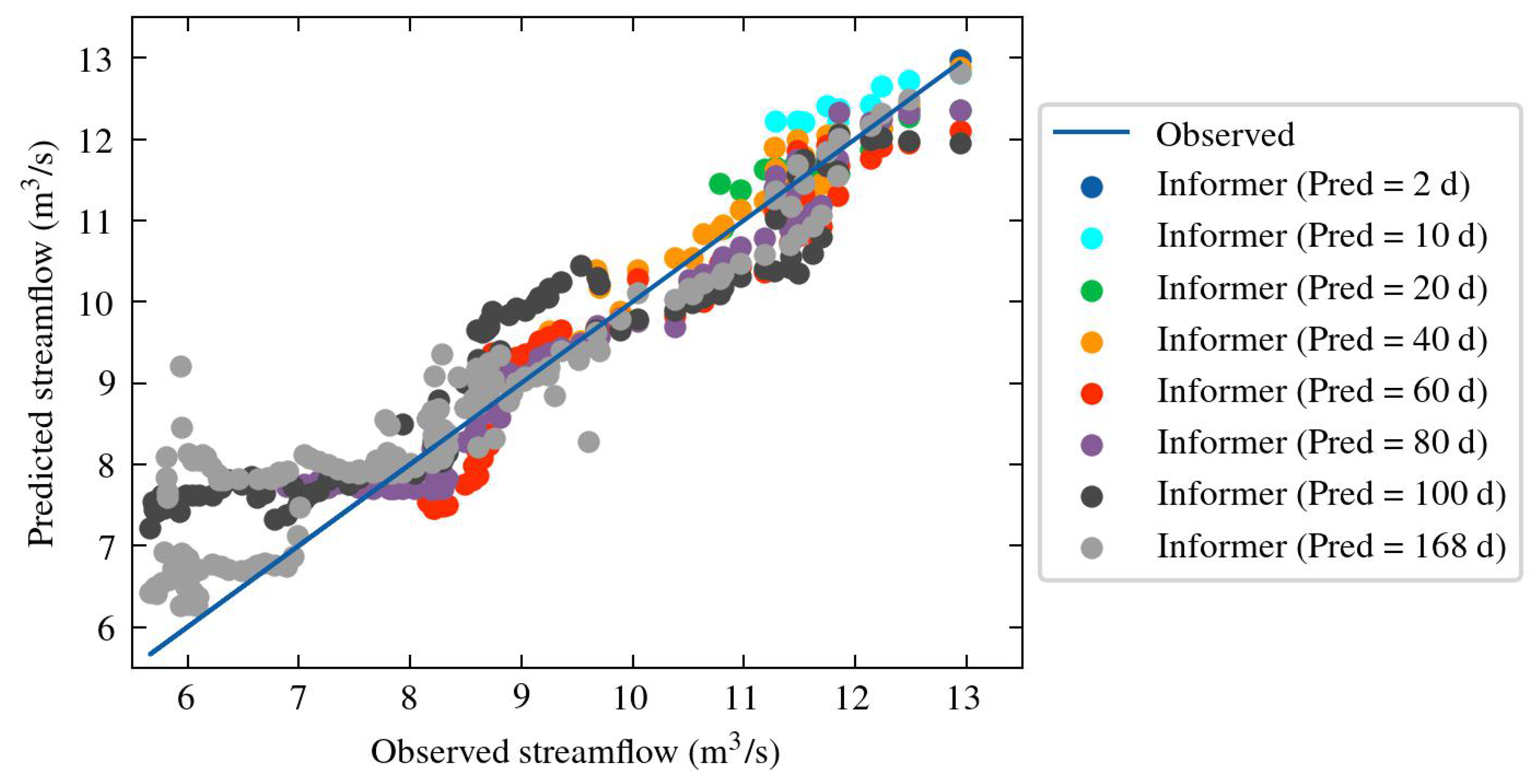

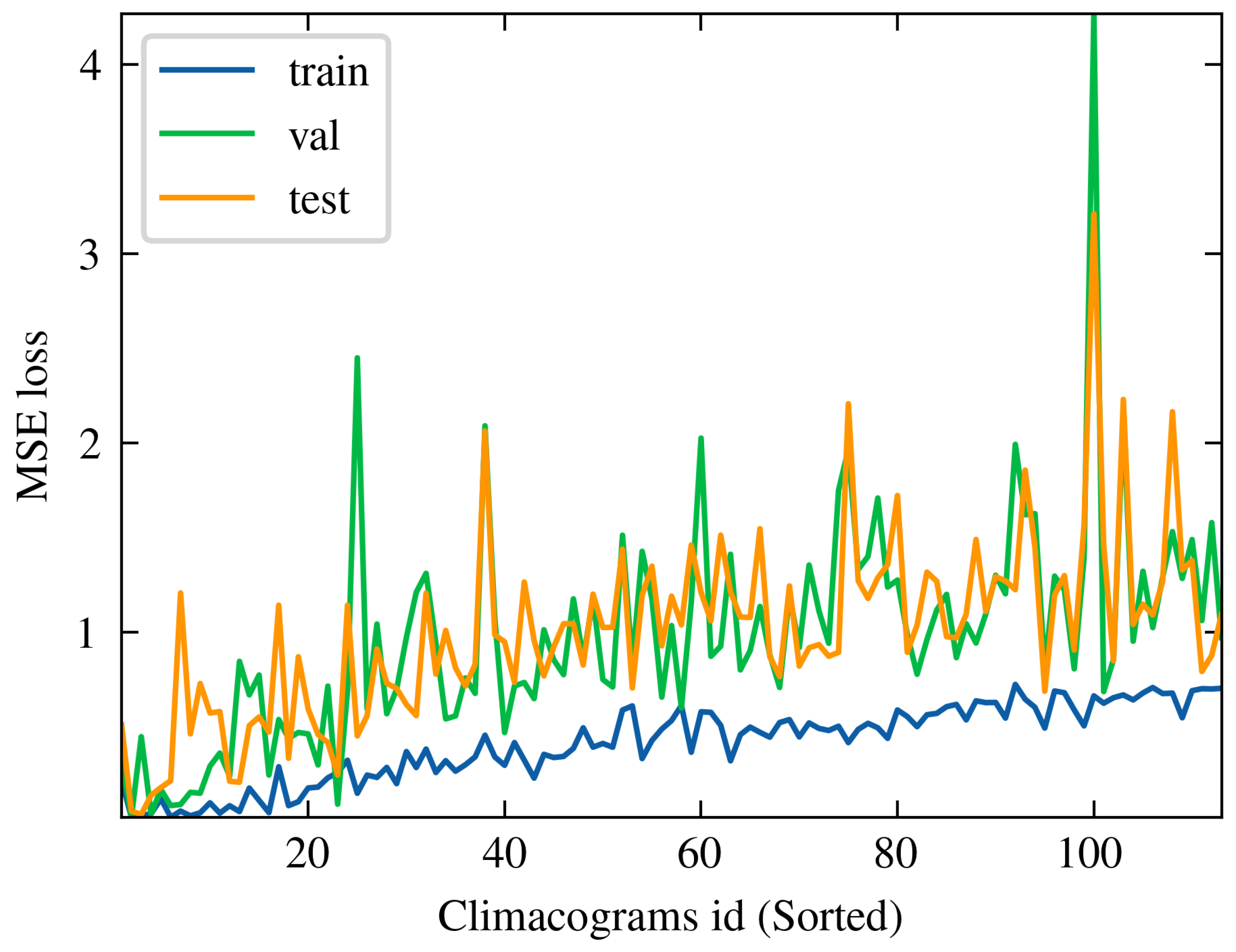

This section introduces four models (SB, ARIMA, LSTM and Informer) applied to time series forecasting of daily streamflow in the River Test and analyses their limitations.

2.1. Stochastic Benchmark (SB)

Establishing a benchmark model is essential for comparative analysis. For this task, we introduce the Stochastic Benchmark (SB) method as our benchmark model. It provides a fundamental baseline for evaluation, embodying a simple yet robust approach to hydroclimatic time series forecasting, and can serve as an alternative to other benchmark methods, such as the average or naive methods.

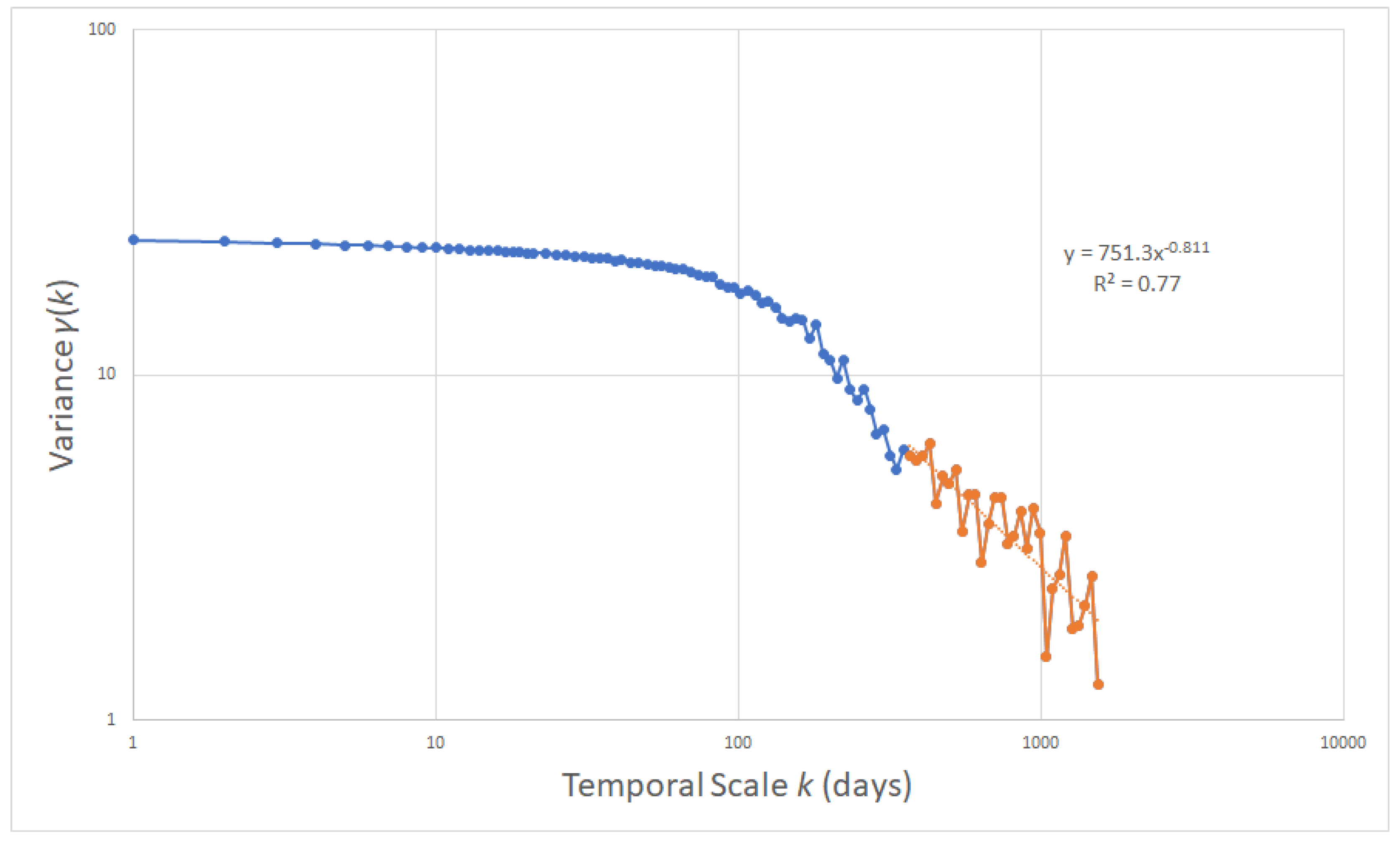

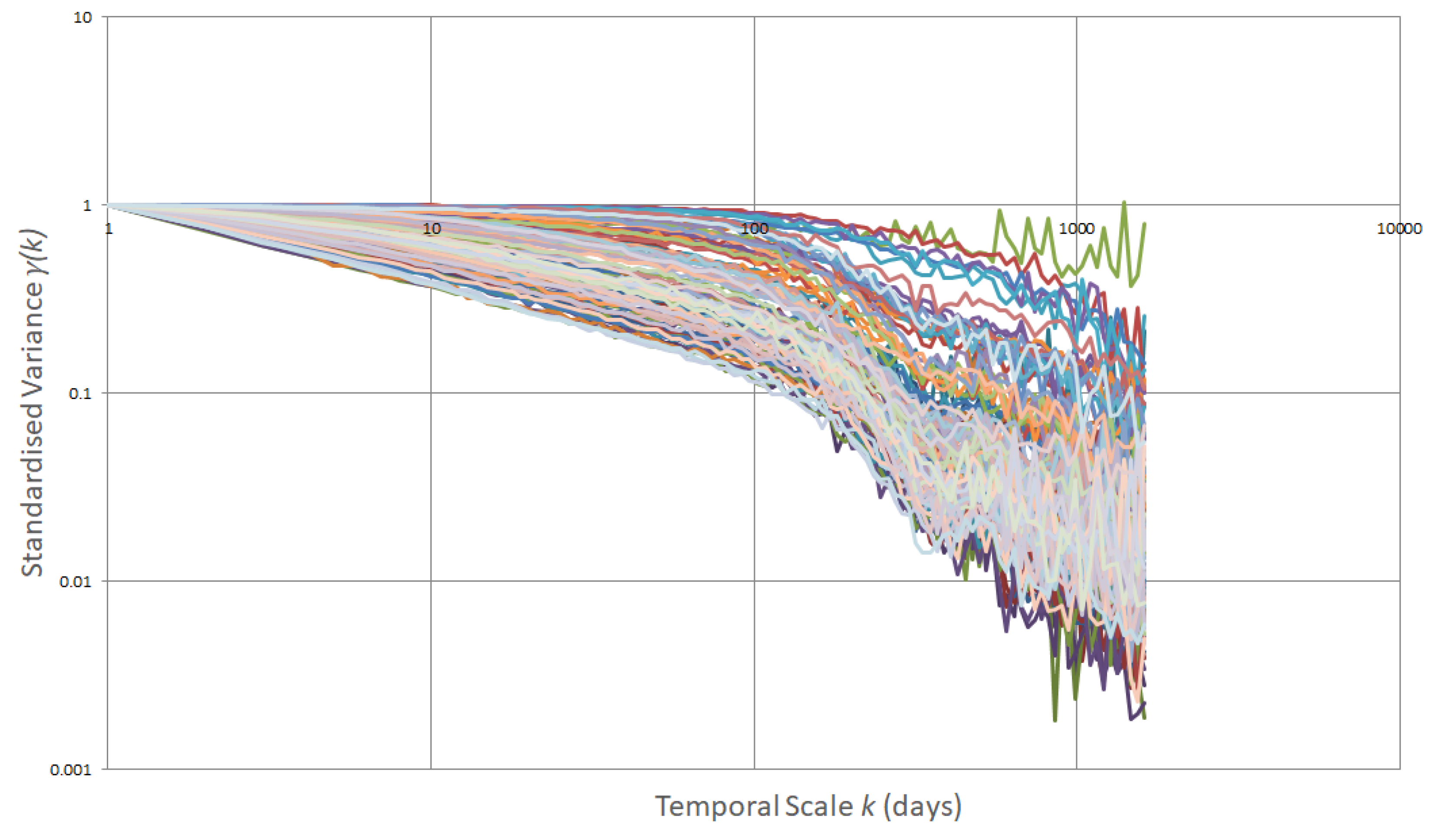

This approach is based on the assumption of a Hurst-Kolmogorov (HK) process, characterized by its climacogram. The climacogram [58] is a key tool in the stochastic analysis of time series of natural phenomena. It is defined as the graph of the variance of the time-averaged stochastic process $\underline{x}(t)$ (assuming stationarity) with respect to the time scale $k$, and is denoted by $\gamma(k)$ [59]. The climacogram has proven valuable in identifying long-term variations, dependencies, persistence and clustering within a process, a characteristic shared with Transformer models. This can be achieved through the Hurst coefficient ($H$), which is obtained from the slope of the climacogram on a double logarithmic plot: for an HK process this slope equals $2H - 2$, so that $H = 1 + \text{slope}/2$.

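Considering a stochastic process $\underline{x}(t)$ (here we denote stochastic variables by underlining them) in continuous time $t$, representing variables such as rainfall or river flow, the cumulative process is defined as follows:

$$\underline{X}(k) := \int_0^k \underline{x}(t)\,\mathrm{d}t \qquad (1)$$

Then, $\underline{X}(k)/k$ is the time-averaged process. The climacogram $\gamma(k)$ of the process $\underline{x}(t)$ is the variance of the time-averaged process at time scale $k$ [60], i.e.,

$$\gamma(k) := \operatorname{var}\left[\frac{\underline{X}(k)}{k}\right] \qquad (2)$$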
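As an illustration, the climacogram defined above can be estimated from a discretely sampled series (e.g., daily streamflow) by averaging non-overlapping blocks of length $k$ and taking the sample variance of the block means. The following Python sketch assumes NumPy and a hypothetical function name, `empirical_climacogram`; it is not the authors' code, and this classical estimator does not correct for the downward bias that long-term persistence induces at large scales.

```python
import numpy as np

def empirical_climacogram(x, scales):
    """Sample climacogram: variance of the k-step block averages of x.

    Sketch of the classical estimator: the series is cut into m = n // k
    non-overlapping blocks of length k, each block is averaged, and the
    sample variance (denominator m - 1) of the block means is returned.
    """
    x = np.asarray(x, dtype=float)
    gammas = []
    for k in scales:
        m = len(x) // k                      # number of complete blocks
        if m < 2:
            raise ValueError(f"scale k={k} leaves fewer than 2 blocks")
        block_means = x[: m * k].reshape(m, k).mean(axis=1)
        gammas.append(block_means.var(ddof=1))
    return np.array(gammas)
```

For white noise with variance $\sigma^2$, the estimate should decrease roughly as $\sigma^2/k$.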

In Hurst-Kolmogorov (HK) processes, the future depends on the recorded past, and the climacogram is expressed in mathematical form as [61]:

$$\gamma(k) = \lambda\left(\frac{\alpha}{k}\right)^{2-2H} \qquad (3)$$

where $\alpha$ and $\lambda$ are scale parameters with units of time and $[\underline{x}]^2$, respectively, while $H$ is the Hurst coefficient, which describes the long-term behaviour of the autocorrelation structure, i.e., the persistence of a process [62]. More specifically, based on the Hurst coefficient, three cases can be distinguished:

$H = 0.5$: the process has random variation (white noise).

$0.5 < H < 1$: the process is positively correlated. This appears to be the case for most natural processes, whose time series show long-term persistence (long-term dependence).

$0 < H < 0.5$: the process is anti-correlated.
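For an HK climacogram $\gamma(k) = \lambda(\alpha/k)^{2-2H}$, $\ln\gamma(k)$ is linear in $\ln k$ with slope $2H - 2$, so a rough estimate of $H$ can be obtained by a least-squares fit of the log sample climacogram against $\ln k$. This Python sketch assumes NumPy, about 20 logarithmically spaced scales up to $n/10$, and hypothetical function names; it ignores estimator bias, so it is only indicative and not the fitting procedure used in the paper.

```python
import numpy as np

def empirical_climacogram(x, scales):
    # Variance (ddof=1) of non-overlapping block means at each scale k.
    x = np.asarray(x, dtype=float)
    out = []
    for k in scales:
        m = len(x) // k
        out.append(x[: m * k].reshape(m, k).mean(axis=1).var(ddof=1))
    return np.array(out)

def hurst_from_climacogram(x, max_scale=None):
    """Estimate H from the log-log slope of the sample climacogram.

    For an HK process gamma(k) ~ k**(2H - 2), hence H = 1 + slope / 2.
    """
    n = len(x)
    max_scale = max_scale or n // 10        # keep at least ~10 blocks per scale
    scales = np.unique(np.logspace(0, np.log10(max_scale), 20).astype(int))
    gam = empirical_climacogram(x, scales)
    slope, _intercept = np.polyfit(np.log(scales), np.log(gam), 1)
    return 1.0 + slope / 2.0
```

Applied to white noise, the estimate should fall close to $H = 0.5$.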

As described by Koutsoyiannis [63], in classical statistics a sample is by definition a set of independent and identically distributed (IID) stochastic variables, and classical statistical methods that aim to estimate future states use all observations in the sample. This poses challenges when such methods are applied to time series from stochastic processes, whose values are generally dependent. It leads to the following question: how many past observations should be considered when estimating an average that accurately represents the future average over a given period of length $\kappa$?

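In discrete time, the local future mean over a period of length $\kappa$, defined with respect to the present (time step 0) and the past (negative time steps), is:

$$\underline{\mu}_\kappa := \frac{1}{\kappa}\sum_{i=1}^{\kappa}\underline{x}_i \qquad (4)$$

Assuming that a large number $n$ of observations from the present and the past is available, but only the $\ell$ most recent ones are selected for estimation [63], the estimate is:

$$\hat{\underline{\mu}}_\kappa := \frac{1}{\ell}\sum_{i=0}^{\ell-1}\underline{x}_{-i}, \qquad 1 \le \ell \le n \qquad (5)$$

The above question can then be answered by finding the $\ell$ that minimizes the mean square error:

$$\mathrm{E}\left[\left(\underline{\mu}_\kappa - \hat{\underline{\mu}}_\kappa\right)^2\right] \qquad (6)$$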

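As demonstrated in [63], the mean square error, standardized by $\gamma(\kappa)$, can be expressed in terms of the climacogram as:

$$A := \frac{\mathrm{E}\left[\left(\underline{\mu}_\kappa - \hat{\underline{\mu}}_\kappa\right)^2\right]}{\gamma(\kappa)} = 1 + \frac{\kappa}{\ell} + \left(1 + \frac{\ell}{\kappa}\right)\frac{\gamma(\ell)}{\gamma(\kappa)} - \frac{(\kappa+\ell)^2}{\kappa\,\ell}\,\frac{\gamma(\kappa+\ell)}{\gamma(\kappa)} \qquad (7)$$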

For the HK process, for which Equation (3) is valid, [63] gives a closed-form expression, Equation (8), for the value of $\ell$ that minimizes the error $A$.

Equation (8) distinguishes two cases depending on the value of the $H$ coefficient. If $H \le 0.6$, it yields $\ell = n$, which means that the future mean is estimated as the average of the entire set of $n$ observations (equivalent to the average method). If $H > 0.6$, only the $\ell$ most recent terms are used to estimate the future average.

In this paper the above methodology is used to create a new, simple and theoretically substantiated benchmark model for comparison with other models.
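A minimal Python sketch of the SB forecast follows, assuming NumPy, an HK climacogram and a previously estimated $H$. Because $\gamma(\ell)/\gamma(\kappa) = (\kappa/\ell)^{2-2H}$, the scale parameters $\alpha$ and $\lambda$ cancel in the standardized error. Instead of the closed-form optimum given in [63], this sketch selects $\ell$ by brute-force minimization over $\ell = 1, \ldots, n$ of the standardized error obtained from the variance of the difference between the future mean and the mean of the $\ell$ most recent values. The function names are hypothetical.

```python
import numpy as np

def standardized_mse(ell, kappa, H):
    """A(ell) = E[(mu_future - mu_hat)^2] / gamma(kappa) for an HK climacogram.

    gamma(k) / gamma(kappa) = (kappa / k) ** (2 - 2H): lambda and alpha cancel.
    """
    ell = np.asarray(ell, dtype=float)
    ratio = lambda k: (kappa / k) ** (2.0 - 2.0 * H)
    return ((1.0 + kappa / ell)
            + (1.0 + ell / kappa) * ratio(ell)
            - (kappa + ell) ** 2 / (kappa * ell) * ratio(kappa + ell))

def stochastic_benchmark(x, kappa, H):
    """Forecast the mean of the next kappa steps as the mean of the last ell values,
    with ell chosen by exhaustive minimisation of A over 1..n."""
    x = np.asarray(x, dtype=float)
    ells = np.arange(1, len(x) + 1)
    ell_opt = int(ells[np.argmin(standardized_mse(ells, kappa, H))])
    return float(x[-ell_opt:].mean()), ell_opt
```

For $H = 0.5$ the standardized error reduces to $1 + \kappa/\ell$, which decreases monotonically, so the sketch returns $\ell = n$ (the average method); for strongly persistent series it keeps only a short recent window.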

2.3. Long Short-Term Memory (LSTM)

Long Short-Term Memory (LSTM) networks represent an enhanced version of Recurrent Neural Networks (RNNs) [

65], engineered to hold onto information from previous data for extended periods. They are designed for processing sequential data. LSTMs adeptly address the challenge of long-term dependencies through the incorporation of three gating mechanisms and a dedicated memory unit. They were first introduced by Hochreiter and Schmidhuber in 1997 [

66] marking a milestone in deep learning. In many cases it is very important to know the previous time steps for a long length, in order to obtain a correct prediction of the outcome in each problem. This type of networks try to solve the "vanishing gradients" problem [

67] (i.e., the loss of information during training that occurs when gradients become extremely small and extend further into the past), which remains the main problem in RNN’s. At its core, LSTM consists of various components, including gates and cell state (also referred as the memory of the network), which collectively enable it to selectively retain or discard information over time. In a LSTM network there are three gates as depicted in

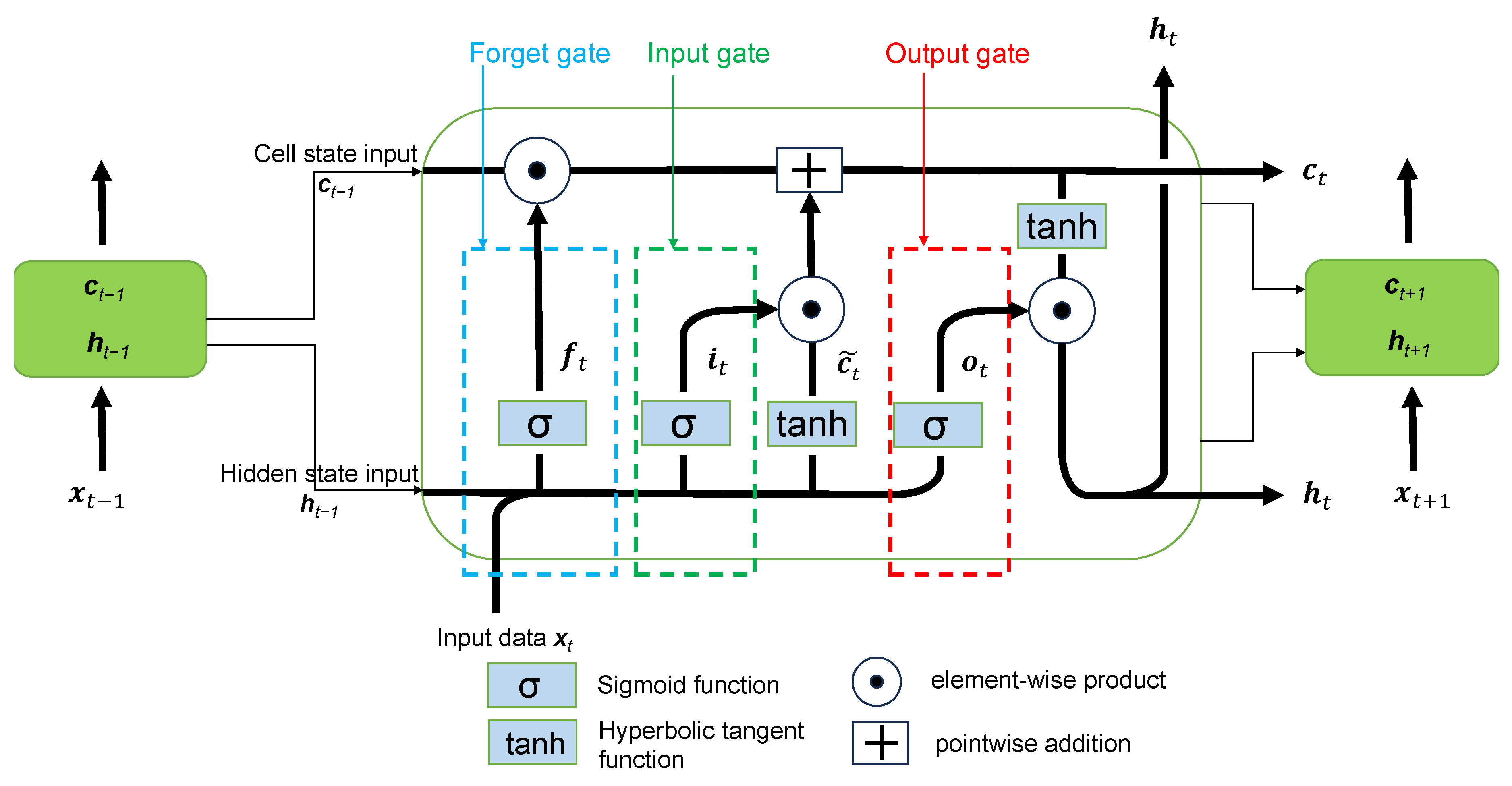

Figure 2, and the back-propagation algorithm is used to train the model. These three gates and the cell state can be described as follows:

- (1)

Input gate: decides how valuable the current input is for solving the task, thus controlling the inflow of new information and selectively updating the memory cell. This gate uses the hidden state at the previous time step $h_{t-1}$ and the current input $x_t$ to compute the input gate vector with the following equation:

$$i_t = \sigma\left(W_{xi}x_t + W_{hi}h_{t-1} + b_i\right) \qquad (10)$$

- (2)

Forget gate: decides which information from the previous cell state is discarded, allowing the network to prioritize relevant information. This is determined by a sigmoid function: it takes the previous hidden state $h_{t-1}$ and the current input $x_t$ and outputs, for each element of the cell state $c_{t-1}$, a number between 0 (meaning the element is discarded) and 1 (meaning it is kept). The output of the forget gate $f_t$ can be expressed as:

$$f_t = \sigma\left(W_{xf}x_t + W_{hf}h_{t-1} + b_f\right) \qquad (11)$$

- (3)

Output gate: regulates the information that the network outputs, filtering the content stored in the memory cell. It decides what the next hidden state $h_t$ should be. The output of this gate, $o_t$, is described by the equation:

$$o_t = \sigma\left(W_{xo}x_t + W_{ho}h_{t-1} + b_o\right) \qquad (12)$$

- (4)

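Cell state: long-term dependencies are encoded in the cell states, which is how the "vanishing gradients" problem is mitigated. The cell state $c_t$ at the current time step updates the previous $c_{t-1}$ by taking into account the candidate cell state $\tilde{c}_t$, which represents the new information that could be added to the cell state, the input vector $i_t$ and the forget vector $f_t$. The cell state is updated as follows:

$$\tilde{c}_t = \tanh\left(W_{xc}x_t + W_{hc}h_{t-1} + b_c\right) \qquad (13)$$

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \qquad (14)$$

The final output $h_t$ of the LSTM has the form:

$$h_t = o_t \odot \tanh\left(c_t\right) \qquad (15)$$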

To summarize, Equations (10)–(15) express the calculations that are performed sequentially in the LSTM model, where $i_t$, $f_t$ and $o_t$ are the input gate, forget gate and output gate vectors, respectively, with subscript $t$ indicating the current time step; $W_{xi}$, $W_{xf}$ and $W_{xo}$ are the weight matrices connecting the input, forget and output gates, respectively, with the input $x_t$; $W_{hi}$, $W_{hf}$ and $W_{ho}$ are the weight matrices connecting the input, forget and output gates with the hidden state $h_{t-1}$; $b_i$, $b_f$ and $b_o$ are the input, forget and output gate bias vectors, respectively; $W_{xc}$, $W_{hc}$ and $b_c$ are the corresponding weights and bias of the candidate cell state; $\tilde{c}_t$ is the candidate cell state and $c_t$ the current cell state. Two activation functions are used: $\sigma$ and $\tanh$ stand for the sigmoid and hyperbolic tangent functions, respectively. Finally, the $\odot$ operation is the element-wise (Hadamard) product and the $+$ operation is pointwise addition.
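To make the sequence of Equations (10)–(15) concrete, the following NumPy sketch performs one LSTM time step and then runs it over a short random sequence. The dictionary-based parameter layout, the initialization scale and the function names are illustrative assumptions; a practical model would be built in a deep learning framework and trained with back-propagation, which this forward-only sketch omits.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step. W holds matrices 'xi','hi','xf','hf','xo','ho','xc','hc';
    b holds bias vectors 'i','f','o','c'."""
    i_t = sigmoid(W["xi"] @ x_t + W["hi"] @ h_prev + b["i"])      # input gate
    f_t = sigmoid(W["xf"] @ x_t + W["hf"] @ h_prev + b["f"])      # forget gate
    o_t = sigmoid(W["xo"] @ x_t + W["ho"] @ h_prev + b["o"])      # output gate
    c_tilde = np.tanh(W["xc"] @ x_t + W["hc"] @ h_prev + b["c"])  # candidate cell state
    c_t = f_t * c_prev + i_t * c_tilde                            # Hadamard products
    h_t = o_t * np.tanh(c_t)                                      # hidden state (output)
    return h_t, c_t

def init_params(n_in, n_hidden, rng):
    # 'x*' matrices act on the input, 'h*' matrices on the previous hidden state.
    W = {name: rng.normal(scale=0.1, size=(n_hidden, n_in if name[0] == "x" else n_hidden))
         for name in ("xi", "hi", "xf", "hf", "xo", "ho", "xc", "hc")}
    b = {g: np.zeros(n_hidden) for g in "ifoc"}
    return W, b

# Usage: feed a 30-step univariate sequence through an 8-unit cell.
rng = np.random.default_rng(0)
W, b = init_params(1, 8, rng)
h, c = np.zeros(8), np.zeros(8)
for x in rng.normal(size=(30, 1)):
    h, c = lstm_step(x, h, c, W, b)
```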

2.4. Transformer-Based Model (Informer)

First, a brief examination of Transformer models is necessary for our investigation. The characteristic feature of Transformers is the so-called self-attention mechanism, which enables the model to weigh different parts of the input sequence differently, based on their relevance. This attention mechanism allows Transformers to capture long-range dependencies efficiently, removing the sequential constraints of traditional models such as LSTMs. The Transformer architecture consists of encoder and decoder layers, each composed of multi-head self-attention mechanisms and feedforward neural networks. The encoder processes the input sequence using self-attention to extract relevant features. The decoder then uses these features to generate the output sequence, combining self-attention with encoder-decoder attention for sequence-to-sequence tasks. Positional encoding provides the model with information about the position of each input token in the sequence, since self-attention by itself is insensitive to token order. Parallelization is a further strength: Transformers process input sequences in parallel rather than sequentially, which significantly speeds up training and inference.
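For reference, the sinusoidal positional encoding of Vaswani et al. [32] can be sketched in NumPy as follows (the function name is hypothetical). It shows only the original Transformer formulation; Informer's own input representation, which additionally embeds time stamps, is described in [52].

```python
import numpy as np

def sinusoidal_positional_encoding(length, d_model):
    """PE[pos, 2i] = sin(pos / 10000^(2i/d_model)), PE[pos, 2i+1] = cos(same angle)."""
    positions = np.arange(length)[:, None]                  # (length, 1)
    even_idx = np.arange(0, d_model, 2)[None, :]            # feature indices 2i
    angles = positions / np.power(10000.0, even_idx / d_model)
    pe = np.zeros((length, d_model))
    pe[:, 0::2] = np.sin(angles)                            # even features
    pe[:, 1::2] = np.cos(angles[:, : d_model // 2])         # odd features
    return pe
```

Each position receives a distinct pattern of bounded values that is added to the token embeddings, so the model can distinguish order without recurrence.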

The Informer model, proposed by Zhou et al. (2021) [52], is a deep artificial neural network, more specifically an improved Transformer-based model, that aims to solve the Long Sequence Time-series Forecasting (LSTF) problem [52]. This problem requires a high prediction capacity to capture long-range dependencies between inputs and outputs. Unlike LSTM, which relies on sequential processing, Transformers, and consequently Informer, use attention mechanisms to capture long-range dependencies and to parallelize computations; self-attention lets the model weigh input tokens according to their relevance to each other, which helps it capture temporal dependencies efficiently. The growing application of Transformer networks has highlighted their potential for increasing forecasting capability. However, some issues in the operation of Transformers, such as the quadratic time complexity ($\mathcal{O}(L^2)$), the high memory usage and the limitations of the encoder-decoder architecture, make them inefficient for problems involving long sequences. Informer addresses these issues of the "vanilla" Transformer [32] through three main features [52]:

ProbSparse self-attention mechanism: a special kind of attention mechanism (ProbSparse: Probabilistic Sparsity) which achieves $\mathcal{O}(L \log L)$ time complexity and memory usage, where $L$ is the length of the input sequence.

Self-attention distilling: a technique that highlights the dominant attention by halving the input of each cascading layer, making the model effective in handling extremely long input sequences.

Generative style decoder: a special kind of decoder that predicts long time-series sequences in one forward pass rather than step by step, which drastically improves the speed of forecast generation.

The Informer model preserves the classic Transformer encoder-decoder architecture while aiming to solve the LSTF problem. In the LSTF problem that Informer addresses, the input at time $t$ is the sequence

$$\mathcal{X}^t = \left\{x_1^t, \ldots, x_{L_x}^t \mid x_i^t \in \mathbb{R}^{d_x}\right\} \qquad (16)$$

and the output or target (predictions) is the corresponding sequence of vectors

$$\mathcal{Y}^t = \left\{y_1^t, \ldots, y_{L_y}^t \mid y_i^t \in \mathbb{R}^{d_y}\right\} \qquad (17)$$

where $x_i^t$ and $y_i^t$ are the input and output vectors at time $t$, and $L_x$ and $L_y$ are the numbers of input and output elements, respectively. LSTF calls for a considerably longer output length $L_y$ than usual forecasting cases. Informer supports all forecasting tasks (e.g., multivariate inputs predicting multivariate outputs, multivariate inputs predicting a univariate output, etc.), where $d_x$ and $d_y$ are the feature dimensions of the input and output, respectively.
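In practice, input/target pairs $(\mathcal{X}^t, \mathcal{Y}^t)$ are typically cut from a long record with a sliding window. The NumPy sketch below assumes a stride of one time step and uses the hypothetical function name `make_lstf_windows`; for daily streamflow alone, $d_x = d_y = 1$. The window lengths in the usage example are arbitrary and do not reflect the settings of this study.

```python
import numpy as np

def make_lstf_windows(series, L_x, L_y):
    """Slice a (T, d) series into inputs (N, L_x, d) and targets (N, L_y, d), stride 1."""
    series = np.asarray(series, dtype=float)
    if series.ndim == 1:
        series = series[:, None]                  # univariate record -> d = 1
    n = len(series) - L_x - L_y + 1               # number of complete windows
    if n < 1:
        raise ValueError("series too short for the requested L_x + L_y")
    X = np.stack([series[s : s + L_x] for s in range(n)])
    Y = np.stack([series[s + L_x : s + L_x + L_y] for s in range(n)])
    return X, Y

# Usage: 4 past steps as input, the following 3 steps as target.
X, Y = make_lstf_windows(np.arange(10), L_x=4, L_y=3)
```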

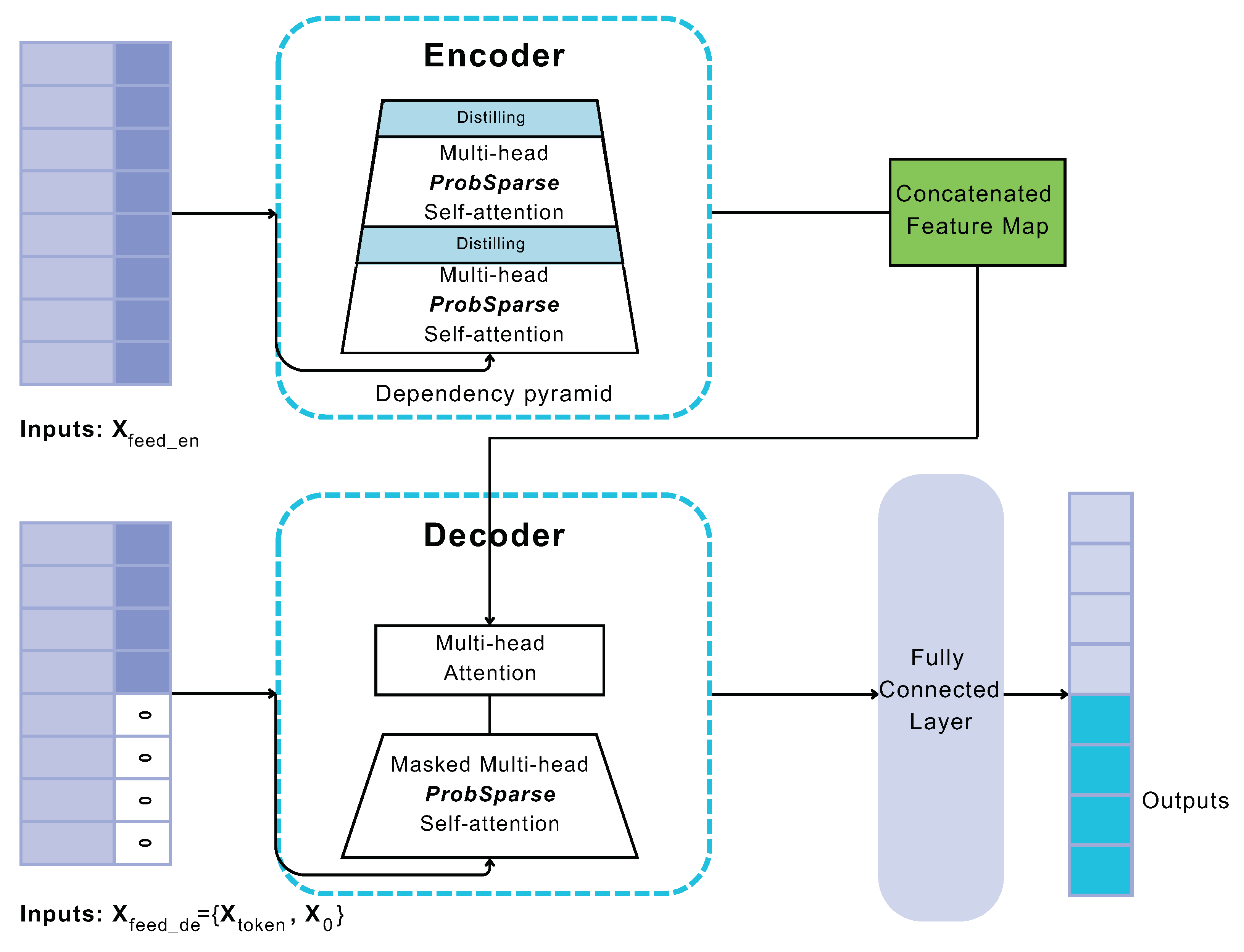

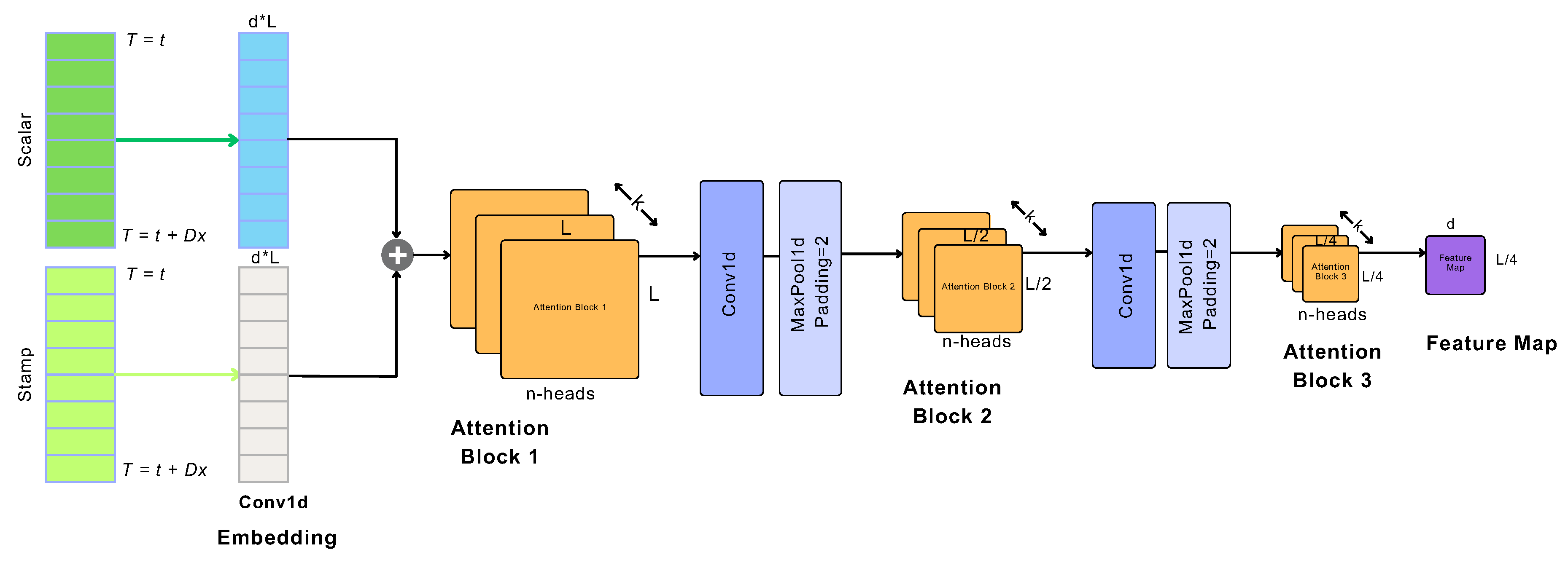

The structure of the Informer model is illustrated in Figure 3, in which three main components are distinguished: the encoder, the decoder and the prediction layer. The encoder handles long sequence inputs by using ProbSparse self-attention, an alternative to canonical self-attention. The trapezoidal component refers to the self-attention distilling operation, which extracts the dominant attention and significantly reduces the network's size. The components placed between the multi-head ProbSparse attention modules (a multi-head module applies the attention mechanism several times in parallel) are the self-attention distilling blocks (shown in light blue), which lead to a concatenated feature map. On the other side, the decoder receives a long sequence input in which the target elements are padded with zeros. It computes the weighted attention composition of the feature map and generates all output elements promptly, in a single generative step.

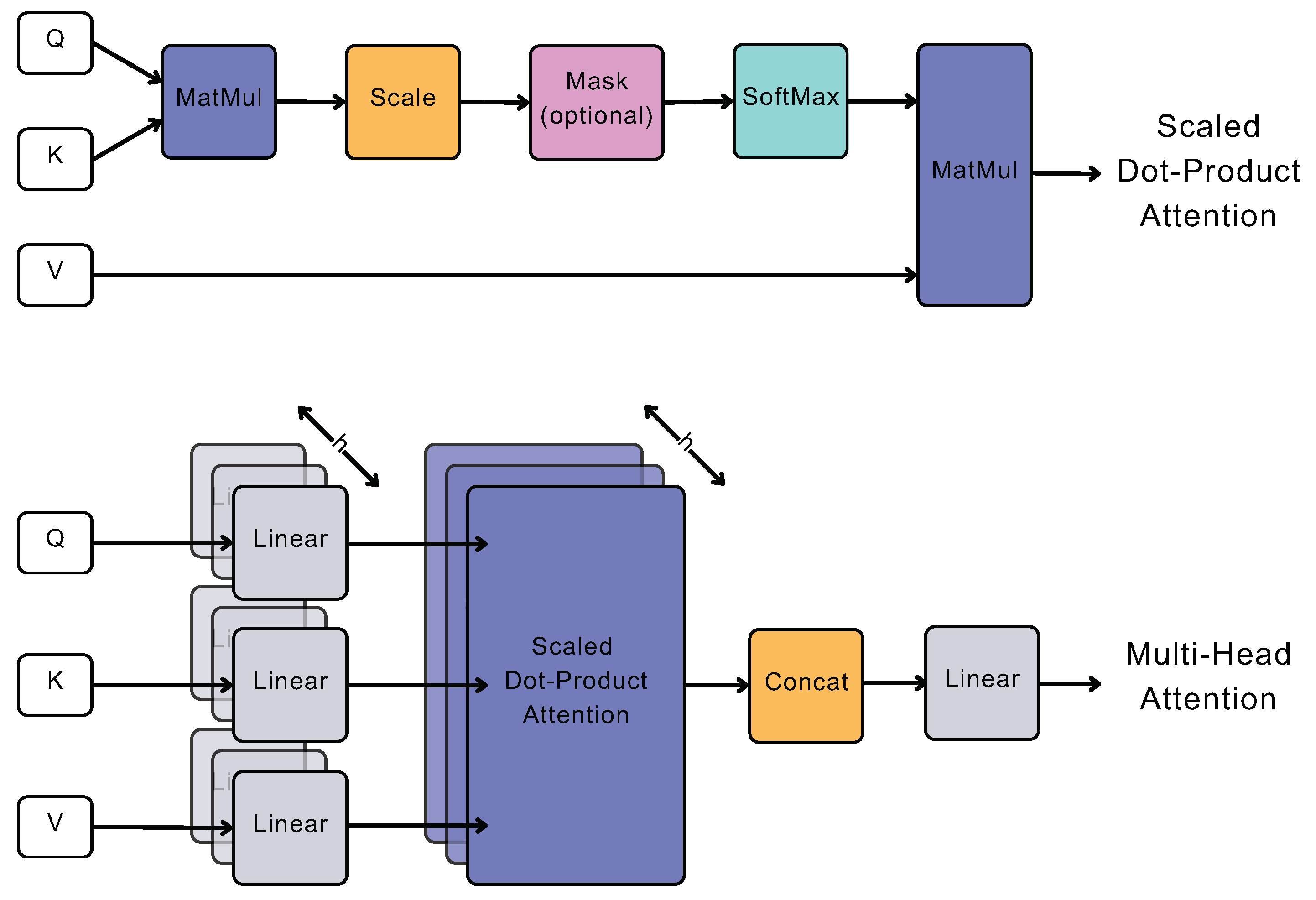

The ProbSparse (Probabilistic Sparsity) self-attention mechanism drastically reduces the computational cost of self-attention while maintaining its effectiveness. Essentially, instead of Equation (18), Informer uses Equation (19) to calculate attention. The core concept of the attention mechanism is the computation of attention weights for each element of the input sequence, indicating its relevance to the current output. The process begins by generating query ($q$), key ($k$) and value ($v$) vectors for each element of the sequence, where the query vector represents the current output state of the network, and the key and value vectors correspond to the input element and its associated attribute vector, respectively. These vectors are then used to calculate attention weights through a scaled dot product, which measures the similarity between queries and keys.

Figure 4 provides an overview of the architecture of the attention mechanism, where MatMul stands for matrix multiplication, SoftMax for the normalized exponential function that converts a vector of numbers into a probability distribution, and Concat for concatenation. The function that summarizes the attention mechanism can be written as [32]:

$$\mathcal{A}(Q, K, V) = \mathrm{Softmax}\left(\frac{QK^\top}{\sqrt{d}}\right)V \qquad (18)$$

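where $Q$, $K$ and $V$ are the input matrices of the attention mechanism. The dot product of query $q$ and key $k$ is divided by the scaling factor $\sqrt{d}$, with $d$ representing the dimension of the query and key vectors (input dimension). The significant difference between Equations (18) and (19) is that the keys $K$ are not paired with all queries in $Q$ but only with the $u$ most dominant ones, collected in the sparse matrix $\bar{Q}$ (which has the same size as $Q$ and is obtained by the probabilistic sparse selection of $Q$), with $u = c \cdot \ln L_Q$ for a constant sampling factor $c$ [52]:

$$\mathcal{A}(Q, K, V) = \mathrm{Softmax}\left(\frac{\bar{Q}K^\top}{\sqrt{d}}\right)V \qquad (19)$$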
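The canonical scaled dot-product attention of Equation (18) can be written in a few lines of NumPy (single head, no masking, hypothetical function names). Each row of the weight matrix is a probability distribution over the keys; passing a sparse query matrix in place of $Q$ gives the form used by ProbSparse attention.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)       # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Softmax(Q K^T / sqrt(d)) V for Q: (L_Q, d), K: (L_K, d), V: (L_K, d_v)."""
    d = Q.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d))       # (L_Q, L_K), rows sum to 1
    return weights @ V, weights
```

When all keys are identical, every query receives uniform weights and the output reduces to the mean of $V$.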

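An empirical approach is proposed for efficiently measuring query sparsity, in which the sparsity measurement $M(q_i, K)$ of the $i$-th query is calculated by a method similar to the Kullback–Leibler divergence:

$$M(q_i, K) = \ln\sum_{j=1}^{L_K} e^{\frac{q_i k_j^\top}{\sqrt{d}}} - \frac{1}{L_K}\sum_{j=1}^{L_K}\frac{q_i k_j^\top}{\sqrt{d}}$$

For each query $q_i \in \mathbb{R}^d$ and key $k_j \in \mathbb{R}^d$ in the set of keys $K$, the following bound holds [52]:

$$\ln L_K \le M(q_i, K) \le \max_j\left\{\frac{q_i k_j^\top}{\sqrt{d}}\right\} - \frac{1}{L_K}\sum_{j=1}^{L_K}\frac{q_i k_j^\top}{\sqrt{d}} + \ln L_K$$

Therefore, the proposed max-mean measurement is as follows:

$$\bar{M}(q_i, K) = \max_j\left\{\frac{q_i k_j^\top}{\sqrt{d}}\right\} - \frac{1}{L_K}\sum_{j=1}^{L_K}\frac{q_i k_j^\top}{\sqrt{d}}$$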

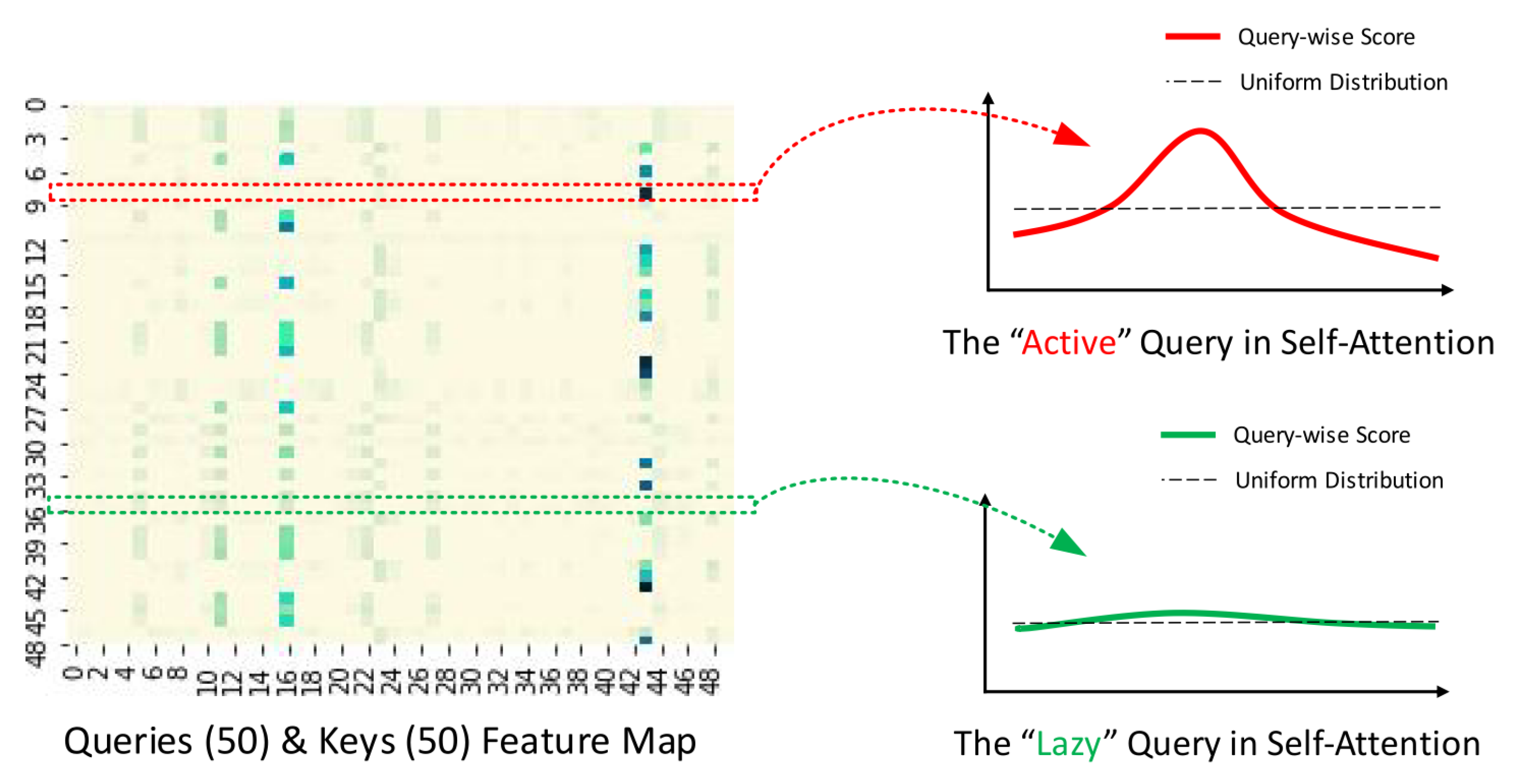

If the $i$-th query attains a larger $M(q_i, K)$, its attention probability $p$ is more "diverse" (i.e., further from the uniform distribution) and is more likely to contain the dominant dot-product pairs in the head of the long-tailed self-attention distribution.

The distribution of self-attention scores is long-tailed: "active" queries lie at the upper end of the feature map, within the "head" scores, while "lazy" queries lie at the lower end, within the "tail" scores. This is illustrated in Figure 5. The ProbSparse mechanism is designed to select the "active" queries rather than the "lazy" ones.
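The query selection can be sketched in NumPy as follows. This is a simplified, single-head illustration with hypothetical function names: it computes $\bar{M}(q_i, K)$ exactly over all keys, whereas Informer approximates it from a random sample of keys to keep the cost at $\mathcal{O}(L \ln L)$ [52], and it assumes $u = \lceil c \ln L_Q \rceil$ with $c = 5$. Because the rows of $\bar{Q}$ belonging to lazy queries are zero, their softmax weights are uniform and their output is simply the mean of $V$.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def max_mean_measure(Q, K):
    """M_bar(q_i, K) = max_j(q_i k_j^T / sqrt(d)) - mean_j(q_i k_j^T / sqrt(d))."""
    scores = Q @ K.T / np.sqrt(Q.shape[-1])
    return scores.max(axis=1) - scores.mean(axis=1)

def probsparse_attention(Q, K, V, c=5):
    """Top-u 'active' queries get full attention; 'lazy' queries get mean(V)."""
    L_Q = Q.shape[0]
    u = min(L_Q, int(np.ceil(c * np.log(L_Q))))
    active = np.argsort(-max_mean_measure(Q, K))[:u]     # indices of the top-u queries
    out = np.tile(V.mean(axis=0), (L_Q, 1))              # zero rows of Q_bar -> mean(V)
    weights = softmax(Q[active] @ K.T / np.sqrt(Q.shape[-1]))
    out[active] = weights @ V
    return out, active
```

With $L_Q = 64$ and $c = 5$, only 21 of the 64 queries compute a full attention row.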

The encoder aims to capture the long-range dependencies within the long sequence of inputs under a memory-usage constraint. Figure 6 depicts the model's encoder. Self-attention distilling serves to privilege the dominant features and to generate a focused self-attention feature map for the next layer. Essentially, it condenses the output of each self-attention layer into a smaller form, which keeps the encoder tractable for long inputs.

As shown in Figure 6, the encoder contains several attention blocks, convolution layers (Conv1d) and max-pooling layers (MaxPool) to encode the input data. Replicas of the main stack (Attention Block 1) whose inputs are progressively halved in length increase the reliability of the distilling operation, and the process continues by halving the input length again at each successive stack. At the end of the encoder, all feature maps are concatenated and the output of the encoder is passed directly to the decoder.

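The purpose of the distilling operation is to reduce the size of the network parameters, giving higher weights to the dominant features and producing a focused feature map in the subsequent layer. The distilling operation from the $j$-th layer to the $(j+1)$-th layer is described by the following procedure:

$$X_{j+1}^t = \mathrm{MaxPool}\left(\mathrm{ELU}\left(\mathrm{Conv1d}\left(\left[X_j^t\right]_{\mathrm{AB}}\right)\right)\right)$$

where $[\cdot]_{\mathrm{AB}}$ represents the attention block, and Conv1d(·) applies 1-D convolutional filters along the time dimension with the ELU(·) activation function [70]; the subsequent max-pooling with stride 2 halves the length of the sequence.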
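A NumPy sketch of one distilling step is given below. It takes the output of the attention block as given (the attention itself is not recomputed) and assumes a convolution kernel width of 3 with zero padding, followed by max-pooling with window 3, stride 2 and padding 1, so that a sequence of length $L$ is reduced to $\lceil L/2 \rceil$. These settings and the function names are assumptions for illustration, not necessarily those of the reference implementation.

```python
import numpy as np

def elu(z, alpha=1.0):
    return np.where(z > 0, z, alpha * np.expm1(z))

def conv1d_same(X, W, b):
    """1-D convolution over time with zero 'same' padding.
    X: (L, C_in), W: (k, C_in, C_out), b: (C_out,)."""
    k = W.shape[0]
    pad = k // 2
    Xp = np.pad(X, ((pad, pad), (0, 0)))
    windows = np.stack([Xp[j : j + X.shape[0]] for j in range(k)], axis=1)  # (L, k, C_in)
    return np.einsum("lkc,kco->lo", windows, W) + b

def maxpool_stride2(X):
    """Max-pooling over time, window 3, stride 2, padding 1: length L -> ceil(L / 2)."""
    Xp = np.pad(X, ((1, 1), (0, 0)), constant_values=-np.inf)
    n_out = (X.shape[0] - 1) // 2 + 1
    return np.stack([Xp[2 * i : 2 * i + 3].max(axis=0) for i in range(n_out)])

def distil(X_attn, W, b):
    """X_{j+1} = MaxPool(ELU(Conv1d([X_j]_AB))), with X_attn the attention-block output."""
    return maxpool_stride2(elu(conv1d_same(X_attn, W, b)))
```

Applied to a 96-step feature map, one step yields 48 steps, which is how successive encoder layers process progressively shorter sequences.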

The structure of the decoder does not differ greatly from that published by Vaswani et al. [32]. It is capable of generating long sequential outputs through a single forward pass. As shown in Figure 3, it is composed of a stack of two identical multi-head attention layers. The main difference lies in the way the predictions are generated, through a process called generative inference, which significantly speeds up long-range prediction. The decoder receives the following input:

$$X_{\mathrm{de}}^t = \mathrm{Concat}\left(X_{\mathrm{token}}^t, X_0^t\right) \in \mathbb{R}^{(L_{\mathrm{token}} + L_y) \times d_{\mathrm{model}}}$$

where $X_{\mathrm{token}}^t \in \mathbb{R}^{L_{\mathrm{token}} \times d_{\mathrm{model}}}$ is the start token (i.e., a slice of the input data) and $X_0^t \in \mathbb{R}^{L_y \times d_{\mathrm{model}}}$ is a placeholder for the target sequence (set to 0). Subsequently, masked multi-head attention is applied in the ProbSparse self-attention computation by setting the masked dot products to $-\infty$, which prevents each position from attending to subsequent positions. Finally, a fully connected layer produces the final output, whose dimension $d_y$ depends on whether univariate or multivariate prediction is performed.
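The construction of the decoder input and the causal mask can be sketched in NumPy as follows. Taking the last $L_{\mathrm{token}}$ rows of the (already embedded) input as the start token, the function names, and the use of full rather than ProbSparse attention in the masked step are simplifying assumptions.

```python
import numpy as np

def decoder_input(X_enc_in, L_token, L_y):
    """X_de = Concat(X_token, X_0): last L_token input steps followed by L_y zeros."""
    X_token = X_enc_in[-L_token:]                        # start token: slice of the input
    X_zero = np.zeros((L_y, X_enc_in.shape[1]))          # placeholder for the targets
    return np.concatenate([X_token, X_zero], axis=0)

def masked_attention_weights(Q, K):
    """Causal mask: scores for positions j > i are set to -inf before the softmax."""
    L = Q.shape[0]
    scores = Q @ K.T / np.sqrt(Q.shape[-1])
    future = np.triu(np.ones((L, L), dtype=bool), k=1)   # True above the diagonal
    scores = np.where(future, -np.inf, scores)
    scores = scores - scores.max(axis=1, keepdims=True)  # diagonal is finite, so max is finite
    w = np.exp(scores)                                   # exp(-inf) = 0 for masked entries
    return w / w.sum(axis=1, keepdims=True)
```

Because every masked weight is exactly zero, each output position depends only on the start token and on earlier placeholder positions, which is what allows all $L_y$ predictions to be produced in one pass.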

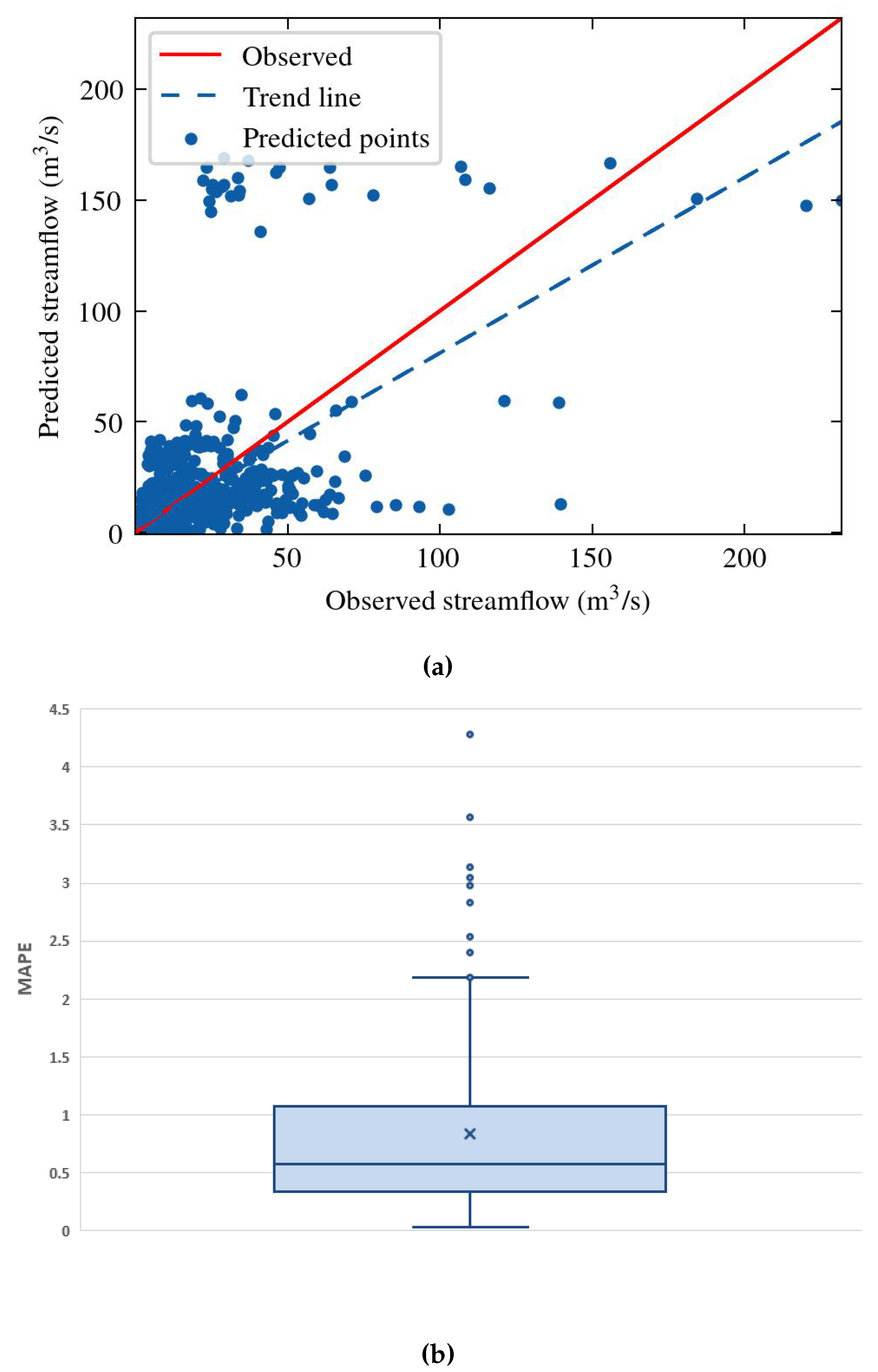

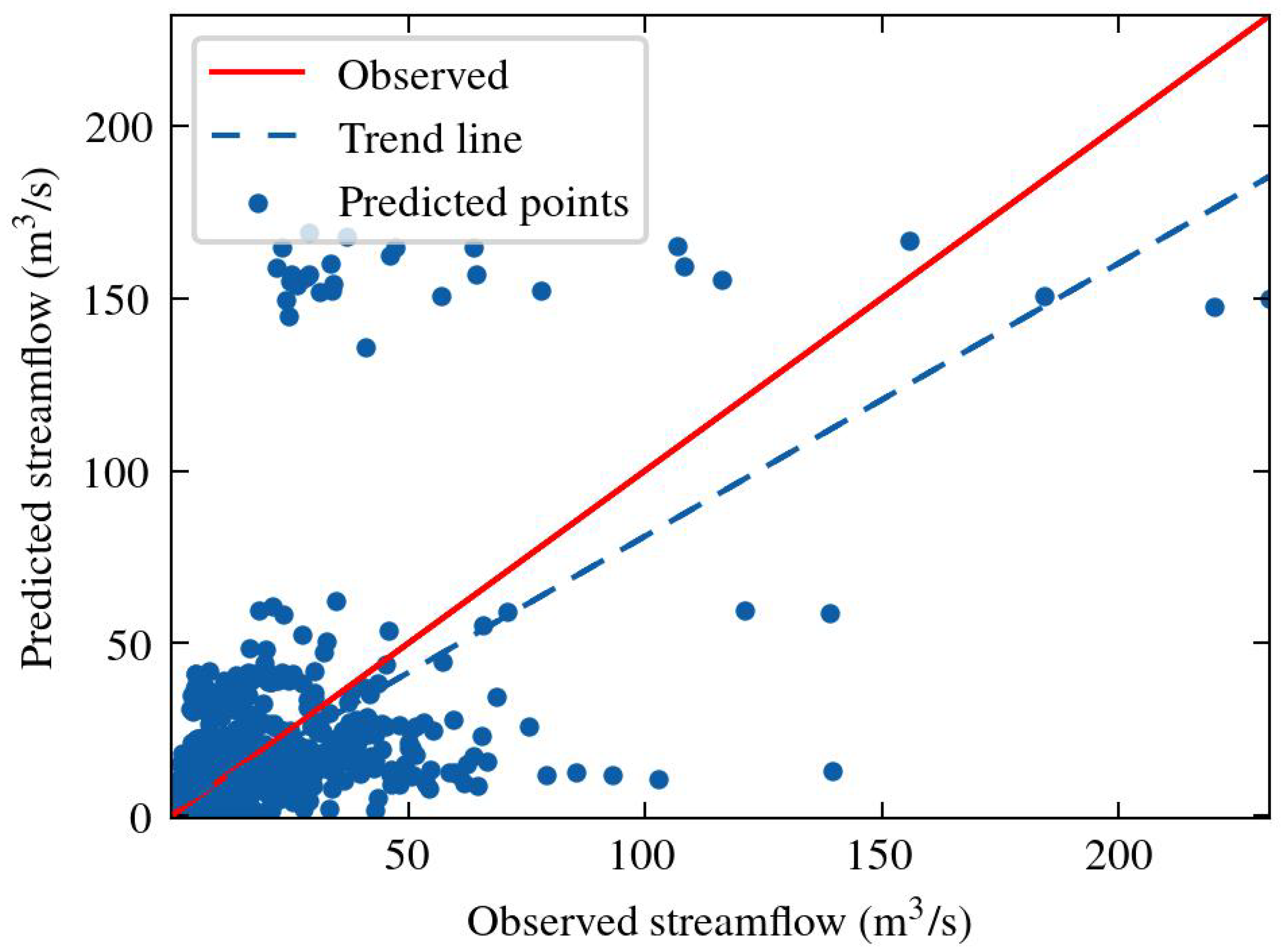

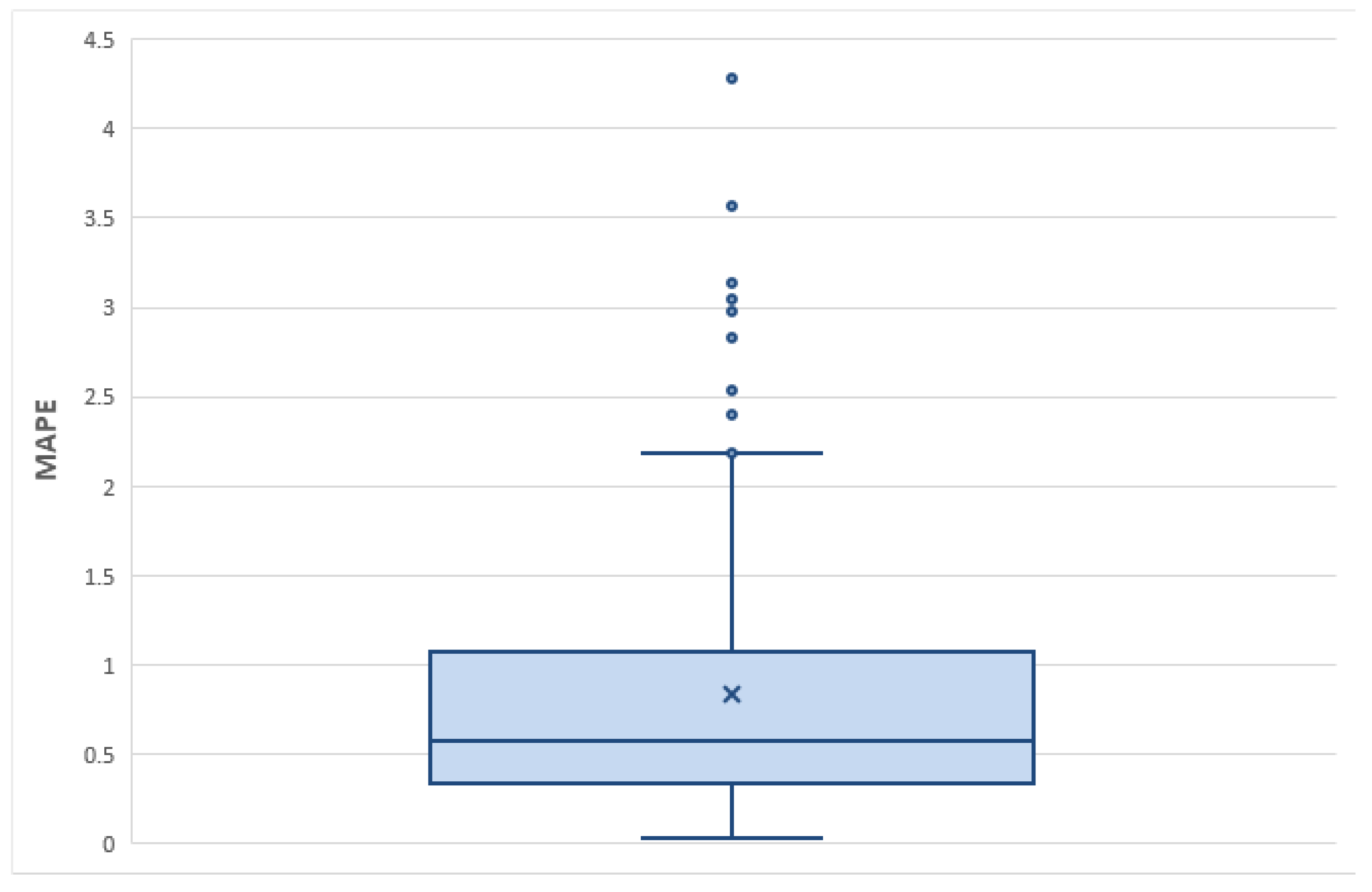

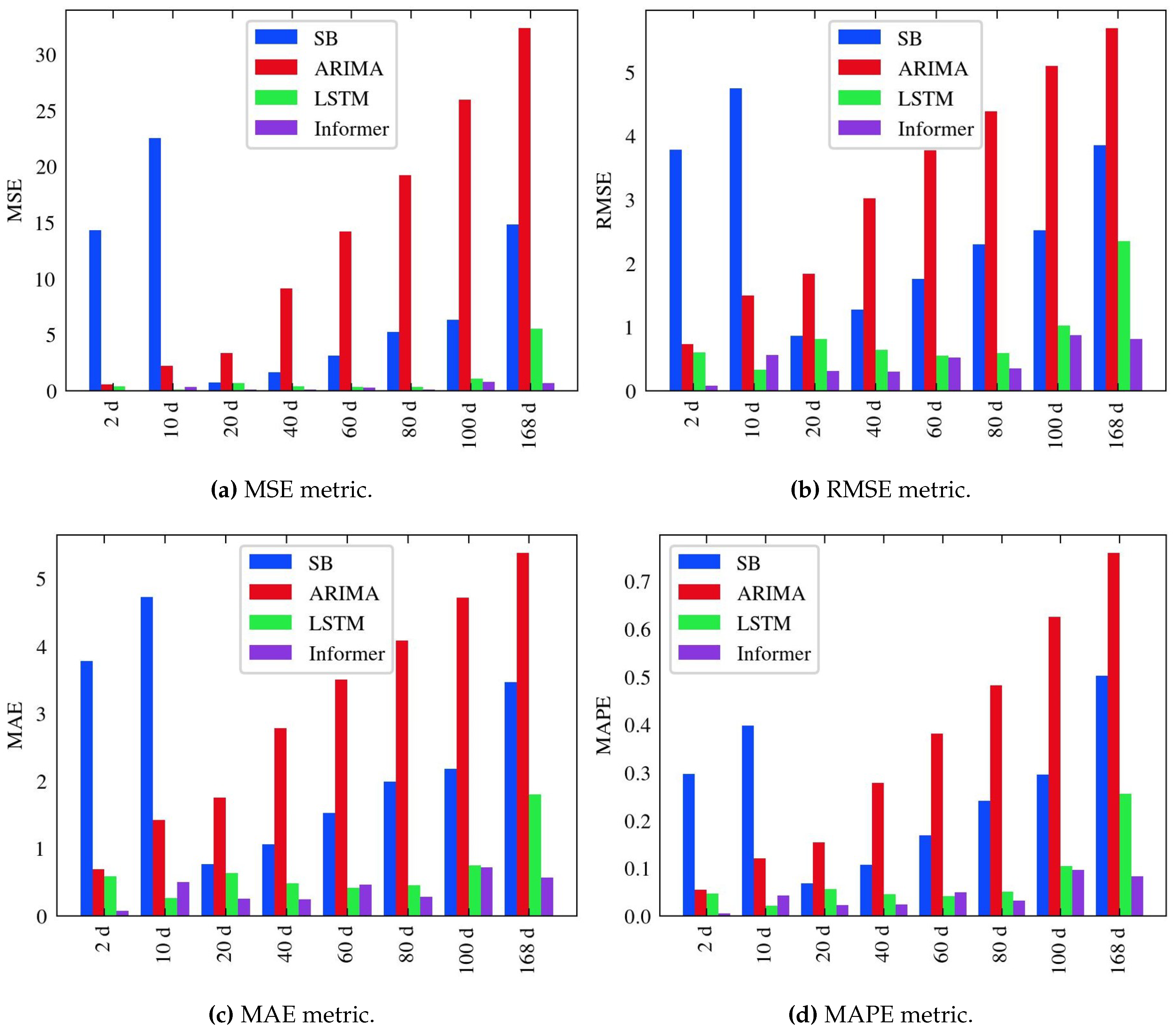

2.5. Evaluation Metrics

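To assess the performance of the different models, this paper uses the Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE) and Mean Absolute Percentage Error (MAPE) as evaluation metrics. In some cases, the coefficient of determination ($R^2$) was also calculated. The formulas for these metrics are provided below.

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$$

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|$$

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$

where $y_i$ is the $i$-th observed value, $\hat{y}_i$ is the corresponding forecasted value, $\bar{y}$ is the mean of the observed $y$ values, and $n$ is the total number of data points, $i = 1, \ldots, n$.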
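These metrics can be computed with a few lines of NumPy. The sketch below (hypothetical function name) expresses MAPE in percent and assumes that no observed value is zero, since MAPE is undefined in that case.

```python
import numpy as np

def evaluation_metrics(y_obs, y_pred):
    """MSE, RMSE, MAE, MAPE (%) and R^2 for observed vs. forecasted values."""
    y, y_hat = np.asarray(y_obs, float), np.asarray(y_pred, float)
    err = y - y_hat
    mse = np.mean(err ** 2)
    return {
        "MSE": mse,
        "RMSE": np.sqrt(mse),
        "MAE": np.mean(np.abs(err)),
        "MAPE": 100.0 * np.mean(np.abs(err / y)),   # undefined if any y == 0
        "R2": 1.0 - np.sum(err ** 2) / np.sum((y - y.mean()) ** 2),
    }
```

For example, observations $[1, 2, 4]$ and forecasts $[1, 3, 2]$ give MSE $= 5/3$, MAE $= 1$, MAPE $\approx 33.3\%$ and a negative $R^2$ ($-1/14$), i.e., a forecast worse than the observed mean.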
