Submitted: 15 August 2024

Posted: 20 August 2024

Abstract

Keywords:

1. Introduction

2. Background

2.1. AI in Medical Diagnostics

2.2. Gastrointestinal Abnormality Detection

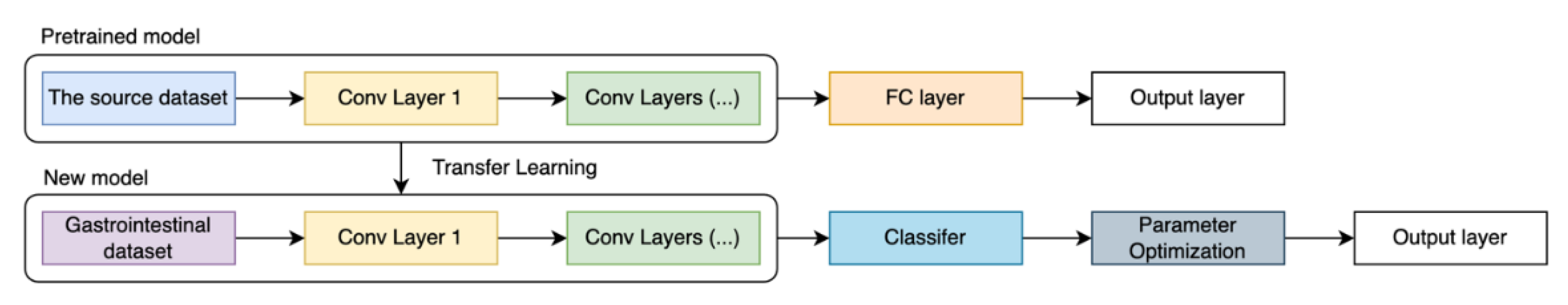

3. Methodology

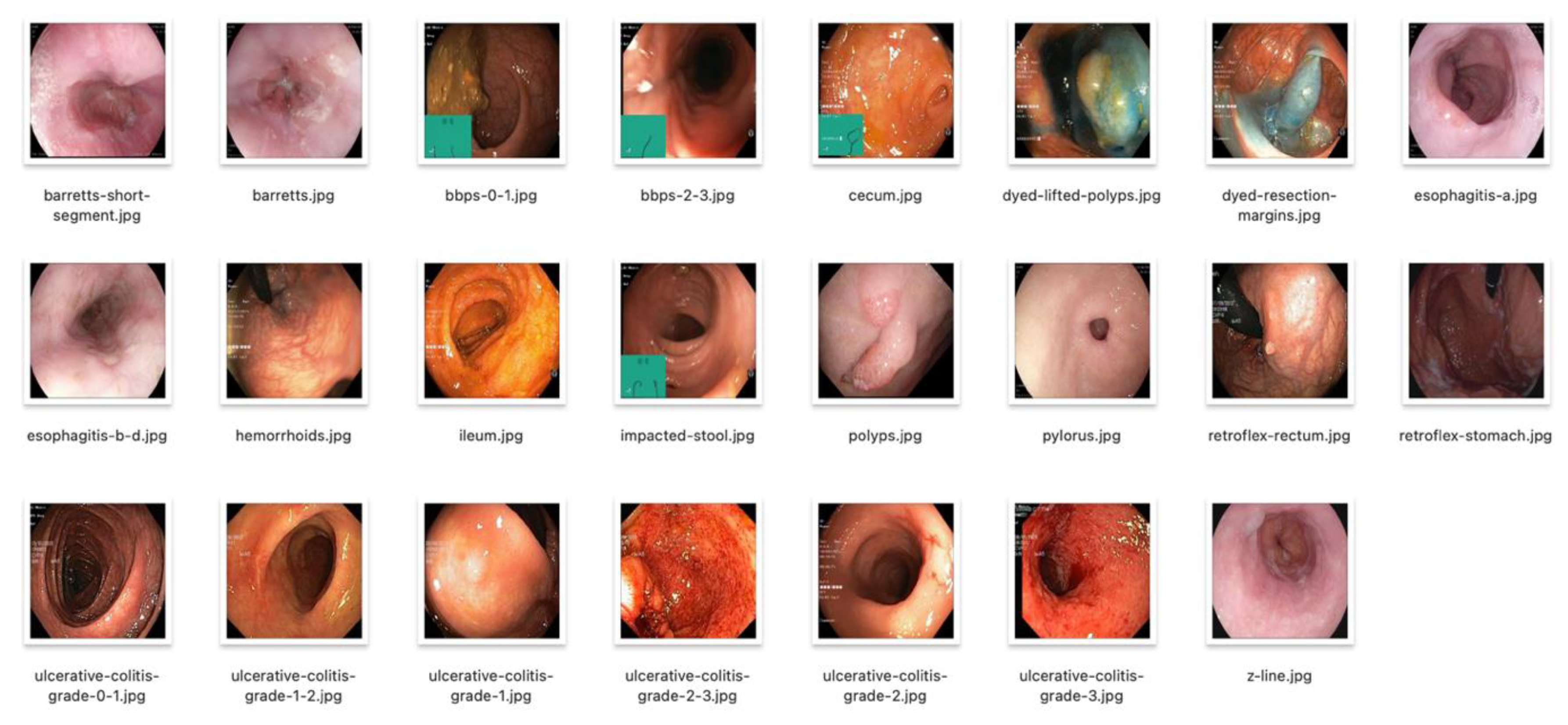

3.1. Data Collection

3.2. Data Preprocessing and Augmentation

3.3. Model Training

4. Experiment

4.1. Performance Metrics

4.2. Impact of Semi-Supervised Learning and Data Augmentation

5. Conclusions


| Class | YOLO Precision (%) | YOLO Recall (%) | YOLO F1 Score (%) | Faster R-CNN F1 Score (%) | SSD F1 Score (%) |
| --- | --- | --- | --- | --- | --- |
| barretts | 90.2 | 88.3 | 89.2 | 84.6 | 82.1 |
| bbps-0-1 | 85.6 | 83.7 | 84.6 | 80.2 | 77.8 |
| bbps-2-3 | 88.5 | 86.4 | 87.4 | 82.9 | 80.5 |
| cecum | 87.1 | 85.0 | 86.0 | 81.0 | 78.6 |
| dyed-lifted-polyps | 84.3 | 82.2 | 83.2 | 78.1 | 75.9 |
| esophagitis-a | 86.7 | 84.5 | 85.6 | 80.0 | 77.4 |
| esophagitis-b-d | 89.0 | 87.1 | 88.0 | 83.5 | 81.0 |
| hemorrhoids | 88.8 | 86.7 | 87.7 | 82.7 | 80.2 |
| Other Classes (average) | 86.5 | 84.3 | 85.4 | 80.1 | 77.6 |
| Overall Average | 87.5 | 85.4 | 86.4 | 81.5 | 79.0 |
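The F1 column in the table above can be related to the precision and recall columns through the standard harmonic-mean definition, F1 = 2PR / (P + R). The following is a minimal sketch, assuming that this standard definition is the one used for the reported scores; the function and variable names (`f1_score`, `yolo_rows`, `find_mismatches`) are illustrative and do not come from the paper.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %, reported F1 %) copied from the YOLO columns of the table.
yolo_rows = {
    "barretts": (90.2, 88.3, 89.2),
    "bbps-0-1": (85.6, 83.7, 84.6),
    "bbps-2-3": (88.5, 86.4, 87.4),
    "cecum": (87.1, 85.0, 86.0),
    "dyed-lifted-polyps": (84.3, 82.2, 83.2),
    "esophagitis-a": (86.7, 84.5, 85.6),
    "esophagitis-b-d": (89.0, 87.1, 88.0),
    "hemorrhoids": (88.8, 86.7, 87.7),
    "Other Classes (average)": (86.5, 84.3, 85.4),
    "Overall Average": (87.5, 85.4, 86.4),
}


def find_mismatches(rows, tol=0.05):
    """Return rows whose reported F1 differs from the recomputed F1 by more than tol.

    tol=0.05 is an assumed tolerance: half a unit in the last reported decimal.
    """
    mismatches = {}
    for name, (precision, recall, reported_f1) in rows.items():
        recomputed = f1_score(precision, recall)
        if abs(recomputed - reported_f1) > tol:
            mismatches[name] = (reported_f1, round(recomputed, 2))
    return mismatches


if __name__ == "__main__":
    for name, (precision, recall, _) in yolo_rows.items():
        print(f"{name}: recomputed F1 = {f1_score(precision, recall):.1f}")
    print("rows outside tolerance:", find_mismatches(yolo_rows))
```

Under that assumption, every reported YOLO F1 value in the table agrees with the value recomputed from its precision and recall to within rounding of the last reported decimal.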

| Model Configuration | Precision (%) | Recall (%) | F1 Score (%) |
| --- | --- | --- | --- |
| Baseline YOLO (no augmentation) | 82.0 | 79.5 | 80.7 |
| YOLO + Data Augmentation | 84.6 | 82.4 | 83.5 |
| YOLO + Semi-supervised Learning | 85.7 | 83.6 | 84.6 |
| YOLO + Augmentation + Semi-supervised | 87.5 | 85.4 | 86.4 |
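The contribution of each configuration in the table above can be read as an F1 gain over the baseline. The sketch below computes those gains in percentage points directly from the tabulated values; the names `ablation` and `f1_gains` are hypothetical helpers introduced here for illustration, not part of the paper's code.

```python
# (precision %, recall %, F1 %) copied from the configuration table.
ablation = {
    "Baseline YOLO (no augmentation)": (82.0, 79.5, 80.7),
    "YOLO + Data Augmentation": (84.6, 82.4, 83.5),
    "YOLO + Semi-supervised Learning": (85.7, 83.6, 84.6),
    "YOLO + Augmentation + Semi-supervised": (87.5, 85.4, 86.4),
}


def f1_gains(results, baseline_name):
    """Return the F1 gain (percentage points) of each configuration over the baseline."""
    baseline_f1 = results[baseline_name][2]
    return {
        name: round(f1 - baseline_f1, 1)
        for name, (_, _, f1) in results.items()
        if name != baseline_name
    }


if __name__ == "__main__":
    gains = f1_gains(ablation, "Baseline YOLO (no augmentation)")
    for name, gain in gains.items():
        print(f"{name}: +{gain} F1 points")
```

On the tabulated values this gives gains of 2.8, 3.9, and 5.7 points for augmentation, semi-supervised learning, and their combination, respectively. The combined gain is smaller than the sum of the two individual gains (6.7 points), which may indicate that the two techniques provide partly overlapping benefits.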

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).