1. Introduction

The intensification of global climate change has led to the widespread, frequent, recurrent, and concurrent occurrence of extreme weather disasters in various regions [1,2,3]. In recent years, the losses and impacts of natural disasters caused by extreme weather events have also intensified [4,5,6]. According to the "State of the Climate in Asia 2023" report released by the World Meteorological Organization, Asia experienced the highest incidence of disasters worldwide in 2023, primarily due to weather, climate, and hydrological factors. Floods and storms accounted for over 80% of these incidents, with floods and rainstorms causing the highest number of casualties and economic losses. Specifically, more than 60% of all disaster-related fatalities were caused by flooding. Urban areas, in particular, suffer significant disruptions from sudden, extreme rainstorms, which cause waterlogging and severely affect road traffic. Rapid and precise identification of the spatial location, extent, and severity of the damage is crucial for enhancing the efficiency of post-disaster recovery efforts. Such measures are vitally important for establishing lifelines during disasters and reducing their overall impact [7,8]. As critical components of regional economic infrastructure, transportation roads play a pivotal role in the spread of disaster impacts. Consequently, the timely dissemination of traffic and road disaster assessment results is essential for effective emergency response and robust disaster risk management [9,10].

With the rapid advancement of remote sensing technology, remote sensing methods now enable the large-scale and rapid extraction of disaster-related information at low cost and with dynamic detection capabilities [11,12]. Recent studies have focused on delineating flood inundation extents, primarily utilizing optical remote sensing images and Synthetic Aperture Radar (SAR) images for flood feature extraction [13,14,15]. Optical remote sensing offers direct imaging, better image quality, and abundant spectral data. Using spectral indices to identify areas affected by flooding and sediment deposition has yielded notable results [16,17,18]. However, optical remote sensing data are highly vulnerable to cloud cover and adverse weather conditions, which compromise the accurate extraction of disaster information. Persistent cloud cover can also introduce significant errors in delineating inundation extents [19,20]. Microwave remote sensing can penetrate clouds, snow, and fog and is therefore operable under nearly all weather conditions. Flood information extraction methods using SAR imagery are typically categorized into traditional and deep learning approaches [21,22]. Traditional methods are notably rapid, catering to the urgent needs following disasters, but are less effective in complex terrain, where they are often hampered by mountain shadows and sensor noise. Conversely, deep learning methods have significantly enhanced extraction accuracy, mitigating the issues associated with spectral interference from terrain and sensor anomalies. Nonetheless, the performance of deep learning models depends largely on ample training samples, and such models require further refinement and optimization, especially when they use remote sensing data from diverse sources. The application of existing deep learning models for rapid flood information extraction following brief, extreme weather-induced rainfall remains a subject for detailed investigation. Therefore, developing a rapid flood information extraction system using SAR image data is a primary objective of this study.

In the era of digital intelligence, relying on remote sensing alone for urban natural disaster emergency response no longer suffices for comprehensive collection and rapid analysis of extreme natural disaster information. The advent of big data has revolutionized disaster management approaches and paradigms, introducing new research methodologies and strategies for urban flood prevention and governance. Social media crowdsourcing data, serving as an additional data source, offers a communication channel for reporting extreme natural disaster events. Its volume and spatial density often surpass those of traditional sensors [23,24]. Social media can aggregate information about sudden natural disasters in near real time, eliminating the need to deploy reconnaissance equipment or personnel. It provides timely disaster and geographic distribution information, complementing the monitoring capabilities of Earth observation satellites. On the one hand, monitoring social media users' attention and emotional responses to sudden natural disasters provides valuable insights for emergency decision-making and public opinion management. On the other hand, the immediacy of social media data allows for a real-time understanding of conditions in disaster-affected areas, supporting emergency assessments and aiding disaster response decision-making. However, despite these advantages, achieving accurate risk assessment for sudden natural disasters from social media data remains considerably more challenging than from Earth observation data.

Current studies on the application of Natural Language Processing (NLP) to natural disasters primarily focus on emotional analysis, sensitivity mapping, post-disaster recovery, and loss assessment. Previous studies [

25] have demonstrated the key role of social media in earthquake damage assessment and have defined a text-based damage assessment scale to underscore the rapid assessment capabilities of social media data. Researchers frequently employ text mining techniques to derive actionable insights during disasters. For instance, Yuan et al. (2018) created an index dictionary through semantic analysis to pinpoint damage-related tweets during Hurricane Matthew in Florida, illustrating the feasibility of using social media data to identify severely affected areas at the county level [

26]. Another example is which utilized social media data for disaster loss evaluation, encompassing data preprocessing, fine-grained topic extraction, and quantitative damage estimation, and conducted correlation analysis at the urban level to link various themes with disaster losses [

27]. Inspired by these findings, researchers have identified a strong correlation between social media activities and disaster damage [

25,

26,

27]. Moreover, disaster loss assessment using social media information primarily involves text cleaning and processing of big data. Unlike news information classification, text classification methods in a disaster context face challenges due to the small amount of effective information, imbalanced sample data, and unclear differentiation scales between loss levels [

8,

30,

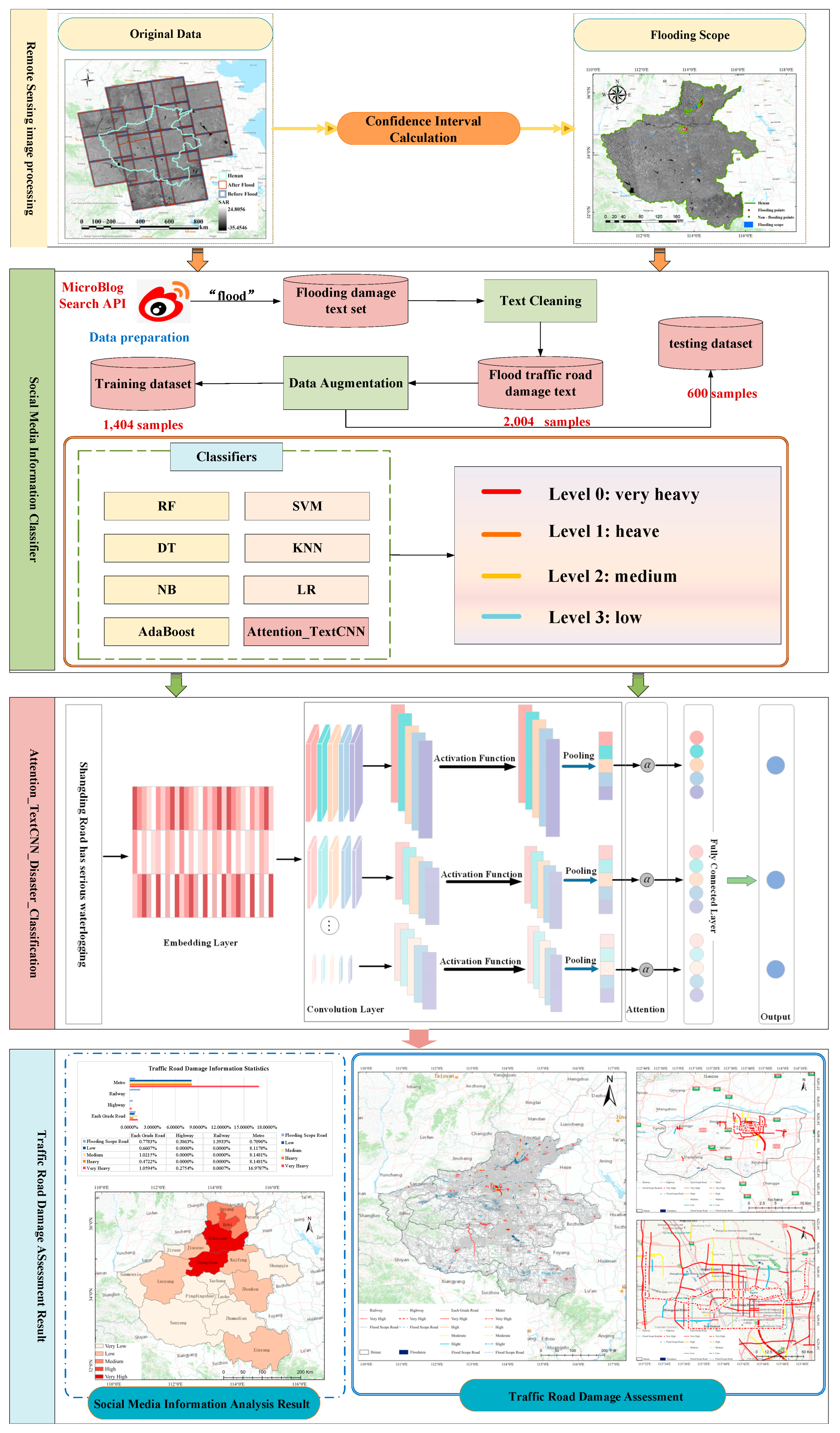

31]. Motivated by these insights, this paper aims to assess emergency flood traffic road damage by integrating analyses of social media data, remote sensing, and geographic information. By leveraging multi-source heterogeneous data, this approach aims to improve the accuracy of traffic road loss assessments in sudden natural disasters, enabling emergency personnel to devise more effective disaster relief strategies. In this study, damage assessment of the transportation network is completed using social media data, remote sensing image data, and basic geographic information. The main technical process is illustrated in

Figure 1. This work contributes in two major ways:

(1) A confidence interval-based method for identifying the flood impact scope in SAR images is proposed. Constraining the threshold with a confidence interval provides a scientific and reliable technique, enhancing the credibility of the model's predictions and results.

(2) A quantitative table for assessing road traffic loss based on social media disaster information was established. Then, a road traffic risk classification model using social media data was developed. This model adds attention mechanisms and improves prediction performance, accuracy, and stability by optimizing the size of convolutional kernels and the number of layers.

2. Study Case and Data Collection

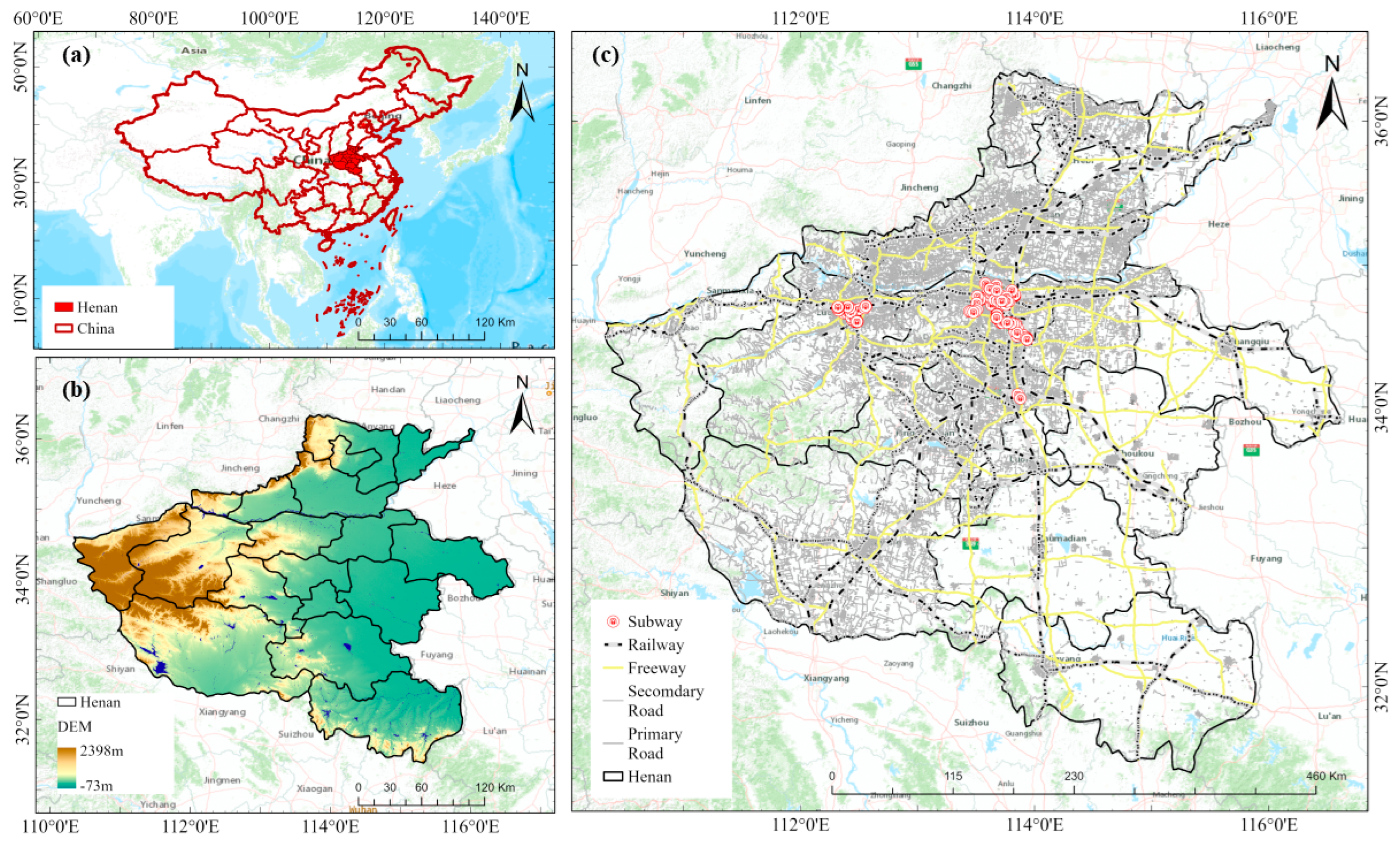

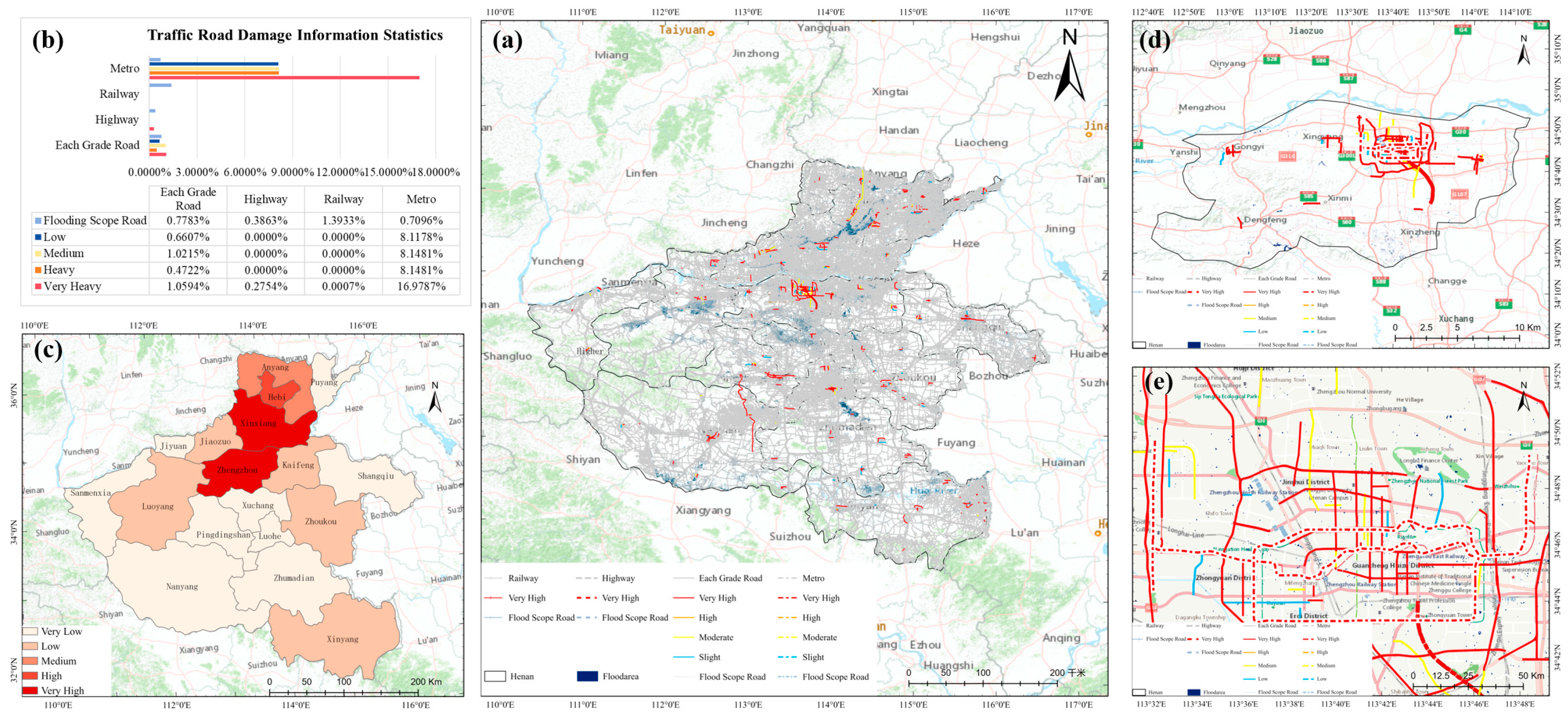

From 08:00 on July 20, 2021, to 06:00 on July 21, 2021, a severe rainstorm occurred in the north-central part of Henan Province, with extremely heavy rainfall recorded in areas of Zhengzhou, Xinxiang, Kaifeng, and others. Between 16:00 and 17:00 on July 20, the maximum hourly rainfall in the urban area of Zhengzhou reached 120-201.9 mm. The daily rainfall recorded at ten national meteorological observation stations in Zhengzhou, Xinxiang, and Kaifeng surpassed historical records. The intense rainfall affected approximately 13.9128 million people in Henan Province, resulting in 302 deaths and 50 individuals missing due to the disaster.

This study utilizes the Sina Weibo social platform to extract information on the rainstorm disaster in Henan. Weibo allows users to access information through various mediums including web, email, apps, instant messaging, SMS, PCs, and mobile devices, facilitating real-time sharing and transmission of information in text, images, videos, and other multimedia formats. Social media platforms, characterized by their rapid response, extensive coverage, and significant impact, are critical in managing large-scale sudden natural disasters. Scientific analysis of post-disaster social media data can effectively mitigate losses and social hazards, providing essential data for emergency management departments to understand the needs of affected areas. The data collection period spanned from July 19, 2021, to July 24, 2021, across Weibo and other platforms, yielding a total of 83,030 valid entries, including basic geographic information from Henan Province, as illustrated in Figure 2.

3. Methodology

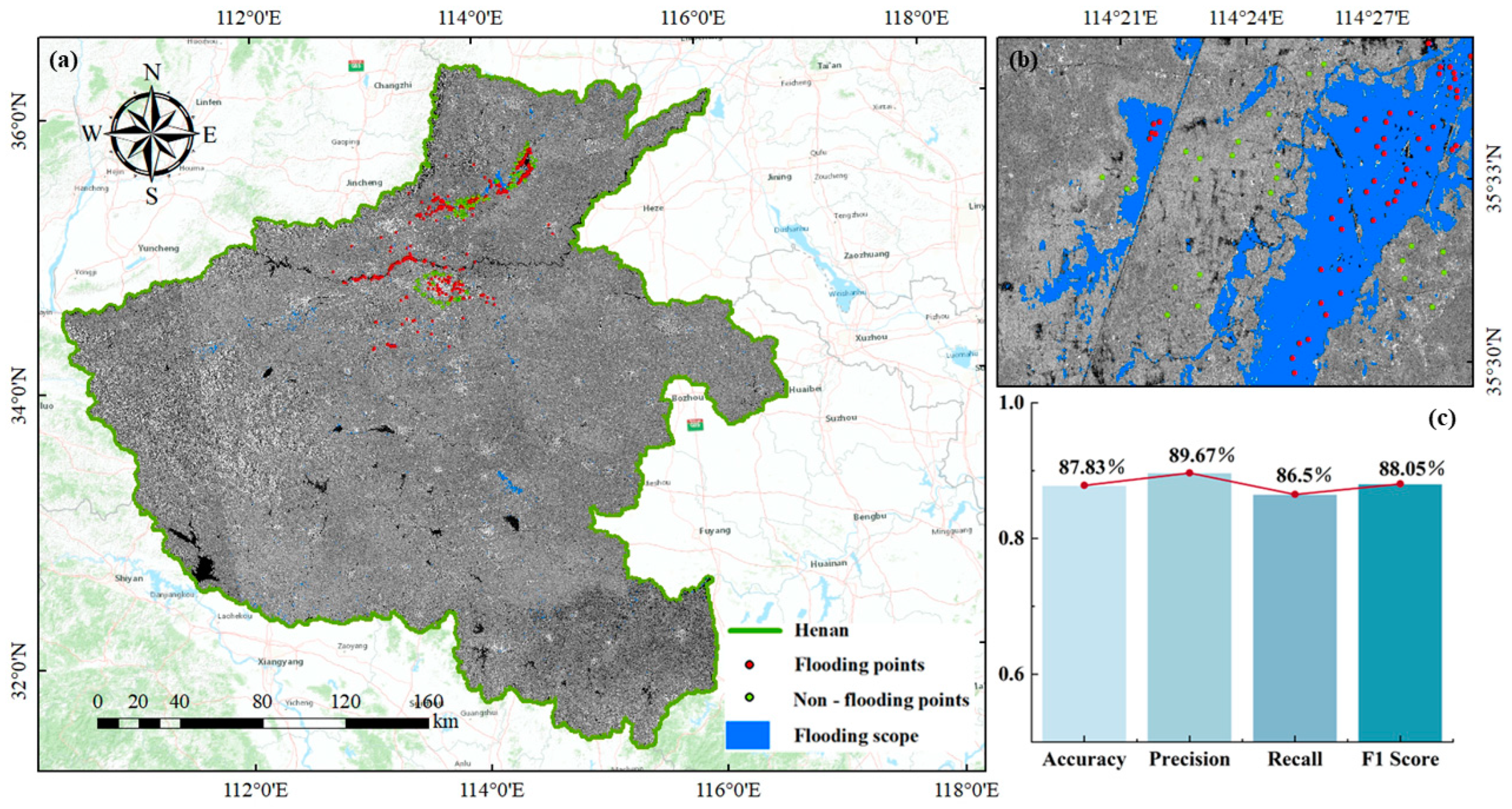

3.1. Flood Impact Scope Identification of SAR Images Based on Confidence Interval

For remote sensing image data, this study employed Sentinel-1 synthetic aperture radar (SAR) satellite imagery from the European Space Agency to identify areas affected by waterlogging. The analysis used VH-polarized, high-resolution (HR) Ground Range Detected (GRD) images acquired in Interferometric Wide (IW) swath mode, covering the pre-disaster period from July 1, 2021, to July 19, 2021, and the disaster period from July 20, 2021, to August 10, 2021. On the Google Earth Engine (GEE) platform, the extent of waterlogging was extracted from the Sentinel-1 GRD data after preprocessing steps including orbit correction, ARD boundary noise removal, thermal noise removal, radiometric correction, terrain correction, and dB conversion. Smoothing filters were applied to the SAR images to reduce speckle, and the post-disaster images were differenced against the pre-disaster ones to create a difference image that highlights changes due to flooding. The flood threshold was determined by manually selecting sample points, constrained by confidence intervals.
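The smoothing and differencing steps described above can be sketched in plain Python. This is a minimal illustration, not the GEE workflow used in the study: the source does not name the smoothing filter or the exact change measure, so a mean (box) filter and a simple post-minus-pre difference in dB are assumed here, and the function names `box_filter` and `difference_image` are hypothetical.

```python
def box_filter(image, radius=1):
    """Mean filter over a (2*radius+1)^2 window, clipped at the image edges.

    Assumption: the study only says "smoothing filters"; a box filter is
    used here purely as a stand-in for speckle reduction.
    """
    rows, cols = len(image), len(image[0])
    smoothed = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [
                image[rr][cc]
                for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                for cc in range(max(0, c - radius), min(cols, c + radius + 1))
            ]
            smoothed[r][c] = sum(window) / len(window)
    return smoothed


def difference_image(pre, post):
    """Per-pixel change (post minus pre); both inputs are assumed to be in dB."""
    return [
        [post_val - pre_val for pre_val, post_val in zip(pre_row, post_row)]
        for pre_row, post_row in zip(pre, post)
    ]


# Hypothetical VH backscatter tiles in dB (values are illustrative only).
pre_disaster = [[-12.0, -11.5, -12.2], [-11.8, -12.1, -11.9]]
post_disaster = [[-19.0, -18.4, -12.0], [-18.7, -19.2, -12.1]]

change = difference_image(box_filter(pre_disaster), box_filter(post_disaster))
```

Open water typically lowers SAR backscatter, so strongly negative values in `change` are the candidates that a threshold would later separate from unchanged land.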

A confidence interval is a commonly used method of interval estimation. For a dataset, let $\Omega$ denote the observation object, $W$ the set of all possible observation results, and $X$ the actual observation value; $X$ is then a random variable defined on $\Omega$ with values in $W$. The confidence interval is defined by the functions $u(\cdot)$ and $v(\cdot)$: for an observation value $X = x$, the confidence interval is $(u(x), v(x))$. If the true value is $w$, then the confidence level is the probability $c$, expressed mathematically as:

$$c = \Pr\big(u(X) < w < v(X)\big) = \Pr(U < w < V) \qquad (1)$$

where $U = u(X)$ and $V = v(X)$ are statistics, making the confidence interval a random interval $(U, V)$. For instance, constructing intervals at 95% confidence implies that, of 100 intervals built from repeated samples, about 95 are expected to contain the true value. The confidence level is typically denoted as $1-\alpha$, where $\alpha$ is the significance level; for $\alpha = 0.05$, the confidence level is 0.95, i.e., 95%. This is mathematically expressed as:

$$P\big(\mu \in [\text{low}, \text{high}]\big) = 1 - \alpha \qquad (2)$$

Here, $\mu$ represents the true value being estimated, and $P(\mu \in [\text{low}, \text{high}])$ denotes the probability that the true value falls within the specified interval.

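Assuming that the data follow a normal distribution, the symmetry of the distribution allows the confidence interval to be expressed as $[\bar{x} - c, \bar{x} + c]$, where $\bar{x}$ is the sample mean and $c$ is the critical value to be calculated. Applying this to formula (2) with $c = z_{\alpha/2}\,\delta/\sqrt{n}$ gives:

$$P\left(\bar{x} - z_{\alpha/2}\frac{\delta}{\sqrt{n}} \le \mu \le \bar{x} + z_{\alpha/2}\frac{\delta}{\sqrt{n}}\right) = 1 - \alpha$$

In the formula, $\bar{x}$ is the sample mean, $\delta$ is the sample standard deviation, $n$ is the sample size, $z_{\alpha/2}$ is the standard normal critical value, $\alpha$ is the significance level (so that $1-\alpha$ is the confidence level), and $P$ denotes the probability, i.e., the corresponding proportion in the statistical sample.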
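As a worked illustration of the interval above, the following sketch computes a normal-based confidence interval from a handful of sample values and uses its upper bound as a flood threshold. The sample values, the function names, and the rule "a pixel is flooded when its change value is at or below the threshold" are assumptions made for illustration; the source does not state how the final threshold is picked inside the interval.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def confidence_interval(samples, alpha=0.05):
    """Normal-based (1 - alpha) confidence interval for the sample mean."""
    n = len(samples)
    if n < 2:
        raise ValueError("at least two sample points are required")
    x_bar = mean(samples)
    delta = stdev(samples)                    # sample standard deviation
    z = NormalDist().inv_cdf(1 - alpha / 2)   # critical value z_{alpha/2}
    c = z * delta / sqrt(n)                   # half-width of the interval
    return x_bar - c, x_bar + c


def flood_mask(diff_image, threshold):
    """Assumed rule: flooded where the change value is <= threshold."""
    return [[value <= threshold for value in row] for row in diff_image]


# Hypothetical change values (dB) at manually selected flooded sample points.
flood_samples = [-6.1, -5.4, -7.0, -6.3, -5.8, -6.6, -5.9, -6.2]
low, high = confidence_interval(flood_samples, alpha=0.05)

# Hypothetical 2x2 difference image; the upper bound serves as the threshold.
mask = flood_mask([[-6.5, -1.2], [-0.3, -7.1]], threshold=high)
```

Choosing the upper bound makes the mask more inclusive than choosing the lower bound; either choice stays within the interval that constrains the manually selected threshold.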

3.2. Rapid Loss Assessment of Road Traffic in Flood Disasters Based on Social Media Data

3.2.1. Social Media Information Preprocessing

In disaster loss analysis using social media data, data cleaning is an essential component of preprocessing, significantly influencing both efficiency and outcomes. The original text often includes numerous non-essential characters (such as punctuation and spaces); these were removed, retaining only Chinese characters. The text was then segmented using Jieba's precise mode, which divides the text into the most accurate word units for further analysis. To enhance text analysis quality, a stop word list was employed to filter the segmentation results. This list includes common conjunctions and particles that add little analytical value, such as "we", "de", and "ma". The processed segments were then concatenated into a single string, separated by spaces, forming the preliminary disaster assessment text corpus. The initial dataset was also deduplicated. Given two texts, S1 and S2, Word2Vec was used to encode them as feature vectors, and the MinHash algorithm was then applied to these encodings to deduplicate the social media text data. This method measures the similarity between texts and efficiently purifies a large volume of collected social media data, thereby enhancing the accuracy of disaster data analysis.
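The cleaning and deduplication steps can be sketched with the standard library alone. Two simplifications are assumed: word segmentation (Jieba's precise mode in the study) is treated as an upstream step and is not reproduced, and MinHash is applied to character bigrams rather than to Word2Vec encodings. The function names and the 0.8 similarity threshold are hypothetical.

```python
import hashlib
import re

NON_CHINESE = re.compile(r"[^\u4e00-\u9fff]")


def keep_chinese(text):
    """Drop everything except CJK Unified Ideographs."""
    return NON_CHINESE.sub("", text)


def filter_stop_words(tokens, stop_words):
    """Remove stop words from an already segmented token list."""
    return [tok for tok in tokens if tok not in stop_words]


def shingles(text, k=2):
    """Character k-grams; assumption: used here instead of Word2Vec features."""
    return {text[i:i + k] for i in range(max(1, len(text) - k + 1))}


def minhash_signature(items, num_hashes=64):
    """One minimum per seeded hash function (seed is mixed into the input)."""
    signature = []
    for seed in range(num_hashes):
        signature.append(min(
            int.from_bytes(
                hashlib.sha256(f"{seed}:{item}".encode("utf-8")).digest()[:8],
                "big",
            )
            for item in items
        ))
    return signature


def estimated_jaccard(sig_a, sig_b):
    """Fraction of matching signature slots approximates Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def deduplicate(texts, threshold=0.8):
    """Keep a text only if it is not a near-duplicate of one already kept."""
    kept, signatures = [], []
    for text in texts:
        sig = minhash_signature(shingles(text))
        if all(estimated_jaccard(sig, other) < threshold for other in signatures):
            kept.append(text)
            signatures.append(sig)
    return kept


# Illustrative posts: a repeated report and an unrelated one.
posts = ["京广路隧道积水严重", "京广路隧道积水严重", "地铁五号线停运"]
unique_posts = deduplicate([keep_chinese(p) for p in posts])
```

The pairwise comparison in `deduplicate` is quadratic in the number of kept texts; for tens of thousands of posts, a banded locality-sensitive hashing index over the same signatures would be the usual next step.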

Disaster loss analysis via social media is essentially a multi-class text classification task, often challenged by small sample sizes and class imbalance. To balance the data across categories and maintain classification accuracy, the study applied data augmentation. However, due to the specific nature of disaster information on social media, random deletion may remove critical information, such as specific road names. Similarly, back-translation might introduce semantic biases and compromise subsequent road information matching. To address these issues, this study designated road names as stop words for augmentation, so that road names are never replaced with synonyms and essential road network information is preserved. On this basis, a synonym substitution method was used for data augmentation, with the Harbin Institute of Technology Synonym Word Forest as the synonym library. Additionally, random sampling was used to augment each category of the original text. If the same text was selected multiple times, synonym substitution was applied to prevent redundancy in the augmented data. Ultimately, the augmented text was merged with the original corpus to create the final social media disaster information dataset.
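A minimal sketch of synonym substitution with protected road names follows. The synonym dictionary is a toy stand-in, not an excerpt from the Harbin Institute of Technology Synonym Word Forest, and the function name, the replacement probability, and the example tokens are all hypothetical.

```python
import random


def augment(tokens, synonyms, protected, rng, replace_prob=0.3):
    """Replace unprotected tokens with a random synonym.

    tokens:    segmented words of one post
    synonyms:  dict mapping a word to a list of substitutes (toy data here)
    protected: set of words that must never be replaced (road names)
    rng:       random.Random instance, passed in for reproducibility
    """
    augmented = []
    for tok in tokens:
        candidates = synonyms.get(tok)
        if tok not in protected and candidates and rng.random() < replace_prob:
            augmented.append(rng.choice(candidates))
        else:
            augmented.append(tok)
    return augmented


# Toy synonym table; the road name has an entry on purpose, to show it is skipped.
toy_synonyms = {"积水": ["淹水"], "严重": ["厉害"], "京广路": ["某路"]}
road_names = {"京广路"}

rng = random.Random(0)
sample = augment(["京广路", "积水", "严重"], toy_synonyms, road_names, rng,
                 replace_prob=1.0)
```

With `replace_prob=1.0` every unprotected token that has a synonym is replaced, which makes the protection of the road name easy to see; a lower probability yields milder variants of the same post.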

3.2.2. Damage Scales and Text Labeling

To construct a rapid loss assessment model for road traffic in flood disasters using social media data, the first step is to define the loss levels derived from social media data. This study references emergency response plans for highway traffic emergencies to create a quantitative assessment table for road traffic losses based on social media information, as depicted in Table 1. The disaster levels of road networks are categorized into four grades: very serious, severe, relatively serious, and minor (Levels 0 to 3). Because some road disaster situations may not be reported on social media, areas identified as waterlogged in remote sensing images are used to supplement the assessment of the road network disaster status (Level 4). The classifications are detailed as follows:

Level 0: Represents cases of significant impact, such as roads that have been submerged and require closure.

Level 1: Denotes heavy damage, characterized by deep water accumulation and resulting traffic congestion.

Level 2: Corresponds to moderate damage, for instance, partial waterlogging that leads to reduced driving speeds.

Level 3: Indicates slight damage, where there is virtually no standing water, and traffic flows normally.

Level 4: Represents a submerged state as identified in remote sensing images.
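One possible way to combine the two sources into a single road label is sketched below. The fusion rule (a social media level takes precedence, and Level 4 is assigned only to roads that have no report but fall inside the SAR flood extent) is an assumption for illustration; the source does not spell out the rule, and the names used are hypothetical.

```python
# Grades reported from social media text (Levels 0 to 3).
SOCIAL_MEDIA_LEVELS = {
    0: "very serious",        # road submerged, closure required
    1: "severe",              # deep water, traffic congestion
    2: "relatively serious",  # partial waterlogging, reduced speed
    3: "minor",               # virtually no standing water
}
REMOTE_SENSING_LEVEL = 4      # submerged according to the SAR flood extent


def road_level(social_level, flooded_in_sar):
    """Assumed fusion rule: prefer the social media level when one exists."""
    if social_level is not None:
        if social_level not in SOCIAL_MEDIA_LEVELS:
            raise ValueError(f"unknown social media level: {social_level}")
        return social_level
    if flooded_in_sar:
        return REMOTE_SENSING_LEVEL
    return None  # no evidence from either source


# A road without any post that lies inside the flood extent.
unreported_road = road_level(None, flooded_in_sar=True)
```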

3.2.3. Traffic Risk Classification Method Based on Social Media Information Data

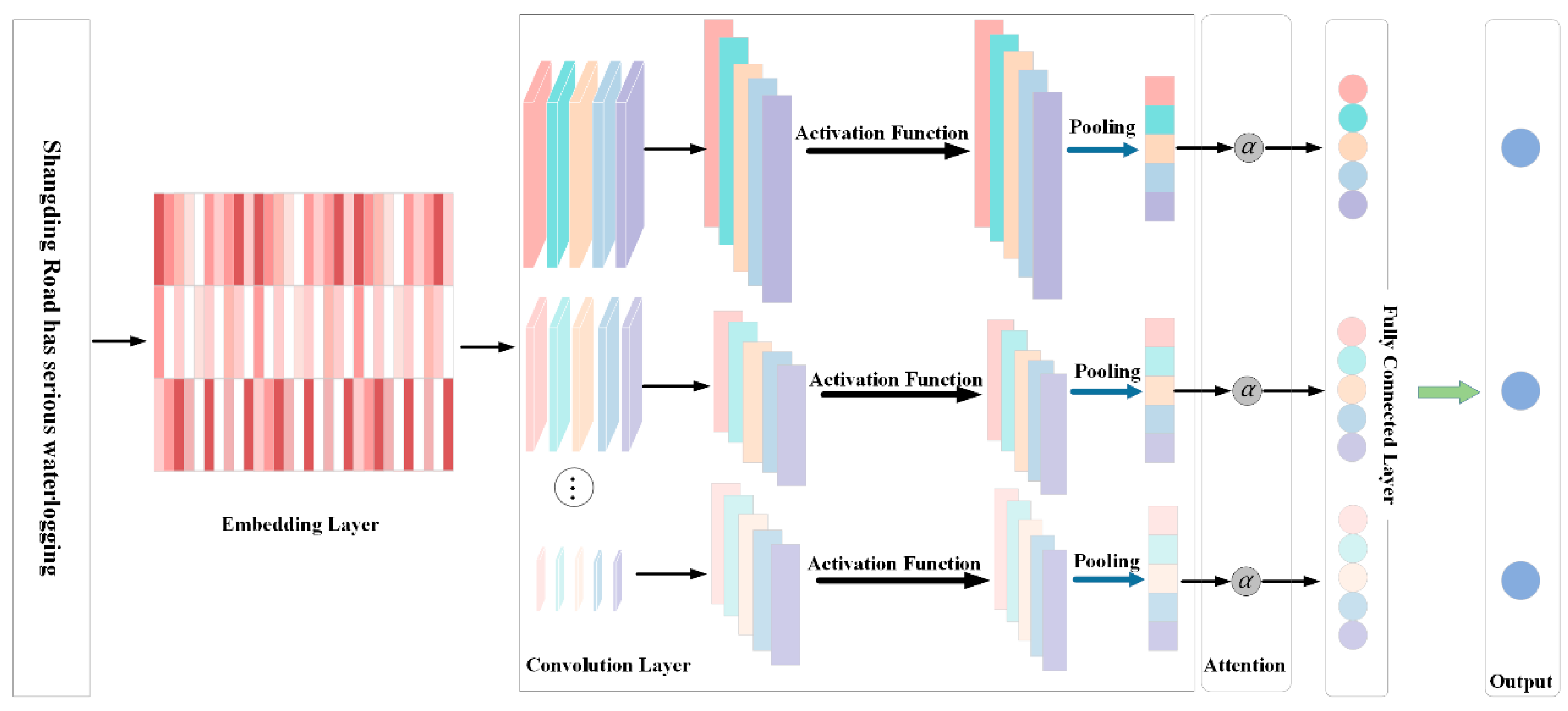

In this study, traffic road classification using social media information encompasses four categories: Level 0, Level 1, Level 2, and Level 3. This study introduces a text classification model that integrates convolutional neural networks with attention mechanisms, termed the TextCNN-Attention Disaster Classification model. The model is designed to exploit both local features and global correlation information in text data, enhancing the efficiency and generalization capability of text classification. After each word in the social media text is converted into a vector, the TextCNN-Attention Disaster Classification network classifies the traffic damage information. This network comprises an embedding layer, a convolutional neural network module, an attention mechanism module, and a fully connected layer. The structure of the network is illustrated in Figure 3:

Word Embedding Layer: Each word in the input text is transformed into a vector through spatial mapping, creating a low-dimensional representation for each word. These vector representations are concatenated to form a two-dimensional matrix. Let $x_i \in \mathbb{R}^k$ be the word vector representing the $i$th word in the sentence; a sentence of $n$ words is then described as:

$$x_{1:n} = x_1 \oplus x_2 \oplus \cdots \oplus x_n$$

where $\oplus$ represents concatenation; in general, $x_{i:i+j}$ denotes the concatenation of words $x_i, x_{i+1}, \ldots, x_{i+j}$.

Convolutional module: This module extracts local features from the text. Each convolutional block includes a one-dimensional convolutional layer, a ReLU activation function, and a max pooling layer. The size and number of convolution kernels are adjusted to control the receptive field and the number of features extracted by the convolutional block. Convolutional layers extract features between words, representing local sentence features. The ReLU activation function allows the network to capture nonlinear relationships in the input data, while the max pooling layer reduces feature dimensionality and highlights the most significant features. A convolution operation involves a convolution kernel $w \in \mathbb{R}^{h \times k}$, which is applied to a window of size $h \times k$ (where $h$ is the number of words and $k$ is the encoding dimension) to generate a new feature. For example, feature $c_i$ is generated from the words within the window $x_{i:i+h-1}$, as shown in the following formula:

$$c_i = f(w \cdot x_{i:i+h-1} + b)$$

where $b \in \mathbb{R}$ is the bias term and $f$ is a nonlinear function. This convolutional kernel traverses every window of words in the sentence, $\{x_{1:h}, x_{2:h+1}, \ldots, x_{n-h+1:n}\}$, to generate a feature map $c = [c_1, c_2, \ldots, c_{n-h+1}]$, where $c \in \mathbb{R}^{n-h+1}$. The pooling layer employs max pooling, with the pooling size matching the dimensions of the feature map. The maximum value of the feature map is extracted, resulting in the pooled feature map $C$. This map is then permuted, i.e., $F = \mathrm{Permute}(C)$.
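The convolution and pooling steps for a single kernel can be traced by hand with a small pure-Python sketch. It assumes ReLU as the nonlinear function and uses tiny hypothetical embeddings; a real model would use a deep learning framework and many kernels of several sizes.

```python
def relu(value):
    """Rectified linear unit, used here as the nonlinear function f."""
    return max(0.0, value)


def conv_feature_map(sentence, kernel, bias=0.0):
    """Slide one h-by-k kernel over a sentence of n word vectors.

    sentence: list of n word vectors, each of length k
    kernel:   list of h weight rows, each of length k
    Returns the feature map [c_1, ..., c_{n-h+1}].
    """
    h = len(kernel)
    feature_map = []
    for i in range(len(sentence) - h + 1):
        window = sentence[i:i + h]
        total = sum(
            w_val * x_val
            for w_row, x_row in zip(kernel, window)
            for w_val, x_val in zip(w_row, x_row)
        )
        feature_map.append(relu(total + bias))
    return feature_map


def max_over_time(feature_map):
    """Max pooling over the whole feature map keeps its strongest response."""
    return max(feature_map)


# Hypothetical sentence of n = 4 words with k = 2 dimensional embeddings.
sentence = [[1, 0], [0, 1], [1, 1], [0, 0]]
kernel = [[1, 0], [0, 1]]          # one kernel with window size h = 2

features = conv_feature_map(sentence, kernel)
pooled = max_over_time(features)
```

Here `features` has n - h + 1 = 3 entries, one per window position, and `pooled` is the single value that this kernel contributes to the next layer.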

Attention mechanism module: Following the convolution module, an attention mechanism is introduced, enhancing the model’s performance and interpretability by dynamically focusing on critical text segments. The attention module includes a learnable parameter matrix to compute the attention weights for each feature. The weighted feature representation, which incorporates global correlation information, is derived by calculating the weighted average of the feature and attention weights. Its mathematical description is as follows:

In the formula, $e_t$ is the attention score, $W$ is the weight matrix, $\alpha_t$ is the attention weight, and $V$ represents the weighted feature.
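One common form that is consistent with the symbols above is $e_t = W F_t$, $\alpha_t = \exp(e_t) / \sum_k \exp(e_k)$, and $V = \sum_t \alpha_t F_t$. The linear scoring function is an assumption, since the exact expression is not recoverable from the text, and the sketch below uses a single weight vector in place of the matrix $W$. All names and values are illustrative.

```python
from math import exp


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(features, weights):
    """Weight each feature vector by its attention weight and sum them.

    features: list of T feature vectors F_t, each of length d
    weights:  scoring vector of length d (assumed linear score e_t = w . F_t)
    Returns (alphas, pooled) where pooled is the weighted feature V.
    """
    scores = [sum(w * f for w, f in zip(weights, feat)) for feat in features]
    alphas = softmax(scores)
    dim = len(features[0])
    pooled = [
        sum(alpha * feat[j] for alpha, feat in zip(alphas, features))
        for j in range(dim)
    ]
    return alphas, pooled


# Hypothetical pooled convolution features for T = 2 positions, d = 2.
feature_vectors = [[1.0, 2.0], [3.0, 4.0]]

# A zero scoring vector gives uniform attention, so V is the plain average.
uniform_alphas, averaged = attention_pool(feature_vectors, [0.0, 0.0])

# A non-zero scoring vector shifts weight toward the higher-scoring feature.
alphas, weighted = attention_pool(feature_vectors, [1.0, 0.0])
```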

Finally, these weighted feature representations are fed into a linear classifier to determine the text's category probabilities. A cross-entropy loss function measures the discrepancy between the model output and the actual labels, and the model parameters are refined via backpropagation to minimize this loss. Training uses the AdamW optimizer to update the model parameters; the learning rate and other hyperparameters are tuned to improve convergence speed and performance.

3.3. Accuracy Evaluation

Accuracy, Precision, Recall, and F1 Score are used to evaluate both the classification of inundation extent in remote sensing images and the classification of traffic risk levels based on social media information. Here, TP represents the number of true positives, TN the number of true negatives, FP the number of false positives, and FN the number of false negatives.

Accuracy is the ratio of correctly classified samples to the total number of samples, serving as a statistical measure applicable to all samples. It is expressed mathematically as shown in formula (6):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (6)$$

Precision assesses the accuracy of the positive predictions, specifically the ratio of correctly classified positive samples to the number of samples classified as positive by the model. It is defined as in formula (7):

$$\text{Precision} = \frac{TP}{TP + FP} \qquad (7)$$

Recall measures the ratio of correctly classified positive samples to the actual positive samples, emphasizing how completely the real positive samples are identified. It is defined as in formula (8):

$$\text{Recall} = \frac{TP}{TP + FN} \qquad (8)$$

F1 Score is the harmonic mean of Precision and Recall, incorporating both metrics to assess the overall efficacy of classification models. An F1 Score ranges from 0 to 1, with higher values indicating superior model performance. The formula is shown in (9):

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \qquad (9)$$
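The four metrics follow directly from the confusion counts, as the short sketch below shows. The counts are hypothetical and are not results from this study; returning 0.0 when a denominator is zero is a convention chosen here.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, Precision, Recall, and F1 Score from confusion counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical counts for a binary flooded / not-flooded check.
metrics = classification_metrics(tp=40, tn=45, fp=5, fn=10)
```

For multi-class tasks such as the four traffic risk levels, the same function can be applied per class (one class against the rest) and the results averaged.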

5. Discussion

5.1. Theoretical Contributions and Implications for Practice

Integrating multi-source data and multi-disciplinary theoretical methods to address traffic road damage caused by short-term urban rainstorms and waterlogging holds significant theoretical importance and practical value for enhancing disaster management capabilities and post-disaster emergency response. Firstly, a confidence interval-constrained threshold is applied to microwave remote sensing images to extract the surface area affected by precipitation. This method accounts for the uncertainty inherent in the data, offering a more robust approach than relying solely on estimated sample values. Utilizing confidence intervals allows for more effective management and interpretation of sample data fluctuations. The establishment of this threshold provides a scientific and reliable technique, enhancing the credibility of the model's predictions and results. Secondly, disaster information is collected from Sina Weibo, and the social media data are screened and cleaned. A quantitative table for assessing road traffic loss based on this information was established. Building on this, a road traffic risk classification model using social media data (TextCNN-Attention Disaster Classification) was developed. This model integrates convolutional neural networks with attention mechanisms and improves prediction performance, accuracy, and stability by optimizing the size of convolutional kernels and the number of layers.

The essence of recognizing areas affected by short-term urban waterlogging involves identifying water bodies in remote sensing images. The proposed method for recognizing surface areas in SAR images affected by waterlogging, based on confidence intervals, offers simplicity, rapid processing, and independence from data-driven constraints. However, the main challenge lies in the precise determination of thresholds, which depends on the number and distribution of sample points. To reduce the reliance on sample point selection for threshold accuracy, this experiment establishes a threshold range based on confidence intervals, thereby enhancing threshold reliability to meet the urgent needs posed by short-term urban rainstorm disasters. Social media data can swiftly collect information about sudden natural disasters in near-real-time, complementing Earth observation satellite monitoring. Nonetheless, the preprocessing of such extensive big data requires further enhancement. The efficient and precise filtering and cleaning of vast amounts of social media information are crucial for determining the accuracy of text classification models. Improvements in deduplication and condensation of social media information can boost the efficiency of text classification models. Additionally, to maintain the accuracy of road network information, this study processes social media data with stop words during data augmentation to prevent alterations in road network names, which could impact disaster assessment accuracy. Building on sample enhancement, a text classification model that combines convolutional neural networks with attention mechanisms (TextCNN-Attention) is introduced to increase the accuracy of addressing small sample multi-classification issues. The text information is utilized to analyze the damage to traffic roads under short-term rainstorm conditions. 
The experiment validates the reliability of using social media data to assess the extent of road traffic damage from both temporal and spatial perspectives. This rapid assessment can facilitate swift decision-making following urban waterlogging due to short-term rainstorms, playing a crucial role in mobilizing rescue resources.

5.2. Limitations and Future Research

This experiment has shifted the approach to disaster data collection and mining, enabling a vast amount of "blind data" to assert its "data sovereignty." This transition helps gain crucial buffer time for disaster risk response, moving from remote sensing big data to multi-source heterogeneous disaster data and applying disaster big data to support timely and rapid loss assessments after short-term rainstorms. In this experiment, the SAR radiation values of the sample data were relatively concentrated, so the threshold determined from the confidence interval varied little. Additionally, a semi-automatic interpretation method was employed to address the inherent band problem in SAR data. Future research will consider using adaptive thresholds to enhance the accuracy of water body recognition. Compared with Earth observation data, the robustness and accuracy of integrating Internet social media data into sudden natural disaster risk assessments remain central questions for further in-depth study. This study processed stop words uniformly across the social media data. However, due to the wide variety of social media data sources and formats, applying this processing method uniformly to subsequent disaster events may not be feasible. Monitoring updates to the stop word database for disaster public opinion information will be a foundational task moving forward. This study primarily visualized the damage in affected areas based on the locations mentioned in the text content, rather than the user's posting location, home location, or registration location, which helps ensure the reliability of the spatial distribution of disaster damage information. The overall evaluation depends on the dataset's quantity and quality; insufficient data or inadequate data cleaning may introduce biases into the final assessment.
Ongoing efforts will focus on improving text classification models to better handle the challenges of small sample data and high-precision classification in social media information. Future research will also emphasize the development of multimodal large language models, which excel in text generation, semantic understanding, and are effective in cross-modal information fusion and human-computer interaction. A key future challenge will be selecting appropriate models based on actual needs in specific disaster assessment scenarios.

6. Conclusions

The flood disaster caused by short-term severe precipitation has inflicted significant losses on social and economic development. This study, focusing on the extreme rainstorm in Henan Province from July 17-23, 2021, applied multi-source heterogeneous data to thoroughly assess the risk to traffic roads from short-term urban flood disasters. This approach can provide valuable insights for utilizing multi-source heterogeneous data to analyze damage to urban waterlogged traffic roads from short-term rainstorms and offers a new perspective on the potential of using social media to assess expressway damage.

(1) This study used a confidence interval-based threshold algorithm to identify the scope of flood impact, enabling rapid responses to sudden disasters. The evaluation metrics, including Precision, Recall, F1 score, and Accuracy, were recorded at 0.89, 0.86, 0.88, and 0.87, respectively. This method allows for swift and targeted analysis of the impact range of short-term flood disasters, providing essential data support for disaster emergency response and post-disaster rescue efforts.

(2) Filtering and cleaning social media data, applying sample enhancement, and then using a text classification model that combines convolutional neural networks with an attention mechanism (TextCNN-Attention) can improve accuracy on small-sample multi-class problems. The traffic road risk grades for short-term rainstorm flood disasters are categorized into four levels, completing the definition of a quantitative table for road traffic loss assessment based on social media information. The accuracy of the TextCNN-Attention model in classifying traffic road risk grades is 96%. The assessment method built on the TextCNN-Attention model demonstrates high transferability for traffic road risk assessment in various disaster contexts, offering a robust tool for estimating traffic road risk in other regions affected by short-term rainstorm floods.