During the research for this literature review, it was observed that most studies focus on motor imagery (MI) data rather than actual movement data. However, the techniques used with MI can be applicable to actual movement EEG data since MI activates similar brain areas and preserves the same temporal characteristics [

6]. Therefore, this section discusses papers using both MI and actual movement data. Decades of research have gone into developing EEG-based BCI systems since Dr. Hans Berger first recorded EEG signals from humans in the 1930s [

7]. Despite this extensive research, a universally reliable system has yet to be developed. The primary challenge is the highly non-stationary nature of brain signals, which are prone to contamination by artifacts such as eye movements, heartbeat signals (ECG), and electrical noise. Additionally, these signals are subject-dependent and vary across different trials, making it difficult to classify even between two movement activities from EEG signals. The difficulty increases with multiclass systems [

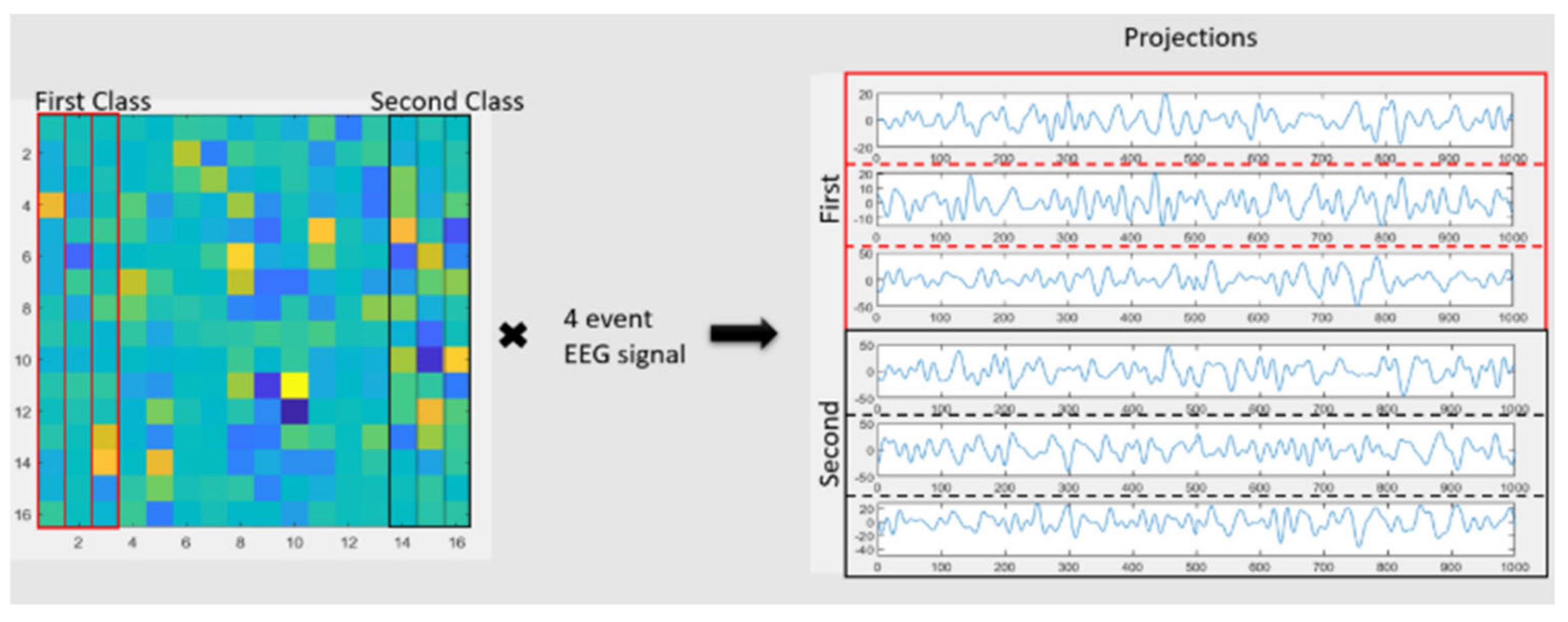

8]. To overcome these challenges, several techniques have been developed. One popular and efficient technique is Common Spatial Patterns (CSP), which was initially developed to distinguish between two classes [

9,

10]. The CSP algorithm finds spatial filters that maximize the variance of the filtered signal under one condition while minimizing it under the other condition [

11]. Paired with an 8-30 Hz broadband filter, CSP has achieved accuracies of 84-94% in discriminating three actions: left hand movement, right hand movement, and right foot movement within three subjects. An extension of CSP, using pairwise classification and majority voting, has also been described to enable multiclass classification [
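
To make the variance criterion concrete, the following is a minimal NumPy sketch of two-class CSP; it is not the implementation used in any of the cited works. It assumes each trial is a zero-mean, already band-pass-filtered array of shape (channels, samples), and the function names `covariance`, `csp_filters`, and `log_var_features` are hypothetical. The filters come from whitening the composite covariance and eigen-decomposing one class's whitened covariance, which is equivalent to solving the generalized eigenproblem C_a w = λ(C_a + C_b) w.

```python
import numpy as np


def covariance(trial):
    """Trace-normalised spatial covariance of one zero-mean trial (channels x samples)."""
    c = trial @ trial.T
    return c / np.trace(c)


def csp_filters(trials_a, trials_b, n_pairs=1):
    """Return (filters, eigenvalues) for two classes.

    Rows of `filters` are spatial filters: the first n_pairs maximise the
    class-A variance ratio, the last n_pairs maximise the class-B ratio.
    """
    ca = np.mean([covariance(t) for t in trials_a], axis=0)
    cb = np.mean([covariance(t) for t in trials_b], axis=0)
    # Whitening matrix p such that p @ (ca + cb) @ p.T is the identity
    d, e = np.linalg.eigh(ca + cb)
    p = (e / np.sqrt(d)).T
    # In the whitened space the class-A eigenvalues lie in [0, 1]
    lam, b = np.linalg.eigh(p @ ca @ p.T)  # ascending order
    w = b.T @ p
    # Largest eigenvalues first, then the smallest ones
    keep = np.r_[np.arange(len(lam) - 1, len(lam) - 1 - n_pairs, -1), np.arange(n_pairs)]
    return w[keep], lam[keep]


def log_var_features(trial, w):
    """Normalised log-variance of the CSP-filtered trial."""
    var = (w @ trial).var(axis=1)
    return np.log(var / var.sum())
```

Filters with eigenvalues near 1 yield high variance for class A and low variance for class B, and the reverse holds near 0; the normalized log-variances are the features commonly passed to a classifier.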

Paired with an 8-30 Hz broadband filter, CSP has achieved accuracies of 84-94% in discriminating three actions (left hand, right hand, and right foot movement) for three subjects. An extension of CSP that uses pairwise classification and majority voting has also been described for multiclass classification [9]. Other extensions include CSP One-Vs-Rest (OVR), which computes spatial patterns for each class against all other classes [12, 13], and CSP Divide-And-Conquer (DC), which adopts a tree-based classifier approach [13].
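
The One-Vs-Rest extension can be sketched by running the binary CSP computation once per class, pooling all remaining classes as the second condition. This is a minimal NumPy sketch under assumed inputs (a list of zero-mean (channels, samples) trials and a matching list of labels); `ovr_csp_filters` and `ovr_features` are hypothetical names, and details such as the number of filter pairs per class vary between papers.

```python
import numpy as np


def _mean_cov(trials):
    """Average trace-normalised spatial covariance over trials."""
    return np.mean([t @ t.T / np.trace(t @ t.T) for t in trials], axis=0)


def _binary_csp(trials_pos, trials_neg, n_pairs):
    """Two-class CSP via whitening; returns 2 * n_pairs filters as rows."""
    c1, c2 = _mean_cov(trials_pos), _mean_cov(trials_neg)
    d, e = np.linalg.eigh(c1 + c2)
    p = (e / np.sqrt(d)).T  # p @ (c1 + c2) @ p.T is the identity
    lam, b = np.linalg.eigh(p @ c1 @ p.T)  # ascending eigenvalues
    w = b.T @ p
    keep = np.r_[np.arange(len(lam) - 1, len(lam) - 1 - n_pairs, -1), np.arange(n_pairs)]
    return w[keep]


def ovr_csp_filters(trials, labels, n_pairs=1):
    """One set of CSP filters per class: that class versus all the others."""
    labels = np.asarray(labels)
    filters = {}
    for k in np.unique(labels):
        pos = [t for t, y in zip(trials, labels) if y == k]
        neg = [t for t, y in zip(trials, labels) if y != k]
        filters[k] = _binary_csp(pos, neg, n_pairs)
    return filters


def ovr_features(trial, filters):
    """Concatenate normalised log-variance features from every class's filters."""
    feats = []
    for k in sorted(filters):
        var = (filters[k] @ trial).var(axis=1)
        feats.append(np.log(var / var.sum()))
    return np.concatenate(feats)
```

With C classes and n_pairs filter pairs per class, each trial yields 2 × n_pairs × C features, which a downstream classifier (for example a CNN, as in the model proposed in this paper) can consume.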

13,

14]. Filter Bank Common Spatial Patterns (FBCSP), which autonomously selects the subject-specific frequency range for bandpass filtering of EEG signals [

13]; non-conventional FBCSP, which differentiates classes using a fixed set of four frequency bands, reducing the computational cost [

8]; and sliding window discriminative CSP (SWDCSP), which uses a sliding window of overlapping frequencies to filter the EEG signals [

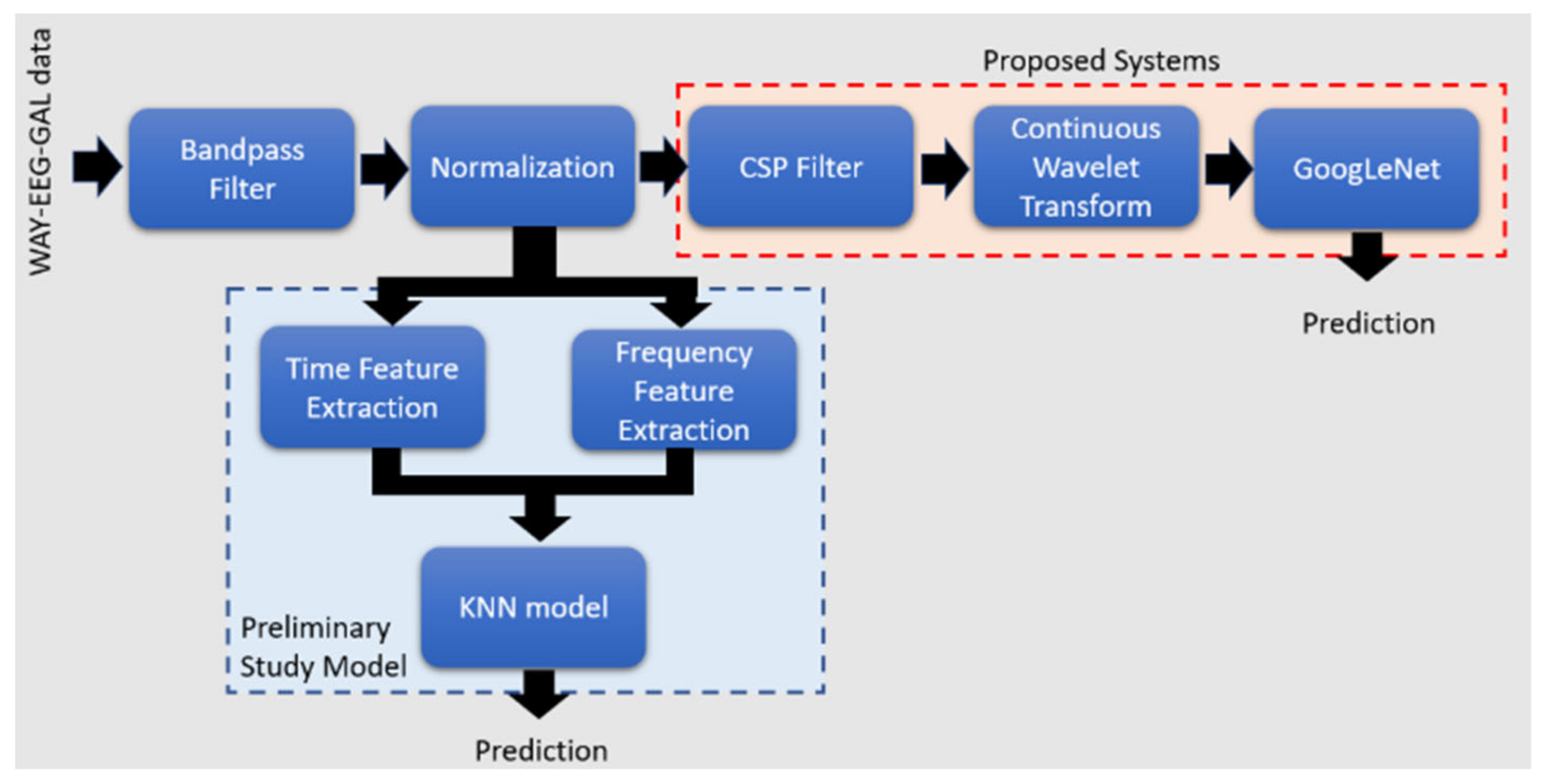

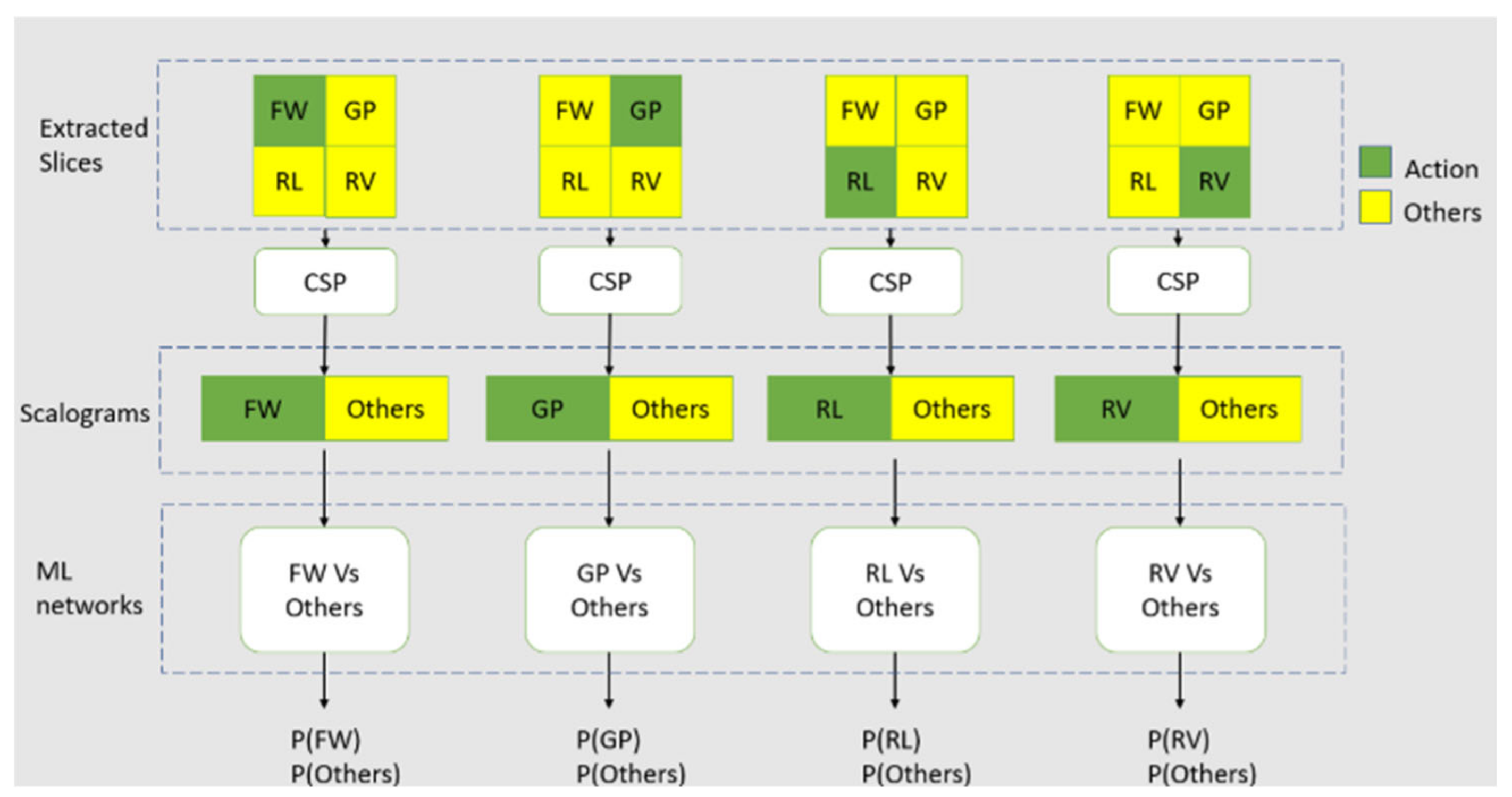

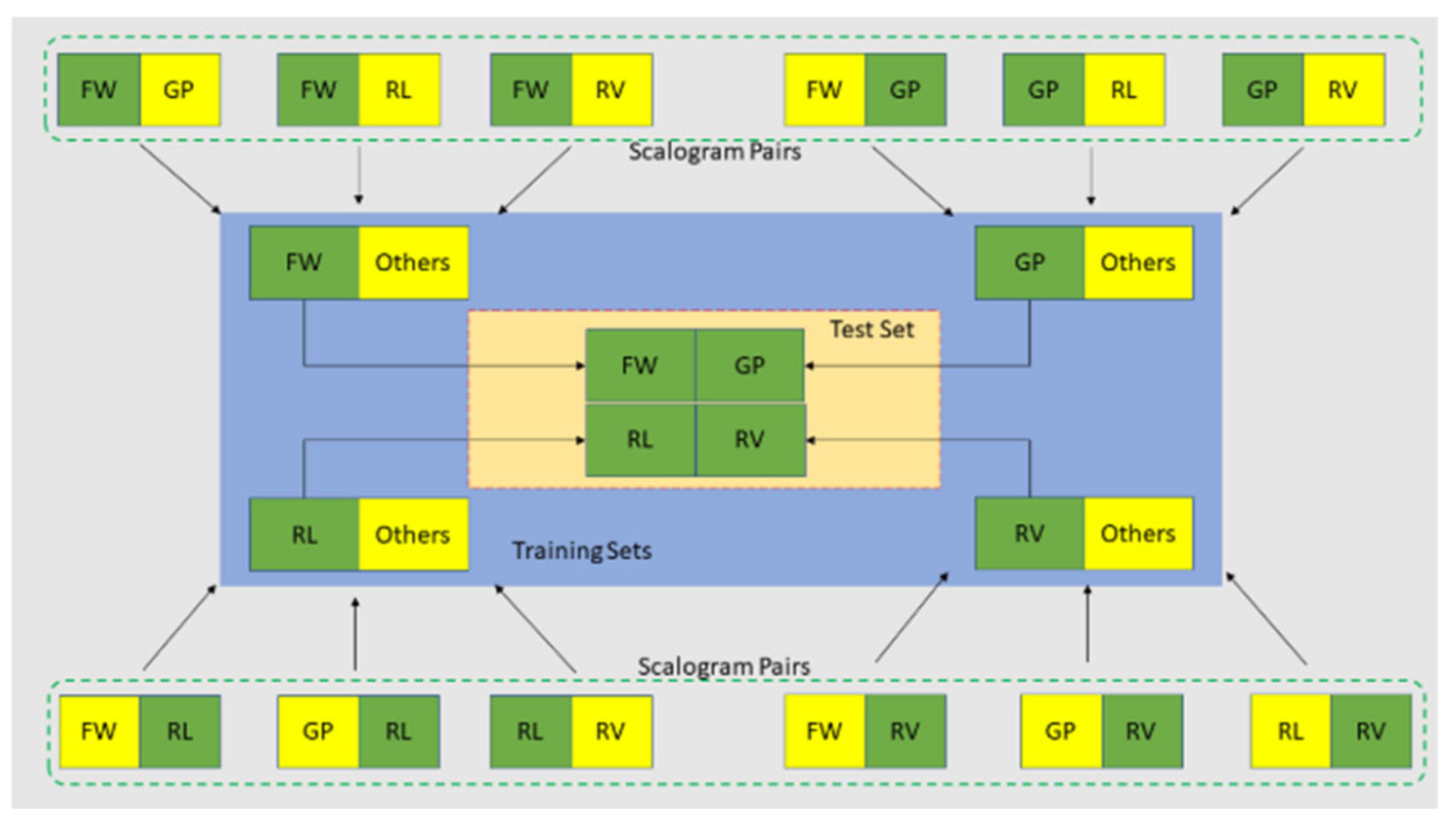

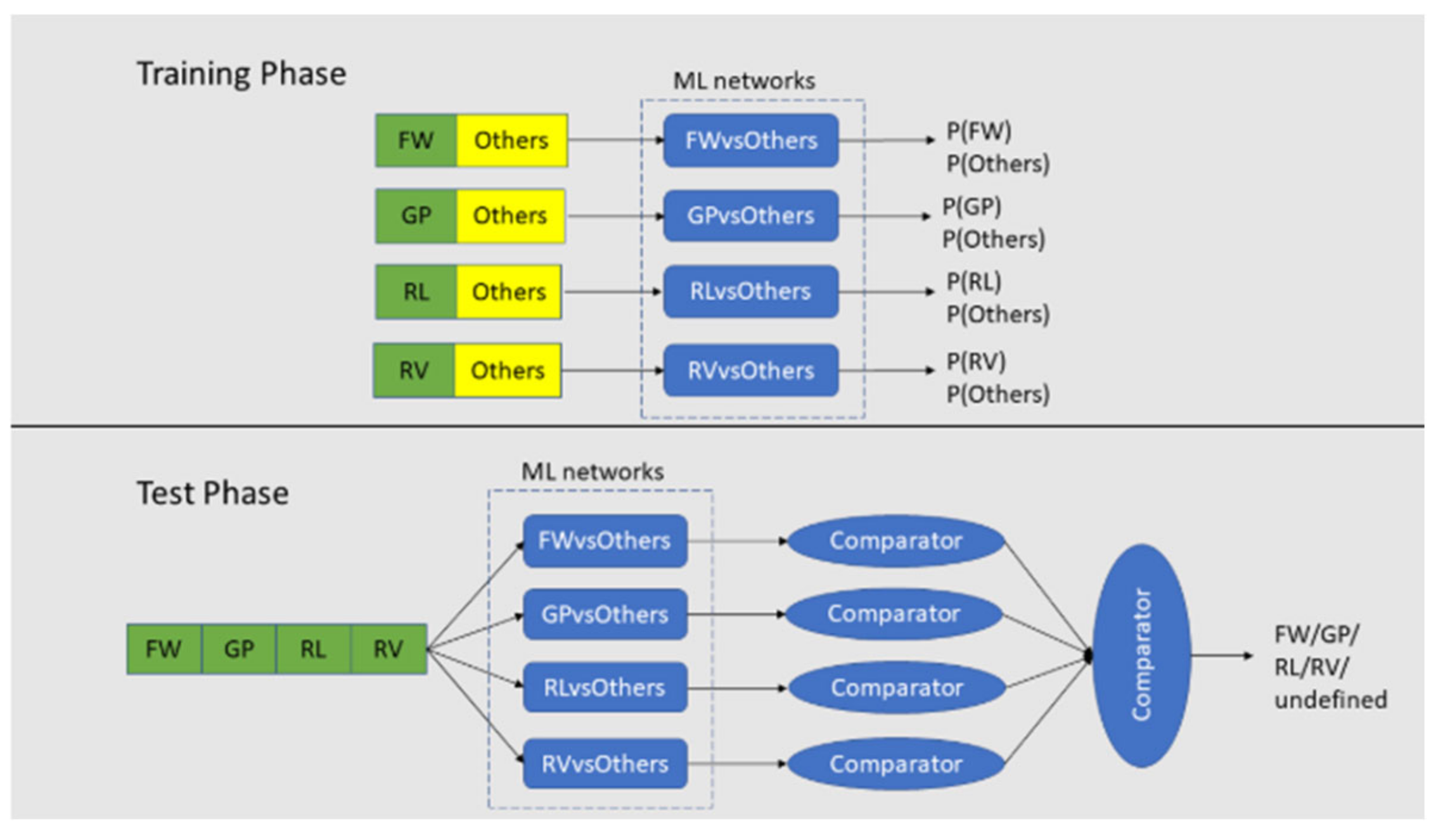

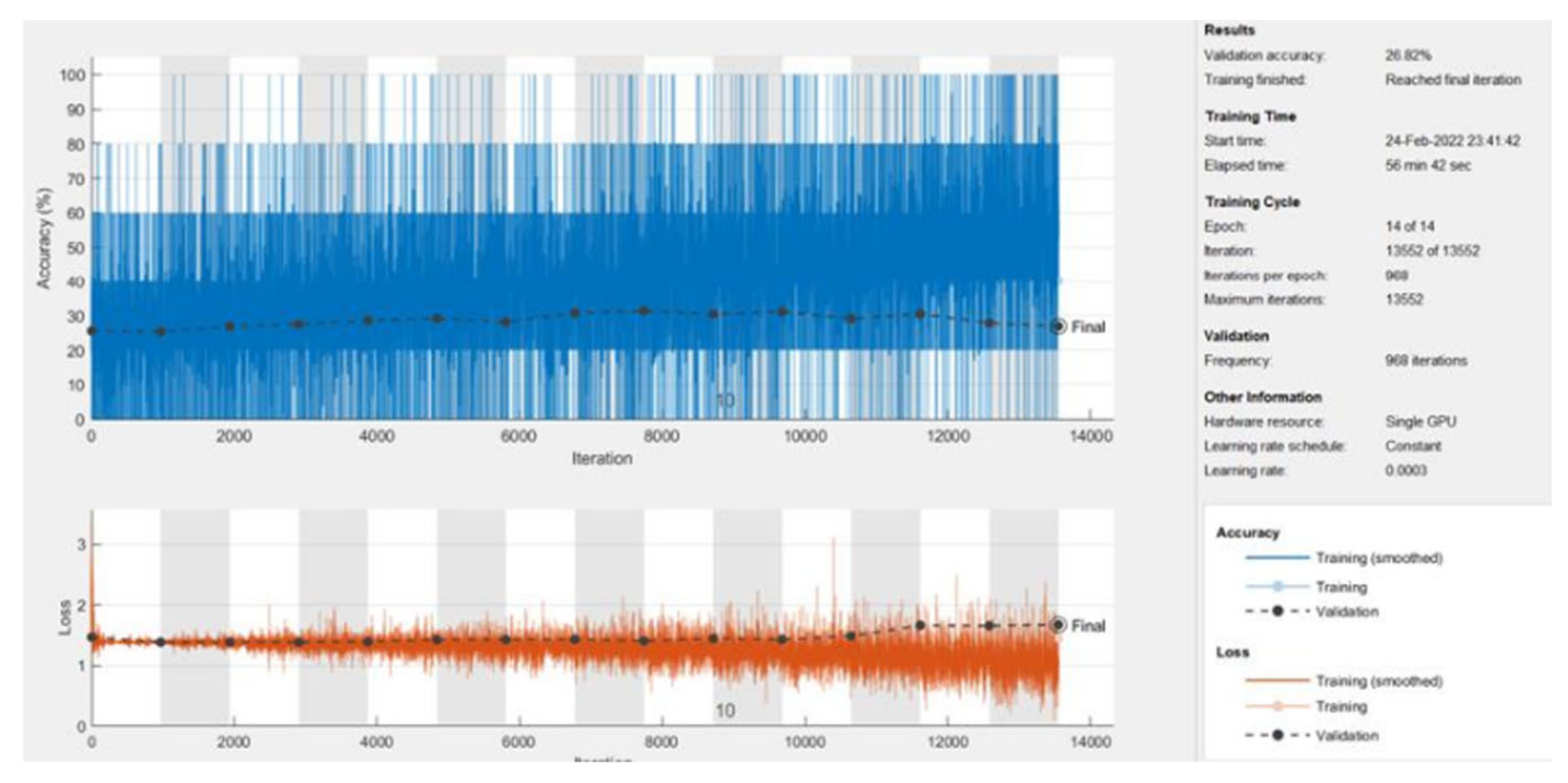

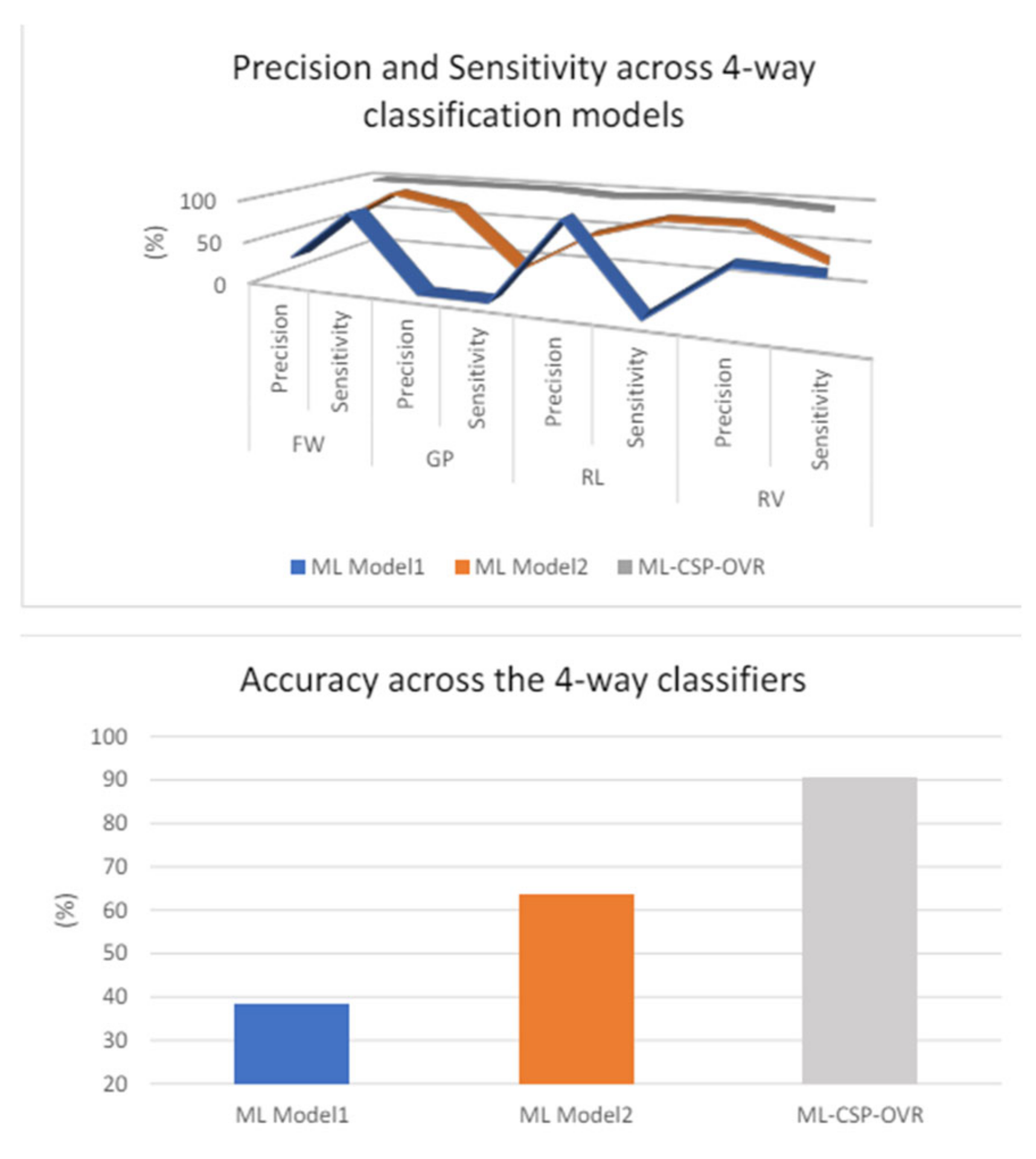

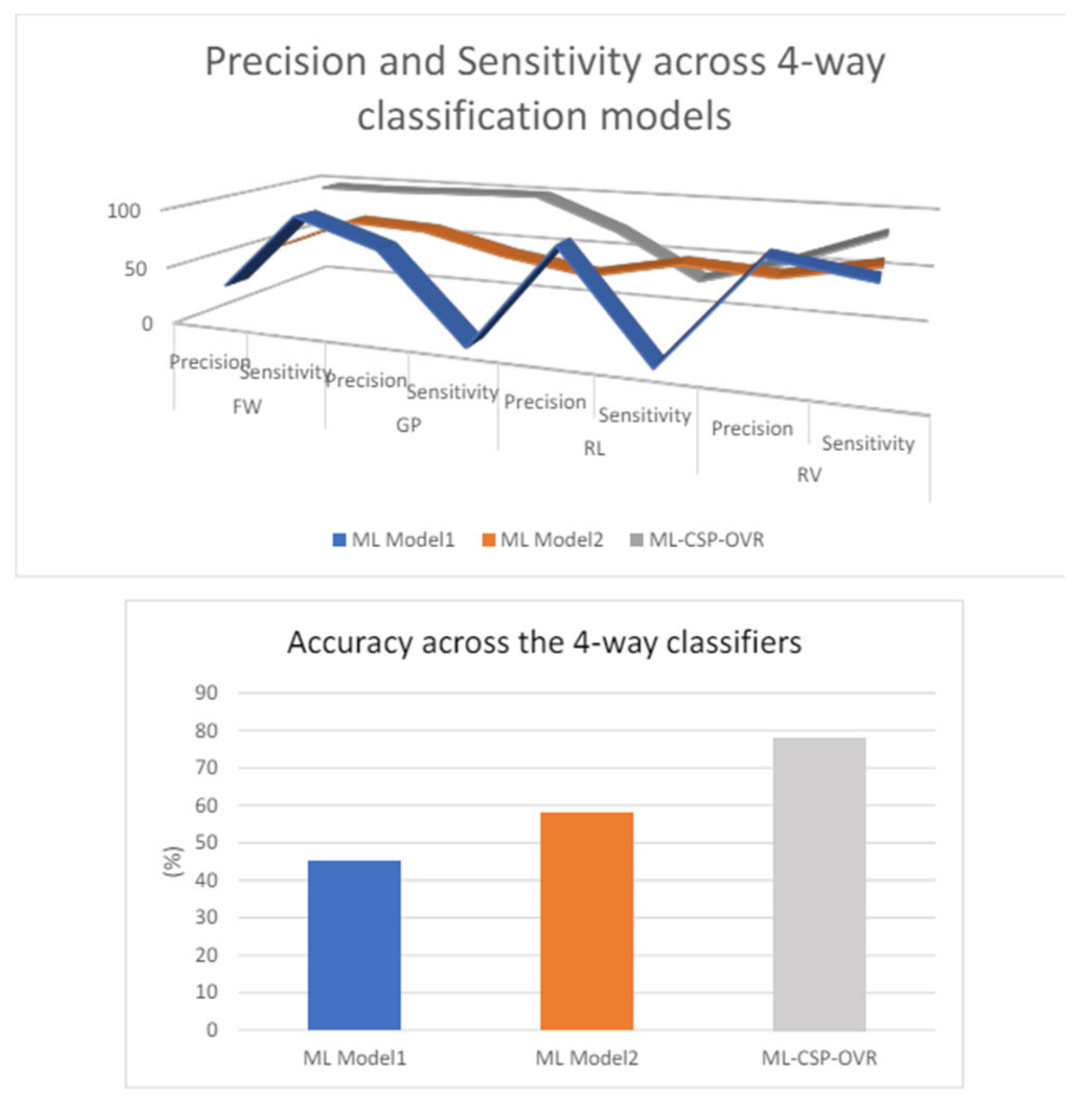

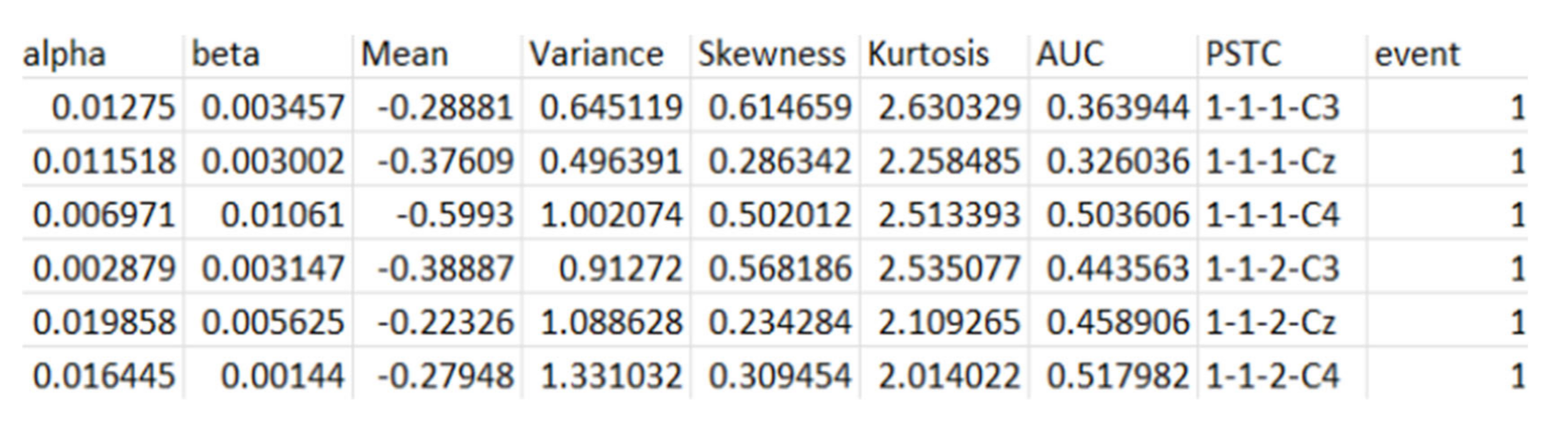

15].This paper proposes a model using CSP OVR and CNN with the WAY-EEG-GAL data-set to distinguish four hand movements. Some closely related papers are explored. In [
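
A filter bank such as the one FBCSP relies on can be sketched with SciPy's Butterworth design. This minimal sketch only performs the band decomposition; CSP would then be fitted separately in each band and the most discriminative features selected. The fourth-order filters, the zero-phase filtering, the 4 Hz-wide bands between 8 and 28 Hz, and the name `filter_bank` are illustrative assumptions, not the configuration of any specific paper reviewed here.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

# Illustrative bands: 8-12, 12-16, 16-20, 20-24 and 24-28 Hz (assumed)
BANDS = [(f, f + 4) for f in range(8, 28, 4)]


def filter_bank(eeg, fs, bands=BANDS, order=4):
    """Band-pass one recording (channels x samples) into several sub-bands.

    Returns an array of shape (n_bands, channels, samples).
    """
    out = []
    for low, high in bands:
        # Second-order sections are numerically safer than (b, a) for narrow bands
        sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
        out.append(sosfiltfilt(sos, eeg, axis=-1))  # zero-phase filtering
    return np.stack(out)
```

Each slice `out[i]` can be passed to a CSP routine as if it were a separately filtered dataset.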

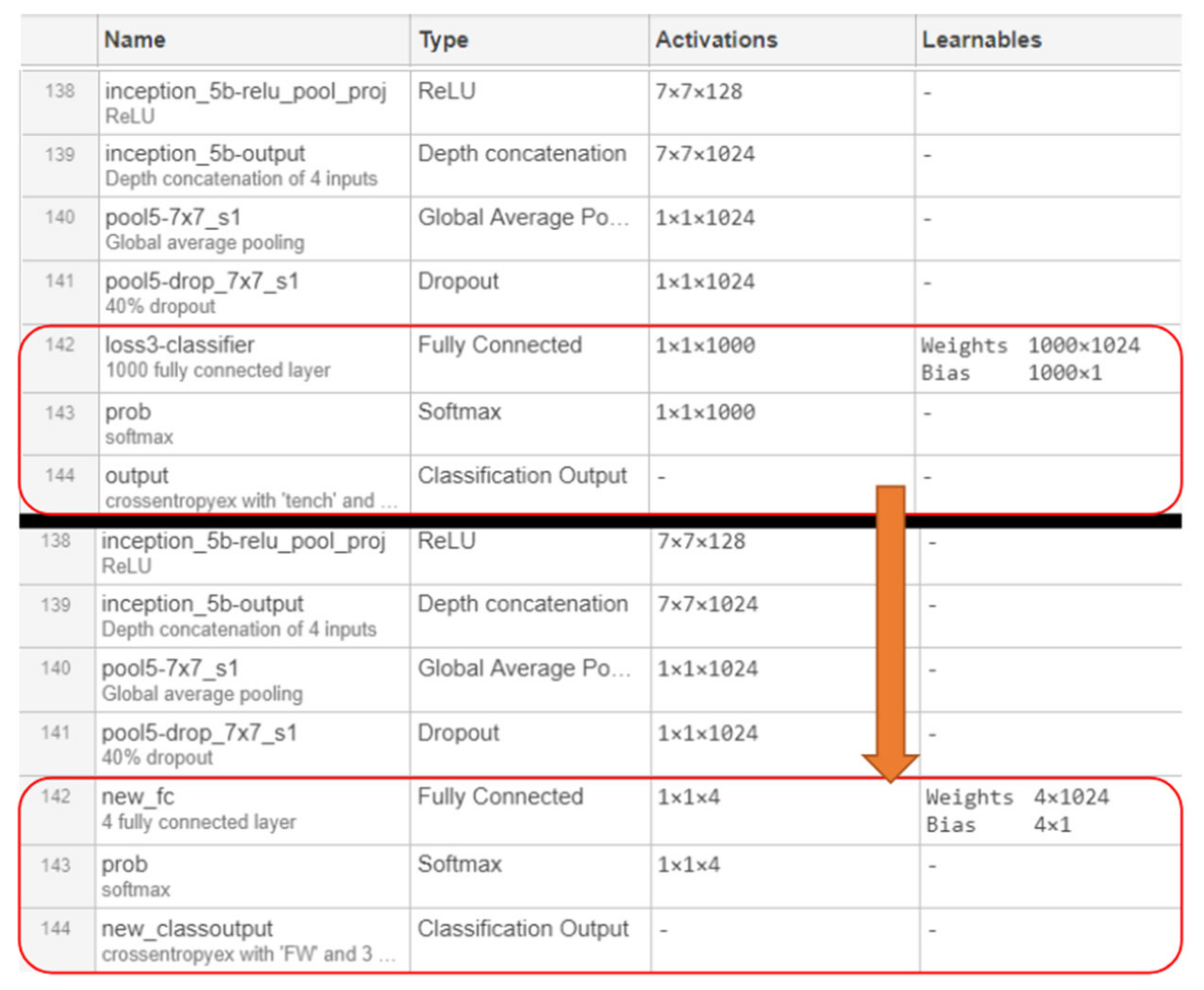

16], the BCI competition III dataset 3a was used, where each subject performs four different motor imagery tasks (left hand, right hand, foot, tongue). Forty-three channels were used out of sixty-four available. CSP was applied with five filters ranging from 8 Hz to 28 Hz, with increments of 4 Hz. A convolutional neural network (CNN) was used as a classifier, with 80% of the data for training and 20% for validation, resulting in a validation accuracy of 93.75% for intersubject classification using combined data. The author of [

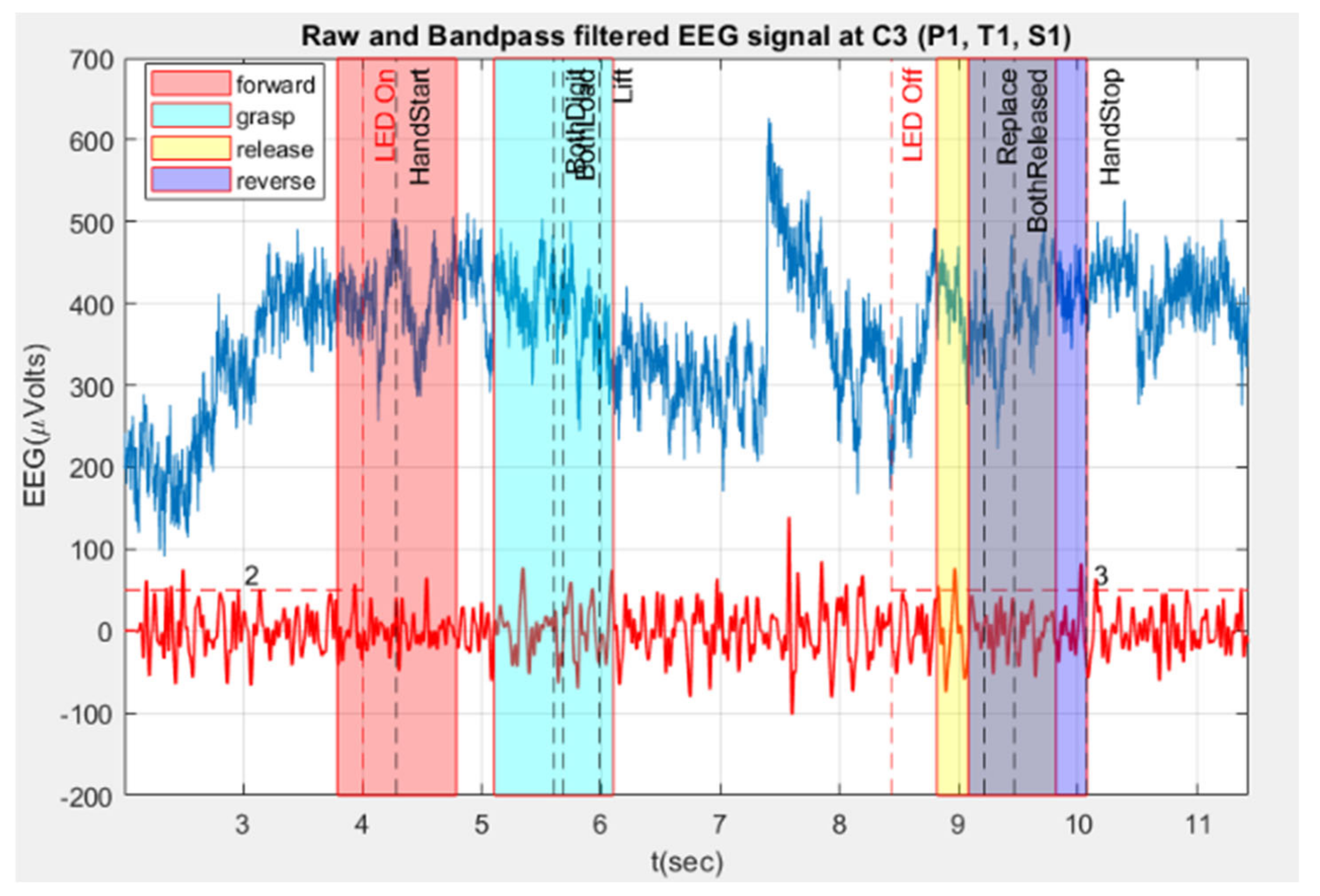

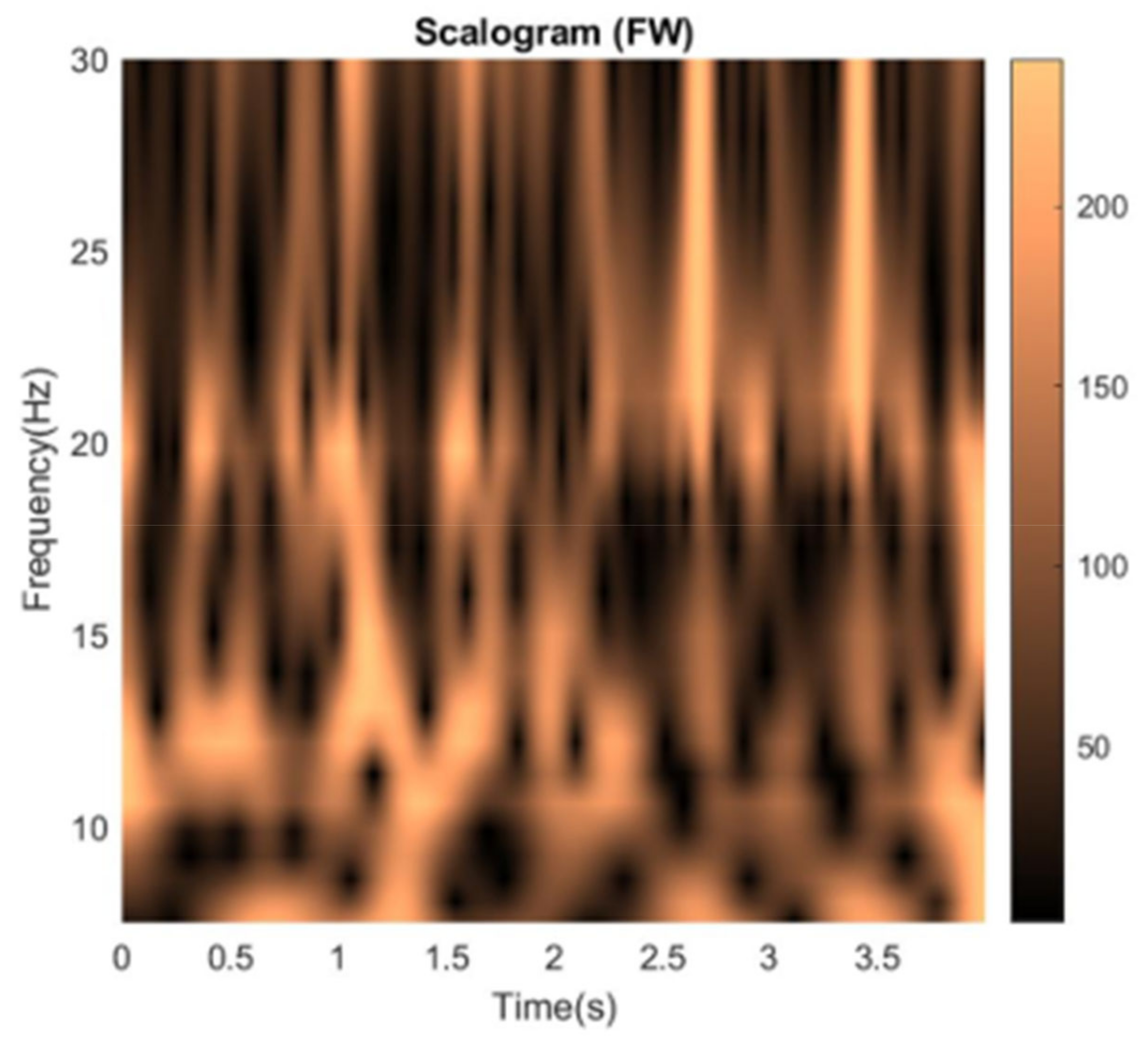

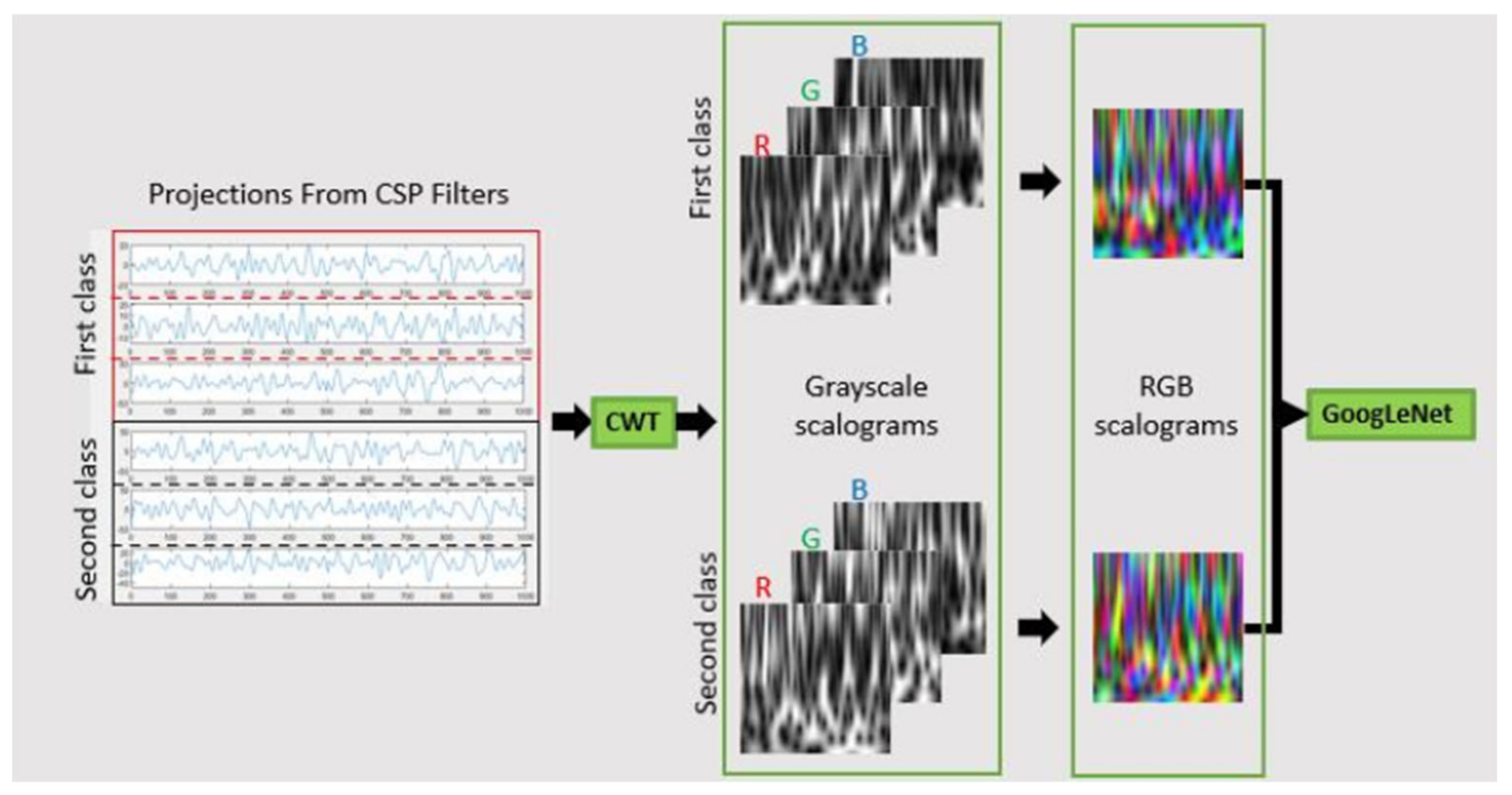

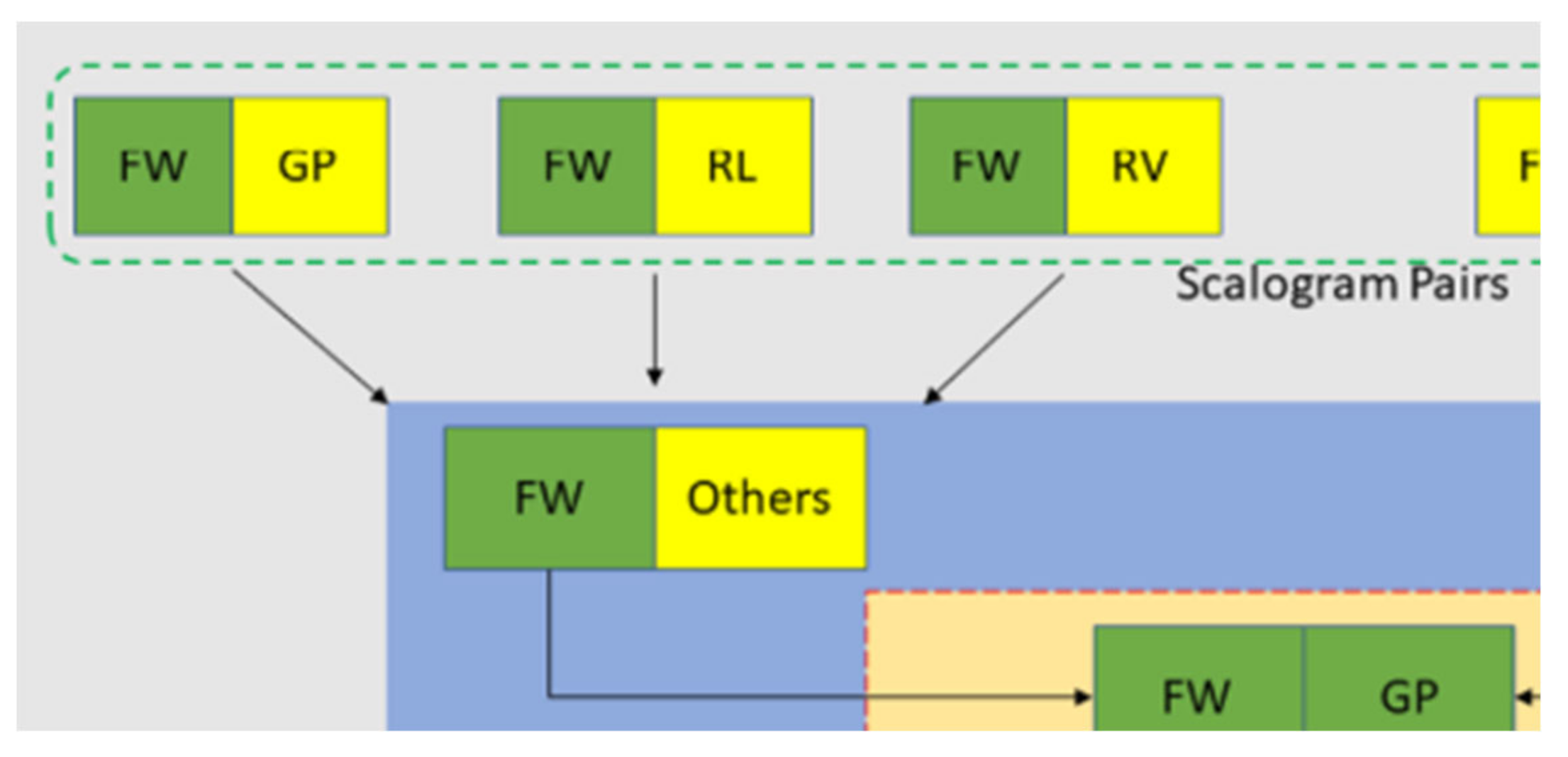

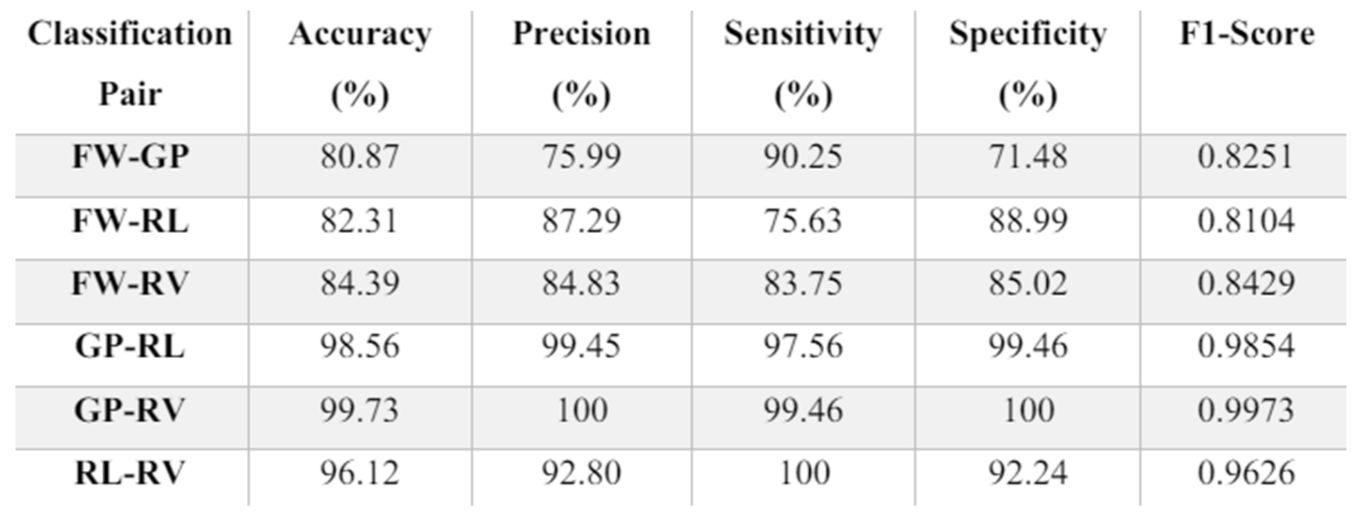

17] used the WAY-EEG-GAL dataset, where twelve participants performed continuous movements of a single limb to grasp and lift an object. From these continuous movements, six segments of interest (hand start movement, grasp, lift, hold, replace, release) were selected for classification. CSP performed six binary classifications on the pairs formed from the six segments. Continuous wavelets transform (CWT) generated scalograms, which were inputs for the CNN network. The classification used was intersubject classification without leave-one-out. The highest accuracy was for the 'hold' segment at 96.1%, and the lowest was for 'replace' at 92.9%. In [

18], the BCI competition IV Dataset 2a was used, where nine subjects performed motor imagery on four movements: left hand, right hand, feet, and tongue. Feature extraction was performed using time-frequency CSP, while Linear Discriminant Analysis (LDA), naïve Bayes, and Support Vector Machine (SVM) were used as classifiers. The results showed that the average computation time was 37.22% less than FBCSP, used by the first winner of BCI competition IV, and 4.98% longer than the conventional CSP method. Li and Feng [

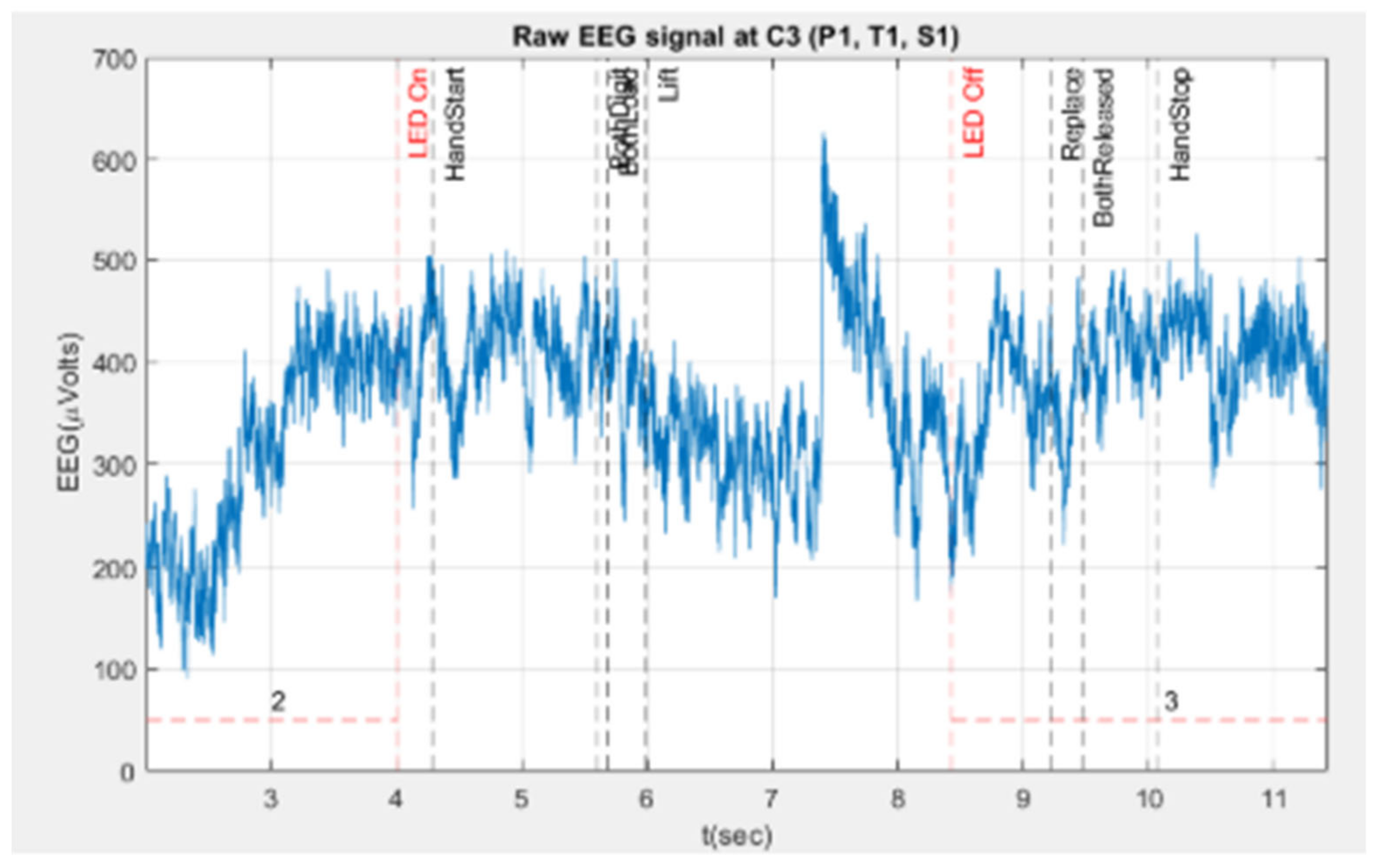

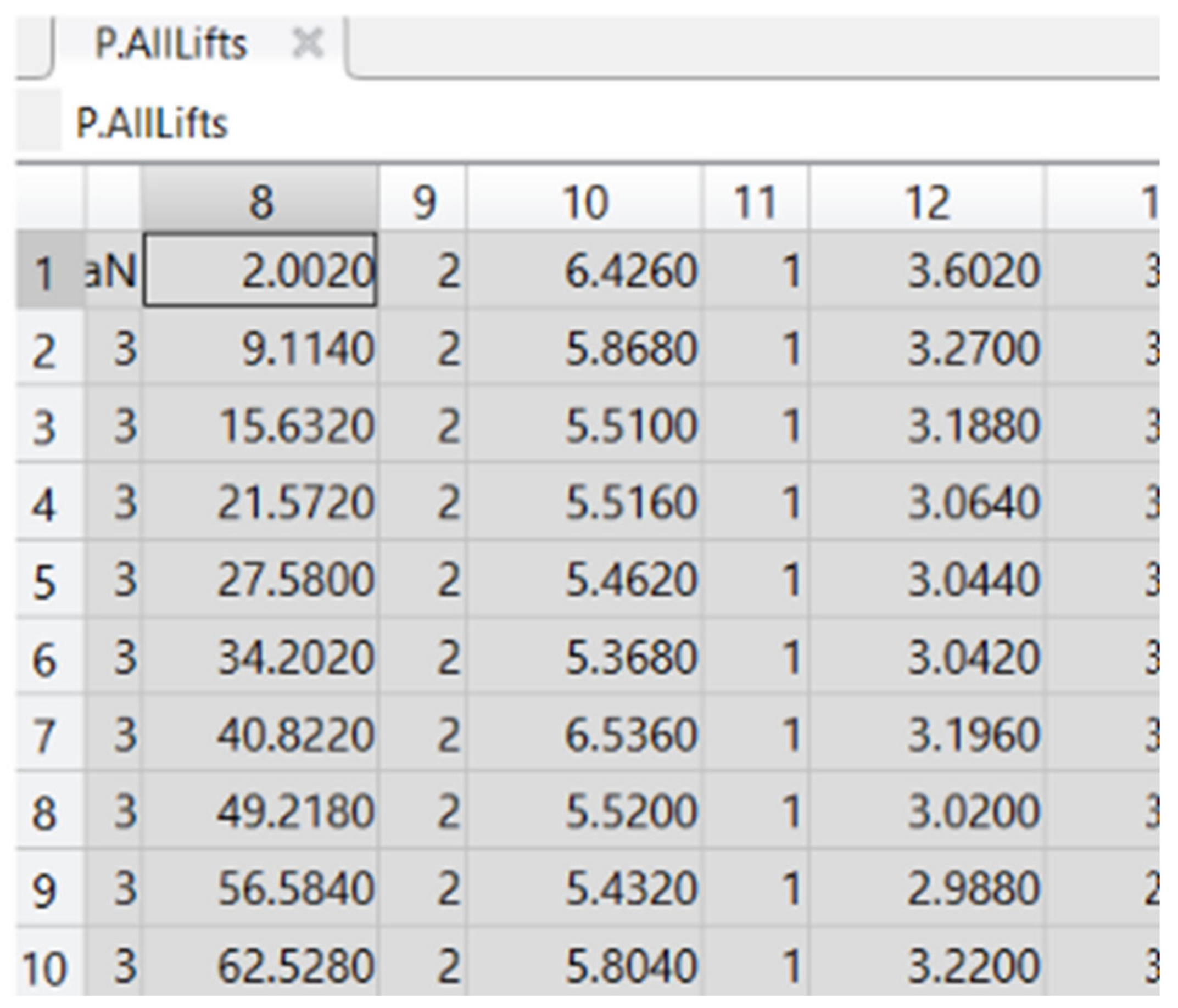

19] used the WAY-EEG-GAL dataset for six-movement classification, involving HandStart, FirstDigitTouch, BothStartLoadPhase, LiftOff, Replace, and BothReleased. A random forest algorithm identified the important electrodes from the thirty-two available. Wavelet transform extracted features, which were inputs for the CNN classifier. This model achieved an accuracy rate of 93.22%. For a more comprehensive view of EEG classification techniques used in the past decade, consider other methods besides CSP. In [

20], a public BCI Research database from NUST (National University of Sciences and Technology, Pakistan) was used. Eight channels (F3, Fz, C3, Cz, C4, Pz, O1, O2) were selected. Classification of EEG to identify left and right arm movements was conducted using wavelet transformation (WT) and a multilayer perceptron neural network (MPNN or MLP), achieving 88.72% accuracy. Alomari et al. [

21] created a publicly available dataset at physionet.org. Eight channels (FC3, FCz, FC4, C3, C1, CZ, C2, C4) were used of the sixty-four available. A bandpass filter from 0.5 Hz to 90 Hz and a notch filter to remove 50 Hz line noise were applied. Independent Component Analysis (ICA) filtered out artifacts. MATLAB's neural networks toolbox built a neural network (NN) with 1-20 hidden layers, and 'MySVM' software performed SVM classification. Classification accuracies were 89.8% using NN and 97.1% using SVM for left- and right-hand movements. In [
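
The band-pass and notch preprocessing described for [21] can be sketched with SciPy as follows. The filter order, the notch quality factor `q`, and the function name `preprocess` are assumptions for illustration. A 90 Hz upper cut-off is only valid when the sampling rate exceeds 180 Hz, so the sketch rejects cut-offs at or above the Nyquist frequency instead of silently producing an invalid filter.

```python
import numpy as np
from scipy.signal import butter, filtfilt, iirnotch, sosfiltfilt


def preprocess(eeg, fs, band=(0.5, 90.0), line_freq=50.0, q=30.0, order=4):
    """Band-pass, then notch-filter an EEG array (channels x samples)."""
    if band[1] >= fs / 2:
        raise ValueError("upper cut-off must lie below the Nyquist frequency")
    # Zero-phase Butterworth band-pass in second-order sections
    sos = butter(order, band, btype="bandpass", fs=fs, output="sos")
    x = sosfiltfilt(sos, eeg, axis=-1)
    # Narrow IIR notch centred on the power-line frequency
    b, a = iirnotch(line_freq, q, fs=fs)
    return filtfilt(b, a, x, axis=-1)
```

A higher `q` gives a narrower notch that removes less neighbouring EEG content but takes longer to settle at the edges of a recording.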

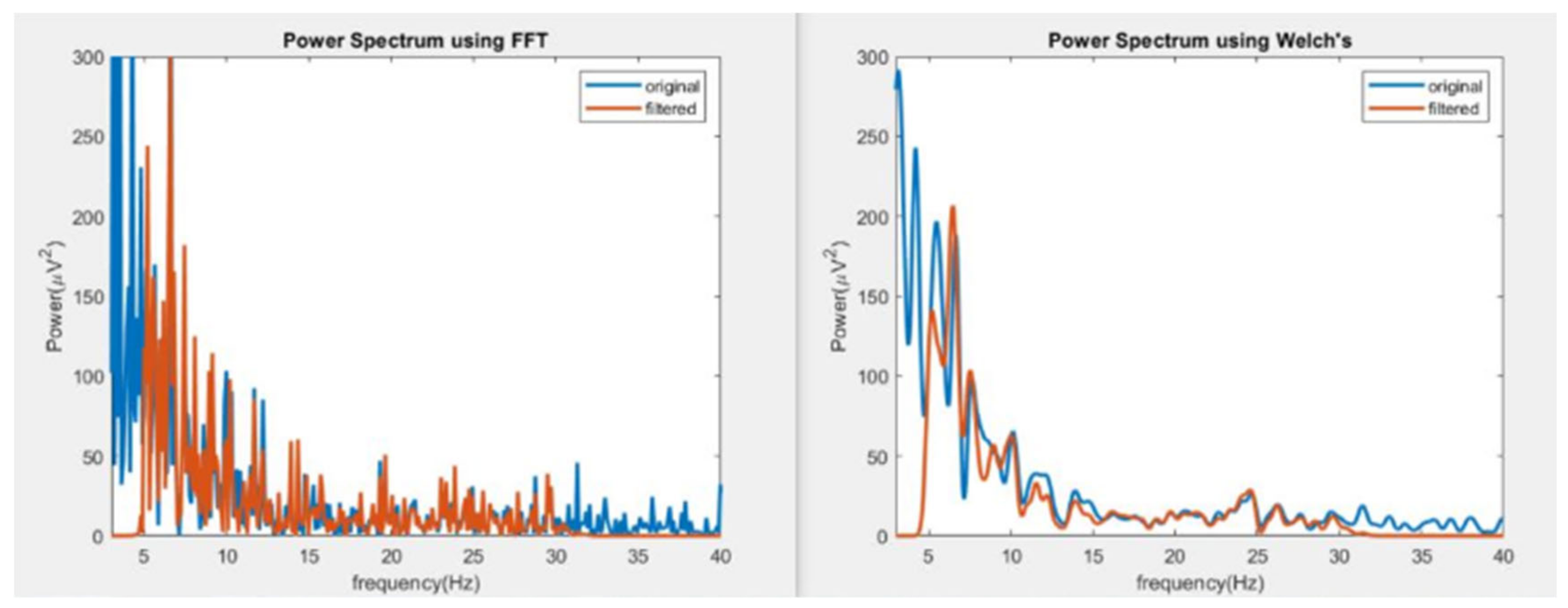

22], EEG data were obtained from a 26-year-old male moving his right hand. Four channels (AF3, F7, F3, and FC5) were used. The EEG signals were preprocessed using a Butterworth band-pass filter (0.5-45 Hz). Feature extraction was performed using WT, Fast Fourier Transform (FFT), and Principal Component Analysis (PCA). Classification was done using MPNN to classify three movements: open arm, close arm, and close hand. The model, with one hidden layer, ten neurons, and 500 epochs, achieved classification performances of 91.1%, 86.7%, and 85.6% using the three feature extraction methods, respectively. The author of [

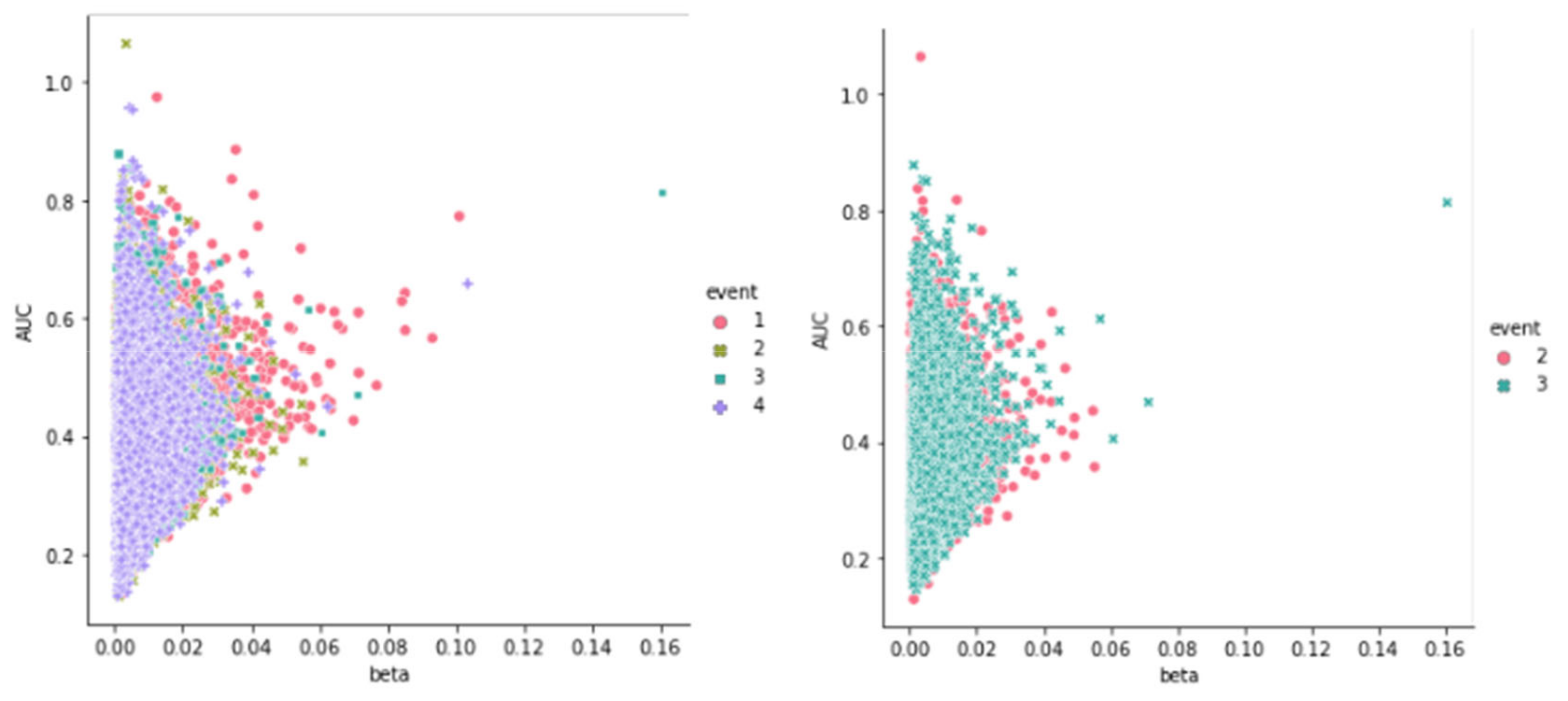

23] created a custom dataset involving two subjects performing motor imagery of three different right-hand grasp movements. From twenty-four electrodes, the best combination was found using the Genetic Algorithm (GA), based on evolution and natural genetics. Preprocessing involved ICA and a Butterworth High Pass Filter with a 1 Hz cutoff frequency. Features included maximum mu-power, beta-power, and frequencies of maximum mu and beta power. Among the four NN classifiers, Probabilistic Neural Network (PNN) achieved the best classification accuracy at 61.96%. In [
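
The spectral features used in [23] (peak mu and beta power and the frequencies at which those peaks occur) can be sketched from a Hann-windowed periodogram. This NumPy sketch works on a single channel and assumes mu = 8-12 Hz and beta = 13-30 Hz; these band limits and the names `peak_in_band` and `mu_beta_features` are assumptions, not values taken from [23].

```python
import numpy as np

MU_BAND = (8.0, 12.0)     # assumed mu band limits in Hz
BETA_BAND = (13.0, 30.0)  # assumed beta band limits in Hz


def peak_in_band(signal, fs, band):
    """Return (peak power, peak frequency) of a Hann-windowed periodogram within `band`.

    Power is in arbitrary units; only comparisons between bands or trials are meaningful.
    """
    x = signal - signal.mean()
    power = np.abs(np.fft.rfft(x * np.hanning(x.size))) ** 2
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    i = np.argmax(power[in_band])
    return power[in_band][i], freqs[in_band][i]


def mu_beta_features(signal, fs):
    """[max mu power, max beta power, mu peak frequency, beta peak frequency]."""
    mu_power, mu_freq = peak_in_band(signal, fs, MU_BAND)
    beta_power, beta_freq = peak_in_band(signal, fs, BETA_BAND)
    return np.array([mu_power, beta_power, mu_freq, beta_freq])
```

The frequency resolution is fs / N, so a 2 s window at 250 Hz resolves peaks only to 0.5 Hz; longer windows or Welch averaging trade time resolution for a smoother estimate.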

In [24], a public dataset of fifteen subjects performing six functional single-limb movements (forearm pronation/supination, hand open/close, and elbow flexion/extension) was used. EEGLAB was used to remove irrelevant channels, ICA identified eye and muscle artifacts, and an 8-30 Hz band-pass filter was applied. The data were then standardized and their dimensionality reduced with PCA. Wavelet Packet Decomposition (WPD), which provides a multi-level time-frequency decomposition with good time localization, was used for feature extraction. Classification was performed with a Wavelet Neural Network (WNN), which typically comprises three layers: input, hidden, and output. This model achieved an intersubject classification accuracy of 86.27% for the six limb movements [24, 25].

The field of EEG signal processing has progressed significantly in recent years, particularly in feature extraction and classification, driven by the demand for more accurate and real-time applications in areas such as brain-computer interfaces (BCIs), healthcare, and neuroscience research [51, 52, 53, 54]. One recent trend is the application of deep learning to EEG signal processing. Convolutional Neural Networks (CNNs) have gained popularity because they can learn features automatically from raw EEG data without manual feature engineering [55, 56, 57, 58]. For instance, CNNs have been used to decode motor imagery tasks in BCIs with improved classification accuracy. Hybrid models that combine CNNs with other deep learning architectures, such as Long Short-Term Memory (LSTM) networks, have also been explored to capture both the spatial and temporal features of EEG signals, further enhancing performance. Another promising approach is transfer learning [59, 60], which leverages models pre-trained on large datasets to improve the generalization of EEG classifiers on smaller, domain-specific datasets. This is particularly useful for EEG, where large labeled datasets are difficult to obtain. Transfer learning has shown potential in tasks such as emotion recognition and mental workload estimation, where it can reduce training time and improve model accuracy. In feature extraction, traditional methods such as the Wavelet Transform (WT) have been combined with advanced machine learning techniques to enhance the representation of EEG signals. For example, the continuous wavelet transform (CWT) has been used to capture joint time-frequency features, which are then classified with models such as Support Vector Machines (SVMs) or Random Forests. Methods such as Empirical Mode Decomposition (EMD) have also been combined with deep learning models to extract intrinsic mode functions that better represent the underlying brain activity. Finally, recent studies have focused on improving the interpretability of EEG classification models. Explainable AI (XAI) techniques are being integrated into EEG processing pipelines to provide insight into how models make decisions, which is crucial for clinical applications. This not only increases trust in the models but can also aid the discovery of novel neurophysiological patterns.
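
The CWT-based time-frequency representations mentioned above, such as the scalograms used as CNN inputs in [17], can be sketched with complex Morlet wavelets. This minimal NumPy sketch is not the transform used in any cited work: the Morlet mother wavelet, the seven-cycle width, the ±4σ truncation, and the name `morlet_scalogram` are assumptions. Each wavelet is scaled so that a unit-amplitude sinusoid at the analysis frequency produces a magnitude close to 1.

```python
import numpy as np


def morlet_scalogram(signal, fs, freqs, n_cycles=7.0):
    """Return |CWT| with shape (len(freqs), len(signal)) using complex Morlet wavelets."""
    rows = []
    for f in freqs:
        sigma_t = n_cycles / (2 * np.pi * f)  # std of the Gaussian envelope in seconds
        t = np.arange(-4 * sigma_t, 4 * sigma_t, 1.0 / fs)
        envelope = np.exp(-(t ** 2) / (2 * sigma_t ** 2))
        wavelet = envelope * np.exp(2j * np.pi * f * t)
        if wavelet.size > signal.size:
            raise ValueError(f"signal too short for a {f} Hz wavelet")
        # Scale so that a unit-amplitude sinusoid at f yields a magnitude of about 1
        wavelet /= envelope.sum() / 2
        rows.append(np.abs(np.convolve(signal, wavelet, mode="same")))
    return np.array(rows)
```

Stacking the rows for a range of frequencies gives a 2-D image per channel; more cycles sharpen frequency resolution at the cost of time resolution.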