1. Introduction

In today’s digital landscape, user feedback and reviews on social media platforms such as Twitter, Facebook, and Instagram hold significant value for organizations and play a major role in helping them enhance their services and products and manage overall performance efficiently. On microblogging platforms, social networks, and web forums, people can openly express their thoughts, ideas, and views as short messages, called tweets on Twitter [1]. Organizations frequently employ sentiment analysis, or opinion mining, techniques to extract meaningful information from these unstructured user inputs [2]. Sentiment analysis involves assessing the emotions, opinions, and attitudes expressed in text data. Twitter is a rich source for such analysis because of its real-time nature and the vast amount of user-generated content [3]. Notably, popular Twitter users such as Justin Bieber receive more than 300,000 tweets every day. Similarly, accounts such as Xbox Support, which has over 400,000 followers, face the daunting task of managing and responding to more than 1.5 million tweets daily. During notable events such as the 2024 IPL, Twitter experienced an even larger surge, with over 750 million tweets related to the tournament. Handling such massive datasets places a considerable workload on any organization. Existing Twitter Sentiment Analysis (TSA) applications perform inadequately: according to the literature, the accuracy of sentiment analysis generally ranges from 40% to 80%. Firms therefore need improved, more precise TSA tools for reviewing client feedback and analysing sentiment. Tweets use a wide-ranging, diverse, and ever-changing vocabulary that includes slang, acronyms, and emojis. Furthermore, tweets are short and contain only a limited number of terms. As a result, tweet features have sparse representations, a common issue with standard feature sets that typically leads to suboptimal performance of sentiment analysis algorithms.

The key contributions of this article are as follows:

- ▪

Introduction of a Twitter-specific sophisticated Lexicon set to avoid ambiguity of sentiments in sentence level.

- ▪

Analysis of Target class determination and Domain Adaptability using classifiers.

- ▪

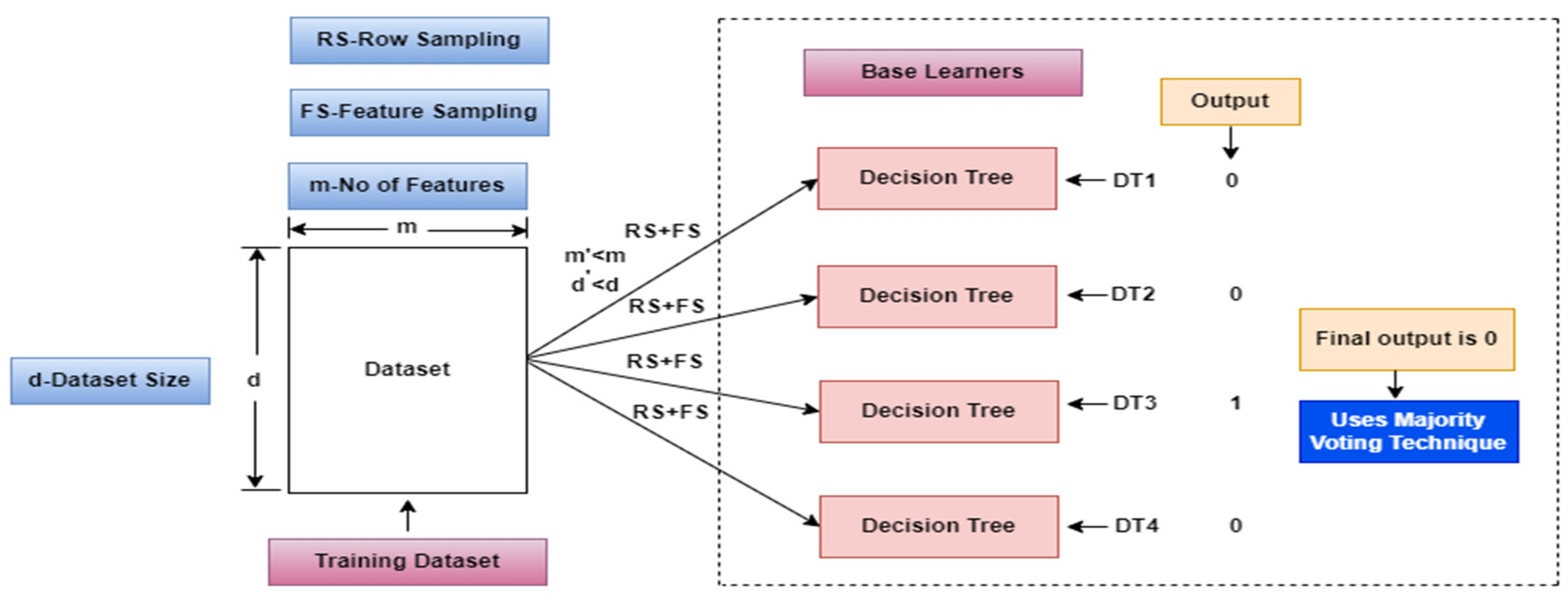

Implementation of MVT (Majority Voting Technique) with Random Forest classifier for improvement on Accuracy and other performance measures in sentiment analysis task.

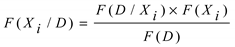

The sequence of steps in the model, which ultimately leads to the classification of tweets as either Positive or Negative, is depicted in Figure 1.

The subsequent sections of the article are structured as follows:

Section 2 discusses related work, examining existing research in the domain.

Section 3 introduces the proposed framework, providing an in-depth description of its structure and components.

Section 4 outlines vectorization techniques employed in the study.

Section 5 describes the machine learning models used.

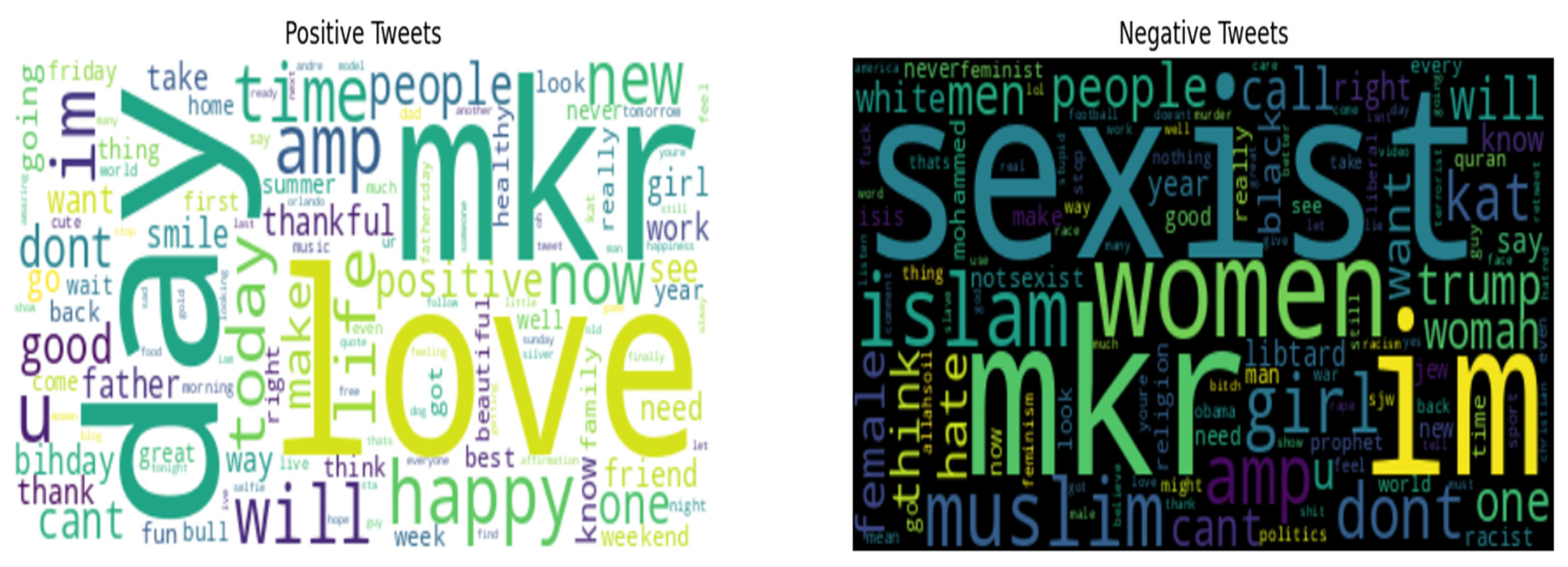

Section 6 provides the dataset description and word cloud results.

Section 7 presents the Results and Discussion, and the article ends with conclusions and plans for future work.

2. Related Work

The primary objective of this study is to examine the application of Machine Learning techniques to sentiment classification. These techniques have been thoroughly reviewed and summarized by researchers and academics, and the study concludes by presenting the results of different approaches and the machine learning algorithms selected for this implementation. In sentiment analysis, the relevant information is reduced to a smaller subset referred to as the “sentiment lexicon”, which serves as a critical resource for sentiment algorithms. By leveraging the sophisticated lexicon set (SLS), the algorithms employed in this work gain access to a wide range of emotion-related sentiments, which enables them to identify and separate the affective content of the text more accurately. Therefore, the principal aim of this work is to develop a concise, reliable, and reusable lexicon that can be used effectively to improve the model's outcome. The following paragraphs highlight contributions made by numerous researchers to TSA.

F.M. Javed Mehedi Shamrat et al. [3] proposed a supervised KNN classification algorithm that extracts tweets from Twitter using an API authentication token and classifies the text in the dataset into three classes: positive, negative, and neutral. In [4], a novel architecture for tweet sentiment classification was implemented that exploits a lexical dictionary and a stacked ensemble with Long Short-Term Memory (LSTM) networks as base classifiers and Logistic Regression (LR) as the meta classifier. Mohit Dagar et al. [5] applied two filters, Sparse Feature Vector and Lexicon Feature Vector, to identify whether the sentiment in a text is hateful or not. The dataset was analysed using the Weka software, and the highest accuracy was achieved with the Random Forest technique. The Stacked Weighted Ensemble (SWE) [6] is a sentiment detection model that integrates several independent classifiers, such as Hard voting, Soft voting, Linear Regression, Random Forest, and Naïve Bayes. This model uses a variety of labelled grouping strategies to represent the order of emotions, including anger, fear, disgust, and cheer. Sentiment-Specific Word Embedding (SSWE) [7] learns word vectors for Twitter sentiment classification; it addresses the fact that conventional embeddings typically map words with similar syntactic contexts but opposite sentiment polarity, such as good and bad, to nearby vectors.

Haowang Dogan Can et al. [8] proposed a sentiment analysis model and an annotation interface that allows the user to rate the sentiment toward the candidate mentioned in a tweet as positive, negative, or neutral, or to mark it as unsure. Two further options specify whether a tweet is sarcastic and/or funny. Relief and information gain [9] are two approaches used for feature selection in detecting spam profiles on Twitter. The study tested and compared four classification methods: multilayer perceptron, decision trees, naïve Bayes, and k-nearest neighbors. The advantage of this strategy is that it can achieve high detection rates regardless of the language of the tweets; its drawback is a poor accuracy rate, owing to the small datasets used for training. Arun Kumar Yadav et al. [10] proposed various machine learning and deep learning models for hate speech detection, using various feature extraction and word embedding techniques on a consolidated dataset of 20,600 English-Hindi mixed tweets. S Saranya and G. Usha [11] implemented a machine-learning-based sentiment analysis method that extracts features using Term Frequency-Inverse Document Frequency (TF-IDF), applies WordNet lemmatization, and uses a Random Forest (RF) classifier to detect the sentiment of a tweet. In [12], the authors implemented Logistic Regression with Count Vectorizer (LRCV), a combined approach for sentiment analysis of product reviews in which the text is converted into numerical vectors that are then fed as input to the machine learning model. Tests on benchmark datasets produced better results across various performance metrics.

In conclusion, the related work and literature survey reveal significant contributions by many researchers to TSA. Researchers have implemented different machine learning and deep learning algorithms on a wide range of datasets. However, the most significant research gaps we noticed are the absence of a suitable dataset specifically tailored for TSA and the lack of comparisons among machine learning algorithms for sentiment analysis and classification.

In this regard, our proposed work makes a unique contribution to TSA in terms of the vectorization techniques and preprocessing steps used, the algorithms implemented for sentiment classification, and the use of statistical and analytical approaches to interpret the results. To frame our study of Twitter Sentiment Analysis, we address the following research questions:

2.1. Research Questions and Inferences in TSA

In the context of TSA, research questions and inferences focus on understanding the sentiment (positive or negative) expressed in tweets.

The following are some research questions and potential conclusions related to TSA.

RQ1: What factors influence Twitter sentiment polarization during public events?

A variety of factors can influence Twitter sentiment polarization during public events. These factors contribute to the division of opinions, resulting in a strong separation between positive and negative sentiments. They include event controversy, political or ideological affiliations, media coverage and framing, inflammatory tone and language, and misinformation.

RQ2: What factors led to Twitter being chosen as the target platform for the study of Sentiment Analysis?

Twitter was chosen because its number of active users keeps growing and because the platform makes it quick and simple to collect vast quantities of emotionally expressive, real-time text data. Furthermore, tweets often include hashtags and keywords that categorize and highlight their content, giving researchers a deeper understanding of sentiment dynamics.

RQ3: Can sentiment analysis on Twitter provide more timely and accurate insights compared to traditional methods?

TSA can provide more timely insights than traditional methods of tracking public opinion, allowing quicker identification of emerging trends and events. Traditional methods such as surveys, on the other hand, take more time to design, conduct, and analyze, which can delay the capture of current sentiment.

RQ4: Could sentiment analysis benefit from integrating visual content (images and videos) alongside textual analysis within tweets?

Yes, combining textual analysis of tweets with visual content could be helpful for sentiment analysis. The accuracy and depth of sentiment analysis results can be improved by using visual content, which can give context and clues that may not be available in the text alone.

3. Proposed Framework

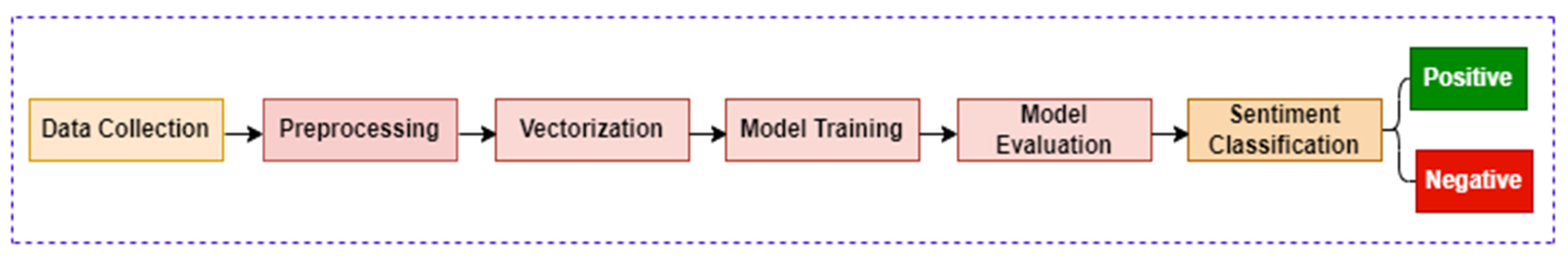

The main objective of this study is to analyze the sentiments and opinions expressed in tweets. Our work aims to create precise and robust machine learning techniques employing vectorization methods and classifiers. To prepare the data for machine processing, vectorization techniques such as CBoW and Skip-gram are used to transform the cleaned and refined data into numerical form. The analysis is divided into distinct phases, as highlighted in Figure 2. The initial phase is data collection from the Twitter database and API. This is followed by the selection of a suitable dataset; the subsequent phases involve data preprocessing, vector representation, term frequency calculation, sentiment analysis, execution of different classifiers, and finally selection of the optimal classifier, the one that produces the best sentiment classification into positive or negative. The data preprocessing steps are summarized below.

3.1. Data Preprocessing

Preprocessing real-world data into a more complete and consistent format is a crucial data mining step. Before beginning any analysis, it is critical to address the inconsistencies and missing values in Twitter data. The tweets therefore go through several rounds of preprocessing before they are ready for further analysis.

3.2. Text Cleaning and Tokenization

The first stage of this process eliminates special characters, URLs, hashtags, stop words, mentions, emojis, and HTML tags. The text is then split into discrete words, or tokens. This prepares the text for a wide array of natural language processing applications, leading to more meaningful and accurate findings.
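A minimal sketch of this cleaning and tokenization step, using only Python's standard `re` module. The regular expressions and the small `STOP_WORDS` set are illustrative assumptions, not the exact rules used in this study; lowercasing, listed as a preprocessing step in Pseudocode 1, is done here as well.

```python
import re

# Hypothetical, deliberately tiny stop-word list; a real pipeline would use a
# fuller list (for example, NLTK's or spaCy's).
STOP_WORDS = {"a", "an", "the", "is", "are", "was", "to", "of", "and", "in", "it", "this"}

URL_RE = re.compile(r"https?://\S+|www\.\S+")
HTML_RE = re.compile(r"<[^>]+>")
MENTION_HASHTAG_RE = re.compile(r"[@#]\w+")
NON_LETTER_RE = re.compile(r"[^a-z\s]")  # drops digits, punctuation, and emojis


def clean_and_tokenize(tweet: str) -> list[str]:
    """Lowercase a tweet, strip URLs/HTML/mentions/hashtags/symbols, and split into tokens."""
    text = tweet.lower()
    text = URL_RE.sub(" ", text)
    text = HTML_RE.sub(" ", text)
    text = MENTION_HASHTAG_RE.sub(" ", text)
    text = NON_LETTER_RE.sub(" ", text)
    # Whitespace tokenization, then stop-word removal.
    return [tok for tok in text.split() if tok not in STOP_WORDS]


print(clean_and_tokenize("Loving the new update!! 😍 @XboxSupport #gaming https://t.co/abc <br>"))
# → ['loving', 'new', 'update']
```

Removing URLs before the symbol filter matters: otherwise fragments such as `https` and `co` would survive as tokens.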

3.3. Lemmatization

After stop words are eliminated, the texts are lemmatized; for example, “running” is changed to “run”. Any non-English words are also removed during preprocessing. The next phase is n-gram construction, a crucial step in text analysis tasks such as sentiment classification, especially on platforms like Twitter with concise and casual texts.
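To make the lemmatization step concrete, here is a toy lookup-plus-rules lemmatizer. The `LEMMA_TABLE` and the plural rules are hypothetical stand-ins; a real pipeline would more likely use a WordNet-based lemmatizer such as NLTK's `WordNetLemmatizer`, which needs the WordNet data downloaded first.

```python
# Hypothetical lookup table standing in for a lexical database such as WordNet.
LEMMA_TABLE = {"running": "run", "ran": "run", "better": "good", "was": "be", "were": "be"}


def lemmatize(tokens):
    """Map each token to a base form: table lookup first, then crude plural rules."""
    lemmas = []
    for tok in tokens:
        if tok in LEMMA_TABLE:
            lemmas.append(LEMMA_TABLE[tok])
        elif tok.endswith("ies") and len(tok) > 4:
            lemmas.append(tok[:-3] + "y")            # "replies" -> "reply"
        elif tok.endswith("s") and not tok.endswith(("ss", "us", "is")) and len(tok) > 3:
            lemmas.append(tok[:-1])                  # "fans" -> "fan"
        else:
            lemmas.append(tok)                       # assume already a base form
    return lemmas


print(lemmatize(["running", "fans", "replies", "excitement"]))
# → ['run', 'fan', 'reply', 'excitement']
```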

3.4. N-gram Construction

N-grams are contiguous sequences of n items (here, words). Constructing n-grams captures nearby contextual information that carries significance for the analysis. After this phase, the word count vectorization technique is applied.
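A short sketch of n-gram construction over a token list. The example shows why bigrams matter for sentiment: the bigram "not good" keeps a negation that the unigrams "not" and "good" lose.

```python
def ngrams(tokens, n):
    """Return every contiguous run of n tokens, joined with spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


tokens = ["not", "good", "at", "all"]
print(ngrams(tokens, 1) + ngrams(tokens, 2))
# → ['not', 'good', 'at', 'all', 'not good', 'good at', 'at all']
```

When `n` exceeds the number of tokens, the range is empty and no n-grams are produced.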

3.5. Vector Representation

This technique transforms a collection of raw text into numerical vectors, assessing the occurrence of specific terms or phrases through frequency analysis. A machine learning classifier is then integrated into the pipeline to classify the sentiment of each tweet. Finally, accuracy and other performance criteria are evaluated to determine the optimal classifier. Pseudocode 1 outlines the detailed steps of the proposed framework.
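A minimal plain-Python sketch of the word-count vectorization described above: build a vocabulary from the tokenized tweets, then represent each tweet as term frequencies over that vocabulary. The helper names `build_vocabulary` and `count_vector` are hypothetical.

```python
def build_vocabulary(tokenized_tweets):
    """Map each distinct term to a column index (sorted, so the order is reproducible)."""
    terms = sorted({term for tweet in tokenized_tweets for term in tweet})
    return {term: index for index, term in enumerate(terms)}


def count_vector(tokens, vocabulary):
    """Represent one tweet as term frequencies over the vocabulary; unseen terms are ignored."""
    vector = [0] * len(vocabulary)
    for token in tokens:
        if token in vocabulary:
            vector[vocabulary[token]] += 1
    return vector


tweets = [["love", "new", "update"], ["hate", "update", "update"]]
vocab = build_vocabulary(tweets)   # {'hate': 0, 'love': 1, 'new': 2, 'update': 3}
print([count_vector(t, vocab) for t in tweets])
# → [[0, 1, 1, 1], [1, 0, 0, 2]]
```

Because each vector has one column per vocabulary term and a tweet uses only a handful of terms, most entries are zero, which is the sparsity problem noted in the Introduction.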

Pseudocode 1 of the proposed framework

----------------------------------------------------------------------------------------------------------------

1: Input: dataset ds with corresponding sentiment labels (positive, negative)

2: for each sample in ds do

3: Pre-process sample.text (text cleaning and tokenization, lowercasing, lemmatization, n-gram construction)

4: end for

5: Feature extraction: create a vocabulary from the tokenized tweets and represent each tweet as a vector of word frequencies over the vocabulary

6: Vector representation: convert each tweet into a numerical vector using CBoW or Skip-gram

7: models = {NB, DT, KNN, LR, RF}

8: Split the pre-processed ds into training_data and testing_data

9: for each model in models do

10: TrainModel(model, training_data)

11: pt = EvaluatePerformance(model, testing_data)

12: CapturePerformanceMetrics(model, pt)

13: end for

14: optimal_classifier = SelectOptimalClassifier(models, pt)

15: End

----------------------------------------------------------------------------------------------------------------
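The train, evaluate, and select loop of Pseudocode 1 can be sketched with scikit-learn. This is an illustrative assumption rather than the study's code: it substitutes unigram-plus-bigram counts (`CountVectorizer`) for the CBoW/Skip-gram vectors, scores models by accuracy only, and uses hypothetical hyperparameters (`n_neighbors=3`, `n_estimators=100`, `max_iter=1000`). The helper name `select_optimal_classifier` is also hypothetical.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import MultinomialNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier


def select_optimal_classifier(texts, labels, seed=0):
    """Train NB, DT, KNN, LR, RF on a 70-30 split; return (best model name, accuracy per model)."""
    x_train, x_test, y_train, y_test = train_test_split(
        texts, labels, test_size=0.3, random_state=seed, stratify=labels)
    # Unigram + bigram counts; the vocabulary is fitted on the training split only.
    vectorizer = CountVectorizer(ngram_range=(1, 2))
    X_train = vectorizer.fit_transform(x_train)
    X_test = vectorizer.transform(x_test)
    models = {
        "NB": MultinomialNB(),
        "DT": DecisionTreeClassifier(random_state=seed),
        "KNN": KNeighborsClassifier(n_neighbors=3),
        "LR": LogisticRegression(max_iter=1000),
        "RF": RandomForestClassifier(n_estimators=100, random_state=seed),
    }
    scores = {}
    for name, model in models.items():
        model.fit(X_train, y_train)                                   # TrainModel
        scores[name] = accuracy_score(y_test, model.predict(X_test))  # EvaluatePerformance
    best = max(scores, key=scores.get)                                # SelectOptimalClassifier
    return best, scores
```

Fitting the vectorizer on the training split alone keeps test-set vocabulary from leaking into training; on real data, the comparison would normally use several metrics (as in Table 1), not accuracy alone.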

4. Vectorization Approach for Machine Learning Techniques

Word embedding is a method that transforms individual terms into distinct vector representations, taking both their syntactic and semantic contexts into account. This makes it possible to determine how similar a term is to the other terms in a tweet. Words are represented by vectors that are trained using neural networks to fit within a predefined vector space. The learning procedure can use either a supervised neural network model or an unsupervised approach that leverages document statistics.

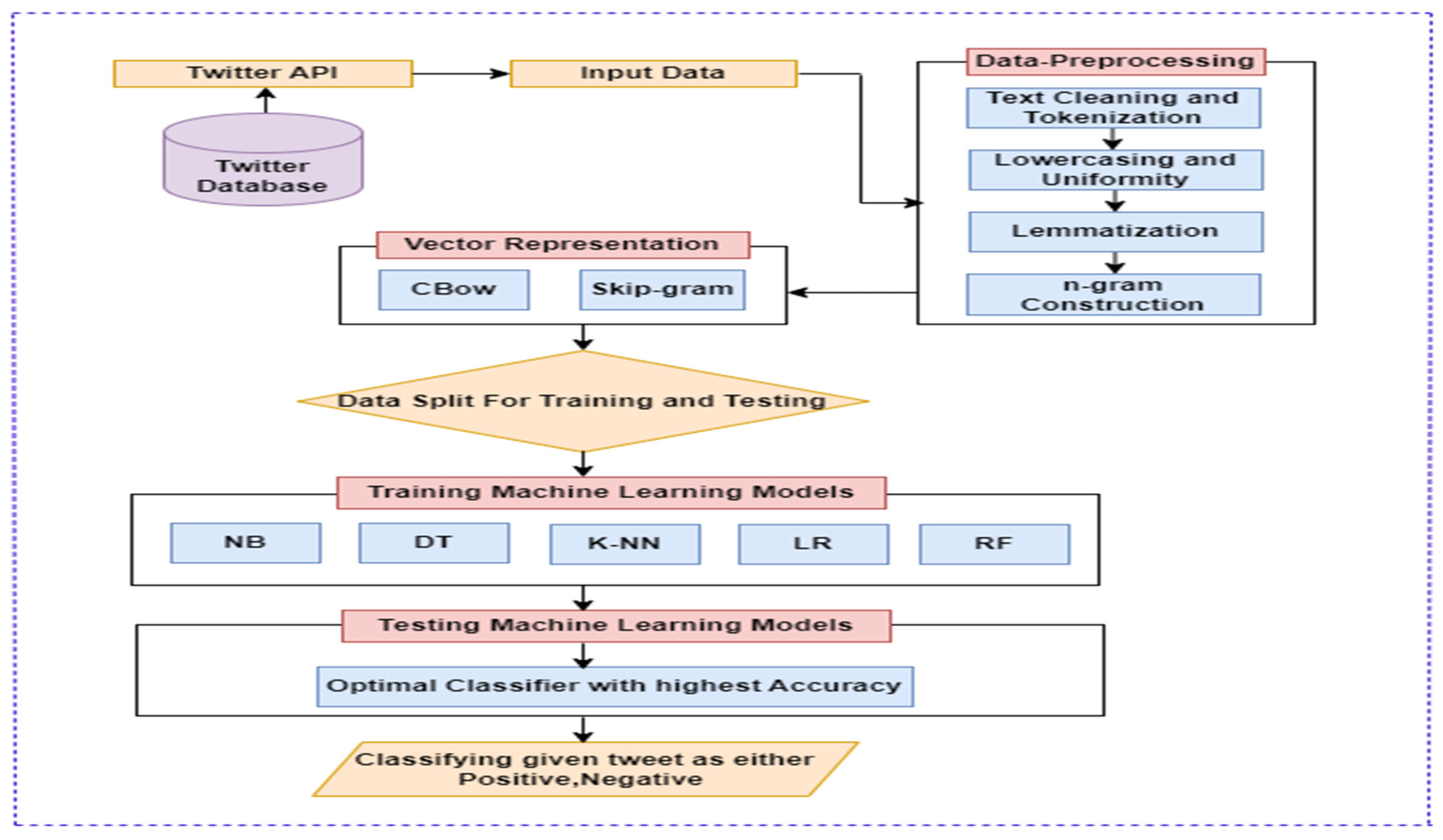

Figure 3 illustrates different word embedding techniques, which can be used depending on the context and type of application.

Word embeddings can be classified into two main types: frequency-based and prediction-based embeddings. Representing words numerically presents several challenges. One such technique, Bag of Words, produces acceptable outcomes but does not preserve word order, and because it assigns values of either 0 or 1, it cannot identify the most significant words. To address this limitation, another technique, TF-IDF, can be used. Some of the word embedding techniques outlined in Figure 3 are explained below.

4.1. TF-IDF

This simple and straightforward technique assigns weight to words based on their occurrence and importance in a document, making it easy to understand which words contribute to sentiment. However, this technique has limitations in capturing context, semantics, and word order, which are important aspects of sentiment analysis on social media data.

Below is the equation to calculate TF-IDF.

For word i in document j, f(i, j) represents the frequency of term i in document j, that is, the number of times term i appears in document j. f(i) represents the overall number of occurrences of term i in the entire collection of documents, and N represents the total number of documents in the collection.
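As an illustration, the sketch below implements the widely used form w(i, j) = f(i, j) × log(N / df(i)). It assumes df(i), the number of documents that contain term i, as the IDF denominator, which is the common textbook definition. Library implementations differ in detail; scikit-learn's `TfidfVectorizer`, for example, smooths the IDF and normalizes each vector by default.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per document, weight = f(i, j) * log(N / df(i))."""
    n_docs = len(documents)
    # df(i): number of documents that contain term i at least once.
    df = Counter(term for doc in documents for term in set(doc))
    weights = []
    for doc in documents:
        tf = Counter(doc)  # f(i, j): raw count of term i in document j
        weights.append({term: count * math.log(n_docs / df[term]) for term, count in tf.items()})
    return weights


docs = [["good", "phone"], ["bad", "phone"], ["good", "good", "service"]]
for doc_weights in tf_idf(docs):
    print({term: round(w, 3) for term, w in doc_weights.items()})
```

"bad" (in one of three documents) receives a higher weight than "phone" (in two of three), and a term present in every document would receive a weight of zero.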

4.2. Co-Occurrence Matrix

The co-occurrence matrix is an effective technique for capturing semantic information from text, but it has drawbacks related to high dimensionality, sparsity, word loss, and memory requirements. In response to the limitations of frequency-based word embeddings, this study explores a more sophisticated approach employing prediction-based word embedding techniques such as Word2Vec.
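A window-based co-occurrence matrix can be sketched in a few lines. The window size and the example sentence are hypothetical. The V x V shape of the result, one row and one column per vocabulary term, is the source of the dimensionality and memory drawbacks mentioned above.

```python
def cooccurrence_matrix(tokenized_docs, window=2):
    """Count how often each term appears within `window` tokens of each other term."""
    vocab = sorted({t for doc in tokenized_docs for t in doc})
    index = {t: k for k, t in enumerate(vocab)}
    matrix = [[0] * len(vocab) for _ in vocab]          # V x V, mostly zeros on real data
    for tokens in tokenized_docs:
        for i, center in enumerate(tokens):
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    matrix[index[center]][index[tokens[j]]] += 1
    return vocab, matrix


vocab, matrix = cooccurrence_matrix([["excitement", "is", "building"]], window=1)
print(vocab)   # → ['building', 'excitement', 'is']
print(matrix)  # → [[0, 0, 1], [0, 0, 1], [1, 1, 0]]
```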

4.3. Word2Vec

Word2Vec is a two-layer neural network model that produces word embeddings within a vector space and identifies patterns of word association in a large text corpus [10]. It preserves relationships between words, including synonyms and antonyms, providing a contextual understanding that is valuable for identifying sentiment-relevant words and their polarities. Word2Vec uses two algorithms to generate vectors from words: CBoW (Continuous Bag of Words) and Skip-gram.

4.4. CBoW

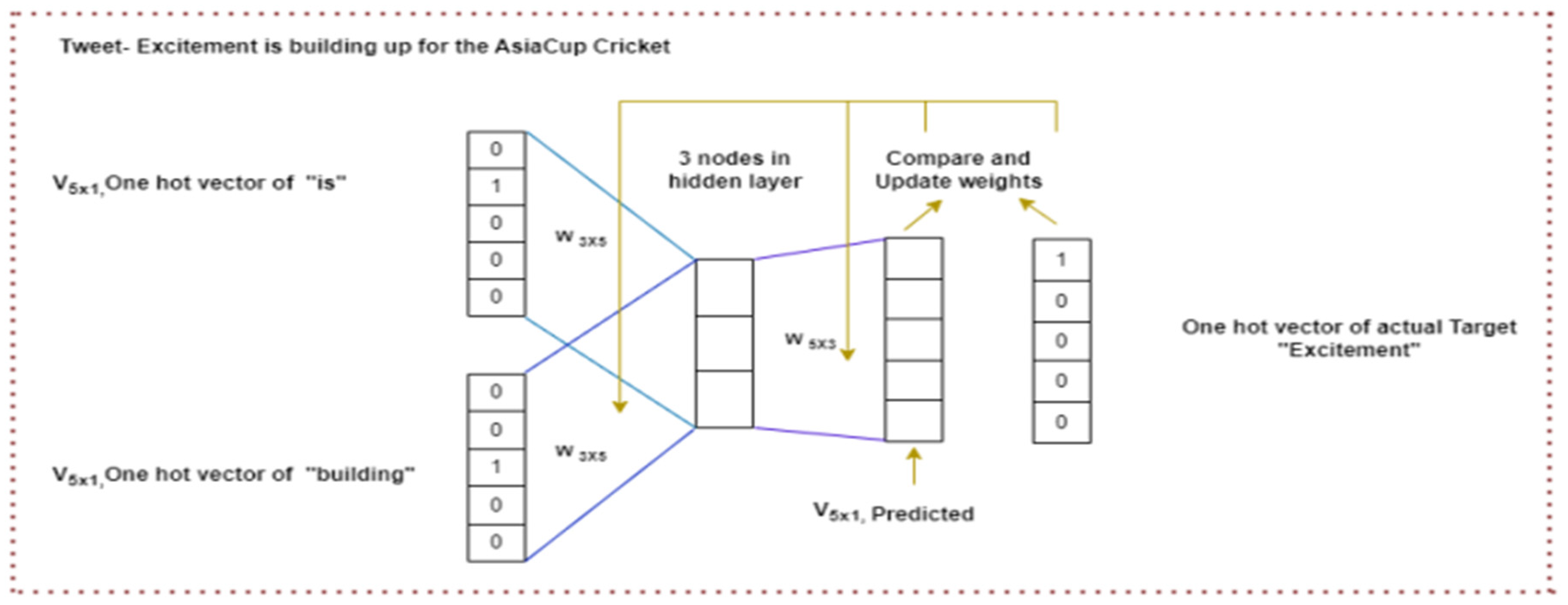

CBoW is a popular word embedding technique that predicts the target word from its context words.

Figure 4 depicts the working principle of the technique using an example tweet sentence. To predict the target word (“Excitement”), it takes two context words (“is” and “building”). The words are first converted into one-hot vector representations, in which one bit is “1”, all other bits are “0”, and the vector length equals the number of words in the sentence (its vocabulary), as shown in the figure. The next step is to select the window size used to iterate over the sentence. With a window size of 3, the context words given as input to the neural network share a 5x3 weight matrix: the one-hot vectors of “is” and “building” are passed to the network, which tries to predict the target word.
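The following pure-Python sketch trains a tiny CBoW model with a full softmax output layer on a single sentence. The embedding size, window, learning rate, epoch count, and sentence are hypothetical choices; production Word2Vec implementations train on large corpora and use negative sampling or hierarchical softmax instead of a full softmax.

```python
import math
import random


def softmax(scores):
    peak = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_cbow(sentence, dim=3, window=1, lr=0.1, epochs=200, seed=0):
    """Toy CBoW (full softmax) on one sentence of >= 2 words; returns vocab, embeddings, losses."""
    vocab = sorted(set(sentence))
    index = {word: i for i, word in enumerate(vocab)}
    V = len(vocab)
    rng = random.Random(seed)
    W_in = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(V)]   # V x dim embeddings
    W_out = [[rng.uniform(-0.5, 0.5) for _ in range(V)] for _ in range(dim)]  # dim x V output weights
    ids = [index[w] for w in sentence]
    # Each example: (ids of the context words inside the window, id of the target word).
    examples = [
        ([ids[j] for j in range(max(0, i - window), min(len(ids), i + window + 1)) if j != i], ids[i])
        for i in range(len(ids))
    ]
    losses = []
    for _ in range(epochs):
        total_loss = 0.0
        for context, target in examples:
            # Hidden layer: average of the context embeddings (one-hot inputs times W_in).
            h = [sum(W_in[c][d] for c in context) / len(context) for d in range(dim)]
            probs = softmax([sum(h[d] * W_out[d][k] for d in range(dim)) for k in range(V)])
            total_loss -= math.log(probs[target])          # cross-entropy on the target word
            err = [p - (1.0 if k == target else 0.0) for k, p in enumerate(probs)]
            grad_h = [sum(err[k] * W_out[d][k] for k in range(V)) for d in range(dim)]
            for d in range(dim):
                for k in range(V):
                    W_out[d][k] -= lr * err[k] * h[d]
            for c in context:                              # spread the gradient over context words
                for d in range(dim):
                    W_in[c][d] -= lr * grad_h[d] / len(context)
        losses.append(total_loss / len(examples))
    return vocab, W_in, losses


vocab, embeddings, losses = train_cbow("excitement is building for the final match".split())
print(f"average loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

After training, the rows of `W_in` are the word vectors; the output matrix `W_out` exists only to make the prediction task trainable.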

4.5. Skip-Gram

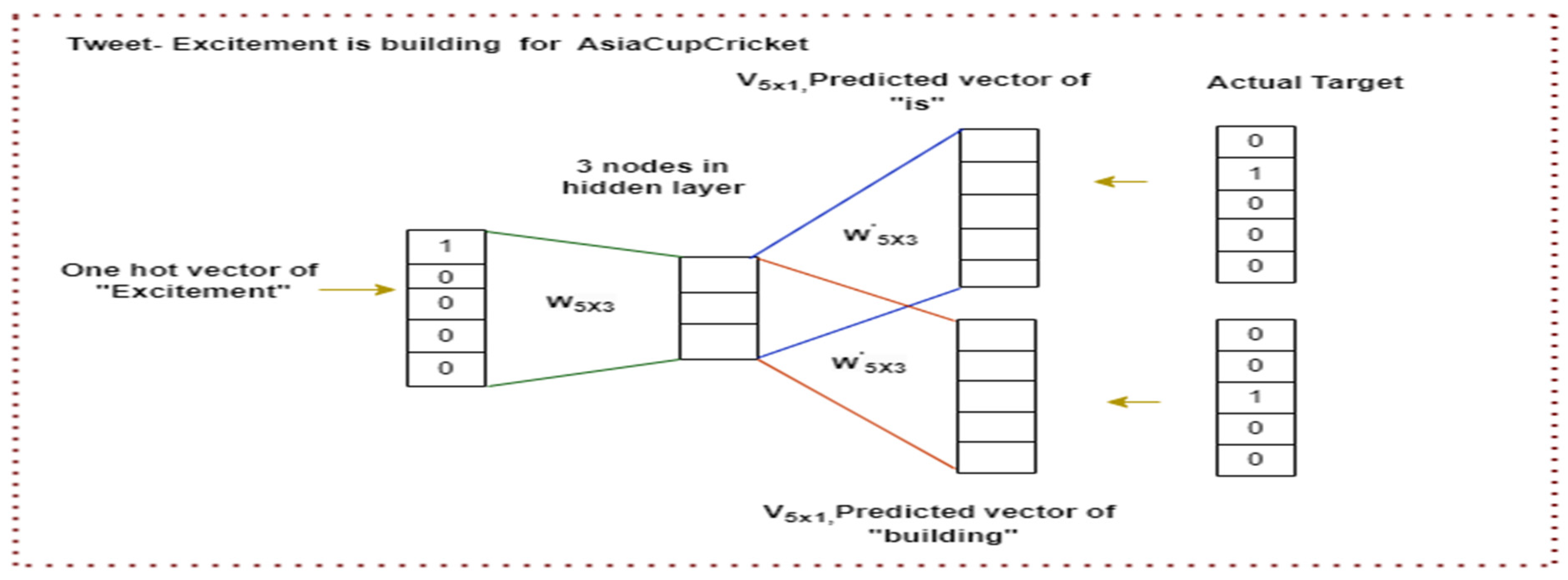

Skip-gram, also employed in this study, is the counterpart of CBoW for word embedding, that is, for representing words as dense vectors within a continuous vector space. It learns word embeddings by predicting the context words from the target word. It can capture semantic relationships between words, as words with similar meanings tend to have similar embeddings.

Figure 5 illustrates the working principle of this technique.
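A toy Skip-gram trainer in pure Python, again with a full softmax output layer. Each (target, context) pair is one training example, and the hidden layer is simply the target word's embedding. All hyperparameters and the sentence are hypothetical; real implementations use negative sampling or hierarchical softmax over large corpora.

```python
import math
import random


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_skipgram(sentence, dim=3, window=1, lr=0.1, epochs=200, seed=0):
    """Toy Skip-gram (full softmax): predict each context word from the target word."""
    vocab = sorted(set(sentence))
    index = {word: i for i, word in enumerate(vocab)}
    V = len(vocab)
    rng = random.Random(seed)
    W_in = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(V)]   # V x dim embeddings
    W_out = [[rng.uniform(-0.5, 0.5) for _ in range(V)] for _ in range(dim)]  # dim x V output weights
    ids = [index[w] for w in sentence]
    # One (target, context) pair per context word inside the window.
    pairs = [(ids[i], ids[j])
             for i in range(len(ids))
             for j in range(max(0, i - window), min(len(ids), i + window + 1))
             if j != i]
    losses = []
    for _ in range(epochs):
        total_loss = 0.0
        for target, context in pairs:
            h = list(W_in[target])                         # hidden layer = target embedding (copy)
            probs = softmax([sum(h[d] * W_out[d][k] for d in range(dim)) for k in range(V)])
            total_loss -= math.log(probs[context])
            err = [p - (1.0 if k == context else 0.0) for k, p in enumerate(probs)]
            grad_h = [sum(err[k] * W_out[d][k] for k in range(V)) for d in range(dim)]
            for d in range(dim):
                for k in range(V):
                    W_out[d][k] -= lr * err[k] * h[d]
                W_in[target][d] -= lr * grad_h[d]
        losses.append(total_loss / len(pairs))
    return vocab, W_in, losses


vocab, embeddings, losses = train_skipgram("excitement is building for the final match".split())
print(f"average loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Because a word with two neighbours must predict both, its loss cannot reach zero; the embeddings of words that share neighbours are pushed toward similar directions, which is how similar words end up with similar vectors.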

7. Results and Discussion

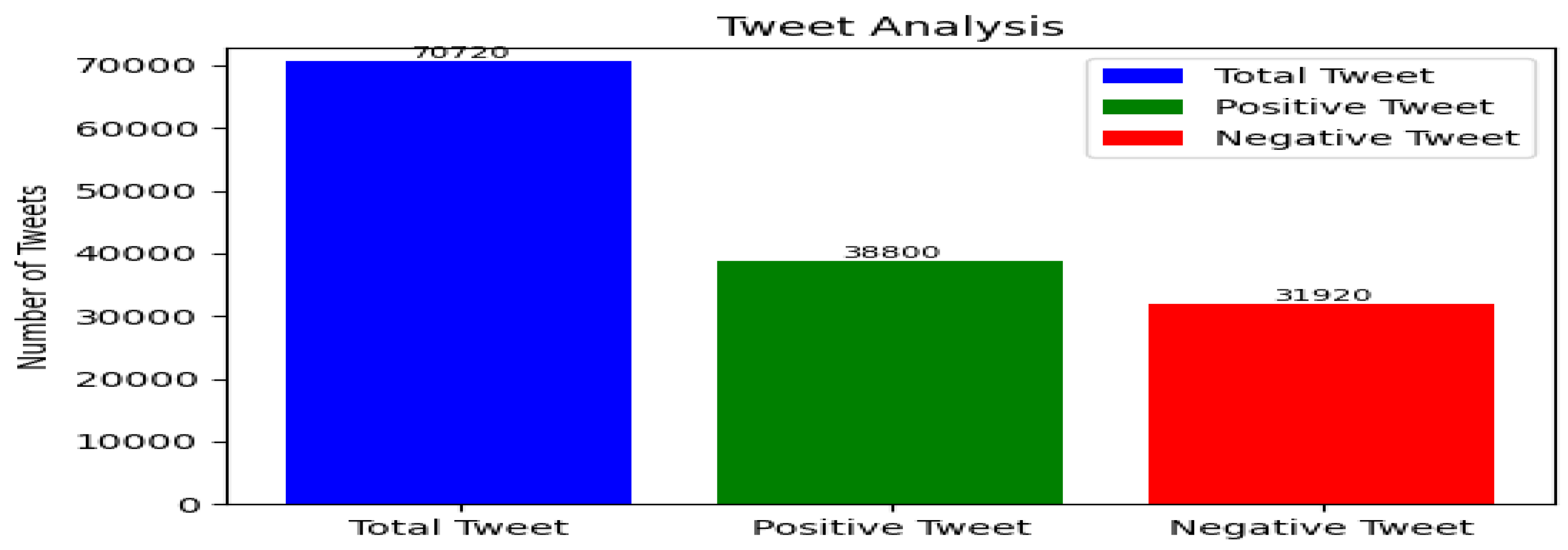

The dataset contains 70,720 tweets, split into training and testing sets following the 70-30 rule, with 70% of the data allocated to training and the remaining 30% to testing. To assess the effectiveness of our methodology, we employed a set of performance metrics: Accuracy, Precision, Recall, F1-score, Specificity (Negative Recall), and ROC_AUC. These performance metrics are defined below.

Accuracy measures the proportion of all data points that are predicted correctly.

Precision measures the proportion of samples predicted as positive that are actually positive.

Recall (or Sensitivity) measures the proportion of actual positive samples that are predicted correctly.

F1-Score is the harmonic mean of Precision and Recall.

Negative Recall (or Specificity) measures the proportion of actual negative samples that are predicted correctly.
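For reference, these are the standard definitions of the metrics in terms of the confusion-matrix counts TP, TN, FP, and FN introduced in Section 7.2:

```latex
\begin{aligned}
\text{Accuracy}    &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Precision}   &= \frac{TP}{TP + FP} \\
\text{Recall}      &= \frac{TP}{TP + FN} \\
F_1                &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \\
\text{Specificity} &= \frac{TN}{TN + FP}
\end{aligned}
```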

Table 1.

Performance Measures (in percentage) for sentiment classification.


| Performance Measures | NB | DT | KNN | LR | RF |
|---|---|---|---|---|---|
| Accuracy | 81.77 | 94.55 | 89.43 | 87.44 | 96.10 |
| Precision | 87.75 | 97.94 | 91.80 | 86.98 | 98.91 |
| Recall | 73.82 | 90.06 | 86.57 | 88.02 | 93.34 |
| F1-Score | 80.19 | 94.29 | 89.11 | 87.50 | 96.01 |
| Specificity | 73.81 | 90.04 | 86.56 | 88.01 | 92.91 |
| ROC_AUC | 81.77 | 94.55 | 89.43 | 87.44 | 96.15 |

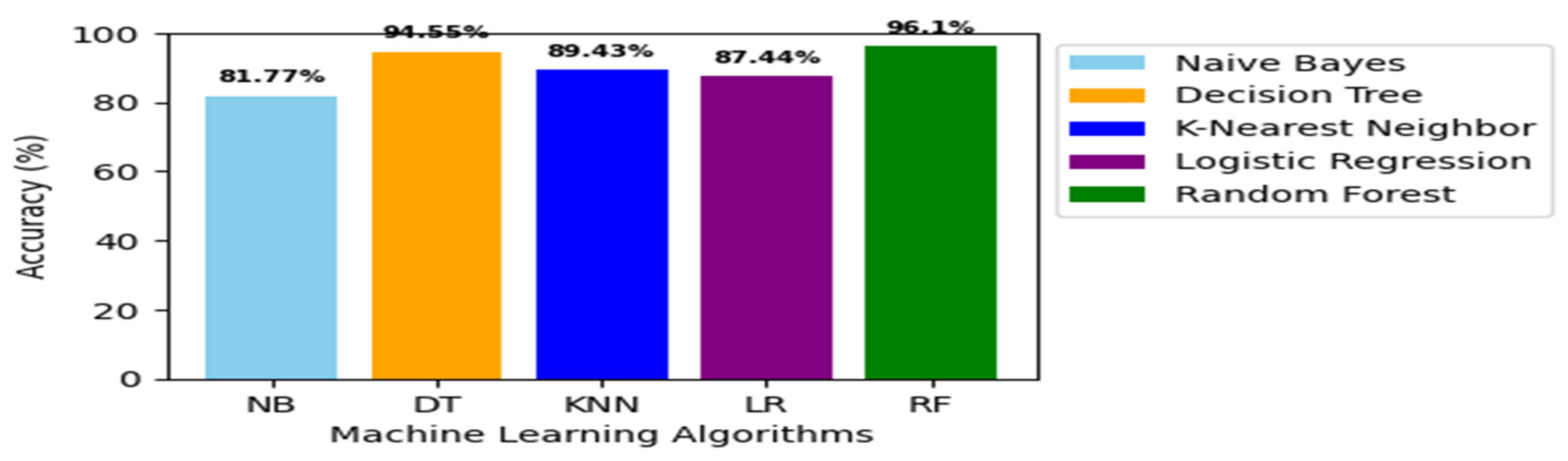

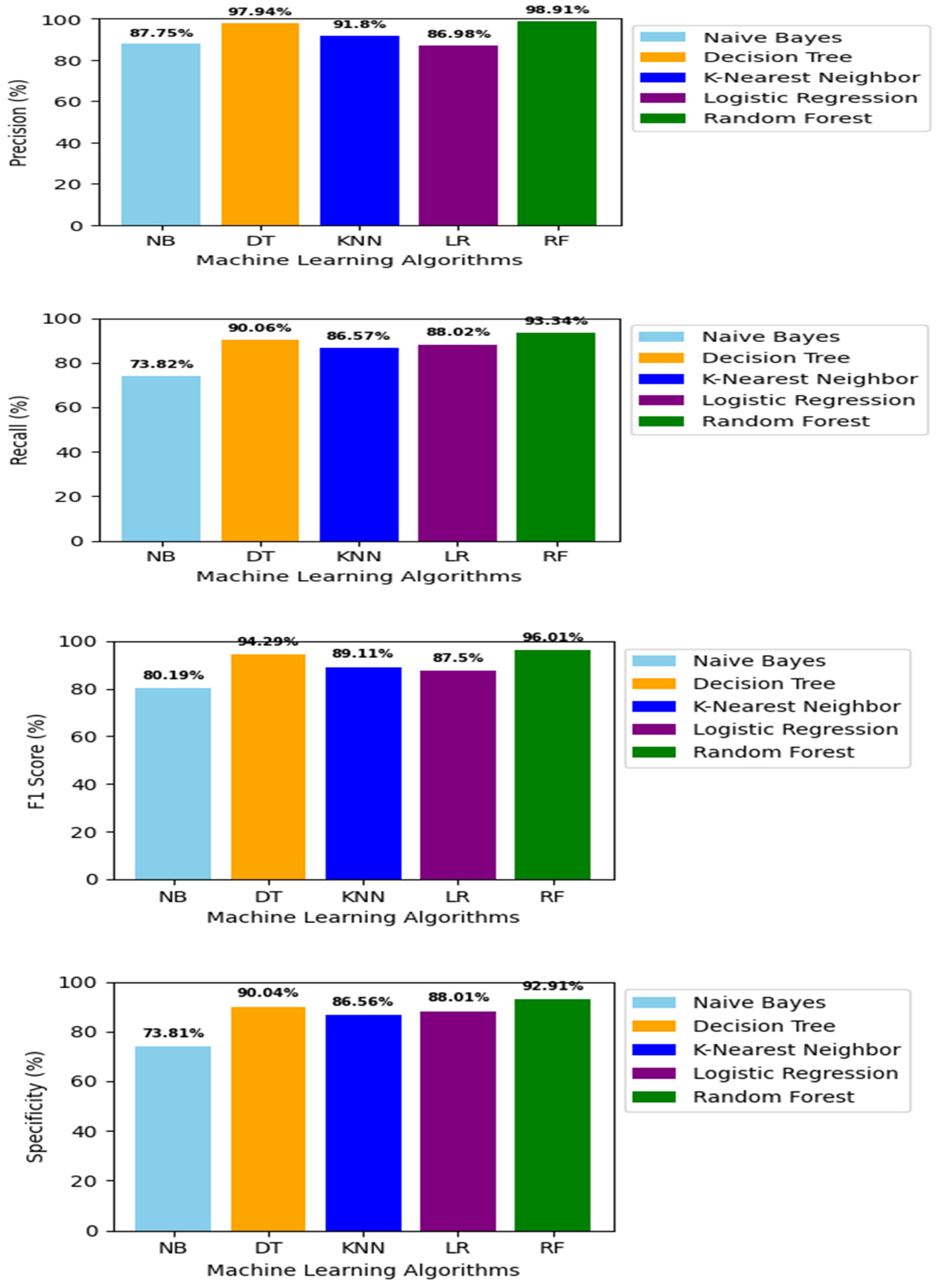

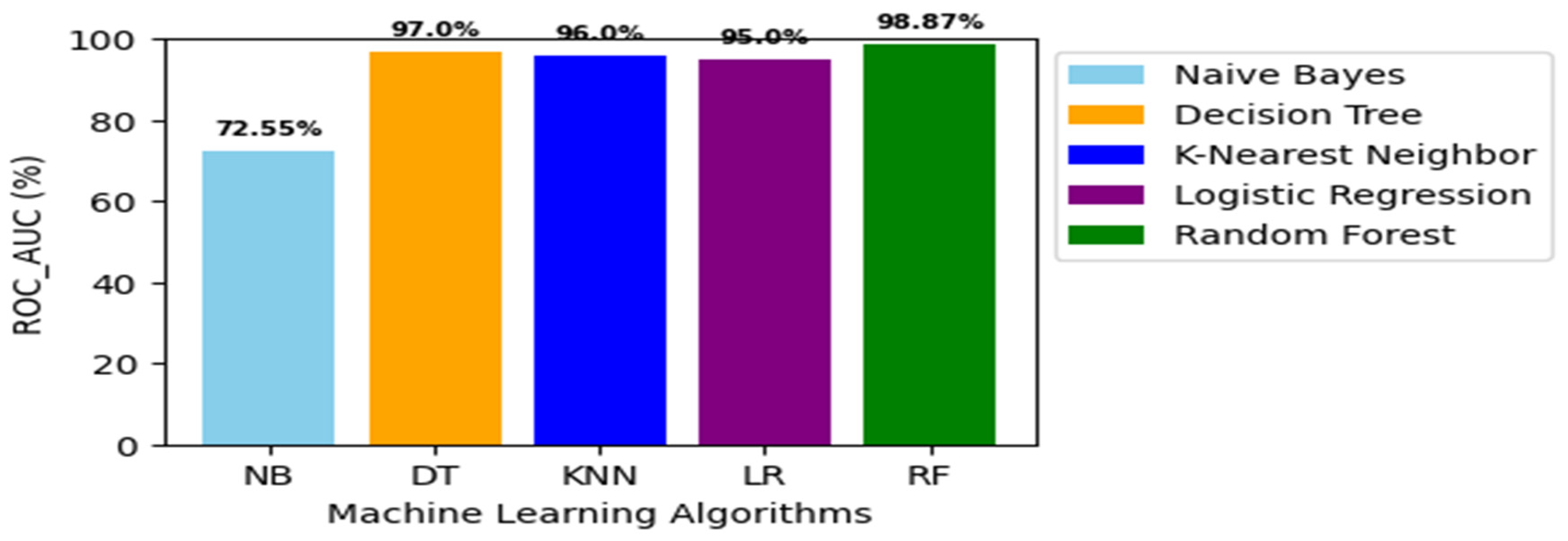

7.1. Comparative Analysis through Evaluation Metrics

As depicted in Figure 10(a), Random Forest (RF) achieved an accuracy of 96.15%, surpassing NB (81.77%), DT (94.55%), KNN (89.43%), and LR (87.44%).

RF was also the most precise classifier, with a precision of 98.91% compared to NB (87.75%), DT (97.94%), KNN (91.80%), and LR (86.98%), as highlighted in Figure 10(b). RF likewise achieved the highest recall, 93.34%, exceeding NB (73.82%), DT (90.06%), KNN (86.57%), and LR (88.02%), as illustrated in Figure 10(c). In terms of F1-score, RF led with 96.01%, ahead of NB (80.19%), DT (94.29%), KNN (89.11%), and LR (87.50%), as shown in Figure 10(d). RF also had a specificity of 92.91% (Figure 10(e)), outperforming NB (73.81%), DT (90.04%), KNN (86.56%), and LR (88.01%). Finally, RF achieved an ROC_AUC of 96.15%, as illustrated in Figure 10(f), clearly outpacing NB (81.77%), DT (94.55%), KNN (89.43%), and LR (87.44%). These findings show that RF performs consistently well across all evaluation metrics.

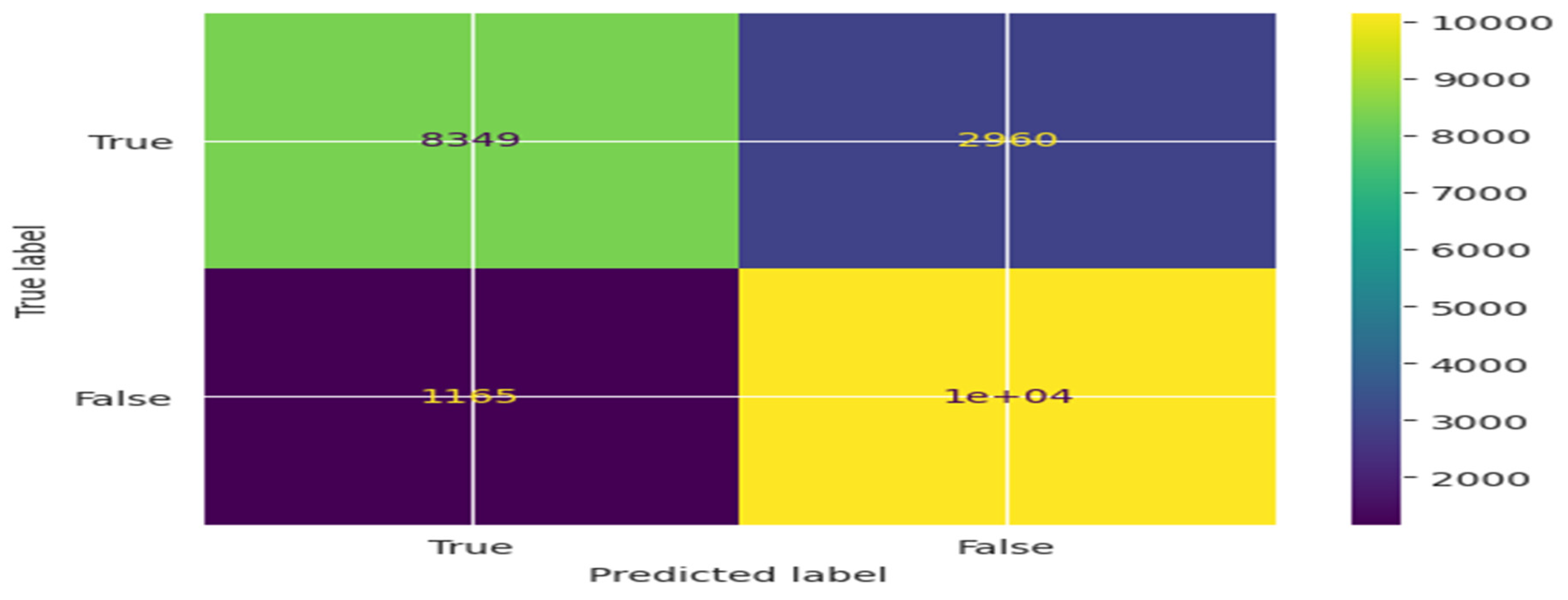

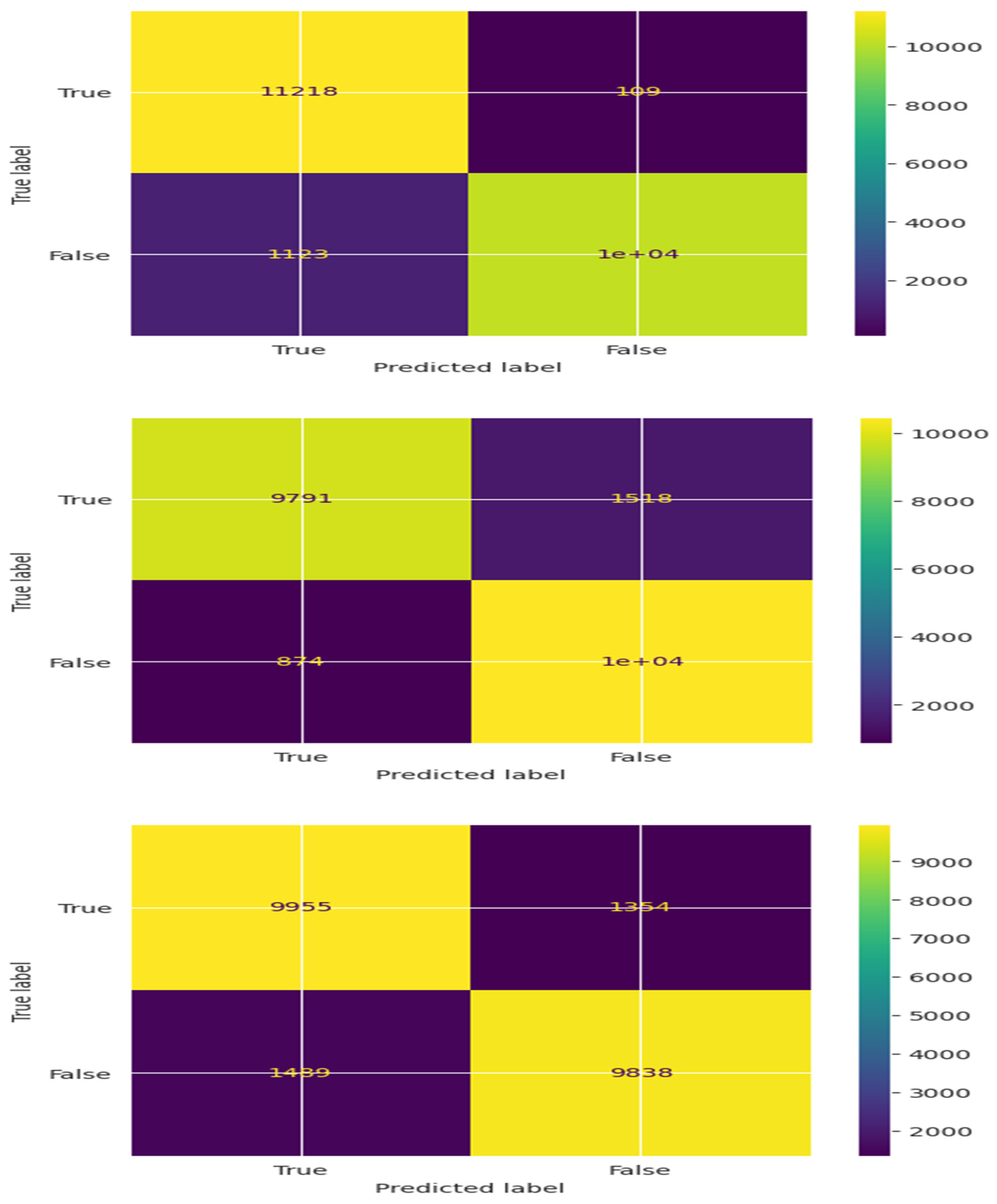

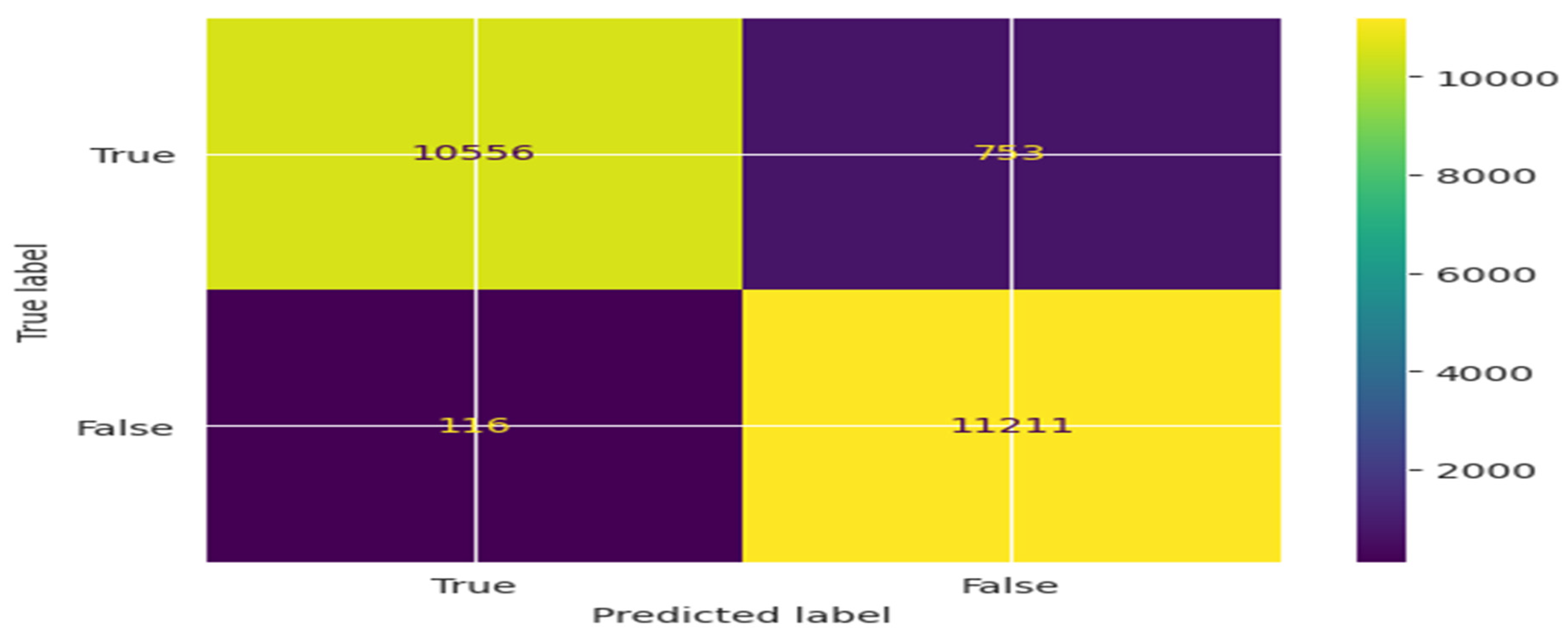

7.2. Analysis through Confusion Matrix

A confusion matrix is a performance evaluation tool for classification models that breaks predictions down into TP (true positives), TN (true negatives), FP (false positives), and FN (false negatives). Figure 11(a) illustrates the confusion matrix for the NB classifier, with TP = 8349, FP = 1165, FN = 2960, and TN = 10162. The confusion matrix for the DT classifier, shown in Figure 11(b), has TP = 11218, FP = 1123, FN = 109, and TN = 10186.

For the KNN classifier, the matrix in Figure 11(c) has TP = 9791, FP = 874, FN = 1518, and TN = 10453. Figure 11(d) presents the matrix for the LR classifier, with TP = 9955, FP = 1439, FN = 1354, and TN = 9838. Finally, Figure 11(e) shows the confusion matrix for the RF classifier, with TP = 10556, FP = 116, FN = 753, and TN = 11211.

These values indicate that the RF classifier achieves a high true positive rate while keeping false positives extremely low (116, the fewest of the five classifiers). The confusion matrices for the NB, DT, KNN, LR, and RF classifiers are illustrated above.
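The standard metric formulas can be applied directly to these confusion-matrix counts. In this sketch, applied to the RF counts from Figure 11(e), precision and recall round to the 98.91% and 93.34% reported in Table 1. The function name `classification_metrics` is hypothetical.

```python
def classification_metrics(tp, fp, fn, tn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)                  # sensitivity, true positive rate
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "specificity": tn / (tn + fp),       # true negative rate
    }


# RF counts as reported for Figure 11(e).
rf = classification_metrics(tp=10556, fp=116, fn=753, tn=11211)
print({name: round(100 * value, 2) for name, value in rf.items()})
# → {'accuracy': 96.16, 'precision': 98.91, 'recall': 93.34, 'f1': 96.05, 'specificity': 98.98}
```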

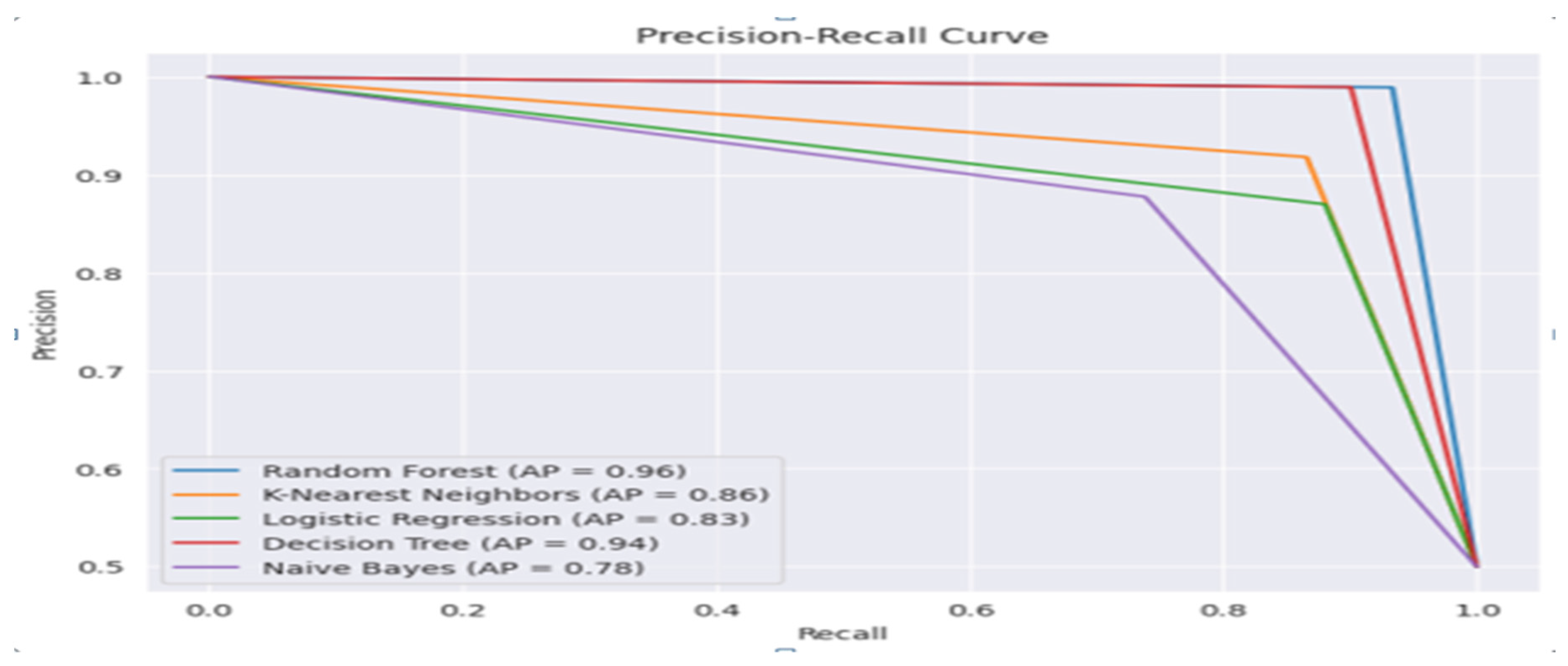

A classifier performs better the closer its ROC curve approaches the top-left corner. A popular metric that summarizes a classifier's overall performance is the area under the ROC curve (ROC-AUC). Its values range from 0 to 1, with 1 denoting perfect classification and 0.5 denoting a classifier no better than random guessing. Of the five algorithms used for sentiment analysis on Twitter data, the RF classifier performs best. Its average precision score is 0.96, meaning that it identifies almost all positive cases while producing few false positives.
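A rank-based sketch of ROC-AUC: the area under the ROC curve equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as one half (the normalized Mann-Whitney U statistic). The pairwise loop is quadratic and is written for clarity only; the labels and scores in the example are made up.

```python
def roc_auc(labels, scores):
    """ROC-AUC as P(score of random positive > score of random negative), ties count 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))  # → 0.75
```

A value of 0.5 arises when scores carry no ranking information, for example when every example receives the same score.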

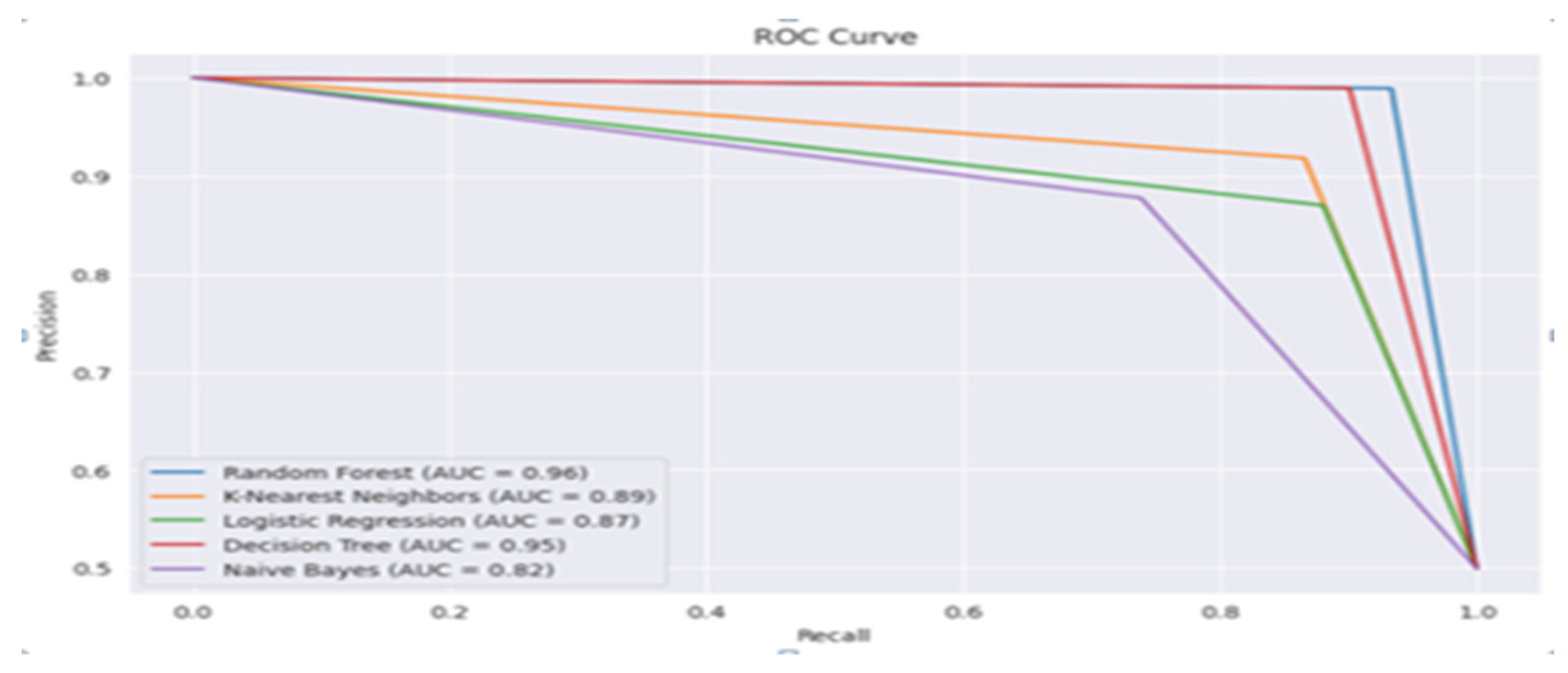

Figure 12(a) displays the ROC curves for the NB, DT, KNN, LR, and RF classifiers. The RF classifier has the highest AUC value, 0.96, which shows that it separates positive from negative sentiment in the Twitter data very effectively. Furthermore, because its curve lies close to the top-left corner, the ROC-AUC indicates strong performance.

Figure 12(b) shows the precision-recall curves for the NB, DT, KNN, LR, and RF classifiers along with their average precision (AP) scores; the RF classifier achieves the highest AP score, 0.96. The ROC curve, by contrast, shows how the relationship between the true positive rate (sensitivity) and the false positive rate (1 - specificity) changes as the classification threshold varies.
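Average precision summarizes a precision-recall curve as a single number. This sketch follows the step-wise definition AP = Σ_n (R_n - R_(n-1)) P_n, where precision P_n and recall R_n are measured at each distinct score threshold; this is the definition documented for scikit-learn's `average_precision_score`. The example data are hypothetical.

```python
def average_precision(labels, scores):
    """AP = sum over thresholds of (R_n - R_{n-1}) * P_n, one threshold per distinct score."""
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("need at least one positive example")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    ap, tp, fp, prev_recall = 0.0, 0, 0, 0.0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Consume every example tied at this score before measuring precision and recall.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        recall = tp / n_pos
        precision = tp / (tp + fp)
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


print(round(average_precision([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]), 4))  # → 0.8333
```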

7.3. Comparison study

In this work, we closely examine and compare several sentiment classification algorithms applied to Twitter data, as illustrated in Table 2. Because of the informal language, brevity, and diverse expression [22] of its users, sentiment analysis on social media sites, particularly Twitter, presents unique challenges. To address these challenges, we explore the effectiveness of several machine learning algorithms. Moreover, because sentiment analysis is used in various corporate sectors to review products, it is equally important to determine whether a posted text is positive, negative, or neutral [23].