Submitted: 19 August 2024

Posted: 20 August 2024


Abstract

Keywords:

1. Introduction

2. Literature Review and Theoretical Framework

2.1. Evolution of Financial Advisory Services: From Robo-Advisors to GenAI

2.2. GenAI’s Attributes: Personalized Investment Suggestion, Human-Like Empathy, and Continuous Improvement

2.3. Perceived Authenticity of GenAI

2.4. Utilitarian Attitude towards GenAI and Consumer Response

2.5. AI Literacy

2.6. Service-Dominant Logic (SDL) and Artificially Intelligent Device Use Acceptance (AIDUA)

2.7. Integrating SDL and AIDUA to Understand Consumer-AI Interaction

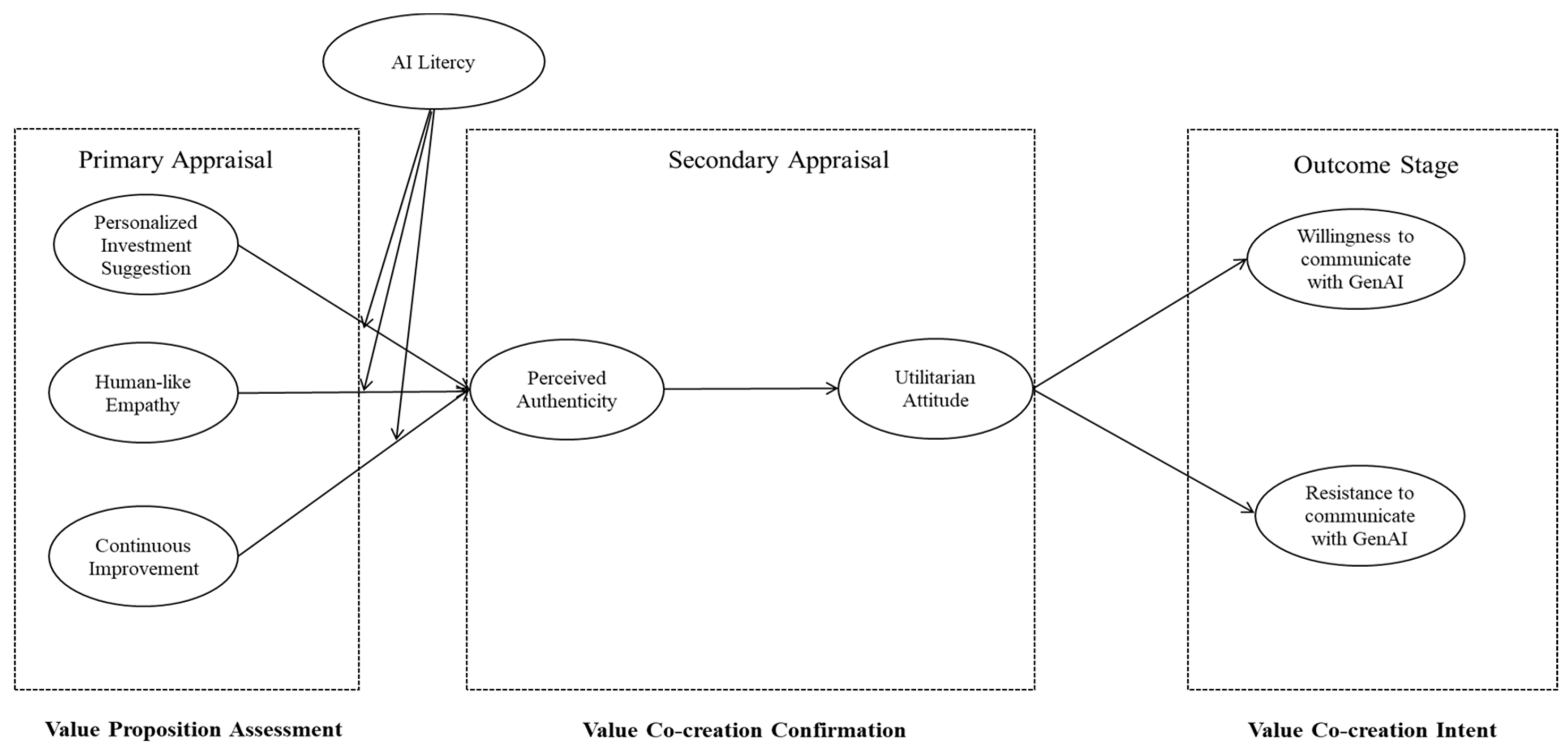

3. Hypotheses Development and Research Model

3.1. Personalized Investment Suggestion, Human-Like Empathy, Continuous Improvement

3.2. Perceived Authenticity

3.3. Utilitarian Attitude

3.4. AI Literacy

4. Research Methodology

4.1. Measurement Development

4.2. Data Collection

5. Data Analysis and Results

5.1. Measurement Model

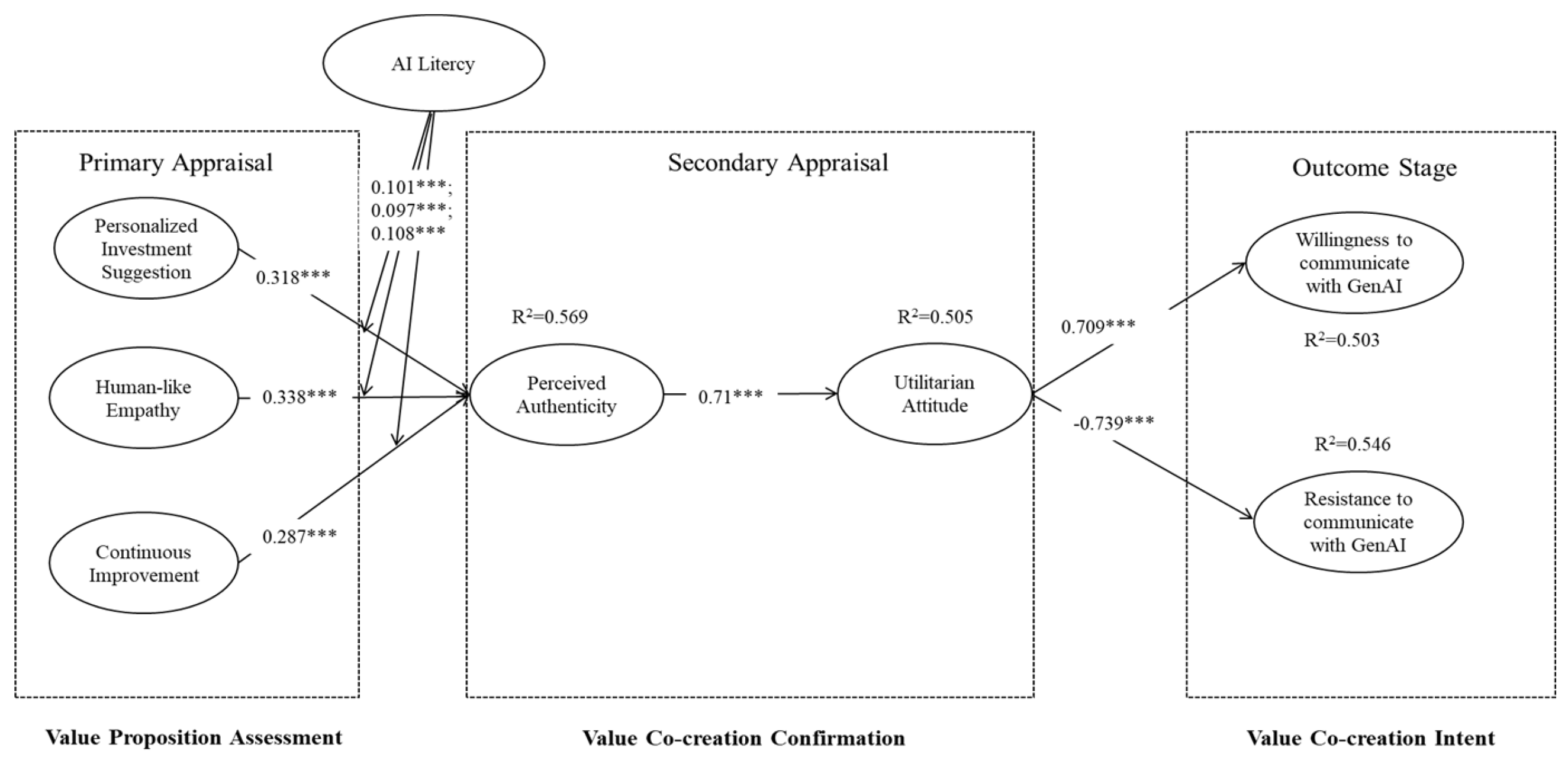

5.2. Structural Model

6. Conclusion

6.1. Academic Implications

6.2. Practical Implications

6.3. Limitations and Future Directions

Conflicts of Interest

Appendix

| Constructs | Measurements | Source(s) |
|---|---|---|
| Personalized Investment Suggestion (PIS) | 1. I feel that the investment suggestion by the GenAI is in line with my preferences. | [73] |
| | 2. I feel that the investment suggestion by the GenAI is in line with my taste. | |
| | 3. The investment suggestion by the GenAI is what I am interested in. | |
| | 4. The investment suggestion by the GenAI is better than the suggestions I get from other places. | |
| | 5. I feel that the quality of investment suggestion by the GenAI is what I want. | |
| | 6. My overall evaluation of the GenAI investment suggestion is very high. | |
| | 7. I think the GenAI investment suggestions are valuable. | |
| | 8. The investment suggestions of the GenAI are flexible and changeable according to my question. | |
| Human-like Empathy (HLE) | 1. The GenAI makes me feel warm. | [26,74,85] |
| | 2. The GenAI makes me feel that it cares about my needs. | |
| | 3. The GenAI makes me feel concerned. | |
| | 4. I feel that the GenAI serves me attentively. | |
| | 5. I feel that the GenAI puts my interests first. | |
| | 6. The GenAI gives me personalized attention. | |
| | 7. The GenAI has expressed being able to empathize with the customer’s feelings. | |
| | 8. The GenAI has indicated it could put itself well in the customer’s shoes. | |
| | 9. The GenAI is able to accurately understand the customer’s concerns. | |
| | 10. The GenAI can adopt my perspective and recommend the desired financial products. | |
| | 11. The GenAI is preoccupied with offering me the best financial products. | |
| Continuous Improvement (CI) | 1. The GenAI can learn from past experience. | [73] |
| | 2. The GenAI’s ability is enhanced through learning. | |
| | 3. After a period of use, the GenAI’s performance is getting better and better. | |
| | 4. I can feel the GenAI is constantly upgrading. | |
| | 5. The GenAI fixes previous errors. | |
| | 6. I feel that the GenAI is getting more and more advanced. | |
| | 7. The function of the GenAI has been enhanced. | |
| Perceived Authenticity (PA) | 1. When I think of the GenAI, I see a unique set of characteristics. | [24,76] |
| | 2. I would think of the GenAI as a unique individual. | |
| | 3. Using the GenAI provided me with genuine experiences. | |
| Utilitarian Attitude (UA) | 1. The GenAI is useful. | [68] |
| | 2. The GenAI is productive. | |
| | 3. The GenAI is necessary. | |
| | 4. The GenAI is practical. | |
| | 5. The GenAI is functional. | |
| Willingness to Communicate with GenAI (WCG) | 1. I am willing to receive financial advisory services from GenAI. | [31,77] |
| | 2. I will feel happy to interact with GenAI. | |
| | 3. I am likely to interact with GenAI. | |
| | 4. I would like to utilize the GenAI-powered financial service if there is an opportunity. | |
| | 5. I intend to utilize the GenAI financial advisory service continuously. | |
| | 6. I recommend the GenAI financial advisory service to my friends. | |
| Resistance to Communicate with GenAI (RCG) | 1. The financial advisory service provided by the GenAI is processed in a less humanized manner. | [31,78] |
| | 2. I prefer human contact when looking for investment suggestions. | |
| | 3. People need emotion exchange during service transactions. | |
| | 4. Interaction with the GenAI lacks social contact. | |
| | 5. The existing problems with GenAI make me take a wait-and-see approach to it. | |
| | 6. I do not plan to continue using GenAI. | |
| AI Literacy (AIL) | 1. I can use AI to solve problems involving text and words. | [79,80] |
| | 2. I know how to decide which data to collect and how to process them for training AI models to solve problems. | |
| | 3. I know how to interpret results obtained from AI to solve problems. | |
| | 4. I know how to select AI algorithms to solve problems. | |
| | 5. I know how to improve my ability to use AI for problem-solving. | |
| | 6. I can use AI to solve problems involving images and videos. | |

References

- Sironi, P. FinTech Innovation: From Robo-Advisors to Goal Based Investing and Gamification; John Wiley & Sons: Hoboken, NJ, USA, 2016.

- Dewasiri, N.J.; Karunarathna, K.S.S.N.; Rathnasiri, M.S.H.; Dharmarathne, D.G.; Sood, K. Unleashing the Challenges of Chatbots and ChatGPT in the Banking Industry: Evidence from an Emerging Economy. In The Framework for Resilient Industry: A Holistic Approach for Developing Economies; Routledge: London, UK, 2024; pp. 23-37.

- Brenner, L.; Meyll, T. Robo-Advisors: A Substitute for Human Financial Advice? J. Behav. Exp. Finance 2020, 25, 100275. [CrossRef]

- Bhatia, A.; Chandani, A.; Divekar, R.; Mehta, M.; Vijay, N. Digital Innovation in Wealth Management Landscape: The Moderating Role of Robo Advisors in Behavioural Biases and Investment Decision-Making. Int. J. Innov. Sci. 2022, 14, 693-712. [CrossRef]

- Xia, H.; Zhang, Q.; Zhang, J.Z.; Zheng, L.J. Exploring Investors’ Willingness to Use Robo-Advisors: Mediating Role of Emotional Response. Ind. Manag. Data Syst. 2023, 123, 2857-2881. [CrossRef]

- Fui-Hoon Nah, F.; Zheng, R.; Cai, J.; Siau, K.; Chen, L. Generative AI and ChatGPT: Applications, Challenges, and AI-Human Collaboration. J. Inf. Technol. Case Appl. Res. 2023, 25, 277-304. [CrossRef]

- Pelau, C.; Dabija, D.C.; Ene, I. What Makes an AI Device Human-Like? The Role of Interaction Quality, Empathy, and Perceived Psychological Anthropomorphic Characteristics in the Acceptance of Artificial Intelligence in the Service Industry. Comput. Hum. Behav. 2021, 122, 106855. [CrossRef]

- Vargo, S.L.; Lusch, R.F. Evolving to a New Dominant Logic for Marketing. J. Mark. 2004, 68, 1-17. [CrossRef]

- Gursoy, D.; Chi, O.H.; Lu, L.; Nunkoo, R. Consumers’ Acceptance of Artificially Intelligent (AI) Device Use in Service Delivery. Int. J. Inf. Manag. 2019, 49, 157-169. [CrossRef]

- Huang, M.H.; Rust, R.T. Artificial Intelligence in Service. J. Serv. Res. 2018, 21, 155-172. [CrossRef]

- Roh, T.; Park, B.I.; Xiao, S.S. Adoption of AI-Enabled Robo-Advisors in Fintech: Simultaneous Employment of UTAUT and the Theory of Reasoned Action. J. Electron. Commer. Res. 2023, 24, 29-47. https://api.semanticscholar.org/CorpusID:258835831.

- Chou, S.Y.; Lin, C.W.; Chen, Y.C.; Chiou, J.S. The Complementary Effects of Bank Intangible Value Binding in Customer Robo-Advisory Adoption. Int. J. Bank Mark. 2023, 41, 971-988. [CrossRef]

- Ullah, R.; Ismail, H.B.; Khan, M.T.I.; Zeb, A. Nexus between ChatGPT Usage Dimensions and Investment Decisions Making in Pakistan: Moderating Role of Financial Literacy. Technol. Soc. 2024, 76, 102454.

- Roumeliotis, K.I.; Tselikas, N.D. ChatGPT and Open-AI Models: A Preliminary Review. Future Internet 2023, 15, 192. [CrossRef]

- Javaid, M.; Haleem, A.; Singh, R.P. A Study on ChatGPT for Industry 4.0: Background, Potentials, Challenges, and Eventualities. J. Econ. Technol. 2023, 1, 127-143. [CrossRef]

- Oehler, A.; Horn, M. Does ChatGPT Provide Better Advice than Robo-Advisors? Finance Res. Lett. 2024, 60, 104898. [CrossRef]

- Aldunate, Á.; Maldonado, S.; Vairetti, C.; Armelini, G. Understanding Customer Satisfaction via Deep Learning and Natural Language Processing. Expert Syst. Appl. 2022, 209, 118309. [CrossRef]

- Ko, H.; Lee, J. Can ChatGPT Improve Investment Decisions? From a Portfolio Management Perspective. Finance Res. Lett. 2024, 64, 105433.

- Chen, B.; Wu, Z.; Zhao, R. From Fiction to Fact: The Growing Role of Generative AI in Business and Finance. J. Chin. Econ. Bus. Stud. 2023, 21, 471-496. [CrossRef]

- Srinivasan, S.S.; Anderson, R.; Ponnavolu, K. Customer Loyalty in E-Commerce: An Exploration of its Antecedents and Consequences. J. Retail. 2002, 78, 41-50. [CrossRef]

- Tam, K.Y.; Ho, S.Y. Web Personalization as a Persuasion Strategy: An Elaboration Likelihood Model Perspective. Inf. Syst. Res. 2005, 16, 271-291. [CrossRef]

- Ali, H.; Aysan, A.F. What Will ChatGPT Revolutionize in Financial Industry? Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4403372 (accessed on 18 August 2024).

- Ashta, A.; Herrmann, H. Artificial Intelligence and Fintech: An Overview of Opportunities and Risks for Banking, Investments, and Microfinance. Strateg. Change 2021, 30, 211-222. [CrossRef]

- Vo, D.T.; Nguyen, L.T.V.; Dang-Pham, D.; Hoang, A.P. When Young Customers Co-Create Value of AI-Powered Branded App: The Mediating Role of Perceived Authenticity. Young Consum. 2024, [Epub ahead of print]. [CrossRef]

- Nazir, A.; Wang, Z. A Comprehensive Survey of ChatGPT: Advancements, Applications, Prospects, and Challenges. Meta-Radiology 2023, 100022. [CrossRef]

- Pelau, C.; Dabija, D.C.; Ene, I. What Makes an AI Device Human-Like? The Role of Interaction Quality, Empathy and Perceived Psychological Anthropomorphic Characteristics in the Acceptance of Artificial Intelligence in the Service Industry. Comput. Hum. Behav. 2021, 122, 106855. [CrossRef]

- Alboqami, H. Trust Me, I’m an Influencer!-Causal Recipes for Customer Trust in Artificial Intelligence Influencers in the Retail Industry. J. Retail. Consum. Serv. 2023, 72, 103242. [CrossRef]

- Glikson, E.; Asscher, O. AI-Mediated Apology in a Multilingual Work Context: Implications for Perceived Authenticity and Willingness to Forgive. Comput. Hum. Behav. 2023, 140, 107592. [CrossRef]

- Jones, C.L.E.; Hancock, T.; Kazandjian, B.; Voorhees, C.M. Engaging the Avatar: The Effects of Authenticity Signals during Chat-Based Service Recoveries. J. Bus. Res. 2022, 144, 703-716. [CrossRef]

- Stahl, B.C.; Eke, D. The Ethics of ChatGPT–Exploring the Ethical Issues of an Emerging Technology. Int. J. Inf. Manag. 2024, 74, 102700. [CrossRef]

- Ma, X.; Huo, Y. Are Users Willing to Embrace ChatGPT? Exploring the Factors on the Acceptance of Chatbots from the Perspective of AIDUA Framework. Technol. Soc. 2023, 75, 102362. [CrossRef]

- Niu, B.; Mvondo, G.F.N. I Am ChatGPT, the Ultimate AI Chatbot! Investigating the Determinants of Users’ Loyalty and Ethical Usage Concerns of ChatGPT. J. Retail. Consum. Serv. 2024, 76, 103562. [CrossRef]

- Paul, J.; Ueno, A.; Dennis, C. ChatGPT and Consumers: Benefits, Pitfalls and Future Research Agenda. Int. J. Consum. Stud. 2023, 47, 1213-1225. [CrossRef]

- Chang, T.S.; Hsiao, W.H. Understand Resist Use Online Customer Service Chatbot: An Integrated Innovation Resist Theory and Negative Emotion Perspective. Aslib J. Inf. Manag. 2024. [CrossRef]

- Baek, T.H.; Kim, M. Is ChatGPT Scary Good? How User Motivations Affect Creepiness and Trust in Generative Artificial Intelligence. Telemat. Inform. 2023, 83, 102030. [CrossRef]

- Ng, D.T.K.; Leung, J.K.L.; Chu, S.K.W.; Qiao, M.S. Conceptualizing AI Literacy: An Exploratory Review. Comput. Educ. Artif. Intell. 2021, 2, 100041. [CrossRef]

- Perchik, J.D.; Smith, A.D.; Elkassem, A.A.; Park, J.M.; Rothenberg, S.A.; Tanwar, M.; Sotoudeh, H. Artificial Intelligence Literacy: Developing a Multi-Institutional Infrastructure for AI Education. Acad. Radiol. 2023, 30, 1472-1480. [CrossRef]

- Cardon, P.; Fleischmann, C.; Aritz, J.; Logemann, M.; Heidewald, J. The Challenges and Opportunities of AI-Assisted Writing: Developing AI Literacy for the AI Age. Bus. Prof. Commun. Q. 2023, 86, 257-295. [CrossRef]

- Shin, D.; Rasul, A.; Fotiadis, A. Why Am I Seeing This? Deconstructing Algorithm Literacy through the Lens of Users. Internet Res. 2022, 32, 1214-1234. [CrossRef]

- Wang, B.; Rau, P.L.P.; Yuan, T. Measuring User Competence in Using Artificial Intelligence: Validity and Reliability of Artificial Intelligence Literacy Scale. Behav. Inf. Technol. 2023, 42, 1324-1337. [CrossRef]

- Markus, A.; Pfister, J.; Carolus, A.; Hotho, A.; Wienrich, C. Effects of AI Understanding-Training on AI Literacy, Usage, Self-Determined Interactions, and Anthropomorphization with Voice Assistants. Comput. Educ. Open 2024, 6, 100176. [CrossRef]

- Vargo, S.L.; Maglio, P.P.; Akaka, M.A. On Value and Value Co-Creation: A Service Systems and Service Logic Perspective. Eur. Manag. J. 2008, 26, 145-152. [CrossRef]

- Grönroos, C. Service Logic Revisited: Who Creates Value? And Who Co-Creates? Eur. Bus. Rev. 2008, 20, 298-314. [CrossRef]

- Riikkinen, M.; Saarijärvi, H.; Sarlin, P.; Lähteenmäki, I. Using Artificial Intelligence to Create Value in Insurance. Int. J. Bank Mark. 2018, 36, 1145-1168. [CrossRef]

- Zhu, H.; Vigren, O.; Söderberg, I.L. Implementing Artificial Intelligence Empowered Financial Advisory Services: A Literature Review and Critical Research Agenda. J. Bus. Res. 2024, 174, 114494. [CrossRef]

- Lin, H.; Chi, O.H.; Gursoy, D. Antecedents of Customers’ Acceptance of Artificially Intelligent Robotic Device Use in Hospitality Services. J. Hosp. Mark. Manag. 2020, 29, 530-549. [CrossRef]

- Kelly, S.; Kaye, S.A.; Oviedo-Trespalacios, O. What Factors Contribute to the Acceptance of Artificial Intelligence? A Systematic Review. Telemat. Inform. 2023, 77, 101925. [CrossRef]

- Bag, S.; Srivastava, G.; Bashir, M.M.A.; Kumari, S.; Giannakis, M.; Chowdhury, A.H. Journey of Customers in this Digital Era: Understanding the Role of Artificial Intelligence Technologies in User Engagement and Conversion. Benchmarking 2022, 29, 2074-2098. [CrossRef]

- Ameen, N.; Tarhini, A.; Reppel, A.; Anand, A. Customer Experiences in the Age of Artificial Intelligence. Comput. Hum. Behav. 2021, 114, 106548. [CrossRef]

- Vesanen, J. What is Personalization? A Conceptual Framework. Eur. J. Mark. 2007, 41, 409-418. [CrossRef]

- Musto, C.; Semeraro, G.; Lops, P.; De Gemmis, M.; Lekkas, G. Personalized Finance Advisory through Case-Based Recommender Systems and Diversification Strategies. Decis. Support Syst. 2015, 77, 100-111. [CrossRef]

- Napoli, J.; Dickinson, S.J.; Beverland, M.B.; Farrelly, F. Measuring Consumer-Based Brand Authenticity. J. Bus. Res. 2014, 67, 1090-1098. [CrossRef]

- Morhart, F.; Malär, L.; Guèvremont, A.; Girardin, F.; Grohmann, B. Brand Authenticity: An Integrative Framework and Measurement Scale. J. Consum. Psychol. 2015, 25, 200-218. [CrossRef]

- Chi, N.T.K.; Hoang Vu, N. Investigating the Customer Trust in Artificial Intelligence: The Role of Anthropomorphism, Empathy Response, and Interaction. CAAI Trans. Intell. Technol. 2023, 8, 260-273. [CrossRef]

- Chuah, S.H.W.; Yu, J. The Future of Service: The Power of Emotion in Human-Robot Interaction. J. Retail. Consum. Serv. 2021, 61, 102551. [CrossRef]

- Huang, M.H.; Rust, R.T. Engaged to a Robot? The Role of AI in Service. J. Serv. Res. 2021, 24, 30-41. [CrossRef]

- Li, J.; Huang, J.; Li, Y. Examining the Effects of Authenticity Fit and Association Fit: A Digital Human Avatar Endorsement Model. J. Retail. Consum. Serv. 2023, 71, 103230. [CrossRef]

- Alimamy, S.; Al-Imamy, S. Customer Perceived Value through Quality Augmented Reality Experiences in Retail: The Mediating Effect of Customer Attitudes. J. Mark. Commun. 2022, 28, 428-447.

- Kwon, J.; Amendah, E.; Ahn, J. Mediating Role of Perceived Authenticity in the Relationship between Luxury Service Experience and Life Satisfaction. J. Strateg. Mark. 2024, 32, 137-151. [CrossRef]

- Zamil, A.M.; Ali, S.; Akbar, M.; Zubr, V.; Rasool, F. The Consumer Purchase Intention toward Hybrid Electric Car: A Utilitarian-Hedonic Attitude Approach. Front. Environ. Sci. 2023, 11, 1101258. [CrossRef]

- Fu, X. Understanding the Adoption Intention for Electric Vehicles: The Role of Hedonic-Utilitarian Values. Energy 2024, 131703. [CrossRef]

- Kim, H.W.; Chan, H.C.; Gupta, S. Value-Based Adoption of Mobile Internet: An Empirical Investigation. Decis. Support Syst. 2007, 43, 111-126. [CrossRef]

- Dinh, C.M.; Park, S. How to Increase Consumer Intention to Use Chatbots? An Empirical Analysis of Hedonic and Utilitarian Motivations on Social Presence and the Moderating Effects of Fear across Generations. Electron. Commer. Res. 2023, 1-41. [CrossRef]

- Hsieh, P.J. An Empirical Investigation of Patients’ Acceptance and Resistance Toward the Health Cloud: The Dual Factor Perspective. Comput. Hum. Behav. 2016, 63, 959-969. [CrossRef]

- Ghosh, M. Empirical Study on Consumers’ Reluctance to Mobile Payments in a Developing Economy. J. Sci. Technol. Policy Manag. 2024, 15, 67-92. [CrossRef]

- Attié, E.; Meyer-Waarden, L. The Acceptance and Usage of Smart Connected Objects According to Adoption Stages: An Enhanced Technology Acceptance Model Integrating the Diffusion of Innovation, Uses and Gratification and Privacy Calculus Theories. Technol. Forecast. Soc. Change 2022, 176, 121485. [CrossRef]

- Jan, I.U.; Ji, S.; Kim, C. What (De) Motivates Customers to Use AI-Powered Conversational Agents for Shopping? The Extended Behavioral Reasoning Perspective. J. Retail. Consum. Serv. 2023, 75, 103440. [CrossRef]

- Priya, B.; Sharma, V. Exploring Users’ Adoption Intentions of Intelligent Virtual Assistants in Financial Services: An Anthropomorphic Perspectives and Socio-Psychological Perspectives. Comput. Hum. Behav. 2023, 148, 107912. [CrossRef]

- Carolus, A.; Koch, M.J.; Straka, S.; Latoschik, M.E.; Wienrich, C. MAILS—Meta AI Literacy Scale: Development and Testing of an AI Literacy Questionnaire Based on Well-Founded Competency Models and Psychological Change- and Meta-Competencies. Comput. Hum. Behav. Artif. Humans 2023, 1, 100014. [CrossRef]

- Tirado-Morueta, R.; Aguaded-Gómez, J.I.; Hernando-Gómez, Á. The Socio-Demographic Divide in Internet Usage Moderated by Digital Literacy Support. Technol. Soc. 2018, 55, 47-55. [CrossRef]

- Baabdullah, A.M.; Alalwan, A.A.; Algharabat, R.S.; Metri, B.; Rana, N.P. Virtual Agents and Flow Experience: An Empirical Examination of AI-Powered Chatbots. Technol. Forecast. Soc. Change 2022, 181, 121772. [CrossRef]

- Sperling, K.; Stenberg, C.J.; McGrath, C.; Åkerfeldt, A.; Heintz, F.; Stenliden, L. In Search of Artificial Intelligence (AI) Literacy in Teacher Education: A Scoping Review. Comput. Educ. Open 2024, 100169. [CrossRef]

- Chen, Q.; Gong, Y.; Lu, Y.; Tang, J. Classifying and Measuring the Service Quality of AI Chatbot in Frontline Service. J. Bus. Res. 2022, 145, 552-568. [CrossRef]

- Fu, J.; Mouakket, S.; Sun, Y. The Role of Chatbots’ Human-Like Characteristics in Online Shopping. Electron. Commer. Res. Appl. 2023, 61, 101304. [CrossRef]

- Seitz, L. Artificial Empathy in Healthcare Chatbots: Does it Feel Authentic? Comput. Hum. Behav. Artif. Humans 2024, 2, 100067. [CrossRef]

- Meng, L.M.; Li, T.; Huang, X. Double-Sided Messages Improve the Acceptance of Chatbots. Ann. Tour. Res. 2023, 102, 103644. [CrossRef]

- Kim, W.B.; Hur, H.J. What Makes People Feel Empathy for AI Chatbots? Assessing the Role of Competence and Warmth. Int. J. Hum. Comput. Interact. 2023, 1-14.

- Yang, B.; Sun, Y.; Shen, X.L. Understanding AI-Based Customer Service Resistance: A Perspective of Defective AI Features and Tri-Dimensional Distrusting Beliefs. Inf. Process. Manag. 2023, 60, 103257. [CrossRef]

- Almatrafi, O.; Johri, A.; Lee, H. A Systematic Review of AI Literacy Conceptualization, Constructs, and Implementation and Assessment Efforts (2019-2023). Comput. Educ. Open 2024, 100173. [CrossRef]

- Kong, S.C.; Cheung, M.Y.W.; Tsang, O. Developing an Artificial Intelligence Literacy Framework: Evaluation of a Literacy Course for Senior Secondary Students Using a Project-Based Learning Approach. Comput. Educ. Artif. Intell. 2024, 6, 100214. [CrossRef]

- Podsakoff, P.M.; Organ, D.W. Self-Reports in Organizational Research: Problems and Prospects. J. Manag. 1986, 12, 531-544. [CrossRef]

- Hair, J.F.; Gabriel, M.; Patel, V. AMOS Covariance-Based Structural Equation Modeling (CB-SEM): Guidelines on its Application as a Marketing Research Tool. Braz. J. Mark. 2014, 13, 1-15. [CrossRef]

- Raza, S.A.; Qazi, W.; Khan, K.A.; Salam, J. Social Isolation and Acceptance of the Learning Management System (LMS) in the Time of COVID-19 Pandemic: An Expansion of the UTAUT Model. J. Educ. Comput. Res. 2021, 59, 183-208. [CrossRef]

- Fornell, C.; Larcker, D.F. Structural Equation Models with Unobservable Variables and Measurement Error: Algebra and Statistics. J. Mark. Res. 1981, 18, 39-50. [CrossRef]

- Hu, L.T.; Bentler, P.M. Cutoff Criteria for Fit Indexes in Covariance Structure Analysis: Conventional Criteria Versus New Alternatives. Struct. Equ. Modeling 1999, 6, 1-55. [CrossRef]

- Kim, J.; Kang, S.; Bae, J. Human Likeness and Attachment Effect on the Perceived Interactivity of AI Speakers. J. Bus. Res. 2022, 144, 797-804. [CrossRef]

- Pitardi, V. Personalized and Contextual Artificial Intelligence-Based Services Experience. In Artificial Intelligence in Customer Service: The Next Frontier for Personalized Engagement; Springer: Cham, Switzerland, 2023; pp. 101-122.

- Lee, G.; Kim, H.Y. Human vs. AI: The Battle for Authenticity in Fashion Design and Consumer Response. J. Retail. Consum. Serv. 2024, 77, 103690. [CrossRef]

- Pandey, P.; Rai, A.K. Analytical Modeling of Perceived Authenticity in AI Assistants: Application of PLS-Predict Algorithm and Importance-Performance Map Analysis. South Asian J. Bus. Stud. 2024. [CrossRef]

- Wen, H.; Zhang, L.; Sheng, A.; Li, M.; Guo, B. From “Human-to-Human” to “Human-to-Non-Human”–Influence Factors of Artificial Intelligence-Enabled Consumer Value Co-Creation Behavior. Front. Psychol. 2022, 13, 863313. [CrossRef]

- Markovitch, D.G.; Stough, R.A.; Huang, D. Consumer Reactions to Chatbot Versus Human Service: An Investigation in the Role of Outcome Valence and Perceived Empathy. J. Retail. Consum. Serv. 2024, 79, 103847. [CrossRef]

- Baidoo-Anu, D.; Ansah, L.O. Education in the Era of Generative Artificial Intelligence (AI): Understanding the Potential Benefits of ChatGPT in Promoting Teaching and Learning. J. AI 2023, 7, 52-62. [CrossRef]

- Raj, R.; Singh, A.; Kumar, V.; Verma, P. Analyzing the Potential Benefits and Use Cases of ChatGPT as a Tool for Improving the Efficiency and Effectiveness of Business Operations. BenchCouncil Trans. Benchmarks Stand. Eval. 2023, 3, 100140. [CrossRef]

- Rese, A.; Ganster, L.; Baier, D. Chatbots in Retailers’ Customer Communication: How to Measure Their Acceptance? J. Retail. Consum. Serv. 2020, 56, 102176. [CrossRef]

- Kuhail, M.A.; Thomas, J.; Alramlawi, S.; Shah, S.J.H.; Thornquist, E. Interacting with a Chatbot-Based Advising System: Understanding the Effect of Chatbot Personality and User Gender on Behavior. Informatics 2022, 9, 81. [CrossRef]

- Alimamy, S.; Kuhail, M.A. I Will Be with You Alexa! The Impact of Intelligent Virtual Assistant’s Authenticity and Personalization on User Reusage Intentions. Comput. Hum. Behav. 2023, 143, 107711. [CrossRef]

- Du, H.; Sun, Y.; Jiang, H.; Islam, A.Y.M.; Gu, X. Exploring the Effects of AI Literacy in Teacher Learning: An Empirical Study. Humanit. Soc. Sci. Commun. 2024, 11, 1-10. [CrossRef]

| Demographics | Category | Frequency | Percentage (%) |
|---|---|---|---|
| Gender | Male | 412 | 50.1 |
| | Female | 410 | 49.9 |
| Age | 18-24 | 139 | 16.9 |
| | 25-34 | 322 | 39.2 |
| | 35-44 | 233 | 28.3 |
| | 45-54 | 86 | 10.5 |
| | 55-64 | 35 | 4.3 |
| | Above 65 | 7 | 0.9 |
| Education Background | High school and below | 164 | 20.0 |
| | 3-year college | 252 | 30.7 |
| | Bachelor | 363 | 44.2 |
| | Master and above | 43 | 5.2 |
| Monthly Income | 3000 CNY and below | 141 | 17.2 |
| | 3001-5000 CNY | 423 | 51.5 |
| | 5001-7000 CNY | 140 | 17.0 |
| | 7001-9000 CNY | 61 | 7.4 |
| | 9000 CNY and above | 57 | 6.9 |
| Frequency of using GenAI | Several times per day | 29 | 3.5 |
| | Once a day | 78 | 9.5 |
| | Several times per week | 106 | 12.9 |
| | Once a week | 364 | 44.3 |
| | Several times per month | 208 | 25.3 |
| | Once a month | 37 | 4.5 |

| Constructs | Items | Item loadings | Cronbach’s Alpha | CR | AVE |
|---|---|---|---|---|---|
| Personalized Investment Suggestion | PIS1 | 0.924 | 0.926 | 0.928 | 0.619 |
| | PIS2 | 0.778 | | | |
| | PIS3 | 0.769 | | | |
| | PIS4 | 0.741 | | | |
| | PIS5 | 0.747 | | | |
| | PIS6 | 0.744 | | | |
| | PIS7 | 0.783 | | | |
| | PIS8 | 0.791 | | | |
| Human-like Empathy | HLE1 | 0.898 | 0.949 | 0.950 | 0.635 |
| | HLE2 | 0.793 | | | |
| | HLE3 | 0.778 | | | |
| | HLE4 | 0.780 | | | |
| | HLE5 | 0.754 | | | |
| | HLE6 | 0.788 | | | |
| | HLE7 | 0.768 | | | |
| | HLE8 | 0.768 | | | |
| | HLE9 | 0.798 | | | |
| | HLE10 | 0.814 | | | |
| | HLE11 | 0.814 | | | |
| Continuous Improvement | CI1 | 0.870 | 0.915 | 0.917 | 0.613 |
| | CI2 | 0.778 | | | |
| | CI3 | 0.718 | | | |
| | CI4 | 0.750 | | | |
| | CI5 | 0.788 | | | |
| | CI6 | 0.771 | | | |
| | CI7 | 0.796 | | | |
| Perceived Authenticity | PA1 | 0.886 | 0.845 | 0.853 | 0.660 |
| | PA2 | 0.764 | | | |
| | PA3 | 0.781 | | | |
| Utilitarian Attitude | UA1 | 0.887 | 0.865 | 0.876 | 0.587 |
| | UA2 | 0.733 | | | |
| | UA3 | 0.685 | | | |
| | UA4 | 0.740 | | | |
| | UA5 | 0.771 | | | |
| Willingness to communicate with GenAI | WCG1 | 0.888 | 0.894 | 0.898 | 0.596 |
| | WCG2 | 0.721 | | | |
| | WCG3 | 0.738 | | | |
| | WCG4 | 0.726 | | | |
| | WCG5 | 0.765 | | | |
| | WCG6 | 0.780 | | | |
| Resistance to communicate with GenAI | RCG1 | 0.863 | 0.885 | 0.887 | 0.570 |
| | RCG2 | 0.800 | | | |
| | RCG3 | 0.672 | | | |
| | RCG4 | 0.686 | | | |
| | RCG5 | 0.762 | | | |
| | RCG6 | 0.728 | | | |
| AI Literacy | AIL1 | 0.768 | 0.910 | 0.910 | 0.629 |
| | AIL2 | 0.757 | | | |
| | AIL3 | 0.844 | | | |
| | AIL4 | 0.818 | | | |
| | AIL5 | 0.760 | | | |
| | AIL6 | 0.808 | | | |
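As an illustration of how the CR and AVE columns relate to the item loadings, the following minimal sketch recomputes both values for the PIS construct. It assumes the conventional formulas based on standardized loadings (AVE as the mean squared loading, CR as the squared sum of loadings over that quantity plus the summed error variances); the source does not state which formulas or software were used, and the function name `ave_and_cr` is hypothetical.

```python
def ave_and_cr(loadings):
    """Return (AVE, CR) from standardized item loadings.

    Assumes each item's error variance is 1 - loading**2,
    which holds only for standardized loadings.
    """
    squared = [value * value for value in loadings]
    ave = sum(squared) / len(loadings)
    error_variance = sum(1 - s for s in squared)
    sum_sq = sum(loadings) ** 2
    cr = sum_sq / (sum_sq + error_variance)
    return ave, cr


# Item loadings for PIS1..PIS8 as reported in the measurement table.
pis_loadings = [0.924, 0.778, 0.769, 0.741, 0.747, 0.744, 0.783, 0.791]

pis_ave, pis_cr = ave_and_cr(pis_loadings)
print(round(pis_ave, 3), round(pis_cr, 3))  # prints: 0.619 0.928
```

Under these assumptions the recomputed values agree with the reported AVE (0.619) and CR (0.928) for PIS; the same function can be applied to the loadings of the other constructs.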

| | PIS | HLE | CI | AIL | PA | UA | WCG | RCG |
|---|---|---|---|---|---|---|---|---|
| PIS | 0.787 | | | | | | | |
| HLE | 0.442** | 0.797 | | | | | | |
| CI | 0.423** | 0.446** | 0.783 | | | | | |
| AIL | 0.150** | 0.160** | 0.174** | 0.793 | | | | |
| PA | 0.541** | 0.551** | 0.500** | 0.317** | 0.812 | | | |
| UA | 0.451** | 0.493** | 0.480** | 0.195** | 0.614** | 0.766 | | |
| WCG | 0.348** | 0.332** | 0.324** | 0.143** | 0.413** | 0.669** | 0.772 | |
| RCG | -0.315** | -0.336** | -0.371** | -0.198** | -0.473** | -0.677** | -0.435** | 0.755 |
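The diagonal of the correlation matrix can be read as the square root of each construct's AVE, which is the basis of the Fornell-Larcker discriminant validity check [cf. Fornell and Larcker in the reference list]. The sketch below is one possible way to run that check on the reported figures. It assumes the columns follow the same construct order as the rows (PIS, HLE, CI, AIL, PA, UA, WCG, RCG), since that is the order under which the diagonal equals the square root of each AVE; the function name `fornell_larcker` is hypothetical and not taken from the paper.

```python
import math

# AVE values as reported in the measurement table.
ave = {"PIS": 0.619, "HLE": 0.635, "CI": 0.613, "AIL": 0.629,
       "PA": 0.660, "UA": 0.587, "WCG": 0.596, "RCG": 0.570}

# Lower-triangle correlations as reported, keyed by (row, column).
corr = {
    ("HLE", "PIS"): 0.442,
    ("CI", "PIS"): 0.423, ("CI", "HLE"): 0.446,
    ("AIL", "PIS"): 0.150, ("AIL", "HLE"): 0.160, ("AIL", "CI"): 0.174,
    ("PA", "PIS"): 0.541, ("PA", "HLE"): 0.551, ("PA", "CI"): 0.500,
    ("PA", "AIL"): 0.317,
    ("UA", "PIS"): 0.451, ("UA", "HLE"): 0.493, ("UA", "CI"): 0.480,
    ("UA", "AIL"): 0.195, ("UA", "PA"): 0.614,
    ("WCG", "PIS"): 0.348, ("WCG", "HLE"): 0.332, ("WCG", "CI"): 0.324,
    ("WCG", "AIL"): 0.143, ("WCG", "PA"): 0.413, ("WCG", "UA"): 0.669,
    ("RCG", "PIS"): -0.315, ("RCG", "HLE"): -0.336, ("RCG", "CI"): -0.371,
    ("RCG", "AIL"): -0.198, ("RCG", "PA"): -0.473, ("RCG", "UA"): -0.677,
    ("RCG", "WCG"): -0.435,
}


def fornell_larcker(ave, corr):
    """Map each construct to (sqrt(AVE), largest |r|, criterion met)."""
    result = {}
    for name, value in ave.items():
        root = math.sqrt(value)
        max_r = max(abs(r) for pair, r in corr.items() if name in pair)
        result[name] = (round(root, 3), max_r, root > max_r)
    return result


check = fornell_larcker(ave, corr)
all_pass = all(ok for _, _, ok in check.values())
for name, (root, max_r, ok) in check.items():
    print(f"{name}: sqrt(AVE)={root:.3f}, max |r|={max_r:.3f}, ok={ok}")
```

With the reported numbers, every construct's square-root AVE exceeds its largest absolute correlation with any other construct; the tightest case is RCG (0.755 against 0.677 with UA).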

| Fit Indices | χ²/df | GFI | AGFI | NFI | CFI | IFI | SRMR | RMSEA |
|---|---|---|---|---|---|---|---|---|
| Recommended Criteria | <3 | >0.9 | >0.8 | >0.9 | >0.9 | >0.9 | <0.08 | <0.08 |
| Scores | 1.173 | 0.938 | 0.932 | 0.950 | 0.992 | 0.992 | 0.026 | 0.015 |

| Hypothesis | Path | β | P-value | R² | Remarks |
|---|---|---|---|---|---|
| H1 | PIS → PA | 0.318 | *** | 0.569 | Supported |
| H2 | HLE → PA | 0.338 | *** | | Supported |
| H3 | CI → PA | 0.287 | *** | | Supported |
| H4 | PA → UA | 0.710 | *** | 0.505 | Supported |
| H5 | UA → WCG | 0.709 | *** | 0.503 | Supported |
| H6 | UA → RCG | -0.739 | *** | 0.546 | Supported |
| Moderating Effect | Path | β | P-value | | Remarks |
| H7 | PIS × AIL → PA | 0.101 | *** | | Supported |
| H8 | HLE × AIL → PA | 0.097 | *** | | Supported |
| H9 | CI × AIL → PA | 0.108 | *** | | Supported |

| Fit Indices | χ²/df | GFI | AGFI | NFI | CFI | IFI | SRMR | RMSEA |
|---|---|---|---|---|---|---|---|---|
| Recommended Criteria | <3 | >0.9 | >0.8 | >0.9 | >0.9 | >0.9 | <0.08 | <0.08 |
| Scores | 1.173 | 0.938 | 0.932 | 0.950 | 0.992 | 0.992 | 0.026 | 0.015 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).