1. Introduction

Agriculture is considered the foundation of human life, providing not only food but also the primary source of raw materials for various products. It also plays a vital role in the economic growth of nations [1,2]. However, many farmers around the world still use traditional farming methods that result in low crop yields. Therefore, smart agriculture, which aims to improve crop efficiency by automating agricultural practices, is receiving attention [3].

Smart agriculture is an agricultural practice that utilizes advanced technologies such as robotics, AI, and IoT. This allows for improved efficiency and productivity in agricultural practices. Smart agriculture technologies include the use of ground robots and drones, as well as sensing technology using agricultural sensors.

Currently, smart farming systems using drones are widely used [4,5]. Drones can fly automatically along predetermined courses, so the user does not have to operate them manually. In addition, agricultural drones can automatically perform certain tasks day and night. Therefore, the use of agricultural drones can reduce the burden on farmers in agricultural practice [6]. Specifically, they are used for agricultural practices such as spraying pesticides and fertilizers and monitoring pests. Drones equipped with multispectral cameras are particularly useful for assessing crop growth, detecting disease early, and predicting harvest time [7]. This allows farmers to remotely monitor the health of their crops and take appropriate action. Adding various functions to drones has the potential to bring significant benefits to agriculture.

However, there are a number of challenges to the widespread adoption of smart agriculture. In particular, the high cost of implementing communication infrastructure to support sensing technology is a significant issue to be addressed in promoting the adoption of smart agriculture [8]. Sensing technologies in smart agriculture can measure environmental parameters such as soil moisture and temperature using various agricultural sensors [9,10]. Analyzing this data can improve crop yield and quality and support agricultural management. Typically, sensor data from agricultural fields is collected using wireless protocols such as Low Power Wide Area (LPWA). However, the equipment to transmit sensor data is expensive. As a result, farmers may be reluctant to adopt high-density sensing systems that use many such devices. This is a serious problem for the adoption of smart agriculture.

To solve this problem, we focused on Optical Camera Communication (OCC), which enables high-density sensing at low cost. OCC is a communication technology that uses a camera and a light source. A light source, such as an LED panel, a display, or digital signage, is used as a transmitter to send digital data as optical signals [11]. A receiver camera films the transmitted signals using a complementary metal-oxide-semiconductor (CMOS) sensor. OCC is suitable for point-to-multipoint (P2MP) communications using many light sources in a certain area thanks to little interference among transmitters. Implementing sensing technology using OCC in agriculture can help achieve high-density sensing at low cost. However, there is currently no high-density agricultural sensing system using OCC.

In this paper, we propose an agricultural sensor network system using drones and Optical Camera Communication (OCC). In the proposed method, many sensor nodes with light sources are deployed in the farmland and transmit sensor data measured in the farmland using light sources. The transmitted data is received by filming the sensor nodes with a drone-mounted camera moving over the farmland. The advantages of OCC include low cost, the use of the visible light spectrum, which does not require a license, and low power consumption through the use of an LED transmitter. In particular, by using OCC as a communication method for wireless sensor networks in agriculture, low-cost light sources such as LED panels can be used as transmitters instead of the expensive communication modules previously used. In addition, since most modern agricultural drones are equipped with cameras, the agricultural drones already in use can be used as receivers [12]. This approach reduces the cost of implementing a communications infrastructure. In addition, by reducing the cost of communication modules, it becomes possible to achieve high-density sensing, which has been difficult to achieve due to the high cost. To achieve high-density sensing, it is essential to collect sensor data efficiently. Therefore, we also propose a trajectory control algorithm to receive data appropriately according to the specifications of the camera and sensors. OCC is suitable for point-to-multipoint (P2MP) communication because there is little interference among transmitters. The multiple light signals received in the filmed image can be demodulated by separately cropping the pixels corresponding to each light source [13]. Taking this into account, the proposed algorithm calculates flight paths that can receive data more efficiently. The contribution of the proposed method is that it enables high-density sensing at low cost using already deployed agricultural drones. We report preliminary experimental results at a leaf mustard farm in Kamitonda-cho, Wakayama, to demonstrate the feasibility of the proposed system. In this paper, we have implemented a system in which drones automatically receive data from all sensor nodes deployed in the farmland. We also evaluate the accuracy of data transmission in the real environment.

The rest of this paper is organized as follows. Section 2 summarizes related research relevant to this study. In Section 3, we introduce the proposed system, explaining its overall flow and the flight route calculation method used in the proposed algorithm. Then, Section 4 evaluates the performance and effectiveness of the proposed algorithm using computer simulations. Experimental results are presented in Section 5, where we implemented a program for the drone to move autonomously and evaluated the feasibility of the proposed system. Finally, Section 6 summarizes the conclusions of this paper.

2. Related Work

Advances in drone technology are enabling applications in a wide range of fields. In particular, the use of drones in agriculture has contributed significantly to the efficiency and productivity of agricultural practices [14]. Drones are indispensable tools in agricultural monitoring and data collection due to their high mobility and extensive data collection capabilities [15,16]. There is active research into the use of drones in agriculture. [17] showed that agricultural drones play an innovative role in agriculture. In particular, the authors explained that the use of drones allows for highly accurate data collection and data analysis, which significantly contributes to improving the efficiency and productivity of agriculture. [18] proposed the use of drones for spraying pesticides and fertilizers. They showed that it improves the efficiency and uniformity of spraying compared to conventional spraying methods. [19] studied crop condition monitoring by calculating NDVI with multispectral sensors and temperature sensors. They conducted field tests with drones equipped with multispectral and temperature sensors. [20] developed a crop management scheme using real-time drone data coupled with IoT and cloud computing technologies. This scheme analyzes the data collected by the sensors to provide the required amount of water at the right time using a connected irrigation system. In [21], wireless power transfer (WPT) technologies were developed to improve drone flight time, which is one of the major limitations of drone-based systems. In [22], a routing algorithm is proposed for efficient and accurate data collection considering drone flight time and flight speed. A drone-assisted data collection process has been developed for monitoring large cornfields in [23]. The drone is equipped with high-resolution cameras and sensors to collect images and data of the cornfields. The management of cornfields becomes more efficient as this system allows for real-time monitoring and response. [24] presented a communication protocol for drones to monitor leaf temperature data across the farm using infrared thermometers. They implemented a crop monitoring system that combines wireless sensor networks and drones. This enables real-time monitoring of large agricultural areas and rapid data collection. In this way, drones are used to monitor crop yields and extend the communication range of wireless sensor networks.

In smart agriculture, remote sensing with sensor data is considered one of the most important technologies [25]. [26] developed a real-time soil health monitoring system using Long Range Wide Area Network (LoRaWAN)-based IoT technology. In this system, data collected from soil sensors is transmitted over the LoRaWAN network to the cloud platform. [27] proposed a real-time temperature auto-monitoring and frost prevention control system based on a Z-BEE wireless sensor network. The system performs real-time temperature auto-monitoring to prevent the tea plant from frost damage. An energy-efficient and secure IoT-based wireless sensor network framework for smart agriculture applications was proposed in [28]. This framework achieved more stable network performance between agricultural sensors and provided secure data transmission. [29] evaluated the effectiveness of data collection and monitoring systems in agriculture using Narrowband Internet of Things (NB-IoT) technology. Performance metrics such as data accuracy, communication latency, and battery consumption were analyzed. [30] proposed an environmental monitoring system for micro farms. In this system, environmental data collected from sensors are transmitted to a cloud server via a wireless network (Wi-Fi).

OCC is a form of visible light communication (VLC) between a light source and a camera: the light source serves as the transmitter and the camera as the receiver. The main advantages of OCC are its cost efficiency, license-free operation, and low power consumption. Since most modern agricultural drones are equipped with cameras [12], they can be used as receivers. Therefore, OCC is suitable for remote sensing in agriculture. In [31], the performance of OCC was evaluated using parameters such as camera sampling rate, exposure time, focal length, pixel edge length, transmitter configurations, and optical flicker rate. The signal-to-interference-plus-noise ratio (SINR) was defined and different modulation schemes were analyzed. Noise from ambient light is a significant problem for visible light communication, including OCC, and some works have proposed solutions to address it. [32] studied the effects of sunlight on visible light communications. They clarified the specific effects of sunlight on VLC and proposed a system to mitigate these effects. As a result, data rates exceeding 1 Gb/s were measured even under strong sunlight. [33] proposed a vehicle-to-vehicle communication system using OCC. They achieved

fps image data reception under real driving and outdoor lighting conditions. Therefore, experiments and evaluations of a 400-meter communication link using OCC were conducted. This experiment was conducted outdoors. It was demonstrated that the communication performance is maintained under various weather and outdoor lighting conditions.

However, there has been no work on drone-based smart agriculture using OCC. In this paper, we propose an agricultural sensor network system using drones and OCC. The contribution of this paper is that by using OCC for sensor data communication, it enables high-density sensing at low cost in farmland. The proposed system can utilize existing agricultural drones. In the next section, we present the details of the proposed method.

3. Proposed Scheme

3.1. Concept

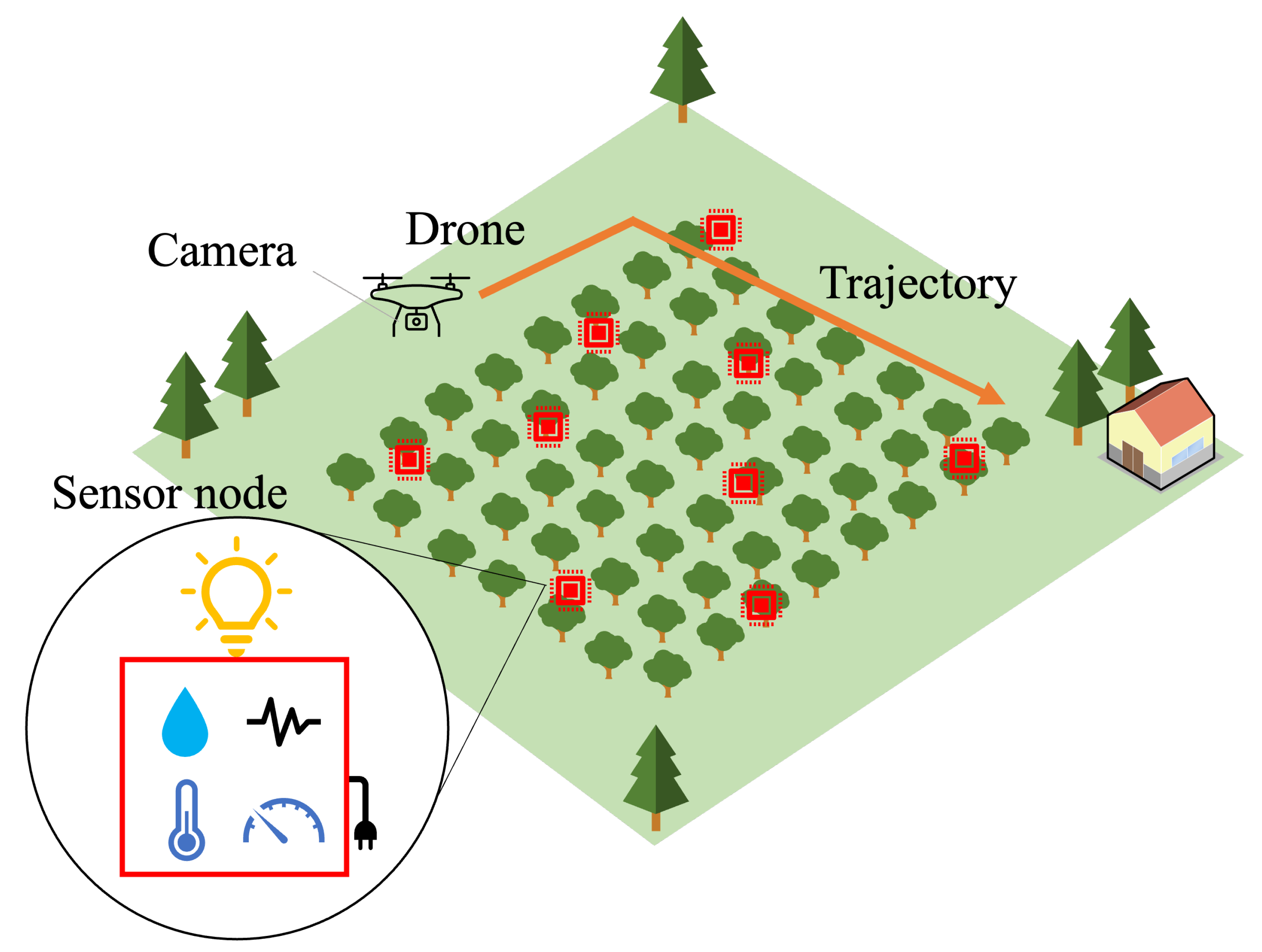

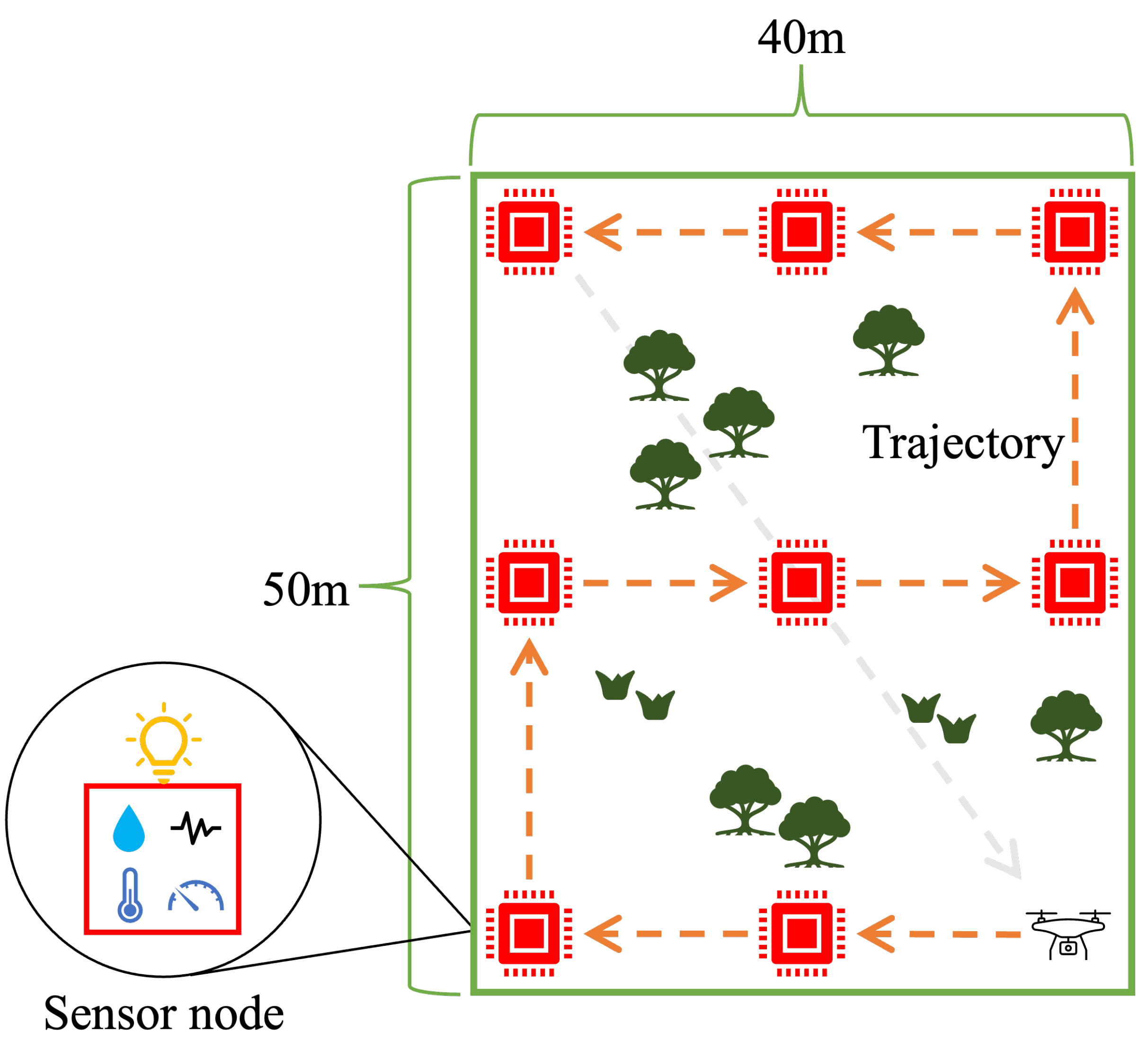

We propose a drone-based sensor network system using OCC. The conceptual system architecture is shown in Figure 1. Many sensor nodes are deployed in a target area. A sensor node is equipped with sensors and a light source. The light source can be an LED light, an LED panel, or a display. The sensor data is encoded and modulated as optical signals to be transmitted from the light source. A receiver drone equipped with a camera moves around the target area to film the optical signals from the sensor nodes. The received signals are demodulated either at the drone or a cloud/edge server in real time.

We propose a trajectory control algorithm for the receiver drone to efficiently collect the sensor data. The proposed algorithm calculates the flight route and speed based on the locations and sizes of the light sources and the specifications of the receiver camera. The proposed system can accommodate a large number of sensor nodes without time- and frequency-domain interference thanks to OCC. It can be deployed in radio quiet or infrastructure-underdeveloped areas since it does not use any radio wave.

3.2. Variable Definition

3.2.1. Coordination Systems

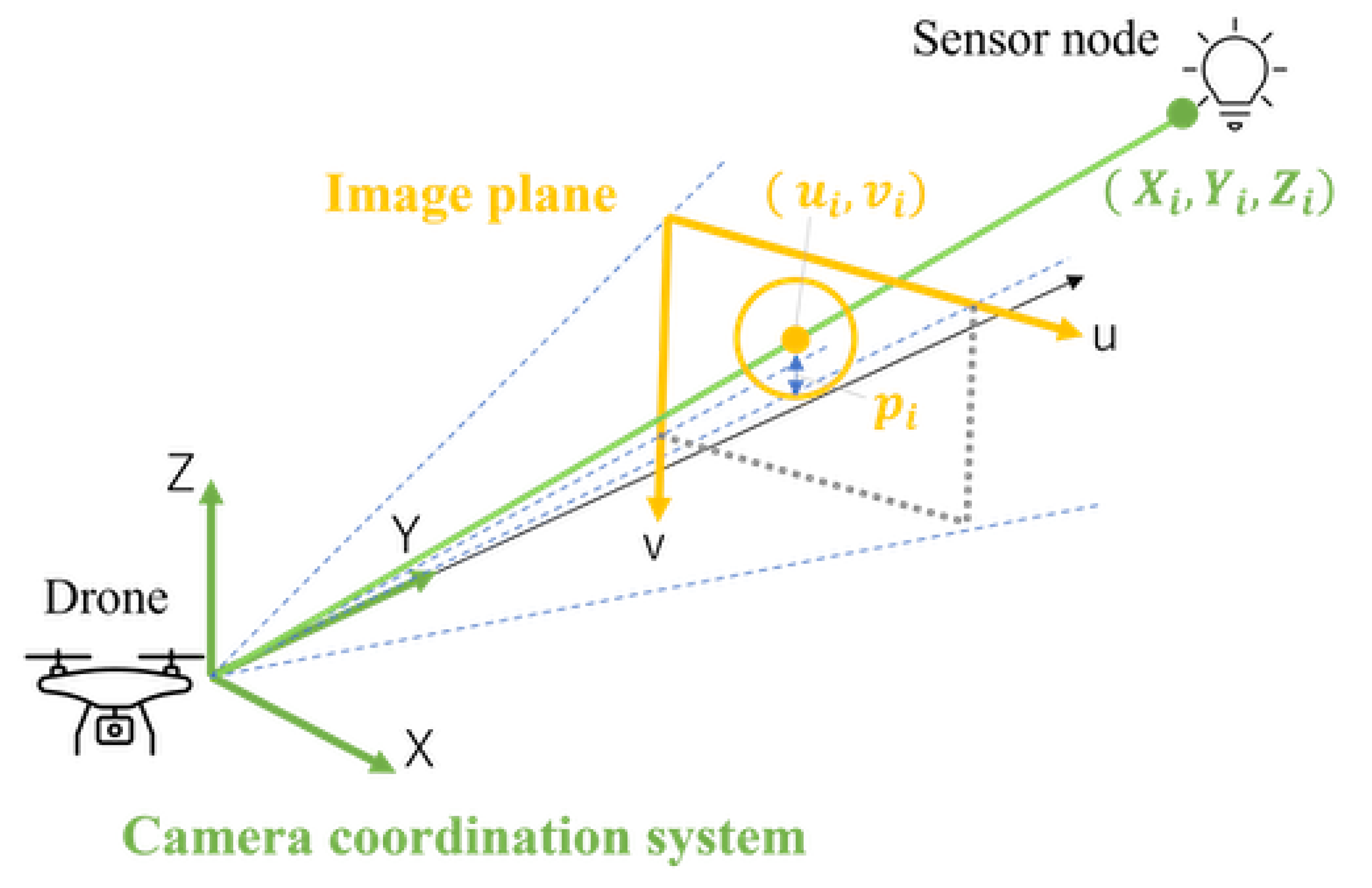

Here we explain the coordination systems used in the proposed model. We define the global coordination system as . The camera coordination system is also defined as . The Y-axis is equivalent to the center line of the image. Note that the origin is set to the receiver camera at . The elevation angle of the camera is defined as .

3.2.2. Variables

The variables used in the proposed model are summarized in

Table 1. Let

denote the set of sensor nodes, and

denote the identifier for them. The position of the

ith sensor node in the global coordinate system is defined as

. For simplicity, a sensor node is approximated as a sphere with radius

. Let

denote the coordinates of the center of the

ith sensor node in the image plane, The horizontal and vertical resolutions of the image are

and

, respectively. The horizontal and vertical angle of view of the camera are also denoted as

and

. The focal length of the camera is described as

f. The size of the image sensor is defined as

.

3.3. System Model

3.3.1. Coordinate Transformation

The positions of the sensor nodes in the image plane are computed with the coordinate transformation. Since we assume a moving receiver drone, the relative position between

and the origin

is computed. The position of the

ith sensor node in the camera coordinate system is formulated as

which consists of the parallel displacement and the rotation by the elevation angle.

Then, the position of the

ith sensor node in the image plane is computed with the perspective transformation as

The perspective transformation is depicted in

Figure 2. Let

denote the size of a sensor node in the image plane. It is calculated with the radius of the projected circle as

The conditional expression that the

ith sensor node is filmed by the receiver drone is formulated as

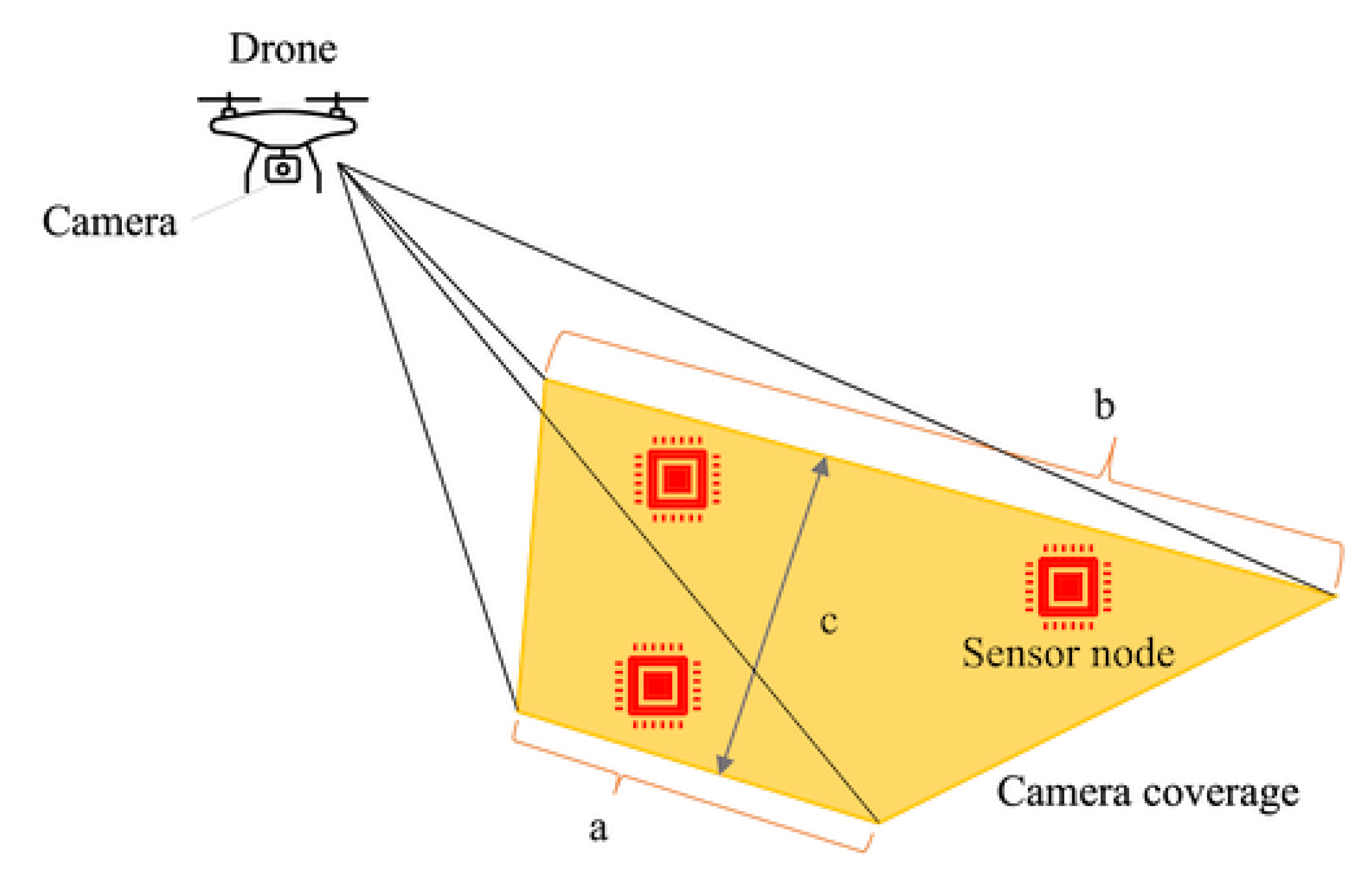
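The two steps described in this subsection (translation and rotation into the camera frame, followed by perspective projection) can be illustrated with a minimal Python sketch. The axis conventions (camera Y-axis as the optical axis, rotation about the X-axis by a downward tilt), the pinhole model with a focal length expressed in pixels, and all function names are assumptions made for illustration; they are not taken from the paper's equations.

```python
import math

def to_camera_frame(node, camera, elevation):
    """Shift a world point so the camera is the origin, then rotate about the
    X-axis so that the Y-axis becomes the optical axis.
    `elevation` is the assumed downward tilt of the camera in radians."""
    dx, dy, dz = (n - c for n, c in zip(node, camera))
    cos_t, sin_t = math.cos(elevation), math.sin(elevation)
    x = dx
    y = dy * cos_t - dz * sin_t   # depth along the optical axis
    z = dy * sin_t + dz * cos_t   # offset along the camera's "up" direction
    return x, y, z

def project(point_cam, focal_px, width, height):
    """Pinhole projection to pixel coordinates (origin at the top-left corner)."""
    x, y, z = point_cam
    if y <= 0:
        return None  # the point is behind the camera
    u = width / 2 + focal_px * x / y
    v = height / 2 - focal_px * z / y
    return u, v

def projected_radius(radius, depth, focal_px):
    """Approximate radius in pixels of a sphere of `radius` metres at `depth` metres."""
    return focal_px * radius / depth

def is_filmed(uv, width, height):
    """True if the projected node centre falls inside the image."""
    return uv is not None and 0 <= uv[0] < width and 0 <= uv[1] < height

# Example: camera 5 m above the origin, looking straight down.
cam = (0.0, 0.0, 5.0)
p = to_camera_frame((1.0, 0.0, 0.0), cam, math.pi / 2)
uv = project(p, focal_px=1000.0, width=1920, height=1080)
print(uv, is_filmed(uv, 1920, 1080))
```

In this sketch the visibility test only checks the node centre; a stricter test could also require the whole projected disc to lie inside the image.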

3.3.2. Ground Coverage

The ground coverage of the drone-mounted camera is a trapezoid shown in

Figure 3. The receiver camera can receive the optical signals from the sensor nodes within this trapezoid at the same time. The ground coverage is defined as

where

a is the top length,

b is the bottom length,

c is the height of the trapezoid, and

h is the altitude of the receiver camera.
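To make the trapezoidal footprint concrete, the following Python sketch computes a, b, c, and the resulting area for a pinhole camera over flat ground. It assumes that the elevation angle is the downward tilt of the optical axis from the horizontal, that the top length a corresponds to the far edge, and that the area follows the usual trapezoid formula (a + b)c/2; these conventions and the function name are illustrative assumptions rather than the paper's definitions.

```python
import math

def ground_coverage(h, elevation, fov_h, fov_v):
    """Return (a, b, c, area) of the camera footprint on flat ground.

    h         : camera altitude in metres
    elevation : downward tilt of the optical axis from horizontal (radians)
    fov_h     : horizontal angle of view (radians)
    fov_v     : vertical angle of view (radians)
    a is the far (top) edge, b the near (bottom) edge, c the trapezoid height.
    """
    alpha = fov_v / 2
    if elevation - alpha <= 0:
        raise ValueError("the upper edge of the view never reaches the ground")
    # Half-width factor shared by both edges of the footprint.
    half_w = math.tan(fov_h / 2) * math.cos(alpha)
    a = 2 * h * half_w / math.sin(elevation - alpha)
    b = 2 * h * half_w / math.sin(elevation + alpha)
    # Ground distances of the far and near edges, measured from the point
    # directly below the camera (cos/sin form avoids dividing by tan(pi/2)).
    y_far = h * math.cos(elevation - alpha) / math.sin(elevation - alpha)
    y_near = h * math.cos(elevation + alpha) / math.sin(elevation + alpha)
    c = y_far - y_near
    return a, b, c, (a + b) * c / 2

# Example: 5 m altitude, camera tilted 60 degrees downward.
print(ground_coverage(5.0, math.radians(60), math.radians(70), math.radians(50)))
```

When the camera looks straight down, the footprint degenerates to a rectangle (a equals b), which gives a quick sanity check of the geometry.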

3.3.3. Altitude Limit

The altitude of the receiver camera is limited to establish a stable link with a ground sensor node. The constraint is described as

where

denotes the threshold for the size of a sensor node in the image plane. In other words, a light source must be sufficiently large in the image to correctly demodulate the optical signals. From (

3) and (

6), the altitude limit of a receiver camera is determined as
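The idea behind the altitude limit can be sketched numerically: under a pinhole model, the projected radius of a node shrinks in proportion to its distance from the camera, so a minimum size in pixels translates into a maximum distance. The sketch below assumes the node is directly below the camera (so distance equals altitude) and uses hypothetical parameter names; it is not the paper's closed-form expression.

```python
def focal_length_px(focal_mm, sensor_width_mm, image_width_px):
    """Convert a focal length in millimetres to pixels."""
    return focal_mm * image_width_px / sensor_width_mm

def node_radius_in_pixels(radius_m, distance_m, focal_px):
    """Approximate projected radius of a spherical node (pinhole model)."""
    return focal_px * radius_m / distance_m

def max_altitude(radius_m, min_radius_px, focal_px):
    """Largest altitude at which a node directly below the camera still
    appears with at least `min_radius_px` pixels of radius."""
    return focal_px * radius_m / min_radius_px

# Example with made-up camera values (not the experimental setup).
f_px = focal_length_px(focal_mm=10.0, sensor_width_mm=10.0, image_width_px=1000)
h_max = max_altitude(radius_m=0.05, min_radius_px=5.0, focal_px=f_px)
print(h_max, node_radius_in_pixels(0.05, h_max, f_px))
```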

3.3.4. Transmission Time

Here we formulate the data transmission time from a sensor node. The transmission rate of an OCC link is determined by the modulation number and symbol rate. Optical spatial modulation and color-shift keying (CSK) are employed to increase the data rate. Multiple light sources are employed in optical spatial modulation. CSK exploits the design of three-color luminaires of LED. Optical signals are modulated by modifying the light intensity to generate predefined constellation symbols [

34]. The range of the symbol rate is constrained by the frame rate of the receiver camera and the image processing speed.

The transmission rate is formulated as

where

is the data rate,

is the optical spatial multiplicity,

D is the symbol rate, and

is the number of constellation symbols. The maximum transmission time is computed as

where we define the maximum data size as

.
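As a worked illustration of the quantities named above, the following Python sketch assumes the common form in which the data rate is the product of the optical spatial multiplicity, the symbol rate, and the number of bits per symbol (the base-2 logarithm of the number of constellation symbols), and in which the maximum transmission time is the maximum data size divided by that rate. This form and the function names are assumptions; the paper's exact expressions may differ.

```python
import math

def data_rate(spatial_multiplicity, symbol_rate, constellation_size):
    """Assumed data rate in bit/s: multiplicity x symbol rate x bits per symbol."""
    return spatial_multiplicity * symbol_rate * math.log2(constellation_size)

def max_transmission_time(max_data_bits, rate_bps):
    """Time in seconds needed to send the largest payload at the given rate."""
    return max_data_bits / rate_bps

# Example: a single OOK light source (2 symbols) at 15 symbols per second.
rate = data_rate(spatial_multiplicity=1, symbol_rate=15, constellation_size=2)
print(rate, max_transmission_time(45, rate))
```

Because the symbol rate is bounded by the camera frame rate, increasing the spatial multiplicity or the constellation size is the main way to shorten the filming time per node in this model.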

3.3.5. Trajectory Requirement

It is required for the receiver drone to film each sensor node for sufficient time duration to receive the transmitted data. Let

denote the time length where (

5) is satisfied. To ensure receiving the maximum data size from the

ith sensor node,

must satisfy

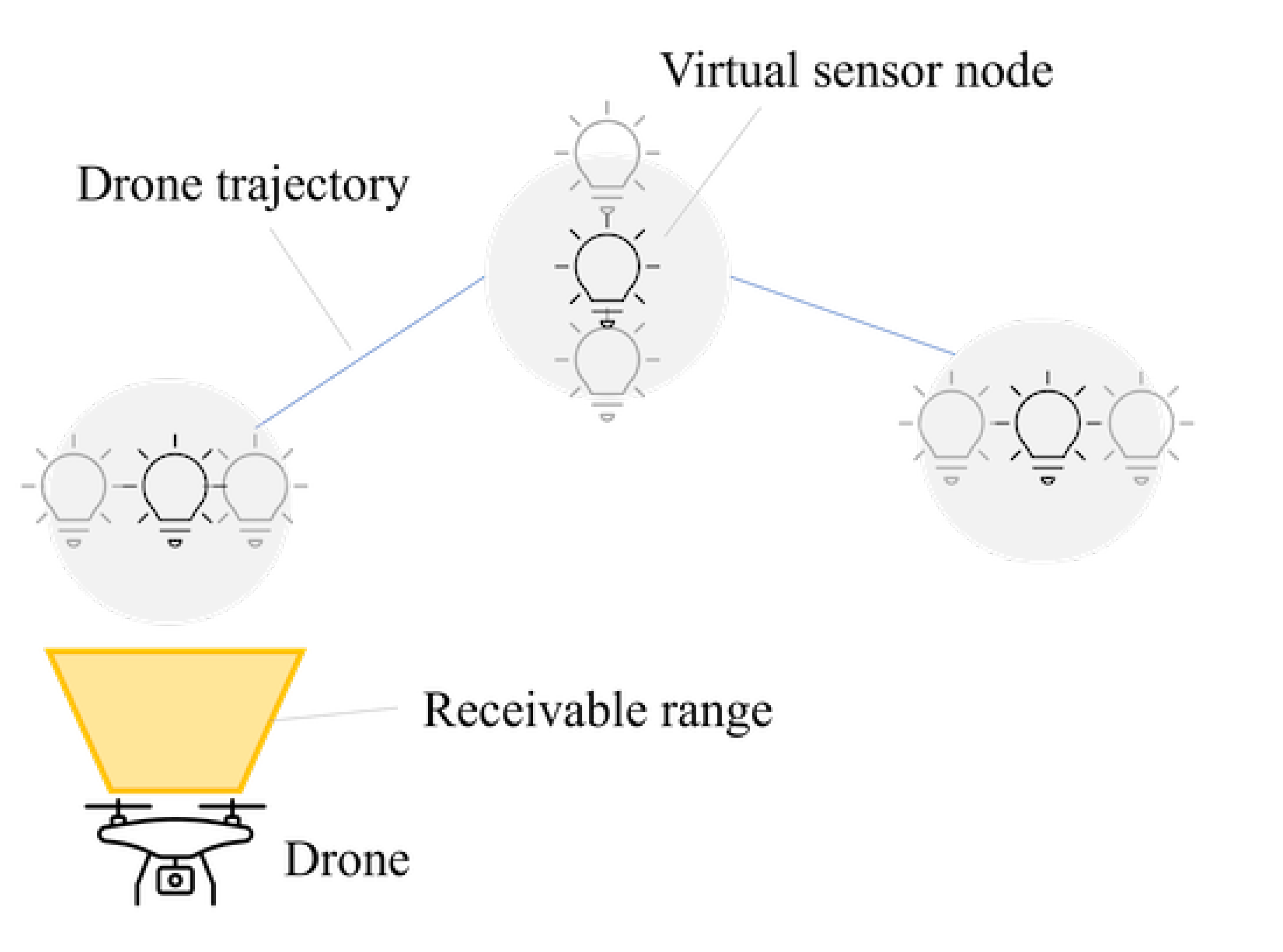

3.4. Algorithm

The goal of the proposed algorithm is to find an approximately shortest trajectory that ensures data transmission from all sensor nodes. The concept of the trajectory control algorithm is shown in Figure 4. The proposed algorithm consists of the following steps.

3.4.1. Node Clustering

The sensor nodes are clustered into groups to generate virtual sensor nodes. Neighboring sensor nodes are grouped to satisfy (

5). An appropriate algorithm can be employed based on the distribution of sensor nodes.

3.4.2. Graph Generation

A graph is generated with the virtual sensor nodes as vertices.

3.4.3. Trajectory Determination

The trajectory is computed by solving a traveling salesman problem (TSP) with graph

. The filming time for each virtual node is determined to satisfy (

9).
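The three steps can be sketched in a few lines of Python. Note that this sketch swaps in deliberately simple stand-ins: a greedy radius-based clustering instead of k-means or hierarchical clustering, and a nearest-neighbor heuristic instead of a proper TSP solver. The coverage radius, the fixed filming time per virtual node, and all function names are hypothetical.

```python
import math

def centroid(points):
    """Mean position of a list of 2-D points."""
    return (sum(x for x, _ in points) / len(points),
            sum(y for _, y in points) / len(points))

def cluster_nodes(nodes, radius):
    """Greedy clustering: a node joins the first cluster whose current centroid
    lies within `radius`; otherwise it starts a new cluster. This does not
    guarantee that every member stays within `radius` of the final centroid."""
    clusters = []
    for p in nodes:
        for members in clusters:
            if math.dist(p, centroid(members)) <= radius:
                members.append(p)
                break
        else:
            clusters.append([p])
    return [centroid(m) for m in clusters]  # virtual sensor nodes

def nearest_neighbor_tour(start, waypoints):
    """Visit waypoints by always flying to the closest unvisited one."""
    tour, current, remaining = [], start, list(waypoints)
    while remaining:
        nxt = min(remaining, key=lambda w: math.dist(current, w))
        remaining.remove(nxt)
        tour.append(nxt)
        current = nxt
    return tour

def tour_length(start, tour):
    """Length of the closed path start -> tour -> start."""
    pts = [start, *tour, start]
    return sum(math.dist(a, b) for a, b in zip(pts, pts[1:]))

def total_time(start, tour, speed, filming_time):
    """Flight time plus a fixed hovering time at each virtual node."""
    return tour_length(start, tour) / speed + filming_time * len(tour)

nodes = [(0, 0), (1, 0), (10, 0), (11, 0)]
virtual = cluster_nodes(nodes, radius=2.0)
tour = nearest_neighbor_tour((0, 0), virtual)
print(virtual, tour_length((0, 0), tour), total_time((0, 0), tour, 3.0, 1.0))
```

Clustering reduces the number of waypoints, which shortens both the path and the accumulated hovering time, since several nodes are filmed at once from each virtual node.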

4. Computer Simulation

The performance of the proposed algorithm was verified via computer simulation.

4.1. Simulation Condition

Table 2 summarizes the parameters used in the simulation. The altitude of the receiver camera was set to 5 m. The speed of the drone was set to 3 m/s. The drone stops to film the sensor node for a certain amount of time depending on the size of the transmitted data. The filming time ranged from 0 to 3 seconds. The horizontal and vertical angles of view were

and

, respectively. The elevation angle of the camera was set to

.

The sensor nodes were distributed randomly in a square area. The density of sensor nodes was set to 4 per meters. The size of the study area ranged from to meters. The simulation was iterated 1000 times for each condition with different seeds. The performance of the proposed trajectory control algorithm was compared with that of the shortest path algorithm. We employed several clustering algorithms such as k-means, group average method, and Ward’s method.

4.2. Simulation Results

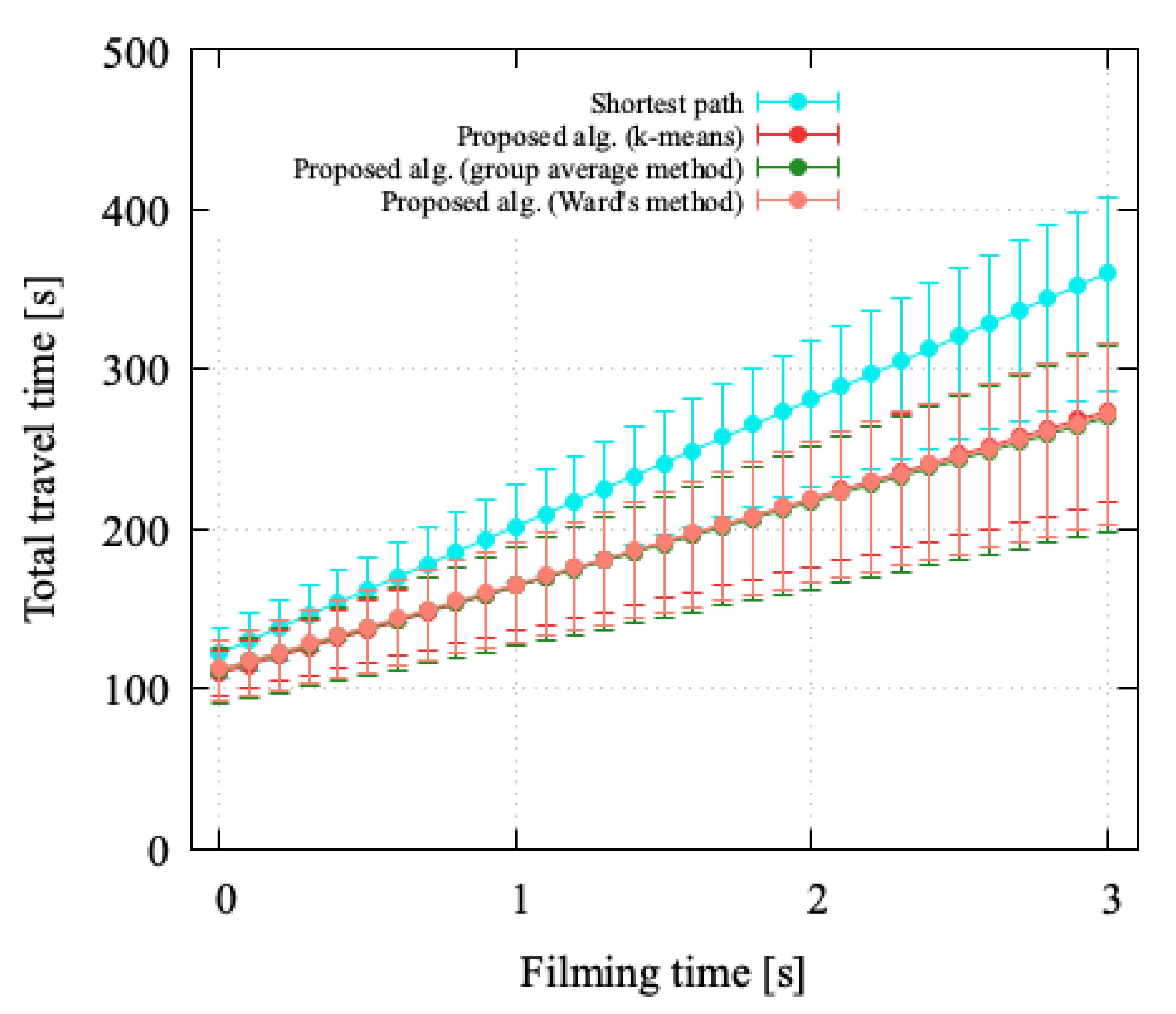

Figure 5 shows the total trajectory length required to collect the data from all of the deployed sensor nodes. The points represent the average, and the error bars show the maximum and minimum values. The trajectory length increased with the field size. The proposed algorithm reduced the trajectory length owing to the clustering approach.

Figure 6 shows the total travel time to receive all the data from the sensor nodes where the field size was

meters. The points and errorbars represent the average and the maximum/minimum values. The total travel time increased as the filming time, i.e., data size of the sensor nodes, increased. The receiver drone completed the sensor data collection in a shorter time with the proposed algorithm. This is because the sensor nodes were clustered so that the receiver drone simultaneously received data from multiple sensor nodes. We confirmed that the proposed algorithm outperformed the shortest path algorithm regardless of the conditions and clustering algorithms. Although the expected performance was almost the same with the clustering algorithms employed, the minimum value of the total travel time was slightly shorter with the group average method.

5. Experimental Results

This section presents the experimental results of the proposed scheme. The feasibility of the proposed system and the accuracy of data transmission in the real environment were confirmed using a drone and an implemented sensor node.

5.1. Experimental Condition

5.1.1. Overview

The experiments consisted of three steps. First, the ground coverage of the drone-mounted camera was confirmed in the real environment. The theoretical equations were verified under different elevation angles of the camera. Second, an object detection model was trained using YOLOv5 in the real-world environment and evaluated for accuracy. This model is used in OCC to detect LED panels in images. Third, the accuracy of data transmission of OCC in the real-world environment was evaluated based on the bit error rate (BER). The performance was evaluated under different daylight conditions.

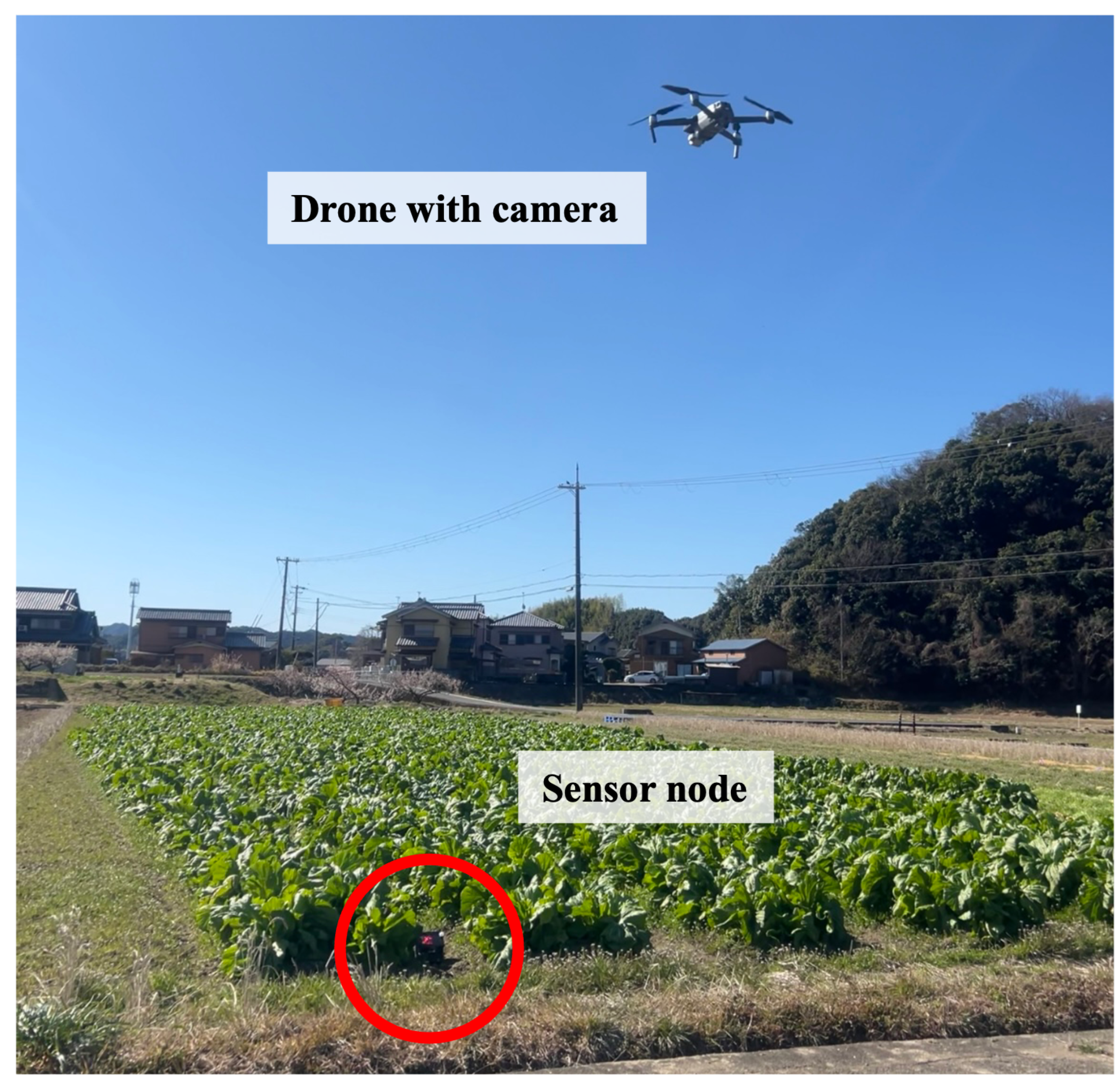

Figure 7 shows the experimental setup. The deployed sensor nodes are marked with circles. The sensor nodes are transmitting sensor data.

5.1.2. Experimental Setup

We employed a Mavic 2 Pro drone manufactured by DJI Co., Ltd. The resolution of the receiver camera was , the pixel count was 2 megapixels, and the frame rate was 30 fps. The focal length was mm, and the zoom magnification was set to .

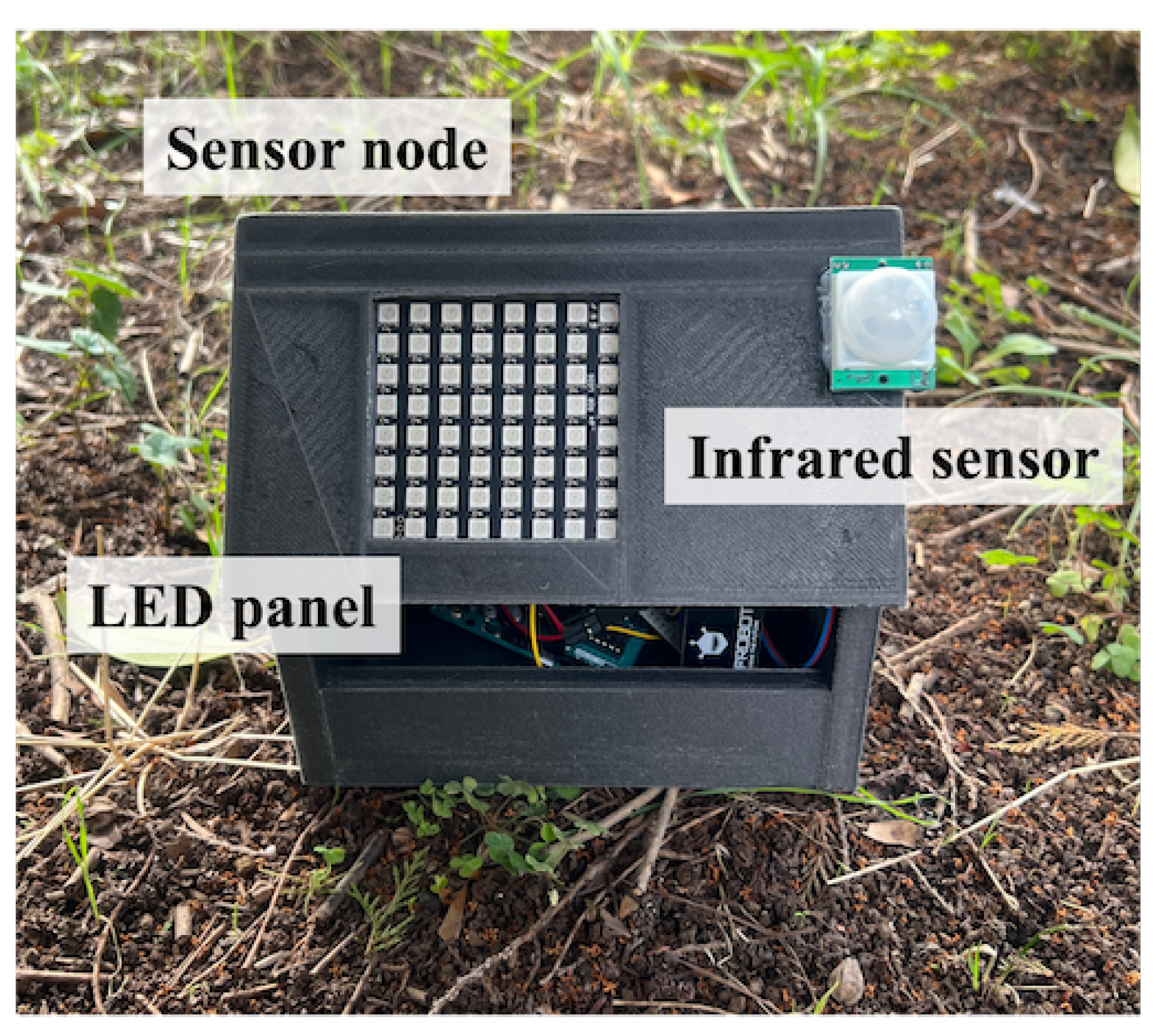

Figure 8 shows a sensor node. A sensor node was equipped with five sensors and an LED panel. It collected temperature, humidity, illumination, soil water content, and infrared sensor data. We employed WS2812B serial LED panels with 64 LED lights arranged in a square. An Arduino UNO micro controller was connected to the sensors and the LED panel to control them.

The battery supplies power to the sensors, the Arduino UNO micro controller, and the LED panel.

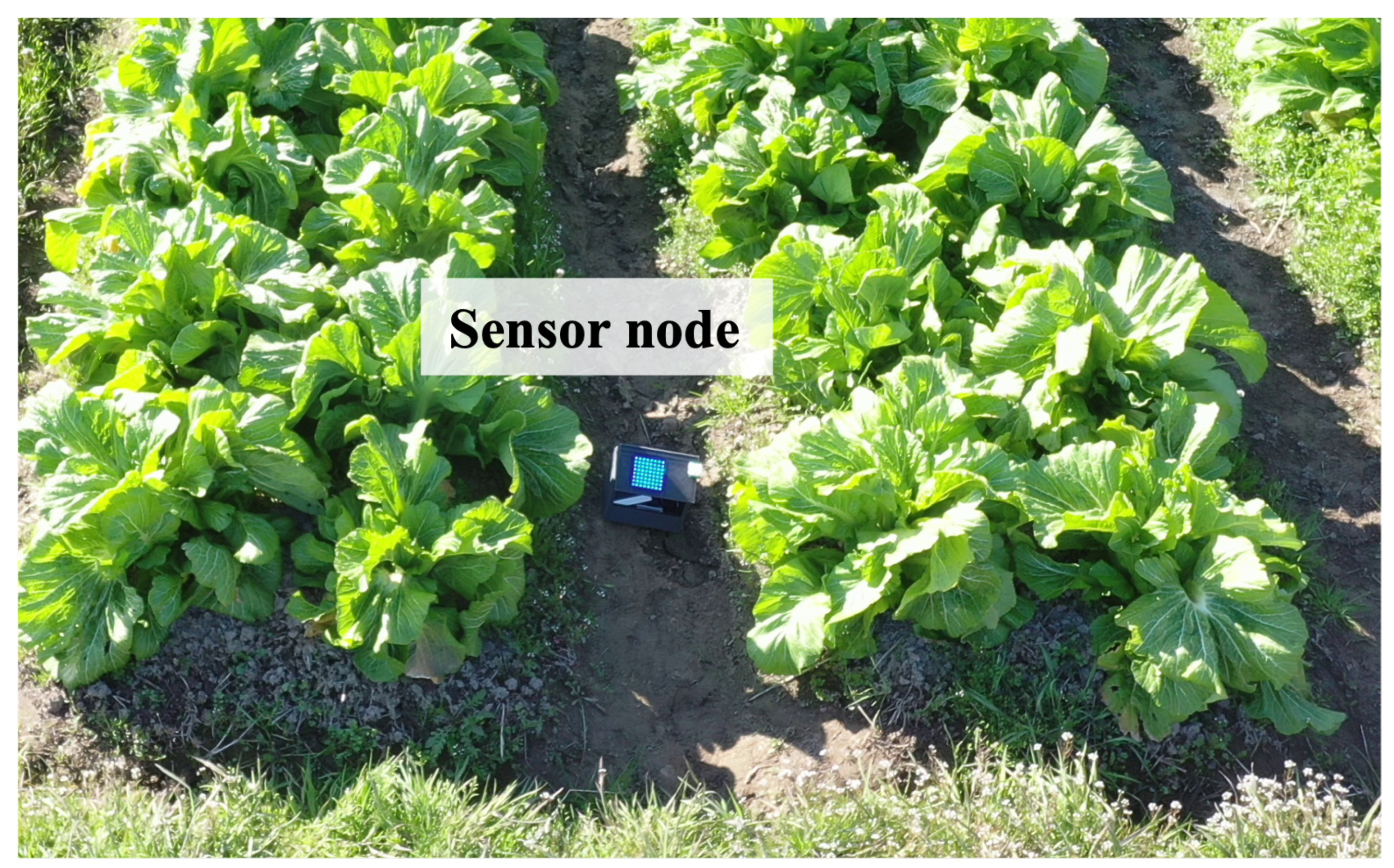

In this experiment, On-Off Keying (OOK) was used as the modulation scheme. The Arduino UNO micro controller encoded and modulated the sensor data as optical signals to be transmitted by the LED panel. A sensor node transmitted optical signals when a drone flew over it by detecting the drone with an infrared sensor to reduce energy consumption. An example image of the sensor node taken by the drone is shown in

Figure 9.

The study area was a leaf mustard farm in Kamitonda-cho, Wakayama, Japan. We deployed eight sensor nodes in an area of

meters square, which is shown in

Figure 10. The flight trajectory of the drone was calculated using the proposed algorithm.

5.1.3. Coding and Modulation

In this experiment, the sensor data collected at the sensor nodes was encoded and modulated as optical signals using On-Off Keying (OOK). More specifically, the sensor data was first converted to a binary bit sequence, which was then converted into a data signal using 3b4b coding. The LED panels mounted on the sensor nodes were used as transmitters. With OOK, each signal sent from a data panel carries one bit; in other words, each bit is modulated as an on-off signal of blue light.

5.1.4. Demodulation

The transmitters mounted on the sensor nodes transmitted continuous light that was captured by a drone-mounted camera. The captured video was sent to an edge server to be divided into a series of static images. The edge server uses the filmed images to demodulate the optical signals from the sensor nodes. Specifically, it demodulates the optical signals by mapping the RGB values of the LED panels to the (x, y) color space. The color coordinates of each bit are determined by pilot signals. The optical signals are demodulated based on the determined color coordinates.
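The pilot-based decision described above can be sketched as a nearest-reference classifier in the (x, y) chromaticity plane. The sketch below assumes linear RGB values in [0, 1] (gamma correction is ignored), uses the standard sRGB/D65 conversion matrix to CIE XYZ, and treats one pilot colour as "off" and one as "on"; the function names and the sample pixel values are hypothetical and do not come from the experiment.

```python
import math

def rgb_to_xy(rgb):
    """Map linear RGB in [0, 1] to CIE 1931 (x, y) via the sRGB/D65 matrix."""
    r, g, b = rgb
    X = 0.4124 * r + 0.3576 * g + 0.1805 * b
    Y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    Z = 0.0193 * r + 0.1192 * g + 0.9505 * b
    s = X + Y + Z
    if s == 0:
        # Chromaticity is undefined for pure black; fall back to the D65
        # white point (an arbitrary choice for this sketch).
        return (0.3127, 0.3290)
    return (X / s, Y / s)

def demodulate_ook(samples, pilot_off, pilot_on):
    """Decide each bit by the nearer pilot colour in the (x, y) plane."""
    ref_off, ref_on = rgb_to_xy(pilot_off), rgb_to_xy(pilot_on)
    bits = []
    for rgb in samples:
        xy = rgb_to_xy(rgb)
        bits.append(1 if math.dist(xy, ref_on) < math.dist(xy, ref_off) else 0)
    return bits

# Example: greyish background when the panel is off, blue when it is on.
pilot_off, pilot_on = (0.2, 0.2, 0.2), (0.1, 0.1, 0.9)
frames = [(0.15, 0.12, 0.80), (0.25, 0.22, 0.20), (0.12, 0.10, 0.85)]
print(demodulate_ook(frames, pilot_off, pilot_on))
```

Using chromaticity rather than raw intensity makes the decision less sensitive to overall brightness changes, which may help under varying daylight, although this sketch does not model ambient-light noise.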

5.2. Results

5.2.1. Model Confirmation

First, we confirmed the mathematical model based on the coordinate transformation. We measured the ground coverage of the drone-mounted camera in the real environment. Then, we compared the measured results with the theoretical model formulated in (5). Table 3 summarizes the differences between the measured values and the theoretical values. From this result, we confirmed the validity of the mathematical model.

5.2.2. Light Source Detection

We employed YOLOv5, a widely used CNN-based object detection model, to detect the LED panels in the captured images. To ensure the recognition accuracy of the LED panels mounted on the sensor nodes, we collected 5178 images as training data and annotated the positions of the LED panels. The resolution of the images was changed to

. The machine learning parameters are shown in the

Table 4. The PC environment is Ubuntu

and the GPU environment is NVIDIA GeForce RTX 3090. The software environments were CUDA

, CUDNN

and Python

. The datasets were obtained by changing the location and time at which the images were taken to improve recognition accuracy under different conditions. This is because noise from ambient light changes the visibility of LED panels.

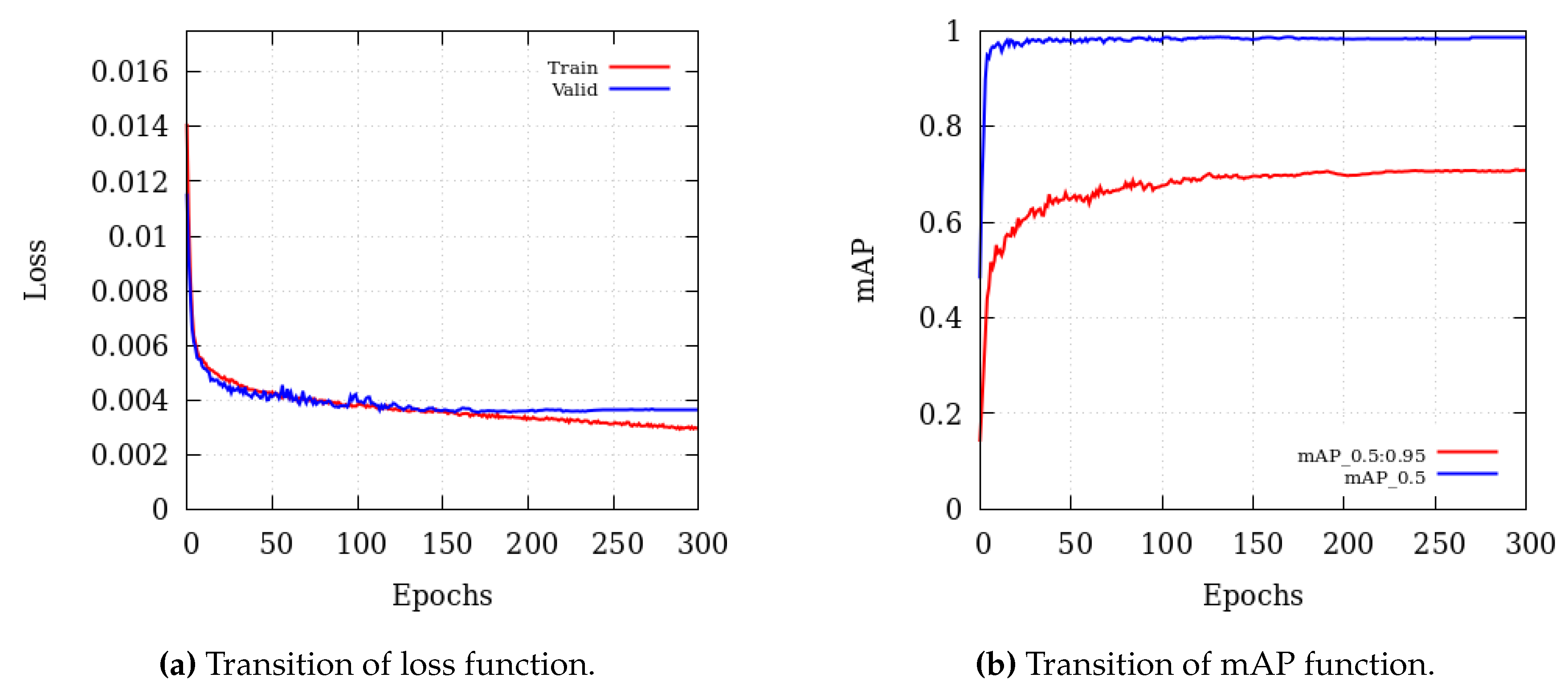

The results for the detection accuracy of the LED panels using YOLOv5 are shown in

Figure 11. The accuracy of the object detection model was evaluated using the loss and the mean average precision (mAP). The object loss function is a measure of the probability that the detection target is within the region of interest. The lower the value of the loss function, the higher the accuracy.

Figure 11 shows the evolution of the loss function per epoch. Up to about epoch 50, both loss function values decreased rapidly; after that, the decrease gradually slowed down. The mAP is the mean of the average precision (AP) over all classes. In this paper, mAP and AP are equal because the number of classes is one. The higher the mAP value, the more accurate the network.

Figure 11 shows the evolution of mAP per epoch. The value of mAP_

reached

. The mAP_

:

reached

. We confirmed that the object detection model successfully detected the LED panels.

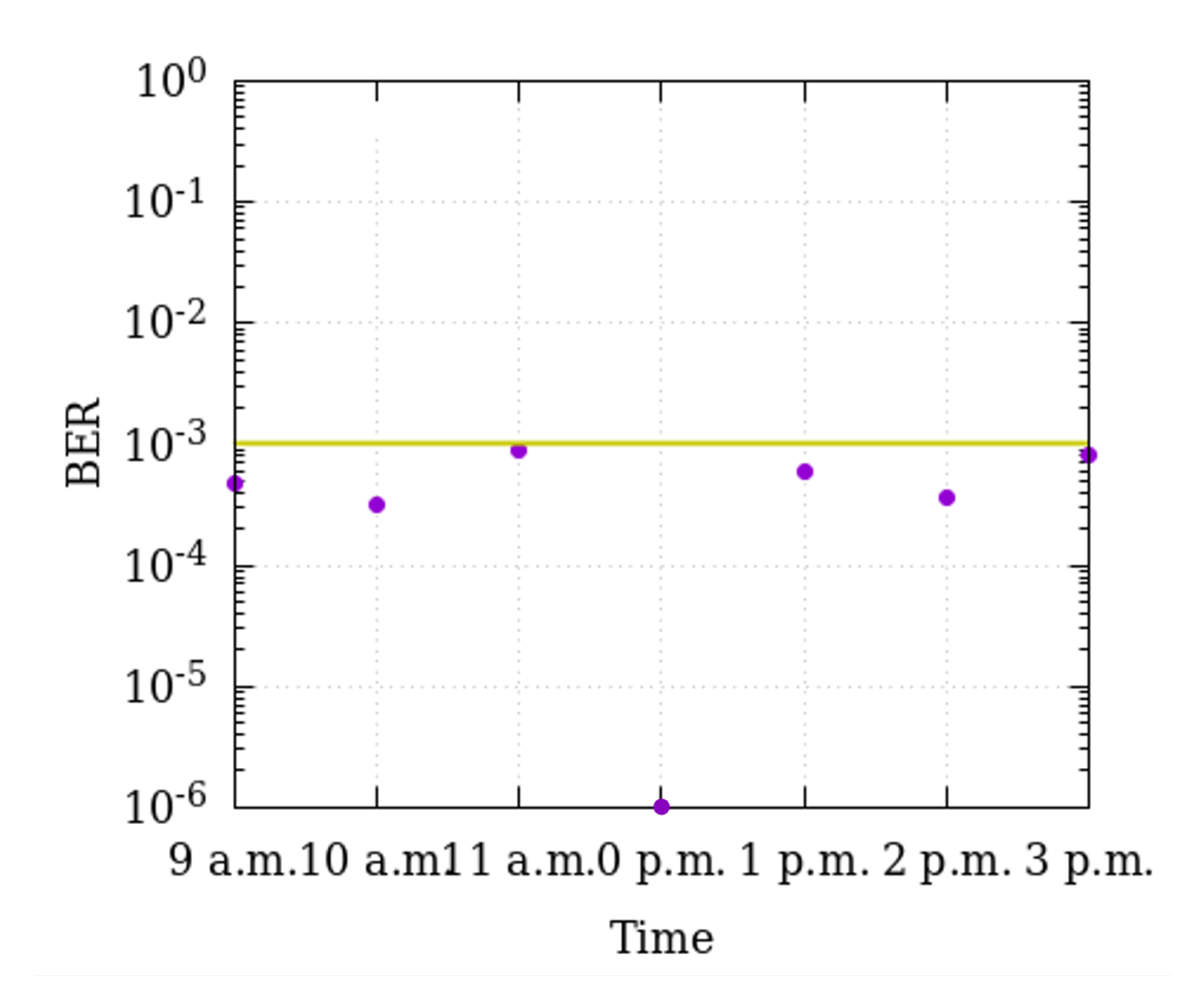

5.2.3. Signal Reception

First, we confirmed that the drone had successfully filmed all the sensor nodes for a sufficient duration. The drone autonomously moved along the calculated trajectory to film the optical signals from the LED panels. The sensor nodes detected the drone and transmitted sensor data. Second, we measured the bit error rate (BER) to evaluate the accuracy of data transmission in the real environment. To evaluate the performance under different outdoor lighting conditions, the BER was measured per filmed hour. The calculated BER is shown in

Figure 12. Assuming the use of

hard decision forward error correction (HD-FEC), the BER threshold was set to

. The receiver drone received the transmitted data with sufficiently few errors. As a result, it was confirmed that the BER was low enough to receive the sensor data regardless of the observation time.
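For reference, the BER used in this evaluation is simply the fraction of received bits that differ from the transmitted bits. A minimal Python sketch is given below; the function names are hypothetical, and the threshold is passed in as a parameter rather than fixed to a particular value.

```python
def bit_error_rate(sent, received):
    """Fraction of positions where the received bit differs from the sent bit."""
    if not sent or len(sent) != len(received):
        raise ValueError("bit sequences must be non-empty and of equal length")
    errors = sum(s != r for s, r in zip(sent, received))
    return errors / len(sent)

def within_fec_threshold(ber, threshold):
    """True if the measured BER does not exceed the chosen FEC threshold."""
    return ber <= threshold

sent = [1, 0, 1, 1, 0, 0, 1, 0]
received = [1, 0, 1, 0, 0, 0, 1, 0]  # one bit flipped
print(bit_error_rate(sent, received))
```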

6. Conclusion

In this paper, we reported a sensor network system using drones and OCC for farm monitoring. The proposed idea enables high-density sensing at low cost. With the proposed scheme, a drone uses OCC to collect sensor data acquired at the multiple sensor nodes deployed in farmland. By using OCC, the cost of implementing communication infrastructure can be reduced. This enables high-density sensing, which has been difficult to achieve due to high cost. We also proposed a trajectory control algorithm to receive data from many sensors deployed in a certain area. It allows drones to collect data efficiently by taking advantage of OCC, which is suitable for P2MP communication because there is little interference among transmitters. An advantage of the proposed system is that the sensor networks can be easily deployed in radio-quiet areas and in areas with underdeveloped infrastructure. The agricultural drones that are already used for spraying pesticides can easily be used as receivers in the proposed system. The system can also be easily extended with functions such as visual monitoring of leaves. From the preliminary experimental results at a leaf mustard farm in Kamitonda-cho, Wakayama, we demonstrated the feasibility of the proposed system. We also evaluated the accuracy of data transmission in the real environment. It was confirmed that the sensor data was received with sufficient accuracy regardless of the observation time. The proposed system contributes to the development and wider implementation of intelligent agriculture.

Acknowledgments

Part of this work was supported by JSPS KAKENHI Grant Number JP20H04178, JST PRESTO Grant Number JPMJPR2137, and the GMO Foundation, Japan.

References

- Scott, M.S.; Frank, L.; G Philip, R.; Stephen, K.H. Ecosystem services and agriculture: Cultivating agricultural ecosystems for diverse benefits. Ecological Economics 2007, 64, 245–252. [Google Scholar]

- Luc, C.; Lionel, D.; Jesper, K. The (evolving) role of agriculture in poverty reduction—An empirical perspective. Journal of Development Economics 2011, 96, 239–254. [Google Scholar]

- Sridevi, N.; A, S, C. S.S.; M, N, G.P. Agricultural Management through Wireless Sensors and Internet of Things. International Journal of Electrical and Computer Engineering 2017, 7, 3492–3499. [Google Scholar]

- Jaime, del, C. ; Christyan, Cruz, U.; Antonio, B.; Jorge, de, L.R. Unmanned Aerial Vehicles in Agriculture: A Survey. agronomy 2021, 11, 203. [Google Scholar] [CrossRef]

- Gayathri, Devi, K. ; Sowmiya, N.; Yasoda, K.; Muthulakshmi, K.; Kishore, B. REVIEW ON APPLICATION OF DRONES FOR CROP HEALTH MONITORING AND SPRAYING PESTICIDES AND FERTILIZER. Journal of Critical Reviews 2020, 7, 667–672. [Google Scholar]

- SHUBHANGI, G.R.; MRUNAL, S.T.; CHAITALI, V.W.; MANISH, D.M. A REVIEW ON AGRICULTURAL DRONE USED IN SMART FARMING. International Research Journal of Engineering and Technology 2021, 8, 313–316. [Google Scholar]

- Jacopo, P.; Salvatore, Filippo, D. G.; Edoardo, F.; Lorenzo, G.; Emanuele, L.; Alessandro, M.; Francesco, Primo, V. A flexible unmanned aerial vehicle for precision agriculture. precision agriculture 2012, 7, 517–523. [Google Scholar]

- Sinha, B.B.; Dhanalakshmi, R. Recent advancements and challenges of Internet of Things in smart agriculture: A survey. Future Generation Computer Systems 2022, 126, 169–184. [Google Scholar] [CrossRef]

- Adamchuk, V.I.; Hummel, J.W.; Morgan, M.T.; Upadhyaya, S.K. On-the-go soil sensors for precision agriculture. Computers and electronics in agriculture 2004, 44, 71–91. [Google Scholar] [CrossRef]

- Shaikh, F.K.; Karim, S.; Zeadally, S.; Nebhen, J. Recent trends in internet of things enabled sensor technologies for smart agriculture. IEEE Internet of Things Journal 2022. [Google Scholar] [CrossRef]

- Le, N.T.; Hossain, M.A.; Jang, Y.M. A survey of design and implementation for optical camera communication. Signal Processing: Image Communication 2017, 53, 95–109. [Google Scholar] [CrossRef]

- S, A.; R, S.; S, B.; G, N. Application of Drone in Agriculture. International Journal of Current Microbiology and Applied Sciences 2019, 8, 2500–2505. [Google Scholar]

- Onodera, Y.; Takano, H.; Hisano, D.; Nakayama, Y. Avoiding Inter-Light Sources Interference in Optical Camera Communication. IEEE Global Telecommunications Conference (GLOBECOM). IEEE, 2021.

- Abdul, H.; Mohammed, Aslam, H. ; Singh, S.; Anurag, C.; Mohd. Tauseef, K.; Navneet, K.; Abhishek, C.; Soni, S. Implementation of drone technology for farm monitoring and pesticide spraying: A review. Information Processing in Agriculture 2023, 10, 192–203. [Google Scholar]

- Kurkute, S.; Deore, B.; Kasar, P.; Bhamare, M.; Sahane, M. Drones for smart agriculture: A technical report. International Journal for Research in Applied Science and Engineering Technology 2018, 6, 341–346. [Google Scholar] [CrossRef]

- Nikki, J.S. Drones: The Newest Technology for Precision Agriculture. Natural Sciences Education 2015, 44, 89–91.

- Pasquale, D.; Luca, D.V.; Luigi, G.; Luigi, I.; Davide, L.; Francesco, P.; Giuseppe, S. A review on the use of drones for precision agriculture. IOP Conference Series: Earth and Environmental Science 2019, 275, 012022.

- S., S.; Shadaksharappa, B.; S., S.; Manasa, V. Freyrdrone: Pesticide/fertilizers spraying drone - an agricultural approach. 2017 2nd International Conference on Computing and Communications Technologies (ICCCT), 2017, pp. 252–255.

- Paulina, L.R.; Jaroslav, S.; Adam, D. Monitoring of crop fields using multispectral and thermal imagery from UAV. European Journal of Remote Sensing 2019, 52, 192–201.

- Namani, S.; Gonen, B. Smart agriculture based on IoT and cloud computing. 2020 3rd International Conference on Information and Computer Technologies (ICICT). IEEE, 2020, pp. 553–556.

- Jawad, A.M.; Jawad, H.M.; Nordin, R.; Gharghan, S.K.; Abdullah, N.F.; Abu-Alshaeer, M.J. Wireless power transfer with magnetic resonator coupling and sleep/active strategy for a drone charging station in smart agriculture. IEEE Access 2019, 7, 139839–139851.

- Shidrokh, G.; Nazri, K.; Mohammad, Hossein, A.; Sherali, Z.; Shahid, M. Data collection using unmanned aerial vehicles for Internet of Things platforms. Computers & Electrical Engineering 2019, 75, 1–15.

- Cicioğlu, M.; Çalhan, A. Smart agriculture with Internet of Things in cornfields. Computers & Electrical Engineering 2021, 90, 106982.

- Moribe, T.; Okada, H.; Kobayashi, K.; Katayama, M. Combination of a wireless sensor network and drone using infrared thermometers for smart agriculture. 2018 15th IEEE Annual Consumer Communications & Networking Conference (CCNC). IEEE, 2018, pp. 1–2.

- Rajendra, P.S.; Ram, L.R.; Sudhir, K.S. Applications of Remote Sensing in Precision Agriculture: A Review. Remote Sensing 2020, 12, 3136.

- Ramson, S.R.J.; León-Salas, W.D.; Brecheisen, Z.; Foster, E.J.; Johnston, C.T.; Schulze, D.G.; Filley, T.; Rahimi, R.; Soto, M.J.C.V.; Bolivar, J.A.L.; Málaga, M.P. A Self-Powered, Real-Time, LoRaWAN IoT-Based Soil Health Monitoring System. IEEE Internet of Things Journal 2021, 8, 9278–9293.

- Chen, H.; Zhang, S. A temperature auto-monitoring and frost prevention real time control system based on a Z-BEE networks for the tea farm. 2012, pp. 644–647.

- Khalid, H.; Ikram, Ud, D.; Ahmad, A.; Naveed, I. An Energy Efficient and Secure IoT-Based WSN Framework: An Application to Smart Agriculture. Sensors 2020, 20, 2081.

- Valecce, G.; Petruzzi, P.; Strazzella, S.; Grieco, L.A. NB-IoT for Smart Agriculture: Experiments from the Field. 2020 7th International Conference on Control, Decision and Information Technologies (CoDIT), 2020, Vol. 1, pp. 71–75.

- Tsai, C.F.; Liang, T.W. Application of IoT Technology in The Simple Micro-farming Environmental Monitoring. 2018 IEEE International Conference on Advanced Manufacturing (ICAM), 2018, pp. 170–172.

- Moh, K.H.; Chowdhury, M.Z.; Md, S.; Nguyen, V.T.; Jang, Y.M. Performance Analysis and Improvement of Optical Camera Communication. Applied Sciences 2018, 8, 2527.

- Islim, M.S.; Videv, S.; Safari, M.; Xie, E.; McKendry, J.J.D.; Gu, E.; Dawson, M.D.; Haas, H. The Impact of Solar Irradiance on Visible Light Communications. Journal of Lightwave Technology 2018, 36, 2376–2386.

- Takai, I.; Harada, T.; Andoh, M.; Yasutomi, K.; Kagawa, K.; Kawahito, S. Optical Vehicle-to-Vehicle Communication System Using LED Transmitter and Camera Receiver. IEEE Photonics Journal 2014, 6, 1–14.

- Onodera, Y.; Takano, H.; Hisano, D.; Nakayama, Y. Adaptive N+1 Color Shift Keying for Optical Camera Communication. 2021 IEEE 94th Vehicular Technology Conference (VTC2021-Fall). IEEE, 2021, pp. 1–5.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).