Submitted: 21 August 2024

Posted: 22 August 2024


Abstract

Keywords:

1. Introduction

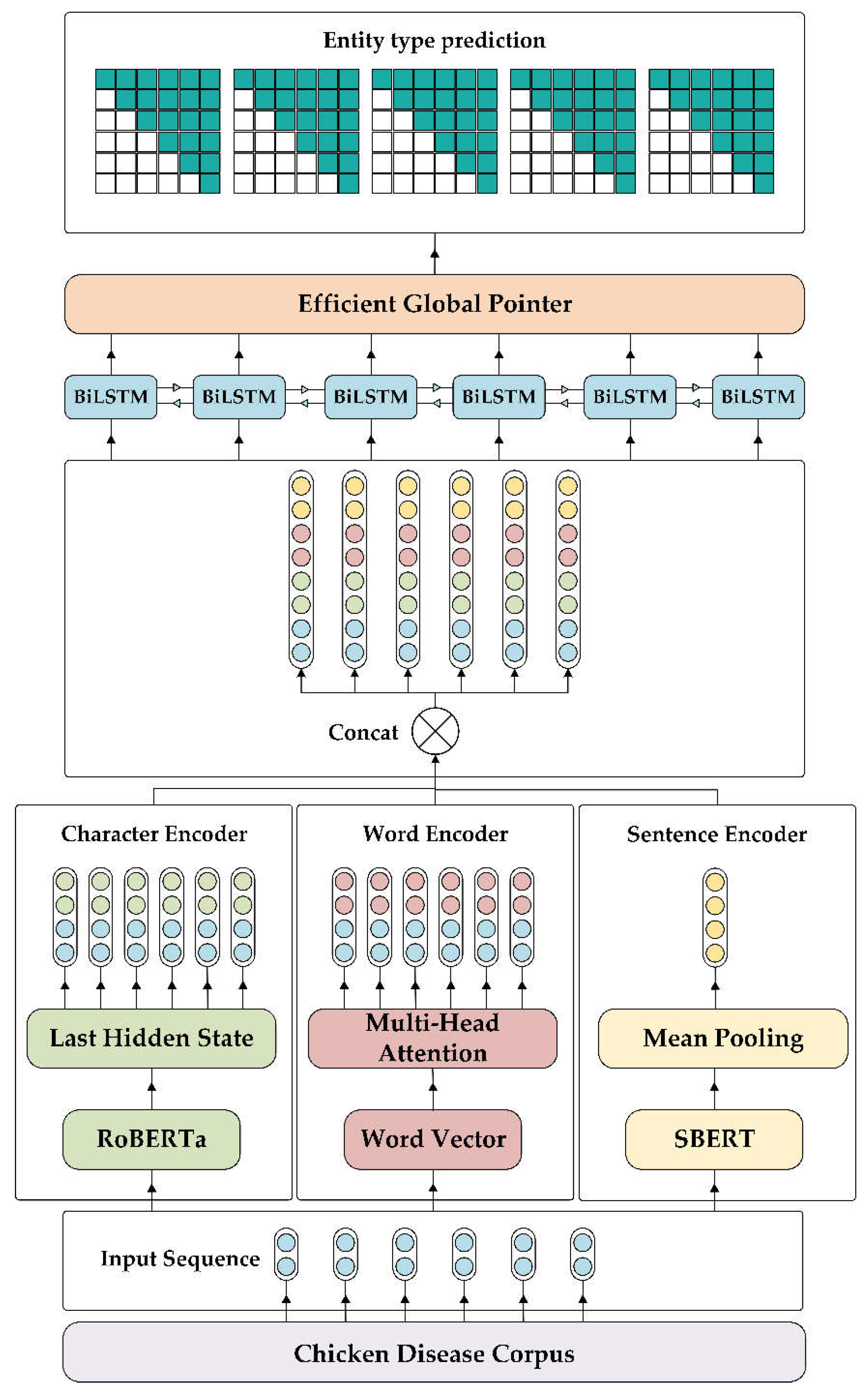

- We constructed the MFGFF-BiLSTM-EGP model. It feeds the fused multi-fine-grained features into a BiLSTM layer and then, through a fully connected layer, into the EGP, which predicts entity positions.

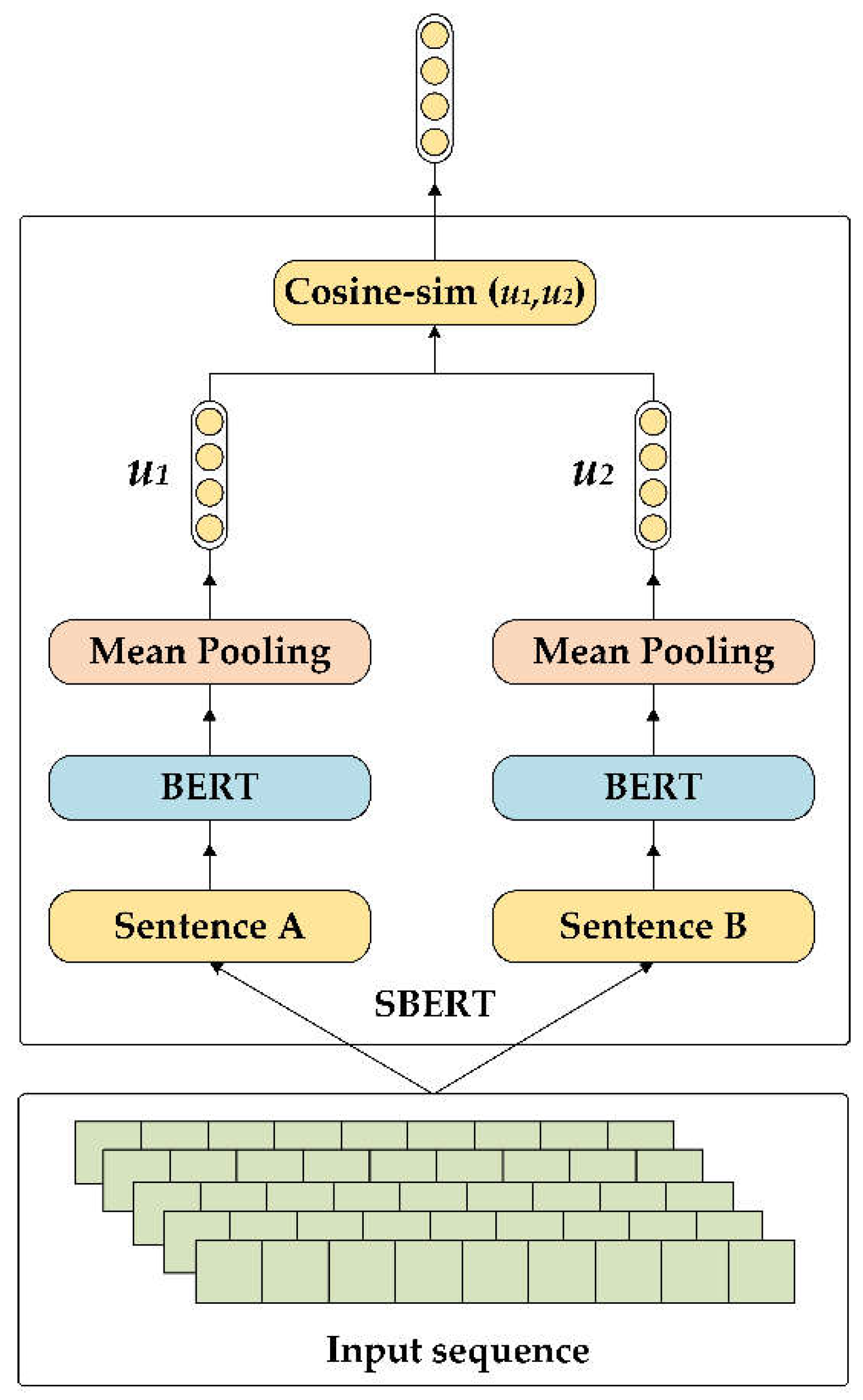

- In our MFGFF module, the character encoder obtains character features by fine-tuning the pre-trained RoBERTa model. The word encoder acquires word features through word-character matching, word-frequency weighting, and a multi-head attention mechanism. The sentence encoder outputs sentence features using SBERT. MFGFF effectively integrates these multiple fine-grained features. In addition, EGP uses positional encoding to predict nested entities.

- We constructed a comprehensive knowledge base for chicken diseases. It includes a 20-million-character corpus, a lexicon of 6,760 specialized terms, and 200-dimensional word vectors for the chicken-disease domain. It also includes CDNER, a high-quality dataset annotated under the guidance of veterinarians.
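The word encoder described above combines word-character matching with word-frequency weighting. The sketch below is a simplified, hypothetical illustration of those two steps only. It assumes that each character receives the frequency-weighted average of the vectors of all lexicon words that cover it. This is similar in spirit to the SoftLexicon weighting of Ma et al. cited in the references, but it is not the authors' implementation.

The multi-head attention step is omitted. `lexicon`, `freq`, and the 2-dimensional toy vectors are hypothetical stand-ins for the 6,760-term vocabulary and the 200-dimensional domain word vectors.

```python
import numpy as np

def match_words(sentence, lexicon, max_len=10):
    """Return (start, end, word) for every lexicon word occurring in sentence (end inclusive)."""
    matches = []
    for i in range(len(sentence)):
        for j in range(i + 1, min(len(sentence), i + max_len) + 1):
            if sentence[i:j] in lexicon:
                matches.append((i, j - 1, sentence[i:j]))
    return matches

def char_word_features(sentence, lexicon, freq, dim):
    """Frequency-weighted average of the vectors of all matched words covering each character."""
    feats = np.zeros((len(sentence), dim))
    matches = match_words(sentence, lexicon)
    for pos in range(len(sentence)):
        covering = [w for s, e, w in matches if s <= pos <= e]
        if not covering:
            continue  # no lexicon word covers this character: keep a zero vector
        # assumption: words missing from `freq` get a default count of 1
        weights = np.array([freq.get(w, 1) for w in covering], dtype=float)
        weights /= weights.sum()  # normalize counts into weights that sum to 1
        feats[pos] = sum(wt * lexicon[w] for wt, w in zip(weights, covering))
    return feats

# Hypothetical toy lexicon with 2-d vectors (the paper uses 200-d domain vectors)
lexicon = {"新城疫": np.array([1.0, 0.0]), "新城": np.array([0.0, 1.0])}
freq = {"新城疫": 3, "新城": 1}  # hypothetical corpus counts
feats = char_word_features("鸡患新城疫", lexicon, freq, dim=2)  # "the chicken has Newcastle disease"
# rows 0-1 stay zero; rows 2-3 -> [0.75, 0.25]; row 4 -> [1.0, 0.0]
```

Characters covered by several overlapping words (here 新 and 城) receive a blend of those words' vectors. Frequent domain terms dominate the blend.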

2. Materials and Methods

2.1. Data and Lexicon

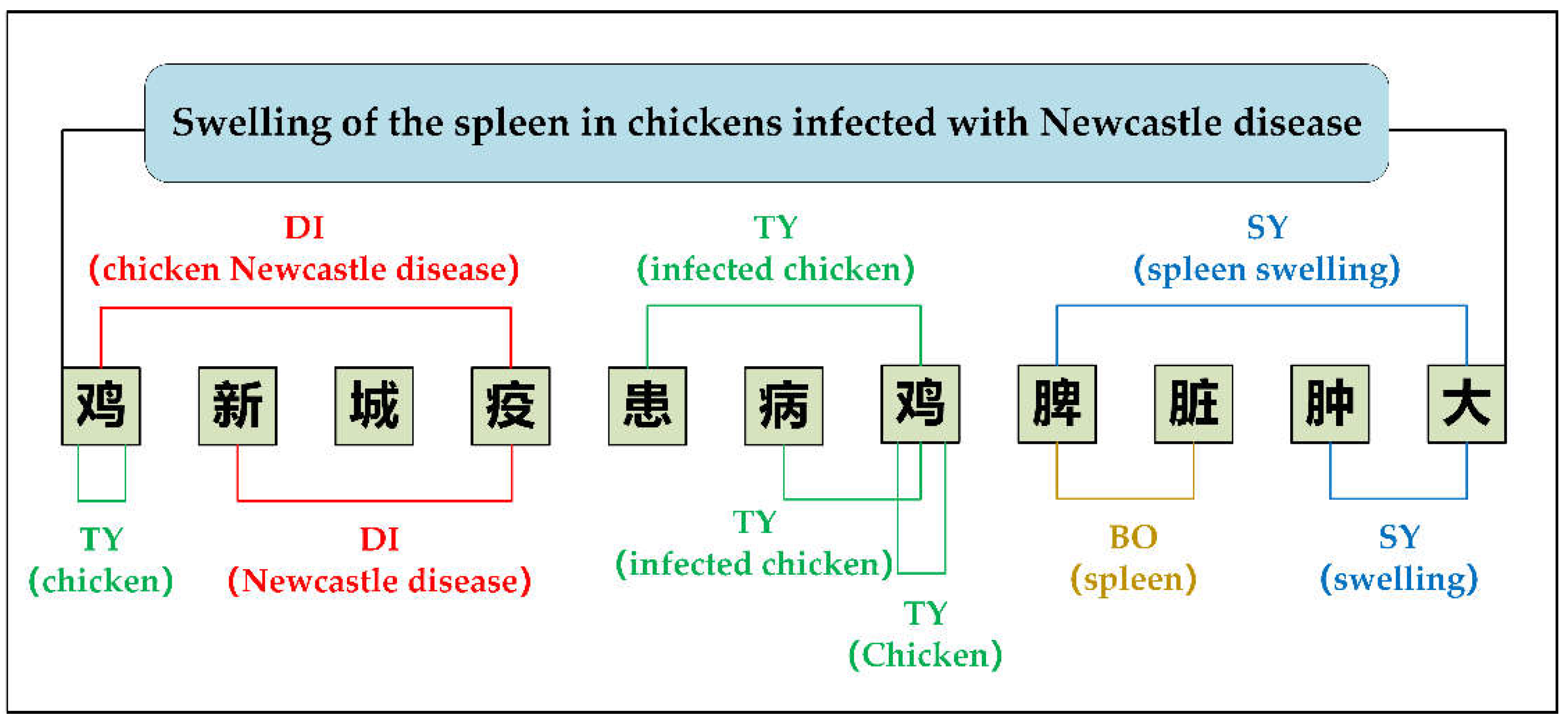

2.2. Entity Labelling

2.3. MFGFF-BiLSTM-EGP

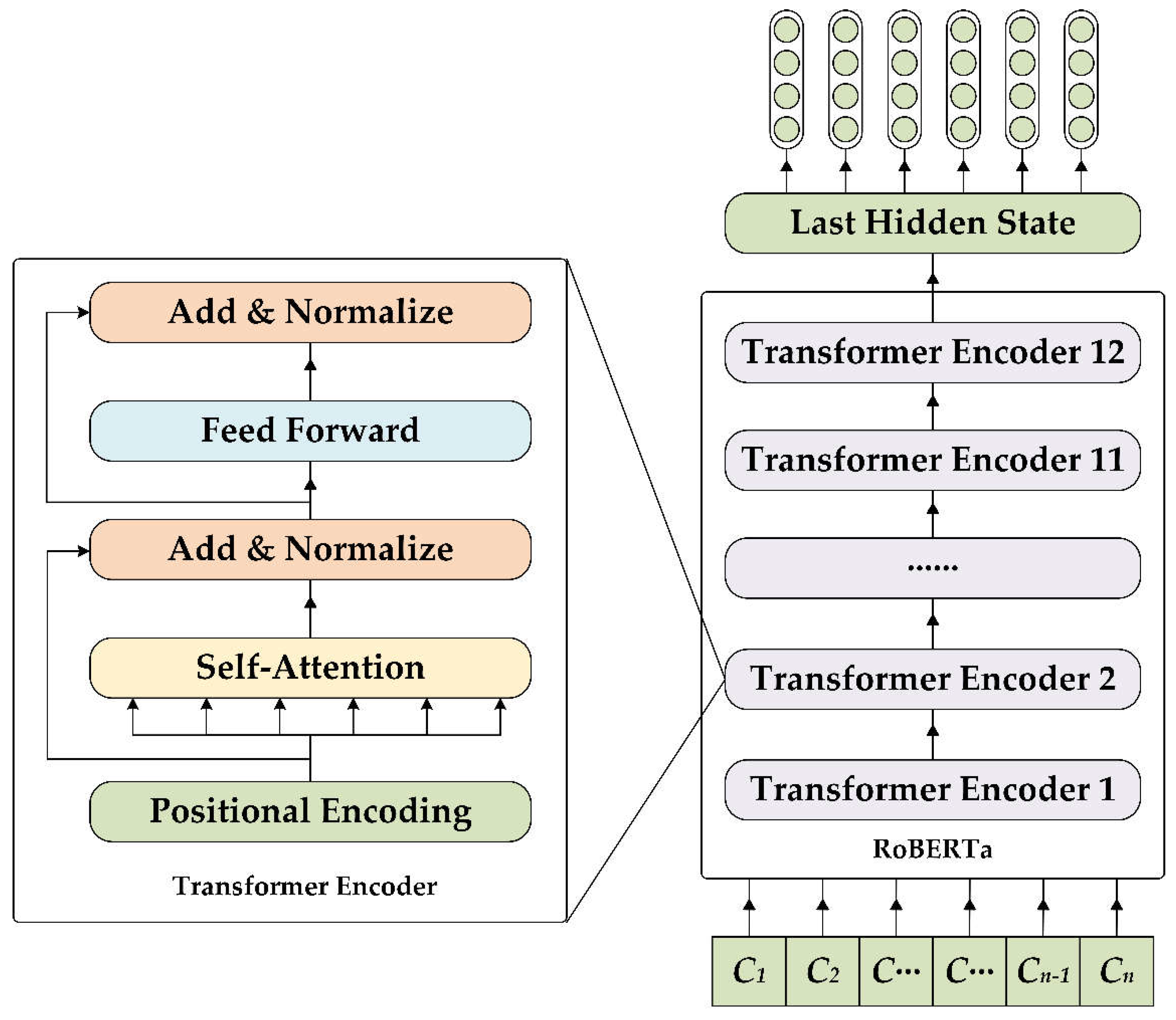

2.3.1. Character Encoder

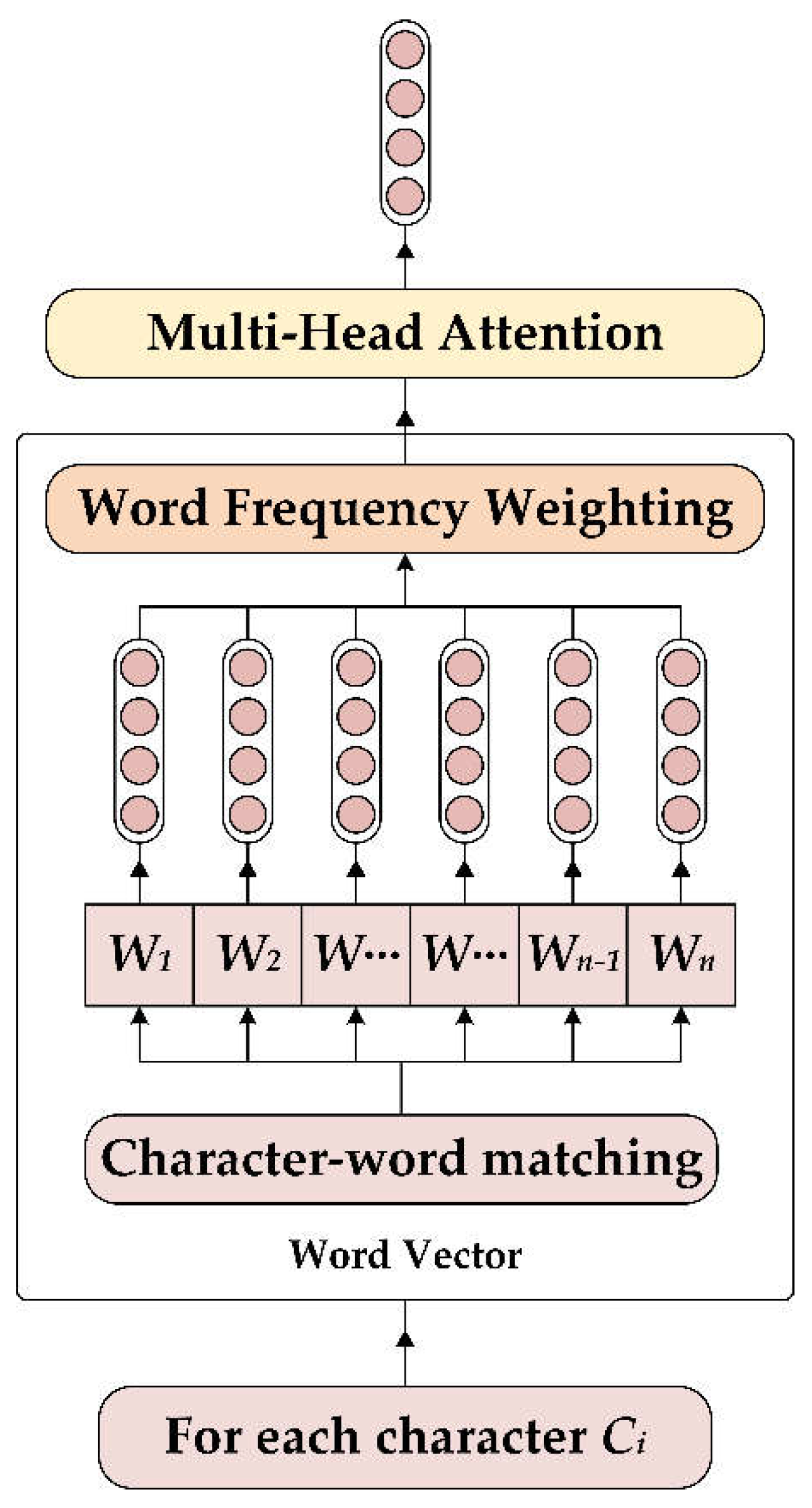

2.3.2. Word Encoder

2.3.3. Sentence Encoder

2.3.4. BiLSTM

2.3.5. Efficient Global Pointer
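As summarized in the Introduction, EGP scores every candidate span (start, end) for each entity type. Overlapping and nested spans can therefore be predicted independently instead of through a single tag sequence. The sketch below assumes the Efficient GlobalPointer formulation:

- a shared projection yields query and key vectors;
- rotary position embedding (RoPE), as used in the original GlobalPointer design, injects relative position;
- a type-agnostic span score q_i·k_j is combined with per-type start and end bias terms.

The weight matrices are random placeholders rather than trained parameters, and the decision threshold of 0 is an assumption.

```python
import numpy as np

def rope(x, positions=None):
    """Rotary position embedding for x of shape (seq_len, dim); dim must be even."""
    n, d = x.shape
    if positions is None:
        positions = np.arange(n)
    inv_freq = 10000.0 ** (-np.arange(0, d, 2) / d)                  # (d/2,)
    angles = np.asarray(positions, dtype=float)[:, None] * inv_freq  # (n, d/2)
    cos, sin = np.cos(angles), np.sin(angles)
    out = np.empty_like(x, dtype=float)
    out[:, 0::2] = x[:, 0::2] * cos - x[:, 1::2] * sin  # rotate each (even, odd) pair
    out[:, 1::2] = x[:, 0::2] * sin + x[:, 1::2] * cos
    return out

def egp_scores(h, w_proj, w_bias):
    """h: (n, hidden) token features; w_proj: (hidden, 2*head); w_bias: (2*head, 2*T).
    Returns scores of shape (T, n, n), where scores[t, i, j] scores span i..j as type t."""
    n = h.shape[0]
    proj = h @ w_proj
    q, k = rope(proj[:, 0::2]), rope(proj[:, 1::2])        # shared q/k for all types
    shared = q @ k.T / np.sqrt(q.shape[1])                 # (n, n) type-agnostic score
    bias = (proj @ w_bias) / 2                             # (n, 2T) per-type biases
    start_bias, end_bias = bias[:, 0::2].T, bias[:, 1::2].T  # (T, n) each
    scores = shared[None] + start_bias[:, :, None] + end_bias[:, None, :]
    invalid = np.tril(np.ones((n, n), dtype=bool), k=-1)   # spans whose end < start
    return np.where(invalid[None], -np.inf, scores)

def decode(scores, threshold=0.0):
    """All (start, end, type) above threshold; nested or overlapping spans are allowed."""
    types, starts, ends = np.where(scores > threshold)
    return sorted(zip(starts.tolist(), ends.tolist(), types.tolist()))

rng = np.random.default_rng(0)
n, hidden, head, num_types = 6, 16, 8, 5   # 5 entity types, as in CDNER
h = rng.normal(size=(n, hidden))           # stand-in for the BiLSTM + FC output
scores = egp_scores(h, rng.normal(size=(hidden, 2 * head)),
                    rng.normal(size=(2 * head, 2 * num_types)))
print(scores.shape)  # (5, 6, 6)

toy = np.full((2, 4, 4), -1.0)
toy[0, 0, 3] = 2.0   # outer span 0..3, type 0
toy[1, 1, 2] = 1.5   # inner span 1..2, type 1, nested inside the outer span
print(decode(toy))   # [(0, 3, 0), (1, 2, 1)]
```

Because each (type, start, end) cell is thresholded independently, the outer and inner spans in the toy example are both returned. A flat BIO tagger cannot represent that case.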

2.4. Experimental Environment and Hyperparameters

2.5. Evaluation Indicators

| Sample | Predicted positive | Predicted negative |
|---|---|---|
| Actual positive | TP | FN |
| Actual negative | FP | TN |
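Precision, recall, and F1 follow from the counts in the table above: P = TP/(TP+FP), R = TP/(TP+FN), and F1 = 2PR/(P+R). TN is not needed for any of the three.

A minimal sketch, assuming exact-match evaluation: a predicted entity counts as a true positive only if its start, end, and type all match a gold entity. The matching criterion is an assumption, and the spans below are hypothetical.

```python
from typing import Set, Tuple

Span = Tuple[int, int, str]  # (start, end inclusive, entity type)

def prf1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, F1; each is 0.0 when its denominator is 0."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1

def entity_counts(gold: Set[Span], pred: Set[Span]) -> Tuple[int, int, int]:
    """TP, FP, FN under exact span-and-type matching."""
    return len(gold & pred), len(pred - gold), len(gold - pred)

gold = {(0, 3, "DI"), (1, 2, "BO")}  # hypothetical gold entities, one nested in the other
pred = {(0, 3, "DI"), (4, 5, "SY")}
print(prf1(*entity_counts(gold, pred)))  # (0.5, 0.5, 0.5)
```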

3. Results

- CDNER (ours): Chicken Disease Named Entity Recognition dataset comprising 5,000 high-quality samples labeled by veterinary experts, containing five types of entities with nested structures.

- CMeEE V2 [47]: A Chinese medical entity recognition dataset with 20,000 annotated samples across nine categories, including nested entities. The categories are diseases (dis), clinical symptoms (sym), drugs (dru), medical equipment (equ), medical procedures (pro), body (bod), medical examination items (ite), microbiology (mic), and departments (dep).

- CLUENER [48]: A general domain NER dataset with 12,091 annotated samples across ten categories: addresses, books, companies, games, government, movies, names, organizations, positions, and scenes.

3.1. Main Results Compared with Other Models

- Lattice LSTM [50]: Encodes input characters and all potential words in the matching dictionary.

- FLAT [51]: A Transformer-based model that utilizes a unique position encoding to integrate the Lattice structure, seamlessly introducing lexical information.

- BERT-CRF: Combines a traditional CRF decoder with the BERT pre-trained model.

- BERT-MRC [52]: Reframes the NER task as a reading comprehension problem.

- SLRBC [53]: Integrates lexical boundary information using the SoftLexicon method with RoBERTa, BiLSTM, and CRF.

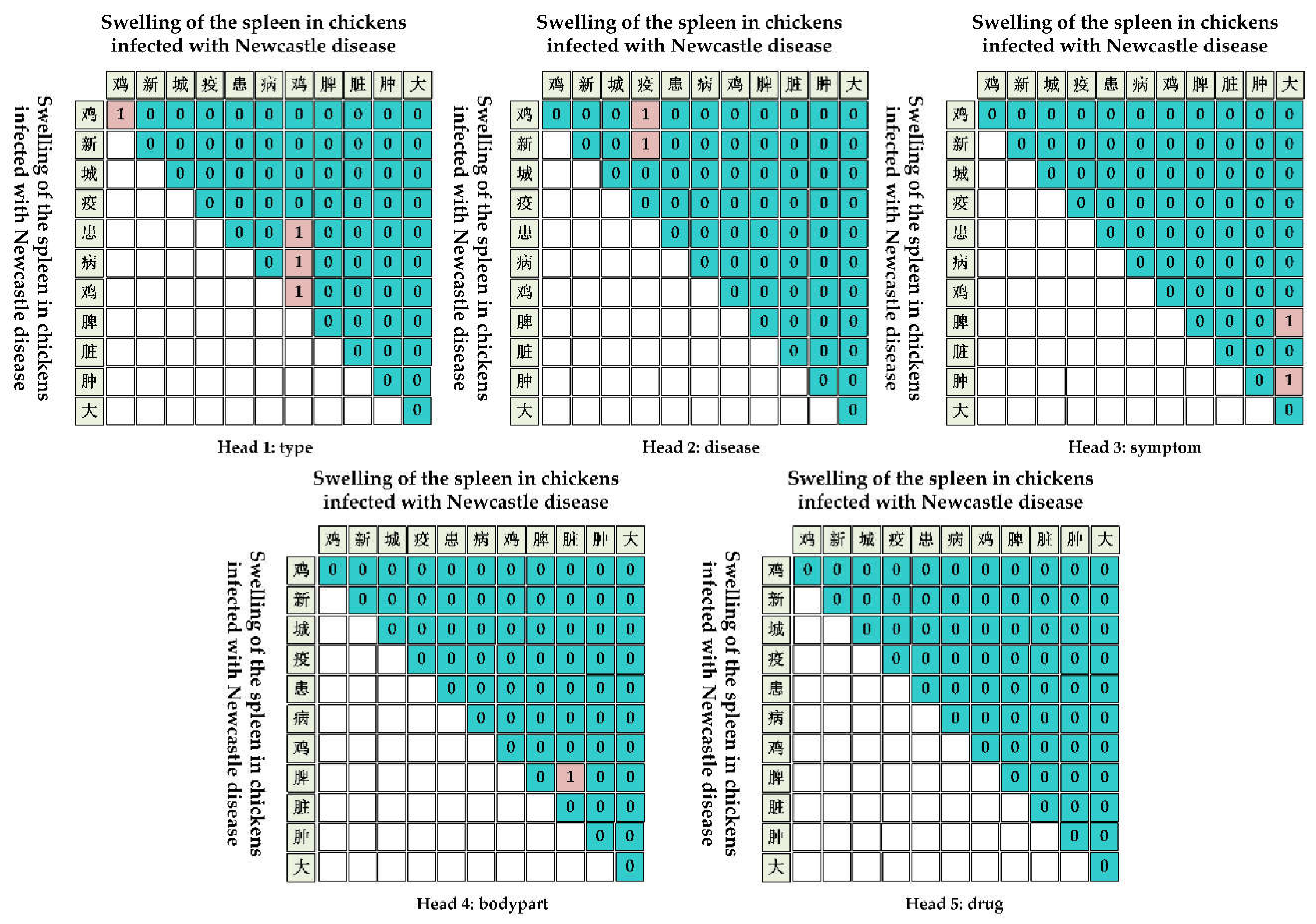

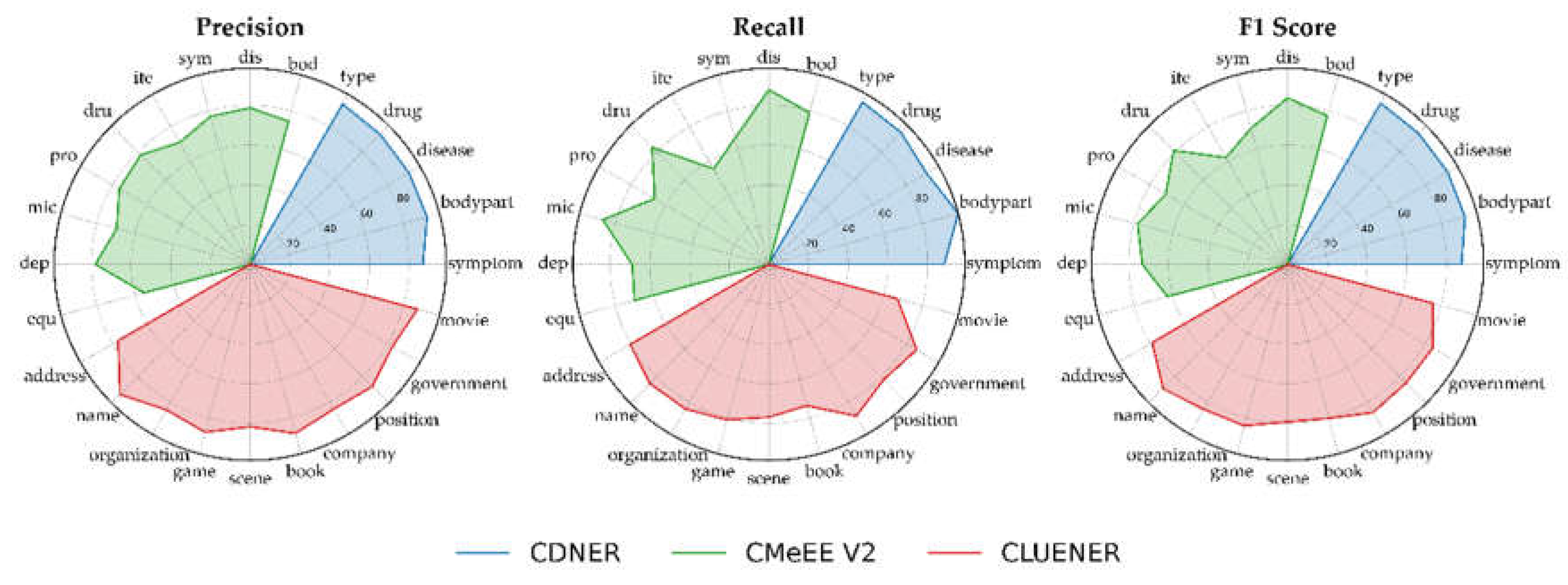

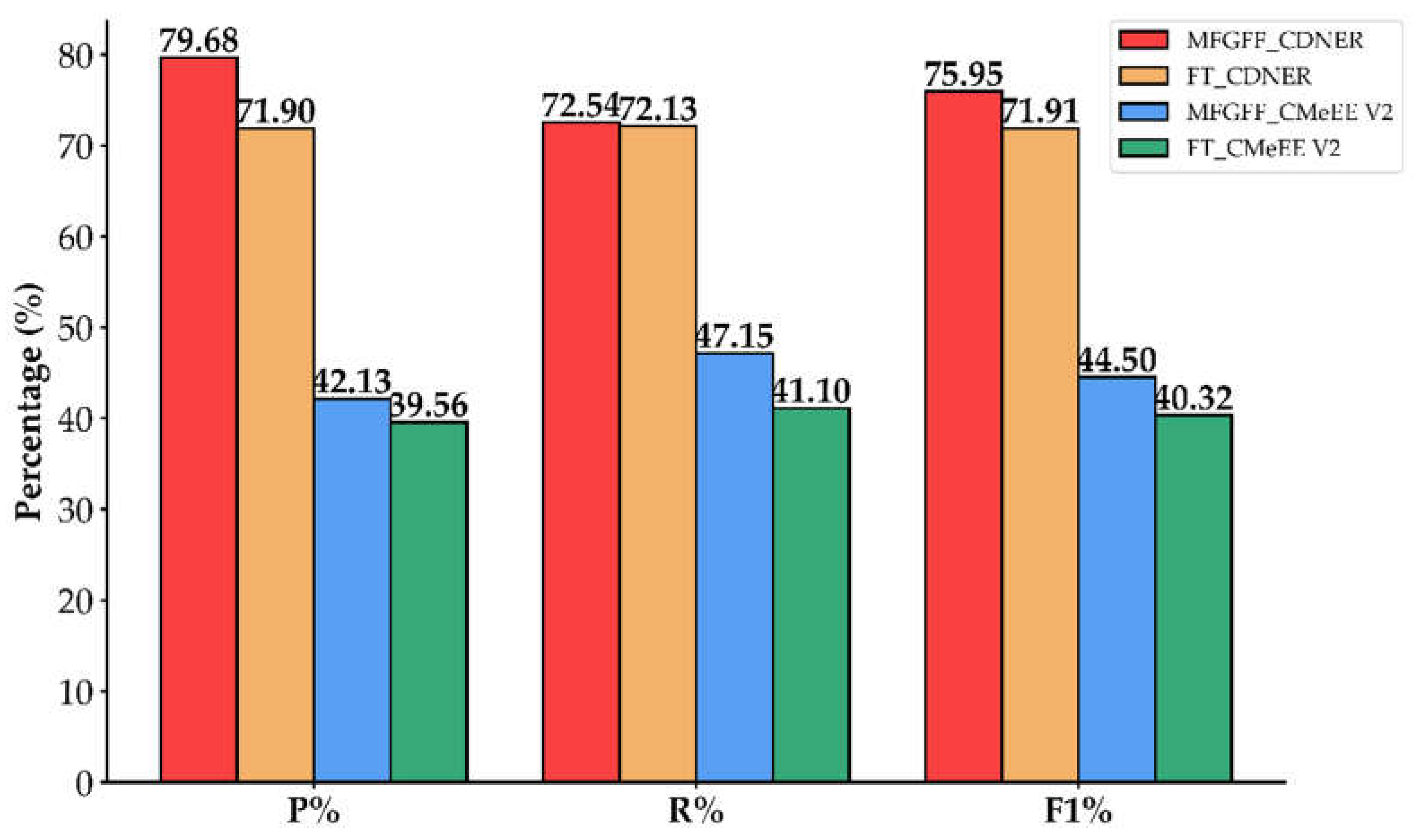

3.2. Entity Level Evaluation

3.3. Ablation Study

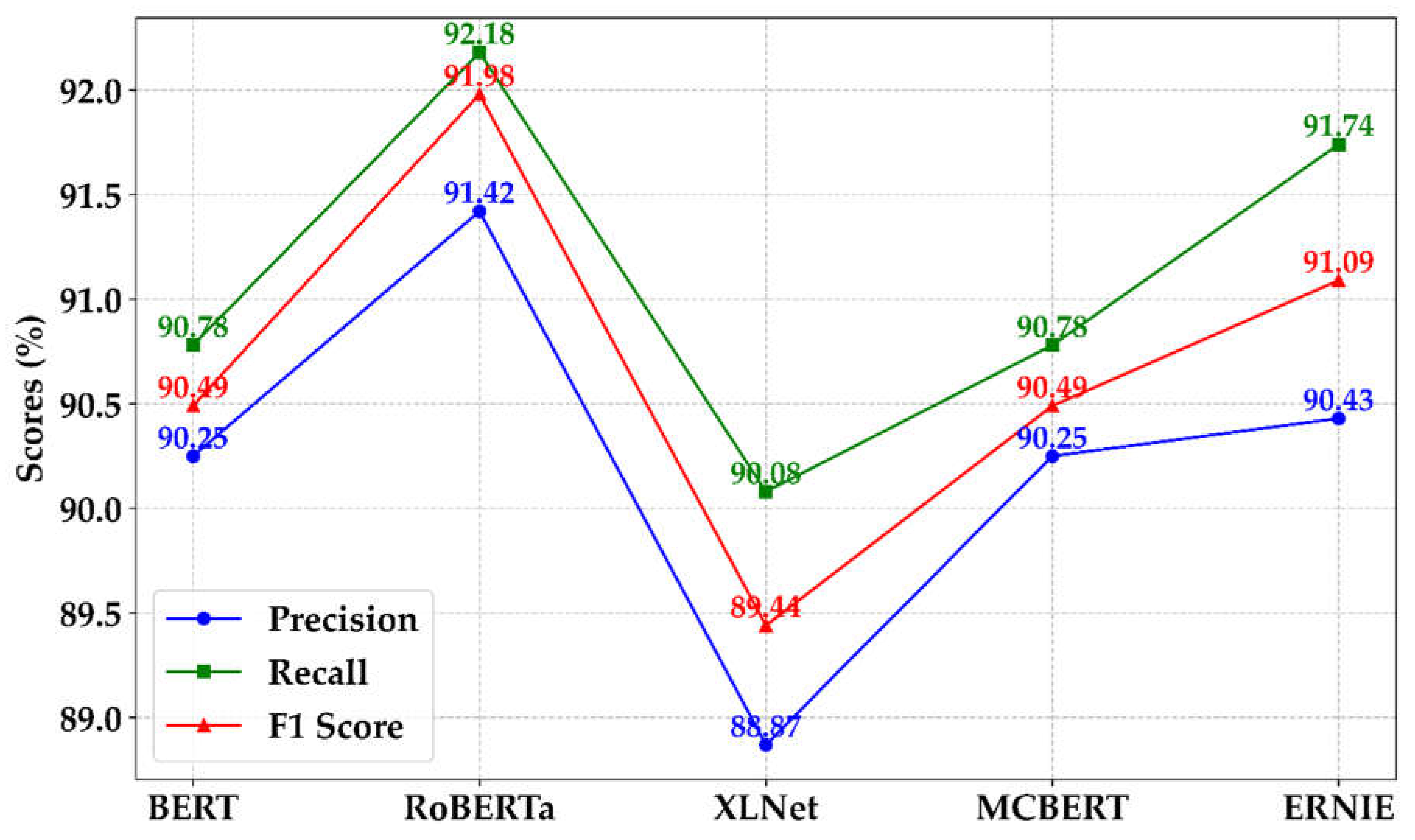

3.3.1. Effect of Pre-Trained Model

3.3.2. Effect of Word Encoder

3.3.3. Effect of Sentence Encoder

4. Discussion

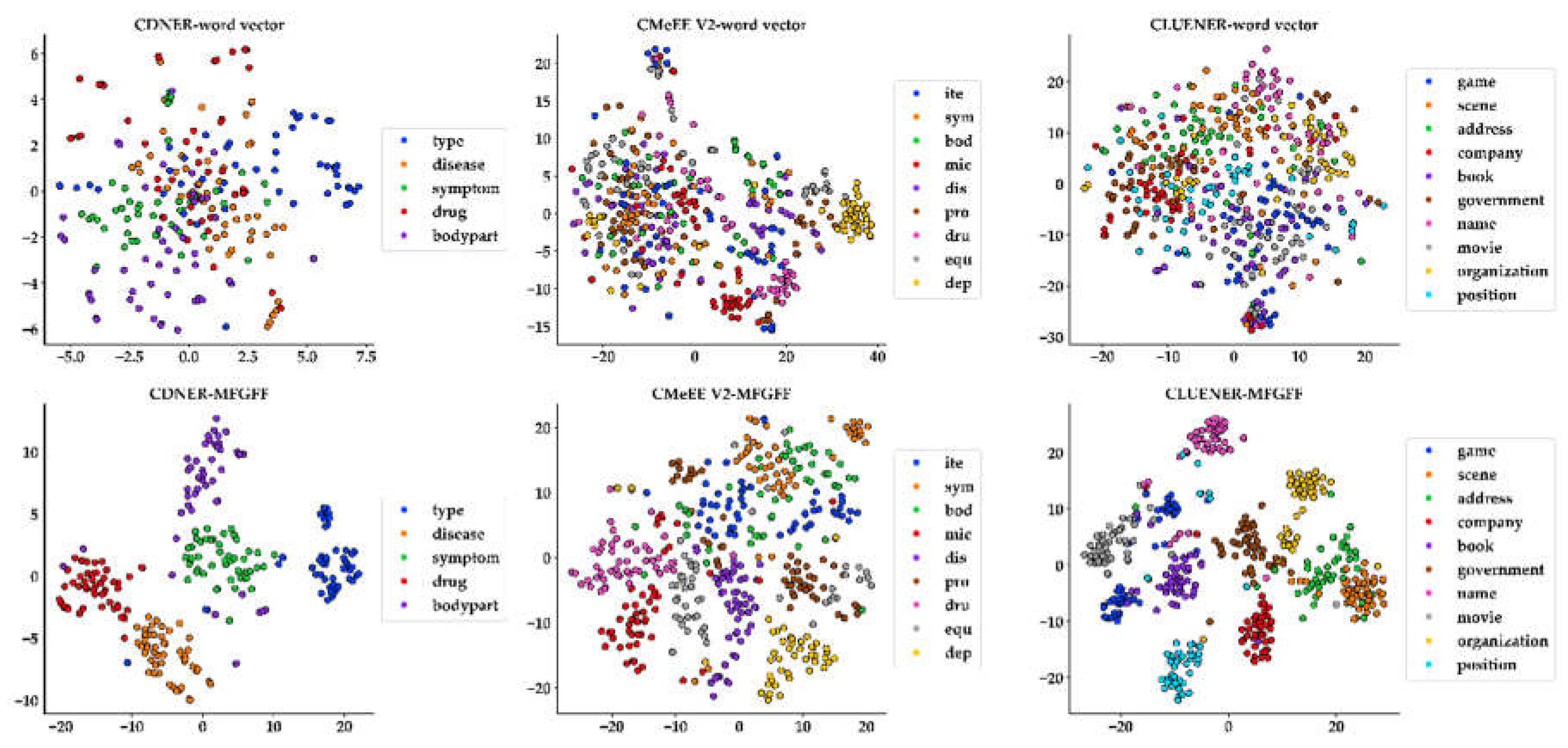

4.1. Visualization of Token Representations in Feature Space

4.2. Nested Entity Prediction Analysis

4.3. Comparative Analysis of Pre-trained Models

5. Conclusions

- The MFGFF-BiLSTM-EGP model exhibited strong performance across multiple datasets, achieving F1 scores of 91.98% on CDNER, 73.32% on CMeEE V2, and 82.54% on CLUENER. These results indicate that the model has a robust ability to generalize.

- Each of the three encoders improved the model's recognition accuracy. The character encoder provided the largest gain, increasing F1 by 9.31 percentage points, while the sentence encoder contributed a smaller gain of 0.65 percentage points. Additionally, the MFGFF module effectively integrates the various fine-grained feature vectors, further improving the model's accuracy.

- The model also performed well in identifying nested entities, with F1 scores of 75.95% on CDNER and 44.50% on CMeEE V2. This represents an improvement of 4.04% and 4.18%, respectively, compared to using word vectors as embeddings, highlighting the effectiveness of the EGP module.
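The two encoder gains in the second point appear to match percentage-point differences in CDNER F1 in the ablation table. In each case the full model (91.98%) is compared with the variants scoring 82.67% and 91.33%:

```latex
\Delta F_1^{\text{char}} = 91.98 - 82.67 = 9.31, \qquad
\Delta F_1^{\text{sent}} = 91.98 - 91.33 = 0.65
```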

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Algorithm A1. Pseudo-code for the MFGFF-BiLSTM-EGP nested named entity recognition model.

| Step | Operation |
|---|---|
| Input | Pre-trained model RoBERTa, word vectors GloVe, sentence embeddings SBERT, network BiLSTM, Efficient Global Pointer EGP, the number of iterations E. |
| Output | Predicted entity spans , optimal model weights . |
| 1 | For = 1, ..., do |
| 2 | |
| 3 | , according to Equations (4) and (5). |
| 4 | , according to Equations (6) to (11). |
| 5 | , according to Equations (12) to (14). |
| 6 | |
| 7 | |
| 8 | , according to Equations (17) to (20). |
| 9 | , according to Equations (21) and (22). |
| 10 | |
| 11 | If |
| 12 | |
| 13 | save |
| 14 | End for |
| 15 | Output: Final predicted spans , best weights . |
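The control flow of Algorithm A1 can be sketched independently of any deep-learning framework: iterate for E epochs, evaluate, and keep the best weights. In the sketch below, `train_one_epoch` and `evaluate_f1` are hypothetical callables standing in for the encoder, BiLSTM, and EGP computations of the intermediate steps.

The comparison in Step 11 is assumed to be the current validation F1 against the best score so far. The algorithm's exact condition is not reproduced here.

```python
import copy
from typing import Any, Callable, Tuple

def train_keep_best(state: Any, num_epochs: int,
                    train_one_epoch: Callable[[Any], Any],
                    evaluate_f1: Callable[[Any], float]) -> Tuple[Any, float]:
    """Run num_epochs epochs and return (best state, best F1)."""
    best_f1, best_state = float("-inf"), None
    for _ in range(num_epochs):               # Step 1: for each of the E iterations
        state = train_one_epoch(state)        # stands in for the forward/backward steps
        f1 = evaluate_f1(state)
        if f1 > best_f1:                      # Step 11 (assumed criterion)
            best_f1 = f1
            best_state = copy.deepcopy(state)  # keep ("save") the best weights so far
    return best_state, best_f1

# Toy usage: the "state" is just an epoch counter; F1 values are made up
fake_scores = iter([0.80, 0.92, 0.88])
best, f1 = train_keep_best({"epoch": 0}, 3,
                           lambda s: {"epoch": s["epoch"] + 1},
                           lambda s: next(fake_scores))
print(best, f1)  # {'epoch': 2} 0.92
```

Deep-copying the state at the moment it improves prevents later epochs from silently overwriting the saved best weights.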

References

- Han, H.; Xue, X.; Li, Q.; Gao, H.; Wang, R.; Jiang, R.; Ren, Z.; Meng, R.; Li, M.; Guo, Y.; et al. Pig-Ear Detection from the Thermal Infrared Image Based on Improved YOLOv8n. Intelligence & Robotics 2024, 4, 1–19.

- Hou, G.; Jian, Y.; Zhao, Q.; Quan, X.; Zhang, H. Language Model Based on Deep Learning Network for Biomedical Named Entity Recognition. Methods 2024, 226, 71–77.

- Nadeau, D.; Sekine, S. A Survey of Named Entity Recognition and Classification. LI 2007, 30, 3–26.

- Jehangir, B.; Radhakrishnan, S.; Agarwal, R. A Survey on Named Entity Recognition — Datasets, Tools, and Methodologies. Natural Language Processing Journal 2023, 3, 100017.

- Morwal, S. Named Entity Recognition Using Hidden Markov Model (HMM). IJNLC 2012, 1, 15–23.

- De Martino, A.; De Martino, D. An Introduction to the Maximum Entropy Approach and Its Application to Inference Problems in Biology. Heliyon 2018, 4, e00596.

- Ju, Z.; Wang, J.; Zhu, F. Named Entity Recognition from Biomedical Text Using SVM. In Proceedings of the 2011 5th International Conference on Bioinformatics and Biomedical Engineering; IEEE: Wuhan, China, May 2011; pp. 1–4.

- Liu, K.; Hu, Q.; Liu, J.; Xing, C. Named Entity Recognition in Chinese Electronic Medical Records Based on CRF. In Proceedings of the 2017 14th Web Information Systems and Applications Conference (WISA); IEEE: Liuzhou, Guangxi Province, China, November 2017; pp. 105–110.

- Dash, A.; Darshana, S.; Yadav, D.K.; Gupta, V. A Clinical Named Entity Recognition Model Using Pretrained Word Embedding and Deep Neural Networks. Decision Analytics Journal 2024, 10, 100426.

- Zhang, R.; Zhao, P.; Guo, W.; Wang, R.; Lu, W. Medical Named Entity Recognition Based on Dilated Convolutional Neural Network. Cognitive Robotics 2022, 2, 13–20.

- Lerner, I.; Paris, N.; Tannier, X. Terminologies Augmented Recurrent Neural Network Model for Clinical Named Entity Recognition. Journal of Biomedical Informatics 2020, 102, 103356.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Computation 1997, 9, 1735–1780.

- Chang, C.; Tang, Y.; Long, Y.; Hu, K.; Li, Y.; Li, J.; Wang, C.-D. Multi-Information Preprocessing Event Extraction With BiLSTM-CRF Attention for Academic Knowledge Graph Construction. IEEE Trans. Comput. Soc. Syst. 2023, 10, 2713–2724.

- An, Y.; Xia, X.; Chen, X.; Wu, F.-X.; Wang, J. Chinese Clinical Named Entity Recognition via Multi-Head Self-Attention Based BiLSTM-CRF. Artificial Intelligence in Medicine 2022, 127, 102282.

- Deng, N.; Fu, H.; Chen, X. Named Entity Recognition of Traditional Chinese Medicine Patents Based on BiLSTM-CRF. Wireless Communications and Mobile Computing 2021, 2021, 1–12.

- Ma, P.; Jiang, B.; Lu, Z.; Li, N.; Jiang, Z. Cybersecurity Named Entity Recognition Using Bidirectional Long Short-Term Memory with Conditional Random Fields. Tsinghua Sci. Technol. 2021, 26, 259–265.

- Baigang, M.; Yi, F. A Review: Development of Named Entity Recognition (NER) Technology for Aeronautical Information Intelligence. Artif Intell Rev 2023, 56, 1515–1542.

- Wang, Y.; Tong, H.; Zhu, Z.; Li, Y. Nested Named Entity Recognition: A Survey. ACM Trans. Knowl. Discov. Data 2022, 16, 1–29.

- Shen, D.; Zhang, J.; Zhou, G.; Su, J.; Tan, C.-L. Effective Adaptation of a Hidden Markov Model-Based Named Entity Recognizer for Biomedical Domain. In Proceedings of the ACL 2003 Workshop on Natural Language Processing in Biomedicine; Association for Computational Linguistics: Sapporo, Japan, 2003; Vol. 13, pp. 49–56.

- Yang, J.; Zhang, T.; Tsai, C.-Y.; Lu, Y.; Yao, L. Evolution and Emerging Trends of Named Entity Recognition: Bibliometric Analysis from 2000 to 2023. Heliyon 2024, 10, e30053.

- Ming, H.; Yang, J.; Gui, F.; Jiang, L.; An, N. Few-Shot Nested Named Entity Recognition. Knowledge-Based Systems 2024, 293, 111688.

- Huang, H.; Lei, M.; Feng, C. Hypergraph Network Model for Nested Entity Mention Recognition. Neurocomputing 2021, 423, 200–206.

- Jiang, D.; Ren, H.; Cai, Y.; Xu, J.; Liu, Y.; Leung, H. Candidate Region Aware Nested Named Entity Recognition. Neural Networks 2021, 142, 340–350.

- Nivre, J.; McDonald, R. Integrating Graph-Based and Transition-Based Dependency Parsers.

- Huang, P.; Zhao, X.; Hu, M.; Tan, Z.; Xiao, W. T2-NER: A Two-Stage Span-Based Framework for Unified Named Entity Recognition with Templates. Transactions of the Association for Computational Linguistics 2023, 11, 1265–1282.

- Li, F.; Wang, Z.; Hui, S.C.; Liao, L.; Zhu, X.; Huang, H. A Segment Enhanced Span-Based Model for Nested Named Entity Recognition. Neurocomputing 2021, 465, 26–37.

- Jiang, W. A Method for Ancient Book Named Entity Recognition Based on BERT-Global Pointer. IJCSIT 2024, 2, 443–450.

- Zhang, P.; Liang, W. Medical Name Entity Recognition Based on Lexical Enhancement and Global Pointer. IJACSA 2023, 14.

- Zhang, X.; Luo, X.; Wu, J. A RoBERTa-GlobalPointer-Based Method for Named Entity Recognition of Legal Documents. In Proceedings of the 2023 International Joint Conference on Neural Networks (IJCNN); IEEE: Gold Coast, Australia, June 18 2023; pp. 1–8.

- Cong, L.; Cui, R.; Dou, Z.; Huang, C.; Zhao, L.; Zhang, Y.; Chen, C.; Su, C.; Li, J.; Qu, C. Named Entity Recognition for Power Data Based on Lexical Enhancement and Global Pointer. In Proceedings of the Third International Conference on Electronic Information Engineering, Big Data, and Computer Technology (EIBDCT 2024); Zhang, J., Sun, N., Eds.; SPIE: Beijing, China, July 19 2024; p. 61.

- Yadav, V.; Bethard, S. A Survey on Recent Advances in Named Entity Recognition from Deep Learning Models 2019.

- Liu, Z.; Jiang, F.; Hu, Y.; Shi, C.; Fung, P. NER-BERT: A Pre-Trained Model for Low-Resource Entity Tagging 2021.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding.

- Yang, Z.; Dai, Z.; Yang, Y.; Carbonell, J.; Salakhutdinov, R.R.; Le, Q.V. XLNet: Generalized Autoregressive Pretraining for Language Understanding.

- Zhang, N.; Jia, Q.; Yin, K.; Dong, L.; Gao, F.; Hua, N. Conceptualized Representation Learning for Chinese Biomedical Text Mining 2020.

- Zhang, Z.; Han, X.; Liu, Z.; Jiang, X.; Sun, M.; Liu, Q. ERNIE: Enhanced Language Representation with Informative Entities 2019.

- Ma, R.; Peng, M.; Zhang, Q.; Wei, Z.; Huang, X. Simplify the Usage of Lexicon in Chinese NER. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics; Association for Computational Linguistics: Online, 2020; pp. 5951–5960.

- Zhao, J.; Cui, M.; Gao, X.; Yan, S.; Ni, Q. Chinese Named Entity Recognition Based on BERT and Lexicon Enhancement. In Proceedings of the 2022 4th International Conference on Robotics, Intelligent Control and Artificial Intelligence; ACM: Dongguan, China, December 16 2022; pp. 597–604.

- Zhang, J.; Guo, M.; Geng, Y.; Li, M.; Zhang, Y.; Geng, N. Chinese Named Entity Recognition for Apple Diseases and Pests Based on Character Augmentation. Computers and Electronics in Agriculture 2021, 190, 106464.

- Liu, Q.; Zhang, L.; Ren, G.; Zou, B. Research on Named Entity Recognition of Traditional Chinese Medicine Chest Discomfort Cases Incorporating Domain Vocabulary Features. Computers in Biology and Medicine 2023, 166, 107466.

- Sun, M.; Yang, Q.; Wang, H.; Pasquine, M.; Hameed, I.A. Learning the Morphological and Syntactic Grammars for Named Entity Recognition. Information 2022, 13, 49.

- Tian, Y.; Shen, W.; Song, Y.; Xia, F.; He, M.; Li, K. Improving Biomedical Named Entity Recognition with Syntactic Information. BMC Bioinformatics 2020, 21, 539.

- Luoma, J.; Pyysalo, S. Exploring Cross-Sentence Contexts for Named Entity Recognition with BERT 2020.

- Jia, B.; Wu, Z.; Wu, B.; Liu, Y.; Zhou, P. Enhanced Character Embedding for Chinese Named Entity Recognition. Measurement and Control 2020, 53, 1669–1681.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. 2019.

- Hongying, Z.; Wenxin, L.; Kunli, Z.; Yajuan, Y.; Baobao, C.; Zhifang, S. Building a Pediatric Medical Corpus: Word Segmentation and Named Entity Annotation. In Chinese Lexical Semantics; Liu, M., Kit, C., Su, Q., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, 2021; Vol. 12278, pp. 652–664; ISBN 978-3-030-81196-9.

- Xu, L.; Liu, W.; Li, L.; Liu, C.; Zhang, X. CLUENER2020: Fine-Grained Named Entity Recognition Dataset and Benchmark for Chinese.

- Song, Y.; Shi, S.; Li, J.; Zhang, H. Directional Skip-Gram: Explicitly Distinguishing Left and Right Context for Word Embeddings. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 2 (Short Papers); Association for Computational Linguistics: New Orleans, Louisiana, 2018; pp. 175–180.

- Zhang, Y.; Yang, J. Chinese NER Using Lattice LSTM. 2018.

- Li, X.; Yan, H.; Qiu, X.; Huang, X. FLAT: Chinese NER Using Flat-Lattice Transformer 2020.

- Li, X.; Feng, J.; Meng, Y.; Han, Q.; Wu, F.; Li, J. A Unified MRC Framework for Named Entity Recognition 2022.

- Cui, X.; Yang, Y.; Li, D.; Qu, X.; Yao, L.; Luo, S.; Song, C. Fusion of SoftLexicon and RoBERTa for Purpose-Driven Electronic Medical Record Named Entity Recognition. Applied Sciences 2023, 13, 13296.

| Entity | Labels | Example | Num |
|---|---|---|---|
| type | TY | adult chickens, laying hens, flocks, sick chickens | 4503 |
| disease | DI | Newcastle disease, avian influenza | 4098 |
| symptom | SY | clinical warming, oedema, congestion | 9265 |
| bodypart | BO | head, eyes, liver, lymphocyte | 5744 |
| drug | DR | oxytetracycline, gentamycin, tetracycline | 3201 |
| Total | | | 26811 |

| Hyperparameter | Value |
|---|---|
| Optimizer | Adam |
| Warm up | 0.1 |
| LSTM units | 256 |
| Batch size | 32 |
| Dropout | 0.4 |
| Max len | 128 |
| Epoch | 20 |
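The table gives a warm-up value of 0.1 but not the schedule itself. A common reading is that the learning rate rises linearly over the first 10% of training steps and then decays linearly to zero. This is the same shape as Hugging Face's `get_linear_schedule_with_warmup`.

The sketch below assumes that reading. The peak learning rate (2e-5) and the training-set size (4,000 samples) are hypothetical, since neither is listed in the table.

```python
import math

def linear_warmup_lr(step: int, total_steps: int, peak_lr: float,
                     warmup_ratio: float = 0.1) -> float:
    """Learning rate at a 0-based step: linear warm-up, then linear decay to 0."""
    warmup_steps = max(1, round(total_steps * warmup_ratio))
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    return peak_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))

batch_size, epochs = 32, 20   # values from the table
n_train = 4000                # hypothetical training-set size
peak_lr = 2e-5                # hypothetical peak learning rate
steps_per_epoch = math.ceil(n_train / batch_size)
total_steps = steps_per_epoch * epochs
for s in (0, 125, 250, 1375, 2500):
    print(s, linear_warmup_lr(s, total_steps, peak_lr))
```

With these assumptions there are 2,500 steps and 250 warm-up steps. The rate peaks at step 250 and is half the peak both at step 125 (mid-warm-up) and at step 1375 (mid-decay).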

| Model | CDNER P/% | CDNER R/% | CDNER F1/% | CMeEE V2 P/% | CMeEE V2 R/% | CMeEE V2 F1/% | CLUENER P/% | CLUENER R/% | CLUENER F1/% |
|---|---|---|---|---|---|---|---|---|---|
| Lattice LSTM | 82.43 | 84.51 | 83.46 | 61.26 | 62.33 | 61.79 | 71.69 | 70.78 | 71.23 |
| FLAT | 80.55 | 82.99 | 81.75 | 58.18 | 61.11 | 59.61 | 64.64 | 68.45 | 66.49 |
| BERT-CRF | 87.86 | 89.53 | 88.69 | 70.93 | 73.24 | 71.98 | 79.15 | 81.86 | 80.48 |
| BERT-MRC | 80.16 | 85.91 | 82.93 | 68.00 | 68.35 | 67.97 | 75.60 | 78.22 | 76.89 |
| SLRBC | 88.58 | 90.72 | 89.64 | 71.54 | 72.59 | 72.06 | 81.55 | 80.51 | 81.03 |
| MFGFF-BiLSTM-EGP (ours) | 91.42 | 92.18 | 91.98 | 73.12 | 73.68 | 73.32 | 85.21 | 80.38 | 82.54 |

| Data | Category | P/% | R/% | F1/% | Macro P/% | Macro R/% | Macro F1/% |
|---|---|---|---|---|---|---|---|
| CDNER | symptom | 86.99 | 88.26 | 87.6 | 91.42 | 92.18 | 91.98 |
| | bodypart | 92.19 | 92.70 | 92.43 | | | |
| | disease | 92.29 | 91.70 | 92.98 | | | |
| | drug | 92.50 | 93.97 | 93.22 | | | |
| | type | 93.11 | 94.25 | 93.68 | | | |
| CMeEE V2 | bod | 74.56 | 79.05 | 77.30 | 73.12 | 73.68 | 73.32 |
| | dis | 78.73 | 87.85 | 83.81 | | | |
| | sym | 77.10 | 65.75 | 70.9 | | | |
| | ite | 70.87 | 55.58 | 62.04 | | | |
| | dru | 77.94 | 83.23 | 81.10 | | | |
| | pro | 76.09 | 66.30 | 70.84 | | | |
| | mic | 69.56 | 86.60 | 78.19 | | | |
| | dep | 77.97 | 68.78 | 73.1 | | | |
| | equ | 55.23 | 70.01 | 62.62 | | | |
| CLUENER | address | 76.81 | 80.71 | 78.71 | 85.21 | 80.38 | 82.54 |
| | name | 92.67 | 84.76 | 88.54 | | | |
| | organization | 84.52 | 84.02 | 84.27 | | | |
| | game | 87.42 | 80.98 | 84.08 | | | |
| | scene | 81.75 | 76.87 | 79.23 | | | |
| | book | 88.04 | 73.64 | 80.20 | | | |
| | company | 84.09 | 88.10 | 86.05 | | | |
| | position | 86.92 | 81.88 | 84.33 | | | |
| | government | 82.72 | 85.90 | 84.28 | | | |
| | movie | 87.21 | 66.96 | 75.76 | | | |
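The CDNER macro scores in the table above equal the unweighted means of the five per-category P, R, and F1 values. The short check below reproduces them from the reported figures.

Macro F1 computed this way (91.98) differs from the harmonic mean of macro P and macro R (roughly 91.8). The two definitions should therefore not be mixed when comparing models.

```python
# Per-category (P, R, F1) for CDNER, copied from the table above
cdner = {
    "symptom": (86.99, 88.26, 87.6),
    "bodypart": (92.19, 92.70, 92.43),
    "disease": (92.29, 91.70, 92.98),
    "drug": (92.50, 93.97, 93.22),
    "type": (93.11, 94.25, 93.68),
}

def macro_average(per_class):
    """Unweighted mean of each metric over categories."""
    n = len(per_class)
    return tuple(sum(v[i] for v in per_class.values()) / n for i in range(3))

p, r, f1 = macro_average(cdner)
print(round(p, 2), round(r, 2), round(f1, 2))  # 91.42 92.18 91.98
harmonic = 2 * p * r / (p + r)                  # a different quantity from macro F1
print(round(harmonic, 2))
```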

| Model | Pre-trained Model | Word Encoder | Sentence Encoder | F1/% |
|---|---|---|---|---|

| 1 | ✓ | 88.01 | ||

| 2 | ✓ | 82.32 | ||

| 3 | ✓ | ✓ | 82.67 | |

| 4 | ✓ | ✓ | 91.33 | |

| 5 | ✓ | ✓ | 88.34 | |

| 6 | ✓ | ✓ | ✓ | 91.98 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).