Appendix I: Cheryl’s Puzzles

Cheryl’s Birthday Puzzle and its Solution

Question:

Albert and Bernard just became friends with Cheryl, and they want to know when her birthday is. Cheryl gives them a list of 10 possible dates:

May 15, May 16, May 19

June 17, June 18

July 14, July 16

August 14, August 15, August 17

Cheryl then tells Albert and Bernard separately the month and the day of her birthday respectively.

Albert: I don’t know when Cheryl’s birthday is, but I know that Bernard doesn’t know too.

Bernard: At first, I don’t know when Cheryl’s birthday is, but I know now.

Albert: Then I also know when Cheryl’s birthday is.

So, when is Cheryl’s birthday?

Solution:

The answer is July 16, and the puzzle can be solved through date elimination after presenting the 10 given dates in a grid as shown below.

| Month | 14 | 15 | 16 | 17 | 18 | 19 |
|---|---|---|---|---|---|---|
| May |  | 15 | 16 |  |  | 19 |
| June |  |  |  | 17 | 18 |  |
| July | 14 |  | 16 |  |  |  |
| August | 14 | 15 |  | 17 |  |  |

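Based on the first line of the dialogue, Albert, who knows only the month, is certain that Bernard does not know the birthday. The days 18 and 19 each appear only once (June 18 and May 19), so if the month were June or May, Bernard might have been told a unique day and would already know the answer. Albert’s certainty therefore shows that his month is neither May nor June, and all 5 dates in May and June can be eliminated.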

Based on the second line of the dialogue, Bernard, who knows the day, can now determine Cheryl’s birthday. Among the remaining dates (July 14, July 16, August 14, August 15, August 17), the day 14 appears twice, so if the day were 14 he could not distinguish between July 14 and August 14. So, both July 14 and August 14 can be eliminated.

Based on the third line of the dialogue, Albert sorts it out based on the month given to him. If the month is August, both August 15 and August 17 are possible, and he wouldn’t know Cheryl’s birthday. So, both dates are eliminated, and Cheryl’s birthday is on July 16.
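The three elimination steps can be replayed mechanically by treating each line of the dialogue as a filter on the candidate dates. The Python sketch below is one possible encoding, written for illustration only (the helper names are our own, not taken from this work). It also applies the implicit condition that Albert, who says he does not know, must hold a month with more than one candidate date.

```python
from collections import Counter

# The ten candidate dates of the original puzzle, as (month, day) pairs.
DATES = [
    ("May", 15), ("May", 16), ("May", 19),
    ("June", 17), ("June", 18),
    ("July", 14), ("July", 16),
    ("August", 14), ("August", 15), ("August", 17),
]


def day_counts(dates):
    """How many candidate dates share each day (what Bernard can distinguish)."""
    return Counter(day for _, day in dates)


def month_counts(dates):
    """How many candidate dates share each month (what Albert can distinguish)."""
    return Counter(month for month, _ in dates)


def solve_original(dates):
    """Apply the three lines of the original dialogue; return the survivors of each step."""
    days, months = day_counts(dates), month_counts(dates)
    # Line 1 (Albert): he doesn't know, so his month has several dates; he is sure
    # Bernard doesn't know, so no date in his month has a unique day.
    unsafe = {m for m, d in dates if days[d] == 1}
    step1 = [(m, d) for m, d in dates if months[m] > 1 and m not in unsafe]
    # Line 2 (Bernard): he now knows, so his day is unique among the survivors.
    days = day_counts(step1)
    step2 = [(m, d) for m, d in step1 if days[d] == 1]
    # Line 3 (Albert): he now knows, so his month is unique among the survivors.
    months = month_counts(step2)
    step3 = [(m, d) for m, d in step2 if months[m] == 1]
    return step1, step2, step3


for line, survivors in enumerate(solve_original(DATES), start=1):
    print(f"after line {line}: {survivors}")
```

Running it prints the surviving dates after each line of the dialogue, ending with `[('July', 16)]`.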

Modified Cheryl’s Birthday Puzzle and its Solution

Question:

In the modified version of Cheryl’s Birthday Puzzle, the only part changed is the dialogue, which is as follows:

Bernard: I don’t know when Cheryl’s birthday is.

Albert: I still don’t know when Cheryl’s birthday is.

Bernard: At first I didn’t know when Cheryl’s birthday is, but I know now.

Albert: Then I also know when Cheryl’s birthday is.

Solution:

Although the same date elimination technique can be applied, the answer is different: August 17.

| Month | 14 | 15 | 16 | 17 | 18 | 19 |
|---|---|---|---|---|---|---|
| May |  | 15 | 16 |  |  | 19 |
| June |  |  |  | 17 | 18 |  |
| July | 14 |  | 16 |  |  |  |
| August | 14 | 15 |  | 17 |  |  |

Based on the first line of the dialogue, Bernard does not know the birthday. Since the days 19 and 18 each appear only once, Bernard would know it if Cheryl’s birthday were May 19 or June 18. So, neither date is possible, and both can be eliminated.

Based on the second line of the dialogue, Albert still does not know the birthday. With June 18 eliminated, June 17 is the only date left in June, so Albert would know the exact date if the month were June. Therefore, June 17 is eliminated as well, and no date in June remains.

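Based on the third line of the dialogue, Bernard now knows the birthday. Among the remaining dates, the days 14, 15, and 16 each appear twice (July 14 and August 14, May 15 and August 15, May 16 and July 16), so Bernard could not decide if his day were one of them. The only remaining date with a unique day is August 17, because June 17 was eliminated after the second line.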

Based on the fourth line, Albert can also determine Cheryl’s birthday by retracing Bernard’s reasoning.
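The same filtering idea carries over to the modified dialogue, with four filters instead of three. In the sketch below (again our own illustration, with hypothetical helper names), `filter_by` keeps the dates whose month or day is shared, for the "I don’t know" lines, or unique, for the "I know now" lines.

```python
from collections import Counter

# The same ten candidate dates, as (month, day) pairs.
DATES = [
    ("May", 15), ("May", 16), ("May", 19),
    ("June", 17), ("June", 18),
    ("July", 14), ("July", 16),
    ("August", 14), ("August", 15), ("August", 17),
]

MONTH, DAY = 0, 1  # positions inside a (month, day) pair


def filter_by(dates, key, unique):
    """Keep dates whose month or day (selected by key) is unique (True) or shared (False)."""
    counts = Counter(date[key] for date in dates)
    return [date for date in dates if (counts[date[key]] == 1) == unique]


def solve_modified(dates):
    """Apply the four lines of the modified dialogue; return the survivors of each step."""
    step1 = filter_by(dates, DAY, unique=False)    # Bernard: I don't know.
    step2 = filter_by(step1, MONTH, unique=False)  # Albert: I still don't know.
    step3 = filter_by(step2, DAY, unique=True)     # Bernard: now I know.
    step4 = filter_by(step3, MONTH, unique=True)   # Albert: then I also know.
    return [step1, step2, step3, step4]


for line, survivors in enumerate(solve_modified(DATES), start=1):
    print(f"after line {line}: {survivors}")
```

The first two filters remove May 19 and June 18, then June 17; the last two both leave only `('August', 17)`.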

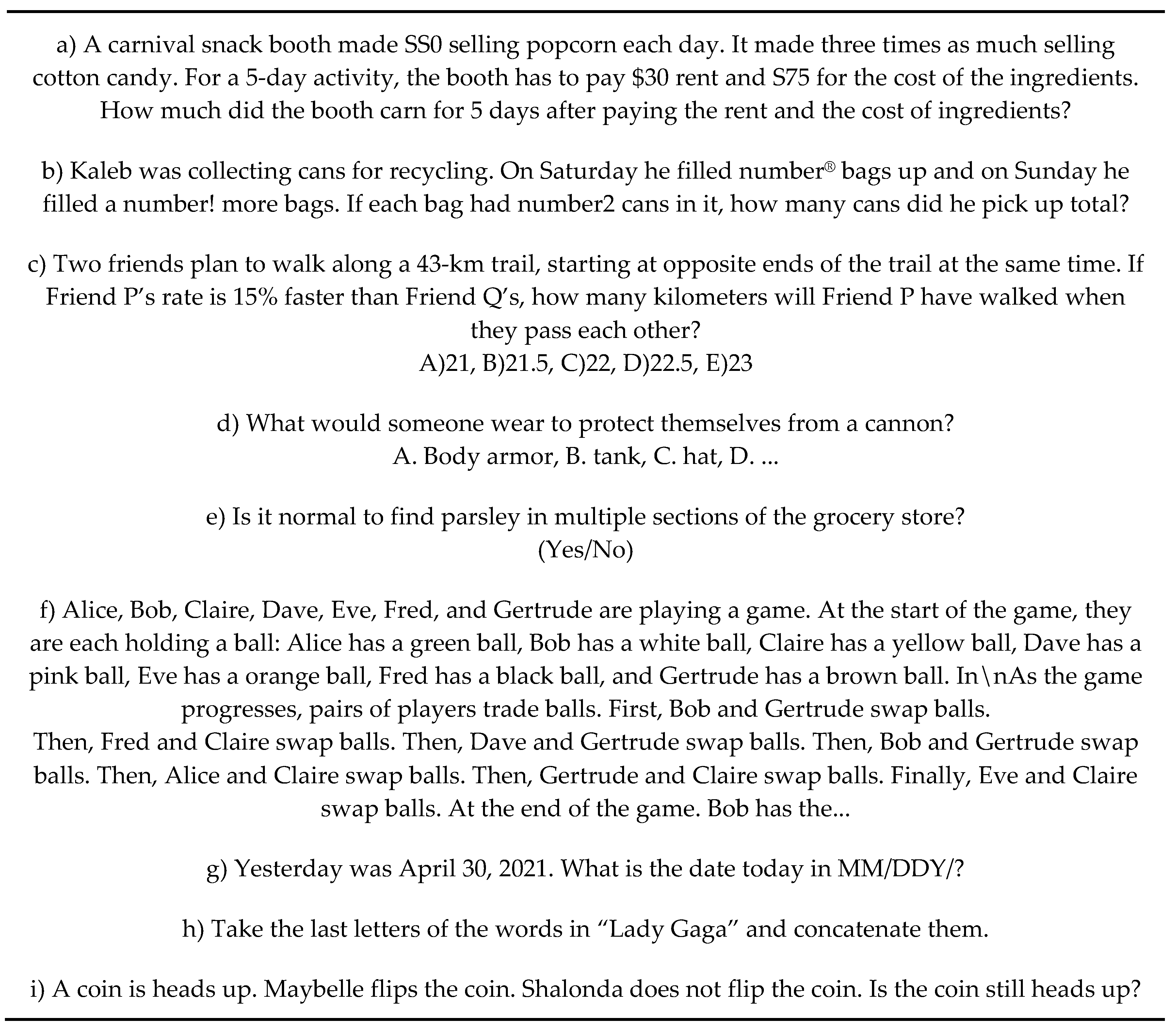

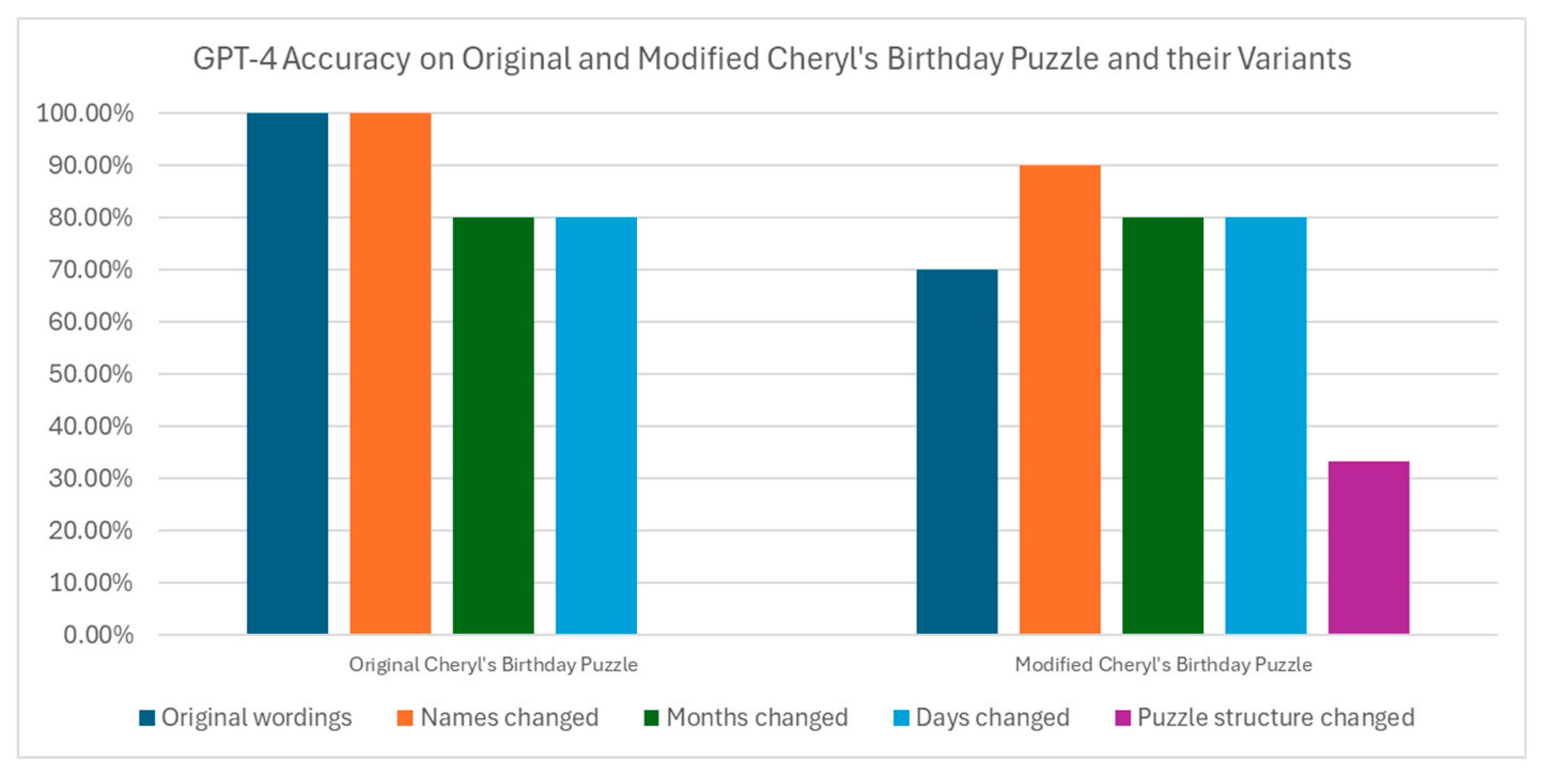

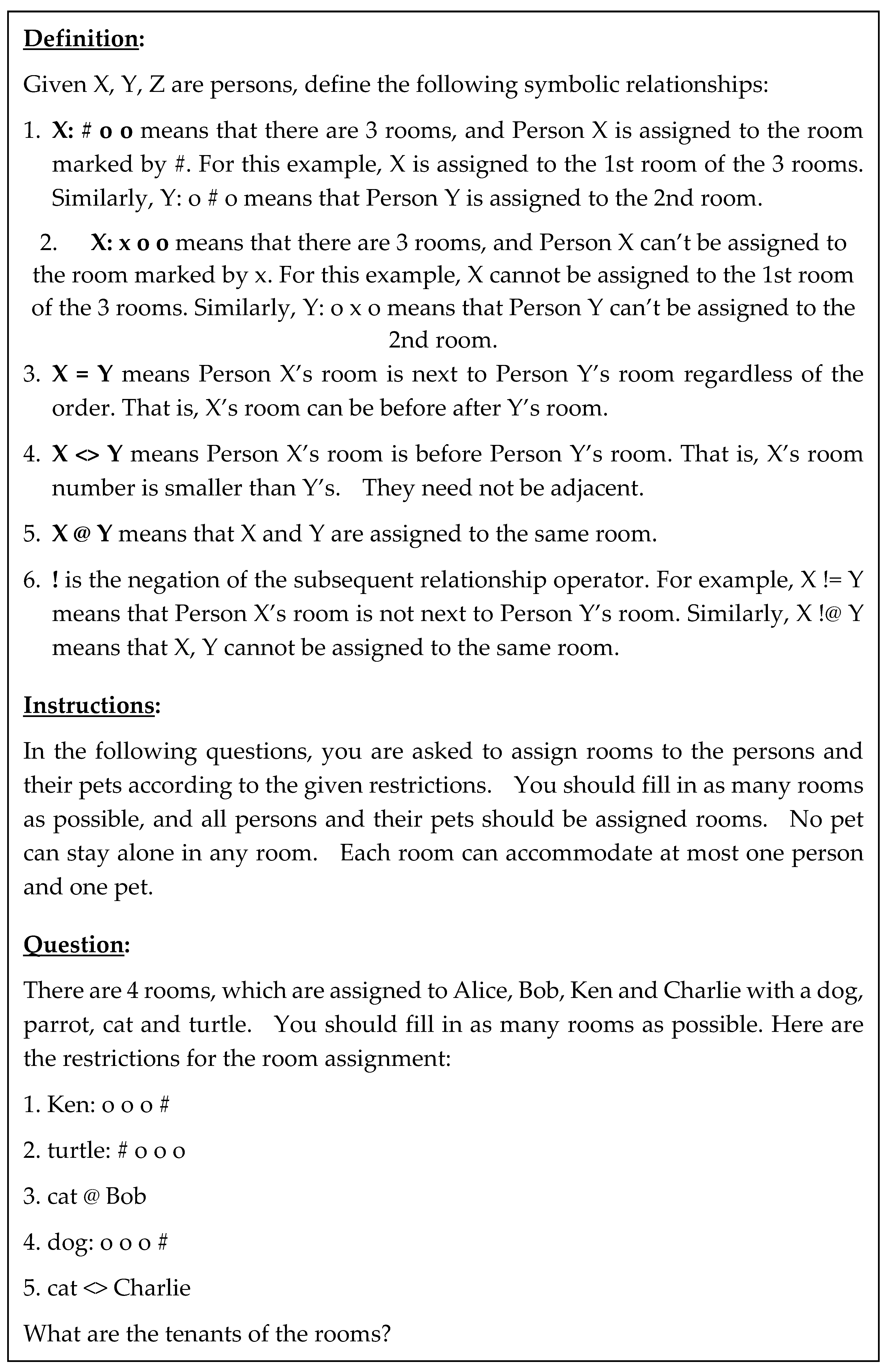

Variants of Cheryl’s Birthday Puzzle used in the Experiments of this Work

In the experiments carried out in this work, both the original and modified versions of Cheryl’s Birthday Puzzle are varied in four ways: (1) the names are changed; (2) the months are changed; (3) the days are changed; and (4) the date topology is changed. The first three changes are trivial. Below are some examples of the date-topology changes used in this work.

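| Month | 14 | 15 | 16 | 17 | 18 | 19 |
|---|---|---|---|---|---|---|
| May |  |  | 16 |  |  | 19 |
| June | 14 |  |  | 17 | 18 |  |
| July |  | 15 | 16 | 17 |  |  |
| August |  | 15 |  |  | 18 |  |

| Month | 14 | 15 | 16 | 17 | 18 | 19 |
|---|---|---|---|---|---|---|
| May |  |  | 16 |  |  | 19 |
| June | 14 |  |  | 17 |  | 19 |
| July |  | 15 | 16 | 17 |  |  |
| August |  | 15 |  |  | 18 |  |

| Month | 14 | 15 | 16 | 17 | 18 | 19 | 23 |
|---|---|---|---|---|---|---|---|
| May |  | 15 |  |  |  | 19 | 23 |
| June |  |  | 16 | 17 | 18 |  |  |
| July | 14 |  |  |  |  | 19 |  |
| August | 14 | 15 |  | 17 |  |  |  |

| Month | 14 | 15 | 16 | 17 | 18 | 19 | 23 |
|---|---|---|---|---|---|---|---|
| May |  | 15 |  |  |  | 19 | 23 |
| June |  |  | 16 |  |  |  | 23 |
| July |  |  | 16 |  | 18 | 19 |  |
| August | 14 |  |  | 17 |  |  |  |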

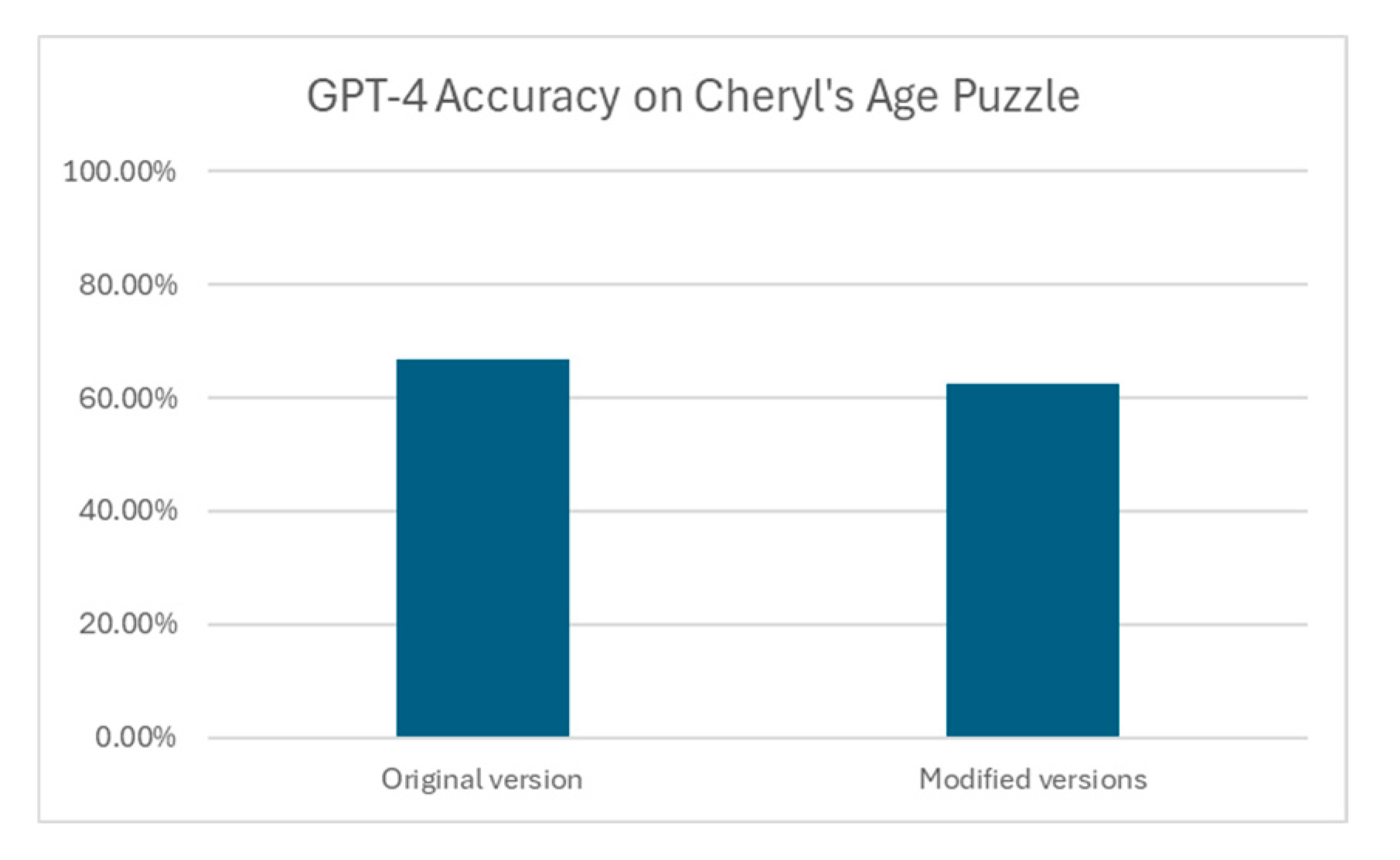
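To check that a changed date topology still yields a single answer, both dialogues can be encoded as filters and run on a new grid. The Python sketch below is our own encoding, not the procedure used in the experiments; the function names, the hypothetical month renaming `RENAME`, and the day shift of 2 are illustrative assumptions. It uses the first date-topology example above and also checks that the "trivial" changes (renaming months, shifting days) only relabel the answer.

```python
from collections import Counter

MONTH, DAY = 0, 1  # positions inside a (month, day) pair

# First date-topology example above, as (month, day) pairs.
VARIANT = [
    ("May", 16), ("May", 19),
    ("June", 14), ("June", 17), ("June", 18),
    ("July", 15), ("July", 16), ("July", 17),
    ("August", 15), ("August", 18),
]


def keep(dates, key, unique):
    """Keep dates whose month or day (key) occurs once (unique=True) or several times."""
    counts = Counter(date[key] for date in dates)
    return [date for date in dates if (counts[date[key]] == 1) == unique]


def solve_original(dates):
    """Albert knows Bernard doesn't know; Bernard now knows; Albert now knows."""
    day_counts = Counter(day for _, day in dates)
    # Drop every month containing a date with a unique day.
    unsafe = {month for month, day in dates if day_counts[day] == 1}
    dates = [date for date in dates if date[MONTH] not in unsafe]
    dates = keep(dates, MONTH, unique=False)  # Albert doesn't know either.
    dates = keep(dates, DAY, unique=True)     # Bernard now knows.
    return keep(dates, MONTH, unique=True)    # Albert now knows.


def solve_modified(dates):
    """Bernard doesn't know; Albert still doesn't; Bernard now knows; Albert now knows."""
    dates = keep(dates, DAY, unique=False)
    dates = keep(dates, MONTH, unique=False)
    dates = keep(dates, DAY, unique=True)
    return keep(dates, MONTH, unique=True)


def relabel(dates, month_map, day_shift):
    """A 'trivial' change: rename the months and shift every day by the same amount."""
    return [(month_map[month], day + day_shift) for month, day in dates]


print(solve_original(VARIANT))  # [('August', 18)]
print(solve_modified(VARIANT))  # [('July', 16)]

# Hypothetical renaming: the answer moves with the labels but stays unique.
RENAME = {"May": "January", "June": "February", "July": "March", "August": "April"}
print(solve_original(relabel(VARIANT, RENAME, 2)))  # [('April', 20)]
```

Under this encoding, the first topology example has a unique answer for both dialogues, and relabeling maps each answer to its relabeled counterpart because a one-to-one renaming preserves which months and days are shared.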

Cheryl’s Age Puzzle

Question:

Cheryl’s Age Puzzle, created by the same team but less well known, reads as follows:

Albert and Bernard now want to know how old Cheryl is.

Cheryl: I have two younger brothers. The product of all our ages (i.e., my age and the ages of my two brothers) is 144, assuming that we use whole numbers for our ages.

Albert: We still don’t know your age. What other hints can you give us?

Cheryl: The sum of all our ages is the bus number of this bus that we are on.

Bernard: Of course, we know the bus number, but we still don’t know your age.

Cheryl: Oh, I forgot to tell you that my brothers have the same age.

Albert and Bernard: Oh, now we know your age.

So, what is Cheryl’s age?

Solution:

The answer is 9.

144 can be decomposed into prime factors ($144 = 2^4 \times 3^2$), and all possible ages for Cheryl and her two brothers can then be examined (for example, 16, 9, 1 or 8, 6, 3), together with the sum of each set of ages.

Because Bernard knows the bus number, and hence the sum, but still cannot determine Cheryl’s age, the sum must be shared by more than one set of possible ages. Examining all the possibilities shows exactly two such pairs: 9, 4, 4 and 8, 6, 3, which both sum to 17, and 12, 4, 3 and 9, 8, 2, which both sum to 19.
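Both collisions are easy to verify: each set of ages multiplies to 144, and the paired sets have equal sums.

$$
\begin{aligned}
9 \times 4 \times 4 &= 8 \times 6 \times 3 = 144, & 9 + 4 + 4 &= 8 + 6 + 3 = 17,\\
12 \times 4 \times 3 &= 9 \times 8 \times 2 = 144, & 12 + 4 + 3 &= 9 + 8 + 2 = 19.
\end{aligned}
$$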

Cheryl then says that her brothers are the same age. Of these four sets, only 9, 4, 4 has two equal brothers’ ages, so the other three are eliminated. Cheryl is therefore 9 years old, her brothers are both 4, and the bus the three of them are on has the number 17.
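The elimination above can be reproduced by brute force. The Python sketch below is our own illustration rather than part of the original solution. It assumes, from the phrase "two younger brothers", that Cheryl is strictly older than both brothers; this excludes sets such as 12, 12, 1 and 6, 6, 4, whose sums are unique anyway, so the answer is unaffected.

```python
from collections import defaultdict
from itertools import count

PRODUCT = 144  # product of the three ages given by Cheryl


def age_triples(product):
    """All (cheryl, brother1, brother2) with the given product, brother1 >= brother2,
    and Cheryl strictly older than both brothers."""
    triples = []
    for b2 in count(1):
        if b2 ** 3 >= product:  # no room left for an older brother1 and Cheryl
            break
        for b1 in count(b2):
            if b1 * b1 * b2 >= product:  # Cheryl would no longer be the oldest
                break
            if product % (b1 * b2) == 0:
                triples.append((product // (b1 * b2), b1, b2))
    return triples


# Group the candidate age sets by their sum (the bus number).
by_sum = defaultdict(list)
for triple in age_triples(PRODUCT):
    by_sum[sum(triple)].append(triple)

# Bernard knows the sum but still not the age, so the sum must be shared.
ambiguous = {s: group for s, group in by_sum.items() if len(group) > 1}
print(ambiguous)  # {19: [(9, 8, 2), (12, 4, 3)], 17: [(8, 6, 3), (9, 4, 4)]}

# Cheryl: "my brothers have the same age".
answer = [t for group in ambiguous.values() for t in group if t[1] == t[2]]
print(answer)  # [(9, 4, 4)]
```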

Modified Cheryl’s Age Puzzle used in the Experiments of this Work

To carry out the experiments in this work, the product of the ages of Cheryl and her two brothers is changed to form new questions. Because few products admit several factorizations with the same sum, the question text is adjusted to suit each new product.
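The difficulty of finding suitable products can be explored with a brute-force search. The Python sketch below is our own illustration, not the procedure used in this work; the function names and the search range `range(2, 301)` are hypothetical choices. For each product it enumerates ages (assuming Cheryl is strictly older than both brothers), keeps only the sums shared by several sets, and accepts the product only if exactly one of those sets has two equal brothers’ ages.

```python
import math
from collections import defaultdict


def age_triples(product):
    """(cheryl, brother1, brother2) with brother1 >= brother2 and Cheryl older than both."""
    triples = []
    for b2 in range(1, product + 1):
        if b2 ** 3 >= product:
            break
        for b1 in range(b2, math.isqrt(product // b2) + 1):
            if b1 * b1 * b2 < product and product % (b1 * b2) == 0:
                triples.append((product // (b1 * b2), b1, b2))
    return triples


def unique_answer(product):
    """Return the single triple that the clues pin down, or None if the puzzle is ill-posed."""
    by_sum = defaultdict(list)
    for triple in age_triples(product):
        by_sum[sum(triple)].append(triple)
    # Bernard still doesn't know after learning the sum: keep only shared sums.
    candidates = [t for group in by_sum.values() if len(group) > 1 for t in group]
    # "My brothers have the same age" must then leave exactly one candidate.
    twins = [t for t in candidates if t[1] == t[2]]
    return twins[0] if len(twins) == 1 else None


def well_posed_products(products):
    """Products for which the dialogue leads to exactly one age for Cheryl."""
    return [p for p in products if unique_answer(p) is not None]


print(well_posed_products(range(2, 301)))
```

For 144 the search returns the set 9, 4, 4, matching the solution above; products whose age sets all have distinct sums, such as 36 under the strict "younger brothers" assumption, are rejected.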