Submitted: 23 August 2024

Posted: 26 August 2024

Abstract

Keywords:

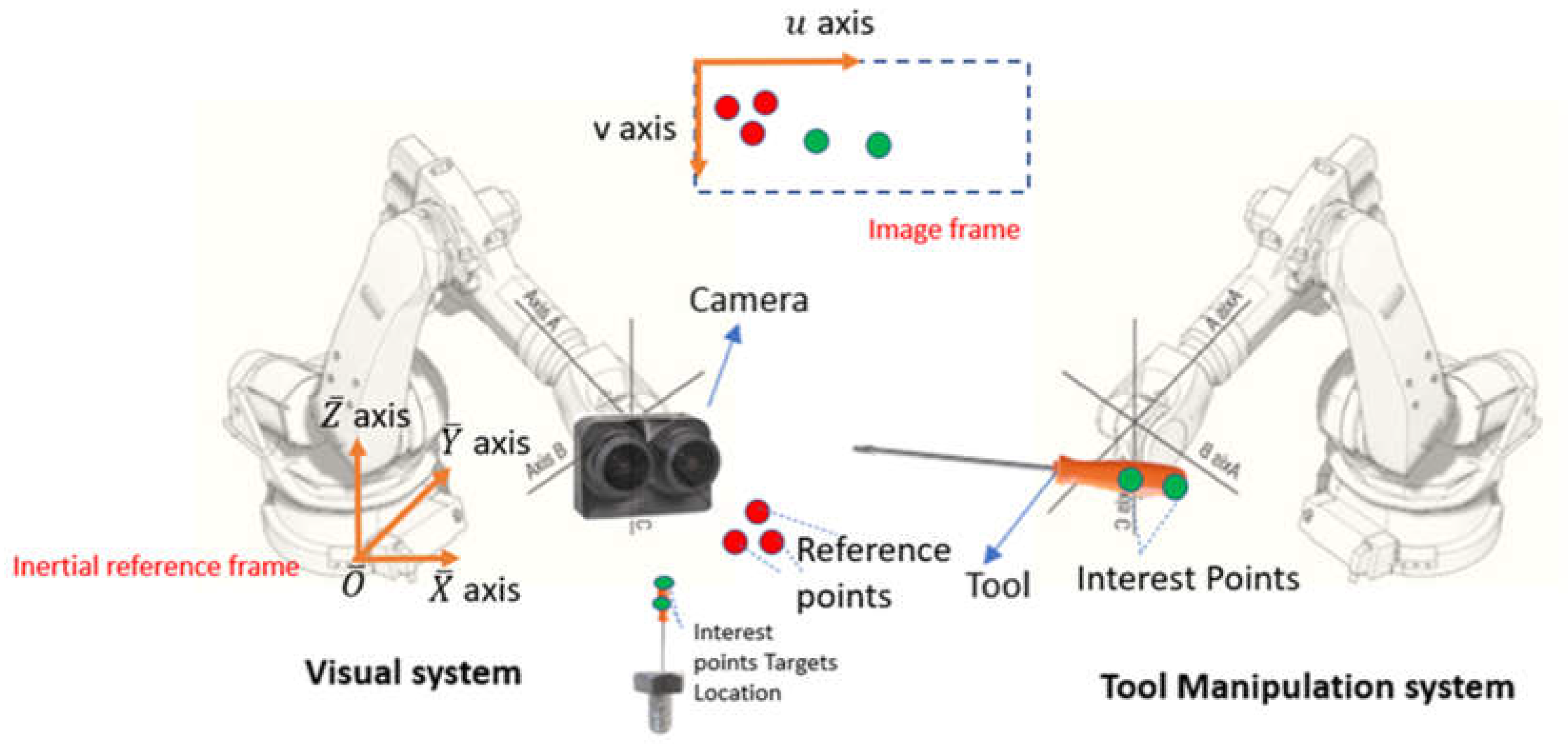

1. Introduction

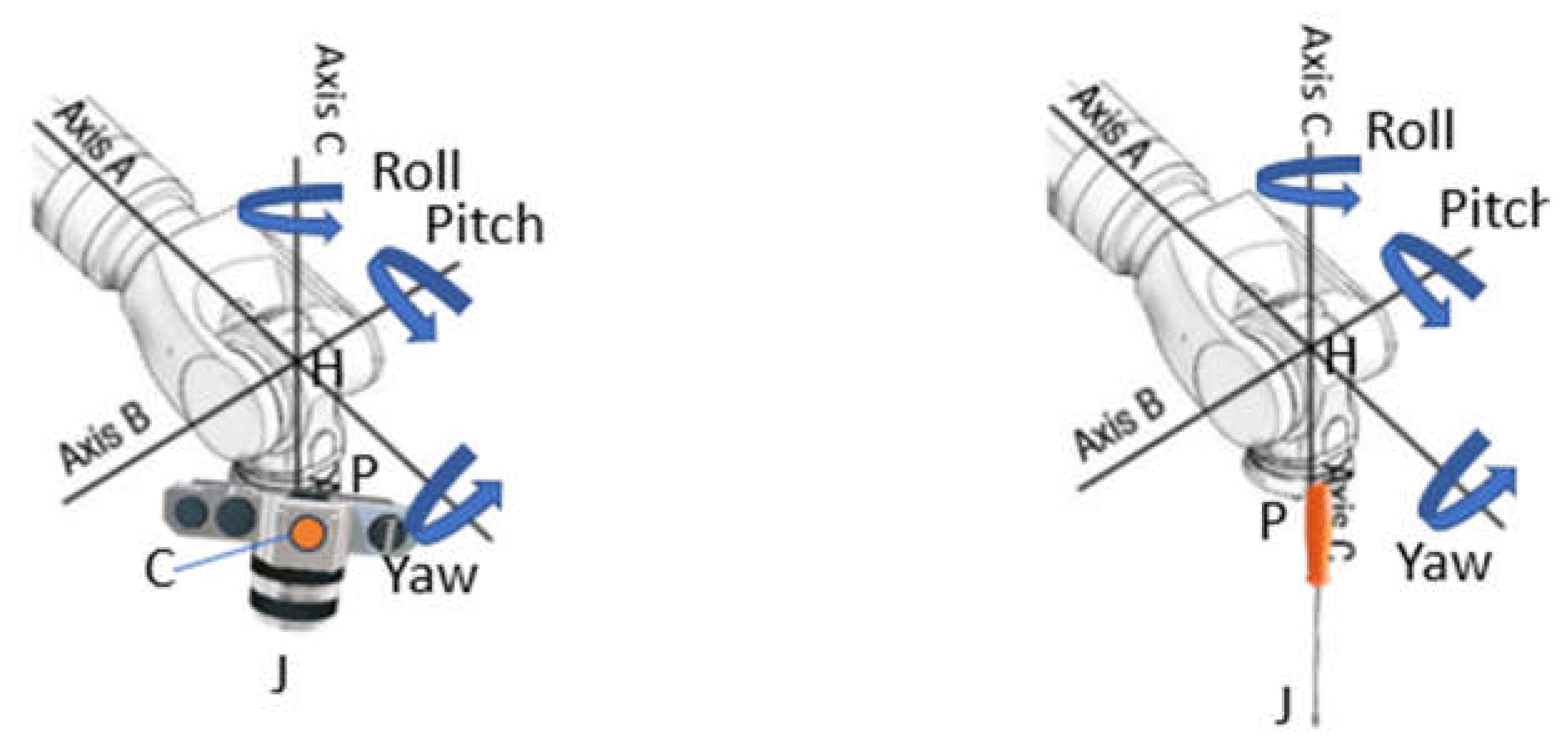

2. Methods

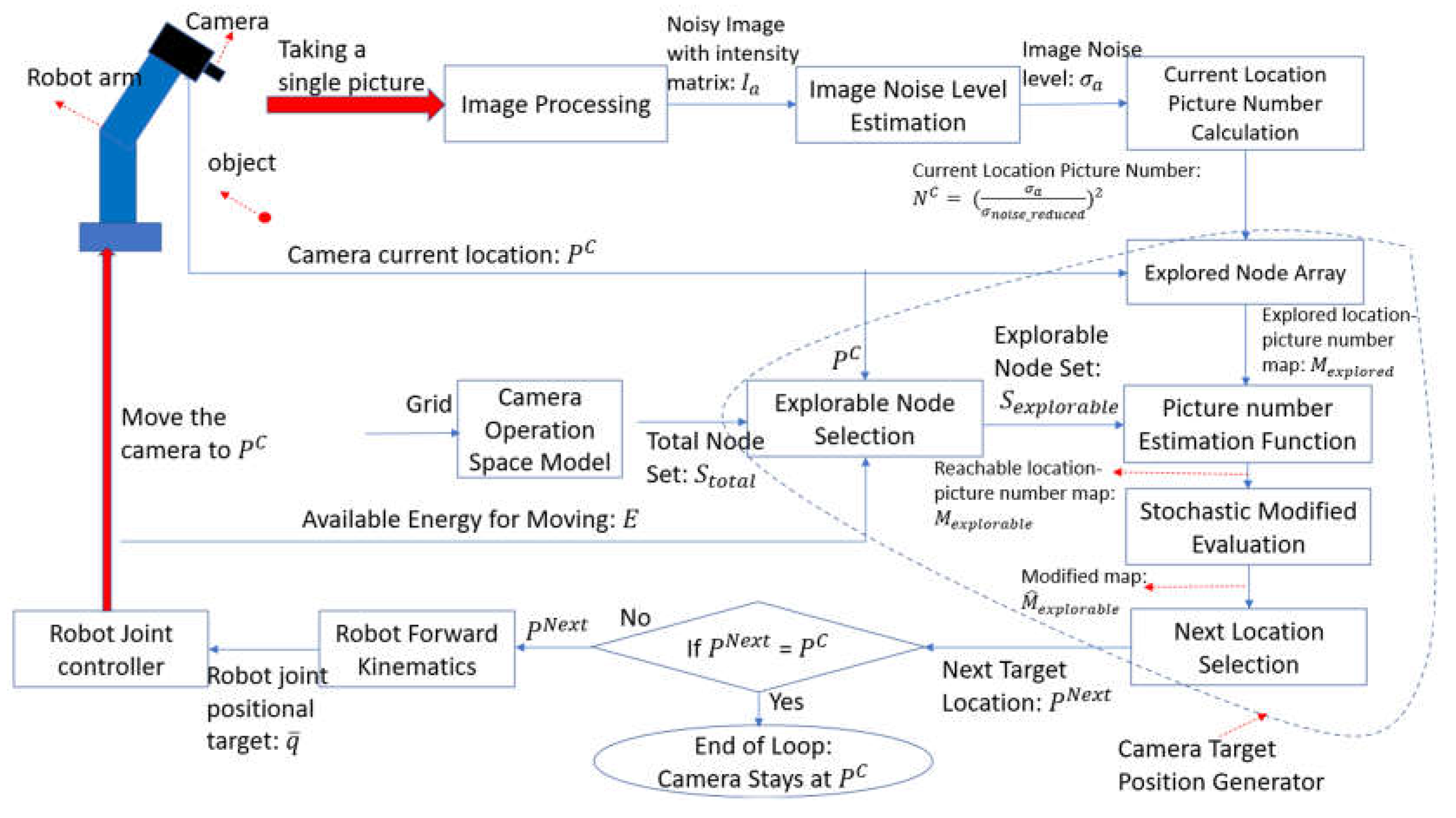

2.1. Camera Location Search Process and Algorithm Flowchart

2.2. Core Algorithm

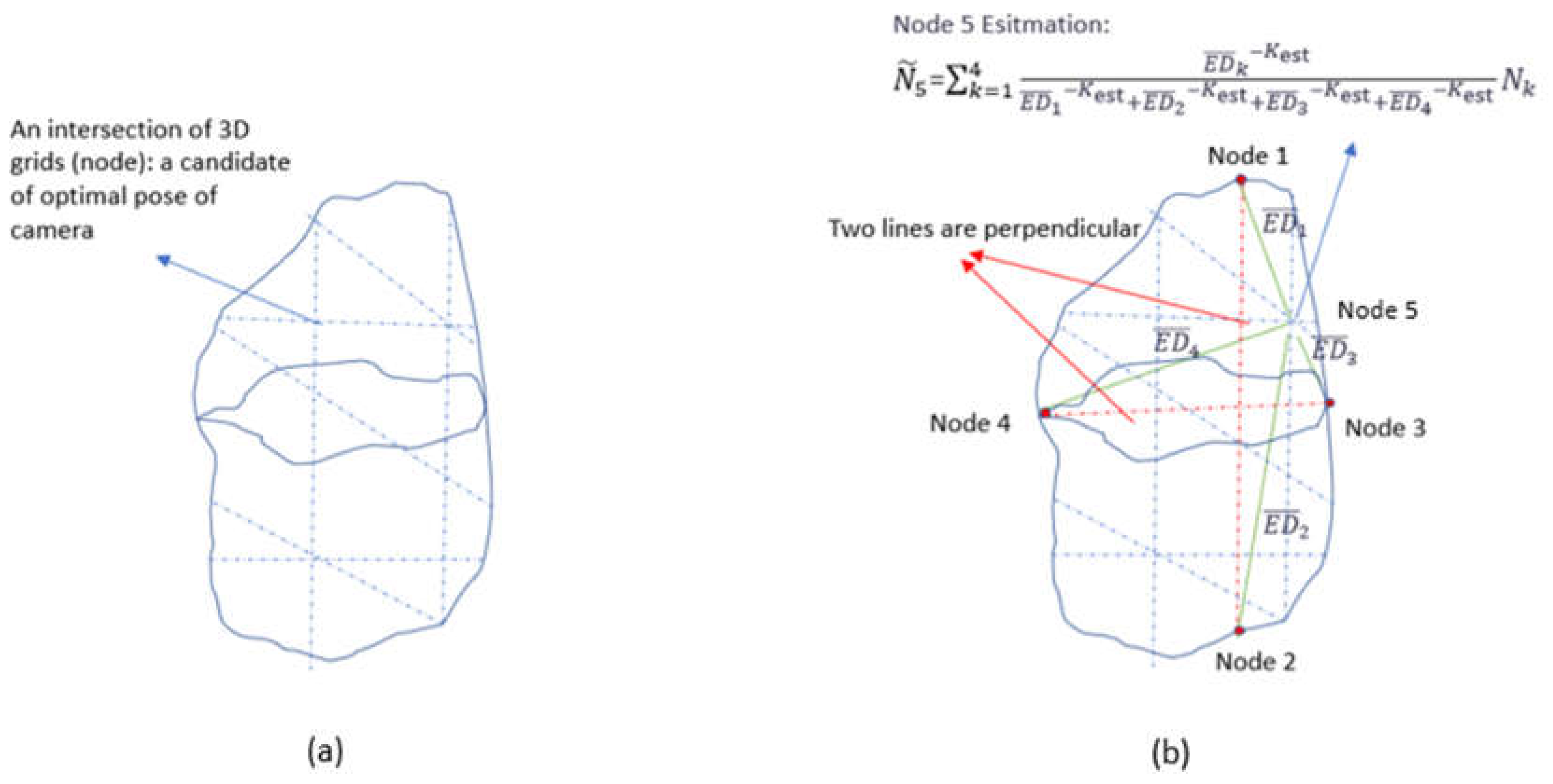

2.2.1. Picture Number Estimation Function

- Find the first two nodes on the boundary of the operational space such that the Euclidean distance between them is the largest.

- Select two other nodes whose connecting line is perpendicular to the previous connecting line and whose Euclidean distance is the largest among all such node pairs.

- Move the camera to each of those four nodes with the proper orientation. Take a single image at each location and estimate the number of pictures required there. Save all four nodes together with their estimated values.
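The node-selection steps above can be sketched as follows. This is only a minimal illustration, assuming the boundary nodes are available as a list of 2D or 3D coordinates. The function name `select_initial_nodes` and the perpendicularity tolerance `tol` are hypothetical and do not come from the paper.

```python
import itertools
import numpy as np

def select_initial_nodes(boundary, tol=1e-6):
    """Pick two node pairs: the farthest pair, then the farthest pair
    whose connecting line is perpendicular to the first one.
    Assumes at least two distinct boundary nodes (hypothetical helper)."""
    pts = np.asarray(boundary, dtype=float)
    pairs = list(itertools.combinations(range(len(pts)), 2))

    # First pair: largest Euclidean distance over all boundary pairs.
    first = max(pairs, key=lambda p: np.linalg.norm(pts[p[0]] - pts[p[1]]))
    d1 = pts[first[1]] - pts[first[0]]
    d1 /= np.linalg.norm(d1)

    # Second pair: longest line perpendicular to the first, using other nodes.
    second, best_len = None, -1.0
    for i, j in pairs:
        if {i, j} & set(first):
            continue  # "other two nodes": skip nodes already selected
        d2 = pts[j] - pts[i]
        length = np.linalg.norm(d2)
        if length == 0.0:
            continue
        if abs(np.dot(d1, d2 / length)) <= tol and length > best_len:
            second, best_len = (i, j), length
    return first, second

first, second = select_initial_nodes(
    [(-2, 0), (2, 0), (0, -1), (0, 1), (1, 0.5)]
)
```

The function returns index pairs into the boundary list, and `second` is `None` when no perpendicular pair exists within the tolerance. On a discretized boundary, exact perpendicularity is rare, so a looser `tol` may be needed in practice.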

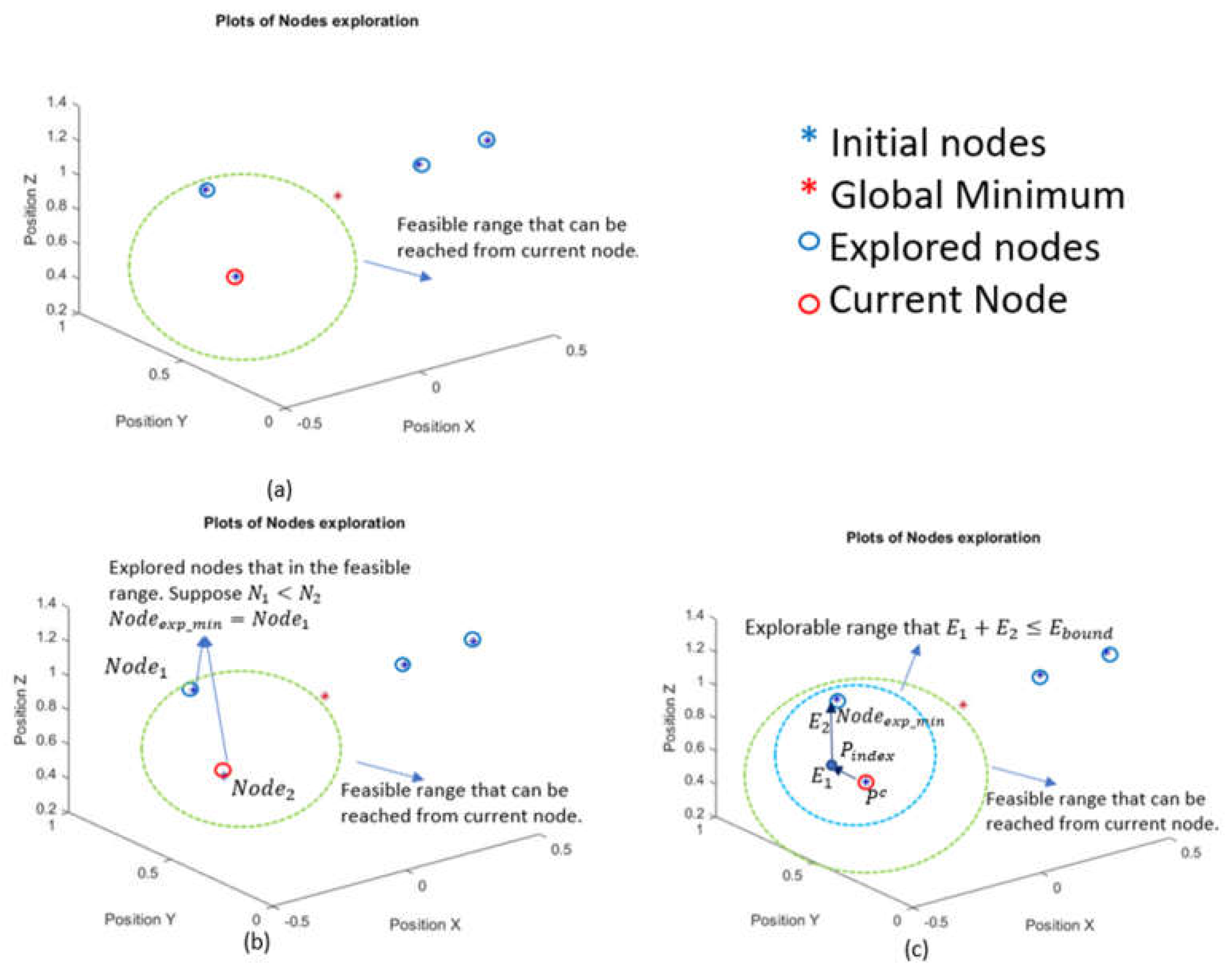

2.2.2. Explorable Node Selection

1. With the current energy bound, find all nodes in the space that can be reached from the current node. In other words, the feasible set contains every node whose estimated energy cost from the current node does not exceed the energy bound.

2. When the available energy is low, steps 2 and 3 are also applied in finding the explorable set. In the feasible set, look at all explored nodes and find the one that has the smallest number of pictures; that is, take the minimum over the intersection of the feasible set and the set of all explored nodes.

3. The estimation function may not give accurate results for some unexplored nodes, so in some iterations the algorithm may leave the camera at a node that requires a large number of pictures. The algorithm therefore ensures that, in the worst case, it retains enough energy to return to the best explored node (the one with the minimum number of pictures) when the available energy is low. This further reduces the feasible set to the explorable set.
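The three steps above can be sketched as below. This is a rough illustration under stated assumptions, not the authors' implementation: the names `explorable_set`, `low_energy`, and `energy_cost` are hypothetical, the Euclidean distance (`math.dist`) stands in for the paper's estimated energy cost function, and falling back to the feasible set when no explored node is reachable is an assumption of this sketch.

```python
import math

def explorable_set(nodes, current, energy, explored, low_energy, energy_cost):
    """Return the nodes the camera may move to next.

    nodes:       iterable of node positions (tuples)
    explored:    dict mapping explored node -> estimated number of pictures
    low_energy:  hypothetical threshold below which steps 2 and 3 apply
    energy_cost: callable (a, b) -> estimated energy to move from a to b
    """
    # Step 1: feasible nodes are reachable within the current energy bound.
    feasible = [n for n in nodes if energy_cost(current, n) <= energy]
    if energy > low_energy:
        return feasible

    # Step 2: best explored node (fewest pictures) inside the feasible set.
    candidates = [n for n in feasible if n in explored]
    if not candidates:
        return feasible  # assumption: nothing to return to yet
    best = min(candidates, key=lambda n: explored[n])

    # Step 3: keep nodes from which the camera can still return to `best`.
    return [
        n for n in feasible
        if energy_cost(current, n) + energy_cost(n, best) <= energy
    ]

nodes = [(0, 0), (1, 0), (2, 0), (3, 0)]
explored = {(0, 0): 5, (1, 0): 9}
low = explorable_set(nodes, (1, 0), 2.0, explored, 3.0, math.dist)
high = explorable_set(nodes, (1, 0), 10.0, explored, 3.0, math.dist)
```

With ample energy (`high`) every feasible node remains explorable. With low energy (`low`) only the nodes that still allow a return trip to the best explored node are kept.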

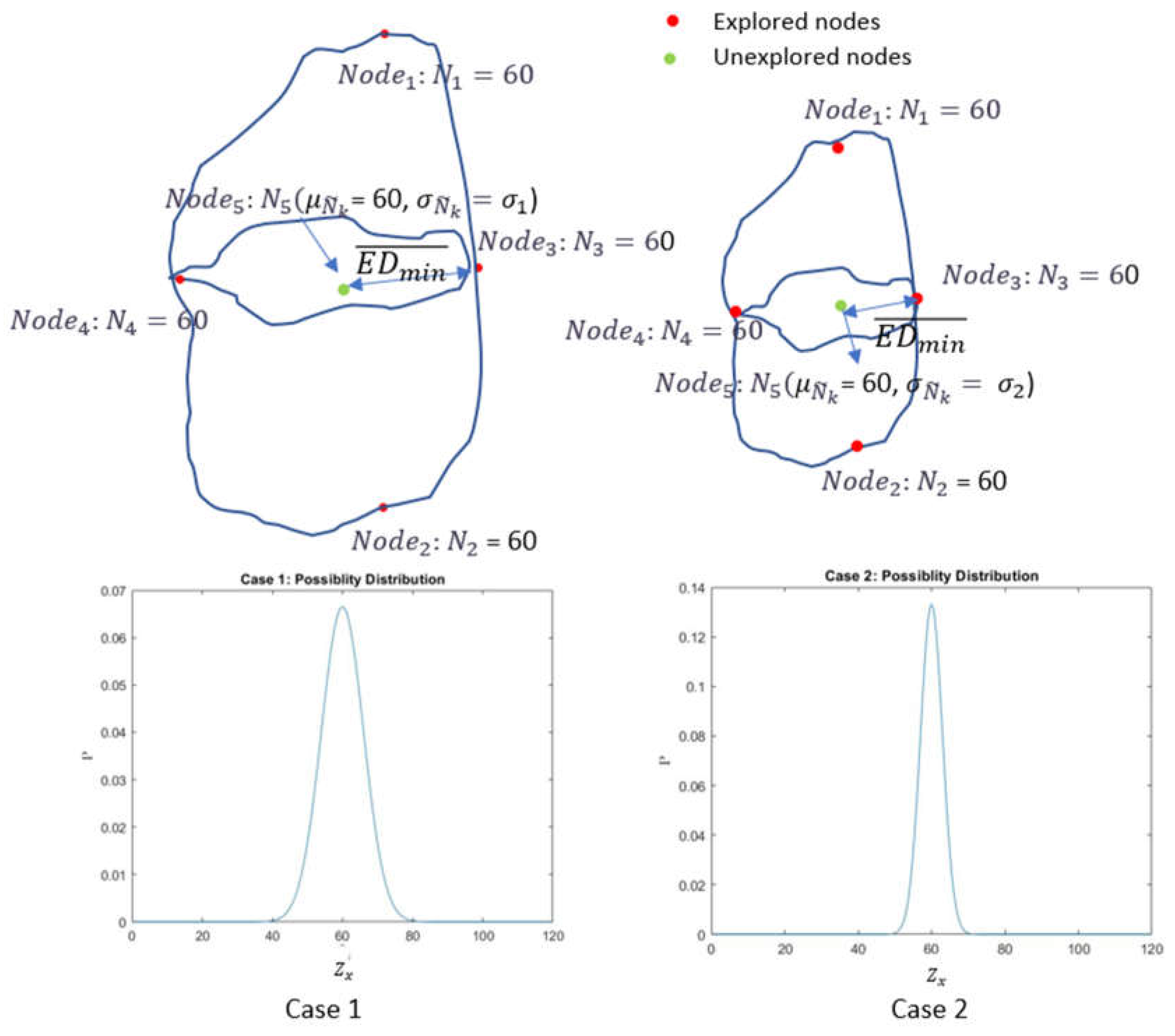

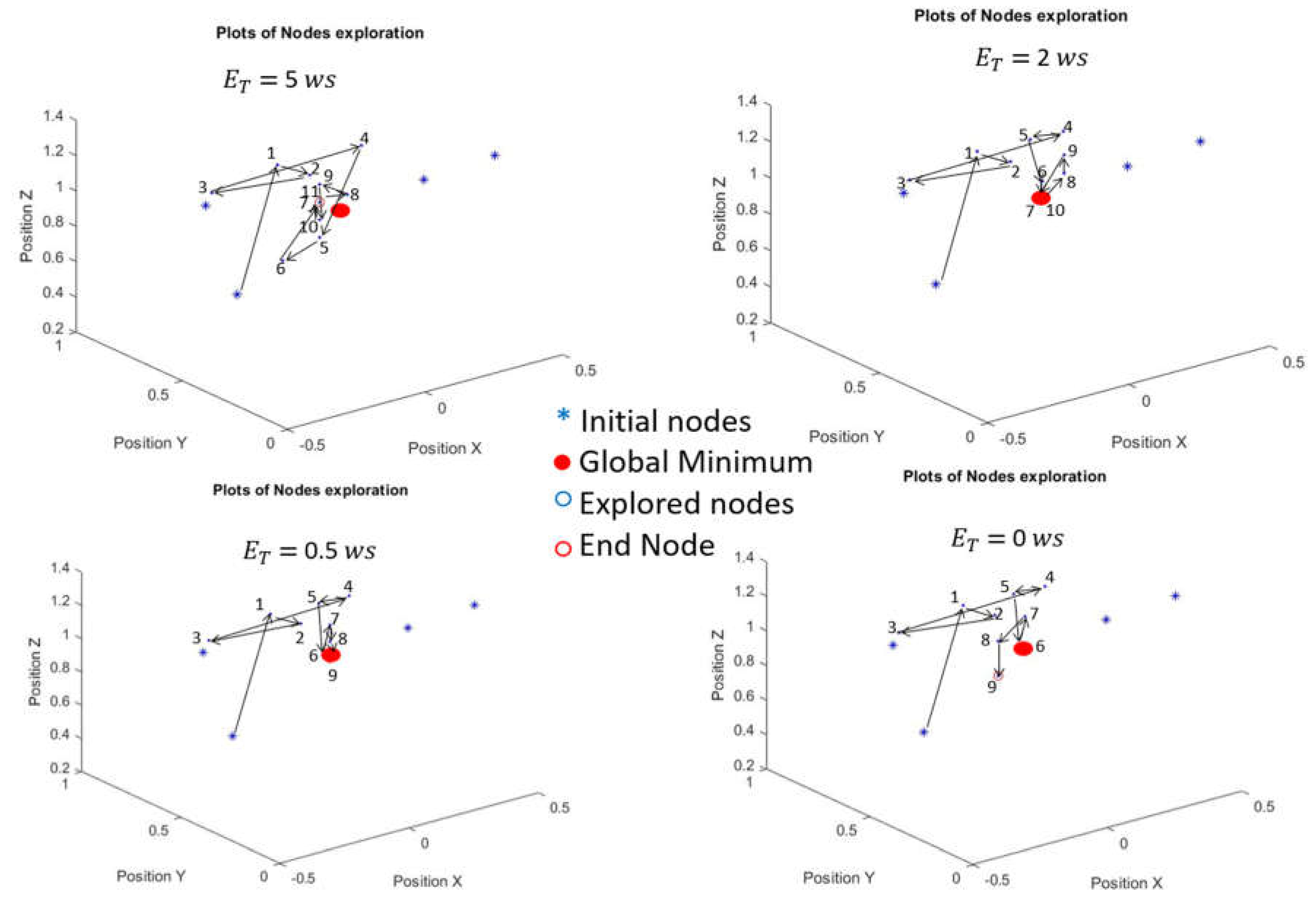

2.2.3. Stochastic Modified Evaluation

2.2.4. Estimated Energy Cost Function

- Select a small parameter value for a conservative algorithm that searches a small area but ensures that it ends at the minimum explored so far.

- Select a large parameter value for an aggressive algorithm that searches a large area but risks not ending at the minimum explored so far.

3. Results and Discussion

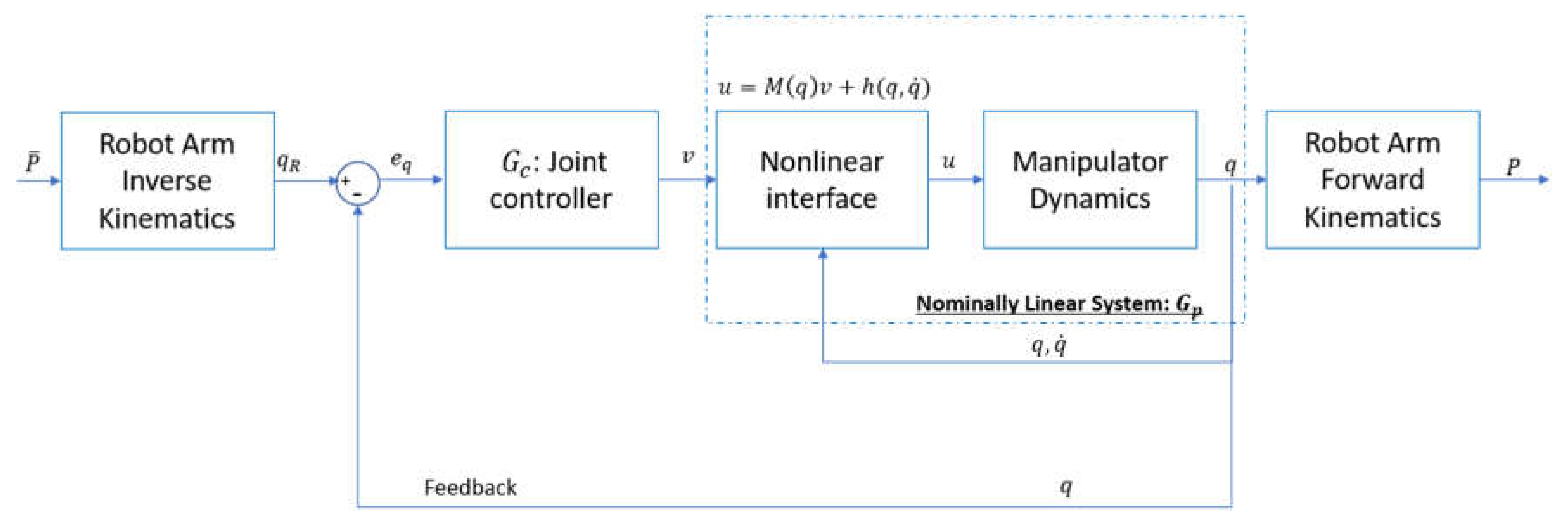

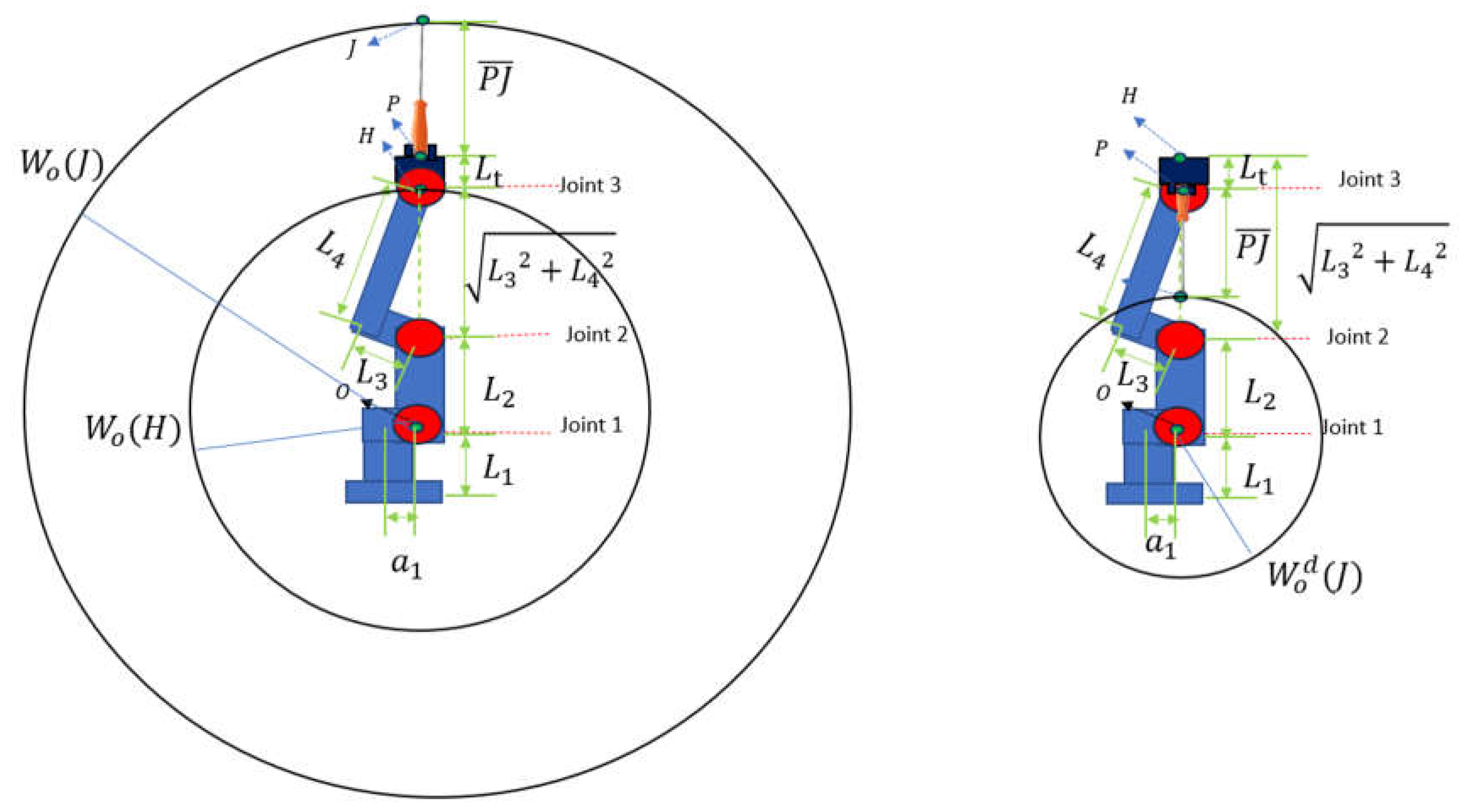

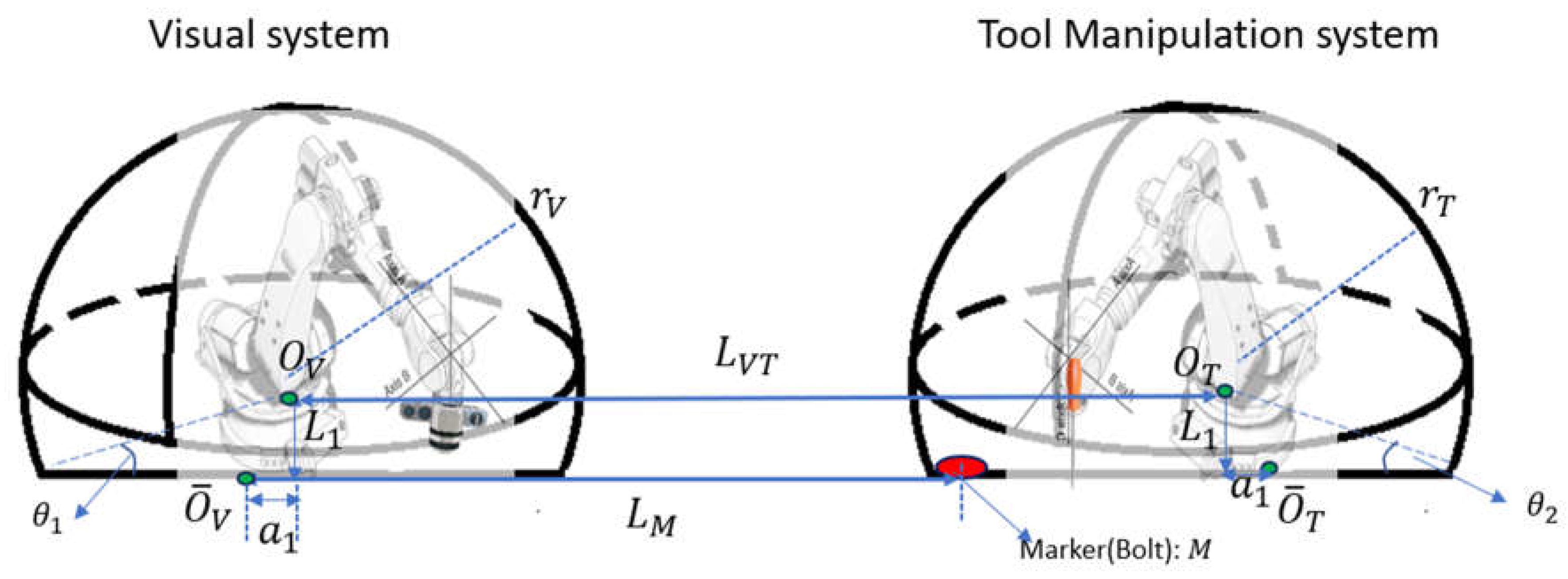

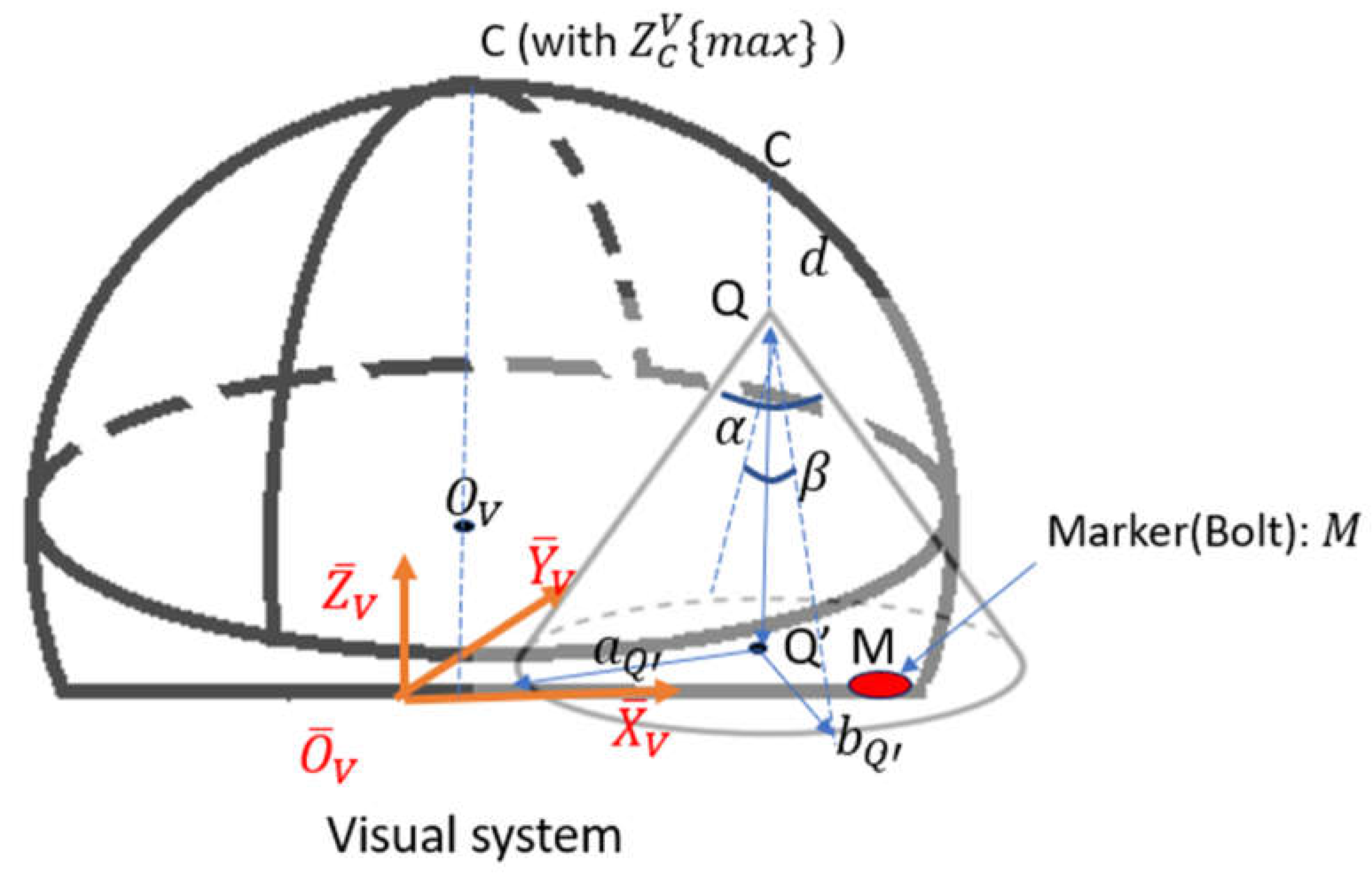

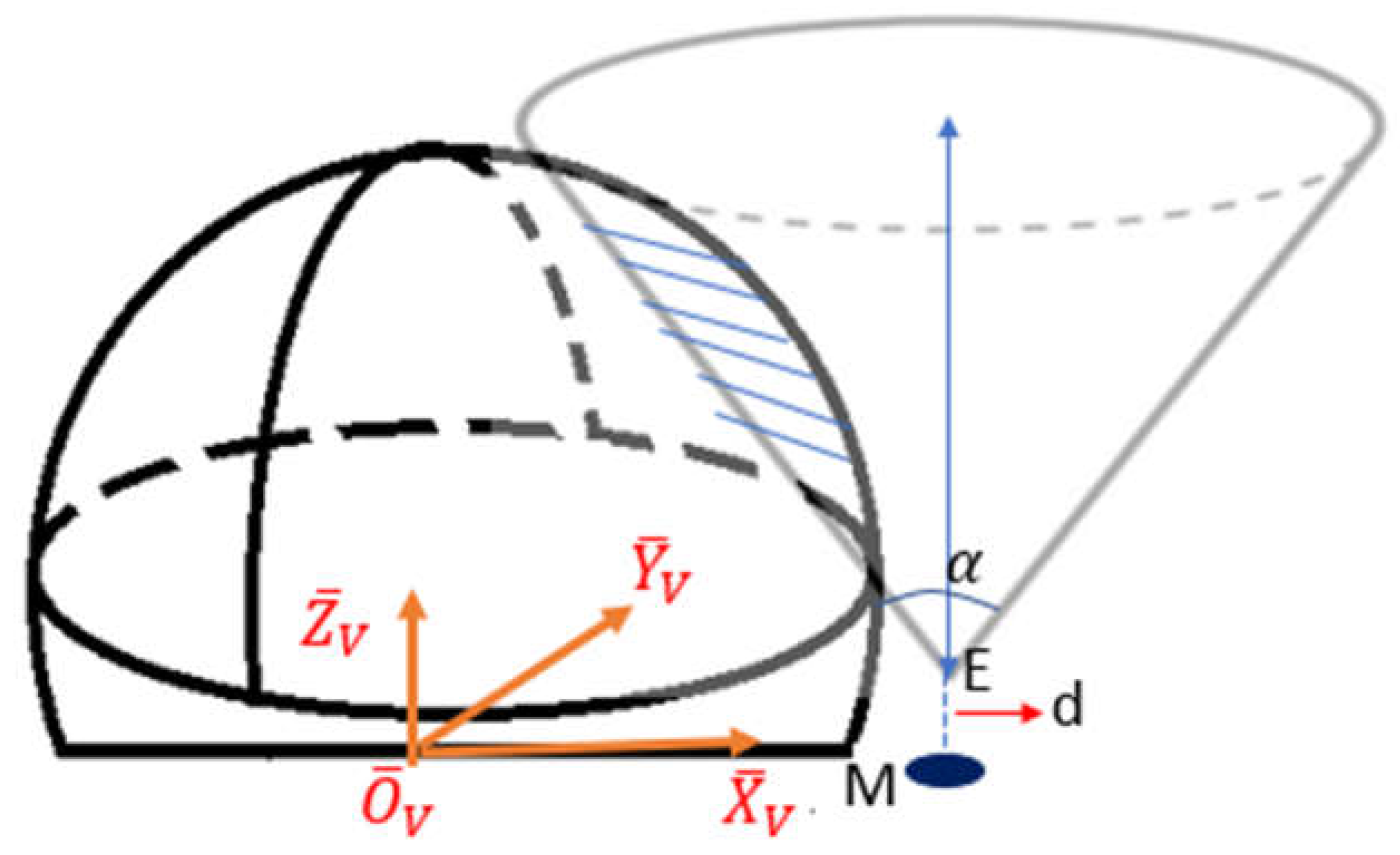

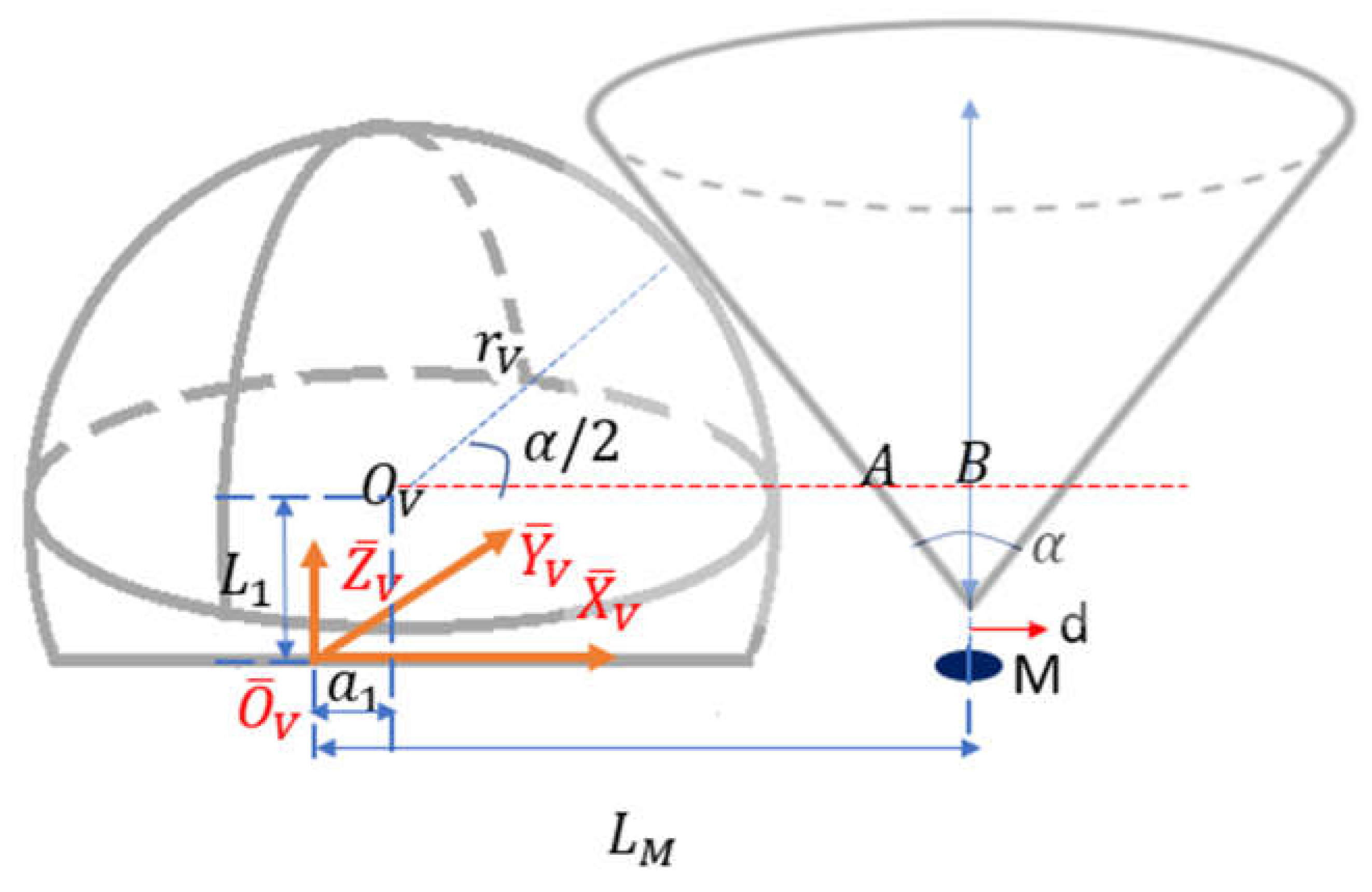

3.1. Camera Operational Space

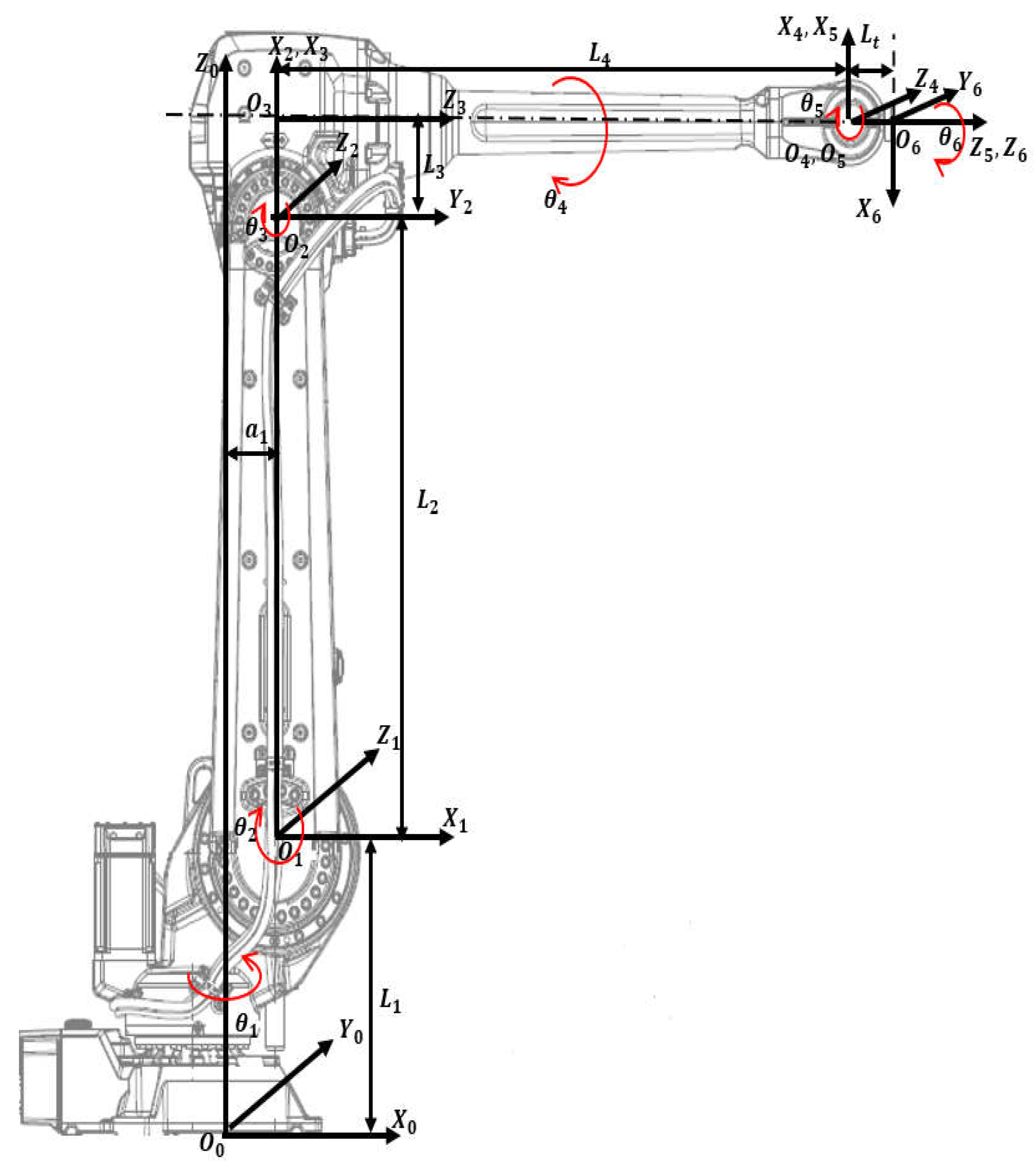

3.1.1. Reachable and Dexterous Workspace of Two-Hybrid Systems

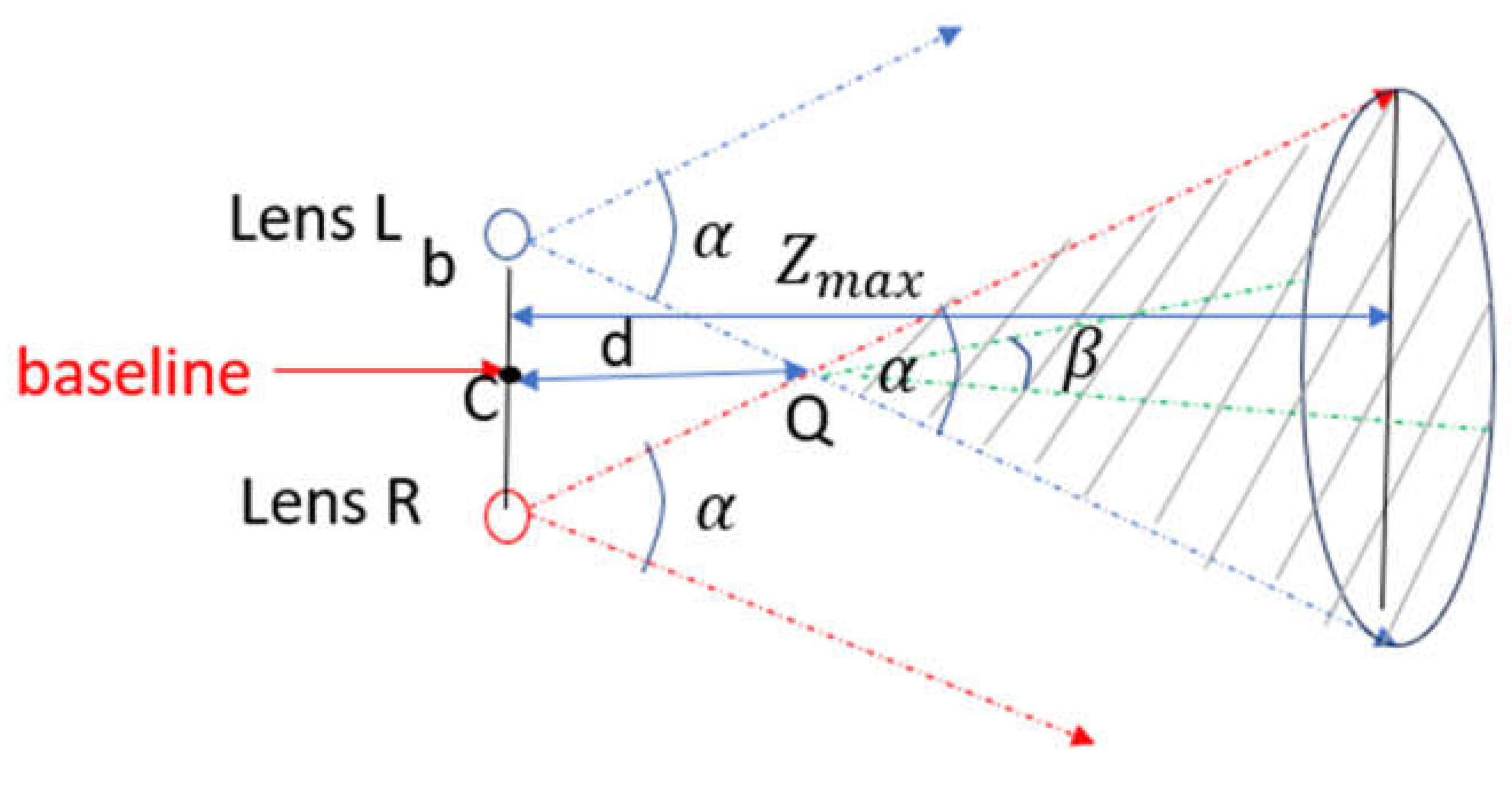

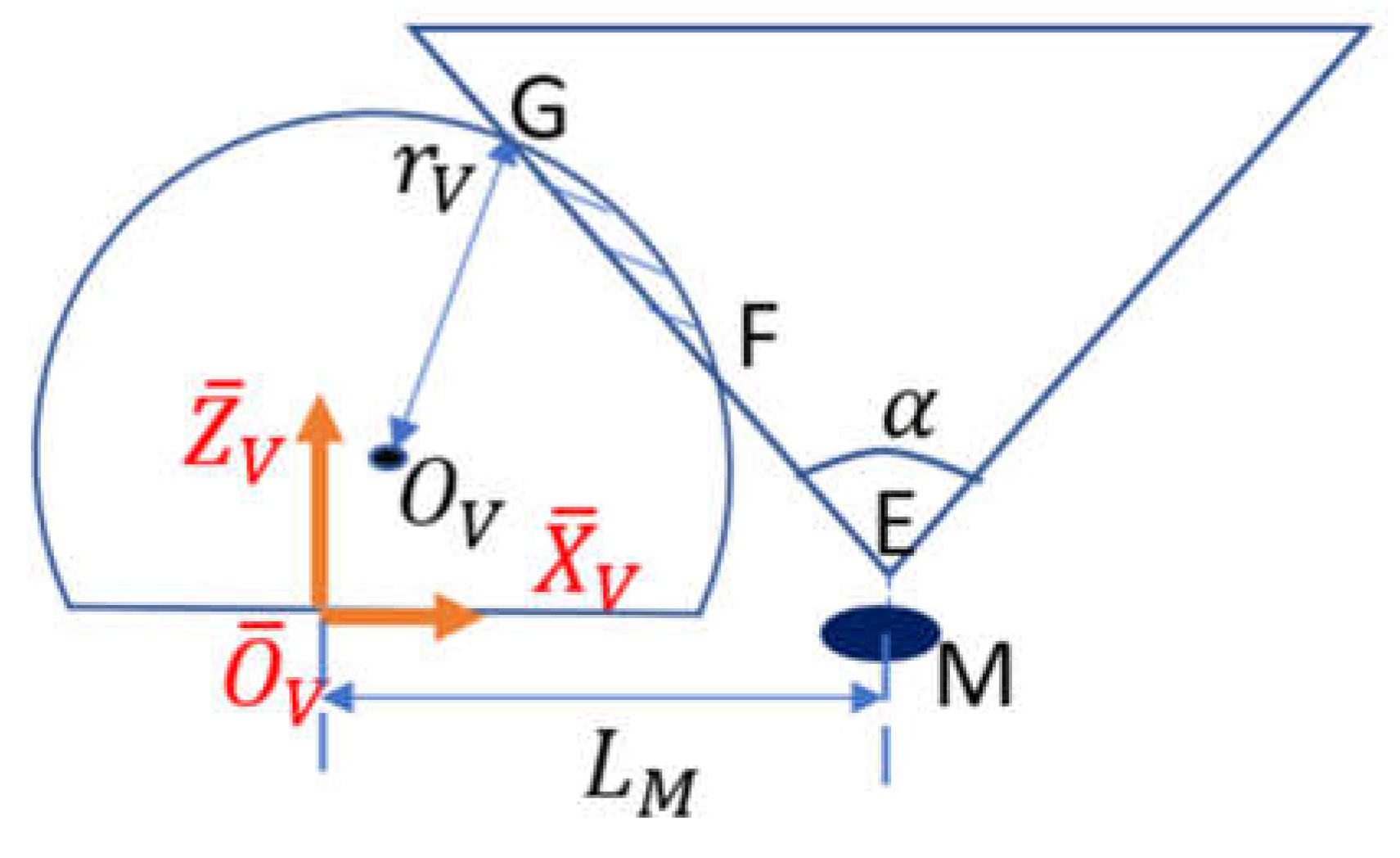

3.1.2. Detectable Space for the Stereo Camera

3.1.3. Camera Operational Space Development

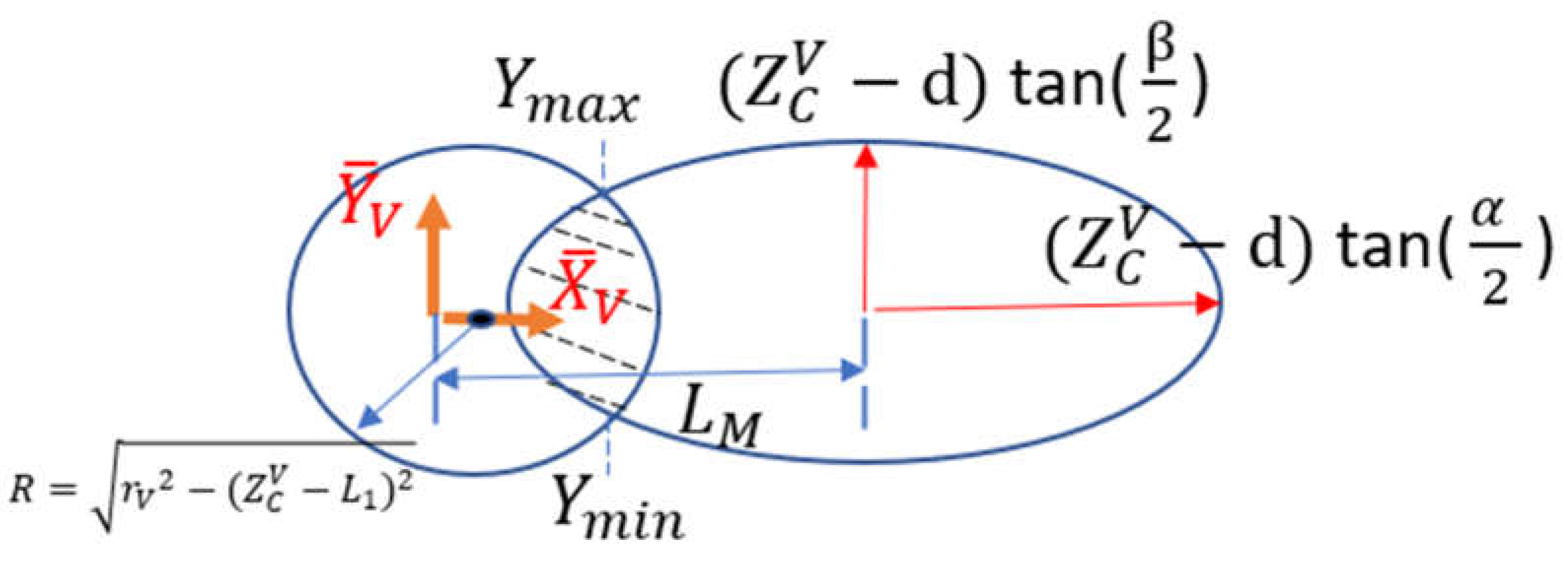

3.1.4. Mathematical Expression for Node Coordinates Within the Camera Operational Space

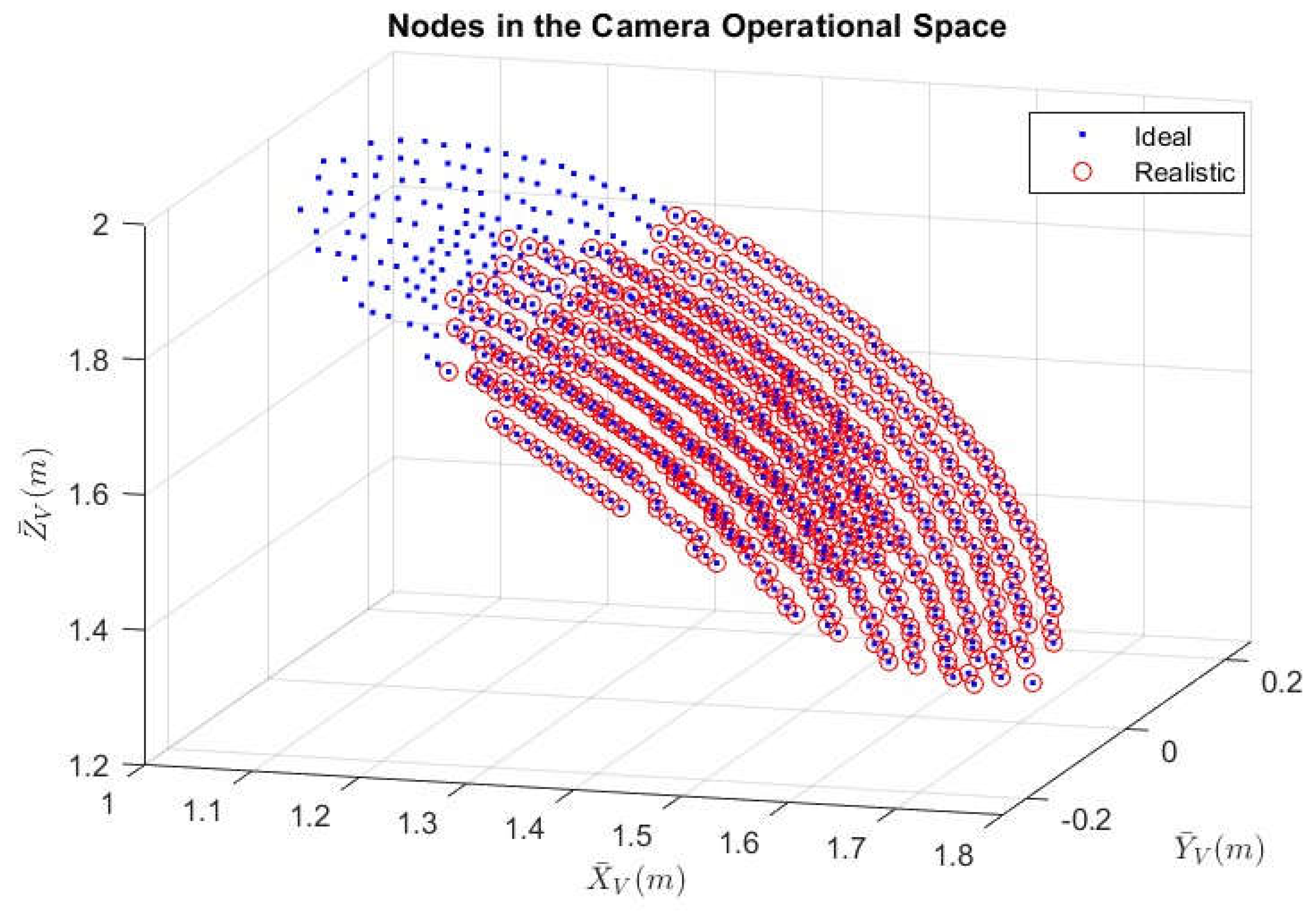

3.1.5. Numerical Solution of the Ideal Camera Operation Space

3.1.6. The Camera Operational Space from the Realistic Robot Manipulator

3.2. Simulations of Scenarios

4. Conclusion

Appendix A

| Parameters | Values |
|---|---|
| Length of Link 1: | 495 mm |
| Length of Link 2: | 900 mm |
| Length of Link 3: | 175 mm |
| Length of Link 4: | 960 mm |
| Length of Link 1 offset: | 175 mm |
| Length of Spherical wrist: | 135 mm |
| Tool length (screwdriver): | 127 mm |

| Axis Movement | Working range |
|---|---|
| Axis 1 rotation | +180° to -180° |
| Axis 2 arm | +150° to -90° |
| Axis 3 arm | +75° to -180° |
| Axis 4 wrist | +400° to -400° |
| Axis 5 bend | +120° to -125° |
| Axis 6 turn | +400° to -400° |

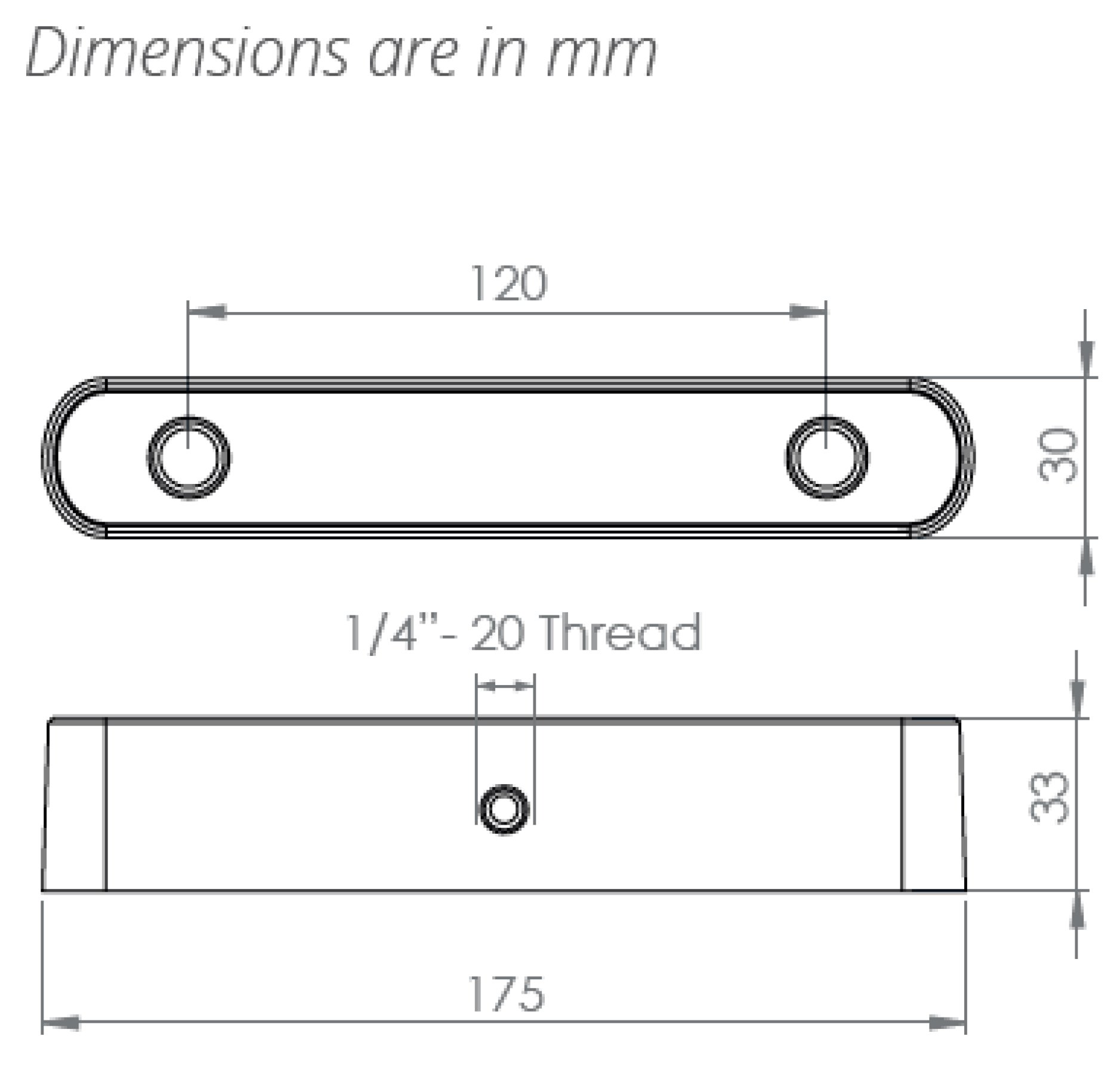

| Parameters | Values |
|---|---|
| Focal length: f | 2.8 mm |
| Baseline: B | 120 mm |
| Weight: W | 170 g |
| Depth range: | 0.5 m to 25 m |
| Diagonal Sensor Size: | 6 mm |
| Sensor Format: | 16:9 |
| Sensor Size: W × H | 5.23 mm × 2.94 mm |
| Angle of view in width: | |
| Angle of view in height: | 55.35° |
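The angles of view in the table can be related to the sensor size and focal length with the standard pinhole-camera formula, 2·atan(d / (2f)). The sketch below is only an illustrative check under that pinhole assumption; the helper name `angle_of_view_deg` is hypothetical.

```python
import math

def angle_of_view_deg(sensor_dim_mm, focal_length_mm):
    """Pinhole-model angle of view, 2 * atan(d / (2 f)), in degrees."""
    return math.degrees(2.0 * math.atan(sensor_dim_mm / (2.0 * focal_length_mm)))

# Sensor size and focal length taken from the camera parameter table.
horizontal = angle_of_view_deg(5.23, 2.8)  # along the sensor width
vertical = angle_of_view_deg(2.94, 2.8)    # along the sensor height
print(f"width: {horizontal:.2f} deg, height: {vertical:.2f} deg")
```

Under this model the vertical angle comes out close to the tabulated 55.35°. The small difference is consistent with rounding of the sensor dimensions.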

| Parameters | Values |
|---|---|
| DC Motor | |
| Armature Resistance: | 0.03 Ω |
| Armature Inductance: | 0.1 mH |
| Back emf Constant: | 7 mV/rpm |
| Torque Constant: | 0.0674 N·m/A |
| Armature Moment of Inertia: | 0.09847 kg·m² |
| Gear | |
| Gear ratio: | 200:1 |
| Moment of Inertia: | 0.05 kg·m² |
| Damping ratio: | 0.06 |

Appendix B

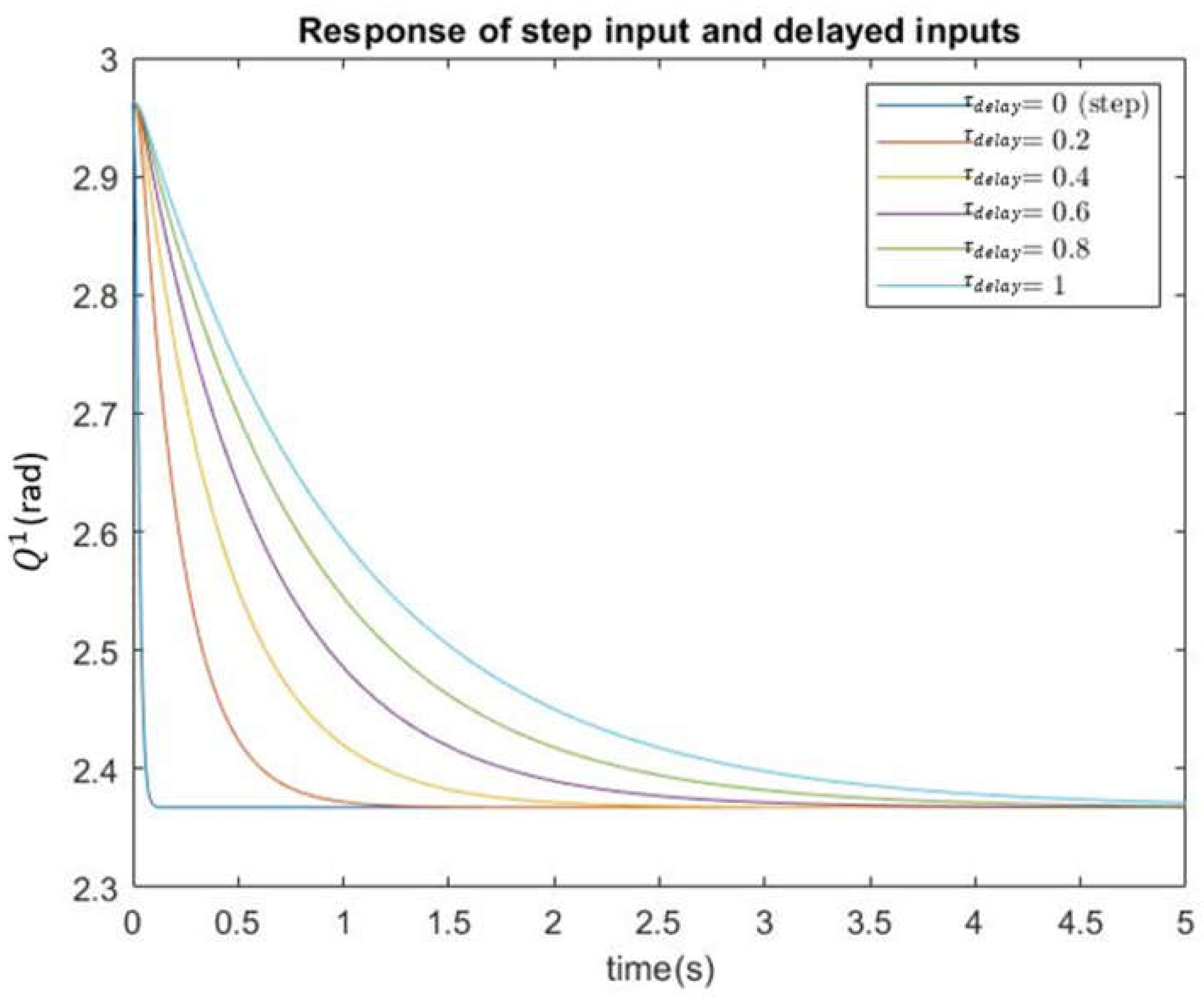

| [s] | [ws] | |
|---|---|---|
| 0 | 10.895 | 0.07 |
| 0.2 | 0.102 | 0.73 |
| 0.4 | 0.030 | 1.42 |
| 0.6 | 0.016 | 2.11 |
| 0.8 | 0.011 | 2.80 |
| 1 | 0.009 | 3.49 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).