Submitted:

23 August 2024

Posted:

26 August 2024


Abstract

Keywords:

I. Introduction

II. State of the Art

III. Materials and Methods

A. Data Analysis

- 1) Dataset Preprocessing

B. Evaluation Metrics

IV. Experimental Analysis

A. Test Cross-Validation

V. Result Analysis

A. Binary Classification Results

B. Multi-Class Classification Results

VI. Conclusion and Future Works

Funding

Data Availability

Conflict of Interest

Code Availability

References



| Transformation | Operation | Parameters |
|---|---|---|
| Geometric | Inversion | Horizontal |
| Geometric | Rotation | [-5°, 5°] |
| Geometric | Translation | X axis: [-7%, 7%] |
| Geometric | Translation | Y axis: [-3%, 3%] |
| Gray-scale level | Brightness | [-10%, 40%] |
| Gray-scale level | Contrast | [-10%, 40%] |
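The augmentation table can be read as a sampling procedure. The sketch below is a minimal pure-Python illustration under stated assumptions: each parameter is drawn uniformly from its range, horizontal inversion is applied with probability 0.5, and the brightness and contrast percentages are treated as multiplicative factors on gray levels in [0, 1]. The names `sample_augmentation`, `hflip` and `adjust_gray_levels` are hypothetical, not taken from the authors' code. Rotation and translation are only sampled here, because applying them needs an interpolation routine from an imaging library.

```python
import random

# Ranges transcribed from the augmentation table (percentages stored as fractions).
ROTATION_DEG = (-5.0, 5.0)
TRANSLATE_X = (-0.07, 0.07)  # fraction of image width
TRANSLATE_Y = (-0.03, 0.03)  # fraction of image height
BRIGHTNESS = (-0.10, 0.40)
CONTRAST = (-0.10, 0.40)
HFLIP_PROB = 0.5  # assumption: the source does not give a flip probability


def sample_augmentation(rng: random.Random) -> dict:
    """Draw one set of augmentation parameters uniformly within the table's ranges."""
    return {
        "hflip": rng.random() < HFLIP_PROB,
        "rotation_deg": rng.uniform(*ROTATION_DEG),
        "tx": rng.uniform(*TRANSLATE_X),
        "ty": rng.uniform(*TRANSLATE_Y),
        "brightness": rng.uniform(*BRIGHTNESS),
        "contrast": rng.uniform(*CONTRAST),
    }


def hflip(img: list[list[float]]) -> list[list[float]]:
    """Horizontal inversion: mirror every row of a 2-D gray-scale image."""
    return [row[::-1] for row in img]


def adjust_gray_levels(img, brightness, contrast):
    """Assumed interpretation of the gray-level ranges for pixels in [0, 1]:
    contrast stretches deviations from the image mean by (1 + contrast),
    brightness then scales the result by (1 + brightness); output is clipped."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    out = []
    for row in img:
        new_row = []
        for p in row:
            q = (mean + (p - mean) * (1.0 + contrast)) * (1.0 + brightness)
            new_row.append(min(1.0, max(0.0, q)))
        out.append(new_row)
    return out


if __name__ == "__main__":
    rng = random.Random(42)
    params = sample_augmentation(rng)
    image = [[0.2, 0.4], [0.6, 0.8]]
    if params["hflip"]:
        image = hflip(image)
    image = adjust_gray_levels(image, params["brightness"], params["contrast"])
    print(params)
    print(image)
```

For segmentation, the same geometric parameters (inversion, rotation, translation) would have to be applied to the ground-truth mask as well, while the gray-level changes apply only to the image.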

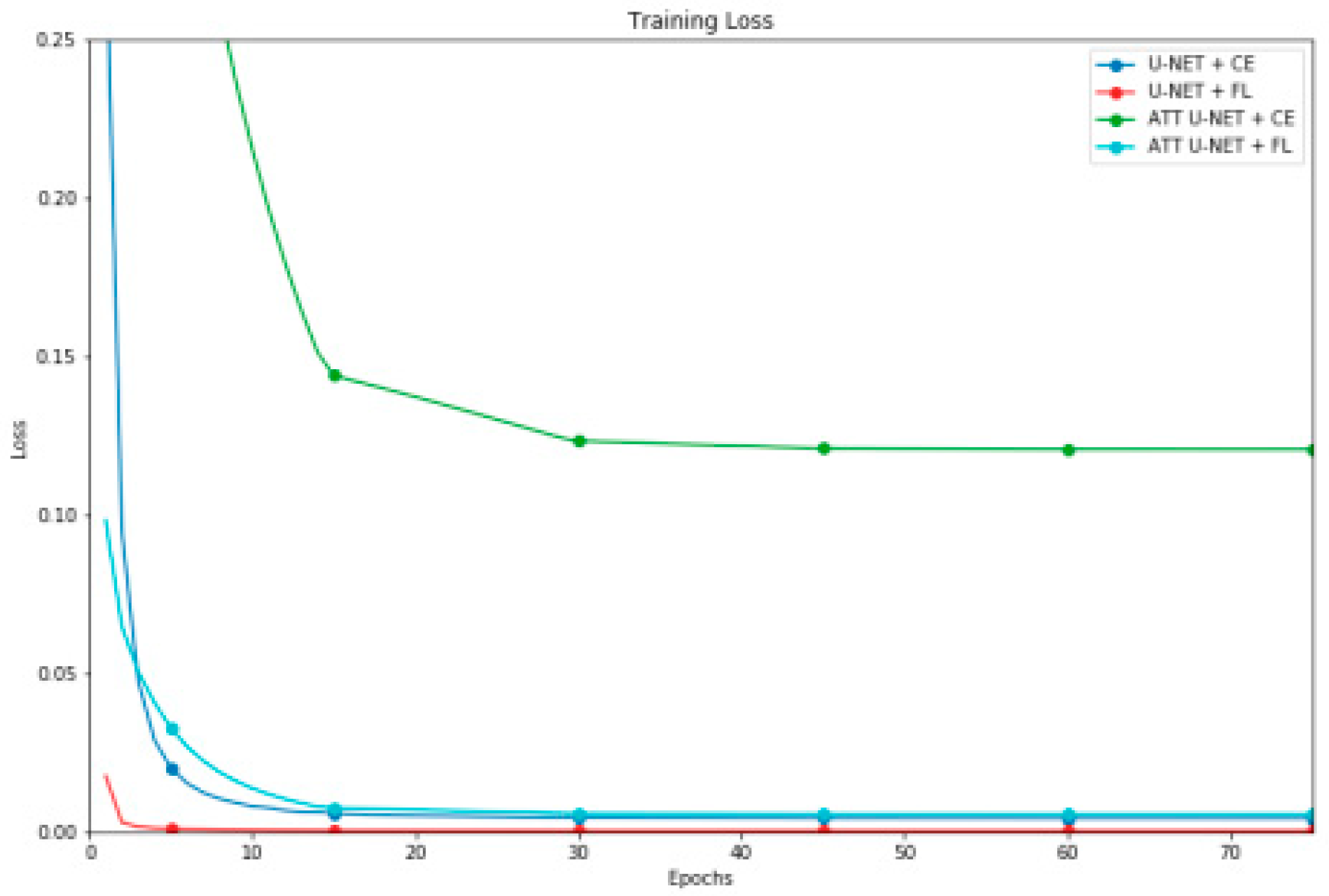

| Model parameter | U-Net and Attention U-Net |
|---|---|
| Starting LR | 10⁻² |
| LR decay | Step decay: drop factor 0.1 every 15 epochs |
| Optimization strategy | Adam (β₁ = 0.9, β₂ = 0.999, ε = 10⁻⁷) |
| Epochs | 75 |
| Batch size | 8 |
| Dropout rate | 0.1 |
| Batch normalization | True |
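The training schedule in the parameter table can be written out explicitly. This sketch assumes 0-indexed epochs with the learning rate divided by 10 at epochs 15, 30, 45 and 60 (the source does not state whether the first drop falls at epoch 15 or 16). It also shows a single-parameter Adam update in the textbook form of Kingma and Ba with the listed β₁, β₂ and ε. `step_decay` and `ScalarAdam` are illustrative names, not the authors' implementation; Keras, for example, documents its ε as the "epsilon hat" of the paper's alternative formulation, so framework results can differ slightly.

```python
import math

# Values from the model-parameter table.
START_LR = 1e-2
DROP = 0.1
EPOCHS_PER_DROP = 15
EPOCHS = 75


def step_decay(epoch: int) -> float:
    """Learning rate for a 0-indexed epoch: multiplied by DROP every EPOCHS_PER_DROP epochs."""
    return START_LR * DROP ** (epoch // EPOCHS_PER_DROP)


class ScalarAdam:
    """Textbook Adam update (Kingma and Ba) for a single scalar parameter."""

    def __init__(self, beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-7):
        self.beta1, self.beta2, self.eps = beta1, beta2, eps
        self.m = 0.0  # first-moment (mean) estimate of the gradient
        self.v = 0.0  # second-moment (uncentered variance) estimate
        self.t = 0    # number of updates taken so far

    def step(self, theta: float, grad: float, lr: float) -> float:
        self.t += 1
        self.m = self.beta1 * self.m + (1.0 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1.0 - self.beta2) * grad * grad
        m_hat = self.m / (1.0 - self.beta1 ** self.t)  # bias-corrected moments
        v_hat = self.v / (1.0 - self.beta2 ** self.t)
        return theta - lr * m_hat / (math.sqrt(v_hat) + self.eps)


if __name__ == "__main__":
    # Toy run: minimise f(x) = x^2 (gradient 2x) for 75 epochs of 10 steps each.
    opt, x = ScalarAdam(), 1.0
    for epoch in range(EPOCHS):
        lr = step_decay(epoch)
        for _ in range(10):
            x = opt.step(x, 2.0 * x, lr)
    print(f"final x = {x:.6f}")
    print([step_decay(e) for e in (0, 15, 30, 45, 60)])
```

Under this reading, the 75 epochs span five learning-rate levels, from 10⁻² down to 10⁻⁶.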

| Segmentation Model | DCS | IoU | Accuracy | Precision | Recall |
|---|---|---|---|---|---|
| U-Net + CE | 0.966±0.009 | 0.934±0.016 | 0.997±0.001 | 0.967±0.012 | 0.965±0.019 |
| U-Net + FL | 0.960±0.011 | 0.923±0.020 | 0.997±0.001 | 0.980±0.010 | 0.941±0.023 |
| Attention U-Net + CE | 0.966±0.007 | 0.934±0.014 | 0.997±0.001 | 0.961±0.013 | 0.970±0.016 |
| Attention U-Net + FL | 0.944±0.094 | 0.902±0.094 | 0.996±0.004 | 0.970±0.096 | 0.920±0.095 |
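The results tables compare each architecture trained with two losses. Assuming CE denotes pixel-wise binary cross-entropy and FL the focal loss of Lin et al. (the source does not expand the abbreviations or report the focal parameters), the two can be compared as in the sketch below. The γ = 2 default is the value commonly used by Lin et al. and the optional α weighting is off by default; both are assumptions here, as are the function names.

```python
import math

EPS = 1e-7  # keeps probabilities away from 0 and 1 so log() stays finite


def _clamp(p: float) -> float:
    return min(max(p, EPS), 1.0 - EPS)


def binary_cross_entropy(probs, targets):
    """Mean pixel-wise binary cross-entropy; probs are foreground probabilities."""
    total = 0.0
    for p, y in zip(probs, targets):
        p = _clamp(p)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)


def binary_focal_loss(probs, targets, gamma=2.0, alpha=None):
    """Mean pixel-wise focal loss: -alpha_t * (1 - p_t)**gamma * log(p_t).
    alpha=None disables the class-balancing weight."""
    total = 0.0
    for p, y in zip(probs, targets):
        p = _clamp(p)
        p_t = p if y == 1 else 1.0 - p  # probability assigned to the true class
        alpha_t = 1.0 if alpha is None else (alpha if y == 1 else 1.0 - alpha)
        total -= alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(probs)


if __name__ == "__main__":
    probs = [0.95, 0.6, 0.1, 0.4]  # predicted foreground probability per pixel
    targets = [1, 1, 0, 1]         # ground-truth mask
    print("CE:", binary_cross_entropy(probs, targets))
    print("FL:", binary_focal_loss(probs, targets))
```

With γ = 0 and no α weighting the focal loss reduces exactly to cross-entropy; larger γ shrinks the contribution of pixels that are already classified confidently, shifting the gradient toward hard pixels such as organ boundaries.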

| Segmentation Model | DCS | IoU | Accuracy | Precision | Recall |
|---|---|---|---|---|---|
| U-Net + CE | 0.937±0.015 | 0.888±0.024 | 0.995±0.002 | 0.936±0.019 | 0.956±0.020 |
| U-Net + FL | 0.936±0.016 | 0.887±0.024 | 0.995±0.002 | 0.935±0.020 | 0.938±0.014 |
| Attention U-Net + CE | 0.939±0.013 | 0.891±0.020 | 0.995±0.002 | 0.939±0.017 | 0.939±0.013 |
| Attention U-Net + FL | 0.937±0.015 | 0.889±0.024 | 0.995±0.002 | 0.937±0.019 | 0.939±0.015 |
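The five columns of the results tables can be computed from the confusion counts of a predicted and a ground-truth binary mask. The sketch below assumes flat 0/1 masks, scores a metric with an empty denominator as 1.0 (a convention, not something the source specifies), and assumes the ± values are the sample standard deviation across cross-validation folds. The helper names are hypothetical. For a single mask, IoU = DCS / (2 − DCS); for the first row of the first results table, 0.966 / 1.034 ≈ 0.934, which matches the reported IoU, although averages over many masks need not satisfy the identity exactly.

```python
import statistics


def confusion_counts(pred, truth):
    """TP, FP, FN, TN for two flat binary masks of equal length."""
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1
        elif p:
            fp += 1
        elif t:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def _ratio(num, den, empty=1.0):
    # Assumed convention: a metric whose denominator is zero scores `empty`.
    return num / den if den else empty


def segmentation_metrics(pred, truth):
    """DCS, IoU, accuracy, precision and recall for one predicted mask."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    return {
        "DCS": _ratio(2 * tp, 2 * tp + fp + fn),
        "IoU": _ratio(tp, tp + fp + fn),
        "Accuracy": _ratio(tp + tn, tp + fp + fn + tn),
        "Precision": _ratio(tp, tp + fp),
        "Recall": _ratio(tp, tp + fn),
    }


def mean_pm_std(values, digits=3):
    """Format per-fold scores as mean±sample standard deviation (needs >= 2 folds)."""
    return f"{statistics.mean(values):.{digits}f}±{statistics.stdev(values):.{digits}f}"


if __name__ == "__main__":
    pred = [1, 1, 1, 0, 0]
    truth = [1, 1, 0, 1, 0]
    print(segmentation_metrics(pred, truth))
    print(mean_pm_std([0.95, 0.97, 0.96]))
```

Because background pixels dominate abdominal images, TN is large and accuracy stays near 0.995 to 0.997 for every model, which is why DCS and IoU are the more discriminative columns.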

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).