1. Introduction

The generation of meshes constitutes a crucial stage in numerically simulating diverse problems within the domain of computational science [

1]. Unstructured meshes find prevalent application, notably in fields like computational fluid dynamics (CFD) [

2,

3] and computational solid mechanics (CSM) [

4,

5], wherein the finite element (FE) method is employed to approximate solutions for general partial differential equations (PDE), particularly in domains featuring challenging geometries [

6,

7]. The accuracy of the FE solution is intricately linked to the quality of the associated mesh.

Mesh generation depends on a number of parameters, such as the local metrics at given points, but also, in the general case, the maximum and minimum sizes of cell elements, the Hausdorff coefficient, the acceptable gradation of the metric, and so on [

8]. A set of parameters whose choice is far from trivial and whose poor choice can lead to meshes of poor quality, a generation process that is too long (because it is over-constrained), or even a generation failure.

A mesh can be adapted and refined to suit the physical problem being solved. The adaptation of unstructured meshes has proven very effective for stationary calculations in Fluid Mechanics and Solid Mechanics, with the aim of capturing the behaviour of physical phenomena while obtaining the desired accuracy for the numerical solution. In addition, this method considerably reduces the computational cost of the numerical solution by reducing the number of degrees of freedom. The a priori and a posteriori error estimators generally give an upper bound in the approximation error of the solved PDE. These estimates depend on the approximation space, the mesh size, the equation, the approximate solution in the case of a posteriori estimators and the exact solution in the case of a priori estimators.

Theoretical frameworks for anisotropic error estimation have been extensively developed, resulting in a degree of standardisation in the adaptation process. Various works [

9,

10,

11], have contributed to the estimation of approximation and interpolation errors. Recent analyses of interpolation error [

12,

13,

14,

15,

16] have refocused attention on metric-based mesh adaptation, particularly utilising a metric derived from a recovered Hessian. Notably, Hessian-based metric mesh adaptation offers several advantages, including a general computational framework, a relatively straightforward implementation, and, most importantly, robustness.

The use of Machine Learning (ML) or Deep Learning (DL) in the field of numerical simulation has until now focused mainly on the prediction of stationary or non-stationary fields as solutions to a given differential equation. This work has been motivated by the desire to reduce the calculation time of an approximate solution by relying on a neural network (NN) for the prediction task, rather than on a FE solver [

17,

18]. The field has benefited from the emergence of neural networks, and more specifically convolution neural networks (CNNs), which are generally used to extract local and global information from an image, represented as an array of pixels [

19]. To reproduce this framework, the simulation domains are represented by Cartesian grids, onto which the geometry and certain initial conditions are projected [

20,

21,

22,

23,

24,

25]. This operation results in the loss of the information carried by the initial unstructured mesh, and produces regular meshes that can contain many more points, resulting in higher computation costs.

To address this constraint, a model can be formulated to perform convolutions on graph structures. The extension of CNNs to graphs holds significant importance, especially given that numerous solvers rely on FE methods utilising unstructured meshes for discretisation in both CFD and CSM.

Message passing neural networks (MPNNs) is a subclass of GNNs [

26] that have gained prominence in the domain of mesh-based simulations, offering a framework for handling complex spatial data. The use of MPNNs in the context of mesh-based simulations represents a departure from traditional convolutional approaches, providing a more flexible methodology.

One notable instance of MPNN application is found in the work of Gilmer et al. (2017) [

27], where a message-passing neural network is proposed for quantum chemistry simulations. This approach divides the convolution process into two stages: the aggregation of information from neighbouring nodes and edges into a hidden node state, followed by the use of this hidden state to update node features.

Battaglia et al. (2018) [

28] introduce a slightly different approach known as graph network (GN). Messages are first transmitted from nodes to edges through an edge convolution kernel, leading to the update of edge features. Subsequently, updated edge features are aggregated to nodes using permutation-invariant operations, forming the edge messages. Finally, a node convolution kernel processes the original node features along with the edge messages to produce updated node features. This method proves to be particularly adept at capturing intricate relationships within graph-structured data.

Pfaff et al. (2021) [

29] extended the application of graph networks to mesh-based simulations, focusing on scenarios such as incompressible flow around cylinders and compressible flow around airfoils. Their proposed neural network functions as an accurate incremental simulator with the added capability of adapting to the mesh structure. An intriguing feature of this approach is its ability to generalise well to mesh shapes and sizes not encountered during the training phase, emphasising its robustness in handling diverse simulation conditions.

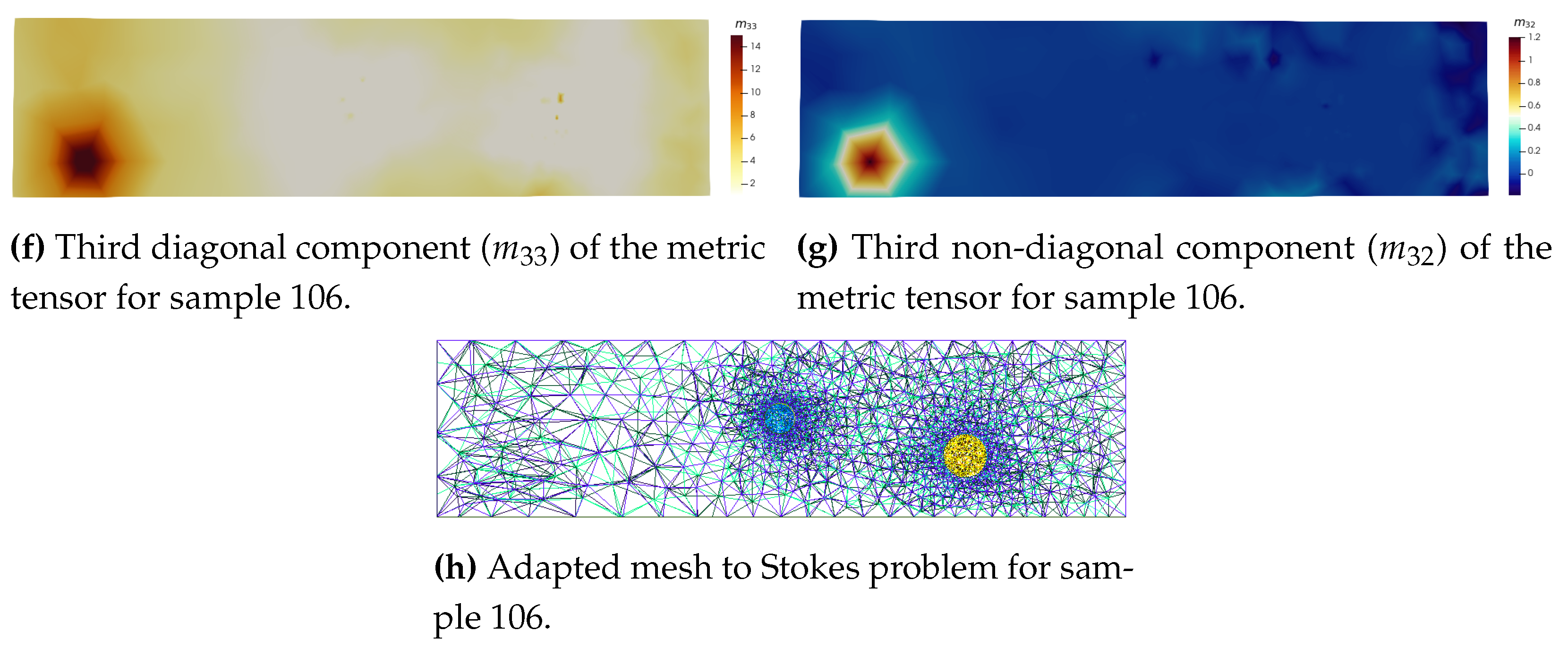

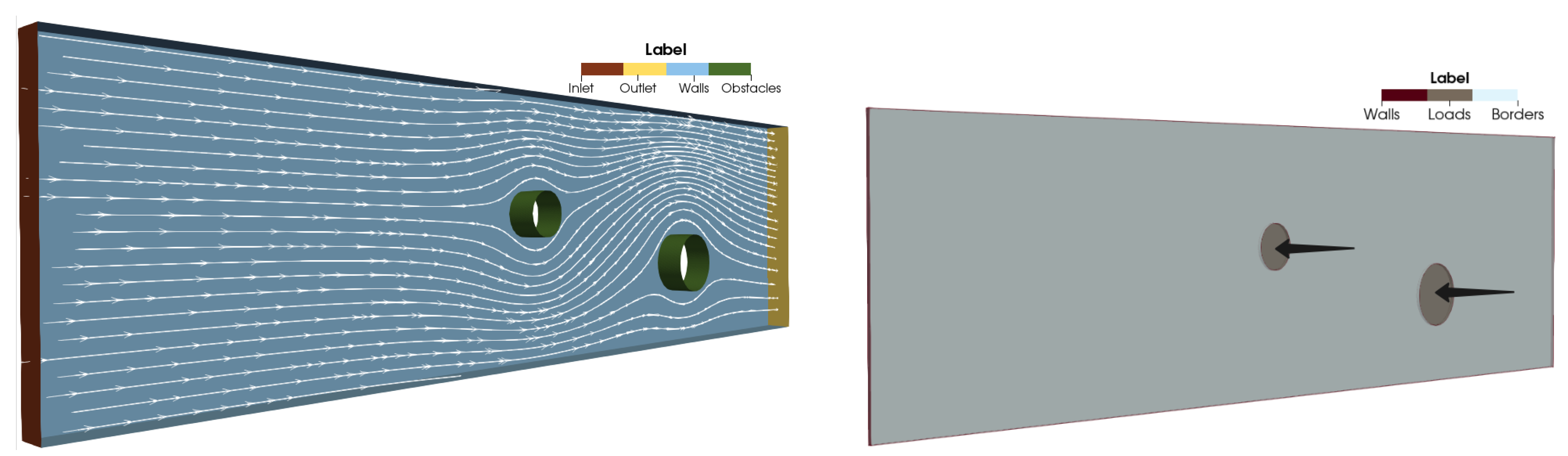

Yet, this recent literature has not been applied to predict the complete process of AMR, from the initial geometry to the adapted mesh, in three dimensions. To this end, we propose Adaptnet, which is a framework for learning mesh parameters and tetrahedral mesh-based simulations using GNNs (

Figure 1). This framework consists of two networks trained to generate unstructured meshes adapted to the physics under study:

Meshnet is trained to predict mesh parameters of a given geometry (CAD file) later used to generate an initial mesh.

Graphnet is trained to predict a metric field, either directly or indirectly by predicting the velocity field, later used to generate an anisotropic adapted mesh.

Both models are message passing graph neural networks that rely on the architecture of MeshGraphNets [

29,

30] that was further adapted to steady-state predictions. We will explain in detail material, methods and results for the case of the steady-state Stokes problem. The linear elasticity problem will be presented at the end to demonstrate the model’s ability to switch from one physics to another. We made use of open-source libraries to direct our work towards open-source solutions and to promote reproducibility.

The remainder of this paper is structured as follows:

Section 2: Materials and Methods - This section presents the problem statement, describes the dataset used, elaborates on the model architecture, and details the training configuration.

Section 3: Results - Here, we provide the results of our experiments, focusing on the performance of the proposed models Meshnet, Graphnet, and Adaptnet.

Section 4: Discussion - This section discusses the implications of our findings, in particular the generalization of our approach to unseen data and configurations.

Section 5: Conclusion - Finally, we summarize the key contributions of our work and suggest directions for future research.

2. Materials and Methods

2.1. Problem Statement

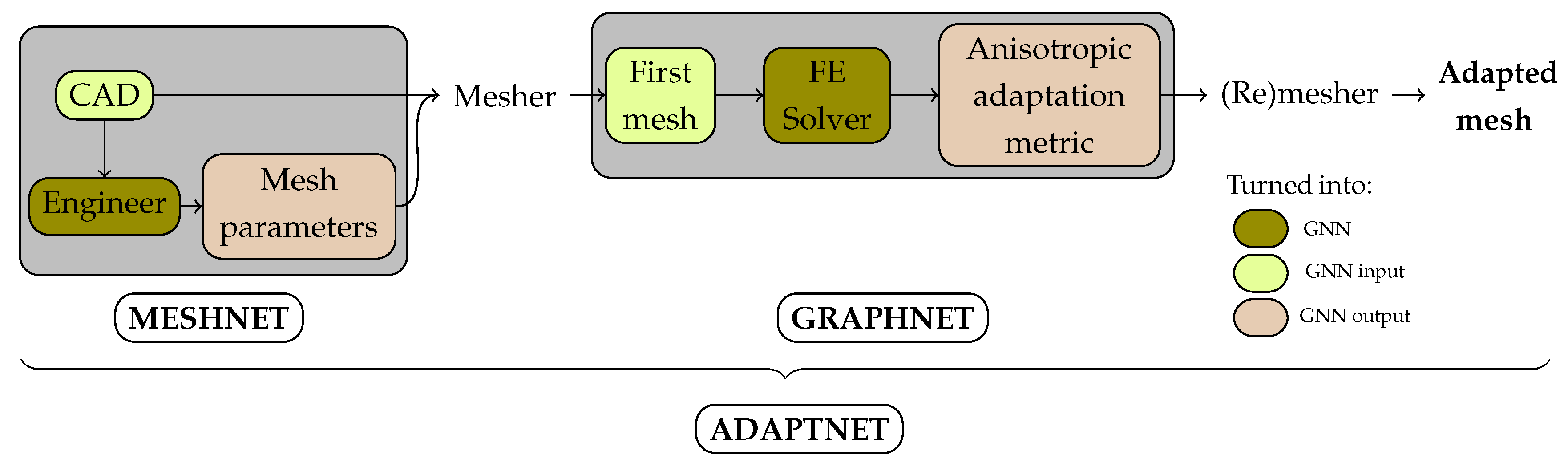

Both the Stokes flow and linear elasticity problems will be examined using the same geometric configuration. Specifically, each problem will be analyzed within a domain featuring a rectangular box with circular obstacles at its center, allowing for a consistent comparison of the effects of fluid flow and structural deformation on a shared geometry.

2.1.1. Stokes Problem

We consider the steady-state Stokes problem of a fluid flow around a cylinder. The domain

is a box of size

with circular obstacles of radius

r in the centre. The boundary

is divided into four parts:

,

,

and

, corresponding to the boundaries of inlet, outlet, wall, and obstacles, respectively. The problem is defined as follows:

where

is the velocity field,

p is the pressure field,

is the body force,

is the viscosity and

is the inlet velocity. The inlet velocity is set to

and the viscosity is set to

. The body force is set to

.

2.1.2. Linear Elasticity Problem

We consider the structural mechanics problem of an elastic plate, fixed on both sides, deformed by the action of two cylindrical actuators. The domain

is a plate of size

. The boundary

is divided into three parts:

,

and

, corresponding to the loaded cylinders, the fixed walls and the rest of the borders of the plate . The problem is defined as follows:

The load is set to , the Young modulus to and the Poisson ratio to . Body force is set to .

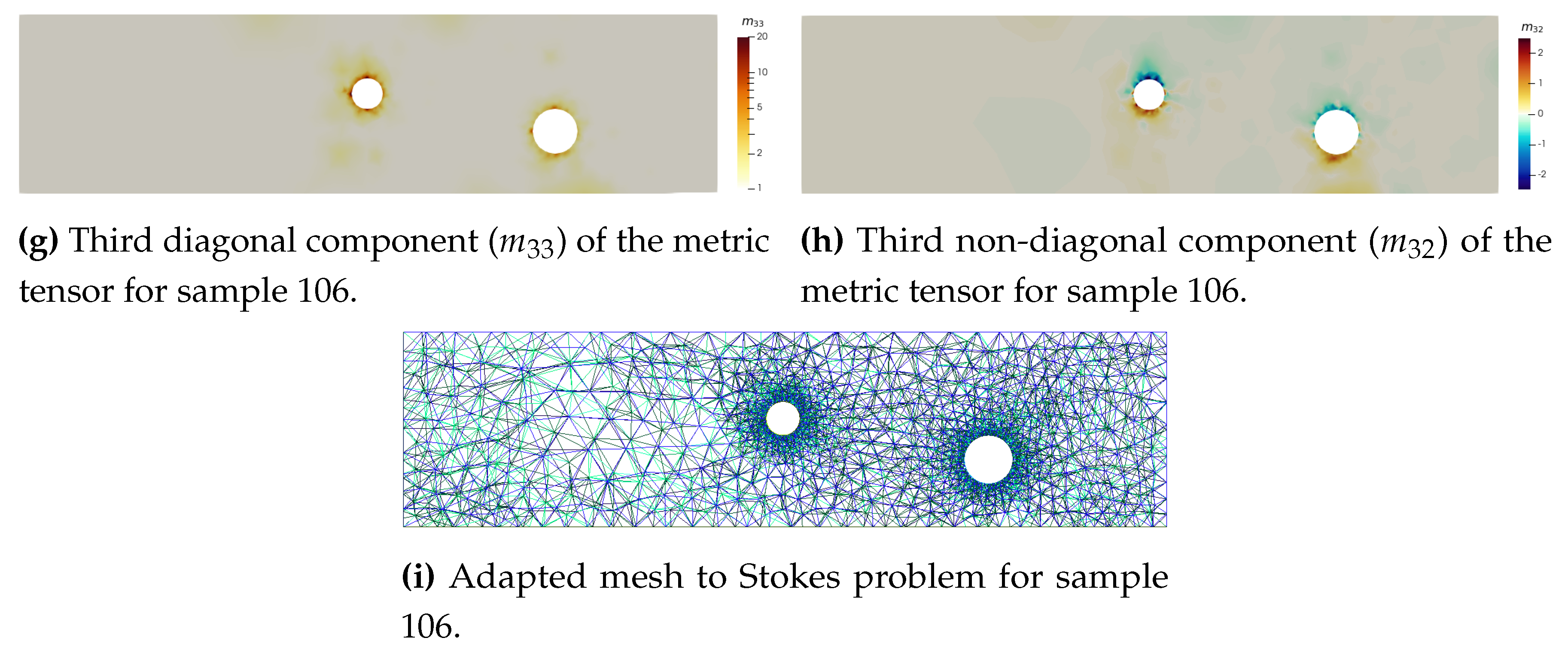

2.2. Dataset

The data set is composed of 500 geometries of size

with 2 circular obstacles of radius

inside (

Figure 2). The geometries are generated by randomly setting the box dimensions and the obstacles radius around control values.

The data sets are divided into 375 training samples, 75 validation samples, and 50 test samples. The training and validation samples are used to train the model, whereas the test samples are used to evaluate the model’s performance. Each sample is numbered from 0 to 499. Most illustrative figures will use sample 106 from the test set.

2.3. Mesh Parameters

CAD files are automatically generated under

.geo_unrolled extension using a python script. In this work, the mesh parameters are limited to the mesh size defined at each point of the CAD file. Mesh size is set randomly around

at the circular obstacles while the one at the box extremities is set accordingly to their proximity to an obstacle, in order to test the network under real-case mesh parametrization, rather than a uniform one.

Figure 3 highlights in red the 12 points representing each CAD file and labelled with a mesh size used to generate the initial mesh. Mesh generation is performed using

Gmsh [

31] software.

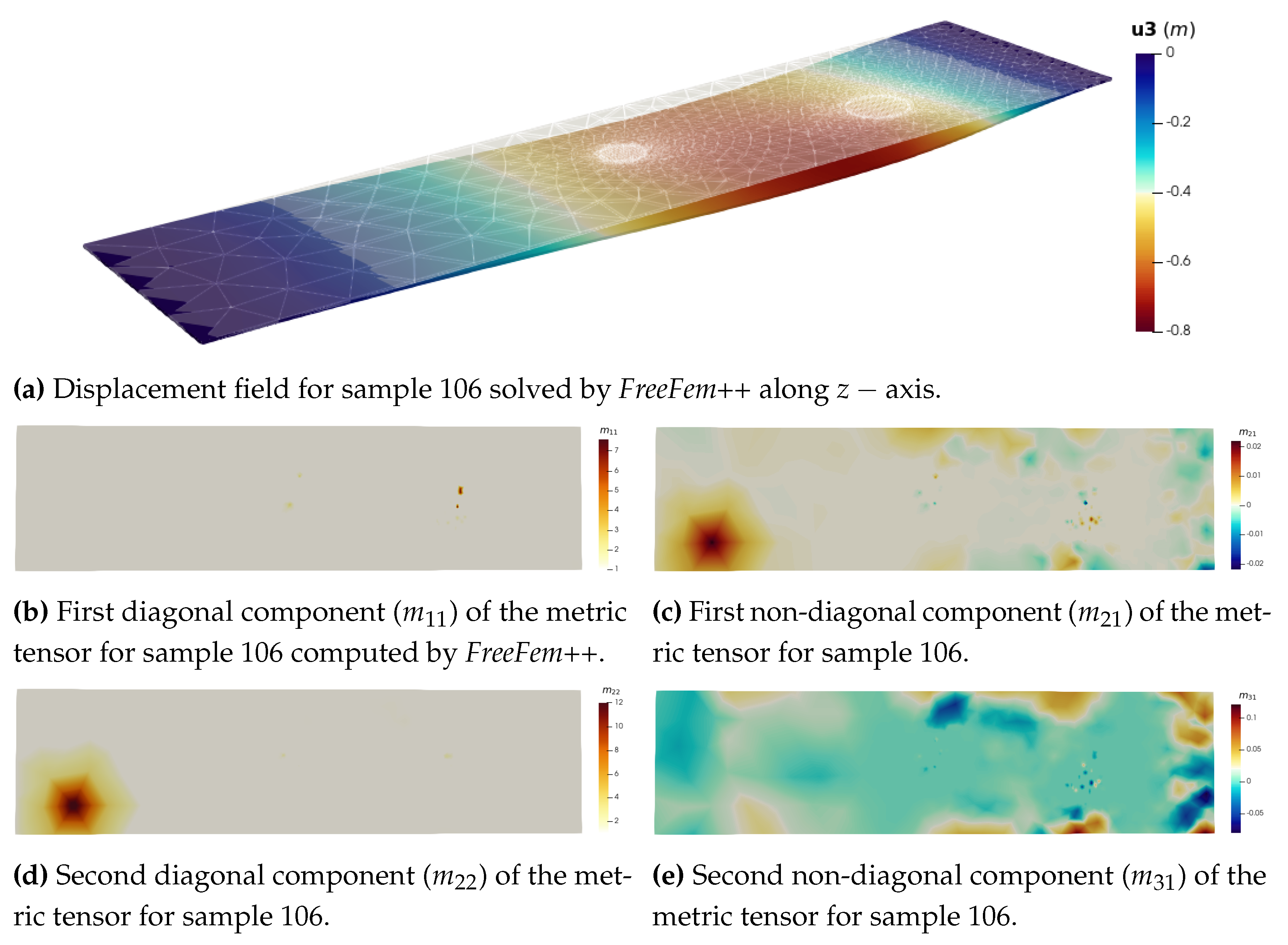

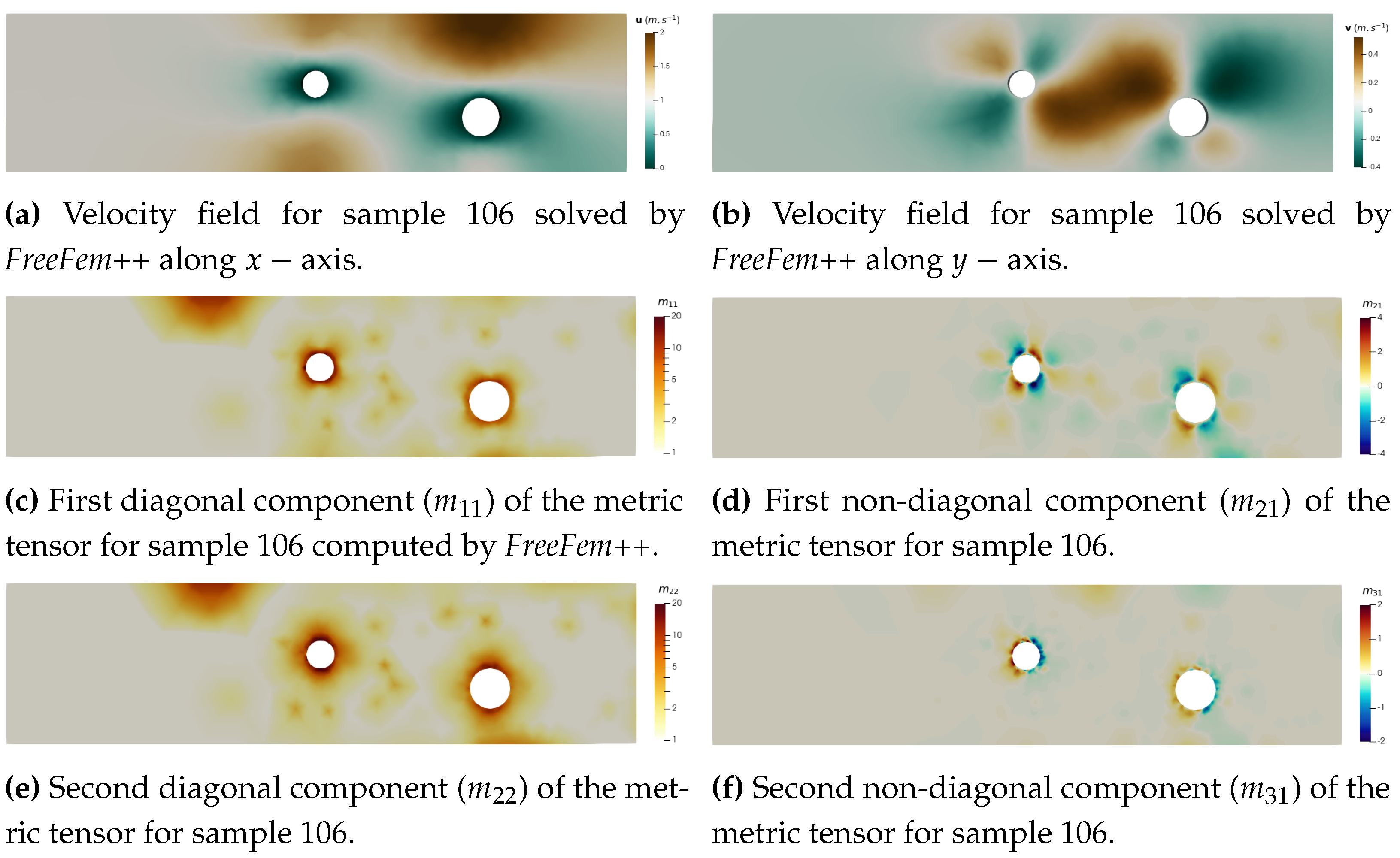

2.4. Mesh-Based Simulations

Mesh-based simulations are performed using the open-source FE library

FreeFem++ [

32]. For the Stokes problem, it outputs the velocity field

and the pressure field

p on the mesh (Figure a). This library uses an Hessian-based metric to perform anisotropic mesh adaptation. Given a triangulation

, one can derive an upper bound of the approximation error using an interpolation error analysis. This upper bound is expressed thanks to the recovered Hessian of the approximated solution

[

9]. In fact, using

linear elements, we usually cannot directly compute the Hessian of the solution. Instead, we compute an approximation called the recovered Hessian matrix

(

) [

10].

The recovered Hessian matrix is not a metric because it is not positive definite. Therefore, we define the following metric tensor:

where

is the orthogonal matrix built with the eigenvectors

of

(

) and

is the diagonal matrix of absolute value of the eigenvalues of

(

). By construction in

FreeFem++ and

Mmg[

33], the prescribed size is the inverse of the square root of the metric eigenvalues, so

is the size imposed in the first direction,

, in the second direction, and

in the third direction. Ultimately,

is symmetric positive definite and is characterised by only six components:

.

A similar set of figures can be found in

Appendix A.1 for the linear elasticity problem.

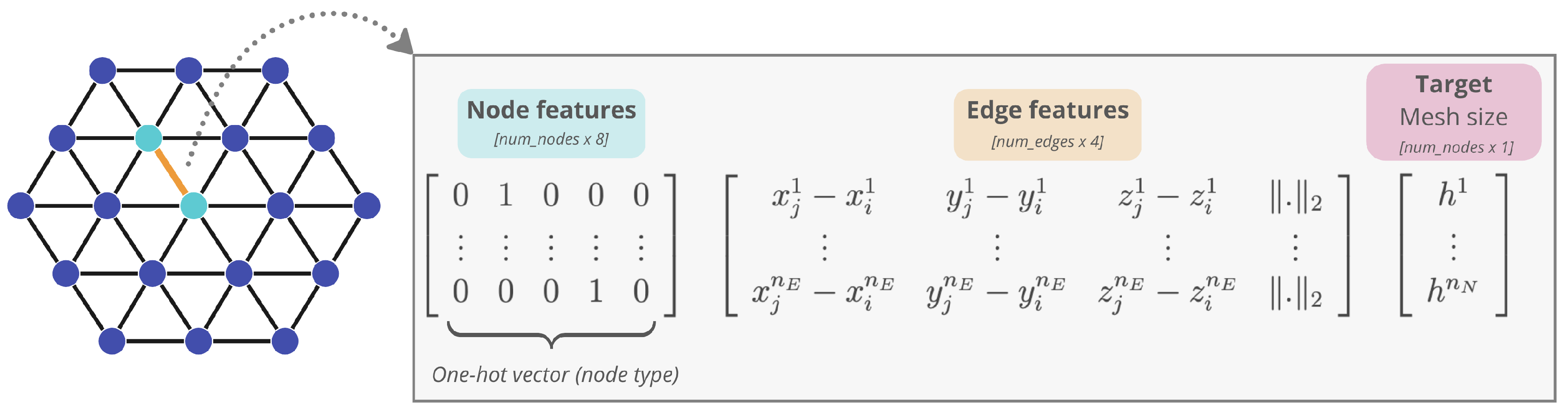

2.5. Graph Representation

CAD files and unstructured meshes are essentially a list of points and edges. We can take advantage of this structure to encode these input data into graphs. Each node and edge of the graph will carry an initial information (based on coordinates, boundary conditions, and initial conditions) and the target to predict (either mesh size or physical field). For each data, we can access:

Geometry: 3D coordinates

Topology: node connections

Boundary Conditions (BC): inlet, outlet, walls, obstacles or fluid

Initial Conditions (IC): velocity and pressure field (mesh-based simulations only)

A graph is denoted by where and are vertex and edge sets, respectively. Each node vertex i is represented by a graph vertex . Two nodes i and j connected are represented by two graph edges, with edge pointing to node j and edge pointing to node i. In other words, the mesh nodes become graph vertices , and mesh edges become bidirectional mesh-edges in the graph. Properties associated with node i are termed as node feature, . The properties corresponding to edge are referred to as edge feature, .

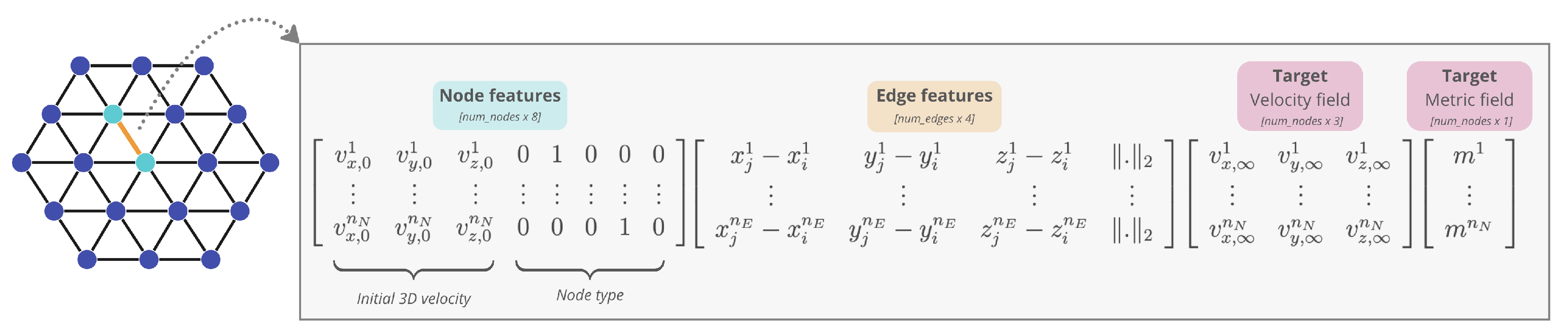

For both networks, node features

consists of the belonging to a physical surface (BC)

, a one-hot vector of dimension 5, and, for Graphnet, the velocity field, a vector of dimension 3, such that the dimension of the node features is

and

. The edge feature

of edge

is constructed to enrich the graph connectivity information with the (signed) distance between nodes,

, and its absolute value, such that

.

Figure 5 and

Figure 6 illustrate this process for Meshnet and Graphnet, respectively.

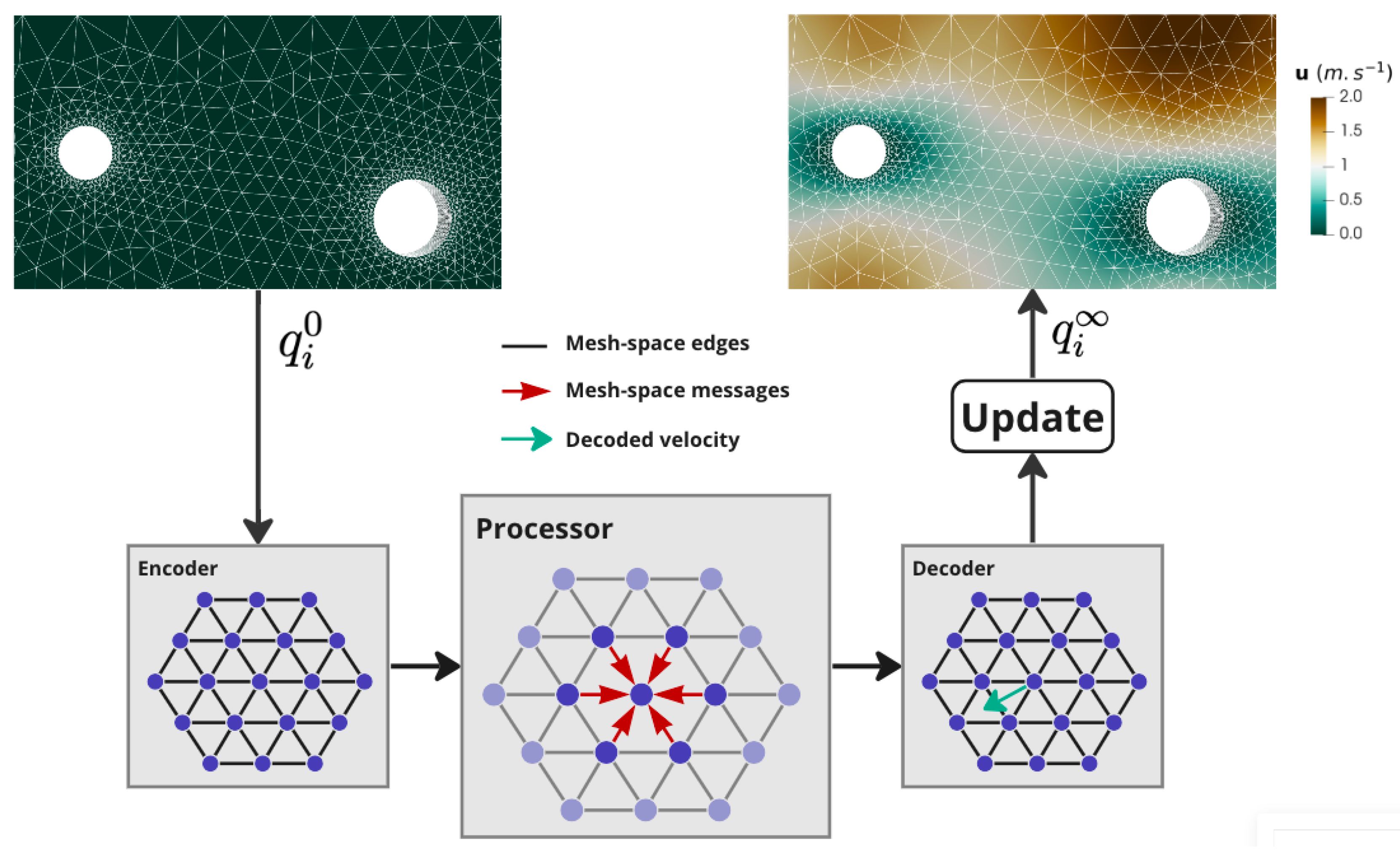

2.6. Model

In both Meshnet and Graphnet, the behaviour of the targeted variables are captured by learning directly on the graph using latent node and edge features derived from the physical node and edge features reviewed in

Section 2.5. We use a graph neural network model with an Encoder-Processer-Decoder architecture [

29,

34]. See

Appendix A.2 for more details.

The architecture proposed by Pfaff et al. [

29] is essentially designed to predict the dynamical states of a system over time. Since our test cases are steady-state predictions, we adapt this architecture following the work of Harsch et al. [

35] to better capture local and global features.

2.6.1. Encoder

Using the graph representation of

Section 2.5, we compute the initial latent feature vectors,

and

from the physical feature vectors,

and

. The hyperparameter

denotes the size of the latent vectors. The computation of the latent vectors is done using the node and edge multilayer perceptrons (MLPs) (

Table A1), denoted respectively by

and

, as follows:

2.6.2. Processor

The processor consists of m message-passing steps computed in sequence. At step

in the sequence, each graph edge feature

is updated using its value at the previous message-passing step

and the values of the adjacent node features at step

, as follows

to obtain the updated value. In Equation (

5), the operator

concatenates the given arguments on the feature dimension. Then, each graph node

is updated using its value at the previous message-passing step,

, and the aggregation of its incident edge features at step

:

where

is the set of nodes connected to node

i. Using Equations (

5) and (

6), the processor computes an updated set of node features.

The update of the edge-based messages,

, is the key to the accuracy of the flow predictions as it propagates information between neighbouring graph nodes (i.e., between neighbouring mesh cell elements). This design choice differentiates MeshGraphNets [

29] from other classical GNN frameworks relying only on node features, such as GCN and GraphSAGE [

36].

2.6.3. Decoder

After performing

m steps of the previously described process, the decoder maps the updated graph nodes latent features

to the node-based properties in physical state using MLP as follows:

where

m is the number of steps performed by the processor.

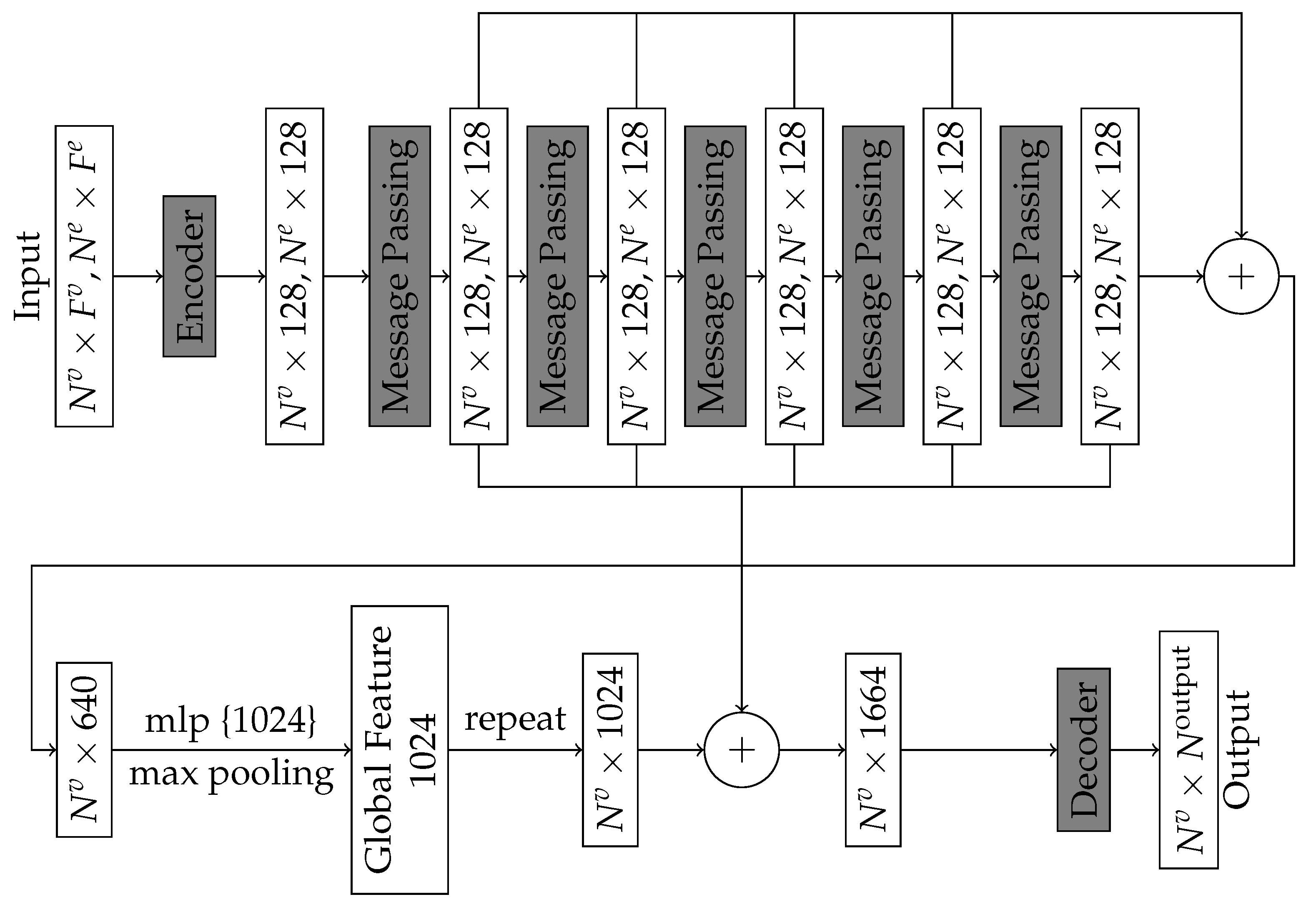

2.6.4. Local and Global Features

To increase the ability to extract local and global properties, we adopt the structure proposed by Wang et al. [

37] for Dynamic Graph CNN and adapted by Harsch et al. [

35]. This adaptation aggregates the information given by each message passing block to calculate a global feature vector. In that sense, the number of layers is reduced to 5, instead of 15 previously. To avoid potential overfitting, a pooling operation is added, and the result is concatenated with the previous local features. This adapted version is called Graphnet (Pool) (

Figure 8).

2.7. Loss Function and Optimiser

We train both networks by minimising the error between the true node label and the predicted node label. We use the per-node root mean square error (RMSE) loss to quantify the data error for each simulation. The loss function reads:

where

denotes the number of nodes in a batch of training meshes,

denotes the true output in the data set,

is the output predicted by the network, as formalised in Equation (

7).

ADAM optimiser is used and the learning rate is constant, equal to . With the data set and a fixed set of hyper-parameters at hand, each epoch takes on two NVIDIA A100 GPUs. The value of specific training hyperparameters is given in Table A3.

5. Conclusions

We propose a Graph Neural Network mesh-based method to model fluid dynamics and solid mechanics problems for an accurate and efficient prediction of anisotropic adaptation metric fields. We extended the approach of Pfaff et al. [

29] to tetrahedral meshes in three dimensions. Following the work of Harsch et al. [

35], we adapted the GNN architecture to better suit the prediction of steady states. Our method may allow for more efficient simulations than traditional simulators, and because it is differentiable, it could be used to retrieve the Hessian matrix directly, when traditional solvers have to compute a recovered Hessian matrix.

The experiments demonstrate the model’s strong understanding of the geometric structure. The method is independent of the structure of the simulation domain, being able to capture highly irregular meshes. We show that the model does not require any a priori domain information, e.g. inflow velocity or material parameters. Thus, the model can be used for any other systems represented as field data.

Training phase underlined the model’s strong understanding of the geometric structure of the unstructured meshes. It was shown that it can achieve effective prediction with the sole knowledge of connectivity and the belonging to a physical surface. It doesn’t rely on any prior information such as the inflow velocity or the material parameters. This, it can be easily adapted and scaled to other physical problems governed by PDEs. However, our test show that some specific tuning might be necessary to achieve correct predictions when adapting from a fluid problem to a solid one for instance.

The strength of this work lies in the tetrahedral mesh generation and adaptation pipeline. The emphasis was placed on producing work based on open source tools [

31,

32,

33] to promote reproducibility and enable others to build on it. Future work in this area should address several topics. It could explore more complex geometries to prove its applicability to industrial problems. Alternative architectures could also be explored. Adding concatenation and pooling has been shown to improve performance, but other options could show even better improvements. Finally, this framework could be further improved by adding a specific physical constraints to the loss function, similar to Physics-Informed Neural Networks (PINNs) [

39], which could enable learning on sparse datasets, reducing the need for generating hundreds of simulations.

Author Contributions

Conceptualization, Mesri Y. and Parret-Fréaud A.; methodology, Pelissier U. and Mesri Y.; software, Pelissier U.; validation, Mesri Y. and Parret-Fréaud A. and Bordeu F.; formal analysis, Pelissier U.; investigation, Pelissier U.; resources, Mesri Y. and Parret-Fréaud A. and Bordeu F.; data curation, Pelissier U.; writing—original draft preparation, Pelissier U.; writing—review and editing, Pelissier U. and Mesri Y. and Parret-Fréaud A. and Bordeu F.; visualization, Pelissier U.; supervision, Mesri Y. and Parret-Fréaud A. and Bordeu F.; project administration, Mesri Y. and Parret-Fréaud A.; funding acquisition, Mesri Y. and Parret-Fréaud A. All authors have read and agreed to the published version of the manuscript.

Figure 1.

Diagram of Adaptnet framework to predict a mesh adapted to some physics, given an initial geometry.

Figure 1.

Diagram of Adaptnet framework to predict a mesh adapted to some physics, given an initial geometry.

Figure 2.

Labelled CAD from fluid and structural mechanics cases.

Figure 2.

Labelled CAD from fluid and structural mechanics cases.

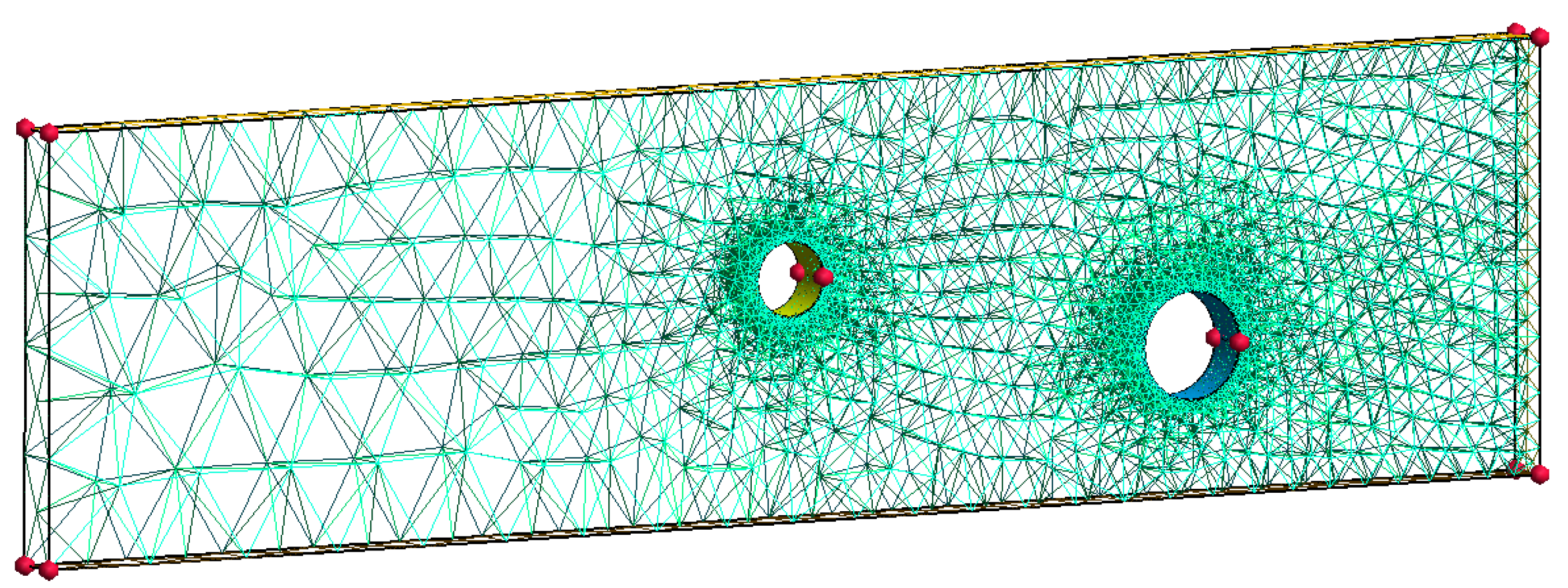

Figure 3.

Generated 3D tetrahedral mesh for sample 106 using mesh size at geometry points highlighted in red. The mesh is generated using

Gmsh [

31].

Figure 3.

Generated 3D tetrahedral mesh for sample 106 using mesh size at geometry points highlighted in red. The mesh is generated using

Gmsh [

31].

Figure 4.

Finite Element solving of Stokes problem and anisotropic metric computation.

Figure 4.

Finite Element solving of Stokes problem and anisotropic metric computation.

Figure 5.

Meshnet encoding illustration. The encoder encodes the current mesh into a graph . Mesh nodes become graph vertices , and mesh edges become bidirectional mesh-edges in the graph .

Figure 5.

Meshnet encoding illustration. The encoder encodes the current mesh into a graph . Mesh nodes become graph vertices , and mesh edges become bidirectional mesh-edges in the graph .

Figure 6.

Graphnet encoding illustration.

Figure 6.

Graphnet encoding illustration.

Figure 7.

Diagram of Graphnet operating on Stokes problem. The model uses an Encoder-Processer-Decoder architecture. The encoder transforms the input mesh into a graph. The processor performs several rounds of message passing along mesh edges, updating all node and edge embeddings. The decoder returns the velocity or metric field for each node, which is then used to compute the Hessian and the metric field in order to trigger remeshing.

Figure 7.

Diagram of Graphnet operating on Stokes problem. The model uses an Encoder-Processer-Decoder architecture. The encoder transforms the input mesh into a graph. The processor performs several rounds of message passing along mesh edges, updating all node and edge embeddings. The decoder returns the velocity or metric field for each node, which is then used to compute the Hessian and the metric field in order to trigger remeshing.

Figure 8.

Adapted model architecture using message passing block with local and global descriptor [

35] (operator ⊕ denotes the concatenation).

Figure 8.

Adapted model architecture using message passing block with local and global descriptor [

35] (operator ⊕ denotes the concatenation).

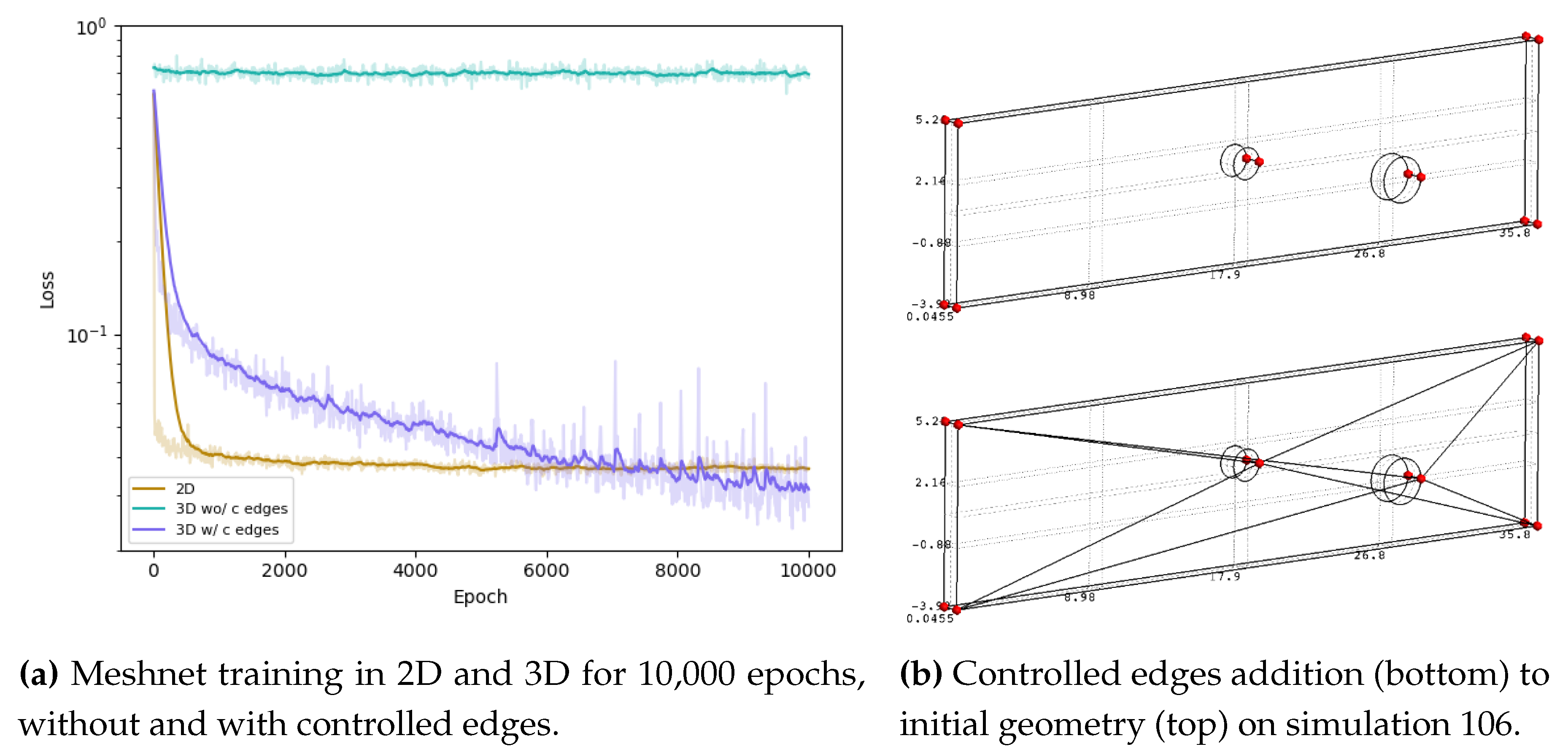

Figure 9.

Influence of the controlled edges on Meshnet learning curve through message-passing.

Figure 9.

Influence of the controlled edges on Meshnet learning curve through message-passing.

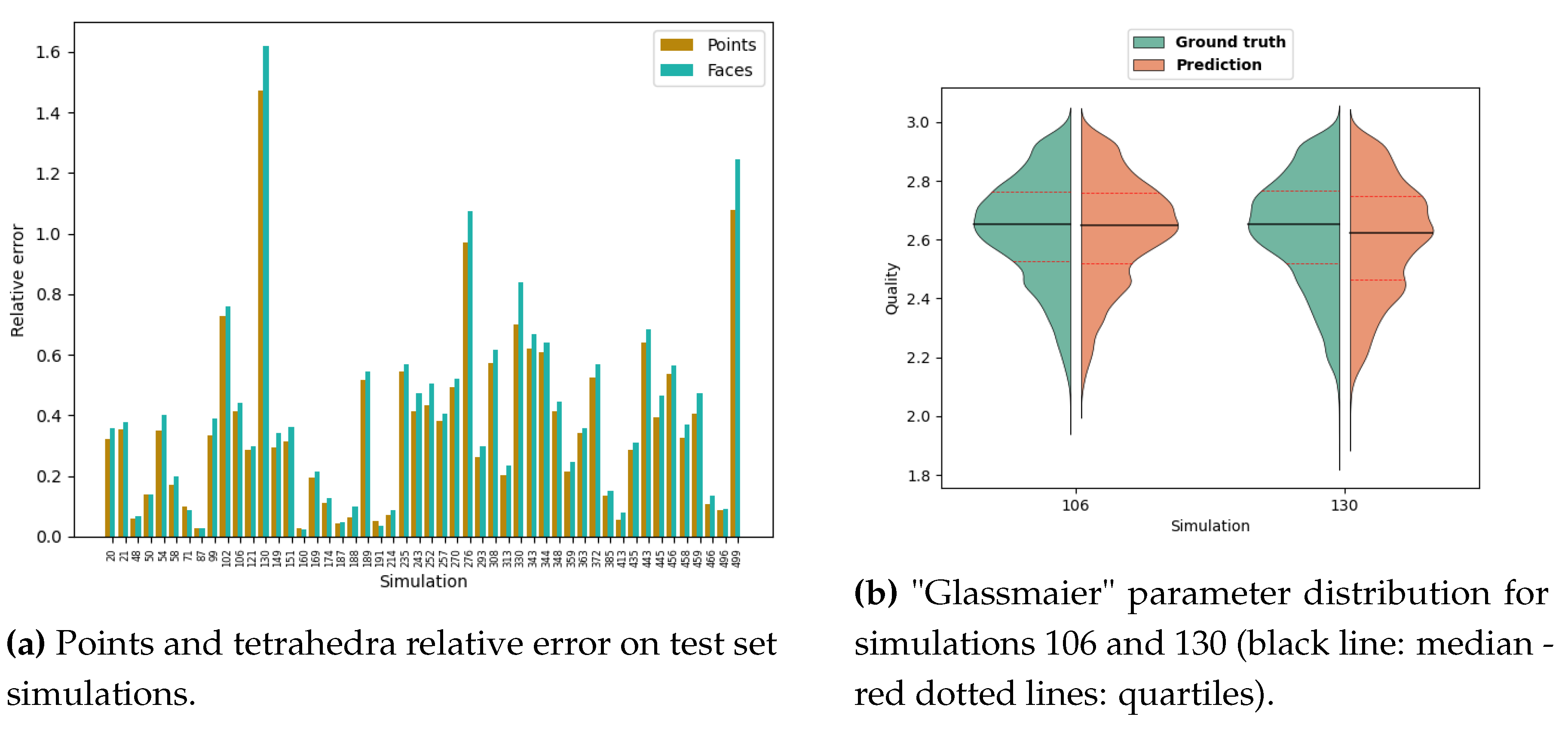

Figure 10.

Quality of meshes predicted by Meshnet on simulations from the test set.

Figure 10.

Quality of meshes predicted by Meshnet on simulations from the test set.

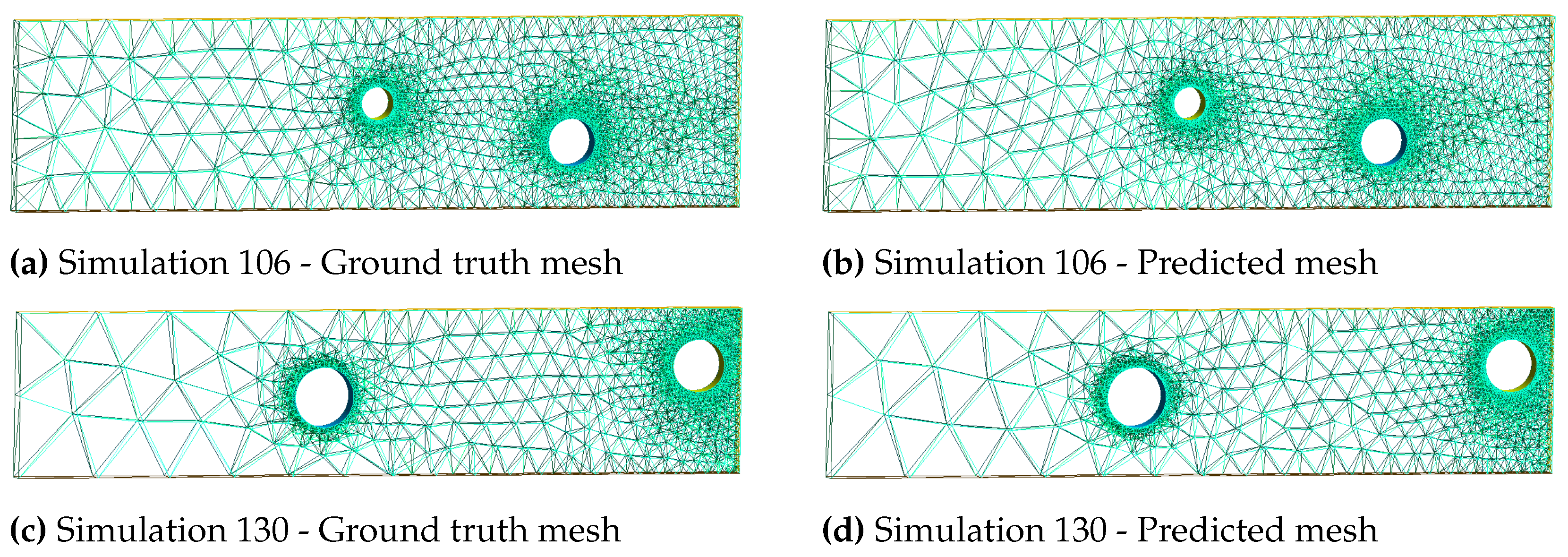

Figure 11.

Mesh visualisation of simulations 106 and 130 using Gmsh [

31].

Figure 11.

Mesh visualisation of simulations 106 and 130 using Gmsh [

31].

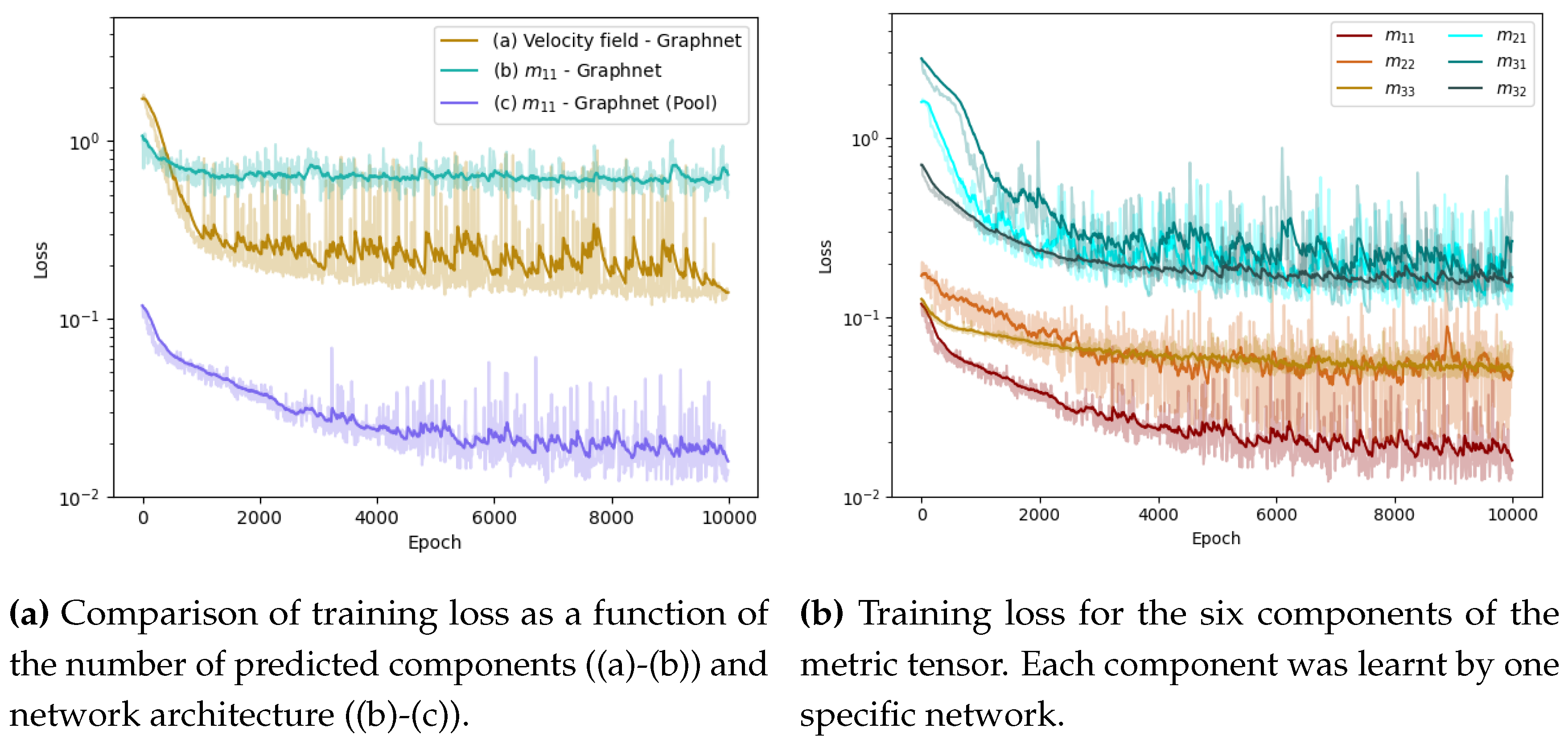

Figure 12.

Graphnet training for 10,000 epochs.

Figure 12.

Graphnet training for 10,000 epochs.

Figure 13.

Graphnet training for 10,000 epochs.

Figure 13.

Graphnet training for 10,000 epochs.

Figure 14.

Graphnet training for 10,000 epochs.

Figure 14.

Graphnet training for 10,000 epochs.

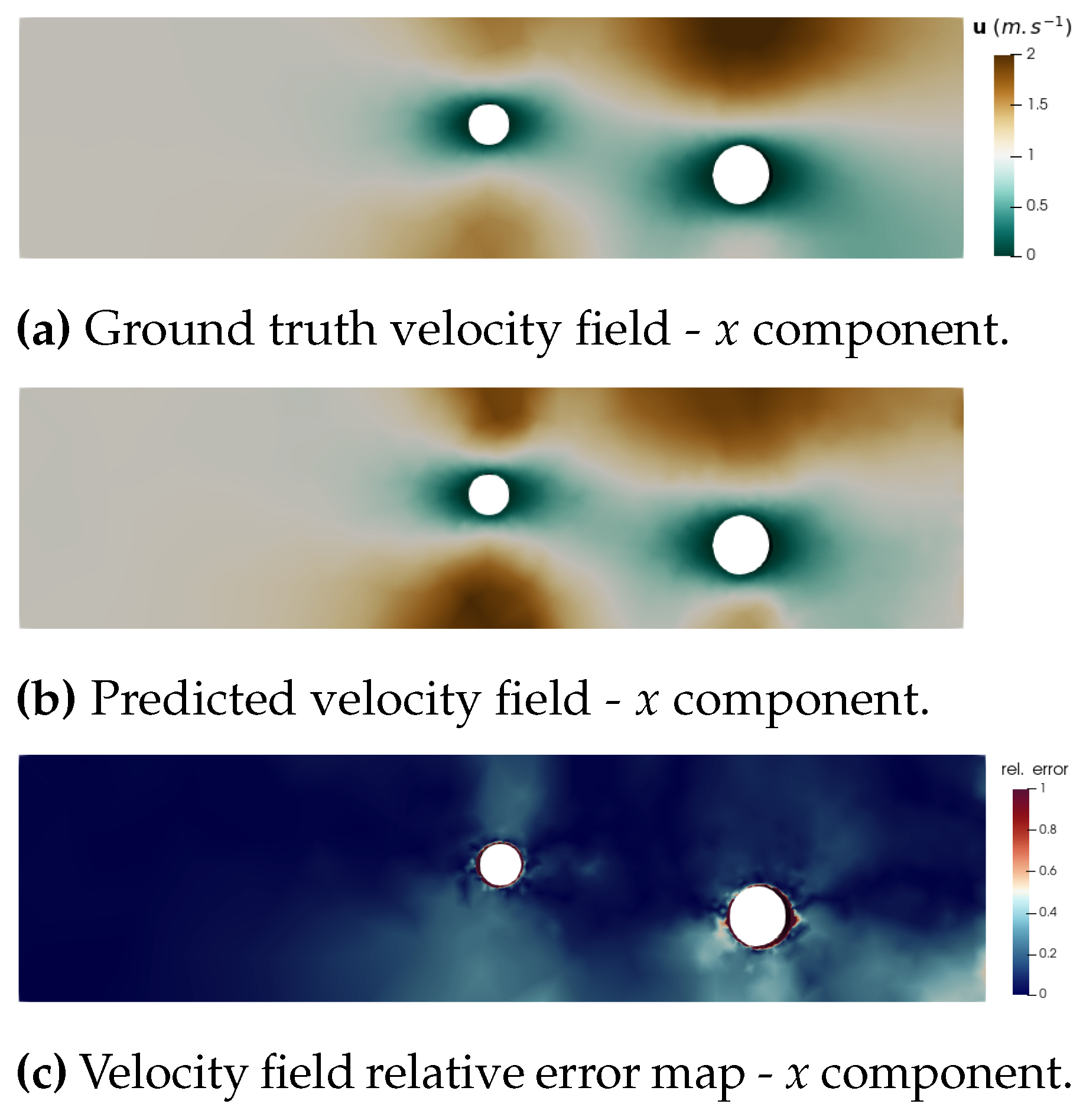

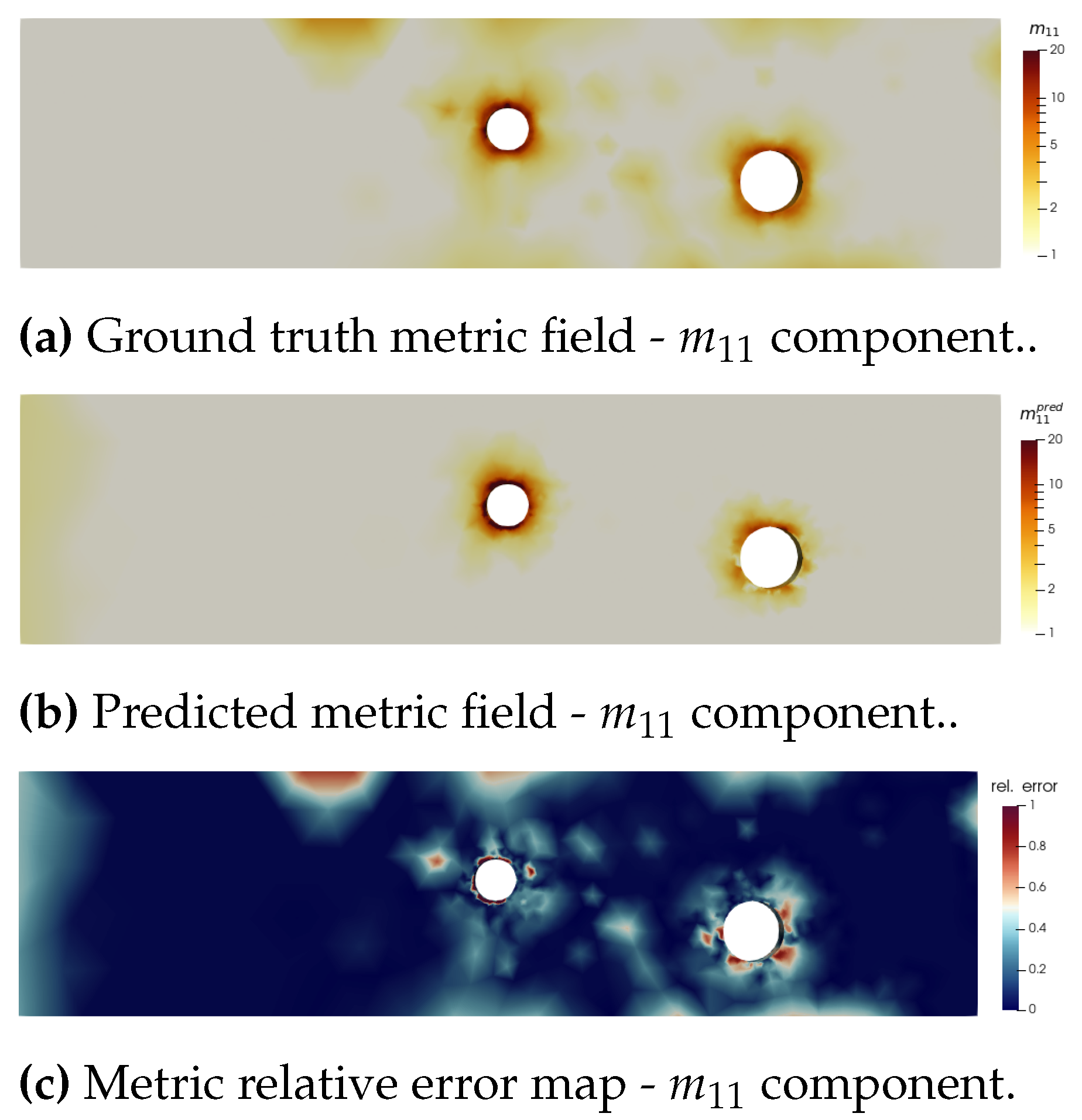

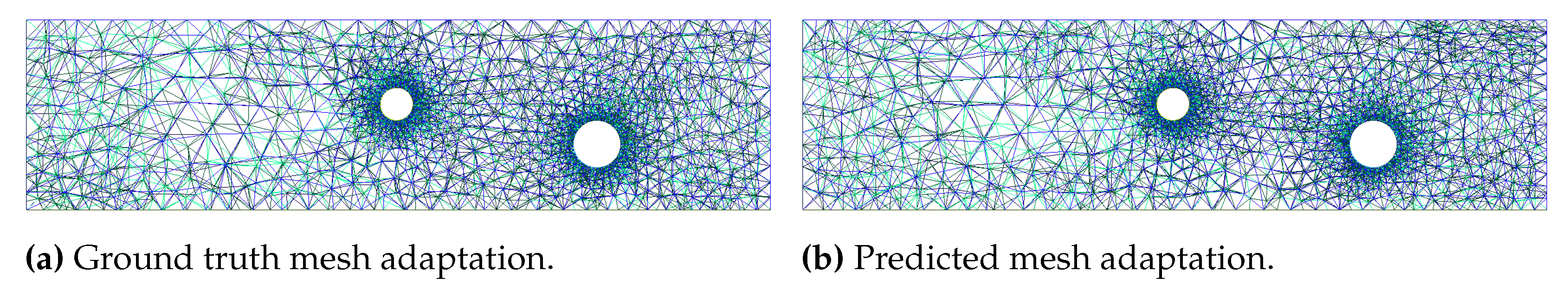

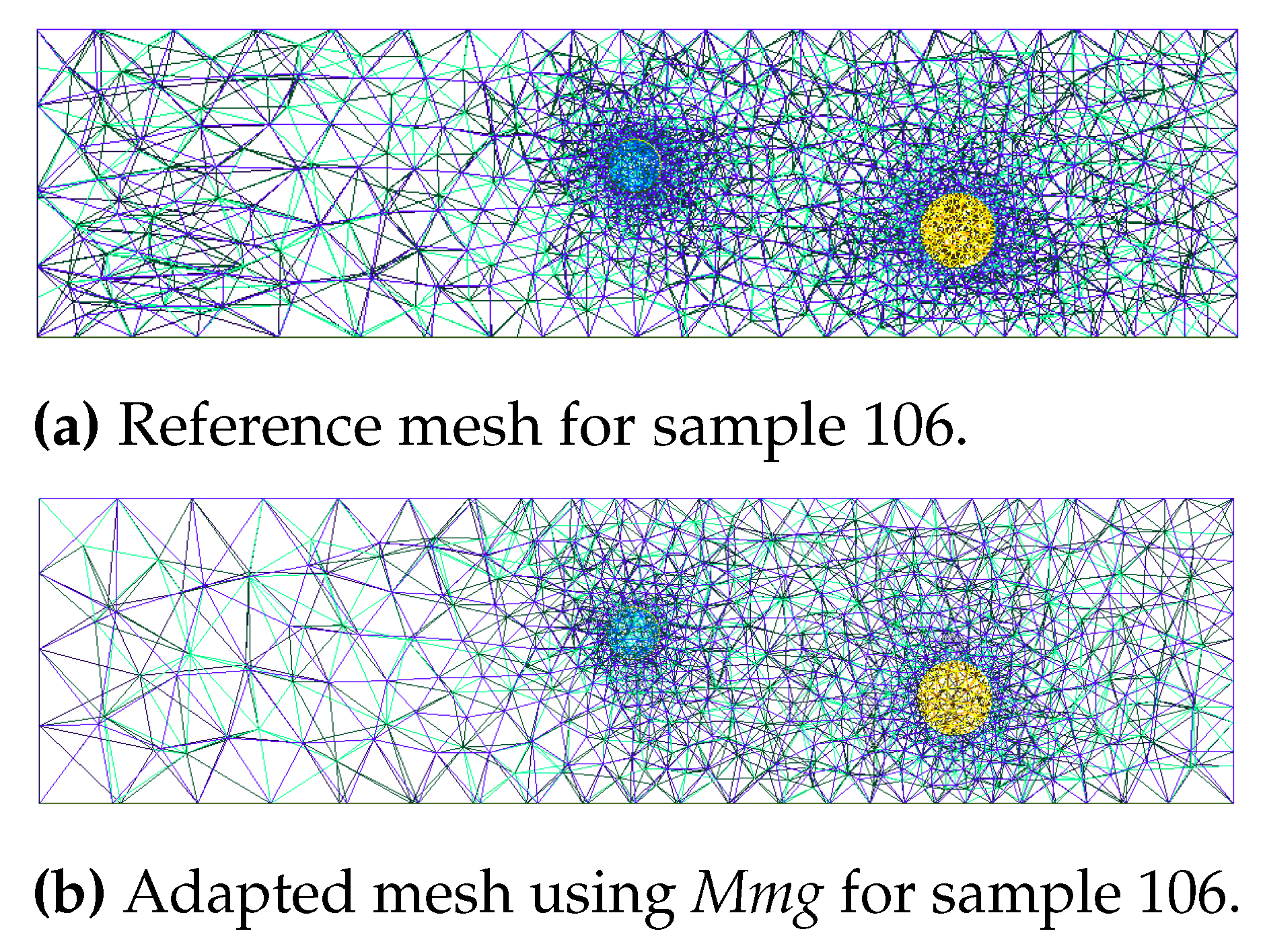

Figure 15.

3D mesh adaptation using Mmg for the ground truth metric field and the one predicted by Graphnet on simulation 106.

Figure 15.

3D mesh adaptation using Mmg for the ground truth metric field and the one predicted by Graphnet on simulation 106.

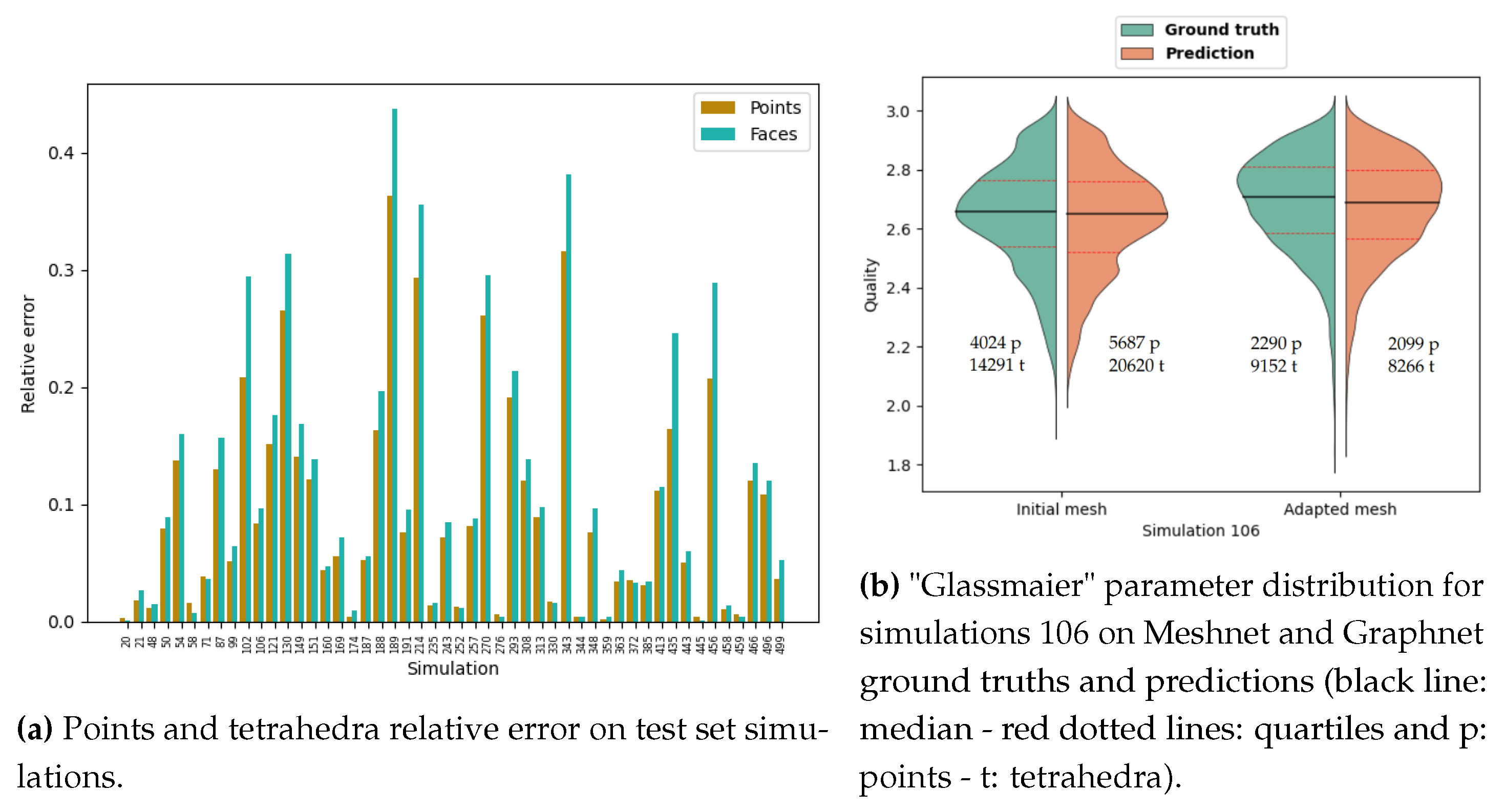

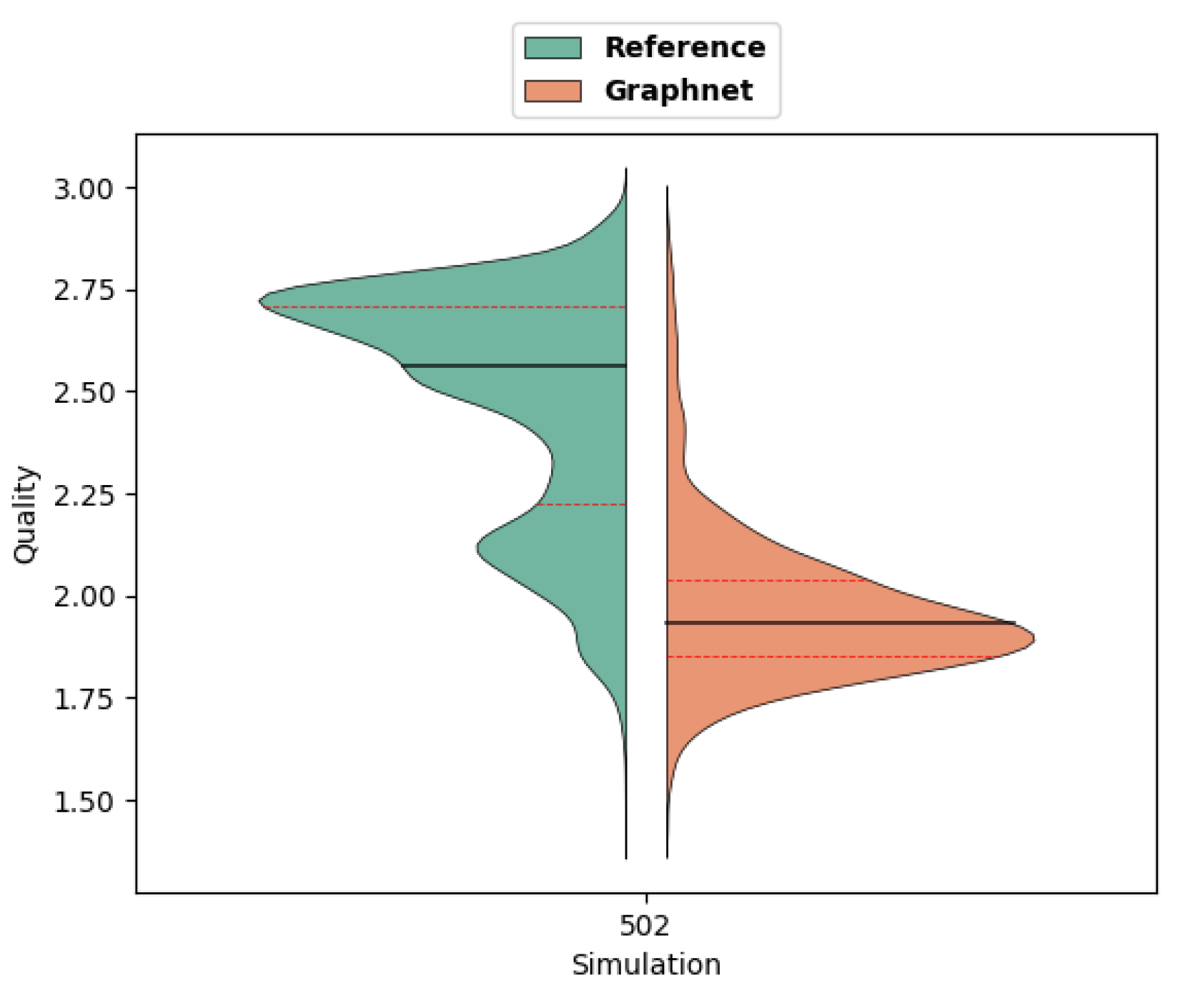

Figure 16.

Quality of meshes predicted by Graphnet on simulations from the test set.

Figure 16.

Quality of meshes predicted by Graphnet on simulations from the test set.

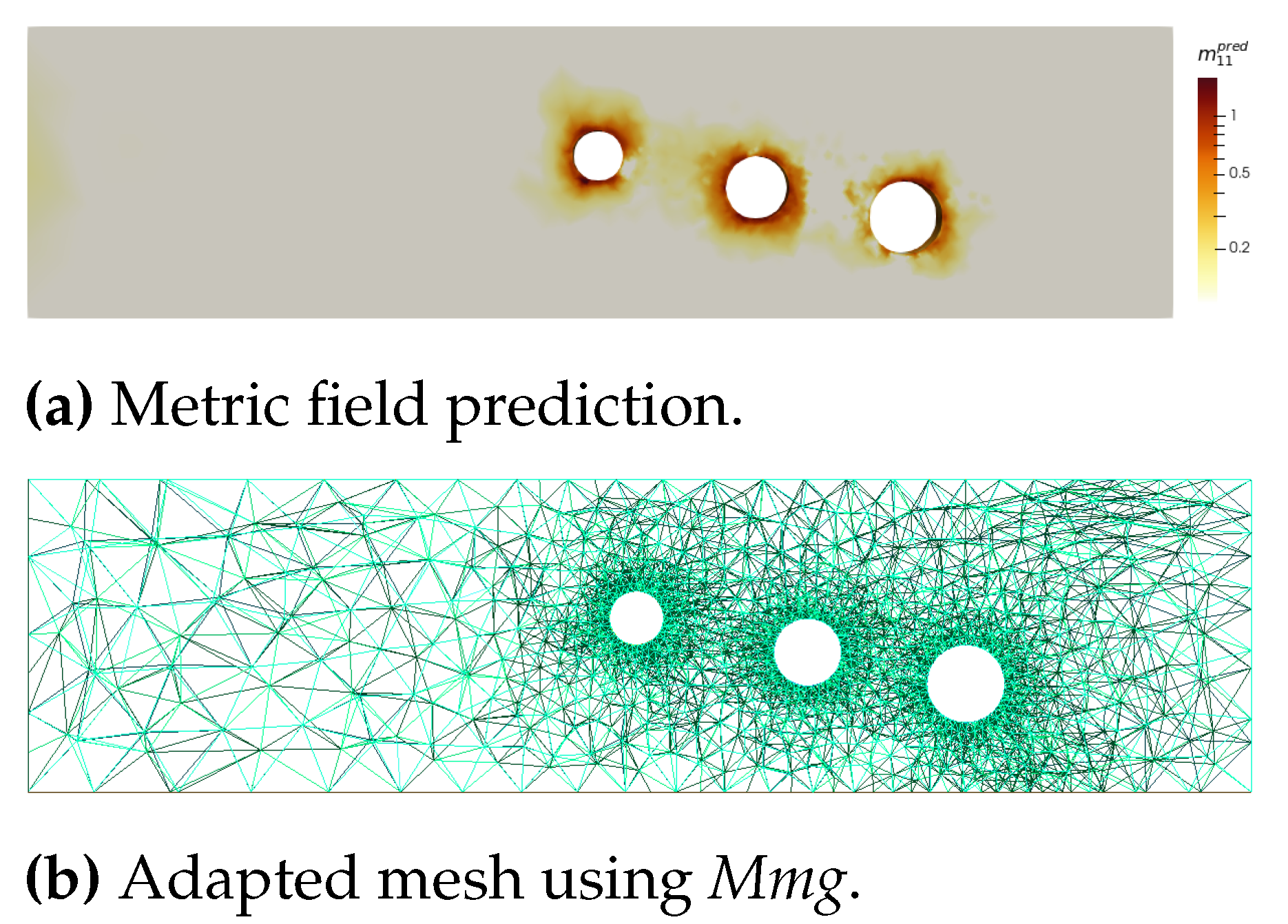

Figure 17.

Generalisation of the Meshnet prediction for 3-hole geometry.

Figure 17.

Generalisation of the Meshnet prediction for 3-hole geometry.

Figure 18.

Generalisation of the Meshnet prediction for 3-hole geometry.

Figure 18.

Generalisation of the Meshnet prediction for 3-hole geometry.

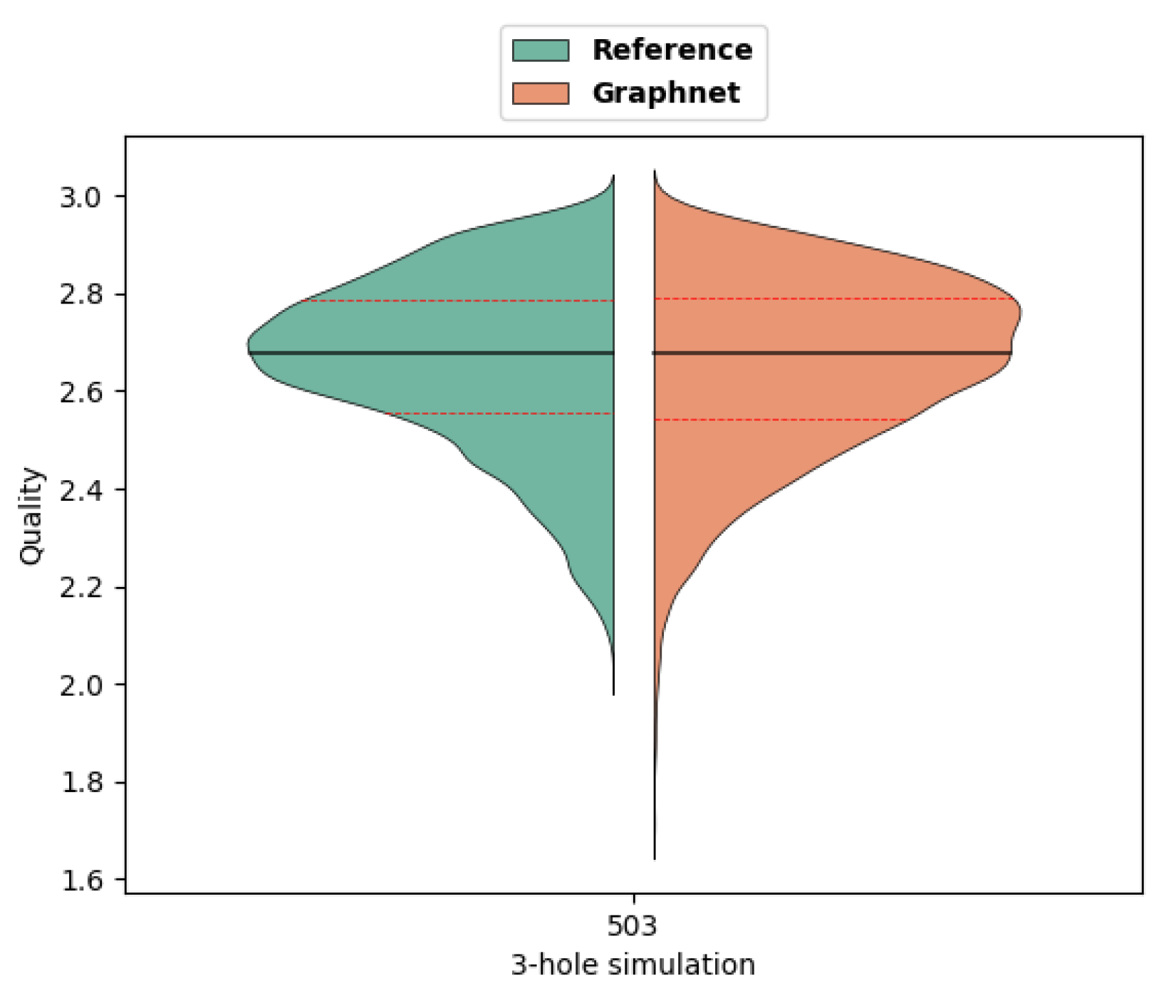

Figure 19.

"Glassmaier" parameter distribution (black line: median - red dotted lines: quartiles) for Meshnet and Graphnet predictions.

Figure 19.

"Glassmaier" parameter distribution (black line: median - red dotted lines: quartiles) for Meshnet and Graphnet predictions.

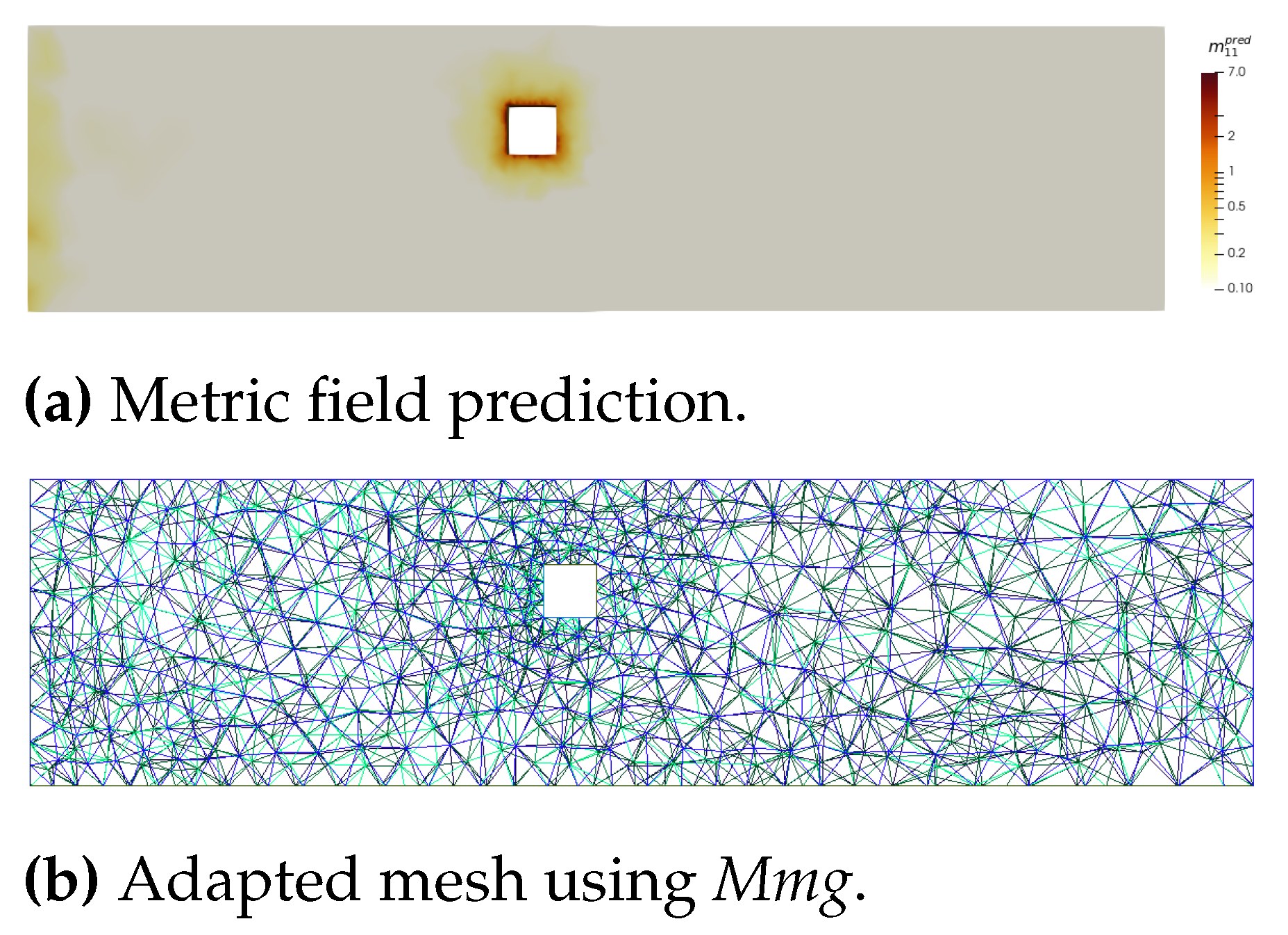

Figure 20.

Generalisation of the Graphnet prediction for square geometry.

Figure 20.

Generalisation of the Graphnet prediction for square geometry.

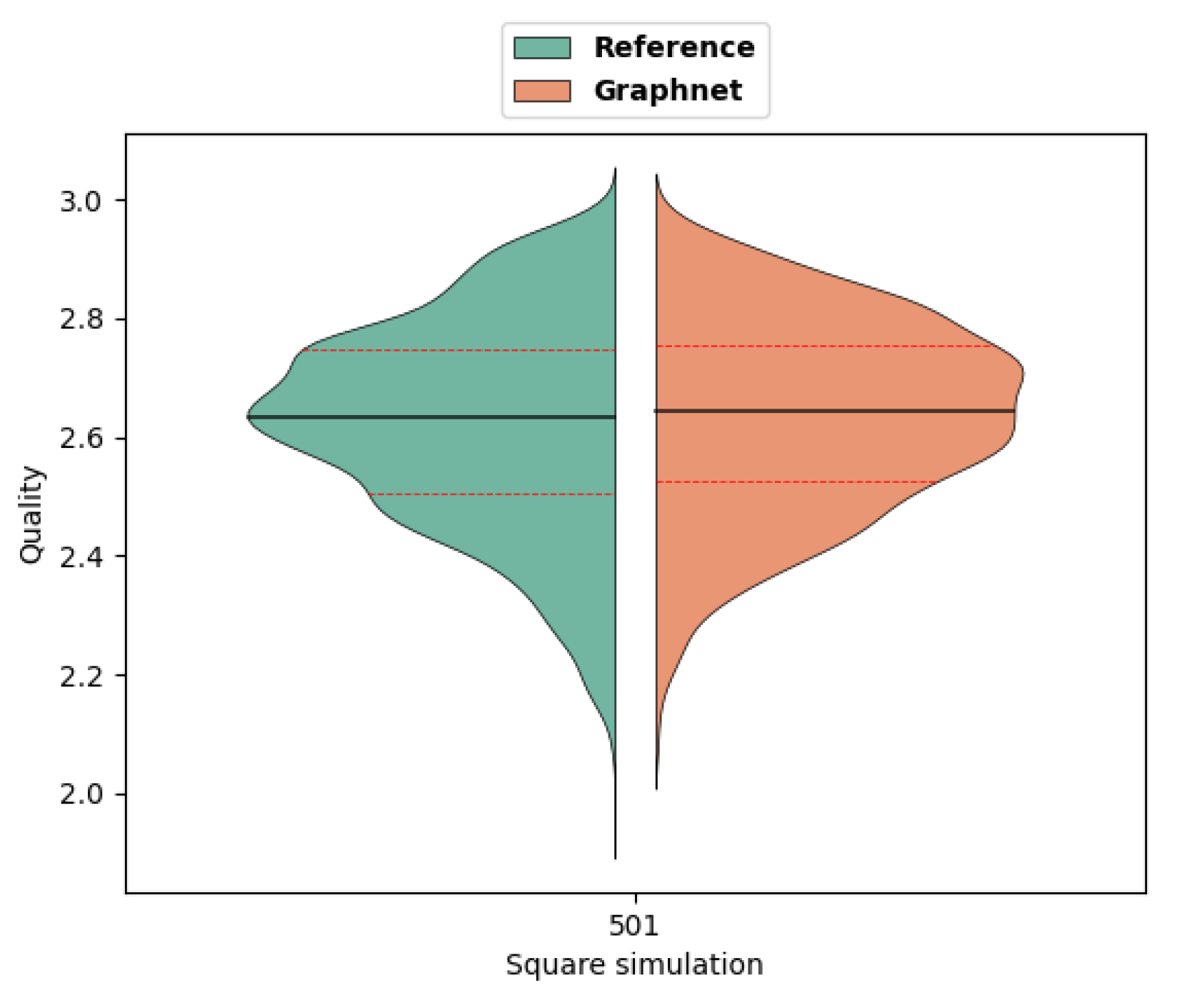

Figure 21.

"Glassmaier" parameter distribution (black line: median - red dotted lines: quartiles) for Meshnet and Graphnet predictions.

Figure 21.

"Glassmaier" parameter distribution (black line: median - red dotted lines: quartiles) for Meshnet and Graphnet predictions.

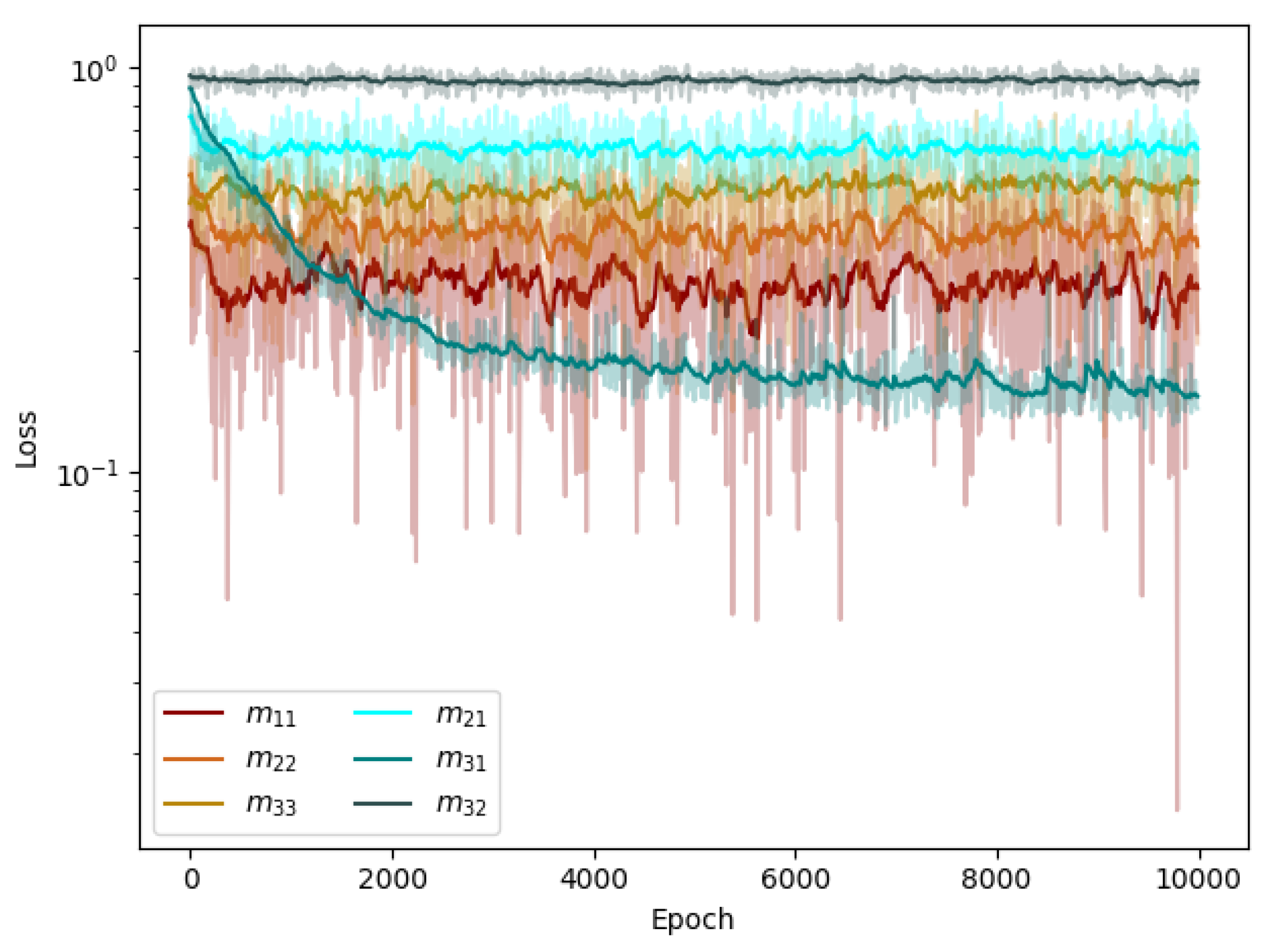

Figure 22.

Training loss for the six components of the metric tensor. Each component was learnt independently by one specific network.

Figure 22.

Training loss for the six components of the metric tensor. Each component was learnt independently by one specific network.

Figure 23.

Graphnet prediction for 3-hole geometry.

Figure 23.

Graphnet prediction for 3-hole geometry.

Figure 24.

"Glassmaier" parameter distribution (black line: median - red dotted lines: quartiles) for Reference and Graphnet predictions.

Figure 24.

"Glassmaier" parameter distribution (black line: median - red dotted lines: quartiles) for Reference and Graphnet predictions.

Table 1.

Special values of the Glassmeier parameter.

Table 1.

Special values of the Glassmeier parameter.

|

Meaning |

|

The four points are colinear. |

|

The four points all lie in a plane . |

|

A regular tetrahedron is formed . |

Table 2.

Training duration for two different batch sizes.

Table 2.

Training duration for two different batch sizes.

| Component |

|

|

|

|

|

|

| Duration (h) |

|

|

|

|

|

|

| Batch size |

2 |

2 |

2 |

4 |

4 |

4 |

Table 3.

CPU time comparison for computation and prediction of each phase.

Table 3.

CPU time comparison for computation and prediction of each phase.

| Model |

Meshnet |

Graphnet |

| Computation |

Engineer time |

50.06s |

| Prediction |

0.68s |

0.79s |

| Speed-up |

ND |

63.4 |