1. Introduction

The continuous development of the economy and culture has led to the steady improvement of various large public traffic areas (PTAs). In such scenarios, where pedestrians gather, unconventional and sudden incidents can trigger large-scale public traffic events. The risks inherent in crowd gatherings arise from various factors, including the venue type, spatial structure, organizational management, type of attendees, crowd movement characteristics, and safety measures [1].

Even in the 21st century, large-scale stampedes caused by pedestrian falls have frequently occurred in PTAs worldwide. Among the most serious, a stampede at an Indian temple in September 2008 resulted in 179 deaths and over 100 injuries. In November 2010, a stampede in Phnom Penh, Cambodia, caused 347 deaths and at least 410 injuries. In September 2015, a stampede during the Hajj pilgrimage in Mecca resulted in at least 1,399 deaths and over 2,000 injuries. In April 2021, a stampede on Mount Meron, Israel, resulted in 45 deaths and over 150 injuries. In October 2022, a stampede at a football stadium in Indonesia caused 132 deaths and more than 580 injuries. In the same month, a stampede in the Itaewon area of Seoul, South Korea, during Halloween celebrations, resulted in at least 146 deaths and 150 injuries [2].

Falls are highly damaging, causing both physical and psychological injuries [3]. Accident surveys have shown that, regardless of the initial triggering factors, pedestrian falls are the most critical factor causing and aggravating crowd accidents [2]. However, most past fall detection studies focused primarily on monitoring the health of the elderly and did not consider public traffic [3]. Detecting pedestrian falls in public places is therefore important: it not only allows authorities to be notified promptly so that assistance can be provided, but also helps prevent subsequent crowd instability and stampedes.

The most promising tools for fall detection include wearable sensors, such as gyroscopes and accelerometers, and visual sensors, such as RGB, infrared, and depth cameras and camera arrays (for three-dimensional reconstruction). Wearable sensors capture data such as the speed and angles of the wearer; upon detecting abnormal values, they identify the occurrence of a fall and issue alerts to notify users and supervisors. However, this approach faces practical challenges, including battery monitoring, frequent recharging, and the inconvenience of wearing the device, which hinder its application and widespread adoption [4].

Fall detection based on visual sensors, computer vision (CV), and deep learning has become a prominent research direction, and detection methods based on smartphones, the Internet of Things (IoT), and other systems have also been introduced. The increasing number of surveillance cameras in public places such as airports, train stations, subway stations, and roads provides data support and application prospects for fall detection using visual sensors.

The major contributions of this paper are:

To elaborate on existing and current techniques proposed for fall detection.

To review related benchmark datasets for fall detection research.

To provide a critical analysis that considers application requirements in PTAs, and to describe significant future directions, including open issues and potential solutions.

The remainder of this paper is organized as follows:

Section 2 describes the retrieval and analysis methodology of fall-related literature,

Section 3 reviews the literature on five prominent research methods,

Section 4 is a detailed comparison of related benchmark datasets,

Section 5 discusses the critical challenges and future trends in PTAs. Finally,

Section 6 concludes the paper.

2. Methodology

To obtain a better understanding of the state of the art (SOTA) methods for fall detection [

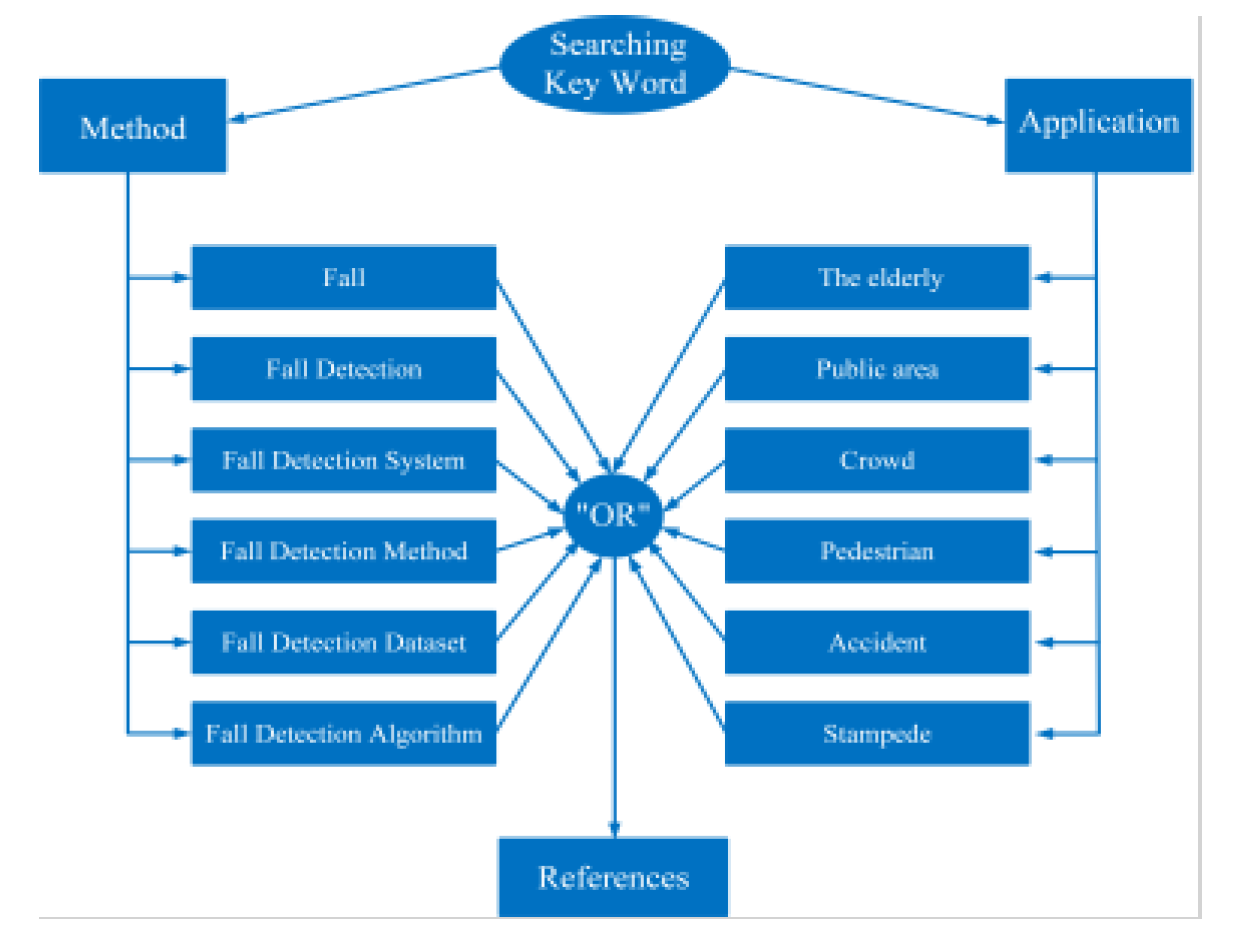

5], this study categorized the key terms related to fall detection research into two groups: detection methods and objectives. As

Figure 1 shows, the detection methods group includes terms such as “fall detection,” “fall detection system,” “fall detection method,” “fall detection dataset,” “fall detection algorithm,” and “fall.” The objectives group includes terms like “the elderly,” “public area,” “crowd,” “accident,” and “stampede.” By combining the keywords from both groups with logical “OR” operators, a literature search was conducted in various databases. After retrieving data from the databases, Google Scholar and Web of Science were selected as the primary sources of reference for this study.
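The keyword combination described above can be expressed as a Boolean query string. The sketch below is only an illustration: the generic quoting and parenthesis syntax is an assumption, and each database (Web of Science, Google Scholar) has its own query language that may require adaptation. The helper names `or_group` and `build_query` are hypothetical.

```python
# Keyword groups as listed in the text; the query syntax itself is a generic assumption.
DETECTION_TERMS = [
    "fall detection", "fall detection system", "fall detection method",
    "fall detection dataset", "fall detection algorithm", "fall",
]
OBJECTIVE_TERMS = ["the elderly", "public area", "crowd", "accident", "stampede"]


def or_group(terms):
    """Quote each term and join the group with OR inside parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(groups, between="OR"):
    """Join per-group clauses; the text describes OR, while AND would narrow the search."""
    return f" {between} ".join(or_group(g) for g in groups)


query = build_query([DETECTION_TERMS, OBJECTIVE_TERMS])
print(query)
```

Passing `between="AND"` would instead require at least one term from each group, which is a common way to focus such a search.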

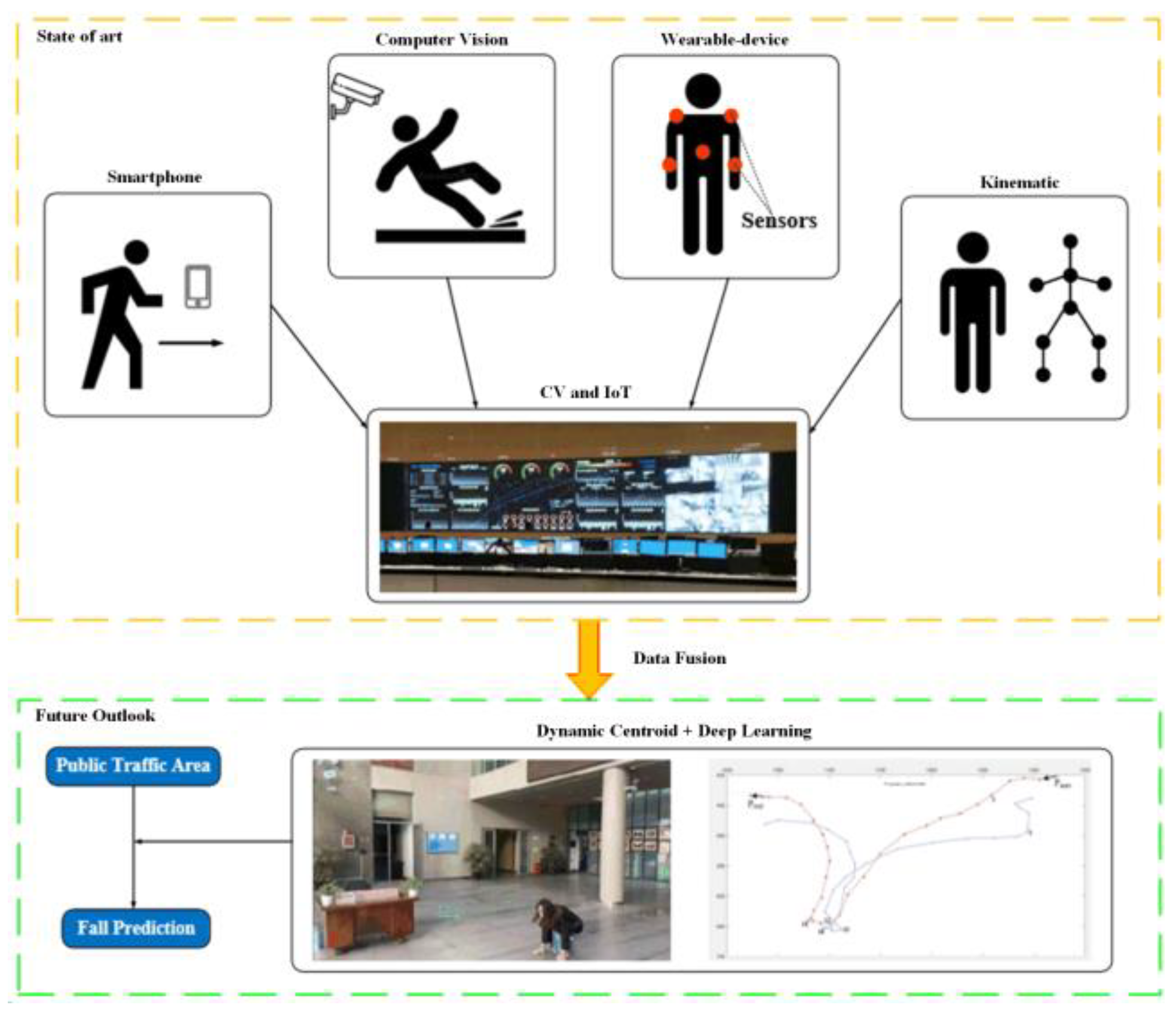

From 2013 to 2023, over 13,000 articles were found for “fall detection.” The research methods reported in these articles were classified into different categories: Computer Vision (CV), Machine Learning (ML), Internet of Things (IoT), Smartphone (SP), Kinematic (K), Sound Analysis (SA), Cloud-based (C), Sensor (S), Biomedical Signal (BS) and Wearable device-based (WD) methods. Among these, we selected five research methodologies with the most promising applicability in PTAs for thorough analysis: CV, IoT, SP, K, and WD.

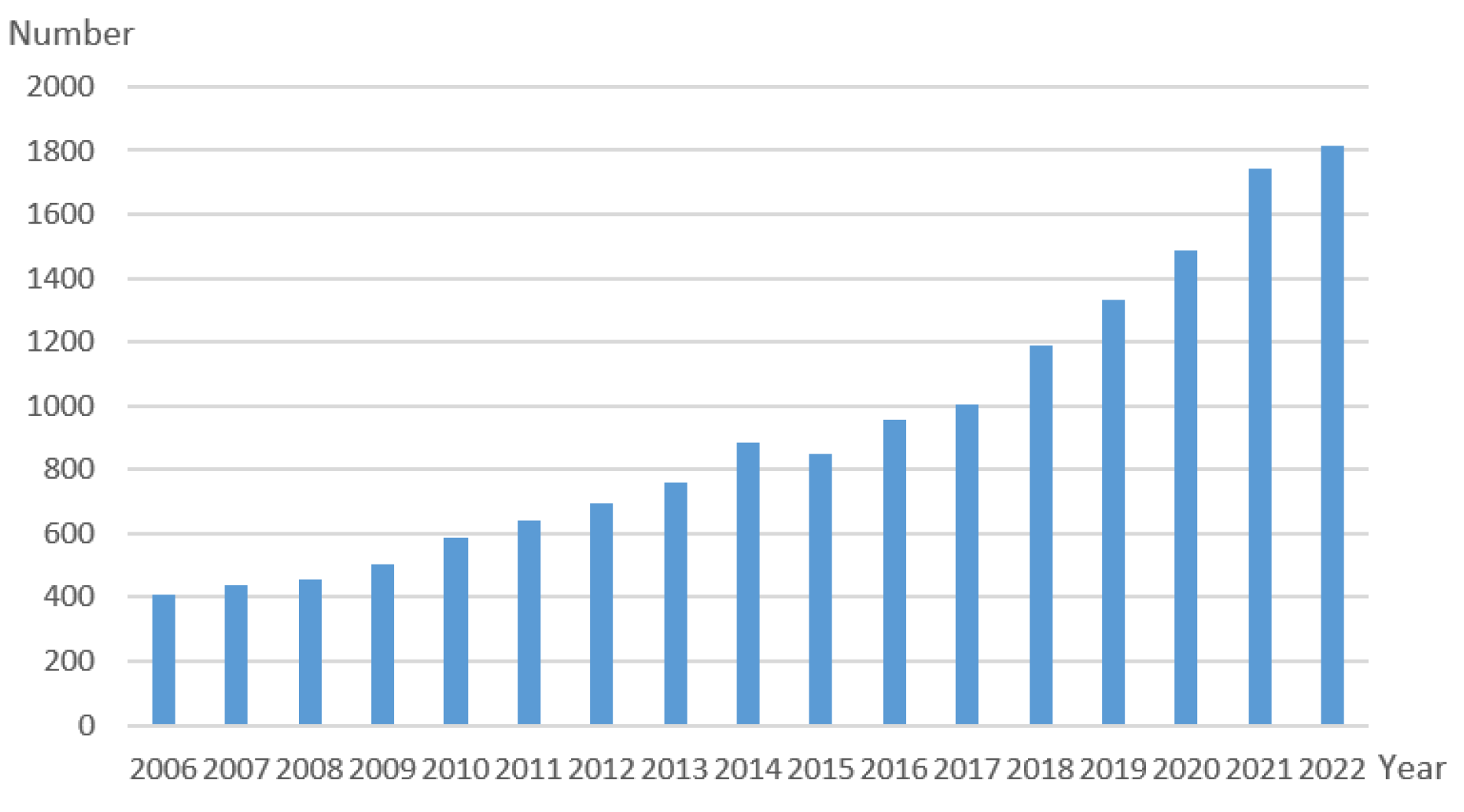

Figure 2 shows the number of research papers on fall detection on the Web of Science from 2006 to the present. Subsequently, bibliometric and content analyses were conducted to analyze the 31 selected articles and determine the prospects for research on fall detection.

Because fall detection has been studied extensively from various perspectives over the past decade and has generated significant research interest, we surveyed the retrieved articles to analyze the SOTA methods for fall detection.

In the early stages, fall detection research focused on providing more efficient medical assistance to the elderly. However, such methods do not fully meet the application requirements of fall detection, so practical application needs must also be considered. For instance, in elderly health management, it is necessary to assess the severity of a detected fall, whether it threatens the safety of the elderly individual, and whether healthcare professionals must be contacted immediately for assistance.

3. SOTA Methods for Fall Detection

Sensors and CV were found to be the two most prominent research methods; fall detection has also been studied based on kinematics, smartphones, and IoT. In the early research stages, the primary objective of fall detection was to provide more efficient medical care to the elderly and to assist caregivers or clinical experts in monitoring their daily activities, so that prompt and effective assistance could be provided when a fall occurred. As research deepened, fall detection also began to be considered in the field of public traffic, for example, detecting falls of pedestrians in public spaces or of workers in hazardous environments. The information from the 31 selected references is summarised in Table 1, and each reference is presented in this section.

3.1. CV-Based Methods

With the increasing richness of video image data and progress in CV technology, fall detection has been achieved using CV. Compared with other methods, such as sensor-based fall detection, CV-based methods eliminate the burden of carrying sensors. Moreover, as CV applications have widened, some studies have applied CV to PTAs and manufacturing in addition to health monitoring of the elderly.

Sokolova et al. [6] presented a fall detection method that includes a human detection algorithm for infrared videos and a fuzzy-based model for fall detection and inactivity monitoring. Fuzzy logic is used to increase flexibility, avoid limitations in the representation of human figures, and smooth the limits of the evaluation parameters. They determined the occurrence of a fall by studying the velocity of the deformation experienced by the segmented region of interest over an established time interval. However, the validation phase was limited to a few experiments using self-acquired videos, which casts doubt on the reliability of the experimental results.
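To illustrate how a fuzzy rule can combine deformation velocity with inactivity, the sketch below uses linear "ramp" memberships and the minimum t-norm as fuzzy AND. It is not Sokolova et al.'s model: the height/width aspect ratio of the region of interest as the deformation measure, the membership breakpoints, and all function names are hypothetical choices for illustration.

```python
def ramp_up(x, low, high):
    """Fuzzy membership rising linearly from 0 at `low` to 1 at `high`."""
    if x <= low:
        return 0.0
    if x >= high:
        return 1.0
    return (x - low) / (high - low)


def deformation_velocity(aspect_ratios, dt):
    """Mean absolute change per second of the ROI height/width ratio over the interval."""
    diffs = [abs(b - a) for a, b in zip(aspect_ratios, aspect_ratios[1:])]
    return sum(diffs) / (dt * len(diffs))


def fall_degree(aspect_ratios, dt, inactivity_s):
    """Degree (0..1) to which 'fast deformation AND subsequent inactivity' holds."""
    fast = ramp_up(deformation_velocity(aspect_ratios, dt), low=0.5, high=2.0)
    still = ramp_up(inactivity_s, low=2.0, high=10.0)
    return min(fast, still)  # minimum t-norm as the fuzzy AND


falling = [2.5, 2.0, 1.2, 0.6, 0.4]  # ratios sampled every 0.1 s
print(fall_degree(falling, dt=0.1, inactivity_s=12.0))  # 1.0
```

Because the output is a degree rather than a yes/no decision, a threshold (or further rules) can be applied downstream, which is one source of the flexibility the authors attribute to fuzzy logic.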

Yang et al. [7] proposed a fall detection method based on depth image analysis. In this method, after a pedestrian is identified from skin colour pixels, the pedestrian's tilt angle is obtained by searching for the central line of the human silhouette and used as the main feature for fall detection. The vertical velocity of the pedestrian is used as an assistive feature. By considering vertical velocity, this method avoids misidentifying interfering actions, such as squatting and bending, as falls.
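A minimal sketch of the two-feature decision described above, assuming the central line's top and bottom points have already been extracted and converted to metric coordinates with the vertical axis pointing up. The thresholds (60° and 1 m/s) and function names are hypothetical, not values from Yang et al.

```python
import math


def tilt_angle_deg(top, bottom):
    """Angle in degrees between the silhouette's central line and the vertical axis.

    Points are (horizontal, vertical) positions in metres with the vertical axis pointing up.
    """
    dx = top[0] - bottom[0]
    dy = top[1] - bottom[1]
    return math.degrees(math.atan2(abs(dx), abs(dy)))


def is_fall(prev_line, cur_line, dt, angle_thresh=60.0, speed_thresh=1.0):
    """Main feature: tilt angle; assistive feature: downward speed of the line's midpoint."""
    angle = tilt_angle_deg(*cur_line)
    mid_prev = (prev_line[0][1] + prev_line[1][1]) / 2
    mid_cur = (cur_line[0][1] + cur_line[1][1]) / 2
    downward_speed = (mid_prev - mid_cur) / dt  # metres per second, positive when descending
    return angle > angle_thresh and downward_speed > speed_thresh


standing = ((0.0, 1.7), (0.0, 0.0))  # (top, bottom) of the central line
lying = ((1.6, 0.3), (0.0, 0.1))
squatting = ((0.1, 1.0), (0.0, 0.0))
print(is_fall(standing, lying, dt=0.5))      # rapid transition to a strongly tilted posture
print(is_fall(standing, lying, dt=3.0))      # same end posture reached slowly
print(is_fall(standing, squatting, dt=0.5))  # low tilt angle
```

The slow transition and the squat are both rejected: the first by the velocity feature, the second by the angle feature.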

Wu et al. [8] proposed an approach for processing images captured by a depth camera to predict the probable inclination of a pedestrian's imminent fall. This method first reconstructs a three-dimensional (3D) human object from a two-dimensional image and depth information extracted by a Kinect camera. The information obtained from the depth camera is then converted to spatial positions in the geodetic coordinate system, and principal component analysis (PCA) is used to calculate the 3D inclination for fall detection. The authors stated that the model's robustness was validated by simulating scenarios of partially overlapping and occluded pedestrians using multiple volunteers. However, based on the experimental scene figures provided in the paper, the occluded pedestrians did not exhibit falling behaviour.
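The PCA step can be illustrated as follows: the body's principal axis is the dominant eigenvector of the covariance matrix of its 3D points, and the inclination is the angle between that axis and the vertical. This is a pure-Python sketch under stated assumptions (points already in world coordinates with z pointing up, power iteration instead of a full eigendecomposition, synthetic point clouds); it does not reproduce the Kinect reconstruction of Wu et al.

```python
import math


def principal_axis(points, iters=100):
    """Dominant eigenvector of the 3x3 covariance matrix, found by power iteration."""
    n = len(points)
    mean = [sum(p[i] for p in points) / n for i in range(3)]
    centred = [[p[i] - mean[i] for i in range(3)] for p in points]
    cov = [[sum(c[i] * c[j] for c in centred) / n for j in range(3)] for i in range(3)]
    v = [1.0, 1.0, 1.0]  # start vector; assumed not orthogonal to the principal axis
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(3)) for i in range(3)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v


def inclination_deg(points, up=(0.0, 0.0, 1.0)):
    """Angle between the body's principal axis and the vertical (0 = upright, 90 = horizontal)."""
    axis = principal_axis(points)
    cos_a = abs(sum(a * u for a, u in zip(axis, up)))
    return math.degrees(math.acos(min(1.0, cos_a)))


# Synthetic body-shaped point clouds (metres, z up): elongated along z, then along x.
upright = [(0.1 * (-1) ** k, 0.05 * (k % 3 - 1), 0.1 * k) for k in range(18)]
lying = [(0.1 * k, 0.05 * (k % 3 - 1), 0.1 + 0.05 * (-1) ** k) for k in range(18)]
print(round(inclination_deg(upright), 1), round(inclination_deg(lying), 1))
```

In practice a library eigensolver would replace the power iteration; it is used here only to keep the example dependency-free.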

Feng et al. [9] proposed an attention-guided long short-term memory (LSTM) model for fall detection in complex scenarios. In their method, YOLOv3 is used for pedestrian detection, and the detected pedestrians are tracked using DeepSORT. Features of each trajectory are then taken from the output of the last convolutional layer of VGG16 and fed to the attention-guided LSTM model for fall event prediction. They validated the proposed model on a self-constructed complex-scene fall event dataset. However, the figures presented in the paper suggest that the challenges posed by complex scenes predominantly affect pedestrian detection and tracking, with limited consideration given to pedestrian occlusion.

Chang et al. [10] proposed a hybrid convolutional neural network (CNN) and LSTM-based deep learning model for abnormal behaviour detection, together with a surveillance system that can instantly detect abnormal behaviour. In their method, YOLOv3 is used to detect pedestrians, and the hybrid DeepSORT algorithm tracks pedestrians to obtain tracking trajectories from the sequence frames. A CNN then extracts the action characteristics of each tracked trajectory, and an LSTM is used to build an anomalous behaviour identification model to predict abnormal behaviour, such as falling. When a detected abnormal behaviour exceeds a certain threshold, the monitoring system triggers a warning mechanism and sends a message to the monitor.

Geng et al. [11] proposed a novel attention-guided fall detection algorithm. In their method, YOLOv3, block-based feature extraction, and attention modules are used to detect pedestrians. The DeepSORT algorithm tracks each pedestrian to obtain a trajectory containing a continuous event. A sliding window is used to store the feature maps, and a support vector machine (SVM) classifier is used to detect fall events. This method was tested on the CityPersons, Montreal Fall, and self-built datasets, achieving a pedestrian detection rate of 87.05% and an accuracy of 98.55%. Although the proposed method tracks occluded pedestrians well, its detection accuracy remains suboptimal under heavy occlusion.

Zheng et al. [12] proposed a lightweight fall detection algorithm that can be migrated well to an embedded platform. First, mosaic data augmentation is used to improve the pedestrian detector. Next, GhostNet replaces the CSPDarknet53 backbone of the YOLOv4 network. The path aggregation network is then converted into a bidirectional feature pyramid network (BiFPN), and depthwise separable convolutions replace the standard convolutions in the spatial pyramid pooling module, the BiFPN, and the YOLO head. Second, the TensorRT acceleration engine is used to optimise the AlphaPose pose-estimation model, thereby accelerating inference of the pose keypoints. This method was tested on the UR and Le2i datasets, achieving accuracies of 97.28% and 96.86%, respectively.
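The parameter savings that motivate replacing standard convolutions with depthwise separable ones can be checked with simple arithmetic. The layer sizes below (3×3 kernel, 256 input and 512 output channels) are hypothetical and not taken from Zheng et al.'s network; bias terms are ignored.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a k x k standard convolution (bias terms ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Depthwise k x k filter per input channel followed by a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


std = standard_conv_params(3, 256, 512)
sep = depthwise_separable_params(3, 256, 512)
print(std, sep, round(sep / std, 3))  # 1179648 133376 0.113
```

The ratio equals 1/c_out + 1/k², so for 3×3 kernels the separable layer needs roughly one ninth of the weights, which is why this substitution suits embedded deployment.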

Zheng et al. [13] proposed a pre-posed attention capture mechanism that improves fall detection accuracy by combining a human pose-based model with a key point-based model. In their method, dynamic key points are automatically labelled and placed before the original attention mechanism of the deep model. This method was validated on two commonly used fall detection datasets, and the results showed that fall detection accuracy was effectively improved. However, the method relies on a single way of capturing attention information, which makes it difficult to fully compensate for the information loss caused by pedestrian occlusion.

3.2. IoT-Based Methods

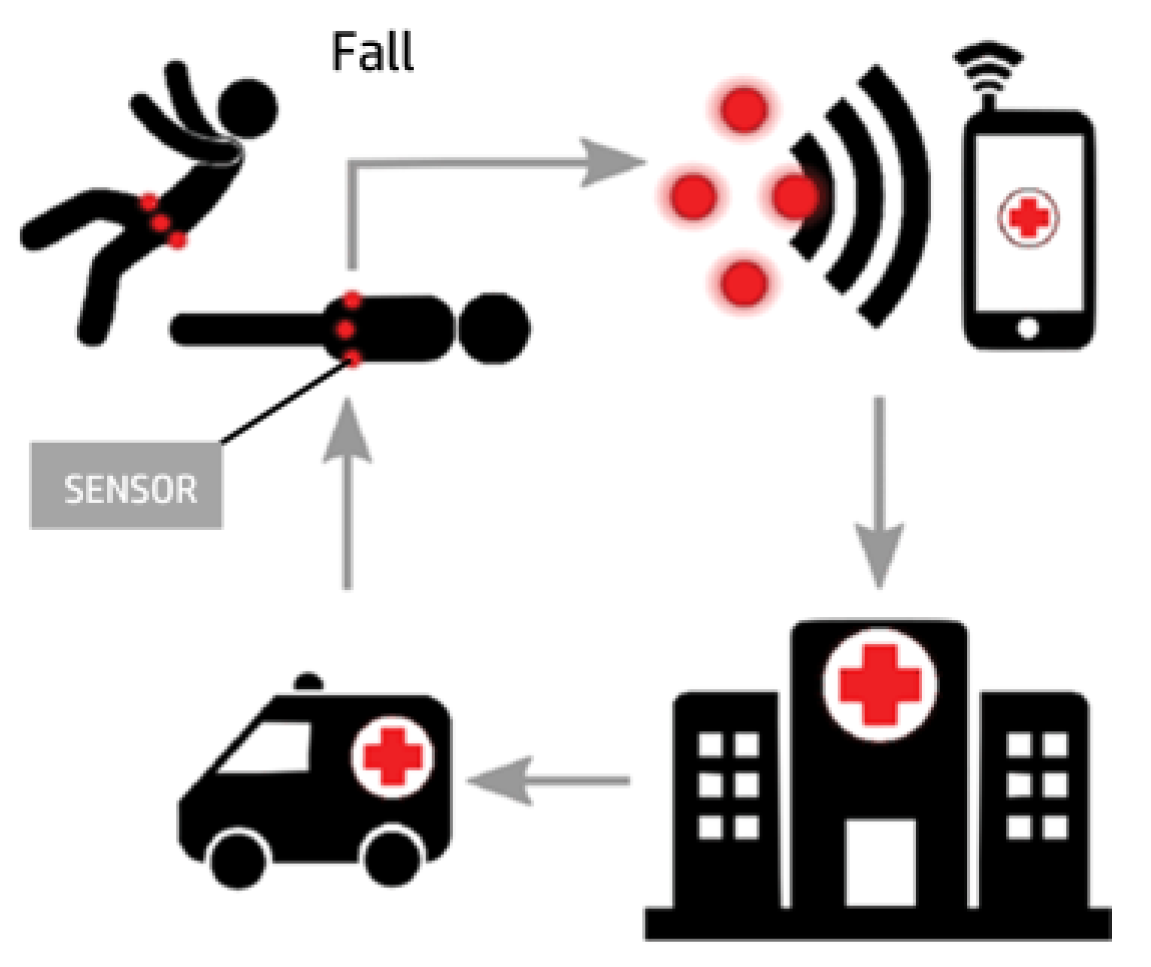

Fall detection systems based on the IoT are typically combined with sensors or CV. Most IoT-based fall detection methods target the health care of the elderly: using an embedded fall detection module, an IoT system can provide more efficient assistance to the elderly. The basic structure of an IoT-based fall detection system is shown in Figure 3.

Gia et al. [14] proposed an IoT-based wearable system that could mitigate the serious consequences of falls. They minimised the energy consumption of the wearable sensor node in the IoT-based fall detection system and presented the design of an energy-efficient sensor node based on a customised nRF module. The sensor node was inexpensive, lightweight, and adaptable, making the system more efficient and feasible; however, limitations in terms of lifespan, interruptions, and system complexity remain.

Dziak et al. [15] proposed an IoT-based information system for elderly health problems that used a triaxial accelerometer and magnetometer, pedestrian dead reckoning, thresholding, and a decision tree algorithm. This system can locate a monitored person and detect various behaviours, including falls. The detected behaviours are classified as normal, suspicious, or dangerous. However, the system provides only a coarse classification based on predefined thresholds and cannot accurately identify specific behaviours.
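The coarse three-level output can be illustrated with a small threshold-based decision rule. Everything here is an assumption for illustration: the thresholds, the `lying_after` flag (which might come from accelerometer or magnetometer orientation), and the function names are hypothetical and do not reproduce Dziak et al.'s decision tree.

```python
import math

# Hypothetical thresholds for illustration only; they are not the values used by Dziak et al.
IMPACT_G = 2.5          # peak acceleration magnitude suggesting an impact
IMMOBILE_LIMIT_S = 30   # seconds of lying still that escalate an event to "dangerous"


def magnitude(sample):
    """Euclidean norm of one triaxial accelerometer sample (in g)."""
    return math.sqrt(sum(a * a for a in sample))


def classify_window(samples_g, lying_after, immobile_s):
    """Coarse three-level decision in the spirit of a small threshold-based decision tree."""
    if max(magnitude(s) for s in samples_g) < IMPACT_G:
        return "normal"
    if lying_after and immobile_s > IMMOBILE_LIMIT_S:
        return "dangerous"
    return "suspicious"


walk = [(0.0, 0.0, 1.0), (0.1, 0.2, 1.1)]
impact = [(0.0, 0.0, 1.0), (1.5, 2.0, 1.5)]
print(classify_window(walk, False, 0))    # normal
print(classify_window(impact, True, 45))  # dangerous
print(classify_window(impact, False, 0))  # suspicious
```

The sketch also shows the limitation noted above: the labels only say how alarming an event is, not which specific behaviour occurred.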

Hemmatpour et al. [3] proposed a combination of real-time and future fall prediction and prevention algorithms using the computational capabilities of IoT nodes. This framework provides real-time emergency notifications when a fall is detected and a mid-term analysis of the monitoring data collected over a period of time. The results and data can also be used by clinical experts for long-term analysis, helping them estimate the risk of future falls. Analysing data over these three time scales reduces the false detection rate and improves the efficiency of assisting the elderly. Although the paper addresses fall prevention, it lacks empirical evidence quantifying the effectiveness of the prevention measures.

Gutiérrez-Madroñal et al. [16] defined two types of falls based on an analysis of the major fall parameters. Based on the acceleration characteristics of the two fall types, fall test events were generated using an IoT test event generator tool. The obtained data were then used to define patterns in the EsperTech Event Processing Language (EPL) to detect falls. However, the proposed IoT system was validated only with generated test events, without configuration and testing in real-world scenarios. Furthermore, the classification of fall types appears somewhat narrow.
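Complex event processing rules of this kind typically express "an impact follows a free-fall reading within a time window". In Esper EPL such a rule would usually be written as a pattern with the followed-by operator (`->`) and a `timer:within` guard; the Python below only emulates that logic on a list of events. The thresholds, window length, and names are hypothetical and do not correspond to the two fall types defined by the authors.

```python
from dataclasses import dataclass


@dataclass
class AccelEvent:
    t_ms: int           # timestamp in milliseconds
    magnitude_g: float  # acceleration magnitude in g


def detect_falls(events, free_fall_g=0.4, impact_g=2.5, within_ms=500):
    """Report (free_fall_t, impact_t) pairs where an impact follows a free-fall reading in time."""
    alerts = []
    pending = None  # timestamp of the latest unmatched free-fall reading
    for ev in events:
        if ev.magnitude_g < free_fall_g:
            pending = ev.t_ms
        elif ev.magnitude_g > impact_g and pending is not None:
            if ev.t_ms - pending <= within_ms:
                alerts.append((pending, ev.t_ms))
            pending = None  # each free-fall candidate is consumed by the next impact
    return alerts


stream = [AccelEvent(0, 1.0), AccelEvent(100, 0.2), AccelEvent(300, 3.1),
          AccelEvent(1000, 1.0), AccelEvent(2000, 0.3), AccelEvent(3000, 2.8)]
print(detect_falls(stream))  # [(100, 300)]
```

The second free-fall/impact pair is ignored because the impact arrives 1000 ms later, outside the assumed 500 ms window.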

To enhance data processing and prediction in the IoT-based health paradigm, Vimal et al. [17] proposed an artificial intelligence-based deep CNN to further analyse the causes of falls. The foundational IoT framework was constructed using a Hadoop distributed file system (HDFS) module. The results of simulated experiments on benchmark datasets showed that the improved IoT system achieved higher accuracy in detecting and classifying falls. However, the experimental results presented in the paper provide insufficient evidence of improved fall prediction.

Othmen et al. [18] proposed a novel energy-aware IoT-based architecture for fall detection and Message Queuing Telemetry Transport (MQTT)-based gatewayless monitoring. Based on this architecture, a hybrid double-prediction technique based on supervised dictionary learning was proposed to reinforce detection efficiency and increase the reliability of wearable devices. To validate the technique, an offline controlled dataset was collected for training, and real fall data were used for online testing. The experimental results showed that this technique outperformed most methods that use only an accelerometer for fall detection.

3.3. Smartphone-Based Methods

Smartphones have been widely used over the past decade, and most people carry them during daily activities. Most smartphones provide various data through embedded sensors. Therefore, smartphone-based fall detection has attracted considerable research attention.

Vermeulen et al. [19] designed a series of experiments to determine how smartphone type and placement affect the sensitivity and specificity of smartphone-based fall detection. Eight volunteers participated in the simulated fall experiments, each carrying two mobile phones in different positions. Ten types of true falls, five types of falls with recovery, and eleven daily activities were simulated. The results showed that the type and location of the mobile phone affected fall detection accuracy. In addition, the sensitivity of smartphone sensors introduces noise into the acquired acceleration data, which may reduce the accuracy of fall detection.
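Sensitivity and specificity, the two metrics used in this study, follow directly from the confusion counts of detected versus true falls. The labels below are hypothetical trial outcomes, not data from Vermeulen et al.; how falls with recovery are labelled is a protocol decision not addressed here.

```python
def sensitivity_specificity(y_true, y_pred):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP). Labels: 1 = fall, 0 = ADL."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical trials: 4 simulated falls followed by 6 daily activities.
truth = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
sens, spec = sensitivity_specificity(truth, pred)
print(round(sens, 3), round(spec, 3))  # 0.75 0.833
```

Computing both metrics separately for each phone type and placement is what allows such comparisons.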

Considering that using a smartphone as the sole sensor in a fall detection system may entail several limitations, Casilari et al. [20] proposed a smartphone-based fall detection system incorporating a set of small sensing motes. The experimental results showed that the position of the smartphone did not affect the efficiency of the system; therefore, the smartphone could be used solely to process signals or alerts from the system. Thus, a user can pay less attention to the position of the smartphone, which can even be placed at an external point close to the user. For data collection, however, the activities of daily living used as the control group were selected according to user characteristics rather than scenario features.

To improve the safety of smartphone users and prevent them from falling, Liu et al. [21] proposed a ground-change detection system called InfraSee, based on mobile phone infrared sensors. To reduce energy consumption, the infrared sensor can be turned off in specific situations. In addition, if a danger is detected ahead, the system decides whether to issue an alert according to the user's reaction: if the user is already aware of the danger, no alert is issued. However, the system requires not only a smartphone but also an additional infrared sensor, imposing an extra burden on pedestrians. Furthermore, it produces numerous false alarms when deployed in crowded areas.

Hakim et al. [22] proposed a threshold-based fall detection algorithm to address the problem that fall detection based only on acceleration is prone to false alarms. In this study, the built-in inertial measurement unit (IMU) sensors of a smartphone were used to detect human falls, and an SVM was used to classify activities of daily living (ADL). Eight volunteers carrying smartphones on their bodies participated in the experiment, performing four different types of falls. The experimental results showed high accuracy. However, in real life, a smartphone is not always carried in the same position on the body.
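The idea of combining IMU channels to suppress acceleration-only false alarms can be sketched as a two-condition threshold. The thresholds and the example windows are hypothetical, and the SVM stage that Hakim et al. used for ADL classification is not shown.

```python
import math

# Hypothetical thresholds; real values would be tuned on labelled data.
ACC_THRESH_G = 2.5
GYRO_THRESH_DPS = 200.0


def norm3(v):
    """Euclidean norm of a 3-axis sample."""
    return math.sqrt(sum(x * x for x in v))


def acc_only_alarm(acc_g):
    """Single-sensor rule: any acceleration peak above the threshold raises an alarm."""
    return max(norm3(a) for a in acc_g) > ACC_THRESH_G


def imu_alarm(acc_g, gyro_dps):
    """Require both an acceleration peak and a large angular-rate peak in the same window."""
    return acc_only_alarm(acc_g) and max(norm3(w) for w in gyro_dps) > GYRO_THRESH_DPS


jump_acc, jump_gyro = [(0, 0, 1), (0, 0, 3.0)], [(10, 20, 5), (30, 10, 40)]
fall_acc, fall_gyro = [(0, 0, 1), (1.0, 2.0, 2.0)], [(20, 10, 5), (250, 150, 30)]
print(acc_only_alarm(jump_acc), imu_alarm(jump_acc, jump_gyro))  # True False
print(imu_alarm(fall_acc, fall_gyro))                             # True
```

A jump produces a large acceleration peak but little body rotation, so the combined rule rejects it while still accepting the fall.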

Impaired postural stability is an important predictor of falls in elderly individuals. Hsieh et al. [23] examined whether a smartphone-embedded accelerometer can measure postural stability. In the experiments, 30 elderly people participated in a balance test, and data were collected with a handheld smartphone and a force plate. The results showed a moderate-to-high significant correlation between the force plate and smartphone measurements, indicating that the smartphone is a valid tool for measuring postural stability. The paper confirms that smartphones can measure postural stability, but it lacks a thorough analysis of the relationship between postural stability and fall detection.
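Agreement between two devices is often quantified with a correlation coefficient. The sketch below computes Pearson's r for paired measurements; the sway values are hypothetical, and the specific statistic used by Hsieh et al. is not asserted here. On Python 3.10+, `statistics.correlation` computes the same quantity.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two paired measurement series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical paired sway measures for five participants (arbitrary units).
force_plate = [1.2, 2.3, 1.8, 3.1, 2.6]
smartphone = [0.9, 2.0, 1.9, 2.8, 2.2]
print(round(pearson_r(force_plate, smartphone), 2))
```

A value close to 1 indicates that the smartphone ranks participants' stability similarly to the force plate, which is the sense in which the smartphone is called a valid measurement tool.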

Greene et al. [24] noted that clinical assessment of falls is expensive and requires non-portable equipment and specialist expertise. To reduce the need for clinical assessment, they proposed a smartphone application covering the assessment, management, and prevention of falls in the elderly. An analysis of 594 smartphone assessment samples identified a strong association among self-reported fall history, app-produced fall risks, and balance impairment scores. However, data from real-world scenarios inevitably contain gaps and noise, which can introduce substantial bias into fall assessment outcomes.

3.4. Kinematic-Based Methods

Kinematic characteristics are widely used for fall detection. Falls have been detected by collecting and analysing kinematic data such as the impact force and changes in the centre of mass (CoM). Such research has mainly aimed to provide medical assistance for the elderly.

Hu et al. [25] proposed a novel fall detection model based on a statistical process control chart. The fall indicator in the proposed model was defined as a linear combination of kinematic measures, whose weights were determined by trial and error. The results showed that the linear combination of kinematic measures performed better in fall detection than any single kinematic measure. Whether weights determined by trial and error remain effective in dynamic scenarios warrants further investigation.
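A control-chart detector of this kind charts a weighted sum of kinematic measures and flags values above an upper control limit estimated from normal activity. The sketch assumes a Shewhart-style limit (mean plus three standard deviations) and hypothetical weights and data; it does not reproduce Hu et al.'s measures or weights.

```python
import statistics

# Hypothetical weights for three kinematic measures (the study tuned its weights by trial and error).
WEIGHTS = [0.5, 0.3, 0.2]


def fall_indicator(measures, weights=WEIGHTS):
    """Linear combination of kinematic measures used as the charted statistic."""
    return sum(w * m for w, m in zip(weights, measures))


def upper_control_limit(baseline, k=3.0):
    """Shewhart-style limit: mean + k standard deviations of indicator values from normal activity."""
    return statistics.mean(baseline) + k * statistics.stdev(baseline)


baseline = [1.0, 1.2, 0.9, 1.1, 1.0, 0.8, 1.0, 1.0]  # indicator values during normal activity
ucl = upper_control_limit(baseline)
print(round(ucl, 3))                          # 1.359
print(fall_indicator([4.0, 3.0, 2.0]) > ucl)  # True: out of control, flagged as a fall
print(fall_indicator([1.0, 1.0, 1.0]) > ucl)  # False
```

Changing `WEIGHTS` changes which combinations of measures push the indicator out of control, which is why the choice of weights matters in dynamic scenarios.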

Van der Zijden et al. [26] focused on impact mechanics in falls. Instead of using force plates to measure the fall impact force directly, they proposed a generic model that estimates hip-impact forces from kinematic measures to assess the severity of sideways falls. Twelve experienced judokas performed different martial arts fall techniques on a force plate while kinematic data were collected. From these data, four input variables were determined: maximum upper body deceleration, body mass, shoulder angle at the instant of “maximum impact,” and maximum hip deceleration. Because the experimental data were collected only from experienced judokas rather than from fall-prone populations such as the elderly, the generalizability of the proposed model requires further improvement. Future studies should validate its effectiveness across diverse populations and in more complex scenarios.

Yamagata et al. [27] conducted an uncontrolled manifold (UCM) analysis to test the effects of fall history on kinematic synergy. Elderly volunteers were divided into two groups, one of which had a fall history within the previous 12 months. The volunteers walked at different speeds along a pathway, and their kinematic data were collected and analysed using the UCM approach. The results showed that fall history increased kinematic synergy. However, the method does not account for the influence of the upper body on kinematic synergy. Furthermore, it requires high-precision information on the structure and kinematic characteristics of the lower body, and its efficacy in real-world scenarios requires further experimental validation.
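The core of a UCM analysis is splitting trial-to-trial variance of elemental variables (e.g. joint angles) into a component that leaves the task variable unchanged and a component orthogonal to it. The sketch below handles the simplest case, a scalar task variable that depends linearly on the elemental variables through a constant Jacobian row; the trial data are hypothetical, and Yamagata et al.'s segment model and CoM Jacobian are not reproduced.

```python
import math


def ucm_analysis(trials, jacobian):
    """Linear UCM: split trial-to-trial variance of elemental variables into components
    that leave a scalar task variable unchanged (UCM) or change it (ORT).

    trials: list of n-dimensional elemental-variable vectors (e.g. joint angles).
    jacobian: length-n row vector d(task)/d(elements), assumed constant (linear task).
    """
    n, d, N = len(jacobian), 1, len(trials)
    mean = [sum(t[i] for t in trials) / N for i in range(n)]
    norm = math.sqrt(sum(j * j for j in jacobian))
    unit = [j / norm for j in jacobian]  # the ORT direction for a scalar task
    ss_ort = ss_ucm = 0.0
    for t in trials:
        dev = [t[i] - mean[i] for i in range(n)]
        proj = sum(a * b for a, b in zip(dev, unit))
        ss_ort += proj ** 2
        ss_ucm += sum(x * x for x in dev) - proj ** 2
    v_ucm = ss_ucm / ((n - d) * N)  # variance per degree of freedom within the UCM
    v_ort = ss_ort / (d * N)        # variance per degree of freedom orthogonal to it
    v_tot = (ss_ucm + ss_ort) / (n * N)
    return v_ucm, v_ort, (v_ucm - v_ort) / v_tot  # last value: synergy index ΔV


# Two elements whose sum is the task variable: errors compensate (synergy) or do not.
compensating = [(1.0, 2.0), (1.2, 1.8), (0.8, 2.2), (1.1, 1.9)]
uncoordinated = [(1.0, 1.0), (1.2, 1.2), (0.8, 0.8)]
print(ucm_analysis(compensating, [1.0, 1.0])[2], ucm_analysis(uncoordinated, [1.0, 1.0])[2])
```

A positive ΔV indicates that variability is channelled into the UCM, i.e. a synergy stabilising the task variable; with two elements and one task variable, ΔV ranges from -2 to 2 under this normalisation.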

Chen et al. [28] proposed an approach for recognising accidental falls based on symmetry. The skeletal information of the human body was extracted using OpenPose. Falls were detected using three key parameters: the descent speed of the hip joint centre, the angle between the body's centreline and the ground, and the width-to-height ratio of the body's bounding rectangle. They also considered the individual's ability to stand up after falling. However, the experimental section has several limitations. First, the model's effectiveness was not validated in complex occlusion scenarios. Second, all experimental data for fall detection were captured from a lateral perspective, resulting in inadequate sample diversity.
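The three parameters can be computed from 2D keypoints as sketched below. The keypoint dictionary keys, the pixel thresholds, and the rule that all three conditions must hold are hypothetical; real OpenPose output uses its own keypoint indexing and would need mapping to these names.

```python
import math


def hip_centre(kp):
    """Midpoint of the left and right hip keypoints."""
    (lx, ly), (rx, ry) = kp["left_hip"], kp["right_hip"]
    return ((lx + rx) / 2, (ly + ry) / 2)


def descent_speed(kp_prev, kp_cur, dt):
    """Downward speed of the hip centre in pixels/s (image y grows downward)."""
    return (hip_centre(kp_cur)[1] - hip_centre(kp_prev)[1]) / dt


def centreline_angle_deg(kp):
    """Angle between the neck-to-hip-centre line and the horizontal ground."""
    nx, ny = kp["neck"]
    hx, hy = hip_centre(kp)
    return math.degrees(math.atan2(abs(hy - ny), abs(hx - nx)))


def width_height_ratio(kp):
    """Width/height of the rectangle bounding all keypoints."""
    xs = [p[0] for p in kp.values()]
    ys = [p[1] for p in kp.values()]
    return (max(xs) - min(xs)) / (max(ys) - min(ys))


def looks_like_fall(kp_prev, kp_cur, dt, speed_px=200.0, angle_deg=45.0, ratio=1.0):
    """Fast hip descent, a centreline close to the ground, and a wide bounding box."""
    return (descent_speed(kp_prev, kp_cur, dt) > speed_px
            and centreline_angle_deg(kp_cur) < angle_deg
            and width_height_ratio(kp_cur) > ratio)


standing = {"neck": (100, 50), "left_hip": (90, 150), "right_hip": (110, 150),
            "left_ankle": (90, 300), "right_ankle": (110, 300)}
lying = {"neck": (50, 380), "left_hip": (150, 390), "right_hip": (150, 410),
         "left_ankle": (300, 390), "right_ankle": (300, 410)}
print(looks_like_fall(standing, lying, dt=0.5))     # True
print(looks_like_fall(standing, standing, dt=0.5))  # False
```

Because the ratio and angle depend on the camera view, thresholds like these would need recalibration for non-lateral perspectives, which relates to the sample-diversity limitation noted above.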

To investigate how variance in segmental configurations stabilises the CoM in relation to future falls, Yamagata et al. [29] collected kinematic data from 30 community-dwelling older adults during walking using a 3D motion capture system. Over the following year, 12 participants fell. Comparing the participants, they found that those who fell showed destabilisation of the CoM in the vertical direction. However, fall-related data were collected using questionnaires, which may raise concerns about the reliability and objectivity of the data.

3.5. Wearable Device-Based Methods

A wearable device can be placed in helmets, clothing, belts, shoes, and other areas on the human body, and it can provide various data for fall detection research. Most research on fall behaviour detection based on wearable devices aims to provide efficient and reliable medical assistance to the elderly. The main research content can be categorized into three issues: accuracy of fall behaviour detection, energy endurance of wearable devices, and acceptance of wearable devices by the elderly.

Hussain et al. [30] proposed a wearable sensor-based continuous fall monitoring system to detect falls and identify fall patterns. They also analysed the individual performance of an accelerometer and a gyroscope for fall detection, and further investigated the system's performance through a series of experiments using three machine learning algorithms. The study shows that fusing sensor data may enhance fall detection accuracy. However, this finding is only moderately persuasive because the experiments combined only two types of sensor data.

Boutellaa et al. [31] proposed a novel fall detection system using wearable sensors that exploits the covariance matrix of the raw signals as both a feature extractor and a fusion approach. The effectiveness of the covariance matrix in enhancing classification performance was demonstrated on two publicly available fall datasets. Although the proposed system shows improved experimental results compared with similar methods, it remains at the conceptual level.
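A covariance descriptor fuses channels by summarising how each pair of signals co-varies within a window. The sketch below returns the upper triangle of the sample covariance matrix as a flat feature vector; the channel layout is an assumption, and any further processing used by Boutellaa et al. (covariance matrices are often mapped, e.g. via a matrix logarithm, before classification) is not shown.

```python
def covariance_descriptor(window):
    """Upper triangle of the sample covariance matrix of a multichannel signal window.

    window: list of samples, each a list of channel values (e.g. ax, ay, az, gx, gy, gz).
    Returns c*(c+1)/2 features for c channels, fusing the channels into one vector.
    """
    n, c = len(window), len(window[0])
    mean = [sum(s[i] for s in window) / n for i in range(c)]
    cov = [[sum((s[i] - mean[i]) * (s[j] - mean[j]) for s in window) / (n - 1)
            for j in range(c)] for i in range(c)]
    return [cov[i][j] for i in range(c) for j in range(i, c)]


print(covariance_descriptor([[1, 2], [2, 4], [3, 6]]))  # [1.0, 2.0, 4.0]
```

For a six-channel accelerometer-plus-gyroscope window this yields 21 features regardless of the window length, which makes the descriptor convenient for fixed-size classifiers.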

Casilari et al. [32] pointed out that in many fall detection studies, volunteers are instructed to simulate falls in a controlled laboratory environment, and these data are used to validate the effectiveness of the detection method. To evaluate the adequacy of this practice, the statistical characteristics of the acceleration signals from two real-fall databases were compared with those of simulated falls from well-known related works. The comparison revealed noteworthy differences between real-life and simulated falls, indicating the need to change the strategies for evaluating wearable fall detectors.

Jachowicz et al. [33] presented a new testing method for fall arrest equipment designed to protect workers at heights. They used a test stand consisting of a Hybrid III 50th percentile ATD anthropomorphic manikin and a measuring set with three-axis acceleration transducers. The appropriate alarm threshold was determined by detecting falls and measuring fall acceleration in different situations. This testing methodology could complement other accelerometer threshold-based fall detection methods in the future, offering a practical approach to threshold determination.

In addition, some studies have used deep learning methods to conduct a more intensive analysis of the data obtained by wearable devices.

Yu et al. [34] aimed to enhance the explainability of an existing fall detection model. They proposed a novel variant of a deep learning model that integrates a hierarchical attention mechanism into an existing CNN. This model identifies the part of the sensor data that contributes most to the decision, and it was evaluated on two large publicly available datasets. Although cross-validation on the two datasets demonstrated the effectiveness and practicality of the proposed model, its performance may still be limited by the datasets. In real-world applications, updating model parameters by learning user movement patterns could yield better results.

Yu et al. [35] presented a tiny CNN (TinyCNN) with two-stage efficient feature extraction and evaluated it on two large-scale public fall datasets (KFall and SisFall) collected from wearable inertial sensors. The results opened up the black box of TinyCNN and explained most of its predictions. The study not only validated the proposed model conceptually but also developed a wearable prototype system, consisting of an ultralow-power microcontroller unit running TinyCNN, together with a companion mobile application. The experimental results demonstrate that the proposed wearable system has considerable potential for fall detection applications.

4. International Benchmark Datasets Used for Fall Detection

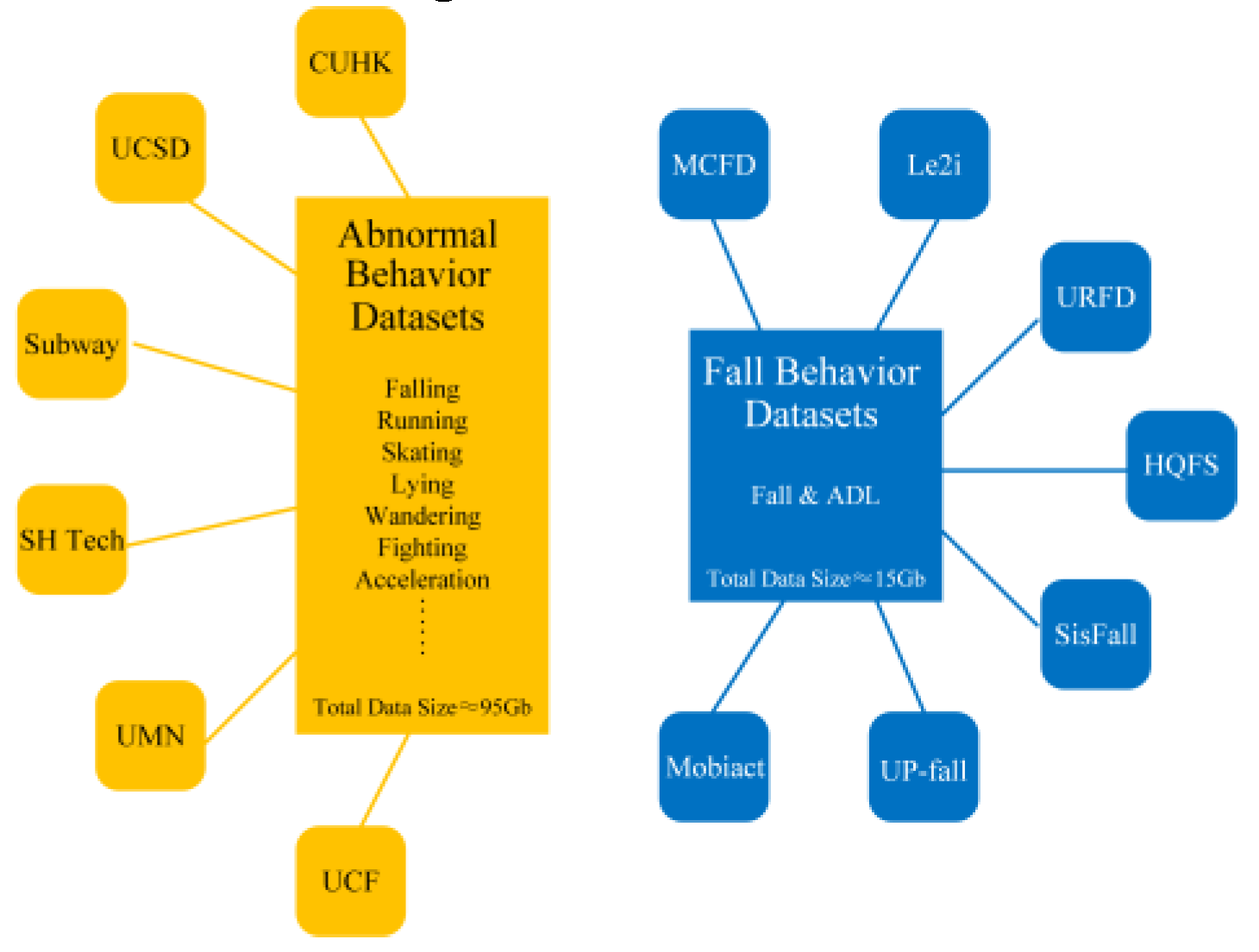

The widespread adoption of security surveillance video systems provides sufficient data for related studies. Numerous datasets containing pedestrian data have been used as experimental and validation data for research in this field. The international benchmark abnormal and fall behaviour datasets available to date are shown in Figure 4.

In the early stages of research, datasets that included fall behaviour, such as the Chinese University of Hong Kong (CUHK) dataset, UCSD Anomaly Detection Dataset (UCSD), and ShanghaiTech Campus dataset, were commonly used.

CUHK Avenue Dataset [36]: This dataset was collected on the campus of the Chinese University of Hong Kong. It includes 16 training and 21 testing video segments comprising 47 different abnormal events. The data images, shown in Figure 5, have a resolution of 640×360 pixels.

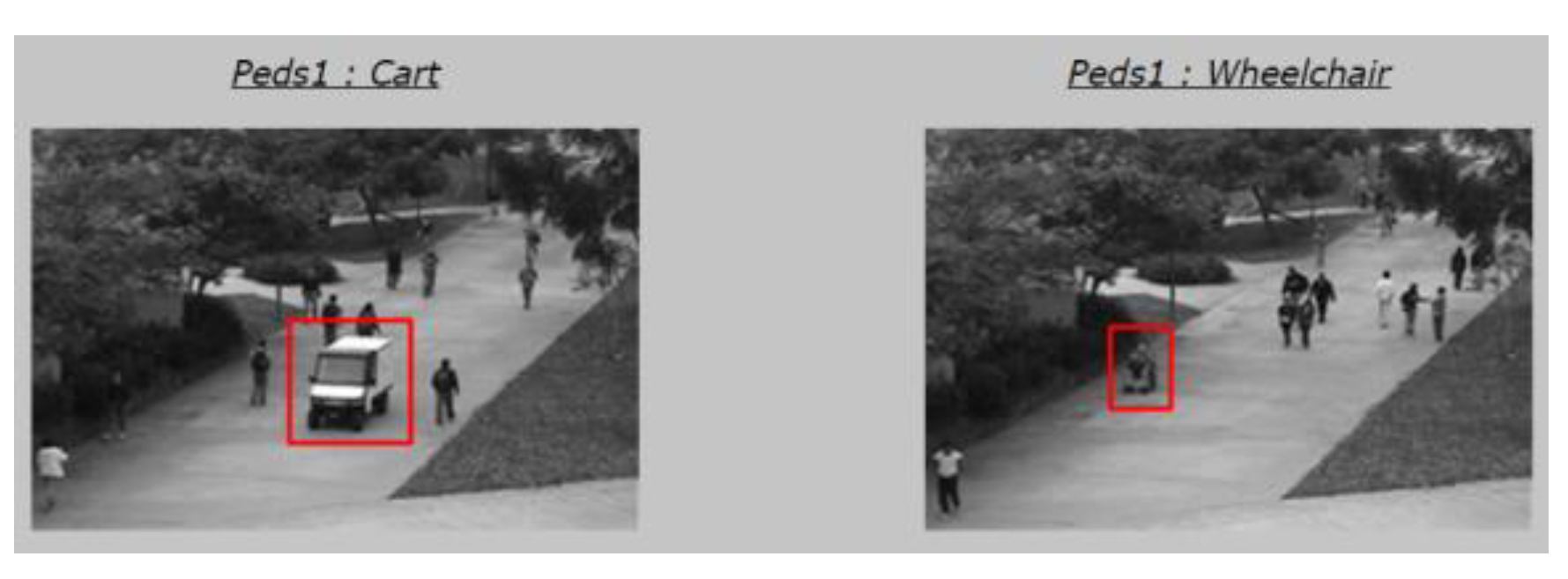

UCSD Anomaly Detection Dataset [37]: Data were collected using fixed cameras installed at elevated positions overlooking pedestrian walkways on campus. It defines two categories of anomalies: the presence of nonhuman objects (e.g., vehicles) on walkways and abnormal pedestrian movement patterns (e.g., running and skating). The dataset is divided into two subsets based on different scenes, with 50 training and 48 testing segments in total and 52 different abnormal behaviours. The data images shown in Figure 6 have resolutions of 240×360 and 158×238 pixels, respectively.

Subway Dataset [38]: This dataset was collected in two indoor scenarios: a subway entrance and a subway exit. It includes two long videos collected in these two scenes, in which the main abnormal behaviours observed are walking in the wrong direction, fare evasion, loitering, cleaning activities, crowding, and jumping. The data images shown in Figure 7 have a resolution of 512×384 pixels.

ShanghaiTech Dataset [39]: The data were collected from 13 scenes and include 330 normal training videos and 107 testing videos with abnormal events. There are 13 types of abnormal behaviour, such as cycling, skateboarding, and fighting. Example frames are shown in Figure 8; the resolution is 846×480 pixels.

University of Minnesota (UMN) Dataset [40]: Researchers at the University of Minnesota collected this dataset in three scenarios: a lawn, an indoor hall, and a square. It includes 11 short video segments totalling 7740 frames, with a resolution of 320×240 pixels. As Figure 9 shows, normal behaviour in the videos consists of crowds walking in an orderly manner, whereas abnormal behaviour mainly consists of the sudden dispersal of the crowd.

UCF Crime Dataset [41]: This dataset comprises videos from real-world surveillance cameras. It contains 1900 video segments with a total duration of approximately 128 hours. Example frames are shown in Figure 10; the resolution is 320×240 pixels. The dataset covers 13 types of real-world anomalies: abuse, arrest, arson, assault, traffic accidents, burglary, explosions, fights, robberies, shootings, theft, shoplifting, and vandalism.

The video data in the above datasets were collected by cameras in typical high-traffic real-world scenarios, such as campuses and subway stations. These datasets generally classify pedestrian behaviour as normal or abnormal, with falls being only one type of abnormal behaviour; consequently, when they are used for fall detection research, other abnormal behaviours often cause false alarms. As research progressed, datasets dedicated to fall detection were established that contain only falls and activities of daily living (ADLs). Examples include the Multiple Cameras Fall Dataset (MCFD), Le2i, the High-quality Fall Simulation Dataset (HFSD), and the University of Rzeszow Fall Detection (URFD) dataset.
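To illustrate how non-fall anomalies turn into false alarms, the following is a minimal sketch (not taken from any of the cited works) that scores a detector by treating only the label `fall` as positive, so that running or fighting clips flagged as falls count as false positives. The label strings and the function name `fall_detection_metrics` are hypothetical.

```python
from typing import Dict, Sequence


def fall_detection_metrics(
    y_true: Sequence[str], y_pred: Sequence[str], positive: str = "fall"
) -> Dict[str, float]:
    """Binary metrics: `positive` is the fall class, every other label is negative."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        is_fall = truth == positive
        flagged = pred == positive
        if flagged and is_fall:
            tp += 1
        elif flagged:
            fp += 1  # another behaviour (e.g. running) raised a fall alarm
        elif is_fall:
            fn += 1  # missed fall
        else:
            tn += 1

    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0

    return {
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "false_alarm_rate": ratio(fp, fp + tn),
    }


# Hypothetical labels: two non-fall anomalies are misclassified as falls.
truth = ["fall", "running", "normal", "fall", "fighting"]
pred = ["fall", "fall", "normal", "normal", "fall"]
print(fall_detection_metrics(truth, pred))  # precision 1/3, recall 1/2, false-alarm rate 2/3
```

Counting every non-fall label as negative is a deliberate choice here: it makes the cost of confusing falls with other anomalies visible in precision and in the false-alarm rate.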

MCFD [42]: This dataset contains videos of 24 scenarios, each recorded by 8 IP cameras. The first 22 scenarios contain falls together with confounding events, whereas the last 2 contain only confounding events without falls. Confounding events include walking, lying on the ground, lying on a sofa, sitting, squatting, and standing. Of the 192 videos, 184 contain fall behaviours. Example frames are shown in Figure 11; the videos were recorded at 120 fps with a resolution of 720×480 pixels.

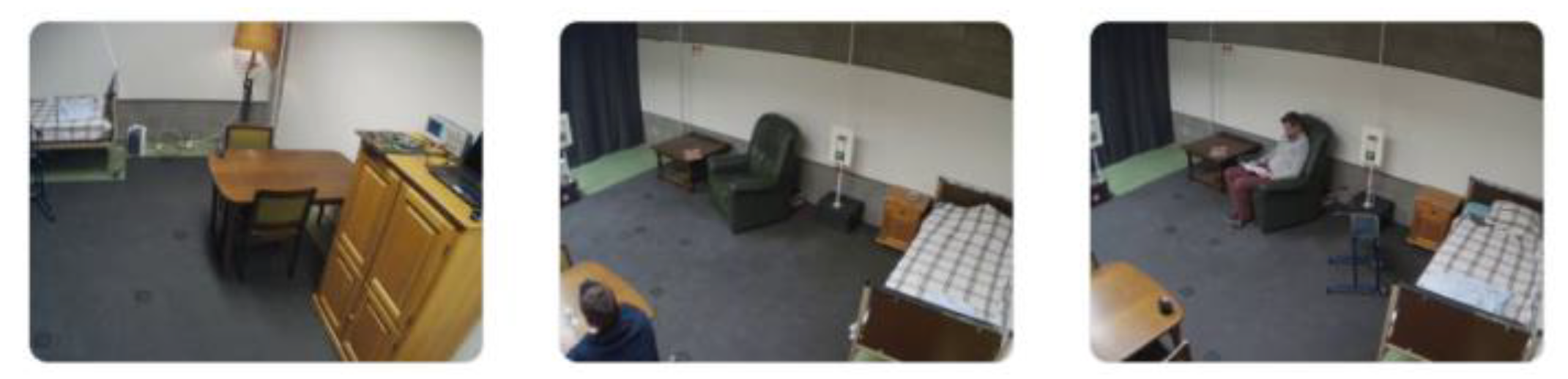

Le2i Fall Detection Dataset [43]: This dataset includes 191 videos of human motion recorded in four environments (‘home’, ‘coffee shop’, ‘office’, and ‘lecture hall’). The videos range from 30 s to 4 min in length, with a resolution of 320×240 pixels. Some examples are shown in Figure 12. The motions were performed by different volunteers, and lighting, clothing colour, clothing texture, shadows, reflections, and camera angles were varied during data collection.

URFD Dataset [44]: This dataset comprises depth and RGB images captured by two cameras in four scenes. The images in Figure 13 have a resolution of 640×240 pixels. The dataset contains 40 ADL sequences and 30 fall sequences, together with accelerometer data.

High-quality Fall Simulation Dataset [45]: This dataset was collected in a nursing-home setting using five network cameras, with an image resolution of 640×480 pixels. Ten volunteers performed 55 falls in different scenarios, varying fall speed, starting posture, and ending posture. In addition, data were collected for 17 ADL scenarios with an average length of 20 min 39 s. Examples are shown in Figure 14.

SisFall Dataset [46]: The volunteers for this dataset comprised young adults and healthy elderly individuals aged 62 years and above. The young volunteers performed 19 ADLs and 15 types of falls, whereas the elderly volunteers performed 15 ADLs. Data for each activity were recorded with custom devices equipped with two types of accelerometers and a gyroscope.

UP-Fall Detection Dataset [47]: This dataset involved 17 young volunteers aged 18–24 years, who performed 11 activities while wearing sensors in an environment also equipped with ambient and visual sensors. The activities comprised five types of falls and six daily activities (such as walking, standing, jumping, lying down, and picking up an object), with each activity repeated three times.

MobiAct Dataset [48]: This dataset was collected with the sensors of smartphones carried by the volunteers and is commonly used in smartphone-based pedestrian action recognition research. Fifty volunteers performed 9 types of ADLs, and 54 volunteers performed 4 types of falls.

Table 2 summarises and compares the benchmark datasets in terms of scenario, content, behaviour types, resolution, and data size.

However, in existing studies on fall detection, many researchers validate their proposed methods on self-built datasets. These datasets are curated under controlled conditions, with volunteers trained and supervised while data on falls and routine activities are acquired. Among the literature reviewed in this study, approximately 62.5% of studies rely on self-built datasets, 25% on publicly available datasets, and 12.5% on a combination of both. This preference may reflect concerns about the contextual fidelity, data precision, and reliability of publicly available datasets.

Much of the early research on fall detection was motivated by elderly care and health assistance, so data were collected in enclosed laboratory settings, with volunteers simulating falls onto cushioned surfaces, and these recordings became the publicly available datasets. Whether such datasets remain appropriate as research shifts towards domains such as public transport is therefore debatable. Furthermore, the video resolution in most datasets is low, lagging well behind the high-definition imagery that contemporary cameras can capture.

Furthermore, by comparing acceleration features, Casilari et al. [32] showed that data collected from volunteers simulating falls in laboratories differ from real pedestrian fall data. For instance, in real-world falls, the time interval between the end of the free-fall phase and the instant of maximum acceleration magnitude is shorter. This finding calls for a re-examination of how models are evaluated when they rely on synthetic datasets of scripted, programmed actions. X. Q. Yu et al. [35] indicated that the accuracy of fall detection systems can be improved by fusing data from multiple sensors, and that not only data from different sensors but also different types of data can be fused. However, current fall detection datasets cannot meet this requirement. Future datasets could therefore combine multiple acquisition methods (e.g., video, inertial sensors, and audio) within a single dataset collected in specific application scenarios.
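The interval compared by Casilari et al. can be extracted from a tri-axial accelerometer trace roughly as follows. This is a minimal sketch, assuming that the global magnitude peak marks the impact and that free fall is any sample below an illustrative 0.6 g threshold; neither assumption is taken from [32], and the function names are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

G = 9.81  # standard gravity in m/s^2

Sample = Tuple[float, float, float]  # (ax, ay, az) in m/s^2


def magnitude_g(sample: Sample) -> float:
    """Acceleration magnitude of one tri-axial sample, expressed in g."""
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az) / G


def freefall_to_peak_interval(
    samples: Sequence[Sample],
    fs_hz: float,
    freefall_threshold_g: float = 0.6,  # illustrative value, not taken from [32]
) -> Optional[float]:
    """Seconds from the last free-fall sample to the peak magnitude that follows it.

    Assumes the global magnitude peak is the impact. Returns None when the trace is
    empty or no sample before the peak drops below the free-fall threshold.
    """
    if not samples:
        return None
    mags = [magnitude_g(s) for s in samples]
    peak_idx = max(range(len(mags)), key=mags.__getitem__)
    # Search backwards from the peak for the end of the free-fall phase.
    for i in range(peak_idx - 1, -1, -1):
        if mags[i] < freefall_threshold_g:
            return (peak_idx - i) / fs_hz
    return None


# Synthetic trace at 100 Hz: standing, free fall, deceleration, impact, rest.
trace = (
    [(0.0, 0.0, G)] * 10
    + [(0.0, 0.0, 0.2 * G)] * 5
    + [(0.0, 0.0, 1.5 * G)] * 3
    + [(0.0, 0.0, 3.0 * G), (0.0, 0.0, G)]
)
print(freefall_to_peak_interval(trace, fs_hz=100.0))  # 4 samples at 100 Hz, about 0.04 s
```

Comparing the distribution of this interval between simulated and real falls is one way to check how well a laboratory dataset reflects real-world behaviour.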

5. Discussion on Limitations and Future Outlook

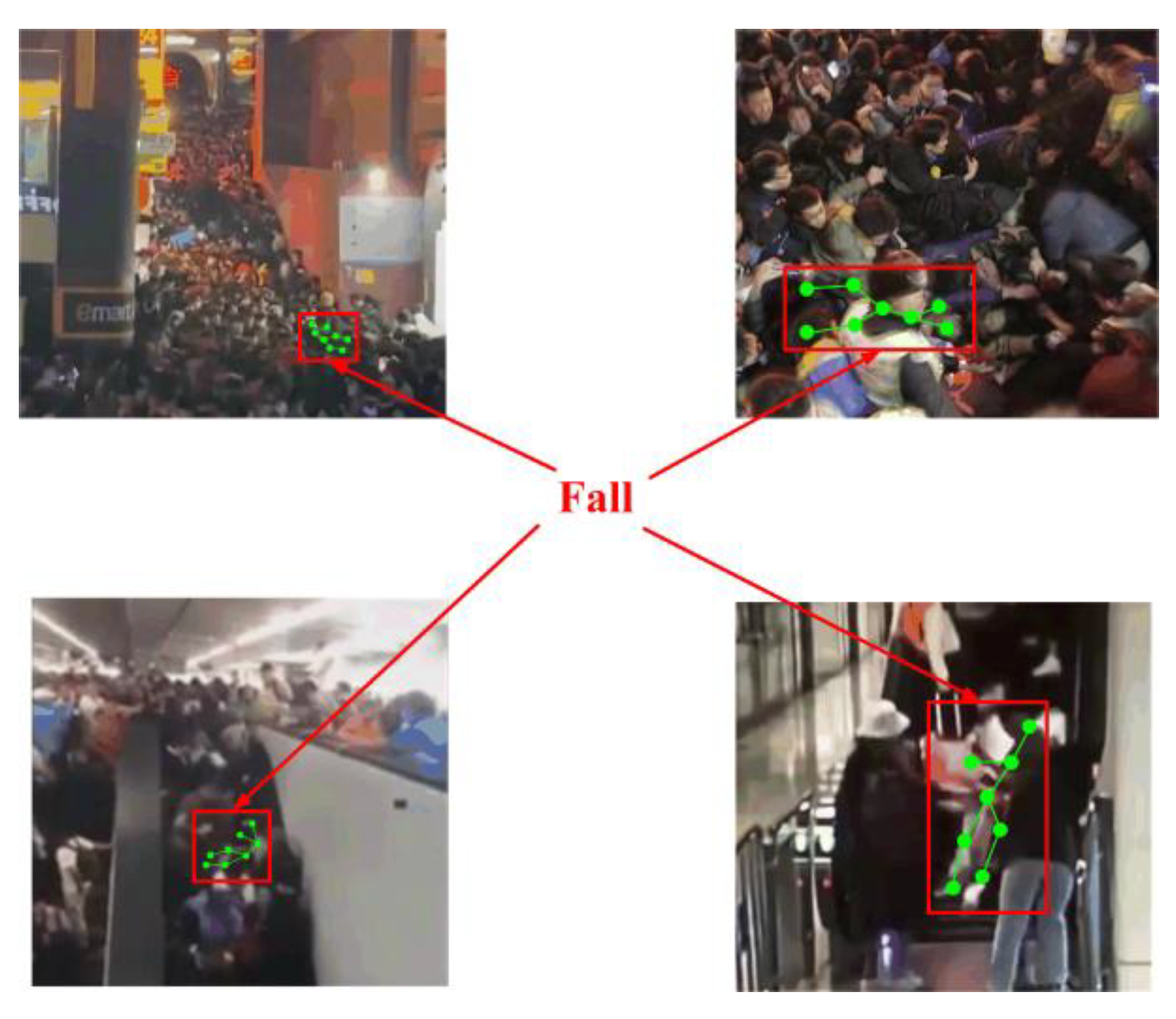

Since 2016, with the emergence of numerous fall detection methods based on CV technology and the spread of video surveillance systems, researchers have been considering the application of fall detection technology in PTAs. Falls in crowds are frequently the primary cause of stampede accidents in public spaces. Examples include the stampede on the Bund, Shanghai, in 2014, the stampede on a Mexican subway in 2019, and the crowd crush in Itaewon, Seoul, South Korea, in 2022. Accurate and efficient pedestrian fall detection is therefore needed to prevent or reduce stampede accidents in public places. Crowded public areas in particular are highly prone to pedestrian falls, as shown in Figure 15.

Implementing pedestrian fall detection in PTAs not only involves challenges similar to those in elderly medical assistance but also introduces new limitations and challenges.

Typical issues include fall detection accuracy; false positives and false negatives; recognition of falls from non-standard postures; localisation of fallen pedestrians; the context of the fall; dataset diversity and reliability; scene adaptability; real-time processing performance; hardware compatibility; sensor data reliability; the burden of wearable devices; energy consumption; user personalisation; user acceptance; and data privacy protection.

In the theoretical research stage, ensuring fall detection accuracy is paramount: accuracy must be high, and both false detections and missed detections must be minimised. In PTAs, complex site structures, large numbers of diverse moving pedestrians, occlusion, and the variety of possible fall patterns make accurate fall detection difficult. Most existing research focuses on falls that occur while pedestrians are walking or standing, whereas in real scenarios pedestrians exhibit complex motion states, and falls can occur while walking, sitting, squatting, turning, or running. After a fall is detected, its exact location must also be identified so that management personnel can be notified appropriately. However, notifying management of every detected fall could overburden staff and disrupt passenger flow. It is therefore necessary to analyse the context of each fall, including whether the fallen pedestrian has been injured, whether the fall has disrupted crowd stability, and whether management personnel need to be dispatched to the scene.
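For camera-based systems, one common way to turn a detection in the image into a floor position is a planar homography between the image and the floor. The sketch below assumes that a 3×3 homography has already been estimated during calibration (for example with OpenCV's `cv2.findHomography` from four or more floor reference points) and that the bottom-centre of the fallen person's bounding box approximates the ground-contact point; the matrix values and function names are hypothetical.

```python
from typing import Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]  # 3x3 homography, row-major


def image_to_floor(h: Matrix3, u: float, v: float) -> Tuple[float, float]:
    """Project pixel (u, v) onto the floor plane using homogeneous coordinates."""
    x = h[0][0] * u + h[0][1] * v + h[0][2]
    y = h[1][0] * u + h[1][1] * v + h[1][2]
    w = h[2][0] * u + h[2][1] * v + h[2][2]
    if abs(w) < 1e-12:
        raise ValueError("pixel maps to a point at infinity")
    return x / w, y / w


def fall_location(h: Matrix3, bbox: Tuple[float, float, float, float]) -> Tuple[float, float]:
    """Floor coordinates of a detected fall.

    bbox = (x_min, y_min, x_max, y_max) in pixels. The bottom-centre of the box is
    used as an approximate ground-contact point (an assumption, not a rule).
    """
    x_min, _, x_max, y_max = bbox
    return image_to_floor(h, (x_min + x_max) / 2.0, y_max)


# Hypothetical calibration: 1 pixel = 1 cm on the floor, camera looking straight down.
H_EXAMPLE = [[0.01, 0.0, 0.0], [0.0, 0.01, 0.0], [0.0, 0.0, 1.0]]
print(fall_location(H_EXAMPLE, (100, 200, 300, 400)))  # roughly (2.0, 4.0) metres
```

The resulting floor coordinates could then be matched to a site map so that the notification names a platform, stairway, or gate rather than a pixel position.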

In the experimental verification stage, the primary challenge is obtaining diverse, representative, and reliable datasets. The movement characteristics of pedestrians simulating falls in experimental environments differ from those of real falls, and there are significant differences across scenes and pedestrian characteristics. Relying on standard datasets that generalise poorly can lead to misleading performance assessments and reduced accuracy in real scenarios. Furthermore, existing research typically validates models on pre-collected data, whereas practical deployment in public transport requires pedestrian falls to be detected in real time. PTAs often contain numerous video surveillance devices, and coordinating multiple cameras while meeting the computational demands of processing large volumes of data poses significant challenges for researchers.

In the application configuration stage, hardware compatibility is an unavoidable challenge. When a complete pedestrian fall detection system is deployed, theoretical models are constrained by device performance. It is crucial to optimise the system architecture to satisfy constraints on memory, processing capability, and energy consumption while balancing detection accuracy against these hardware limitations. During operation, collecting long-term motion data for each user in order to individualise the parameters of the fall detection model may improve detection accuracy. However, wearable-sensor methods appear impractical in PTAs with large crowds, because it is impossible to provide every pedestrian with sensor equipment that must be worn daily; alternative methods of collecting motion features are needed. Additionally, collecting personal motion data risks invading privacy, so public acceptance must be considered.
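As a simple illustration of individualising model parameters, the sketch below adapts an impact threshold to one user's history of peak accelerations during normal, non-fall activity (mean plus k standard deviations). The population threshold of 2.5 g, k = 3, and the 30-sample minimum are hypothetical values chosen for illustration, not figures from the reviewed studies.

```python
import statistics
from typing import Sequence

POPULATION_THRESHOLD_G = 2.5  # hypothetical population-level impact threshold, in g


def personalised_threshold(
    daily_peaks_g: Sequence[float],
    k: float = 3.0,         # hypothetical number of standard deviations
    min_samples: int = 30,  # hypothetical minimum history before personalising
) -> float:
    """Impact threshold adapted to one user's history of non-fall peak accelerations."""
    if len(daily_peaks_g) < min_samples:
        return POPULATION_THRESHOLD_G  # not enough history yet
    mean = statistics.fmean(daily_peaks_g)
    sd = statistics.stdev(daily_peaks_g)
    # Never drop below the population threshold; only raise it for very active users.
    return max(POPULATION_THRESHOLD_G, mean + k * sd)


active_user = [2.0, 3.0] * 20  # 40 days of hypothetical daily peak values
print(personalised_threshold(active_user))
```

Raising, but never lowering, the threshold is one possible design choice: it reduces false alarms for users whose everyday movements are vigorous without making the detector less sensitive for everyone else.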

In the future, as Figure 16 shows, existing fall detection methods can be applied to PTAs and optimised for the complex characteristics of different scenarios.

Fusing data from multiple sensors has been shown to reflect pedestrian motion features better than a single sensor, so integrating different fall detection methods may overcome their individual limitations. For example, in PTAs with high crowd density, pedestrian occlusion makes it harder to extract motion features from video data and reduces data reliability. Researchers often borrow solutions from other computer vision fields (e.g., object tracking and object detection). In contrast, motion features derived from wearable sensors remain reliable under occlusion, which suggests combining computer vision and wearable-device methods to maintain data reliability when occlusions occur.
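One simple way to realise such a combination is visibility-weighted late fusion: each modality produces its own fall probability, and the vision score is down-weighted as the pedestrian becomes more occluded. This is a minimal sketch, assuming that visibility is measured as the fraction of skeleton keypoints detected and that the wearable channel has a fixed weight; both are illustrative assumptions, and the function name is hypothetical.

```python
from typing import Optional


def fused_fall_score(
    p_vision: Optional[float],
    visibility: float,
    p_sensor: Optional[float],
    sensor_weight: float = 1.0,  # hypothetical fixed trust in the wearable channel
) -> Optional[float]:
    """Weighted average of per-modality fall probabilities.

    visibility in [0, 1], e.g. the fraction of skeleton keypoints detected for the
    person; the vision score is down-weighted as occlusion increases.
    Returns None if neither modality produced a score.
    """
    visibility = min(max(visibility, 0.0), 1.0)
    weighted = []
    if p_vision is not None and visibility > 0.0:
        weighted.append((visibility, p_vision))
    if p_sensor is not None and sensor_weight > 0.0:
        weighted.append((sensor_weight, p_sensor))
    if not weighted:
        return None
    total = sum(w for w, _ in weighted)
    return sum(w * p for w, p in weighted) / total


print(fused_fall_score(0.9, 1.0, 0.5))  # fully visible: equal weights
print(fused_fall_score(0.9, 0.0, 0.3))  # fully occluded: sensor score only
```

Late fusion keeps each detector independent, so either channel can be missing (a pedestrian without a smartphone, or a person outside camera coverage) without retraining a joint model.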

Owing to the high pedestrian flow in PTAs, issuing wearable devices to all pedestrians is challenging both economically and in terms of policy. However, smart devices (such as smartphones, smartwatches, and smart bracelets) have become everyday necessities and could serve as sensor carriers, providing software and hardware support for data analysis and transmission. Furthermore, long-term gait tracking can be used to assess pedestrians' fall risk; focusing on high-risk pedestrians may save public resources and improve management efficiency.
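Stride-time variability is one gait measure that has been studied as a marker of fall risk. The sketch below computes its coefficient of variation from successive heel-strike timestamps of the same foot, which a smartphone or smartwatch step detector might supply (an assumption). The 5% cut-off in `is_high_risk` is a hypothetical placeholder, not a clinically validated threshold.

```python
import statistics
from typing import Sequence


def stride_time_cv(heel_strikes_s: Sequence[float]) -> float:
    """Coefficient of variation (%) of stride times.

    heel_strikes_s: successive heel-strike timestamps of the same foot, in seconds.
    """
    if len(heel_strikes_s) < 3:
        raise ValueError("need at least three heel strikes (two strides)")
    strides = [later - earlier for earlier, later in zip(heel_strikes_s, heel_strikes_s[1:])]
    return 100.0 * statistics.stdev(strides) / statistics.fmean(strides)


def is_high_risk(cv_percent: float, threshold_percent: float = 5.0) -> bool:
    """Flag a pedestrian for attention; the 5% cut-off is a hypothetical placeholder."""
    return cv_percent > threshold_percent


steady = [0.0, 1.0, 2.0, 3.0, 4.0]      # perfectly regular strides
irregular = [0.0, 1.0, 2.2, 3.0, 4.3]   # hypothetical uneven strides
for strikes in (steady, irregular):
    cv = stride_time_cv(strikes)
    print(f"CV = {cv:.1f}%, high risk: {is_high_risk(cv)}")
```

Tracking this value over weeks rather than a single walk is what would make it a long-term indicator in the sense described above.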

In addition, when a pedestrian falls in a PTA, understanding the potential disturbance and its impact on the stability of the surrounding crowd is crucial. It is essential not only to detect or predict a pedestrian's fall but also to continue assessing the situation over an extended period. This continuous assessment should consider factors such as the duration of the fall, the magnitude of the fall, and the individual's ability to recover, in order to determine the level of harm and whether intervention is necessary.
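A minimal sketch of such a continuous assessment is shown below, assuming a per-frame classifier that reports whether a tracked pedestrian is on the ground and an impact magnitude supplied by some upstream estimate. The `Action` levels, the 10 s and 4 g thresholds, and the field names are hypothetical, and the crowd-level disturbance discussed above is not modelled.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Sequence


class Action(Enum):
    NONE = "no action"
    MONITOR = "keep monitoring"
    DISPATCH = "dispatch staff"


@dataclass
class PostureSample:
    t_s: float       # timestamp in seconds (track assumed sorted by time)
    on_ground: bool  # output of an assumed per-frame posture classifier


def assess_fall(
    track: Sequence[PostureSample],
    impact_peak_g: float,
    max_ground_time_s: float = 10.0,  # hypothetical
    severe_impact_g: float = 4.0,     # hypothetical
) -> Action:
    """Escalation decision from fall magnitude, time on the ground, and recovery."""
    onset: Optional[float] = None
    recovered_at: Optional[float] = None
    for s in track:
        if onset is None:
            if s.on_ground:
                onset = s.t_s            # first frame on the ground
        elif not s.on_ground:
            recovered_at = s.t_s         # first frame back on their feet
            break
    if onset is None:
        return Action.NONE               # no fall observed in this track
    if impact_peak_g >= severe_impact_g:
        return Action.DISPATCH           # hard impact: possible injury
    end = recovered_at if recovered_at is not None else track[-1].t_s
    ground_time = end - onset
    if recovered_at is None:             # still down at the end of the track
        return Action.DISPATCH if ground_time >= max_ground_time_s else Action.MONITOR
    return Action.NONE if ground_time <= max_ground_time_s else Action.MONITOR


quick = [PostureSample(0.0, True), PostureSample(1.0, True), PostureSample(3.0, False)]
print(assess_fall(quick, impact_peak_g=2.0))
```

Re-running the function as new frames arrive gives a decision that can escalate from monitoring to dispatch if the pedestrian stays down.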

6. Conclusions

In this study, we reviewed fall detection research conducted over the past decade, classified the literature by research method, and introduced the datasets commonly used in fall detection research. We also examined the prospects for applying fall detection methods in public traffic areas. Fall detection has attracted increasing attention in recent years, and this study aims to provide researchers with new research ideas. The main conclusions are summarised as follows:

1) Pedestrian fall behaviour may significantly affect the stability of the surrounding crowd, so further investigation of pedestrian fall detection in PTAs is necessary.

2) Advances in CV technology have opened new possibilities for unobtrusive, long-range pedestrian fall detection in public traffic.

3) In crowded PTAs, pedestrians are frequently occluded from camera view, which may reduce the accuracy of fall detection and remains a challenge for future research.

4) Fall detection methods based on multi-dimensional data fusion can exploit the advantages of various technologies and overcome their individual shortcomings in detecting falls in pedestrian crowds; this may become a future research trend.

5) Current fall detection methods mostly detect falls after they have occurred, whereas human behaviour follows its own evolutionary pattern. Research methods that analyse the historical movement and behavioural characteristics of individual pedestrians from a dynamics perspective will therefore be important, as they may make it possible to predict pedestrian falls before they occur.
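As an illustration of what a dynamics-based indicator might look like, one established balance measure from biomechanics is the margin of stability based on the extrapolated centre of mass: XcoM = x + v/ω0 with ω0 = √(g/l), and the margin is the distance from XcoM to the edge of the base of support. The sketch below is one-dimensional and assumes that centre-of-mass position, velocity, base-of-support edge, and pendulum length l (often approximated by leg length) are available, for example from pose estimation; `share_unstable` is a hypothetical per-pedestrian summary, not a validated predictor.

```python
import math
from typing import Sequence, Tuple

G = 9.81  # gravitational acceleration, m/s^2


def margin_of_stability(com_pos_m: float, com_vel_mps: float,
                        bos_edge_m: float, leg_length_m: float) -> float:
    """1-D margin of stability (m) in the direction of motion.

    Positive: the extrapolated centre of mass lies inside the base of support.
    Negative: it lies beyond the edge, so a corrective step is needed to stay upright.
    """
    omega0 = math.sqrt(G / leg_length_m)     # inverted-pendulum eigenfrequency
    xcom = com_pos_m + com_vel_mps / omega0  # extrapolated centre of mass
    return bos_edge_m - xcom


def share_unstable(history: Sequence[Tuple[float, float, float]], leg_length_m: float) -> float:
    """Fraction of (com_pos, com_vel, bos_edge) samples with a negative margin (hypothetical indicator)."""
    if not history:
        return 0.0
    negatives = sum(1 for x, v, edge in history
                    if margin_of_stability(x, v, edge, leg_length_m) < 0.0)
    return negatives / len(history)


samples = [(0.0, 0.0, 0.1), (0.0, 1.0, 0.2)]  # hypothetical (position, velocity, BoS edge)
print(share_unstable(samples, leg_length_m=0.981))
```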

The above overview will help researchers understand the state of the art (SOTA) in fall detection and propose new methods that address the issues highlighted for PTAs.

Author Contributions

Conceptualization, W.J. Zhu and R.Y. Zhao; methodology, H. Zhang; software, A. Rahman and W.J. Zhu; validation, formal analysis, and investigation, C.F. Han, R.Y. Zhao, and L.C. Ling; resources, data curation, writing – original draft preparation, writing – review and editing, and visualization, W.J. Zhu and B.Y. Wei; supervision and project administration, H. Zhang. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (No. 72374154).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Usmani, S.; Saboor, A.; Haris, M.; Khan, M.A.; Park, H. Latest Research Trends in Fall Detection and Prevention Using Machine Learning: A Systematic Review. Sensors 2021, 21, 5134.

2. Ping, J. Disturbance effect and stability analysis of crowd panic behavior based on multidimensional data fusion. M.S. Thesis, School of Electronic and Information Engineering, Tongji University, Shanghai, China, 2023.

3. Hemmatpour, M.; Ferrero, R.; Gandino, F.; et al. Internet of Things for fall prediction and prevention. J. Comput. Methods Sci. Eng. 2018, 18, 511–518.

4. Kolobe, T.C.; Tu, C.; Owolawi, P.A. A review on fall detection in smart home for elderly and disabled people. J. Adv. Comput. Intell. Intell. Inform. 2022, 26, 747–757.

5. Nooruddin, S.; Islam, M.M.; Sharna, F.A.; et al. Sensor-based fall detection systems: a review. J. Ambient Intell. Humaniz. Comput. 2021, 2022, 1–17.

6. Sokolova, M.V.; Serrano-Cuerda, J.; Castillo, J.C.; Fernández-Caballero, A. A fuzzy model for human fall detection in infrared video. J. Intell. Fuzzy Syst. 2013, 24, 215–228.

7. Yang, S.-W.; Lin, S.-K. Fall detection for multiple pedestrians using depth image processing technique. Comput. Methods Programs Biomed. 2014, 114, 172–182.

8. Wu, C.-F.; Lin, S.-K. Fall detection for multiple pedestrians using a PCA approach to 3-D inclination. Int. J. Eng. Bus. Manag. 2019, 11.

9. Feng, Q.; Gao, C.; Wang, L.; Zhao, Y.; Song, T.; Li, Q. Spatio-temporal fall event detection in complex scenes using attention guided LSTM. Pattern Recognit. Lett. 2018, 130, 242–249.

10. Chang, C.-W.; Lin, Y.-Y. A hybrid CNN and LSTM-based deep learning model for abnormal behavior detection. Multimedia Tools Appl. 2022, 81, 11825–11843.

11. Geng, P.; Xie, H.; Shi, H.; Chen, R.; Tong, Y. Pedestrian Fall Event Detection in Complex Scenes Based on Attention-Guided Neural Network. Math. Probl. Eng. 2022, 2022, 1–10.

12. Zheng, H.; Liu, Y. Lightweight Fall Detection Algorithm Based on AlphaPose Optimization Model and ST-GCN. Math. Probl. Eng. 2022, 2022, 1–15.

13. Zheng, K.; Li, B.; Li, Y.; Chang, P.; Sun, G.; Li, H.; Zhang, J. Fall detection based on dynamic key points incorporating preposed attention. Math. Biosci. Eng. 2023, 20, 11238–11259.

14. Gia, T.N.; Sarker, V.K.; Tcarenko, I.; et al. Energy efficient wearable sensor node for IoT-based fall detection systems. Microprocess. Microsyst. 2018, 56, 34–46.

15. Dziak, D.; Jachimczyk, B.; Kulesza, W.J. IoT-based information system for healthcare application: design methodology approach. Appl. Sci. 2017, 7, 596.

16. Gutierrez-Madronal, L.; La Blunda, L.; Wagner, M.F.; Medina-Bulo, I. Test Event Generation for a Fall-Detection IoT System. IEEE Internet Things J. 2019, 6, 6642–6651.

17. Vimal, S.; Robinson, Y.H.; Kadry, S.; et al. IoT based smart health monitoring with CNN using edge computing. J. Internet Technol. 2021, 22, 173–185.

18. Othmen, F.; Baklouti, M.; Lazzaretti, A.E.; et al. Energy-aware IoT-based method for a hybrid on-wrist fall detection system using a supervised dictionary learning technique. Sensors 2023, 23, 3567.

19. Vermeulen, J.; Willard, S.; Aguiar, B.; De Witte, L.P. Validity of a Smartphone-Based Fall Detection Application on Different Phones Worn on a Belt or in a Trouser Pocket. Assist. Technol. 2014, 27, 18–23.

20. Casilari, E.; Santoyo-Ramón, J.A.; Cano-García, J.M. Analysis of a smartphone-based architecture with multiple mobility sensors for fall detection. PLoS ONE 2016, 11, e0168069.

21. Liu, X.; Cao, J.; Wen, J.; Tang, S. InfraSee: An Unobtrusive Alertness System for Pedestrian Mobile Phone Users. IEEE Trans. Mob. Comput. 2016, 16, 394–407.

22. Hakim, A.; Huq, M.S.; Shanta, S.; Ibrahim, B. Smartphone Based Data Mining for Fall Detection: Analysis and Design. Procedia Comput. Sci. 2017, 105, 46–51.

23. Hsieh, K.L.; Roach, K.L.; Wajda, D.A.; Sosnoff, J.J. Smartphone technology can measure postural stability and discriminate fall risk in older adults. Gait Posture 2019, 67, 160–165.

24. Greene, B.R.; McManus, K.; Ader, L.G.M.; Caulfield, B. Unsupervised Assessment of Balance and Falls Risk Using a Smartphone and Machine Learning. Sensors 2021, 21, 4770.

25. Hu, X.; Qu, X. Detecting falls using a fall indicator defined by a linear combination of kinematic measures. Saf. Sci. 2015, 72, 315–318.

26. van der Zijden, A.; Groen, B.; Tanck, E.; Nienhuis, B.; Verdonschot, N.; Weerdesteyn, V. Estimating severity of sideways fall using a generic multi linear regression model based on kinematic input variables. J. Biomech. 2017, 54, 19–25.

27. Yamagata, M.; Tateuchi, H.; Shimizu, I.; Ichihashi, N. The effects of fall history on kinematic synergy during walking. J. Biomech. 2019, 82, 204–210.

28. Chen, W.; Jiang, Z.; Guo, H.; Ni, X. Fall Detection Based on Key Points of Human-Skeleton Using OpenPose. Symmetry 2020, 12, 744.

29. Yamagata, M.; Tateuchi, H.; Shimizu, I.; Saeki, J.; Ichihashi, N. The relation between kinematic synergy to stabilize the center of mass during walking and future fall risks: a 1-year longitudinal study. BMC Geriatr. 2021, 21, 1–10.

30. Hussain, F.; Ehatisham-Ul-Haq, M.; Azam, M.A. Activity-Aware Fall Detection and Recognition Based on Wearable Sensors. IEEE Sensors J. 2019, 19, 4528–4536.

31. Boutellaa, E.; Kerdjidj, O.; Ghanem, K. Covariance matrix based fall detection from multiple wearable sensors. J. Biomed. Informatics 2019, 94, 103189.

32. Casilari, E.; Silva, C.A. An analytical comparison of datasets of Real-World and simulated falls intended for the evaluation of wearable fall alerting systems. Measurement 2022, 202.

33. Jachowicz, M.; Owczarek, G. Studies of Acceleration of the Human Body during Overturning and Falling from a Height Protected by a Self-Locking Device. Int. J. Environ. Res. Public Heal. 2022, 19, 12077.

34. Yu, S.; Chai, Y.; et al. Fall detection with wearable sensors: A hierarchical attention-based convolutional neural network approach. J. Manag. Inf. Syst. 2021, 38, 1095–1121.

35. Yu, X.; Park, S.; Kim, D.; Kim, E.; Kim, J.; Kim, W.; An, Y.; Xiong, S. A practical wearable fall detection system based on tiny convolutional neural networks. Biomed. Signal Process. Control. 2023, 86.

36. Lu, C.; Shi, J.; Jia, J. Abnormal event detection at 150 fps in MATLAB. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 2013.

37. Mahadevan, V.; Li, W.X.; Bhalodia, V.; et al. Anomaly detection in crowded scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, California, USA, 2010.

38. Adam, A.; Rivlin, E.; Shimshoni, I.; Reinitz, D. Robust Real-Time Unusual Event Detection using Multiple Fixed-Location Monitors. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 555–560.

39. Liu, W.; Luo, W.; Lian, D.; et al. Future frame prediction for anomaly detection – a new baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018.

40. University of Minnesota, Department of Computer Science and Engineering. University of Minnesota dataset for detection of unusual crowd activity. Available online: http://mha.cs.umn.edu/projrecognition.shtml.

41. Sultani, W.; Chen, C.; Shah, M. Real-world anomaly detection in surveillance videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018.

42. Auvinet, E.; Rougier, C.; et al. Multiple Cameras Fall Dataset. Technical Report 1350, DIRO-Université de Montréal, 2010.

43. Charfi, I.; Miteran, J.; Dubois, J.; Atri, M.; Tourki, R. Optimized spatio-temporal descriptors for real-time fall detection: comparison of support vector machine and Adaboost-based classification. J. Electron. Imaging 2013, 22, 041106.

44. Kwolek, B.; Kepski, M. Human fall detection on embedded platform using depth maps and wireless accelerometer. Comput. Methods Programs Biomed. 2014, 117, 489–501.

45. Baldewijns, G.; Debard, G.; Mertes, G.; Vanrumste, B.; Croonenborghs, T. Bridging the gap between real-life data and simulated data by providing a highly realistic fall dataset for evaluating camera-based fall detection algorithms. Heal. Technol. Lett. 2016, 3, 6–11.

46. Sucerquia, A.; López, J.D.; Vargas-Bonilla, J.F. SisFall: A Fall and Movement Dataset. Sensors 2017, 17, 198.

47. Martínez-Villaseñor, L.; Ponce, H.; Brieva, J.; Moya-Albor, E.; Núñez-Martínez, J.; Peñafort-Asturiano, C. UP-Fall Detection Dataset: A Multimodal Approach. Sensors 2019, 19, 1988.

48. Vavoulas, G.; Chatzaki, C.; Malliotakis, T.; et al. The MobiAct dataset: Recognition of activities of daily living using smartphones. In Proceedings of the International Conference on Information and Communication Technologies for Ageing Well and e-Health, Rome, Italy, 2016.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).