1. Introduction

1.1. Objectives of Wildlife Conservation

The World Association of Zoos and Aquariums (WAZA) aims to conserve endangered species through breeding programs and the exchange of captive animals. The association requires its members to meet certain standards and emphasizes the importance of welfare among captive animals [1]. Consistent, current research shows that good physical and psychological welfare must be maintained in captive animals, and associations such as WAZA therefore play an important role in conservation [1,2,3,4].

Because animal welfare is so important, captive animals must be studied consistently with respect to their behavioral responses to different aspects of their captive lives, such as enclosure design and enrichment [5,6,7,8]. African elephants (Loxodonta sp.), which have undergone great population declines across Africa, are one example of a species that may require such studies to be properly conserved [5,9].

1.2. Captive Elephant Behavior and Welfare

To accommodate the welfare needs of captive elephants, their normal behaviors must first be monitored and understood [6,10,11]. Behaviors such as foraging, locomotion, and social behavior likely influence elephant welfare and help researchers understand undesired behaviors that may indicate stress [12,13,14]. It is also important to address the nocturnal behavior of elephants, since behavior at night can differ from that observed during the day. One example is recumbent sleep, in which the elephants lie down to sleep; this occurs exclusively at night [14,15]. On average, captive African elephants lie down to sleep for around two hours per night, and they tend to lie down more when their bedding is comfortable [14,15,16,17]. Besides rest, feeding and atypical behaviors are the behaviors most commonly observed in captive elephants, although activity levels are lower at night than during the day [14,18].

Atypical behaviors in captive animals are usually those that deviate from the norm for the species and are rarely observed in its natural habitat. These behaviors are frequently regarded as signs of compromised welfare [19,20,21,22]. Stereotypic behavior, one type of atypical behavior, is characterized by the consistent and inappropriate repetition of specific movements or body postures. These actions seem to lack any purpose or function and appear to be coping mechanisms for reducing stress, but their exact causes remain unclear [21,22,23,24,25]. Different forms of stereotypic behavior have been observed in elephants, including whole-body movements [10,13,22,26,27]. Among whole-body movements, ‘swaying’ is the most common and is defined as a rhythmic side-to-side movement of the body, typically performed while standing [12,18,22]. Consistent observation of elephants may therefore help in managing these behaviors when they arise.

1.3. Machine Learning as a Tool for Behavioral Analysis

One widely used method for observing animal behavior is videography [14,28], although this method can be highly time-consuming [29,30,31,32]. Manual scoring is limited by human capabilities; for example, an observer may fail to recognize behavioral patterns or to spot new ones. It is also difficult to standardize the scoring of behaviors by human observers because of subjectivity [33], so inconsistency between observers cannot be completely avoided [31]. Furthermore, it is challenging to track multiple animals and behaviors at the same time, even with video material [29,31]. Machine learning may help with some of these logistical problems in behavioral analysis [32].

In recent years, machine learning has been explored for video and image analysis (computer vision) for a variety of purposes [34,35]. Object detection, a machine learning technique for locating and recognizing a given object in an image, has previously been used to recognize animals and their behaviors [32,36,37,38]. Such tools may soon prove useful for automating behavioral analysis, which could reduce the working hours required of researchers [30,32]. However, these uses and methods are still in their infancy, which necessitates further investigation of different machine learning models, methods, and implementations [36,37,39].

DeepLabCut is machine learning software that specializes in pose estimation in video, using labelled points to track specific body parts [40,41]. For behavior tracking, the user marks the body parts of interest on a relatively small set of images showing the subject performing a diverse range of behaviors [32]. A DeepLabCut model capable of accurately tracking the body parts of interest may allow behavior coding in behavioral studies to be automated [42].

Create ML is an Apple application, included with Xcode on macOS, that can train custom machine learning models, such as object detection models, without writing code. Create ML models can be trained to detect and recognize objects of interest, such as an elephant performing a specific behavior, from a relatively small and diverse annotated dataset. The annotation can be done in various ways; for example, the image annotation tool RectLabel can be used to mark boxes, polygons, or skeletons on the subject and to assign a behavior category. This may allow a simple model that recognizes simple behaviors to be built with relatively user-friendly software [43].
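To make the annotation step concrete, the sketch below converts a corner-style bounding box, as drawn in an annotation tool, into one record of Create ML's object-detection JSON format. As far as we know, that format stores the box centre (x, y) together with its width and height in pixels; this should be checked against Apple's Create ML documentation. The file name, label, and coordinates are hypothetical.

```python
import json


def to_create_ml_record(image_name, label, x_min, y_min, x_max, y_max):
    """Convert a corner-style box into one Create ML object-detection record.

    Assumes Create ML's JSON layout, where "x" and "y" are the box centre in pixels.
    """
    width, height = x_max - x_min, y_max - y_min
    return {
        "image": image_name,
        "annotations": [
            {
                "label": label,
                "coordinates": {
                    "x": x_min + width / 2,
                    "y": y_min + height / 2,
                    "width": width,
                    "height": height,
                },
            }
        ],
    }


# Hypothetical frame and box; a dataset file is a JSON array of such records.
record = to_create_ml_record("frame_0001.jpg", "Standing", 100, 200, 500, 800)
print(json.dumps([record], indent=2))
```

RectLabel can export annotations directly, so a conversion like this is only needed when boxes come from another tool.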

1.4. Aim of This Paper

This paper aims to use DeepLabCut and Create ML to construct models capable of tracking selected body parts and classifying elephant behaviors, in order to streamline and automate behavioral analysis, reduce the workload of researchers and zookeepers, and standardize behavioral coding. The study simultaneously examines the nocturnal activity of two captive African elephants, with the following hypotheses:

This study expects that the machine learning models can predict the selected behaviors at the same level as manual scoring.

This study expects that behavioral differences between the elephants and behavioral differences between days can be demonstrated using selected computer vision models.

2. Materials and Methods

2.1. Subjects and Enclosure

The behavior of two captive female African elephants at Aalborg Zoo, Denmark, was examined. Both elephants were born in the wild in South Africa around 1982 and were relocated to Aalborg Zoo in 1985. In this study, the elephants are referred to as Subjects A and B.

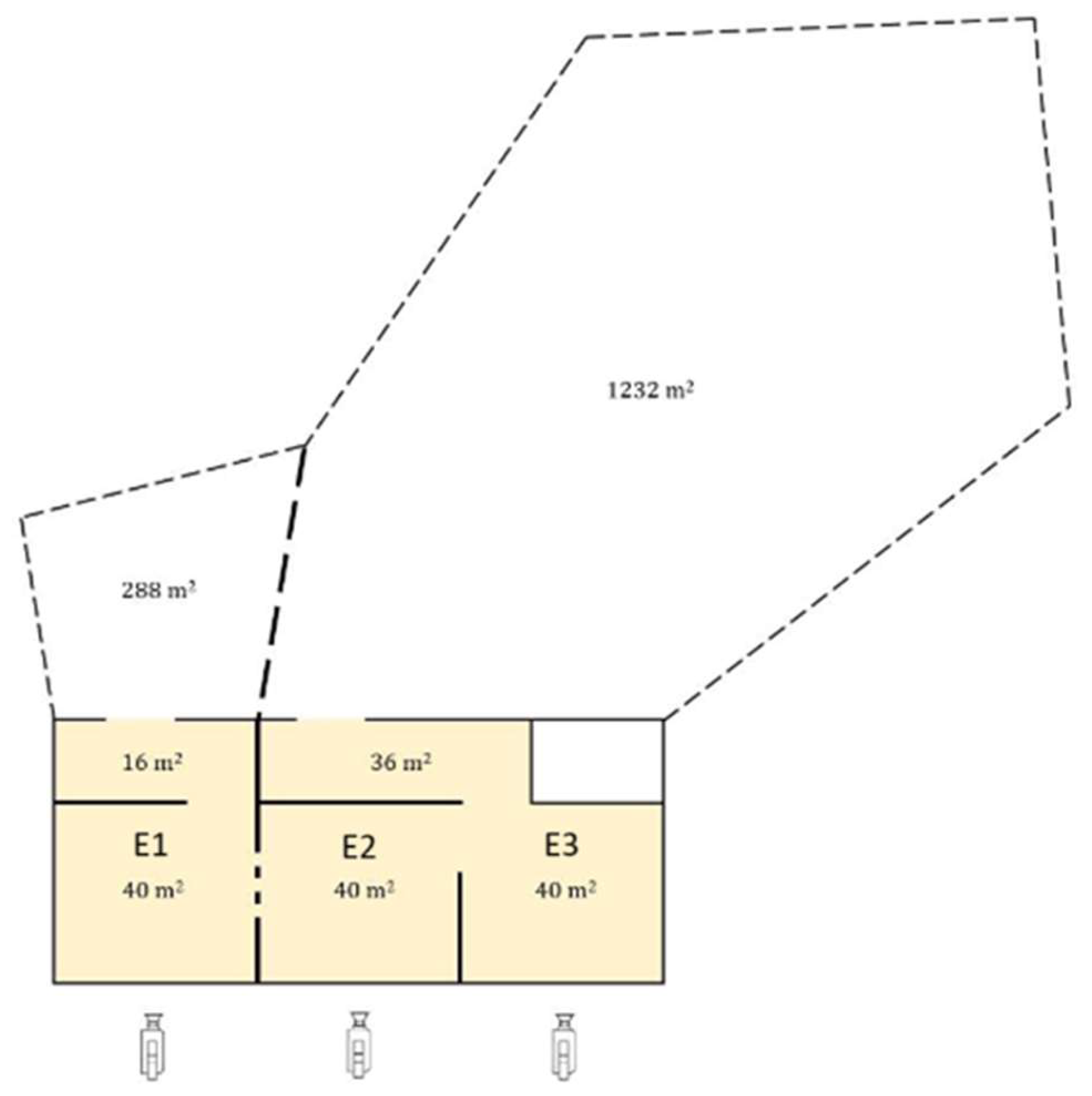

The elephant enclosure consisted of an indoor area and an outdoor area; the elephants did not have access to the outdoor area at night during the study period. The indoor enclosure had concrete floors and walls, with metal wires facing the visitor area (see Appendix A). The two elephants could have physical contact during the night through the metal bars between enclosures E1 and E2. Subject A had access to enclosure E1 and an attached corridor (56 square meters). Subject B had access to enclosures E2 and E3 and a corridor, measuring a total of 116 square meters.

The elephants’ diet consisted of branches; seed grass hanging from nets in the indoor and outdoor enclosures; and concentrate pellets, which were periodically released into the enclosure by a timer-controlled mechanism as a form of enrichment. Fresh fruits and vegetables were spread around the outdoor enclosure daily, allowing for foraging behavior. Foraging boxes at the back wall of the enclosure, accessible with the trunk, were also opened periodically throughout each night by a timer.

2.2. Data Collection

Prior to data collection, an ethogram of selected behaviors was made. The behaviors were chosen based on previous behavioral studies of the same elephants, namely Bertelsen et al. (2020) and Andersen et al. (2020), and were modified for the purpose of this study [14,44]. This ethogram was used for the manual control and to compare the models; see Table 1.

Data for model creation were collected from the 12th to the 18th of March, and data for analysis from the 16th to the 22nd of April, using three cameras (ABUS, 25 FPS). The cameras were placed on the visitor side, facing towards each enclosure (see Appendix A). The two elephants were observed for seven hours each night, from 22:00 to 05:00 (DST).

2.3. Data Analysis

Data from the analyzed days were compared with statistical tests in Excel (Version 2404 Build 16.0.17531.20120) and RStudio (R version 4.1.2 (2021-11-01)).

For body part tracking DeepLabCut (version 2.3.9.) was used [

32,

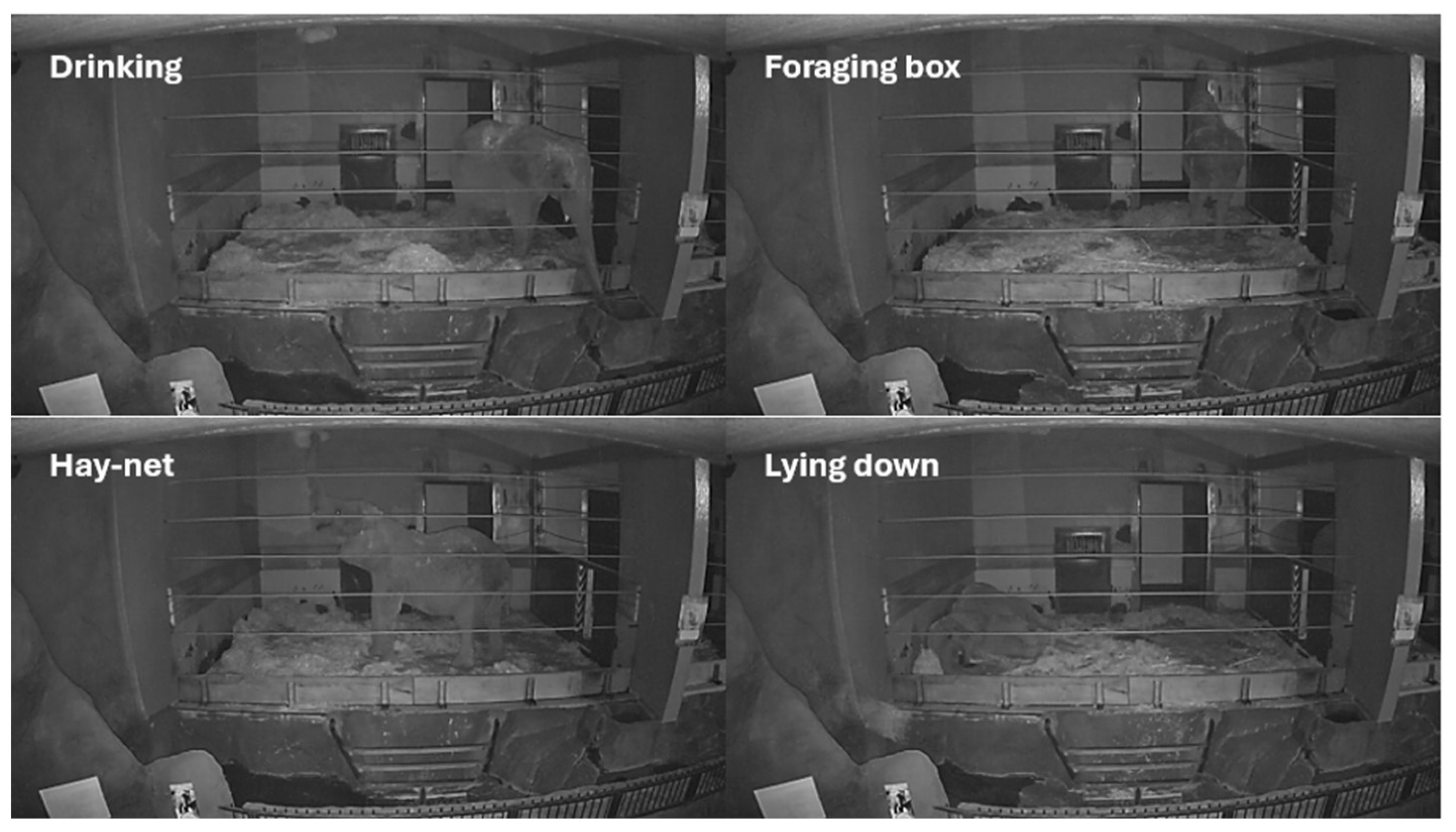

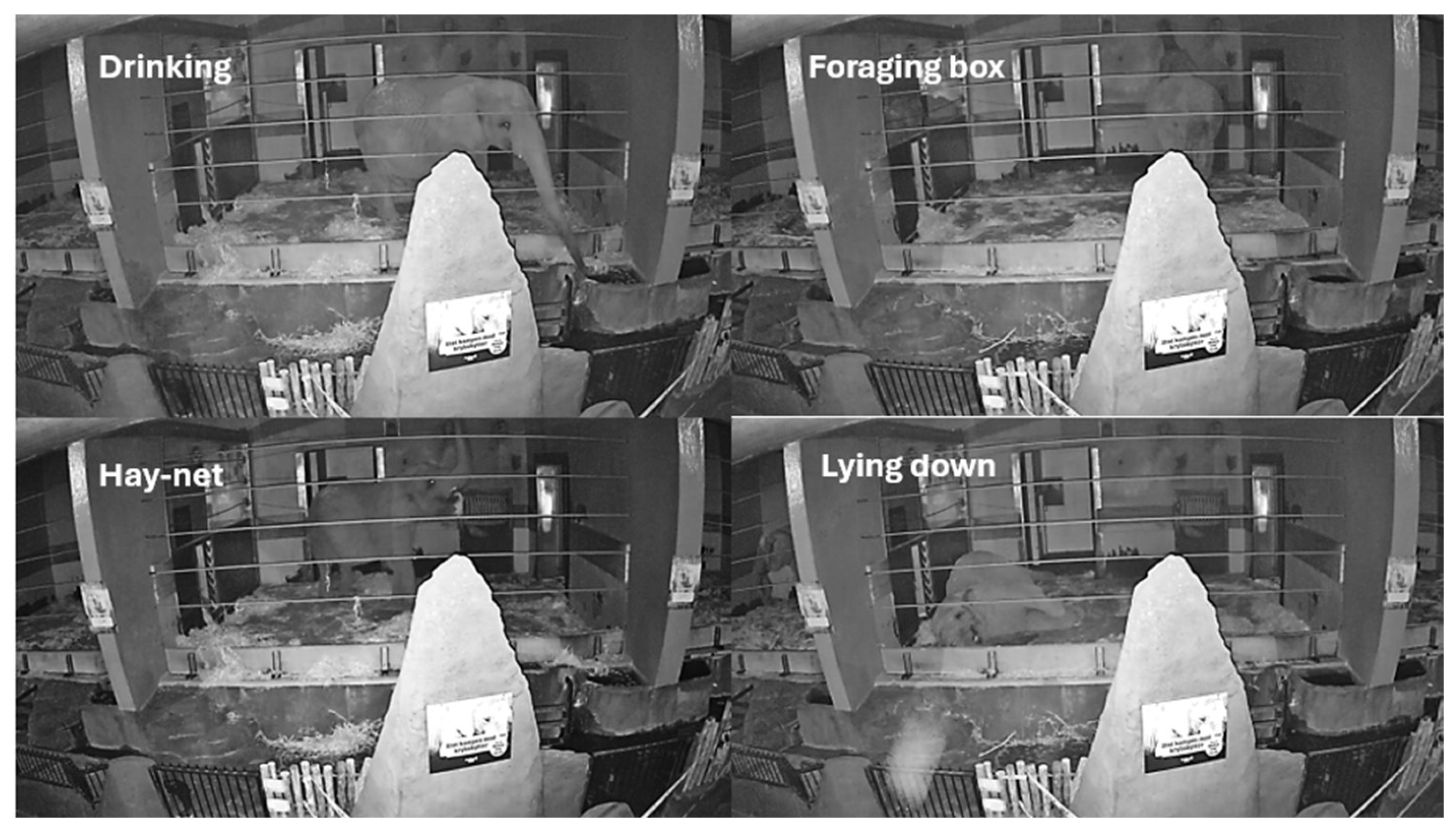

42]. Specifically, 244 frames taken from 70 30-minute videos were labelled (95% was used for training). ResNet-50-based neural network was used with the parameters set to 400000 training iterations. Validation was done with a single shuffle, and found the test error was: 17.44 pixels, train: 2.4 pixels (image size for creating the model was 1920 by 1080). A p-cutoff of 0.5 was used to condition the x- and y-coordinates for future analysis. DeepLabCut does not provide annotations of behavior, and it is required of the user to manually define and interpret the coordinates of the results. In this study, the behaviors were classified using certain parameters for the coordinates of specific body parts, which matched the selected behaviors during the control period (April 16th) to fit the ethogram. The locations of the selected behaviors can be seen in

Appendix B. Difficulties arose with defining some of the parameters, such as Drinking, which proved undefinable for both subjects as the label on the trunk tip was unstable. Furthermore, no distinct parameter was definable for the behavior Hay-net for Subject B as the foraging box and the hay net were located at approximately the same place in the video frame.
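The coordinate-based classification described above can be sketched as follows. This is not the study's rule set (the actual behavior locations are given in Appendix B): the body-part names `trunk_tip` and `back`, the zone rectangles, and the lying-down height threshold are all hypothetical. Each body part is assumed to arrive as an (x, y, likelihood) triple per frame, as in DeepLabCut's output, and predictions below the 0.5 p-cutoff are discarded.

```python
import math

P_CUTOFF = 0.5  # likelihood threshold used in the study

# Hypothetical zones (x_min, y_min, x_max, y_max) in pixels of a 1920x1080 frame.
# Placeholders only; the study's actual behavior locations are in Appendix B.
ZONES = {
    "Hay-net": (100, 200, 400, 500),
    "Foraging": (1500, 600, 1800, 900),
}
LYING_Y_THRESHOLD = 800  # hypothetical: image y grows downward, so a low back has large y


def apply_p_cutoff(x, y, likelihood, p_cutoff=P_CUTOFF):
    """Return (x, y), or (nan, nan) when the prediction is below the p-cutoff."""
    if likelihood < p_cutoff:
        return math.nan, math.nan
    return x, y


def in_zone(x, y, zone):
    x_min, y_min, x_max, y_max = zone
    return x_min <= x <= x_max and y_min <= y <= y_max


def classify_frame(trunk_tip, back):
    """Classify one frame from two (x, y, likelihood) predictions (hypothetical rules)."""
    tx, ty = apply_p_cutoff(*trunk_tip)
    bx, by = apply_p_cutoff(*back)
    if math.isnan(tx) or math.isnan(bx):
        return "Out of view"  # a required body part was not reliably detected
    for behavior, zone in ZONES.items():
        if in_zone(tx, ty, zone):
            return behavior  # trunk tip inside a feeding location
    if by > LYING_Y_THRESHOLD:
        return "Lying down"
    return "Standing"


print(classify_frame((200, 300, 0.9), (900, 500, 0.8)))  # trunk tip in the Hay-net zone
```

A rule set like this also shows why Hay-net could not be separated for Subject B: if two zones overlap in the frame, the first matching zone always wins.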

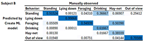

Create ML (version 5.0 (121.1)) was used for object detection [43]. Specifically, 370 image frames were extracted from the model creation period and annotated with bounding boxes in RectLabel Pro (version 2023.11.19). Each frame was annotated with one of the selected behaviors in Table 1; Swaying was not labelled for object detection. Standing was labelled 232 times, Foraging 42 times, Lying down 39 times, Drinking 39 times, and Hay-net 18 times. The dataset was split into a training set and a validation set. The model was trained for 6000 iterations, reaching an accuracy of 95% on the training set and 75% on the validation set.
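The labels are strongly imbalanced (232 Standing frames versus 18 Hay-net frames), so a purely random split could leave rare behaviors with very few validation examples. The text does not report how the split was made; the sketch below shows one option, a stratified split that keeps each behavior's proportion in both sets. The 20% validation fraction and the random seed are assumptions.

```python
import random
from collections import defaultdict


def stratified_split(labels, val_fraction=0.2, seed=0):
    """Split item indices into train/validation sets, keeping class proportions.

    labels: one behavior label per annotated frame.
    Returns (train_indices, val_indices), each sorted.
    """
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    rng = random.Random(seed)
    train, val = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_val = max(1, round(len(indices) * val_fraction))  # at least one per class
        val.extend(indices[:n_val])
        train.extend(indices[n_val:])
    return sorted(train), sorted(val)


# Label counts reported for the Create ML dataset (370 frames in total).
counts = {"Standing": 232, "Foraging": 42, "Lying down": 39, "Drinking": 39, "Hay-net": 18}
labels = [label for label, n in counts.items() for _ in range(n)]
train_idx, val_idx = stratified_split(labels)
print(len(train_idx), len(val_idx))
```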

To assess the accuracy of the models built with Create ML and DeepLabCut, the video footage from the 16th of April was scored manually as a control and compared with the models’ results. The accuracy of the models relative to the control was tested with a multi-class confusion matrix [45]. Such a matrix shows where a model performs well and where mistakes occur, such as when the model mislabels a behavior. The columns of the matrix represent the manually observed behavior of the individual, while the rows represent the behavior predicted by the model. Time budgets and cumulative graphs were also used to further appraise the models [46]. Furthermore, Kendall’s Coefficient of Concordance was used to measure the agreement between the models and the control [47].
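A minimal sketch of the multi-class confusion matrix described above, with rows as the predicted behavior and columns as the manually observed behavior. The text states that the matrices were normalized but not how; here, as an assumption, each column is divided by its total, so a cell gives the fraction of a manually observed behavior that the model assigned to each class. The example labels are made up.

```python
def confusion_matrix(observed, predicted, classes):
    """Count matrix with rows = predicted behavior, columns = observed behavior."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for obs, pred in zip(observed, predicted):
        matrix[index[pred]][index[obs]] += 1
    return matrix


def normalize_columns(matrix):
    """Divide each column by its total so it sums to 1 (empty columns stay 0)."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    totals = [sum(matrix[r][c] for r in range(n_rows)) for c in range(n_cols)]
    return [
        [matrix[r][c] / totals[c] if totals[c] else 0.0 for c in range(n_cols)]
        for r in range(n_rows)
    ]


# Made-up per-second labels: one Hay-net second is mislabelled as Foraging.
classes = ["Standing", "Foraging", "Hay-net"]
observed = ["Standing", "Standing", "Foraging", "Hay-net", "Hay-net"]
predicted = ["Standing", "Foraging", "Foraging", "Foraging", "Hay-net"]
matrix = confusion_matrix(observed, predicted, classes)
normalized = normalize_columns(matrix)
print(normalized)
```

With column normalization, the diagonal reads as per-behavior recall, and off-diagonal cells in a column show which behaviors it was confused with.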

The behavioral analysis consisted of time budgets, cumulative graphs, and Kendall’s Coefficient of Concordance between days. Time budgets were made for each elephant for each day and for the whole study period. They were computed by summing the observed time spent on each behavior and converting the sums into percentages; the Out of View percentage comprised the time in which no behavior was observed or classified. Daily time budgets were used to investigate day-to-day differences in behavior, and time budgets for the whole study period were used to see how much time was spent on each behavior in total and to compare the models with the control. Kendall’s Coefficient of Concordance was applied to the time budget data to analyze how similar the observed behavior was between days.
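The time budgets and the between-day concordance can be sketched together: each night's labels become percentages per behavior, and the nights are then treated as "raters" that rank the same set of behaviors. The sketch assumes one label per second and uses the standard Kendall's W without a correction for ties; W ranges from 0 (no agreement) to 1 (identical rankings), and m(n − 1)W is compared with a chi-square distribution on n − 1 degrees of freedom for the p-value. The two example nights are made up.

```python
from collections import Counter


def time_budget(labels):
    """Percentage of observed time per behavior; 'Out of view' counts as a label."""
    counts = Counter(labels)
    return {behavior: 100 * n / len(labels) for behavior, n in counts.items()}


def average_ranks(values):
    """Rank values 1..n, assigning tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kendalls_w(table):
    """Kendall's W (no tie correction) for m rows ranking the same n columns.

    Returns (W, chi-square statistic m * (n - 1) * W, with n - 1 df).
    """
    m, n = len(table), len(table[0])
    rank_rows = [average_ranks(row) for row in table]
    rank_sums = [sum(row[j] for row in rank_rows) for j in range(n)]
    mean_rank_sum = m * (n + 1) / 2
    s = sum((r - mean_rank_sum) ** 2 for r in rank_sums)
    w = 12 * s / (m ** 2 * (n ** 3 - n))
    return w, m * (n - 1) * w


# Made-up per-second labels for two nights; rows = nights, columns = behaviors.
behaviors = ["Standing", "Lying down", "Foraging", "Out of view"]
nights = [
    ["Standing"] * 3 + ["Lying down"] * 2 + ["Foraging"],
    ["Standing"] * 4 + ["Lying down"] * 2 + ["Out of view"],
]
table = [[time_budget(night).get(b, 0.0) for b in behaviors] for night in nights]
w, chi2 = kendalls_w(table)
print(round(w, 3), round(chi2, 3))
```

The same function applies to the model comparison by letting the rows be the control, the DeepLabCut model, and the Create ML model instead of nights.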

Cumulative graphs were made for each behavior for each day. For the control day, the graphs included both the manually observed data and the model data; for the rest of the study period, cumulative graphs were made only for the Create ML model.
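A cumulative graph of this kind can be built from per-frame behavior labels by counting, frame by frame, how many frames so far carry a given behavior and dividing by the frame rate (25 FPS for the cameras used here). The sketch assumes one label per frame; for per-second labels, `fps=1`.

```python
def cumulative_seconds(labels, behavior, fps=25):
    """Cumulative time (s) spent on `behavior` after each labelled frame."""
    curve, count = [], 0
    for label in labels:
        if label == behavior:
            count += 1
        curve.append(count / fps)  # divide the integer count to avoid float drift
    return curve


def cumulative_by_behavior(labels, behaviors, fps=25):
    """One cumulative curve per behavior, ready to plot against time of night."""
    return {b: cumulative_seconds(labels, b, fps) for b in behaviors}


curves = cumulative_by_behavior(
    ["Foraging", "Standing", "Foraging", "Foraging"], ["Foraging", "Standing"], fps=2
)
print(curves)
```

Flat stretches in a curve mark periods without the behavior, and the final value equals the total time used in the time budget.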

A Spearman rank correlation test was used to investigate correlations in the subjects’ Lying down behavior from night to night. Correlations were also used to test how similar the two subjects were and whether they exhibited similar behavioral patterns throughout the night.
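To correlate Lying down between nights (or between subjects), each night has to be reduced to a series aligned by time of night. The text does not say how this was done; the sketch assumes, hypothetically, that the Lying down labels are counted in fixed-size bins and that Spearman's rho is then computed as the Pearson correlation of average ranks. `scipy.stats.spearmanr` or R's `cor.test(..., method = "spearman")` give the same coefficient together with a p-value.

```python
import math


def average_ranks(values):
    """Rank values 1..n, assigning tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        return math.nan  # undefined when one series is constant
    return cov / (sx * sy)


def lying_down_per_bin(labels, bin_size):
    """Count 'Lying down' labels in consecutive bins of `bin_size` labels (hypothetical binning)."""
    return [
        sum(1 for lab in labels[i:i + bin_size] if lab == "Lying down")
        for i in range(0, len(labels), bin_size)
    ]


night_1 = lying_down_per_bin(["Standing"] * 4 + ["Lying down"] * 6, 2)
night_2 = lying_down_per_bin(["Standing"] * 3 + ["Lying down"] * 7, 2)
print(night_1, night_2, spearman_rho(night_1, night_2))
```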

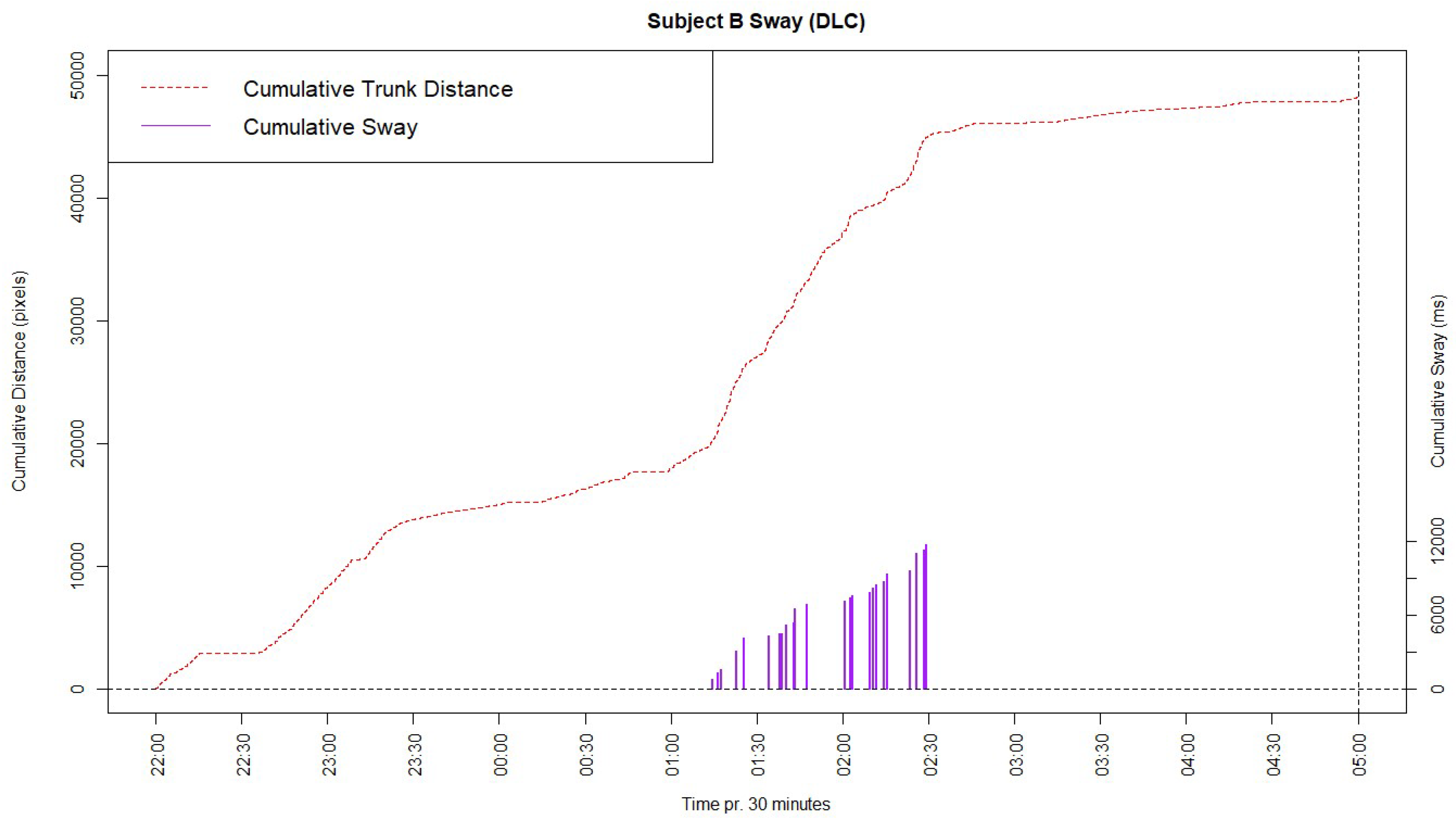

The possibility of observing the stereotypic behavior ‘Sway’ was also examined. This was done by calculating the Euclidean distance between the position of the trunk root, as labelled by the DeepLabCut model, in one frame and its position in the succeeding frame [48]. These distances were plotted as a cumulative graph together with the manually observed cumulative time spent swaying, so that sway could be observed as steep increases in the cumulative sum.
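The trunk-root measure above amounts to a running sum of step lengths: the step between consecutive frames t − 1 and t is d_t = sqrt((x_t − x_{t−1})² + (y_t − y_{t−1})²). The sketch below assumes that frames removed by the p-cutoff are stored as (nan, nan), and, as a design choice of this sketch rather than the study, skips any step that touches such a frame instead of bridging the gap.

```python
import math


def cumulative_distance(points):
    """Cumulative Euclidean distance travelled by one tracked point.

    points: list of (x, y) per frame; (nan, nan) marks frames removed by the p-cutoff.
    Steps involving a missing frame contribute 0.
    """
    total, curve = 0.0, [0.0]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        step = math.hypot(x1 - x0, y1 - y0)  # nan if either frame is missing
        if not math.isnan(step):
            total += step
        curve.append(total)
    return curve


nan = float("nan")
trunk_root = [(0, 0), (3, 4), (3, 4), (nan, nan), (6, 8)]
print(cumulative_distance(trunk_root))
```

Skipping steps around missing frames avoids large artificial jumps when tracking resumes, at the cost of slightly underestimating movement.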

3. Results

3.1. Comparability of Manual and Automatic Behavioral Observations

3.1.1. A General Overview

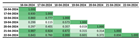

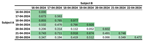

First, the capabilities of the two machine learning models were compared with a manual analysis based on the ethogram using time budgets, as illustrated in Figure 1.

For both subjects and both models, the time budgets showed percentages for Standing and Lying down that were similar to the manual observations, with relatively small differences. For Subject A, both models had an Out of View percentage of 10%. Since the manual observations showed an Out of View percentage close to zero, this suggests that the models failed to label the subject when they were uncertain. Both models also gave a lower percentage for Foraging for Subject A than the manual observations. For Subject B there were notable differences in Foraging and Hay-net, likely because the hay net is close to the foraging boxes, so their coordinates overlap in the DeepLabCut model. The Create ML model also gave a lower Hay-net percentage but, in contrast, a higher Foraging percentage than the manual observations. The Out of View percentage for the DeepLabCut model was noticeably higher than for the Create ML model; since the manual observations showed an Out of View percentage close to zero, this was likely also caused by missing labels from the model. Drinking was left out of the DeepLabCut model because its parameters could not be defined, owing to a lack of the consistent labels needed to categorize the behavior properly.

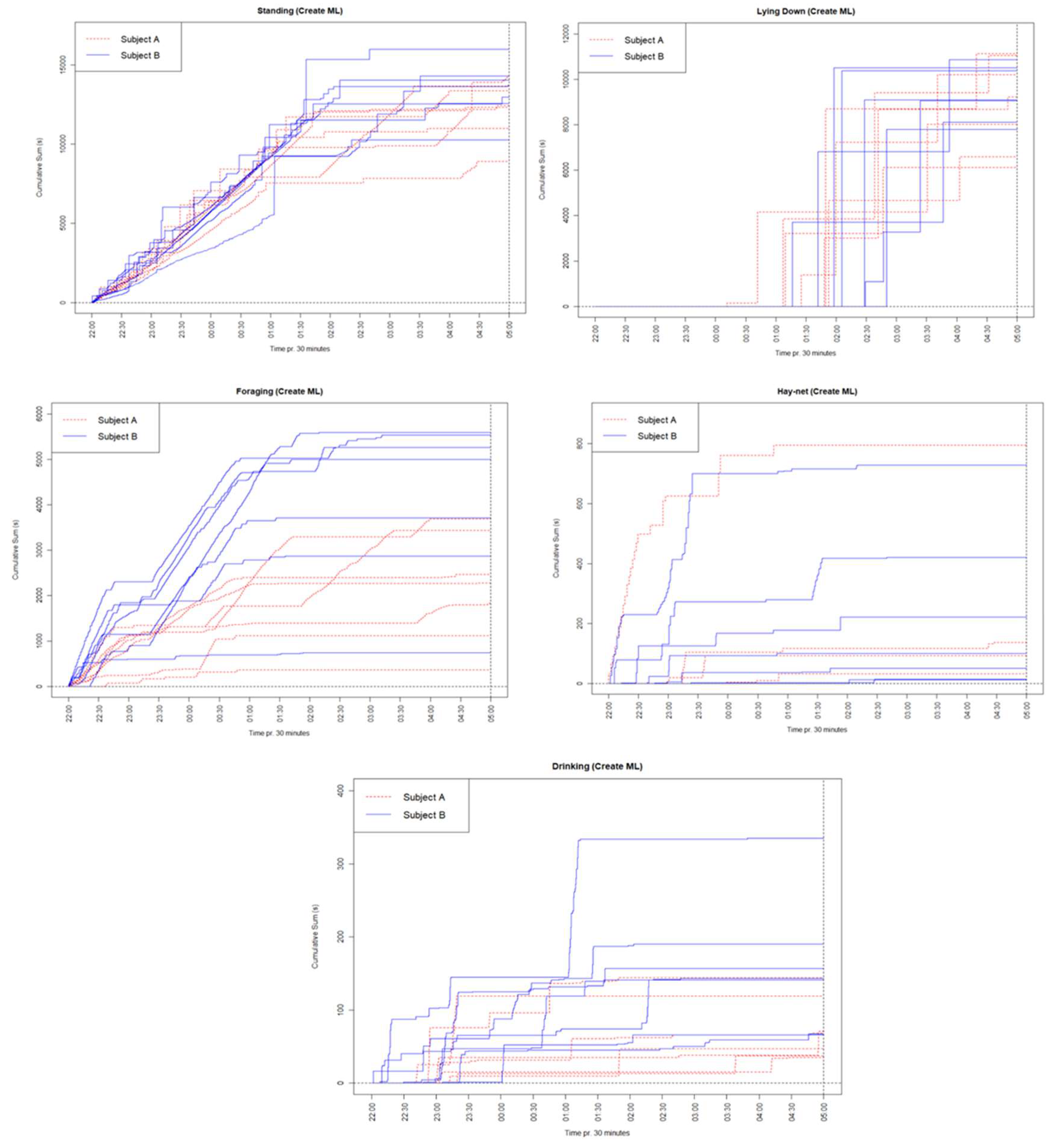

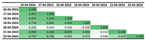

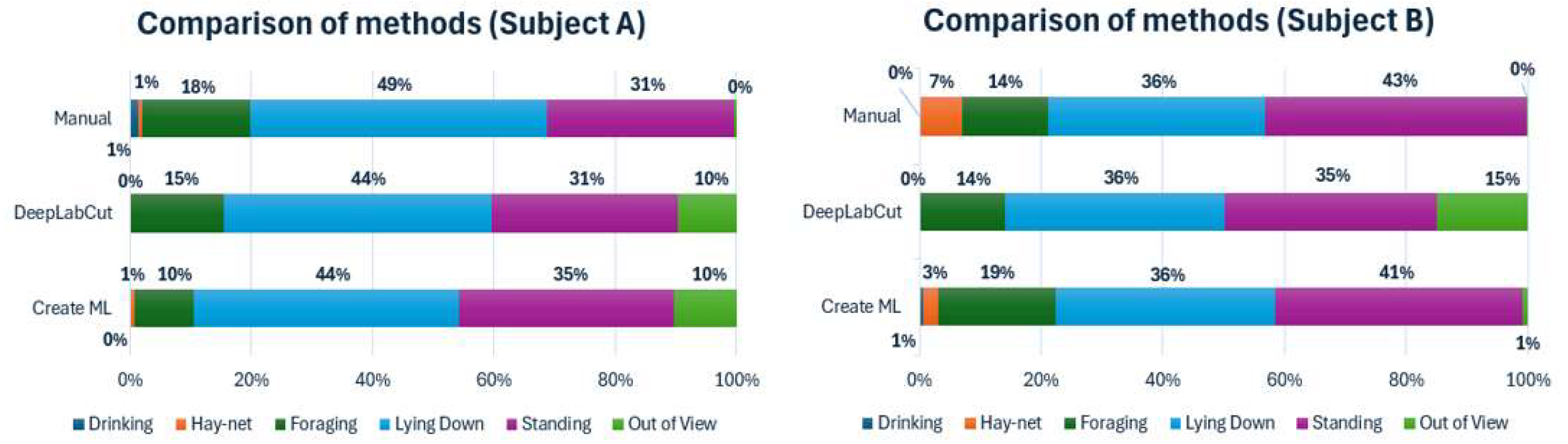

To further investigate the similarities between the machine learning models and the manual observations, cumulative graphs for each subject, showing each behavior tracked with each method, were constructed and are displayed in Figure 2.

The cumulative graph for Subject A showed a lower sum but a similar shape for Lying down, which indicates that a period of this behavior went unlabeled by both models. Standing had a similar shape for all methods and a very similar sum for the DeepLabCut model, whereas the Create ML model had a higher sum. Foraging had similar shapes for all methods, although the sums were generally lower for the models. Hay-net and Drinking were difficult to distinguish clearly because of their low values.

The cumulative graph for Subject B had very similar values for Lying down for all methods. All methods showed similar shapes for Standing, although the sums were lower for both models, noticeably so for the DeepLabCut model. Foraging also had similar shapes, but the sum was higher for the Create ML model and lower for the DeepLabCut model. For the Create ML model, Hay-net showed a shape somewhat similar to the manual observations, but with a lower sum. The DeepLabCut model showed a Hay-net sum close to zero, because these parameters were difficult to define in this enclosure.

As with the time budgets, Drinking was left out of the cumulative graphs for the DeepLabCut model because its parameters could not be defined. Furthermore, the Lying down curves for the two subjects appear largely different; however, this is caused by the chosen type of cumulative graph.

3.1.2. Investigating the Reliability of Two Machine Learning Models

To investigate the reliability of the two models, Kendall’s Coefficient of Concordance was used. The concordance (W) between the models and the control was 0.85 (p = 0.026) for Subject A and 0.90 (p = 0.019) for Subject B. This indicates a high concordance for both subjects that is unlikely to be due to chance, meaning that the models and the manual scoring mostly agree on the observed behavior. However, the concordance is not perfect, so some disagreement remains.

To test the accuracy of the models, a confusion matrix was made for the control period, in which the models’ predictions were compared with the manually observed values and normalized (Appendix C). As seen in Tables A1 to A4, both models predicted values for Lying down that were highly similar to the manually observed values for both subjects. For the DeepLabCut model, the predicted values for Standing and Foraging were highly similar to the observed values for Subject A, but the model was less accurate for Subject B. The opposite applies to Create ML, which was generally most accurate for Subject B. For Hay-net, the DeepLabCut model struggled to predict the correct behavior for Subject A, and for Subject B the classification parameters were not defined, so no value was predicted for this behavior. The Create ML model predicted highly similar values for Hay-net for Subject A, but for Subject B the behavior was often misclassified as Foraging. For Drinking, the Create ML model often predicted Standing for both subjects, while for the DeepLabCut model the classification parameters were not defined, so no Drinking values were predicted. Finally, both models’ predicted values for Out of View for Subject B were highly similar to the observed value; however, the total manually observed Out of View time was only 17 seconds out of 7 hours of observation and is therefore arguably negligible.

3.2. Using Machine Learning Models for Behavioral Analysis

3.2.1. Assessing Behavioral Differences

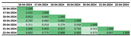

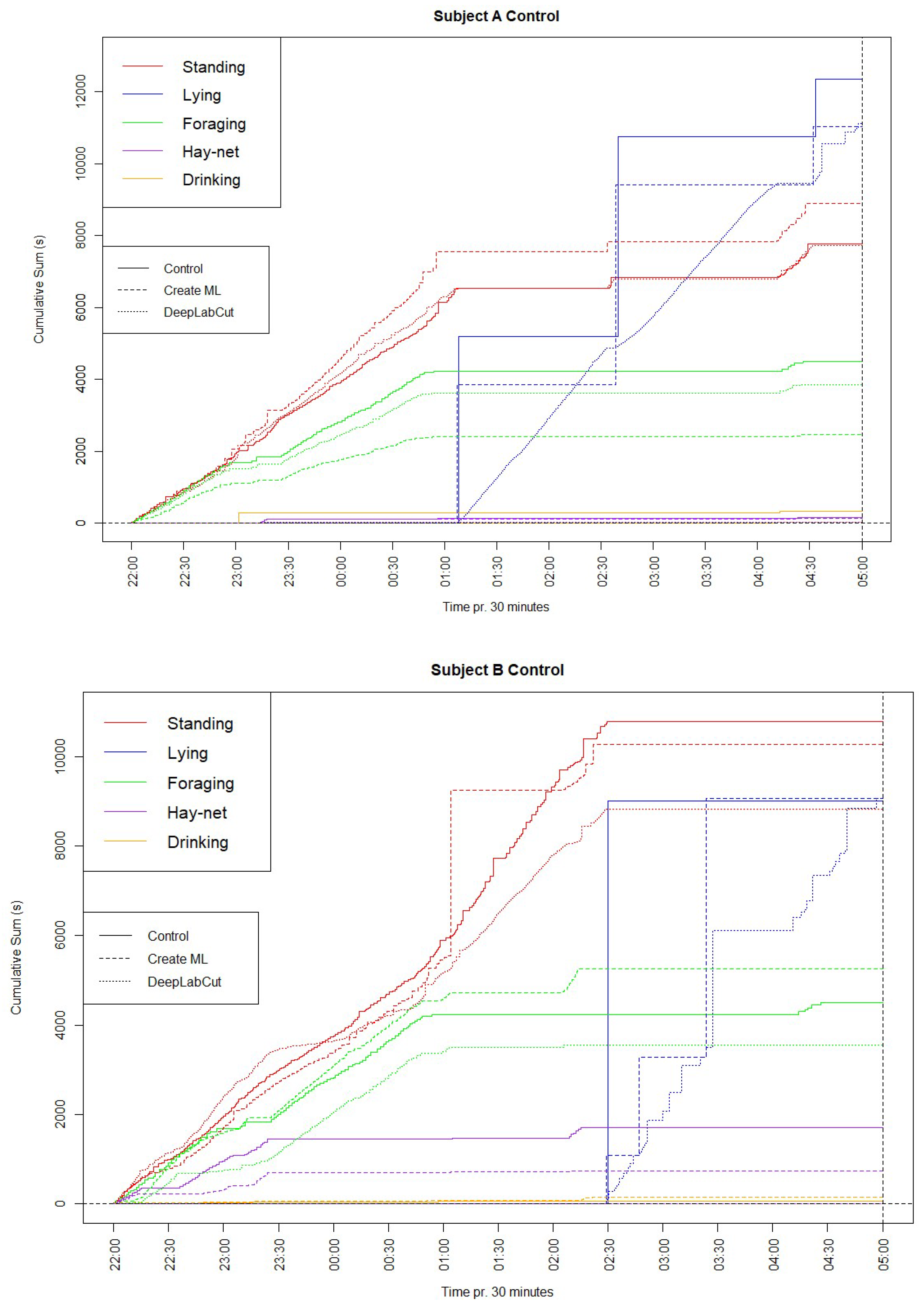

To analyze behavioral differences between the two subjects, time budgets of the total time spent on each behavior over the 7 nights were constructed for both machine learning models, as shown in Figure 3.

The time budgets show some differences between the models, most noticeably in the Out of View percentages for Subject B. Standing and Lying down were similar in both models for both subjects. Foraging was similar in both models for Subject A but slightly higher in the Create ML model for Subject B, possibly because of its lower Out of View percentage. The DeepLabCut model did not measure Drinking for either subject. The total time budgets for the period differed only slightly between the two subjects.

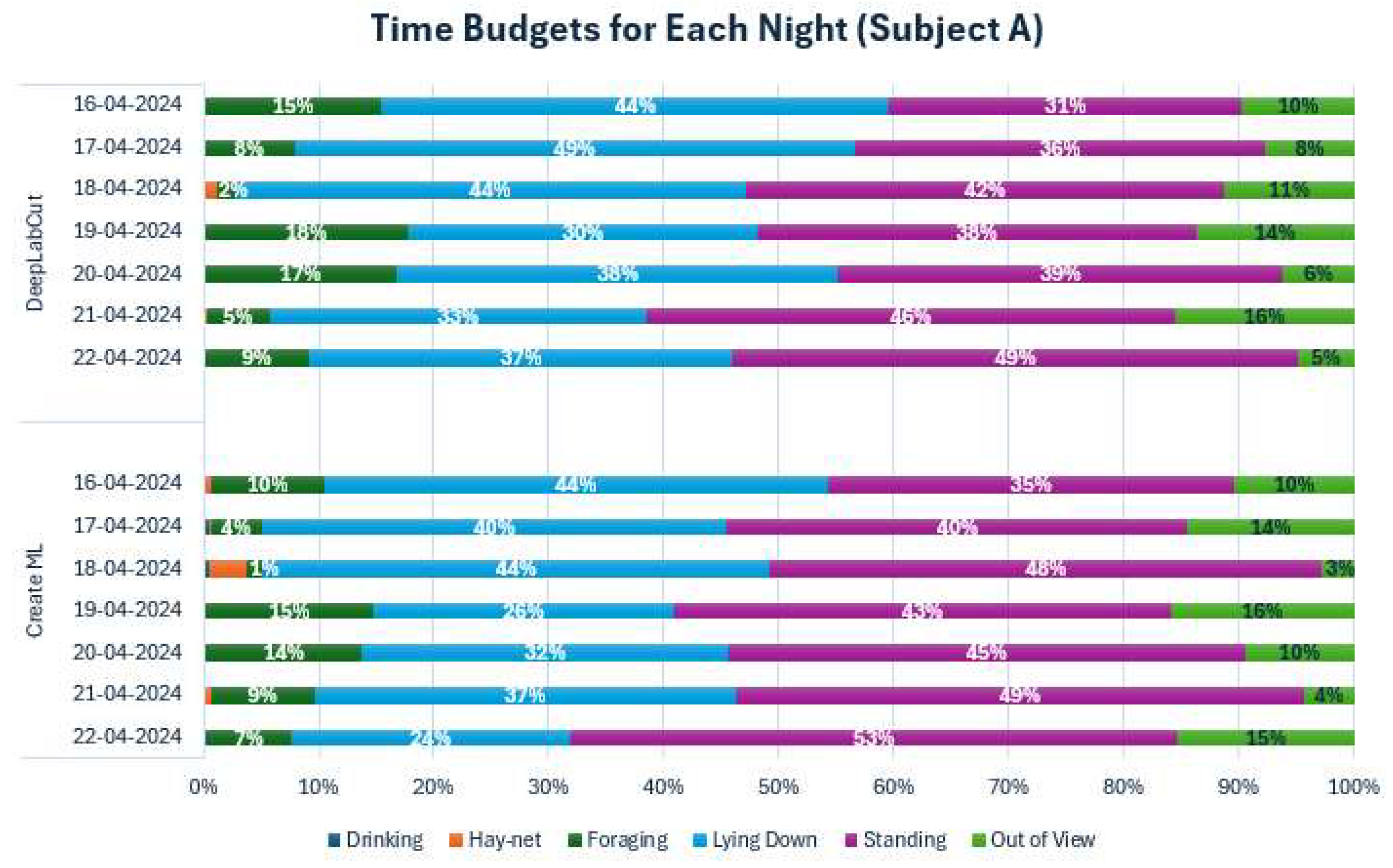

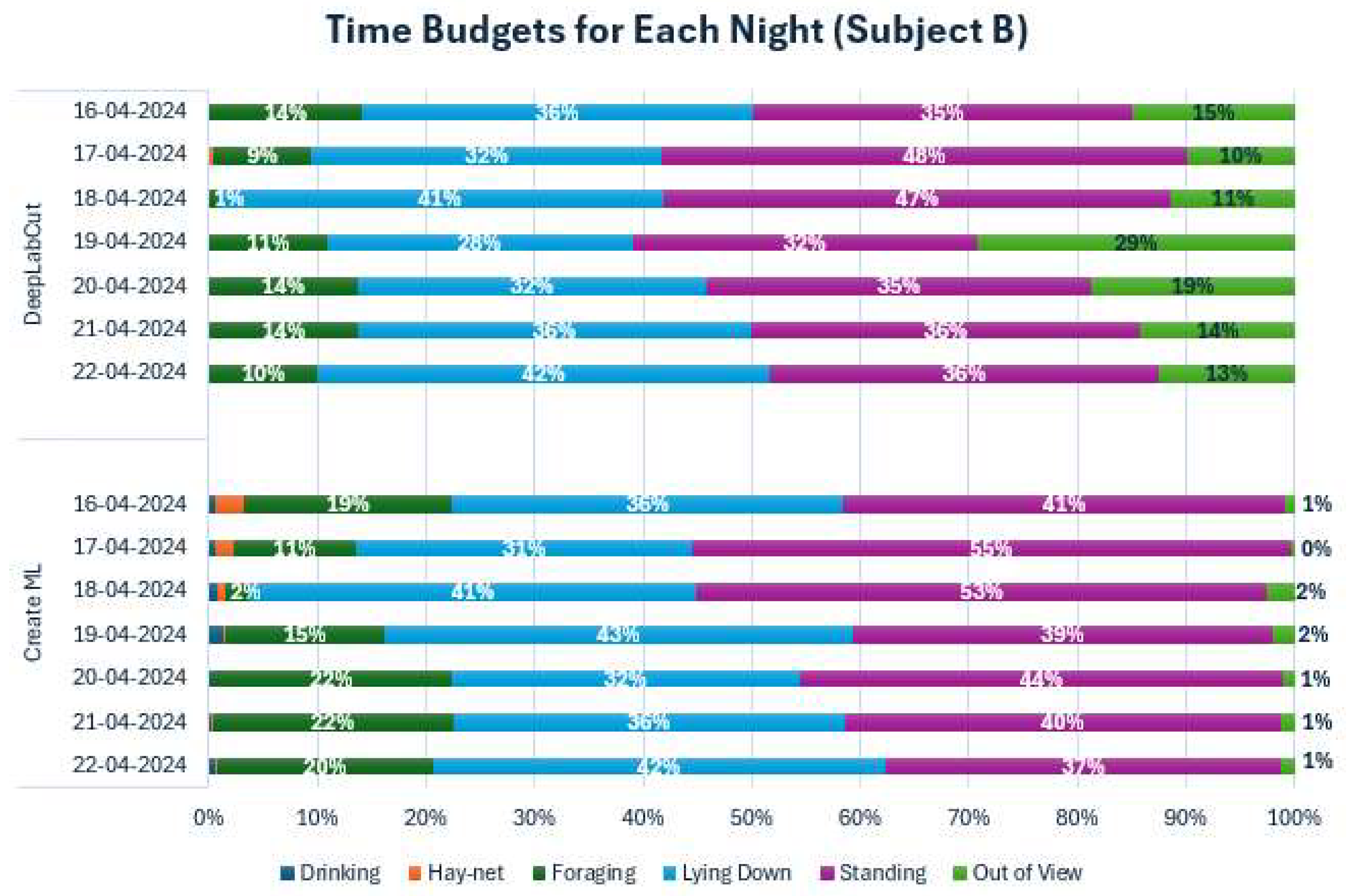

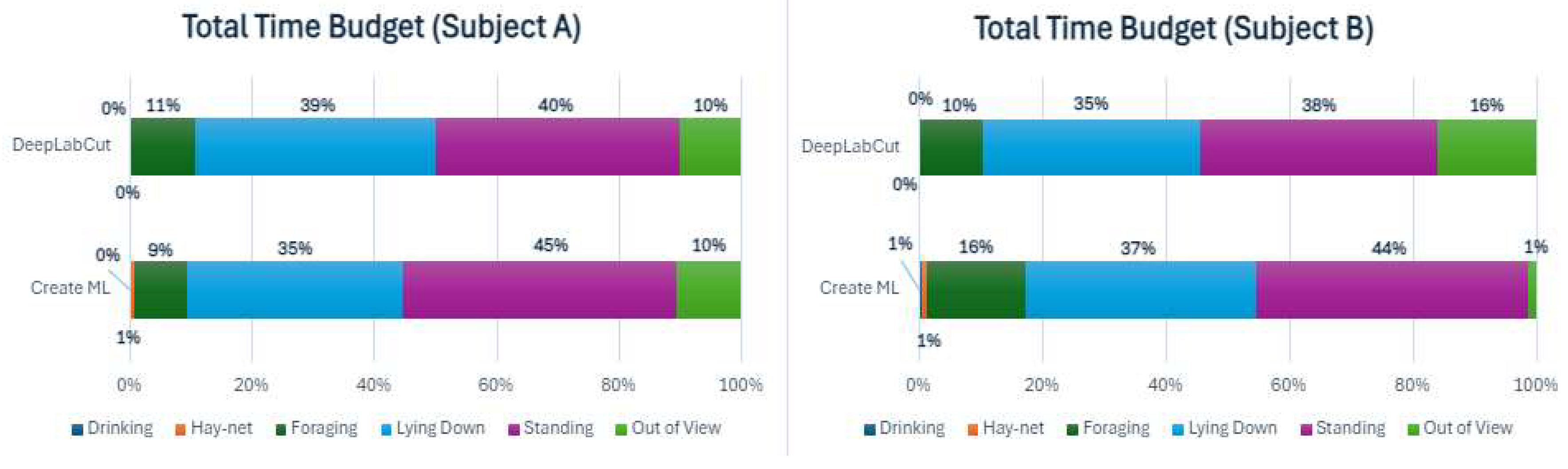

To further investigate the behaviors of both subjects during the observed period, time budgets for each individual day are shown in Appendix D. The time budgets for Subject A showed some variation between days, especially in Foraging; Standing and Lying down also varied somewhat from night to night. Subject B also showed variation in Foraging, though generally less than Subject A, and Standing and Lying down varied somewhat. The Out of View percentages for Subject B differed noticeably between the models, with a consistently much lower percentage for Create ML. The behavioral differences were examined further using cumulative graphs (Appendix E).

Kendall’s Coefficient of Concordance was used to examine whether the amount of time spent on each behavior was the same each day, based on the results of both the DeepLabCut and the Create ML model. For Subject A, the concordance was 0.935 for the DeepLabCut model and 0.865 for the Create ML model; for Subject B, it was 0.951 for the DeepLabCut model and 0.869 for the Create ML model. All concordance values were high and had significant p-values, indicating that the high concordance is unlikely to be due to chance. This high concordance indicates agreement in the time a subject spends on the different behaviors from day to day. The concordance is not perfect, however, meaning that some variation is still present in the subjects’ nocturnal behavior.

Spearman’s rank correlation for Lying down was investigated for both models, with the analysis split between days and between individuals (Appendix F).

The analysis between days resulted in mainly positive correlations. For Subject A, correlations ranged from -0.053 to 0.965 for DeepLabCut and from -0.135 to 0.970 for Create ML. Negative correlations were observed between the 20th and 22nd of April for DeepLabCut and between the 19th and 20th of April for Create ML. For Subject B, correlations ranged from 0.019 to 0.999 for DeepLabCut and from 0.311 to 1.000 for Create ML; no negative correlations were found for Subject B.

The analysis between the two subjects also resulted in mainly positive correlations (Appendix G). For the DeepLabCut model, correlations ranged from -0.361 to 0.975, with a single negative correlation between the 20th of April for Subject A and the 19th of April for Subject B. For the Create ML model, correlations ranged from 0.052 to 0.977, and no negative correlations were found.

3.2.2. Investigating Further Applications of Automatic Behavioral Coding

Certain behaviors of a more complex character may potentially be assessed using machine learning methods for behavioral coding. One such behavior is the stereotypic behavior ‘Swaying’, which is highly relevant for elephants [14]. This behavior is difficult to categorize as simply as the behaviors above, since swaying can occur anywhere in the frame and is primarily observable as a side-to-side motion of the elephant’s trunk and head. To visualize this behavior using data from the DeepLabCut model, the cumulative distance moved by the point labelled at the trunk root of Subject B was plotted as a cumulative graph, along with the sway noted manually in the control period, in Figure 4.

As seen in the cumulative graph, a steep increase in the trunk distance appears around 01:15, which approximately matches the manually observed sway at this time. This is expected, because the repeated side-to-side movement of swaying causes a steep increase in the distance moved by the trunk root.

4. Discussion

4.1. Performance and Limitations of the Two Machine Learning Models

Before the two machine learning models can be used, their accuracy must be investigated relative to manual observations. The concordance test showed high agreement between the models and the manual observations (W = 0.848 and 0.90 for Subjects A and B, respectively), although there is seemingly room for improvement. The confusion matrices for each model compared with the manual observations also showed high accuracy for some behaviors, such as Standing and Lying down, but also revealed certain challenges, such as confusing Hay-net with Foraging for Subject B. This suggests that the parameters for classifying each behavior may need to be improved, or that the models should be trained on better or more material to account for different environments, footage quality, and a broader range of behaviors. Mathis et al. (2018) discussed the capabilities and limitations of DeepLabCut for various behaviors, noting similar challenges in behavior recognition and out-of-view instances [32].

However, the accuracy of these models is based on comparison with manual observations, which have inherent problems of their own. Manual observations are not entirely accurate, since they may lack precision in noting the exact time at which a behavior occurs, and different researchers may code the same sequence of behaviors differently, which usually necessitates inter-rater reliability tests [41,49,50]. These issues should not be present in machine learning models, since a behavior is coded at the exact frame and the coding can be standardized across studies. The capabilities of the models constructed in Create ML and DeepLabCut thus emphasize the potential of machine learning models to complement and enhance traditional manual observation in behavioral studies.

4.2. Nocturnal Behavioral Differences of the Two Subjects

The two machine learning models were used to automatically observe the two subjects of the study, with the aim of assessing whether the nocturnal behaviors varied between each subject and individually across each observed night. This analysis was carried out using time budgets, cumulative graphs, and correlations.

Firstly, the total time budgets for each subject across all nights showed only slight differences between the subjects, most notably in foraging, which might be higher for Subject B. This slight difference is supported by the high concordance values between days, meaning that the observed behavior differs only slightly from day to day. It is, however, inconclusive whether Subject B generally forages more, because the Out of View percentages differed owing to the models’ lack of confidence. A closer look at the behavioral patterns in the cumulative graphs (Appendix E) does, however, suggest some differences between the two subjects. Again, Foraging appears to be generally higher for Subject B, along with Drinking. The Lying down cumulative graph also suggests that sleeping patterns may differ somewhat, since Subject A appears to wake up and walk around more often during the night, whereas Subject B appears to lie down for longer periods at a time. The correlations between the two subjects’ sleeping patterns also showed some correlation, indicating that the subjects go to sleep at similar times, although this varies from night to night. The two elephants thus differ in their nocturnal behavioral patterns, but the differences do not appear to be large, and overall the subjects show broadly similar behavioral patterns throughout the night. This is in accordance with Bertelsen et al. (2020), who studied the same subjects and found personality differences expressed through behavior, although these differences were also relatively small [14]. Rees (2009) likewise found individual behavioral differences in captive Asian elephants (Elephas maximus) [10], as did Tobler (1992), Holdgate et al. (2016), and Schiffmann et al. (2023), who examined recumbent sleep behavior in zoo-housed Asian and African elephants [15,17,51].

Whether each subject’s behavioral patterns differed from night to night was investigated using time budgets for each night, along with cumulative graphs and correlations of sleeping behavior. The time budgets suggest that both subjects vary from night to night in all behaviors. Foraging ranges from very low percentages (1-2%) to high percentages (18-22%), which is also apparent from the cumulative graphs. This is in accordance with Finch et al. (2021), who found varying feeding behavior in the nocturnal activity budgets of zoo-housed Asian elephants [52]. Standing and Lying down also differed across nights for both subjects, with a range of approximately 20 percentage points for both behaviors. Further investigation of sleeping patterns using Spearman rank correlations showed that most days were very highly correlated, indicating a general circadian rhythm, in accordance with Casares et al. (2016), who investigated cortisol levels to establish the circadian rhythm of African elephants [53]. However, some days showed much weaker correlations, suggesting night-to-night differences in sleeping patterns caused by the elephants going to sleep at different times. This confirms the hypothesis that computer vision models can demonstrate that nocturnal behavioral patterns differ from night to night for both individuals, although there is some uncertainty about exactly how much the behaviors differ because of the Out of View percentages. This result is in accordance with Rees (2009) and Holdgate et al. (2016), who found considerable day-to-day variation in activity budgets for a group of captive Asian elephants and for African and Asian elephants, respectively [10,15].

This study showed that the subjects were lying down for approximately 35-39% of the observed time, or just over 2.5 hours, and, as seen in the cumulative graphs, were still lying down at 05:00, when observation ended. This is in accordance with studies such as Holdgate et al. (2016) and Schiffmann et al. (2023), who found that captive elephants generally lie down for a similar amount of time during the night, although they also note that the elephants may not be sleeping throughout this time [15,17].

4.3. Other Applications of Machine Learning Models for Behavioral Coding

As the results on sway behavior showed, complex behaviors may be difficult to address, although different techniques may make it possible. One such technique was demonstrated by plotting the distance moved by a point on the trunk root of Subject B. The steep incline in the graph largely matches the manually observed sway during the night, which indicates that such a measurement might be useful for observing sway. Currently, however, sway can only be identified visually in this way, since precisely defining parameters that discern when the data should be categorized as sway poses further challenges. Developing such parameters and applying them to similar machine learning model data in the future would make it possible to find stereotypic behavior quickly and accurately and thereby gain insight into the animals’ welfare.
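One way to turn the visual reading of the cumulative trunk-root distance into an explicit rule is to flag time windows in which the distance rises by more than a threshold, and then merge overlapping windows into episodes. The sketch below is hypothetical: the window length and threshold are placeholders that would need to be calibrated against manually scored sway, and because walking also moves the trunk root, a practical rule would likely need an extra condition (for example, that the body stays in place) to separate sway from locomotion.

```python
def flag_sway_windows(curve, window, threshold):
    """Start indices of windows where the cumulative distance rises by more than `threshold`."""
    return [
        i for i in range(len(curve) - window)
        if curve[i + window] - curve[i] > threshold
    ]


def sway_episodes(curve, window, threshold):
    """Merge overlapping flagged windows into (start, end) frame-index episodes."""
    episodes = []
    for i in flag_sway_windows(curve, window, threshold):
        if episodes and i <= episodes[-1][1]:
            episodes[-1][1] = i + window  # extend the current episode
        else:
            episodes.append([i, i + window])
    return [tuple(e) for e in episodes]


# Made-up cumulative distance curve (pixels) with one steep stretch.
curve = [0, 1, 2, 10, 20, 21]
print(sway_episodes(curve, window=1, threshold=5))
```

In practice, the window would span several seconds of frames so that a single noisy label jump does not trigger a false episode.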

5. Conclusions

It is apparent from this study that machine learning models built with DeepLabCut and Create ML are capable tools for aiding, or even replacing, certain aspects of behavioral studies. The models could detect simple behaviors with high accuracy, although limitations were met when assessing more complex behaviors such as Sway, which may require further work.

Applying the models to 7 nights of footage provided general insight into nocturnal behavioral patterns, into differences between the two studied subjects and into differences between individually observed nights. This showed that the constructed computer vision models can effectively aid behavioral analyses, although further expansion and adjustment of the models may be desirable.

Author Contributions

Conceptualization, S.M.L., J.N., F.G., M.G.N., C.P. and T.H.J.; methodology, S.M.L., J.N., F.G. and M.G.N.; validation, S.M.L., J.N., F.G. and M.G.N.; formal analysis, S.M.L., J.N., F.G. and M.G.N.; investigation, S.M.L., J.N., F.G. and M.G.N.; data curation, S.M.L., J.N., F.G. and M.G.N.; writing—original draft preparation, S.M.L., J.N., F.G. and M.G.N.; writing—review and editing, S.M.L., J.N., F.G., M.G.N., C.P. and T.H.J.; visualization, S.M.L., J.N., F.G. and M.G.N.; supervision, C.P. and T.H.J. All authors have read and agreed to the published version of the manuscript.

Funding

Funding for this study was provided by the Aalborg Zoo Conservation Foundation (AZCF; grant number 07-2024).

Institutional Review Board Statement

Ethical review and approval were waived for this study as behavior observations of the animals were the only procedure included.

Informed Consent Statement

We obtained approval from Aalborg Zoo, and all work was carried out under good animal welfare and ethical conditions. There were no changes to the daily routines of the animals concerned.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

We would like to thank the employees at Aalborg Zoo for facilitating this study, especially Anders Rasmussen, Paw Fonager Gosmer and Marianne (My) Eskelund Reetz. Special thanks to Kasper Kystol Andersen for assistance with the technical aspects. Lastly, we would like to thank Simeon Lucas Dahl for technical support.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Floor Plan of Elephant Enclosure

Figure A1.

Illustration of the elephant enclosure in Aalborg Zoo. The indoor enclosures are colored yellow and labeled with their names and dimensions. The dashed lines indicate the outdoor enclosure and the part of the wall between enclosures E1 and E2 where the subjects can have physical contact at night. The locations and positions of the cameras are indicated by the camera icons. The illustration is modified from Bertelsen et al., 2020 [14].

Appendix B. Selected Behaviors Displayed in Subject Enclosures

Figure A2.

Selected behaviors displayed in the subject enclosure E1 as observed through video monitoring. The images showcase four distinct behaviors: Drinking, Foraging at the box, Using the Hay-net, and Lying down.

Figure A3.

Selected behaviors displayed in the subject enclosure E2 as observed through video monitoring. The images showcase four distinct behaviors: Drinking, Foraging at the box, Using the Hay-net, and Lying down.

Appendix C. Confusion Matrix Comparison of Models

Table A1.

Confusion matrix produced from the manually observed values compared to the predicted values from the DeepLabCut model for Subject A. Darker blue indicates better prediction.

Table A2.

Confusion matrix produced from the manually observed values compared to the predicted values from the DeepLabCut model for Subject B. Darker blue indicates better prediction.

Table A3.

Confusion matrix produced from the manually observed values compared to the predicted values from the Create ML model for Subject A. Darker blue indicates better prediction.

Table A4.

Confusion matrix produced from the manually observed values compared to the predicted values from the Create ML model for Subject B. Darker blue indicates better prediction.
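
Tables A1 to A4 compare manually observed labels with model predictions. The sketch below shows how such a matrix and a row-normalized version could be built from two aligned label sequences; a color scale could be based on the row-normalized values. The orientation (rows as manual observations, columns as predictions), the normalization and the example labels are assumptions and are not details confirmed by the tables.

```python
def confusion_matrix(manual, predicted, classes):
    """Rows: manually observed behavior; columns: model prediction."""
    if len(manual) != len(predicted):
        raise ValueError("label sequences differ in length")
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for true_label, pred_label in zip(manual, predicted):
        matrix[index[true_label]][index[pred_label]] += 1
    return matrix


def row_normalise(matrix):
    """Share of each observed behavior assigned to each predicted class."""
    normalised = []
    for row in matrix:
        total = sum(row)
        normalised.append([v / total if total else 0.0 for v in row])
    return normalised


def accuracy(matrix):
    """Fraction of intervals on the diagonal (prediction == observation)."""
    total = sum(sum(row) for row in matrix)
    return sum(matrix[i][i] for i in range(len(matrix))) / total


# Hypothetical aligned labels for six scored intervals.
classes = ["Standing", "Lying down", "Foraging"]
manual = ["Standing", "Standing", "Lying down", "Foraging", "Foraging", "Foraging"]
predicted = ["Standing", "Foraging", "Lying down", "Foraging", "Foraging", "Standing"]
m = confusion_matrix(manual, predicted, classes)
print(m)
print(row_normalise(m))
print(round(accuracy(m), 3))
```

With row normalization, the diagonal reads as the proportion of each manually observed behavior that the model recovered. This is one plausible meaning of "better prediction" in the captions.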

Appendix D. Time Budgets for Each Night

Figure A4.

Time budgets from both models for each observed night for Subject A. Each color represents a behavior. Percentages are omitted for Drinking and Hay-net because of their low values.

Figure A5.

Time budgets from both models for each observed night for Subject B. Each color represents a behavior. Percentages are omitted for Drinking and Hay-net because of their low values.
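
Per-night time budgets like those in Figures A4 and A5 reduce to counting labels per night. The sketch below assumes each model's output has already been converted into one behavior label per fixed interval for every night. The dictionary layout, the function names and the example labels are hypothetical. Reporting the difference in percentage points makes disagreements between the two models easy to scan.

```python
from collections import Counter


def budget(labels):
    """Percentage of intervals assigned to each behavior."""
    counts = Counter(labels)
    return {behavior: 100 * n / len(labels) for behavior, n in counts.items()}


def compare_models(model_a, model_b):
    """model_a / model_b: dict night -> list of labels from one model.
    Returns night -> behavior -> (pct_a, pct_b, pct_a - pct_b)."""
    result = {}
    for night in sorted(set(model_a) & set(model_b)):  # nights both models scored
        a, b = budget(model_a[night]), budget(model_b[night])
        result[night] = {
            beh: (a.get(beh, 0.0), b.get(beh, 0.0), a.get(beh, 0.0) - b.get(beh, 0.0))
            for beh in sorted(set(a) | set(b))
        }
    return result


# Hypothetical labels for one night from each model.
dlc = {"2024-04-16": ["Standing"] * 3 + ["Lying down"]}
create_ml = {"2024-04-16": ["Standing"] * 2 + ["Lying down"] * 2}
for night, rows in compare_models(dlc, create_ml).items():
    for behavior, (pa, pb, diff) in rows.items():
        print(night, behavior, f"{pa:.1f}% vs {pb:.1f}% ({diff:+.1f} pp)")
```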

Appendix E. Cumulative Graphs for Every Behavior Each Night

Figure A6.

Cumulative graphs for every behavior for both subjects each observed night. Each color/line type signifies the subject, and every line signifies a different night.

The Standing behavior was roughly similar in shape and sum for each subject and across nights, although Subject B had somewhat higher sums on several nights. Lying down was also roughly similar for both subjects and between nights. It generally began later in the night but then usually accounted for much of the remaining night. Foraging occurred mostly at the beginning of the night for both subjects, although Subject B tended to have higher sums than Subject A, and the shapes and sums of the foraging curves varied from night to night. Hay-net use was infrequent for both individuals and occurred mostly at the beginning of the night. Drinking was likewise infrequent for both individuals and occurred mostly at the beginning of the night, with higher sums for Subject B.
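
The curve shapes described above (early foraging, late lying down) can also be summarized numerically. The sketch below assumes one label per fixed interval, ordered in time. It builds a cumulative curve for each behavior and reports the interval at which half of the night's total was reached. This half-way index is an illustrative summary chosen here and is not a measure used in the study. The labels are hypothetical.

```python
def cumulative_counts(labels, behavior):
    """Running count of intervals labeled `behavior` (one cumulative curve)."""
    total, curve = 0, []
    for label in labels:
        total += label == behavior  # True counts as 1
        curve.append(total)
    return curve


def time_to_fraction(curve, fraction=0.5):
    """Index of the first interval at which the curve reaches `fraction`
    (0 < fraction <= 1) of its final value; None if the behavior never occurred."""
    if not curve or curve[-1] == 0:
        return None
    target = fraction * curve[-1]
    for i, value in enumerate(curve):
        if value >= target:
            return i
    return None


# Hypothetical night scored in eight intervals.
night = ["Foraging", "Foraging", "Standing", "Foraging", "Standing",
         "Lying down", "Lying down", "Lying down"]
for behavior in ("Foraging", "Lying down", "Drinking"):
    curve = cumulative_counts(night, behavior)
    print(behavior, curve, time_to_fraction(curve))
```

A front-loaded behavior such as Foraging reaches its half-way point early, whereas Lying down reaches it late. Comparing these indices across nights gives a compact view of the night-to-night differences in shape.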

Appendix F. Spearman Rank Correlation between Days

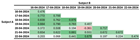

Table A5.

DeepLabCut - Subject A. Spearman rank correlation matrix for the DeepLabCut model of Subject A, based on data collected between April 16, 2024, and April 22, 2024. The matrix displays the pairwise correlation coefficients between the data sets corresponding to each date. The color intensity represents the strength of the correlation, with darker green indicating stronger positive correlation and darker red indicating stronger negative correlation.

Table A6.

DeepLabCut - Subject B. Spearman rank correlation matrix for the DeepLabCut model of Subject B, based on data collected between April 16, 2024, and April 22, 2024. The matrix displays the pairwise correlation coefficients between the data sets corresponding to each date. The color intensity represents the strength of the correlation, with darker green indicating stronger positive correlation and darker red indicating stronger negative correlation.

Table A7.

Create ML - Subject A. Spearman rank correlation matrix for the Create ML model of Subject A, based on data collected between April 16, 2024, and April 22, 2024. The matrix displays the pairwise correlation coefficients between the data sets corresponding to each date. The color intensity represents the strength of the correlation, with darker green indicating stronger positive correlation and darker red indicating stronger negative correlation.

Table A8.

Create ML - Subject B. Spearman rank correlation matrix for the Create ML model of Subject B, based on data collected between April 16, 2024, and April 22, 2024. The matrix displays the pairwise correlation coefficients between the data sets corresponding to each date. The color intensity represents the strength of the correlation, with darker green indicating stronger positive correlation and darker red indicating stronger negative correlation.

Appendix G. Spearman Rank Correlation between Individuals

Table A9.

Spearman rank correlation matrix comparing the data of Subject A and Subject B for the DeepLabCut model, from April 16, 2024, to April 22, 2024. The matrix presents the correlation coefficients between corresponding dates for the two subjects. The color intensity represents the strength of the correlation, with darker green indicating stronger positive correlation and darker red indicating stronger negative correlation.

Table A10.

Spearman rank correlation matrix comparing the data of Subject A and Subject B for the Create ML model, from April 16, 2024, to April 22, 2024. The matrix presents the correlation coefficients between corresponding dates for the two subjects. The color intensity represents the strength of the correlation, with darker green indicating stronger positive correlation and darker red indicating stronger negative correlation.

References

- J. S. Veasey, "Differing animal welfare conceptions and what they mean for the future of zoos and aquariums, insights from an animal welfare audit," Zoo Biology, vol. 41, no. 4, pp. 292-307, 2022.

- R. Barongi, F. A. Fisken, M. Parker and M. Gusset, "Committing to Conservation: The World Zoo and Aquarium Conservation Strategy," World Association of Zoos and Aquariums (WAZA) Executive Office, Gland, Switzerland, 2015.

- European Association of Zoos and Aquaria, "Standards for the Accommodation and Care of Animals in Zoos and Aquaria," EAZA, 2014.

- Danish Association of Zoos and Aquaria, "DAZA etiske retningslinjer," DAZA, 2022.

- W. J. Sutherland, "The importance of behavioural studies in conservation biology," Animal Behaviour, vol. 56, no. 4, pp. 801-809, 1998.

- S. Wolfensohn, J. Shotton, H. Bowley, S. Davies, S. Thompson and W. S. M. Justice, "Assessment of Welfare in Zoo Animals: Towards Optimum Quality of Life," Animals (Basel), vol. 8, no. 7, p. 110, 2018.

- B. M. Perdue, S. L. Sherwen and T. L. Maple, "Editorial: The Science and Practice of Captive Animal Welfare," Frontiers in Psychology, vol. 11, no. 1851, pp. 1-2, 2020.

- S. L. Sherwen and P. H. Hemsworth, "The Visitor Effect on Zoo Animals: Implications and Opportunities for Zoo Animal Welfare," Animals, vol. 9, no. 6, p. 366, 2019.

- S. Hauenstein, N. Jassoy, A.-C. Mupepele, T. Carroll, M. Kshatriya, C. M. Beale and C. F. Dormann, "A systematic map of demographic data from elephant populations throughout Africa: implications for poaching and population analyses," Mammal Review, vol. 52, no. 3, pp. 438-453, 2022.

- A. P. Rees, "Activity budgets and the relationship between feeding and stereotypic behaviors in Asian elephants (Elephas maximus) in a Zoo," Zoo Biology, vol. 28, no. 2, pp. 79-97, 2009.

- L. Yon, E. Williams, N. D. Harvey and L. Asher, "Development of a behavioural welfare assessment tool for routine use with captive elephants," PLoS One, vol. 14, no. 2, p. e0210783, 2019.

- B. J. Greco, C. L. Meehan, J. L. Heinsius and J. A. Mench, "Why pace? The influence of social, housing, management, life history, and demographic characteristics on locomotor stereotypy in zoo elephants," Applied Animal Behaviour Science, vol. 194, pp. 104-111, 2017.

- P. Bansiddhi, K. Nganvongpanit, J. L. Brown, V. Punyapornwithaya, P. Pongsopawijit and C. Thitaram, "Management factors affecting physical health and welfare of tourist camp elephants in Thailand," Biodiversity and Conservation, 2019.

- S. S. Bertelsen, A. S. Sørensen, S. Pagh, C. Pertoldi and T. H. Jensen, "Nocturnal Behaviour of Three Zoo Elephants (Loxodonta Africana)," Genetics and Biodiversity Journal, pp. 93-113, 2020.

- M. R. Holdgate, C. L. Meehan, J. N. Hogan, L. J. Miller, J. Rushen, A. M. de Passillé, J. Soltis, J. Andrews and D. J. Shepherdson, "Recumbence Behavior in Zoo Elephants: Determination of Patterns and Frequency of Recumbent Rest and Associated Environmental and Social Factors," PLoS One, vol. 11, no. 7, pp. 1-19, 2016.

- S. A. Boyle, B. Roberts, B. M. Pope, M. R. Blake, S. R. Leavelle, J. J. Marshall, A. Smith, A. Hadicke, J. F. Falcone, K. Knott and A. J. Kouba, "Assessment of Flooring Renovations on African Elephant (Loxodonta africana) Behavior and Glucocorticoid Response," PLoS One, vol. 10, no. 11, pp. 1-19, 2015.

- C. Schiffmann, L. Hellriegel, M. Clauss, B. Stefan, K. Knibbs, C. Wenker, T. Hård and C. Galeffi, "From left to right all through the night: Characteristics of lying rest in zoo elephants," Zoo Biology, vol. 42, pp. 17-25, 2023.

- B. J. Greco, C. L. Meehan, J. N. Hogan, K. A. Leighty, J. Mellen, G. J. Mason and J. A. Mench, "The Days and Nights of Zoo Elephants: Using Epidemiology to Better Understand Stereotypic Behavior of African Elephants (Loxodonta africana) and Asian Elephants (Elephas maximus) in North American Zoos," PLoS ONE, vol. 11, no. 7, 2016.

- D. M. Broom, "Welfare of Animals: Behavior as a Basis for Decisions," Encyclopedia of Animal Behavior, vol. 3, pp. 580-584, 2010.

- S. L. Jacobson, S. R. Ross and M. A. Bloomsmith, "Characterizing abnormal behavior in a large population of zoo-housed chimpanzees: prevalence and potential influencing factors," PeerJ, 2016.

- H. Bacon, "Behaviour-Based Husbandry—A Holistic Approach to the Management of Abnormal Repetitive Behaviors," Animals, vol. 8, no. 7, p. 103, 2018.

- S. Fuktong, P. Yuttasaen, V. Punyapornwithaya, J. L. Brown, C. Thitaram, N. Luevitoonvechakij and P. Bansiddhi, "A survey of stereotypic behaviors in tourist camp elephants in Chiang Mai, Thailand," Applied Animal Behaviour Science, vol. 243, 2021.

- G. J. Mason, "Stereotypies and suffering," Behavioural Processes, vol. 25, pp. 103-115, 1991.

- G. J. Mason and J. Rushen, "Stereotypic Animal Behaviour: Fundamentals and Applications to Welfare," CABI Digital Library, 2006.

- K. E. Mostard, "General understanding, neuro-endocrinologic and (epi)genetic factors of stereotypy," Nijmegen, The Netherlands, 2011.

- J. Altmann, "Observational Study of Behavior: Sampling Methods," Behaviour, vol. 49, no. 3/4, pp. 227-267, 1974.

- V. Vanitha, K. Thiyagesan and N. Baskaran, "Prevalence of stereotypies and its possible causes among captive Asian elephants (Elephas maximus) in Tamil Nadu, India," Applied Animal Behaviour Science, vol. 174, pp. 137-146, 2016.

- J. Adams and J. K. Berg, "Behavior of Female African Elephants (Loxodonta africana) in Captivity," Applied Animal Ethology, vol. 6, pp. 257-276, 1980.

- A. I. Dell, J. A. Bender, K. Branson, I. D. Couzin, G. G. de Polavieja, L. P. Noldus, A. Pérez-Escudero, P. Perona, A. D. Straw, M. Wikelski and U. Brose, "Automated image-based tracking and its application in ecology," Trends in Ecology & Evolution, vol. 29, no. 7, pp. 417-428, 2014.

- A. Gomez-Marin, J. J. Paton, A. R. Kampff, R. M. Costa and Z. F. Mainen, "Big Behavioral Data: Psychology, Ethology and the Foundations of Neuroscience," Nature Neuroscience, vol. 17, no. 11, pp. 1455-1462, 2014.

- D. J. Anderson and P. Perona, "Toward a Science of Computational Ethology," Neuron, vol. 84, no. 1, pp. 18-31, 2014.

- A. Mathis, P. Mamidanna, K. M. Cury, T. Abe, V. N. Murthy, M. W. Mathis and M. Bethge, "DeepLabCut: markerless pose estimation of user-defined body parts with deep learning," Nature Neuroscience, vol. 21, pp. 1281-1289, 2018.

- E. Mirkó, A. Dóka and Á. Miklósi, "Association between subjective rating and behaviour coding and the role of experience in making video assessments on the personality of the domestic dog (Canis familiaris)," Applied Animal Behaviour Science, vol. 149, no. 1-4, pp. 45-54, 2013.

- Z.-Q. Zhao, P. Zheng, S.-T. Xu and X. Wu, "Object Detection With Deep Learning: A Review," IEEE Transactions on Neural Networks and Learning Systems, vol. 30, no. 11, pp. 3212-3232, 2019.

- Z.-H. Zhou and S. Liu, Machine Learning, Nanjing, China: Springer Nature, 2021.

- J. Lenzi, A. F. Barnas, A. A. ElSaid, T. Desell, R. F. Rockwell and S. N. Ellis-Felege, "Artificial intelligence for automated detection of large mammals creates path to upscale drone surveys," Scientific Reports, vol. 13, no. 947, 2023.

- M. Bain, A. Nagrani, D. Schofield, S. Berdugo, J. Bessa, J. Owen, K. J. Hockings, T. Matsuzawa, M. Hayashi, D. Biro, S. Carvalho and A. Zisserman, "Automated audiovisual behavior recognition in wild primates," Science Advances, vol. 7, no. 46, 2021.

- F. Sakib and T. Burghardt, "Visual Recognition of Great Ape Behaviours in the Wild," arXiv, 2020.

- P. K. Hebbar and P. K. Pullela, "Deep Learning in Object Detection: Advancements in Machine Learning and AI," in 2023 International Conference on the Confluence of Advancements in Robotics, Vision and Interdisciplinary Technology Management (IC-RVITM), 2023.

- A. Hardin and I. Schlupp, "Using machine learning and DeepLabCut in animal behavior," Acta Ethologica, vol. 25, no. 6, pp. 125-133, 16 July 2022.

- M. Marks, Q. Jin, O. Sturman, L. von Ziegler, S. Kollmorgen, W. von der Behrens, V. Mante, J. Bohacek and M. F. Yanik, "Deep-learning-based identification, tracking, pose estimation and behaviour classification of interacting primates and mice in complex environments," Nature Machine Intelligence, vol. 4, pp. 331-340, 2022.

- T. Nath, A. Mathis, A. C. Chen, A. Patel, M. Bethge and M. W. Mathis, "Using DeepLabCut for 3D markerless pose estimation across species and behaviors," Nature Protocols, vol. 14, no. 7, pp. 2152-2176, 2019.

- O. Marques, "Machine Learning with Core ML," in Image Processing and Computer Vision in IOS, Switzerland: Springer International Publishing AG, 2020, pp. 29-40.

- T. A. Andersen, C. Herskind, J. Maysfelt and R. W. Rørbæk, "The nocturnal behaviour of African elephants (Loxodonta africana) in Aalborg Zoo and how changes in the environment affect them," Genetics and Biodiversity Journal, vol. 4, no. 2, pp. 114-130, 2020.

- S. Ruuska, W. Hämäläinen, S. Kajava, M. Mughal, P. Matilainen and J. Mononen, "Evaluation of the confusion matrix method in the validation of an automated system for measuring feeding behaviour of cattle," Behavioural Processes, vol. 148, no. 3, pp. 56-62, 2018.

- J. F. Larsen, K. K. D. Andersen, J. Cuprys, T. B. Fosgaard, J. H. Jacobsen, D. Krysztofiak, S. M. Lund, B. Nielsen, M. E. B. Pedersen, M. J. Pedersen, A. Trige-Esbensen, E. M. Walther, C. Pertoldi, T. H. Jensen, A. K. O. Alstrup and J. O. Perea-García, "Behavioral analysis of a captive male Bornean orangutan (Pongo pygmaeus) when," Archives of Biological Sciences, vol. 75, no. 4, pp. 443-458, 2023.

- A. P. Field, "Kendall's Coefficient of Concordance," Encyclopedia of Statistics in Behavioral Science, vol. 2, pp. 1010-1011, 2005.

- J. Brownlee, "Machine Learning Mastery," Guiding Tech Media, 19 August 2020. [Online]. Available: https://machinelearningmastery.com/distance-measures-for-machine-learning/. [Accessed 22 May 2024].

- M. Malviya, N. T. Buswell and C. G. P. Berdanier, "Visual and Statistical Methods to Calculate Intercoder Reliability for Time-Resolved Observational Research," International Journal of Qualitative Methods, vol. 20, 2021.

- M. R. Whiteway, D. Biderman, Y. Friedman, M. Dipoppa, E. K. Buchanan, A. Wu, J. Zhou, N. Bonacchi, N. J. Miska, J.-P. Noel, E. Rodriguez, M. Schartner, K. Socha, A. E. Urai, C. D. Salzman, J. P. Cunningham and L. Paninski, "Partitioning variability in animal behavioral videos using semi-supervised variational autoencoders," PLoS Computational Biology, vol. 17, no. 9, pp. 1-50, 2021.

- I. Tobler, "Behavioral Sleep in the Asian Elephant in Captivity," Sleep, vol. 15, no. 1, pp. 1-12, 1992.

- K. Finch, F. Sach, M. Fitzpatrick and L. J. Rowden, "Insights into Activity of Zoo Housed Asian Elephants (Elephas maximus) during Periods of Limited Staff and Visitor Presence, a Focus on Resting Behaviour," Zoological and Botanical Gardens, vol. 2, pp. 101-114, 2021.

- M. Casares, G. Silván, M. D. Carbonell, C. Gerique, L. Martinez-Fernandez, S. Cáceres and J. C. Illera, "Circadian rhythm of salivary cortisol secretion in female zoo-kept African elephants (Loxodonta africana)," Zoo Biology, vol. 35, no. 1, pp. 65-69, 2016.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).