Submitted:

29 August 2024

Posted:

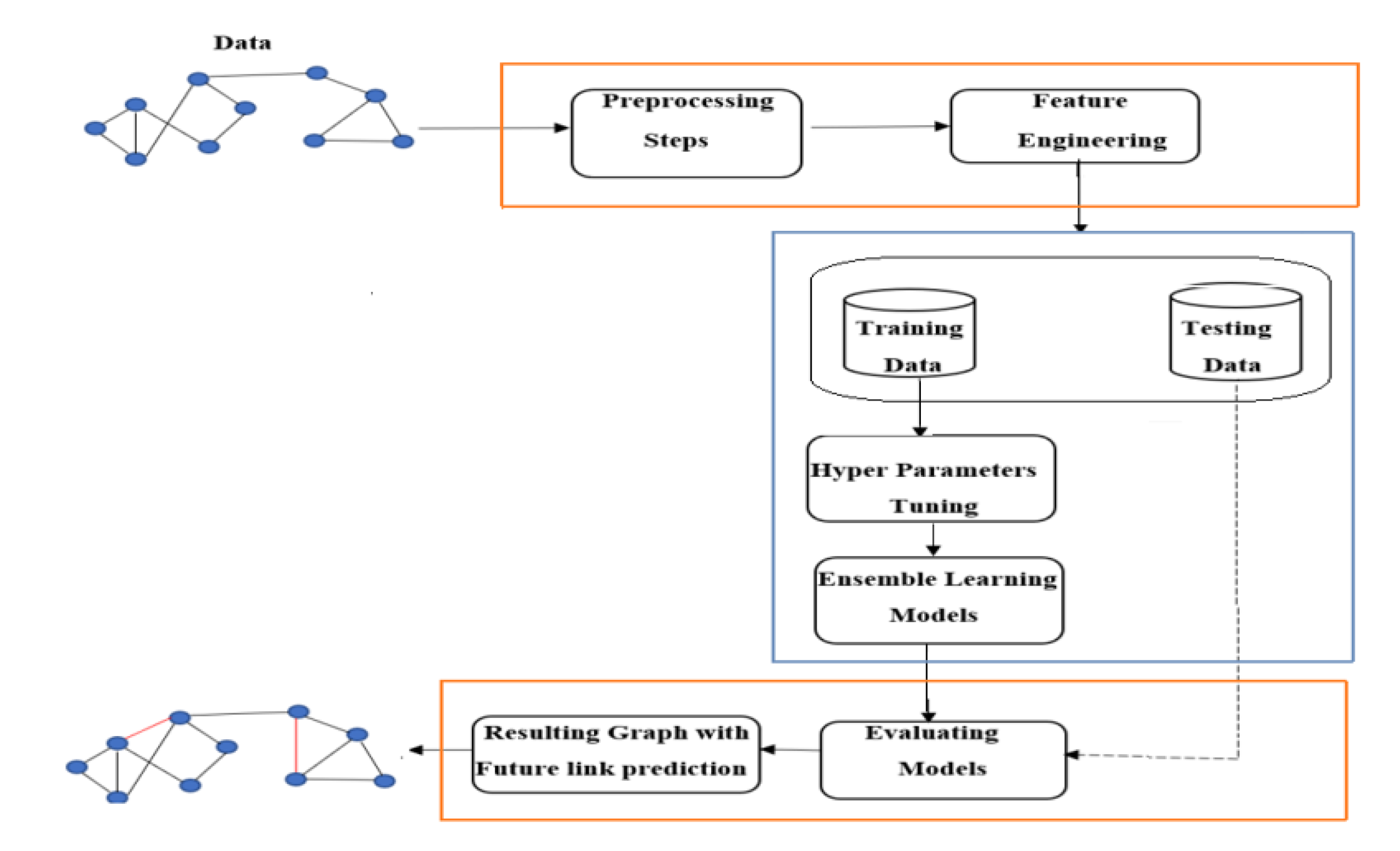

30 August 2024


Abstract

Keywords:

1. Introduction

- To introduce a novel framework for link prediction using machine learning, aiming to predict the likelihood of new connections forming in a network.

- To investigate how extracted features and ensemble learning models affect the effectiveness of the link prediction process.

- To determine the optimal hyperparameter values using the GridSearchCV technique.

- To achieve the highest accuracy by using machine learning classifiers with the most effective hyperparameter values determined through hyperparameter tuning.

- To evaluate the performance of various ensemble machine learning models using measures such as Accuracy, AUC, Recall, Precision, and F1-score.

2. Related Work

3. Proposed Methodology

3.1. Dataset

1. Twitch is a social network widely used by gamers to live-stream their games. It allows users to watch and interact with gamers in real time, fostering a vibrant community of viewers and streamers. The platform features a small number of popular gamers who have a large number of followers, creating a highly skewed follower distribution. The Twitch dataset is chosen for this study because it presents a unique and under-explored opportunity for link prediction research, given the limited amount of previous work in this area. The distinct characteristics of the dataset, including its engagement patterns and follower dynamics, make it an interesting subject for analyzing and predicting new connections.

2. Facebook is a widely used social network that connects people from all over the world. Users create profiles, share content, and interact with friends, family, and various communities through posts, comments, likes, and messages. The platform has a complex network structure with a large number of connections and interactions, making it a rich source of data for social network analysis. Given its extensive user base and the diversity of interactions, the Facebook network provides numerous opportunities for studying link prediction. Understanding how connections form, evolve, and influence user behavior can provide valuable insights into social dynamics and the spread of information. The Facebook dataset, with its complex network of relationships, serves as fertile ground for link prediction research, particularly for studying how online interactions translate into network growth and the formation of new connections.

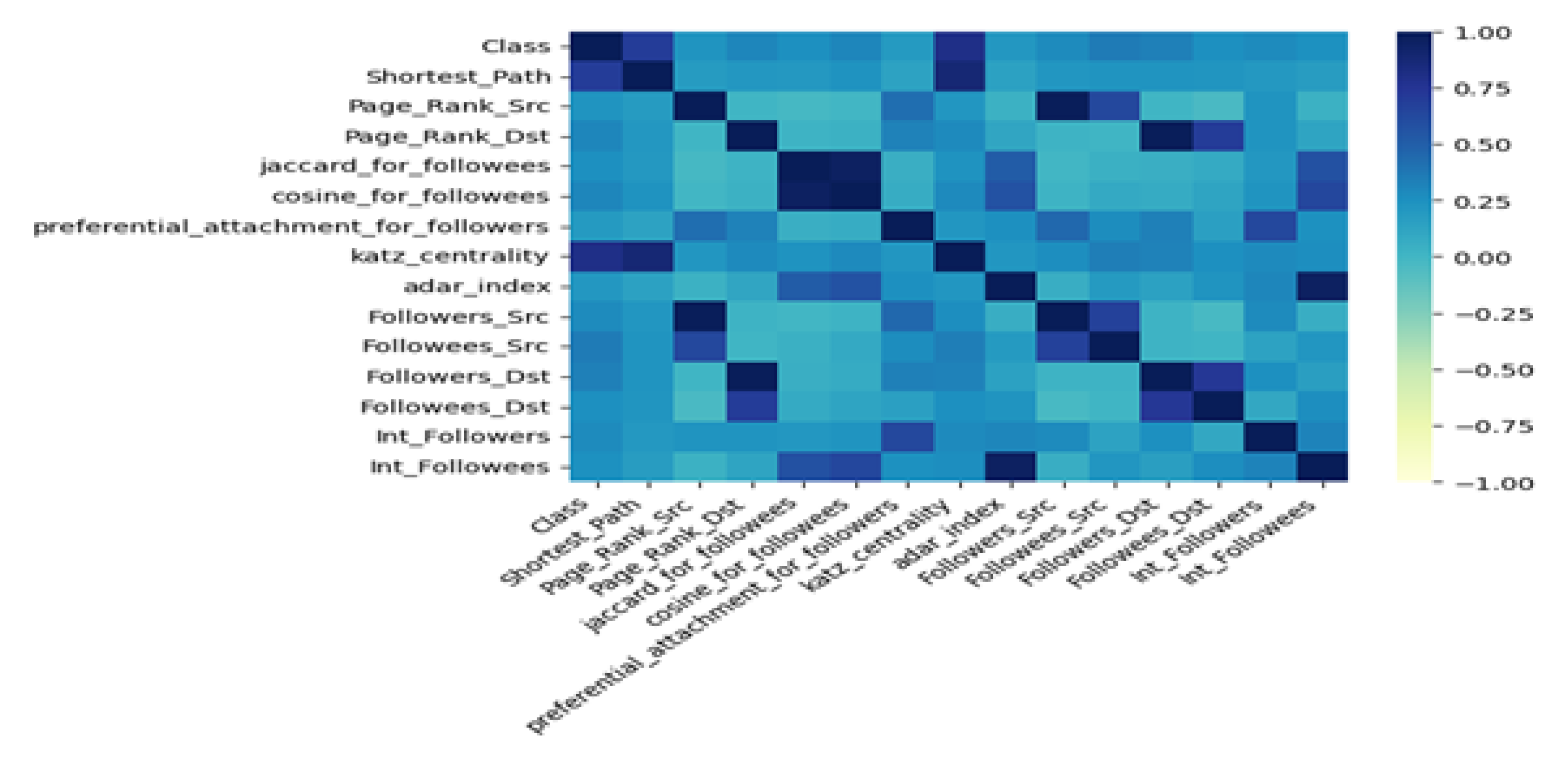

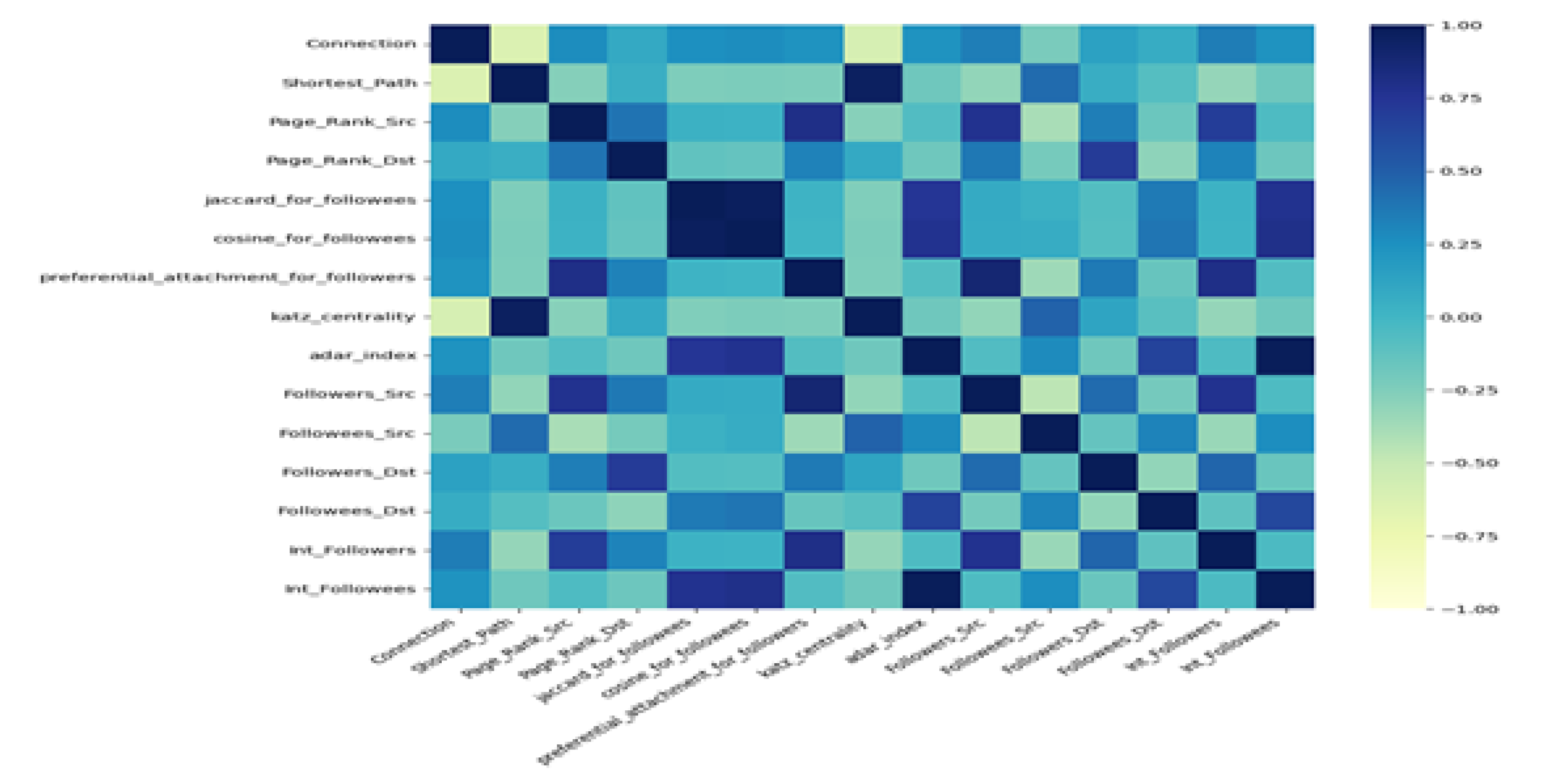

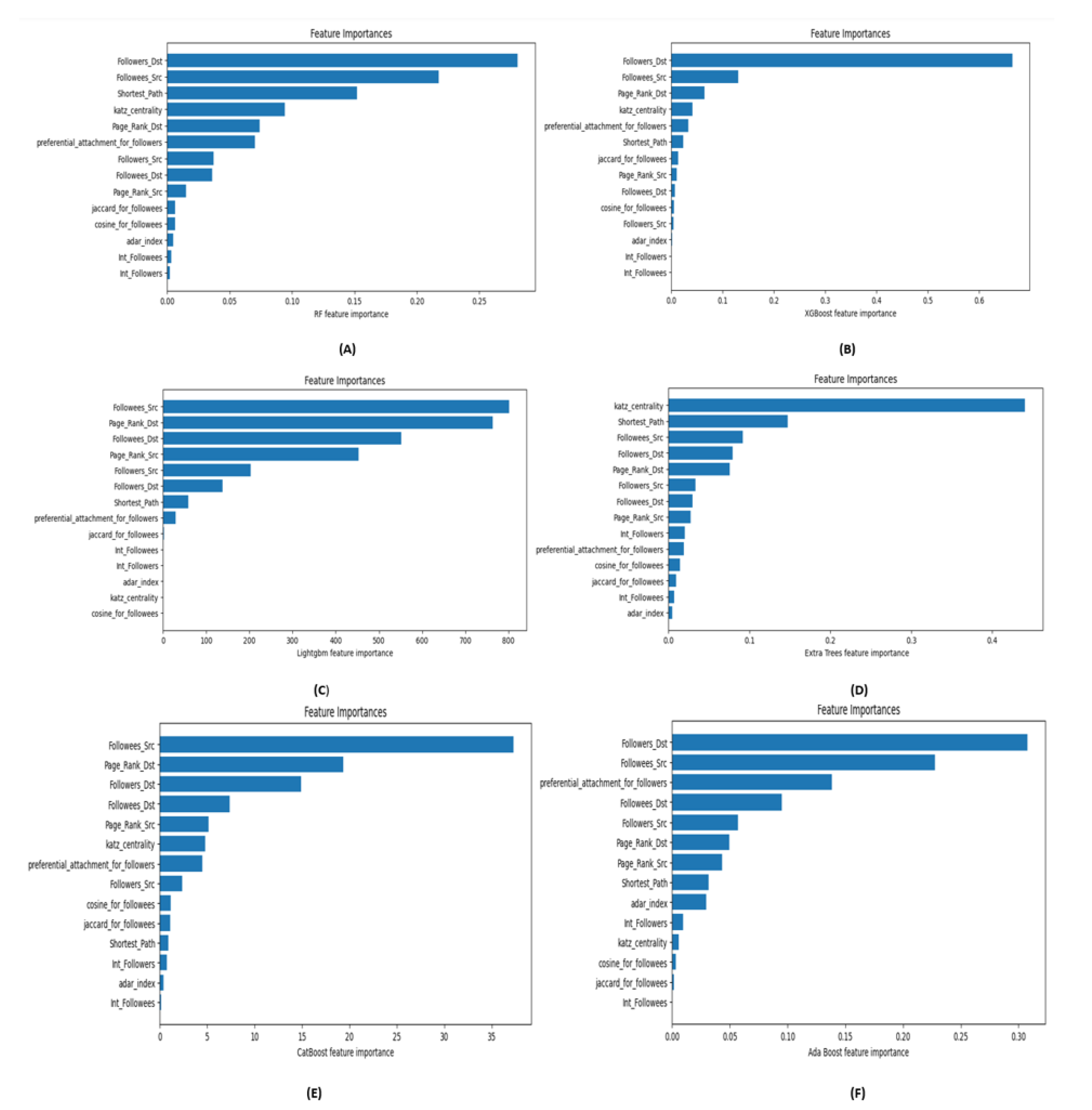

3.2. Feature Engineering

1. Shortest Path: In graph theory, the shortest path between two vertices (or nodes) is the route that minimizes the total weight of the edges along the path. Various algorithms can be used to determine the shortest path, depending on the characteristics of the graph and the specific needs of the problem.

2. Page Rank_Src (Source): Represents how much importance or "rank" the source page contributes to the linked (destination) page. The higher the PageRank of the source page, the more influence it has on the rank of the destination page.

3. Page Rank_Dst (Destination): Refers to the PageRank value of the destination page that receives hyperlinks from other pages. It is a key component in determining the importance and visibility of a webpage in search engine results. The Page Rank_Dst value accumulates contributions from all the source pages (Page Rank_Src) that link to it.

4. Jaccard_for_followees: Jaccard similarity is a metric used to measure the similarity between two sets. It is defined as the size of the intersection divided by the size of the union of the two sets. When applied to followees (people or entities that users follow on a social media platform), it indicates how similar the followee sets of two users are.

5. Cosine_for_followees: Cosine similarity is another metric used to measure the similarity between two sets or vectors. Unlike Jaccard similarity, which considers the intersection and union of sets, cosine similarity considers the angle between two vectors in a multi-dimensional space. When applied to followees, it indicates how similar the followee sets of two users are by representing the followees of each user as a vector.

6. Preferential_attachment_for_followers: Preferential attachment is a concept from network theory that describes how the probability of a new node connecting to an existing node in a network depends on the degree (number of connections) of the existing node. In the context of social networks and followers, preferential attachment helps to explain how users with more followers are more likely to gain additional followers.

7. katz_centrality: A measure used in network theory to assess the relative importance or centrality of nodes within a network. Unlike simpler centrality measures such as degree centrality, which counts the number of direct connections, Katz centrality takes into account indirect connections and the quality of those connections.

8. Adar_index: A measure of similarity between nodes in a network, often used in social network analysis and recommendation systems. It quantifies how similar two nodes are based on their shared connections and the uniqueness of those connections. Nodes that share connections through rare nodes are considered more similar than those sharing connections through common nodes.

9. Followers_Src: Refers to the followers of a source entity or user in the context of social media or network analysis. It denotes the set of users who follow a particular source entity or user.

10. Followees_Src: Refers to the users or entities that a particular source entity follows in the context of social network platforms. It represents the set of accounts, profiles, or entities that the source entity has chosen to follow.

11. Followers_Dst: Refers to the followers of a destination entity or user in the context of social media or network analysis. It denotes the set of users who follow a particular destination entity or user.

12. Followees_Dst: Refers to the entities or users that a destination entity or user follows in the context of social media or network analysis. It denotes the set of users or entities that are followed by a particular destination entity or user.

13. Int_Followers: Refers to users who are part of a specific network or community within the platform. This could include followers who are members of the same organization, group, or closed community, where certain updates or posts are limited to "internal followers" only.

14. Int_Followees: Internal followees are individuals, departments, or specific entities within an organization or network whom another entity, such as a department, team, or user, follows or subscribes to for updates, communications, or information within the network or organization platform.
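Several of the set-based features above can be illustrated with a small, self-contained sketch. It is not the authors' implementation: it assumes a directed edge `(u, v)` means "u follows v", treats the follower and followee features as set sizes, ignores edge direction when computing the Adamic-Adar index, and uses hypothetical function and key names. PageRank and Katz centrality are omitted because they require iterative solvers; graph libraries such as NetworkX provide them.

```python
import math
from collections import deque


def build_graph(edges):
    """Build followee/follower sets from directed edges (src follows dst)."""
    followees, followers = {}, {}
    for src, dst in edges:
        followees.setdefault(src, set()).add(dst)
        followers.setdefault(dst, set()).add(src)
    return followees, followers


def jaccard(a, b):
    """|A ∩ B| / |A ∪ B|, or 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cosine(a, b):
    """Cosine of binary membership vectors: |A ∩ B| / sqrt(|A| * |B|)."""
    if not a or not b:
        return 0.0
    return len(a & b) / math.sqrt(len(a) * len(b))


def shortest_path_length(followees, src, dst):
    """Unweighted BFS hop count along follow edges; -1 if dst is unreachable."""
    seen, queue = {src}, deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in followees.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return -1


def adamic_adar(followees, followers, src, dst):
    """Sum of 1 / log(degree) over common neighbours (direction ignored)."""
    def neighbours(n):
        return followees.get(n, set()) | followers.get(n, set())

    common = neighbours(src) & neighbours(dst)
    return sum(1.0 / math.log(len(neighbours(z)))
               for z in common if len(neighbours(z)) > 1)


def pair_features(followees, followers, src, dst):
    """Feature dictionary for one candidate edge (src, dst)."""
    fe_src, fe_dst = followees.get(src, set()), followees.get(dst, set())
    fr_src, fr_dst = followers.get(src, set()), followers.get(dst, set())
    return {
        "shortest_path": shortest_path_length(followees, src, dst),
        "jaccard_followees": jaccard(fe_src, fe_dst),
        "cosine_followees": cosine(fe_src, fe_dst),
        "pref_attach_followers": len(fr_src) * len(fr_dst),
        "adar_index": adamic_adar(followees, followers, src, dst),
        "num_followers_src": len(fr_src),
        "num_followees_src": len(fe_src),
        "num_followers_dst": len(fr_dst),
        "num_followees_dst": len(fe_dst),
    }


# Toy graph, purely illustrative (not taken from the Twitch or Facebook data).
edges = [(1, 2), (1, 3), (2, 3), (4, 2), (4, 3), (3, 5)]
followees, followers = build_graph(edges)
features = pair_features(followees, followers, 2, 3)
```

Each candidate pair yields one row of a feature matrix, which can then be passed to any of the ensemble classifiers discussed in this paper.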

3.3. Ensemble Learning Algorithms Construction

3.4. Performance Metrics

- (a) Accuracy: the proportion of correctly predicted links, including both existing and non-existing ones, relative to the total number of predictions. For a classification problem, accuracy can be expressed as:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

Where:

  - TP = True Positives

  - TN = True Negatives

  - FP = False Positives

  - FN = False Negatives

- (b) Precision: the proportion of predicted links that are actual links:

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

- (c) Recall: the proportion of actual links that are correctly predicted:

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

- (d) F1-Score: the harmonic mean of precision and recall, offering a single measure that balances both factors. The F1-Score is calculated using Equation 4 below:

$$\text{F1-Score} = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$

- (e) AUC (area under the ROC curve): a performance metric used to evaluate the effectiveness of a classification model. To calculate AUC, repeat the following step $t$ times: randomly choose one existing edge and one non-existent edge from the test set, and compare their scores. If the score for the existing edge is higher, increment $t_1$ by 1. If the scores are equal, increment $t_2$ by 1. Finally, the AUC can be computed as:

$$\text{AUC} = \frac{t_1 + 0.5\, t_2}{t} \tag{5}$$

Where $t$ represents the total number of comparisons.
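The metrics in this section can be computed with a few lines of plain Python. This is a minimal sketch, assuming the standard confusion-matrix definitions of accuracy, precision, recall, and F1, and assuming that the sampled AUC is (t_1 + 0.5 t_2) / t as in the comparison procedure described above. The function names, the zero-division fallbacks, and the example counts are illustrative and do not come from the paper's experiments.

```python
import random


def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def sampled_auc(existing_scores, missing_scores, t=10_000, seed=0):
    """Estimate AUC from t random (existing edge, non-existent edge) comparisons."""
    rng = random.Random(seed)
    t1 = t2 = 0
    for _ in range(t):
        s_pos = rng.choice(existing_scores)   # score of a real edge
        s_neg = rng.choice(missing_scores)    # score of a non-existent edge
        if s_pos > s_neg:
            t1 += 1
        elif s_pos == s_neg:
            t2 += 1
    return (t1 + 0.5 * t2) / t


# Made-up counts and scores, for illustration only.
metrics = classification_metrics(tp=90, tn=85, fp=15, fn=10)
auc = sampled_auc([0.9, 0.8, 0.7], [0.4, 0.3, 0.7])
```

With enough comparisons, the sampled estimate approaches the exact AUC, which is the probability that a randomly chosen existing edge scores higher than a randomly chosen non-existent one, with ties counted as one half.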

4. Experimental Results and Discussion

4.1. Experimental Setup

- Define the Model: Select the machine learning algorithm to optimize.

- Create the Parameter Grid: Specify the parameters and the values to test, typically as a dictionary whose keys are parameter names and whose values are lists of candidate values.

- Configure GridSearchCV: Initialize the GridSearchCV object with the model, the parameter grid, and options such as the number of cross-validation folds.

- Fit the Model: Train the model on the training data, cross-validating every combination in the parameter grid.

- Evaluate the Results: Analyze the results to identify the best parameters and to evaluate the model’s performance with those parameters.
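The steps above can be sketched with scikit-learn as follows. The synthetic data, the small parameter grid, the three-fold cross-validation, and the accuracy scoring are assumptions chosen to keep the example fast; they are not the settings used in the experiments.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in for the link-prediction feature matrix (14 features).
X, y = make_classification(n_samples=300, n_features=14, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# 1. Define the model.
model = RandomForestClassifier(random_state=0)

# 2. Create the parameter grid (illustrative values only).
param_grid = {
    "n_estimators": [50, 100],
    "max_depth": [5, 10, None],
    "min_samples_split": [2, 10],
}

# 3. Configure GridSearchCV with the model, the grid and the CV folds.
search = GridSearchCV(model, param_grid, cv=3, scoring="accuracy")

# 4. Fit: every parameter combination is cross-validated on the training set.
search.fit(X_train, y_train)

# 5. Evaluate: inspect the best parameters and score on held-out data.
best_params = search.best_params_
cv_accuracy = search.best_score_
test_accuracy = search.score(X_test, y_test)
```

By default, `GridSearchCV` refits the best configuration on the full training set, so `search` can be used directly for prediction afterwards.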

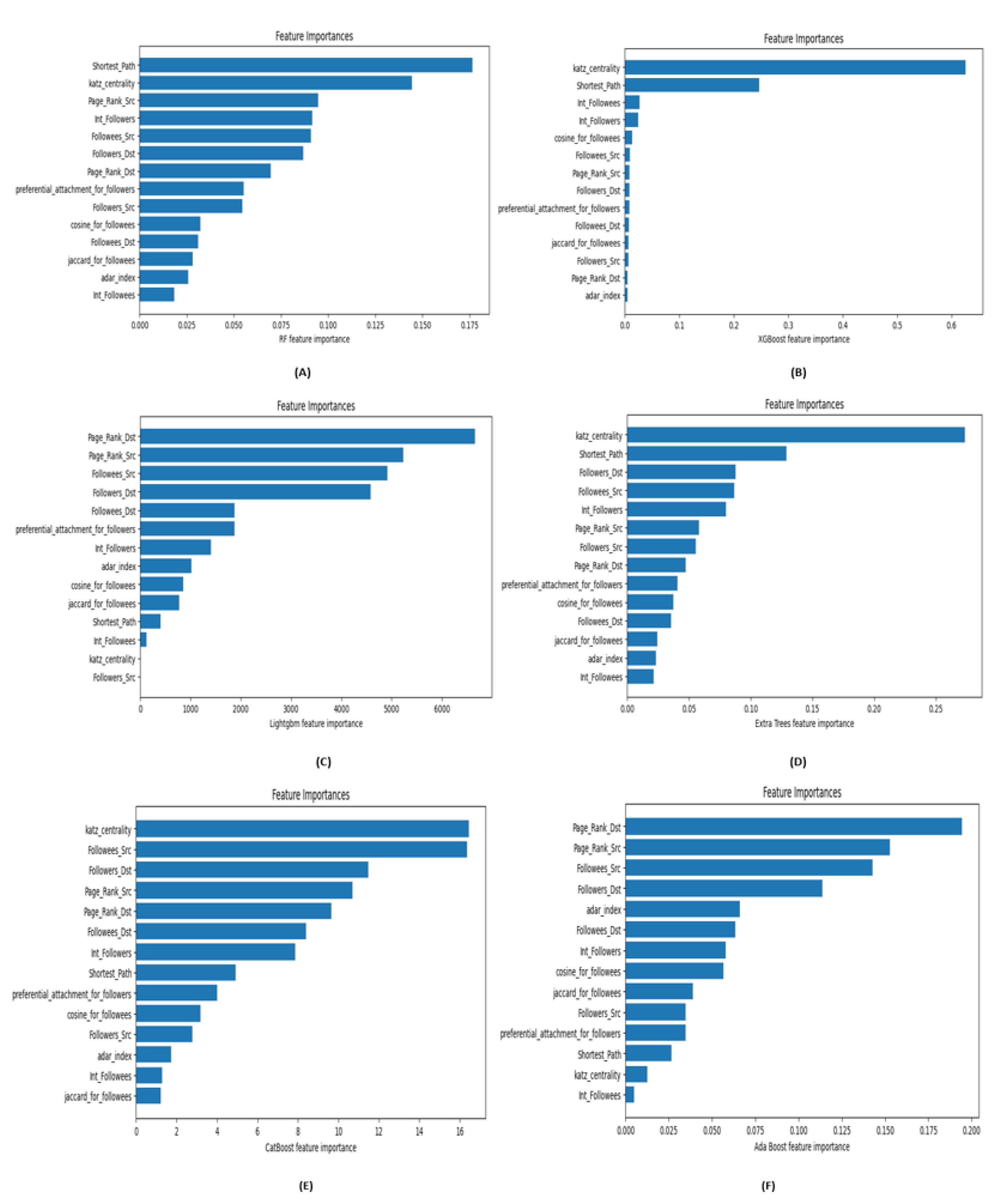

4.2. Feature Importance Models

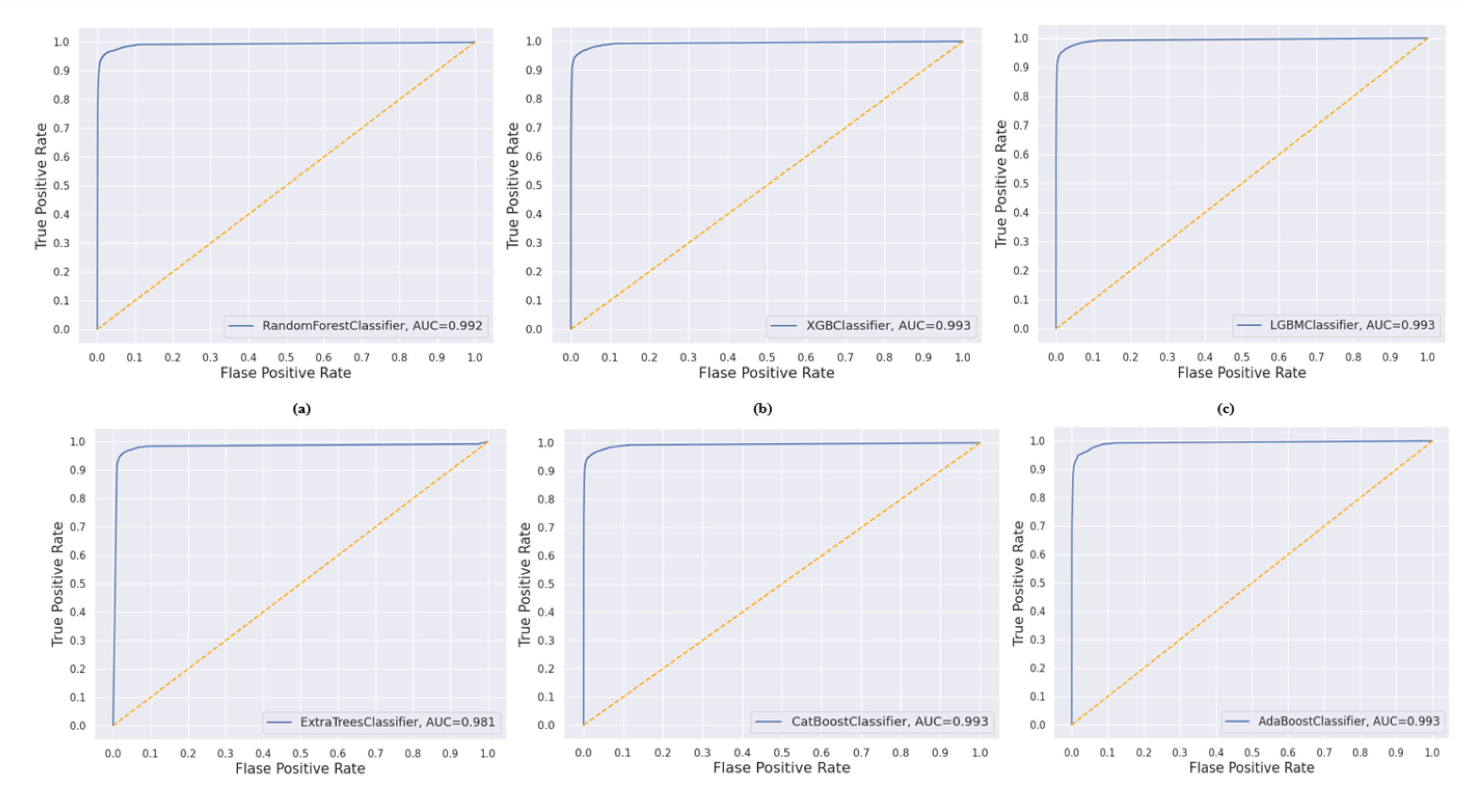

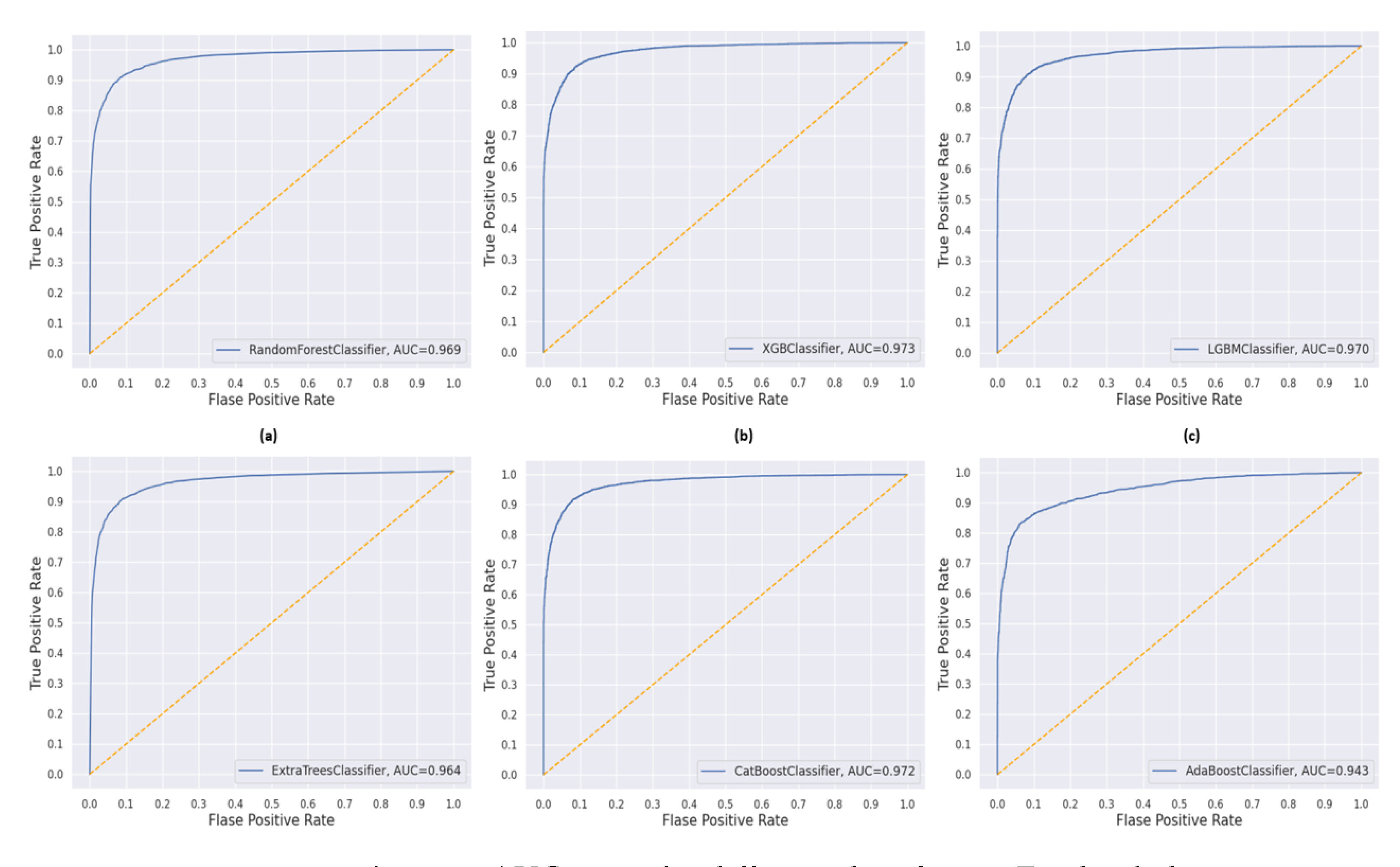

4.3. Results

4.4. Discussion

5. Conclusions

Acknowledgments

Conflicts of Interest

References

- Kumar, A., Singh, S. S., Singh, K., and Biswas, B. "Link prediction techniques, applications, and performance: A survey." Physica A: Statistical Mechanics and its Applications 553 (2020): 124289.

- Chen, J., and Ying, R. "Tempme: Towards the explainability of temporal graph neural networks via motif discovery." Advances in Neural Information Processing Systems 36 (2024).

- You, Y., Lai, X., Pan, Y., Zheng, H., Vera, J., Liu, S., Deng, S., and Zhang, L. "Artificial intelligence in cancer target identification and drug discovery." Signal Transduction and Targeted Therapy 7, no. 1 (2022): 156.

- Li, J., Shomer, H., Mao, H., Zeng, S., Ma, Y., Shah, N., Tang, J., and Yin, D. "Evaluating graph neural networks for link prediction: Current pitfalls and new benchmarking." Advances in Neural Information Processing Systems 36 (2024).

- Tanantong, T., Sanglerdsinlapachai, N., and Donkhampai, U. "Sentiment classification on Thai social media using a domain-specific trained lexicon." In 2020 17th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology (ECTI-CON), IEEE, 2020.

- Long, J., et al. "A recommendation model based on multi-emotion similarity in the social networks." Information 10, no. 1 (2019): 18.

- Yazdavar, A. H., et al. "Mental health analysis via social media data." In 2018 IEEE International Conference on Healthcare Informatics (ICHI), IEEE, 2018.

- Ouyang, G., Dey, D. K., and Zhang, P. "Clique-based method for social network clustering." Journal of Classification 37, no. 1 (2020): 254-274.

- Xu, G., Dong, C., and Meng, L. "Research on the collaborative innovation relationship of artificial intelligence technology in Yangtze River delta of China: A complex network perspective." Sustainability 14, no. 21 (2022): 14002.

- Ye, Z., Wu, Y., Chen, H., Pan, Y., and Jiang, Q. "A stacking ensemble deep learning model for bitcoin price prediction using Twitter comments on bitcoin." Mathematics 10, no. 8 (2022): 1307.

- Li, C., Yang, Q., Pang, B., Chen, T., Cheng, Q., and Liu, J. "A mixed strategy of higher-order structure for link prediction problem on bipartite graphs." Mathematics 9, no. 24 (2021): 3195.

- Kovács, I. A., Luck, K., Spirohn, K., Wang, Y., Pollis, C., Schlabach, S., Bian, W., et al. "Network-based prediction of protein interactions." Nature Communications 10, no. 1 (2019): 1240.

- Yilmaz, E. A., Balcisoy, S., and Bozkaya, B. "A link prediction-based recommendation system using transactional data." Scientific Reports 13, no. 1 (2023): 6905.

- Bukhori, H. A., and Munir, R. "Inductive link prediction banking fraud detection system using homogeneous graph-based machine learning model." In 2023 IEEE 13th Annual Computing and Communication Workshop and Conference (CCWC), IEEE, 2023.

- Tuninetti, M., et al. "Prediction of new scientific collaborations through multiplex networks." EPJ Data Science 10, no. 1 (2021): 25.

- Lim, M., et al. "Hidden link prediction in criminal networks using the deep reinforcement learning technique." Computers 8, no. 1 (2019): 8.

- Yuliansyah, H., Othman, Z. A., and Bakar, A. A. "Taxonomy of link prediction for social network analysis: a review." IEEE Access 8 (2020): 183470-183487.

- Rai, A. K., Tripathi, S. P., and Yadav, R. K. "A novel similarity-based parameterized method for link prediction." Chaos, Solitons & Fractals 175 (2023): 114046.

- Zareie, A., and Sakellariou, R. "Similarity-based link prediction in social networks using latent relationships between the users." Scientific Reports 10, no. 1 (2020): 20137.

- Fang, Y., Yu, J., Ding, Y., and Lin, X. "Inferring complementary and substitutable products based on knowledge graph reasoning." Mathematics 11, no. 22 (2023): 4709.

- Mutlu, E. C., et al. "Review on learning and extracting graph features for link prediction." Machine Learning and Knowledge Extraction 2, no. 4 (2020): 672-704.

- Turki, T., and Wei, Z. "A link prediction approach to cancer drug sensitivity prediction." BMC Systems Biology 11 (2017): 1-14.

- Wang, W., Wu, L., Huang, Y., Wang, H., and Zhu, R. "Link prediction based on deep convolutional neural network." Information 10, no. 5 (2019): 172.

- Cao, Z., Zhang, Y., Guan, J., and Zhou, S. "Link prediction based on quantum-inspired ant colony optimization." Scientific Reports 8, no. 1 (2018): 13389.

- Ahn, M. W., and Jung, W. S. "Accuracy test for link prediction in terms of similarity index: the case of WS and BA models." Physica A: Statistical Mechanics and its Applications 429 (2015): 177-183.

- Li, X., Li, Q., Wei, W., and Zheng, Z. "Convolution-based graph representation learning from the perspective of high order node similarities." Mathematics 10, no. 23 (2022): 4586.

- Hoffman, M., Steinley, D., and Brusco, M. J. "A note on using the adjusted Rand index for link prediction in networks." Social Networks 42 (2015): 72-79.

- Samad, A., Qadir, M., Nawaz, I., Islam, M. A., and Aleem, M. "A comprehensive survey of link prediction techniques for social network." EAI Endorsed Transactions on Industrial Networks and Intelligent Systems 7, no. 23 (2020): e3-e3.

- Divakaran, A., and Mohan, A. "Temporal link prediction: A survey." New Generation Computing 38, no. 1 (2020): 213-258.

- Cai, L., et al. "Line graph neural networks for link prediction." IEEE Transactions on Pattern Analysis and Machine Intelligence 44, no. 9 (2021): 5103-5113.

- Chen, J., Wang, X., and Xu, X. "GC-LSTM: Graph convolution embedded LSTM for dynamic network link prediction." Applied Intelligence (2022): 1-16.

- Balvir, U., Raghuwanshi, M. M., and Shobhane, P. D. "Improving social network link prediction with an ensemble of machine learning techniques." International Journal of Computing and Digital Systems 16, no. 1 (2024): 1-11.

- Badiy, M., and Amounas, F. "Embedding-based method for the supervised link prediction in social networks." International Journal on Recent and Innovation Trends in Computing and Communication 11, no. 3 (2023): 105-116.

- Wang, T., Jiao, M., and Wang, X. "Link prediction in complex networks using recursive feature elimination and stacking ensemble learning." Entropy 24, no. 8 (2022): 1124.

- Ayoub, J., Lotfi, D., and Hammouch, A. "Mean received resources meet machine learning algorithms to improve link prediction methods." Information 13, no. 1 (2022): 35.

- Malhotra, D., and Goyal, R. "Supervised-learning link prediction in single layer and multiplex networks." Machine Learning with Applications 6 (2021): 100086.

- Aziz, F., Gul, H., Uddin, I., and Gkoutos, G. V. "Path-based extensions of local link prediction methods for complex networks." Scientific Reports 10, no. 1 (2020): 19848.

- Kumari, A., Behera, R. K., Sahoo, K. S., et al. "Supervised link prediction using structured-based feature extraction in social network." Concurrency and Computation: Practice and Experience 34 (2020): e5839.

- Ran, Y., Xu, X. K., and Jia, T. "The maximum capability of a topological feature in link prediction." PNAS Nexus 3, no. 3 (2024): pgae113.

- Yang, R., Chen, J., Wang, H., Wang, M., Cui, Z., Leung, V. C. M., and Wang, D. "Adversarial enhanced representation for link prediction in multi-layer networks.

- Gadár, L., and Abonyi, J. "Explainable prediction of node labels in multilayer networks: a case study of turnover prediction in organizations." Scientific Reports 14, no. 1 (2024): 9036.

- Mamat, N., Othman, M. F., Abdulghafor, R., Alwan, A. A., and Gulzar, Y. "Enhancing image annotation technique of fruit classification using a deep learning approach." Sustainability 15, no. 2 (2023): 901.

- Mienye, I. D., and Sun, Y. "A survey of ensemble learning: Concepts, algorithms, applications, and prospects." IEEE Access 10 (2022): 99129-99149.

- Boutahir, M. K., et al. "Effect of feature selection on the prediction of direct normal irradiance." Big Data Mining and Analytics 5, no. 4 (2022): 309-317.

| Reference | Classifier | Metric | Value | Dataset |
|---|---|---|---|---|
| [27] | Ensemble Method | Accuracy | 94.23% | |
| [28] | SVM | AUC | 98.5% | Twitch EN |
| [29] | RF-RFE-SELLP | AUC | 99.49% | NetScience |
| [30] | Improved RA | AUC | 95.5% | USAir |
| [31] | RF | Precision | 95.72% | Caida |
| [32] | SCN-HA | AUC | 90% | Wiki-Vote |
| [33] | SVM | AUC | 93.6% | Synthetic network 3 |
| [34] | AdaBoost | Recall | 86.98% | Vicker |

| Dataset | Number of nodes | Number of edges | Source |
|---|---|---|---|
| Twitch | 7126 | 35324 | [35] |
| Facebook | 4039 | 88234 | [36] |

| Dataset | Algorithm | Best hyperparameter values |
|---|---|---|
| Twitch | RF | 'max_depth' = 10, 'min_samples_split' = 10, 'n_estimators' = 300 |
| Twitch | XGBoost | 'colsample_bytree' = 0.8, 'learning_rate' = 0.01, 'max_depth' = 6, 'n_estimators' = 200, 'subsample' = 1.0 |
| Twitch | LightGBM | 'learning_rate' = 0.01, 'max_depth' = 10, 'n_estimators' = 100, 'num_leaves' = 31 |
| Twitch | CatBoost | 'depth' = 6, 'iterations' = 100, 'l2_leaf_reg' = 7, 'learning_rate' = 0.1 |
| Twitch | ExtraTrees | 'max_depth' = 30, 'min_samples_leaf' = 1, 'min_samples_split' = 10, 'n_estimators' = 300 |
| Twitch | AdaBoost | 'base_estimator__max_depth' = 4, 'learning_rate' = 0.1, 'n_estimators' = 50 |
| Facebook | RF | 'max_depth' = None, 'min_samples_split' = 2, 'n_estimators' = 300 |
| Facebook | XGBoost | 'colsample_bytree' = 0.8, 'learning_rate' = 0.2, 'max_depth' = 9, 'n_estimators' = 300, 'subsample' = 0.8 |
| Facebook | LightGBM | 'learning_rate' = 0.1, 'max_depth' = 10, 'n_estimators' = 300, 'num_leaves' = 100 |
| Facebook | CatBoost | 'depth' = 8, 'iterations' = 300, 'l2_leaf_reg' = 7, 'learning_rate' = 0.2 |
| Facebook | ExtraTrees | 'max_depth' = None, 'min_samples_leaf' = 1, 'min_samples_split' = 5, 'n_estimators' = 300 |
| Facebook | AdaBoost | 'base_estimator__max_depth' = 3, 'learning_rate' = 1, 'n_estimators' = 200 |

| Dataset | Classifier | Accuracy | AUC | Recall | Precision | F1-score |
|---|---|---|---|---|---|---|
| Twitch | RF | 0.967 | 0.992 | 0.955 | 0.983 | 0.968 |
| Twitch | XGBoost | 0.968 | 0.993 | 0.952 | 0.984 | 0.968 |
| Twitch | LightGBM | 0.967 | 0.993 | 0.954 | 0.982 | 0.968 |
| Twitch | CatBoost | 0.968 | 0.993 | 0.955 | 0.981 | 0.968 |
| Twitch | ExtraTrees | 0.966 | 0.981 | 0.955 | 0.983 | 0.968 |
| Twitch | AdaBoost | 0.967 | 0.993 | 0.950 | 0.985 | 0.967 |
| Facebook | RF | 0.911 | 0.972 | 0.914 | 0.931 | 0.923 |
| Facebook | XGBoost | 0.921 | 0.976 | 0.931 | 0.932 | 0.931 |
| Facebook | LightGBM | 0.920 | 0.972 | 0.928 | 0.933 | 0.931 |
| Facebook | CatBoost | 0.919 | 0.974 | 0.926 | 0.934 | 0.930 |
| Facebook | ExtraTrees | 0.906 | 0.967 | 0.910 | 0.934 | 0.922 |
| Facebook | AdaBoost | 0.902 | 0.942 | 0.908 | 0.919 | 0.913 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).