Submitted:

30 August 2024

Posted:

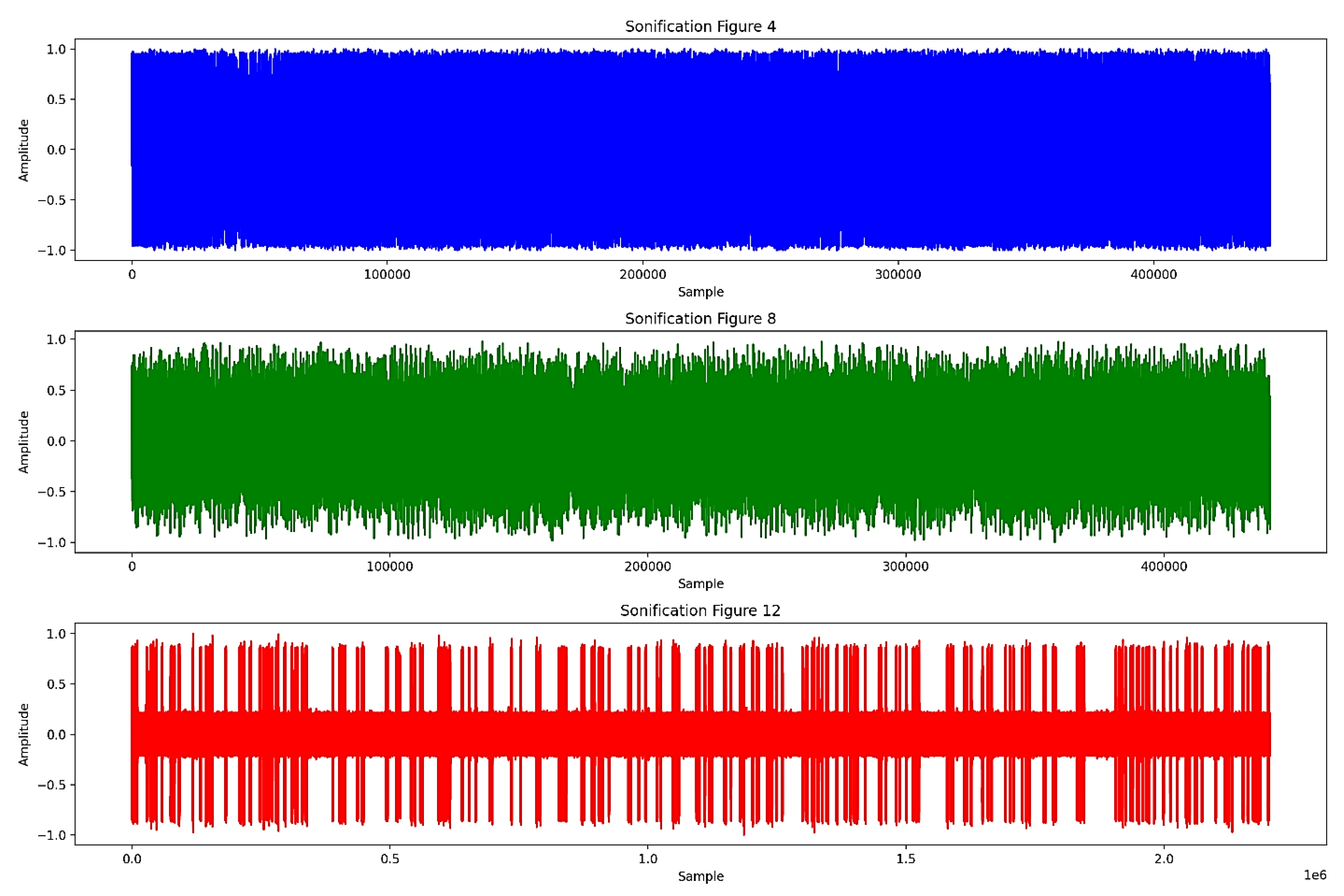

02 September 2024


Abstract

Keywords:

1. Introduction

1.1. Aims

2. Salient Discussions in Humanoid Robotics

2.1. Potential Benefits and Applications

2.2. User Acceptance and Interaction

2.3. Ethical and Policy Considerations

2.4. Challenges and Limitations

2.5. Impact on Healthcare Professionals

2.6. Future Directions

3. Methods

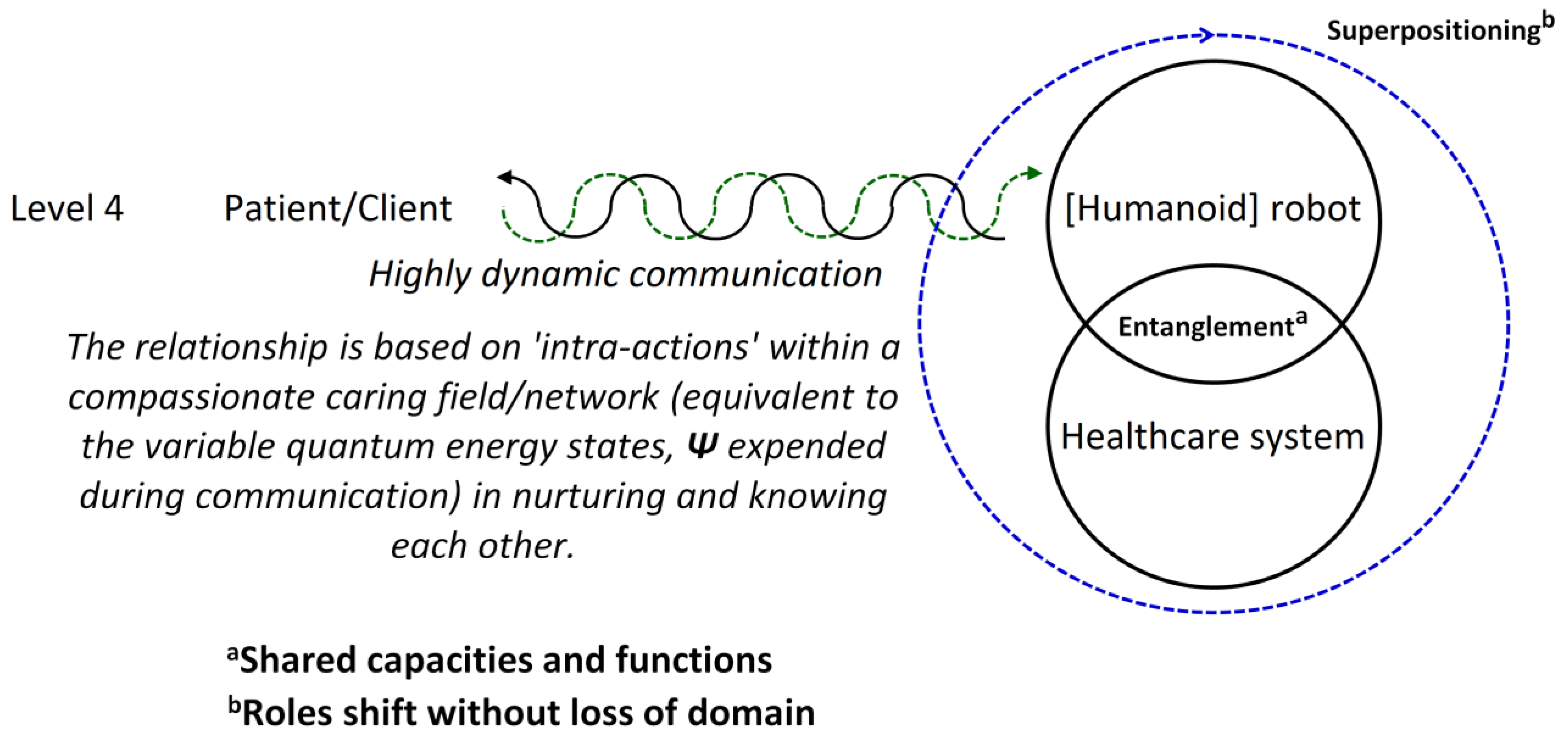

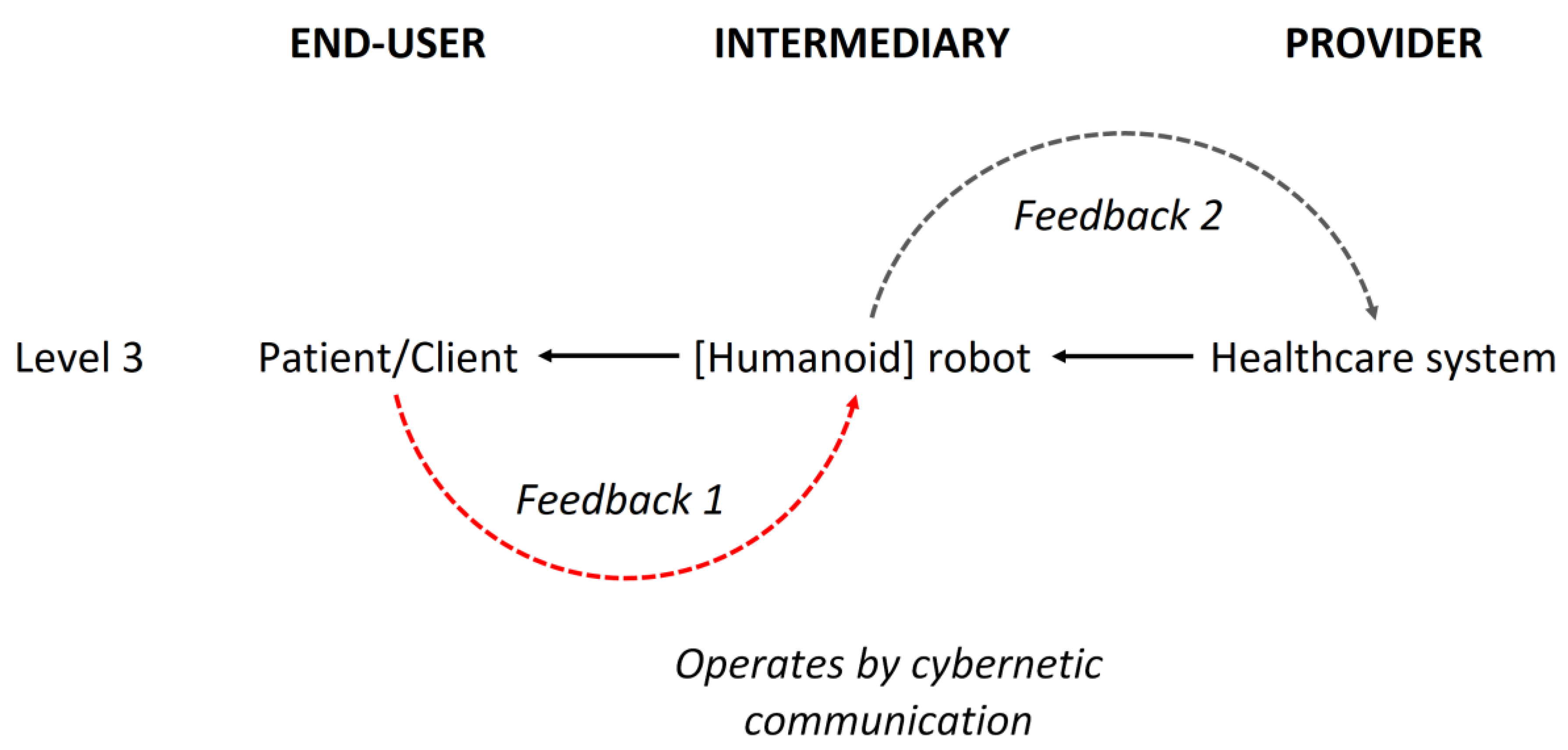

3.1. Conceptualizations of Humanoid Robots and Healthcare Systems

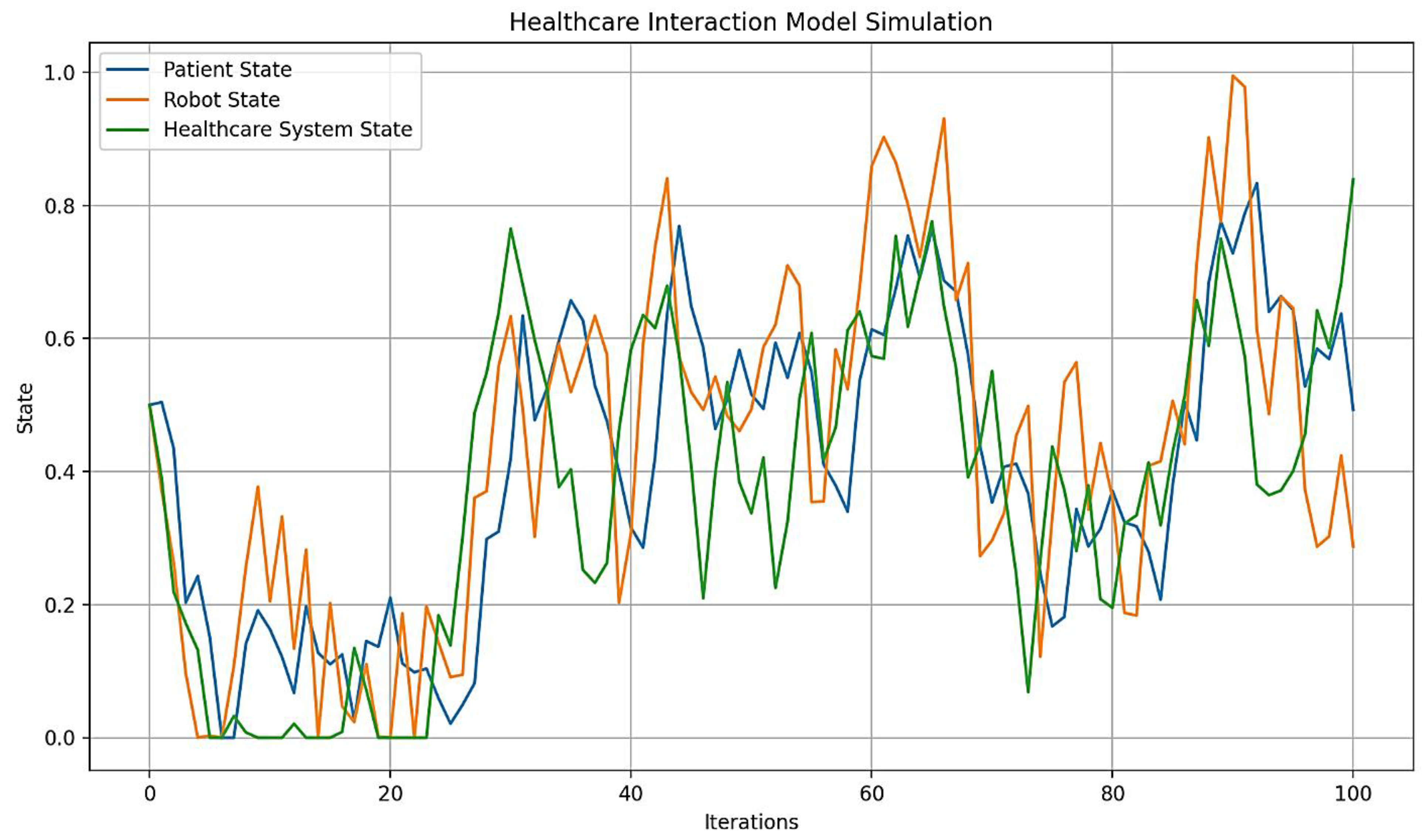

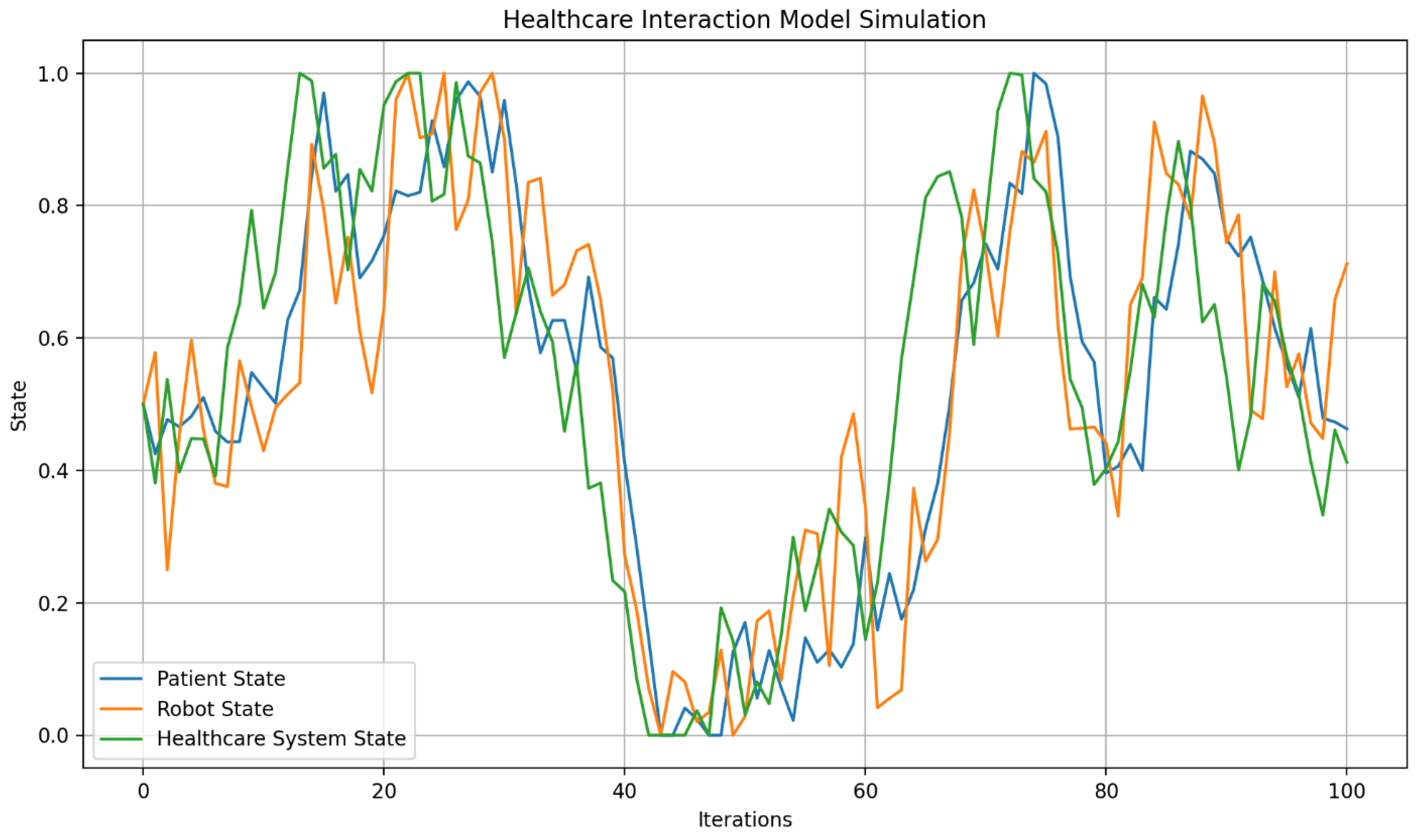

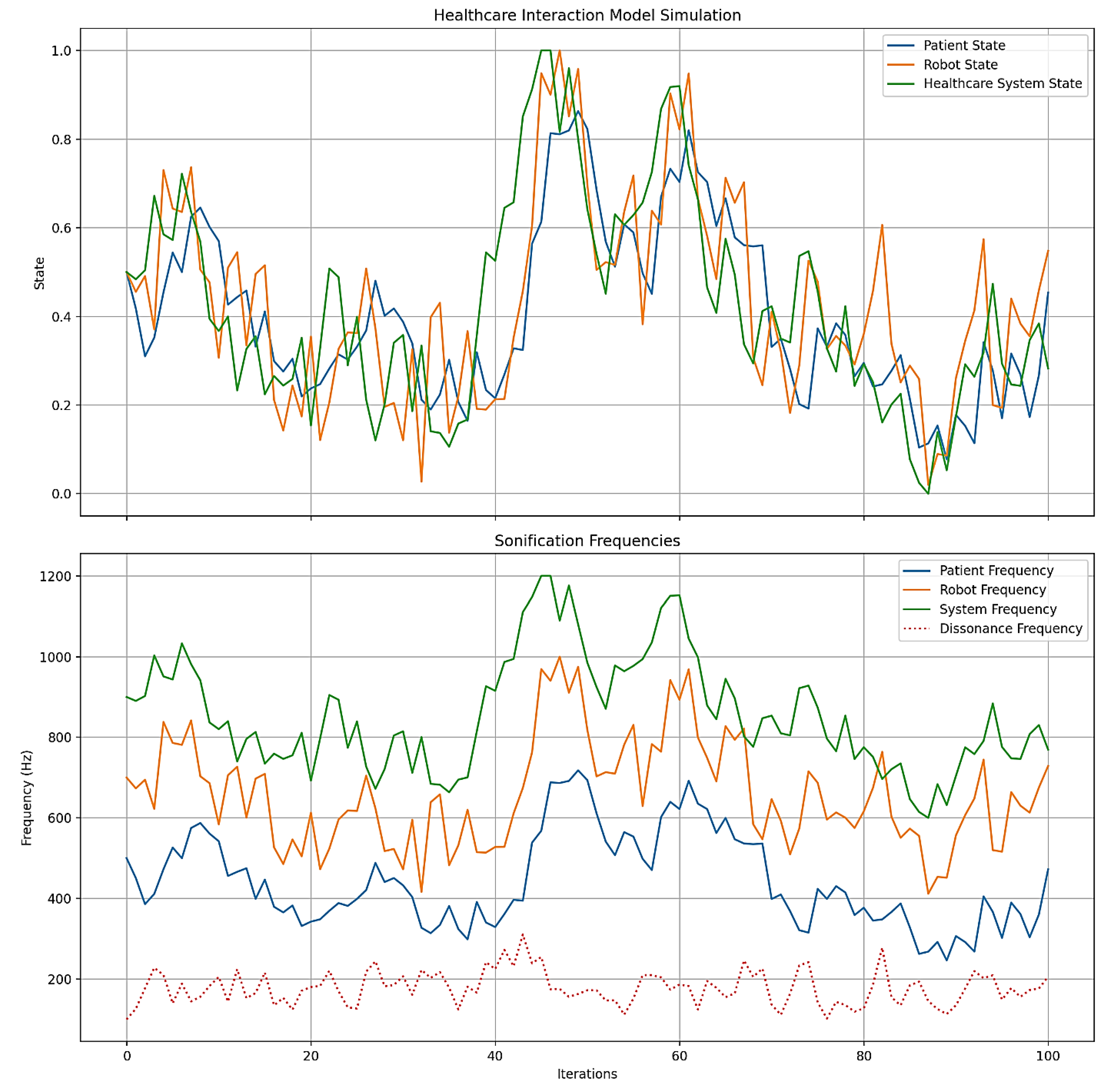

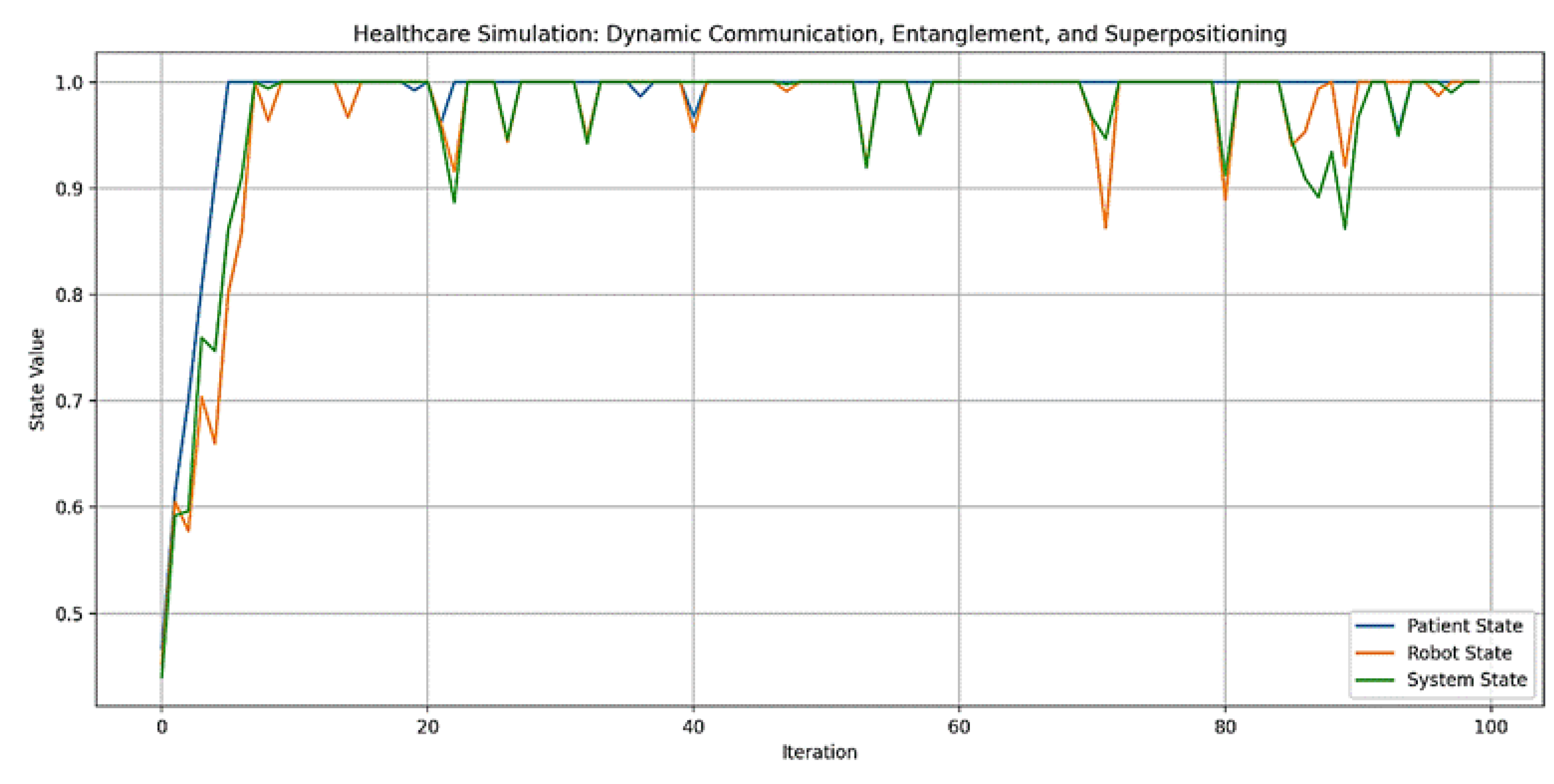

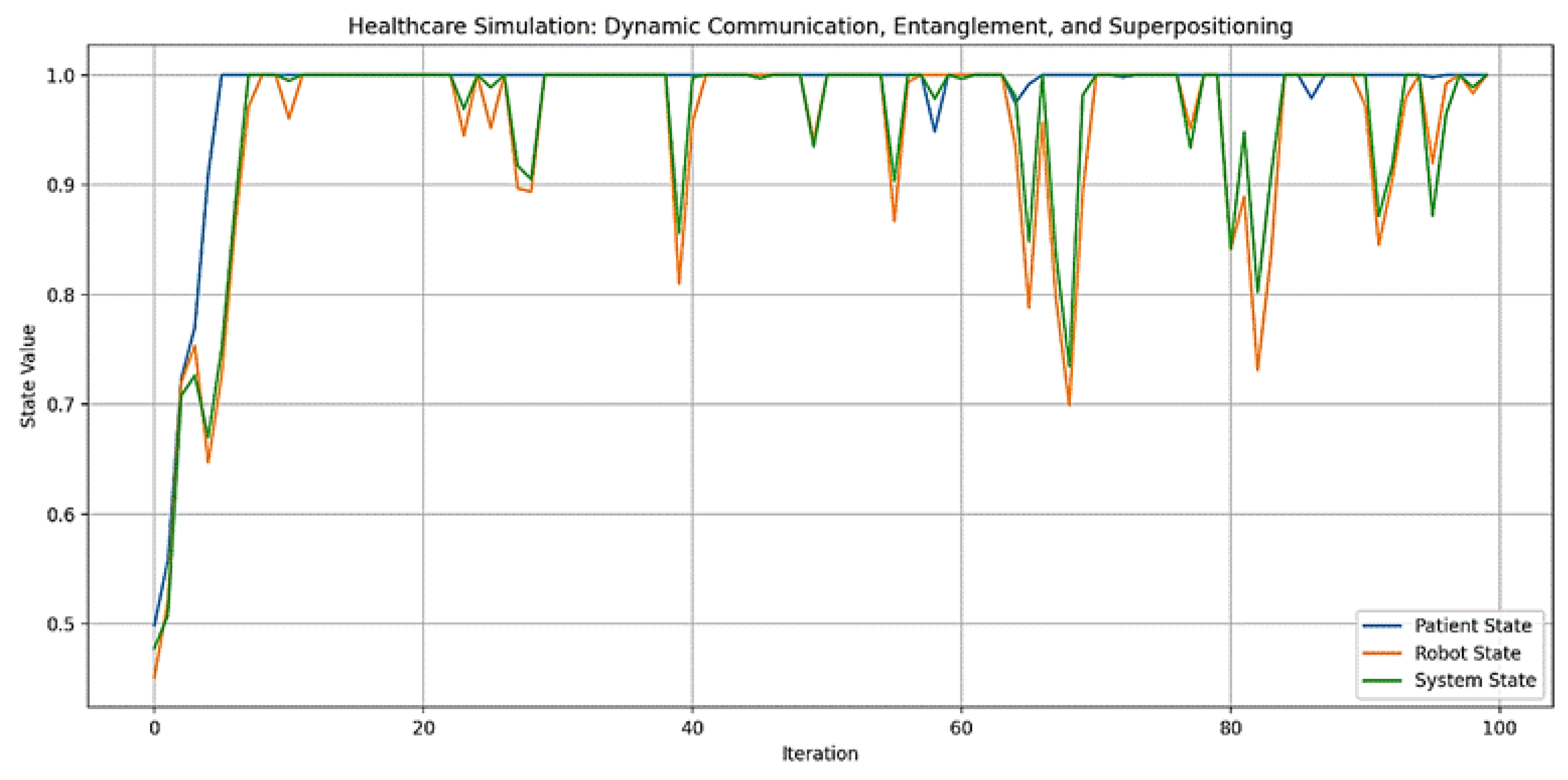

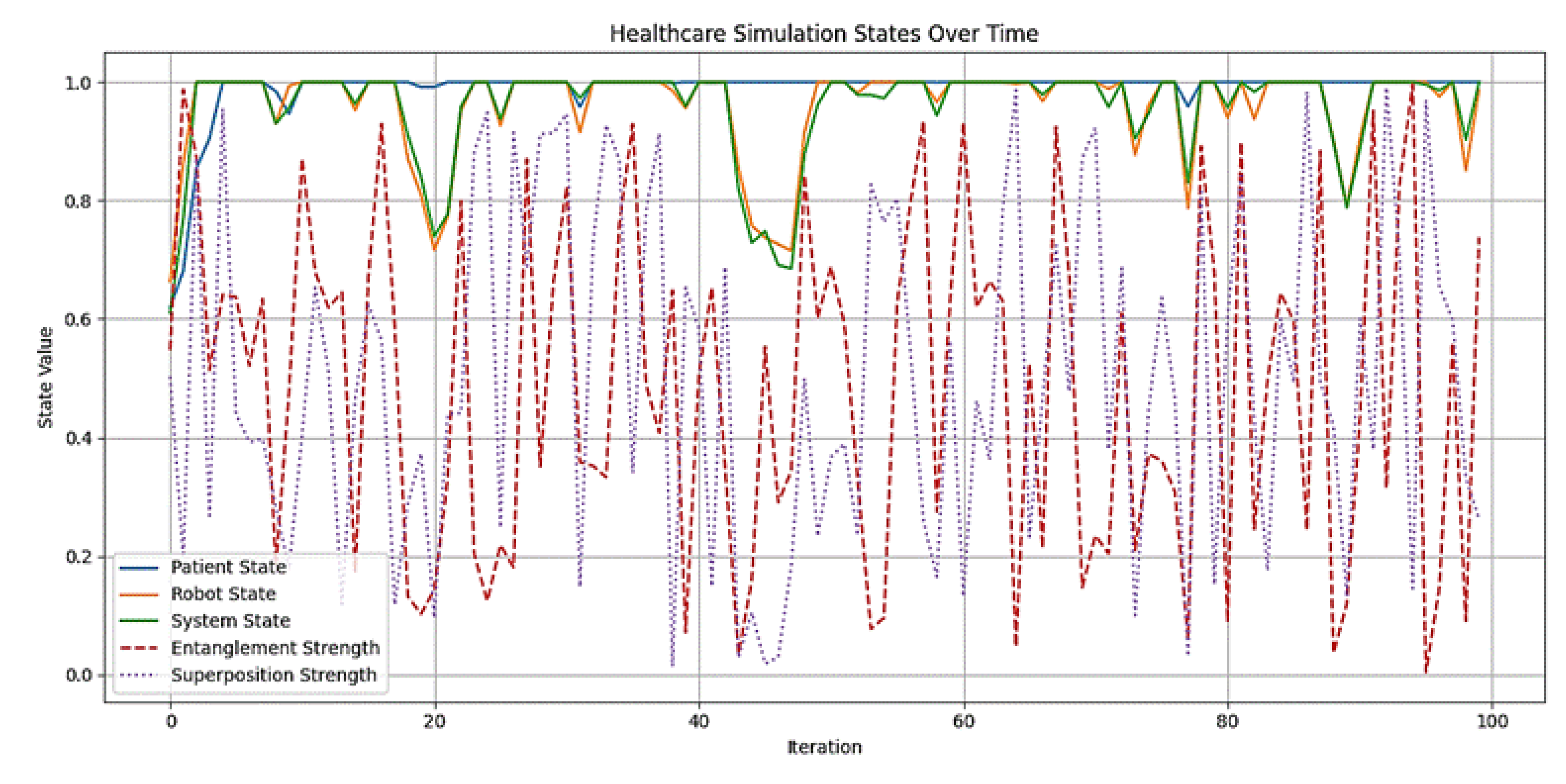

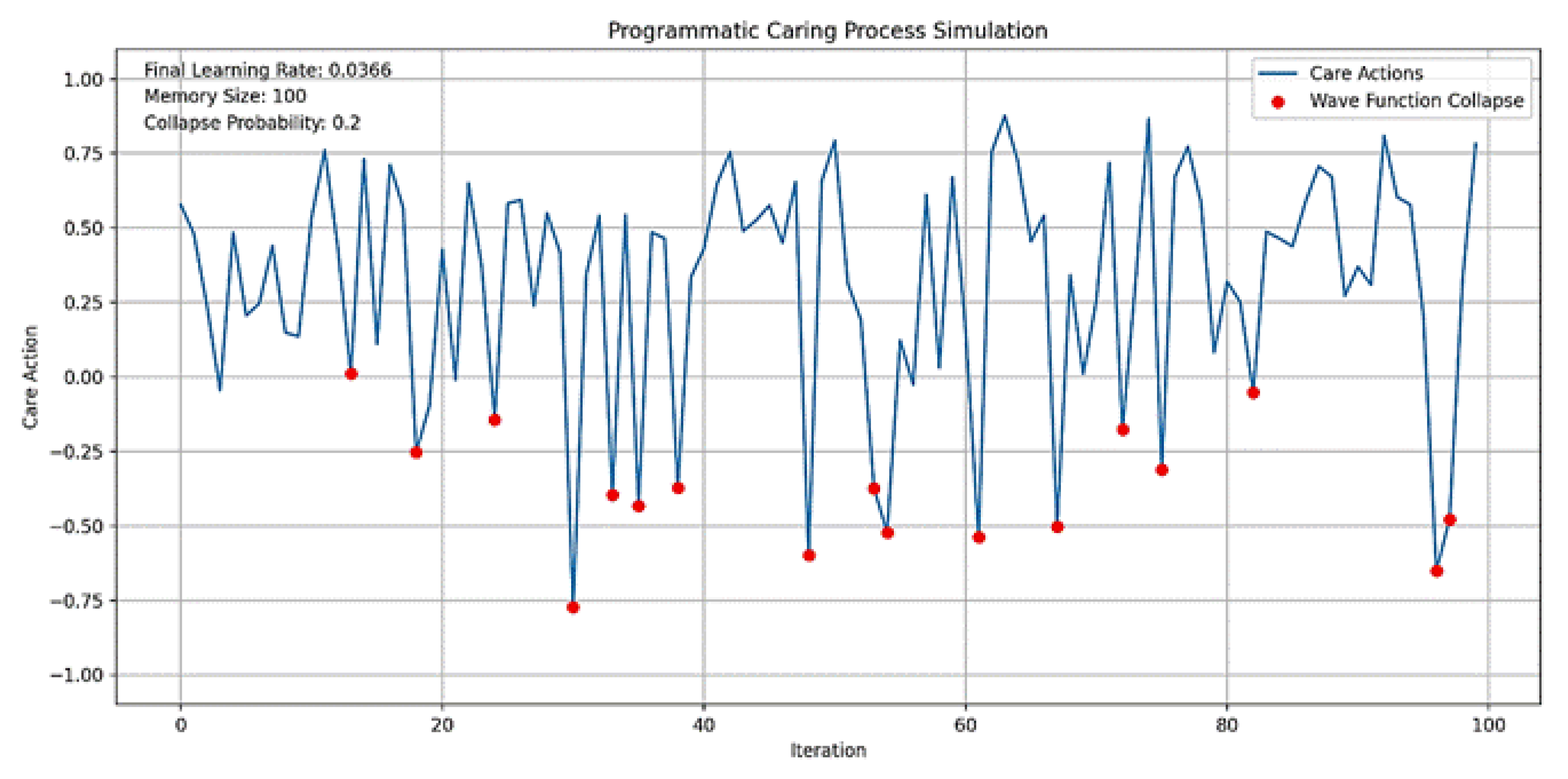

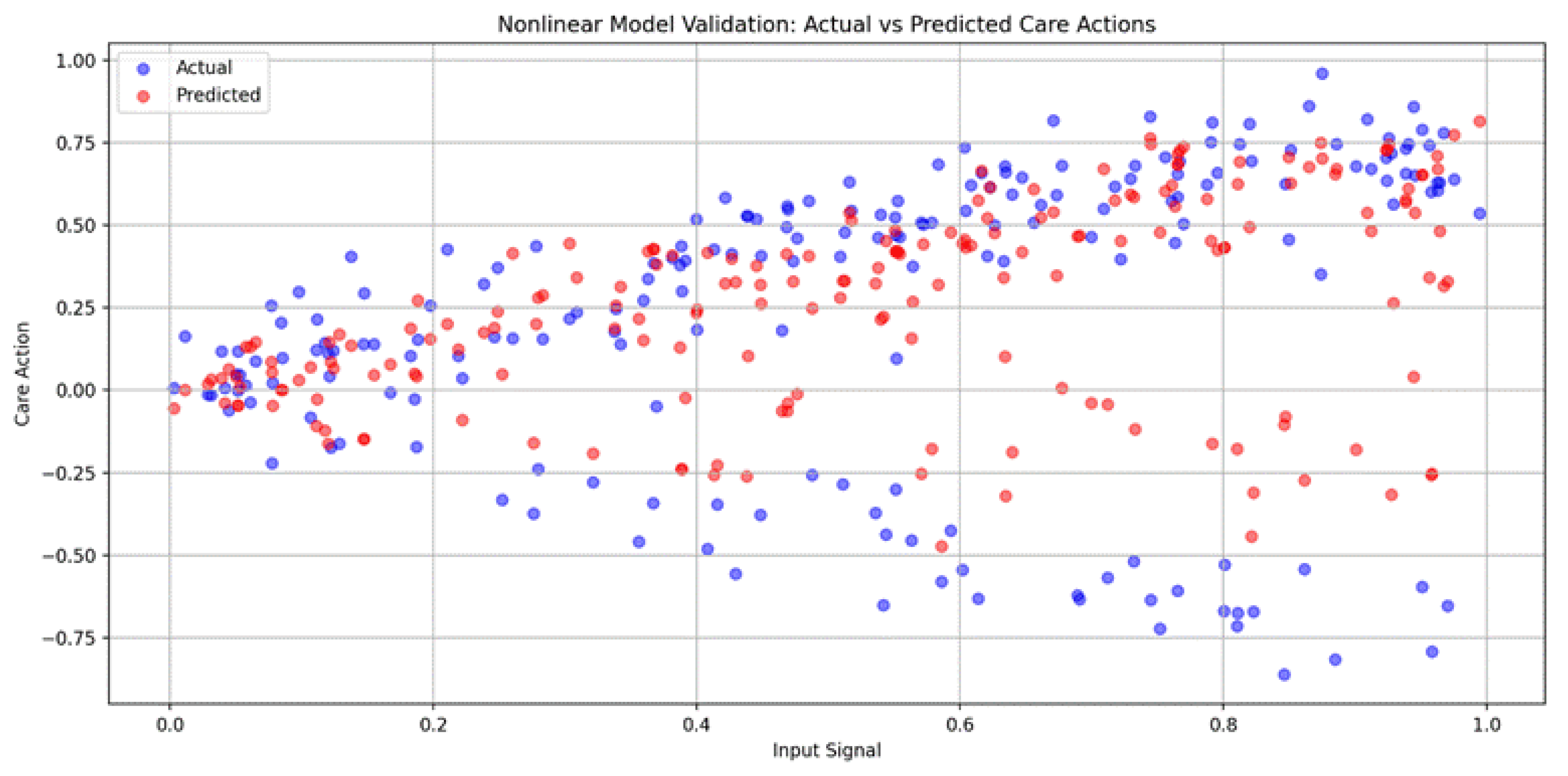

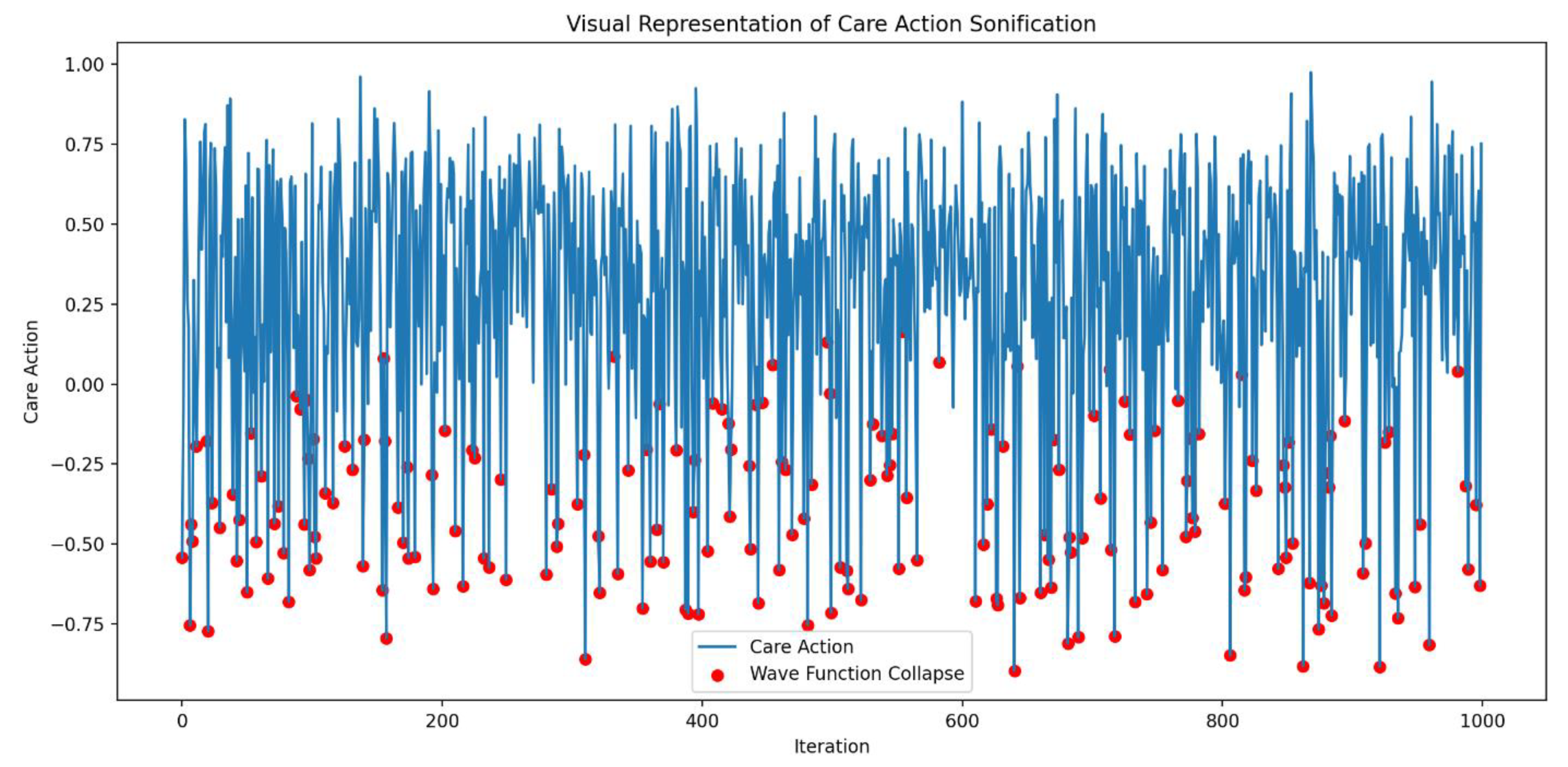

3.2. Simulations

4. Results

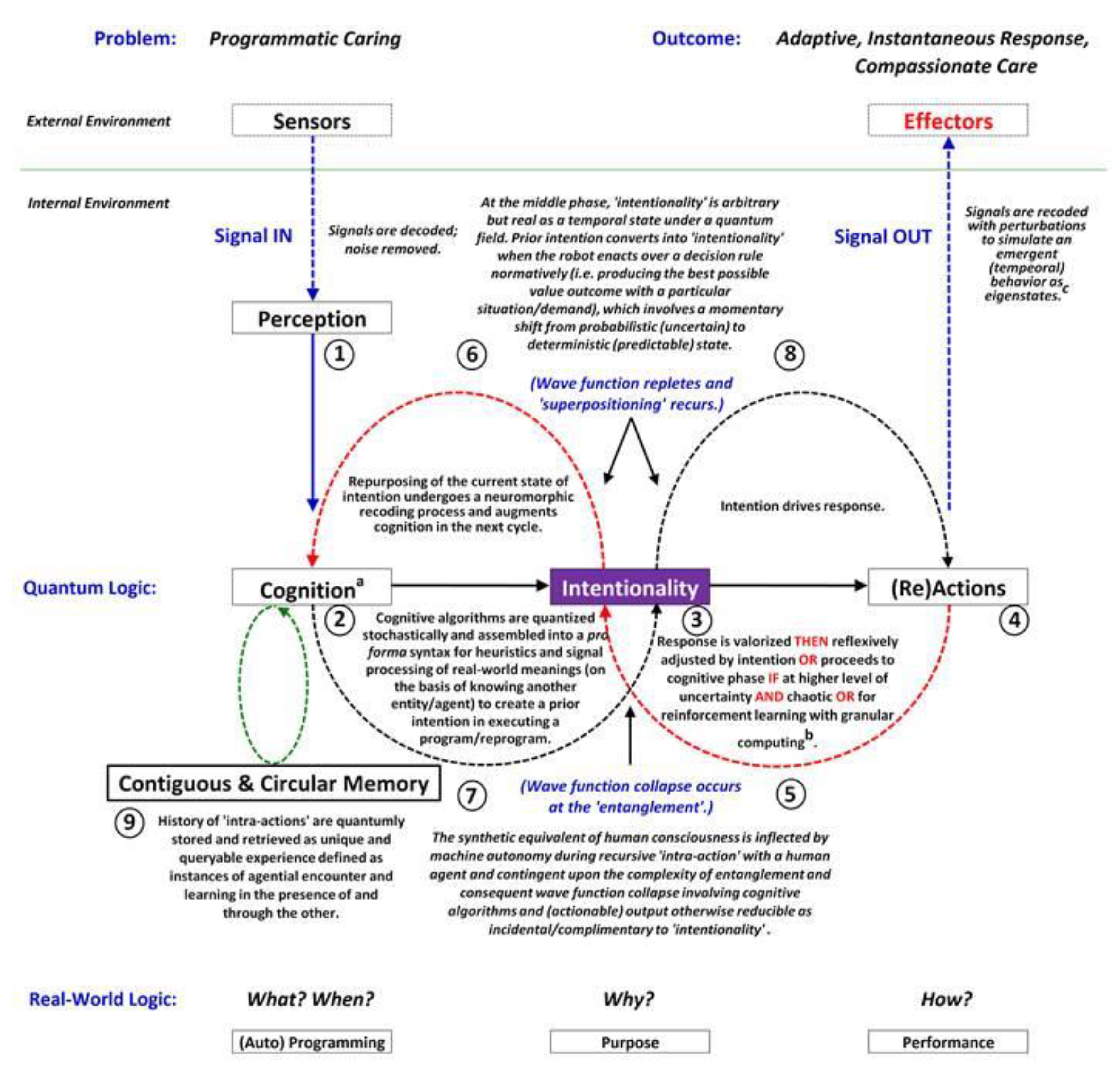

- Sensor Input and Perception: The system detects signals from both external and internal environments through sensors, filters out noise, and interprets these signals to understand the context and needs of the environment.

- Cognition and Intentionality: The system applies cognitive algorithms to process the information stochastically and assemble it into a meaningful form that reflects real-world understanding. This stage is crucial for forming a prior intention to execute a program. In the intentionality phase, the system’s response is valorized and adjusted according to that intention; if significant realization or uncertainty remains, the response instead returns to further cognitive processing.

- Memory and Learning: The system maintains a contiguous, circular memory that stores and retrieves interaction histories as unique experiences, which inform future responses. It also uses reinforcement learning with granular mapping to refine those responses.

- Wave Function Collapse and Quantum-like Behavior: A key feature of the system is a wave function collapse that occurs at the entanglement stage, where the synthetic equivalent of human consciousness interacts with human agents. This interaction is contingent upon the complexity of the entanglement and involves cognitive algorithms that may be reversible or incidental to intentionality.

- Action and Adaptation: The system’s (re)actions are driven by the intention and culminate in the execution of the response. The system also outputs signals through effectors, recoding them with perturbations to simulate emergent exigencies. The process is dynamic and iterative: feedback loops among perception, cognition, intentionality, and action allow the system to learn and adapt continuously in order to provide compassionate care.
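The stages listed above can be illustrated with a minimal Python sketch. It is a toy model under stated assumptions, not the authors' implementation: the class name `CareLoop`, the response set, the median noise filter, the two-level "granule" binning, the softmax weighting, and the learning rate of 0.5 are all hypothetical choices made only for illustration. Drawing a single response from a probability distribution stands in, very loosely, for the "collapse" step; effector perturbations are omitted for brevity.

```python
import math
import random
from collections import deque

# Hypothetical response set; the source does not enumerate concrete responses.
RESPONSES = ["greet", "reassure", "alert_nurse"]


class CareLoop:
    """Toy perception -> cognition -> intention -> action -> learning loop."""

    def __init__(self, memory_size=4, seed=0):
        self.rng = random.Random(seed)
        # Circular memory: once full, the oldest interaction is overwritten.
        self.memory = deque(maxlen=memory_size)
        # Learned value of each (granule, response) pair; unseen pairs count as 0.
        self.values = {}

    def perceive(self, readings):
        """Filter noise by taking the median of the raw sensor readings."""
        ordered = sorted(readings)
        return ordered[len(ordered) // 2]

    def granule(self, signal):
        """Granular mapping (assumed): bin the signal into a coarse category."""
        return "high" if signal >= 0.5 else "low"

    def cognize(self, granule):
        """Softmax over learned values gives a distribution over responses."""
        scores = [math.exp(self.values.get((granule, r), 0.0)) for r in RESPONSES]
        total = sum(scores)
        return [s / total for s in scores]

    def intend(self, probs):
        """Sample one response and report normalized entropy as uncertainty."""
        entropy = -sum(p * math.log(p) for p in probs) / math.log(len(probs))
        choice = self.rng.choices(RESPONSES, weights=probs, k=1)[0]
        return choice, entropy

    def step(self, readings, feedback):
        """Run one full cycle and learn from the reward returned by feedback."""
        g = self.granule(self.perceive(readings))
        response, uncertainty = self.intend(self.cognize(g))
        reward = feedback(g, response)
        old = self.values.get((g, response), 0.0)
        # Reinforcement-style update: move the stored value toward the reward.
        self.values[(g, response)] = old + 0.5 * (reward - old)
        self.memory.append((g, response, reward))
        return response, uncertainty


if __name__ == "__main__":
    def feedback(granule, response):
        # Hypothetical reward: escalate when need is high, greet when it is low.
        good = {("high", "alert_nurse"), ("low", "greet")}
        return 1.0 if (granule, response) in good else 0.0

    loop = CareLoop()
    for _ in range(50):
        loop.step([0.9, 0.8, 0.1], feedback)
    print(loop.cognize("high"))
    print(list(loop.memory))
```

The `deque` with `maxlen` is one simple way to obtain circular-memory behavior, and the entropy value returned by `intend` could serve as the uncertainty signal that sends a response back for further cognitive processing; the threshold for doing so is left open here because the source does not specify one.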

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Code Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).