Submitted: 01 September 2024

Posted: 03 September 2024


Abstract

Keywords:

1. Introduction

- modelling TSP executions as timed interpreted systems with real time, in which each agent (user) taking part in the protocol is modelled as a network of synchronised timed automata; this is a more realistic approach than previous ones;

- expressing the times of actions performed during the protocol's execution and the network delays (which include message generation, encryption, decryption and network transfer times); using these values, we can compute the dependencies between them and the lifetimes of the TSPs' time primitives (time tickets), which is essential when considering the possibility of an attack;

- the ability to detect, and prevent, the presence of a passive Intruder in the network in Man-in-the-Middle configurations;

- considering a strongly monotonic version of dense-time semantics, together with many different lifetimes and their values;

- a new, suitable implementation and experimental results.
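To illustrate why the relation between step times, network delays and ticket lifetimes matters, the following minimal Python sketch assumes that each protocol step takes the sum of its message generation, encryption, network transfer and decryption times, that steps run sequentially, and that a Man-in-the-Middle relay shows up only as additional steps between the creation of a ticket and its check. The names `Step`, `elapsed`, `ticket_valid` and `lifetime_window` are hypothetical and are not taken from the authors' implementation.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Step:
    """Hypothetical timing of one protocol step (all values in the same time unit)."""
    generation: float  # time to generate the message
    encryption: float  # time to encrypt it
    delay: float       # network transfer time
    decryption: float  # time for the receiver to decrypt it

    def duration(self) -> float:
        return self.generation + self.encryption + self.delay + self.decryption


def elapsed(steps: Sequence[Step]) -> float:
    """Time between creating a ticket at the start of the first step and
    checking it after the last step (assumption: steps run sequentially)."""
    return sum(s.duration() for s in steps)


def ticket_valid(lifetime: float, steps: Sequence[Step]) -> bool:
    """The ticket is accepted if it has not expired when it is checked."""
    return elapsed(steps) <= lifetime


def lifetime_window(honest: Sequence[Step],
                    relayed: Sequence[Step]) -> Optional[Tuple[float, float]]:
    """Lifetimes L with lo <= L < hi accept the honest run but reject the
    relayed one; None if no such lifetime exists."""
    lo, hi = elapsed(honest), elapsed(relayed)
    return (lo, hi) if lo < hi else None
```

With illustrative (not measured) values, two honest steps of duration 10 each and a relay that adds a third such step give the window (20, 30): any lifetime in [20, 30) lets the honest run pass and rejects the relayed one, whereas a lifetime of 30 or more would let the relayed run through.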

Related Work

Paper Organisation

2. Timed Interpreted Systems

3. Modelling of TSP Executions

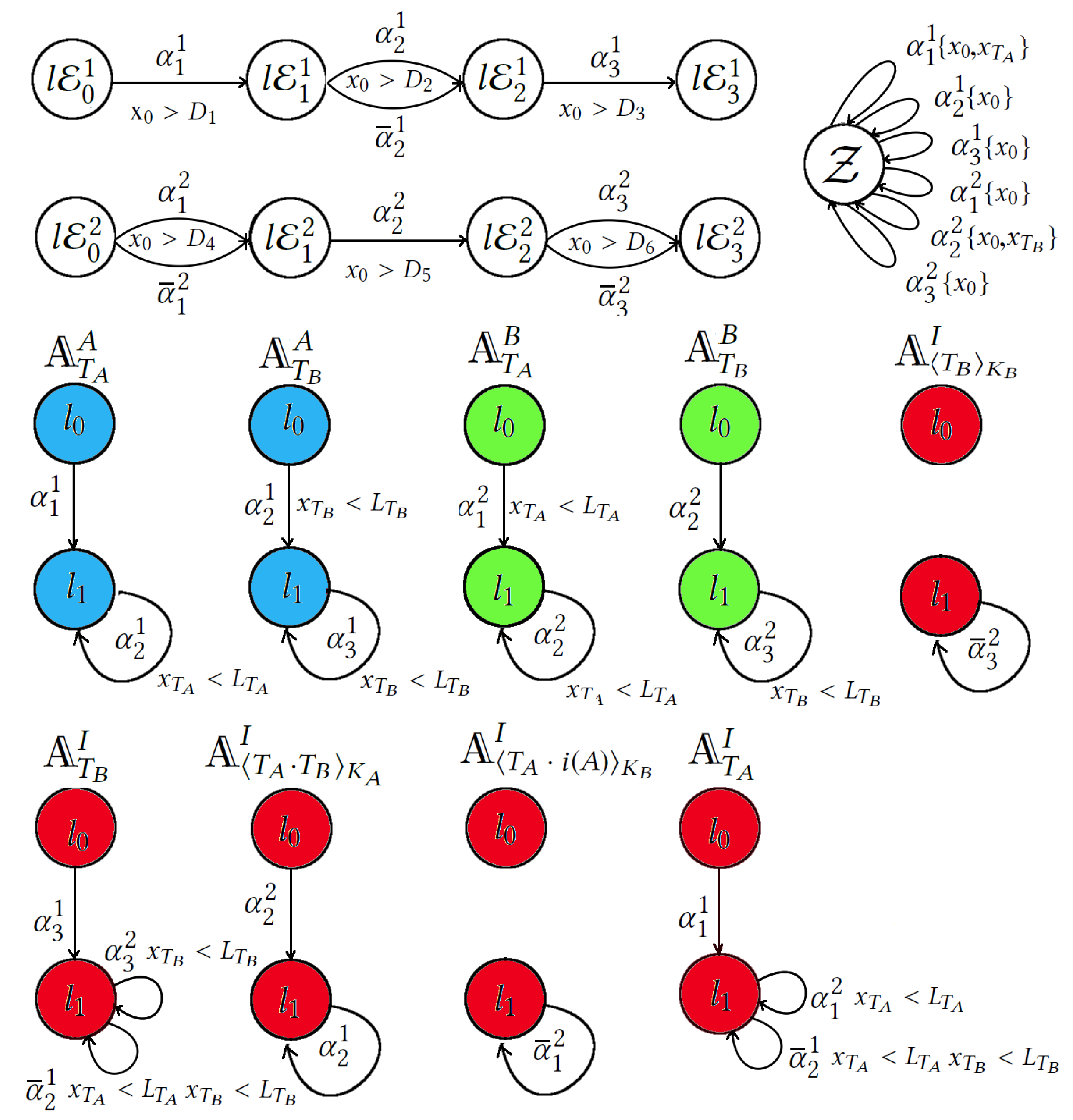

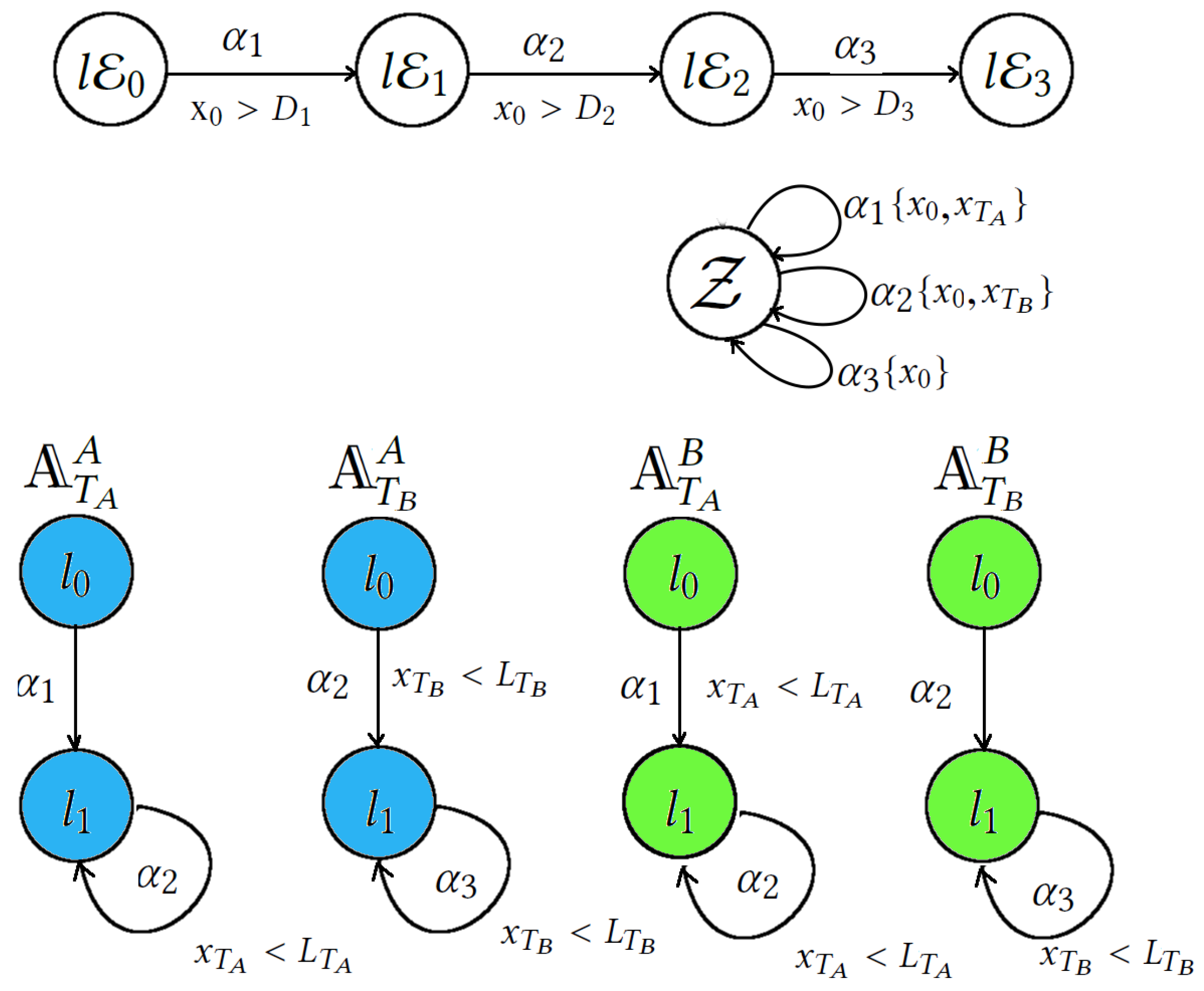

3.1. TIS for NSPKT Executions Modelling

4. Reachability Checking

4.1. Timed Model

4.2. Reachability Analysis

Algorithm 1: The standard bounded model checking algorithm for testing reachability
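As a hedged illustration of the usual bounded model checking loop for reachability, in the formulation of Biere et al. [18], the sketch below checks, for increasing bounds k, whether I(s_0) ∧ T(s_0, s_1) ∧ ... ∧ T(s_{k-1}, s_k) ∧ p(s_k) is satisfiable, assuming the property is tested at the last state of each unrolling. To keep the example self-contained, the SMT call is replaced by explicit enumeration of k-step paths over a small finite state set, so this is a toy illustration of the loop and not the authors' SMT-based implementation; the function `bmc_reachability` and its parameters are hypothetical.

```python
from itertools import product
from typing import Callable, Hashable, List, Optional, Sequence, Tuple

State = Hashable


def bmc_reachability(
    states: Sequence[State],
    init: Callable[[State], bool],
    trans: Callable[[State, State], bool],
    prop: Callable[[State], bool],
    max_bound: int,
) -> Optional[Tuple[int, List[State]]]:
    """Return (k, witness) for the smallest k <= max_bound such that a state
    satisfying `prop` is reachable in exactly k steps, or None if no witness
    exists up to max_bound (which proves nothing beyond that bound)."""
    for k in range(max_bound + 1):
        # Stand-in for one solver call: look for a model of
        #   I(s_0) & T(s_0,s_1) & ... & T(s_{k-1},s_k) & p(s_k)
        for path in product(states, repeat=k + 1):
            if (init(path[0])
                    and all(trans(path[i], path[i + 1]) for i in range(k))
                    and prop(path[k])):
                return k, list(path)  # "SAT": a witness path of length k
        # "UNSAT" at depth k: unroll the transition relation one more step
    return None
```

For example, for a modulo-4 counter starting at 0 with target state 3, `bmc_reachability(range(4), lambda s: s == 0, lambda s, t: t == (s + 1) % 4, lambda s: s == 3, 5)` returns `(3, [0, 1, 2, 3])`, while a bound of 2 returns `None`. The enumeration restarts at every depth, mirroring the non-incremental loop; SMT-based tools often reuse solver state across bounds instead.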

- refined the definitions for the encoding of the transition relation (as the definition in the cited paper lacked precision), and

- incorporated real-time aspects of multi-agent systems (MAS), whereas the previous SMT encoding was limited to discrete time.

- encodes the state s of the model ,

- encodes an action transition and ensures that every local action in is performed by each agent in which this action appears,

- encodes a time transition in .
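The formulas listed above are described only in words. As a hedged illustration of what they constrain, the Python sketch below evaluates the corresponding conditions on concrete global states instead of encoding them symbolically for an SMT solver. It assumes a much simplified model: each agent is a single automaton (rather than a network of automata), guards are single lower bounds of the form clock ≥ c, location invariants are omitted, at most one local transition matches each (source, action, target) triple, and, reflecting the strongly monotonic semantics in simplified form, a time step must have a strictly positive delay. All class and function names are hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Optional, Tuple

Locations = Dict[str, str]   # agent name -> current local location
Clocks = Dict[str, float]    # clock name -> current value


@dataclass(frozen=True)
class LocalTransition:
    source: str
    action: str
    target: str
    guard: Optional[Tuple[str, float]] = None  # (clock, bound): clock >= bound
    resets: FrozenSet[str] = frozenset()


@dataclass
class Agent:
    name: str
    actions: FrozenSet[str]
    transitions: List[LocalTransition] = field(default_factory=list)


def time_transition(locs: Locations, clocks: Clocks,
                    locs2: Locations, clocks2: Clocks, delta: float) -> bool:
    """Strongly monotonic time step: delta > 0, no agent changes location,
    and every clock advances by exactly delta (invariants omitted)."""
    return (delta > 0 and locs2 == locs and clocks2.keys() == clocks.keys()
            and all(math.isclose(clocks2[c], clocks[c] + delta) for c in clocks))


def action_transition(agents: List[Agent], locs: Locations, clocks: Clocks,
                      locs2: Locations, clocks2: Clocks, action: str) -> bool:
    """Joint action step: every agent whose action set contains `action`
    takes an enabled matching local transition; all others stay put."""
    if not any(action in ag.actions for ag in agents):
        return False
    reset: set = set()
    for ag in agents:
        if action not in ag.actions:
            if locs2[ag.name] != locs[ag.name]:
                return False
            continue
        enabled = [t for t in ag.transitions
                   if t.source == locs[ag.name] and t.action == action
                   and t.target == locs2[ag.name]
                   and (t.guard is None or clocks[t.guard[0]] >= t.guard[1])]
        if not enabled:
            return False  # one participant cannot move, so the action is blocked
        reset |= enabled[0].resets  # assumes at most one matching transition
    # Reset clocks go to 0; all other clocks keep their values.
    return clocks2.keys() == clocks.keys() and all(
        clocks2[c] == (0.0 if c in reset else clocks[c]) for c in clocks)
```

For instance, if agents A and B both have the action `send`, a `send` step is accepted only when both agents move and A's guard on clock `x` holds; a step in which only A moves is rejected, which mirrors the requirement that every local action is performed by each agent in which it appears.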

5. Experimental Results

5.1. Implementation

5.2. SMT-Solvers

5.3. Performance Evaluation

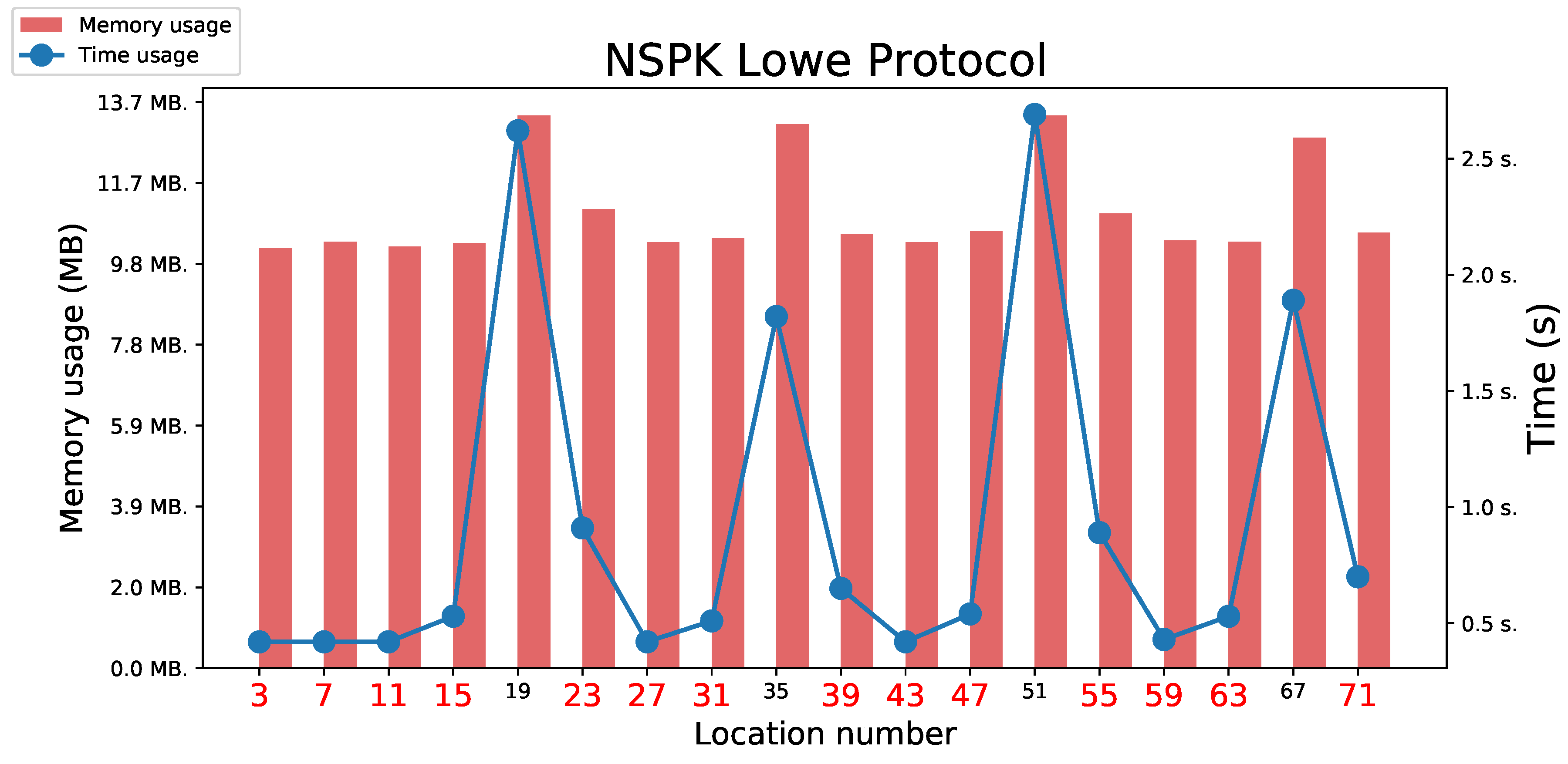

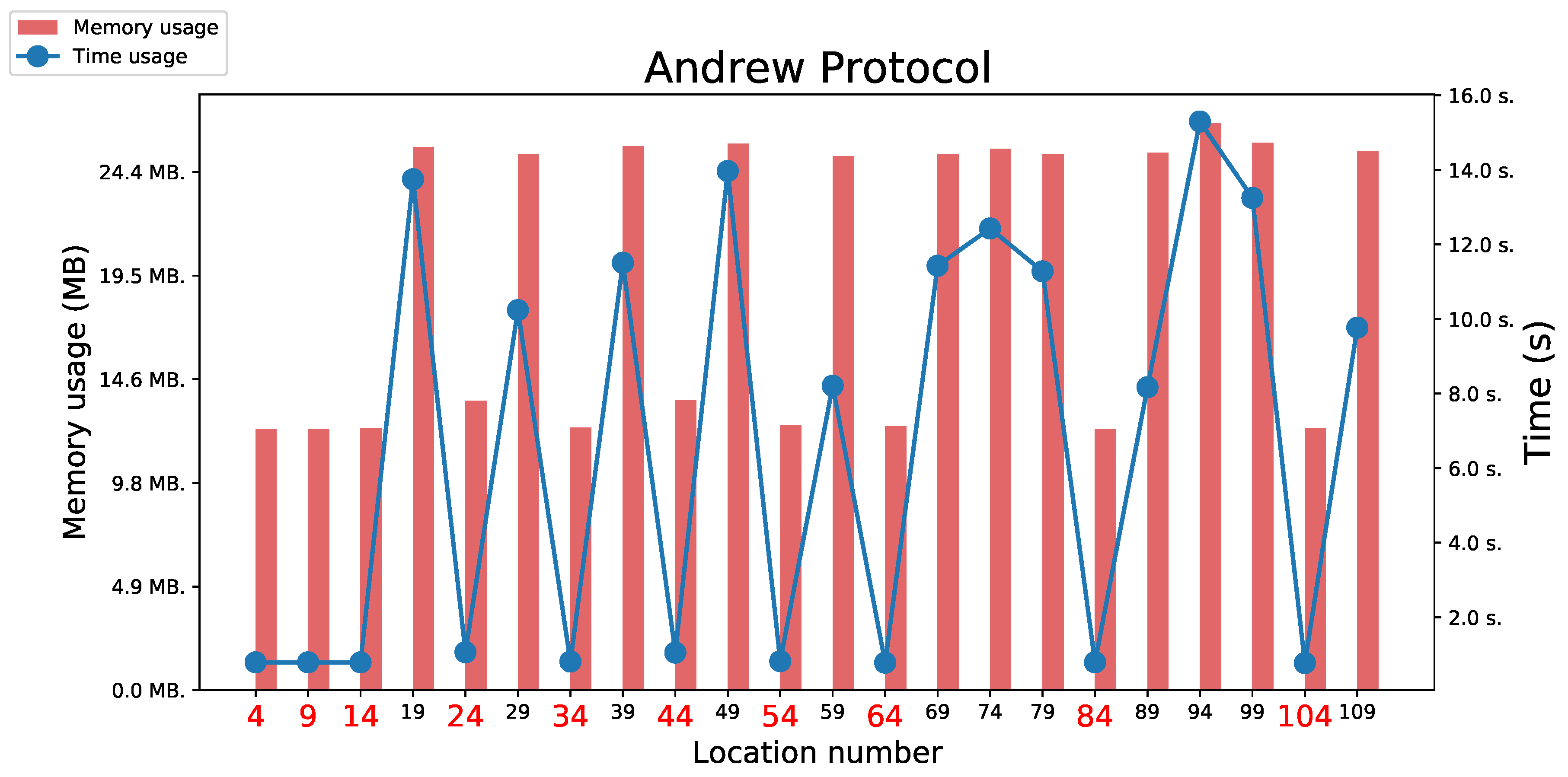

5.3.1. Andrew protocol

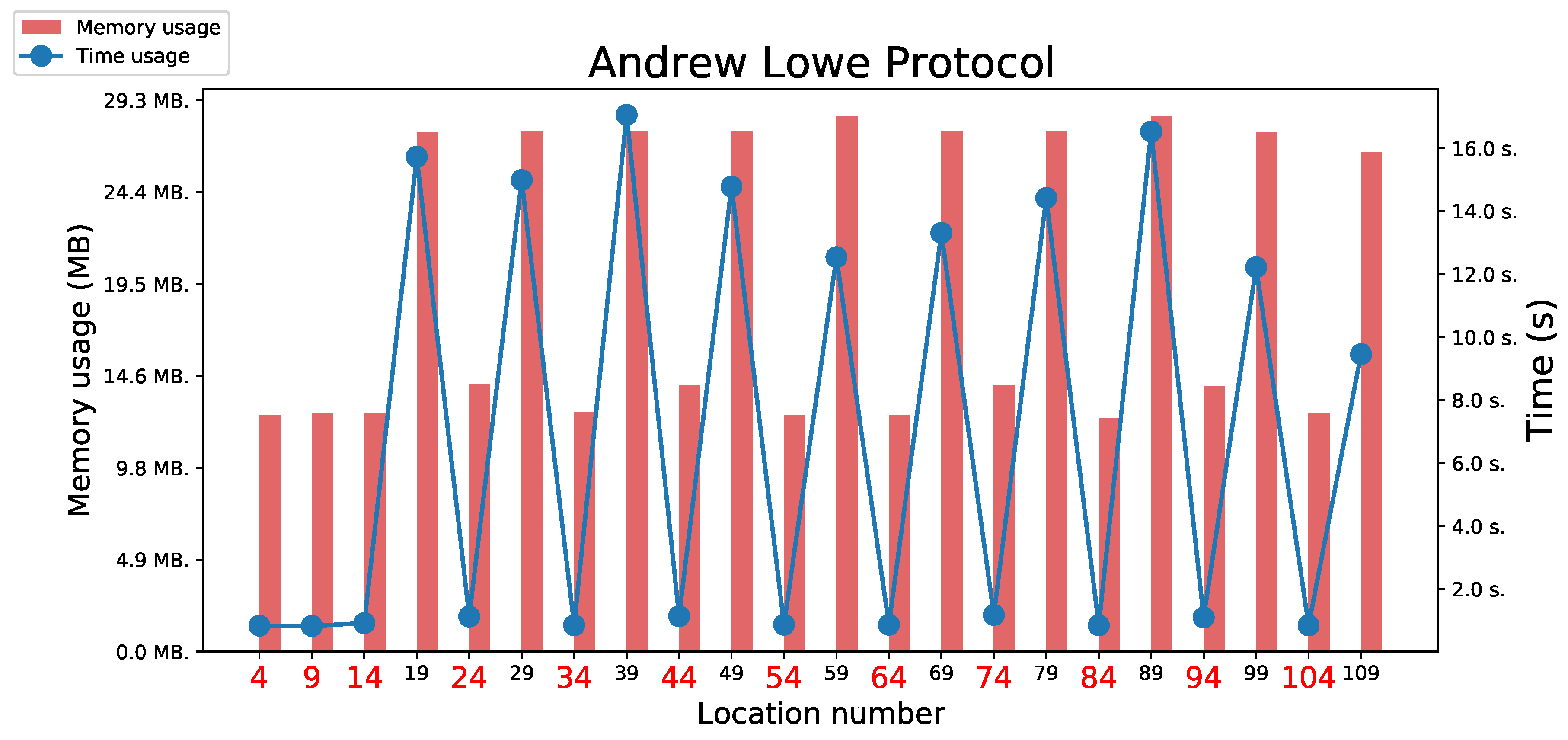

5.3.2. Lowe’s Modification of Andrew Protocol

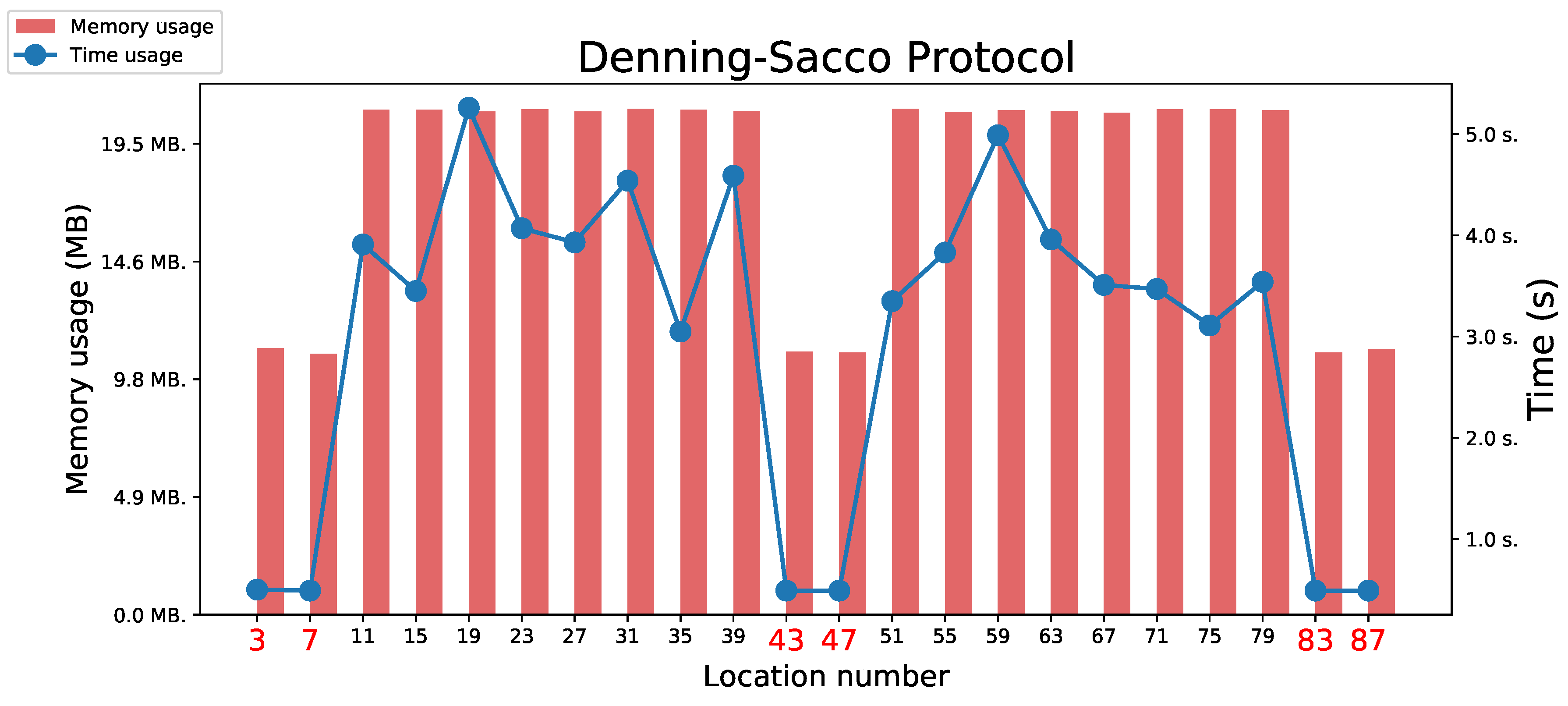

5.3.3. Denning–Sacco Protocol

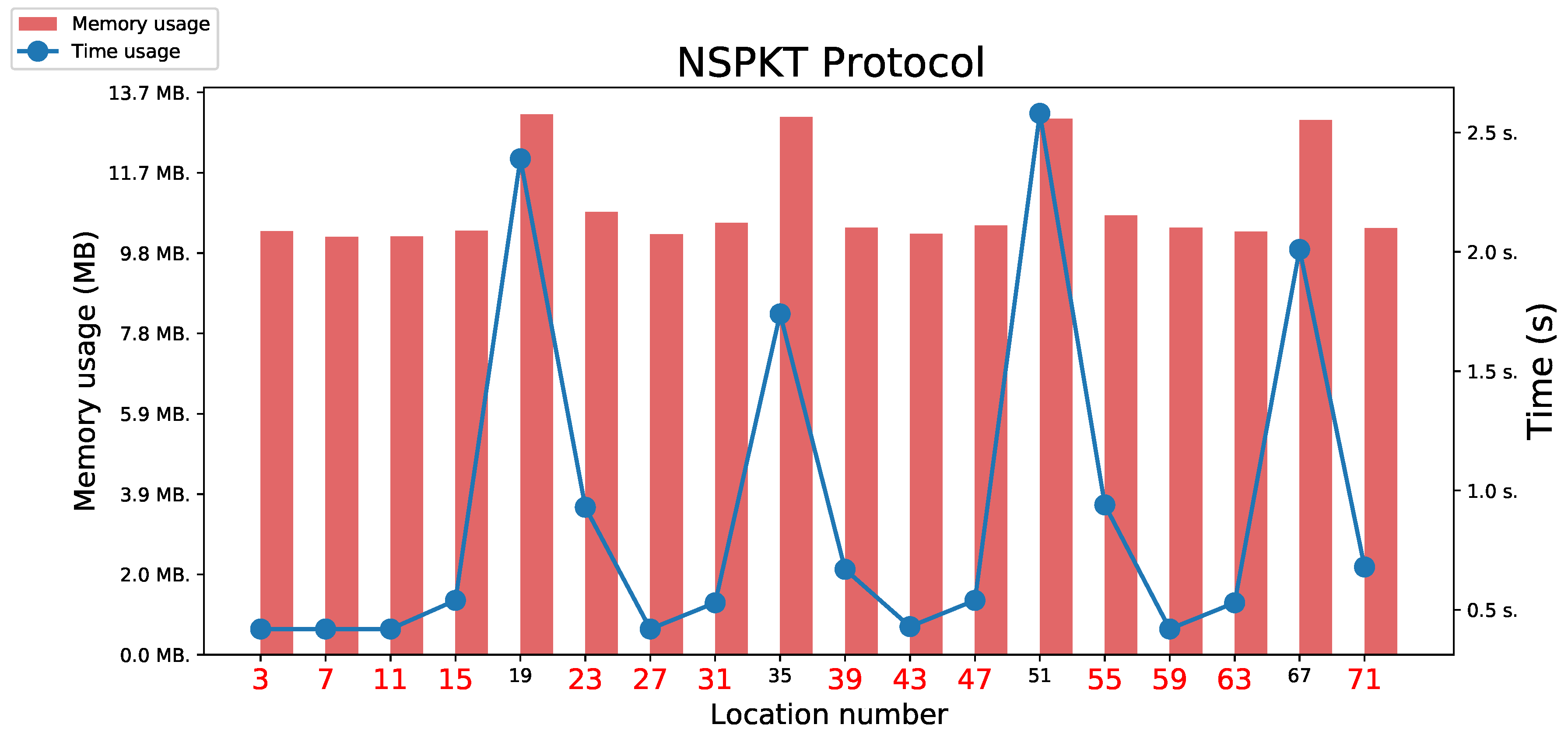

5.3.4. Needham–Schroeder Public Key Protocol

5.3.5. Lowe’s Modification of Needham–Schroeder Public Key Protocol

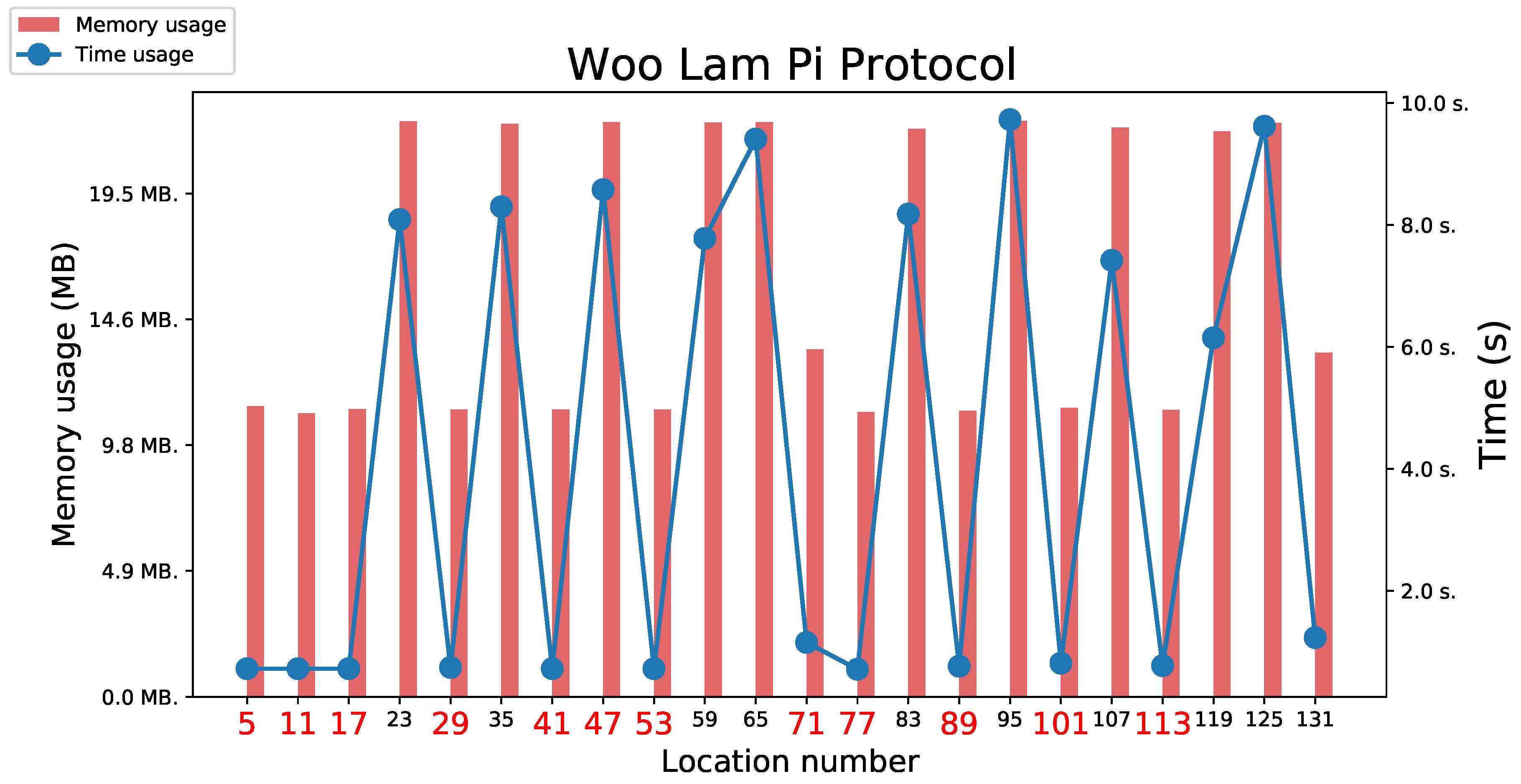

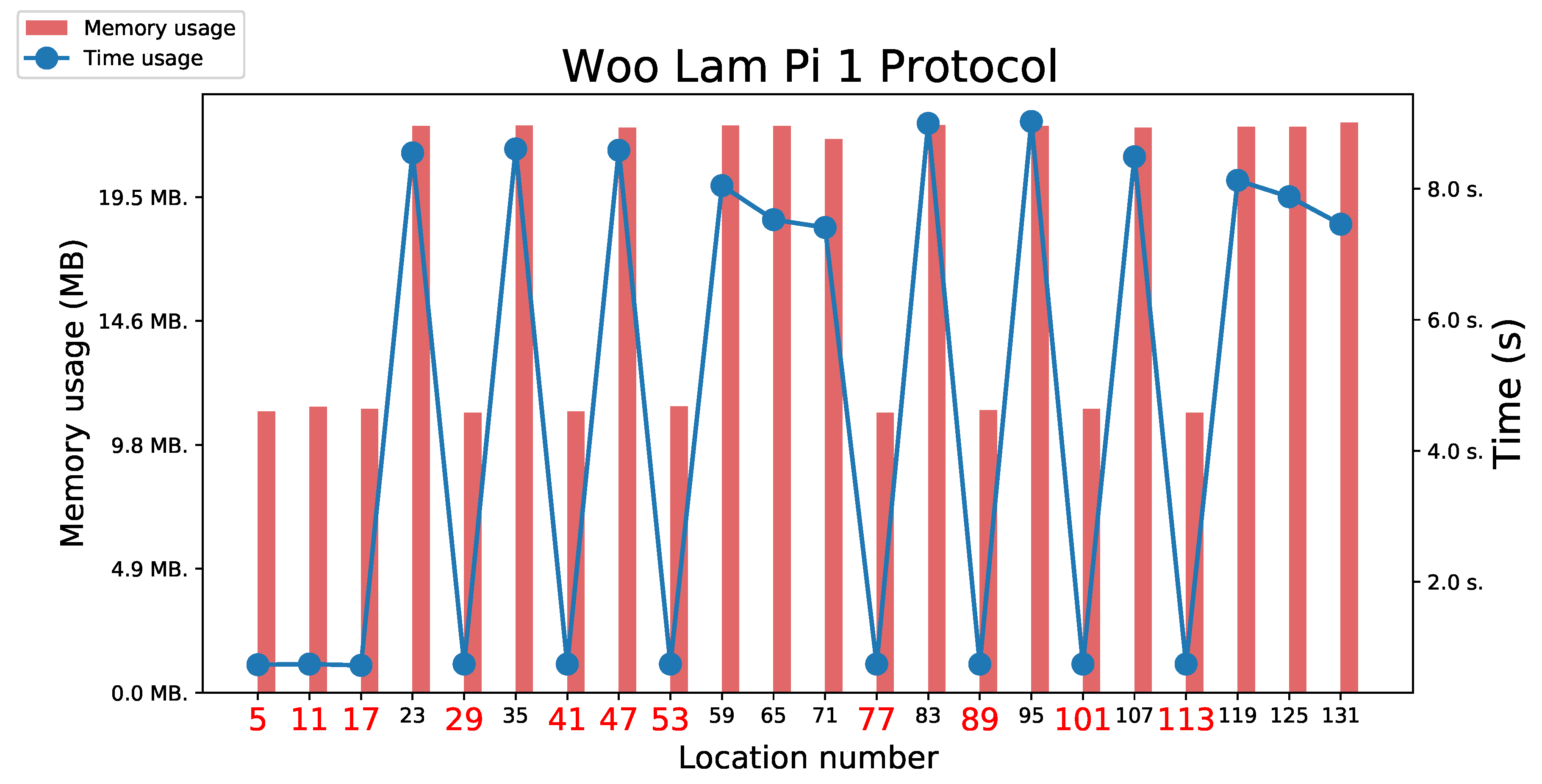

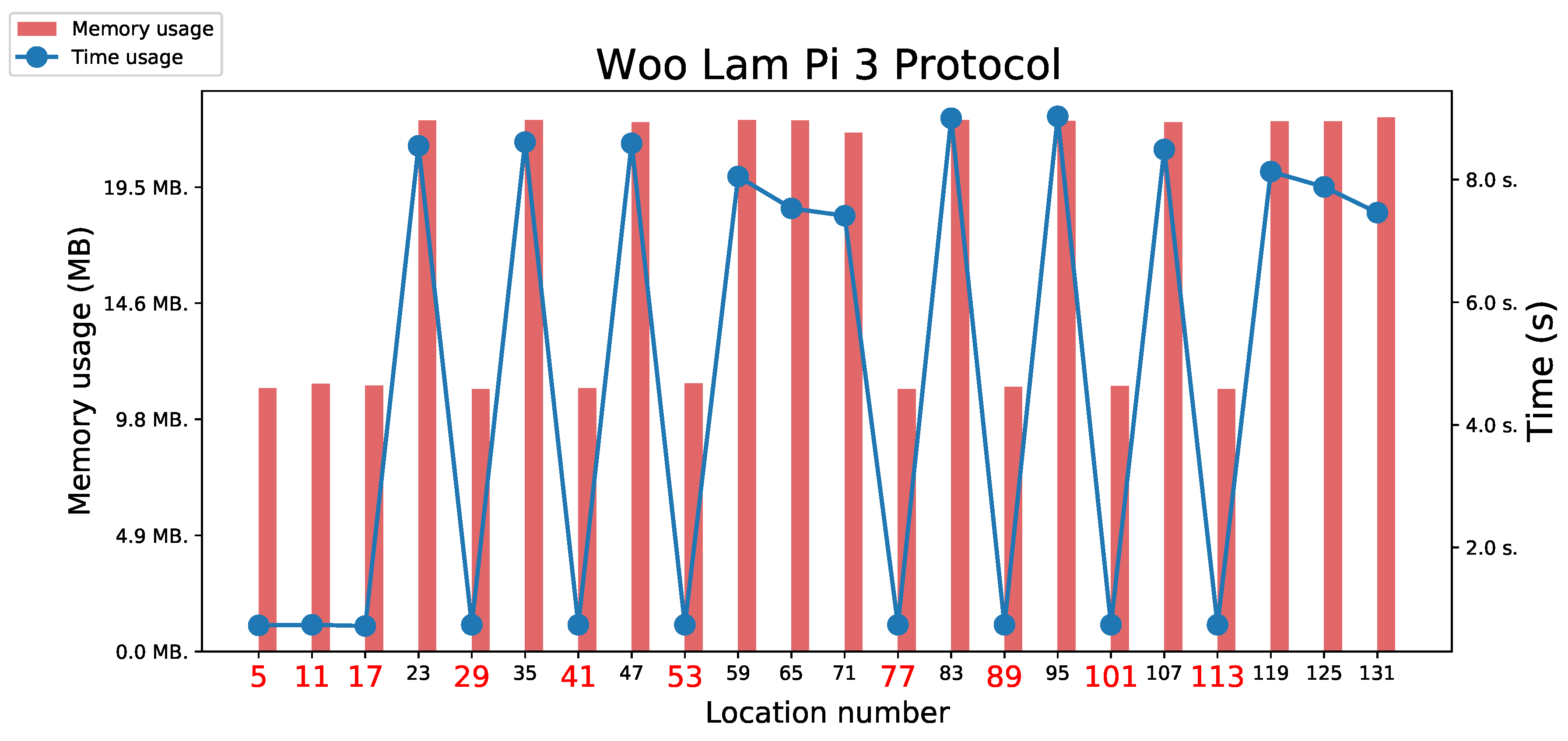

5.3.6. Woo–Lam Pi Protocol

5.3.7. Woo–Lam Pi 1 and Pi 3 Protocols

5.3.8. MobInfoSec Protocol

5.4. Conclusions on Performance Evaluation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Lowe, G. Breaking and Fixing the Needham-Schroeder Public-Key Protocol Using FDR. Proc. of TACAS’96; Springer-Verlag: London, UK, 1996; pp. 147–166.

- Burrows, M.; Abadi, M.; Needham, R. A Logic of Authentication. ACM Trans. Comput. Syst. 1990, 8, 18–36.

- Dojen, R.; Jurcut, A.; Coffey, T.; Gyorodi, C. On Establishing and Fixing a Parallel Session Attack in a Security Protocol. Intelligent Distributed Computing, Systems and Applications. Springer, 2008, pp. 239–244.

- Boureanu, I.; Delicata, S.; Rasmussen, K. Timed Authentication in Security Protocols. International Journal of Information Security 2019, 18, 287–309.

- van der Meyden, R.; Su, K. Timed Epistemic Logic for Security Protocols. International Symposium on Trustworthy Global Computing. Springer, 2012, pp. 224–241.

- Kremer, S.; Ryan, M.D.; Steel, G. A Compositional Proof System for Cryptographic Protocols with Time Constraints. In International Conference on Computer Aided Verification; Springer, 2018; pp. 110–128.

- Dolev, D.; Halpern, J.Y.; Pinter, S. On Clocks and Messaging in Cryptographic Protocols. Journal of Cryptology 2004, 17, 41–58.

- Alur, R.; Dill, D.L. A Theory of Timed Automata. Theoretical Computer Science 1994, 126, 183–235.

- Halpern, J.Y.; Vardi, M.Y. Epistemic Logic for Distributed Protocols. Proceedings of the First Symposium on Logic in Computer Science. IEEE, 1989, pp. 250–260.

- Lomuscio, A.R.; Penczek, W.; Qu, H. Model Checking for Multi-Agent Systems with Time Constraints. Software and Systems Modeling 2017, 16, 425–444.

- Herzberg, A.; Jarecki, S. Timed Communication Protocols in Adversarial Networks. International Workshop on Theory and Practice in Public Key Cryptography. Springer, 1995, pp. 73–89.

- Kuhn, M.G.; Anderson, R.J. Timing Attacks on Cryptographic Protocols: Formal Analysis and Prevention. ACM Transactions on Information and System Security (TISSEC) 2010, 13, 47.

- Grosser, A.; Kurkowski, M.; Piatkowski, J.; Szymoniak, S. ProToc - An Universal Language for Security Protocols Specifications. Soft Computing in Computer and Information Science. Springer, 2014, Vol. 342, Advances in Intelligent Systems and Computing, pp. 237–248.

- Kurkowski, M.; Penczek, W. Applying Timed Automata to Model Checking of Security Protocols. Handbook of Finite State Based Models and Applications, CRC Press, Taylor & Francis Group, 2016; pp. 223–254.

- Zbrzezny, A.M.; Siedlecka-Lamch, O.; Szymoniak, S.; Kurkowski, M. SMT Solvers as Efficient Tools for Automatic Time Properties Verification of Security Protocols. 20th Int’l Conf. PDCAT. IEEE, 2019, pp. 320–327.

- Kanovich, M.; Kirigin, T.; Nigam, V.; Scedrov, A.; Talcott, C. Discrete vs. Dense Times in the Analysis of Cyber-Physical Security Protocols. Proc. of 4th Conf. on Principles of Security and Trust. Springer, 2015, Vol. 9036, LNCS, pp. 259–279.

- Basin, D.A.; Cremers, C.; Meadows, C.A. Model Checking Security Protocols. In Handbook of Model Checking; Springer, 2018; pp. 727–762.

- Biere, A.; Cimatti, A.; Clarke, E.M.; Fujita, M.; Zhu, Y. Symbolic Model Checking Using SAT Procedures instead of BDDs. Proc. of the 36th Conf. on Design Automation, 1999, pp. 317–320.

- Kurkowski, M.; Srebrny, M. A Quantifier-free First-order Knowledge Logic of Authentication. Fundam. Inform. 2006, 72, 263–282.

- Lomuscio, A.; Raimondi, F.; Wozna, B. Verification of the TESLA protocol in MCMAS-X. Fundam. Inform. 2007, 79, 473–486.

- Basin, D.A.; Cremers, C.; Dreier, J.; Sasse, R. Symbolically analyzing security protocols using tamarin. ACM SIGLOG News 2017, 4, 19–30.

- Siedlecka-Lamch, O.; Szymoniak, S.; Kurkowski, M. A Fast Method for Security Protocols Verification. Proc. of 18th Int’l Conf. CISIM, 2019, pp. 523–534.

- Hess, A.V.; Mödersheim, S. Formalizing and Proving a Typing Result for Security Protocols in Isabelle/HOL. 30th IEEE Computer Security Foundations Symposium, CSF 2017, Santa Barbara, CA, USA, August 21–25, 2017. IEEE Computer Society, 2017, pp. 451–463.

- Mödersheim, S.; Viganò, L.; Basin, D.A. Constraint differentiation: Search-space reduction for the constraint-based analysis of security protocols. J. Comput. Secur. 2010, 18, 575–618.

- Armando, A.; Carbone, R.; Compagna, L. SATMC: a SAT-based model checker for security protocols, business processes, and security APIs. Int. J. Softw. Tools Technol. Transf. 2016, 18, 187–204.

- Li, L.; Sun, J.; Liu, Y.; Sun, M.; Dong, J. A Formal Specification and Verification Framework for Timed Security Protocols. IEEE Transactions on Software Engineering 2018, 44, 725–746.

- Benerecetti, M.; Cuomo, N.; Peron, A. TPMC: A Model Checker For Time–Sensitive Security Protocols. Journal of Computers 2009, 4, 366–377.

- Szymoniak, S.; Siedlecka-Lamch, O.; Kurkowski, M. On Some Time Aspects in Security Protocols Analysis. Proc. of 25th Int’l Conf. CN’18, 2018, pp. 344–356.

- Zbrzezny, A.M.; Zbrzezny, A.; Siedlecka-Lamch, O.; Szymoniak, S.; Kurkowski, M. VerSecTis - an agent based model checker for security protocols. Proc. of Int’l Conf. AAMAS’20. ACM, 2020, pp. 2123–2125.

- Lomuscio, A.; Penczek, W. LDYIS: a Framework for Model Checking Security Protocols. Fundam. Inform. 2008, 85, 359–375.

- Boureanu, I.; Cohen, M.; Lomuscio, A. Model checking detectability of attacks in multiagent systems. Proc. of 9th Int’l Conf. AAMAS’10, 2010, Vol. 1-3, pp. 691–698.

- Boureanu, I.; Jones, A.V.; Lomuscio, A. Automatic verification of epistemic specifications under convergent equational theories. Proc. of 11th Int’l Conf. AAMAS’12, 2012, Vol. 3, pp. 1141–1148.

- Millen, J.K. CAPSL: Common Authentication Protocol Specification Language. Proc. of the 1996 Workshop on New Security Paradigms, 1996, p. 132.

- Boureanu, I.; Kouvaros, P.; Lomuscio, A. Verifying Security Properties in Unbounded Multiagent Systems. Proc. AAMAS’16 Conf., 2016, pp. 1209–1217.

- Corin, R.; Etalle, S.; Hartel, P.H.; Mader, A. Timed analysis of security protocols. Journal of Computer Security 2007, 15, 619–645.

- Jakubowska, G.; Penczek, W. Modelling and Checking Timed Authentication of Security Protocols. Fundam. Inform. 2007, 79, 363–378.

- Zbrzezny, A.M.; Szymoniak, S.; Kurkowski, M. Efficient Verification of Security Protocols Time Properties Using SMT Solvers. Proc. of 12th Int’l Conf. CISIS’19, 2019, pp. 25–35.

- Li, L.; Sun, J.; Dong, J.S. Automated Verification of Timed Security Protocols with Clock Drift. FM 2016: Formal Methods - 21st International Symposium, Proceedings, 2016, Vol. 9995, Lecture Notes in Computer Science, pp. 513–530.

- Mu, Y.; Juan, L.; Shen, G.; Zihao, W. Runtime verification of self-adaptive multi-agent system using probabilistic timed automata. Journal of Intelligent and Fuzzy Systems 2023.

- Sankur, O. Timed Automata Verification and Synthesis via Finite Automata Learning. International Conference on Tools and Algorithms for Construction and Analysis of Systems, 2023, pp. 335–349.

- Barthe, G.; Lago, U.D.; Malavolta, G.; Rakotonirina, I. Tidy: Symbolic Verification of Timed Cryptographic Protocols. Proceedings of the 2022 ACM SIGSAC Conference on Computer and Communications Security, 2022.

- Middelburg, C. Dormancy-aware timed branching bisimilarity with an application to communication protocol analysis. Theoretical Computer Science 2024, 912, 114681.

- Sahu, P. Automated Verification for Real-Time Systems. Lecture Notes in Computer Science, 2023.

- Fagin, R.; Halpern, J.Y.; Moses, Y.; Vardi, M.Y. Reasoning about Knowledge; MIT Press: Cambridge, 1995.

- Wooldridge, M. An Introduction to Multi-Agent Systems - Second Edition, John Wiley & Sons, 2009.

- Lomuscio, A.; Sergot, M.J. Deontic Interpreted Systems. Studia Logica 2003, 75, 63–92.

- Woźna-Szcześniak, B. SAT-Based Bounded Model Checking for Weighted Deontic Interpreted Systems. Proc. of Conf. EPIA’13, 2013, pp. 444–455.

- Woźna-Szcześniak, B.; Zbrzezny, A. Checking EMTLK Properties of Timed Interpreted Systems Via Bounded Model Checking. Studia Logica 2015, 1–38.

- Needham, R.; Schroeder, M. Using Encryption for Authentication in Large Networks of Computers. Commun. ACM 1978, 21, 993–999.

- Lowe, G. An Attack on the Needham-Schroeder Public-Key Authentication Protocol. Inf. Process. Lett. 1995, 56, 131–133.

- Baier, C.; Katoen, J.P. Principles of Model Checking; MIT Press, 2008; pp. I–XVII, 1–975.

- Zbrzezny, A.M.; Zbrzezny, A. Checking WECTLK Properties of Timed Real-Weighted Interpreted Systems via SMT-based Bounded Model Checking. Proc. of 17th Conf. EPIA’15. Springer, 2015, Vol. 9273, LNCS, pp. 638–650.

- de Moura, L.; Bjørner, N. Z3: an Efficient SMT solver. Proc. of 14th Int’l Conf. TACAS’08. Springer-Verlag, 2008, Vol. 4963, LNCS, pp. 337–340.

- Dutertre, B. Yices 2.2. Proc. of CAV’14 Conf. Springer, 2014, Vol. 8559, LNCS, pp. 737–744.

- Barrett, C.; Conway, C.L.; Deters, M.; Hadarean, L.; Jovanović, D.; King, T.; Reynolds, A.; Tinelli, C. CVC4. Proc. of the 23rd CAV Conf. Springer, 2011, Vol. 6806, LNCS, pp. 171–177.

- Zbrzezny, A.M.; Zbrzezny, A. SAT-Based BMC Approach to Verifying Real-Time Properties of Multi-Agent Systems. 15th IEEE/ACS Int’l Conf. AICCSA’18, 2018, pp. 1–8.

- Callegati, F.; Cerroni, W.; Ramilli, M. Man-in-the-Middle Attack to the HTTPS Protocol. IEEE Secur. Priv. 2009, 7, 78–81.

- Denning, D.E.; Sacco, G.M. Timestamps in Key Distribution Protocols. Commun. ACM 1981, 24, 533–536.

- Woo, T.Y.C.; Lam, S.S. A Lesson on Authentication Protocol Design. SIGOPS Oper. Syst. Rev. 1994, 28, 24–37.

- Satyanarayanan, M. Integrating security in a large distributed system. ACM Trans. Comput. Syst. 1989, 7, 247–280.

- Lowe, G. Some new attacks upon security protocols. Proceedings 9th IEEE Computer Security Foundations Workshop, 1996, pp. 162–169.

- Siedlecka-Lamch, O.; El Fray, I.; Kurkowski, M.; Pejas, J. Verification of Mutual Authentication Protocol for MobInfoSec System. Proc. of 14th Int’l Conf. CISIM, 2015, pp. 461–474.

1 The implementation [29], along with instructions for installing the necessary software and the specifications of the tested protocols, can be found on GitHub (https://github.com/amz-research-veri/VerSecTis).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).