1. Introduction

Artificial intelligence is a product of the rapid development of information technology; it simulates specific human characteristics and mimics certain human behaviors. The gradual rise of artificial intelligence technology has brought a significant breakthrough to digital media interactive product design. Applying artificial intelligence to design, optimizing intelligent services, and providing people with greater convenience demonstrate its advantages and drive the continuous development of interactive design for digital media [1]. This intervention enhances the efficiency of art design, expands the creative space available to people, and catalyzes the transformation of traditional art aesthetics. For instance, artworks that artists create with generative adversarial networks (GANs) [2] have broadened the horizons of the creative process, sparking extensive artistic discussion and appreciation among audiences. Moreover, digital art platforms that analyze users' aesthetic preferences with deep learning algorithms can personalize artworks, enrich user experiences, and facilitate the dissemination and sale of these works.

Beyond these advances, the integration of artificial intelligence into interactive design extends to voice interfaces such as Cortana, Microsoft's AI-powered virtual assistant, which illustrates how voice interaction can transform user experiences across digital media. By leveraging machine learning models [3], Cortana supports seamless voice commands and responses, improving accessibility and user engagement. This integration streamlines tasks, opens new avenues for personalized interaction, and changes how users interact with digital platforms and services.

This paper explores the evolving relationship between artificial intelligence and artistic design and its application to the design of interactive products in digital media. By studying applications of artificial intelligence in art creation, such as generative adversarial networks (GANs) and deep learning algorithms, the paper analyzes how artificial intelligence can improve design efficiency, expand creative space, and promote the transformation of traditional art aesthetics. The paper also examines the application of artificial intelligence to voice interaction, taking Microsoft's virtual assistant Cortana as an example of how voice interaction technology, driven by machine learning models, can enhance user experience, improve task efficiency, and enable personalized interaction. The aim is to reveal the impact of AI on creativity and user interaction and to guide the future development of digital media and artistic innovation.

2. Related Work

2.1. Artificial Intelligence and Interaction Design

In digital media interactive product design, artificial intelligence technology has made remarkable progress and found wide application. In the 1980s, the resurgence of artificial neural networks (ANNs) made them a hot spot of AI research, and artificial intelligence entered a second period of vigorous development. However, the collapse of the market for dedicated LISP machine hardware, the rising maintenance costs of expert systems, and the failure of many AI products to live up to their promises led to expert systems and AI being questioned and criticized once more, and AI fell into its second low. More powerful statistical learning models followed, such as the Random Forest (RF) model proposed by Breiman and Cutler, which combines bagging (bootstrap aggregating) with an ensemble of decision trees. In the 21st century, with continuous growth in computing power and further innovation in theoretical algorithms, artificial intelligence has achieved breakthroughs in many fields, such as computer vision and natural language processing. For example, Hinton et al. advanced a class of machine learning algorithms based on deep neural networks, namely deep learning (DL), which has been widely adopted in related fields and ushered in the third boom period of artificial intelligence.

In recent years, artificial intelligence has empowered many sectors of society with its rapid pace of development. A typical example is the generative adversarial network (GAN) proposed by Ian J. Goodfellow et al. A GAN consists of a generator, which learns to capture the distribution of the sample data and generates new images not present in the original dataset, and a discriminator, which determines whether its input is real data or a generated sample. This adversarial learning approach excels at tasks such as image generation and semantic segmentation, laying a solid foundation for AI in the design field [4]. In 2023, Liu Shanlin et al. proposed a generative adversarial network (SSGAN) based on a color mean segmentation encoder (CMS-Encoder), which extracts image features by clustering. This method performs well at generating high-quality, large-scale images. Generative adversarial networks are now widely used across computer vision, in areas such as image segmentation, video prediction, and style transfer, and have advanced the development of these fields.
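For reference, the adversarial game between generator and discriminator described above is commonly written, following Goodfellow et al., as the minimax value function below. The symbols are the standard ones from the GAN literature rather than notation defined elsewhere in this paper: $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ maps a noise vector $z$ drawn from a prior $p_z$ to a synthetic sample, and $p_{\text{data}}$ is the distribution of real data.

$$
\min_{G}\max_{D} V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator is trained to maximize this value by labeling real and generated samples correctly, while the generator is trained to minimize it by producing samples that the discriminator mistakes for real data.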

In addition, AI combines naturally with finance. Data is an essential input to artificial intelligence, and the financial industry, being highly digitized, has accumulated large amounts of user and transaction data in its daily business, making it one of the ideal scenarios for artificial intelligence applications. According to a Business Insider survey, 80% of US banks believe that AI can help improve financial services and have already integrated, or plan to integrate, AI into their financial operations [5]. The global AI financial services market is expected to reach $130 billion by 2027. In these scenarios, artificial intelligence enhances the interactivity and practicality of products and significantly improves the user's product experience.

To sum up, as AI technology continues to mature and improve, the various fields it enables are becoming increasingly intelligent. Especially in visual communication design, which mainly transmits visual symbol information, the application of artificial intelligence technology will make product design and user experience more diversified and intelligent.

2.2. Advantages of Applying Artificial Intelligence Technology in Digital Media Interaction Design

Artificial intelligence (AI) technology has significantly advanced digital media interaction design by breaking traditional design limitations and enhancing human-machine interaction [6,7]. Previous research on methods and technologies in product design points to the following key advantages:

Breaking the Limitations of Traditional Design: AI has enabled a shift from tangible to intangible interactions, enhancing user experiences with intelligent, convenient, and digital product characteristics. As technology evolves, AI facilitates natural human-computer interaction by identifying user habits and adapting machines accordingly. This evolution supports the move towards more natural and intuitive interfaces [8,9,10].

Innovative Interaction Design: AI-driven human-computer interaction fosters a symbiotic relationship in which technology not only provides information efficiently but also understands and responds to user needs. This bidirectional interaction improves usability and user satisfaction [11,12].

Enhancing Human-Machine Emotional Interaction: AI brings emotional intelligence into interactions even though machines lack human subjectivity. Biometric sensors infer user emotions from data such as skin responses and brain waves, enabling personalized and responsive design adjustments. This strengthens emotional connections and user engagement, as exemplified by fluent interactions with voice assistants and intelligent robots [13].

Optimizing Interaction Design Structure: In the era of big data, AI optimizes the structure of digital interactions to meet growing demands for efficiency and diversity. AI technologies such as intelligent voice input streamline data retrieval and task execution, improving interaction efficiency and user experience and supporting more effective interactions tailored to individual user needs.

Together, the theoretical foundations and empirical evidence provided by previous research on methods and technologies in digital media interaction design pave the way for further methodological advances in AI applications.

2.3. Effective Application of Artificial Intelligence Technology in Digital Media Interaction Design

Artificial intelligence (AI) technology has transformed daily life, prominently reshaping digital media interaction design across multiple domains. In home appliance design, AI integration, exemplified by Xiaomi's Xiao Ai, has ushered in a new era of smart home functionality. By enabling seamless voice commands for remote control of appliances, AI enhances user convenience and sets a precedent for integrated smart environments [14,15]. Similarly, in app product design, AI plays a pivotal role in Google's apps, enhancing the user experience through innovations such as Gmail's Smart Compose and personalized content recommendations. These advances underscore AI's capacity to streamline user interaction and content delivery, thereby shaping modern digital experiences [16].

Moreover, AI's impact extends to transportation design, where technologies such as Waymo's demonstrate AI's capabilities in autonomous driving. By leveraging machine learning for real-time decision-making and enhanced vehicle perception, AI supports safer and more efficient transportation systems [17,18,19]. On social interaction platforms, AI enables services such as Weibo and WeChat to support dynamic communication and content dissemination. Features such as personalized content curation and interactive functions foster community engagement and redefine how users interact and share information in real time [20].

This comprehensive integration of AI across diverse sectors underscores its transformative potential in digital media interaction design, setting the stage for a methodological approach that builds upon these technological advancements to address current design challenges effectively.

3. Methodology

Voice-enabled personal assistants such as Microsoft Cortana continue to evolve, enabling users to accomplish more tasks every day. Despite significant technological advances, these assistants still struggle to handle certain user queries satisfactorily. To address this gap, we analyze millions of user queries and develop a machine learning system [21,22,23] that classifies queries into two categories: those Cortana handles well, with high user satisfaction, and those it does not. Using unsupervised learning, we then cluster similar queries so that they can be assigned to human assistants, efficiently complementing Cortana's functionality [24].

3.1. Datasets

For model training, we used hundreds of thousands of anonymized Cortana query logs sourced from a proprietary internal Microsoft data warehouse. Each log entry corresponds to a user query and includes metadata such as time, location, and duration, as well as telemetry reflecting the user's interaction with Cortana's response and the query status. Queries fall into three main types: General Search, where Cortana redirects the user to Bing search [25,26]; Command and Control (C&C), covering tasks executed via voice commands, such as creating calendar appointments or sending text messages; and Enriched Search, where Cortana provides enhanced responses such as weather updates or jokes. We focused on C&C and Enriched queries, which align most closely with Cortana's targeted enhancements, and, because of computational constraints, trained our models on data from a one-day window [27,28].

Table 1. Summary of Dataset.

| Category | Class 1 to 0 ratio | Train size | Test size |
|---|---|---|---|
| General | 0.304 | 302k | 33k |
| C&C | 0.596 | 223k | 25k |
| Enriched | 6.14 | 28k | 3.1k |

3.2. Data Processing

To label our data efficiently without manual intervention, we employed two heuristics based on information extracted from the query logs. The first, Repetition-Based Tagging (RBT) [29], assumes that an unsatisfied user often resubmits a slightly modified query within the same session. Using a Levenshtein (edit) distance threshold of 5, we identified queries for which users submitted similar versions multiple times and labeled them '1' (unsatisfactory Cortana response) [30]. The second heuristic, Feedback-Based Tagging (FBT), uses explicit feedback signals from the Cortana logs, specifically for Enriched and Command and Control (C&C) queries: after responding to these queries, Cortana prompts users to rate their satisfaction, and we used this feedback to label the query entries [31]. However, this raises reliability concerns, particularly for Enriched data, where users may navigate away without providing feedback, which can produce inaccurate labels indicating dissatisfaction.
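The repetition heuristic can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a session is available as a time-ordered list of query strings, treats a query as repeated when the next query in the same session is within a Levenshtein distance of 5 (the paper does not say whether the threshold is inclusive), and lower-cases text before comparing. The function names `levenshtein` and `repetition_tags` are hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance with unit-cost insertions, deletions, and substitutions."""
    if len(a) < len(b):
        a, b = b, a  # keep the DP row as short as possible
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def repetition_tags(session_queries, threshold=5):
    """Hypothetical RBT labeling for one session's queries, in time order.

    A query is labeled 1 (assumed unsatisfactory) when the user's next query is
    a near-repeat, i.e. within `threshold` edits; otherwise it is labeled 0.
    """
    labels = []
    for current, following in zip(session_queries, session_queries[1:] + [None]):
        repeated = (
            following is not None
            and levenshtein(current.lower(), following.lower()) <= threshold
        )
        labels.append(1 if repeated else 0)
    return labels
```

For example, `repetition_tags(["call mom", "call mum", "what's the weather in paris"])` labels only the first query as 1, because only it is followed by a near-duplicate. A fixed absolute threshold is lenient for very short queries (any two queries of at most five characters fall within it), which is one reason a length-normalized distance might be worth considering.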

We used the raw query text as our primary source of features and employed an event-model representation. First, we built a vocabulary by preprocessing all queries: removing noise, non-alphabetic characters, and non-English words, and applying basic stemming to unify related terms [32]. Each query was then encoded as a binary vector of size k, where k is the number of unique terms in the vocabulary; each bit indicates the presence or absence of the corresponding term in the query. Preprocessing and feature extraction yielded 10,414 features. This representation allowed our models to process and classify queries efficiently based on which vocabulary terms they contain.
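A minimal Python sketch of this binary encoding is shown below. It assumes lower-cased alphabetic tokens extracted with a regular expression, and it uses a toy suffix-stripping function (`crude_stem`, hypothetical) in place of the stemmer, which the paper does not name; the non-English-word filter is omitted. A production system with 10,414 features would normally store these vectors sparsely; dense lists are used here only for readability.

```python
import re


def tokenize(query):
    """Lower-case and keep alphabetic runs only (drops digits and punctuation)."""
    return re.findall(r"[a-z]+", query.lower())


def crude_stem(token):
    """Toy suffix stripper standing in for a real stemmer (assumption)."""
    for suffix in ("ing", "ed", "es", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def build_vocabulary(queries):
    """Map each distinct stemmed term to a fixed position 0..k-1."""
    terms = sorted({crude_stem(t) for q in queries for t in tokenize(q)})
    return {term: idx for idx, term in enumerate(terms)}


def encode(query, vocab):
    """Binary vector of length k = len(vocab): bit is 1 if the term occurs."""
    vec = [0] * len(vocab)
    for t in tokenize(query):
        idx = vocab.get(crude_stem(t))
        if idx is not None:  # out-of-vocabulary terms are ignored
            vec[idx] = 1
    return vec


vocab = build_vocabulary(["Set an alarm", "set alarms", "Call Mom"])
print(vocab)
print(encode("Called Mom!", vocab))
```

Because the vector only records presence, repeated words and word order are discarded, which keeps the representation compact at the cost of some context.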

3.3. Support Vector Machine Classifier

We employed a Support Vector Machine (SVM) [33] with a linear kernel on Command and Control (C&C) data labeled with Feedback-Based Tagging (FBT) and compared its performance with that of Naive Bayes (NB). The SVM implementation used the LIBLINEAR library and addressed the class imbalance in the training and test data by experimenting with different class weights in the C-SVM formulation. This formulation uses the penalty parameter C to control the trade-off between margin maximization and classification error, and the class weights scale that penalty to favor the minority class (class 1, indicating unsatisfactory Cortana responses).
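One standard way to write a class-weighted C-SVM is shown below, as a sketch of the formulation rather than LIBLINEAR's exact objective (LIBLINEAR's solvers differ in details such as how the bias term is handled). Here $\mathbf{x}_i$ is a query's binary feature vector, $y_i \in \{0, 1\}$ its label, $\tilde{y}_i = +1$ for class 1 and $-1$ for class 0, $\xi_i$ are slack variables, and $c_{y_i}$ is the weight of query $i$'s class. With $c_0 = 1$ and $c_1 = 1.5$, each margin violation by a class-1 query costs 1.5 times as much as one by a class-0 query.

$$
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \; \frac{1}{2}\lVert \mathbf{w} \rVert^{2} + C \sum_{i=1}^{n} c_{y_i}\, \xi_i
\quad \text{subject to} \quad
\tilde{y}_i\left(\mathbf{w}^{\top}\mathbf{x}_i + b\right) \ge 1 - \xi_i, \qquad \xi_i \ge 0 .
$$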

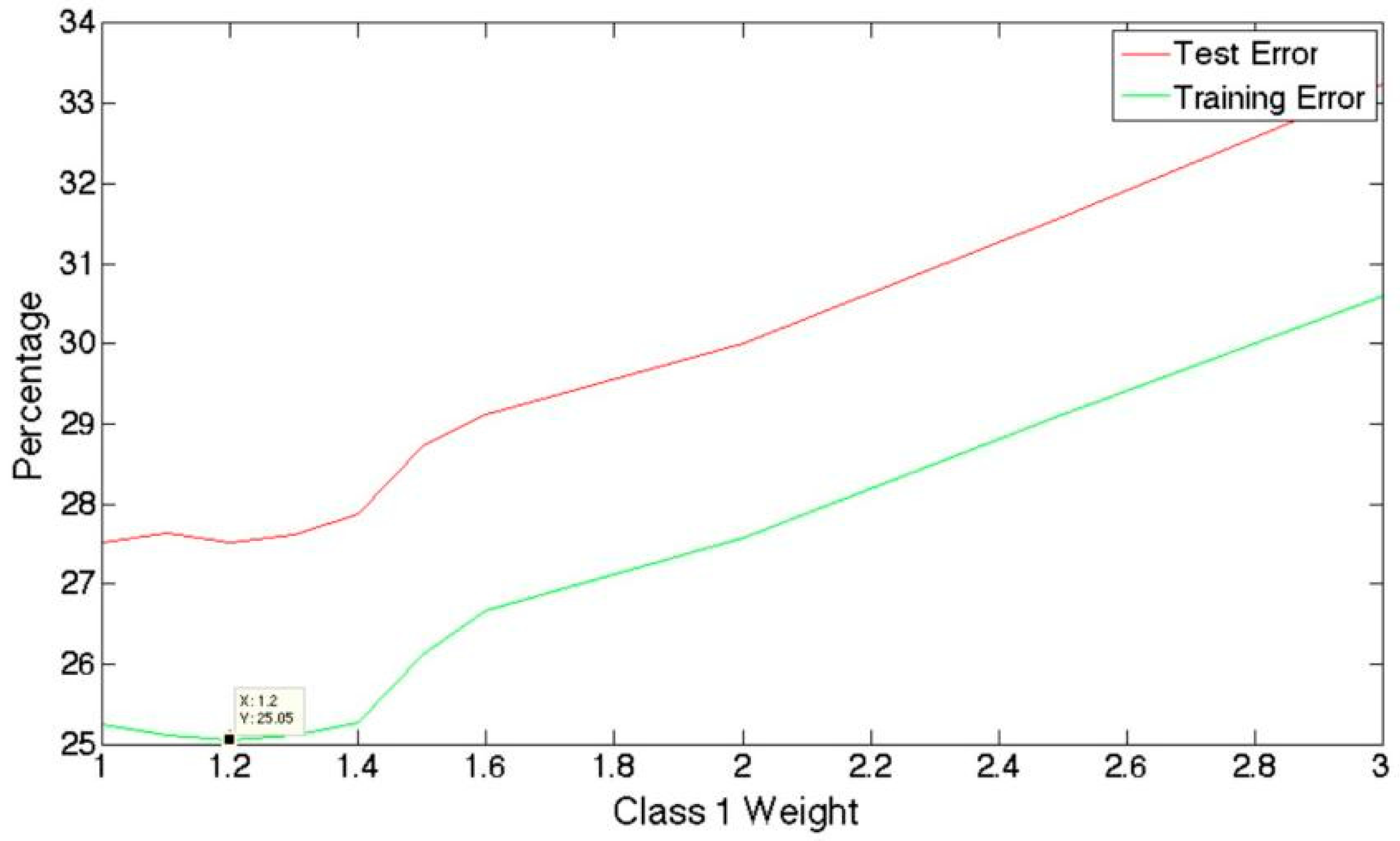

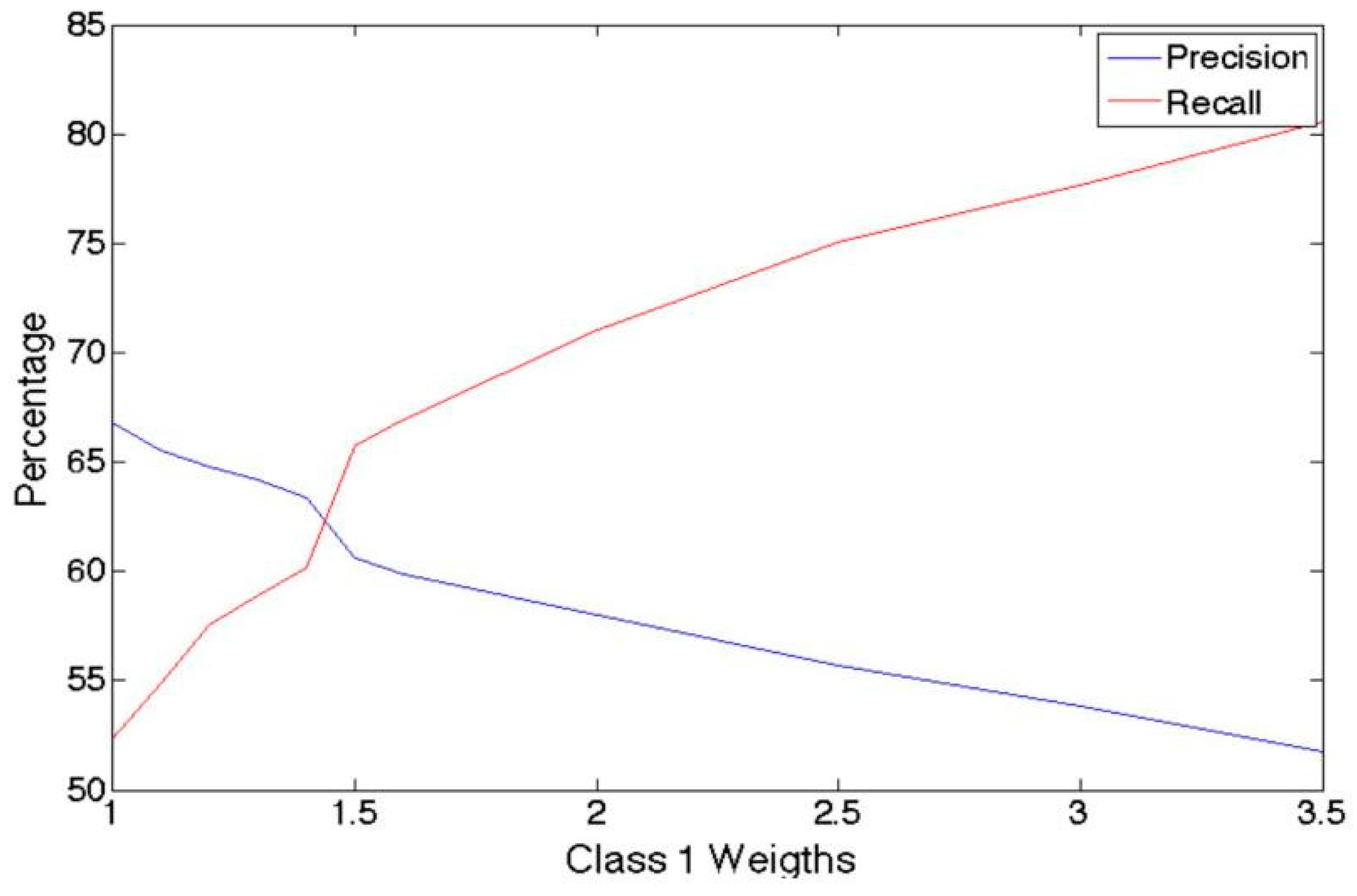

The results, shown in Figure 1 and Figure 2, are promising for the SVM. By tuning the class weights, in particular setting the weight of class 1 to 1.5, we achieved a test error of 28.7%, a recall of 60%, and a precision of 65.8%. These metrics indicate a balanced performance in which the classifier identifies a majority of unsatisfactory responses while maintaining reasonable precision. Increasing the weight beyond the optimal point (around 1.4) raised both training and test errors, because the stronger bias towards class 1 produced more false positives (satisfactory responses incorrectly tagged as unsatisfactory).
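To illustrate how a class weight changes training, the sketch below trains a class-weighted linear SVM in pure Python. It is not LIBLINEAR: it swaps in a Pegasos-style stochastic subgradient method on the weighted hinge loss, with a constant feature (the hypothetical index `BIAS`) standing in for the bias term. The regularization strength `lam`, the epoch count, the toy data, and the function names are illustrative assumptions.

```python
import random

BIAS = -1  # hypothetical index of a constant feature that acts as the bias term


def train_weighted_svm(X, y, class_weight, lam=0.01, epochs=200, seed=0):
    """Pegasos-style SGD for a linear SVM with a class-weighted hinge loss.

    X: list of sets of active feature indices (binary bag-of-words queries).
    y: list of labels, 1 = unsatisfactory response, 0 = satisfactory.
    class_weight: dict mapping each label to a multiplier on its hinge loss.
    """
    rng = random.Random(seed)
    w = {}  # sparse weight vector: feature index -> weight
    order = list(range(len(X)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            feats = X[i] | {BIAS}
            sign = 1 if y[i] == 1 else -1
            margin = sign * sum(w.get(j, 0.0) for j in feats)
            # Regularization: shrink all weights by (1 - eta * lam) = (1 - 1/t).
            shrink = 1.0 - eta * lam
            for j in w:
                w[j] *= shrink
            # Weighted hinge-loss subgradient step for margin violations.
            if margin < 1:
                step = eta * class_weight[y[i]]
                for j in feats:
                    w[j] = w.get(j, 0.0) + step * sign
    return w


def predict(w, x):
    """Return 1 if the linear score (including the bias feature) is positive."""
    score = sum(w.get(j, 0.0) for j in x | {BIAS})
    return 1 if score > 0 else 0


# Toy data: feature 0 signals class 1, feature 1 signals class 0, feature 2 is shared.
X = [{0}, {0}, {0, 2}, {1}, {1}, {1, 2}]
y = [1, 1, 1, 0, 0, 0]
w = train_weighted_svm(X, y, class_weight={0: 1.0, 1: 1.5})
print([predict(w, x) for x in X])
```

Sweeping `class_weight[1]` over values such as 1.0, 1.4, and 1.5 on held-out data, and recording test error, recall, and precision for each, mirrors the weight tuning described above; larger class-1 weights tend to push the decision boundary towards predicting class 1 more often.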

3.4. Bias vs Variance

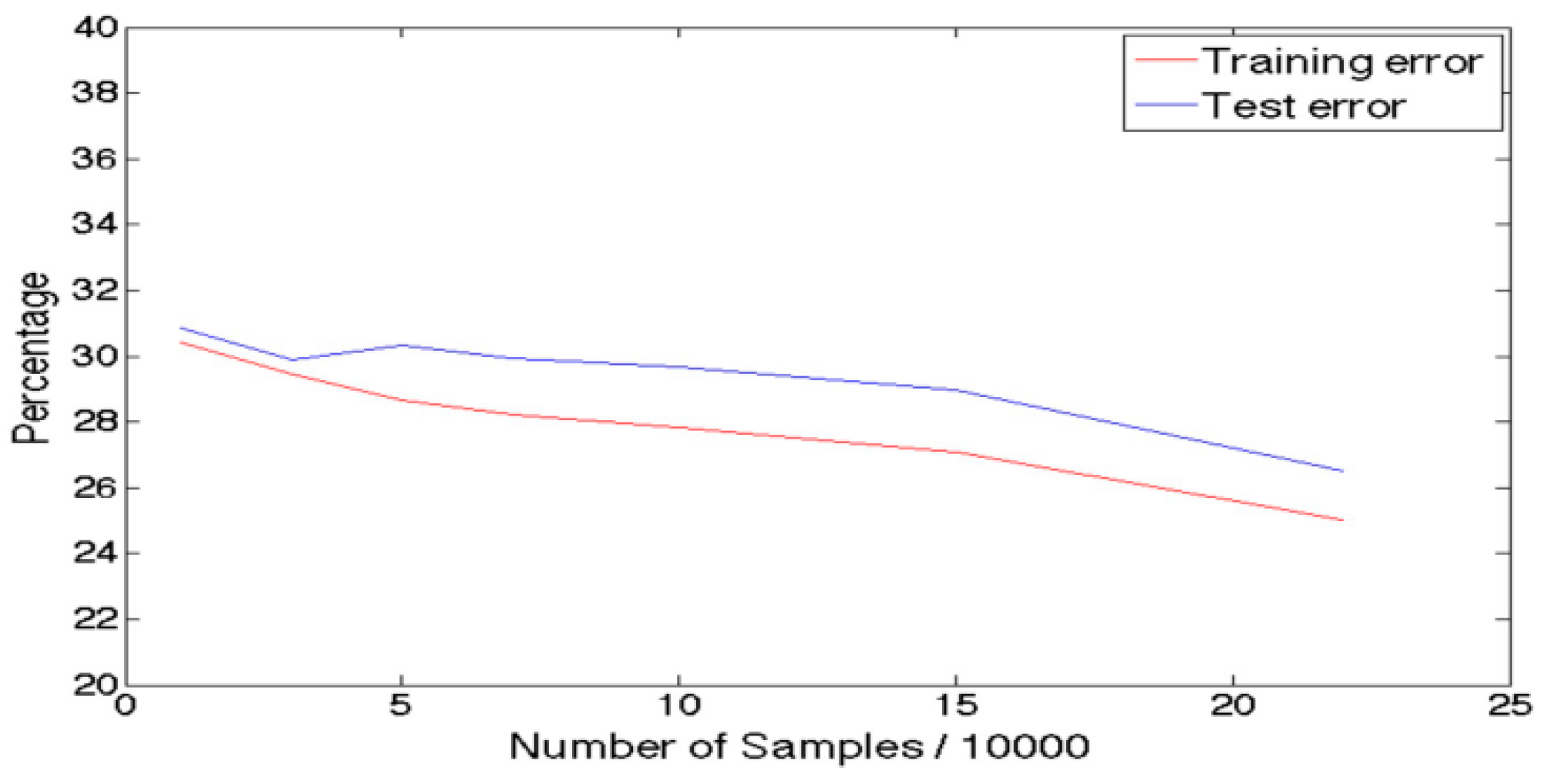

We then examined bias and variance, focusing on Command and Control (C&C) data labeled with the Feedback-Based Tagging (FBT) approach and a linear-kernel SVM. The ratio of training samples to features was substantial: above 20 for both the C&C and General data, and 2.8 for the Enriched data. These ratios indicated sufficient data for training robust models, particularly for the C&C and General categories.

Figure 3 shows that the training and test errors remain closely aligned across training set sizes for C&C data with a linear SVM. The gap between training and test error stays small, approximately 5%, as the training set grows from 10,000 to 200,000 samples, which suggests that bias and variance are reasonably balanced: the models show no clear sign of either underfitting or overfitting the data, indicating a good balance between generalization and model complexity.

Based on these findings, we conclude that the datasets and the number of features (10,414) are large enough to support practical model training without significant bias or variance problems affecting model performance.

3.5. Experimental Conclusion

Linear SVM vs. Logistic Regression

Performance Comparison: Linear SVM and Logistic Regression models perform similarly because they solve similar optimization problems. Regularization in Logistic Regression did not significantly improve performance, likely because the large dataset already mitigates high variance.
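The similarity can be made concrete with a sketch in standard notation (an illustration, not a formula taken from the experiments): both models minimize a regularized sum of a loss on the margin $z_i = \tilde{y}_i\,\mathbf{w}^{\top}\mathbf{x}_i$, with $\tilde{y}_i \in \{-1, +1\}$ and regularization strength $\lambda$, and differ only in the loss function $\ell$.

$$
\min_{\mathbf{w}} \; \frac{\lambda}{2}\lVert \mathbf{w} \rVert^{2} + \sum_{i=1}^{n} \ell(z_i),
\qquad
\ell_{\text{hinge}}(z) = \max(0,\, 1 - z),
\qquad
\ell_{\text{log}}(z) = \log\left(1 + e^{-z}\right).
$$

Both losses are convex, non-increasing in $z$, and grow roughly linearly as $z$ becomes very negative, so the two models penalize misclassified queries in much the same way; the main difference is that the hinge loss is exactly zero once $z \ge 1$, whereas the logistic loss only approaches zero.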

Experimental Setup: The models were tested against three types of test sets:

All 0's: a test set containing only negative samples (class 0).

All 1's: a test set containing only positive samples (class 1).

50-50: a balanced test set with equal numbers of positive and negative samples.

Performance Metrics: Using a linear SVM with a class 1 weight of 1.5, the model's performance on the standard test set was:

Test Error: 28.72%

Recall: 65.78%

Precision: 60.64%

Results Analysis:

All 1's Test Set: The model achieved a test error of 33.96% and a recall of 66.03%. Precision is trivially 100% on this set, because there are no negative samples that could be misclassified as positive.

All 0's Test Set: The model achieved a test error of 25.36%. Because the set contains no positive samples, recall is undefined (0/0), and precision is 0%, since every positive prediction is a false positive.

50-50 Test Set: The model achieved a test error of 30.03%, with a precision of 71.88% and a recall of 65.79%, indicating good performance on balanced data.
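The single-class test sets above involve 0/0 cases, so it helps to be explicit about the definitions. The Python sketch below (function names hypothetical) computes test error, precision, and recall from confusion-matrix counts and returns NaN wherever a ratio is 0/0.

```python
import math


def confusion_counts(y_true, y_pred):
    """Return (TP, FP, FN, TN) for binary labels where 1 is the positive class."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    return tp, fp, fn, tn


def safe_ratio(num, den):
    """num / den, or NaN for the undefined 0/0 case."""
    return num / den if den else math.nan


def report(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return {
        "test_error": (fp + fn) / len(y_true),
        "precision": safe_ratio(tp, tp + fp),  # of predicted positives, how many are real
        "recall": safe_ratio(tp, tp + fn),     # of real positives, how many were found
    }


print(report([0, 0, 0, 0], [1, 0, 0, 0]))
```

For instance, on a four-query all-0's set with one false positive, `report([0, 0, 0, 0], [1, 0, 0, 0])` gives a test error of 0.25, a precision of 0.0, and a NaN recall; on an all-1's set, precision is 1.0 whenever at least one query is predicted positive.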

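Implications: The model's error stays in a similar range (about 25% to 34%) across these test scenarios. A naive classifier that always predicts a single class would instead err on every query of one of the single-class sets and on half of the balanced set, so the model is clearly more resilient than such baselines. This indicates that the model has not simply learned the class ratio of its training data and can generalize to test sets with different class ratios.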

Methodology: K-means clustering used cosine similarity as the metric to group similar queries.

Validation: Both custom and MATLAB built-in implementations of k-means yielded similar results, validating the clustering process.

Future Directions: Exploring factor analysis as an alternative to the EM algorithm for sparse bit-vector data representations may improve clustering accuracy.
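K-means with cosine similarity is often implemented as spherical k-means: vectors are normalized to unit length, each point is assigned to the centroid with the highest dot product (which equals cosine similarity for unit vectors), and each centroid is recomputed as the normalized mean of its members. The pure-Python sketch below follows that recipe on binary query vectors; it is an illustration, not the authors' custom implementation or MATLAB's, and the function names and parameters are hypothetical.

```python
import math
import random


def to_unit(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else list(v)


def cosine(a, b):
    """Cosine similarity of two vectors that are already unit-length."""
    return sum(x * y for x, y in zip(a, b))


def spherical_kmeans(vectors, k, iters=20, seed=0):
    rng = random.Random(seed)
    data = [to_unit(v) for v in vectors]
    centroids = [list(c) for c in rng.sample(data, k)]  # k distinct starting points
    assign = [0] * len(data)
    for _ in range(iters):
        # Assignment step: each query joins its most cosine-similar centroid.
        assign = [max(range(k), key=lambda c: cosine(x, centroids[c])) for x in data]
        # Update step: centroid = normalized mean of its members (empty clusters keep theirs).
        for c in range(k):
            members = [x for x, a in zip(data, assign) if a == c]
            if members:
                mean = [sum(col) / len(members) for col in zip(*members)]
                centroids[c] = to_unit(mean)
    return assign, centroids


vectors = [[1, 1, 0, 0, 0, 0], [1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 0], [0, 0, 0, 1, 1, 1]]
assign, centroids = spherical_kmeans(vectors, k=2)
print(assign)
```

K-means is sensitive to initialization, so running it from several random seeds and keeping the clustering with the highest total within-cluster similarity is a common safeguard.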

In conclusion, the linear SVM model with appropriate class weighting performs robustly across various test scenarios, indicating its effectiveness on datasets with imbalanced and skewed class ratios [33,34,35]. Clustering queries with k-means also shows promise, and validation confirmed the reliability of the clustering approach.

The project aimed to predict which queries the existing Cortana system cannot handle satisfactorily, signaling a need for human intervention as a premium service [36,37]. Initially, using a Naive Bayes model with repetition-based tagging on General search queries produced error rates above 55%. Adopting Feedback-Based Tagging (FBT) for better data labeling, together with SVM and Logistic Regression models, reduced test errors significantly, to as low as 28%, and improved precision from 24% to 60% [38,39]. SVM and regularized logistic regression models were observed to perform similarly [40]. Moreover, the SVM model, especially with FBT tagging, remained resilient when the class ratio of the test samples differed from that of the training set.

4. Conclusion

Artificial intelligence (AI) is profoundly transforming artistic design and interactive product development in digital media [41]. The integration of AI technologies such as generative adversarial networks (GANs) and deep learning algorithms has reshaped creative processes by improving efficiency and expanding creative possibilities [42,43]. These advances optimize design processes, catalyze the evolution of traditional art aesthetics, spark new artistic discussions, and enhance user experiences through personalized interactions.

In this collaborative relationship, the AI system makes full use of its ability to learn from and process massive amounts of data, supporting creation through material accumulation, rule mining, and creative generation [44]. Designers, in turn, rely on their professional experience and aesthetic judgment to screen, optimize, and refine AI-generated works, infusing them with their own independent style and emotional experience. In this way, innovative design concepts realize a combination of human and machine [45,46,47]. In visual design, designers can use AI systems to complete initial creation quickly and efficiently and then concentrate on high-level creative design and refinement, so that the two sides complement each other and work in synergy [48].

Traditional design tends to be singular and one-sided, whereas the introduction of artificial intelligence makes the design process more diverse and integrated. By integrating production, consumption, and distribution, platforms such as Netflix show how AI can move design from purely functional to personally relevant and from decentralized to unified, so as to better meet user needs [49]. In the future, as digital media technology develops further, growing national and industry emphasis on interactive product design and user experience will drive more innovation. Artificial intelligence will continue to change design concepts and practices, profoundly affect how users interact with and experience the digital media environment, and drive the advancement and application of digital media technologies [50].

Acknowledgments

I am deeply grateful for the groundbreaking research conducted by Shi, C., Liang, P., Wu, Y., Zhan, T., & Jin, Z. (2024), particularly their pioneering work on LLMOps-driven personalized recommendation systems [1]. Their insightful findings have contributed significantly to my research and have inspired and guided my exploration of ways to enhance user experience in artificial intelligence and interaction design. Their innovative use of LLMOps for personalized recommendation has provided a pivotal framework for understanding user behavior and preferences and has influenced my methods for designing AI-driven systems. Their work continues to guide my pursuit of excellence in artificial intelligence and interaction design. Thank you. I am also grateful for the work of Shi, Y., Li, L., Li, H., Li, A., & Lin, Y. (2024), whose study Aspect-Level Sentiment Analysis of Customer Reviews Based on Neural Multi-task Learning [2] is important research in artificial intelligence and a profound inspiration for my own; it expanded my understanding of sentiment analysis and provided valuable ideas and guidance for my research.

References

- Shi, C., Liang, P., Wu, Y., Zhan, T., & Jin, Z. (2024). Maximizing User Experience with LLMOps-Driven Personalized Recommendation Systems. arXiv preprint arXiv:2404.00903.

- Shi, Y., Li, L., Li, H., Li, A., & Lin, Y. (2024). Aspect-Level Sentiment Analysis of Customer Reviews Based on Neural Multi-task Learning. Journal of Theory and Practice of Engineering Science, 4(04), 1-8. [CrossRef]

- Li, S., Xu, H., Lu, T., Cao, G., & Zhang, X. (2024). Emerging Technologies in Finance: Revolutionizing Investment Strategies and Tax Management in the Digital Era. Management Journal for Advanced Research, 4(4), 35-49.

- Shi, J., Shang, F., Zhou, S., et al. (2024). Applications of Quantum Machine Learning in Large-Scale E-commerce Recommendation Systems: Enhancing Efficiency and Accuracy. Journal of Industrial Engineering and Applied Science, 2(4), 90-103.

- Wang, S., Zheng, H., Wen, X., & Fu, S. (2024). Distributed High-Performance Computing Methods for Accelerating Deep Learning Training. Journal of Knowledge Learning and Science Technology ISSN: 2959-6386 (online), 3(3), 108-126.

- Zhang, M., Yuan, B., Li, H., & Xu, K. (2024). LLM-Cloud Complete: Leveraging Cloud Computing for Efficient Large Language Model-based Code Completion. Journal of Artificial Intelligence General science (JAIGS) ISSN: 3006-4023, 5(1), 295-326. [CrossRef]

- Wang, B., Zheng, H., Qian, K., Zhan, X., & Wang, J. (2024). Edge computing and AI-driven intelligent traffic monitoring and optimization. Applied and Computational Engineering, 77, 225-230. [CrossRef]

- Li, H., Wang, S. X., Shang, F., Niu, K., & Song, R. (2024). Applications of Large Language Models in Cloud Computing: An Empirical Study Using Real-world Data. International Journal of Innovative Research in Computer Science & Technology, 12(4), 59-69. [CrossRef]

- Ping, G., Zhu, M., Ling, Z., & Niu, K. (2024). Research on Optimizing Logistics Transportation Routes Using AI Large Models. Applied Science and Engineering Journal for Advanced Research, 3(4), 14-27.

- Shang, F., Shi, J., Shi, Y., & Zhou, S. (2024). Enhancing E-Commerce Recommendation Systems with Deep Learning-based Sentiment Analysis of User Reviews. International Journal of Engineering and Management Research, 14(4), 19-34.

- Xu, H., Li, S., Niu, K., & Ping, G. (2024). Utilizing Deep Learning to Detect Fraud in Financial Transactions and Tax Reporting. Journal of Economic Theory and Business Management, 1(4), 61-71.

- Xu, K., Zhou, H., Zheng, H., Zhu, M., & Xin, Q. (2024). Intelligent Classification and Personalized Recommendation of E-commerce Products Based on Machine Learning. arXiv preprint arXiv:2403.19345. [CrossRef]

- Xu, K., Zheng, H., Zhan, X., Zhou, S., & Niu, K. (2024). Evaluation and Optimization of Intelligent Recommendation System Performance with Cloud Resource Automation Compatibility. [CrossRef]

- Zheng, H., Xu, K., Zhou, H., Wang, Y., & Su, G. (2024). Medication Recommendation System Based on Natural Language Processing for Patient Emotion Analysis. Academic Journal of Science and Technology, 10(1), 62-68. [CrossRef]

- Zheng, H., Wu, J., Song, R., Guo, L., & Xu, Z. (2024). Predicting Financial Enterprise Stocks and Economic Data Trends Using Machine Learning Time Series Analysis. Applied and Computational Engineering, 87, 26-32. [CrossRef]

- Zhan, X., Shi, C., Li, L., Xu, K., & Zheng, H. (2024). Aspect category sentiment analysis based on multiple attention mechanisms and pre-trained models. Applied and Computational Engineering, 71, 21-26. [CrossRef]

- Liu, B., Zhao, X., Hu, H., Lin, Q., & Huang, J. (2023). Detection of Esophageal Cancer Lesions Based on CBAM Faster R-CNN. Journal of Theory and Practice of Engineering Science, 3(12), 36-42. [CrossRef]

- Liu, B., Yu, L., Che, C., Lin, Q., Hu, H., & Zhao, X. (2024). Integration and performance analysis of artificial intelligence and computer vision based on deep learning algorithms. Applied and Computational Engineering, 64, 36-41. [CrossRef]

- Liu, B. (2023). Based on intelligent advertising recommendation and abnormal advertising monitoring system in the field of machine learning. International Journal of Computer Science and Information Technology, 1(1), 17-23. [CrossRef]

- Wu, B., Xu, J., Zhang, Y., Liu, B., Gong, Y., & Huang, J. (2024). Integration of computer networks and artificial neural networks for an AI-based network operator. arXiv preprint arXiv:2407.01541. [CrossRef]

- Liang, P., Song, B., Zhan, X., Chen, Z., & Yuan, J. (2024). Automating the training and deployment of models in MLOps by integrating systems with machine learning. Applied and Computational Engineering, 67, 1-7. [CrossRef]

- Wu, B., Gong, Y., Zheng, H., Zhang, Y., Huang, J., & Xu, J. (2024). Enterprise cloud resource optimization and management based on cloud operations. Applied and Computational Engineering, 67, 8-14. [CrossRef]

- Zhang, Y., Liu, B., Gong, Y., Huang, J., Xu, J., & Wan, W. (2024). Application of machine learning optimization in cloud computing resource scheduling and management. Applied and Computational Engineering, 64, 9-14. [CrossRef]

- Liu, B., & Zhang, Y. (2023). Implementation of seamless assistance with Google Assistant leveraging cloud computing. Journal of Cloud Computing, 12(4), 1-15. [CrossRef]

- Guo, L., Li, Z., Qian, K., Ding, W., & Chen, Z. (2024). Bank Credit Risk Early Warning Model Based on Machine Learning Decision Trees. Journal of Economic Theory and Business Management, 1(3), 24-30.

- Xu, Z., Guo, L., Zhou, S., Song, R., & Niu, K. (2024). Enterprise Supply Chain Risk Management and Decision Support Driven by Large Language Models. Applied Science and Engineering Journal for Advanced Research, 3(4), 1-7.

- Song, R., Wang, Z., Guo, L., Zhao, F., & Xu, Z. (2024). Deep Belief Networks (DBN) for Financial Time Series Analysis and Market Trends Prediction. World Journal of Innovative Medical Technologies, 5(3), 27-34. [CrossRef]

- Guo, L., Song, R., Wu, J., Xu, Z., & Zhao, F. (2024). Integrating a Machine Learning-Driven Fraud Detection System Based on a Risk Management Framework. Preprints, 2024061756. [CrossRef]

- Feng, Y., Qi, Y., Li, H., Wang, X., & Tian, J. (2024, July 11). Leveraging federated learning and edge computing for recommendation systems within cloud computing networks. In Proceedings of the Third International Symposium on Computer Applications and Information Systems (ISCAIS 2024) (Vol. 13210, pp. 279-287). SPIE.

- Zhao, F., Li, H., Niu, K., Shi, J., & Song, R. (2024). Application of Deep Learning-Based Intrusion Detection System (IDS) in Network Anomaly Traffic Detection. Preprints, 2024070595. [CrossRef]

- Yu, K., Bao, Q., Xu, H., Cao, G., & Xia, S. (2024). An Extreme Learning Machine Stock Price Prediction Algorithm Based on the Optimisation of the Crown Porcupine Optimisation Algorithm with an Adaptive Bandwidth Kernel Function Density Estimation Algorithm.

- Li, A., Zhuang, S., Yang, T., Lu, W., & Xu, J. (2024). Optimization of logistics cargo tracking and transportation efficiency based on data science deep learning models. Applied and Computational Engineering, 69, 71-77. [CrossRef]

- Xu, J., Yang, T., Zhuang, S., Li, H., & Lu, W. (2024). AI-based financial transaction monitoring and fraud prevention with behaviour prediction. Applied and Computational Engineering, 77, 218-224. [CrossRef]

- Ling, Z., Xin, Q., Lin, Y., Su, G., & Shui, Z. (2024). Optimization of autonomous driving image detection based on RFAConv and triplet attention. Applied and Computational Engineering, 77, 210-217. [CrossRef]

- He, Z., Shen, X., Zhou, Y., & Wang, Y. (2024, January). Application of K-means clustering based on artificial intelligence in gene statistics of biological information engineering. In Proceedings of the 2024 4th International Conference on Bioinformatics and Intelligent Computing (pp. 468-473).

- Gong, Y., Zhu, M., Huo, S., Xiang, Y., & Yu, H. (2024, March). Utilizing Deep Learning for Enhancing Network Resilience in Finance. In 2024 7th International Conference on Advanced Algorithms and Control Engineering (ICAACE) (pp. 987-991). IEEE.

- Xin, Q., Xu, Z., Guo, L., Zhao, F., & Wu, B. (2024). IoT traffic classification and anomaly detection method based on deep autoencoders. Applied and Computational Engineering, 69, 64-70. [CrossRef]

- Yang, T., Li, A., Xu, J., Su, G., & Wang, J. (2024). Deep learning model-driven financial risk prediction and analysis. Applied and Computational Engineering, 77, 196-202. [CrossRef]

- Zhou, Y., Zhan, T., Wu, Y., Song, B., & Shi, C. (2024). RNA Secondary Structure Prediction Using Transformer-Based Deep Learning Models. arXiv preprint arXiv:2405.06655. [CrossRef]

- Liu, B., Cai, G., Ling, Z., Qian, J., & Zhang, Q. (2024). Precise Positioning and Prediction System for Autonomous Driving Based on Generative Artificial Intelligence. Applied and Computational Engineering, 64, 42-49. [CrossRef]

- Cui, Z., Lin, L., Zong, Y., Chen, Y., & Wang, S. (2024). Precision Gene Editing Using Deep Learning: A Case Study of the CRISPR-Cas9 Editor. Applied and Computational Engineering, 64, 134-141. [CrossRef]

- Zhang, X. (2024). Machine learning insights into digital payment behaviors and fraud prediction. Applied and Computational Engineering, 67, 61-67. [CrossRef]

- Zhang, X. (2024). Analyzing Financial Market Trends in Cryptocurrency and Stock Prices Using CNN-LSTM Models.

- Xu, X., Xu, Z., Ling, Z., Jin, Z., & Du, S. (2024). Emerging Synergies Between Large Language Models and Machine Learning in Ecommerce Recommendations. arXiv preprint arXiv:2403.02760.

- Ping, G., Wang, S. X., Zhao, F., et al. (2024). Blockchain Based Reverse Logistics Data Tracking: An Innovative Approach to Enhance E-Waste Recycling Efficiency.

- Lu, W., Ni, C., Wang, H., Wu, J., & Zhang, C. (2024). Machine Learning-Based Automatic Fault Diagnosis Method for Operating Systems.

- Zhang, Y., Xie, H., Zhuang, S., & Zhan, X. (2024). Image Processing and Optimization Using Deep Learning-Based Generative Adversarial Networks (GANs). Journal of Artificial Intelligence General science (JAIGS) ISSN: 3006-4023, 5(1), 50-62. [CrossRef]

- Lei, H., Wang, B., Shui, Z., Yang, P., & Liang, P. (2024). Automated Lane Change Behavior Prediction and Environmental Perception Based on SLAM Technology. arXiv preprint arXiv:2404.04492. [CrossRef]

- Wang, B., He, Y., Shui, Z., Xin, Q., & Lei, H. (2024). Predictive Optimization of DDoS Attack Mitigation in Distributed Systems using Machine Learning. Applied and Computational Engineering, 64, 95-100. [CrossRef]

- Xu, Y., Liu, Y., Xu, H., & Tan, H. (2024). AI-Driven UX/UI Design: Empirical Research and Applications in FinTech. International Journal of Innovative Research in Computer Science & Technology, 12(4), 99-109.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).