1. Introduction

Artificial intelligence (AI) has become increasingly popular recently, and if used wisely and effectively, it has the potential to surpass our most optimistic expectations in a variety of practical applications. AI is seen as a facilitator in achieving the United Nations’ Sustainable Development Goals (SDGs) by automating economic sectors [

1,

2]. A major challenge in AI development is the lack of explainability in many powerful techniques, especially those emerging recently. This includes large language models (LLMs) based on transformer models, ensemble methods, and Deep Neural Networks (DNNs) [

3,

4]. Nevertheless, XAI is an emerging field that focuses on introducing transparency and interpretability to complex DNNs or Transformer-based LLMs. These models are often referred to as black-box models due to their inability to provide insight into their decision-making processes or to offer explanations for their predictions and biases [

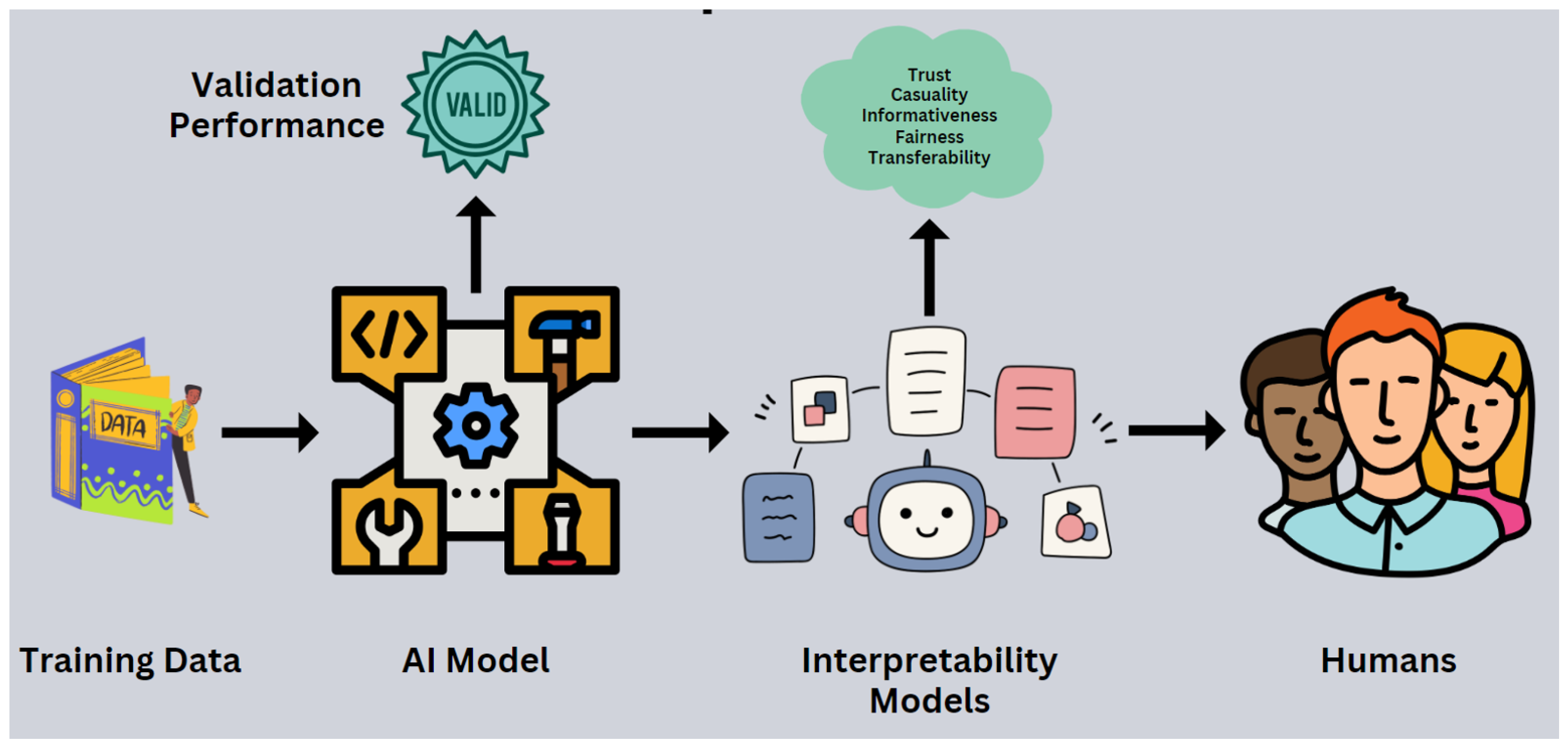

5]. As illustrated in

Figure 1, XAI refers to a collection of processes and methods that empower human users to understand and trust the outputs generated by machine learning algorithms [

5,

6]. This transparency is essential to ensure transparent decision-making in AI applications and align with the SDGs [

7,

8]. XAI has demonstrated its value in medical imaging research [

9], human-computer interactions, stock price prediction [

10], and NLP tasks, providing insights into machine learning models’ application processes and human-centered approaches [

6,

11,

12]. Despite these advancements, a significant gap exists in integrating human factors into AI-generated explanations, making them more understandable and performance-enhancing in NLP for African languages. XAI enables users to develop trust in NLP applications like sentiment analysis, creating a positive feedback loop that continually improves the NLP model’s performance.

Sentiment analysis is a crucial task in NLP that involves determining the emotional tone or attitude expressed in a piece of textual content [13]. It holds immense importance in numerous domains, such as marketing, customer service, and public opinion analysis on social challenges [14]. By automatically classifying text as positive, negative, or neutral, sentiment analysis provides valuable insights into customer feedback and helps businesses make informed decisions [13, 14]. Most recently, sentiment analysis research has extended its focus from high-resourced languages to low-resourced languages, such as the more than 2000 African languages [15]. Many researchers have concentrated on creating datasets and Transformer-based NLP models to tackle the difficulties in African languages [16, 17, 18, 19]. However, the interpretability and explainability of these models have not been explored. These languages are classified as low-resourced since they often lack sufficient labelled data and resources, thus presenting unique challenges for existing sentiment analysis models. Moreover, due to the increased use of social media, particularly Twitter, mixing multiple languages has become common practice in multilingual communities, which presents further challenges for current models [19]. To address these issues, researchers have proposed various methods, such as transfer learning and multilingual and cross-lingual approaches [14], utilizing machine learning and DNN approaches [20]. Lately, we have witnessed the development of sentiment datasets for low-resource languages, together with multilingual pre-trained language models (PLMs). These models are fine-tuned for specific NLP tasks, contributing to the improvement of NLP for these less privileged languages.

Established PLMs like BERT [21], RoBERTa [22], and XLM-R [23] have achieved impressive results (including state-of-the-art performance in some cases) on various NLP tasks, including sentiment analysis. Despite these PLMs being pre-trained on more than 100 languages, many African languages are still not well represented in the model pre-training process. Inspired by the success of mainstream PLMs, researchers have developed similar models specifically for African languages. Examples include AfriBERTa [24], Afro-XLMR [17], and AfroLM [18]. These Afro-centric PLMs are trained on massive datasets of African text data and can handle multiple African languages, promoting NLP advancements within the continent. AfriBERTa, a pioneering African language model for transfer learning and fine-tuning in various NLP tasks, does not currently include South African languages [24]. Fine-tuning nevertheless allows us to adapt the model’s knowledge to our target languages and improve its performance for sentiment analysis. Furthermore, a recent development is SERENGETI [16], a massively multilingual language model designed to cover a remarkable 517 African languages. This model holds promise for our sentiment analysis tasks, and we will investigate its suitability for our specific language set. Unlike mainstream PLMs, which often struggle with intricate tonal systems, complex morphology, and code-switching in African languages, Africa-centric models demonstrate promise for accurate sentiment analysis. However, a significant gap exists in understanding the internal workings of these models, specifically how they represent and utilise features to classify text into sentiment categories. This lack of transparency hinders our ability to trust and interpret their decisions.

Fortunately, XAI methods offer a solution [25]. Using XAI techniques, we can better understand how multilingual PLMs work and how they arrive at sentiment predictions. This improved understanding fosters trust and allows for targeted improvements in future model development for African languages [12]. To achieve this understanding, we can apply XAI techniques like LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (Shapley Additive exPlanations), including their adaptation for transformer-based models. These techniques provide valuable insight into how Afro-centric PLMs make sentiment predictions. For instance, LIME can highlight specific words or phrases that significantly influence the model’s sentiment classification. Similarly, SHAP can explain the contribution of each feature (word, n-gram, etc.) to the final sentiment score, allowing us to understand the reasoning behind the model’s decisions [3]. We believe that this is the first investigation into XAI for sentiment analysis using Afro-centric PLMs adapted specifically for low-resource South African languages. Additionally, by incorporating XAI techniques, we aim to unlock a deeper understanding of these models’ decision-making for sentiment classification in this under-represented language group.

This study introduces an approach to sentiment analysis that combines Africa-centric PLMs with XAI techniques. We explore the applicability of LIME and SHAP, specifically focusing on their ability to generate explanations for transformer models like Afro-centric PLMs. To strengthen our multilingual sentiment analysis models for five under-resourced African languages: Sepedi, Setswana, Sesotho, isiXhosa, and isiZulu, we strategically employ the SAfriSenti corpus. This corpus is crucial for fine-tuning our models and evaluating their performance on sentiment analysis tasks. These languages are widely spoken in South Africa and extend to neighboring countries such as Botswana, Lesotho, Eswatini (formerly Swaziland), Mozambique, and Zimbabwe.

The main contributions of our study are summarised as follows:

We propose a novel hybrid approach that integrates Africa-centric multilingual PLMs with XAI techniques. This integration allows us to apply the sentiment analysis capabilities of Afro-centric PLMs while simultaneously incorporating XAI methods to explain their decision-making processes for improved transparency and trust.

Our approach utilises fine-tuned benchmark Africa-centric PLMs specifically designed for African languages. This choice capitalises on their understanding of linguistic nuances in these languages, potentially leading to superior sentiment analysis performance compared to mainstream PLMs.

By incorporating attention mechanisms and visualization techniques, we enhance the transparency of the Africa-centric sentiment analysis model. This allows users to understand which parts of the input text the model focuses on when making sentiment predictions, fostering trust in its decision-making process.

We demonstrate that incorporating LIME and SHAP techniques into the sentiment classifier’s output enhances the model’s interpretability and explainability.

We also show that by leveraging XAI strategies, the study ensures that the model’s sentiment predictions are accurately interpretable and understandable. Furthermore, the feedback survey shows that many of the participants are in agreement with the results of the models and XAI explanations.

In the next section, we present the related work and the latest approaches of other researchers to XAI methods for sentiment analysis, with a focus on explainability in transformer-based PLMs. Section 3 presents our sentiment datasets. In Section 4, we describe our XAI techniques for sentiment analysis through the adaptation of Afro-centric PLMs. Section 5 describes the experimental setup for our study and presents the results and discussion. We conclude our work and suggest further steps in Section 6.

5. Experimental Results and Explanations

In this section, we present the experiments and results of sentiment analysis systems. Furthermore, we explain the attention mechanisms and visualization outcomes using XAI techniques such as LIME and TransSHAP.

5.1. Experimental Setup

In this study, we conducted training and fine-tuning procedures on four PLMs for sentiment analysis on a set of five sentiment datasets. For each language, the data are split into 80% for training and 20% for testing. We perform model fine-tuning by taking the final hidden vector of the first special token as the aggregate representation of the input sentence and then passing it to the softmax classification layer to get the predictions. Our PLMs use hyper-parameters such as the AdamW optimizer with an initial learning rate, 10 epochs, and a batch size of 16. All training experiments were performed using the HuggingFace Transformers library. We used paid Google Colab Pro services to run all of our experiments.
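The classification step and the data split described above can be illustrated with a minimal, library-free sketch. It is not the authors' training code: the hidden vector, the weights, the three-class head, the function names, and the random seed are all hypothetical, and the real experiments relied on the HuggingFace Transformers library.

```python
import math
import random


def train_test_split(examples, train_fraction=0.8, seed=0):
    """Shuffle a copy of the examples and split it into train and test parts."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_first_token(first_token_vector, weights, biases):
    """Apply a linear layer and a softmax to the first special token's vector."""
    logits = [
        sum(w * h for w, h in zip(row, first_token_vector)) + b
        for row, b in zip(weights, biases)
    ]
    return softmax(logits)


# Hypothetical 4-dimensional hidden vector and a hypothetical 3-class head.
hidden = [0.2, -0.1, 0.4, 0.3]
W = [[0.5, 0.1, -0.2, 0.0], [-0.3, 0.2, 0.1, 0.4], [0.0, -0.1, 0.3, -0.2]]
b = [0.0, 0.1, -0.1]

probs = classify_first_token(hidden, W, b)   # class probabilities summing to 1
train, test = train_test_split(range(100))   # 80 training and 20 test examples
```

In a fine-tuned PLM the hidden vector comes from the last transformer layer and the head weights are learned jointly with the encoder; the sketch only shows the shape of the final computation.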

5.2. Performance Results

Table 2 demonstrates that the performance of the models varies significantly across different languages. The choice of language model has a significant impact on sentiment analysis results for different African languages. We also report on the F1-score of our models. The F1-score is a metric used to evaluate the performance of machine learning models, particularly in classification tasks [41]. It combines two essential aspects of model performance, precision and recall, and summarizes a model’s accuracy in a way that balances the trade-off between them. The F1-score can be calculated using the following formula:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}, \qquad F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

True Positives (TP): The number of tweets correctly labelled as having the positive sentiment.

True Negatives (TN): The number of tweets correctly labelled as not having the positive sentiment.

False Positives (FP): The number of tweets incorrectly labelled as having the positive sentiment.

False Negatives (FN): The number of tweets incorrectly labelled as not having the positive sentiment.

Here, precision is the ability of a model to avoid labeling negative instances as positive, and recall is the ability of a model to find all positive instances.
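As a small worked example of the F1-score described above, the sketch below computes precision, recall, and F1 from confusion counts. The function name and the counts are hypothetical and are not taken from the paper's results.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts for the positive class.
precision, recall, f1 = precision_recall_f1(tp=80, fp=20, fn=10)
print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```

True negatives do not enter the computation, which is why F1 is often preferred over plain accuracy when the classes are imbalanced.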

Afro-XLMR performs extremely well on isiZulu and isiXhosa, as well as on Sepedi, Setswana, and Sesotho, which share linguistic similarities and characteristics, demonstrating its adaptability to multiple African languages. This is most likely due to fine-tuning on language-specific (in-domain) data and linguistic features, and to Afro-XLMR having been initially pre-trained on some of these languages (Sesotho, isiXhosa, and isiZulu). The mBERT model also performs better on isiZulu and isiXhosa, which share linguistic similarities and characteristics. The pre-training phase of the mBERT model involved these two languages, which contributed to the notable performance improvement of over 80%. The larger quantity and higher quality of training data for isiXhosa and isiZulu also affect performance in these languages. Further research and model fine-tuning may be necessary to improve sentiment analysis performance in some languages, especially those with lower sentiment scores, such as Setswana and Sesotho. We also observe that the Afro-centric PLMs generally perform significantly better than the mBERT and XLM-R models.

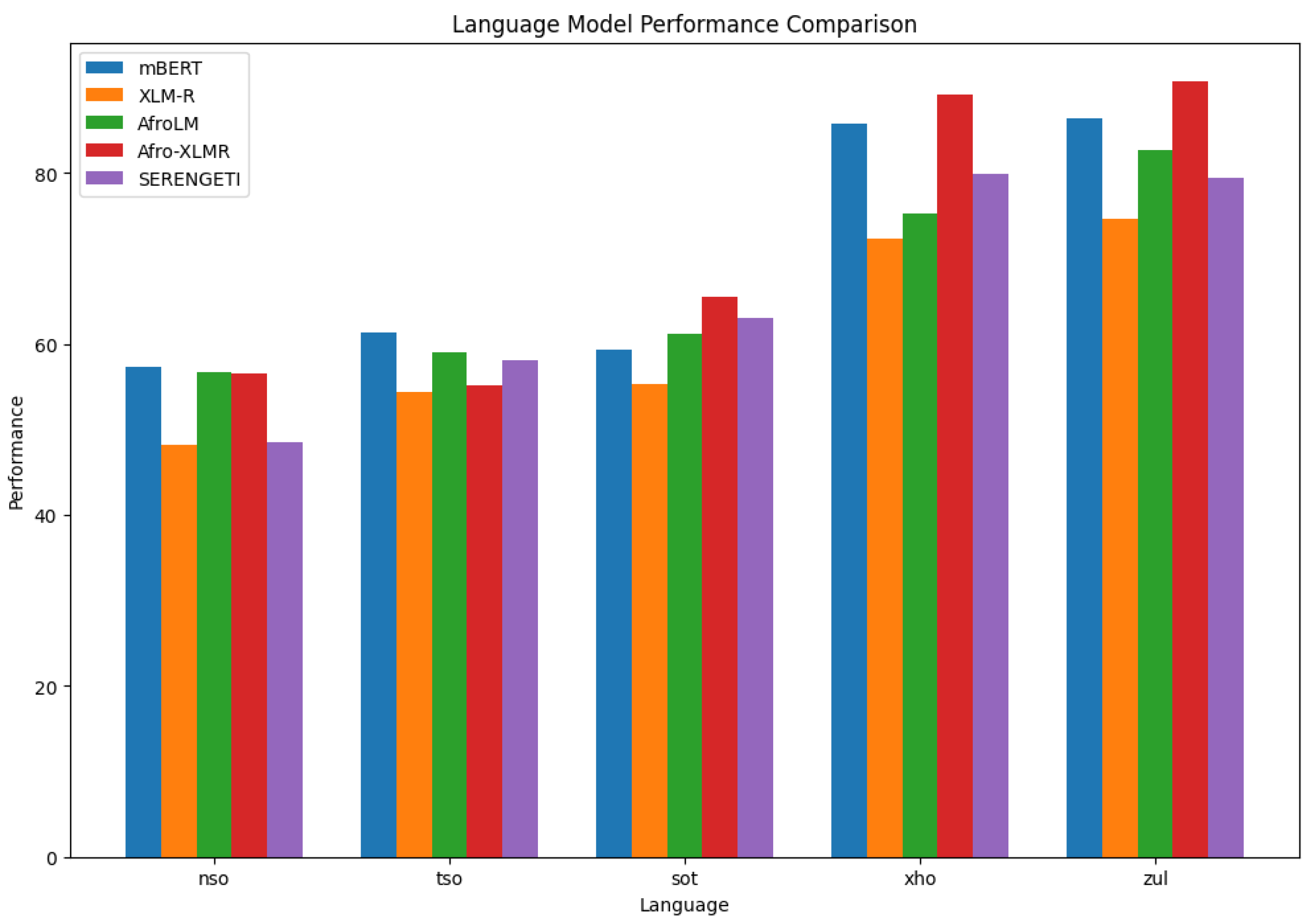

Figure 4 compares the performance of five different language models (mBERT, XLM-R, AfroLM, Afro-XLMR, and SERENGETI) in five different languages (nso, tso, sot, xho, and zul). Performance is measured using the F1 score. Afro-XLMR generally performs best across languages, followed closely by XLM-R. mBERT and SERENGETI show comparable performance, while AfroLM tends to lag behind the others. In Sepedi, XLM-R performs the best, followed closely by Afro-XLMR and mBERT. In Setswana, Afro-XLMR performs the best, with XLM-R and mBERT not far behind. In Sesotho, Afro-XLMR again takes the lead, followed by XLM-R and SERENGETI. In isiXhosa, Afro-XLMR and XLM-R are the top performers, with SERENGETI and AfroLM close behind. In isiZulu, AfroLM shows its best performance but still falls short of Afro-XLMR; XLM-R and SERENGETI also perform well. The results suggest that models specifically trained on African languages, such as Afro-XLMR, tend to perform better than more general models such as mBERT or XLM-R. This highlights the importance of developing language models tailored to specific linguistic contexts, especially for underrepresented languages. Our results are comparable to those of previous work [18, 36]. The relatively strong performance of SERENGETI, AfroLM, and Afro-XLMR across different languages suggests that they might be good choices for general-purpose language processing tasks in South Africa.

5.3. Attention Explanations

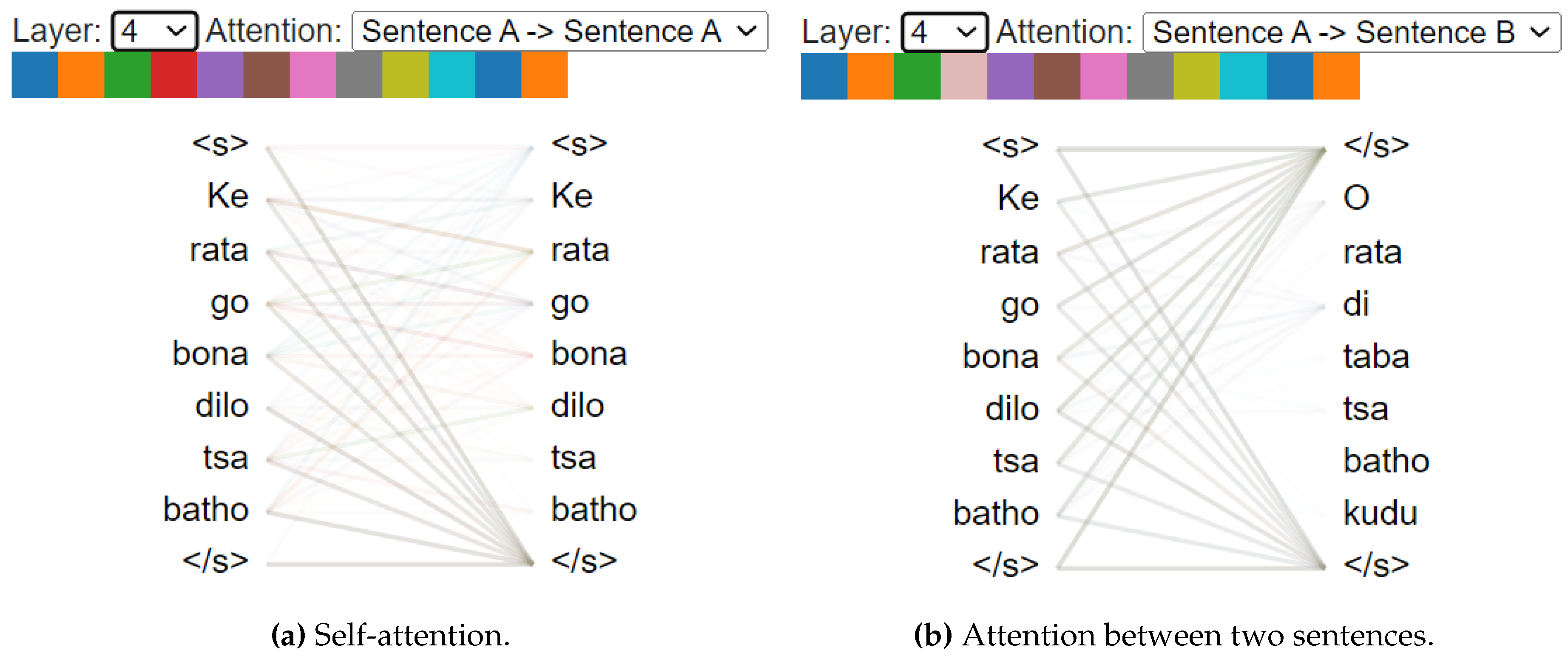

Attention allows the model to weigh the importance of different words in a sentence when understanding the meaning of a particular word. It is like the model focusing its "attention" on certain parts of the sentence. Let us consider two sentences: sentence A, "Ke rata go bona dilo tsa batho" /I like to look at other people’s belongings/, and sentence B, "O rata di taba tsa batho kudu" /You like other people’s business/affairs a lot/.

Figure 5a demonstrates self-attention, where a sentence attends to itself. This helps the model understand the internal relationships within the sentence, because each word assesses its relationship with every other word. The words of sentence A are listed vertically on both sides. Each line connects a word on the left with a word on the right. The opacity (thickness) of each line indicates the strength of the attention relationship between the connected words. The most opaque lines reveal the strongest attention relationships within the sentence. In this visualization, there is a high degree of self-attention. Each word seems to focus heavily on itself, indicating a strong sense of individual importance.

There are also notable connections between words like "bona" (see) and "dilo" (things), suggesting that the model recognizes the semantic relationship between these words. The faintest lines represent the weakest attention relationships. These words may be considered less relevant to each other in the context of the sentence. The strong pattern of self-attention indicates that the model has learned to emphasize individual words, possibly because of the importance of each word in conveying meaning in this specific sentence. The connections between words like "bona" and "dilo" show the model’s ability to capture semantic relationships within the sentence, contributing to a richer understanding of the text.

Figure 5b shows the visualization of the attention mechanism in layer 4 of a transformer model, specifically illustrating the attention between two sentences (Sentence A and Sentence B). The words of both sentences are listed, A on the left and B on the right. Each line connects a word in A to one in B. Thicker lines mean stronger attention between the connected words. In this case, all lines seem equally faint. This suggests a uniform attention distribution, where each word in sentence A is paying roughly equal attention to all words in sentence B. This could indicate that the model is struggling to identify clear relationships between the two sentences in this layer.
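The attention weights visualized in these figures can be illustrated with a minimal sketch of scaled dot-product attention. The two-dimensional token vectors below are hypothetical, and the learned query and key projections of a real transformer are omitted, so this only shows how weights are derived and why identical keys yield the uniform pattern described for Figure 5b.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(queries, keys):
    """Scaled dot-product attention: softmax(q . k / sqrt(d)) for each query."""
    d = len(keys[0])
    weights = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights.append(softmax(scores))
    return weights


# Hypothetical 2-d vectors for three tokens of sentence A.
tokens = ["bona", "dilo", "tsa"]
vectors = [[2.0, 0.0], [1.5, 0.5], [0.0, 1.0]]

# Self-attention: every token attends to every token of the same sentence.
self_attention = attention_weights(vectors, vectors)

# If all keys look alike to the model, each query spreads its attention evenly.
uniform_attention = attention_weights(vectors, [[1.0, 1.0]] * 3)

for token, row in zip(tokens, self_attention):
    print(token, [round(w, 3) for w in row])
```

Each row is a probability distribution over the attended tokens, which is what the line opacity in the visualizations encodes.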

5.4. Sentiment Decision Explanations

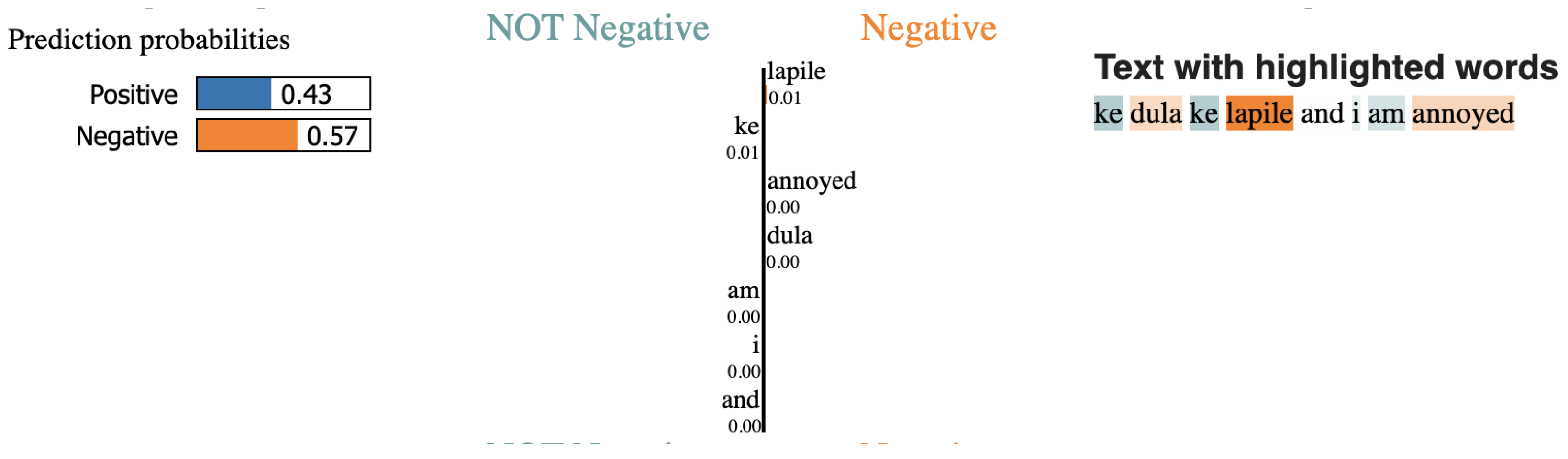

We generated all XAI visualizations using the Afro-XLMR model due to its superior performance. The visualization techniques employed in the explanation methods LIME and SHAP are primarily designed for interpreting tabular data [2, 6]. We can use the LIME method to create visualizations such as the one in Figure 6, which helps us to understand which words in the tweets have the greatest influence on the final prediction of the model. The model has analyzed the given text and determined that it has a 57% probability of being negative and a 43% probability of being positive. We can observe from the example "Ke dula ke lapile and I am annoyed" /I am always tired and I am annoyed/ that the model performs successfully and correctly identifies the tweet as negative.

The model is paying attention to words that are negative in this context. The words receiving the most attention form a plausible explanation because the model considers relevant words such as "lapile," "tired," and "annoyed" (orange), which indicate the negative sentiment of this tweet. Furthermore, the model also indicates words that are not negative, like "ke" and "I am" (blue). LIME focuses on the words that most influenced the model’s decision. In this case, the words "lapile" (tired) and "ke" (I) are highlighted as the most influential for a negative prediction. The numbers next to each word (e.g., 0.01 for "lapile") represent the weight or importance assigned to that word by the simpler, local model in determining the sentiment. In this case, the model seems to be associating the words "lapile" and "ke" with negative sentiment, even though "ke" might not be inherently negative in all contexts.
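The idea of measuring how much each word influences a prediction can be sketched with a simple occlusion test: remove one word at a time and record how the predicted probability changes. This is a simpler stand-in rather than LIME itself, which samples many perturbed texts and fits a weighted linear surrogate model. The toy classifier and its word scores are hypothetical and do not reproduce the Afro-XLMR model or the weights shown in the figure.

```python
import math

# Hypothetical word scores standing in for a fine-tuned sentiment classifier.
TOY_NEGATIVE_SCORES = {"lapile": 1.2, "annoyed": 1.5, "tired": 1.0}


def toy_negative_probability(words):
    """Toy stand-in model: logistic function of the summed word scores."""
    score = sum(TOY_NEGATIVE_SCORES.get(w.lower(), 0.0) for w in words)
    return 1.0 / (1.0 + math.exp(-score))


def occlusion_importance(text, predict):
    """Drop each word in turn and record the drop in predicted probability."""
    words = text.split()
    baseline = predict(words)
    importance = []
    for i, word in enumerate(words):
        reduced = words[:i] + words[i + 1:]
        importance.append((word, baseline - predict(reduced)))
    return baseline, importance


baseline, importance = occlusion_importance(
    "Ke dula ke lapile and I am annoyed", toy_negative_probability
)
# Words whose removal lowers the negative probability the most come first.
ranked = sorted(importance, key=lambda item: item[1], reverse=True)
for word, delta in ranked:
    print(f"{word}: {delta:+.3f}")
```

A positive value means the word supports the negative class in this toy setup; words the toy model does not know receive zero importance.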

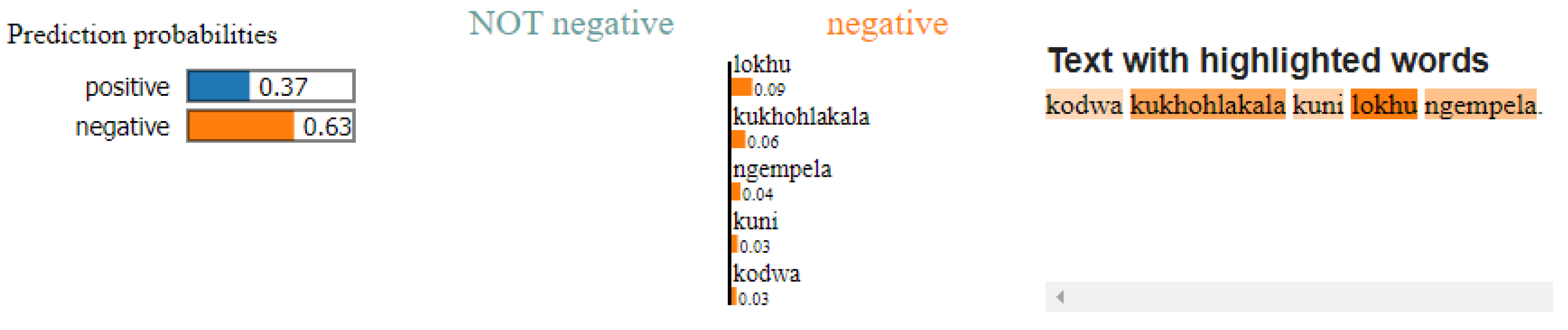

The LIME results in Figue

Figure 7 show the prediction probabilities for the text "kodwa kukhulakala kuni lokhu ngempela" /

but this really makes sense to you/ as negative (0.63) and positive (0.37). The words contributing the most to the negative prediction are "kukhohlakala" and "ngempela", while the words contributing the most to the positive prediction are "lokhu" and "kodwa." The overall prediction is negative, suggesting that the model interprets the text as expressing a negative sentiment. Moreover, the LIME results in

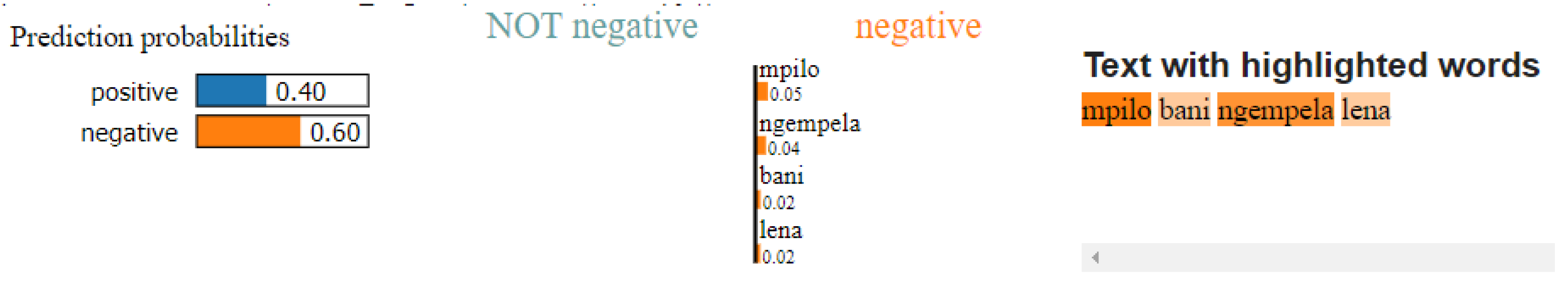

Figure 8 shows the prediction probabilities for the text "mpilo bani ngempela lena" as negative (0.60) and positive (0.40). The words contributing the most to the negative prediction are "ngempela", while "mpilo" contributes the most to the positive prediction. The words "bani" and "lena" have a lower impact on the overall prediction. Despite the contributions to the positive class, the overall prediction is negative.

The model incorrectly classified the tweet in

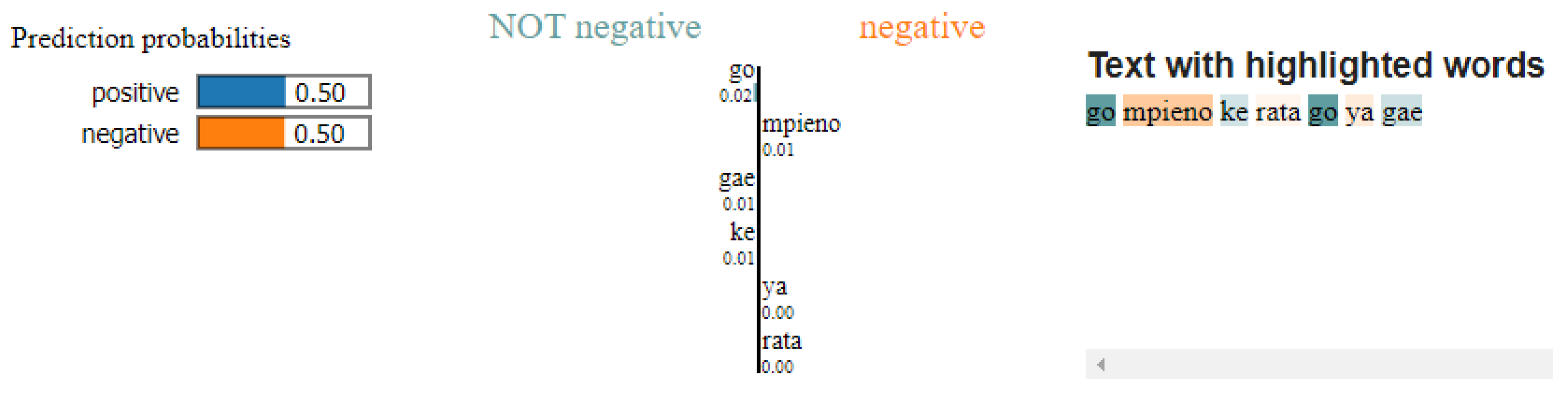

Figure 9 as "negative," despite the original tweet expressing a positive sentiment about going home. This discrepancy indicates a potential error in the model’s understanding of the text. LIME highlights the words it believes contributed most to the prediction. Surprisingly, none of the words have a strong influence towards either positive or negative sentiment, as indicated by their low values close to 0. The word "rata" (like) has the highest value but it’s still only 0.01. This suggests that the model might be struggling to interpret the overall meaning of the sentence due to context or nuances it does not fully grasp. The prediction probabilities are split evenly at 0.50 for both positive and negative, reflecting the model’s uncertainty in classifying this particular tweet.

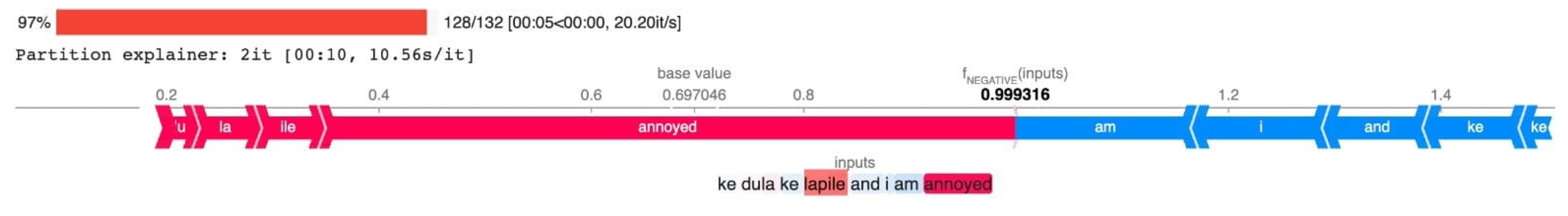

The visualisation with the SHAP method for the same sentence as in Figure 6 is illustrated in Figure 10. We can see that the features with the strongest impact on the prediction correspond to longer arrows that point in the direction of the predicted class, which is negative. This is consistent with the view that a model with higher accuracy tends to generate more reliable explanations: when the model accurately predicts the results, the explanations it provides for those predictions are more likely to be reliable and meaningful. The base value represents the average prediction of the model across the entire dataset. In this example, the base value leans slightly towards negative sentiment (0.697). The output value is the model’s prediction for the specific input text "ke dula ke lapile and i am annoyed". Here, the output value is almost 1.0, indicating a strong negative sentiment prediction. Each word in the input text is a feature. The plot shows the impact of each word on the model’s prediction, moving it away from the base value towards the output value. The color and length of the horizontal bar for each feature (word) indicate the magnitude and direction of its impact. Red bars indicate features that push the prediction towards negative sentiment, while blue bars push it towards positive. The longer the bar, the stronger the impact.
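The relationship between the base value, the per-word contributions, and the output value can be made concrete with an exact Shapley computation on a toy model. This is only a sketch under stated assumptions: the word scores, the interaction bonus, and the base value are hypothetical, and the base value is taken as the toy model's output with no words present rather than an average over a dataset as in the SHAP library.

```python
from itertools import combinations
from math import factorial

# Hypothetical toy model of a negative-sentiment score.
WORD_SCORES = {"lapile": 0.15, "annoyed": 0.2}
BASE_VALUE = 0.5  # toy prediction when no words are present


def toy_model(present_words):
    """Base value plus word scores, with a bonus when both cue words co-occur."""
    score = BASE_VALUE + sum(WORD_SCORES.get(w, 0.0) for w in present_words)
    if "lapile" in present_words and "annoyed" in present_words:
        score += 0.1
    return score


def shapley_values(words, model):
    """Exact Shapley value of each (unique) word, averaging over all coalitions."""
    n = len(words)
    values = {}
    for i, word in enumerate(words):
        others = [w for j, w in enumerate(words) if j != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_word = model(set(subset) | {word})
                without_word = model(set(subset))
                total += weight * (with_word - without_word)
        values[word] = total
    return values


words = ["ke", "lapile", "annoyed"]
phi = shapley_values(words, toy_model)
output_value = toy_model(set(words))

# Additivity: base value plus all contributions reproduces the output value.
reconstructed = BASE_VALUE + sum(phi.values())
print(phi, output_value, reconstructed)
```

The exact computation is exponential in the number of words, which is why practical tools approximate it; the additivity property shown on the last lines is what a force plot depicts as bars leading from the base value to the output value.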

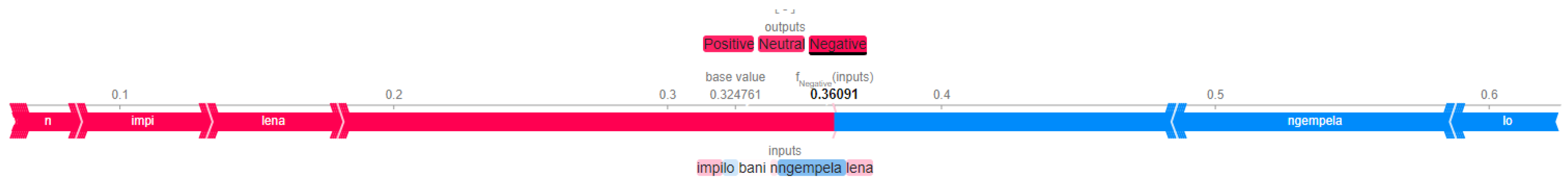

The SHAP results for the text "mpilo bani ngempela lena" in Figure 11 indicate a higher probability of negative sentiment (0.640081) compared to positive (0.36091). The words "lena" and "mpilo" contribute slightly towards the positive sentiment, while "ngempela" strongly pushes the prediction towards the negative sentiment. Although there are some positive influences, the overall sentiment leans towards negative due to the significant impact of the word "ngempela." The base value of 0.5 indicates the average prediction in the absence of any input features.

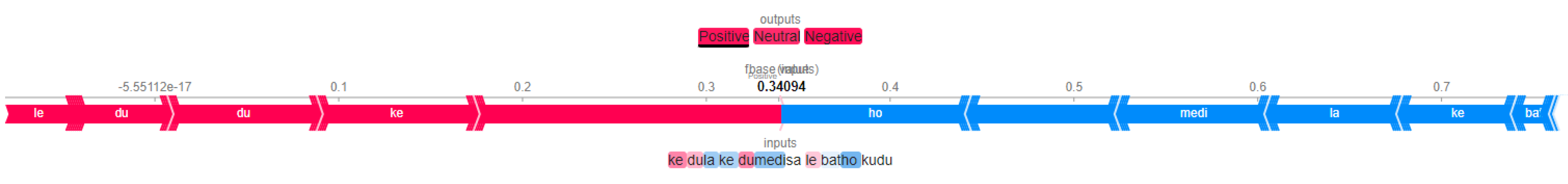

In Figure 12, the SHAP results for the Sepedi text "ke dula ke dumedisa le batho kudu" /I am always greeting people/ indicate a high probability of a positive outcome, with an output value of 0.6914. The base value of 0.3494 is the average outcome across the entire dataset. The words "batho" and "kudu" contribute the most to this positive prediction, with "batho" having the highest impact. The other words in the sentence, like "ke", "dula", "dumedisa", and "le", also have a positive impact but to a lesser extent. The words "ke", "dula" and "le" are colored red, indicating they are pushing the prediction towards a negative sentiment, but their impact is outweighed by the stronger positive influence of other words.

In conclusion, our XAI methods interpret the sentiments of the isiXhosa and isiZulu tweets more effectively than those of the Sepedi, Sesotho, and Setswana tweets, suggesting a correlation between model performance and explanation accuracy. However, challenges arise when explaining less certain predictions, especially in languages where the model struggles. In particular, our approach identifies the keywords that influence sentiment, providing valuable insights into model decisions. Additionally, the effectiveness of our XAI methods extends to code-switched tweets, showcasing their potential in diverse linguistic environments. Lastly, XAI methods utilising PLMs can be applied to any African language for which a PLM has been fine-tuned for sentiment analysis.

5.5. Human Assessment

One of the main goals in the XAI research field is to assist end-users in becoming more effective in the use of AI decision-support systems. The effectiveness of the human-AI interface can be measured by the AI’s ability to support the human in achieving the task. This is also the case in the context of NLP systems. To evaluate the XAI methods used in our sentiment analysis for low-resourced languages, we used an interactive human-centered feedback strategy. The results and explanations of two sets of participants are presented in the subsections below. The study involved experienced participants with technical expertise who often use AI models (56.7%) and users who are familiar with AI models but do not use them (38.3%). Only three (3) participants (5%) opted not to participate in the present study.

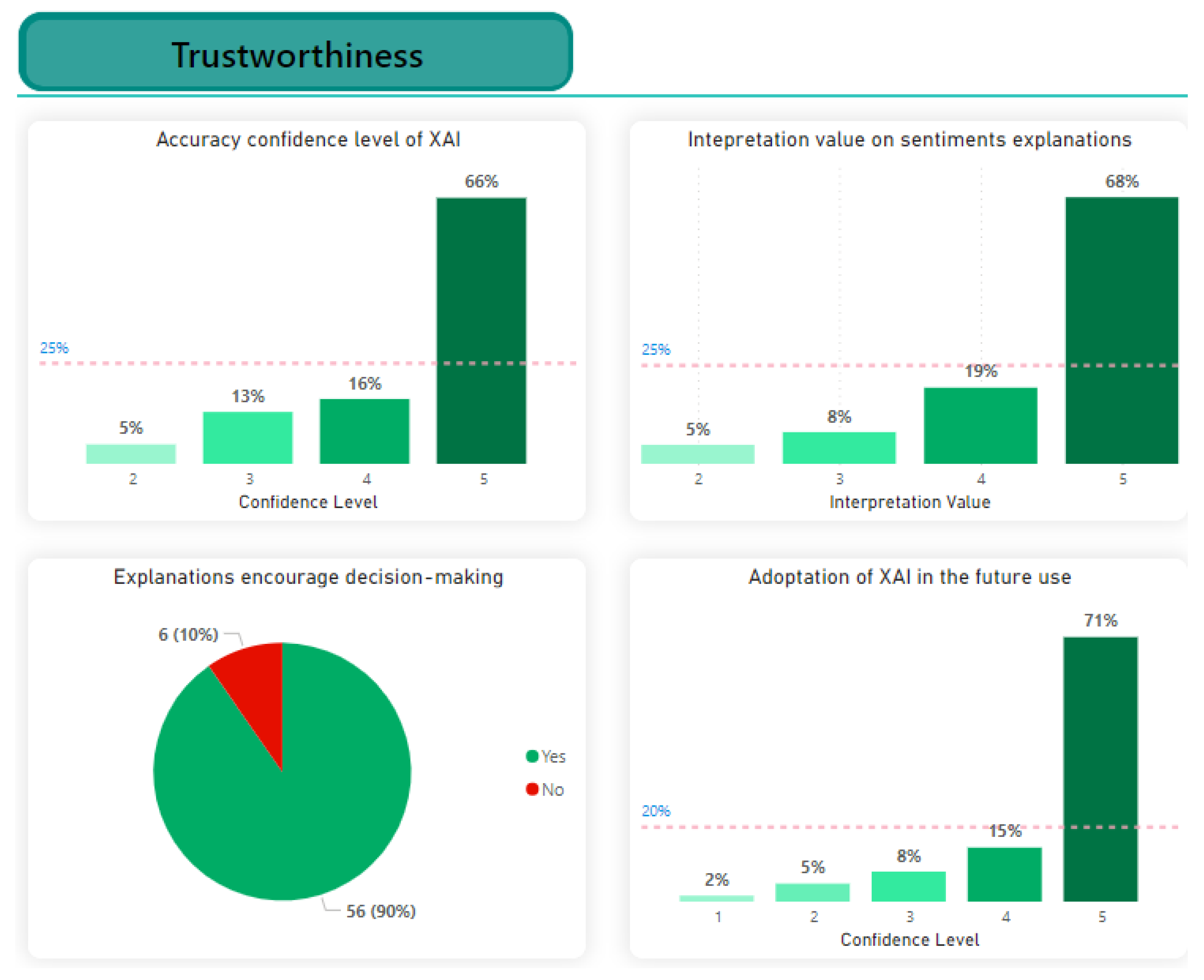

The feedback strategy uses online questionnaires, with each question rated on a scale of 1 to 5 or answered as a binary choice of [Yes, No]. Participants are allowed to use the online sentiment analysis system, which generates sentiment predictions and explanations. They do so by visualising their own examples in the sentiment analysis system before completing the online questionnaires to rate the level of interpretation and explanation of the decision. The participants are asked to evaluate trust, transparency, reliability, and interpretability. The questions used in this study were built using Google Forms (footnote 4). The results of 57 completed questionnaires are presented in Figure 13, Figure 14, Figure 15 and Figure 16. These results focused on answering questions based on the explanations generated by the LIME and SHAP methods.

5.5.1. Trustworthiness

Trust is a crucial factor in the context of XAI, especially in sentiment analysis applications, as users often want to understand and trust the decisions made by AI models. Four key questions were considered when asking participants to assess trust in XAI methods for sentiment analysis. The following questions were considered:

How confident do you feel in the accuracy of the sentiment analysis results provided by the XAI system?

How valuable do you find the explanations in helping you trust and interpret the sentiment analysis results?

Did the XAI system’s explanations positively influence any changes in your decision-making based on the sentiment analysis results?

How likely are you to use the XAI system for future sentiment analysis tasks, considering the explanations it provides?

The results that evaluated the trust factor are presented in Figure 13. The scale results indicate the distribution of responses regarding confidence in the accuracy of explanations provided by the XAI system. Each numerical value from 1 to 5 represents a different level of confidence (1 - Not confident, 2 - Slightly confident, 3 - Somewhat confident, 4 - Moderately confident and 5 - Highly confident) or likelihood (1 - Not likely, 2 - Slightly likely, 3 - Somewhat likely, 4 - Moderately likely and 5 - Highly likely).

None of the participants indicated they had no confidence in the accuracy of the explanations provided by the XAI system. This suggests that no one outright dismissed the accuracy, which can be viewed as a positive sign. A substantial majority of participants (66%) displayed high confidence in the accuracy of explanations provided by the XAI system. This reflects a generally positive outlook and a high level of trust in the system’s performance. A further 18% of participants showed moderate confidence levels. Many of the participants had an understanding of AI models but did not use them. The scale results also indicate that a significant proportion of participants (68%) feel very confident in the value of explanations for trusting and interpreting the results of the sentiment analysis. A gradual increase in confidence levels is evident across the scale, with only 5% expressing slight confidence, indicating a strong overall positive sentiment towards the explanatory value of the XAI method. The distribution suggests that a majority of participants rely on and value these explanations for establishing trust in the sentiment analysis outcomes.

Furthermore, the results indicate a high level of influence from the XAI system’s explanations on decision-making, with 90% of the participants reporting that the system’s explanations did impact their decisions. This strong positive response suggests that the ability of the XAI system to provide transparent and interpretable explanations played a significant role in influencing participants’ decision-making processes, highlighting the importance of effective XAI in enhancing trust and usability in sentiment analysis applications. The scale results indicate a clear inclination towards using the XAI system for future sentiment analysis tasks, as 71% of participants expressed a high likelihood. A substantial majority of the participants, totaling 94%, expressed a likelihood of using the XAI methods for explanations (rating 4 or 5), suggesting strong potential adoption. However, the remaining 6% (ratings 1 to 3) may require further investigation regarding the explanations of the XAI system before general acceptance. Despite a minority expressing lower inclinations (2% in "not likely"), the overall trend shows favourable acceptance and significant potential for adoption due to the perceived value of the explanations provided by the XAI system.

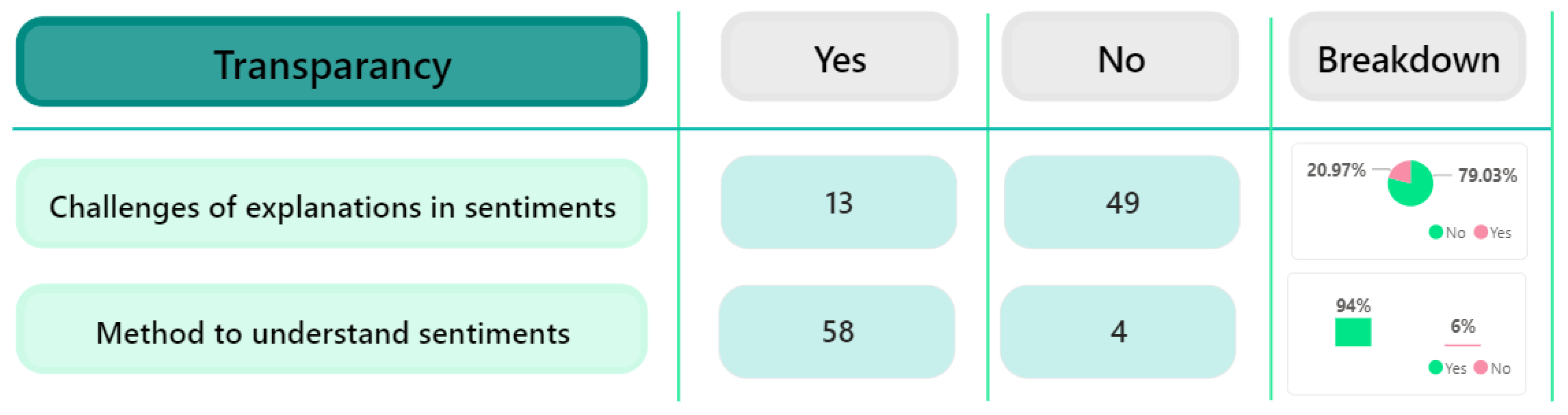

5.5.2. Transparency

Transparency promotes trust in the model. Trust is an emotional and cognitive component that determines how a system is perceived, either positively or negatively. Thus, when the decision-making process in the XAI model is thoroughly understood, the model becomes transparent. For this case, we evaluated the transparency based on two key questions:

Were there any challenges or difficulties you encountered while trying to interpret the XAI system’s explanations of sentiment?

Did the explanations provided by the XAI system help you understand why certain sentiments were identified in the text?

The results of the participants for the above-mentioned questions are presented in Figure 14.

A significant majority (79.03% of the participants) reported not facing any challenges or difficulties in interpreting the XAI system’s explanations of sentiment. This result suggests that the explanations of the system were clear and easily understood by a large percentage of users. Nonetheless, the 20.97% of users who experienced issues suggest that the system’s interpretability needs to be improved to accommodate a smaller but significant portion of users who found the explanations difficult to understand. Further enhancements to the XAI’s explanation methods could be beneficial to address the concerns raised by this minority.

Lastly, 94% of participants responded that the explanations provided by the XAI system helped them understand why certain sentiments were identified in the text, which indicates a high level of effectiveness and clarity in the system’s explanations. This significant majority suggests that the interpretability features of XAI were successful in clarifying the reasoning behind the sentiment predictions for the vast majority of participants, highlighting a strong positive impact on comprehension and trust in the AI decision-making process. However, the 6% unfavourable response calls for further examination to identify particular areas of the visualisation that need improvement, or to meet the requirements of users who may have had difficulties or considered the explanations unsatisfactory, in order to improve general transparency and user satisfaction.

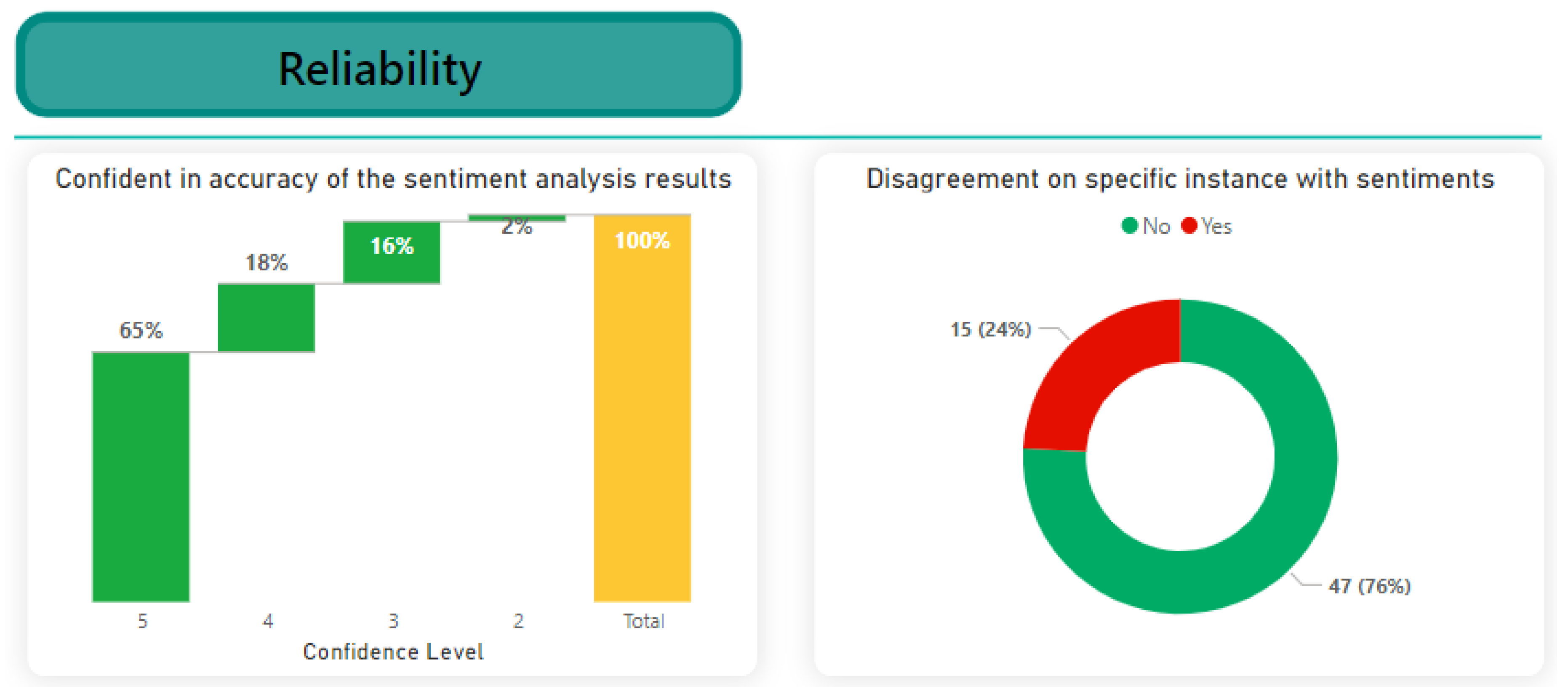

5.5.3. Reliability

This section evaluates the effectiveness and reliability of the XAI methods using two key questions:

How confident do you feel in the accuracy of the sentiment analysis results provided by the XAI system?

Were there any specific instances where you disagreed with the sentiment assigned by the XAI system, despite its explanations?

As indicated in Figure 15, the results for the reliability of the model provided by the XAI explanation methods show a range of confidence levels among participants.

Most of the participants (65%) expressed high confidence in the accuracy of the sentiment analysis. A further 18% reported a fairly high level of confidence, 16% indicated moderate confidence, and only 2% showed slight confidence. None of the participants (0%) reported having no confidence in the accuracy of the XAI system’s sentiment analysis results. The answers to the second question show that 76% of the respondents agreed with the sentiment that the XAI system assigned and with the accompanying explanations, whereas 24% disagreed with the sentiment despite the explanations provided. This suggests a substantial level of trust, although a notable portion of participants still encountered instances where their sentiment judgment differed from that of the XAI system. Further exploration of these disagreements may provide valuable insights into improving the reliability and accuracy of the XAI system for sentiment analysis in a low-resource language context.
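
The reported confidence shares (65%, 18%, 16%, 2%, and 0%) add up to 101%, which is a common side effect of rounding each share independently. A minimal Python sketch of such a tally is given below. The function name and the response counts are illustrative assumptions: the counts are not taken from the survey and were chosen only because they round to the percentages reported above.

```python
def percentage_shares(counts):
    """Convert answer counts into whole-number percentage shares."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses to summarise")
    return {answer: round(100 * n / total) for answer, n in counts.items()}


# Hypothetical counts, NOT taken from the study: chosen only because they
# round to the percentages reported in the text.
hypothetical_counts = {
    "high": 40,
    "fairly high": 11,
    "moderate": 10,
    "slight": 1,
    "none": 0,
}

shares = percentage_shares(hypothetical_counts)
for answer, share in shares.items():
    print(f"{answer}: {share}%")
print("sum of rounded shares:", sum(shares.values()))
```

With these illustrative counts, the rounded shares sum to 101 rather than 100, so a total slightly above 100% does not by itself indicate an error in the responses.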

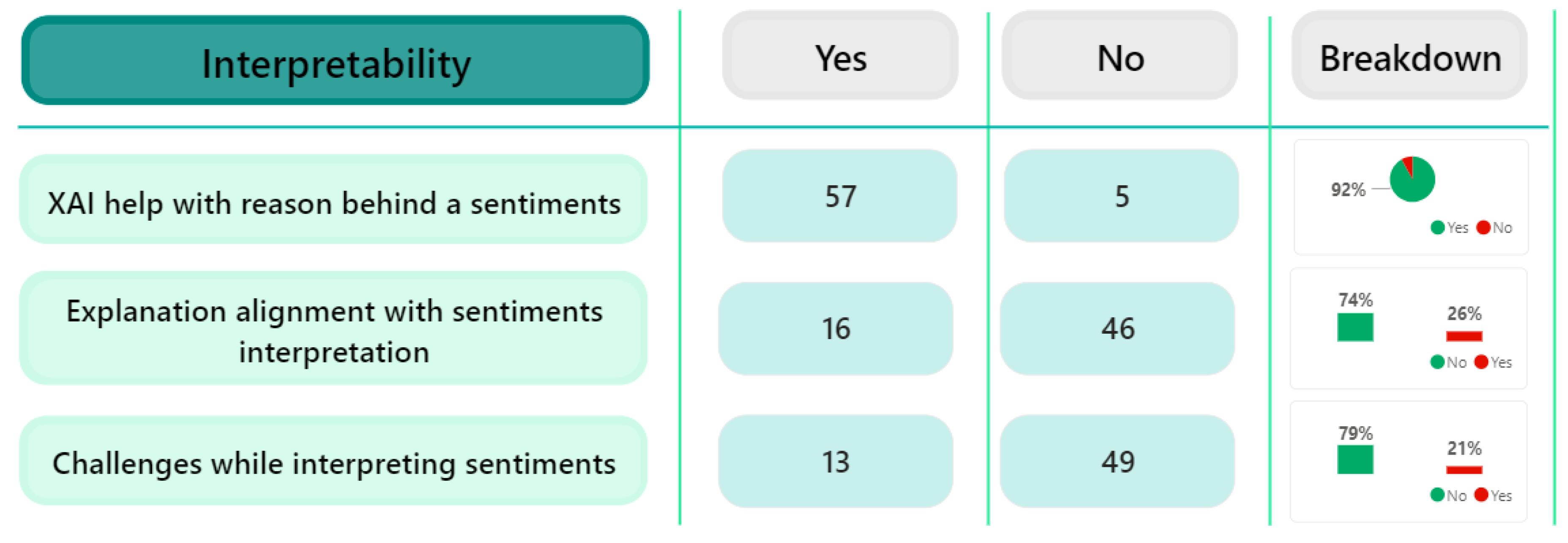

5.5.4. Interpretability

Interpretability is defined as the ability to explain to a human being the decision made by an artificial intelligence model [5]. In this case, we further evaluated interpretability in the XAI methods by asking three questions:

Did the explanations provided by the XAI system help you understand why certain sentiments were identified in the text?

Were there any instances where the XAI system’s explanation of sentiment did not align with your interpretation?

Were there any challenges or difficulties you encountered while trying to interpret the XAI system’s explanations of sentiment?

Figure 16 shows the participants’ responses to the three questions related to interpretability.

The results indicate a high level of agreement on the first question: 92% of the participants answered ‘Yes’ and only 8% answered ‘No’ when asked whether the explanations provided by the XAI system helped them understand the sentiments identified in the text. This substantial majority of positive responses suggests that the explanations significantly improved the participants’ comprehension of why specific sentiments were identified within the tweets, and it supports the idea that the explanations played a crucial role in helping users understand the results of the sentiment analysis.

The results also indicate that 74% of participants reported instances where the XAI system’s explanation of sentiment did not match their interpretation, while 26% reported no such instances. A significant majority therefore found discrepancies between their own understanding of sentiment and the explanations provided by the XAI system. Such disparities may indicate limitations in the system’s ability to align with human interpretation and raise concerns about its reliability in accurately explaining sentiments. More research is required on the nature of these discrepancies to improve the reliability of the XAI system and bridge the gap between human and AI sentiment interpretations.

Finally, the majority of participants (79%) did not encounter challenges when interpreting the explanations provided by the XAI system, whereas 21% acknowledged difficulties or obstacles in understanding them. This distribution shows that most participants found the explanations easy to comprehend, although a notable minority found certain aspects challenging, which signals a need for improvements in explanation clarity or user guidance.

6. Conclusions

In this paper, we proposed an approach that combines XAI methods and Afrocentric models for sentiment analysis. Although sentiment analysis tools and techniques are available for English, it is essential to cover low-resourced African languages and to explain how the models make decisions. We used the SAfriSenti corpus to perform model fine-tuning and sentiment classification on five low-resourced African languages. We adapted LIME and TransSHAP to suit transformer-based Afrocentric models. Although the models perform relatively well across the languages, the Afro-XLMR model outperformed all the other models in this sentiment analysis task, showing its adaptability to low-resource languages. Furthermore, we used XAI tools to visualize the attention in the texts, and then used LIME and TransSHAP to visualize the tweets and their interpretations. While the Afro-XLMR model effectively interprets words conveying the sentiment, it provides inaccurate explanations in languages with lower sentiment performance. The survey included a quantitative comparison of the explanations provided by TransSHAP and LIME, in addition to evaluating trustworthiness, reliability, transparency, and interpretability. The results of the feedback survey additionally validate that a significant majority of the participants agree with the decisions made by the XAI methods combined with the Afrocentric PLM. Based on the outcomes generated by these XAI methods, we conclude that they not only enhance the accuracy and interpretability of sentiment predictions but also promote understandability. This, in turn, cultivates trust and credibility in the decision-making process facilitated by Afro-XLMR as a sentiment analysis classifier. The use of XAI methods for sentiment explanations can be readily extended to other African languages.

In future work, we will investigate the use of other XAI methods on these pre-trained models. We also plan to address problems of the perturbation-based explanation process when dealing with textual data. Furthermore, we intend to investigate counterfactual visualizations, in which we alter various elements of the input to assess the effect on the explanations. Additionally, the explanations could be further improved by extending their features from single words to longer textual units composed of words that are grammatically and semantically related. Finally, it is important to acknowledge the limitations of this study, particularly in handling sarcastic phrases and idiomatic expressions, which may lead to incorrect sentiment results for tweets processed by MPLMs.
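
To make the perturbation issue concrete, the following Python sketch scores each token, or each contiguous span of tokens, by how much the predicted probability changes when that unit is removed. This is a simple leave-one-out occlusion scheme, not the LIME or TransSHAP procedure used in this study. The lexicon, the `positive_probability` stand-in classifier, and the English example sentence are all hypothetical and serve only as an illustration.

```python
import math

# Toy sentiment lexicon (hypothetical; English is used only for readability).
LEXICON = {"good": 2.0, "great": 3.0, "bad": -2.0, "terrible": -3.0, "not": -1.0}


def positive_probability(tokens):
    """Stand-in classifier: logistic function of the summed lexicon weights."""
    score = sum(LEXICON.get(token.lower(), 0.0) for token in tokens)
    return 1.0 / (1.0 + math.exp(-score))


def occlusion_importance(tokens, predict, span=1):
    """Score each contiguous unit of `span` tokens by the drop in the
    prediction when that unit is removed from the input."""
    base = predict(tokens)
    importances = []
    for start in range(len(tokens) - span + 1):
        perturbed = tokens[:start] + tokens[start + span:]
        unit = " ".join(tokens[start:start + span])
        importances.append((unit, base - predict(perturbed)))
    return importances


tokens = "the service was not good".split()
word_scores = occlusion_importance(tokens, positive_probability, span=1)
phrase_scores = occlusion_importance(tokens, positive_probability, span=2)

for unit, importance in word_scores + phrase_scores:
    print(f"{unit!r}: {importance:+.3f}")
```

In this toy setting, single-word units attribute the prediction to "not" and "good" separately, whereas two-word units can treat "not good" as one feature. Each perturbed input is also an ungrammatical fragment, which is one of the difficulties that perturbation-based explanations face on textual data.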

Figure 1.

A step-by-step general purpose framework for XAI systems.

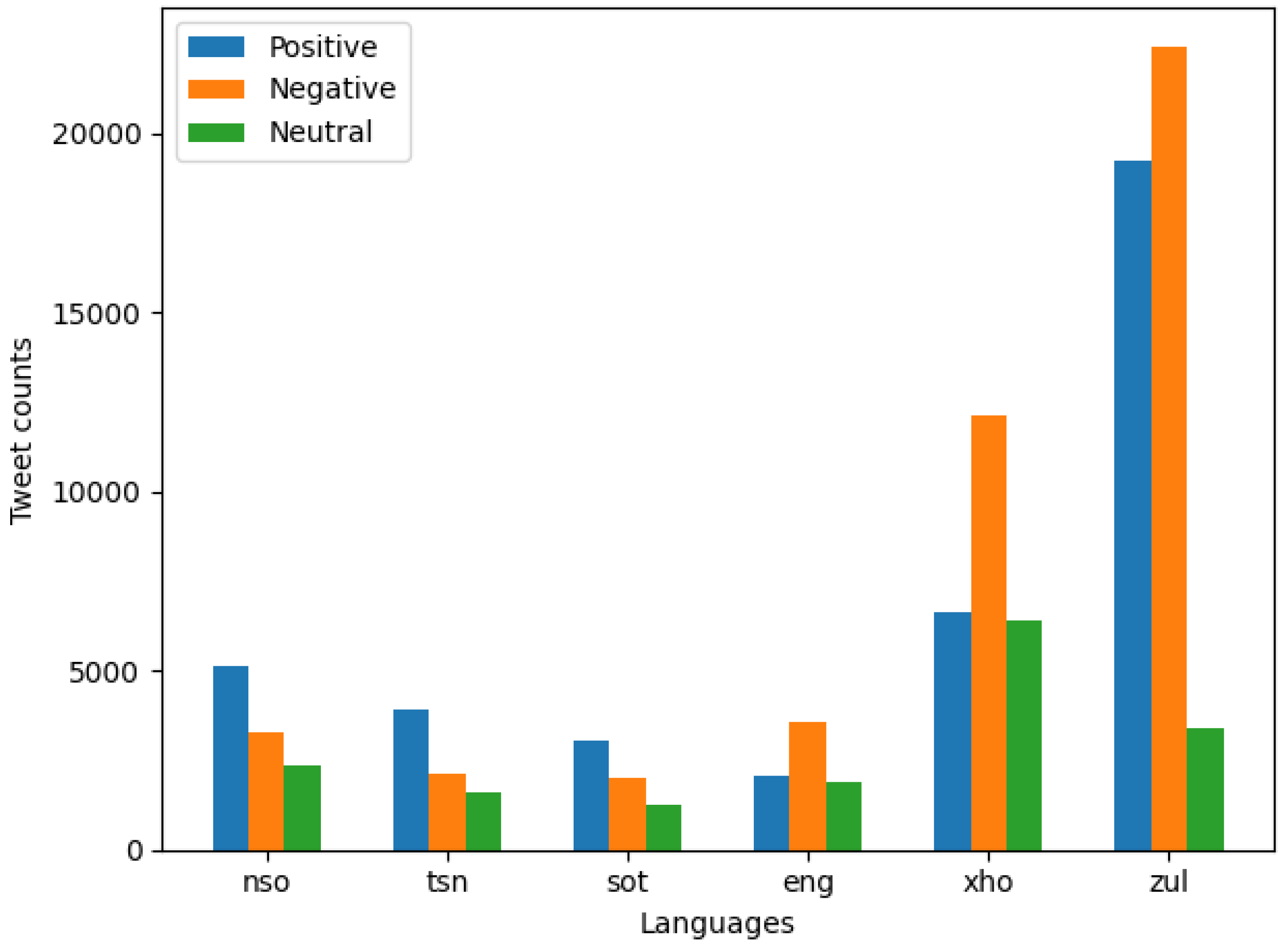

Figure 2.

Distribution of tweets across languages. A graphical comparison of the tweets across the five languages used for sentiment analysis.

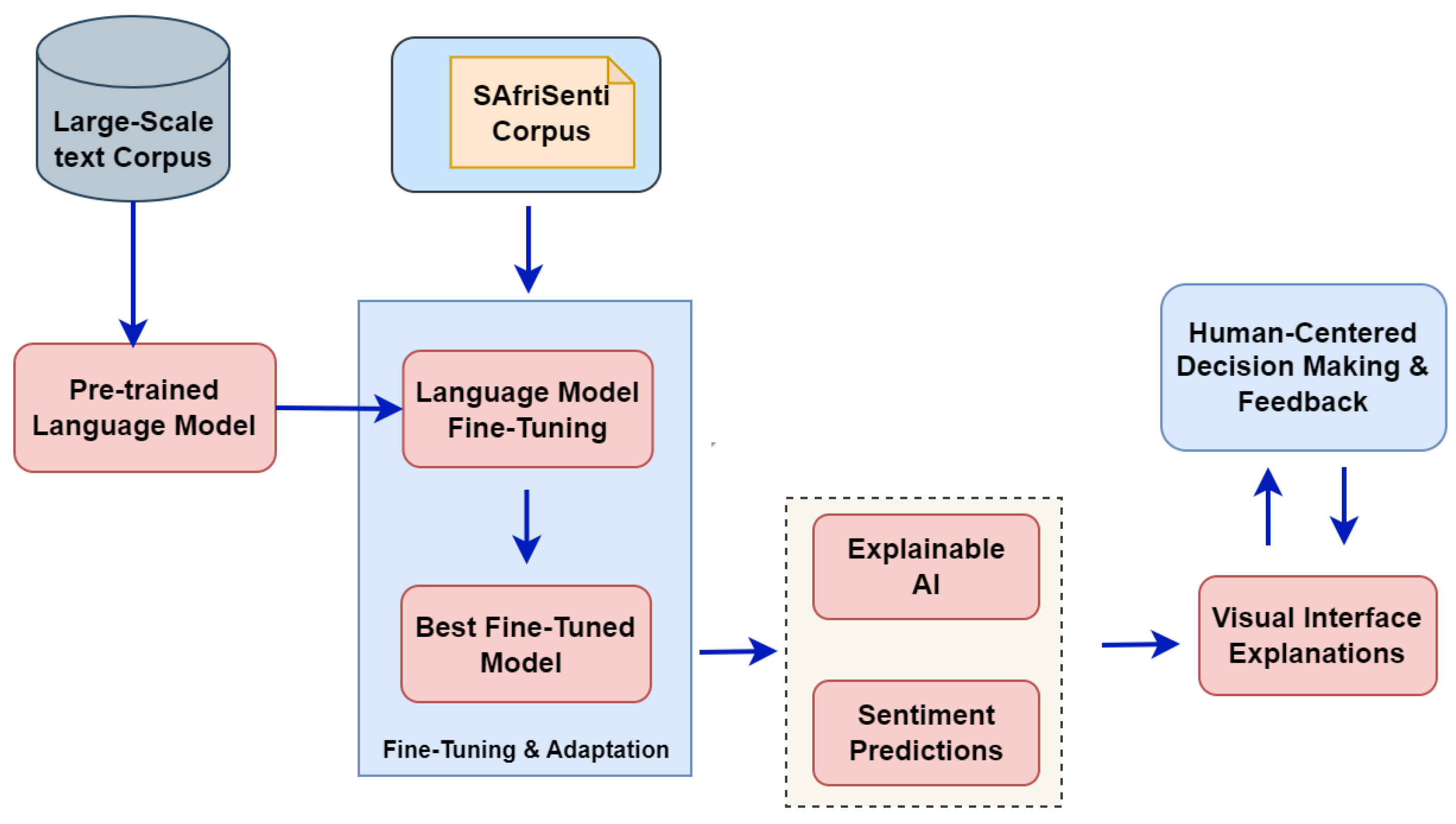

Figure 3.

Overview of Explainable AI Framework for Sentiment Analysis.

Figure 4.

Overview of Explainable AI Framework for Sentiment Analysis.

Figure 5.

Comparison of attention visualizations.

Figure 6.

The LIME visualisations of a negative tweet in Sepedi mixed with English.

Figure 7.

The LIME visualisations of a negative tweet in isiZulu language.

Figure 8.

The LIME visualisations of a negative tweet in isiZulu language.

Figure 9.

The LIME visualisations of an incorrect prediction in Setswana language.

Figure 10.

The SHAP visualisations of a tweet with a negative sentiment.

Figure 11.

The SHAP visualisations of a tweet with negative sentiment.

Figure 12.

The SHAP visualisations of a tweet with a positive sentiment.

Figure 13.

The results of the trustworthiness factor of the XAI methods.

Figure 14.

Results of the transparency factor of the XAI methods.

Figure 15.

The results of the reliability factor of the XAI methods. These were based on LIME and SHAP visualisations.

Figure 16.

The results of the interpretability factor of the XAI methods. The methods evaluated were LIME and TransSHAP.

Table 1.

Distribution of positive, negative, and neutral sentiments across South African languages.

| Language (ISO 639) | Positive | Positive % | Negative | Negative % | Neutral | Neutral % | Total |
|---|---|---|---|---|---|---|---|
| Sepedi (nso) | 5,153 | 48% | 3,270 | 30% | 2,355 | 22% | 10,778 |
| Setswana (tsn) | 3,932 | 51% | 2,150 | 28% | 1,590 | 21% | 7,672 |
| Sesotho (sot) | 3,050 | 48% | 2,024 | 32% | 1,241 | 20% | 6,314 |
| isiXhosa (xho) | 6,657 | 25.79% | 12,125 | 48.10% | 6,421 | 25.47% | 25,203 |
| isiZulu (zul) | 19,252 | 42.49% | 22,400 | 49.44% | 3,378 | 7.45% | 45,303 |

Table 2.

Language model F1-score performance comparison.

| Language | mBERT | XLM-R | AfroLM | Afro-XLMR | SERENGETI |
|---|---|---|---|---|---|
| nso | 57.69% | 48.20% | 76.69% | 54.54% | 51.75% |
| tso | 61.54% | 54.44% | 64.23% | 55.23% | 58.01% |
| sot | 65.21% | 55.31% | 58.32% | 65.49% | 61.20% |
| xho | 85.78% | 72.31% | 75.32% | 89.17% | 80.01% |
| zul | 86.46% | 74.60% | 82.77% | 90.74% | 79.39% |