1. Introduction

Fire incidents pose a substantial global threat: once ignited, a fire can escalate quickly, endangering lives and property. Beyond these immediate threats, fires also have long-term environmental consequences, including deforestation, air pollution, and the release of toxic chemicals. The economic impact is equally significant, with billions of dollars lost each year due to fire-related damages [1]. Fires can also disrupt communities, leading to prolonged displacement of residents, loss of livelihoods, and significant psychological trauma [2]. The cumulative effects are not limited to the immediate aftermath; they can persist for decades, affecting future generations and the stability of entire regions [1,3]. Given the unpredictable nature and rapid escalation of fires, early detection is vital: identifying and responding to a fire in its earliest stages can make the difference between a minor incident and a major disaster. Addressing this challenge requires highly accurate monitoring systems capable of early fire detection. Traditional sensor-based methods, such as smoke detectors and heat sensors, often prove inadequate, as they typically respond too late or fail to detect fires in their initial stages, especially in large or open areas [4]. These methods also cannot cover vast spaces or detect fires in complex environments such as forests or industrial settings, where early detection is most critical.

Although image-based systems hold promise, existing models often lack the necessary precision in critical situations [5]. These systems are hindered by low detection accuracy under varying lighting conditions, difficulty distinguishing between fire and non-fire objects, and challenges in efficiently processing real-time data [6]. In high-stakes scenarios where every second is crucial, these shortcomings can have devastating consequences. False alarms are particularly problematic, as they can desensitize response teams and delay the response to real emergencies [7]. Moreover, in areas where fire detection is crucial, such as densely populated urban centers or remote forest regions, the failure of these systems can result in catastrophic outcomes. As climate change intensifies and urbanization continues to expand, the frequency and severity of fire incidents are expected to rise. This underscores the need for a fire detection system that is not only accurate but also robust enough to operate effectively in diverse environments. In response, we have developed a deep learning approach based on computer vision that aims to detect fires more precisely and reliably, so that they can be identified and managed before they cause significant harm.

Previous research has explored various frameworks such as YOLOv3, YOLOv5, R-CNN, vanilla CNN, and dual CNN models for fire detection [2]. However, these models often faced challenges related to accuracy and speed, particularly in high-pressure situations [2,8]. For instance, the YOLOv3-based model exhibited a tendency towards false positives, often misidentifying electrical lamps as fires, especially at night [2]. Similarly, the YOLOv2-based model experienced issues with false positives, particularly in complex environments [1], and the YOLOv4-based model was found to be less effective when applied to larger areas [4]. These models also struggled to adapt to new and unforeseen scenarios, which is crucial for real-world fire detection applications. Furthermore, some CNN-based models had difficulty generalizing fire detection to new situations, often resulting in a high rate of false alarms [9,10]. False alarms not only waste valuable resources but can also lead to "alarm fatigue," where responders become desensitized to alarms, potentially overlooking genuine emergencies. Moreover, dual CNN-based detection systems exhibited inconsistent performance depending on the environment [11,12], which made it difficult to rely on them in critical situations where a high degree of precision and reliability is necessary. A CNN-based approach using aerial 360-degree cameras mounted on UAVs to capture unlimited field-of-view images also had drawbacks: the UAVs were affected by weather conditions, which limited their effectiveness, and the system was not capable of detecting wildfires at night [3].

Other researchers used a multifunctional AI framework and the Direct-MQTT protocol to enhance fire detection accuracy and minimize data transfer delays. This approach also applied a CNN algorithm for visual intelligence and used the Fire Dynamics Simulator for testing. However, it did not consider sensor failures and used static thresholds [13]. In another study, researchers used the ELASTIC-YOLOv3 algorithm to quickly and accurately detect fire candidate areas and combined it with a random forest classifier to verify the candidates. Additionally, they used a temporal fire-tube and bag-of-features histogram to reflect the dynamic characteristics of nighttime flames. However, the approach faced limitations in real-time processing due to the computational demands of combining CNN with RNN or LSTM, and it struggled to distinguish fire from fire-like objects in nighttime urban environments [14]. The Intermediate Fusion VGG16 model and the Enhanced Consumed Energy-Leach protocol were used in a study on early detection of forest fires. Drones captured RGB and IR images, which were then processed using the VGG16 model. However, the study was limited by the lack of real-world testing and by resource constraints that hindered comprehensive evaluation [5].

In another computer vision-based study, researchers used a YOLOv5 fire detection algorithm based on an attention-enhanced ghost model, mixed convolutional pyramids, and flame-center detection. It incorporated Ghost bottlenecks, SECSP attention modules, GSConv convolution, and the SIoU loss function to enhance accuracy. However, its limitations included potential challenges in real-time detection due to high computational complexity and the need for further validation in diverse environments [6]. In a different CNN-based study, the researchers modified the CNN for forest fire recognition, integrating transfer learning and a feature fusion algorithm to enhance detection accuracy, and used a diverse dataset of fire and non-fire images for training and testing. However, the study was limited by its small sample size and the need for further validation in real-world scenarios to ensure robustness and generalizability. In a study on fire and smoke detection, the researchers used a capacitive particle-analyzing smoke detector for very early fire detection, employing a multiscale smoke particle concentration detection algorithm. This method involved capacitive detection of cell structures and time-frequency domain analysis to calculate particle concentration. However, it had difficulty distinguishing particle types and struggled with false alarms in complex environments.

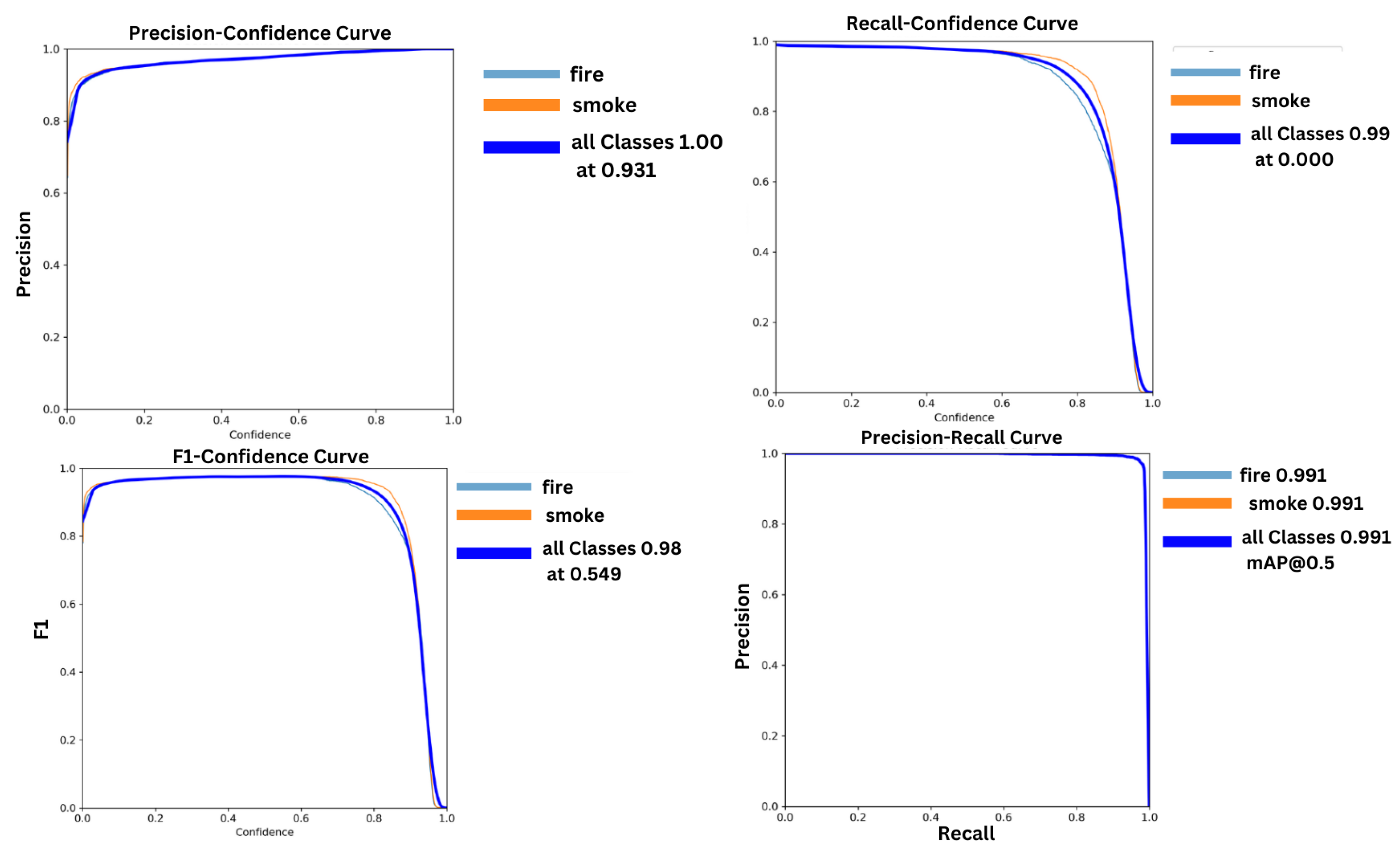

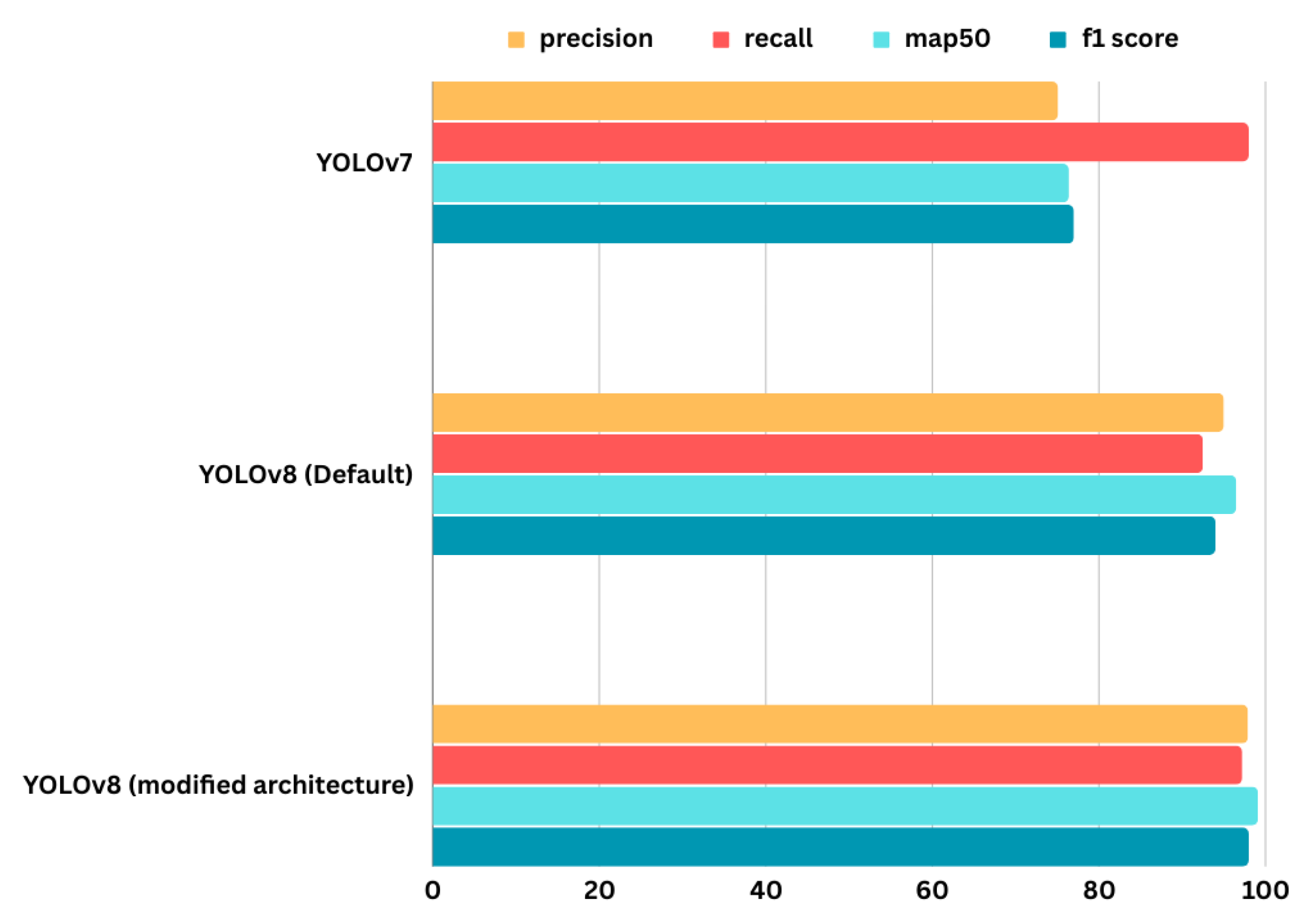

In our pursuit of a more reliable solution, we turned to the robust capabilities of YOLOv8, a model renowned for its superior object detection abilities. Our goal was to significantly enhance fire detection performance by optimizing the architecture of YOLOv8 to better identify fire-specific visual cues. Through extensive training on a comprehensive fire image dataset, the modified YOLOv8 model has demonstrated improved precision, recall, mAP@50, and F1 score. This advanced model excels at detecting not only flames but also smoke, which is often an early indicator of larger fires, thereby providing an early warning system that can prevent a small incident from escalating into a full-blown disaster.

To further enhance the interpretability of our YOLOv8 model, we utilized EigenCAM, an explainable AI technique that generates class activation maps. EigenCAM allows us to visualize the regions of the input images that the model focuses on when making predictions [15,16]. By highlighting these areas, we gain insight into which features the model considers critical for detecting fire and smoke. This transparency is essential for building trust in AI-based systems, particularly in critical applications like fire detection. It also helps expose false alarms triggered by orange hues or steam, so that they can be mitigated. Our approach aims to reduce false positives while keeping fire detection accurate and reliable across a wide range of environments and scenarios. By combining the strengths of YOLOv8 with EigenCAM, we obtain a system that is both accurate and interpretable, that addresses the shortcomings of previous models, and that supports early fire detection in homes, industries, and beyond. The main contributions of this research are as follows:

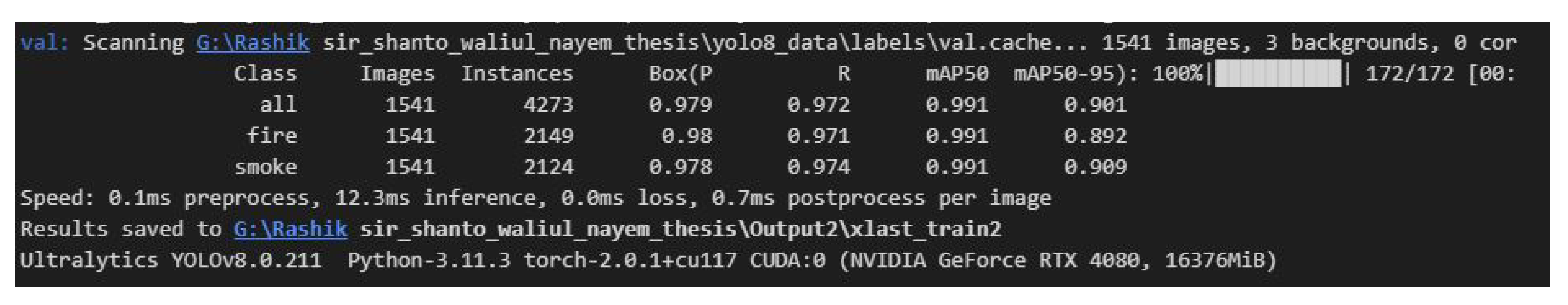

In this work, a modified YOLOv8 architecture is proposed for the development of an efficient automated fire and smoke detection system, achieving 98% accuracy in fire and 97.8% accuracy in smoke detection.

A comprehensive fire dataset has been created, consisting of 4,301 labeled images. Each image has been carefully annotated to ensure accuracy, providing a valuable resource for improving the performance of models in identifying fire and smoke in various conditions.

EigenCAM is employed to explain and visualize the results of the proposed model, highlighting the image areas that most influenced the model’s decisions. This approach enhances the understanding of the model’s behavior, thereby improving the interpretability and transparency of its predictions.
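As a rough illustration of the idea behind EigenCAM, the sketch below projects the activations of one convolutional layer onto their first principal direction and rescales the result to a heat map. It is a minimal, framework-free approximation under stated assumptions: the function name `eigen_cam`, the nested-list input format, the use of power iteration instead of a full SVD, and the omission of mean-centering are all illustrative choices, not the implementation used in this work.

```python
import math

def eigen_cam(activations, iterations=200):
    """Project C activation maps (each H x W, nested lists) onto their
    dominant principal direction and return an H x W map in [0, 1]."""
    C = len(activations)
    H = len(activations[0])
    W = len(activations[0][0])
    # Rows are spatial positions, columns are channels: A has shape (H*W, C).
    A = [[activations[c][i][j] for c in range(C)]
         for i in range(H) for j in range(W)]
    # Gram matrix G = A^T A; its top eigenvector is the first right
    # singular vector of A.
    G = [[sum(row[a] * row[b] for row in A) for b in range(C)]
         for a in range(C)]
    # Power iteration (assumption: the uniform start vector is not
    # orthogonal to the dominant eigenvector).
    v = [1.0 / math.sqrt(C)] * C
    for _ in range(iterations):
        w = [sum(G[a][b] * v[b] for b in range(C)) for a in range(C)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    # Projection of every spatial position onto the dominant direction.
    proj = [sum(row[c] * v[c] for c in range(C)) for row in A]
    # The sign of a singular vector is arbitrary; orient it positively.
    if sum(proj) < 0:
        proj = [-p for p in proj]
    # Keep positive evidence only and rescale to [0, 1].
    proj = [max(p, 0.0) for p in proj]
    top = max(proj)
    if top > 0:
        proj = [p / top for p in proj]
    return [proj[i * W:(i + 1) * W] for i in range(H)]

# Toy example: two channels that both respond at the bottom-right pixel.
toy_activations = [
    [[0.0, 0.0], [0.0, 2.0]],
    [[0.0, 0.0], [0.0, 4.0]],
]
cam = eigen_cam(toy_activations)
```

In practice the resulting map would be upsampled to the input resolution and overlaid on the image; those steps are omitted here.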

The rest of this paper is organized as follows. Section 2 discusses the background and related work in this field. Section 3 presents the methodology and a detailed description of the dataset. The experimental results are analyzed in Section 4. In Section 5, we introduce the explainable AI technique used to interpret our proposed model and its results. Finally, Section 6 and Section 7 include the discussion, summary, and feasible future directions for this research.

2. Related Works

Deep learning-based methods for fire and smoke detection in smart cities have been receiving increasing attention recently. Hybrid techniques that incorporate multiple deep-learning algorithms for fire detection have been proposed in several studies.

Ahn et al. [17] introduce a fire detection model that applies computer vision to Closed-Circuit Television (CCTV) footage for early fire identification in buildings, employing the YOLO algorithm. The proposed model achieves recall, precision, and mAP@0.5 values of 0.97, 0.91, and 0.96, respectively. Notably, the findings indicate the ability to detect a fire within 1 second of the maximum visible range of the CCTV, and a difference of up to 307 seconds was observed between the fire detection times of the Early Fire Detection Model (EFDM) and conventional fire detectors. The paper also recommends future research directions, emphasizing the development of a more robust and accurate model through the incorporation of additional features.
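The metrics quoted throughout this section follow standard definitions. As a minimal sketch, the helpers below compute the intersection over union (IoU) of two boxes, which is the overlap measure thresholded at 0.5 in mAP@0.5, and derive precision, recall, and F1 from true-positive, false-positive, and false-negative counts. The function names and the corner-based box format are assumptions made for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from detection counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return precision, recall, f1

def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# A prediction counts as a true positive at mAP@0.5 when IoU >= 0.5.
overlap = iou((0, 0, 2, 2), (1, 1, 3, 3))
scores = precision_recall_f1(tp=8, fp=2, fn=2)
```

For instance, applying `f1_from` to the precision of 0.91 and recall of 0.97 reported above gives an F1 score of about 0.94.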

Pincott et al. [8] propose a vision-based indoor fire and smoke detection system using computer vision techniques. The study explores and adopts existing models based on Faster R-CNN Inception V2 and SSD MobileNet V2. Faster R-CNN (Model A) achieves an average accuracy of 95% for fire detection and 62% for smoke detection, while SSD (Model B) achieves an average accuracy of 88% for fire detection and 77% for smoke detection. A limitation of this methodology is that the approach is not evaluated in outdoor environments, which could be a potential area for future research.

Avazov et al. [4] propose a deep-learning-based fire detection method for smart city environments. The authors develop a novel convolutional neural network to detect fire regions using an enhanced YOLOv4 network. The overall fire detection accuracy is 98.8%. The proposed method aims to improve fire safety in society using emerging technologies, such as digital cameras, computer vision, artificial intelligence, and deep learning. However, the method requires digital cameras and a Banana Pi M3 board, which may not be available in all settings, and may not be suitable for detecting fires in large areas, such as forests or industrial sites. Additionally, the method may struggle to detect fires that are hidden or occur in areas not visible to digital cameras.

Abdusalomov et al. [2] propose fire detection and classification methods using the YOLOv3 algorithm for surveillance systems. The study focuses on enhancing the accuracy and efficiency of fire detection algorithms for real-time monitoring, achieving an overall fire detection accuracy of 98.9%. The limitations of the proposed method include the possibility of misdetecting electrical lamps as real fires, especially at night.

Muhammad et al. [18] propose a fire detection system based on convolutional neural networks (CNN) for effective disaster management. They use two datasets: Foggia’s video dataset and Chino’s dataset. Foggia’s dataset consists of 31 videos covering both indoor and outdoor environments, of which 14 videos contain fire and the remaining 17 videos do not. The overall accuracy of the proposed fire detection scheme is 98.5%, which is higher than state-of-the-art methods. Future work suggested in this paper includes maintaining a balance between accuracy and false alarms.

Khan Muhammad et al. [9] implement a cost-effective CNN architecture for fire detection in surveillance videos, inspired by GoogleNet. The goal is to balance computational complexity and accuracy for real-time use. Their proposed model is based on GoogleNet: pre-trained GoogleNet weights are used and further fine-tuned for fire detection. The proposed model achieves an accuracy of 94.43% and reduces the false alarm rate to 5.4%. The model needs further tuning to handle both smoke and fire detection in more complex real-world scenarios.

Byoungjun Kim and Joonwhoan Lee [19] propose a deep learning-based method for detecting fires using video sequences. The goal is to improve accuracy and reduce false alarms compared to traditional methods. Their research uses a faster region-based convolutional neural network to identify suspected regions of fire and non-fire objects. Long Short-Term Memory networks then accumulate features over successive frames to classify fire presence, and the short-term decisions are combined by majority voting into a final long-term decision. The proposed method significantly improves fire detection accuracy by reducing false detections and misdetections, and it also successfully interprets the temporal behavior of flames and smoke, providing detailed fire information. However, some false positives still occur, especially with objects like clouds, chimney smoke, and sunsets.

Yakun Xia et al. [20] introduce a method to enhance fire detection accuracy in videos. The authors combine motion-flicker-based dynamic features with deep static features extracted using an adaptive lightweight convolutional neural network. By analyzing motion and flicker differences between fire and other objects and integrating these with deep static features, the method improves detection accuracy and reduces false alarms. Tested on three datasets, it outperformed state-of-the-art methods in terms of accuracy and run time, proving effective even in complex video scenarios and on resource-constrained devices. However, the paper notes challenges in achieving high robustness in complex scenarios and highlights the need for further research on fire spread prediction and spatial positioning.

Pu Li and Wangda Zhao [21] introduce advanced methods for detecting fires in images using convolutional neural networks. The researchers aim to enhance the accuracy and speed of fire detection by comparing four CNN-based object detection models: Faster-RCNN, R-FCN, SSD, and YOLOv3. They use a self-built dataset containing 29,180 images of fire, smoke, and disturbances and apply transfer learning with networks pre-trained on the COCO dataset. The results show that YOLOv3 achieves the highest average precision of 83.7 and the fastest detection speed of 28 FPS, making it the most robust among the tested models. However, the study also highlights some limitations, such as difficulties in detecting fires in complex scenes and small fire and smoke regions, as well as the significant computational costs of some methods, which can affect detection speed.

Sergio Saponara et al. [1] present a real-time video-based fire and smoke detection system using the YOLOv2 convolutional neural network for antifire surveillance. Designed for low-cost embedded devices like the Jetson Nano and standard CCTV cameras, the system employs a lightweight neural network architecture for real-time processing. The model is trained offline with diverse indoor and outdoor fire and smoke image sets, with ground truth data generated using a labeling app. The system demonstrates high detection rates, low false alarm rates, and fast processing speeds, achieving 93% accuracy in validation tests and 96.82% accuracy in experimental results. However, it faces several limitations: the small training dataset of 400 images may affect generalization, challenging features like clouds and sunlight cause false positives, and performance depends on the capabilities of the embedded device used.

Yakhyokhuja Valikhujaev et al. [11] introduce a novel approach for detecting fire and smoke using deep learning techniques, specifically a convolutional neural network with dilated convolutions, which enhances feature extraction and reduces false alarms. The results demonstrate that this method outperforms other state-of-the-art architectures in terms of classification performance and complexity and that it generalizes effectively to unseen data, minimizing false alarms. However, the method has limitations, such as potential errors when fire and smoke pixel values are similar to the background, particularly in cloudy weather, and its dependency on the custom dataset, which may affect its generalizability to other datasets.

Dali Sheng et al. [22] focus on developing a robust method for detecting smoke in complex environments. The authors combine simple linear iterative clustering for image segmentation and density-based spatial clustering of applications with noise for clustering similar super-pixels, which are then processed by a convolutional neural network to distinguish smoke from non-smoke features. The results show improved accuracy compared to traditional methods. However, the method’s high sensitivity leads to a slight increase in false positives, indicating a need for further enhancements to ensure robustness in real-world applications.

Gaohua Lin and Yongming Zhang [23] focus on early smoke detection in video sequences to enhance fire disaster prevention. The authors develop a joint detection framework that combines Faster RCNN for locating smoke targets and 3D CNN for recognizing smoke. They use various data augmentation techniques and preprocessing methods like optical flow to improve the training dataset. The framework achieves a detection rate of 95.23% and a low false alarm rate of 0.39%, significantly outperforming traditional methods. However, the study faces limitations such as data scarcity, which can lead to overfitting, and the need for more realistic synthetic smoke videos to enhance training performance.

Arun Singh Pundir et al. [12] introduce a method for early and robust smoke detection using a dual deep learning framework. This framework leverages image-based features extracted by deep convolutional neural networks (CNN) from smoke patches identified by a superpixel algorithm and motion-based features captured using optical flow methods, also processed by a CNN. The method achieves accuracy rates of 98.29% for nearby smoke detection and 91.96% for faraway smoke detection, and an average accuracy of 97.49% across various scenarios. However, the method faces challenges in distinguishing smoke from similar non-smoke conditions like clouds, fog, and sandstorms.

Yunji Zhao et al. [24] introduce the target awareness and depthwise separability algorithms for early fire smoke detection, which are vital for early warning systems. The authors use pre-trained convolutional neural networks to extract deep feature maps for modeling smoke objects and employ depthwise separable convolutions to enhance the algorithm’s speed, making real-time detection feasible. The results demonstrate that the proposed algorithm can detect early smoke in real time and surpasses current methods in both accuracy and speed. However, the study notes that detection accuracy decreases in certain video sequences under varying conditions and that some information might be lost due to the depthwise separable method.

Pedro et al. [10] present an automatic fire detection system using deep convolutional neural networks (CNNs) tailored for low-power, resource-constrained devices like the Raspberry Pi 4. The objective is to create an efficient fire detection system by reducing computational costs and memory usage without sacrificing performance. The authors employ filter pruning to eliminate less important convolutional filters and use the YOLOv4 algorithm for fire and smoke detection, optimizing it through various pruning techniques. The results show a significant reduction in computational cost (up to 83.60%) and memory consumption (up to 83.86%), while maintaining high predictive performance. However, the system faces limitations in generalizing to new environments and in dealing with high false alarm rates, especially under varying lighting conditions and camera movements.

Naqqash Dilshad et al. [25] introduce E-FireNet, a deep learning framework aimed at real-time fire detection in complex surveillance settings. The authors modify the VGG16 network by removing Block-5 and adjusting Block-4, using smaller convolutional kernels to enhance detail extraction from images. The framework operates in three stages: data preprocessing, fire detection, and alarm generation. E-FireNet achieves an accuracy of 0.98, a precision of 1, a recall of 0.99, and an F1-score of 0.99, outperforming other models in accuracy, model size, and execution time, and it runs at 22.17 FPS on CPU and 30.58 FPS on GPU. However, the study notes the need for a more diverse dataset and suggests future research to expand the dataset and explore the use of vision transformers for fire detection.

Yunsov et al. [26] introduce a YOLOv8 model with transfer learning to detect large forest fires. For smaller fires, they use the TranSDet model, also leveraging transfer learning. Their approach achieves an overall accuracy of 97%. However, the model faces challenges in detecting fire when only smoke is present and occasionally misidentifies the sun and electric lights as fire.

Table 1 shows a summary of related works on fire detection.

3. Methodology

Fires can cause significant harm and substantial damage to both people and their properties. An efficient fire detection system is crucial to reducing these dangers by facilitating quicker response times. To create an advanced computer vision model for this purpose, we undertook a series of systematic steps.

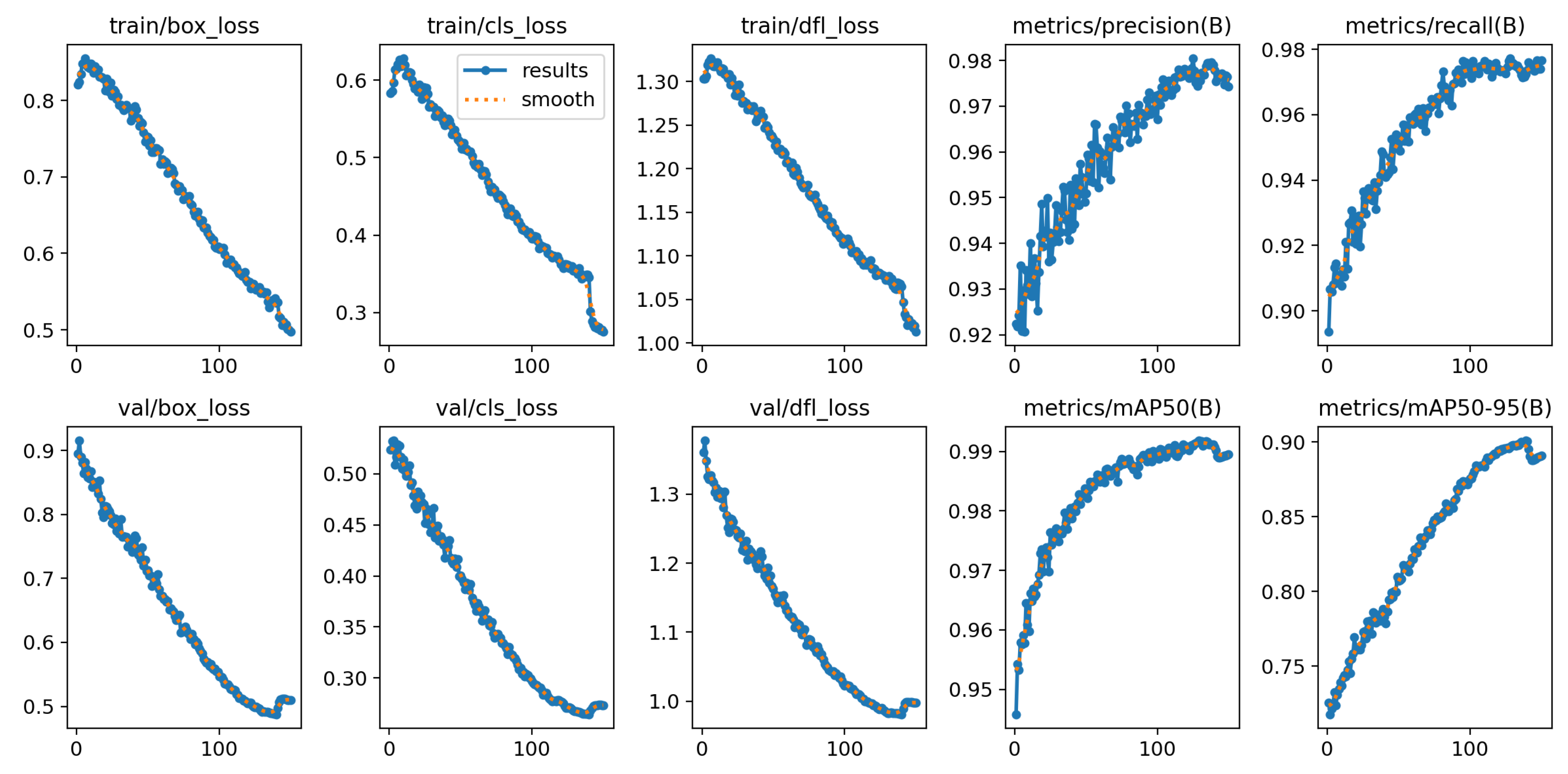

Initially, we have gathered and labeled a robust dataset, enhancing it further through data augmentation techniques to ensure its comprehensiveness. Our initial training phase employs the standard YOLOv8 [

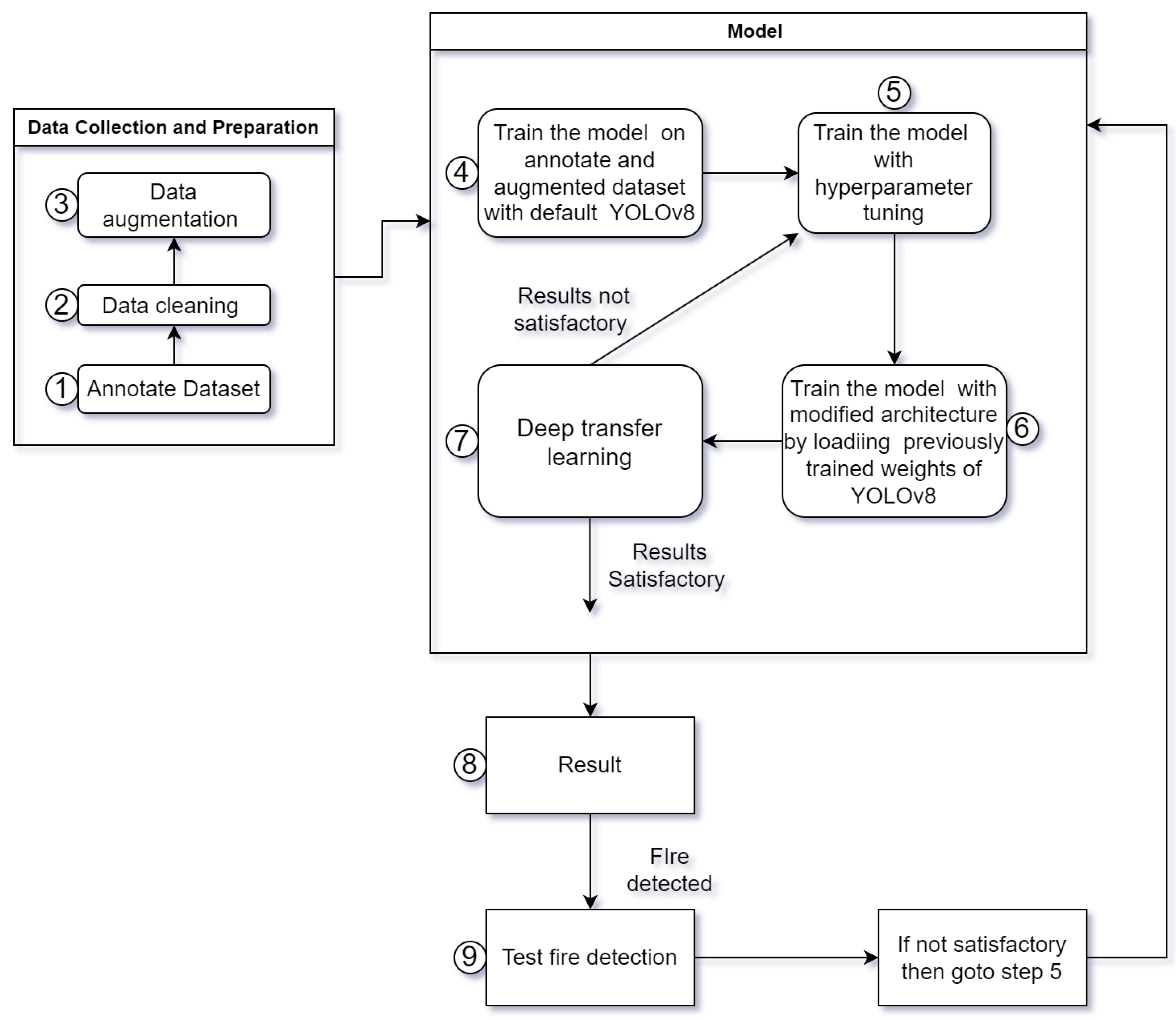

27] architecture, serving as our baseline. We then delve into hyperparameter tuning, exploring a variety of parameters to identify the optimal set for peak performance. This involves multiple training iterations, each time tweaking the hyperparameters to achieve the best results. With the optimal hyperparameters identified, we retrain our model using a modified version of the YOLOv8 architecture, incorporating the previously trained weights from the initial model. This step is crucial for leveraging the learned features from the earlier training phase, a process known as deep transfer learning. Further refinement is achieved through additional training phases, continuously using the improved weights and our adjusted architecture. To ensure transparency in the model’s decision-making process, we apply EigenCAM, enbling us to visualize and interpret how the model makes its predictions. This detailed methodology, which is visually summarized in

Figure 1, outlines our approach to developing an effective fire detection system.
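The weight reuse described above can be illustrated with a minimal, framework-free sketch. It assumes that model weights are stored in name-to-tensor dictionaries and that a tensor is reused only when both its name and its shape match; the function names, the layer names, and this matching rule are illustrative assumptions rather than the implementation used in this work.

```python
def shape_of(tensor):
    """Shape of a (possibly nested) list, e.g. [[1, 2], [3, 4]] -> (2, 2)."""
    shape = []
    while isinstance(tensor, list):
        shape.append(len(tensor))
        tensor = tensor[0] if tensor else None
    return tuple(shape)

def transfer_weights(baseline_state, modified_state):
    """Copy every baseline tensor whose name and shape match the modified model.

    Returns the merged state plus the names that were transferred and the
    names that keep their fresh initialization (e.g. newly added layers).
    """
    merged = dict(modified_state)
    transferred, skipped = [], []
    for name, tensor in modified_state.items():
        source = baseline_state.get(name)
        if source is not None and shape_of(source) == shape_of(tensor):
            merged[name] = source
            transferred.append(name)
        else:
            skipped.append(name)
    return merged, transferred, skipped

# Hypothetical layer names: the extra block has no counterpart in the
# baseline, so it stays at its initial values.
baseline = {"conv0.weight": [[1.0, 2.0]], "head.weight": [[3.0]]}
modified = {
    "conv0.weight": [[0.0, 0.0]],
    "extra_c2f.weight": [[0.0]],
    "head.weight": [[0.0, 0.0]],
}
merged, transferred, skipped = transfer_weights(baseline, modified)
```

Layers that are new or whose shape changed are trained from their initial values, while the remaining layers start from the baseline's learned features.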

3.1. Dataset Description

The initial dataset we collected consists of 4301 labeled fire and smoke images. To further enrich the dataset, we have added a curated selection of images showcasing different types of fire, sourced from reputable online platforms. The bounding boxes in the CVAT tool are labeled with the names "fire" and "smoke." Subsequently, the dataset is randomly partitioned into two separate sets, one designated for training purposes (80%) and the other allocated for validation (20%).

Figure 2 includes several fire and smoke images that are clearly annotated, providing precise labels that highlight the specific areas associated with fire and smoke.
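The random 80/20 partition can be sketched as follows. The seed, the image identifiers, and the function name are assumptions made for illustration; only the split ratio and the dataset size come from the description above.

```python
import random

def split_dataset(items, train_fraction=0.8, seed=0):
    """Shuffle the items reproducibly and split them into train and validation."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_fraction)
    return shuffled[:n_train], shuffled[n_train:]

# Hypothetical identifiers standing in for the 4301 labeled images.
image_ids = [f"img_{i:04d}" for i in range(4301)]
train_ids, val_ids = split_dataset(image_ids)
```

Fixing the seed keeps the partition reproducible, so that later training runs are evaluated on the same validation images.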

3.2. Dataset Augmentation and Replication

The original dataset has extensive variation in smoke and fire types and settings, ranging from outdoor and indoor fires, large and small, to bright and low-light conditions. After splitting the dataset, we apply a replication step to enlarge the training and validation sets: existing images and their labels are duplicated, and data augmentation techniques are randomly applied to the copies to increase diversity. The original dataset contains 4,301 images, with 3,441 allocated for training and 860 for validation. After replication, the dataset is expanded to 5,663 images in total; the new training set comprises 4,122 images, while the validation set grows to 1,541 images. The objective of this enriched dataset is to expose the model to a wider variety of fire and smoke situations. By increasing variations in size, location, and other factors, the model becomes better equipped to handle a broader range of real-world cases.
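The counts above imply that 681 copies were added to each partition (4,122 − 3,441 and 1,541 − 860). The sketch below shows one way such a replication step could look for the labels alone. It assumes YOLO-style normalized labels `(class_id, x_center, y_center, width, height)` and uses a horizontal flip as the only augmentation; the specific augmentations used in this work are not listed, so the flip, the flip probability, and all names are illustrative assumptions. A real pipeline would apply the same transform to the image pixels.

```python
import random

def hflip_labels(labels):
    """Mirror normalized YOLO boxes horizontally: x_center -> 1 - x_center."""
    return [(cls, 1.0 - xc, yc, w, h) for cls, xc, yc, w, h in labels]

def replicate(samples, n_extra, seed=0, flip_prob=0.5):
    """Return the original samples plus n_extra randomly chosen copies.

    Each sample is (image_id, labels). A copy is flipped with probability
    flip_prob; otherwise it is an unmodified duplicate.
    """
    rng = random.Random(seed)
    extra = []
    for k, (image_id, labels) in enumerate(rng.choices(samples, k=n_extra)):
        if rng.random() < flip_prob:
            extra.append((f"{image_id}_copy{k}_hflip", hflip_labels(labels)))
        else:
            extra.append((f"{image_id}_copy{k}", list(labels)))
    return samples + extra

# Hypothetical training partition with one box per image.
train = [(f"img_{i}", [(0, 0.25, 0.5, 0.1, 0.2)]) for i in range(3441)]
train_expanded = replicate(train, n_extra=681)
```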

3.3. Model Architecture and Modifications

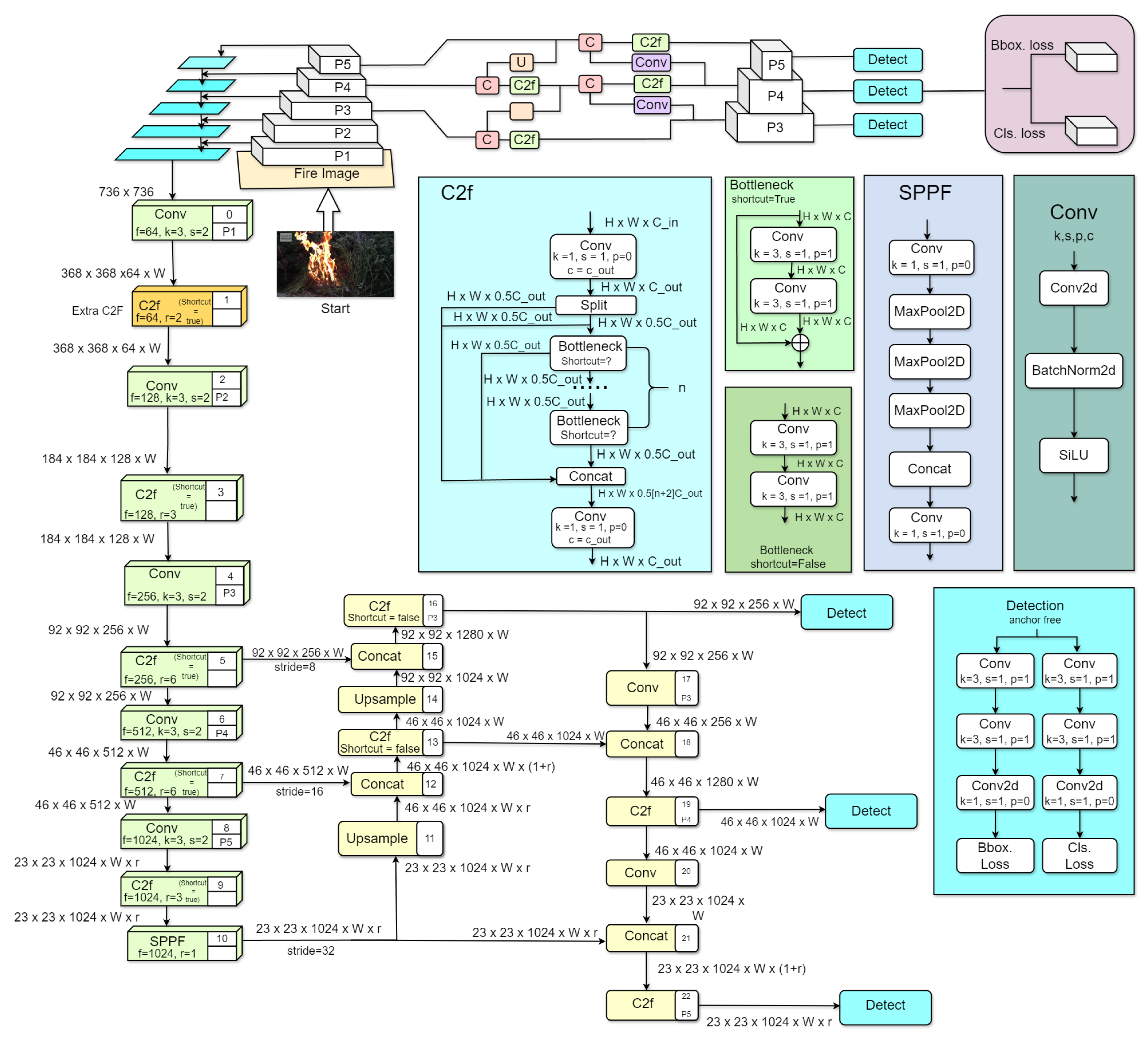

Figure 3 shows the overall architecture of our proposed model. Our method modifies the YOLOv8 design by adding an extra Context-to-Flow (C2F) layer to the backbone network. The goal of this change is to enhance the model’s ability to capture contextual information about fire, refine features, and improve fire detection accuracy [28].

The method is based on the YOLOv8 model, which was chosen for its superior accuracy and efficiency compared to previous versions of the YOLO model. We add the extra C2F module after the first convolutional layer so that the model can gather both the contextual and the flow information that is crucial for accurate fire detection [28], as shown in Figure 3.

3.4. Changes to the YOLOv8 Backbone

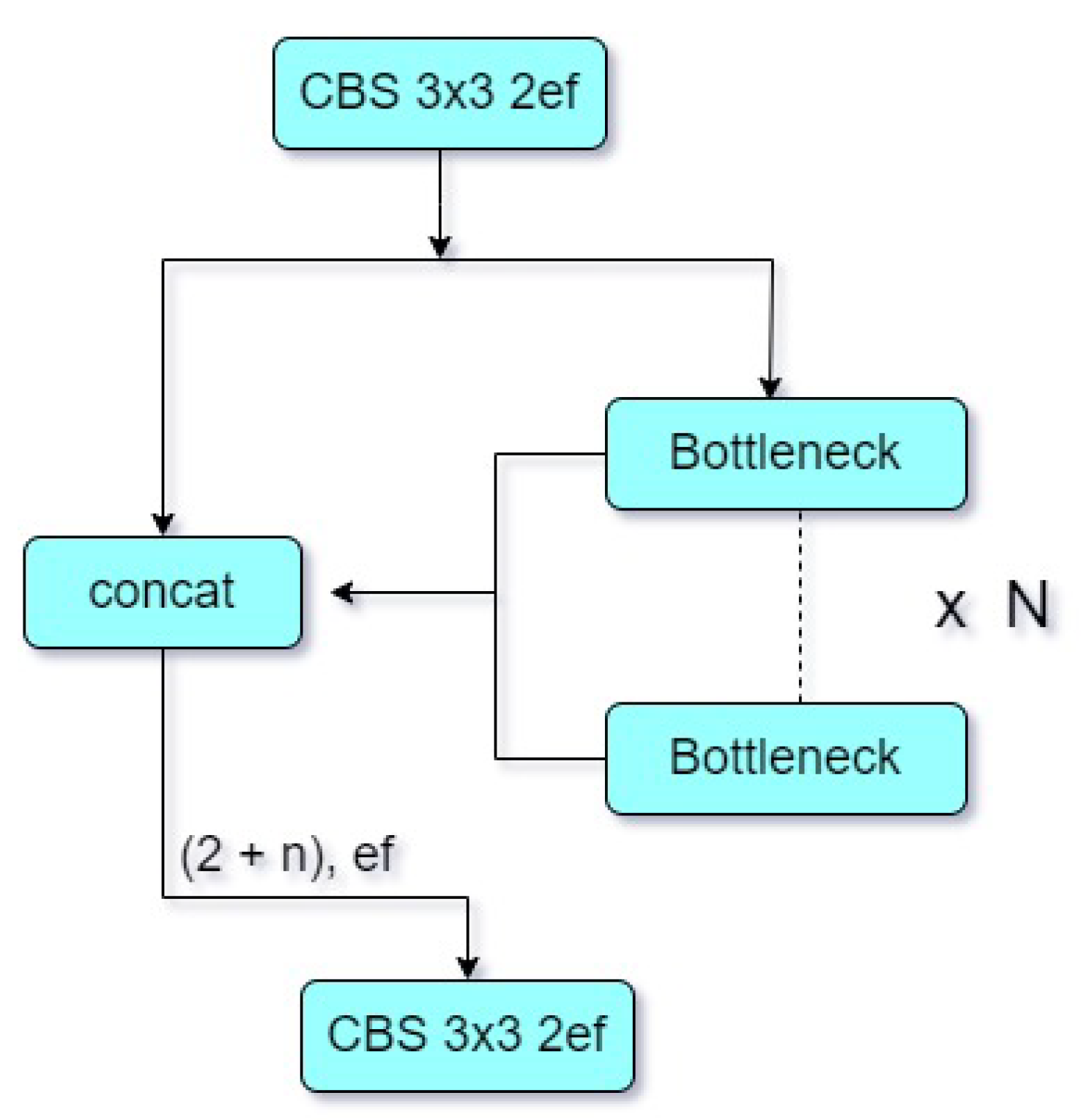

C2F stands for "Context-to-Flow" and is a component of the deep learning architecture. As shown in Figure 4, it consists of two parallel branches: the context branch and the flow branch. The context branch captures the contextual information from the input data and processes it using convolutional layers [29]. In the backbone, we add a C2F layer directly after the first convolutional layer so that contextual information is combined from the start. As shown in Figure 4, this layer is a key link between the first step of feature extraction and the subsequent steps. It identifies important contextual information in the feature representations, which helps the model understand the surrounding context and extract relevant features.

The flow branch focuses on capturing the flow of information within the data. It uses convolutional layers to analyze the spatial and temporal changes in the input [29]. This branch aids the model in understanding the dynamic aspects of the data. The outputs from both branches are then combined to form a robust representation of the input data. This integration of context and flow information enhances the model’s ability to capture complex patterns and make accurate predictions. Overall, the C2F module plays a crucial role in deep learning models by facilitating the integration of contextual and flow information, leading to improved performance and accuracy.
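To make the branch-and-combine structure more concrete, the sketch below tracks channel widths and parameter counts through a block with that shape. It assumes the split-and-concatenate layout of the publicly available Ultralytics `C2f` block, which that code base documents as a CSP bottleneck with two convolutions: a 1x1 convolution whose output is split into two halves, a chain of bottlenecks applied to one half, and a final 1x1 convolution over the concatenation. Mapping these halves onto the context and flow branches described above is our reading and is not confirmed by the source; the function names and the example widths are hypothetical.

```python
def conv_params(c_in, c_out, k):
    """Parameters of a k x k convolution without bias, followed by
    batch normalization (one scale and one shift per output channel)."""
    return c_in * c_out * k * k + 2 * c_out

def c2f_summary(c_in, c_out, n=1, e=0.5):
    """Channel bookkeeping for a C2f-style block with n bottlenecks.

    e is the hidden-channel expansion ratio (assumed 0.5 here).
    """
    c = int(c_out * e)                          # channels in each half
    split = conv_params(c_in, 2 * c, 1)         # 1x1 conv, then split in two
    bottleneck = 2 * conv_params(c, c, 3)       # two 3x3 convs per bottleneck
    fuse = conv_params((2 + n) * c, c_out, 1)   # 1x1 conv over the concatenation
    return {
        "hidden_channels": c,
        "concat_channels": (2 + n) * c,
        "params": split + n * bottleneck + fuse,
    }

# Hypothetical widths for a block placed early in the backbone.
summary = c2f_summary(c_in=32, c_out=32, n=1)
```

Because every bottleneck output is appended to the concatenation, the fused feature map grows with `n`, which is one way such a block mixes shallow and deeper features.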

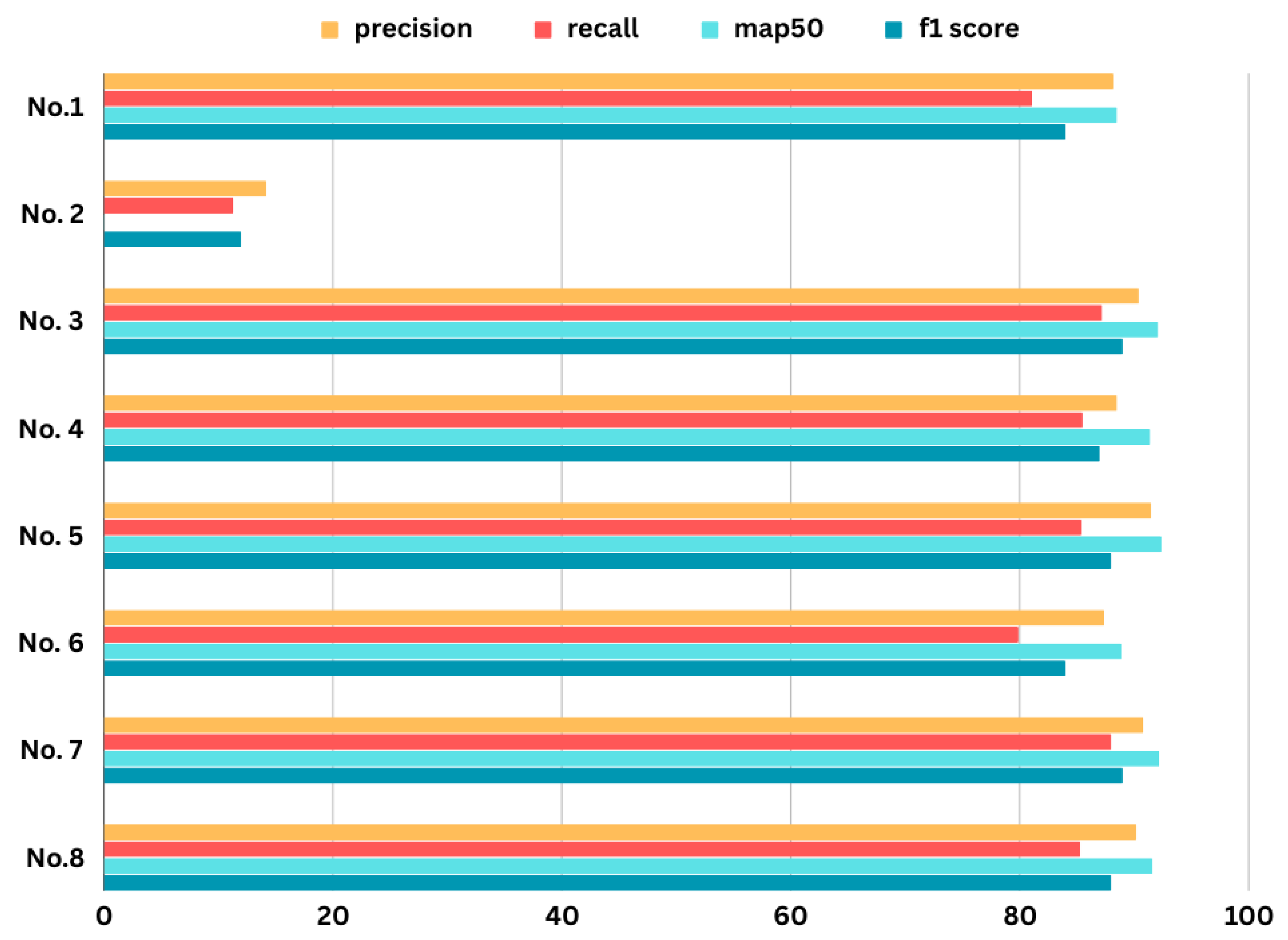

3.5. The Training and Success Measure Process

The training process is separated into several steps to achieve better output. Initially, the default YOLOv8 framework is used to train a model with a variety of hyperparameters and activation functions, such as LeakyReLU, ReLU, Sigmoid, Tanh, Softmax, and Mish. The best-performing hyperparameters are shown in Table 2. In particular, the model with the LeakyReLU activation function and the given hyperparameters performs exceptionally well. We then retrain the model on our modified architecture using the same hyperparameters, initializing it with the previously trained weights. This method of training is essentially a form of deep transfer learning, where the knowledge obtained from the previous model is utilized by the next model to enhance its performance.
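For reference, the activation functions compared above have the following standard definitions. The sketch is written in plain Python for scalar inputs; the LeakyReLU negative slope of 0.01 is an assumed value, since the slope used in the experiments is not stated here.

```python
import math

def relu(x):
    return max(0.0, x)

def leaky_relu(x, negative_slope=0.01):
    # negative_slope is an assumption; small negative inputs are scaled
    # instead of being zeroed out as in ReLU.
    return x if x >= 0 else negative_slope * x

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    return math.tanh(x)

def mish(x):
    # mish(x) = x * tanh(softplus(x)), with softplus(x) = ln(1 + e^x)
    return x * math.tanh(math.log1p(math.exp(x)))

def softmax(values):
    # Operates on a vector; subtracting the maximum keeps exp() stable.
    top = max(values)
    exps = [math.exp(v - top) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

# Unlike ReLU, LeakyReLU keeps a small gradient for negative inputs.
negative_response = (relu(-2.0), leaky_relu(-2.0))
```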

3.6. Improvements After Using the C2F Layer

In the C2F layer, contextual information is added early on, allowing the model to capture global characteristics from the very first layers of the backbone. This additional background information helps the network understand the connections between different parts of the input image. By refining features through the C2F layer, the network gains improved discriminatory power. The C2F layer adapts to focus on important traits while ignoring noise and irrelevant data. By combining features from the first convolutional layer with those from subsequent layers, the C2F layer facilitates multi-scale fusion. This fusion enhances the model’s ability to handle objects of varying sizes and scales in the input image, thereby improving detection accuracy.

Adding the C2F layer aids in establishing spatial ordering in the feature maps, which is crucial for accurate object detection and recognition. It enables the network to better understand the surrounding context more quickly during training by providing detailed background information earlier on. The YOLOv8 architecture has been improved by incorporating an additional Context-to-Flow (C2F) layer. This enhancement has resulted in a more comprehensive model, now comprising 387 layers, 81,874,710 parameters, and 81,874,694 gradients, compared to the default YOLOv8 model, which includes 365 layers, 43,631,382 parameters, and 43,631,366 gradients. The computational intensity of the model has significantly increased from 165.4 GFLOPs to 373.1 GFLOPs.
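The size difference between the two models can be made explicit with a short calculation over the figures reported above. The dictionary layout and the function name are illustrative; the numbers themselves are taken from the text.

```python
# Reported model statistics (default vs. modified YOLOv8).
baseline = {"layers": 365, "params": 43_631_382, "gflops": 165.4}
modified = {"layers": 387, "params": 81_874_710, "gflops": 373.1}

def growth(before, after):
    """Absolute and relative change for every reported quantity."""
    return {
        key: {"added": after[key] - before[key], "ratio": after[key] / before[key]}
        for key in before
    }

report = growth(baseline, modified)
for key, change in report.items():
    print(f"{key}: +{change['added']:,} (x{change['ratio']:.2f})")
```

The modified model therefore has 22 more layers, roughly 1.88 times the parameters, and roughly 2.26 times the GFLOPs of the default model, which is the computational price paid for the reported accuracy gain.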

The change in architecture demonstrates how the additional C2F layer has improved the network’s feature representation, making it more effective in capturing contextual and dynamic information. As a result, the model’s overall accuracy has significantly improved. The larger number of parameters enables the model to learn more complex patterns and nuances in the data, highlighting the potential of this modified YOLOv8 architecture in advancing object detection performance.

Our proposed model demonstrates a substantial improvement in accuracy for object detection tasks. The C2F layer plays a key role in enhancing the detection capabilities of the YOLOv8 architecture by gathering more contextual and flow information from the input images. This proposed approach appears to be a promising direction for further advancement in deep learning-based object detection research.

Author Contributions

Conceptualization, M.W.H., S.S., J.N., R.R., and T.H.; Formal analysis, M.W.H., S.S., and J.N.; Funding acquisition, Z.R. and S.T.M.; Investigation, M.W.H., S.S., and J.N.; Methodology, M.W.H., S.S., J.N., T.H., R.R., and S.T.M.; Supervision, R.R. and T.H.; Validation, R.R., T.H., S.T.M., and Z.R.; Visualization, M.W.H., S.S., and J.N.; Writing – original draft, M.W.H., S.S., and J.N.; Writing – review and editing, M.W.H., S.T.M., T.H., R.R., and Z.R. All authors have read and agreed to the published version of the manuscript.