0. Introduction

Stochastic processes on graphs are widely used in the construction and study of applied stochastic models (see, for example, [1,2,3,4,5] and the references therein). Such applied problems are closely connected with admission and pricing optimization of on-street parking with delivery bays [6]. It is also worth mentioning the joint optimization of preventive maintenance and the triggering mechanism for k-out-of-n: F systems with protective devices based on periodic inspection [7]. Another applied problem arises in managing production-inventory-maintenance systems with condition monitoring [8].

In particular, these constructions may be used to model the so-called functional systems introduced by P.K. Anokhin [9,10] in neurophysiology. According to Anokhin’s definition, a functional system is urgently formed to obtain the desired result and disintegrates once this result has been obtained. As a student of the famous physiologist I.P. Pavlov, P.K. Anokhin showed that the feedback cycle in the body is much more complicated and involves entire zones of the brain. Following this, P.K. Anokhin tried to involve even N. Wiener in the analysis of such complex interactions. This example clearly shows how the complexity of the tasks and of the related queuing network models increases. At the same time, there is a whole series of modern anatomical and psychological studies on the transformation of short-term memory into long-term memory with the participation of the hippocampus [11,12,13,14].

To construct a mathematical model of queuing networks with a varying structure, this paper uses discrete ergodic Markov processes with continuous time. The model itself is a combination of several queuing systems. In this case, the set of states of the combined system is the union of the sets of states of the subsystems. This differs from classical queuing networks, in which the set of states of the system is the direct product of the sets of states of the combined systems [15,16]. The transient intensities between the states of different systems may be considered as a control that defines the protocol by which the system functions. The stationary distribution of the combined system then becomes a probabilistic mixture of the stationary distributions of the subsystems.

A second, very important computational problem, closely connected with the first both technically and conceptually, is to analyse a method for controlling a single-channel queuing system with failures so that it acquires properties reflecting patterns of real processes that are of interest in applications. It is noteworthy that, for these systems to be suitable for repeated use, they must have a sufficiently smooth distribution over the set of states. The attention paid to the uniform distribution is explained by the fact that it gives no priority to any individual state. To this end, by analogy with statistical mechanics [17,18], a queuing system with a uniform distribution over the set of states is designed here.

The construction of a queuing system with a uniform stationary distribution is based on a graph of transient intensities in which, at each node, the sum of the input intensities equals the sum of the output intensities. In the paper, such a network is constructed from a deterministic transport network (see, for example, [19,20]) by introducing an additional transient intensity from the final vertex to the initial one, so that the intensity of the flow along it coincides with the total flow in the bipolar. Such queuing systems are a special case of stochastic clearing systems, which accept and accumulate random inputs over random time intervals until pre-defined criteria are met. Then some or all of these inputs are instantly cleared (see, for example, [21,22,23,24]).

An alternative way to solve this problem in the paper is to use the product theorems [15,16]. To construct such a queuing system, we use the product theorem for a connected graph of transient intensities consisting of triangles/pyramids with disjoint edges [25], which is a generalization of the classical Jackson product theorem with a finite number of states and with unit load factors in all nodes.

1. Materials and Methods

In this paper, the ergodicity theorem is used for discrete Markov processes with a countable set of states. The main condition of this theorem is the existence of a limiting distribution satisfying the Kolmogorov-Chapman equalities. The next tool is the product theorem for an open (Jackson [15]) or closed (Gordon-Newell [16]) queuing network. The main results of these theorems used later in the article are the formulas for calculating the limit distributions, which involve products of powers of the load factors at the individual network nodes. The article also uses the concept of a deterministic transportation network [19,20] to design a queuing network with a uniform stationary distribution.

1.1. Ergodicity Theorem

The following ergodicity theorem for a discrete Markov process with continuous time is proved (see, for example, [26,27], Theorems 2.4, 2.5).

Theorem 1. Suppose that a homogeneous Markov process with a discrete and counting set of states , with transient intensities satisfies the conditions

has at least one solution such that ,

(B)all process states are communicating, i.e.

(C)the regularity condition is met, i.e.

Then the process is ergodic, its stationary distribution coincides with the limit (ergodic) and is uniquely determined from the system (1) and the normalization conditions.

1.2. Product Theorems

An open Jackson network

G is a network with an intensity

of Poisson input flow. It consists of

m single-server queuing systems with service intensities

Between these nodes customers are circulating. The dynamics of the customers motion in the network is set by the route matrix

where

are probabilities of transition after service at node

i to node

j,

, node number 0 is an external source. It is assumed that the route matrix is indecomposable, i.e.

Then the vector

is the only solution to the system

The functioning of the entire network (the number of customers in service centres) is described by a discrete Markov process

with multiple states

and non-zero transient intensities (

):

Here

is a

m-dimensional vector whose

k-th component is

and the rest are

Under these conditions, the following product theorem is proved in [15].

Theorem 2.

If then Markov process is ergodic and its stationary distribution satisfies the formula
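As a hedged illustration of the product form in Theorem 2, the sketch below solves the traffic equations by fixed-point iteration and evaluates the product of geometric factors. The notation is an assumption and is not taken from the source: `lam0` is the input intensity, `theta0` and `theta` are the routing probabilities from the external source and between nodes, `mu` holds the service intensities, and the two-node network is hypothetical.

```python
def traffic_rates(lam0, theta0, theta, iters=10_000, tol=1e-13):
    """Solve lam_j = lam0*theta0[j] + sum_i lam_i*theta[i][j] by fixed-point iteration."""
    m = len(theta0)
    lam = [0.0] * m
    for _ in range(iters):
        new = [lam0 * theta0[j] + sum(lam[i] * theta[i][j] for i in range(m))
               for j in range(m)]
        if max(abs(a - b) for a, b in zip(new, lam)) < tol:
            return new
        lam = new
    raise RuntimeError("traffic equations did not converge")


def product_form(n, rho):
    """Stationary probability of state n = (n_1, ..., n_m) when every rho_k < 1."""
    p = 1.0
    for nk, rk in zip(n, rho):
        p *= (1.0 - rk) * rk ** nk
    return p


# Hypothetical two-node network: arrivals enter node 1, half of the customers
# served at node 1 move to node 2, and node 2 sends 25% back to node 1.
lam0 = 1.0
theta0 = [1.0, 0.0]                 # routing from the external source
theta = [[0.0, 0.5], [0.25, 0.0]]   # routing between nodes; the remainder leaves
mu = [2.0, 1.5]                     # service intensities

lam = traffic_rates(lam0, theta0, theta)
rho = [l / s for l, s in zip(lam, mu)]      # load factors of the nodes
print(rho, product_form((2, 1), rho))
```

Fixed-point iteration is used here only to keep the sketch free of external libraries; any linear solver applied to the same equations would serve equally well.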

Consider now closed queuing network

with states set

where and all

are integers. Here

K is the total number of customers in the network

. Then the following product theorem is proved in [

16].

Theorem 3.

Assume that the network is described by discrete Markov process with states set and transition intensities . If route matrix of the set satisfies the condition

then appropriate system of motion equations [16]

has an infinite number of solutions with non-negative components, all lying on a single half-line. The Markov process is ergodic and its stationary distribution is calculated by the formula

2. Results

The main results of the paper are presented in two subsections. The first subsection contains the procedure for switching between queuing subsystems/networks using additional transient intensities. It is proved that for such systems with switching between subsystems, the stationary distribution is a probabilistic mixture of the stationary distributions of the switched subsystems. The second subsection is devoted to the construction of queuing systems with a uniform stationary distribution. In this subsection, the two-pole construction is used as a graph of transient intensities, with a transient intensity introduced from the output vertex to the input one. In addition, open networks with a finite number of states and with unit load factors in the nodes are designed as queuing networks with a uniform stationary distribution.

2.1. Queuing Networks with Varying Structure

Using ergodicity Theorem 1, limit relations are constructed in this section for stationary distributions of discrete Markov processes with continuous time. They are used to calculate the marginal distributions of exponential queuing networks operating in a random environment. These are networks whose structure (the set of operating nodes, the service and input flow intensities, the route matrix, the number of states, the transitions between nodes) or type (open or closed network) changes when some state of the system reaches a certain value. Based on the proved theorem and the choice of the functioning scheme for the models under consideration, formulas for calculating their marginal distributions are obtained and additional computational algorithms are constructed. These results are illustrated by Example 1, which describes switching between two different Markov processes.

2.1.1. Basic Theorems on Stationary Distributions of Markov Processes with Connecting Transient Intensities

Consider

m discrete, homogeneous and irreducible (and therefore ergodic) Markov processes

with sets of states

with transient intensities

and stationary probabilities

From the stationary Kolmogorov-Chapman equations, the equalities follow

Theorem 4.

Suppose that additional transient intensities are introduced between some states of processes satisfying for some conditions

Then the process with a set of states and transient intensities

is also ergodic and its stationary distribution π has the form

Proof. The proof of this theorem is based on the ergodicity theorem 1 and the stationary Kolmogorov-Chapman equations for the distribution of

following from the formulas (8), (9).

Corollary 1.

Let the numbers satisfy the conditions

and the numbers satisfy the equalities

Then the equalities imply the equalities (9). The equalities (12) allow satisfying the conditions (11), to determine the transient intensities For us, the most interesting case will be when the equalities are fulfilled.

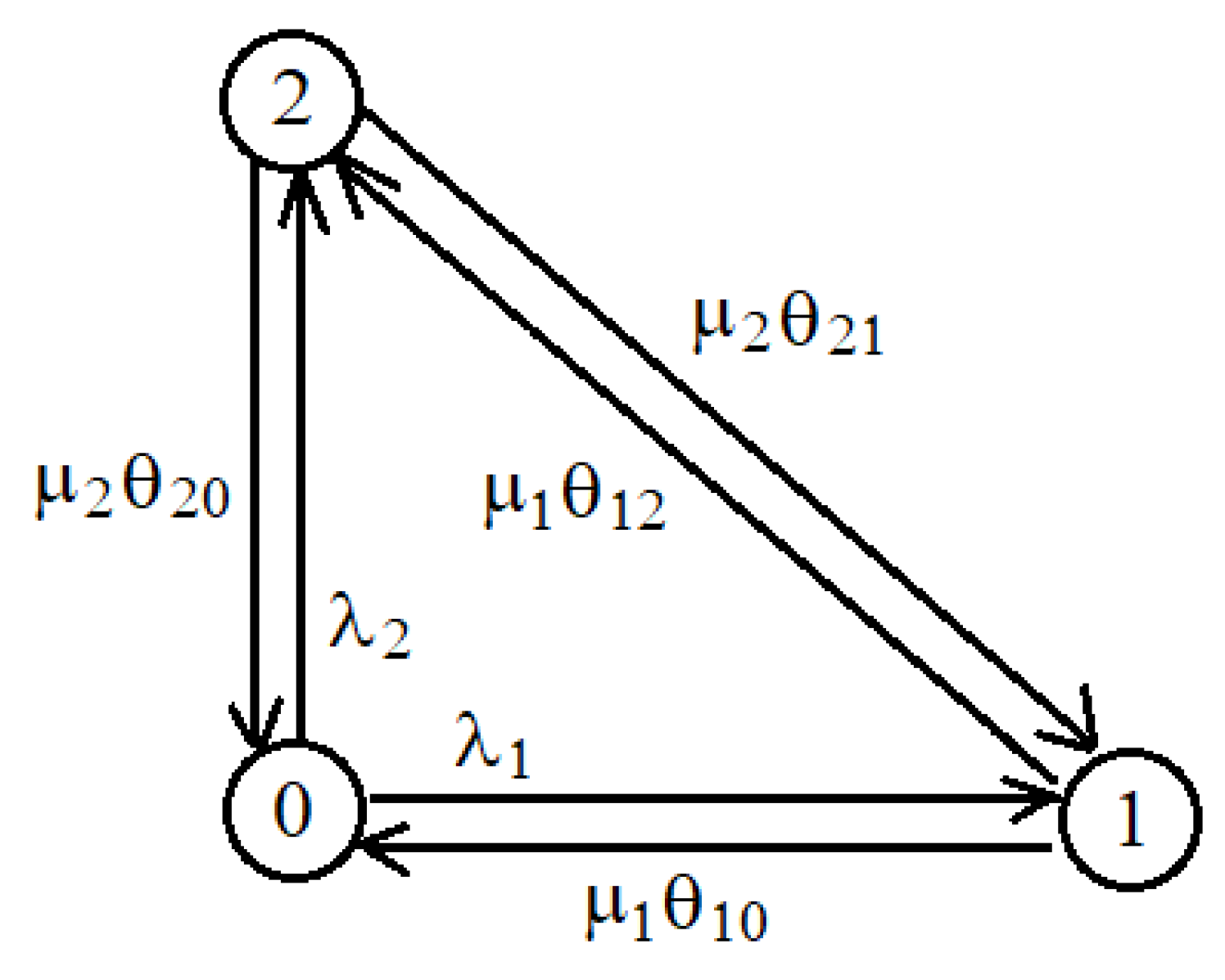

Example 1.

Suppose that the discrete Markov processes considered in Theorem 2 describe the number of customers in single-server queuing systems with Poisson input flows with intensities and with service intensities Then discrete Markov processes describing the numbers of customers in first and second queuing systems have marginal distributions Select the states select and determine the intensities of transitions from the conditions (9):

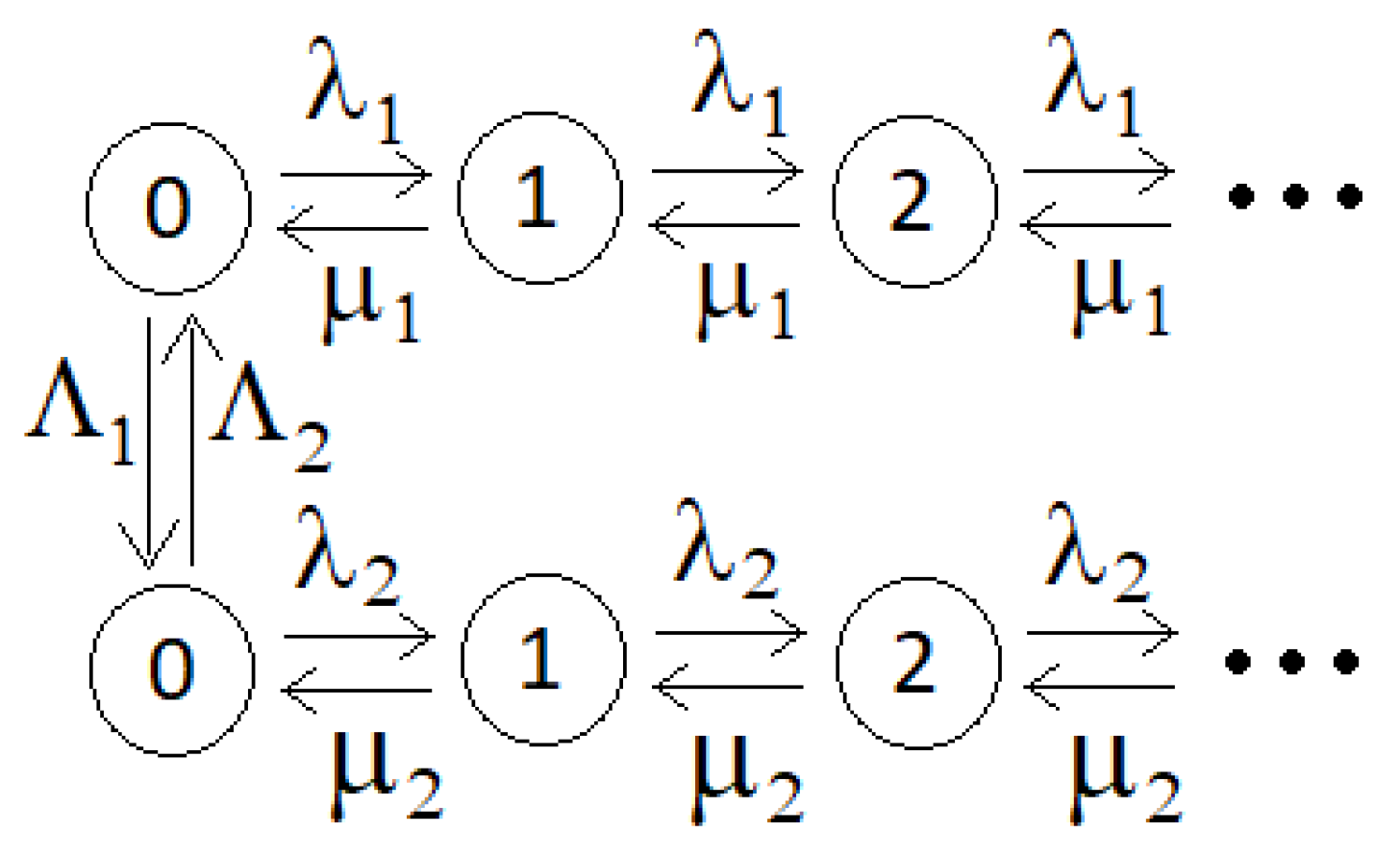

For this example, the positive intensities of transitions between states are described by Figure 1.

Figure 1. Transient intensities in the queuing system from Example 1.

Assume that then and so we may take, for example,

Remark 1. Markov processes may describe open/closed queuing networks. For such networks, Jackson’s/Gordon’s theorems allow to calculate both the distributions and their values at points and to determine the transition intensities between using the selected If the distributions correspond open networks, then it is convenient to choose zero vectors as

Remark 2. Analogous but more complicated models of switching from one Markov process to another Markov process (or from on to off periods of input flow) are considered in [28].
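The mixture property of Theorem 4 can be checked numerically. The sketch below is only an illustration under stated assumptions: the two subsystems are M/M/1 queues truncated to finitely many states (the source uses unbounded queues), the names `a1`, `a2`, `g12`, `g21` are hypothetical, and the connecting intensities are chosen so that the probability flow between the two linked states balances under the mixture, which is our reading of the conditions of the theorem.

```python
def mm1_finite(lam, mu, size, tag):
    """Rates and stationary law of an M/M/1 queue truncated to `size` states."""
    rates = {}
    for i in range(size - 1):
        rates[((tag, i), (tag, i + 1))] = lam   # arrival
        rates[((tag, i + 1), (tag, i))] = mu    # service completion
    rho = lam / mu
    weights = [rho ** i for i in range(size)]
    total = sum(weights)
    pi = {(tag, i): w / total for i, w in enumerate(weights)}
    return rates, pi


def balance_residual(rates, pi):
    """Largest |inflow - outflow| over all states for a candidate distribution."""
    net = {s: 0.0 for s in pi}
    for (src, dst), r in rates.items():
        net[src] -= pi[src] * r
        net[dst] += pi[src] * r
    return max(abs(v) for v in net.values())


rates1, pi1 = mm1_finite(lam=1.0, mu=2.0, size=4, tag="A")
rates2, pi2 = mm1_finite(lam=3.0, mu=2.0, size=5, tag="B")

a1, a2 = 0.3, 0.7                 # desired mixture weights (assumed values)
s1, s2 = ("A", 0), ("B", 2)       # states linked by the switching intensities
g12 = 0.5                         # freely chosen intensity s1 -> s2
g21 = a1 * pi1[s1] * g12 / (a2 * pi2[s2])   # balances the flow s2 -> s1

rates = {**rates1, **rates2, (s1, s2): g12, (s2, s1): g21}
mixture = {**{s: a1 * p for s, p in pi1.items()},
           **{s: a2 * p for s, p in pi2.items()}}
print(balance_residual(rates, mixture))     # close to zero
```

A residual near zero means the mixture satisfies the stationary equations of the combined process; changing `g21` without rescaling `g12` breaks the balance and the residual becomes visibly positive.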

2.2. Queuing Networks with Uniform Stationary Distributions

All networks described by a Markov process with a uniform stationary distribution are constructed using the following statement.

2.2.1. Main Balance Theorem

Theorem 5.

Suppose that a discrete Markov process with a finite set of states and with transient intensities satisfies the following condition of states connectivity. For so that

and for any , the equalities are fulfilled

Then the Markov process is ergodic and its stationary distribution is uniform on the set of states

Proof. Indeed, the relations (13), (14) and Theorem 1 imply ergodicity (and hence the existence of a unique stationary distribution) for the discrete Markov process [26,27]. Moreover, it follows from the equalities (14) that the uniform distribution satisfies the stationary Kolmogorov-Chapman equations.
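The last step of the proof can be written out explicitly. The notation below is assumed for illustration only and may differ from the symbols of the source: $S$ is the finite set of states, $n = |S|$, and $\lambda_{ij}$ is the transient intensity from state $i$ to state $j$.

```latex
% Stationary Kolmogorov-Chapman equations for a distribution (\pi_i):
\sum_{j \in S,\; j \neq i} \pi_j \lambda_{ji}
  \;=\; \pi_i \sum_{j \in S,\; j \neq i} \lambda_{ij},
  \qquad i \in S.

% Substituting the uniform distribution \pi_i = 1/n and cancelling 1/n:
\frac{1}{n} \sum_{j \neq i} \lambda_{ji} = \frac{1}{n} \sum_{j \neq i} \lambda_{ij}
  \quad\Longleftrightarrow\quad
  \sum_{j \neq i} \lambda_{ji} = \sum_{j \neq i} \lambda_{ij},
  \qquad i \in S.
```

So the uniform distribution is stationary exactly when, at every state, the total incoming intensity equals the total outgoing intensity, which is the balance condition of the theorem.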

Example 2.

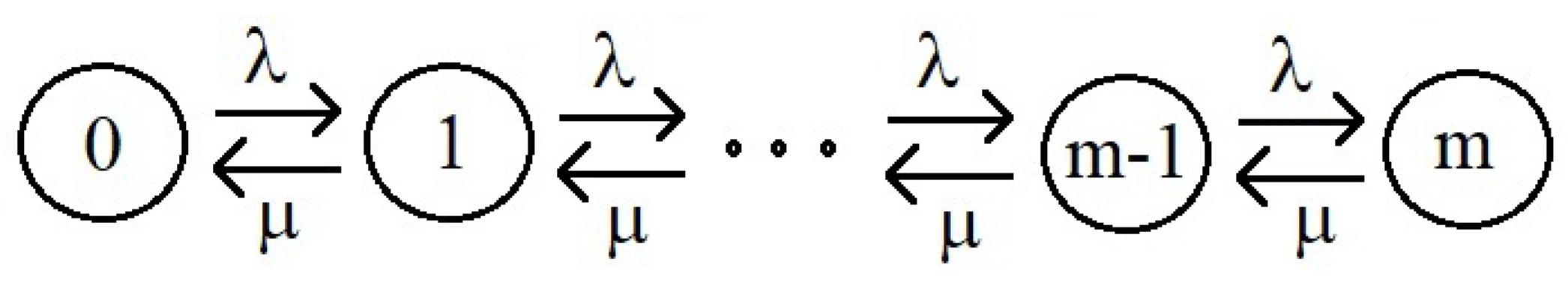

Consider a model of a single-server queuing system with failures when the number of customers exceeds the upper limit We describe this queuing system by a discrete Markov process with states characterizing the number of customers in the system. Suppose that the Poisson input flow to this queuing system has an intensity and the service intensity is Then the transition intensities between the states of the system have the form (Figure 2)

Figure 2. Transient intensities in a system with failures .

Let’s denote the system load factor, then the stationary probability of finding the system in the state i satisfies the equalities

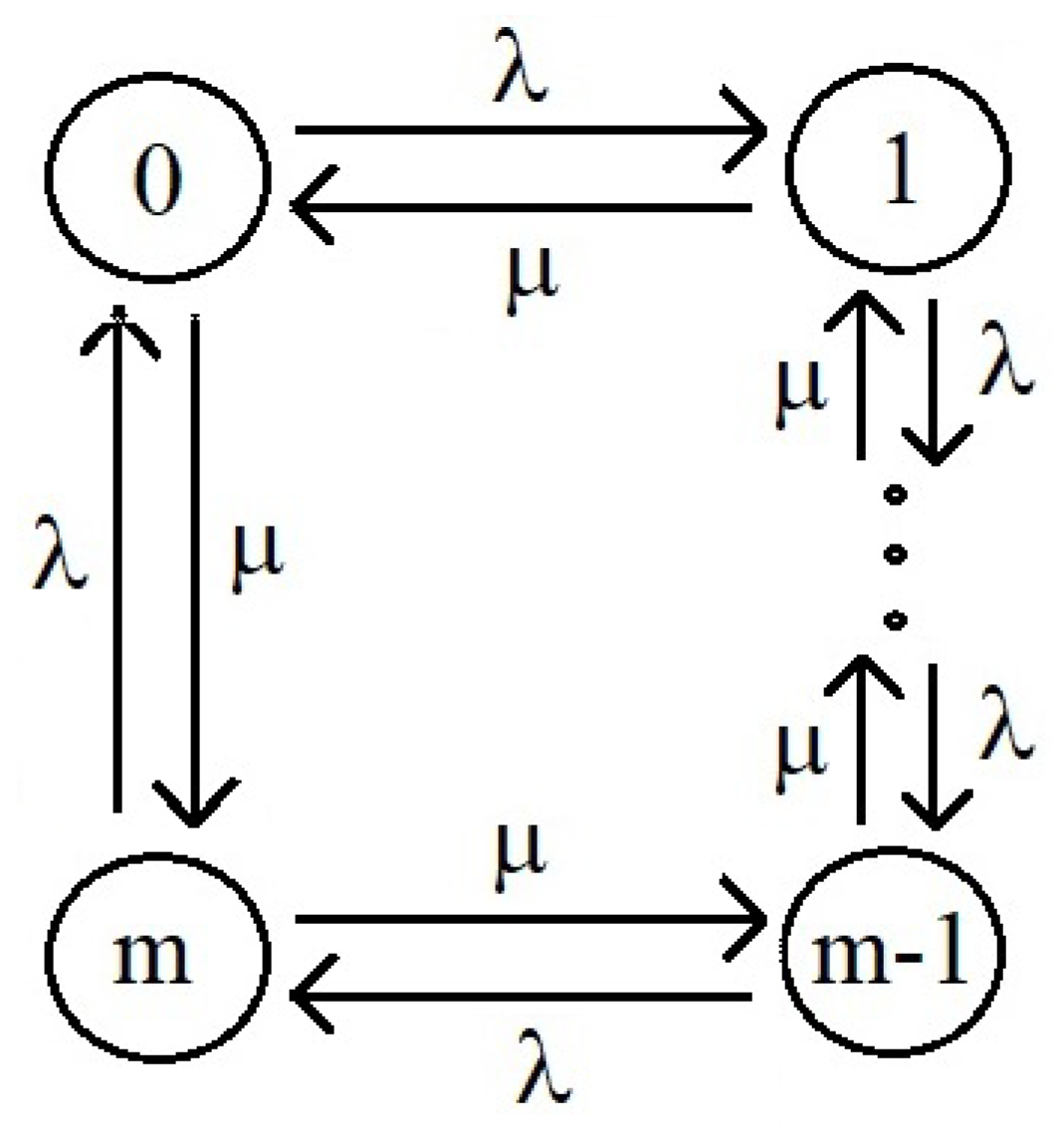

Now let’s transform the system with failures into a system with a uniform distribution of stationary probabilities (Figure 3). To do this, we will introduce additional transition intensities into the system, characterizing the cleaning of the system from customers and the replenishment of the system with deleted customers

Figure 3. Transient intensities in a system with uniform stationary distribution.

An elementary calculation shows that the states form a cycle in which the sum of the intensities entering the state is equal to the sum of the intensities leaving the state (and is equal to ). It follows that the stationary probabilities of being in the states of the extended system satisfy the equality

and the stationary distribution itself is uniform. Thus, by introducing only two new transient intensities given in the formula (17), the stationary distribution (16) is transformed into a uniform stationary distribution (10). This change has a particularly strong effect for the state into which the discrete Markov process characterizing the number of customers in the system at the moment passes from the state
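The effect described in Example 2 can be reproduced numerically. The sketch below is a minimal illustration under assumptions: the parameter values are hypothetical, and since formula (17) is not reproduced here, the two added intensities are taken as `mu` from state 0 to state m and `lam` from state m to state 0, one admissible choice that equalizes incoming and outgoing intensities at every state. The stationary law is approximated by power iteration on the uniformized chain.

```python
def stationary(rates, n, steps=5000):
    """Approximate the stationary law of an n-state chain by power iteration."""
    out = [0.0] * n
    for (i, _), r in rates.items():
        out[i] += r
    big = 2.0 * max(out)             # uniformization constant; factor 2 avoids periodicity
    pi = [1.0] + [0.0] * (n - 1)     # start in state 0
    for _ in range(steps):
        new = [p * (1.0 - out[i] / big) for i, p in enumerate(pi)]
        for (i, j), r in rates.items():
            new[j] += pi[i] * r / big
        pi = new
    return pi


lam, mu, m = 1.0, 2.0, 4             # hypothetical parameters; states are 0..m

loss = {}
for i in range(m):
    loss[(i, i + 1)] = lam           # arrival accepted while fewer than m customers
    loss[(i + 1, i)] = mu            # service completion

rho = lam / mu
norm = sum(rho ** i for i in range(m + 1))
geometric = [rho ** i / norm for i in range(m + 1)]   # truncated geometric law

extended = dict(loss)
extended[(0, m)] = mu                # assumed replenishment intensity 0 -> m
extended[(m, 0)] = lam               # assumed cleaning intensity m -> 0

print(stationary(loss, m + 1))       # close to the truncated geometric law
print(stationary(extended, m + 1))   # close to the uniform law 1/(m+1)
```

With these two extra intensities every state has total incoming and outgoing intensity `lam + mu`, so the states form a cycle and the stationary law flattens to the uniform one.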

Remark 3. It should be noted that the transition intensities introduced in Figure 4 may be changed. Indeed, at the vertices of a graph depicting the states of the system and its transient intensities (Figure 4), the difference between the sum of the output intensities and the sum of the input intensities is zero. At the vertex 0, this difference is equal to and at the vertex m it is equal to Therefore, introducing between the vertices transient intensities it is possible to obtain equality to zero of the corresponding differences. Moreover, with , we can select If then it is possible to choose

Similarly, if then the transient intensities, introducing between the vertices the transient intensities it is possible with to get the corresponding differences of incoming and outgoing intensities are equal to zero. In this case we can select then it is possible to choose It should be noted that in order to weaken the connection leading to frequent switches between these states it is enough to require the condition

2.2.2. Queuing Network Formed from a Transportation Network

Let’s now consider a queuing network composed of single-server queuing systems at the nodes of some deterministic transport network [19]. Let this transport network be represented as an oriented acyclic bipolar with a set of vertices V and a set of edges E. On each edge u of the set E, the value of the flow is determined so that for each vertex (excluding the initial and final vertices) the sum of the flows entering it equals the sum of the flows leaving it. Let’s denote by Λ the amount of the flow in this deterministic transport network (the sum of the flows leaving the initial vertex, which equals the sum of the flows entering the final vertex).

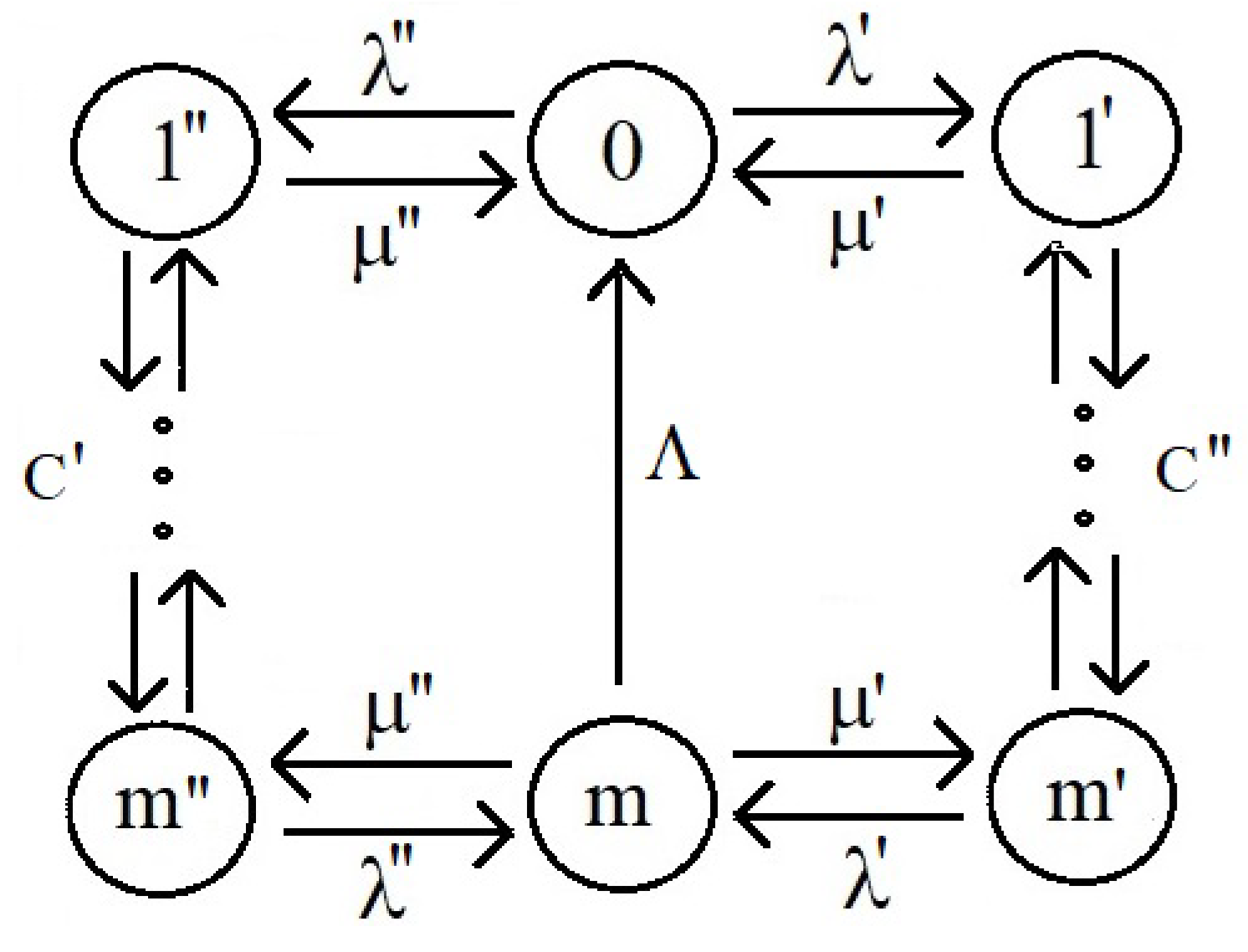

Example 3. Let us now proceed to the definition of discrete Markov process describing this network. On each edge , we define several states of the discrete Markov process indexing them Such a Markov process defines some kind of queuing network, in the nodes of which there are single-server queuing systems with failures. The states characterize the number of customers in the system located on the edge Moreover, the extreme states can also describe the number of customers in systems adjacent to this system if there is a transition of customers between these systems. Additionally, we introduce the transient intensity Λ from the terminal node of the bipolar to the initial node. Then all states of the process will be communicating, and the process will be ergodic. From the construction of the transient intensities of the process (Figure 4), it follows that the sum of the input intensities is equal to the sum of the output intensities for each state. Therefore, the stationary distribution of the so-constructed process defining a certain queuing network, is uniform.

Assume that then

Figure 4. Graph of the transient intensities of Markov process based on a deterministic transport network.

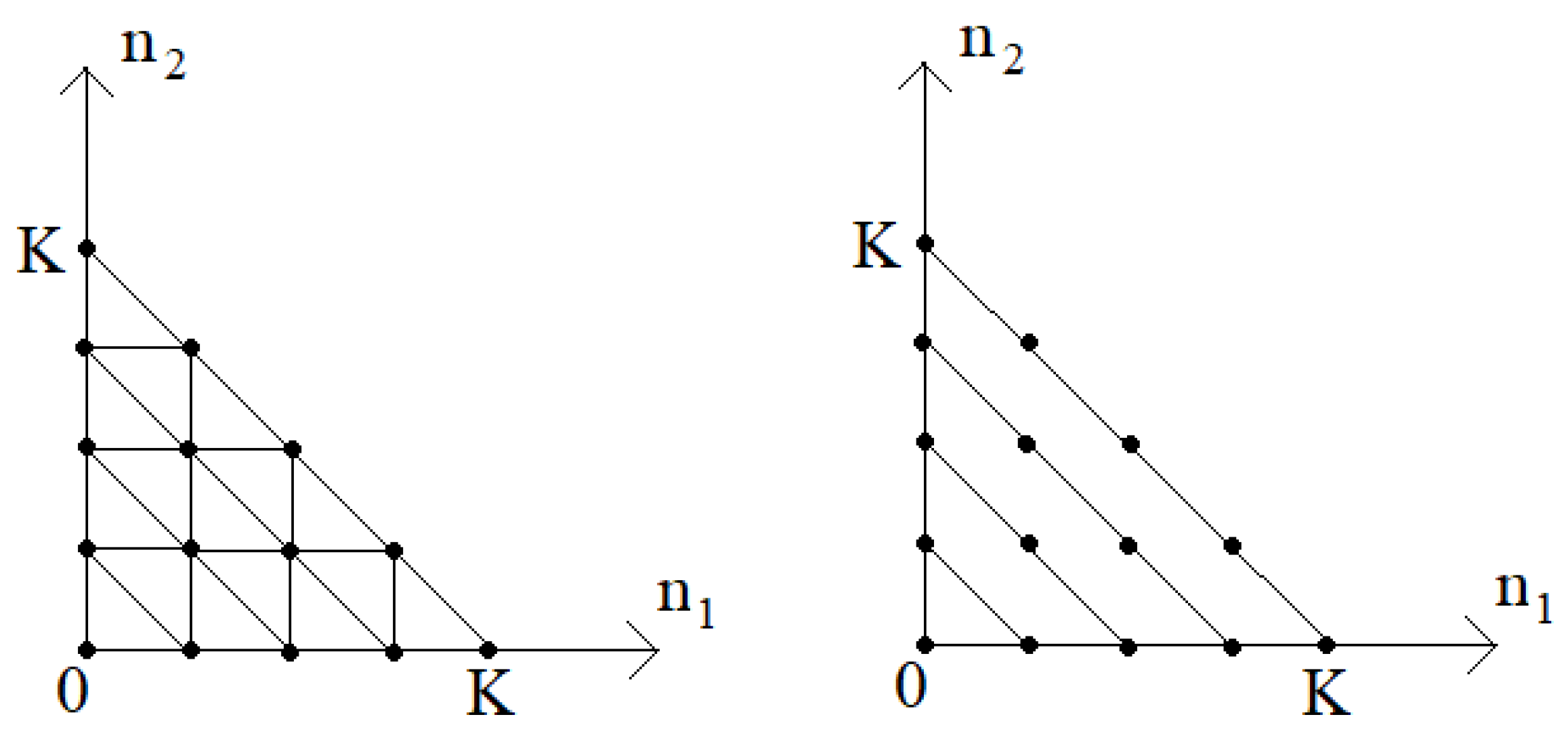
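The construction of Example 3 rests on turning a conserved flow into a circulation. The sketch below illustrates only that step, in a simplified setting that is an assumption of this example: each vertex of the transport network is treated as a single state and each edge flow as a transient intensity, whereas the source places several states on every edge. The network and the function names are hypothetical.

```python
def node_imbalance(edges):
    """Map each node to (incoming flow - outgoing flow)."""
    net = {}
    for (u, v), f in edges.items():
        net[u] = net.get(u, 0.0) - f
        net[v] = net.get(v, 0.0) + f
    return net


def close_into_circulation(edges, source, sink, tol=1e-12):
    """Check flow conservation, then add the return edge sink -> source."""
    net = node_imbalance(edges)
    for node, value in net.items():
        if node not in (source, sink) and abs(value) > tol:
            raise ValueError(f"flow is not conserved at node {node!r}")
    total = net[sink]                  # total flow reaching the final vertex
    closed = dict(edges)
    closed[(sink, source)] = total     # return intensity carrying the total flow
    return closed, total


# Hypothetical two-terminal network with flows on its directed edges.
flows = {("s", "a"): 2.0, ("s", "b"): 1.0, ("a", "b"): 1.0,
         ("a", "t"): 1.0, ("b", "t"): 2.0}

closed, total = close_into_circulation(flows, "s", "t")
worst = max(abs(v) for v in node_imbalance(closed).values())
print(total, worst)
```

After the return edge is added, incoming and outgoing intensities agree at every node, which is the balance condition under which the stationary distribution is uniform.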

2.3. Some Generalizations of Product Theorems

For an open Jackson network, the graph of transient intensities consists of a set of triangles (pyramids for ) filling the entire first quadrant. In Figure 5, the graph consists of a single triangle. This subsection presents more complex graphs of transient intensities for queuing networks with a limited number of customers at different nodes.

Figure 5. Transient intensities in the simplest connected graph illustrating Formulas (2), (3).

In what follows, we will use the generalization of the relations (2), (3) for the case when for

vectors (Figure 1)

create the connected graph

As examples of the set

it is possible to take

2.3.1. Main Product Theorem

Then the graph

contains a finite number of vertices (which we will also denote

) and a finite number of edges. The presence of the edge

in the graph

characterizes permission for a customer to enter node

k from the outside (from node 0). And the absence of the edge

in the graph

means that the customer received by the node

k from the outside leaves the network. The presence of the edge

in the graph

means permission to complete the customer service in node

and the absence of the edge

in the graph

is a ban on such a procedure. The presence of the edge

in the graph

means permission to complete the service of the customer in the node

i, followed by a transition to the

k node and to complete the customer service at the

k node, followed by a transition to the

i node. On the contrary, the absence of the edge

in the graph

means a ban on the corresponding transitions (in both directions) (see, for example Figure 1). Such permissions and prohibitions define the protocol of the

G network. Then, using the product theorem for open queuing networks [15], the following product theorem is proved in [25]. In this subsection, the number of vertices of the corresponding graphs determines the parameter of a uniform stationary distribution.

Theorem 6.

The Markov process defined by such a graph Γ is ergodic and its stationary distribution [25] is calculated by the formula

In Theorem 2 graph

is connected and consists of triangles (or pyramids). Without this assumption, the product formula for the stationary distribution cannot be proved. Theorem 2 is proved by checking the Kolmogorov-Chapman equalities under the conditions of formula (4) for a single triangle/pyramid. Then these triangles are glued together by their vertices (not by edges), and the obtained equalities then hold for the entire network.

Corollary 2. If then the limit distribution is uniform. The main parameter of the uniform distribution here is the number of states.

2.3.2. Closed Queuing Network

From Theorem 3, using ergodicity theorems for Markov chains with a finite number of states [26], it is not difficult to prove the following statement. All solutions of the system of linear algebraic equations (6) consisting of positive components are representable as

where

and there is a (unique) vector

satisfying the equality

Then, using product Theorem 3 for closed queuing networks with single-server nodes [16], it is not difficult to establish that when the equalities

limit distribution

of the Markov process characterizing the number of customers in the network nodes is uniform. Moreover, the uniform stationary distribution in this network has the number

of states. Here

is the number of solutions of the Diophantine equation

in non-negative integers or the number of placements of K indistinguishable particles over m distinguishable cells [29,30] equal to
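The number of placements of K indistinguishable particles over m distinguishable cells is the standard stars-and-bars count, the binomial coefficient C(K + m - 1, m - 1). The sketch below cross-checks this count by brute-force enumeration for small cases; the function names and the sample sizes are hypothetical.

```python
from itertools import product
from math import comb


def closed_network_states(m, K):
    """Stars-and-bars count of vectors (n_1, ..., n_m) >= 0 with sum K."""
    return comb(K + m - 1, m - 1)


def enumerate_states(m, K):
    """Brute-force list of the same vectors, for cross-checking small cases."""
    return [n for n in product(range(K + 1), repeat=m) if sum(n) == K]


m, K = 3, 4                      # hypothetical sizes: 3 nodes, 4 customers
states = enumerate_states(m, K)
print(len(states), closed_network_states(m, K), 1 / len(states))
```

The last printed value is the probability of each state when the stationary distribution on this set is uniform.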

2.4. Open Queuing Networks with Finite Numbers of Customers

In this section, we assume that which ensures a uniform stationary distribution.

Example 4. Let’s consider an open network with a total number of customers of no more than described in the Theorem 3. The graph characterizing the transient intensities of this network is shown in Figure 5 on the left.

Figure 5. Example of a graph defining a network with a total number of customers of no more than

The set of vertices of this graph (Figure 5, on the right)

can be represented as a union of disjoint subsets where

Then from Formula (20) we find the number of vertices in the set

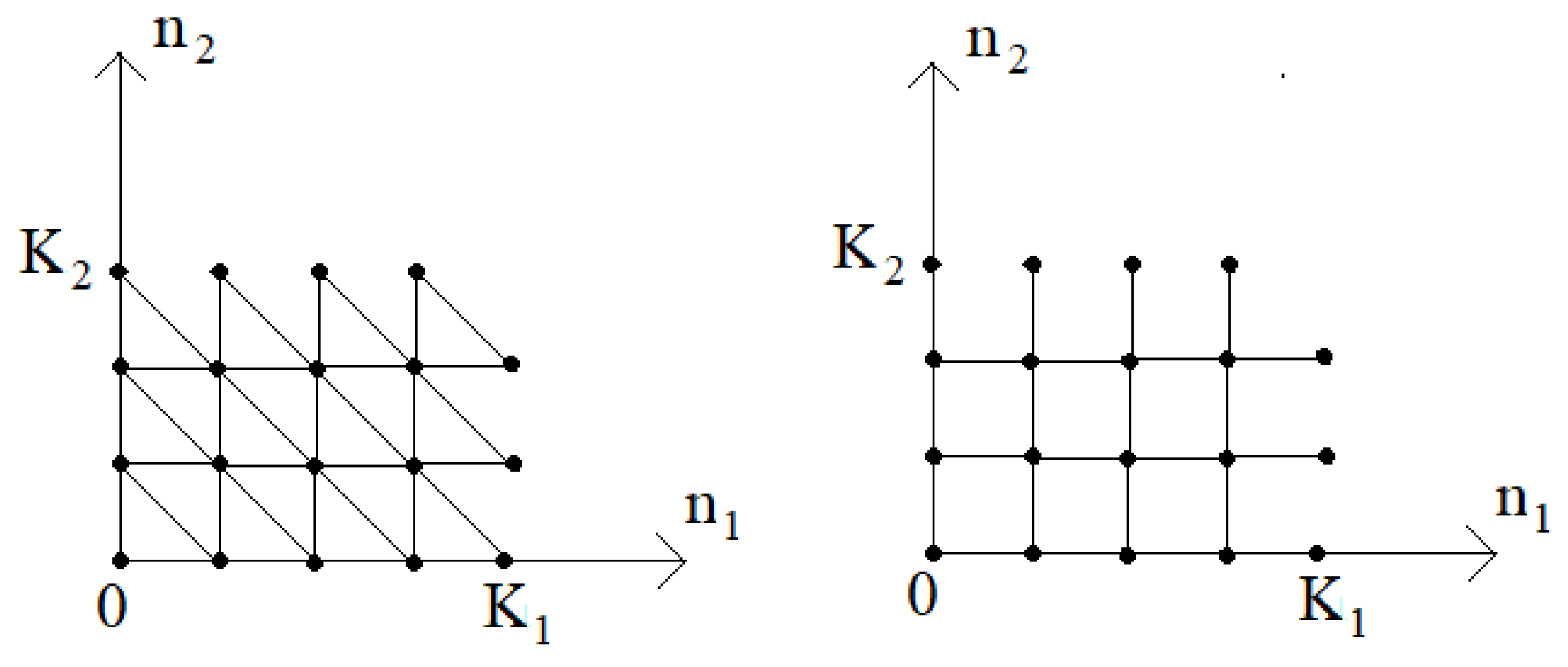

Let’s now turn to the consideration of an open network (Figure 6) with a limited number of customers, characterized by the condition

Example 5.

The graph (Figure 6 on the left) has a set of network states (Figure 6 on the right) which may be represented as a union of disjoint subsets where

Figure 6. Example of graph defining network with the number of customers

It follows that the number M of vertices in the set satisfies the equality

Corollary 3. Suppose that the graph Γ is defined by a set of vertices Replace in each triangle/pyramid defined for (see Figure 5) by Then, with such a replacement, the statement of Theorem 6 and Corollary 2 will remain valid. Then parameters may be considered as queuing network management.

3. Discussion

The number of examples in the first model can be significantly expanded using well-known formulas for marginal distributions in various queuing systems in which the number of customers is described by a birth-and-death process [26]. Similarly, random processes describing the number of customers in the nodes of an open queuing network [15] or a closed queuing network [16], whose limit distributions obey product theorems, can also be used for this purpose.

The design of the managed queuing network given in the second model allows for numerous generalizations based on the model of a deterministic transport network with single input and output nodes [19,20]. By determining the total flow to the output node in a deterministic network and building an edge from the output node to the input node with this flow, it is possible to obtain a system with a uniform stationary distribution. To determine the number of states of a Markov process with a uniform stationary distribution, it is necessary to use known combinatorial results obtained, in particular, by solving Diophantine equations.

4. Conclusions

This article presents two models of managed queuing systems with a variable structure. The first model is based on a combination of several well-known models with switching between them. The second model is based on the deterministic transport network model and uses the introduction of feedback in a queuing system with failures and with a single server. Stationary distributions are calculated for both models. In the first model, this distribution may be represented as a mixture of stationary distributions with given weights and a fixed number of customers. For the second model, this is a uniform distribution. The article calculates the number of states of a discrete Markov process describing queuing networks with uniform stationary distributions.

It is planned to expand the set of models of queuing networks with a variable structure (subsection 2.1) and consider models of networks with failures and server recovery. It is also planned to additionally consider models of queuing networks built using deterministic transport networks (example 5). Referring to fundamental examples in which new continuations are discovered helps to see what new possibilities arise when considering systems with a changing structure or systems with a uniform stationary distribution.

The research was carried out within the state assignment for IAM FEB RAS (N 075-00459-24-00).

Funding

This research received no external funding.

Acknowledgments

The author thanks Y.N. Kharchenko for his help in interpreting some results of statistical physics.

Conflicts of Interest

The author declares no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Grimmett, G. Probability on Graphs, Second Edition, Volume 8 of the IMS Textbooks Series; Cambridge University Press: Cambridge, UK, 2018.

- Van der Hofstad, R. Random Graphs and Complex Networks. Volume 1. Cambridge Series in Statistical and Probabilistic Mathematics; Cambridge University Press: Cambridge, UK, 2017.

- Bet, G.; van der Hofstad, R.; van Leeuwaarden, J.S. Big jobs arrive early: From critical queues to random graphs. Stochastic Syst. 2020, 10, 310–334.

- Kriz, P.; Szala, L. The Combined Estimator for Stochastic Equations on Graphs with Fractional Noise. Mathematics 2020, 8.

- Overton, Ch.E.; Broom, M.; Hadjichrysanthou, Ch.; Sharkey, K.J. Methods for approximating stochastic evolutionary dynamics on graphs. J. Theor. Biol. 2019, 468, 45–59.

- Legros, B.; Fransoo, J.C. Admission and pricing optimization of on-street parking with delivery bays. European Journal of Operational Research 2024, 312 (1), 138–149.

- Ning, R.; Wang, X.; Zhao, X.; Li, Z. Joint optimization of preventive maintenance and triggering mechanism for k-out-of-n: F systems with protective devices based on periodic inspection. Reliability Engineering and System Safety 2024, 251, 110396.

- Feng, H.; Zhang, Sh.H.; Zhang, Y. Managing production-inventory-maintenance systems with condition monitoring. European Journal of Operational Research 2023, 310 (2), 698–711.

- Anokhin, P.K. Ideas and facts in the development of the theory of functional systems. Psychological Journal 1984, 5, 107–118. (In Russian).

- Redko, V.G.; Prokhorov, D.V.; Burtsev, M.S. Theory of Functional Systems, Adaptive Critics and Neural Networks. Proceedings of IJCNN 2004, 1787–1792.

- Vartanov, A.V.; Kozlovsky, S.A.; et al. Human memory and anatomical features of the hippocampus. Vestn. Moscow. Univ. Ser. 14. Psychology 2009, 4, 3–16. (In Russian).

- Lye, T.C.; Grayson, D.A.; et al. Predicting memory performance in normal ageing using different measures of hippocampal size. Neuroradiology 2006, 48 (2), 90–99.

- Lupien, S.J.; Evans, A.; et al. Hippocampal volume is as variable in young as in older adults: Implications for the notion of hippocampal atrophy in humans. Neuroimage 2007, 34 (2), 479–485.

- Squire, L.R. The legacy of patient H.M. for neuroscience. Neuron 2009, 61 (1), 6–9.

- Jackson, J.R. Networks of Waiting Lines. Oper. Res. 1957, 5, 518–521.

- Gordon, W.J.; Newell, G.F. Closed Queuing Systems with Exponential Servers. Oper. Res. 1967, 15 (2), 254–265.

- Pathria, R.K.; Beale, P.D. Statistical Mechanics, 3rd edition; Elsevier, 2011.

- Gyenis, B. Maxwell and the normal distribution: A coloured story of probability, independence, and tendency toward equilibrium. Studies in History and Philosophy of Science. Part B: Studies in History and Philosophy of Modern Physics 2017, 57, 53–65.

- Ford, L.R.; Fulkerson, D.R. Maximal flow through a network. Canadian Journal of Mathematics 1956, 8, 399–404.

- Cormen, Th.H.; Leiserson, Ch.E.; Rivest, R.L.; Stein, Cl. Algorithms: Introduction to Second Edition. The MIT Press Cambridge. Cambridge, UK, 1990.

- Ghosh, S.; Hassin, R. Inefficiency in stochastic queueing systems with strategic customers. European J. Oper. Res. 2021, 295 (1), 1–11.

- He, Q.M.; Bookbinder, J.H.; Cai, Q. Optimal policies for stochastic clearing systems with time-dependent delay penalties. Naval Res. Logist. 2020, 67 (7), 487–502.

- Kim, B.K.; Lee, D.H. The M/G/1 queue with disasters and working breakdowns. Appl. Math. Model. 2014, 38 (5-6), 1788–1798.

- Missbauer, H.; Stolletz, R.; Schneckenreither, M. Order release optimisation for time-dependent and stochastic manufacturing systems. Int. J. Prod. Res. 2023, 62 (7), 2415–2434.

- Tsitsiashvili, G.Sh.; Osipova, M.A. New multiplicative theorems for queuing networks. Problems of Information Transmission 2005, 41 (2), 111–122. (In Russian).

- Ivchenko, G.I.; Kashtanov, V.A.; Kovalenko, I.N. Theory of Queuing: A Textbook for Universities; Higher School: Moscow, Russia, 1982. (In Russian).

- Kovalenko, I.N.; Kuznetsov, N.Yu.; Shurenkov, V.M. Random Processes; Naukova Dumka: Kiev, 1983. (In Russian).

- Mikosch, T.; Resnick, S.; Rootzen, H.; Stegeman, A. Is network traffic approximated by stable Levy motion or fractional Brownian motion? Annals of Applied Probability 2002, 12, 23–68.

- Stillwell, J. Mathematics and its History, Second Edition; Springer Science and Business Media Inc, 2004.

- Vilenkin, N.Ya. Combinatorics; Nauka: Moscow, Russia, 1969. (In Russian).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).