Submitted: 06 September 2024

Posted: 09 September 2024

Abstract

Keywords:

1. Introduction

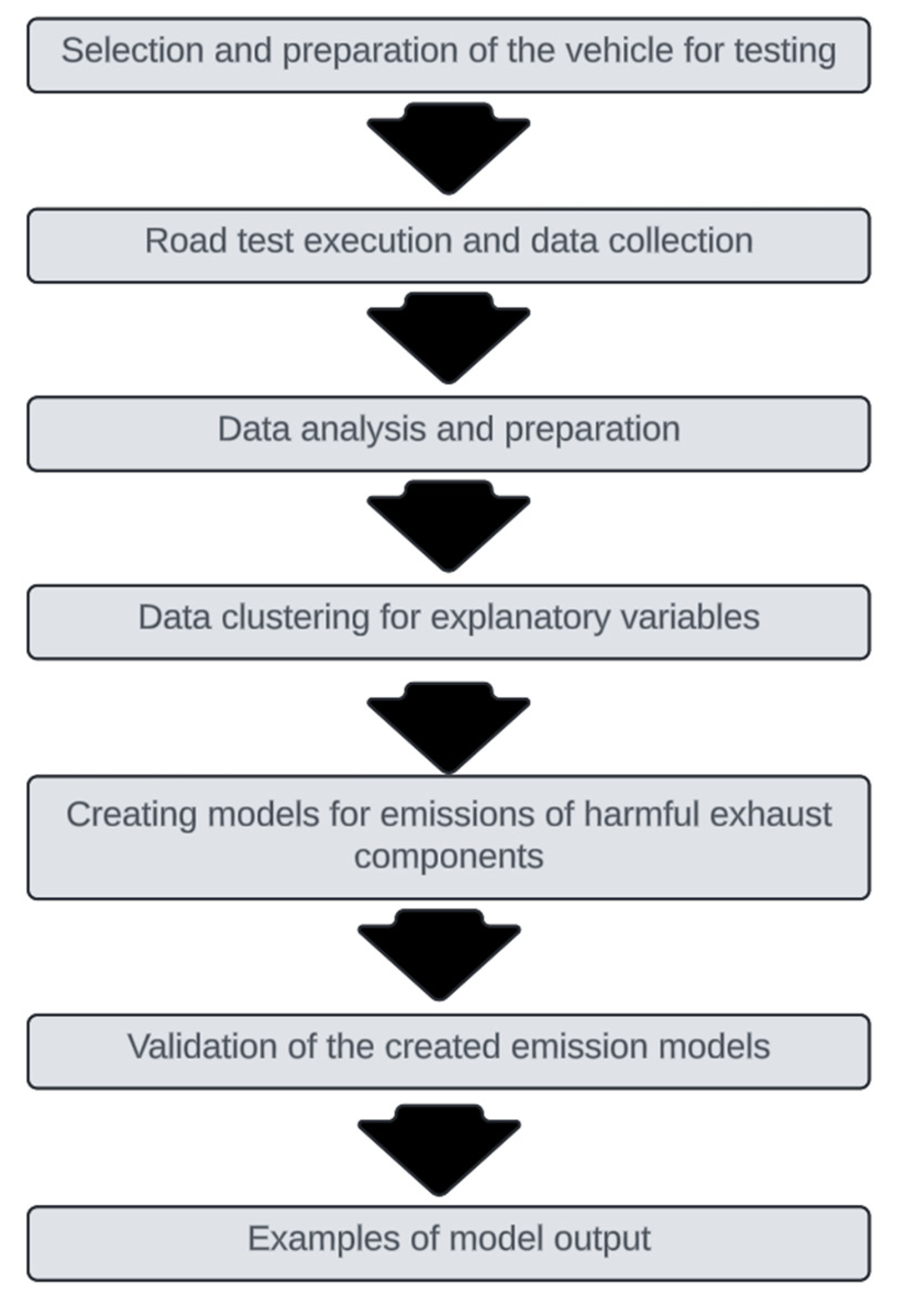

2. Methods

2.1. Research Vehicle, Route and Apparatus Used

2.2. Software Used and Data Processing

3. Results

3.1. Exhaust Emissions Results from Road Tests

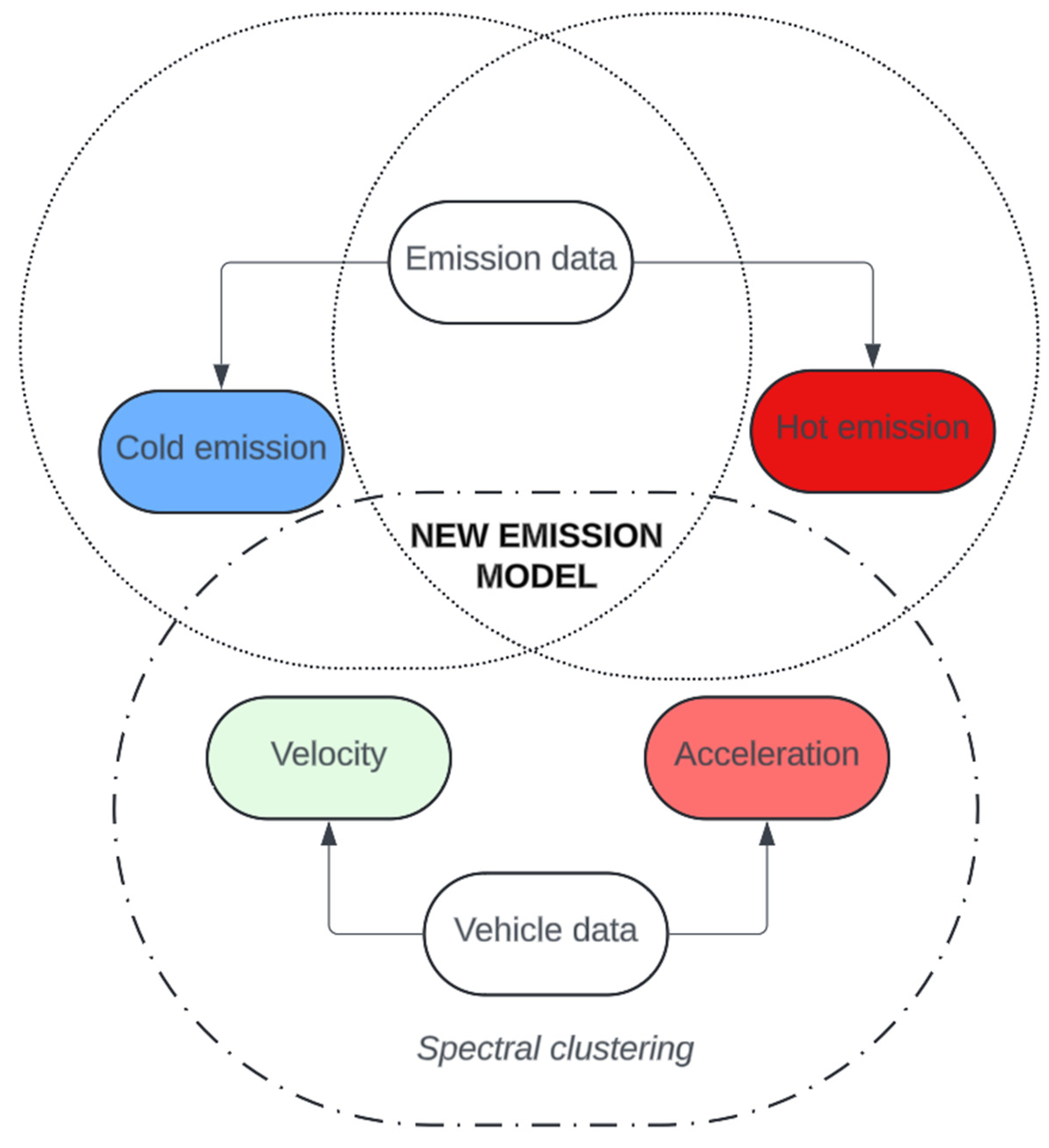

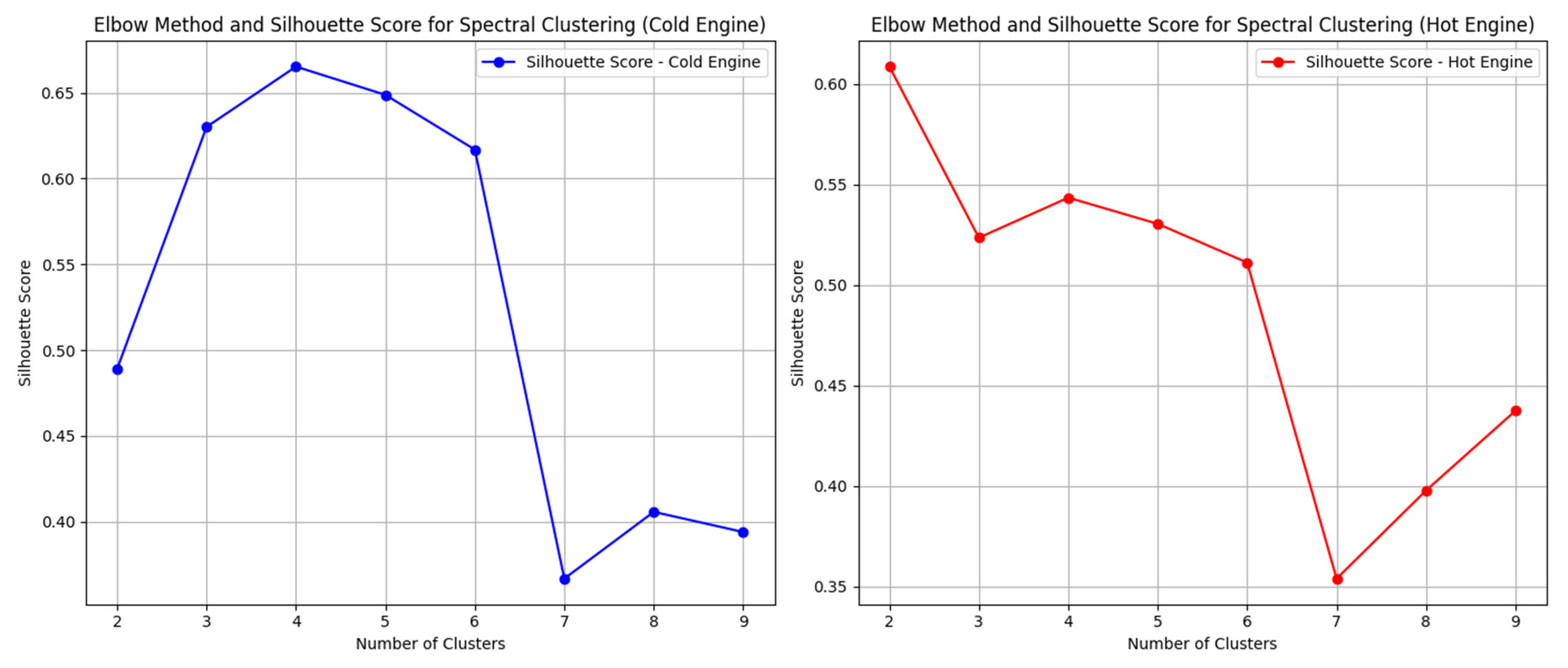

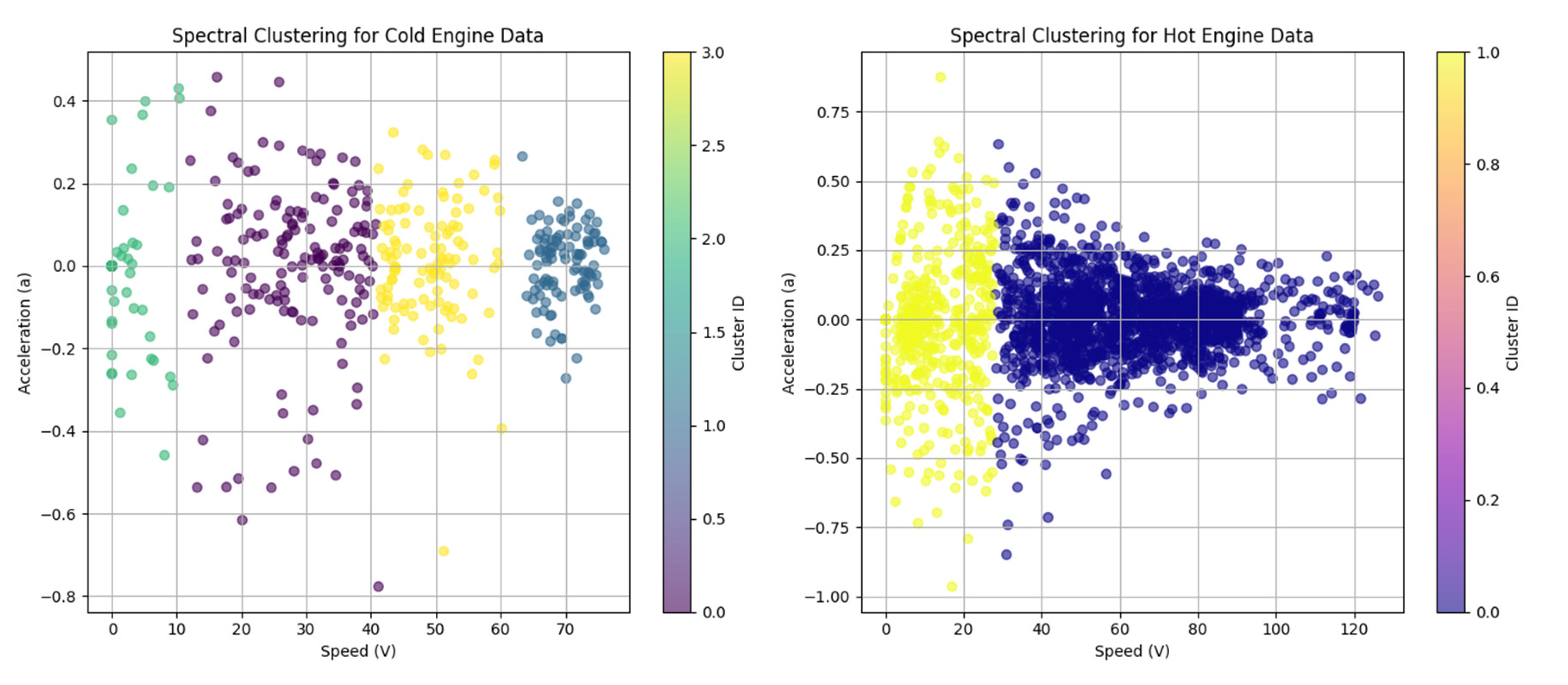

3.2. Clustering of Model Learning Inputs

3.3. Emission Modeling and Validation

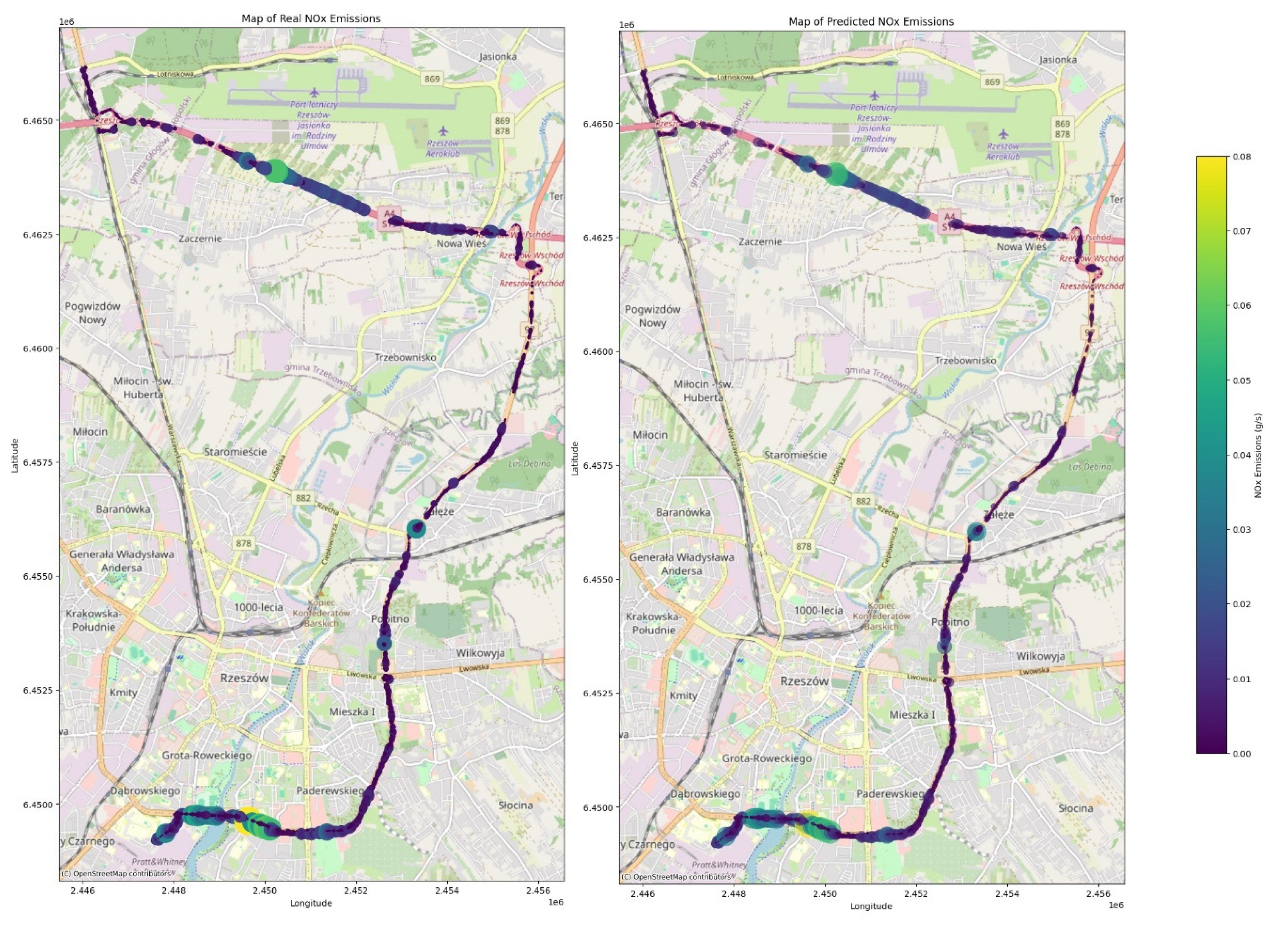

3.4. Example Use of Models

4. Discussion

5. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

| Emission Compound | Best Model | MSE | R2 |

|---|---|---|---|

| THC | Random Forest (Cold Engine) | 0.00002 | 0.74408 |

| NOx | Polynomial Regression (Cold Engine) | 0.00006 | 0.59200 |

| CO | Gradient Boosting (Cold Engine) | 0.00291 | 0.47986 |

| CO2 | Polynomial Regression (Cold Engine) | 0.00321 | 0.92200 |

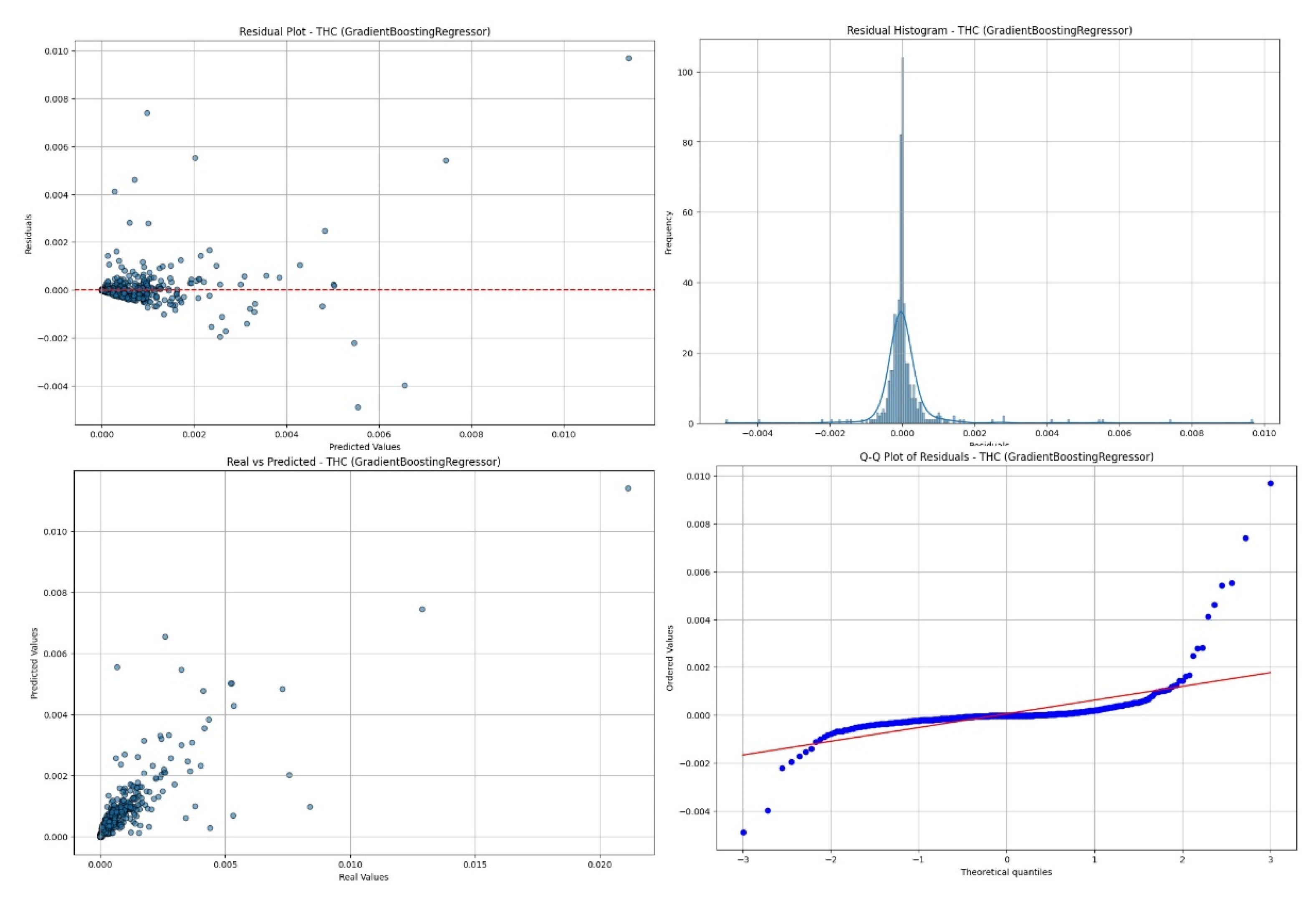

| THC | Gradient Boosting (Warm Engine) | 0.00001 | 0.65674 |

| NOx | Polynomial Regression (Warm Engine) | 0.00001 | 0.41565 |

| CO | Polynomial Regression (Warm Engine) | 0.00277 | 0.21246 |

| CO2 | Polynomial Regression (Warm Engine) | 0.00221 | 0.95100 |
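The two metrics reported in the table can be read more easily with their standard definitions in hand. The following is a minimal sketch of those textbook definitions in plain Python. It is not the authors' evaluation code, and the function names and sample values are hypothetical, chosen only for illustration.

```python
def mse(y_true, y_pred):
    """Mean squared error: the average of the squared residuals."""
    n = len(y_true)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical values for illustration only; NOT data from the study.
measured = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.0, 2.5, 4.0]

print(f"MSE = {mse(measured, predicted):.5f}")
print(f"R2  = {r2(measured, predicted):.5f}")
```

MSE depends on the scale and spread of the measured signal, whereas R² is normalized by the variance of the measurements. This is one plausible reason why rows in the table with similar MSE, such as CO and CO2 for the warm engine, can have very different R² values.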

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).