Preprint

Article

Derangetropy in Probability Distributions and Information Dynamics

A peer-reviewed article of this preprint also exists.

This version is not peer-reviewed

Submitted:

07 September 2024

Posted:

09 September 2024

Abstract

We introduce derangetropy, a novel functional measure designed to characterize the dynamics of information within probability distributions. Unlike scalar measures such as Shannon entropy, derangetropy offers a functional representation that captures the dispersion of information across the entire support of a distribution. By incorporating self-referential and periodic properties, it provides deeper insights into information dynamics governed by differential equations and equilibrium states. Through combinatorial justifications and empirical analysis, we demonstrate the utility of derangetropy in depicting distribution behavior and evolution, providing a new tool for analyzing complex and hierarchical systems in information theory.

Subject: Computer Science and Mathematics - Artificial Intelligence and Machine Learning

1. Introduction

The accurate quantification and analysis of information are critical in many scientific disciplines, from data science and information theory to quantum mechanics and statistical physics. Shannon entropy has long served as the foundational measure of information, providing a scalar summary of uncertainty within a probability distribution [1]. While Shannon entropy’s elegance and simplicity have ensured its widespread adoption, it also bears significant limitations, particularly when dealing with complex, non-Gaussian distributions or systems characterized by high-dimensional data.

A key limitation of Shannon entropy is its reduction of the functional space of distributions to a single scalar value, which can lead to identical entropy values for distinct distributions, thereby potentially obscuring their unique characteristics. This issue is particularly pronounced in analyzing non-Gaussian distributions, common in fields such as finance, genomics, and signal processing, where Shannon entropy often fails to capture intricate dependencies and tail behaviors critical to understanding information dynamics.

Moreover, Shannon entropy’s reliance on a scalar summary frequently decouples it from other important statistical properties, such as variance, which may offer more direct insights into data spread and reliability [2]. In certain cases, particularly involving nonlinear mappings, Shannon entropy can paradoxically suggest a reduction in information even when measurements enhance understanding of a variable, as it primarily emphasizes distribution flatness at the expense of other significant features [3].

To address these challenges, various extensions of entropy have been proposed, including Rényi entropy [4] and Tsallis entropy [5]. While these measures have their merits, they remain fundamentally scalar-centric and do not fully address the need to capture the functional characteristics of information distribution across a distribution’s entire support.

In response to these limitations, we propose derangetropy, a novel conceptual framework that departs from traditional approaches to information measurement. Unlike existing measures, derangetropy is not merely an extension of entropy but represents a new framework that captures the dynamics of information across the entire support of probability distributions. By incorporating self-referential and periodic properties, derangetropy offers a richer understanding of information dynamics, particularly in systems where information evolves cyclically or through feedback mechanisms, such as artificial neural networks.

This paper establishes the foundation of derangetropy by exploring its theoretical framework and properties. We begin in Section 2 by introducing the mathematical definition and key properties of derangetropy. Section 3 analyzes the information dynamics and equilibrium within this framework. In Section 4, we discuss the self-referential and self-similar nature of derangetropy. Section 5 provides a combinatorial perspective on derangetropy, connecting it to fundamental principles of combinatorial analysis. Finally, Section 6 summarizes the findings and suggests potential avenues for future research, including applications in information theory and beyond.

2. Mathematical Definition and Properties

2.1. Derangetropy Functional

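Let $(\Omega, \mathcal{F}, \mathbb{P})$ be a probability space, where $\Omega$ denotes the sample space, $\mathcal{F}$ is a $\sigma$-algebra of measurable subsets of $\Omega$, and $\mathbb{P}$ is a probability measure on $\mathcal{F}$. Consider a real-valued random variable $X: \Omega \to \mathbb{R}$ that is measurable with respect to the Borel $\sigma$-algebra $\mathcal{B}(\mathbb{R})$ on $\mathbb{R}$. Assume that $X$ has an absolutely continuous distribution with a probability density function (PDF) $f$ with respect to $\lambda$, where $\lambda$ denotes the Lebesgue measure on $\mathbb{R}$. The cumulative distribution function (CDF) associated with random variable $X$ is given by

$$F(x) = \int_{-\infty}^{x} f(t)\, d\lambda(t).$$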

In the following, we present the formal definition of the derangetropy functional.

Definition 1

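(Derangetropy). The derangetropy functional $\tau: f \mapsto \tau_f$ is defined by the following mapping

$$\tau_f(x) = \frac{24}{\pi e}\, \sin\!\big(\pi F(x)\big)\, F(x)^{F(x)}\, \big(1 - F(x)\big)^{1 - F(x)}\, f(x), \tag{1}$$

where $f$ is the PDF associated with random variable $X$, $F$ is its CDF, and $\tau_f$ refers to the function in $L^1(\mathbb{R})$ obtained through this mapping. The factor $24/(\pi e)$ is the normalizing constant under which $\tau_f$ integrates to one. The evaluation of the derangetropy functional at a specific point $x \in \mathbb{R}$ is denoted by $\tau_f(x)$.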
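As a concrete illustration, the following is a minimal sketch of a pointwise evaluation. It assumes the functional has the form $\frac{24}{\pi e}\sin(\pi F)\,F^{F}(1-F)^{1-F} f$; the function names are hypothetical and chosen only for this example.

```python
import math

# Assumed normalizing constant 24 / (pi * e), chosen so that the
# functional integrates to one.
NORM = 24.0 / (math.pi * math.e)


def derangetropy(cdf_value: float, pdf_value: float) -> float:
    """Evaluate the derangetropy at a point from the values F(x) and f(x)."""
    F = cdf_value
    if F <= 0.0 or F >= 1.0:
        # sin(pi * F) vanishes at F = 0 and F = 1.
        return 0.0
    return NORM * math.sin(math.pi * F) * F**F * (1.0 - F) ** (1.0 - F) * pdf_value


def uniform_derangetropy(x: float) -> float:
    """Uniform(0, 1): F(x) = x and f(x) = 1 on the unit interval."""
    return derangetropy(x, 1.0) if 0.0 < x < 1.0 else 0.0


def exponential_derangetropy(x: float, rate: float = 1.0) -> float:
    """Exponential(rate): F(x) = 1 - exp(-rate x), f(x) = rate exp(-rate x)."""
    if x <= 0.0:
        return 0.0
    return derangetropy(1.0 - math.exp(-rate * x), rate * math.exp(-rate * x))


if __name__ == "__main__":
    print("uniform, at the median x = 0.5:", uniform_derangetropy(0.5))
    print("exponential, at the median x = ln 2:", exponential_derangetropy(math.log(2.0)))
```

Only the CDF and PDF values at a point are needed, so the same helper can be reused for any distribution whose $F$ and $f$ are available in closed form.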

The sine function’s periodicity plays a crucial role in modeling systems characterized by cyclical or repetitive phenomena, where information alternates between concentrated and dispersed states. Specifically, the sine function serves as both a modulation mechanism and a projection operator. As a modulation mechanism, the sine function encodes the cyclical aspects of information content, capturing the periodic nature of information flow in systems with intrinsic cycles, such as those observed in time series data. By modulating the information content, the sine function effectively characterizes the periodic dynamics of information distribution within the probability space. Simultaneously, as a projection operator, the sine function translates the modulated information into a functional space where the dynamics of information flow become more apparent. This dual role facilitates the analysis of complex systems, enabling the representation of higher-dimensional informational structures and their influence on observable behavior.

To gain more insight into its structure, the derangetropy functional can be interpreted as a Fourier-type transformation as follows:

$$\tau_f(x) = \frac{24}{\pi e}\, \sin\!\big(\pi F(x)\big)\, \exp\!\big(-H_B(F(x))\big)\, f(x),$$

where

$$H_B(F(x)) = -F(x) \log F(x) - \big(1 - F(x)\big) \log\big(1 - F(x)\big)$$

is the Shannon entropy for a Bernoulli distribution with success probability $F(x)$. The term $H_B(F(x))$ quantifies the uncertainty or informational balance between the regions to the left and right of $x$, as indicated by the CDF values $F(x)$ and $1 - F(x)$, respectively. In this interpretation, the derangetropy functional can be viewed as mapping the current informational energy space, represented by Shannon entropy, into a space characterized by oscillatory behavior. The exponential term $\exp(-H_B(F(x)))$ modulates the sine function $\sin(\pi F(x))$, adding a layer of complexity that reflects how information is concentrated or dispersed across the distribution. This modulation is essential for understanding localized informational dynamics, where the oscillations of the sine function mirror the underlying fluctuations in information content.
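The entropy form rests on the identity $F^{F}(1-F)^{1-F} = \exp(-H_B(F))$. The short check below is an illustrative sketch (function names are hypothetical) that compares the two sides numerically, using natural logarithms.

```python
import math


def bernoulli_entropy(p: float) -> float:
    """Shannon entropy (in nats) of a Bernoulli(p) variable, with 0 log 0 = 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log(p) - (1.0 - p) * math.log(1.0 - p)


def power_form(F: float) -> float:
    """F^F * (1 - F)^(1 - F), the self-weighting product."""
    return F**F * (1.0 - F) ** (1.0 - F)


def entropy_form(F: float) -> float:
    """exp(-H_B(F)), the same quantity written through the Bernoulli entropy."""
    return math.exp(-bernoulli_entropy(F))


if __name__ == "__main__":
    for F in (0.1, 0.25, 0.5, 0.9):
        print(F, power_form(F), entropy_form(F))
```

At $F = 1/2$ the entropy is $\log 2$, so both forms equal $1/2$, the smallest value the modulating factor takes.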

2.2. Empirical Observations and Insights

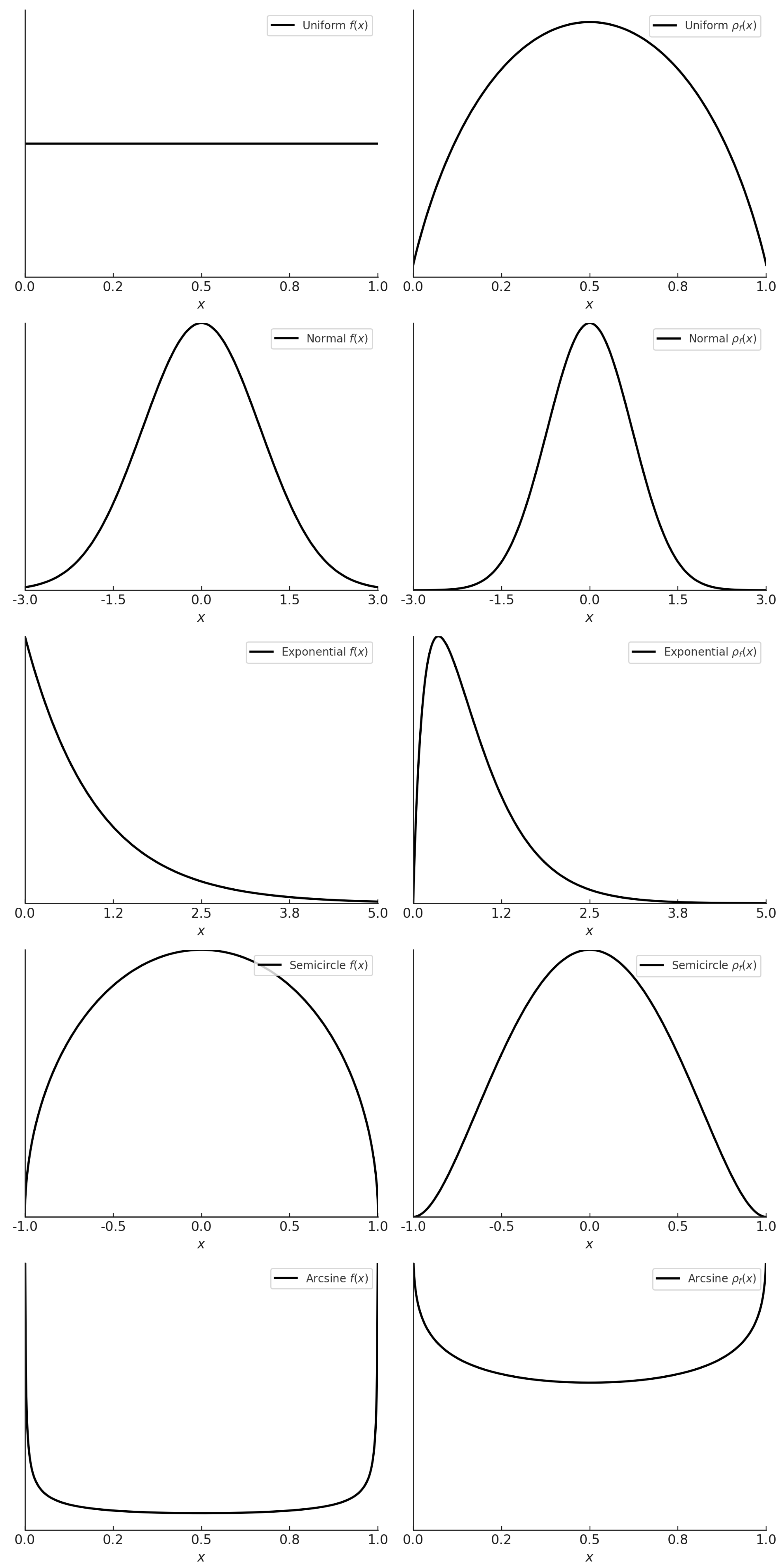

To illustrate the behavior of $\tau_f$, we examine five representative distributions: uniform, normal, exponential, semicircle, and arcsin. Each distribution highlights different characteristics such as symmetry, skewness, tail behavior, and boundary effects, offering insights into the relationship between probability density functions and their corresponding derangetropy functionals.

As depicted in Figure 1, symmetric distributions, like the normal and semicircle distributions, result in symmetric derangetropy functionals with prominent central peaks, indicating regions of high informational content. This symmetry suggests a strong correspondence between areas of high probability density and regions of significant information content, particularly in unimodal distributions where the central peak of $\tau_f$ aligns with the median, marking a key informational hotspot.

In contrast, asymmetric distributions, such as the exponential distribution, produce skewed derangetropy patterns. These patterns reveal how the concentration of probability mass influences informational dynamics, with $\tau_f$ shifting towards regions of higher probability density. This behavior is critical for understanding how information is distributed in systems characterized by non-uniform or skewed data.

Furthermore, the behavior of $\tau_f$ near the boundaries of the distribution’s support, particularly in distributions like the exponential and arcsin, emphasizes the importance of boundary dynamics in shaping the informational landscape. The pronounced derangetropy at the boundaries suggests that information content is significantly influenced by the extremities of the distribution. This observation is particularly relevant in fields like risk management or extreme value theory, where the accumulation and dissipation of information at the boundaries can provide critical insights into extreme events or rare occurrences.

Finally, the amplitude and frequency of oscillations in $\tau_f$ are directly tied to the complexity of the underlying distribution. For distributions with uniform or smoothly varying density, such as the uniform or normal distributions, the oscillations in $\tau_f$ are regular and predictable, indicating a steady flow of information. Conversely, distributions with more complex or non-uniform density, like the arcsin distribution, exhibit irregular oscillations, signaling that the flow of information is more dynamic and potentially chaotic. Thus, derangetropy can serve as a measure of the informational complexity of a distribution.

3. Information Dynamics and Equilibrium

3.1. Framework for Informational Energy

The dynamics of informational content within a probability distribution can be likened to energy conservation principles in classical mechanics. However, the derangetropy functional reveals a more intricate interaction between different forms of informational energy, moving beyond strict conservation. Instead, the functional emphasizes a dynamic equilibrium between these energies, illustrating how information is distributed, concentrated, or dissipated across the distribution. This perspective provides deeper insights into the stability and evolution of information within complex systems.

The informational content described by the derangetropy functional can be decomposed into distinct components that represent various aspects of information distribution. Mathematically, this decomposition is expressed as:

$$-\log \tau_f(x) = E_{\mathrm{total}}(x) - \log f(x) - C,$$

where $C = \log\frac{24}{\pi e}$ is a constant. The total informational energy within a distribution is the sum of two primary components: oscillatory and structural informational energies. These components interact dynamically, maintaining a form of equilibrium across the distribution:

$$E_{\mathrm{total}}(x) = E_{\mathrm{osc}}(x) + E_{\mathrm{str}}(x).$$

On the one hand, the oscillatory informational energy defined by

$$E_{\mathrm{osc}}(x) = -\log \sin\!\big(\pi F(x)\big)$$

captures the dynamic, cyclical flow of information within the distribution, similar to kinetic energy in physics, as energy shifts between different states or locations within the system. On the other hand, the structural informational energy defined by

$$E_{\mathrm{str}}(x) = H_B(F(x)) = -F(x) \log F(x) - \big(1 - F(x)\big) \log\big(1 - F(x)\big)$$

reflects the inherent stability and uncertainty of the distribution, analogous to potential energy in physics.

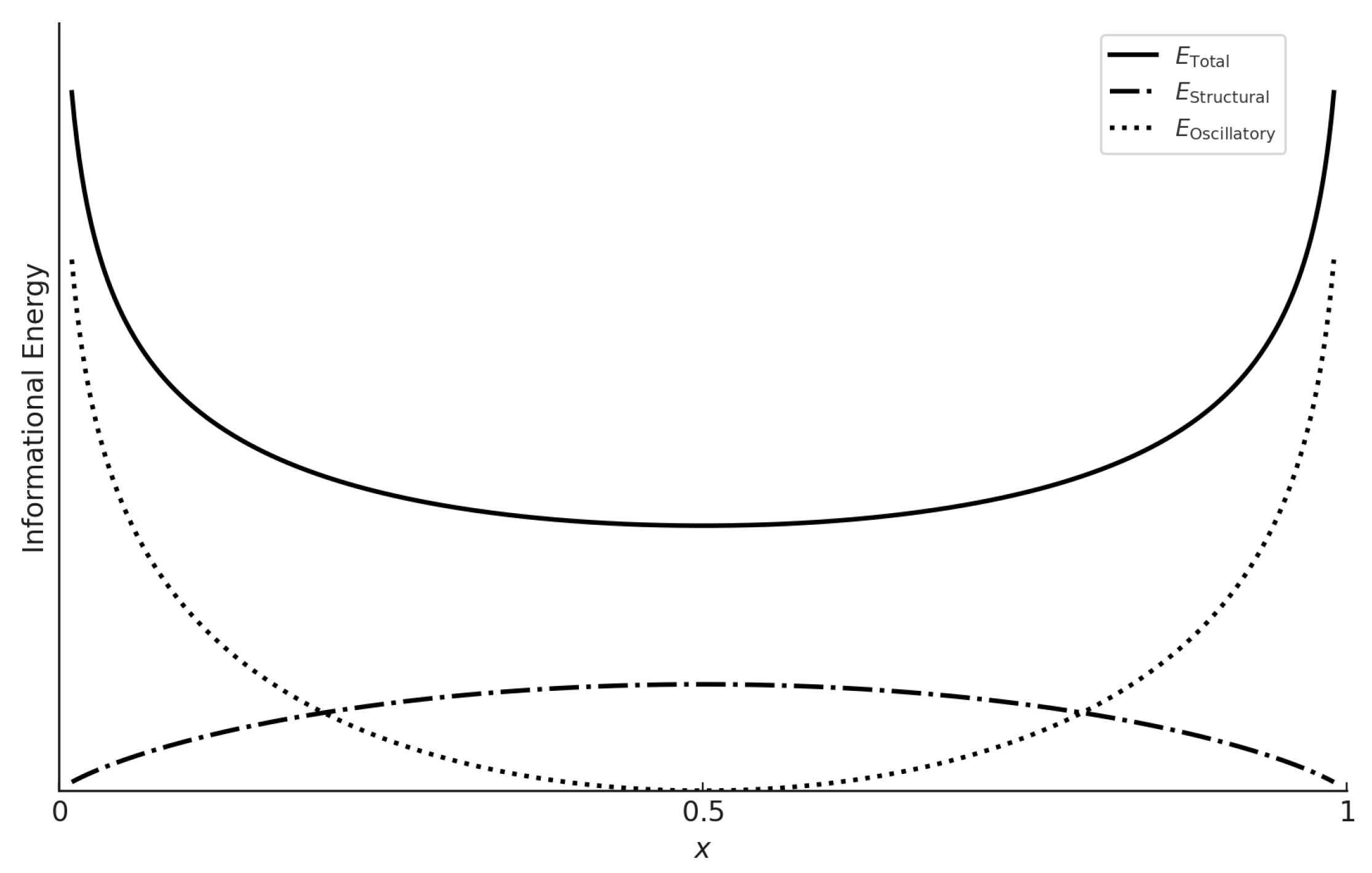

Using the uniform distribution defined on $(0,1)$ for illustrative purposes, Figure 2 visually demonstrates how the total informational energy varies across the distribution, driven by the interaction between oscillatory and structural energies. The solid line in the figure represents the total informational energy, which exhibits a parabolic shape with a minimum at $x = 1/2$ and steep rises near the boundaries. This pattern indicates that the energy distribution is influenced by both the oscillatory and structural components, with higher energy values near the boundaries suggesting a concentration of information content in these regions, where the distribution is less stable.

Furthermore, the dotted line, representing the oscillatory energy, dominates near the boundaries $x = 0$ and $x = 1$. The sharp increase in this component near the edges reflects the intense, cyclic nature of the information content in these areas, where the distribution is most unstable. As $x$ approaches 0 or 1, the sine function approaches zero, leading to spikes in oscillatory energy, which in turn cause significant instability. The dashed line represents the structural energy, which peaks around the median of the distribution. This peak reflects the inherent stability and maximum uncertainty at the center of the distribution. The structural energy remains relatively flat across the distribution, except near the boundaries where it diminishes, reflecting the distribution’s overall stability in its central region compared to the more volatile boundary regions.

This figure shows that the total energy is not conserved in the traditional sense; it varies due to the compensatory interaction between $E_{\mathrm{osc}}$ and $E_{\mathrm{str}}$. As oscillatory energy increases near the boundaries, structural energy’s relative contribution diminishes, and vice versa near the center. This dynamic equilibrium highlights how information is distributed and transformed within the distribution, providing a visual validation of the theoretical concepts discussed.
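The shape described above can be reproduced numerically. The sketch below assumes the oscillatory energy is $-\log\sin(\pi F(x))$ and the structural energy is the Bernoulli entropy of $F(x)$; these forms and the function names are assumptions made for illustration, evaluated on the uniform distribution where $F(x) = x$.

```python
import math


def oscillatory_energy(F: float) -> float:
    """Assumed form: -log sin(pi F); grows without bound as F -> 0 or F -> 1."""
    return -math.log(math.sin(math.pi * F))


def structural_energy(F: float) -> float:
    """Assumed form: Bernoulli entropy of F; largest at F = 1/2."""
    return -F * math.log(F) - (1.0 - F) * math.log(1.0 - F)


def total_energy(F: float) -> float:
    """Sum of the oscillatory and structural components."""
    return oscillatory_energy(F) + structural_energy(F)


# Uniform(0, 1): F(x) = x, so the grid points double as CDF values.
grid = [i / 1000.0 for i in range(1, 1000)]
x_min = min(grid, key=total_energy)

if __name__ == "__main__":
    print("total energy is smallest at x =", x_min)
    for x in (0.01, 0.25, 0.5, 0.75, 0.99):
        print(x, oscillatory_energy(x), structural_energy(x), total_energy(x))
```

Under these assumptions the oscillatory term vanishes at the median, where the structural term peaks, and the oscillatory term dominates the sum near either boundary.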

3.2. Equilibrium Analysis

Understanding equilibrium within the derangetropy framework requires analyzing the interaction between oscillatory and structural informational energies. Oscillatory energy, characterized by its fluctuations, tends to dominate near the boundaries of the distribution, where the probability density is low, and the informational content is more volatile. Conversely, structural energy is more pronounced near the center, reflecting the balance and uniformity of information in that region. This dynamic interplay forms the basis for understanding how equilibrium is achieved and maintained—or disrupted—within the distribution.

Equilibrium points within the derangetropy framework are critical for understanding the stability and dynamics of information distribution. These points are defined as locations where the derivative of the total informational energy with respect to x is zero. The nature of these equilibrium points—whether they are stable, unstable, or saddle points—can be determined by examining the second derivative of the total informational energy.

For the uniform distribution, where $F(x) = x$ and $f(x) = 1$ on $(0,1)$, the total informational energy is:

$$E_{\mathrm{total}}(x) = -\log \sin(\pi x) - x \log x - (1 - x) \log(1 - x).$$

At $x = 1/2$, the equilibrium analysis reveals a critical point of instability. The minimum in the total energy curve at $x = 1/2$ suggests that this is a point of balance between the oscillatory and structural energies. However, this point is an unstable equilibrium, where small perturbations could cause the system to move away from equilibrium. Near the boundaries $x = 0$ and $x = 1$, the total energy increases steeply, indicating regions of instability. The oscillatory energy drives this instability, while the structural energy diminishes, making these points prone to significant shifts in the information distribution. This behavior is particularly relevant for understanding the distribution’s tail properties, where extreme or rare events are more likely to occur.

3.3. Key Mathematical Properties

We now establish key mathematical properties of the derangetropy functional, $\tau_f$, that underscore its utility in analyzing probability distributions. These properties provide insight into the behavior of $\tau_f$ across different types of distributions and highlight its relevance to information dynamics. For any absolutely continuous PDF $f$, the derangetropy functional is a nonlinear operator whose image $\tau_f$ has, wherever $f$ is differentiable and positive, the following first derivative

$$\tau_f'(x) = \tau_f(x)\left[\frac{f'(x)}{f(x)} + f(x)\left(\pi \cot\!\big(\pi F(x)\big) + \log\frac{F(x)}{1 - F(x)}\right)\right],$$

where the derivatives are taken with respect to $x$. The following theorem shows that the derangetropy functional, which is built from the distribution functions of the random variable $X$, is itself a valid PDF for another random variable.

Theorem 1.

For any absolutely continuous CDF $F$ with PDF $f$, the derangetropy functional $\tau_f$ is a valid PDF.

Proof.

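To prove that $\tau_f$ is a valid PDF, we need to show that $\tau_f(x) \ge 0$ for all $x \in \mathbb{R}$ and that $\int_{-\infty}^{\infty} \tau_f(x)\, dx = 1$. The non-negativity of $\tau_f$ is clear due to the non-negativity of the terms involved in its definition; in particular, $\sin(\pi F(x)) \ge 0$ because $0 \le F(x) \le 1$. Next, the normalization condition can be verified by the change of variables $z = F(x)$, yielding

$$\int_{-\infty}^{\infty} \tau_f(x)\, dx = \frac{24}{\pi e} \int_{0}^{1} \sin(\pi z)\, z^{z} (1 - z)^{1 - z}\, dz.$$

As shown in Appendix A, the integral evaluates to

$$\int_{0}^{1} \sin(\pi z)\, z^{z} (1 - z)^{1 - z}\, dz = \frac{\pi e}{24},$$

which, in turn, implies that

$$\int_{-\infty}^{\infty} \tau_f(x)\, dx = 1.$$

Hence, $\tau_f$ is a valid PDF. □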
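The normalization can also be checked numerically. The following sketch assumes the functional $\frac{24}{\pi e}\sin(\pi F)F^{F}(1-F)^{1-F}f$ and integrates it with a plain midpoint rule for the uniform and the unit-rate exponential distributions; the helper names and the truncation of the exponential range at $x = 40$ are choices made for this example only.

```python
import math

NORM = 24.0 / (math.pi * math.e)  # assumed normalizing constant


def tau_from(F: float, f: float) -> float:
    """Derangetropy value from the CDF value F and the PDF value f."""
    if F <= 0.0 or F >= 1.0:
        return 0.0
    return NORM * math.sin(math.pi * F) * F**F * (1.0 - F) ** (1.0 - F) * f


def midpoint_integral(g, a: float, b: float, n: int = 100_000) -> float:
    """Composite midpoint rule for the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))


# Uniform(0, 1): F(x) = x, f(x) = 1.
uniform_mass = midpoint_integral(lambda x: tau_from(x, 1.0), 0.0, 1.0)

# Exponential(1): F(x) = 1 - exp(-x), f(x) = exp(-x); the tail beyond 40 is negligible.
exponential_mass = midpoint_integral(
    lambda x: tau_from(1.0 - math.exp(-x), math.exp(-x)), 0.0, 40.0
)

if __name__ == "__main__":
    print("uniform:", uniform_mass)
    print("exponential:", exponential_mass)
```

Both totals should agree with one up to quadrature error, which reflects the fact that the change of variables $z = F(x)$ reduces every case to the same integral over $(0,1)$.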

Furthermore, since the derangetropy functional is a valid PDF, it possesses well-defined theoretical properties, such as the existence of a mode. The following theorem demonstrates that, for any symmetric unimodal distribution, the mode of $\tau_f$ coincides with the median of the underlying distribution.

Theorem 2.

For any symmetric unimodal probability distribution having PDF $f$, the derangetropy functional $\tau_f$ is maximized at the median of the distribution.

Proof.

We aim to demonstrate that $\tau_f$ attains its maximum at the median $m$, where $F(m) = 1/2$. Given the symmetry of the distribution, the median $m$ coincides with the mode, and the PDF is symmetric around $m$, implying that $f'(m) = 0$. By analyzing the derivative of the derangetropy functional, it follows that $x = m$ is a critical point of $\tau_f$. To confirm that $m$ is indeed the point of maximum, we examine the second derivative of $\tau_f$ at $x = m$:

$$\tau_f''(m) = \frac{12}{\pi e}\Big[f''(m) - (\pi^2 - 4)\, f(m)^3\Big].$$

Since $f''(m) \le 0$ due to the unimodal nature of the distribution, and $f(m) > 0$, the second derivative is negative at $x = m$, confirming that $\tau_f$ has a local maximum at this point. The symmetry of the distribution ensures that this local maximum at $m$ is, in fact, the global maximum of the derangetropy functional. Therefore, $\tau_f$ is maximized at the median $m$ of any symmetric unimodal distribution. □
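A quick numerical illustration of the theorem for a normal distribution follows. It is a sketch that assumes the functional $\frac{24}{\pi e}\sin(\pi F)F^{F}(1-F)^{1-F}f$; the parameter values and grid are arbitrary choices for this example.

```python
import math

NORM = 24.0 / (math.pi * math.e)  # assumed normalizing constant


def normal_cdf(x: float, mu: float, sigma: float) -> float:
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def normal_pdf(x: float, mu: float, sigma: float) -> float:
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def tau(x: float, mu: float, sigma: float) -> float:
    """Derangetropy of a Normal(mu, sigma^2) distribution at x."""
    F = normal_cdf(x, mu, sigma)
    return NORM * math.sin(math.pi * F) * F**F * (1.0 - F) ** (1.0 - F) * normal_pdf(x, mu, sigma)


mu, sigma = 1.5, 2.0
# Grid of step 0.01 centred on the median, so that x = mu is a grid point.
grid = [mu + (i - 800) / 100.0 for i in range(1601)]
x_star = max(grid, key=lambda x: tau(x, mu, sigma))

# Second central difference at the median; a negative value indicates a local maximum.
h = 1e-3
curvature = (tau(mu + h, mu, sigma) - 2.0 * tau(mu, mu, sigma) + tau(mu - h, mu, sigma)) / h**2

if __name__ == "__main__":
    print("grid maximizer:", x_star, "median:", mu)
    print("finite-difference curvature at the median:", curvature)
```

The grid search only confirms the location of the maximum to the grid resolution; it is a sanity check rather than a substitute for the proof.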

3.4. Differential Equation

The analysis of information dynamics within a probability distribution, particularly within the framework of the derangetropy functional, leads to a second-order linear ordinary differential equation with nonlinear, variable coefficients that governs the evolution of informational content, as given by the following theorem.

Theorem 3.

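Let $X$ be a random variable following a uniform distribution on the interval $(0,1)$. Then, the derangetropy functional $\tau_f$ satisfies the following second-order linear ordinary differential equation:

$$\tau_f''(x) - 2 g(x)\, \tau_f'(x) + \left(\pi^2 + g(x)^2 - \frac{1}{x(1 - x)}\right) \tau_f(x) = 0,$$

where $g(x) = \log\frac{x}{1 - x} = 2 \operatorname{artanh}(2x - 1)$ and the initial conditions are given by

$$\tau_f\!\left(\tfrac{1}{2}\right) = \frac{12}{\pi e}, \qquad \tau_f'\!\left(\tfrac{1}{2}\right) = 0.$$

Proof.

To eliminate the first-order derivative term, we multiply the entire equation by the integrating factor $\mu(x)$, where:

$$\mu(x) = \exp\!\left(-\int g(x)\, dx\right) = x^{-x} (1 - x)^{-(1 - x)}.$$

Next, introduce a new function $v(x)$ such that:

$$v(x) = \mu(x)\, \tau_f(x).$$

Substituting this expression into the differential equation simplifies it to:

$$v''(x) + \pi^2 v(x) = 0.$$

The general solution to this simplified differential equation is:

$$v(x) = c_1 \sin(\pi x) + c_2 \cos(\pi x),$$

where $c_1$ and $c_2$ are constants. Finally, evaluating the initial values yields $c_1 = \frac{24}{\pi e}$ and $c_2 = 0$, implying that $\tau_f(x) = \frac{24}{\pi e} \sin(\pi x)\, x^{x} (1 - x)^{1 - x}$ is indeed a solution to the differential equation. □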
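The claim can be sanity-checked with finite differences. The sketch below assumes the uniform-case functional $\tau(x) = \frac{24}{\pi e}\sin(\pi x)\,x^{x}(1-x)^{1-x}$ and an equation of the form $\tau'' - 2g\,\tau' + \big(\pi^2 + g^2 - \tfrac{1}{x(1-x)}\big)\tau = 0$ with $g(x) = \log\frac{x}{1-x}$; both are assumptions of this example, and the residual is only expected to vanish up to discretization error.

```python
import math

NORM = 24.0 / (math.pi * math.e)  # assumed normalizing constant


def g(x: float) -> float:
    """log(x / (1 - x)), equal to 2 * artanh(2x - 1)."""
    return math.log(x / (1.0 - x))


def tau(x: float) -> float:
    """Derangetropy of the Uniform(0, 1) distribution."""
    return NORM * math.sin(math.pi * x) * x**x * (1.0 - x) ** (1.0 - x)


def ode_residual(x: float, h: float = 1e-4) -> float:
    """Left-hand side of the assumed ODE, with derivatives by central differences."""
    d1 = (tau(x + h) - tau(x - h)) / (2.0 * h)
    d2 = (tau(x + h) - 2.0 * tau(x) + tau(x - h)) / h**2
    coefficient = math.pi**2 + g(x) ** 2 - 1.0 / (x * (1.0 - x))
    return d2 - 2.0 * g(x) * d1 + coefficient * tau(x)


if __name__ == "__main__":
    for x in (0.1, 0.3, 0.5, 0.7, 0.9):
        print(x, ode_residual(x))
```

The identity $g(x) = 2\operatorname{artanh}(2x - 1)$ is what brings the inverse hyperbolic tangent into the coefficients.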

This differential equation encapsulates the intricate dynamics of the derangetropy functional, driven by both linear and nonlinear influences. At the median of the distribution, where $F(x) = 1/2$, the function $\operatorname{artanh}(2F(x) - 1)$ vanishes, leading to a stabilization in the rate of change of the informational content. This indicates that the median acts as a point of equilibrium, where the evolution of the derangetropy functional is locally stable.

As $F(x)$ approaches the boundaries of the distribution, the inverse hyperbolic tangent function diverges, introducing significant nonlinearity into the equation. This divergence signals that the informational content becomes highly sensitive near the boundaries, where the probability mass is concentrated. The differential equation suggests that there is an accumulation or concentration of informational change at these boundaries. This is intuitive, as the cumulative distribution compresses the probability mass into smaller regions near the boundaries, leading to more significant changes in the derangetropy functional. The boundary-sensitive term $\frac{1}{F(x)(1 - F(x))}$, inversely proportional to the variance of a Bernoulli distribution with probability of success $F(x)$, further amplifies this effect, ensuring that the derangetropy functional adjusts dynamically in response to the distribution’s proximity to its limits.

The stabilization at the median and the sensitivity near the boundaries highlight the complex interplay between linear and nonlinear dynamics within the differential equation. The coexistence of linear and nonlinear terms suggests that the evolution of the derangetropy functional is neither purely smooth nor entirely chaotic. Instead, it follows a complex pattern where smooth changes can be abruptly influenced by nonlinear effects, particularly near the boundaries and around the median. This interaction creates a dynamic equilibrium where the informational content is continually adjusted by the competing influences of linearity and nonlinearity. The derangetropy functional, therefore, adapts to different regions of the distribution, ensuring that the evolution of information is context-dependent.

The differential equation governing the derangetropy functional can also be interpreted through the framework of utility theory, offering a rigorous analogy between the evolution of informational content within a probability distribution and the concept of economic utility. As information begins to accumulate within the distribution, the utility—represented by the derangetropy functional—initially experiences rapid growth, analogous to the increasing satisfaction an economic agent derives from consuming additional units of a good. This phase of growth continues as the distribution approaches its median, where the utility reaches a peak. At this critical juncture, the informational content is optimally balanced, and the system achieves a state of maximum utility, reflecting the most efficient allocation of informational resources. The median serves as a natural equilibrium point, analogous to the optimal allocation of resources in economic theory, where utility is maximized through a balanced distribution of probability mass.

As the distribution progresses beyond the median and nears its boundaries, the utility derived from additional informational content begins to diminish, illustrating the principle of diminishing marginal utility in economics. The differential equation governing the derangetropy functional captures this transition, revealing that the rate of change in utility decreases as the cumulative distribution function approaches the extremes of the distribution. This decline in utility is akin to the reduced satisfaction an economic agent experiences when over-consuming a good, where the benefits of further consumption become increasingly marginal and may even turn negative. Consequently, the derangetropy functional not only models the accumulation and optimization of information but also encapsulates the risks associated with over-concentration. This interpretation underscores the functional’s role in maintaining a balance analogous to the equilibrium sought in economic utility optimization, thereby providing a profound connection between information theory and economic principles.

4. Self-referential Nature

The derangetropy functional, denoted by $\tau_f$, is distinguished by its self-referential nature, whereby $\tau_f$ itself is a valid PDF. This implies that $\tau_f$ not only encapsulates the informational content of the underlying distribution but also recursively uncovers the hierarchical structure of its own information content. This recursive and hierarchical relationship is formalized as:

$$\tau_f^{(n)}(x) = \frac{24}{\pi e}\, \sin\!\big(\pi F^{(n-1)}(x)\big)\, \big(F^{(n-1)}(x)\big)^{F^{(n-1)}(x)}\, \big(1 - F^{(n-1)}(x)\big)^{1 - F^{(n-1)}(x)}\, \tau_f^{(n-1)}(x), \qquad n \ge 1,$$

where $\tau_f^{(n)}$ represents the $n$th iteration of the derangetropy functional, and

$$F^{(n)}(x) = \int_{-\infty}^{x} \tau_f^{(n)}(t)\, dt$$

is the associated CDF. The initial conditions are set by

$$\tau_f^{(0)}(x) = f(x), \qquad F^{(0)}(x) = F(x),$$

where $F$ denotes the CDF of the original distribution. This recursive structure ensures that each subsequent layer integrates the informational content of all preceding layers, thereby constructing a comprehensive hierarchical representation of information.
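The recursion lends itself to a simple grid computation. The sketch below is one possible discretization, not a prescribed algorithm: it assumes each layer is obtained by multiplying the previous density by $\frac{24}{\pi e}\sin(\pi F)F^{F}(1-F)^{1-F}$ evaluated at the previous layer's CDF, and it approximates that CDF by a running sum on a midpoint grid over $(0,1)$.

```python
import math

NORM = 24.0 / (math.pi * math.e)  # assumed normalizing constant


def weight(F: float) -> float:
    """Multiplicative factor applied to the previous layer's density."""
    F = min(max(F, 0.0), 1.0)  # guard against rounding slightly outside [0, 1]
    return NORM * math.sin(math.pi * F) * F**F * (1.0 - F) ** (1.0 - F)


def next_layer(density, h):
    """One recursion step for a density sampled at cell midpoints of width h."""
    out, cumulative = [], 0.0
    for d in density:
        cdf_mid = cumulative + 0.5 * d * h  # CDF of the previous layer at the midpoint
        out.append(weight(cdf_mid) * d)
        cumulative += d * h
    return out


n = 2000
h = 1.0 / n
layer0 = [1.0] * n               # Uniform(0, 1) density
layer1 = next_layer(layer0, h)   # first-level derangetropy
layer2 = next_layer(layer1, h)   # second-level derangetropy

if __name__ == "__main__":
    for name, layer in (("layer 1", layer1), ("layer 2", layer2)):
        print(name, "mass:", h * sum(layer), "peak:", max(layer))
```

Each layer should keep unit mass up to discretization error, while the central peak grows from one layer to the next.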

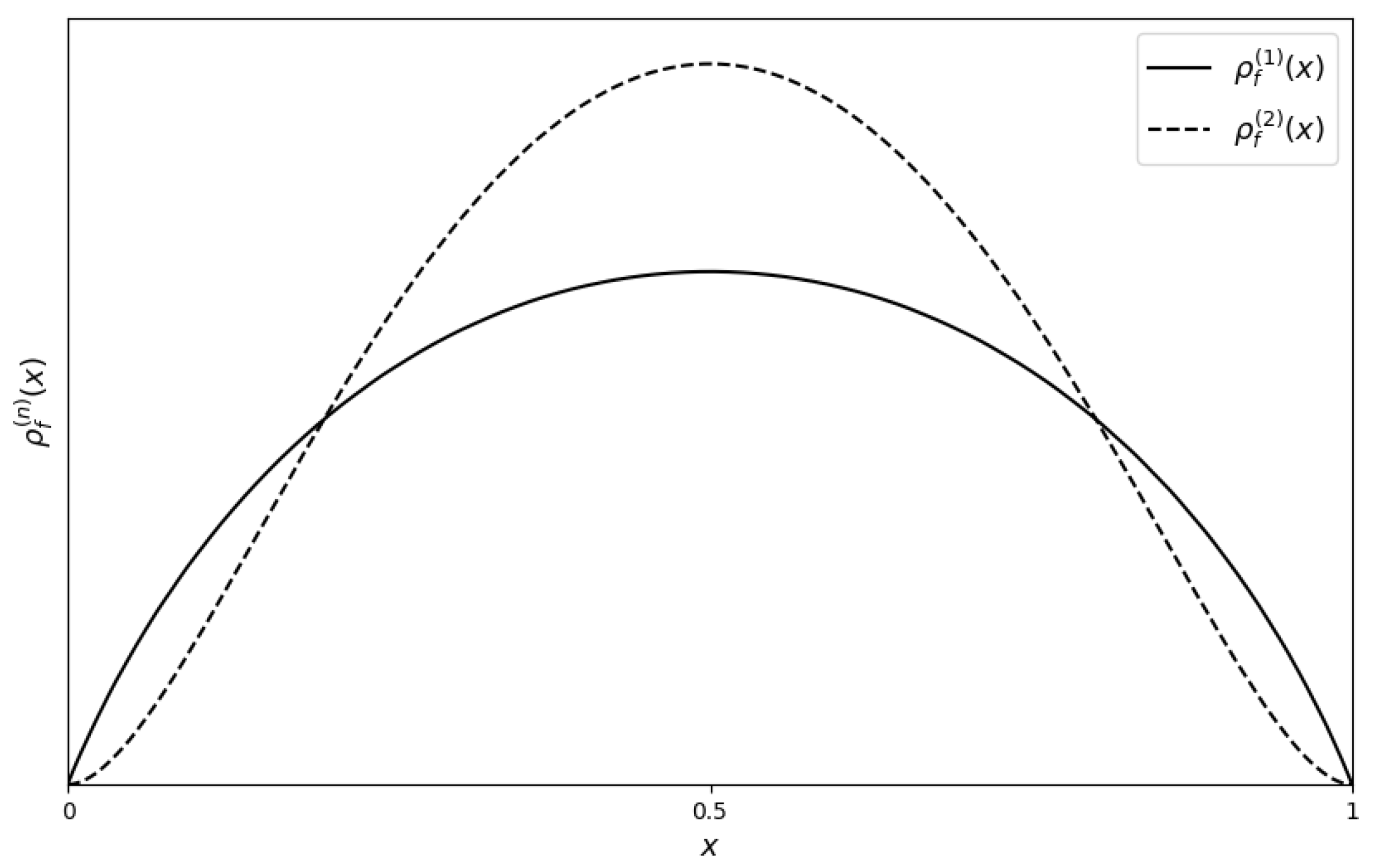

To elucidate this self-referential property, we consider the first and second layers of the derangetropy functional for the uniform distribution over the interval $(0,1)$, as illustrated in Figure 3. The first layer, $\tau_f^{(1)}$, reflects the basic informational structure of the uniform distribution. In contrast, the second layer, $\tau_f^{(2)}$, introduces additional complexity, manifesting in pronounced peaks and troughs. This evolution underscores the recursive amplification inherent in the derangetropy functional, progressively unveiling deeper informational layers with each iteration.

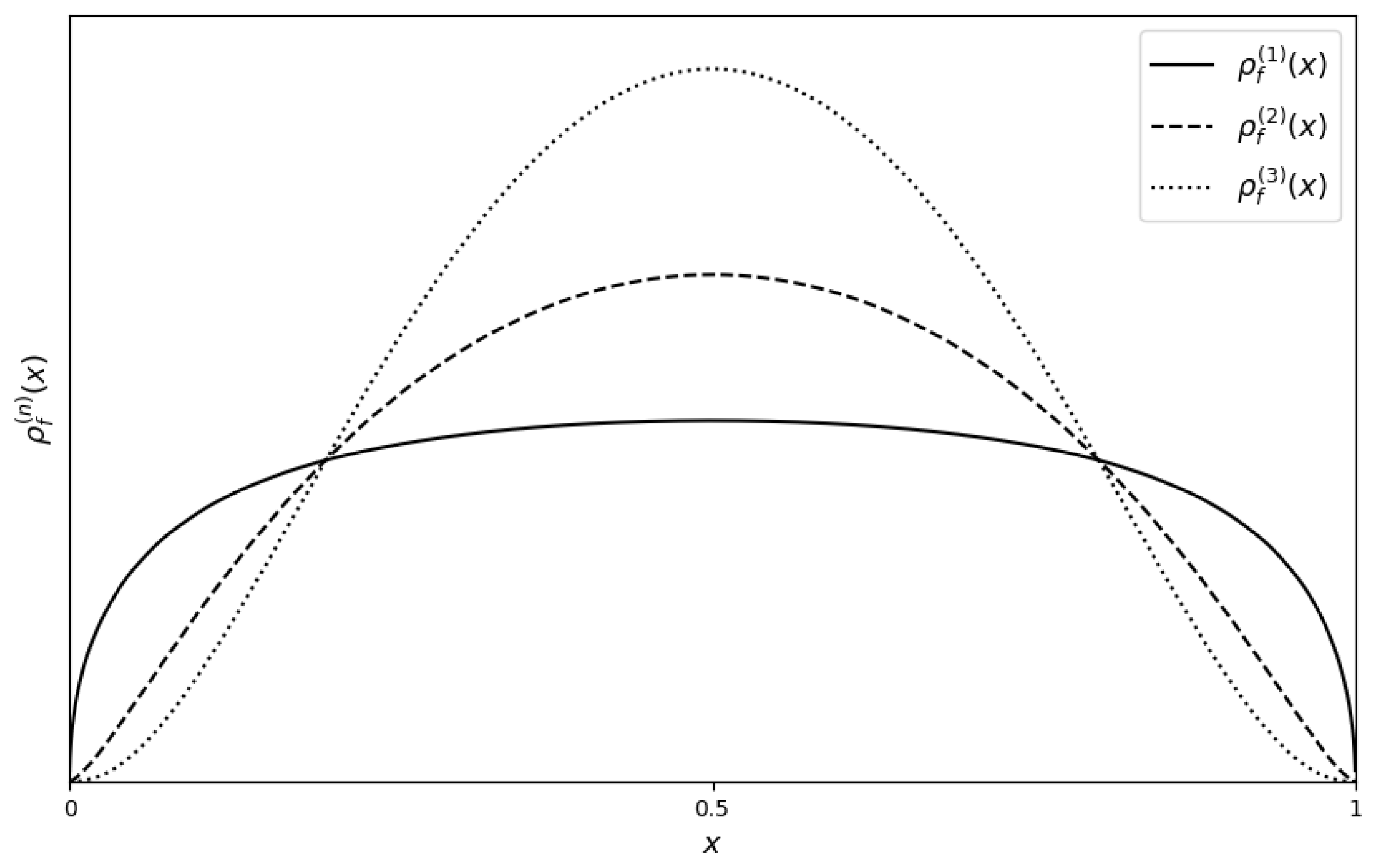

Further insights into the self-referential nature of the derangetropy functional can be obtained by examining its behavior for the arcsin distribution, as shown in Figure 4. The first layer captures the strong boundary effects characteristic of the arcsin distribution, resulting in a pronounced concave upward profile near the interval’s endpoints. As recursion advances to the second layer, these boundary effects are amplified, giving rise to more pronounced oscillations and a complex informational structure. The transition in concavity observed between the first and second layers signifies a shift from a simple, boundary-focused representation to a more intricate depiction of the distribution’s information content.

The third layer introduces a bell-shaped curve, indicative of a stabilization process within the recursive structure. The previously amplified boundary effects become harmonized, redistributing the information more uniformly across the distribution. This bell-shaped curve suggests a tendency towards centralization and equilibrium within the distribution as recursion progresses. The smoothing of oscillations in the third layer reflects the derangetropy functional’s inherent ability to guide the system towards an informational equilibrium, where initially sharp boundary effects are moderated and the information distribution becomes more balanced and Gaussian-like.

An intriguing and nontrivial observation emerges when comparing the second- and third-level derangetropies of the arcsin distribution with the first- and second-level derangetropies of the uniform distribution. Notably, the second-level derangetropy of the arcsin distribution closely resembles the first-level derangetropy of the uniform distribution, while the third-level derangetropy of the arcsin distribution mirrors the second-level derangetropy of the uniform distribution. This resemblance reveals a deep structural connection between these distributions under the framework of the derangetropy functional.

The transformation $T$ such that $T(u) = \sin^2\!\left(\frac{\pi u}{2}\right)$, which maps the uniform distribution to the arcsin distribution, is pivotal in explaining this connection. The sine function within the derangetropy functional plays a crucial role in this transformation. When applied to the arcsin distribution, whose CDF is given by $F(x) = \frac{2}{\pi} \arcsin\!\big(\sqrt{x}\big)$, the sine function simplifies as follows:

$$\sin\!\big(\pi F(x)\big) = \sin\!\big(2 \arcsin \sqrt{x}\big) = 2 \sqrt{x (1 - x)}.$$

This expression directly mirrors the transformation $T$, establishing a structural similarity between the arcsin and uniform distributions. The recursive nature of the derangetropy functional, which inherently tends to smooth and centralize information, reflects this underlying transformation. The arcsin distribution, characterized by its strong boundary effects, requires additional recursive layers to attain a similar informational structure to that of the uniform distribution. This explains why the second-level derangetropy of the arcsin distribution mirrors the first-level derangetropy of the uniform distribution, and why the third-level derangetropy of the arcsin distribution resembles the second-level derangetropy of the uniform distribution. Through its recursive application, the derangetropy functional reveals the deep structural relationships between these distributions.
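The simplification can be verified numerically. The sketch below assumes the arcsin distribution on $(0,1)$ with CDF $\frac{2}{\pi}\arcsin\sqrt{x}$ and PDF $\frac{1}{\pi\sqrt{x(1-x)}}$, and takes $u \mapsto \sin^2(\pi u/2)$ as the map from the uniform distribution; the function names are hypothetical.

```python
import math


def arcsine_cdf(x: float) -> float:
    """CDF of the arcsin distribution on (0, 1)."""
    return (2.0 / math.pi) * math.asin(math.sqrt(x))


def arcsine_pdf(x: float) -> float:
    """PDF of the arcsin distribution on (0, 1)."""
    return 1.0 / (math.pi * math.sqrt(x * (1.0 - x)))


def uniform_to_arcsine(u: float) -> float:
    """Assumed transformation sending a Uniform(0, 1) draw to an arcsin draw."""
    return math.sin(0.5 * math.pi * u) ** 2


if __name__ == "__main__":
    for x in (0.05, 0.3, 0.5, 0.8):
        sine_term = math.sin(math.pi * arcsine_cdf(x))
        print(x, sine_term, 2.0 * math.sqrt(x * (1.0 - x)), sine_term * arcsine_pdf(x))
```

The last printed column is constant and equal to $2/\pi$: the sine term cancels the boundary singularity of the arcsin density, leaving only the factor $F^{F}(1-F)^{1-F}$ to shape the first layer.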

Lastly, we note that as $n$ approaches infinity, the recursive process drives the distribution towards a degenerate distribution centered at the median. Mathematically, this means that the $n$th-level derangetropy converges in distribution to a Dirac delta function $\delta(x - m)$, where all the probability mass is concentrated at the median $m$; i.e.,

$$\tau_f^{(n)}(x) \xrightarrow{\ d\ } \delta(x - m) \quad \text{as } n \to \infty.$$

This convergence behavior is a direct consequence of the derangetropy functional’s design, which inherently favors the centralization of information. The recursive smoothing effect ensures that, with each iteration, the distribution becomes more focused around the central point, ultimately leading to a situation where all mass is concentrated at the median. It is worth mentioning that the derangetropy functional exhibits self-similar patterns, particularly in the earlier stages of recursion. However, as the recursion deepens, the structure becomes more centralized about the median and less fractal-like, leading to increasingly negative scaling exponents.
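The concentration around the median can be observed by tracking the variance of successive layers. The sketch below starts from the uniform distribution and uses a midpoint-grid discretization of the recursion, assuming the layer update multiplies the previous density by $\frac{24}{\pi e}\sin(\pi F)F^{F}(1-F)^{1-F}$; the grid size and number of layers are arbitrary choices.

```python
import math

NORM = 24.0 / (math.pi * math.e)  # assumed normalizing constant


def weight(F: float) -> float:
    F = min(max(F, 0.0), 1.0)  # guard against rounding slightly outside [0, 1]
    return NORM * math.sin(math.pi * F) * F**F * (1.0 - F) ** (1.0 - F)


def next_layer(density, h):
    """One recursion step for a density sampled at cell midpoints of width h."""
    out, cumulative = [], 0.0
    for d in density:
        out.append(weight(cumulative + 0.5 * d * h) * d)
        cumulative += d * h
    return out


def variance(density, xs, h):
    """Variance of a gridded density, renormalized by its numerical mass."""
    mass = h * sum(density)
    mean = h * sum(x * d for x, d in zip(xs, density)) / mass
    return h * sum((x - mean) ** 2 * d for x, d in zip(xs, density)) / mass


n = 4000
h = 1.0 / n
xs = [(i + 0.5) * h for i in range(n)]
layer = [1.0] * n  # Uniform(0, 1) density, i.e. level 0
variances = [variance(layer, xs, h)]
for _ in range(4):
    layer = next_layer(layer, h)
    variances.append(variance(layer, xs, h))

if __name__ == "__main__":
    for level, v in enumerate(variances):
        print("level", level, "variance", v)
```

A shrinking variance with a fixed mean at $1/2$ is consistent with convergence towards a point mass at the median, although a finite number of layers on a grid cannot by itself establish the limit.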

5. Combinatorial Perspective

The concept of derangetropy is deeply rooted in combinatorial principles, particularly the notion of derangements. In combinatorics, a derangement is a permutation of a set where no element appears in its original position, representing a state of maximal disorder. Extending this concept to continuous probability distributions, the derangetropy functional quantifies the local interplay between order and disorder at each point in the distribution.

A key mathematical tool in deriving the properties of derangetropy is Euler’s reflection formula, given by:

$$\Gamma(z)\, \Gamma(1 - z) = \frac{\pi}{\sin(\pi z)}, \qquad z \notin \mathbb{Z},$$

where $\Gamma$ is the Gamma function, a continuous analogue of the factorial function. This formula establishes a fundamental connection between the sine function and the combinatorial structures underlying the derangetropy functional. The derangetropy functional, initially defined by formula (1), can be reformulated using Euler’s reflection formula as:

$$\tau_f(x) = \frac{24}{e}\, \frac{F(x)^{F(x)}\, \big(1 - F(x)\big)^{1 - F(x)}}{\Gamma\big(F(x)\big)\, \Gamma\big(1 - F(x)\big)}\, f(x).$$

This reformulation highlights the recursive self-referential nature of the distribution, where the distribution refers to its own values at each point $x$, thereby influencing the overall informational dynamics of the system. The terms $F(x)^{F(x)}$ and $(1 - F(x))^{1 - F(x)}$ introduce a self-weighting modulation mechanism that amplifies disorder non-linearly in regions of high probability, while regions with large $1 - F(x)$ values experience a similar recursive influence. In continuous distributions, the combinatorial complexity is encapsulated by the Gamma functions $\Gamma(F(x))$ and $\Gamma(1 - F(x))$. These functions serve as continuous analogues of the derangement process, quantifying the degree of disorder at each point by representing the combinatorial complexity associated with arranging elements less than $x$ or greater than $x$, respectively. The interplay between these Gamma functions at each point and the self-weighting mechanism determines the local derangetropy and reflects the underlying combinatorial structure of the distribution.
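The equivalence of the sine-based and Gamma-based forms can be checked directly. The sketch below assumes the sine form carries the constant $\frac{24}{\pi e}$, so that the Gamma form carries $\frac{24}{e}$; the function names are hypothetical and the check relies only on `math.gamma` from the standard library.

```python
import math


def reflection_lhs(z: float) -> float:
    """Gamma(z) * Gamma(1 - z)."""
    return math.gamma(z) * math.gamma(1.0 - z)


def reflection_rhs(z: float) -> float:
    """pi / sin(pi z)."""
    return math.pi / math.sin(math.pi * z)


def tau_sine_form(F: float, f: float) -> float:
    """Assumed sine form of the derangetropy."""
    return 24.0 / (math.pi * math.e) * math.sin(math.pi * F) * F**F * (1.0 - F) ** (1.0 - F) * f


def tau_gamma_form(F: float, f: float) -> float:
    """The same quantity rewritten through Euler's reflection formula."""
    return 24.0 / math.e * F**F * (1.0 - F) ** (1.0 - F) * f / reflection_lhs(F)


if __name__ == "__main__":
    for F in (0.1, 0.3, 0.5, 0.8):
        print(F, reflection_lhs(F), reflection_rhs(F), tau_sine_form(F, 1.0), tau_gamma_form(F, 1.0))
```

Under this assumption the leading constant becomes $24/e = 4!/e$, which is the kind of factorial-over-$e$ ratio that appears when counting derangements.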

Moreover, the system governed by the derangetropy functional continuously transitions between states of order and disorder. This dynamic conversion is modulated by the oscillatory and structural informational energies inherent within the distribution, reflecting the probabilistic laws that govern the system’s behavior. Equilibrium is achieved when the total informational energy is minimized across the distribution, representing a balance between order and disorder. Deviations from equilibrium increase derangetropy, indicating higher disorder at specific points x within the distribution, a behavior crucial for understanding extreme events or rare occurrences.

Consequently, the derangetropy functional also captures both local variability and global stability within the system. At any given point x, the local derangetropy is determined by whether x aligns with its expected order. Aggregating these local measures provides a global assessment of the system’s informational content, consistent with the principles of complex systems, where global properties emerge from local interactions. This dual capability makes derangetropy a powerful tool for analyzing both micro and macro structures within a wide range of probabilistic systems.

6. Conclusion and Future Work

This paper introduced derangetropy, a functional measure designed to capture the dynamic nature of information within probability distributions. By offering a functional representation rather than a scalar summary, derangetropy provides deeper insights into the interplay between order and disorder across a distribution’s entire support. The measure’s self-referential and periodic properties facilitate a rigorous analysis of information stability and evolution, enhancing our understanding of complex systems.

The versatility of derangetropy was demonstrated through its mathematical formulation and empirical analysis across various distributions. This novel approach extends beyond the capabilities of traditional entropy measures, allowing for a more detailed examination of information dynamics.

Looking forward, several promising research directions emerge. In the realm of deep neural networks, derangetropy could regulate information flow, preventing overconcentration or dispersion and thereby enhancing network robustness and generalization. Incorporating equilibrium relations into the backpropagation process may also introduce more efficient training methods.

In quantum information theory, derangetropy could provide new tools for analyzing information dynamics in quantum systems, offering insights where traditional measures fall short. Additionally, applying derangetropy within statistical mechanics and thermodynamics could deepen our understanding of complex systems at both equilibrium and nonequilibrium states.

In summary, derangetropy represents a significant conceptual and practical advancement in information theory, opening new avenues for theoretical exploration and practical application. Future research will likely expand its utility, revealing further insights into the dynamics of information and its potential to enhance the design and function of deep neural networks and other complex systems.

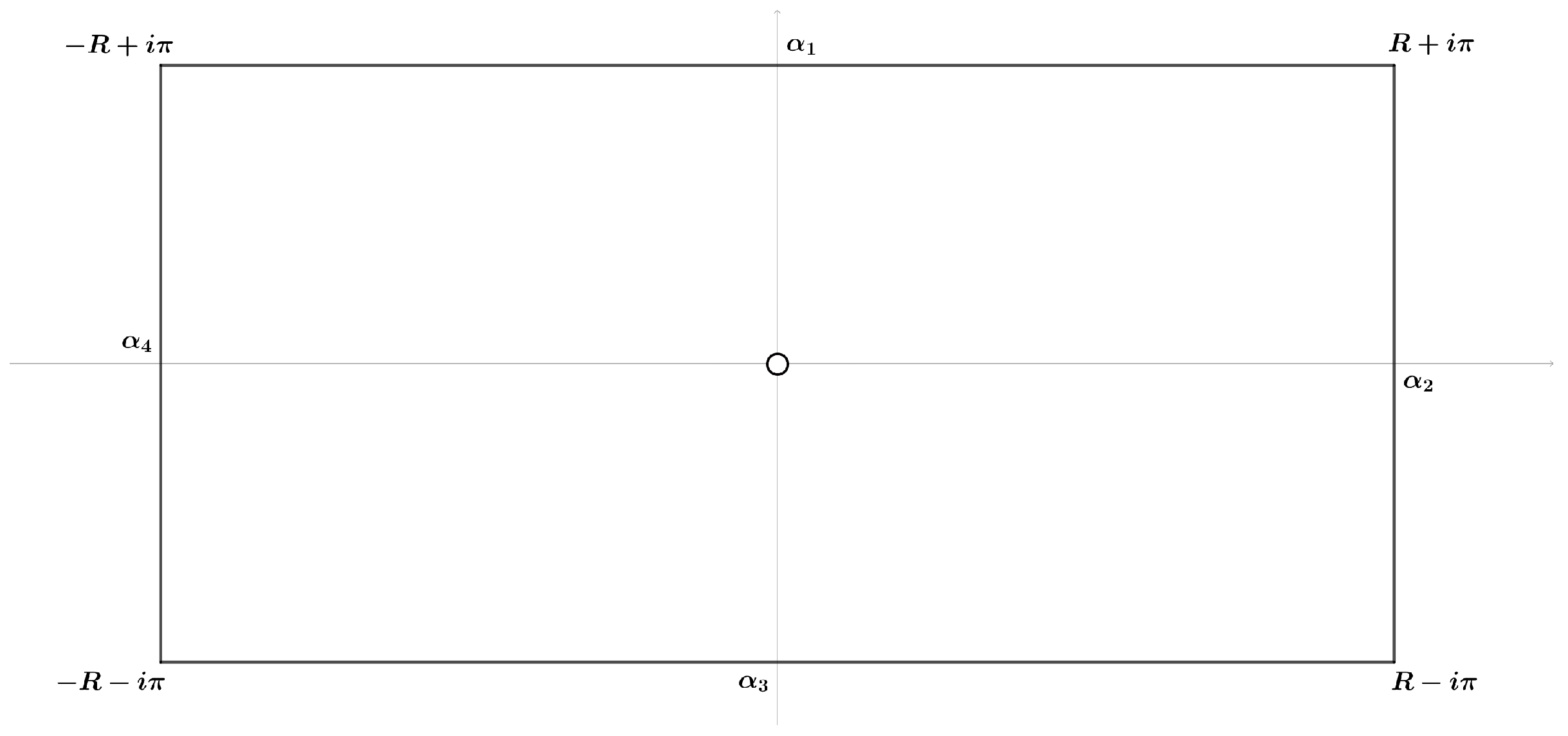

7.

Let us compute by defining

By substituting , we get

which in turn yields

Now consider the following function

This function is meromorphic on the strip

and its only point is located at having

Furthermore, consider the rectangular clockwise path , as depicted in Figure 5, which is composed of , yielding

Then, for any sequence , we have that . In turn, this leads to approach infinity. For this reason, and both become zero as . By further noting that , one obtains which yields

Thus,

References

- Shannon, C.E. A Mathematical Theory of Communication. Bell System Technical Journal 1948, 27, 379–423.

- Petty, G.W. On some shortcomings of Shannon entropy as a measure of information content in indirect measurements of continuous variables. Journal of Atmospheric and Oceanic Technology 2018, 35, 1011–1021.

- Cincotta, P.M.; Giordano, C.M.; Silva, R.A.; Beaugé, C. The Shannon entropy: An efficient indicator of dynamical stability. Physica D: Nonlinear Phenomena 2021, 417, 132816.

- Rényi, A. On measures of entropy and information. Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Contributions to the Theory of Statistics 1961, pp. 547–561.

- Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. Journal of Statistical Physics 1988, 52, 479–487.

Figure 1.

Plots of probability density functions (left) and derangetropy functionals (right) for , , , , and distributions.

Figure 2.

Plots of total (solid line), structural (dashed line) and oscillatory (dotted line) informational energies for distribution.

Figure 3.

Plots of (solid line) and (dashed line) for distribution.

Figure 4.

Plots of (solid line), (dashed line) and (dotted line) for distribution.

Figure 5.

Schematic of the rectangular clockwise integration path.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Copyright: This open access article is published under a Creative Commons CC BY 4.0 license, which permits free download, distribution, and reuse, provided that the author and preprint are cited in any reuse.
