Submitted: 08 September 2024

Posted: 09 September 2024

Abstract

Keywords:

1. Introduction

- A novel cost-effective multi-time series forecasting system (MSFS) for dynamic cloud resource reservation planning.

- A context-aware multi-time series optimization method called similarity-based time-series grouping (STG) for scalable, automated, and feature-based time series segmentation.

- A novel hybrid ensemble anomaly detection algorithm (HEADA).

- A multifaceted evaluation of the multi-time series forecasting system (MSFS) using a real-life dataset from a production cloud environment.

- FinOps-driven qualitative and quantitative assessment of dynamic resource reservation plans.

- Experimental evidence that the group-specific forecasting model (GSFM) approach presented in this study outperforms both the global forecasting model (GFM) and the local forecasting model (LFM) approaches, yielding an average cost reduction of up to 44.71% in cloud environments.
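
The difference between the three modelling concepts named in the contributions can be illustrated with a minimal sketch. It assumes the usual reading of the terms: a GFM is a single model trained on all series, an LFM is one model per series, and a GSFM is one model per group of similar series. The function name `training_sets`, the group labels, and the grouping of VM identifiers are hypothetical and are not taken from the paper's implementation.

```python
from collections import defaultdict


def training_sets(series_groups, strategy):
    """Map each model to the series ids it would be trained on.

    series_groups: dict of series id -> group label (hypothetical labels,
                   e.g. produced by some similarity-based grouping step).
    strategy:      "GFM", "GSFM", or "LFM".
    """
    ids = sorted(series_groups)
    if strategy == "GFM":
        # One global model sees every series.
        return {"global": ids}
    if strategy == "LFM":
        # One local model per individual series.
        return {f"local:{sid}": [sid] for sid in ids}
    if strategy == "GSFM":
        # One model per group of similar series.
        by_group = defaultdict(list)
        for sid in ids:
            by_group[series_groups[sid]].append(sid)
        return {f"group:{g}": members for g, members in sorted(by_group.items())}
    raise ValueError(f"unknown strategy: {strategy}")


# Illustrative assignment of four VMs to two groups (invented for this sketch).
groups = {"VM01": "A", "VM02": "A", "VM03": "B", "VM04": "B"}
for name in ("GFM", "GSFM", "LFM"):
    print(name, training_sets(groups, name))
```

Under these assumptions, the GSFM sits between the two extremes: it trains fewer models than the LFM while keeping each model's training data more homogeneous than the GFM's.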

2. Related Work

2.1. Time Series Forecasting

2.2. Cloud Resource Usage Optimization

2.3. Summary

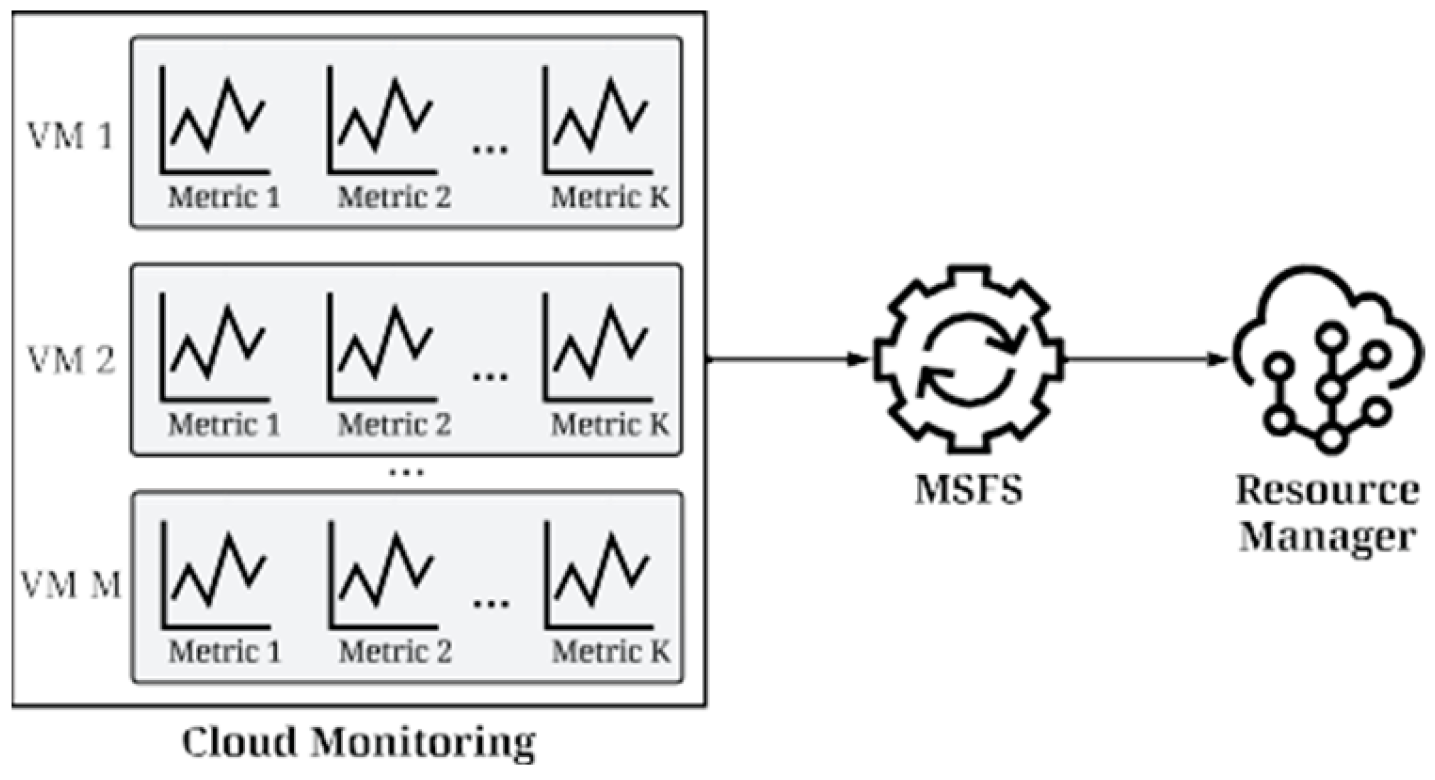

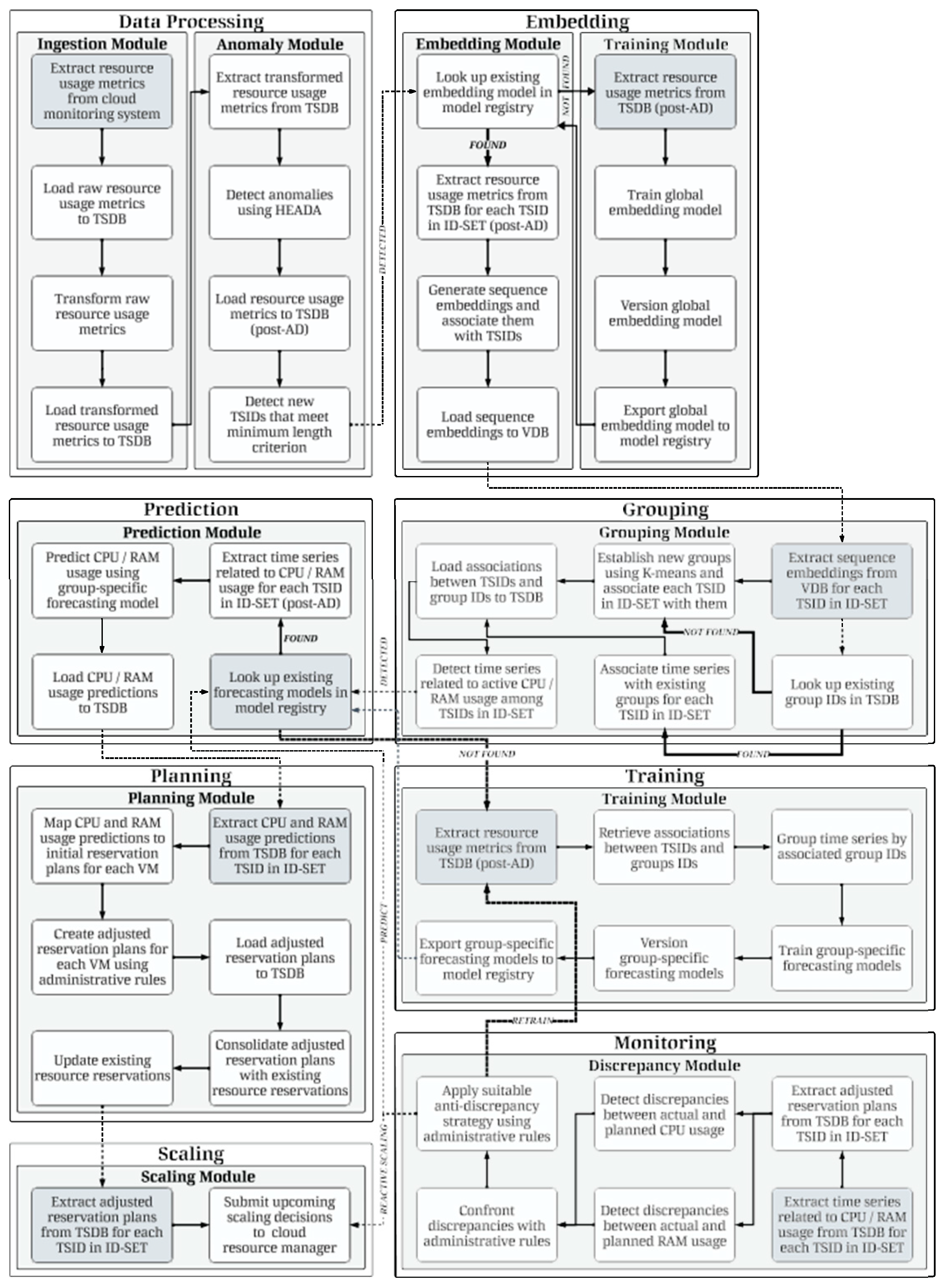

3. Multi-Time Series Forecasting System

3.1. System Overview

| Evaluation metric | Resource | Method | VM01 | VM02 | VM03 | VM04 | VM05 | VM06 | VM07 | VM08 |
|---|---|---|---|---|---|---|---|---|---|---|
| MAE | CPU | GFM | 0.123 | 0.209 | 3.282 | 1.653 | 3.863 | 2.656 | 1.510 | 2.787 |
| MAE | CPU | GSFM | 0.025 | 0.249 | 2.788 | 1.674 | 3.552 | 2.882 | 1.479 | 2.775 |
| MAE | CPU | LFM | 1.019 | 0.308 | 2.966 | 2.379 | 12.511 | 4.156 | 4.503 | 2.910 |
| MAE | RAM | GFM | 1.057 | 0.288 | 0.850 | 1.173 | 1.152 | 1.012 | 0.841 | 0.633 |
| MAE | RAM | GSFM | 0.216 | 0.072 | 0.724 | 0.617 | 1.284 | 0.994 | 0.475 | 0.704 |
| MAE | RAM | LFM | 8.764 | 0.285 | 1.024 | 4.270 | 2.291 | 1.270 | 3.712 | 1.364 |
| RMSE | CPU | GFM | 0.123 | 0.370 | 3.700 | 1.993 | 4.335 | 3.066 | 1.758 | 3.405 |
| RMSE | CPU | GSFM | 0.031 | 0.369 | 3.205 | 2.046 | 4.109 | 3.208 | 1.773 | 3.414 |
| RMSE | CPU | LFM | 1.022 | 0.396 | 3.390 | 2.698 | 13.027 | 4.743 | 4.785 | 3.429 |
| RMSE | RAM | GFM | 1.061 | 0.296 | 0.938 | 1.213 | 1.368 | 1.160 | 0.884 | 0.789 |
| RMSE | RAM | GSFM | 0.264 | 0.078 | 0.846 | 0.658 | 1.481 | 1.178 | 0.523 | 0.836 |
| RMSE | RAM | LFM | 8.793 | 0.288 | 0.179 | 4.336 | 2.426 | 1.333 | 3.761 | 1.489 |
| MdAE | CPU | GFM | 0.128 | 0.085 | 3.155 | 1.460 | 3.819 | 2.438 | 1.453 | 2.290 |
| MdAE | CPU | GSFM | 0.022 | 0.149 | 2.652 | 1.391 | 3.180 | 2.910 | 1.314 | 2.441 |
| MdAE | CPU | LFM | 1.045 | 0.260 | 2.857 | 2.420 | 13.348 | 4.792 | 4.752 | 2.424 |
| MdAE | RAM | GFM | 1.102 | 0.298 | 0.823 | 1.217 | 1.052 | 0.911 | 0.869 | 0.529 |
| MdAE | RAM | GSFM | 0.189 | 0.071 | 0.670 | 0.617 | 1.210 | 0.859 | 0.473 | 0.635 |
| MdAE | RAM | LFM | 8.984 | 0.274 | 0.980 | 4.363 | 2.368 | 1.288 | 3.784 | 1.419 |
| FR | CPU | GFM | 1.000 | 0.383 | 0.269 | 0.363 | 0.352 | 0.441 | 0.351 | 0.436 |
| FR | CPU | GSFM | 1.000 | 0.792 | 0.397 | 0.443 | 0.369 | 0.315 | 0.458 | 0.465 |
| FR | CPU | LFM | 1.000 | 0.818 | 0.402 | 0.182 | 0.038 | 0.150 | 0.022 | 0.523 |
| FR | RAM | GFM | 1.000 | 0.000 | 0.327 | 0.000 | 0.405 | 0.351 | 0.000 | 0.349 |
| FR | RAM | GSFM | 1.000 | 0.000 | 0.426 | 0.000 | 0.324 | 0.373 | 0.000 | 0.281 |
| FR | RAM | LFM | 1.000 | 0.000 | 0.843 | 0.000 | 0.133 | 0.286 | 0.000 | 0.077 |
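
For reference, the three error metrics reported above can be computed as in the following minimal sketch, which assumes their standard definitions (mean absolute error, root mean squared error, and median absolute error). The sample values are invented for illustration and do not come from the dataset. FR is not defined in this excerpt, so it is left out rather than guessed.

```python
import math
from statistics import median


def mae(actual, predicted):
    """Mean absolute error: average of |actual - predicted|."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error: square root of the mean squared difference."""
    return math.sqrt(
        sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)
    )


def mdae(actual, predicted):
    """Median absolute error: median of |actual - predicted|."""
    return median(abs(a - p) for a, p in zip(actual, predicted))


# Invented usage values (e.g. percent CPU usage) for illustration only.
actual = [10.0, 12.0, 15.0, 11.0]
predicted = [11.0, 12.0, 13.0, 15.0]

print(mae(actual, predicted))   # 1.75
print(rmse(actual, predicted))  # sqrt(5.25), about 2.291
print(mdae(actual, predicted))  # 1.5
```

Because RMSE squares the errors before averaging, it is never smaller than MAE on the same data, while MdAE is less sensitive to a few large misses.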

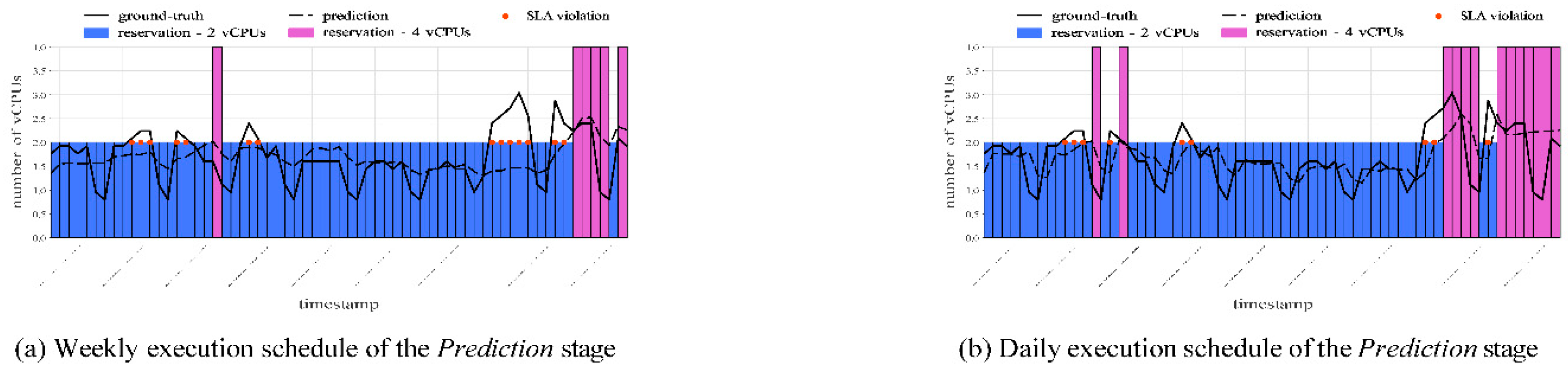

4.4. Dynamic Resource Reservation Planning

| Reference type | Cost reduction with MSFS (%) | Daily cost without MSFS (USD) | Daily cost with MSFS (USD) | CPU usage with MSFS (%) | RAM usage with MSFS (%) | Scaling events | Violation events | Availability (%) |
|---|---|---|---|---|---|---|---|---|
| e2-standard-2 | 0.00 | 2.07 | 2.07 | 17.69 | 22.13 | 0.00 | 0.00 | 100.00 |
| e2-standard-4 | 39.40 | 4.14 | 2.51 | 35.39 | 38.17 | 0.63 | 0.13 | 99.81 |
| e2-standard-8 | 48.24 | 8.29 | 4.29 | 48.88 | 44.40 | 1.13 | 0.63 | 99.04 |
| e2-standard-16 | 50.74 | 16.58 | 8.17 | 55.41 | 56.86 | 2.25 | 2.00 | 96.93 |
| e2-standard-32 | 57.58 | 33.15 | 14.06 | 60.60 | 57.52 | 2.25 | 2.13 | 96.75 |

| Reference type | Cost reduction with MSFS (%) | Daily cost without MSFS (USD) | Daily cost with MSFS (USD) | CPU usage with MSFS (%) | RAM usage with MSFS (%) | Scaling events | Violation events | Availability (%) |
|---|---|---|---|---|---|---|---|---|
| e2-standard-2 | 0.00 | 2.07 | 2.07 | 17.69 | 22.13 | 0.00 | 0.00 | 100.00 |
| e2-standard-4 | 20.87 | 4.14 | 3.28 | 29.52 | 36.42 | 0.75 | 0.00 | 100.00 |
| e2-standard-8 | 34.02 | 8.29 | 5.47 | 35.33 | 40.23 | 1.50 | 0.00 | 100.00 |
| e2-standard-16 | 36.98 | 16.58 | 10.45 | 35.06 | 41.12 | 4.75 | 0.25 | 99.62 |
| e2-standard-32 | 44.71 | 33.15 | 18.33 | 36.07 | 39.75 | 4.75 | 0.25 | 99.62 |
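
The cost-reduction column appears to follow from the two daily-cost columns. The sketch below assumes the reduction is the relative difference between the daily cost without and with MSFS; the function name `cost_reduction_pct` is hypothetical. Because the tabulated costs are rounded to two decimals, recomputed values can differ slightly from the published ones (for example, e2-standard-4 in the table directly above recomputes to about 20.77 rather than 20.87).

```python
def cost_reduction_pct(cost_without, cost_with):
    """Relative daily cost saving in percent (assumed formula)."""
    if cost_without <= 0:
        raise ValueError("cost_without must be positive")
    return (cost_without - cost_with) / cost_without * 100.0


# (daily cost without MSFS, daily cost with MSFS), copied from the table above.
rows = {
    "e2-standard-8": (8.29, 5.47),
    "e2-standard-32": (33.15, 18.33),
}

for name, (without_msfs, with_msfs) in rows.items():
    print(f"{name}: {cost_reduction_pct(without_msfs, with_msfs):.2f}%")
# e2-standard-8: 34.02%
# e2-standard-32: 44.71%
```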

4.5. Summary

5. Conclusions

CRediT Authorship Contribution Statement

Declaration of Competing Interest

Data Availability

Acknowledgments

References

- Alqahtani, D., 2023. Leveraging sparse auto-encoding and dynamic learning rate for efficient cloud workloads prediction. IEEE Access.

- Audibert, J., Michiardi, P., Guyard, F., Marti, S., Zuluaga, M.A., 2020. Usad: Unsupervised anomaly detection on multivariate time series, in: Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Association for Computing Machinery, New York, NY, USA. p. 3395–3404.

- Bader, J., Lehmann, F., Thamsen, L., Leser, U., Kao, O., 2024. Lotaru: Locally predicting workflow task runtimes for resource management on heterogeneous infrastructures. Future Generation Computer Systems 150, 171–185.

- Bandara, K., Bergmeir, C., Hewamalage, H., 2021a. LSTM-MSNet: Leveraging forecasts on sets of related time series with multiple seasonal patterns. IEEE Transactions on Neural Networks and Learning Systems 32, 1586–1599.

- Bandara, K., Bergmeir, C., Smyl, S., 2020. Forecasting across time series databases using recurrent neural networks on groups of similar series: A clustering approach. Expert Systems with Applications 140, 112896.

- Bandara, K., Hewamalage, H., Liu, Y.H., Kang, Y., Bergmeir, C., 2021b. Improving the accuracy of global forecasting models using time series data augmentation. Pattern Recognition 120, 108148.

- Barbado, A., Corcho, Ó., Benjamins, R., 2022. Rule extraction in unsupervised anomaly detection for model explainability: Application to oneclass svm. Expert Systems with Applications 189, 116100.

- Barut, C., Yildirim, G., Tatar, Y., 2024a. An intelligent and interpretable rule-based metaheuristic approach to task scheduling in cloud systems. Knowledge-Based Systems 284, 111241.

- Barut, C., Yildirim, G., Tatar, Y., 2024b. An intelligent and interpretable rule-based metaheuristic approach to task scheduling in cloud systems. Knowledge-Based Systems 284, 111241.

- Behera, I., Sobhanayak, S., 2024. Task scheduling optimization in heterogeneous cloud computing environments: A hybrid ga-gwo approach. Journal of Parallel and Distributed Computing 183, 104766.

- Cai, B., Yang, S., Gao, L., Xiang, Y., 2023. Hybrid variational autoencoder for time series forecasting. arXiv preprint arXiv:2303.07048.

- Cai, T.T., Ma, R., 2022. Theoretical foundations of t-sne for visualizing high-dimensional clustered data. Journal of Machine Learning Research 23, 1–54.

- Chen, W.J., Yao, J.J., Shao, Y.H., 2023. Volatility forecasting using deep neural network with time-series feature embedding. Economic Research-Ekonomska Istraživanja 36, 1377–1401.

- Daraghmeh, M., Agarwal, A., Manzano, R., Zaman, M., 2021. Time series forecasting using facebook prophet for cloud resource management, in: 2021 IEEE International Conference on Communications Workshops (ICC Workshops), pp. 1–6.

- Dogani, J., Khunjush, F., Mahmoudi, M.R., Seydali, M., 2023. Multivariate workload and resource prediction in cloud computing using cnn and gru by attention mechanism. The Journal of Supercomputing 79, 3437–3470.

- Froese, V., Jain, B., Rymar, M., Weller, M., 2023. Fast exact dynamic time warping on run-length encoded time series. Algorithmica 85, 492–508.

- Gabhane, J.P., Pathak, S., Thakare, N.M., 2023. A novel hybrid multi-resource load balancing approach using ant colony optimization with tabu search for cloud computing. Innovations in Systems and Software Engineering 19, 81–90.

- Optimizing multi-time series forecasting for enhanced cloud resource utilization.

- Gagolewski, M., Bartoszuk, M., Cena, A., 2021. Are cluster validity measures (in)valid? Information Sciences 581, 620–636.

- Geetha, P., Vivekanandan, S., Yogitha, R., Jeyalakshmi, M., 2024. Optimal load balancing in cloud: Introduction to hybrid optimization algorithm. Expert Systems with Applications 237, 121450.

- Gupta, R., Saxena, D., Gupta, I., Makkar, A., Singh, A.K., 2022a. Quantum machine learning driven malicious user prediction for cloud network communications. IEEE Networking Letters 4, 174–178.

- Gupta, R., Saxena, D., Gupta, I., Singh, A.K., 2022b. Differential and triphase adaptive learning-based privacy-preserving model for medical data in cloud environment. IEEE Networking Letters 4, 217–221.

- Hao, Y., Zhao, C., Li, Z., Si, B., Unger, H., 2024. A learning and evolution-based intelligence algorithm for multi-objective heterogeneous cloud scheduling optimization. Knowledge-Based Systems 286, 111366.

- Hewamalage, H., Bergmeir, C., Bandara, K., 2022. Global models for time series forecasting: A simulation study. Pattern Recognition 124, 108441.

- Jastrzebska, A., Nápoles, G., Salgueiro, Y., Vanhoof, K., 2022. Evaluating time series similarity using concept-based models. Knowledge-Based Systems 238, 107811.

- Jeong, B., Baek, S., Park, S., Jeon, J., Jeong, Y.S., 2023. Stable and efficient resource management using deep neural network on cloud computing. Neurocomputing 521, 99–112.

- Jiang, J., Liu, F., Ng, W.W., Tang, Q., Zhong, G., Tang, X., Wang, B., 2023. Aerf: Adaptive ensemble random fuzzy algorithm for anomaly detection in cloud computing. Computer Communications 200, 86–94.

- Kim, S., Chung, E., Kang, P., 2023. Feat: A general framework for feature-aware multivariate time-series representation learning. Knowledge-Based Systems 277, 110790.

- Li, H., Zheng, W., Tang, F., Zhu, Y., Huang, J., 2023a. Few-shot time-series anomaly detection with unsupervised domain adaptation. Information Sciences 649, 119610.

- Parekh, R., Smith, C., 2024. Innovative AI-driven software for fire safety design: Implementation in vast open structure. World Journal of Advanced Engineering Technology and Sciences 12, 741–750.

- Efficient time series augmentation methods, in: 2020 13th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), pp. 1004–1009.

- Liu, P., Liu, J., Wu, K., 2020b. Cnn-fcm: System modeling promotes stability of deep learning in time series prediction. Knowledge-Based Systems 203, 106081.

- Liu, Z., Zhang, J., Li, Y., 2022. Towards better time series prediction with model-independent, low-dispersion clusters of contextual subsequence embeddings. Knowledge-Based Systems 235, 107641.

- Mahadevan, A., Mathioudakis, M., 2024. Cost-aware retraining for machine learning. Knowledge-Based Systems 293, 111610.

- Nalmpantis, C., Vrakas, D., 2019. Signal2vec: Time series embedding representation, in: International conference on engineering applications of neural networks, Springer. pp. 80–90.

- Nawrocki, P., Smendowski, M., 2023. Long-term prediction of cloud resource usage in high-performance computing, in: International Conference on Computational Science, Springer. pp. 532–546.

- Nawrocki, P., Smendowski, M., 2024a. Finops-driven optimization of cloud resource usage for high-performance computing using machine learning. Journal of Computational Science, 102292.

- Nawrocki, P., Smendowski, M., 2024b. Optimization of the use of cloud computing resources using exploratory data analysis and machine learning. Journal of Artificial Intelligence and Soft Computing Research 14, 287–308.

- Nawrocki, P., Sus, W., 2022. Anomaly detection in the context of long-term cloud resource usage planning. Knowledge and Information Systems 64, 2689–2711.

- Osypanka, P., Nawrocki, P., 2023. Qos-aware cloud resource prediction for computing services. IEEE Transactions on Services Computing 16, 1346–1357.

- Saxena, D., Gupta, I., Gupta, R., Singh, A.K., Wen, X., 2023a. An ai-driven vm threat prediction model for multi-risks analysis-based cloud cybersecurity. IEEE Transactions on Systems, Man, and Cybernetics: Systems.

- Saxena, D., Gupta, R., Singh, A.K., Vasilakos, A.V., 2023b. Emerging vm threat prediction and dynamic workload estimation for secure resource management in industrial clouds. IEEE Transactions on Automation Science and Engineering.

- Shu, W., Cai, K., Xiong, N.N., 2021. Research on strong agile response task scheduling optimization enhancement with optimal resource usage in green cloud computing. Future Generation Computer Systems 124, 12–20.

- Si, W., Pan, L., Liu, S., 2022. A cost-driven online auto-scaling algorithm for web applications in cloud environments. Knowledge-Based Systems 244, 108523.

- Singh, A.K., Gupta, R., 2022. A privacy-preserving model based on differential approach for sensitive data in cloud environment. Multimedia Tools and Applications 81, 33127–33150.

- Singh, A.K., Swain, S.R., Saxena, D., Lee, C.N., 2023. A bio-inspired virtual machine placement toward sustainable cloud resource management. IEEE Systems Journal.

- Song, K., Yu, Y., Zhang, T., Li, X., Lei, Z., He, H., Wang, Y., Gao, S., 2024. Short-term load forecasting based on ceemdan and dendritic deep learning. Knowledge-Based Systems 294, 111729.

- Storment, J., Fuller, M., 2023. Cloud FinOps. O’Reilly Media, Inc.

- Tran, M.N., Kim, Y., 2024. Optimized resource usage with hybrid auto-scaling system for knative serverless edge computing. Future Generation Computer Systems 152, 304–316.

- Uribarri, G., Mindlin, G.B., 2022. Dynamical time series embeddings in recurrent neural networks. Chaos, Solitons & Fractals 154, 111612.

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., Polosukhin, I., 2017. Attention is all you need. Advances in neural information processing systems 30.

- Wang, Y., Long, H., Zheng, L., Shang, J., 2023. Graphformer: Adaptive graph correlation transformer for multivariate long sequence time series forecasting. Knowledge-Based Systems, 111321.

- Wang, Z., Pei, C., Ma, M., Wang, X., Li, Z., Pei, D., Rajmohan, S., Zhang, D., Lin, Q., Zhang, H., et al., 2024. Revisiting vae for unsupervised time series anomaly detection: A frequency perspective, in: Proceedings of the ACM on Web Conference, pp. 3096–3105.

- Wen, Q., Zhou, T., Zhang, C., Chen, W., Ma, Z., Yan, J., Sun, L., 2022. Transformers in time series: A survey. arXiv preprint arXiv:2202.07125.

- Yadav, H., Thakkar, A., 2024. Noa-lstm: An efficient lstm cell architecture for time series forecasting. Expert Systems with Applications 238, 122333.

- Yi, K., Zhang, Q., Fan, W., Wang, S., Wang, P., He, H., An, N., Lian, D., Cao, L., Niu, Z., 2024. Frequency-domain mlps are more effective learners in time series forecasting. Advances in Neural Information Processing Systems 36.

- Yuan, H., Liao, S., 2024. A time series-based approach to elastic kubernetes scaling. Electronics 13.

- Zhang, F., Guo, T., Wang, H., 2023. Dfnet: Decomposition fusion model for long sequence time-series forecasting. Knowledge-Based Systems 277, 110794.

- Zhu, J., Bai, W., Zhao, J., Zuo, L., Zhou, T., Li, K., 2023. Variational mode decomposition and sample entropy optimization based transformer framework for cloud resource load prediction. Knowledge-Based Systems 280, 111042.

| Feature | Articles |
|---|---|
| Machine learning-based | [4,5,6,11,13,23,27,31,32,51,56,59], [1,2,3,10,17,24,25,28,32,34,36,37,38,39,40,42,47,48,50,52,53,54,57,58,60], [20,21,43,46] |
| Statistical learning-based | [29,36,37,38] |
| Clustering enabled | [5,23,29,32,33] |
| Anomaly detection aware | [2,26,28,36,37,38,39,40,54] |
| Resource reservation | [37,39,40] |
| FinOps aware | [37,40] |
| Forecasting optimization | [5,24,32,33,34,53,57] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).