Submitted: 07 September 2024

Posted: 09 September 2024


Abstract

Keywords:

I. Introduction

- Multilinear Side-Information based Discriminant Analysis (MSIDA) is employed to reduce dimensionality and classify tensor data when complete class labels are unavailable and only side information about sample pairs is known. MSIDA projects the input face tensor into a new multilinear subspace that increases the separation between samples of different classes while reducing the variance among samples of the same class. In addition, MSIDA reduces the dimensionality of each tensor mode [9].

- We empirically evaluate the proposed approach for face-based identity verification on the challenging CelebA face benchmark under four resolution settings (high resolution and low resolutions of 16x16, 32x32, and 48x48). Comparisons against state-of-the-art methods demonstrate the effectiveness of our approach.
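The MSIDA idea described above can be illustrated with a minimal sketch. It assumes second-order samples (matrices) rather than the higher-order face tensors used in the paper, builds a within scatter from similar (same-identity) pairs and a between scatter from dissimilar pairs in the spirit of side-information discriminant analysis, and alternates a generalized eigenproblem per mode while the other mode's projection is held fixed. The helper names (`pair_scatter`, `msida_2d`, `project`), the regularizer `reg`, the truncated-identity initialization, and the iteration count are hypothetical choices for illustration, not the authors' implementation. A higher-order version would project all remaining modes before forming each mode's scatter.

```python
import numpy as np


def pair_scatter(diffs, U_other, mode):
    """Scatter of pair differences along `mode`, after projecting the other mode.

    For a matrix D (I1 x I2), the mode-0 unfolding is D and the mode-1 unfolding is D.T.
    """
    size = diffs[0].shape[mode]
    S = np.zeros((size, size))
    for D in diffs:
        Dn = D if mode == 0 else D.T
        P = Dn @ U_other  # reduce the other mode to its target rank
        S += P @ P.T
    return S


def msida_2d(X, similar_pairs, dissimilar_pairs, ranks, n_iter=5, reg=1e-6):
    """Hypothetical MSIDA-style learner for matrix samples; returns [U0, U1]."""
    I1, I2 = X[0].shape
    # Assumed initialization: keep the first r coordinates of each mode.
    U = [np.eye(I1)[:, :ranks[0]], np.eye(I2)[:, :ranks[1]]]
    d_sim = [X[i] - X[j] for i, j in similar_pairs]
    d_dis = [X[i] - X[j] for i, j in dissimilar_pairs]
    for _ in range(n_iter):
        for mode in (0, 1):
            U_other = U[1 - mode]
            Sw = pair_scatter(d_sim, U_other, mode)  # within: similar pairs
            Sb = pair_scatter(d_dis, U_other, mode)  # between: dissimilar pairs
            Sw += reg * np.eye(Sw.shape[0])          # keep Sw positive definite
            # Solve Sb u = lambda Sw u by whitening with the Cholesky factor of Sw.
            L_inv = np.linalg.inv(np.linalg.cholesky(Sw))
            evals, V = np.linalg.eigh(L_inv @ Sb @ L_inv.T)
            top = V[:, np.argsort(evals)[::-1][:ranks[mode]]]
            U[mode] = L_inv.T @ top
    return U


def project(Xi, U):
    """Mode-wise projection: reduces an I1 x I2 sample to r1 x r2."""
    return U[0].T @ Xi @ U[1]


# Toy usage on random data (illustrative only).
rng = np.random.default_rng(0)
X = [rng.normal(size=(8, 6)) for _ in range(10)]
similar = [(0, 1), (2, 3), (4, 5)]
dissimilar = [(0, 2), (1, 4), (3, 6), (7, 8)]
U = msida_2d(X, similar, dissimilar, ranks=(3, 2))
Y = project(X[0], U)
print(Y.shape)  # (3, 2)
```

Each mode is reduced independently (8 to 3 and 6 to 2 here), which is the per-mode dimensionality reduction the text attributes to MSIDA; the whitening step is one standard way to solve the generalized eigenproblem with NumPy alone.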

II. Multilinear Side-Information based Discriminant Analysis

III. Face Pair Matching Using MSIDA

A. Feature Extraction

B. Matching

IV. Experiments

A. Datasets

B. Parameter Settings

C. Results

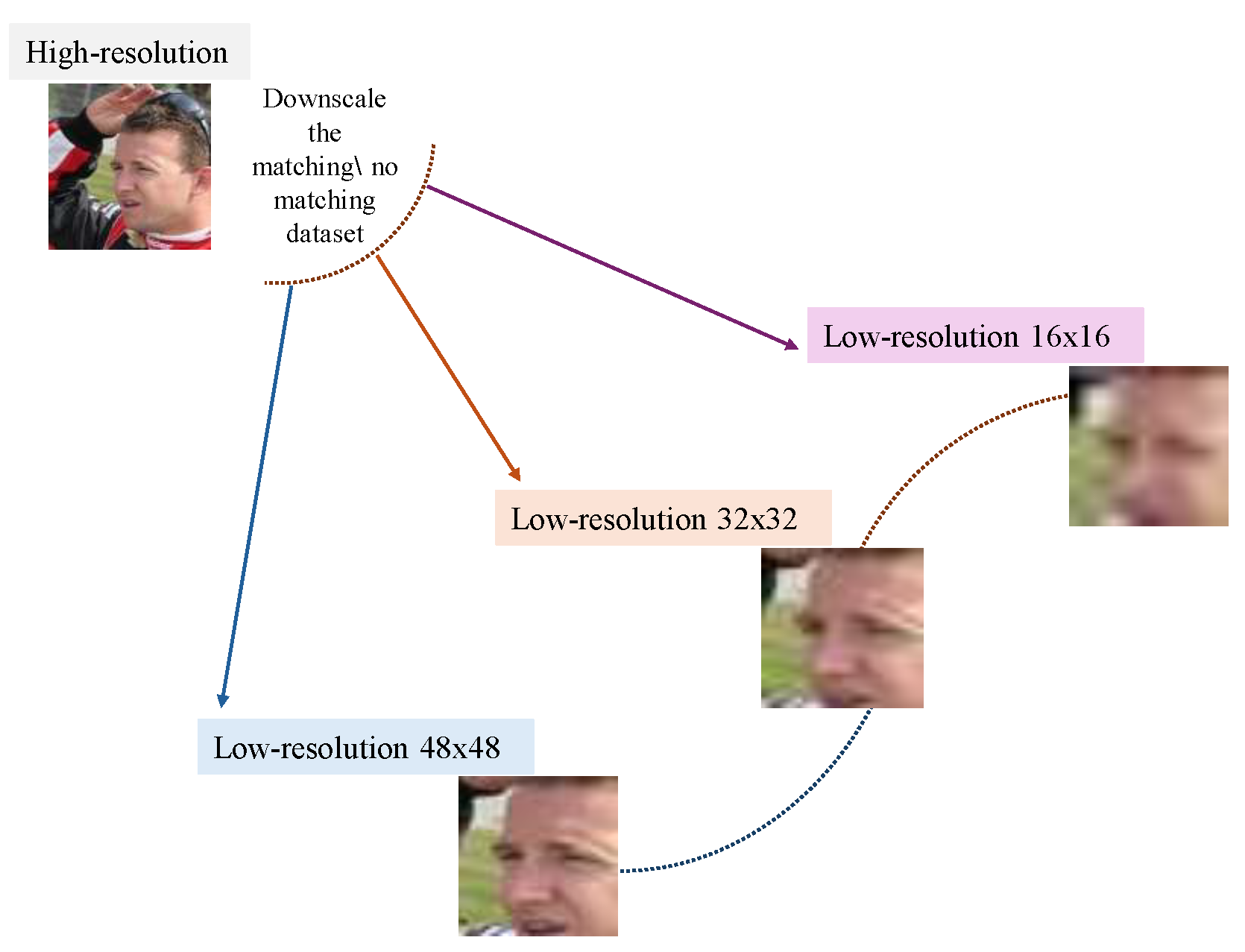

1) Evaluation of Low-Resolution Images

2) Comparison with the State of the Art in High-Resolution (HR) and Low-Resolution (LR) Settings

V. Conclusion

References

- A. Chouchane, M. Belahcene, A. Ouamane, and S. Bourennane, “3D face recognition based on histograms of local descriptors,” in 2014 4th International Conference on Image Processing Theory, Tools and Applications (IPTA), pp. 1–5, IEEE, 2014.

- M. Belahcène, A. Ouamane, and A. T. Ahmed, “Fusion by combination of scores multi-biometric systems,” in 3rd European Workshop on Visual Information Processing, pp. 252–257, IEEE, 2011.

- R. Aliradi, A. Belkhir, A. Ouamane, and A. S. Elmaghraby, “DIEDA: discriminative information based on exponential discriminant analysis combined with local features representation for face and kinship verification,” Multimedia Tools and Applications, pp. 1–18, 2018.

- A. Ouamane, E. Boutellaa, M. Bengherabi, A. Taleb-Ahmed, and A. Hadid, “A novel statistical and multiscale local binary feature for 2D and 3D face verification,” Computers & Electrical Engineering, vol. 62, pp. 68–80, 2017.

- M. Lal, K. Kumar, R. H. Arain, A. Maitlo, S. A. Ruk, and H. Shaikh, “Study of face recognition techniques: A survey,” International Journal of Advanced Computer Science and Applications, vol. 9, no. 6, 2018.

- O. Laiadi, A. Ouamane, E. Boutellaa, A. Benakcha, A. Taleb-Ahmed, and A. Hadid, “Kinship verification from face images in discriminative subspaces of color components,” Multimedia Tools and Applications, vol. 78, pp. 16465–16487, 2019.

- A. Chouchane, M. Bessaoudi, E. Boutellaa, and A. Ouamane, “A new multidimensional discriminant representation for robust person re-identification,” Pattern Analysis and Applications, vol. 26, no. 3, pp. 1191–1204, 2023.

- A. Ouamane, A. Chouchane, E. Boutellaa, M. Belahcene, S. Bourennane, and A. Hadid, “Efficient tensor-based 2D+3D face verification,” IEEE Transactions on Information Forensics and Security, vol. 12, no. 11, pp. 2751–2762, 2017.

- M. Bessaoudi, A. Ouamane, M. Belahcene, A. Chouchane, E. Boutellaa, and S. Bourennane, “Multilinear side-information based discriminant analysis for face and kinship verification in the wild,” Neurocomputing, vol. 329, pp. 267–278, 2019.

- A. Ouamane, M. Bengherabi, A. Hadid, and M. Cheriet, “Side-information based exponential discriminant analysis for face verification in the wild,” in 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), vol. 2, pp. 1–6, IEEE, 2015.

- A. Chouchane, A. Ouamane, E. Boutellaa, M. Belahcene, and S. Bourennane, “3D face verification across pose based on Euler rotation and tensors,” Multimedia Tools and Applications, vol. 77, pp. 20697–20714, 2018.

- A. Chouchane, M. Belahcene, A. Ouamane, and S. Bourennane, “Evaluation of histograms local features and dimensionality reduction for 3D face verification,” Journal of Information Processing Systems, vol. 12, no. 3, pp. 468–488, 2016.

- J. C. L. Chai, T.-S. Ng, C.-Y. Low, J. Park, and A. B. J. Teoh, “Recognizability embedding enhancement for very low-resolution face recognition and quality estimation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9957–9967, 2023.

- M. Belahcene, M. Laid, A. Chouchane, A. Ouamane, and S. Bourennane, “Local descriptors and tensor local preserving projection in face recognition,” in 2016 6th European Workshop on Visual Information Processing (EUVIP), pp. 1–6, IEEE, 2016.

- A. Ouamane, A. Benakcha, M. Belahcene, and A. Taleb-Ahmed, “Multimodal depth and intensity face verification approach using LBP, SLF, BSIF, and LPQ local features fusion,” Pattern Recognition and Image Analysis, vol. 25, pp. 603–620, 2015.

- M. Belahcene, A. Chouchane, and H. Ouamane, “3D face recognition in presence of expressions by fusion regions of interest,” in 2014 22nd Signal Processing and Communications Applications Conference (SIU), pp. 2269–2274, IEEE, 2014.

- I. Serraoui, O. Laiadi, A. Ouamane, F. Dornaika, and A. Taleb-Ahmed, “Knowledge-based tensor subspace analysis system for kinship verification,” Neural Networks, vol. 151, pp. 222–237, 2022.

- O. Guehairia, F. Dornaika, A. Ouamane, and A. Taleb-Ahmed, “Facial age estimation using tensor based subspace learning and deep random forests,” Information Sciences, vol. 609, pp. 1309–1317, 2022.

- M. Khammari, A. Chouchane, A. Ouamane, M. Bessaoudi, Y. Himeur, M. Hassaballah, et al., “High-order knowledge-based discriminant features for kinship verification,” Pattern Recognition Letters, vol. 175, pp. 30–37, 2023.

- A. A. Gharbi, A. Chouchane, A. Ouamane, Y. Himeur, S. Bourennane, et al., “A hybrid multilinear-linear subspace learning approach for enhanced person re-identification in camera networks,” Expert Systems with Applications, p. 125044, 2024.

- O. Laiadi, A. Ouamane, A. Benakcha, A. Taleb-Ahmed, and A. Hadid, “Learning multi-view deep and shallow features through new discriminative subspace for bi-subject and tri-subject kinship verification,” Applied Intelligence, vol. 49, no. 11, pp. 3894–3908, 2019.

- H. Lu, K. N. Plataniotis, and A. N. Venetsanopoulos, “A survey of multilinear subspace learning for tensor data,” Pattern Recognition, vol. 44, no. 7, pp. 1540–1551, 2011.

- M. Safayani and M. T. M. Shalmani, “Three-dimensional modular discriminant analysis (3DMDA): a new feature extraction approach for face recognition,” Computers & Electrical Engineering, vol. 37, no. 5, pp. 811–823, 2011.

- E. Boutellaa, M. B. López, S. Ait-Aoudia, X. Feng, and A. Hadid, “Kinship verification from videos using spatio-temporal texture features and deep learning,” arXiv preprint arXiv:1708.04069, 2017.

- M. Bessaoudi, A. Chouchane, A. Ouamane, and E. Boutellaa, “Multilinear subspace learning using handcrafted and deep features for face kinship verification in the wild,” Applied Intelligence, vol. 51, pp. 3534–3547, 2021.

- O. Parkhi, A. Vedaldi, and A. Zisserman, “Deep face recognition,” in Proceedings of the British Machine Vision Conference (BMVC), British Machine Vision Association, 2015.

- H. V. Nguyen and L. Bai, “Cosine similarity metric learning for face verification,” in Asian Conference on Computer Vision (ACCV), pp. 709–720, Springer, 2010.

- Z. Liu, P. Luo, X. Wang, and X. Tang, “Deep learning face attributes in the wild,” in Proceedings of the International Conference on Computer Vision (ICCV), December 2015.

- Q. Jiao, R. Li, W. Cao, J. Zhong, S. Wu, and H.-S. Wong, “DDAT: Dual domain adaptive translation for low-resolution face verification in the wild,” Pattern Recognition, vol. 120, p. 108107, 2021.

| Setting | MSIDA | SILD |
|---|---|---|
| High-Resolution (HR) | 90.60 | 83.80 |
| LR 16x16 | 75.97 | 67.60 |
| LR 32x32 | 86.50 | 78.30 |
| LR 48x48 | 88.23 | 81.30 |
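The table above compares MSIDA and SILD on face-pair verification at each resolution. Scores of this kind are commonly obtained by thresholding a similarity between the two projected representations of each pair. The sketch below assumes cosine similarity as the matching score and accuracy (%) at one fixed threshold as the metric; neither choice is stated in this section, and `verification_accuracy` is a hypothetical helper.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verification_accuracy(pairs, same_identity, threshold):
    """Percentage of pairs whose accept/reject decision matches the ground truth."""
    correct = 0
    for (a, b), same in zip(pairs, same_identity):
        accepted = cosine_similarity(a, b) >= threshold  # accept as same identity
        correct += accepted == same
    return 100.0 * correct / len(same_identity)


# Toy pairs: two same-identity pairs and two different-identity pairs.
pairs = [([1.0, 0.0], [1.0, 0.0]),
         ([1.0, 0.0], [0.0, 1.0]),
         ([1.0, 1.0], [1.0, 0.9]),
         ([1.0, 0.0], [-1.0, 0.0])]
same_identity = [True, False, True, False]
print(verification_accuracy(pairs, same_identity, threshold=0.5))  # 100.0
```

In a typical protocol the threshold would be tuned on held-out pairs rather than fixed in advance; that selection step is left out of this sketch.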

| Reference | Method | HR | 32x32 |
|---|---|---|---|
| Jiao et al. (2021) [29] | CenterLoss | 56.2 | - |
| | SphereConv w/ linear operator | 54.8 | - |
| | SphereConv w/ cosine operator | 53.6 | - |
| | SphereConv w/ sigmoid operator | 53.3 | - |
| | CosFace | - | 66.2 |
| | SphereFace | - | 66.5 |
| | Baseline | 57.5 | - |
| | CSRI | 61.2 | - |
| | DDAT | 70.6 | - |
| | DDAT w/ target domain labels | 71.2 | - |
| Ours | MSIDA | 90.60 | 86.50 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).