1. Introduction: Theoretical Foundations of the Extended Mind and Embodiment

1.1. The Extended Mind in the Context of the Metaverse

The traditional understanding of human cognition has long confined the workings of the mind within the boundaries of the brain. This perspective, rooted in early neuroscientific studies, views cognitive processes such as memory, thought, and perception as functions solely of the brain’s internal networks of neurons, synapses, and chemicals [1]. In this brain-centric model, the mind is seen as an isolated system, working autonomously to process information from the external world. These processes were believed to be locked within the brain, and the tools we use (notebooks, calculators, or computers) were considered external aids, disconnected from the intrinsic workings of cognition itself. Early cognitive science and neuroscience placed strong emphasis on mapping the neural pathways responsible for specific cognitive functions, assuming that understanding these brain-based mechanisms was the key to understanding cognition as a whole [2].

However, this traditional model of cognition has been challenged by more recent developments in cognitive science, spurred by thinkers like Andy Clark and David Chalmers (1998) [3]. Their Extended Mind Theory proposes a much broader and more inclusive view of cognition, one that extends beyond the confines of the skull. This theory asserts that the mind does not operate in isolation; rather, it is distributed across the brain, body, tools, and environment with which individuals interact. Clark and Chalmers argue that external objects, technologies, and environments play an active role in cognitive processes, functioning as extensions of the mind rather than mere passive aids. This marks a significant departure from traditional views, suggesting that human cognition is not solely internal but also external, involving the tools and environments that shape and support cognitive functions [4].

One of the most influential examples used by Clark and Chalmers to illustrate this theory is the case of Otto, a man with Alzheimer’s disease who uses a notebook to store information he can no longer reliably keep in memory. According to the traditional model, Otto’s memory would be confined to his brain, and the notebook would be seen as a simple external tool. However, the extended mind theory argues that the notebook is, in fact, part of Otto’s cognitive system: it is functionally equivalent to his biological memory. In this way, external devices and tools become integrated into our cognitive processes, reshaping how we perceive, think, and remember [3].

In the digital age, particularly within the immersive environments of the metaverse and extended realities (XR), the extended mind theory has become even more relevant. Technologies such as augmented reality (AR), virtual reality (VR), and mixed reality (MR) are not merely external tools used for cognitive support; they are increasingly integral to shaping how individuals think, learn, and interact with the world around them [5]. These immersive environments allow for real-time interaction and feedback, making digital tools not just facilitators of cognition but active participants. This convergence of the digital and the physical exemplifies the core idea of the extended mind theory: cognition extends beyond the brain and is distributed across both internal neural processes and external technological systems.

Within AR and VR, users engage with digital objects and environments that augment their cognitive capacities, offering new ways to process information, solve problems, and understand complex concepts. For example, when interacting with a virtual environment, the user’s actions, such as manipulating a virtual object, are not just outputs of internal cognitive processes but are shaped by feedback from the external environment. In this way, the digital environment becomes part of the cognitive process itself, functioning as a cognitive partner. Technologies like eye-tracking and gesture recognition enable seamless interaction with these environments, allowing the user to offload cognitive tasks onto the external world [6]. This offloading exemplifies how immersive environments in the metaverse blur the line between internal cognition and external augmentation, creating a continuum between the mind and the tools it interacts with [7].

The metaverse, as a convergence of immersive virtual environments, provides fertile ground for exploring the extended mind. In these environments, virtual worlds are not passive; they are dynamic, interactive spaces that respond to the user’s actions and cognitive state. Through multimodal interfaces, ranging from simple input devices like keyboards and mice to advanced systems such as eye-tracking, haptic feedback, and brain-computer interfaces (BCIs), users can manipulate and navigate these worlds in ways that extend their cognitive capabilities. These tools do not simply assist cognition; they become integral components of the cognitive process, shaping how information is processed and how experiences are interpreted. Haptic feedback, for instance, allows users to experience tactile sensations in a virtual world, enabling them to engage more deeply with virtual objects, which in turn alters their cognitive engagement with the environment [8,9,10,11]. Similarly, BCIs enable users to control virtual objects directly through neural activity, bypassing the need for physical input devices, further demonstrating the integration of external technology into the cognitive system [12].

These immersive tools and environments in the metaverse mirror the central tenets of the extended mind theory: cognition is not confined to the brain but is distributed across the physical and digital tools that enable and extend our thinking capabilities [3]. As the boundaries between the physical and digital worlds continue to blur, the extended mind theory provides a compelling framework for understanding how our cognitive systems are evolving in response to new technologies. In the context of the metaverse, human cognition is increasingly augmented by digital tools, creating new possibilities for learning, problem-solving, and interaction that transcend the limitations of biological cognition alone [13]. As XR technologies continue to develop, they are likely to play an increasingly central role in expanding the boundaries of human cognition, allowing us to integrate more seamlessly with the external world, both physical and digital.

1.2. Virtual Tools as Cognitive Extensions

Within the metaverse, digital environments and virtual objects do not merely support cognition; they actively shape and transform it. These environments provide users with enhanced cognitive capabilities by integrating external tools and technologies that allow for interactions impossible in the physical world. For example, in VR learning environments, users can engage in highly immersive and interactive tasks, such as exploring the human body at a cellular level, conducting complex physics experiments, or manipulating molecular structures. These tasks, which would be challenging or even impossible in the real world due to constraints of time, space, or resources, become feasible and intuitive within virtual environments. This creates a form of cognitive scaffolding in which virtual tools offload information-processing tasks from the brain to the environment, enhancing the user’s ability to understand and retain complex concepts [13]. The immersive nature of VR enables learners to interact directly with the content, deepening their engagement and making abstract ideas more tangible and easier to comprehend.

Similarly, BCIs allow users to interact with virtual environments using neural signals, bypassing traditional input methods such as keyboards or controllers. BCIs represent a radical extension of cognition into the digital realm, enabling users to control virtual objects, navigate digital landscapes, and solve complex problems through direct neural activity. This type of interaction not only facilitates a more intuitive and seamless user experience but also creates new avenues for cognitive offloading and enhancement. Cognitive tasks such as spatial navigation and problem-solving, which were once confined to the brain and body, are now distributed across the brain, body, and virtual tools [12]. In this sense, the metaverse, through the use of BCIs and other advanced interfaces, becomes a cognitive partner, augmenting the brain’s natural abilities and allowing users to perform tasks beyond their biological capabilities.

As technologies in the metaverse evolve, they increasingly take on roles that traditional cognitive tools cannot. For example, haptic feedback systems allow users to feel the texture, weight, and resistance of virtual objects. This form of sensory feedback engages the sensory-motor systems, which are critical to embodied cognition, the idea that cognition is deeply rooted in the body’s interactions with the physical world [8,9,10,11]. In virtual environments, haptic feedback allows users to engage in tactile interactions that simulate real-world experiences, enabling them to "feel" virtual objects as if they were physical. This sensory engagement transforms how users perceive and interact with the digital world, influencing their decision-making processes, motor skills, and learning outcomes.

These sensory experiences are not passive; they actively shape how users interact with virtual environments. By providing tactile and kinesthetic feedback, haptic systems enhance the sense of presence in the metaverse, making users feel as though they are physically present within the digital space. This heightened sense of presence has been shown to improve performance in tasks such as object manipulation, spatial reasoning, and complex problem-solving [8,9,14,15]. The digital environment, through these interfaces, becomes a cognitive partner, working in tandem with the user’s brain and body to extend cognitive capabilities beyond their natural limits. Multimodal interactions, which combine visual, auditory, and tactile inputs, create an enriched environment where cognitive processes such as attention, memory, and learning are enhanced by the simultaneous engagement of multiple senses [16].

Through the use of such interfaces, the digital environment becomes more than just a space for interaction; it becomes a co-constructive agent in the cognitive process. Users can offload tasks onto the virtual environment, allowing them to focus their cognitive resources on higher-level thinking, creativity, and problem-solving. The metaverse, through its integration of multimodal feedback systems, provides users with an unprecedented degree of cognitive augmentation. By extending the user’s mind beyond biological constraints, these technologies foster new forms of cognition that were previously unattainable, offering a glimpse into the future of human-computer interaction and cognitive enhancement [5].

1.3. Embodied Experiences in the Metaverse

The concept of embodiment is crucial for understanding the extended mind within the context of the metaverse. Embodiment refers to the deep integration of the mind, body, and environment in cognitive processes. It suggests that cognition does not occur solely within the brain but is shaped by our bodily interactions with the physical world. In the metaverse, users can embody digital avatars that mimic real-world physicality, creating immersive experiences where cognitive processes such as spatial reasoning, memory, and attention are enhanced [17]. The ability to embody a virtual body in this way blurs the boundaries between internal cognition and external technology, reinforcing the central tenets of the extended mind theory.

The virtual body, or avatar, becomes an extension of the user’s physical self, facilitating interaction with the virtual environment and allowing the user to engage in complex tasks in new and intuitive ways [18,19]. This sense of embodiment in the metaverse creates a profound psychological effect, as users experience cognitive processes such as social interaction, decision-making, and problem-solving within the virtual world just as they would in the real world. The degree of embodiment experienced by users can deeply influence their behavior and cognitive engagement in virtual environments.

1.3.1. The Role of Illusions in Embodied Experiences

Within the metaverse, the sense of embodiment is not a simple byproduct of interacting with a virtual environment; it is actively constructed through several illusions that shape how users experience their virtual selves and surroundings. According to Slater (2018) [20], four key illusions play an essential role in creating the immersive and embodied experience within virtual worlds: placement illusions, plausibility illusions, embodiment illusions, and time illusions.

Placement Illusions: The first illusion is the placement illusion, which refers to the sense that the user’s body and consciousness are physically present within the virtual environment. This illusion is critical in fostering immersion, making users feel as though they have truly entered the virtual world. Placement illusions occur when the virtual environment is responsive to the user’s movements and actions in real time, enhancing the feeling of physical presence. For example, when a user reaches out to touch a virtual object, the virtual environment responds in ways that make the action feel natural and aligned with the user’s expectations, creating a convincing sense of being "placed" in the virtual space [20]. Research shows that when placement illusions are strong, users exhibit behavioral and cognitive changes that reflect the same patterns they would display in the real world, reinforcing the integration of the virtual environment into their cognitive processes [5].

Plausibility Illusions: The second critical illusion is the plausibility illusion, which refers to the degree to which the virtual environment behaves in a believable and coherent manner. For users to feel fully immersed and embodied in the metaverse, the virtual world must respond to their actions in ways that are plausible within the context of the virtual environment. This illusion is vital for maintaining a user’s sense of presence, as it ensures that the virtual world behaves in a way that matches their expectations. For instance, if a user interacts with a virtual object, the object should behave as expected based on its virtual properties: if a virtual glass of water is knocked over, the water should spill accordingly. When plausibility illusions are disrupted (e.g., when virtual objects behave in an unnatural or unexpected manner), the user’s immersion breaks and the sense of embodiment diminishes [21].

Embodiment Illusions: The third type, the embodiment illusion, directly concerns the user’s avatar in the virtual world. This illusion refers to the experience of "owning" the virtual body as if it were the user’s own. The avatar is not just a representation on a screen but becomes integrated into the user’s sense of self, such that movements of the virtual body feel as though they are the user’s own physical movements. This phenomenon is particularly evident when users perform motor tasks in the metaverse, such as reaching, grasping, or walking. Embodied cognition theories suggest that the ability to interact with and control a virtual body can lead to changes in how users perceive themselves and others, influencing cognitive functions such as social interaction, empathy, and self-awareness [17,19]. The stronger the embodiment illusion, the more users treat their virtual body as if it were their real one, leading to more naturalistic behaviors and thought processes in the virtual world [20,21].

Time Illusions: Lastly, time illusions refer to the altered perception of time that users experience within immersive environments. In the metaverse, time may appear to pass more quickly or slowly depending on the user’s level of engagement and the design of the virtual experience. Time illusions occur when the user becomes so absorbed in the virtual world that they lose track of time in the real world. This illusion is particularly important in environments designed for extended interaction, such as educational simulations, where prolonged engagement is desirable. The perception of time can be manipulated through fast-paced tasks, slow-motion effects, or carefully timed feedback to either accelerate or decelerate the user’s sense of time [22]. Time illusions highlight the extent to which the metaverse can alter fundamental aspects of cognition, including attention and decision-making, by reshaping how users experience the passage of time.

1.3.2. Embodied Cognition and the Metaverse

The interplay of these illusions within the metaverse creates an environment where the user’s mind, body, and environment are deeply interconnected. The experience of embodiment allows users to interact with virtual objects and other avatars in ways that feel natural and intuitive, further blurring the line between internal cognition and external technologies. As users engage with virtual objects, their actions and perceptions are transformed, leading to shifts in cognitive processes such as problem-solving, collaboration, and social interaction. In the context of the extended mind theory, these virtual tools and environments act as cognitive extensions, becoming integral to the user’s cognitive system [3].

For example, in a collaborative metaverse simulation where users must work together to solve a complex problem, their interactions with virtual objects and with each other become part of the collective cognitive process. Through the embodiment illusion, each user’s avatar allows them to perform tasks that influence not only their own cognitive processes but also those of their collaborators. In this sense, the virtual environment serves as a shared cognitive space where thought, perception, and action are distributed across multiple users and virtual tools. This reflects the principles of the extended mind, where cognition is distributed across individuals, tools, and environments [5].

1.4. Implications and Future Directions

The implications of the extended mind theory in the metaverse are profound, particularly as XR technologies—encompassing VR, AR, and MR—continue to advance. These technologies allow users to offload various cognitive tasks onto external, digital environments, fundamentally reshaping how humans process information, solve problems, and engage with learning. As XR systems become more sophisticated, they offer unprecedented opportunities for cognitive augmentation, where users can extend their cognitive capabilities beyond the natural limits of human biology. The offloading of cognitive functions, such as memory recall, spatial reasoning, and problem-solving, into immersive digital environments marks a significant shift in how cognition is understood and applied across different domains.

Ultimately, the metaverse has the potential to become the fullest manifestation of the extended mind theory, where cognition is not confined to the brain but is distributed across a vast network of physical, digital, and artificial agents. As XR technologies, AI systems, and multimodal interfaces become more integrated and sophisticated, the metaverse could offer users hyper-augmented cognitive experiences, allowing individuals to offload, extend, and enhance their cognitive processes in ways that were previously unimaginable. Whether in education, therapy, professional training, or entertainment, the metaverse represents a profound shift in how human cognition is understood and applied.

In this new cognitive landscape, users are not simply interacting with a digital environment; they are engaging with a dynamic, adaptive, and intelligent system that actively participates in their cognitive processes. The implications of this shift are far-reaching, as it redefines the relationship between humans and technology, enabling new forms of cognition, creativity, and collaboration that transcend the limitations of biological intelligence alone [3]. As XR technologies continue to evolve, the metaverse is likely to become a central space for exploring and expanding the boundaries of the human mind, offering a glimpse into the future of cognitive enhancement and digital interaction.

2. The Virtual Continuum: XR, AR, VR, and MR in Extended Realities

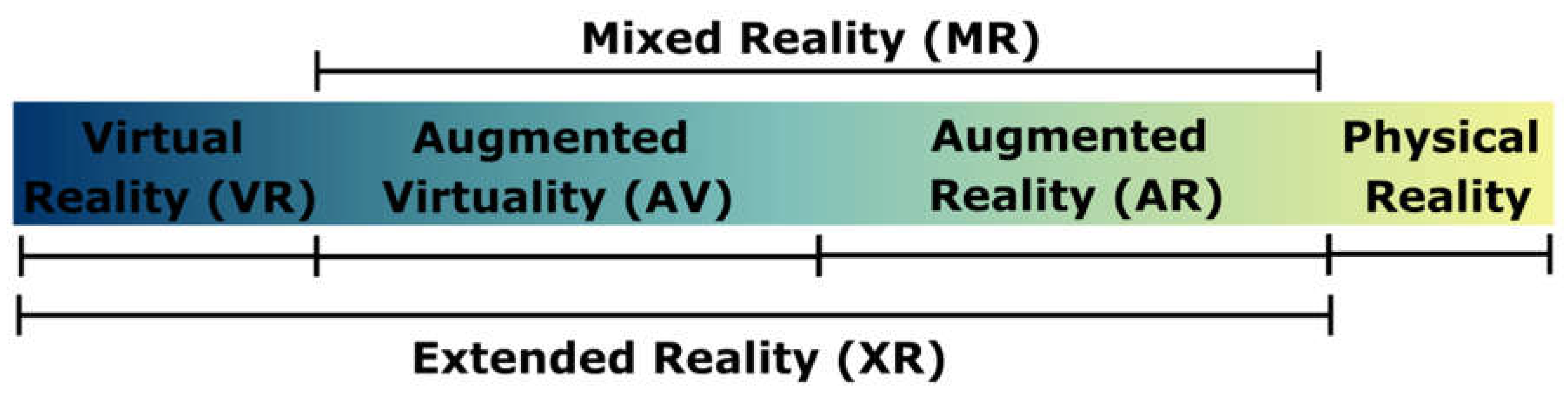

The concept of the Virtual Continuum, first introduced by Milgram and Kishino (1994) [23], provides a framework to understand how physical and virtual realities interact across a spectrum (see Figure 1). This continuum ranges from entirely physical environments at one end to fully virtual environments at the other. Within this spectrum lies a blend of real and virtual experiences facilitated by immersive technologies such as VR, AR, and MR. Collectively referred to as XR, these technologies augment human cognition, perception, and interaction by offering varying levels of immersion and interaction between the digital and physical worlds.

2.1. Virtual Reality (VR) in the Virtual Continuum

At the extreme end of the Virtual Continuum is VR, where users are fully immersed in a computer-generated environment that entirely replaces the real world. This immersion is achieved through head-mounted displays (HMDs), controllers, and body-tracking systems that enable users to navigate, manipulate objects, and experience sensory feedback in the virtual space. VR’s ability to create an intense sense of presence has made it a powerful tool for applications such as education, therapy, and entertainment [20].

In education, VR enables students to explore environments that would otherwise be impossible to visit in real life, such as ancient cities or deep ocean habitats. For example, scientific experiments that would be too costly, dangerous, or impractical in a physical setting can be conducted safely and interactively in VR, allowing for deeper engagement and experiential learning [24,25]. VR’s immersive qualities also lend themselves to therapeutic applications, where patients can confront fears in controlled environments (e.g., phobias, PTSD) or practice motor skills in rehabilitation [26].

The total immersion provided by VR allows for significant cognitive offloading, enabling users to delegate tasks like spatial reasoning, memory recall, or problem-solving to the virtual environment [13]. VR’s potential in these areas continues to grow, particularly with advancements in haptic feedback and BCI technologies, which may further extend the scope of cognitive augmentation [27,28].

2.2. Augmented Reality (AR) in the Virtual Continuum

Toward the opposite, physical end of the Virtual Continuum lies AR, which enhances the real world with overlaid digital elements, offering a blend of physical and virtual content. Unlike VR, which replaces the physical world, AR complements it, typically through devices such as smartphones, tablets, or AR glasses. This augmentation allows users to interact with digital information while remaining fully engaged with their real-world environment.

AR has found significant use in sectors such as education, healthcare, retail, and industry. In educational settings, AR allows for interactive learning, such as overlaying 3D models onto textbooks or projecting historical events into physical spaces for a more immersive experience [29]. AR’s capacity to provide real-time, context-sensitive information makes it particularly valuable in professional training. For instance, in the medical field, AR can overlay anatomical data or surgical instructions onto patients, enhancing precision during complex procedures [30].

In industrial applications, AR is used to train workers by superimposing assembly instructions onto machinery or guiding maintenance tasks with interactive visual aids [31]. The integration of AR with AI and other technologies has made it a critical tool for enhancing spatial cognition and decision-making, as users can offload tasks like navigation, visualization, and complex spatial reasoning onto the augmented environment [29,32].

2.3. Mixed Reality (MR) in the Virtual Continuum

Sitting between AR and VR on the continuum is MR, a hybrid technology that allows real and virtual elements to interact in real time. In MR, users can manipulate both physical and digital objects, with virtual elements responding to physical actions and vice versa. This creates a seamless blend of reality and simulation, enabling more dynamic interactions than AR or VR alone.

MR applications are particularly valuable in fields such as architecture, engineering, and healthcare. For example, architects can use MR to visualize a building design within its intended physical space, offering clients a walkthrough of a structure before it is built [32,33]. Similarly, in healthcare, MR can be used to simulate surgeries where real and virtual tools interact in real time, providing a safe environment for training medical students and professionals [30,34]. By merging real and digital objects, MR puts the extended mind into practice, allowing users to offload cognitive tasks onto virtual tools while engaging physically with their environment. This is particularly useful in collaborative scenarios, where teams can work on shared virtual models in real-world settings, enhancing distributed cognition and real-time problem-solving [35].

2.4. Extended Reality (XR): The Integration of AR, VR, and MR

Extended Reality (XR) is an umbrella term that encompasses the full range of experiences across the Virtual Continuum, spanning AR, MR, and VR. XR technologies allow users to engage with different levels of immersion and interaction, creating a rich landscape for cognitive augmentation. As XR technologies become more advanced, the lines between physical and virtual worlds are likely to blur further, offering seamless transitions between these spaces. XR’s potential extends across diverse fields, including professional training, healthcare, education, and entertainment. For instance, in the entertainment industry, XR enables users to experience interactive virtual concerts or events, blending physical and virtual spaces to create new forms of artistic expression. In healthcare, XR systems can provide real-time medical data overlays during surgeries or create fully immersive environments for therapy and rehabilitation [34].

As these technologies integrate with AI, BCI, and multimodal interfaces, XR will continue to evolve as a powerful tool for cognitive extension. AI-driven XR systems can dynamically adjust to users’ cognitive loads or emotional states, providing real-time personalized experiences that enhance cognitive performance [36,37]. Moreover, the potential for cognitive offloading in XR environments, where tasks such as memory recall, navigation, and problem-solving are distributed across digital and physical systems, creates significant opportunities for enhancing human cognition [38].

However, this growth also raises concerns about data privacy, ethical use, and potential misuse. XR systems collect vast amounts of biometric and behavioral data, raising questions about how this data is used by corporations and governments [39]. Additionally, the risks of addiction to immersive environments, cyberbullying, and manipulation of public opinion within these spaces necessitate a robust ethical framework to ensure the responsible development of XR technologies [40]. As XR technologies continue to advance and integrate, it becomes crucial to explore how multiple modalities, such as haptic feedback, gaze tracking, and brain signals, shape immersive experiences within the Virtual Continuum.

3. Multiple Modalities in XR: Enhancing Immersion in the Metaverse

The rise of XR technologies has ushered in a new era of human-computer interaction that leverages multiple sensory and physiological modalities to create deeply immersive and responsive virtual environments. These multimodal interfaces enhance both cognitive and physical engagement, providing real-time feedback that adjusts the virtual experience based on the user’s actions, emotions, and physiological states (see Table 1). This section examines the key modalities (eye-tracking, facial tracking, hand and body tracking, haptic feedback, and more) that are shaping the future of XR and the metaverse.

3.1. Eye-Tracking: Precision and Cognitive Insights

Eye-tracking technology is a fundamental modality in XR environments, offering an intuitive and dynamic way for systems to monitor and respond to where users are directing their visual attention [41]. By capturing real-time data on the user’s gaze, eye-tracking allows virtual environments to be more responsive, creating more immersive and engaging experiences. The ability to track eye movements enhances the user interface by dynamically adjusting content and providing more intuitive ways to interact with virtual objects and environments. This technology is particularly beneficial in immersive applications such as education, professional training, gaming, and healthcare, where cognitive load and user attention are critical.

One of the primary functions of eye-tracking is dynamic interface adjustment. When a user looks at specific objects or areas within a virtual environment, the system can automatically highlight those elements, bring them into sharper focus, or trigger relevant actions. For example, in a professional training simulation, eye-tracking can be used to detect where a trainee is focusing their attention, and the system can provide real-time feedback or additional information about the object or task being observed. Similarly, in gaming or educational settings, environments can shift scenes or change interactive elements based on the user’s gaze, creating a more engaging and seamless experience [41].

Eye-tracking also provides valuable insights into the user’s cognitive state by analyzing specific gaze patterns, such as fixation duration (how long the user’s eyes remain focused on a particular object) and saccades (rapid movements of the eyes between points of focus) [42,43]. These metrics can be used to estimate cognitive load, or the amount of mental effort the user is exerting at any given time. This data is particularly useful in educational and professional training applications, where an understanding of cognitive load can inform how content is presented. For example, if eye-tracking data suggests that a user is experiencing high cognitive load (such as long fixation durations on a complex object or scene), the system can simplify the environment or reduce distractions to improve comprehension [44]. Conversely, if low cognitive load is detected, the system may introduce more challenges or additional information to maintain engagement and optimize learning outcomes.

Additionally, eye-tracking enhances interaction capabilities within XR environments, enabling users to interact with virtual objects in a more natural and seamless manner [45]. Instead of relying on traditional input devices like controllers or keyboards, users can select or manipulate objects simply by looking at them. This gaze-based interaction creates a highly intuitive and immersive experience, making it easier for users to navigate complex virtual environments. For instance, in a virtual museum tour, eye-tracking could allow visitors to focus on an exhibit, triggering detailed information or a 3D model to appear, enriching the educational experience without the need for physical interaction. In medical applications, eye-tracking could help doctors review digital patient files by highlighting relevant data as they scan through information, thereby improving efficiency and reducing cognitive strain [46].
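Gaze-based selection is commonly implemented with a dwell timer: an object is selected only after the gaze has rested on it for a set time, so that passing glances do not trigger actions (the so-called Midas touch problem). The sketch below assumes the XR runtime reports, once per frame, the id of the object hit by the gaze ray (or `None`); the `DwellSelector` class and its 0.8 s default are hypothetical.

```python
from typing import Optional


class DwellSelector:
    """Select an object once gaze has rested on it for `dwell_s` seconds.

    Hypothetical helper: object ids come from whatever gaze ray-cast the
    XR runtime provides each frame; `None` means the gaze hits nothing.
    """

    def __init__(self, dwell_s: float = 0.8):
        self.dwell_s = dwell_s
        self._target: Optional[str] = None
        self._elapsed = 0.0
        self._fired = False

    def update(self, target: Optional[str], dt: float) -> Optional[str]:
        """Feed one frame; return the target id on the frame it is selected."""
        if target != self._target:
            # Gaze moved to a different object (or away): restart the timer.
            self._target = target
            self._elapsed = 0.0
            self._fired = False
            return None
        if target is None or self._fired:
            return None
        self._elapsed += dt
        if self._elapsed >= self.dwell_s:
            self._fired = True  # fire only once per continuous dwell
            return target
        return None
```

In a museum-tour scenario like the one above, the returned id could be used to open the exhibit's information panel; longer dwell times reduce accidental selections at the cost of slower interaction.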

The future of eye-tracking in XR also holds significant potential for personalized learning and professional training. By continuously monitoring where users direct their attention, systems can tailor experiences to meet individual learning needs or adapt training modules based on a user’s progress. In virtual classrooms or corporate training sessions, eye-tracking could be used to ensure participants are focusing on critical information and to provide real-time guidance or feedback based on their gaze patterns. Moreover, for professionals in high-stress environments, such as pilots or surgeons, eye-tracking could enhance training simulations by monitoring stress levels and cognitive load, ensuring that users maintain focus and achieve high levels of precision during critical tasks.

As XR technologies continue to evolve, the integration of eye-tracking will play a pivotal role in creating more personalized, responsive, and immersive virtual experiences. The ability to track and analyze visual attention in real time not only enhances the usability of XR interfaces but also provides invaluable insights into the cognitive processes of users, making it a cornerstone of future applications in education, training, healthcare, and entertainment. This foundation of visual interaction leads naturally into a broader discussion of how multiple modalities such as facial tracking, hand tracking, and physiological monitoring work in synergy within XR to create a comprehensive and immersive user experience.

3.2. Facial Tracking: Enhancing Emotional and Social Presence

Facial tracking is an increasingly pivotal modality in XR environments, significantly enhancing emotional and social presence within virtual spaces. By capturing users’ facial movements in real time, this technology enables virtual avatars to mirror the user’s facial expressions, adding a level of authenticity and emotional depth to social interactions in the metaverse. Whether it is a smile, a frown, or a raised eyebrow, facial tracking allows virtual avatars to convey the subtleties of human emotion, making interactions in XR environments more natural and engaging. This capability fosters deeper emotional connections, enabling more genuine communication and collaboration in virtual settings such as virtual workspaces, social hangouts, or even virtual reality games [47].
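Face trackers commonly report expressions as per-frame blendshape weights in the range [0, 1] (for example, ARKit-style names such as `mouthSmileLeft` or `browInnerUp`), which an avatar rig can replay. The sketch below assumes that representation and adds exponential smoothing to suppress frame-to-frame sensor jitter; the `ExpressionRetargeter` class and its `alpha` default are hypothetical and not part of any specific SDK.

```python
from typing import Dict


class ExpressionRetargeter:
    """Copy tracked blendshape weights onto an avatar with exponential smoothing.

    alpha in (0, 1]: 1.0 copies the raw tracker values; smaller values
    trade a little latency for less frame-to-frame jitter.
    """

    def __init__(self, alpha: float = 0.5):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.weights: Dict[str, float] = {}

    def update(self, tracked: Dict[str, float]) -> Dict[str, float]:
        """Blend one frame of tracker output into the avatar's weights."""
        for name, raw in tracked.items():
            raw = min(max(raw, 0.0), 1.0)           # clamp to the [0, 1] range
            previous = self.weights.get(name, raw)  # first frame: no smoothing
            self.weights[name] = previous + self.alpha * (raw - previous)
        return dict(self.weights)
```

The choice of `alpha` is a trade-off: heavy smoothing hides tracker noise but can blunt brief expressions, which matter for the non-verbal cues discussed below.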

In social contexts, facial tracking serves to bridge the gap between the real and virtual worlds by replicating the nuances of non-verbal communication that are often lost in traditional online interactions. When avatars reflect real-time facial expressions, users experience heightened social presence, the feeling that they are truly interacting with others in a shared space. This has significant implications for collaborative work environments, where non-verbal cues such as a nod of approval or a quizzical look can inform group dynamics and decision-making processes. Similarly, in virtual social spaces, facial tracking allows users to better express their emotions and intentions, promoting empathy and mutual understanding [48].

Facial tracking also enhances emotional feedback within XR environments by detecting and interpreting changes in the user’s emotional state. For instance, systems equipped with facial tracking can analyze micro-expressions (subtle, involuntary facial movements that often indicate emotions such as stress, frustration, or joy). By continuously monitoring these expressions, the system can adapt the virtual environment in real time to better suit the user’s emotional needs. This capability is particularly valuable in therapeutic and counseling settings, where emotional tracking can help therapists create more personalized and responsive virtual environments. For example, in virtual exposure therapy designed to treat phobias or PTSD, facial tracking can detect signs of anxiety, such as furrowed brows or tensed facial muscles, and automatically adjust the environment to reduce stress or offer calming stimuli [49]. By tailoring the virtual experience to the user’s emotional state, facial tracking enables more effective and supportive therapeutic interventions.

Another important application of facial tracking is in emotionally adaptive virtual environments. As XR technologies advance, virtual spaces are becoming increasingly interactive and responsive, not just to physical actions but also to emotional cues. For instance, in a virtual classroom, facial tracking could detect when a student appears confused or disengaged and offer additional resources, such as a simplified explanation or more engaging content. Similarly, in professional training scenarios, facial tracking could identify when a user is under stress and adjust the task difficulty or provide more guidance, ensuring a better learning experience. These emotionally intelligent environments foster higher levels of user engagement and improve outcomes by creating a more personalized and adaptive experience [49].

In gaming and entertainment, facial tracking introduces new levels of immersion and emotional engagement. Imagine playing a game where the virtual characters respond to your expressions—if you show signs of fear or surprise, the game might adjust its difficulty or alter the storyline in response. By making virtual environments emotionally reactive, facial tracking enhances immersion and creates more compelling, personalized narratives that respond to the player’s emotions in real time. This kind of interactive storytelling can be deeply engaging, allowing users to form emotional connections with virtual characters and environments in ways previously impossible.

Finally, facial tracking holds potential for remote and hybrid work environments, where communication often lacks the emotional richness of face-to-face interactions. By enabling avatars to reflect real-time facial expressions, teams collaborating in virtual environments can communicate more effectively, with the added dimension of non-verbal cues. This can be particularly important in negotiations, brainstorming sessions, or client meetings, where subtle facial expressions convey important information about mood, engagement, and decision-making. With facial tracking, remote interactions can more closely mimic the emotional complexity of in-person meetings, making virtual workspaces more effective and satisfying [47].

As facial tracking becomes more integrated into XR systems, it will contribute to the development of emotionally responsive and socially rich virtual environments. By accurately capturing and reflecting emotional states, facial tracking not only enhances communication but also opens the door to more adaptive and personalized virtual experiences. This capability sets the stage for the broader integration of multiple modalities—such as hand tracking, full-body tracking, and physiological monitoring—which will work in tandem to create more comprehensive, immersive, and emotionally responsive virtual experiences.

3.3. Finger and Hand Tracking: Intuitive Manipulation of Virtual Objects

Finger and hand tracking are transformative modalities in XR environments, offering users the ability to interact with virtual objects in a natural, intuitive manner. By capturing the fine movements of fingers and hands, this technology allows users to engage with digital environments through familiar gestures such as pinching, grabbing, swiping, and pointing. These actions mimic real-world interactions, eliminating the need for traditional controllers and creating a more immersive experience. Users can reach out to manipulate objects in the virtual world just as they would in physical space, making the interface more seamless and enhancing overall user engagement [50].
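A pinch gesture is often recognized from the distance between the tracked thumb tip and index fingertip. The sketch below assumes the hand-tracking runtime supplies fingertip positions in metres; the 2 cm and 4 cm thresholds and the `PinchDetector` name are illustrative assumptions. Using two thresholds (hysteresis) keeps the pinch state from flickering when the fingertip distance hovers near a single cut-off.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # fingertip position in metres


class PinchDetector:
    """Detect a pinch from thumb-tip and index-tip positions.

    The pinch starts below `enter_m` and ends only above `exit_m`,
    so small tracking jitter near one threshold does not toggle it.
    """

    def __init__(self, enter_m: float = 0.02, exit_m: float = 0.04):
        if enter_m >= exit_m:
            raise ValueError("enter threshold must be below exit threshold")
        self.enter_m = enter_m
        self.exit_m = exit_m
        self.pinching = False

    def update(self, thumb_tip: Vec3, index_tip: Vec3) -> bool:
        """Update with one frame of fingertip positions; return pinch state."""
        d = math.dist(thumb_tip, index_tip)
        if self.pinching and d > self.exit_m:
            self.pinching = False
        elif not self.pinching and d < self.enter_m:
            self.pinching = True
        return self.pinching
```

A rising edge of this state (False to True) could be treated as "grab" and a falling edge as "release" when manipulating a virtual object.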

This form of interaction is particularly valuable in XR environments designed for education and training. For example, medical trainees can use hand tracking to practice complex surgical procedures, manipulating virtual surgical tools with precision and receiving real-time feedback on their movements. This form of hands-on training allows users to develop muscle memory and technical skills in a risk-free virtual environment. Similarly, in engineering or design fields, hand tracking enables users to interact with virtual prototypes, making adjustments in real time as if they were handling physical models. The tactile-like nature of hand gestures fosters deeper learning and retention, as users are actively engaged in problem-solving through intuitive, embodied interaction [51].

Moreover, hand tracking is essential for enhancing collaborative work in XR environments. In virtual workspaces or design studios, multiple users can interact with the same virtual objects in real time, allowing for simultaneous co-creation of digital models or prototypes. For instance, in a virtual architecture studio, team members from different locations can manipulate building designs together, making adjustments collaboratively as though they were physically present in the same room. The ability to "handle" virtual objects with precision not only makes collaboration more fluid but also fosters creativity and teamwork by enabling real-time interactions [14,15,52]. This kind of shared engagement is invaluable in industries like product design, where rapid iterations and adjustments are essential to the creative process.

Finger and hand tracking also provide significant benefits in professional training and simulation-based learning. In environments where precision and accuracy are crucial, such as aviation or military training, real-time hand tracking allows users to interact with control systems, machinery, or tools in virtual simulations, receiving immediate feedback on their actions. For example, an aircraft mechanic can practice assembling or repairing engines in a virtual environment, with hand tracking capturing the precise movements needed for delicate tasks. The system can provide feedback on the accuracy of each step, ensuring that users improve their skills through repeated practice. This combination of hands-on learning and real-time feedback enhances the effectiveness of training and reduces the risks associated with real-world errors [15,52].

The benefits of hand tracking extend beyond technical skills and training into the realm of social and collaborative XR experiences. By enabling real-time, expressive hand gestures, this technology enhances communication and interaction between users. In virtual meetings, for instance, participants can use gestures such as pointing, waving, or offering a thumbs-up to convey non-verbal cues, adding a layer of richness to virtual communication that text or voice chat alone cannot provide. This is particularly important in remote work environments, where non-verbal communication helps build rapport and trust between team members. The ability to see and interpret hand movements in real time strengthens social presence in the metaverse, making interactions feel more authentic and engaging [53].

In addition to its applications in professional and social contexts, hand tracking holds immense potential for creative industries such as art, gaming, and entertainment. In virtual art studios, for example, artists can manipulate digital brushes or sculpt with virtual clay using their hands, offering new possibilities for creative expression. Similarly, in gaming, hand tracking allows players to perform complex in-game actions, such as wielding weapons or casting spells, by simply moving their hands. This kind of interaction not only enhances immersion but also creates more dynamic and personalized gameplay experiences, as players feel physically connected to their virtual actions [8,54].

As hand tracking technologies continue to evolve, they will likely integrate with other modalities such as haptic feedback to create even more immersive experiences. For example, combining hand tracking with tactile sensations will allow users to not only see and move virtual objects but also "feel" them, adding a new dimension of realism and engagement. This synergy of modalities will deepen the user’s connection with the virtual world, making the manipulation of digital objects more lifelike and enhancing the overall immersive experience in XR environments.

In the next section, we will explore full-body tracking, another critical modality that, when combined with hand and finger tracking, enhances the physical embodiment and realism of XR experiences. By mapping the user’s entire body movement, full-body tracking offers even more comprehensive interaction with the virtual world, enabling activities that range from fitness training to immersive social interactions.

3.4. Full-Body Tracking: Immersive Embodiment and Physicality

Full-body tracking is a powerful modality in XR environments, offering users the ability to fully embody their virtual avatars by capturing and mapping the movement of their entire body. This technology provides a heightened sense of immersion by aligning virtual representations with the user’s real-world movements, significantly enhancing the realism and presence within the virtual world. As users move their bodies in physical space, their virtual avatars replicate those movements, leading to more lifelike and dynamic interactions [55,56]. This capability is especially crucial in social and collaborative XR environments, where non-verbal communication, such as body language and gestures, plays a key role in interpersonal interactions and teamwork.

In social and collaborative XR settings, full-body tracking enables more natural and fluid communication between users. For instance, in virtual meetings or group activities, users can convey emotions and intentions not only through speech but also through body movements and posture. This replication of real-world body language enhances the social presence of avatars, making interactions feel more authentic and personal. In virtual events or online multiplayer games, full-body tracking allows users to dance, gesture, or perform physical tasks together, further strengthening the sense of connection and engagement within the virtual world [57,58].

The integration of full-body tracking into fitness and physical therapy applications has also opened up exciting possibilities for health and wellness in XR environments. In virtual fitness programs, for example, users can perform activities such as aerobics, yoga, or sports, with their movements mirrored in the virtual world. This not only makes the workout more engaging but also allows for real-time feedback on posture, form, and intensity, enabling users to optimize their exercise routines [59,60]. For physical therapists, full-body tracking provides a valuable tool for monitoring patients’ rehabilitation exercises. By tracking precise body movements, the system can assess the quality and range of motion, providing feedback to both the therapist and the patient on the progress of recovery. This real-time feedback helps improve the effectiveness of rehabilitation programs and supports remote therapy, making care more accessible [61].
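Range-of-motion assessment from body tracking typically reduces to computing a joint angle from three tracked keypoints (for a knee: hip, knee, ankle) in every frame and comparing the extremes. The sketch below assumes the body tracker provides 3D keypoint positions in a common coordinate frame; the function names are illustrative, and clinical use would require validated trackers and calibration.

```python
import math
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


def joint_angle_deg(a: Vec3, joint: Vec3, c: Vec3) -> float:
    """Angle in degrees at `joint` between the segments joint->a and joint->c."""
    u = [p - q for p, q in zip(a, joint)]
    v = [p - q for p, q in zip(c, joint)]
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(x * x for x in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("keypoints must not coincide with the joint")
    cos_theta = sum(x * y for x, y in zip(u, v)) / (norm_u * norm_v)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding error
    return math.degrees(math.acos(cos_theta))


def range_of_motion(frames: Iterable[Tuple[Vec3, Vec3, Vec3]]) -> float:
    """Max minus min joint angle over a sequence of (a, joint, c) frames."""
    angles = [joint_angle_deg(a, j, c) for a, j, c in frames]
    if not angles:
        raise ValueError("need at least one frame")
    return max(angles) - min(angles)
```

For example, a knee that moves from fully straight (180 degrees between thigh and shank) to a right angle (90 degrees) over an exercise set yields a range of motion of 90 degrees, which a therapist could track across sessions.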

Moreover, full-body tracking is particularly valuable in immersive learning environments, especially in educational scenarios that require physical engagement. For instance, students learning about anatomy can walk around a three-dimensional model of the human body, exploring it from all angles. In history or geography lessons, learners can walk through virtual reconstructions of historical sites or geographic landscapes, engaging with the content in a more embodied and hands-on manner [62,63]. This physical exploration of virtual environments supports deeper cognitive engagement and retention, as it allows users to interact with complex information in a multisensory way.

Beyond these individual applications, full-body tracking also enhances the sense of embodiment, a key factor in virtual reality’s impact on user experience. The more accurately a user’s movements are replicated in the virtual world, the stronger their sense of "being there" in that environment. This immersive embodiment fosters deeper engagement, particularly in simulations and training scenarios, where users need to practice real-world skills in a safe and controlled virtual setting. For instance, in military or law enforcement training, full-body tracking can be used to simulate real-world physical environments and challenges, allowing trainees to practice movement-based strategies, teamwork, and decision-making under pressure [64]. Similarly, in sports training, athletes can use full-body tracking to simulate game scenarios or practice specific movements, receiving feedback that helps refine their technique [65].

Looking ahead, as haptic feedback technologies continue to evolve, full-body tracking is expected to integrate with these systems to create even more immersive physical experiences in XR. By combining the ability to track full-body movements with tactile sensations, users will not only see their virtual selves move but also feel the resistance, pressure, or texture of objects they interact with. This will enhance not only entertainment and social applications but also professional training and therapy programs by providing users with more comprehensive and lifelike physical experiences.

In the next section, we will explore haptic feedback, another critical modality that complements full-body tracking by providing tactile sensations that enrich the physical engagement with virtual environments. The integration of these modalities will continue to shape the future of XR, creating environments where users can interact with the digital world in ways that mimic real-world physicality, deepening the sense of immersion and engagement.

3.5. Haptic Feedback: Bringing Touch to the Virtual World

Haptic feedback technology plays a pivotal role in enhancing the immersion and realism of XR by introducing the sense of touch into virtual experiences. Unlike visual and auditory modalities, which stimulate sight and hearing, haptic feedback engages the sense of touch, allowing users to physically feel the virtual objects they interact with. This capability is enabled through various devices, such as haptic gloves, vests, or controllers, which provide tactile sensations when users manipulate digital objects. These sensations can include textures, vibrations, resistance, and even temperature, making virtual interactions feel tangible and lifelike [9,66].

In training simulations, haptic feedback is invaluable, particularly in fields that require fine motor skills and precision. For instance, in medical training, surgeons can practice complex procedures in virtual environments while receiving tactile feedback that mimics the sensation of handling real surgical instruments or interacting with human tissue. This feedback is essential for developing the dexterity and precision required in real-life operations, and it allows medical professionals to practice in a safe, controlled, and repeatable setting without the risk of harm to patients [11]. Similarly, in mechanical training, haptic feedback enables users to feel the resistance and weight of virtual tools or materials, improving their ability to perform tasks like assembling machinery or repairing engines. By simulating the physical properties of objects, haptic feedback helps users refine their motor skills, making training in virtual environments more effective [11,67].
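One common way to render resistance on a force-feedback device is penalty-based (spring-damper) rendering: when the device's probe penetrates a virtual surface, the device pushes back with a force proportional to the penetration depth, plus a damping term that resists motion into the surface. The one-dimensional sketch below illustrates the idea; the `wall_force` name and the stiffness and damping values are illustrative assumptions, and real devices need values tuned to their hardware to render stably.

```python
def wall_force(position: float, velocity: float, wall: float = 0.0,
               stiffness: float = 800.0, damping: float = 2.0) -> float:
    """Penalty-based force (N) pushing a 1-D probe out of a virtual wall.

    The wall occupies x < `wall`. position in metres, velocity in m/s
    (negative = moving into the wall), stiffness in N/m, damping in N*s/m.
    Returns 0 when the probe is in free space.
    """
    penetration = wall - position
    if penetration <= 0.0:
        return 0.0  # free space: no contact, no force
    # Spring term pushes the probe out; damping term resists entering speed.
    force = stiffness * penetration - damping * velocity
    return max(force, 0.0)  # a wall can push but never pull
```

Higher stiffness makes the virtual material feel harder, which is how the same loop could convey the difference between, say, soft tissue and bone in a surgical simulator.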

Beyond its utility in professional training, haptic feedback also enhances emotional engagement in XR environments. The ability to physically feel virtual objects can amplify emotional responses, making interactions more meaningful and immersive. For example, in social XR settings, a virtual handshake or hug can be made more impactful by the sensation of touch, fostering deeper emotional connections between users. Similarly, in gaming, haptic feedback allows players to feel the impact of in-game actions, such as the recoil of a virtual weapon or the texture of a virtual surface, increasing the intensity and realism of the experience [67]. The tactile sensations provided by haptic feedback devices make virtual environments feel more immersive and emotionally engaging, leading to a deeper connection between users and the digital world.

In therapy and rehabilitation, haptic feedback has significant applications, particularly in treatments involving motor recovery and emotional regulation. For example, patients undergoing physical therapy for motor impairments can use haptic devices to practice movements in virtual environments, receiving immediate feedback on their performance. This feedback helps patients adjust their movements in real time, accelerating the rehabilitation process. In emotional therapy, haptic feedback can be used to recreate comforting physical sensations, such as a soothing touch or a relaxing vibration, to help individuals manage anxiety or stress in virtual environments. By creating a multisensory experience, haptic feedback allows therapy to be more personalized and effective [68].

Furthermore, haptic feedback enhances the realism of virtual simulations, where the sense of touch is crucial for user interaction. In architectural or engineering simulations, users can feel the weight and texture of materials as they construct or manipulate virtual models, making the design process more intuitive and interactive. This tactile engagement allows professionals to experiment with designs in a more hands-on way, improving their understanding of how materials behave in real-world scenarios [52].

Looking ahead, as haptic technology evolves, we are likely to see even more sophisticated devices capable of providing highly detailed tactile sensations, including advanced textures, force feedback, and temperature variations. These advancements will further enhance the realism of XR environments, bringing the feel of virtual objects ever closer to that of their real-world counterparts. The integration of haptic feedback with other XR modalities, such as full-body tracking and eye-tracking, will create an even more immersive experience, where users can see, hear, and physically interact with digital worlds in a seamless and natural way.

3.6. Galvanic Skin Response (GSR): Monitoring Emotional States

Galvanic Skin Response (GSR) is a powerful physiological modality that measures changes in the skin’s electrical conductance, which varies with sweat gland activity controlled by the sympathetic nervous system and therefore tracks the body’s level of emotional arousal. By detecting these fluctuations, GSR sensors provide real-time data on arousal, which rises with states such as stress or excitement and falls during relaxation. On its own, GSR indicates how strongly a user is aroused rather than whether the emotion is positive or negative. In XR environments, this information can be used to create more adaptive and emotionally responsive experiences, where the virtual environment adjusts in real time to the user’s physiological signals [69].

The integration of GSR in XR environments has significant potential for personalizing user experiences. For instance, in a virtual therapy session designed for stress management, if the system detects elevated stress levels via GSR, it could automatically adjust the environment to promote relaxation. This might involve dimming virtual lights, reducing auditory stimuli, or introducing calming elements like soft music or soothing natural sounds. Such adjustments create a more responsive and immersive therapeutic experience, allowing the virtual environment to better support the user’s emotional needs [70,71]. This adaptability makes GSR a valuable tool for applications in mental health and emotional regulation, where tailoring environments to individual emotional states can enhance treatment effectiveness.
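A simple way to turn a raw skin-conductance trace into a trigger for such adjustments is to count phasic skin-conductance responses (SCRs), short rises above the recent minimum, and compare their rate with a threshold. The sketch below is deliberately minimal: the 0.05 µS minimum rise is an illustrative value (published criteria vary), the 6-per-minute threshold in the hypothetical `needs_calming` policy is an assumption, and real pipelines usually filter the signal and separate tonic and phasic components first.

```python
from typing import Sequence


def count_scrs(conductance_us: Sequence[float], min_rise_us: float = 0.05) -> int:
    """Count skin-conductance responses in a sampled signal (microsiemens).

    A response is counted when the signal rises at least `min_rise_us`
    above the lowest value seen since the previous response ended;
    the response ends when the signal starts to fall again.
    """
    count = 0
    trough = None
    peak = 0.0
    in_response = False
    for x in conductance_us:
        if in_response:
            if x >= peak:
                peak = x      # still rising toward the peak
            else:
                in_response = False
                trough = x    # falling: start looking for the next trough
        else:
            if trough is None or x < trough:
                trough = x
            if x - trough >= min_rise_us:
                count += 1
                in_response = True
                peak = x
    return count


def needs_calming(conductance_us: Sequence[float], duration_s: float,
                  max_scrs_per_min: float = 6.0) -> bool:
    """Hypothetical policy: request calming changes when SCRs are frequent."""
    rate = count_scrs(conductance_us) * 60.0 / duration_s
    return rate > max_scrs_per_min
```

When `needs_calming` returns True, the environment could apply the kinds of changes described above, such as dimming lights or lowering ambient sound.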

Moreover, GSR is useful in educational and training scenarios, particularly those designed to challenge users’ emotional and cognitive limits. For example, in a virtual simulation designed to prepare users for high-stress scenarios, such as emergency response training or flight simulations, the system can monitor GSR levels to gauge emotional responses. If the user shows signs of becoming overly stressed, the simulation can adjust to offer support, perhaps by slowing down the pace of the scenario or providing real-time feedback to help with emotional regulation [36].

In entertainment and gaming, GSR can also enhance user engagement by modifying gameplay based on emotional responses. For example, a horror game could increase or decrease its intensity based on the player’s emotional arousal, detected through GSR, making the experience more thrilling or manageable depending on the user’s preference. Similarly, virtual reality environments designed for relaxation could track the user’s emotional state and continuously adjust the environment to maintain an optimal level of calmness, ensuring a personalized and immersive experience [71].

Beyond individual experiences, GSR data can also provide insights into group dynamics in collaborative virtual environments. For instance, in team-based training exercises or collaborative design projects, the system could monitor the emotional states of all participants. If collective stress levels rise, the system could offer suggestions to ease tension, such as facilitating a break or introducing calming visual elements into the shared space. This ability to gauge emotional responses at both individual and group levels can enhance collaboration by ensuring that participants remain engaged and focused without becoming overwhelmed by stress [26].

Looking ahead, GSR can be further integrated with other physiological modalities, such as heart rate monitoring and facial tracking, to provide even more comprehensive insights into the user’s emotional and physical state. This multimodal approach will allow XR environments to adapt to a broader range of signals, making interactions more nuanced and responsive to the user’s overall experience. As we transition to a deeper exploration of multimodal systems, combining GSR with other forms of physiological data will be key to unlocking richer, more personalized, and emotionally intelligent XR environments.

3.7. Heart Rate Monitoring: Tracking Physiological Engagement

Heart rate monitoring offers valuable insights into both the emotional and physical states of users during XR experiences. By continuously measuring changes in heart rate, XR systems can assess physiological markers of arousal, excitement, or stress, allowing the virtual environment to respond and adapt in real time. This form of biometric feedback plays a critical role in personalizing experiences, especially in applications such as virtual fitness, stress management, and emotional engagement.

In virtual fitness programs, heart rate monitoring helps keep users exercising at appropriate intensity levels, contributing to safer and more effective workout sessions. For instance, as users engage in physically demanding activities like virtual running, dancing, or other cardiovascular exercises, the system tracks their heart rate and adjusts the workout intensity based on real-time cardiovascular performance [72]. If the user’s heart rate exceeds a certain threshold, the system may prompt the user to reduce the intensity or take a break, keeping the workout within safe parameters. Conversely, if the heart rate is too low, the system may encourage the user to increase intensity to gain greater fitness benefits. This dynamic adjustment based on heart rate allows virtual fitness programs to be tailored to individual needs, improving both user experience and exercise outcomes.
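The thresholds for such adjustments are often expressed as a target heart-rate zone. The sketch below derives one with the Karvonen (heart-rate reserve) method, using the rough population estimate of maximum heart rate as 220 minus age; the 60 to 80% zone and the function names are illustrative assumptions, and any real fitness product should rely on validated, individually measured limits.

```python
from typing import Tuple


def karvonen_zone(age: int, resting_hr: float,
                  low: float = 0.6, high: float = 0.8) -> Tuple[float, float]:
    """Target heart-rate zone (bpm) from the heart-rate reserve.

    Maximum heart rate is estimated as 220 - age, a rough population
    formula; a measured maximum should replace it when available.
    """
    max_hr = 220 - age
    reserve = max_hr - resting_hr
    return resting_hr + low * reserve, resting_hr + high * reserve


def intensity_advice(current_hr: float, zone: Tuple[float, float]) -> str:
    """Return 'decrease', 'increase', or 'hold' for the workout intensity."""
    lower, upper = zone
    if current_hr > upper:
        return "decrease"
    if current_hr < lower:
        return "increase"
    return "hold"
```

For a 40-year-old with a resting heart rate of 60 bpm, the estimated maximum is 180 bpm, the reserve is 120 bpm, and the zone is 132 to 156 bpm; a reading of 170 bpm would prompt the program to ease off.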

Beyond fitness, heart rate monitoring is equally important in stress management and emotional regulation within XR environments. In high-stress virtual simulations, such as those designed for military, emergency response, or flight training, heart rate data can be used to detect elevated stress levels. In these scenarios, the system might offer real-time adjustments, such as reducing the pace of the scenario or introducing calming environmental changes, to help the user manage their emotional state and avoid overwhelming stress responses [70]. This is particularly valuable in training simulations where users must maintain focus and make critical decisions under pressure, ensuring that their performance is not compromised by excessive emotional arousal.

Moreover, heart rate monitoring enhances the immersive emotional response in virtual environments by aligning the virtual experience with the user’s physiological state. For example, in virtual reality therapy or mindfulness applications, the system can monitor heart rate as an indicator of the user’s relaxation or stress levels. If the user’s heart rate begins to increase, indicating rising anxiety or stress, the virtual environment can be modified to create a more calming atmosphere, such as softening background sounds, dimming lights, or slowing the pace of the virtual experience. This adaptive feedback based on heart rate enables a more personalized and emotionally intelligent interaction, enhancing the user’s connection with the virtual environment [36].

In gaming and entertainment, heart rate monitoring can be used to heighten immersion and interactivity. For example, in a horror game, the system could increase or decrease the intensity of jump scares or other frightening elements based on the player’s heart rate. If the system detects that the player’s heart rate is too high, it might dial back the intensity to prevent overwhelming the player, while a low heart rate could prompt the game to become more intense. This responsive approach to entertainment, where physiological data directly impacts the gameplay, enhances the player’s emotional engagement and creates a more personalized gaming experience [70].

Heart rate monitoring in XR can also play a crucial role in collaborative environments, where physiological feedback is shared among participants to enhance group dynamics. For example, in team-based virtual simulations or remote collaboration scenarios, the system can monitor heart rate data across all participants. If a member of the team exhibits signs of stress or anxiety (as indicated by an elevated heart rate), the system could alert other team members or suggest strategies to manage the group’s emotional state, fostering a more supportive and effective collaborative environment.

As XR systems continue to evolve, heart rate monitoring, when combined with other physiological modalities such as GSR and facial tracking, will enable even more sophisticated emotional and cognitive responses. Together, these tools create a holistic view of the user’s physical and emotional state, offering the potential for highly adaptive, personalized XR experiences. This physiological insight also sets the stage for our next exploration into brain activation monitoring, which delves deeper into the user’s cognitive states, offering new dimensions of control and interaction within XR environments.

3.8. Electroencephalogram (EEG): Brain-Computer Interfaces and Cognitive Control

Electroencephalogram (EEG) technology, which measures electrical activity in the brain, serves as the foundation for brain-computer interfaces (BCIs). BCIs offer users the ability to control virtual objects or navigate virtual environments directly through brain activity, bypassing traditional input devices like keyboards or controllers. This technology is particularly transformative in XR, providing new avenues for accessibility, cognitive insights, and personalized interaction. Through the integration of EEG with XR, users can engage more deeply with virtual worlds, transforming how they interact, learn, and perform tasks within the metaverse.

One of the most significant contributions of EEG-based BCIs to XR is in enhancing accessibility. For individuals with physical disabilities or limited mobility, BCIs offer a way to interact with the virtual world through brain activity alone. By analyzing specific brainwave patterns, EEG systems can infer certain user intentions, allowing users to select, move, or manipulate virtual objects without physical movement. This capability opens up the metaverse and other virtual environments to users who may not be able to engage through conventional means, offering a more inclusive and participatory experience [73,74]. The use of BCIs in XR enhances autonomy and independence, empowering users to perform complex tasks without relying on traditional input mechanisms.

Beyond accessibility, EEG in XR provides real-time cognitive insights by monitoring brainwave patterns associated with different mental states. For example, EEG sensors can track changes in focus, engagement, or fatigue, enabling XR systems to adapt dynamically to the user’s cognitive state. When the system detects that a user’s attention is waning, it can adjust the difficulty, pacing, or complexity of tasks to re-engage the user and optimize their performance [28,75]. This is especially useful in educational and training environments, where maintaining an optimal cognitive load is critical for effective learning. By personalizing the user experience based on real-time cognitive feedback, EEG technology enhances both learning outcomes and user satisfaction [76].
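One common way to turn raw EEG into an engagement signal is the ratio of beta power to alpha plus theta power, an index used in adaptive-automation research. The sketch below is a minimal, dependency-free version under stated assumptions: a single clean channel, conventional band edges (theta 4 to 8 Hz, alpha 8 to 13 Hz, beta 13 to 30 Hz), and a naive DFT in place of the windowed spectral estimators a real pipeline would use. The function names and the 20% tolerance in `pacing_adjustment` are hypothetical.

```python
import cmath
import math


def band_power(signal, fs, low, high):
    """Sum periodogram power over DFT bins whose frequency lies in [low, high) Hz.

    Uses a naive O(n^2) DFT, acceptable only for short illustrative windows.
    """
    n = len(signal)
    offset = sum(signal) / n
    x = [s - offset for s in signal]  # remove the DC offset
    power = 0.0
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        if low <= freq < high:
            coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            power += abs(coeff) ** 2
    return power


def engagement_index(signal, fs):
    """Engagement ratio beta / (alpha + theta); higher suggests more engagement."""
    theta = band_power(signal, fs, 4, 8)
    alpha = band_power(signal, fs, 8, 13)
    beta = band_power(signal, fs, 13, 30)
    denom = alpha + theta
    return beta / denom if denom > 0 else float("inf")


def pacing_adjustment(index, baseline, tolerance=0.2):
    """Hypothetical rule: suggest re-engagement when the index drops more than
    `tolerance` (as a fraction) below the user's own baseline."""
    return "re-engage" if index < baseline * (1 - tolerance) else "maintain"
```

Comparing the index with each user's own baseline, rather than a fixed cutoff, reflects the large between-person variability in EEG band power.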

EEG also plays a crucial role in creating adaptive environments that respond to the user’s mental state. By continuously analyzing brainwave patterns, EEG systems can gauge the user’s cognitive load, which reflects the amount of mental effort required to perform a task. In high-stakes virtual simulations, such as those used for military training, medical procedures, or emergency response, the system can use EEG data to detect when the user is becoming mentally overwhelmed and adjust the environment accordingly [77,78]. For example, the system might reduce task complexity, slow down the pace of interactions, or provide additional guidance to help the user manage their cognitive load. Conversely, if the user’s EEG data indicates that they are under-stimulated, the system can introduce more challenging tasks or increase the pace of the simulation to maintain engagement [76]. This ability to tailor experiences to individual cognitive needs enhances both performance and immersion in XR environments.
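The overload and under-stimulation logic described above can be sketched as a simple controller with a dead band between two thresholds. This assumes an upstream EEG pipeline already produces a normalized cognitive-load estimate between 0 and 1; the class name, thresholds, and ten-level difficulty scale are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class DifficultyController:
    """Hypothetical controller mapping a normalized cognitive-load estimate
    (0 = idle, 1 = overloaded) to a discrete difficulty level."""

    level: int = 5
    min_level: int = 1
    max_level: int = 10
    overload: float = 0.75         # above this, ease off one level
    understimulated: float = 0.35  # below this, add one level of challenge

    def update(self, load: float) -> int:
        if load > self.overload:
            self.level = max(self.min_level, self.level - 1)
        elif load < self.understimulated:
            self.level = min(self.max_level, self.level + 1)
        # Loads inside the band leave the level unchanged (dead band).
        return self.level
```

The dead band is the main design choice: without it, load estimates hovering near a single threshold would make the simulation flip between easier and harder settings on every update.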

The integration of EEG-based BCIs with XR technologies also opens the door to novel forms of interaction. In addition to controlling virtual objects, users can interact with the environment in ways that extend beyond traditional physical interfaces. For instance, a user could navigate a virtual landscape by simply focusing on a specific location, or they could interact with virtual characters by directing their attention to specific tasks. This kind of cognitive control offers a more fluid and intuitive way of engaging with the virtual world, eliminating the need for physical input devices and creating a more seamless and immersive experience [73,74].

Furthermore, EEG can be used in therapeutic settings, where real-time monitoring of brain activity can support mental health treatments. In virtual therapy sessions, EEG data can be used to track a user’s emotional and cognitive responses to different virtual scenarios, providing therapists with valuable insights into their mental state. For example, EEG could detect heightened anxiety during a virtual exposure therapy session, allowing the system to adjust the environment to help the user manage their stress levels [27,28,79]. This real-time cognitive feedback can make therapeutic interventions more effective, offering personalized treatments based on the user’s mental state.

As EEG technology continues to advance, it is poised to become an integral part of adaptive and personalized XR environments, offering users the ability to engage with virtual worlds in ways that are tailored to their cognitive and emotional needs. The fusion of EEG and XR is not only transforming accessibility and interaction but also redefining the boundaries of cognitive augmentation, paving the way for more immersive and intuitive XR experiences.

4. XR Applications: Expanding Multimodal Interactions across Domains

XR offers groundbreaking opportunities across a wide range of fields. By integrating multiple modalities, such as eye-tracking, hand-tracking, haptic feedback, EEG, and biometric sensors, XR enhances immersion, interaction, and user performance in virtual environments. These multimodal systems are critical in optimizing the applications of XR, making it an essential tool for clinical interventions, education, professional training, entertainment, and beyond (see Table 2). Below is an exploration of XR’s transformative potential across various fields, highlighting the role of multimodal integration in each context.

4.1. Clinical Applications: Therapy, Neuropsychological Assessment, and Rehabilitation

XR technologies are making significant advancements in clinical applications, ranging from mental health therapy and neuropsychological assessment to physical rehabilitation and pain management. Through the integration of multimodal feedback systems, such as eye-tracking, facial tracking, GSR, EEG, and heart rate monitoring, XR environments can provide real-time responses to a patient’s emotional and physiological states, resulting in personalized therapeutic interventions. This adaptability and personalization position XR technologies as transformative tools in clinical interventions, expanding both mental and physical health care.

4.1.1. Therapy

One of the key clinical applications of XR is VR exposure therapy, a method that has shown great promise in treating anxiety disorders, PTSD, and phobias. Patients are immersed in controlled virtual environments where they can gradually confront and manage their fears in a safe setting, allowing for more controlled and personalized treatment compared to traditional exposure therapies. A recent meta-analysis found that VR exposure therapy significantly reduces symptoms of PTSD and anxiety in a range of clinical populations [80,81].

The integration of multimodal sensors enhances this approach by monitoring the patient’s physiological responses. GSR tracks changes in skin conductance, an index of physiological arousal commonly associated with stress, while EEG provides insight into brain activity related to anxiety or relaxation. For instance, if GSR data show elevated arousal during exposure to a feared stimulus (such as heights or spiders), the virtual environment can be adjusted automatically, reducing the intensity of the stimuli to encourage gradual desensitization [36,82,83]. This allows for a more dynamic and responsive form of therapy, in which the treatment environment adapts in real time to the patient’s physiological state.
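A GSR-driven exposure session could be organized as a stepwise hierarchy of stimulus intensities, backing off after an arousal spike and advancing only after sustained calm. The sketch below assumes skin conductance level (SCL) is sampled in fixed windows and compared with a resting baseline. The ratios, the three-window calm requirement, and the function name are hypothetical, and real systems would also handle motion artifacts and phasic skin conductance responses.

```python
def next_exposure_level(level, scl, baseline, calm_streak, max_level,
                        high_ratio=1.3, calm_ratio=1.1, required_calm=3):
    """Return (new_level, new_calm_streak) for one measurement window.

    level:       current step in the exposure hierarchy (0 = mildest)
    scl:         current skin conductance level (microsiemens)
    baseline:    resting SCL for this patient (microsiemens)
    calm_streak: consecutive calm windows observed so far
    All thresholds are illustrative assumptions, not clinical values.
    """
    if scl > baseline * high_ratio:
        # Arousal spike: step back one level and reset the calm counter.
        return max(0, level - 1), 0
    if scl <= baseline * calm_ratio:
        calm_streak += 1
        if calm_streak >= required_calm:
            # Sustained calm: advance one step toward the feared stimulus.
            return min(max_level, level + 1), 0
        return level, calm_streak
    # Moderate arousal: hold the current level and restart the calm count.
    return level, 0
```

Requiring several consecutive calm windows before advancing mirrors the gradual pacing of graded exposure, while a single spike is enough to ease off.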

Furthermore, XR-based cognitive-behavioral therapy (CBT) is increasingly being used to treat depression, addictions, and eating disorders [26,84]. In these applications, XR’s ability to simulate real-world environments allows patients to engage in therapeutic exercises that target maladaptive thoughts and behaviors in controlled, immersive settings. The combination of haptic feedback, eye-tracking, and facial tracking enables deeper emotional engagement, making therapy sessions more interactive and personalized.

4.1.2. Neuropsychological Assessment

XR offers enhanced tools for neuropsychological assessments, allowing for a more accurate and engaging evaluation of cognitive functions such as memory, attention, and executive functioning. Traditional assessments often rely on static paper-based tests, which can be limited in ecological validity. XR technologies provide an interactive alternative, where patients can perform tasks in immersive, dynamic environments, with their performance tracked in real time.

For instance, eye-tracking technology allows clinicians to evaluate how patients interact with stimuli during assessments, such as by tracking gaze patterns and fixations to measure attention [85,86,87]. In tasks involving memory or decision-making, eye-tracking data can indicate cognitive load, revealing whether a patient is struggling to process information or focusing on irrelevant stimuli. Such detailed data allows for a more nuanced understanding of cognitive impairments, such as attention deficits, than traditional methods provide [85,86,87].
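Fixations are usually extracted from raw gaze samples before attention measures are computed. The sketch below implements the dispersion-threshold identification (I-DT) approach described by Salvucci and Goldberg (2000), which treats a run of samples as a fixation when their spread stays under a threshold for a minimum duration. The thresholds, units, and data layout are assumptions for illustration; clinical systems generally rely on validated vendor or research pipelines.

```python
def dispersion(points):
    """I-DT dispersion: (max x - min x) + (max y - min y)."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples, max_dispersion, min_samples):
    """Dispersion-threshold (I-DT) fixation detection.

    samples:        list of (x, y) gaze points at a fixed sampling rate
    max_dispersion: dispersion threshold, in the same units as x and y
    min_samples:    minimum fixation duration expressed as a sample count
    Returns a list of (start_index, end_index_exclusive, centroid_x, centroid_y).
    """
    fixations = []
    start = 0
    n = len(samples)
    while start + min_samples <= n:
        end = start + min_samples
        if dispersion(samples[start:end]) <= max_dispersion:
            # Grow the window while the added point keeps it under threshold.
            while end < n and dispersion(samples[start:end + 1]) <= max_dispersion:
                end += 1
            window = samples[start:end]
            cx = sum(p[0] for p in window) / len(window)
            cy = sum(p[1] for p in window) / len(window)
            fixations.append((start, end, cx, cy))
            start = end  # continue after the fixation
        else:
            start += 1   # slide past the first point (likely a saccade)
    return fixations
```

Fixation counts, durations, and locations derived this way are the kind of measures clinicians could then relate to attention during an assessment.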

In addition to eye-tracking, EEG data provides insights into cognitive engagement and fatigue. Real-time EEG monitoring can detect cognitive overload, lapses in attention, or difficulty with memory retrieval, helping clinicians adjust the task’s complexity during assessments [79,88,89]. By integrating multimodal feedback systems, clinicians can develop a more detailed and accurate cognitive profile for each patient, facilitating tailored treatment plans.

4.1.3. Rehabilitation

Physical rehabilitation is another area where XR is having a substantial impact. With full-body tracking and haptic feedback, patients recovering from injuries, surgeries, or neurological conditions can perform exercises in virtual environments that track and mirror their movements. For example, stroke patients can practice motor skills in a virtual environment where their movements are tracked in real time, providing therapists with detailed data to monitor progress [61].

A 2020 review found that VR-based rehabilitation leads to significant improvements in motor function for stroke survivors, particularly when combined with multisensory feedback [57,89,90]. By providing tactile sensations, such as resistance when lifting virtual objects, haptic devices enhance motor learning and skill acquisition. Patients can engage in immersive exercises that mimic real-world tasks, such as picking up objects or walking through a virtual environment, encouraging recovery through realistic and engaging activities.

Beyond motor rehabilitation, XR is also used in cognitive rehabilitation for individuals with traumatic brain injury (TBI) or neurodegenerative diseases such as Alzheimer’s disease. Virtual environments provide patients with tasks that mimic everyday challenges, such as navigating a virtual store or solving a puzzle, while multimodal sensors monitor their cognitive performance. The system can adjust the difficulty level based on the patient’s responses, creating a personalized rehabilitation plan that supports both cognitive recovery and patient engagement [81,91].
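The text does not specify how difficulty is adjusted from the patient’s responses, so the sketch below swaps in a standard psychophysics technique, the 2-down/1-up adaptive staircase, as one plausible way to do it. The class name and level range are hypothetical. The rule raises difficulty after two consecutive correct responses and lowers it after any error, which tends to settle performance around 70.7% correct.

```python
class Staircase:
    """2-down/1-up adaptive staircase over discrete difficulty levels.

    Illustrative only: level bounds and the mapping from level to task
    parameters (e.g. number of items in a virtual store) are assumptions.
    """

    def __init__(self, level=1, min_level=1, max_level=10):
        self.level = level
        self.min_level = min_level
        self.max_level = max_level
        self._correct_run = 0  # consecutive correct responses at this level

    def record(self, correct: bool) -> int:
        """Update the level after one trial and return the new level."""
        if correct:
            self._correct_run += 1
            if self._correct_run == 2:
                self.level = min(self.max_level, self.level + 1)
                self._correct_run = 0
        else:
            self.level = max(self.min_level, self.level - 1)
            self._correct_run = 0
        return self.level
```

Keeping accuracy near a moderate target is one way to hold tasks challenging without becoming discouraging, which matches the engagement goal described above.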

4.1.4. Pain Management

XR is proving to be a powerful tool for pain management, particularly in addressing chronic pain. VR distraction therapy immerses patients in virtual environments that divert attention away from pain by engaging them in complex, attention-demanding tasks. Multisensory feedback, such as visual, auditory, and tactile inputs, helps to reduce the perception of pain, with patients reporting significant pain relief during immersive VR sessions [92].

Additionally, XR-based virtual mirror therapy has shown promise in treating phantom limb pain in amputees. Full-body tracking is used to create the visual illusion of movement in the missing limb, so that patients can "see" the limb moving in the virtual environment, which helps reduce pain [93,94]. The combination of haptic feedback and EEG monitoring allows clinicians to better understand how pain perception can be modulated through virtual experiences, providing new avenues for non-pharmacological pain treatment.
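The geometry behind the illusion is not specified here. Assuming the classic mirror-therapy setup, in which the intact limb’s tracked movement is shown on the missing side, one minimal approach is to reflect the intact limb’s joint positions across the body’s sagittal plane. The function names, the pelvis-centered plane, and the coordinate convention below are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def reflect_across_plane(p: Vec3, origin: Vec3, normal: Vec3) -> Vec3:
    """Mirror point p across the plane through `origin` with normal `normal`:
    p' = p - 2 * ((p - origin) . n) * n, with n the unit normal."""
    length = sum(c * c for c in normal) ** 0.5
    if length == 0:
        raise ValueError("plane normal must be non-zero")
    n = tuple(c / length for c in normal)  # normalize so any normal length works
    d = sum((pc - oc) * nc for pc, oc, nc in zip(p, origin, n))
    return tuple(pc - 2 * d * nc for pc, nc in zip(p, n))


def mirror_limb(joints: Sequence[Vec3], pelvis: Vec3, body_right: Vec3) -> List[Vec3]:
    """Map tracked joints of the intact limb onto the missing side by reflecting
    them across the sagittal plane through the pelvis, whose normal is the
    body's left-right axis (assumed to be supplied by the body tracker)."""
    return [reflect_across_plane(j, pelvis, body_right) for j in joints]
```

Reflecting relative to the tracked pelvis and body axis, rather than fixed world axes, keeps the virtual limb attached correctly as the patient turns.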

4.2. Educational Applications: Engaging Learning Through XR

XR technologies are transforming the educational landscape by creating interactive and immersive environments that engage multiple sensory modalities, enhancing both learning and retention. By incorporating eye-tracking, hand-tracking, haptic feedback, and multimodal interfaces, XR brings abstract concepts to life and tailors the learning experience to individual needs. These innovations make learning more engaging and adaptable, promoting active participation and deeper comprehension in a wide range of subjects.

4.2.1. STEM Education

XR environments hold immense potential for STEM education, enabling students to visualize and manipulate complex scientific concepts that are often difficult to grasp through traditional learning methods. In subjects such as biology, physics, and chemistry, XR allows learners to interact with digital representations of biological structures, chemical reactions, or physical simulations. For example, students can explore the human body at a cellular level or perform virtual dissections, offering a more interactive approach to understanding anatomical structures [95].

Hand-tracking technologies facilitate this process by allowing students to manipulate digital models with natural gestures like pinching or grabbing, making learning more intuitive. Similarly, eye-tracking can monitor students’ focus, providing feedback to instructors about which parts of the lesson are capturing attention and which are not, allowing real-time adjustments to optimize engagement [44].
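A simple way to summarize which parts of a lesson capture attention is to total gaze dwell time per area of interest (AOI). The sketch below assumes gaze points have already been projected into 2D lesson or viewport coordinates and that each AOI is an axis-aligned rectangle; the function name and data layout are hypothetical.

```python
from collections import defaultdict


def dwell_time_by_aoi(gaze, aois, sample_period_ms):
    """Total gaze dwell time (ms) per area of interest.

    gaze:             iterable of (x, y) points sampled every sample_period_ms
    aois:             dict mapping AOI name -> (x_min, y_min, x_max, y_max)
    sample_period_ms: time between gaze samples in milliseconds
    Samples outside every AOI are ignored; overlapping AOIs each get credit.
    """
    totals = defaultdict(float)
    for x, y in gaze:
        for name, (x0, y0, x1, y1) in aois.items():
            if x0 <= x <= x1 and y0 <= y <= y1:
                totals[name] += sample_period_ms
    # Report every AOI, including those that received no gaze at all.
    return {name: totals.get(name, 0.0) for name in aois}
```

AOIs with little or no dwell time are candidates for the real-time adjustments an instructor might make.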

In chemistry, for instance, students can perform virtual experiments by interacting with molecular models or balancing chemical equations in a dynamic environment, ensuring a hands-on learning experience without the risks associated with traditional lab settings. Haptic feedback further enriches the learning process by introducing tactile sensations, enabling students to "feel" chemical bonds breaking or forming, or to experience resistance when interacting with virtual objects, which enhances the understanding of abstract concepts.

4.2.2. History and Humanities

XR also plays a crucial role in enhancing learning in the humanities, particularly in history education. Through AR, historical artifacts and cultural landmarks can be superimposed onto physical spaces, allowing students to explore ancient civilizations or historical events from the classroom. For instance, AR applications can project virtual Roman ruins or medieval castles into the school environment, enabling students to walk through and interact with these reconstructions [29].