Submitted: 10 September 2024

Posted: 11 September 2024

Abstract

Keywords:

1. Introduction

2. Methods

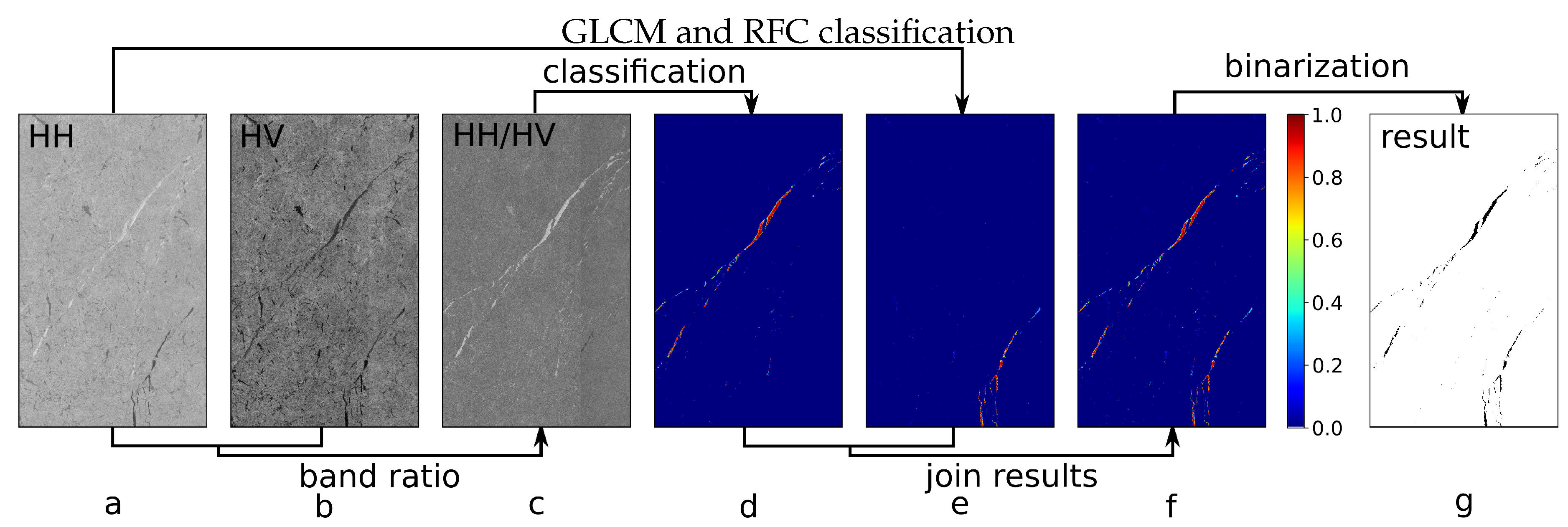

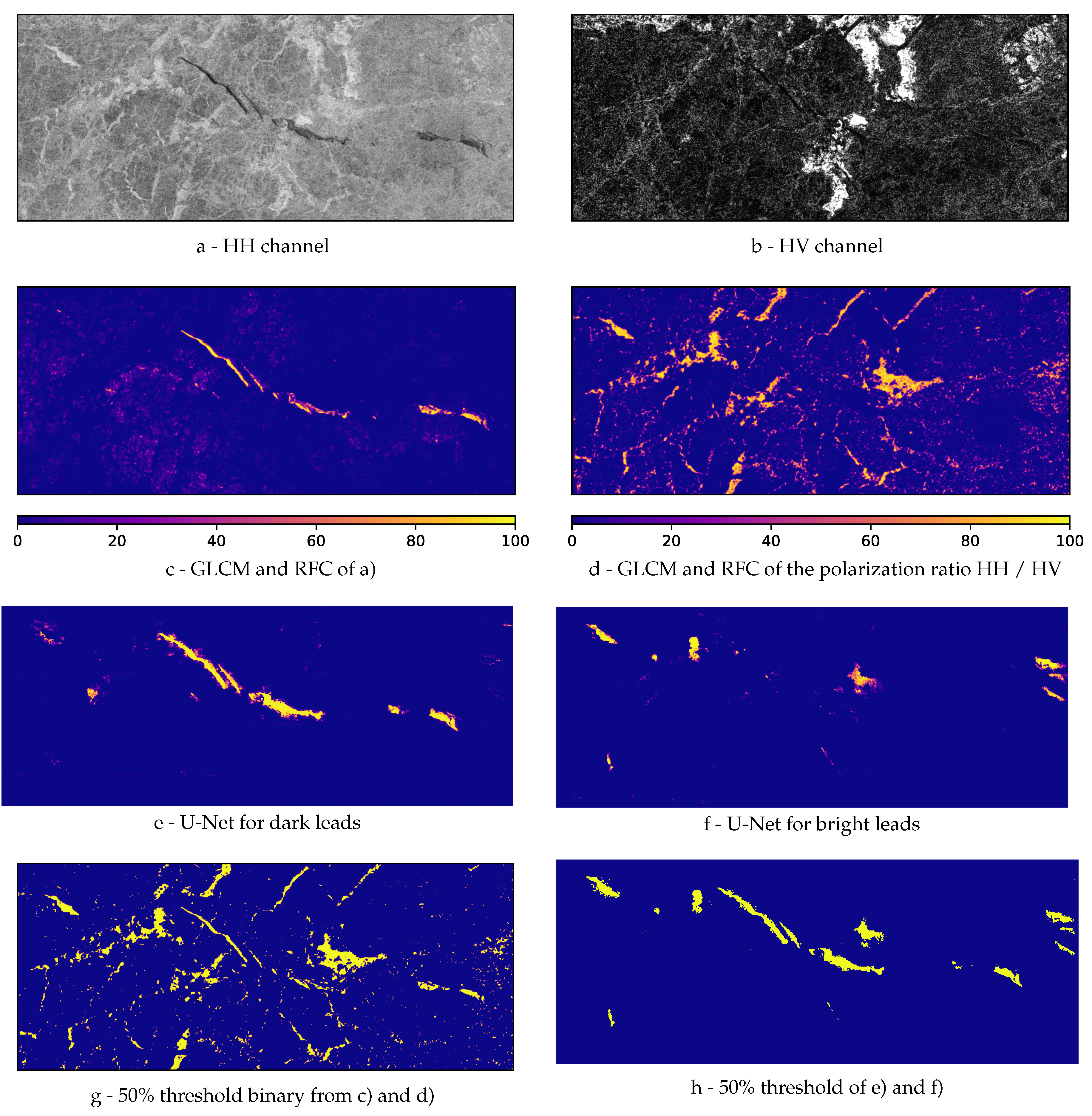

2.1. Existing Lead Classification Method

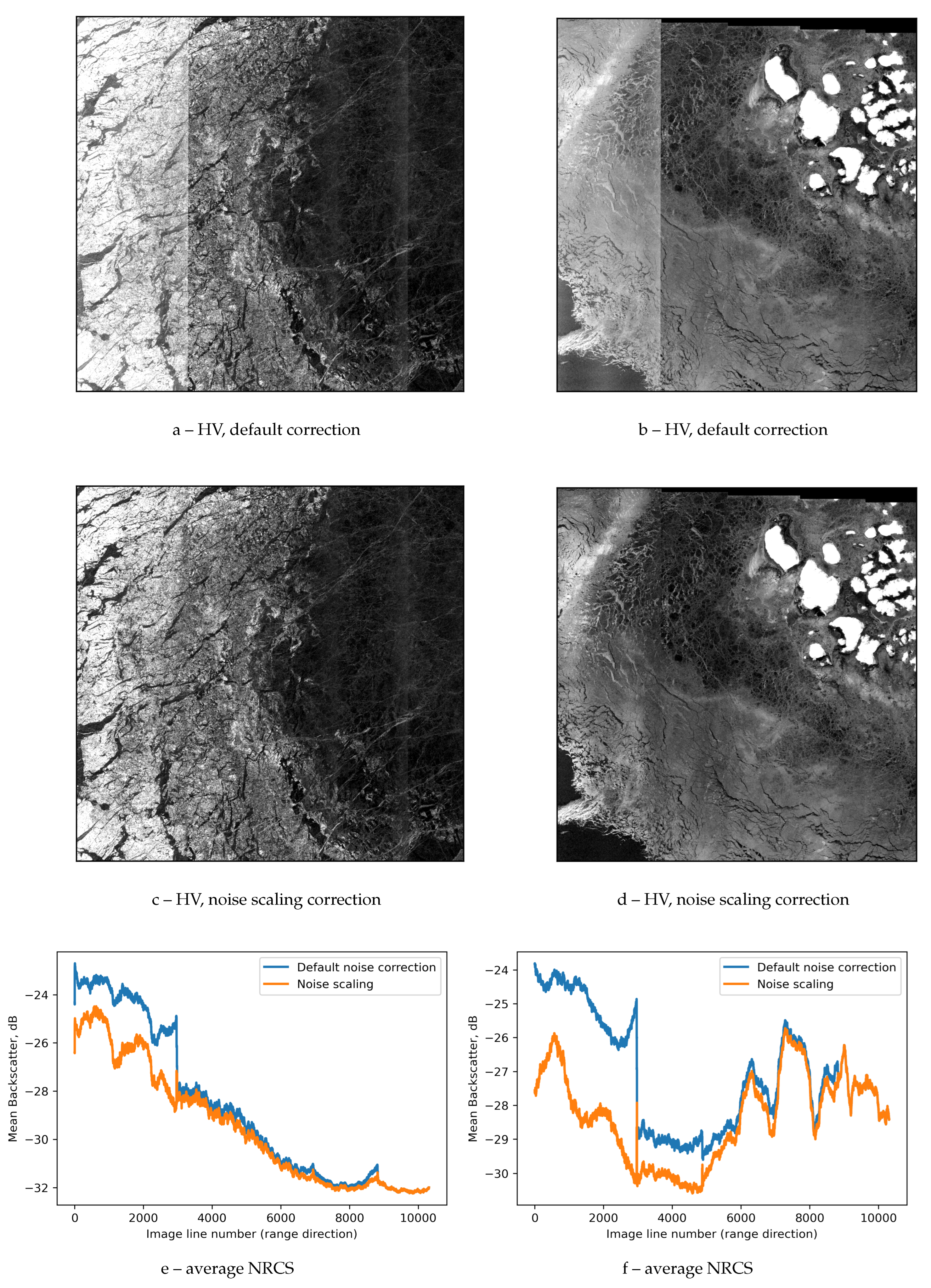

2.2. Improved Sentinel-1 Preprocessing

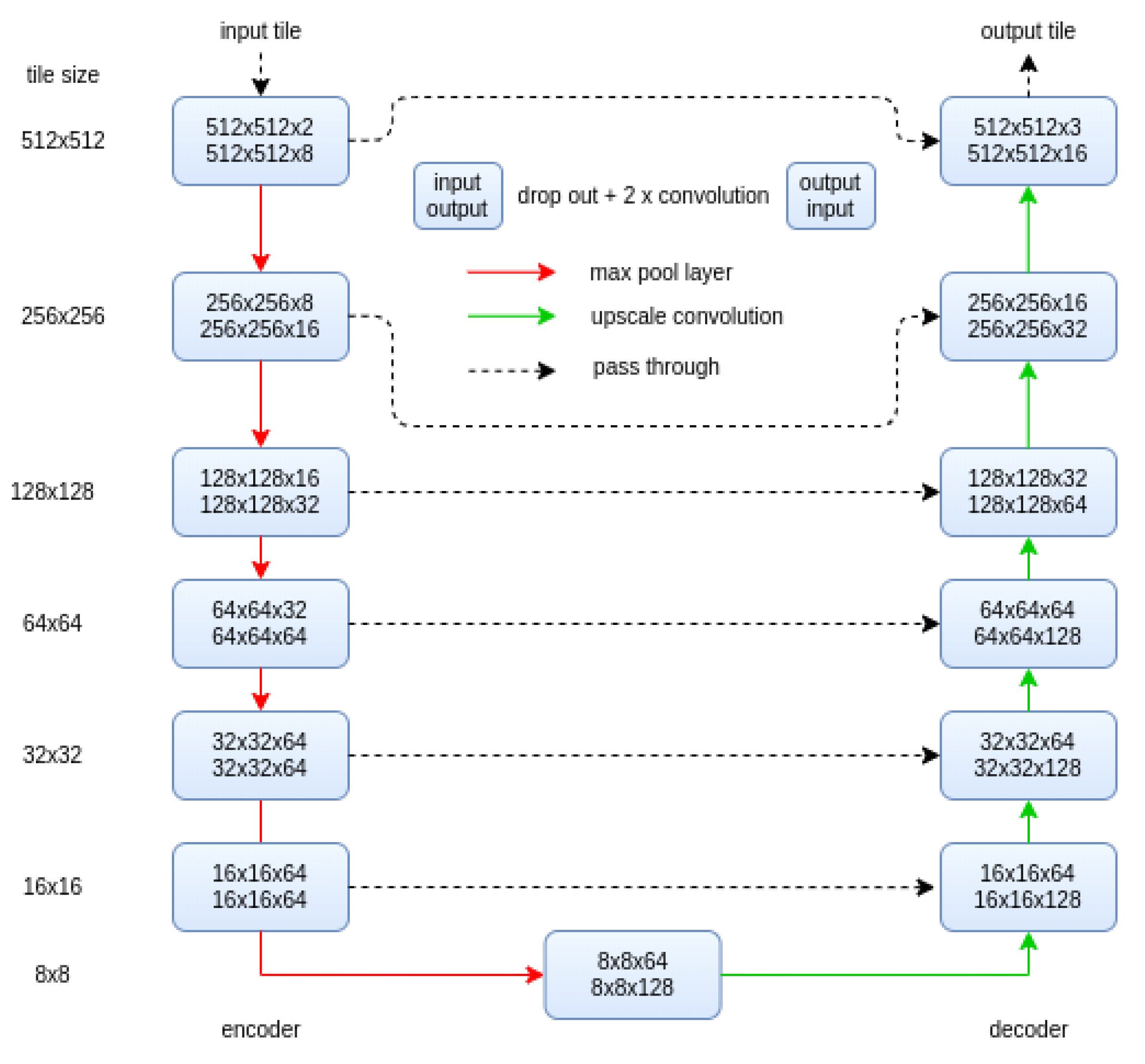

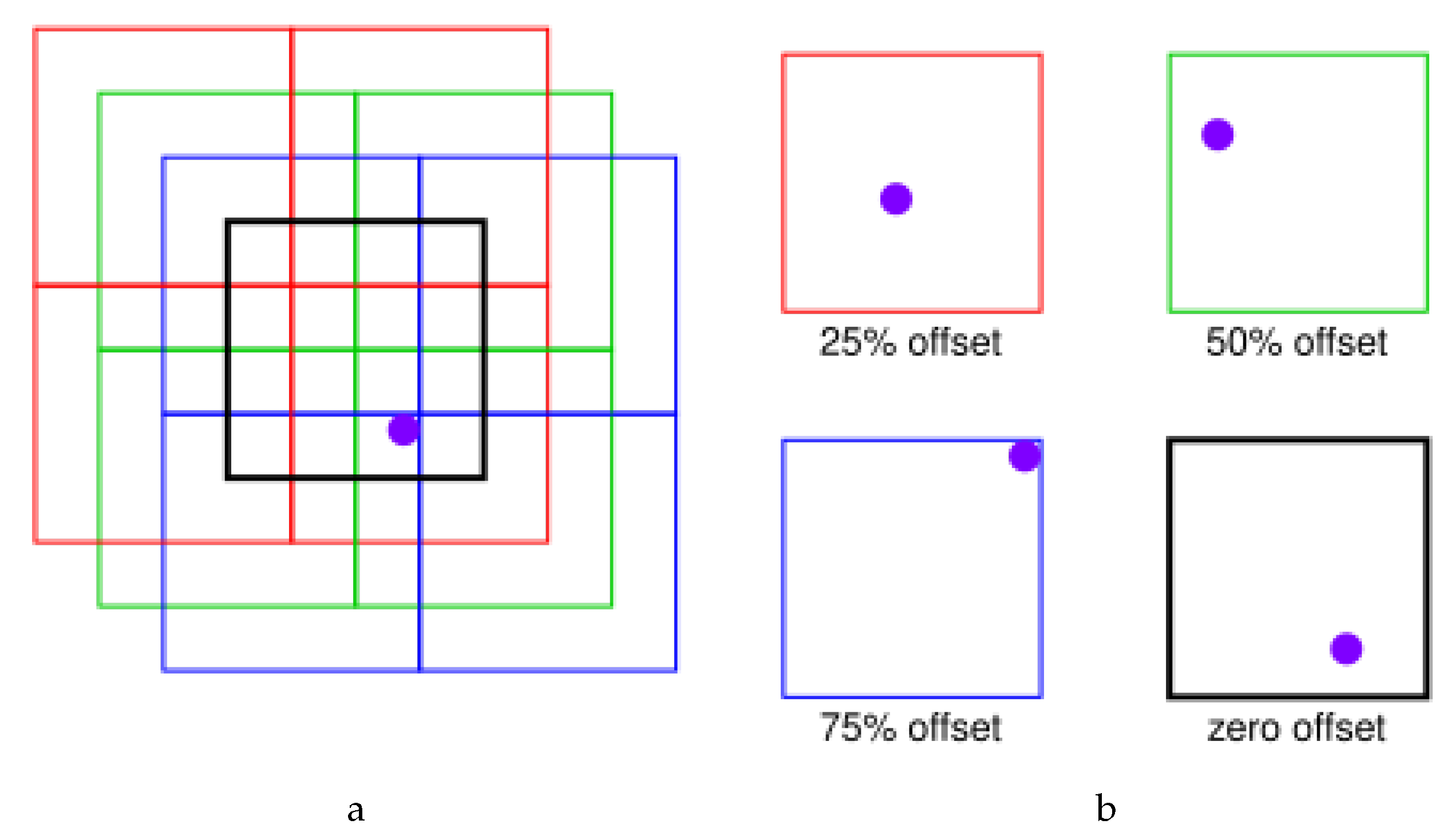

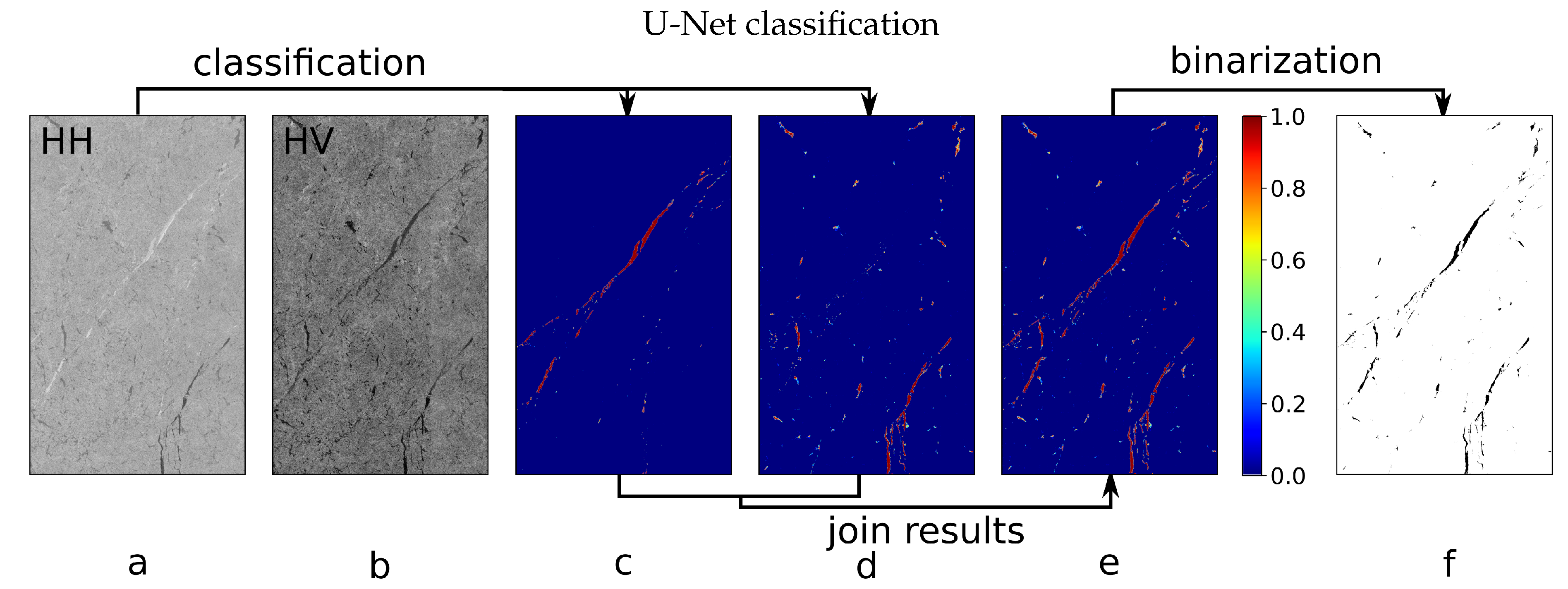

2.3. Improved Lead Detection

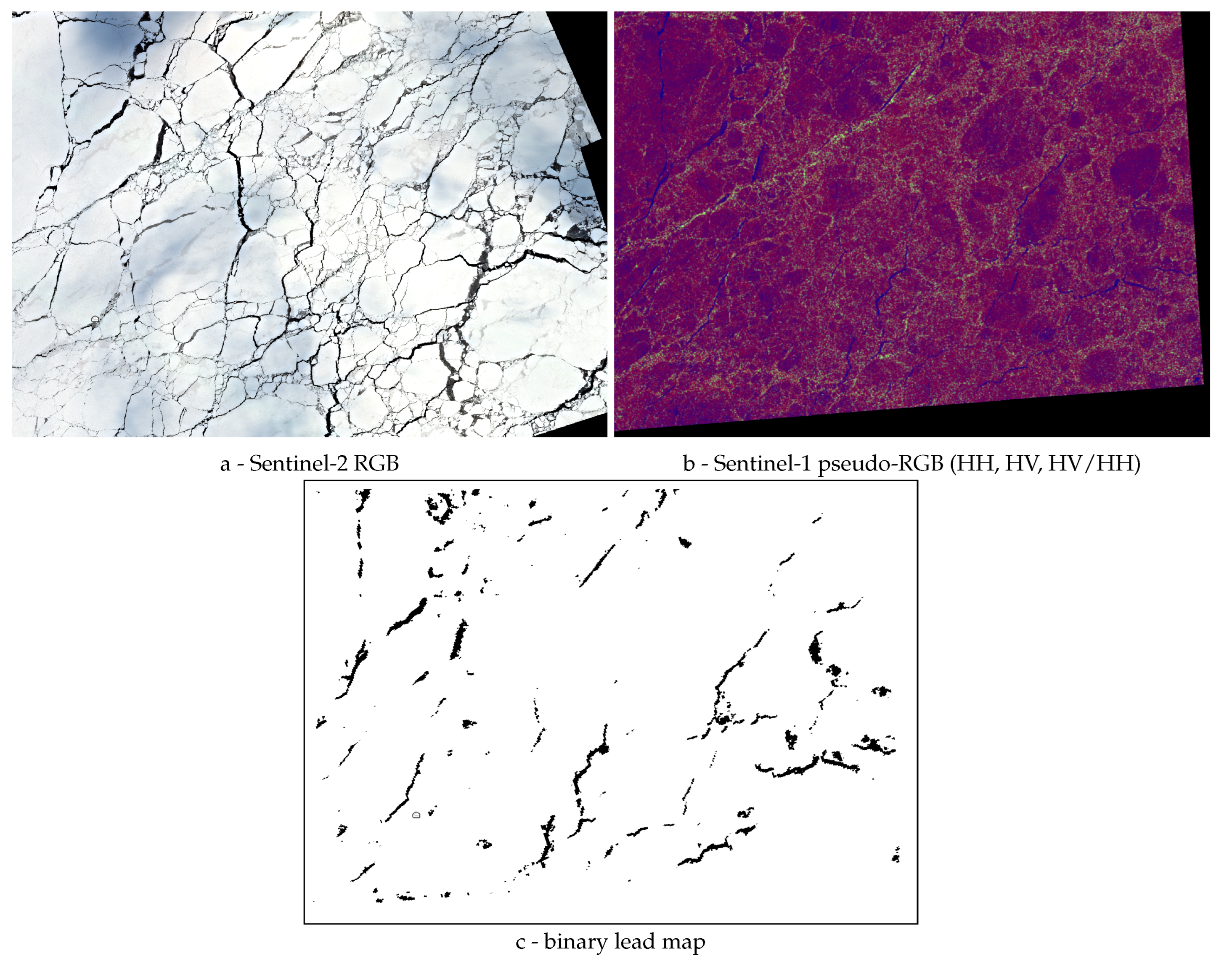

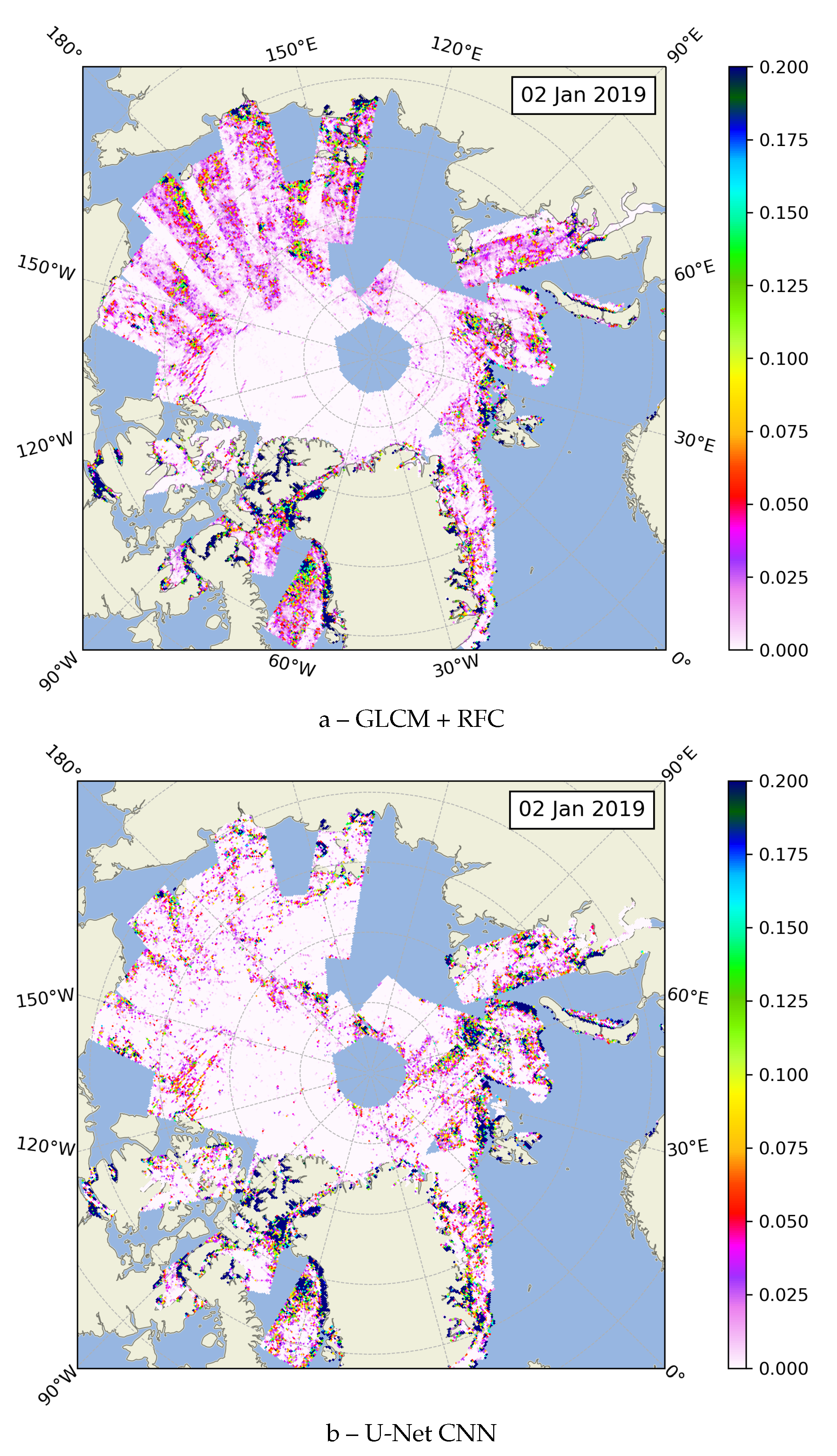

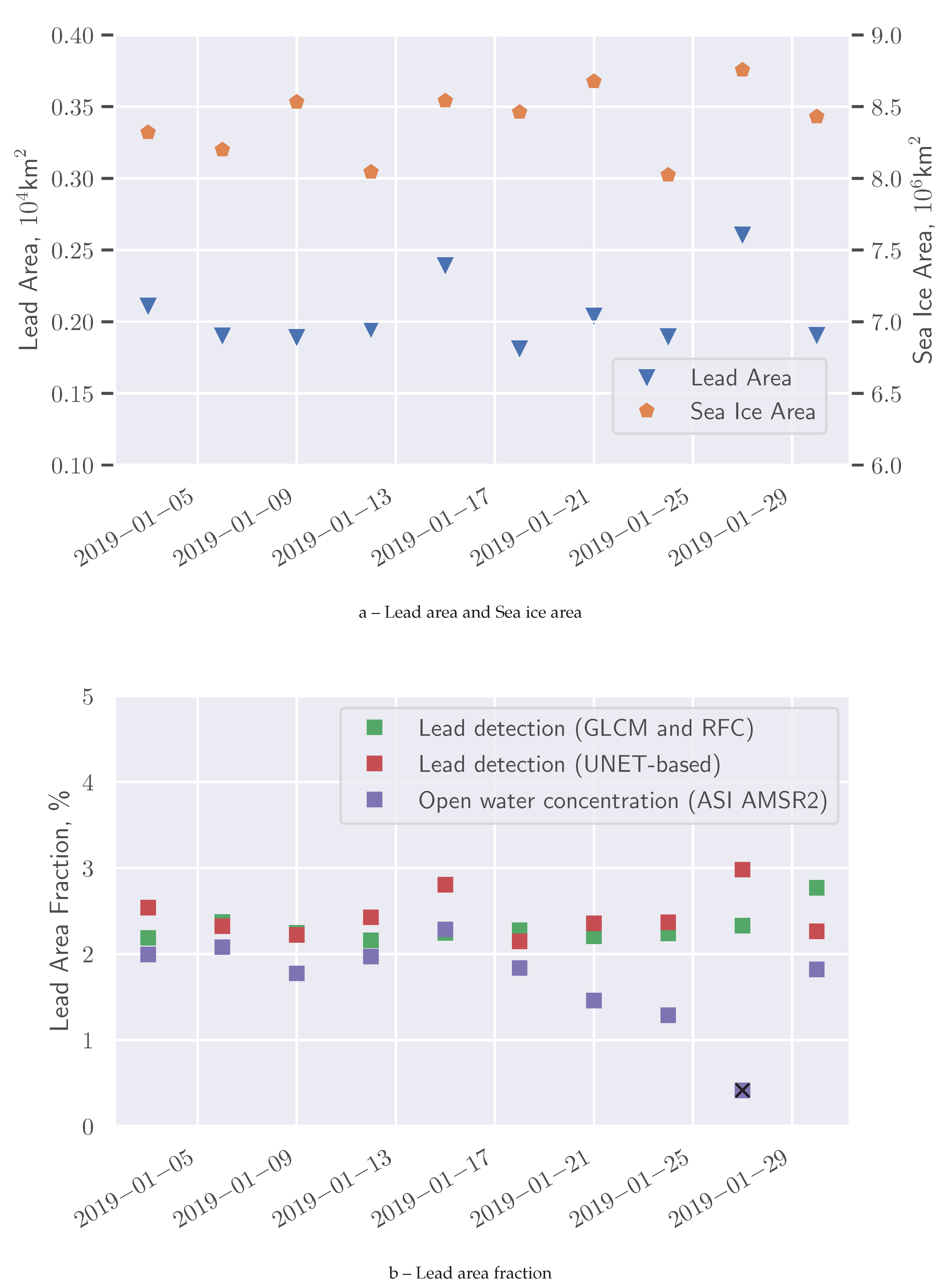

3. Results

4. Discussion

5. Conclusions

Acknowledgments

References

- Lindsay, R.W.; Rothrock, D.A. Arctic Sea Ice Leads from Advanced Very High Resolution Radiometer Images. Journal of Geophysical Research 1995, 100, 4533–4544.

- Reiser, F.; Willmes, S.; Heinemann, G. A New Algorithm for Daily Sea Ice Lead Identification in the Arctic and Antarctic Winter from Thermal-Infrared Satellite Imagery. Remote Sensing 2020, 12, 1957.

- Hoffman, J.P.; Ackerman, S.A.; Liu, Y.; Key, J.R.; McConnell, I.L. Application of a Convolutional Neural Network for the Detection of Sea Ice Leads. Remote Sensing 2021, 13, 4571.

- Röhrs, J.; Kaleschke, L. An Algorithm to Detect Sea Ice Leads by Using AMSR-E Passive Microwave Imagery. The Cryosphere 2012, 6, 343–352.

- Wernecke, A.; Kaleschke, L. Lead Detection in Arctic Sea Ice from CryoSat-2: Quality Assessment, Lead Area Fraction and Width Distribution. The Cryosphere 2015, 9, 1955–1968.

- Longepe, N.; Thibaut, P.; Vadaine, R.; Poisson, J.C.; Guillot, A.; Boy, F.; Picot, N.; Borde, F. Comparative Evaluation of Sea Ice Lead Detection Based on SAR Imagery and Altimeter Data. IEEE Transactions on Geoscience and Remote Sensing 2019, 57, 4050–4061.

- Ivanova, N.; Rampal, P.; Bouillon, S. Error Assessment of Satellite-Derived Lead Fraction in the Arctic. The Cryosphere 2016, 10, 585–595.

- Komarov, A.S.; Buehner, M. Adaptive Probability Thresholding in Automated Ice and Open Water Detection from RADARSAT-2 Images. IEEE Geoscience and Remote Sensing Letters 2018, 15, 552–556.

- Linow, S.; Dierking, W. Object-Based Detection of Linear Kinematic Features in Sea Ice. Remote Sensing 2017, 9, 1–15.

- Hutter, N.; Zampieri, L.; Losch, M. Leads and Ridges in Arctic Sea Ice from RGPS Data and a New Tracking Algorithm. The Cryosphere 2019, 13, 627–645.

- Von Albedyll, L.; Hendricks, S.; Hutter, N.; Murashkin, D.; Kaleschke, L.; Willmes, S.; Thielke, L.; Tian-Kunze, X.; Spreen, G.; Haas, C. Lead Fractions from SAR-derived Sea Ice Divergence during MOSAiC. The Cryosphere 2024, 18, 1259–1285.

- Komarov, A.S.; Landy, J.C.; Komarov, S.A.; Barber, D.G. Evaluating Scattering Contributions to C-Band Radar Backscatter From Snow-Covered First-Year Sea Ice at the Winter–Spring Transition Through Measurement and Modeling. IEEE Transactions on Geoscience and Remote Sensing 2017, 55, 5702–5718.

- Fors, A.S.; Brekke, C.; Gerland, S.; Doulgeris, A.P.; Beckers, J.F. Late Summer Arctic Sea Ice Surface Roughness Signatures in C-Band SAR Data. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2016, 9, 1199–1215.

- Ressel, R.; Singha, S.; Lehner, S.; Rosel, A.; Spreen, G. Investigation into Different Polarimetric Features for Sea Ice Classification Using X-Band Synthetic Aperture Radar. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2016, 9, 3131–3143.

- Singha, S.; Johansson, M.; Hughes, N.; Hvidegaard, S.M.; Skourup, H. Arctic Sea Ice Characterization Using Spaceborne Fully Polarimetric L-, C-, and X-Band SAR with Validation by Airborne Measurements. IEEE Transactions on Geoscience and Remote Sensing 2018, 56, 3715–3734.

- Xie, T.; Perrie, W.; Wei, C.; Zhao, L. Discrimination of Open Water from Sea Ice in the Labrador Sea Using Quad-Polarized Synthetic Aperture Radar. Remote Sensing of Environment 2020, 247, 111948.

- Zhao, L.; Xie, T.; Perrie, W.; Yang, J. Sea Ice Detection from RADARSAT-2 Quad-Polarization SAR Imagery Based on Co- and Cross-Polarization Ratio. Remote Sensing 2024, 16, 515.

- Leigh, S.; Wang, Z.; Clausi, D.A. Automated Ice-Water Classification Using Dual Polarization SAR Satellite Imagery. IEEE Transactions on Geoscience and Remote Sensing 2014, 52, 5529–5539.

- Liu, H.; Guo, H.; Zhang, L. SVM-Based Sea Ice Classification Using Textural Features and Concentration From RADARSAT-2 Dual-Pol ScanSAR Data. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2015.

- Zakhvatkina, N.; Korosov, A.; Muckenhuber, S.; Sandven, S.; Babiker, M. Operational Algorithm for Ice–Water Classification on Dual-Polarized RADARSAT-2 Images. The Cryosphere 2017, 11, 33–46.

- Li, X.M.; Sun, Y.; Zhang, Q. Extraction of Sea Ice Cover by Sentinel-1 SAR Based on Support Vector Machine with Unsupervised Generation of Training Data. IEEE Transactions on Geoscience and Remote Sensing 2021, 59, 3040–3053.

- Qu, M.; Lei, R.; Liu, Y.; Li, N. Arctic Sea Ice Leads Detected Using Sentinel-1B SAR Image and Their Responses to Atmosphere Circulation and Sea Ice Dynamics. Remote Sensing of Environment 2024, 308, 114193.

- Boulze, H.; Korosov, A.; Brajard, J. Classification of Sea Ice Types in Sentinel-1 SAR Data Using Convolutional Neural Networks. Remote Sensing 2020, 12, 2165.

- Chen, X.; Patel, M.; Pena Cantu, F.J.; Park, J.; Noa Turnes, J.; Xu, L.; Scott, K.A.; Clausi, D.A. MMSeaIce: A Collection of Techniques for Improving Sea Ice Mapping with a Multi-Task Model. The Cryosphere 2024, 18, 1621–1632.

- Malmgren-Hansen, D.; Pedersen, L.T.; Nielsen, A.A.; Kreiner, M.B.; Saldo, R.; Skriver, H.; Lavelle, J.; Buus-Hinkler, J.; Krane, K.H. A Convolutional Neural Network Architecture for Sentinel-1 and AMSR2 Data Fusion. IEEE Transactions on Geoscience and Remote Sensing 2021, 59, 1890–1902.

- Wang, Y.R.; Li, X.M. Arctic Sea Ice Cover Data from Spaceborne Synthetic Aperture Radar by Deep Learning. Earth System Science Data 2021, 13, 2723–2742.

- Liang, Z.; Pang, X.; Ji, Q.; Zhao, X.; Li, G.; Chen, Y. An Entropy-Weighted Network for Polar Sea Ice Open Lead Detection From Sentinel-1 SAR Images. IEEE Transactions on Geoscience and Remote Sensing 2022, 60, 1–14.

- Murashkin, D.; Spreen, G.; Huntemann, M.; Dierking, W. Method for Detection of Leads from Sentinel-1 SAR Images. Annals of Glaciology 2018, 59, 124–136.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation, 2015, [arXiv:cs/1505.04597].

- Spreen, G.; Kaleschke, L.; Heygster, G. Sea Ice Remote Sensing Using AMSR-E 89-GHz Channels. Journal of Geophysical Research 2008, 113.

- Tomasi, C.; Manduchi, R. Bilateral Filtering for Gray and Color Images. Sixth International Conference on Computer Vision, 1998.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Transactions on Systems, Man, and Cybernetics 1973, SMC-3, 610–621.

- Ho, T.K. The Random Subspace Method for Constructing Decision Forests. IEEE Transactions on Pattern Analysis and Machine Intelligence 1998, 20, 832–844.

- Breiman, L. Random Forests. Machine Learning 2001, 45, 5–32.

- Park, J.W.; Won, J.S.; Korosov, A.A.; Babiker, M.; Miranda, N. Textural Noise Correction for Sentinel-1 TOPSAR Cross-Polarization Channel Images. IEEE Transactions on Geoscience and Remote Sensing 2019, 57, 4040–4049.

- Sun, Y.; Li, X.M. Denoising Sentinel-1 Extra-Wide Mode Cross-Polarization Images Over Sea Ice. IEEE Transactions on Geoscience and Remote Sensing 2021, 59, 2116–2131.

- Korosov, A.; Demchev, D.; Miranda, N.; Franceschi, N.; Park, J.W. Thermal Denoising of Cross-Polarized Sentinel-1 Data in Interferometric and Extra Wide Swath Modes. IEEE Transactions on Geoscience and Remote Sensing 2022, 60, 1.

- Hinton, G.E.; Srivastava, N.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R.R. Improving Neural Networks by Preventing Co-Adaptation of Feature Detectors, 2012, [arXiv:cs/1207.0580].

- Fukushima, K. Visual Feature Extraction by a Multilayered Network of Analog Threshold Elements. IEEE Transactions on Systems Science and Cybernetics 1969, 5, 322–333.

- Cortes, C.; Mohri, M.; Rostamizadeh, A. L2 Regularization for Learning Kernels 2009.

- Maykut, G.A. Energy Exchange over Young Sea Ice in the Central Arctic. Journal of Geophysical Research 1978, 83, 3646–3658.

- Thorndike, A.S.; Rothrock, D.A.; Maykut, G.A.; Colony, R. The Thickness Distribution of Sea Ice. Journal of Geophysical Research 1975, 80, 4501–4513.

- Murashkin, D. Remote Sensing of Sea Ice Leads with Sentinel-1 C-band Synthetic Aperture Radar 2024.

| true \ predicted | dark leads | bright leads | sea ice |
|---|---|---|---|
| dark leads | 0.989 | 0.0 | 0.011 |
| bright leads | 0.0 | 0.989 | 0.011 |
| sea ice | 0.001 | 0.0 | 0.999 |
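
As a reading aid for the confusion matrix above, the following minimal sketch recovers the per-class recall from its diagonal. It assumes the matrix is row-normalized, i.e. each row is the distribution of predictions for one true class, which is consistent with every row summing to 1. The function names `per_class_recall` and `balanced_accuracy` are illustrative and not taken from the paper, and the balanced accuracy is a derived summary, not a figure reported by the authors.

```python
# Confusion matrix values copied from the table above.
# Rows are true classes, columns are predicted classes.
CLASSES = ["dark leads", "bright leads", "sea ice"]
CONFUSION = [
    [0.989, 0.0, 0.011],
    [0.0, 0.989, 0.011],
    [0.001, 0.0, 0.999],
]


def per_class_recall(matrix):
    """Return diagonal / row sum for each true class (recall)."""
    return [row[i] / sum(row) for i, row in enumerate(matrix)]


def balanced_accuracy(matrix):
    """Return the unweighted mean of the per-class recalls."""
    recalls = per_class_recall(matrix)
    return sum(recalls) / len(recalls)


recalls = per_class_recall(CONFUSION)
for name, value in zip(CLASSES, recalls):
    print(f"{name}: recall = {value:.3f}")
print(f"balanced accuracy = {balanced_accuracy(CONFUSION):.3f}")
```

Because the rows are normalized, per-class precision cannot be derived from this table alone; that would require the number of samples in each true class.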

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).