1. Introduction

According to International Maritime Organization (IMO) records, more than 90% of global trade relies on maritime shipping, as it is the most cost-effective and efficient mode of international transport [1]. However, the growth of shipping has also led to marine environmental pollution. Therefore, it is crucial to raise Maritime Domain Awareness (MDA) and protect ocean resources. Oil spills are one of the primary causes of marine environmental pollution. Marine oil spills originate from several sources, including offshore platforms, ship accidents, illegal discharges from ships, submarine oil pipeline leaks and port operations. With the development of the economy, industrial facilities discharge the largest share of oil pollution. However, oil pollution from ship accidents or illegal discharges occurs more frequently in the marine environment [2]. Oil pollution not only harms the environment and wildlife, but also requires substantial manpower and resources for cleanup.

In the past, aircraft and ships were used to inspect oil spills on the sea surface, which offered relatively flexible deployment. However, because of their small observation range, they had to patrol the entire area, which was time-consuming. Additionally, aircraft and ship inspections relied mainly on visible-light or infrared sensors, which are limited in range and cannot detect oil spills at night or under adverse weather conditions. In recent years, spaceborne Synthetic Aperture Radar (SAR) imagery has played a significant role in marine environment monitoring. Oil spills on the sea surface dampen capillary waves, reducing radar backscatter. Consequently, oil spill areas appear as dark regions in SAR images [3,4]. Utilizing this feature, SAR imagery enables 24-hour all-weather observation of marine oil spills. Ships typically appear as strong scatterers in SAR images because their metal structures and complex shapes cause significant radar reflections [5]. These strong scatterers often appear as bright spots in the SAR images. Therefore, oil spills and ships in SAR imagery can be detected using deep learning models [6,7,8,9,10,11,12,13].

Although SAR images can be used to observe oil pollution incidents, they cannot provide detailed information about the ships suspected of leaking oil. The Automatic Identification System (AIS) is an automatic tracking system installed on ships that regularly transmits navigation-related information, including static information such as vessel name, length, and width, as well as dynamic information such as position (latitude and longitude), speed, heading and course. However, AIS data are only available for vessels equipped with AIS transponders, and in areas with dense vessel traffic, AIS signal overlap and loss frequently occur. The European Maritime Safety Agency (EMSA) is an agency of the European Union (EU) established to enhance maritime safety and security, prevent pollution from ships, and respond to maritime incidents. CleanSeaNet is an important service provided by EMSA that focuses on oil spill detection, monitoring, and response [14]. By providing advanced satellite-based monitoring and timely alerts on oil spills, CleanSeaNet enables its users to respond swiftly and effectively to oil pollution incidents. In addition, the system integrates the corresponding AIS data to track ships. Although each technology has inherent limitations, their combination can provide effective monitoring of vessel activities and assist in investigating suspicious behavior near managed areas. Therefore, combining SAR and AIS data can effectively monitor the marine environment and track vessels suspected of discharging oil.

In recent years, many studies have attempted to use SAR and AIS data for marine environment monitoring. Rodger et al. [15] focused on the integration of SAR and AIS data for ship detection in environments with densely distributed ships. Leveraging the large coverage area of SAR, the study extracted information from regions with high ship density. However, the fusion of SAR and AIS data poses challenges, especially in matching ships across both datasets, and efficiency and accuracy can decrease in densely trafficked areas. In the experiment, 6,084 targets were used to train the model, achieving an overall accuracy of 91.8%. Galdelli et al. [16] compared SAR and AIS data to predict navigation trajectories. First, SAR targets and AIS reports were checked to see whether they fell within a certain distance of each other. If their positions were close, the ships were confirmed. Next, AIS information was used to predict the navigation status and position of ship targets, in order to detect abnormal navigation trajectories or determine whether ships had turned off AIS to engage in illegal activities. Combining SAR and AIS data can therefore achieve efficient ship detection and enhance the effectiveness of maritime traffic management. Liu et al. [17] first utilized SAR imagery for target detection to determine the presence of abnormal objects on the sea surface. Next, extension direction, sea surface distribution, and geographical location characteristics were used to assess whether these abnormal targets were oil spills. Once oil spills were identified, information such as wind speed, the distribution of oil platforms, and ship navigation routes was used to ascertain whether they were caused by oil platform leaks or ship discharges. Finally, for spills attributed to ship discharges, AIS data were analyzed to identify the polluting ships. Therefore, combining SAR and AIS data can improve the efficiency of marine ship detection as well as ship monitoring and tracking.

In this study, the semantic segmentation model FA-MobileUNet [18] was adopted to detect oil spills and ships in SAR images. However, the model was trained on the MKLab dataset [19], whose ground truth data were not post-processed. As a result, many small holes can be found in the oil spill detection results of the model. This study drew on morphological concepts to improve the spatial feature extraction of the model, allowing the training process to produce more complete feature maps and, in turn, more complete detection results for oil spill areas. Finally, SAR images and AIS data corresponding to several oil spill incidents in Taiwan were acquired to verify the effectiveness of this oil spill detection method for marine environmental surveillance.

The rest of this paper is organized as follows: Section 2 describes the oil spill dataset used in this study and introduces the proposed oil spill detection method. The experimental results are shown in Section 3. Some discussions are presented in Section 4. Finally, the study is concluded in Section 5.

2. Materials and Methods

2.1. Oil Spill Dataset

Previous studies [6,7,8,12,13] did not share a common oil spill dataset, making it impossible to compare and analyze their performance effectively. Moreover, oil spills cover a relatively small area of the ocean, making it difficult to obtain a large amount of data for training deep learning networks. Therefore, in this study the U-Net oil spill detection model was trained on the extended MKLab dataset provided by Chen et al. [18]. The original MKLab dataset was created using Sentinel-1 images from the Copernicus Open Access Hub [20] of the European Space Agency (ESA), and is available on a public website [21]. The MKLab data were collected from oil spill incidents recorded by EMSA’s CleanSeaNet system. Sentinel-1 images corresponding to the timestamp and geo-location of the oil spill incidents were downloaded for identification. The data collection period for the original MKLab dataset ranged from September 2015 to October 2017. The extended MKLab dataset included oil spill incidents collected up to 2022.

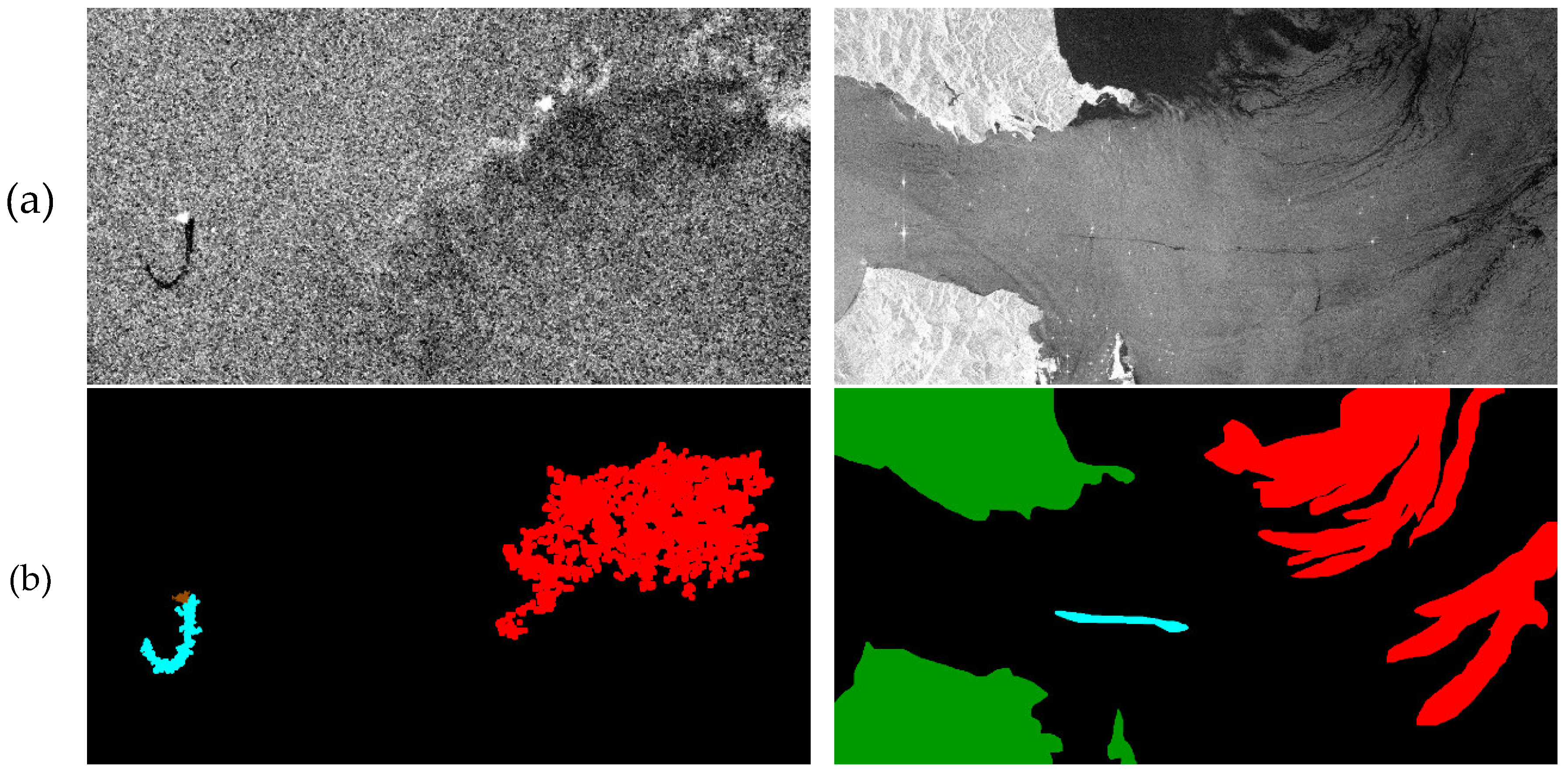

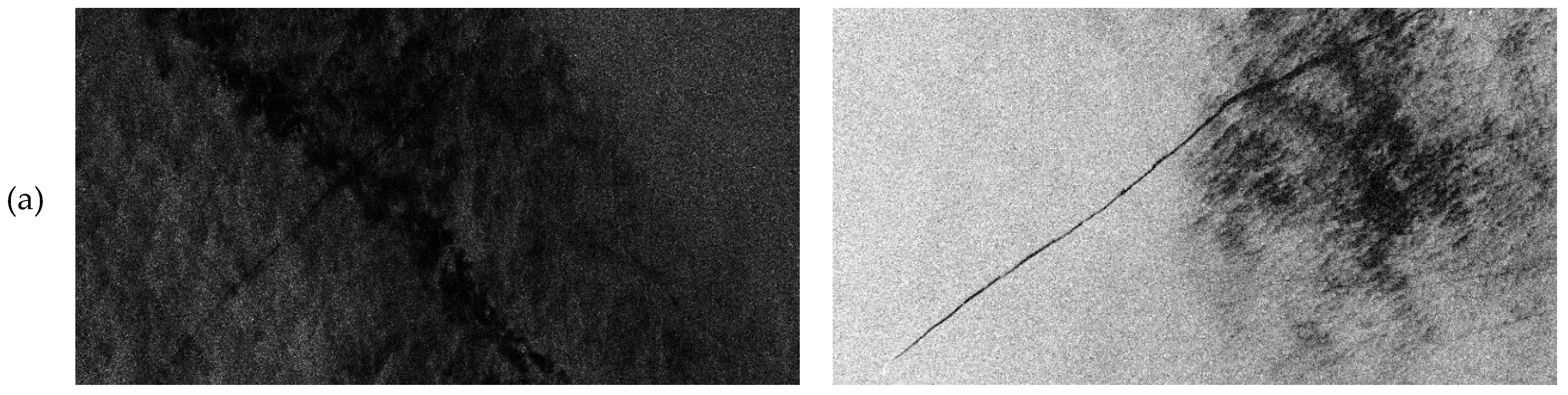

The oil spill dataset contained a total of 1,239 images of 1,250 × 650 pixels each, including 1,129 training images and 110 testing images. The dataset used C-band Sentinel-1 images in VV polarization, with a pixel spacing of 10 m × 10 m. The dataset includes five categories: oil spills, look-alikes, ships, sea surface, and land. Each category is annotated with a different RGB color: oil spills in cyan, look-alikes in red, ships in brown, sea surface in black, and land in green, as shown in Figure 1. Moreover, one-dimensional annotation data were provided, assigning each category an integer value between 0 and 4.
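The relationship between the RGB annotations and the integer annotations can be sketched as follows. This is only an illustration: the text names the colors but not their exact RGB triplets or which integer belongs to which category, so the `CLASS_COLORS` table below is an assumption, and `rgb_to_label` is a hypothetical helper rather than part of the dataset tooling.

```python
import numpy as np

# Assumed mapping: the paper states the colors and the 0-4 range only.
CLASS_COLORS = {
    0: (0, 0, 0),       # sea surface: black
    1: (0, 255, 255),   # oil spill: cyan
    2: (255, 0, 0),     # look-alike: red
    3: (153, 76, 0),    # ship: brown (assumed shade)
    4: (0, 153, 0),     # land: green (assumed shade)
}

def rgb_to_label(mask_rgb: np.ndarray) -> np.ndarray:
    """Convert an (H, W, 3) RGB annotation into an (H, W) integer label map."""
    labels = np.full(mask_rgb.shape[:2], 255, dtype=np.uint8)  # 255 marks unmatched pixels
    for class_id, color in CLASS_COLORS.items():
        match = np.all(mask_rgb == np.array(color, dtype=mask_rgb.dtype), axis=-1)
        labels[match] = class_id
    return labels

# Tiny 2 x 2 example: sea, oil spill, look-alike, sea.
demo = np.zeros((2, 2, 3), dtype=np.uint8)
demo[0, 1] = (0, 255, 255)
demo[1, 0] = (255, 0, 0)
labels = rgb_to_label(demo)
```

Pixels whose color matches no table entry keep the sentinel value 255, which makes annotation errors easy to spot before training.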

2.2. Improved Oil Spill Detection Model

Semantic segmentation networks have been widely applied to SAR image oil spill segmentation in recent years, achieving excellent detection performance. The U-Net model [

22,

23], an encoder-decoder architecture, can be trained with a small amount of data to yield fast and accurate segmentation results. To achieve more accurately segmentation results of oil spill areas, this study adopted the FA-MobileUNet oil spill detection model proposed by Chen et al. [

18]. This model architecture strengthened feature extraction for targets at different scales in the MKLab dataset, effectively extracting information of various categories for learning. Moreover, the model can correctly distinguish the look-alikes, which is often confused with oil spills, significantly improving the detection performance. However, the detection results of some oil spills were incomplete and fragmented. For example, there were often some small holes within the detected oil spill areas. The complete oil spill detection range can help with the shape description and area reporting of oil spills in marine environment monitoring. Therefore, in order to monitor marine oil spill pollution more effectively, the concept of morphology was introduced into FA-MobileUNet to enhance the spatial information extraction capability of the model and obtained more complete oil spill segmentation results. The architecture of the improved FA-MobileUNet model was shown in

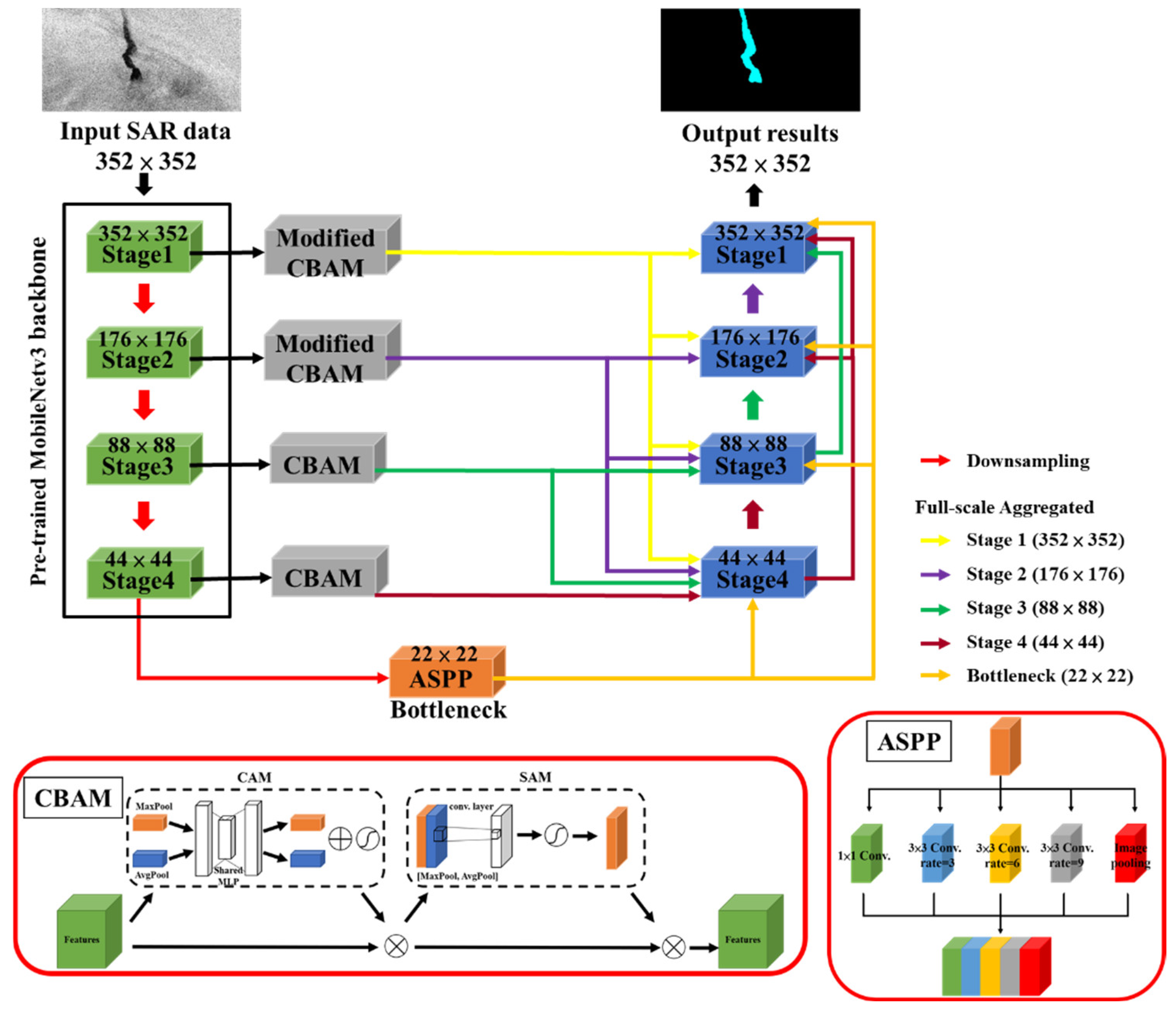

Figure 2.

2.2.1. FA-MobileUNet Model

Owing to its encoder-decoder architecture and skip connections, the U-Net model can output high-resolution segmentation results. The encoder captures the contextual information of the input data, which is then used by the decoder to produce accurate segmentation results. The FA-MobileUNet model enhanced feature extraction by replacing the encoder’s backbone network with MobileNetv3 [24]. MobileNetv3 is a convolutional neural network (CNN) architecture designed for mobile and edge devices, focusing on achieving a balance between low latency and high accuracy. MobileNetv3 relies heavily on depthwise separable convolutions to reduce computational complexity. In addition, Inverted Residual Blocks and Squeeze-and-Excitation (SE) modules [25] are utilized to enhance feature extraction without increasing the computational burden.
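To make the saving from depthwise separable convolutions concrete, the following sketch compares weight counts for a single layer. The layer sizes (3 × 3 kernel, 64 input channels, 128 output channels) are illustrative assumptions, not values taken from MobileNetv3 or FA-MobileUNet, and biases are ignored.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise k x k convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise

std = standard_conv_params(3, 64, 128)        # 73728 weights
sep = depthwise_separable_params(3, 64, 128)  # 576 + 8192 = 8768 weights
ratio = sep / std                             # equals 1/c_out + 1/k**2, about 0.119
```

For this hypothetical layer the separable form needs roughly 12% of the weights of the standard convolution, which is the kind of reduction that makes the backbone suitable for low-latency inference.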

Next, the attention mechanism in deep learning models enhances the model’s ability to focus on relevant parts of the input data, improving both performance and interpretability. Attention mechanisms allow models to dynamically prioritize different parts of the input data when generating each element of the output. This approach helps the model focus on the most relevant contextual information in the input data and extract meaningful features. Therefore, the FA-MobileUNet model adopted the Convolutional Block Attention Module (CBAM) [26], which enhanced the representation of the feature maps output by the encoder at different scales through a channel attention module (CAM) and a spatial attention module (SAM).

In addition, Atrous Spatial Pyramid Pooling (ASPP) is a module used in deep learning, particularly in semantic segmentation tasks [27]. Its main purpose is to capture multi-scale contextual information and improve the ability of CNNs to segment images at different scales. Atrous convolutions enable ASPP to increase the receptive field without reducing the spatial resolution of feature maps. Therefore, the bottleneck connecting the encoder and decoder in the FA-MobileUNet model incorporated the ASPP module to enhance multi-scale feature extraction.
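The way atrous convolution enlarges the receptive field without adding weights can be illustrated with a short calculation. A kernel of size k with dilation rate r spans k + (k − 1)(r − 1) pixels along each axis. The rates 6, 12 and 18 used below are common ASPP defaults and are an assumption here; the rates used in FA-MobileUNet are not stated in this section.

```python
def effective_kernel_size(kernel_size: int, rate: int) -> int:
    """Spatial extent covered by one atrous convolution kernel along one axis."""
    return kernel_size + (kernel_size - 1) * (rate - 1)

# Assumed dilation rates; rate 1 is an ordinary convolution.
rates = (1, 6, 12, 18)
extents = {rate: effective_kernel_size(3, rate) for rate in rates}
weights_per_filter = 3 * 3  # unchanged by the dilation rate
```

Each branch still learns only nine weights per filter, yet under these assumed rates the branches cover neighborhoods from 3 to 37 pixels wide, which is how ASPP gathers context at several scales in parallel.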

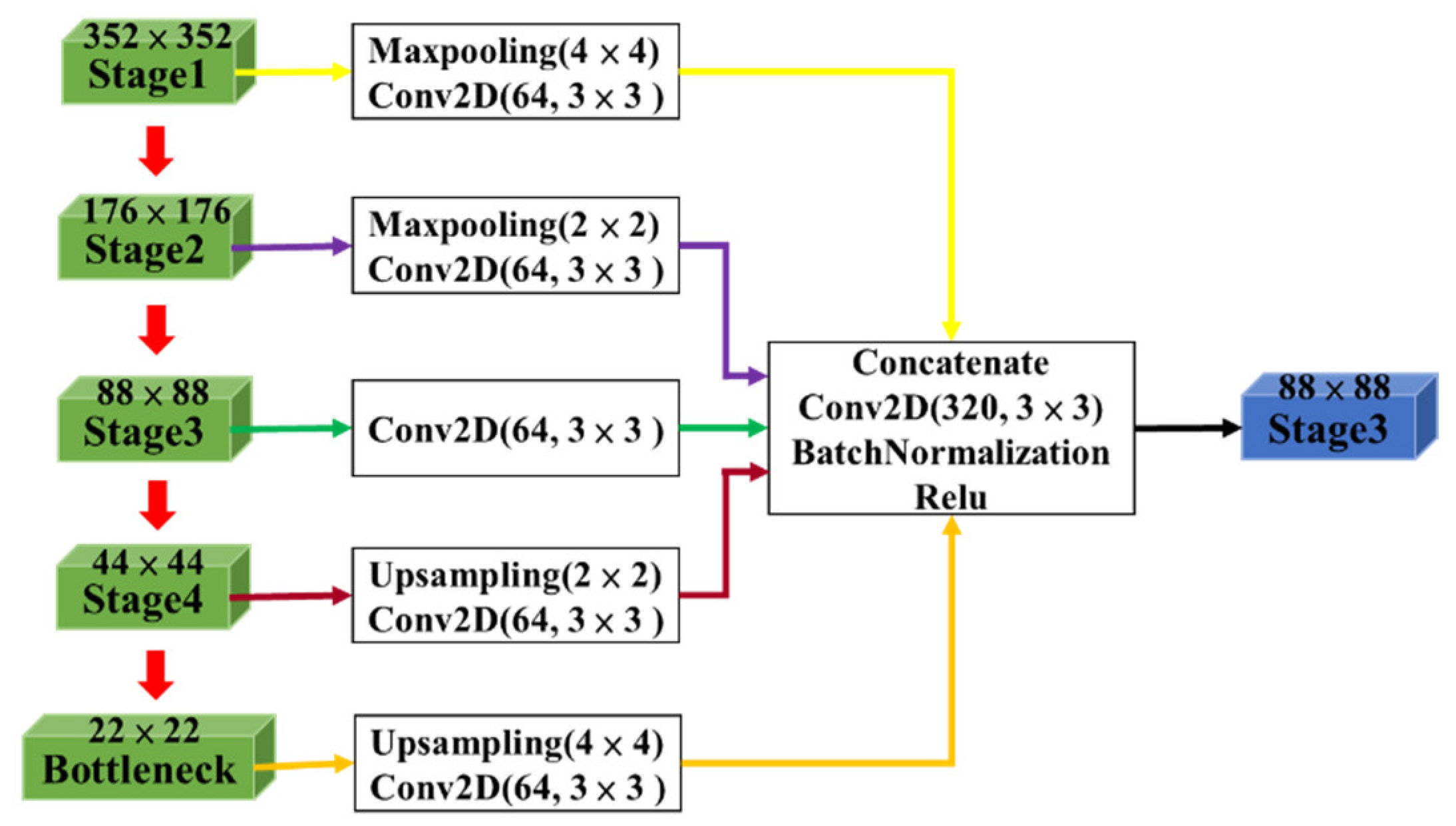

However, as features pass through multiple convolutional and downsampling layers in the U-Net model, meaningful features gradually fade and spatial resolution decreases. Therefore, to make effective use of both low-level spatial information and high-level semantic features, the Full-Scale Aggregated (FA) module concatenated the features at different scales from the encoder blocks and aggregated them with the feature maps of the decoder block, as shown in Figure 3. This allowed the model to learn targets at different scales effectively during training, improving oil spill detection performance.

2.2.2. Modified CBAM

The segmentation results of SAR maritime images often suffer from numerous imperfections such as fragmented regions and incomplete shapes. These deficiencies may arise from inherently non-stationary and non-uniform sea clutter. As shown in the experiments in Section 3, there are some defects in the segmentation results, including incomplete oil spill regions and small holes within the oil spill and look-alike areas.

Morphological image processing can focus on the shape or structure of features in an image through nonlinear operations. For example, the closing operation can provide continuous and smooth region boundaries, and the hole filling operation can be used to fill small holes inside an object while retaining the shape and size of the original object. Morphological image processing therefore offers a feasible solution to the imperfections of SAR image segmentation. Previous studies [28,29,30] replaced convolutional layers with morphological dilation and erosion operations to verify their effectiveness in feature extraction and noise removal. To improve the performance of the FA-MobileUNet model, this study drew on the concept of morphology to enhance the feature extraction of spatial information. Morphological operations slide structuring elements across the image, similar to convolution operations using kernel functions, highlighting spatial features in the output. The Erosion2D and Dilation2D operations on an input image $I$ are defined as follows:

$$(I \ominus W_e)(x, y) = \min_{i \in S_1,\, j \in S_2} \left( I(x+i, y+j) - W_e(i, j) \right)$$

$$(I \oplus W_d)(x, y) = \max_{i \in S_1,\, j \in S_2} \left( I(x+i, y+j) + W_d(i, j) \right)$$

where $W_e$ and $W_d$ are the structuring elements of the erosion and dilation operations of size $a \times b$, $S_1 = \{1, 2, \ldots, a\}$, and $S_2 = \{1, 2, \ldots, b\}$. In addition, the closing operation involves a dilation operation followed by an erosion operation.
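The hole-filling behavior of the closing operation can be checked on a toy mask. The sketch below uses the common grayscale definitions (dilation as a windowed maximum of image plus structuring element, erosion as a windowed minimum of image minus structuring element). It assumes an odd-sized structuring element centered on each pixel and a flat (all-zero) element in the demo; the function names are illustrative and are not the authors' layers.

```python
import numpy as np

def _windows(image: np.ndarray, a: int, b: int, pad_value: float) -> np.ndarray:
    """Return an (H, W, a, b) array holding the a x b neighborhood of every pixel."""
    pad_y, pad_x = a // 2, b // 2
    padded = np.pad(image, ((pad_y, pad_y), (pad_x, pad_x)), constant_values=pad_value)
    h, w = image.shape
    rows = [np.stack([padded[i:i + h, j:j + w] for j in range(b)], axis=-1) for i in range(a)]
    return np.stack(rows, axis=-2)

def dilation2d(image: np.ndarray, w_d: np.ndarray) -> np.ndarray:
    """Grayscale dilation: windowed maximum of image + structuring element."""
    a, b = w_d.shape
    return (_windows(image, a, b, -np.inf) + w_d).max(axis=(-2, -1))

def erosion2d(image: np.ndarray, w_e: np.ndarray) -> np.ndarray:
    """Grayscale erosion: windowed minimum of image - structuring element."""
    a, b = w_e.shape
    return (_windows(image, a, b, np.inf) - w_e).min(axis=(-2, -1))

def closing2d(image: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Closing: dilation followed by erosion with the same structuring element."""
    return erosion2d(dilation2d(image, w), w)

# A 5 x 5 "oil spill" block with a one-pixel hole in its center.
mask = np.zeros((9, 9))
mask[2:7, 2:7] = 1.0
mask[4, 4] = 0.0
flat = np.zeros((3, 3))  # flat 3 x 3 structuring element (assumed)
closed = closing2d(mask, flat)
```

In this example the dilation step fills the hole and grows the block by one pixel, and the erosion step shrinks it back, so the closed mask is the original 5 × 5 block without the hole.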

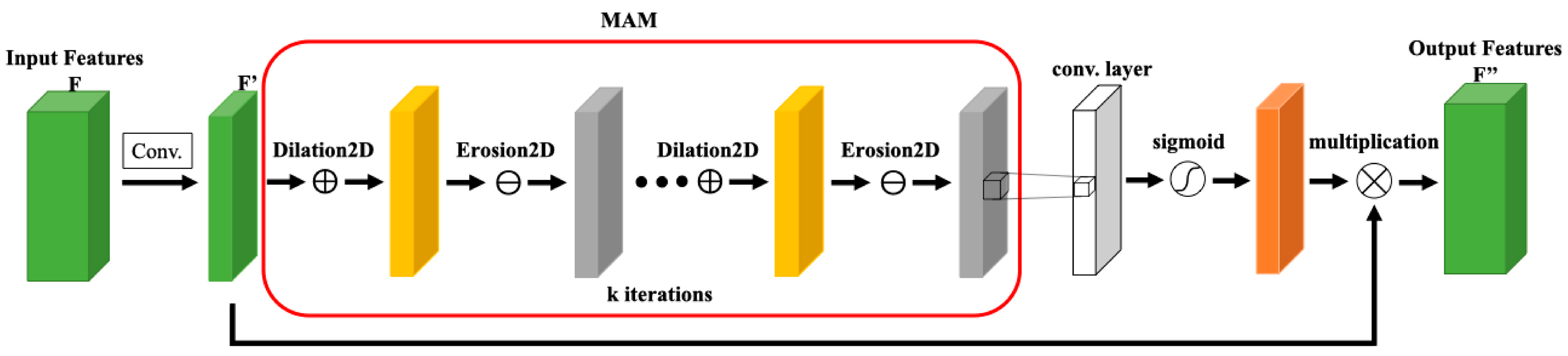

This study improved the FA-MobileUNet model by replacing the spatial attention module (SAM) in the original CBAM with a morphological attention module (MAM) to further enhance attention to spatial features. The closing operation was applied in MAM, replacing the convolutional layers in SAM; the structure of MAM is shown in Figure 4. The initial kernel size of the morphological operations was set to 3 × 3, allowing for adjustment during training. As shown in Figure 4, the input feature map $F$ was first processed by a 1 × 1 convolution layer $f^{1 \times 1}$, and then the morphological closing operation was performed through a series of Dilation2D and Erosion2D layers. The output of MAM can be expressed as

$$M_m(F) = \sigma\left( f^{1 \times 1}\left( \phi\left( f^{1 \times 1}(F) \right) \right) \right)$$

where $\sigma$ is the Sigmoid function, $f^{1 \times 1}$ denotes a 1 × 1 convolution, and $\phi$ is the morphological closing operation. That is, the morphological feature maps were finally processed through a 1 × 1 convolution employing the Sigmoid activation function. However, the closing operation may lose some details while maintaining spatial shape and structural characteristics. To attend to both fine detail and overall shape, and based on the experimental results in Section 3, the modified CBAM was applied to the low-level spatial features extracted from Stages 1 and 2 of the encoder, while the high-level semantic features extracted from Stages 3 and 4 were processed through the original CBAM.
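The data flow described for MAM (1 × 1 convolution, morphological closing, then a 1 × 1 convolution with Sigmoid) can be sketched in NumPy as follows. This is a simplified illustration under several assumptions: the first convolution reduces the features to a single channel, the structuring element is fixed rather than learned, and `morphological_attention` with its parameters `w_in`, `w_out` and `b_out` are hypothetical names, not the authors' implementation.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _morph(x, w, reduce_fn, sign, pad_value):
    """Windowed max or min of x + sign * w over a centered a x b neighborhood."""
    a, b = w.shape
    padded = np.pad(x, ((a // 2, a // 2), (b // 2, b // 2)), constant_values=pad_value)
    return reduce_fn(sliding_window_view(padded, (a, b)) + sign * w, axis=(-2, -1))

def closing(x, w):
    """Dilation followed by erosion."""
    dilated = _morph(x, w, np.max, 1.0, -np.inf)
    return _morph(dilated, w, np.min, -1.0, np.inf)

def morphological_attention(features, w_in, w_out, b_out, struct, iterations=1):
    """Return an (H, W) attention map for features of shape (H, W, C)."""
    x = features @ w_in                  # 1 x 1 convolution down to one channel (assumed)
    for _ in range(iterations):
        x = closing(x, struct)           # morphological closing on the spatial map
    return 1.0 / (1.0 + np.exp(-(w_out * x + b_out)))  # 1 x 1 convolution + sigmoid

# Toy input: uniform features with a one-pixel gap at the center.
features = np.ones((5, 5, 2))
features[2, 2, :] = 0.0
attention = morphological_attention(
    features, w_in=np.array([0.5, 0.5]), w_out=1.0, b_out=0.0, struct=np.zeros((3, 3))
)
refined = features * attention[..., None]  # CBAM-style spatial reweighting
```

In this toy case the closing step removes the gap before the Sigmoid, so the attention map is spatially uniform; a plain convolutional spatial attention would generally keep a dip at that pixel.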

2.3. Loss Function

The loss function is one of the crucial elements of deep learning and is used for model training and evaluation. By measuring the difference between predicted and actual values through a loss function, the model parameters can be adjusted and optimized. In semantic segmentation, cross-entropy is widely applied to this per-pixel classification problem. To evaluate the difference between the probability distributions over multiple categories, the categorical cross-entropy (CCE) function was used in this study and defined as

$$L_{CCE} = -\frac{1}{N} \sum_{c=1}^{C} \sum_{i=1}^{N_c} y_i^c \log\left( p_i^c \right)$$

where $N$ is the number of pixels, $C$ is the number of categories, $N_c$ is the number of pixels in category $c$, $y_i^c$ is the true label and $p_i^c$ is the predicted probability of the true class. In addition, the true labels in the CCE function are one-hot encoded vectors, where the element corresponding to the true class is 1 and all other elements are 0.
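As a small worked example of categorical cross-entropy with one-hot labels, the sketch below averages the negative log-probability of the true class over pixels. It assumes the standard per-pixel form of the loss; the clipping constant `eps` is an implementation detail added here to avoid log(0) and is not taken from the paper.

```python
import numpy as np

def categorical_cross_entropy(y_true: np.ndarray, y_pred: np.ndarray, eps: float = 1e-7) -> float:
    """Mean CCE for one-hot labels y_true (N, C) and predicted probabilities y_pred (N, C)."""
    y_pred = np.clip(y_pred, eps, 1.0)  # guard against log(0)
    return float(-np.mean(np.sum(y_true * np.log(y_pred), axis=1)))

# Two pixels, three categories (toy numbers).
y_true = np.array([[1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0]])
y_pred = np.array([[0.80, 0.10, 0.10],
                   [0.25, 0.50, 0.25]])
loss = categorical_cross_entropy(y_true, y_pred)  # -(ln 0.8 + ln 0.5) / 2
```

Because the labels are one-hot, only the probability assigned to the true class of each pixel contributes to the loss.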

One of the most common problems encountered in deep learning tasks is dataset imbalance. In practical applications, datasets are rarely balanced, meaning the amount of available data is seldom equal for all categories, as with medical images and SAR oil spill images. Because the categories are represented in different proportions during training, the model is likely to be biased toward the majority class. Given a large amount of data for the majority class, the classification model learns to identify majority-class samples correctly. However, with insufficient data for the minority class, the model has poor predictive ability for such samples. As a result, the model tends to classify a large number of minority-class samples into the majority class. In other words, the model becomes overly confident in its predictions for the majority class. Label smoothing [31,32] reduces the model’s overconfidence in the majority class and also helps improve the model’s generalization ability. In this method, the labels of the training samples were modified to

$$y^{LS} = y \left( 1 - \alpha \right) + \frac{\alpha}{K}$$

where $\alpha$ is the smoothing factor, $y^{LS}$ is the label after label smoothing, $y$ is the original label and $K$ is the number of categories. This study applied label smoothing to soften the true labels in the training data, discouraging overfitting by penalizing overconfident outputs. This approach did not compromise the model’s performance while providing higher generalization ability. In the study, $\alpha$ was set to 0.1, because increasing $\alpha$ hindered the model’s ability to converge during training, thereby reducing the detection performance.
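A short numeric example shows what label smoothing does to a one-hot label. The sketch assumes the commonly used form in which each label y becomes y(1 − α) + α/K, with the smoothing factor 0.1 and the five categories mentioned in the text; `smooth_labels` is an illustrative helper.

```python
def smooth_labels(one_hot, alpha=0.1):
    """Apply label smoothing y * (1 - alpha) + alpha / K to a one-hot label vector."""
    k = len(one_hot)  # number of categories K
    return [y * (1.0 - alpha) + alpha / k for y in one_hot]

# Five categories, true class at index 1.
smoothed = smooth_labels([0, 1, 0, 0, 0], alpha=0.1)
# true class: 0.9 + 0.02 = 0.92; every other class: 0.02; the vector still sums to 1
```

In Keras, the same effect is available through the `label_smoothing` argument of `tf.keras.losses.CategoricalCrossentropy`, although the text does not say which mechanism the authors used.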

2.4. Evaluation Metric

In the study, the Intersection over Union (IoU) was used to evaluate the performance of the detection results, which is defined as

$$IoU = \frac{TP}{TP + FP + FN}$$

where TP and FP are the numbers of correctly and falsely detected pixels in the detection results, respectively, and FN is the number of ground truth pixels that were not detected. IoU evaluates segmentation performance by comparing the ground truth data with the predicted results. In the experiments, IoU was measured for each category in the dataset, and the mean IoU (mIoU) was computed as the average of the IoU values across the five categories.
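The per-category IoU and the mIoU can be computed from integer label maps as in the following sketch. The toy arrays use three categories for brevity, and `per_class_iou` is an illustrative helper rather than the evaluation code used in the study.

```python
import numpy as np

def per_class_iou(y_true: np.ndarray, y_pred: np.ndarray, num_classes: int = 5) -> list:
    """IoU = TP / (TP + FP + FN) for each category; NaN if a category never appears."""
    ious = []
    for c in range(num_classes):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        denom = tp + fp + fn
        ious.append(float(tp / denom) if denom > 0 else float("nan"))
    return ious

# Toy 2 x 3 label maps with three categories.
y_true = np.array([[0, 0, 1],
                   [1, 1, 2]])
y_pred = np.array([[0, 1, 1],
                   [1, 1, 2]])
ious = per_class_iou(y_true, y_pred, num_classes=3)  # [0.5, 0.75, 1.0]
miou = float(np.nanmean(ious))                       # 0.75
```

Averaging per-category IoU gives small categories such as ships and oil spills the same weight as the sea surface, which is why mIoU is more informative than pixel accuracy on an imbalanced dataset.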

3. Results

3.1. Experimental Setting

The following experiments were conducted on a PC with a 12th Gen Intel Core i7-12700KF CPU and 16 GB of memory, equipped with a GeForce RTX 3080 GPU with 12 GB of memory, using CUDA v12.1 and cuDNN v8.8.0. The operating system was 64-bit Windows 10. The deep learning model was built with TensorFlow-gpu version 2.10.1 and Keras version 2.10.0. In this study, the oil spill detection model was modified from the U-Net model proposed by Chen et al. [18].

The oil spill detection model was trained on the extended MKLab dataset [18], which consisted of 1,239 images, with 1,129 and 110 images in the training and testing sets, respectively. The original image size was 1,250 × 650, which was resized to 352 × 352 as input for model training. During training, the number of epochs and the batch size were set to 1000 and 8, respectively. In addition, the learning rate was set to 5 × 10⁻⁵. Adam [33] and categorical cross-entropy were chosen as the optimizer and loss function, respectively. The training process of the improved FA-MobileUNet model is shown in Figure 5, reaching an accuracy of 0.9912 and a loss of 0.0008.

3.2. Performance Evaluation

The experiment first evaluated the performance of the modified CBAM in the improved FA-MobileUNet model for oil spill detection. The original CBAM modules of the FA-MobileUNet model were replaced by the modified CBAM from shallow to deep layers, and the resulting performance is shown in Table 1. The FA-MobileUNet model using the original CBAM achieved an mIoU of 83.74%. By replacing the CBAM modules in stages 1 and 2 with the modified CBAM, the improved FA-MobileUNet achieved better detection performance, with an mIoU of 83.83%. However, when the CBAM in stage 3 was also replaced with the modified CBAM, the mIoU decreased slightly to 83.67%. Therefore, the study enhanced the performance of the improved FA-MobileUNet model by applying the modified CBAM to stages 1 and 2 of the encoder.

Since morphological operations, like convolution operations, can be iterated multiple times, it is necessary to select an appropriate number of iterations for the closing operation during model training. Next, the impact of the number of closing iterations in MAM on network performance was examined, as shown in Table 2. The FA-MobileUNet model using the original CBAM achieved 83.74% mIoU, with the IoU of oil spills and look-alikes reaching 75.85% and 72.67%, respectively. Then, the closing operation was performed with 1 to 3 iterations in the modified CBAM of the improved FA-MobileUNet model. Too many iterations of the closing operation may result in overestimation, negatively affecting the training of the model. It can be observed that performing 2 iterations of the closing operation achieved the best detection performance, with a slight improvement in the IoU for oil spills and look-alikes, reaching 83.94% mIoU.

Finally, label smoothing was applied to the improved FA-MobileUNet model and its performance was verified. The detection performance of the improved FA-MobileUNet model with label smoothing is shown in Table 3. Although the improved FA-MobileUNet model achieved good detection results on the different categories by enhancing multi-scale feature extraction, the use of label smoothing can still slightly improve the detection performance, especially for minority categories. With label smoothing in the training process, the IoU of oil spills and look-alikes increased by about 1.0% and 2.5%, respectively. Therefore, the experiments validated that label smoothing can improve the performance of the model and its generalization ability on imbalanced datasets.

3.3. Segmentation Network Comparison

The segmentation performance of the improved FA-MobileUNet model was compared with other semantic segmentation networks in this section. First, Krestenitis et al. [19] tested the MKLab dataset with different semantic segmentation networks, including U-Net, LinkNet, PSPNet, DeepLabv2 and DeepLabv3+. Among them, DeepLabv3+ used MobileNetv2 as the backbone network, while the other networks used ResNet-101. Fan et al. [34] improved the U-Net model by combining a threshold segmentation algorithm and a feature merge network to segment oil spills. Basit et al. [35] proposed a new gradient profile (GP) loss function to train the U-Net model and combined it with the Jaccard and Focal loss functions. Rousso et al. [36] first applied different image filters to the training images, and then used the U-Net and DeepLabv3+ models for ensemble training to detect oil spills. Chen et al. [18] proposed the FA-MobileUNet model by incorporating CBAM, ASPP and full-scale aggregated modules, and replacing the backbone architecture with MobileNetv3.

Table 4 summarizes the performance of the models in terms of IoU. In [19], the U-Net model with the ResNet-101 backbone and DeepLabv3+ with the MobileNetv2 backbone achieved the better detection results, with mIoU values of 67.40% and 67.41%, respectively. In the detection results of the U-Net and DeepLabv3+ models, the IoU of oil spills reached 57.07% and 56.34%, respectively. However, the DeepLabv2 model performed the worst in detecting oil spills, with an mIoU of only 56.37%. Therefore, models with an encoder-decoder architecture achieved better detection performance in this multi-class semantic segmentation task. Although Tozero FMNet incorporated different threshold segmentation methods into the U-Net model, its detection performance on the five categories in the MKLab dataset was worse than that of the original U-Net model. Next, CoAtNet-0 and EfficientNetv2 adopted a combination of GP, Jaccard and Focal loss functions during training, increasing the mIoU to 70%. However, the performance of these two models in detecting oil spills was not significantly improved, with IoU values of only 50.22% and 56.42%, respectively. Although the Ensemble model integrated the U-Net and DeepLabv3+ models for training, its detection performance for oil spills and look-alikes did not improve, with an mIoU of 71.12%. The FA-MobileUNet model enhanced multi-scale feature extraction, improving the IoU for oil spills, look-alikes and ships to over 70% and reaching 83.67% mIoU. Finally, the improved FA-MobileUNet model with the modified CBAM and label smoothing achieved the best detection performance, with an mIoU of 84.55%. The experiments validated the effectiveness of the modified CBAM and label smoothing in detecting oil spills and look-alikes.

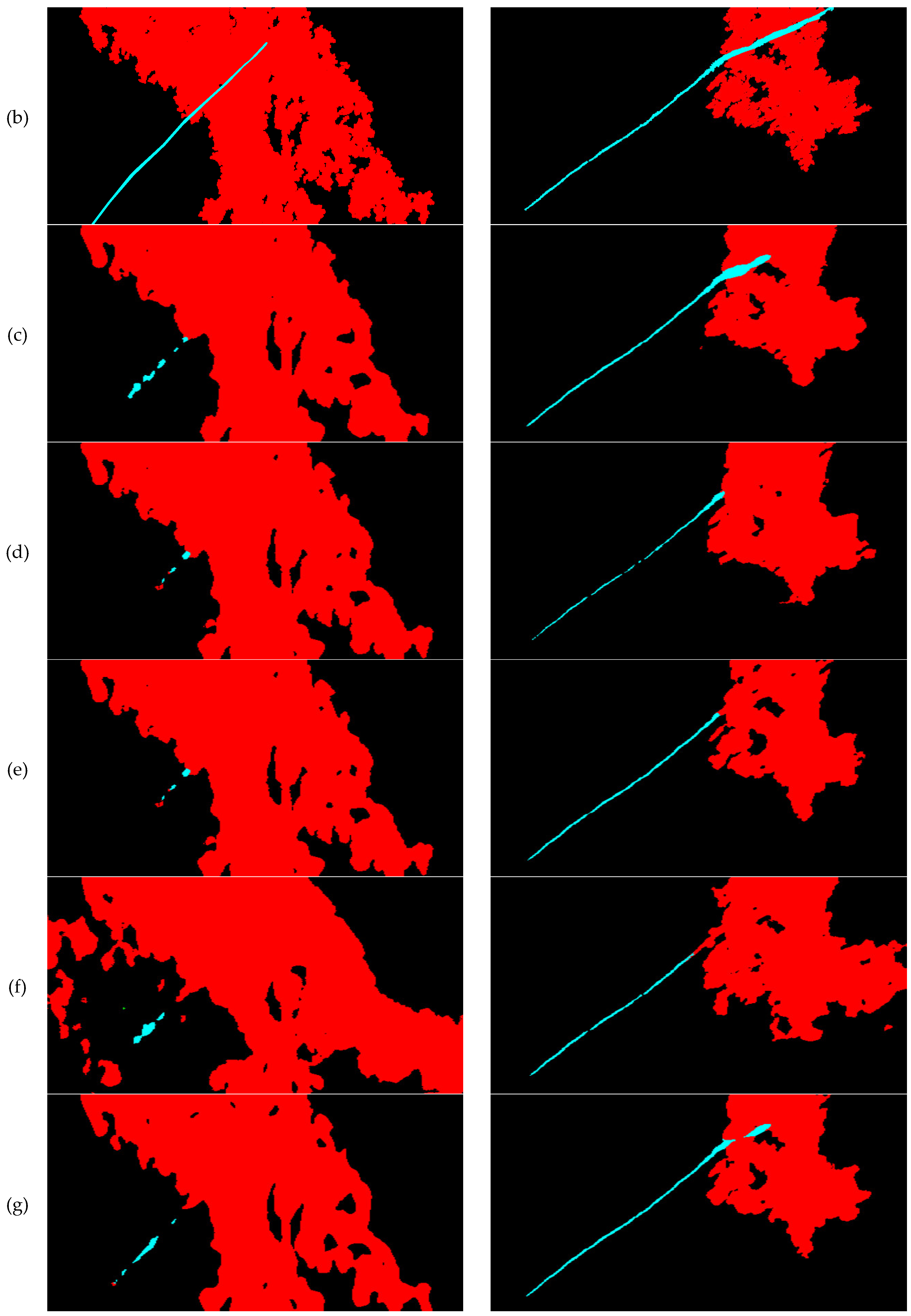

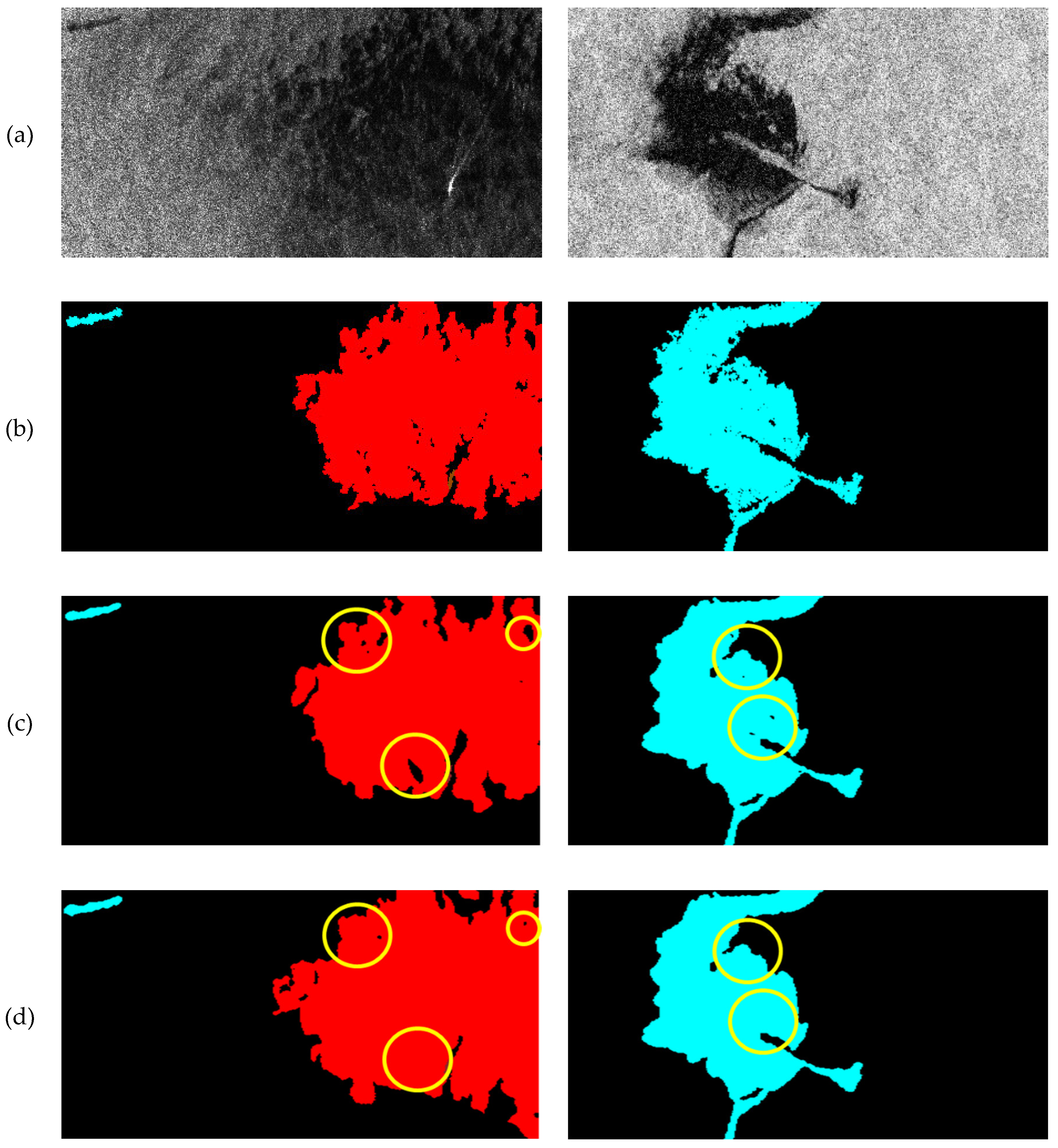

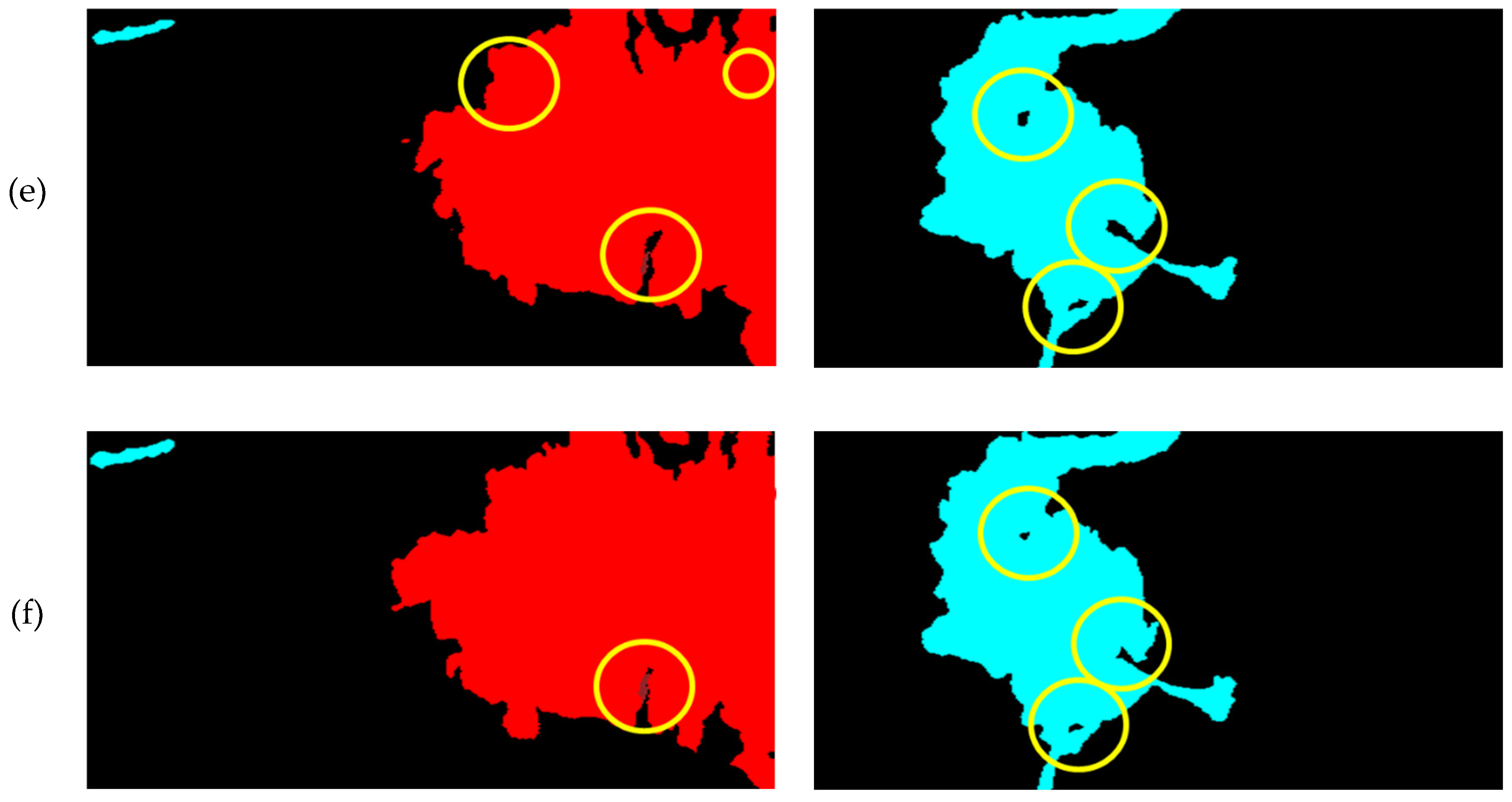

3.4. Oil Spill Detection Results Improvement

To further validate the performance of the improved FA-MobileUNet model and compare it with other semantic segmentation networks, the segmentation results of some test images are shown in Figure 6. The U-Net, LinkNet, PSPNet, DeepLabv2, DeepLabv3+, FA-MobileUNet and improved FA-MobileUNet models were used to detect the oil spills in the test images. The black, cyan, and red colors represent the sea surface, oil spills and look-alikes, respectively. As shown in Figure 6a, the dark areas of oil spills overlapped with look-alike areas in the SAR images, making identification difficult for these semantic segmentation models. In addition, the original ground truth data in Figure 6b were not post-processed, and it can be seen that the oil spill and look-alike areas are somewhat fragmented. In the detection results for the Test 1 image, the improved FA-MobileUNet model detected the oil spill area more accurately and completely than the other models, whose results were fragmented. Moreover, compared with FA-MobileUNet, the improved FA-MobileUNet model provided more complete detection results for the look-alike areas, with some smaller holes filled. In the detection results for the Test 2 image, although all of these models detected oil spills, only the U-Net, DeepLabv3+, FA-MobileUNet, and improved FA-MobileUNet models identified some of the overlapping areas. Among them, the improved FA-MobileUNet model detected the most complete oil spill area. Although the modified CBAM yielded only a limited improvement in mIoU in the experiments of Section 3.2, FA-MobileUNet combined with the modified CBAM achieved more complete oil spill detection, with fragmented hole areas being filled. The experimental results validated that the improved FA-MobileUNet model can effectively detect oil pollution even in complex scenes. Therefore, this model can be applied to practical oil pollution incidents to verify its effectiveness in oil pollution identification.

3.5. Oil Pollution Incidents

Marine oil spills come from various sources, including offshore platforms, ship accidents, illegal discharges from ships, submarine oil pipeline leaks and port operations. As the economy develops, industrial facilities emit most of the oil pollution. However, oil pollution from ship accidents or illegal discharges occurs more frequently in the marine environment [37]. Oil pollution not only harms the environment and wildlife, but also requires substantial manpower and resources for cleanup. With the proposed oil spill detection model and SAR images, extensive observation and monitoring of the marine environment can be achieved. However, to effectively track marine oil spills caused by ships, it is necessary not only to improve oil spill detection performance but also to obtain detailed navigation information about the ships.

Maritime SAR images have been widely used in ship and oil spill detection. However, effective monitoring of the marine environment requires detailed information for tracking ship targets, which cannot be provided by SAR images alone. Therefore, AIS data are needed to provide the corresponding ship information and help identify ships in the sea area. Each vessel equipped with an AIS transponder autonomously broadcasts its static details, such as name and dimensions, as well as dynamic data including its GPS-derived location and current navigation status. Therefore, the study combined SAR and AIS data for marine environment monitoring. First, the marine targets in SAR images, including oil spills and ships, were detected. Then, if oil spills were detected, the corresponding AIS data were filtered based on the acquisition time and location of the SAR image. Finally, the ship’s navigation trajectory was inferred from the AIS data to determine whether it was a suspected oil-discharging ship.
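The AIS filtering step (selecting reports by the acquisition time and footprint of the SAR image, then grouping them by vessel) can be sketched as follows. The record layout, the bounding box and the MMSI values are hypothetical, and the 7-hour look-back window is taken from the Taichung Port experiment described later in this section rather than being a general rule.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class AisRecord:
    mmsi: int        # Maritime Mobile Service Identity of the vessel
    time: datetime   # report time (same time zone as the SAR acquisition time)
    lat: float
    lon: float

def filter_ais(records, image_time, bbox, hours_before=7.0):
    """Group AIS reports inside bbox and within the look-back window by MMSI.

    bbox is (lat_min, lat_max, lon_min, lon_max); each track is sorted by time.
    """
    start = image_time - timedelta(hours=hours_before)
    lat_min, lat_max, lon_min, lon_max = bbox
    tracks = {}
    for rec in sorted(records, key=lambda r: r.time):
        in_time = start <= rec.time <= image_time
        in_area = lat_min <= rec.lat <= lat_max and lon_min <= rec.lon <= lon_max
        if in_time and in_area:
            tracks.setdefault(rec.mmsi, []).append(rec)
    return tracks

# Hypothetical reports around an image acquired at 18:01 on 9 October 2021.
image_time = datetime(2021, 10, 9, 18, 1)
records = [
    AisRecord(111111111, datetime(2021, 10, 9, 17, 45), 24.25, 120.45),
    AisRecord(111111111, datetime(2021, 10, 9, 12, 30), 24.30, 120.40),
    AisRecord(222222222, datetime(2021, 10, 9, 9, 0), 24.30, 120.40),    # too early
    AisRecord(333333333, datetime(2021, 10, 9, 16, 0), 25.50, 121.00),   # outside the area
]
tracks = filter_ais(records, image_time, bbox=(24.0, 24.5, 120.2, 120.6))
```

The first and last entries of each returned track correspond to the first and last reported positions of that vessel in the window, which is the information needed to draw a coarse trajectory over the detected oil spill.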

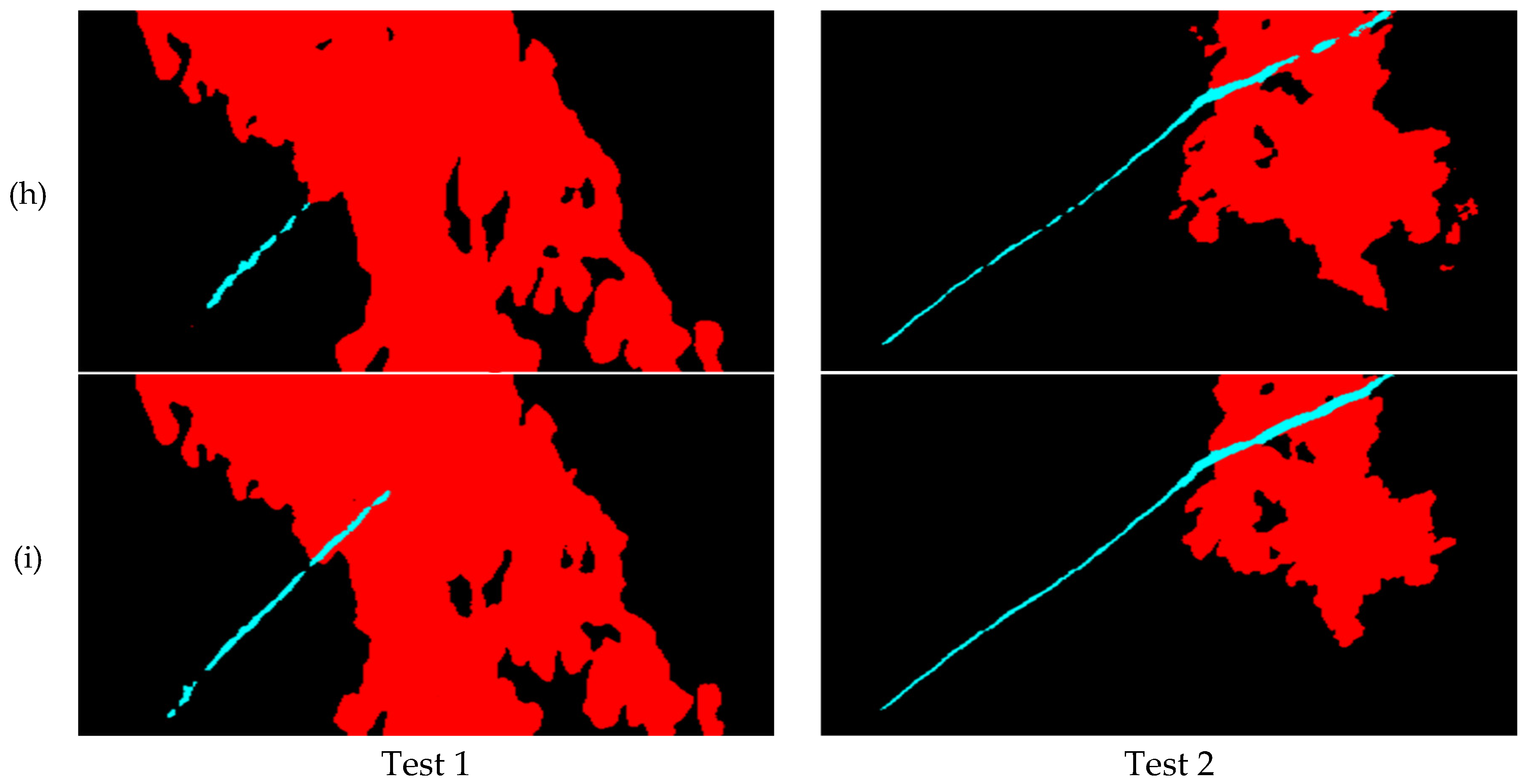

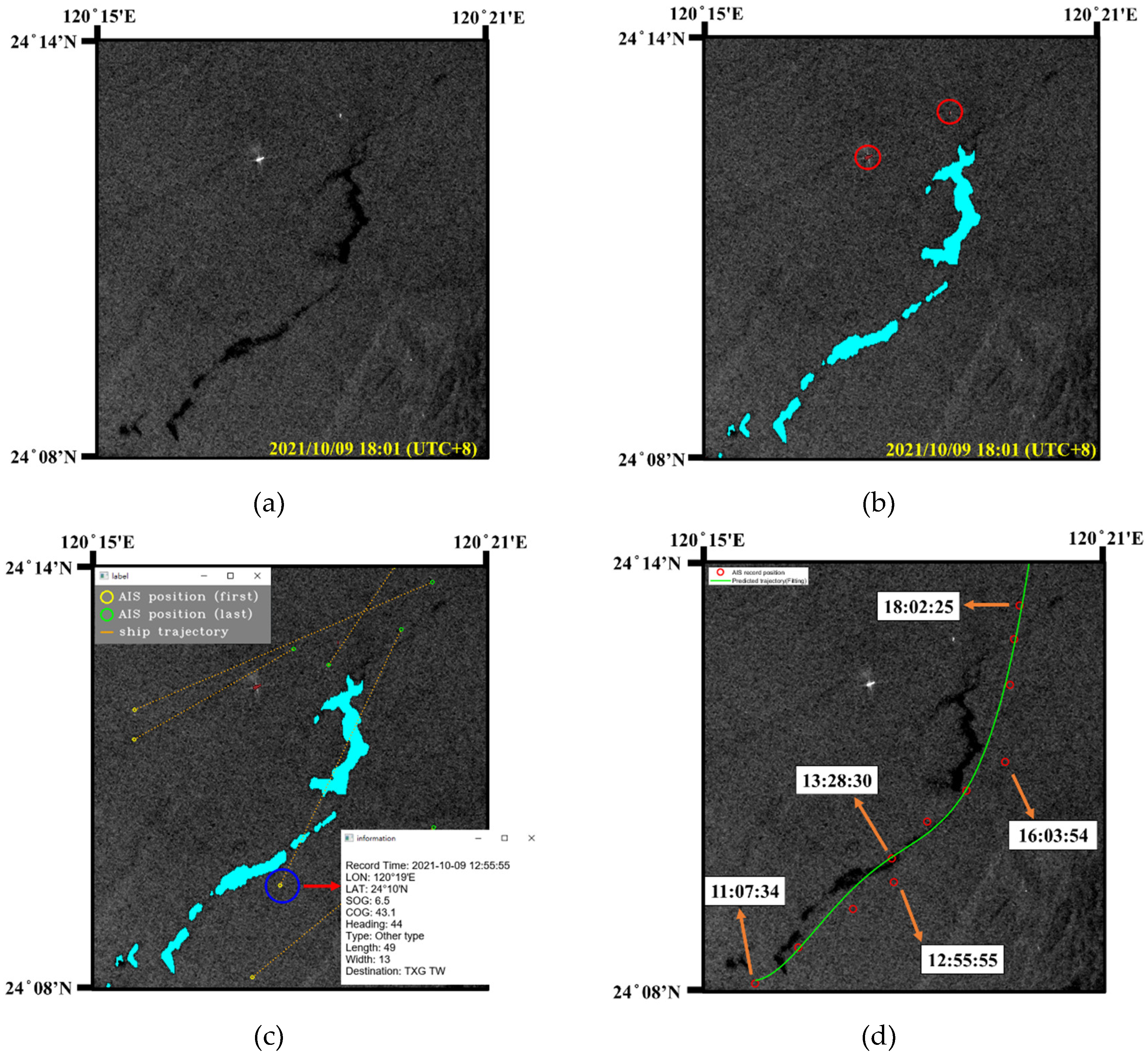

The experiments in this section were conducted to verify the effectiveness of the proposed oil spill detection model on real oil pollution incidents. This study collected marine oil pollution incidents in Taiwan. As shown in Figure 7, three oil pollution incidents occurred in Regions A, B and C, located near Taichung Port, Kaohsiung Port and Xiaoliuqiu, respectively. Kaohsiung Port and Taichung Port are Taiwan’s international ports and its top two ports in terms of container throughput. Therefore, most oil pollution incidents in these two ports were caused by ships. Moreover, there is an oil refinery along the coast of Kaohsiung. If an underwater oil pipeline ruptures, the oil spill can spread to the nearby coastlines of Xiaoliuqiu and Kenting. Although SAR images can be used to observe oil pollution incidents, they cannot provide detailed information about the ships in the images. Therefore, the experiments first used the proposed model to detect targets in SAR images. Then, the corresponding AIS data were collected, based on the time and geo-location of the SAR images, to track the ships in the images. Combining the oil spill detection results in SAR images with the ship information reported by AIS can achieve effective monitoring of the marine environment. The following sections describe the oil pollution incidents and the oil spill detection results.

3.5.1. Tracking Oil-discharge Ships

Taichung Port is Taiwan’s second largest international port in terms of container throughput. Therefore, container ships and oil tankers often navigate and anchor in this area. While collecting oil spill images, an oil spill area suspected of having been discharged by a ship was found in a SAR image. This suspected oil pollution incident occurred in Region A of Figure 7. The Sentinel-1 IW mode SAR image containing the oil spill was acquired at 18:01 on October 9, 2021 (UTC+8), with an image size of 1,171 × 1,252. As shown in Figure 8a, a dark area on the sea surface with ships nearby can be observed in the SAR image. The proposed model was used to detect the targets in this SAR image and successfully identified the oil spill areas and the ship (red circled area), as shown in Figure 8b. Since the ship was far away from the oil spill area, it could not be confirmed whether this ship had discharged the oil.

To identify and track the ship that caused the oil spill, the study collected the AIS data corresponding to this area based on the timestamp of the Sentinel-1 image. AIS records from the 7 hours before the image capture time were collected to obtain the ship trajectories. The superposition of the detected oil spills and the AIS data is shown in Figure 8c. This study first extracted the positions of each ship from the AIS data using its MMSI (Maritime Mobile Service Identity), marking the first and last reported AIS positions during the period with yellow and green circles, respectively. These two points were connected by an orange dotted line to represent the ship’s trajectory. In Figure 8c, the navigation trajectory of the ship marked by a blue circle was closest to the oil spill area. Therefore, this study marked the locations reported by the suspicious ship during this period, as shown by the red circles in Figure 8d. Based on the received AIS data, including time, position, speed, and heading angle, the ship trajectory was calculated and fitted with a quartic curve, as shown by the green line in Figure 8d. Finally, the ship’s trajectory was found to closely match the extension direction of the oil spill, suggesting that this ship was the likely source of the oil discharge.
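The quartic fit of the reported positions can be illustrated with an ordinary least-squares polynomial fit. The sketch below is an assumption about how such a fit might be done, not the procedure used in this study: it fits one coordinate against the other, ignores speed and heading, and uses hypothetical names (`fit_polynomial`, `evaluate`) and made-up position offsets in kilometers.

```python
def fit_polynomial(xs, ys, degree=4):
    """Least-squares polynomial fit; returns coefficients in ascending powers of x."""
    n = degree + 1
    # Normal equations (A^T A) c = A^T y for the Vandermonde matrix A.
    ata = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    aug = [row + [rhs] for row, rhs in zip(ata, aty)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("need at least degree + 1 distinct x values")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    # Back substitution.
    coeffs = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(aug[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (aug[i][n] - tail) / aug[i][i]
    return coeffs


def evaluate(coeffs, x):
    """Evaluate the fitted polynomial at x using Horner's rule."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * x + c
    return result


# Hypothetical AIS positions of one ship, as east/north offsets in km.
east = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
north = [0.0, 0.4, 1.1, 1.5, 1.4, 1.0, 0.9, 1.3]
coeffs = fit_polynomial(east, north, degree=4)
print([round(evaluate(coeffs, x), 2) for x in east])
```

For tracks that double back on themselves, fitting each coordinate against time separately would be a more robust parametrization than fitting north against east.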

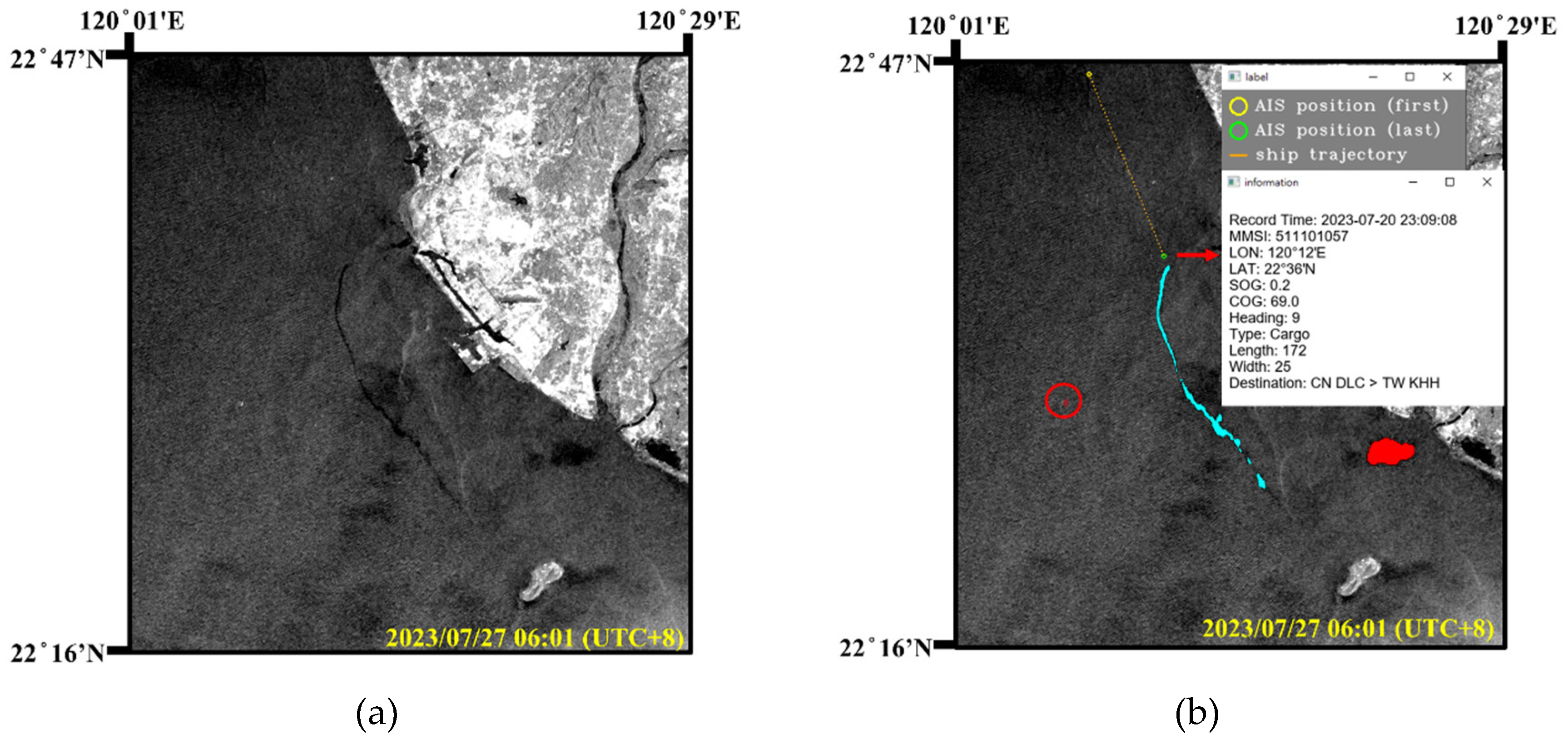

3.5.2. Oil Pollution Caused by Shipwreck

Next, an oil pollution incident caused by a shipwreck occurred in Region B of Figure 7. On July 21, 2023, the Palau-flagged container ship ANGEL sank after water ingress into the cabin caused the hull to tilt. According to the incident report, the ship carried a total of 491.848 metric tons of oil. The shipwreck therefore resulted in an oil pollution incident in the surrounding marine area, shown as the black area in Figure 9a. The Sentinel-1 image was captured at 06:01 on July 27, 2023 (UTC+8), with an image size of 1,320 × 1,409 pixels.

On June 24, 2023, the container ship ANGEL departed from Dalian Port in China after loading containers. However, before departure, the ship had already experienced water ingress due to a hull breach, and the ballast water system was temporarily used to pump out the water. During its voyage, the ship sent a message to the South Taiwan Maritime Affairs Center of the Maritime Port Bureau (MPB), requesting permission to enter Kaohsiung Port for inspection and repairs. On July 2, the ship arrived and anchored offshore of Kaohsiung Port, as indicated by the green circle from AIS data in Figure 9b. However, the MPB rejected the request for repairs, so ANGEL remained anchored in place. On July 20, a generator on ANGEL malfunctioned, causing a loss of power. Consequently, the ballast water system failed to operate and the accumulated water could not be pumped out, causing the ship’s hull to gradually tilt until it completely sank on the morning of July 21.

The acquired Sentinel-1 image was in EW mode with a resolution of 40 m × 40 m. An area of oil spills, look-alikes, and a ship (marked by a red circle) were detected in the SAR image by the proposed model. The detected oil spill covered a total of 3,621 pixels, approximately 5.79 square kilometers. On-site maritime monitoring was not conducted until after Typhoon DOKSURI had passed on July 29, and it revealed an oil spill area of at least about 1.95 square kilometers. Therefore, the use of SAR images and the oil spill detection model can effectively monitor the marine environment without being affected by weather conditions.
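The conversion from detected pixels to area is simple arithmetic, sketched below under the assumption that each pixel covers a square ground cell whose side equals the stated resolution. The helper name `pixels_to_km2` is hypothetical.

```python
def pixels_to_km2(pixel_count, pixel_size_m):
    """Area in km^2 covered by `pixel_count` square pixels of side `pixel_size_m` meters."""
    return pixel_count * pixel_size_m ** 2 / 1_000_000


# Shipwreck incident: 3,621 pixels at 40 m x 40 m (EW mode).
shipwreck_km2 = pixels_to_km2(3_621, 40)
print(f"shipwreck spill: {shipwreck_km2:.2f} km^2")

# Pixel count reported for the pipeline incident in Section 3.5.3, at 10 m x 10 m (IW mode).
pipeline_km2 = pixels_to_km2(2_889_041, 10)
print(f"pipeline spill: {pipeline_km2:.1f} km^2")
```

Both values agree with the areas quoted in the text (about 5.79 and 288.9 square kilometers).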

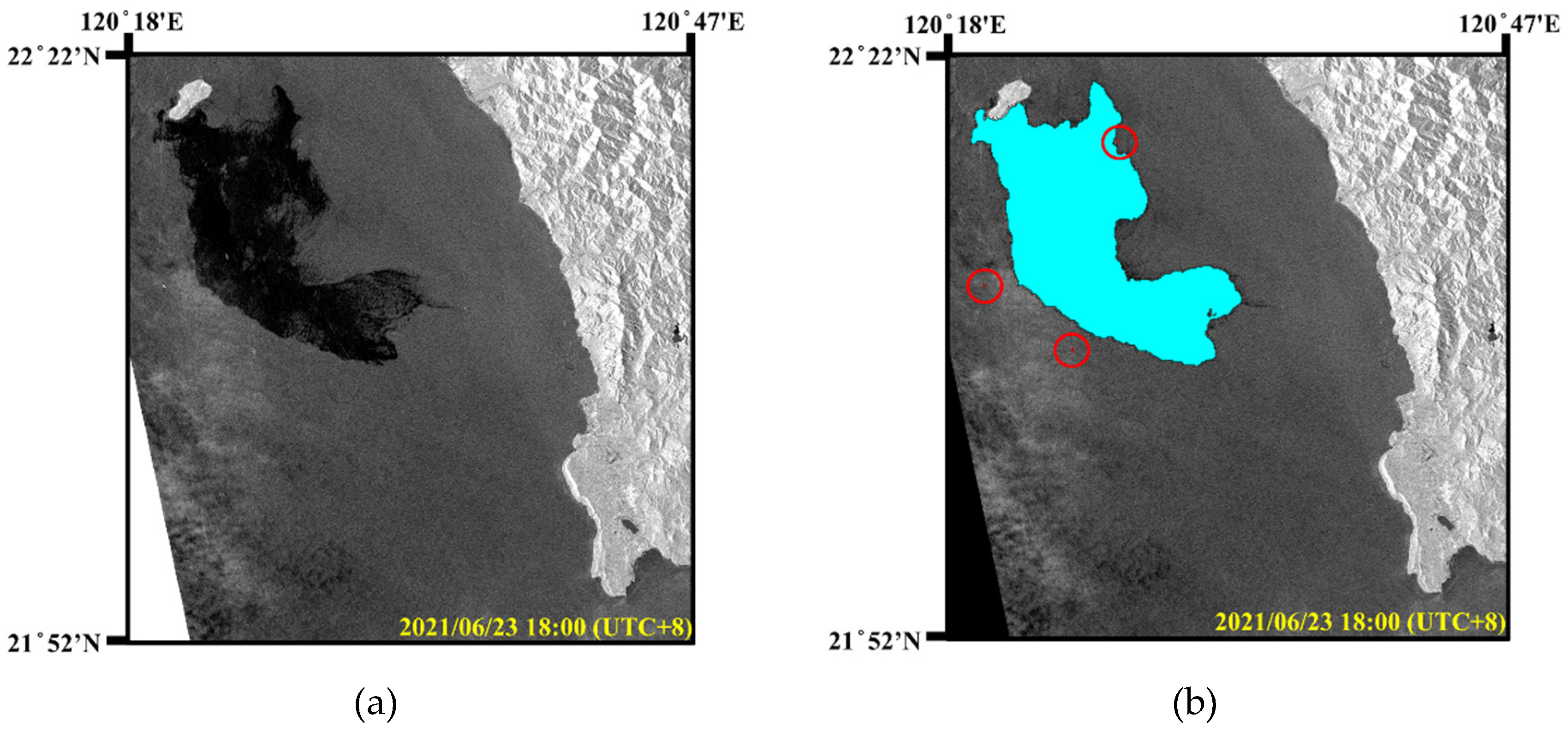

3.5.3. Undersea Oil Pipeline Rupture Incident

The Dalin oil refinery is located along the coast of Xiaogang, Kaohsiung. In the early morning of June 22, 2021, an offshore oil pipeline ruptured. Due to rain and poor visibility, the oil spills on the sea surface could not be identified visually. As the ocean current in this area flows to the southeast, the oil drifted towards Xiaoliuqiu. As shown in Figure 10a, the Sentinel-1 IW mode image acquired on June 23, 2021 clearly showed the oil spills in the waters near Xiaoliuqiu island, located in Region C in Figure 7. The image had a resolution of 10 m × 10 m and a size of 5,369 × 5,555 pixels. According to records of the incident, the oil spills covered an area of approximately 290 square kilometers. As shown in Figure 10b, the detection results in the SAR image included ships (in red circles) and oil spills. The detected oil spill area was 2,889,041 pixels, approximately 288.9 square kilometers.

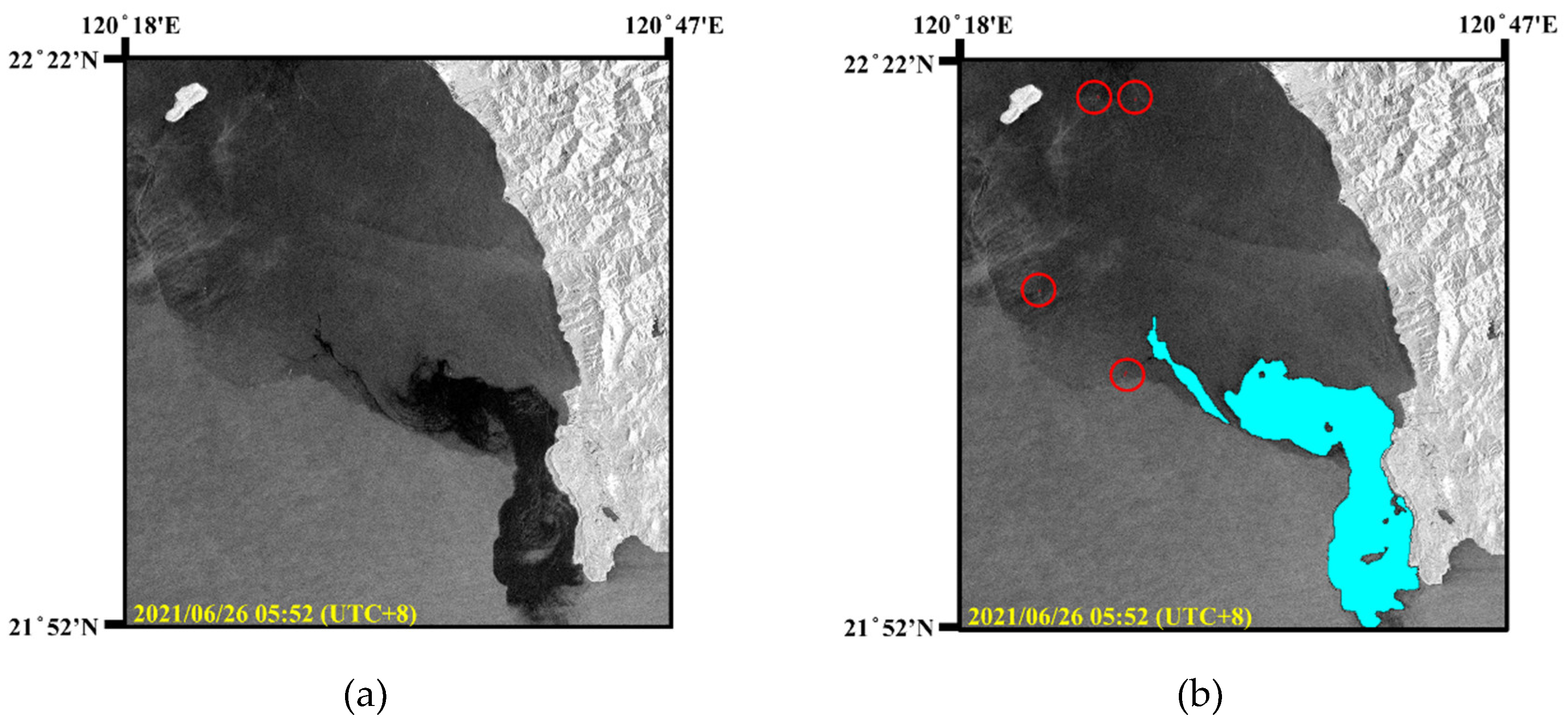

Subsequently, the oil spills continued to drift southeastward and spread to the coast of Kenting National Park, severely affecting the local ecosystem and fishery resources. As shown in Figure 11a, the Sentinel-1 IW mode image acquired on June 26, 2021 revealed the oil spill area. According to the oil spill detection results in Figure 11b, this area contained 1,829,760 pixels, approximately 182.9 square kilometers. This pollution required extensive manpower for cleanup and caused serious environmental damage to coastal areas. In summary, the proposed oil spill detection model based on SAR images can support all-day, all-weather marine environment monitoring and provide timely oil spill information.

4. Discussion

Although the FA-MobileUNet model [18], which incorporates the spatial pyramid model and an attention mechanism, can effectively improve the detection accuracy for oil spills and look-alikes, some fragments and small holes were still present in the detection results. To describe the spread range and measure the area of oil spills when an oil pollution incident occurs, a relatively complete oil pollution area must be detected. However, post-processing of detection results might cause smaller ocean targets, such as ships, to disappear. To address this issue, the improved FA-MobileUNet model incorporated morphological concepts into CBAM, achieving more complete detection areas. Experiments were therefore conducted on the FA-MobileUNet model and on the improved FA-MobileUNet model combined with the modified CBAM for explicit comparison. The experiments also compared the impact of different numbers of closing-operation iterations in the modified CBAM on the detection results.

The segmentation results of FA-MobileUNet and of the improved FA-MobileUNet model combined with the modified CBAM are shown in Figure 12. The labeled data was fragmented, as shown in Figure 12b, because it had not been post-processed. Some small holes remained in the detection results of the FA-MobileUNet model, which used the original CBAM, as shown in Figure 12c. This study drew on morphological concepts to modify the spatial attention module in the CBAM. Most of the small holes in the detection results were filled by the improved FA-MobileUNet model with the modified CBAM when the closing operation was performed once, as shown by the yellow circles in Figure 12d. However, some holes remained unfilled. With 2 iterations of the closing operation, the improved FA-MobileUNet model filled more holes and reduced the size of larger holes, as shown by the yellow circles in Figure 12e. With 3 iterations, however, the model overfilled the holes, resulting in greater discrepancies from the ground truth data, as shown in Figure 12f. Overfilling the holes therefore loses some object details and degrades detection performance. The experimental results showed that incorporating morphological concepts into the CBAM of the improved FA-MobileUNet model yields more complete detection results, but excessive closing operations may reduce the overall performance. Therefore, the improved FA-MobileUNet model, using the modified CBAM with 2 iterations of the closing operation, was able to appropriately fill the holes and achieve better detection results.
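The effect of the iteration count can be illustrated on a plain binary mask. The following is a minimal sketch and not the modified CBAM itself: it assumes a 3 × 3 structuring element, applies closing as n dilations followed by n erosions, and ignores out-of-image neighbors at the border. The function names and the toy mask are hypothetical.

```python
def _morph(mask, dilate):
    """One 3x3 dilation (max) or erosion (min) pass over a 2D list of 0/1 values."""
    h, w = len(mask), len(mask[0])
    pick = max if dilate else min
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            window = [mask[rr][cc]
                      for rr in range(max(0, r - 1), min(h, r + 2))
                      for cc in range(max(0, c - 1), min(w, c + 2))]
            row.append(pick(window))
        out.append(row)
    return out


def binary_closing(mask, iterations=1):
    """Morphological closing: `iterations` dilations followed by `iterations` erosions."""
    out = mask
    for _ in range(iterations):
        out = _morph(out, dilate=True)
    for _ in range(iterations):
        out = _morph(out, dilate=False)
    return out


def count(mask):
    return sum(map(sum, mask))


# Toy "oil spill": a 9x9 block of ones with a 3x3 hole in the middle.
mask = [[1 if 3 <= r <= 11 and 3 <= c <= 11 else 0 for c in range(15)] for r in range(15)]
for r in range(6, 9):
    for c in range(6, 9):
        mask[r][c] = 0

for n in (1, 2):
    print(n, "iteration(s):", count(binary_closing(mask, n)), "foreground pixels")
```

In this toy case one iteration leaves the 3 × 3 hole unchanged while two iterations fill it, which mirrors the trade-off discussed above: more iterations close larger gaps but also erase genuine detail of that size.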

5. Conclusions

This study proposed an improved FA-MobileUNet model for maritime environment surveillance. To obtain more complete oil spill detection regions, the original CBAM in the FA-MobileUNet model was modified based on morphological concepts. By using the morphological closing operation, the model can extract more complete target structures during the training process, enhancing the integrity of the oil spill detection results. In addition, considering the category imbalance in the MKLab oil spill dataset, label smoothing was adopted during the training process. The experiments first evaluated the oil spill detection performance of the improved FA-MobileUNet model and other semantic segmentation networks on the extended MKLab dataset. Experimental results showed that the improved FA-MobileUNet model achieved the best oil spill detection performance, reaching 84.55% mIoU. Compared with the FA-MobileUNet model, the improved FA-MobileUNet improved the IoU of oil spills and look-alikes by 1.65% and 3.12%, respectively. In addition, the effectiveness of the improved FA-MobileUNet model in oil spill detection was further verified through three oil pollution incidents. The oil spill detection results closely matched the oil pollution areas recorded in the incidents, verifying that the improved FA-MobileUNet model can effectively monitor marine oil pollution.

Author Contributions

Conceptualization, Lena Chang; methodology, Lena Chang; software, Yi-Ting Chen; validation, Lena Chang and Yi-Ting Chen; formal analysis, Lena Chang and Yi-Ting Chen; writing – original draft preparation, Yi-Ting Chen; writing – review and editing, Lena Chang and Ching-Min Cheng; visualization, Shang-Chih Ma; supervision, Lena Chang; project administration, Lena Chang and Yang-Lang Chang; funding acquisition, Lena Chang. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Science and Technology Council, Taiwan (NSTC 112-2221-E-019-039).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the authors. The data are not publicly available due to privacy restrictions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- International Maritime Organization. Available online: https://www.imo.org/en/OurWork/Environment/Pages/Default.aspx (accessed on 01 July 2024).

- Zhang, B.; Matchinski, E.J.; Chen, B.; Ye, X.; Jing, L.; Lee, K. Chapter 21 – Marine oil spills – oil pollution, sources and effects. Sheppard, C. (Ed.), World Seas: an environmental evaluation (second ed.), Academic Press, 2019, 391-406.

- Topouzelis, K. Oil spill detection by SAR images: Dark formation detection, feature extraction and classification algorithms. Sensors, 2008, 8, 6642–6659.

- Solberg, A.; Storvik, G.; Solberg, R.; Volden, E. Automatic detection of oil spills in ERS SAR images. IEEE Trans. Geosci. Remote Sens., 1999, 37, 1916–1924.

- Xu, Z.; Zhang, D.; Fan, L.; Ma, H.; Li, Y. An automated SAR-based method for ship detection in maritime surveillance system. 2023 7th Int. Conf. ICTIS, Xi’an, China, 2023, 213-218.

- Orfanidis, G.; Ioannidis, K.; Avgerinakis, K.; Vrochidis, S.; Kompatsiaris, I. A deep neural network for oil spill semantic segmentation in SAR images. 2018 5th IEEE Int. Conf. ICIP, Athens, Greece, 2018, 3773-3777.

- Yekeen, S.T.; Balogun, A.L. Automated marine oil spill detection using deep learning instance segmentation model. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2020, XLIII-B3-2020, 1271–1276.

- Ma, X.; Xu, J.; Wu, P.; Kong, P. Oil spill detection based on deep convolutional neural networks using polarimetric scattering information from Sentinel-1 SAR images. IEEE Trans. Geosci. Remote Sens., 2021, 60, 1–13.

- Shaban, M.; Salim, R.; Khalifeh, H.A.; Khelifi, A.; Shalaby, A.; El-Mashad, S.; Mahmoud, A.; Ghazal, M.; El-Baz, A. A Deep-Learning Framework for the Detection of Oil Spills from SAR Data. Sensors, 2021, 21, 2351.

- Fan, Y.L.; Rui, X.P. Feature merged network for oil spill detection using SAR images. Remote Sens., 2021, 13, 3174.

- Rousso, R.; Katz, N.; Sharon, G.; Glizerin, Y.; Kosman, E.; Shuster, A. Automatic recognition of oil spills using neural networks and classic image processing. Water, 2022, 14, 1127.

- Li, C.; Wang, M.; Yang, X.; Chu, D. DS-UNet: Dual-stream U-Net for oil spill detection of SAR image. IEEE Geosci. Remote Sens. Lett., 2023, 20, 1–5.

- Mahmoud, A.S.; Mohamed, S.A.; El-Khoriby, R.A.; Abdelsalam, H.M.; El-Khodary, I.A. Oil spill identification based on dual attention UNet model using Synthetic Aperture Radar images. J. Indian Soc. Remote Sens., 2023, 51, 121–133.

- Perkovic, M.; Ristea, M.; Lazuga, K. Simulation based emergency response training. Sci. Bull. Nav. Acad., 2016, 19, 85–90.

- Rodger, M.; Guida, R. SAR and AIS data fusion for dense shipping environments. IEEE IGARSS, Waikoloa, HI, USA, 2020, 2077-2080.

- Galdelli, A.; Mancini, A.; Ferra, C.; Tassetti, A.N. A synergic integration of AIS data and SAR imagery to monitor fisheries and detect suspicious activities. Sensors, 2021, 21, 2756.

- Liu, B.; Zhang, W.; Han, J.; Li, Y. Tracing illegal oil discharges from vessels using SAR and AIS in Bohai Sea of China. Ocean Coast. Manag., 2021, 211, 105783.

- Chen, Y.T.; Chang, L.; Wang, J.H. Full-Scale aggregated MobileUNet: An improved U-Net architecture for SAR oil spill detection. Sensors, 2024, 24, 3724.

- Krestenitis, M.; Orfanidis, G.; Ioannidis, K.; Avgerinakis, K.; Vrochidis, S.; Kompatsiaris, I. Oil spill identification from satellite images using deep neural networks. Remote Sens., 2019, 11, 1762.

- Copernicus Open Access Hub. Available online: https://scihub.copernicus.eu/ (accessed on 01 July 2024).

- MKLab Dataset. Available online: https://mklab.iti.gr/results/oil-spill-detection-dataset/ (accessed on 24 January 2022).

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: New York, NY, USA, 2015.

- Zhixuhao. Zhixuhao/unet. 2017. Available online: https://github.com/zhixuhao/unet (accessed on 01 July 2024).

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; Le, Q.V.; Adam, H. Searching for MobileNetV3. Proceedings of the IEEE International Conference on Computer Vision, Seoul, 27 October-2 November 2019, 1314-1324.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation networks. In Proc. IEEE CVPR, Salt Lake City, UT, USA, 2018, 7132-7141.

- Woo, S.; Park, J.C.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8-14 September 2018; pp. 3–19.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell., 2018, 40, 834–848.

- Mondal, R.; Purkait, P.; Santra, S.; Chanda, B. Morphological networks for image de-raining. arXiv 2019, arXiv:1901.02411.

- Decelle, R.; Ngo, P.; Debled-Rennesson, I.; Mothe, F.; Longuetaud, F. Light U-Net with a morphological attention gate model application to analyse wood sections. In Proc. ICPRAM, Lisbon, Portugal, 2023, 1, 759-766.

- Shen, Y.; Zhong, X.; Shih, F. Deep morphological neural networks. arXiv 2019, arXiv:1909.01532.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proc. IEEE CVPR, Las Vegas, NV, USA, 2016, 2818-2826.

- Muller, R.; Kornblith, S.; Hinton, G. What does label smoothing help? arXiv 2019, arXiv:1906.02629.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2015, arXiv:1412.6980.

- Fan, Y.; Rui, X.; Zhang, G.; Yu, T.; Xu, X.; Poslad, S. Feature merged network for oil spill detection using SAR images. Remote Sens., 2021, 13, 3174.

- Basit, A.; Siddique, M.A.; Bhatti, M.K.; Sarfraz, M.S. Comparison of CNNs and vision transformers-based hybrid models using gradient profile loss for classification of oil spills in SAR images. Remote Sens., 2022, 14, 2085.

- Rousso, R.; Katz, N.; Sharon, G.; Glizerin, Y.; Kosman, E.; Shuster, A. Automatic recognition of oil spills using neural networks and classic image processing. Water, 2022, 14, 1127.

- Zhang, B.; Matchinski, E.J.; Chen, B.; Ye, X.; Jing, L.; Lee, K. Chapter 21 - Marine oil spills – oil pollution, sources and effects. Sheppard, C. (ed.), World Seas: an environmental evaluation (second ed.), Academic Press, 2019, 391-406.

Figure 1.

Oil spill images from the dataset. Cyan, red, brown, green, and black correspond to oil spills, look-alikes, ships, land, and sea surface, respectively. (a) original SAR image. (b) ground truth data.

Figure 2.

The structure of the improved FA-MobileUNet model.

Figure 3.

The structure of FA module of stage 3.

Figure 4.

The structure of the MAM.

Figure 5.

The training process of the improved FA-MobileUNet model. (a) Accuracy. (b) Loss.

Figure 6.

The segmentation results of different semantic segmentation models. (a) SAR image. (b) Ground truth data. (c) U-Net model. (d) LinkNet model. (e) PSPNet model. (f) DeepLabv2 model. (g) DeepLabv3+ model. (h) FA-MobileUNet model. (i) Improved FA-MobileUNet model. Black, cyan and red represent the sea surface, oil spills and look-alikes, respectively.

Figure 7.

The areas of oil pollution incidents in Taiwan. Regions A-C are located near Taichung Port, Kaohsiung Port and Xiaoliuqiu, respectively.

Figure 8.

Tracking ships suspected of discharging oil spills using SAR and AIS data. (a) SAR image. (b) Oil spill detection results (oil spills: cyan, ships: brown). (c) Ship trajectories provided by AIS (in orange dotted line). (d) Trajectory of suspected oil-discharging ship.

Figure 9.

Oil spill detection results caused by a shipwreck. (a) SAR image. (b) oil spill detection results with AIS data (oil spills: cyan, look-alikes: red, ships: brown).

Figure 10.

Oil spill detection results in Xiaoliuqiu. (a) SAR image. (b) Oil spill detection results (oil spills: cyan, ships: brown).

Figure 11.

Oil spill detection results in Kenting National Park. (a) SAR image. (b) Oil spill detection results (oil spills: cyan, ships: brown).

Figure 12.

Comparison of the segmentation results by the proposed model combined with the original and modified CBAM, respectively. (a) SAR image; (b) Ground truth data; (c) The FA-MobileUNet model using original CBAM; (d) The improved FA-MobileUNet model using modified CBAM (with 1 iteration of closing operation). (e) The improved FA-MobileUNet model using modified CBAM (with 2 iterations of closing operations). (f) The improved FA-MobileUNet model using modified CBAM (with 3 iterations of closing operations). Black, cyan, red and brown represent the sea surface, oil spills, look-alikes and ships, respectively.

Table 1.

Detection performance of the improved FA-MobileUNet model with the modified CBAM replacing the original CBAM at different encoder stages, in terms of IoU (%). “✗” represents the model using the original CBAM.

| Modified CBAM (encoder stage) | Sea surface | Oil spills | Look-alikes | Ships | Land | mIoU |
| --- | --- | --- | --- | --- | --- | --- |
| ✗ | 97.54 | 75.85 | 72.67 | 76.19 | 96.48 | 83.74 |
| 1 | 97.31 | 75.86 | 72.91 | 76.19 | 96.49 | 83.75 |
| 1, 2 | 97.22 | 75.97 | 73.25 | 76.21 | 96.49 | 83.83 |
| 1, 2, 3 | 96.03 | 76.34 | 73.39 | 76.20 | 96.40 | 83.67 |

Table 2.

Detection performance of the improved FA-MobileUNet model under different numbers of closing-operation iterations in the modified CBAM, in terms of IoU (%). “✗” represents the model using the original CBAM.

| Module | Iteration No. | Sea surface | Oil spills | Look-alikes | Ships | Land | mIoU |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Modified CBAM (closing operation) | ✗ | 97.54 | 75.85 | 72.67 | 76.19 | 96.48 | 83.74 |
| Modified CBAM (closing operation) | 1 | 97.32 | 75.94 | 73.52 | 76.22 | 96.47 | 83.89 |
| Modified CBAM (closing operation) | 2 | 97.08 | 76.12 | 73.88 | 76.22 | 96.40 | 83.94 |
| Modified CBAM (closing operation) | 3 | 94.85 | 76.88 | 74.13 | 76.23 | 96.38 | 83.69 |

Table 3.

Performance evaluation of the improved FA-MobileUNet using label smoothing in terms of IoU (%).

| Label smoothing | Sea surface | Oil spills | Look-alikes | Ships | Land | mIoU |
| --- | --- | --- | --- | --- | --- | --- |
| ✗ | 97.54 | 75.85 | 72.67 | 76.19 | 96.48 | 83.74 |
| ✓ | 97.29 | 76.84 | 75.21 | 76.42 | 96.45 | 84.44 |
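Label smoothing, in its commonly used form, replaces a one-hot target y with (1 − ε)·y + ε/K for K classes. The sketch below applies this to the five classes of the dataset. The smoothing factor ε = 0.1 and the helper name `smooth_labels` are assumptions for illustration only; the value actually used in this study is not restated here.

```python
def smooth_labels(one_hot, epsilon=0.1):
    """Uniform label smoothing: (1 - epsilon) * y + epsilon / K for each class entry."""
    k = len(one_hot)
    return [(1.0 - epsilon) * v + epsilon / k for v in one_hot]


# Class order assumed for illustration: sea surface, oil spill, look-alike, ship, land.
oil_spill_target = [0, 1, 0, 0, 0]
smoothed = smooth_labels(oil_spill_target, epsilon=0.1)
print([round(v, 2) for v in smoothed])
```

The smoothed target still sums to 1, but the true class is no longer trained towards full confidence, which can reduce over-confident predictions on frequent classes.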

Table 4.

Performance evaluation of different semantic segmentation networks in terms of IoU (%).

| Model | Backbone | Parameters | Sea surface | Oil spills | Look-alikes | Ships | Land | mIoU |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| U-Net | ResNet-101 | 51.5 M | 95.47 | 57.01 | 44.82 | 46.62 | 93.08 | 67.40 |
| LinkNet | ResNet-101 | 47.7 M | 94.82 | 52.95 | 47.52 | 45.11 | 93.12 | 66.70 |
| PSPNet | ResNet-101 | 3.8 M | 93.03 | 45.65 | 40.62 | 30.25 | 91.12 | 60.13 |
| DeepLabv2 | ResNet-101 | 42.8 M | 95.02 | 43.12 | 46.23 | 15.12 | 82.34 | 56.37 |
| DeepLabv3+ | MobileNetv2 | 2.1 M | 96.57 | 56.34 | 57.06 | 32.92 | 94.18 | 67.41 |
| ToZero FMNet | x | 36.0 M | 94.53 | 49.95 | 41.40 | 25.44 | 87.11 | 61.90 |
| CoAtNet-0 | x | 29.4 M | 95.40 | 50.22 | 58.85 | 69.09 | 94.49 | 73.61 |
| EfficientNetv2 | B1 | 16.7 M | 95.19 | 56.42 | 62.23 | 72.80 | 96.59 | 76.65 |
| Ensemble Model | x | x | 96.78 | 56.10 | 58.88 | 47.28 | 96.59 | 71.12 |
| FA-MobileUNet | MobileNetv3 | 14.9 M | 97.12 | 75.85 | 72.69 | 76.22 | 96.47 | 83.67 |
| Improved FA-MobileUNet | MobileNetv3 | 14.9 M | 96.58 | 77.50 | 75.81 | 76.67 | 96.18 | 84.55 |
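The mIoU column appears to be the unweighted mean of the five per-class IoU values. The short check below is a sketch with a hypothetical helper name, `mean_iou`. It reproduces the mIoU of the last two rows of Table 4 and the per-class gains quoted in the conclusions.

```python
def mean_iou(class_ious):
    """Unweighted mean of per-class IoU values (in percent)."""
    return sum(class_ious) / len(class_ious)


# Per-class IoU (%) from Table 4: sea surface, oil spills, look-alikes, ships, land.
fa_mobileunet = [97.12, 75.85, 72.69, 76.22, 96.47]
improved = [96.58, 77.50, 75.81, 76.67, 96.18]

print(f"FA-MobileUNet mIoU:          {mean_iou(fa_mobileunet):.2f}")
print(f"Improved FA-MobileUNet mIoU: {mean_iou(improved):.2f}")

# Gains in percentage points for oil spills and look-alikes.
oil_gain = improved[1] - fa_mobileunet[1]
lookalike_gain = improved[2] - fa_mobileunet[2]
print(f"oil spills: +{oil_gain:.2f}, look-alikes: +{lookalike_gain:.2f}")
```

Note that the quoted improvements of 1.65% and 3.12% are differences in IoU percentage points, not relative changes.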

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).