2.1. Convolutional Neural Network (CNN)

A convolutional neural network (CNN) is a deep feedforward neural network model with strong automatic feature extraction capabilities. Its basic structure consists of an input layer, convolutional layers, pooling layers, fully connected layers, and an output layer.

(1) Input layer

The CNN model is a supervised learning model: it is trained under the supervision of sample labels, so its input consists of samples and their labels. For example, for a $C$-class classification problem, the model input is defined as Equation (1):

$$D = \{(x_i, y_i)\}_{i=1}^{M}, \quad y_i \in \{1, 2, \dots, C\} \tag{1}$$

where $M$ is the number of samples input into the model, $x_i$ is the $i$-th sample, and $y_i$ is the class label corresponding to the $i$-th sample.

(2) Convolutional layer

The convolutional layer is the core component of the CNN model, implementing local connectivity and weight sharing through convolutional kernels. Each kernel slides horizontally and vertically over the input feature map and performs a convolution with the data inside its receptive field to extract structural features hidden in the data. The convolutional layer is organized in three dimensions: depth, width, and height. The width and height are those of the convolutional kernel and define the size of the local receptive field. Vibration signals are one-dimensional, so the size of the convolutional kernel is $1 \times k$, with $k$ constrained by the length of the input sample. Depth refers to the number of convolutional kernels. To extract different features from the input feature map, the convolutional layer uses several kernels, each with its own weights and each corresponding to a different extracted feature. The convolution extracts features from the input feature map according to the kernel size and the stride. Feature extraction by a convolutional kernel is defined as follows:

$$x_j^{l} = \sum_{i} x_i^{l-1} * w_j^{l} + b_j^{l}, \quad j = 1, 2, \dots, K, \qquad n_{\text{out}} = \left\lfloor \frac{n + 2p - k}{s} \right\rfloor + 1$$

In the equation, $w_j^{l}$ and $b_j^{l}$ represent the weight and bias of the $j$-th convolutional kernel in the $l$-th convolutional layer, respectively; $K$ is the number of convolutional kernels (the depth of the convolutional layer); $s$ is the stride, that is, the distance the kernel moves over the input feature map at each step; $p$ is the padding size; $x_i^{l-1}$ is the $i$-th feature map, of size $1 \times n$, output by the $(l-1)$-th layer; and $x_j^{l}$ is the feature map, of size $1 \times n_{\text{out}}$, that the $j$-th kernel in the $l$-th convolutional layer extracts from the input feature map. Each kernel responds to a specific feature at every position in the feature map, and the types of features are learned during training rather than specified in advance.
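As a rough illustration of the sliding-kernel operation described above, the following Python sketch computes a one-dimensional convolution with stride and zero padding. The function name `conv1d`, the example signal, and the kernel values are illustrative assumptions and are not taken from the paper's implementation. As in most deep learning frameworks, the kernel is applied without flipping (cross-correlation).

```python
def conv1d(signal, kernel, bias=0.0, stride=1, padding=0):
    """Slide `kernel` over a zero-padded 1-D signal and return one feature map.

    The output length is floor((n + 2 * padding - k) / stride) + 1,
    where n = len(signal) and k = len(kernel).
    """
    padded = [0.0] * padding + list(signal) + [0.0] * padding
    k = len(kernel)
    out_len = (len(padded) - k) // stride + 1
    return [
        sum(w * padded[i * stride + j] for j, w in enumerate(kernel)) + bias
        for i in range(out_len)
    ]


# Hypothetical example: a linearly increasing signal and a slope-detecting kernel.
signal = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
edge_kernel = [-1.0, 0.0, 1.0]

# With padding=1 and stride=1 the feature map has the same length as the input.
feature_map = conv1d(signal, edge_kernel, stride=1, padding=1)
print(feature_map)
```

The same weights are reused at every position (weight sharing), and each output value depends only on the `k` inputs under the kernel (local connectivity). Using several kernels would produce several such feature maps, one per kernel.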

(3) Activation function

After the convolution operation, the results are processed by the activation function to obtain the corresponding output features. The activation function maps the originally linearly inseparable multidimensional features to another space, enabling the neural network to fit the nonlinear relationship between the input sample data and the labels. The choice of activation function affects network training time and has a significant impact on performance on large datasets. Common activation functions in the field of fault diagnosis include the Sigmoid function, the Hyperbolic Tangent (Tanh) function, and the Rectified Linear Unit (ReLU) function. These three functions are defined as follows:

$$y = \mathrm{Sigmoid}(x) = \frac{1}{1 + e^{-x}}, \qquad y = \mathrm{Tanh}(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}, \qquad y = \mathrm{ReLU}(x) = \max(0, x)$$

In the equations, $x$ and $y$ represent the input and output of the function, respectively. The Sigmoid and Tanh functions are saturating nonlinear functions, mapping the input to the intervals $(0, 1)$ and $(-1, 1)$, respectively. During model training, if a neuron's initialization or optimization enters the saturation region, the Sigmoid and Tanh functions are prone to the vanishing gradient problem, making further optimization difficult. Additionally, as the number of network layers increases, the chain rule multiplies the derivatives of the Sigmoid and Tanh functions layer by layer, so the product becomes increasingly small. This hinders gradient backpropagation and reduces the network's convergence speed, or even prevents the network from converging to an optimal state. In contrast, the ReLU function is a non-saturating nonlinear function for positive inputs: its outputs are non-negative and its derivative is 1 wherever the input is positive, which reduces the risk of vanishing gradients during training. This alleviates the difficulty of training internal parameters in deep neural networks and facilitates faster network training. Therefore, the ReLU function is used as the activation function for all network layers.
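The saturation argument above can be checked numerically. The sketch below is a simplified illustration rather than a training experiment: it ignores the weights and multiplies only one activation derivative per layer, as the chain rule would. The helper names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    return max(0.0, x)


def d_sigmoid(x):
    s = sigmoid(x)
    return s * (1.0 - s)            # maximum value is 0.25, reached at x = 0


def d_tanh(x):
    return 1.0 - math.tanh(x) ** 2  # close to 0 once |x| is large (saturation)


def d_relu(x):
    return 1.0 if x > 0 else 0.0    # constant 1 for every positive input


def chained_gradient(derivative, pre_activation, n_layers):
    """Product of one activation derivative per layer (weights ignored)."""
    return derivative(pre_activation) ** n_layers


for name, fn in [("sigmoid", d_sigmoid), ("tanh", d_tanh), ("relu", d_relu)]:
    print(name, chained_gradient(fn, 2.0, n_layers=10))
```

Even in the most favourable case for Sigmoid (pre-activation 0, derivative 0.25), ten layers scale the gradient by 0.25 to the power 10, which is below one millionth, whereas the ReLU factor stays at 1 for positive inputs.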

(4) Pooling layer

The pooling layer, also known as the subsampling layer, is a network layer that performs pooling operations. After feature extraction by the convolutional layer, directly using these features for classification would incur a heavy computational cost and a risk of overfitting. It is therefore necessary to pool the feature maps to reduce their dimensionality. Pooling further abstracts the information and proceeds much like feature extraction in the convolutional layer: a window slides over the feature map, and a statistic of the local region under the window is taken as the sampled value for that region. The values from the local regions are then concatenated to form a new feature map. Pooling operations are usually applied after convolutional layers, reducing feature dimensionality while preserving significant feature information and providing a degree of translation invariance. Unlike convolution, pooling has no trainable parameters, so it adds no parameter memory and greatly reduces the computational load of subsequent layers. The pooling operation can be represented as:

$$y = \mathrm{pool}(x;\, k_p, s_p)$$

where $\mathrm{pool}(\cdot)$ is the pooling method (for example, maximum or average), $x$ is the input feature map of length $n$, and $k_p$ is the size of the pooling window. When $k_p < n$, the feature map is pooled locally, and when $k_p = n$, it is pooled globally, that is, the entire feature map is pooled. $s_p$ is the stride of the pooling function, and $y$ is the output feature map after the corresponding pooling operation, with its size being $1 \times \left(\left\lfloor (n - k_p)/s_p \right\rfloor + 1\right)$.
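A minimal Python sketch of the window-and-statistic procedure described above follows. The names `pool1d` and `mean` and the example values are illustrative assumptions. The pooling method is passed in as a function so that the same code covers max pooling and average pooling, and a window as long as the feature map gives global pooling.

```python
def pool1d(feature_map, size, stride, method=max):
    """Slide a window of length `size` over a 1-D feature map.

    `method` is the statistic taken over each window (max by default).
    The output length is floor((n - size) / stride) + 1.
    """
    out_len = (len(feature_map) - size) // stride + 1
    return [
        method(feature_map[i * stride : i * stride + size])
        for i in range(out_len)
    ]


def mean(window):
    return sum(window) / len(window)


fm = [1.0, 3.0, 2.0, 8.0, 4.0, 6.0]

# Local max pooling with window 2 and stride 2 halves the length.
local_max = pool1d(fm, size=2, stride=2)

# Global average pooling: the window covers the whole feature map.
global_avg = pool1d(fm, size=len(fm), stride=1, method=mean)

print(local_max, global_avg)
```

Note that the function has no weights to learn; only the window size, the stride, and the choice of statistic are fixed in advance.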

(5) Fully connected layer

After multiple convolutional layers and max pooling layers, there are usually a few fully connected layers that integrate the local features extracted by the convolutional or pooling layers. The fully connected layer is a traditional multilayer perceptron that connects each neuron in the previous layer to every neuron in the next layer, generally using the ReLU function as the activation $f$. For a one-dimensional input $x^{l}$ of length $n_l$, with $n_{l+1}$ neurons in the fully connected layer, the output of each neuron can be represented as:

$$z_j^{l+1} = f\left(\sum_{i=1}^{n_l} w_{ij}^{l}\, x_i^{l} + b_j^{l}\right), \quad j = 1, 2, \dots, n_{l+1}$$

In the equation, $w_{ij}^{l}$ is the connection weight from the $i$-th neuron in the $l$-th layer to the $j$-th neuron in the $(l+1)$-th layer; $x_i^{l}$ is the input from the $i$-th neuron in the $l$-th layer; $b_j^{l}$ is the bias of the $j$-th neuron in the $(l+1)$-th layer; and $n_l$ and $n_{l+1}$ represent the number of neurons in the $l$-th layer and the $(l+1)$-th layer, respectively.
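The computation of a fully connected layer can be sketched in a few lines of Python: each output neuron takes a weighted sum of all inputs, adds its bias, and applies ReLU. The function name `dense`, the indexing convention `weights[i][j]` (input neuron `i` to output neuron `j`), and the numbers are assumptions chosen for illustration.

```python
def dense(inputs, weights, biases):
    """Fully connected layer with ReLU activation.

    weights[i][j] is the weight from input neuron i to output neuron j;
    biases[j] is the bias of output neuron j.
    """
    outputs = []
    for j in range(len(biases)):
        z = sum(weights[i][j] * x for i, x in enumerate(inputs)) + biases[j]
        outputs.append(max(0.0, z))  # ReLU
    return outputs


# Hypothetical layer: 3 inputs fully connected to 2 output neurons.
inputs = [1.0, 2.0, 3.0]
weights = [
    [0.5, -1.0],
    [0.5, -1.0],
    [0.5, 1.0],
]
biases = [0.0, -1.0]

print(dense(inputs, weights, biases))
```

The second neuron's weighted sum is negative in this example, so ReLU clips it to zero, while the first neuron passes its positive sum through unchanged.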

(6) Output layer

The output layer uses a classifier to output the model's recognition results in the form of categories or probabilities. The most commonly used function is the nonlinear Softmax function, which extends the logistic function and is typically used for multi-class classification problems. The Softmax function converts the extracted features into a probability distribution by normalizing their exponentials, and the resulting values estimate the likelihood that a sample belongs to each category. A higher value indicates greater confidence. It is represented as follows:

$$p_j = P(y = j \mid x; \theta) = \frac{e^{z_j}}{\sum_{c=1}^{C} e^{z_c}}, \quad j = 1, 2, \dots, C$$

where $\theta$ denotes the model's parameters and $z = (z_1, \dots, z_C)$ is the feature vector associated with the input $x$. The Softmax function maps the feature set to a $C$-dimensional vector $p = (p_1, \dots, p_C)$, with each value in the vector lying in $(0, 1)$ and the sum of all values equal to 1. Each element $p_j$ can be interpreted as the probability of the corresponding category.
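For reference, a minimal Python sketch of the Softmax mapping is given below. Subtracting the maximum before exponentiation is a common numerical-stability measure and is an addition of this sketch, not something stated in the text; the input values are hypothetical.

```python
import math


def softmax(features):
    """Map a feature vector to probabilities that are positive and sum to 1."""
    shift = max(features)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - shift) for z in features]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical feature vector for a 3-class problem.
probs = softmax([2.0, 1.0, 0.1])
predicted_class = probs.index(max(probs))

print(probs, predicted_class)
```

The predicted category is the index of the largest probability; the shift does not change the result because it cancels between numerator and denominator.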

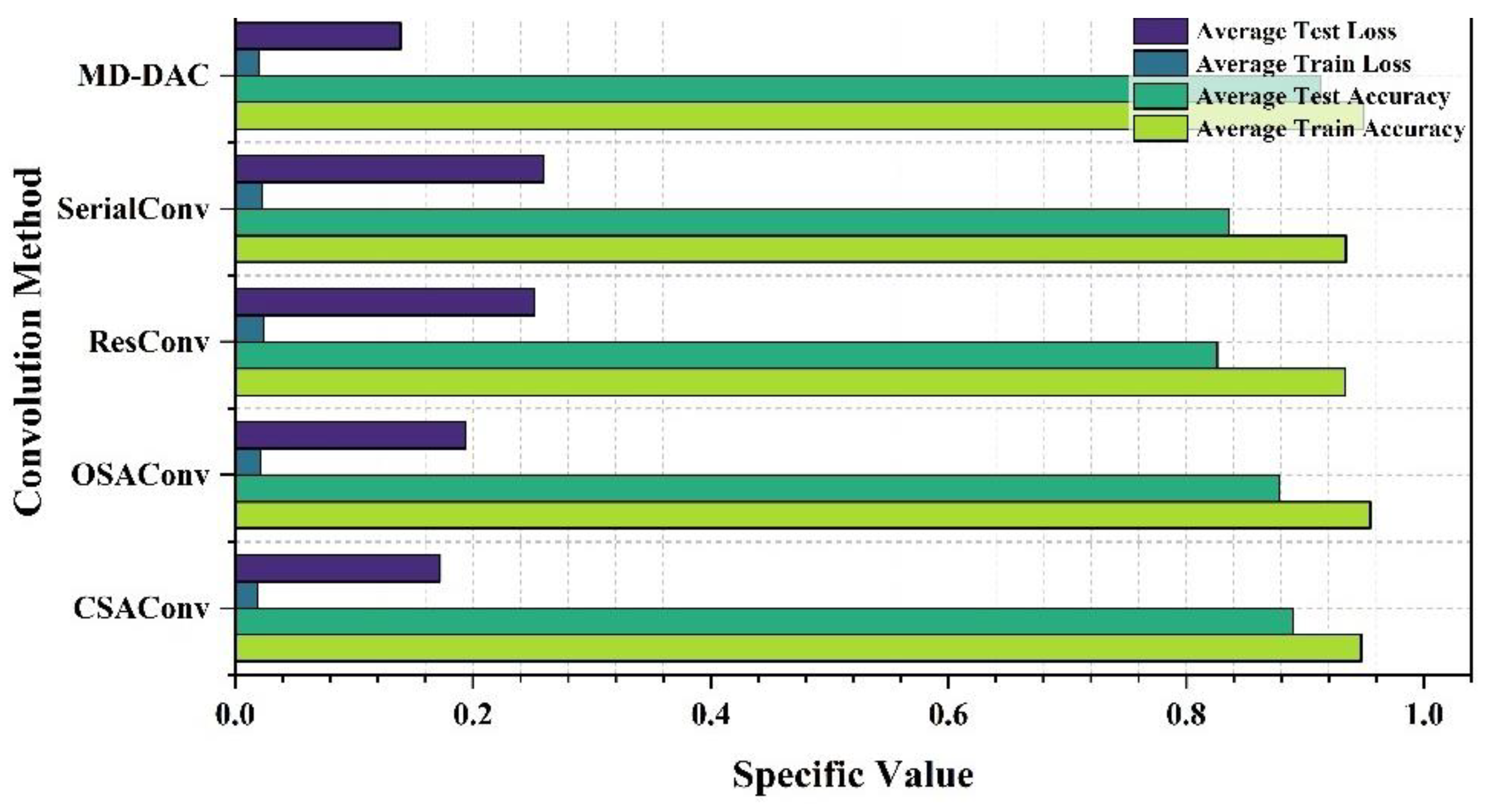

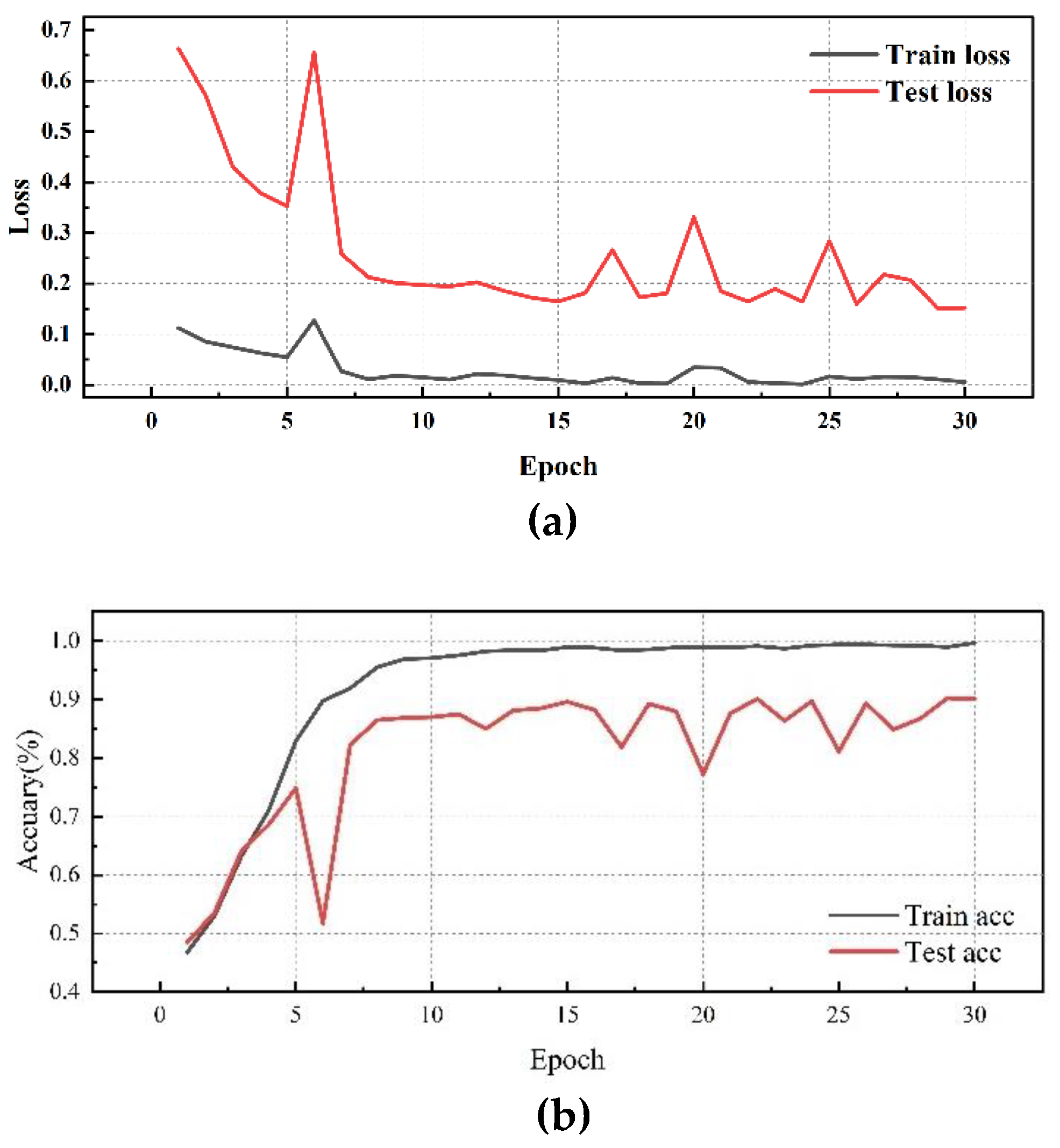

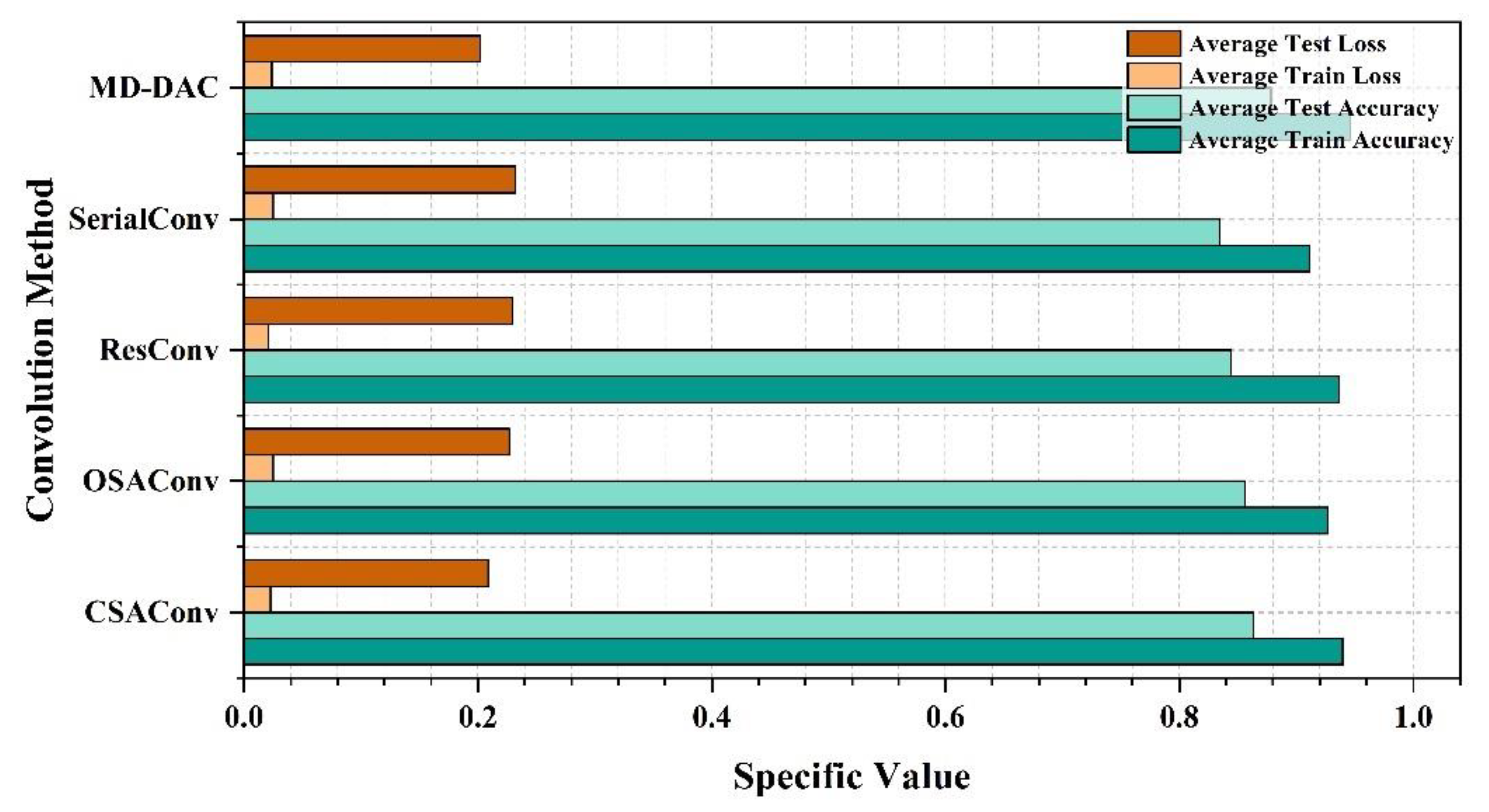

2.2. Construction of a Novel Network Architecture

To enhance feature extraction capabilities, this paper proposes five network structures based on the fundamental CNN architecture. They are built by stacking different convolutional and pooling layers and by applying multiple feature fusions and channel expansions. As the number of channels grows, these structures can extract increasingly complex features from the sample data. The main innovation of the network structures lies in the design of efficient expanded convolution layers, with each module employing multi-path convolution and feature concatenation strategies.

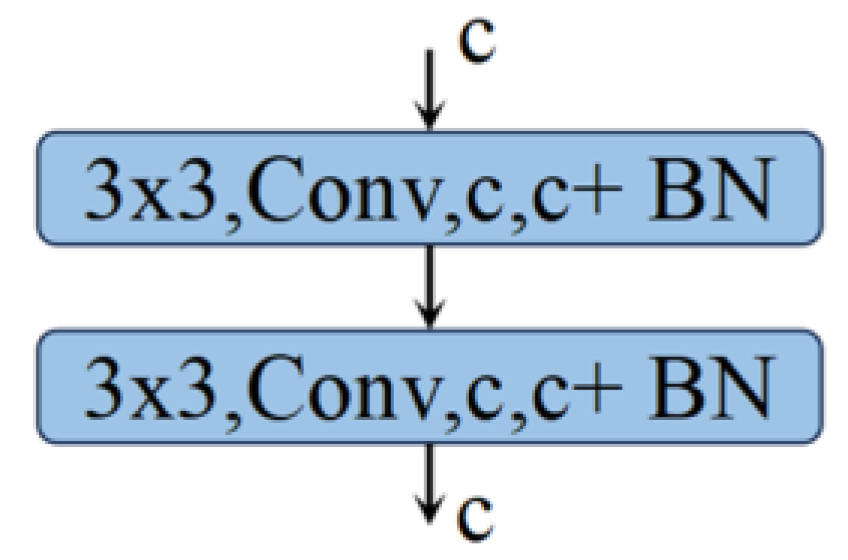

2.2.1. Serial Convolution (SerConv)

The SerConv network consists of two sequential convolutional blocks, each comprising a convolutional layer and a batch normalization layer. This setup stabilizes the learning process by normalizing the output of the convolutional layers, thereby enhancing the network’s generalization ability.

Let $F(x; \theta)$ represent the function applied by the convolutional block, where $x$ denotes the block input and $\theta$ denotes the parameters of the convolutional layer and batch normalization. This process can be defined mathematically as follows:

Convolutional Layer 1:

$$y_1 = \mathrm{BN}(W_1 * x + b_1)$$

where $W_1$ and $b_1$ are the weights and bias of the first convolutional layer, and $\mathrm{BN}(\cdot)$ is the batch normalization operation.

Convolutional Layer 2:

$$y_2 = \mathrm{BN}(W_2 * y_1 + b_2)$$

where $W_2$ and $b_2$ are the weights and bias of the second convolutional layer.

By sequentially connecting two convolutional blocks, the SerConv network performs deeper feature extraction and processing on the input data without substantially increasing computational complexity. Each convolutional block's output undergoes batch normalization, which enhances the stability of the network during training and, to some extent, improves the model's ability to adapt to different data distributions. The specific structure is shown in Figure 1.
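The two-block structure can be sketched in plain Python as follows. This is a simplified single-channel illustration under stated assumptions: the kernels are fixed hypothetical values rather than learned weights, batch normalization uses batch statistics only (scale 1, shift 0), and odd-length kernels with "same" zero padding are assumed.

```python
import math


def conv1d_same(signal, kernel, bias=0.0):
    """1-D convolution with zero padding that keeps the length (odd kernels)."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [
        sum(w * padded[i + j] for j, w in enumerate(kernel)) + bias
        for i in range(len(signal))
    ]


def batch_norm(batch, eps=1e-5):
    """Normalize one channel over all samples and positions in the batch."""
    values = [v for sample in batch for v in sample]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [[(v - mean) / math.sqrt(var + eps) for v in sample] for sample in batch]


def conv_block(batch, kernel, bias=0.0):
    """One block: convolution followed by batch normalization."""
    return batch_norm([conv1d_same(sample, kernel, bias) for sample in batch])


def ser_conv(batch, kernel1, kernel2):
    """Two convolutional blocks applied in sequence."""
    return conv_block(conv_block(batch, kernel1), kernel2)


batch = [[1.0, 2.0, 3.0, 4.0], [2.0, 0.0, 1.0, 5.0]]
out = ser_conv(batch, [0.25, 0.5, 0.25], [-1.0, 0.0, 1.0])
print(out)
```

After the second block, the output has approximately zero mean and unit variance over the batch, which is the stabilizing effect attributed to batch normalization above.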

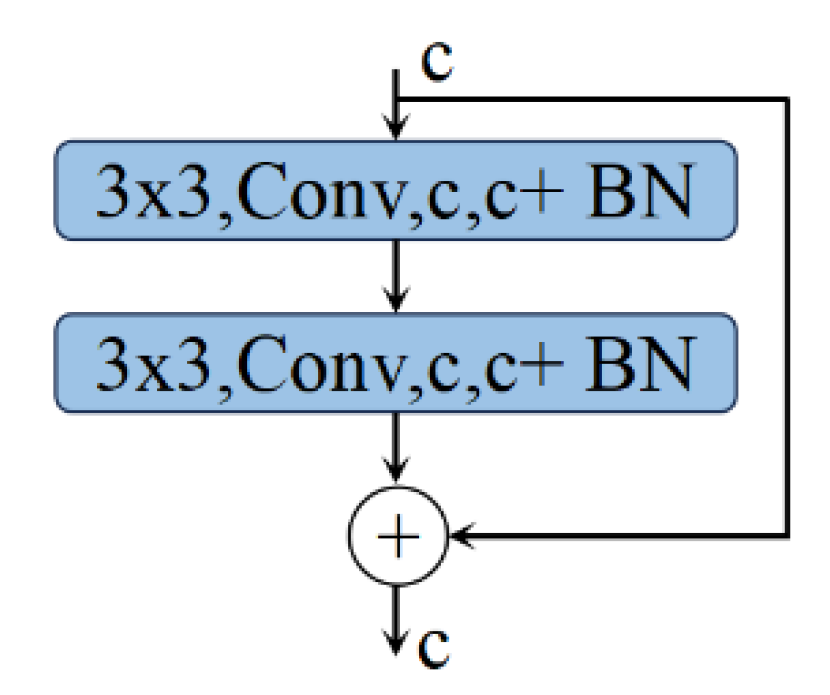

2.2.2. Residual Convolution (ResConv)

The ResConv structure consists of two consecutive convolutional blocks, each comprising a convolutional layer and a batch normalization layer. The residual connection is achieved by directly adding the input $x$ to the output of the second convolutional block, merging the information before and after the blocks and thus allowing the network to learn incremental modifications to the input. The process for Convolutional Layer 1 and Convolutional Layer 2 is consistent with SerConv (Equations (1)–(2)). With $y_2$ denoting the output of the second convolutional block and $y$ the final output, the process is defined as follows:

$$y = y_2 + x$$

By introducing residual connections into the convolutional network, the ResConv module allows deeper networks to be trained without degrading performance, effectively facilitating the learning of complex patterns. The specific structure is shown in Figure 2.
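A minimal Python sketch of the residual connection is shown below, again for a single channel with hypothetical fixed kernels and batch normalization without learned scale and shift. It assumes the two blocks preserve the signal length so that the input can be added element-wise.

```python
import math


def conv1d_same(signal, kernel):
    """1-D convolution with zero padding that keeps the length (odd kernels)."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [
        sum(w * padded[i + j] for j, w in enumerate(kernel))
        for i in range(len(signal))
    ]


def batch_norm(batch, eps=1e-5):
    values = [v for sample in batch for v in sample]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [[(v - mean) / math.sqrt(var + eps) for v in sample] for sample in batch]


def conv_block(batch, kernel):
    return batch_norm([conv1d_same(sample, kernel) for sample in batch])


def res_conv(batch, kernel1, kernel2):
    """Two convolutional blocks plus the skip connection: output = branch + input."""
    branch = conv_block(conv_block(batch, kernel1), kernel2)
    return [
        [b + x for b, x in zip(branch_sample, input_sample)]
        for branch_sample, input_sample in zip(branch, batch)
    ]


batch = [[1.0, 2.0, 3.0, 4.0], [2.0, 0.0, 1.0, 5.0]]

# With all-zero kernels the branch contributes nothing and the input passes through.
identity_out = res_conv(batch, [0.0, 0.0, 0.0], [0.0, 0.0, 0.0])
out = res_conv(batch, [0.25, 0.5, 0.25], [-1.0, 0.0, 1.0])
print(identity_out, out)
```

The all-zero case shows why the blocks only need to learn an incremental modification: when the branch outputs zero, the module reduces to the identity mapping.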

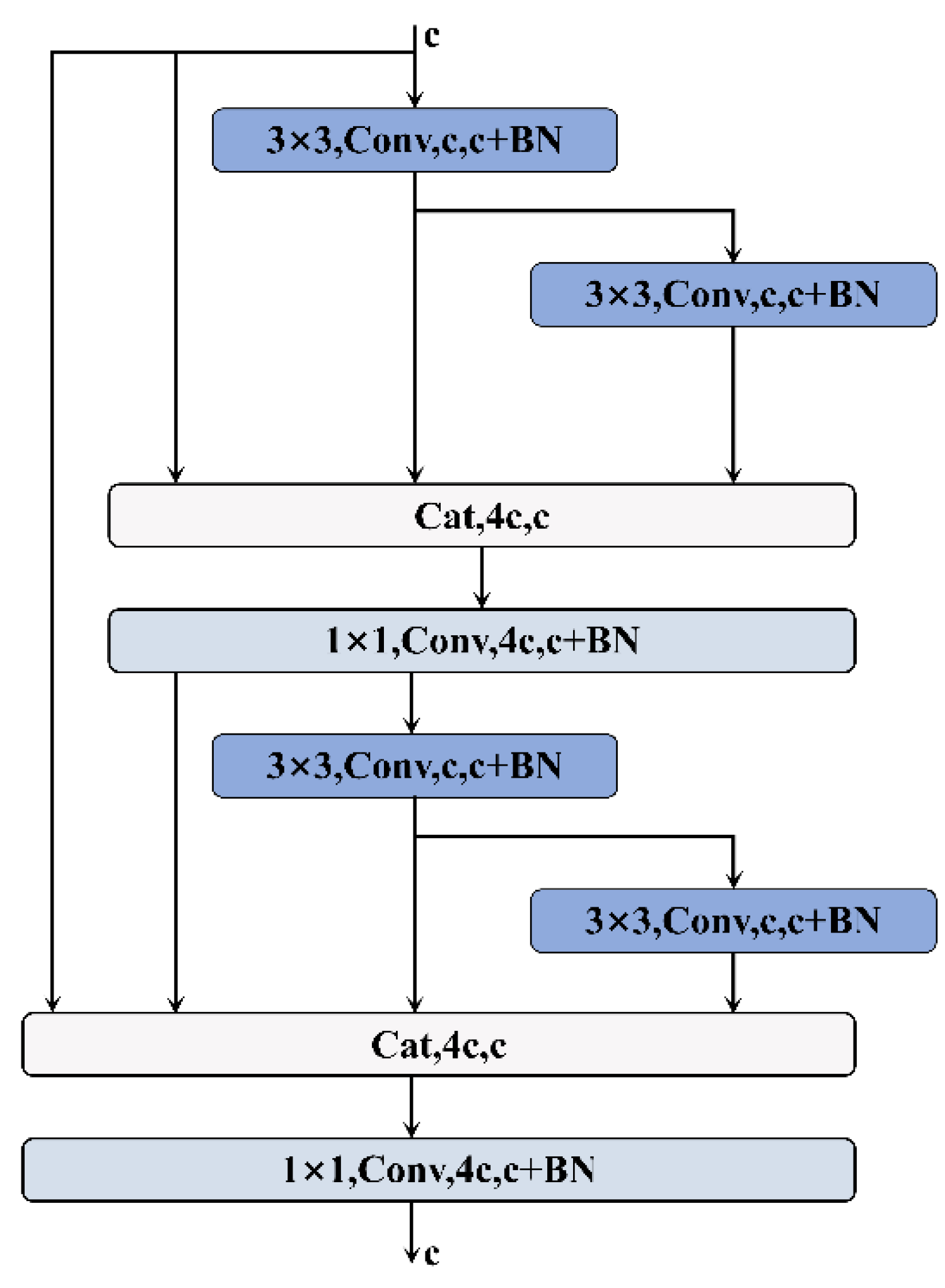

2.2.3. One-Shot Aggregation Convolution (OSAConv)

OSAConv is a novel deep learning network designed for feature data, with a core focus on processing one-dimensional sequence signals through deep feature extraction and efficient feature fusion. The network employs multiple stacked convolutional modules that feature multi-path convolution and feature concatenation mechanisms. Additionally, the number of channels is progressively increased after each layer, significantly enhancing the richness of features and the expressiveness of the model.

Each module utilizes multiple branches to extract features through consecutive convolution operations. These features are then concatenated with the original input, increasing the input information for subsequent layers. This design helps the model capture more refined local features and allows the network to acquire direct information from the original data before proceeding to deeper abstractions.

After each feature concatenation, the network performs feature fusion through convolutional layers, effectively reducing feature dimensions, alleviating computational burden, and ensuring the effectiveness and completeness of features in the depth direction. Below, this paper elaborates on the relevant formulas for the network structure:

In each module, the input first passes through a 3 × 3 convolutional layer to start the feature extraction process. For the $n$-th layer of the module, its features (where $n$ is the layer index) are obtained in the following manner:

These features are then passed through a series of 3 × 3 convolutional layers; the process is defined as follows:

Subsequently, the first concatenation layer fuses the relevant features as follows:

Here, Concat is the feature concatenation operation, which concatenates the feature maps along the channel dimension.

Subsequently, a 1 × 1 convolution is applied to the fused features for channel compression and feature integration, reducing the number of parameters and maintaining computational efficiency; the process is defined as follows:

The subsequent processing within the same module follows a similar pattern, further enhancing feature expression; the process is defined as follows:

In summary, the structure of OSAConv is shown in Figure 3.
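The aggregate-then-compress pattern described in this subsection can be sketched as follows. This is an illustrative approximation, not the paper's implementation: a feature map is a list of channels, the 3-tap convolution applies one fixed hypothetical kernel to each channel independently (a real convolution would also mix channels), the number of stacked layers is assumed to be three, and uniform averaging weights stand in for the learned 1 × 1 kernels.

```python
def conv3(channels, kernel=(0.25, 0.5, 0.25)):
    """Apply a 3-tap kernel to every channel independently ('same' padding)."""
    out = []
    for ch in channels:
        padded = [0.0] + list(ch) + [0.0]
        out.append(
            [sum(w * padded[i + j] for j, w in enumerate(kernel)) for i in range(len(ch))]
        )
    return out


def concat(*feature_maps):
    """Concatenate feature maps along the channel dimension."""
    return [ch for fm in feature_maps for ch in fm]


def pointwise_conv(channels, weights):
    """1x1 convolution: each output channel is a weighted sum of input channels."""
    length = len(channels[0])
    return [
        [sum(w * ch[t] for w, ch in zip(row, channels)) for t in range(length)]
        for row in weights
    ]


def osa_module(x, n_layers=3, out_channels=2):
    features = [x]                      # keep the original input for aggregation
    for _ in range(n_layers):
        features.append(conv3(features[-1]))
    fused = concat(*features)           # aggregate all intermediate features once
    # Placeholder weights: a learned 1x1 kernel would replace this averaging.
    weights = [[1.0 / len(fused)] * len(fused) for _ in range(out_channels)]
    return fused, pointwise_conv(fused, weights)


x = [[1.0, 2.0, 3.0, 4.0]]              # one channel, four time steps
fused, compressed = osa_module(x)
print(len(fused), len(compressed))
```

In this sketch the concatenation grows the channel count from 1 to 4, and the 1 × 1 convolution compresses it back to 2 while leaving the signal length unchanged.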

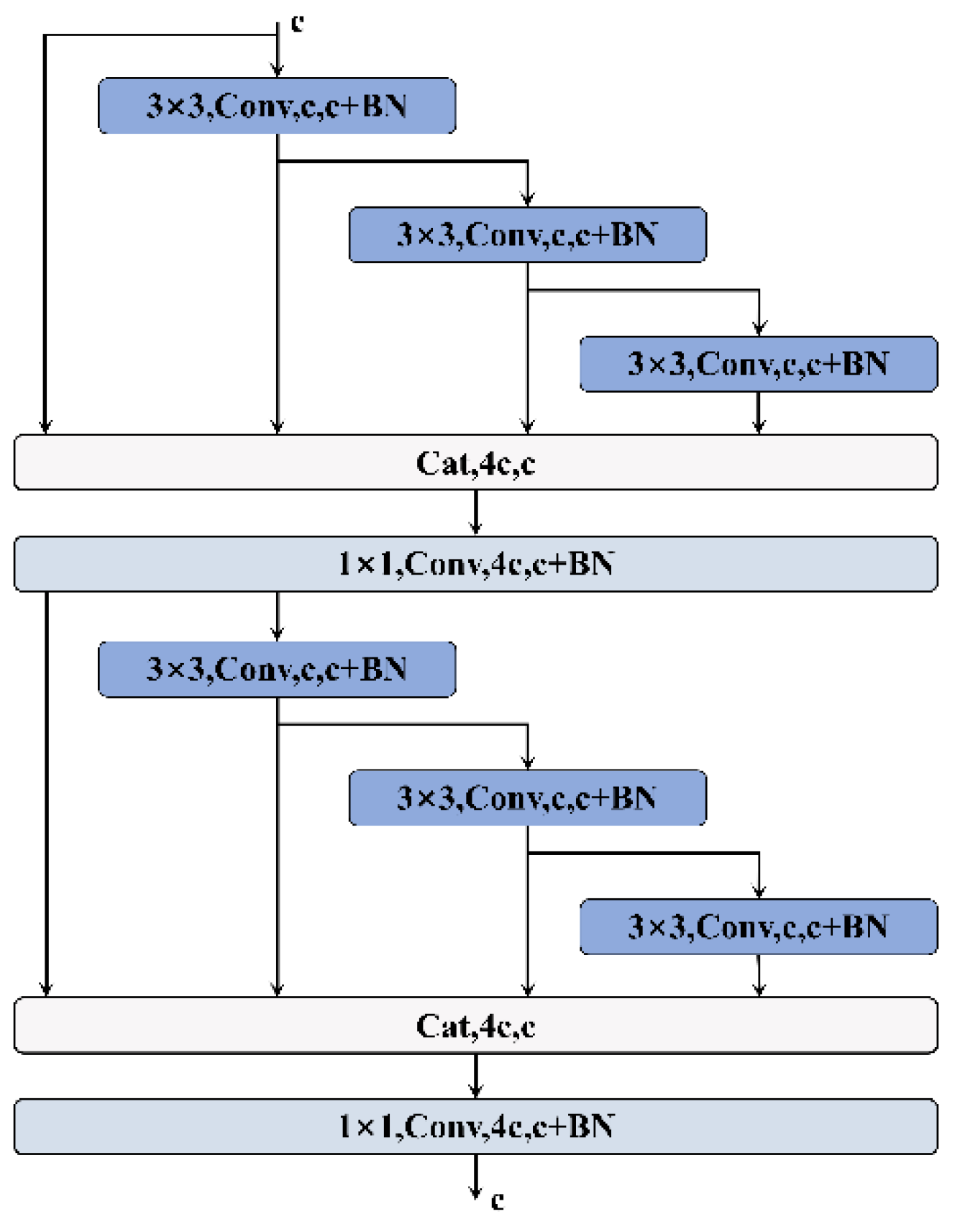

2.2.4. Cross Stage Aggregation Convolution (CSAConv) Network

CSAConv is an innovative deep learning network designed for one-dimensional sequence signals. This architecture enhances the network’s feature extraction capability through cross-stage feature fusion combined with multi-scale convolutional kernels. The design aims to extract more diverse features through cross-stage feature fusion while maintaining computational efficiency. Specifically, the structure includes multiple 3 × 3 convolutional layers and 1 × 1 convolutional layers, which perform cross-stage convolution operations, ultimately concatenating the features to form a more powerful feature representation.

Below, this paper elaborates on the relevant formulas for the network structure:

The input feature $x$ first passes through the first 3 × 3 convolutional layer and a batch normalization layer; the process is defined as follows:

It then passes through another 3 × 3 convolutional layer for further feature extraction; the process is defined as follows:

Subsequently, the relevant features are concatenated; the process is defined as follows:

Subsequently, a 1 × 1 convolutional layer and a batch normalization layer are used for channel compression and feature integration; the process is defined as follows:

At this point, the resulting feature is passed through two more 3 × 3 convolutional layers to extract deeper-level features; the process is defined as follows:

Subsequently, the relevant features are further concatenated; the process is defined as follows:

Finally, the features are integrated through a 1 × 1 convolutional layer and a batch normalization layer to form the final output feature; the process is defined as follows:

In summary, the structure of CSAConv is shown in Figure 4.
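To make the sequence of steps easier to follow, the sketch below traces only the channel counts through the module. Which features enter each concatenation is not recoverable from the text here, so the sketch assumes the first concatenation joins the input with the two 3 × 3 outputs and the second joins the compressed feature with the next two 3 × 3 outputs. The function name and the channel numbers are hypothetical.

```python
def csa_channel_trace(c_in, c_mid, c_out):
    """Return (step, channels) pairs for one module under the stated assumptions."""
    steps = [
        ("conv3x3 + BN", c_mid),                  # first feature
        ("conv3x3", c_mid),                       # second feature
        ("concat 1", c_in + c_mid + c_mid),       # assumption: input + both features
        ("conv1x1 + BN", c_mid),                  # channel compression
        ("conv3x3", c_mid),                       # deeper feature
        ("conv3x3", c_mid),                       # deeper feature
        ("concat 2", c_mid + c_mid + c_mid),      # assumption: compressed + two deeper
        ("conv1x1 + BN", c_out),                  # final integration
    ]
    return steps


for step, channels in csa_channel_trace(c_in=64, c_mid=64, c_out=128):
    print(f"{step:14s} -> {channels} channels")
```

Under these assumptions each concatenation triples the channel count, and each 1 × 1 convolution brings it back down, which is how the module keeps the cost of the following 3 × 3 layers bounded.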

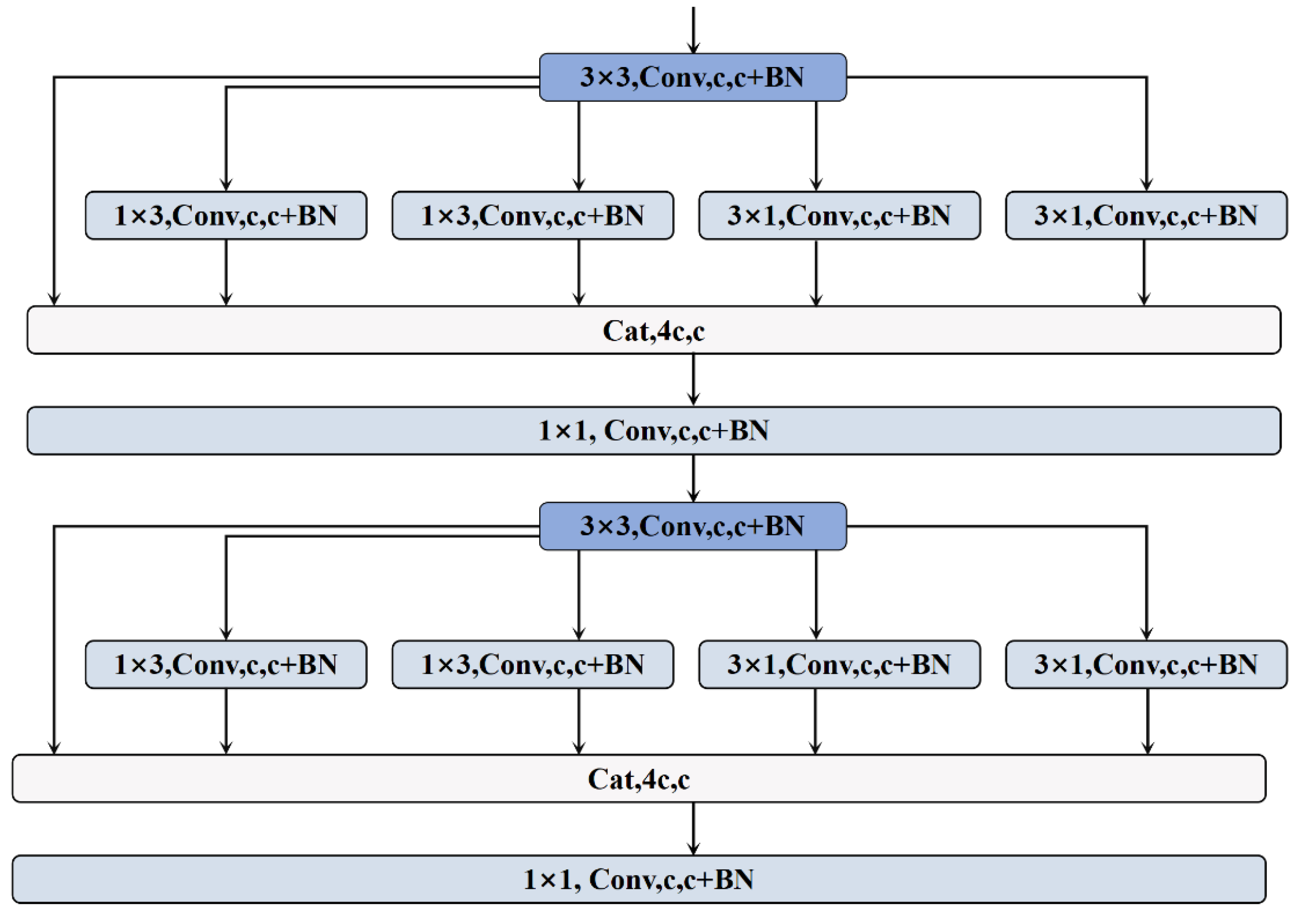

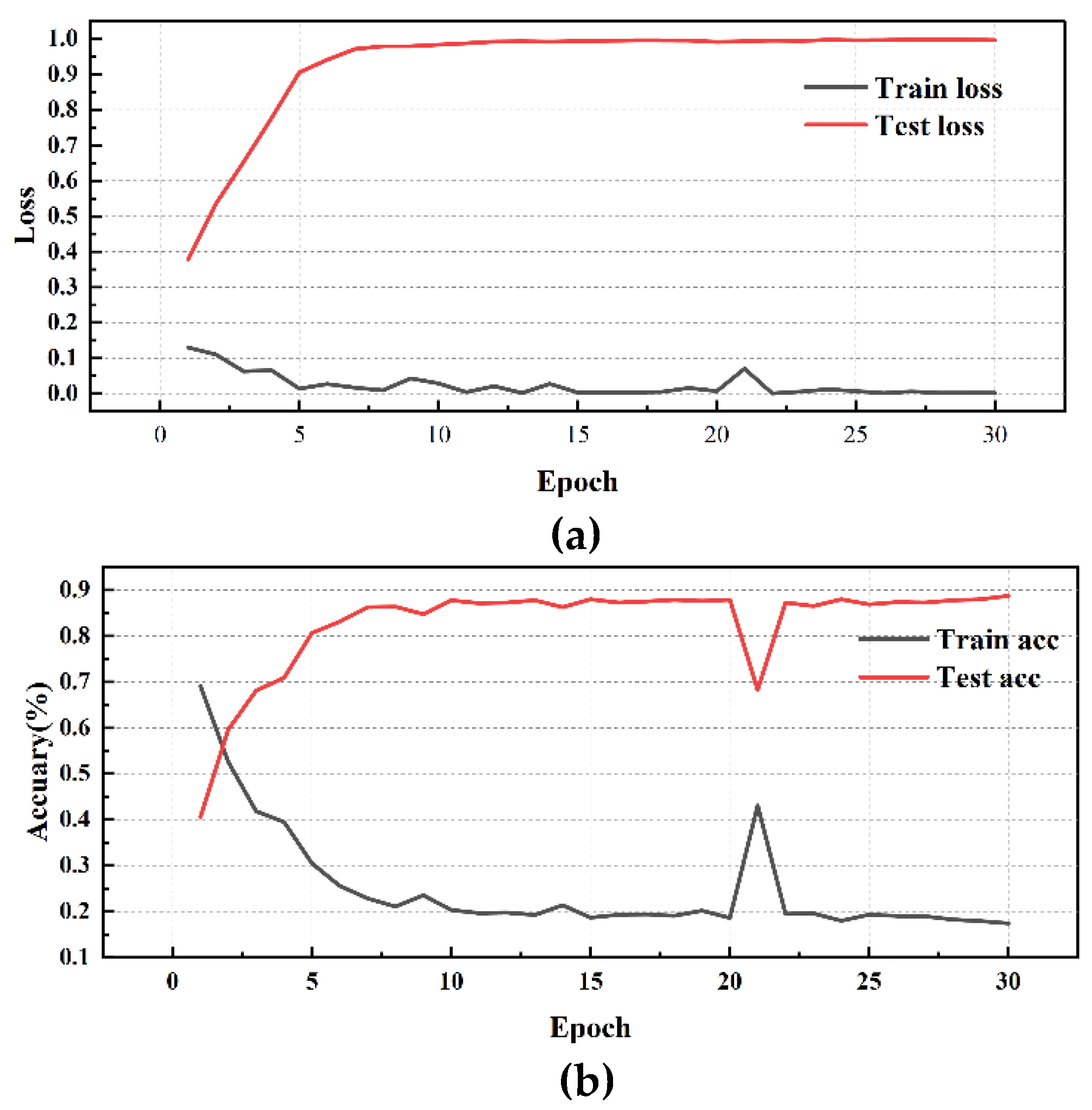

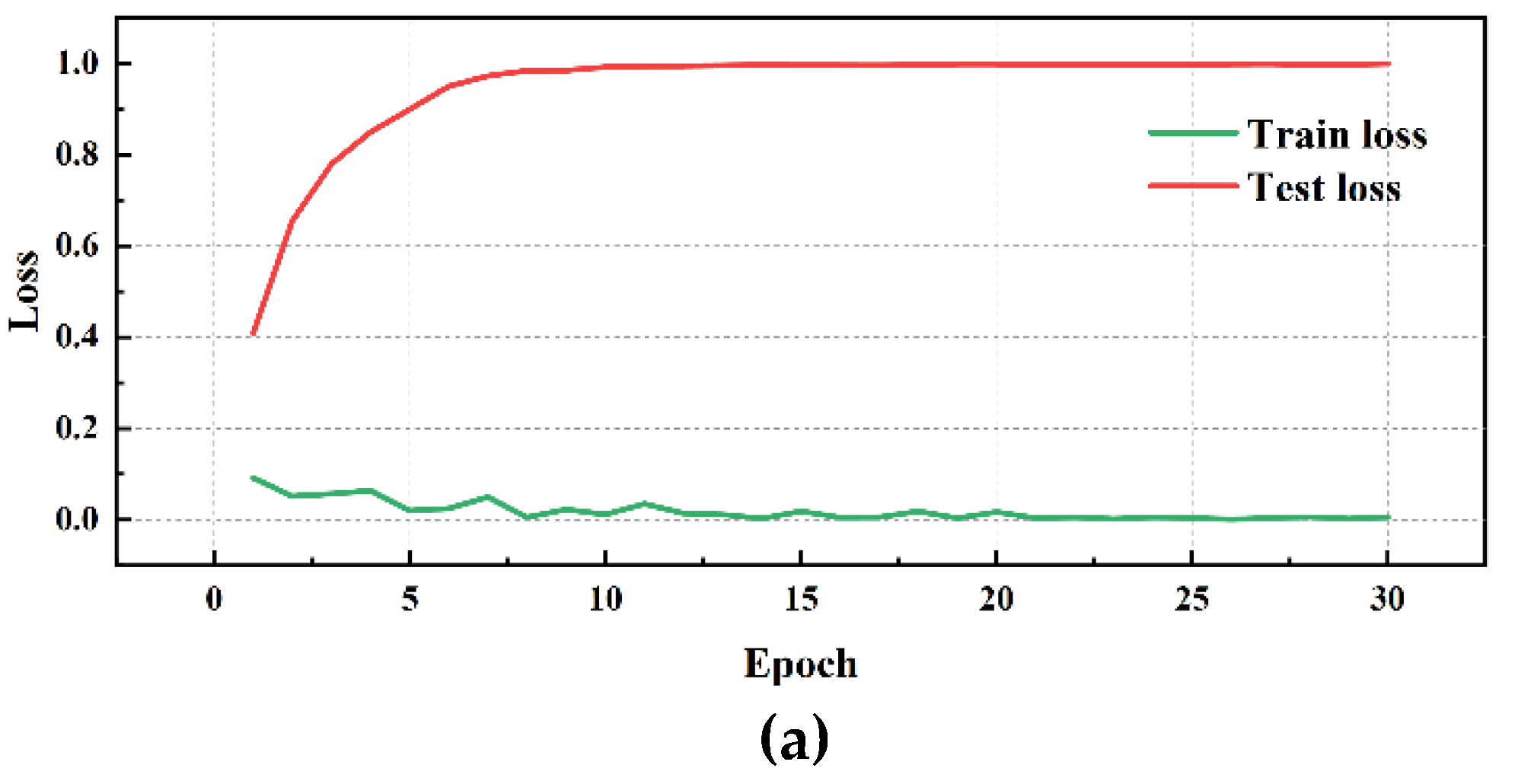

2.2.5. Multi-Directional Dense Aggregation Convolution (MD-DAConv) Network

Currently, the use of dilated convolutional layers with different directions and scales has become a strategy for improving related networks. To verify whether this approach is suitable for fault diagnosis, this paper proposes a new convolutional structure called Multi-Directional Dense Aggregation Convolution (MD-DAConv). This structure aims to enhance the network’s feature extraction capabilities through multi-directional and multi-scale dilated convolutional layers combined with dense connections.

The design goal of this network is to extract more diverse features through multi-directional and multi-scale convolutional kernels while maintaining computational efficiency. Specifically, MD-DAConv includes multiple 3 × 3 convolutional layers, 1 × 1 convolutional layers, left and right dilated convolutional layers (1 × 3 and 3 × 1), and top and bottom dilated convolutional layers (3 × 1 and 1 × 3). The features from each layer are fused through dense connections to form a more powerful feature synthesis.

Below, this paper elaborates on the relevant formulas for the network structure:

In each module, the input $x$ first passes through a 3 × 3 convolutional layer to begin the feature extraction process. For the $n$-th layer of the module, its feature (where $n$ is the layer index) is defined as follows:

On this feature, features are extracted through dilated convolutional layers in four directions:

Subsequently, the concatenation operation is performed as follows:

Subsequently, channel compression and feature integration are performed through a 1 × 1 convolutional layer and a batch normalization layer, as follows:

The resulting feature is then passed through a 3 × 3 convolutional layer and a batch normalization layer to extract deeper-level features, as follows:

Subsequently, this feature is passed sequentially through left-right and top-bottom dilated convolutional layers, as follows:

Subsequently, all the features from the dilated convolutional layers are concatenated as follows:

Finally, integration is performed through a 1 × 1 convolutional layer and a batch normalization layer to form the final output feature, as follows:

The resulting network structure is shown in Figure 5.
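The effect of dilation can be illustrated with a small one-dimensional Python sketch. It covers only the multi-scale aspect (the directional 1 × 3 and 3 × 1 kernels described above are two-dimensional and are not modelled), and the function names, kernel values, and dilation rates are assumptions for illustration.

```python
def dilated_conv1d(signal, kernel, dilation=1):
    """1-D dilated convolution with zero padding that preserves the length.

    Kernel taps are spaced `dilation` samples apart, so a k-tap kernel
    covers (k - 1) * dilation + 1 input samples.
    """
    span = (len(kernel) - 1) * dilation
    left = span // 2
    padded = [0.0] * left + list(signal) + [0.0] * (span - left)
    return [
        sum(w * padded[i + j * dilation] for j, w in enumerate(kernel))
        for i in range(len(signal))
    ]


def receptive_field(kernel_size, dilation):
    return (kernel_size - 1) * dilation + 1


impulse = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
kernel = [1.0, 1.0, 1.0]

# Each branch sees the same input at a different scale; stacking the branch
# outputs as channels mimics the concatenation step.
branches = [dilated_conv1d(impulse, kernel, dilation=d) for d in (1, 2)]
for d, branch in zip((1, 2), branches):
    print(d, receptive_field(len(kernel), d), branch)
```

With the same three weights, the branch with dilation 2 responds to the impulse over a span of five samples instead of three, which is how dilated branches widen the receptive field without adding parameters.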

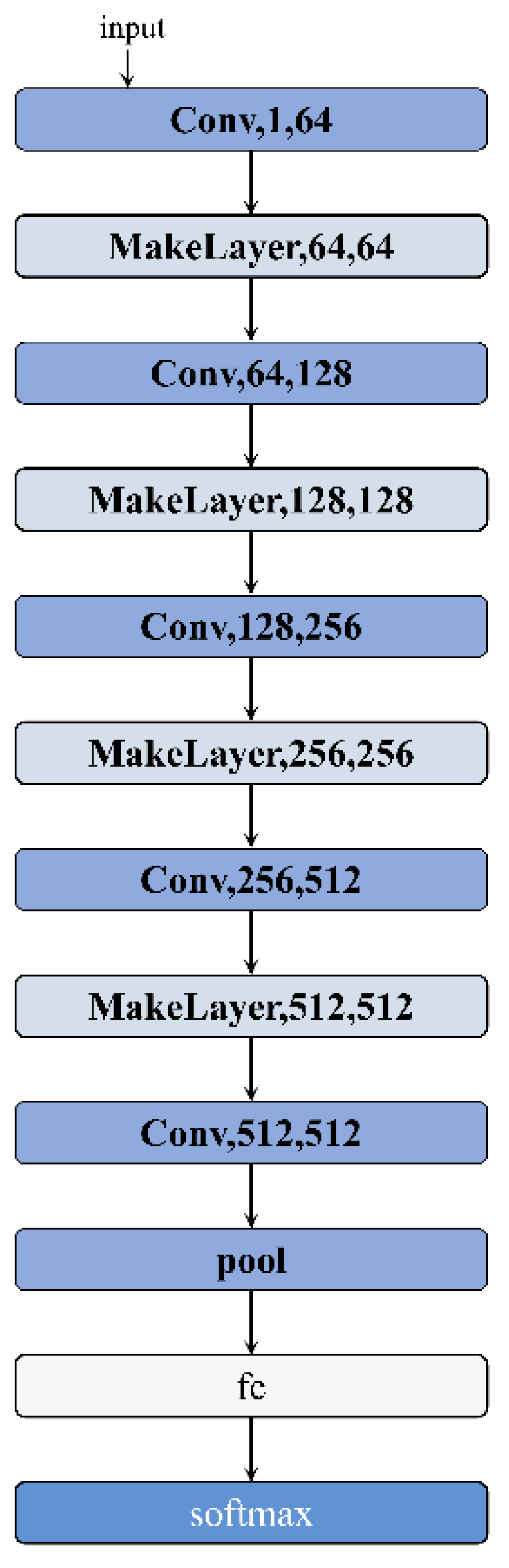

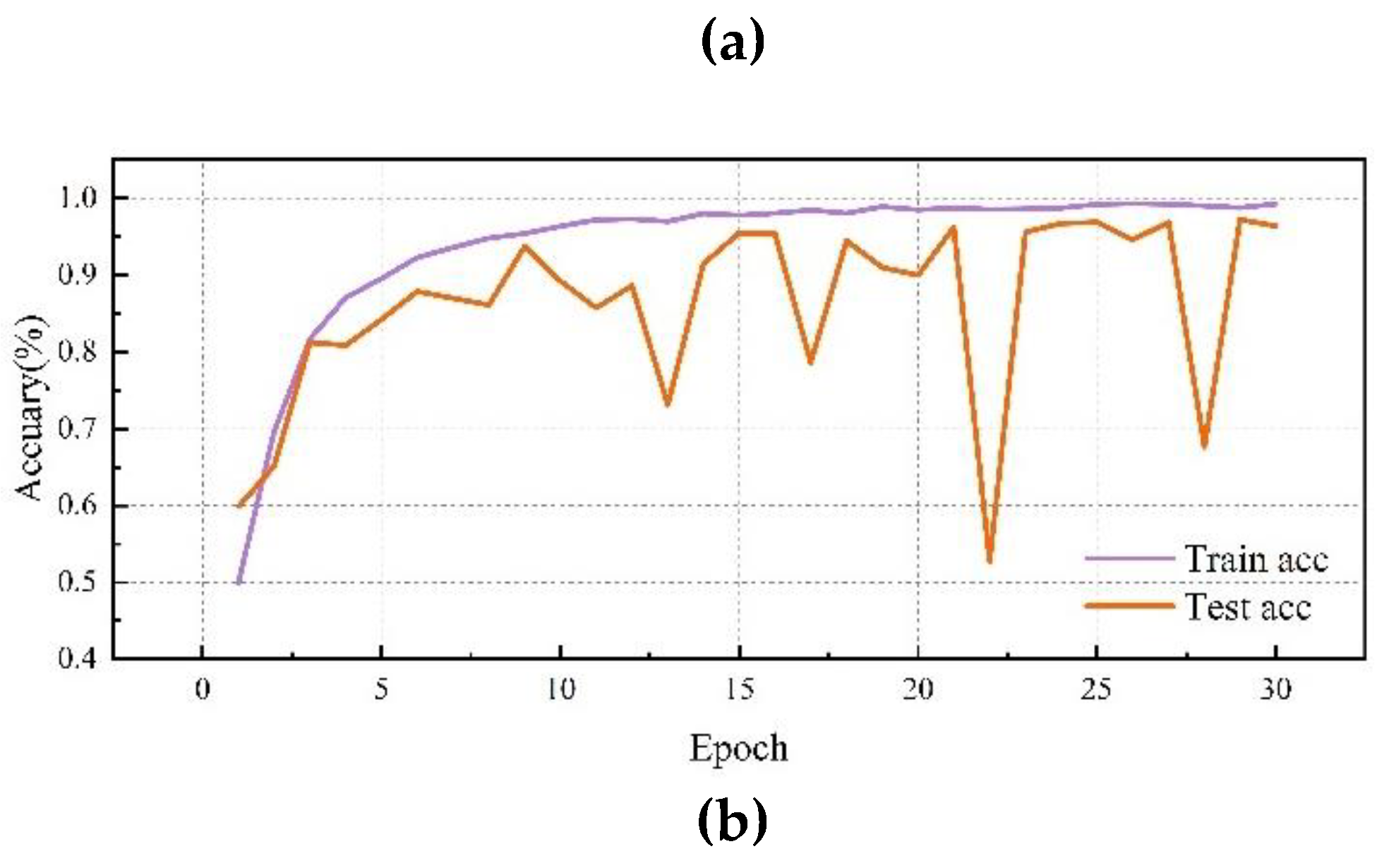

2.3. Overall Network Model Structure

In the previous sections, we introduced various convolutional structures, including residual convolution (ResConv) and multi-directional dense aggregation convolution (MD-DAConv). To compare the performance of the different convolutional structures, we propose the architecture shown in Figure 6 as a general framework for subsequent experiments and validation.

The CNN model is composed of multiple convolutional layers and pooling layers, and it performs classification through fully connected layers and a final Softmax layer. The input layer accepts one-dimensional signal data of size 1 × $N$, where $N$ is the length of the input signal. The first convolutional layer uses 64 filters to convolve the input signal and extract initial features, and the second convolutional layer further enhances the feature representation. The third convolutional layer increases the number of channels to 128 to capture more complex features, and the fourth continues convolving these features. The fifth convolutional layer increases the number of channels to 256, and the sixth further enhances the feature representation. The seventh convolutional layer increases the number of channels to 512, and the eighth and ninth convolutional layers continue convolving the features, further enhancing the feature representation.

The pooling layers use max pooling to halve the size of the feature map, reducing the computational load and the number of parameters while retaining the main features. Finally, the features extracted by the convolution and pooling operations are flattened into a one-dimensional vector and passed through fully connected layers for feature fusion and classification, and the Softmax layer outputs the final classification results.
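The layer description above can be turned into a small shape trace. The channel counts per convolutional layer follow the text; everything else is an assumption of this sketch: convolutions are taken to preserve the signal length, a halving max-pooling layer is placed after the last layer of each channel stage (layers 2, 4, 6, and 9), and the input length of 1024 is a hypothetical value.

```python
# Channels of the nine convolutional layers, as described in the text.
CHANNELS = [64, 64, 128, 128, 256, 256, 512, 512, 512]


def trace_shapes(length, pool_after=(2, 4, 6, 9)):
    """Return the (layer, channels, length) sequence and the flattened size.

    `pool_after` lists the convolutional layers followed by a max-pooling
    layer; this placement is an assumption, not taken from the paper.
    """
    shapes = []
    for idx, channels in enumerate(CHANNELS, start=1):
        shapes.append((f"conv{idx}", channels, length))
        if idx in pool_after:
            length //= 2  # max pooling halves the feature-map length
            shapes.append((f"pool_after_conv{idx}", channels, length))
    flattened = CHANNELS[-1] * length
    return shapes, flattened


shapes, flattened = trace_shapes(length=1024)
for name, channels, length in shapes:
    print(f"{name:18s} {channels:4d} x {length}")
print("flattened vector length:", flattened)
```

Such a trace is useful for sizing the first fully connected layer: under these assumptions its input dimension equals the flattened length, so every additional pooling layer halves the number of weights in that layer.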