AI, or Artificial Intelligence, refers to the simulation of human intelligence processes by machines, especially computer systems. The recent development of AI has sparked debate across many areas of life. AI is growing rapidly and is projected to reach 190.61 billion dollars by 2025, at a compound annual growth rate of 36.62 percent [1]. AI has been widely used over the past few decades to solve problems in many fields [2]. Some argue that AI has been an irreplaceable asset in the medical field, especially since researchers from Sub-Saharan Africa developed AI-powered microscopes that can automatically detect malaria parasites in blood samples [

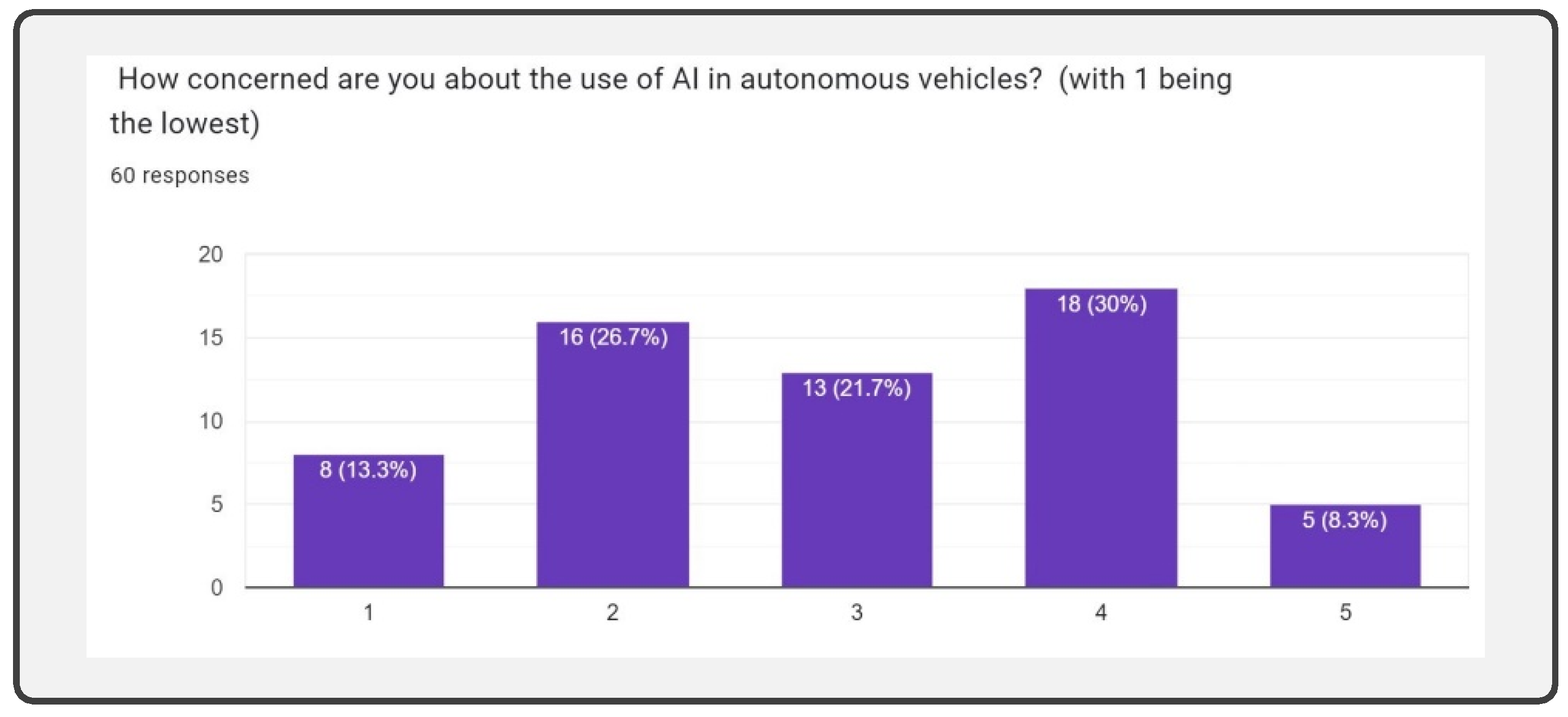

3]. Moreover, there is also strong support for AI because of the rapid decrease in car accidents brought about by autonomous vehicles (AVs). The chance of a crash leading to an injury is notably lower in AVs (11.7%) than in human-driven vehicles (30.3%) [4]. However, like every development, AI has downsides as well. One of the main concerns is social manipulation driven by the growth of AI, which impairs an individual's ability to make decisions under the influence of false information [5]. During the 2022 election in the Philippines, Ferdinand Marcos Jr. mobilized a TikTok troll army to win over young Filipino voters [6]. Furthermore, breaches of privacy have raised additional concerns. These factors have sparked a debate about the impact of AI development. Thus, the main question arises: is the growth of AI a positive development?

Let's first consider the perspective that the growth of AI is a positive development. The positive impact of AI is clearly visible in the medical field, reflected in the AI healthcare market reaching an estimated value of $11 billion in 2021 [

7]. In one study, a team from Germany, France and the United States showed that an AI-based Computer-Aided Diagnosis (CAD) system outperforms human diagnosis of cancerous skin lesions [8]. Involving researchers from different countries provides global representation and helps eliminate potential biases by offering broader expertise, different perspectives, cross-cultural validation and generalizability, thereby substantially improving the credibility and authenticity of the source. The argument about the positive impact of AI on healthcare is further supported by another study, conducted by International Business Machines (IBM) [9], which found that 64% of patients are comfortable using AI for continuous access to information provided by an AI-based virtual nurse. According to the National Academies of Sciences, Engineering, and Medicine (NASEM), researchers found that there is a shortage of nurses because of population growth and the high rate at which young nurses leave the profession [

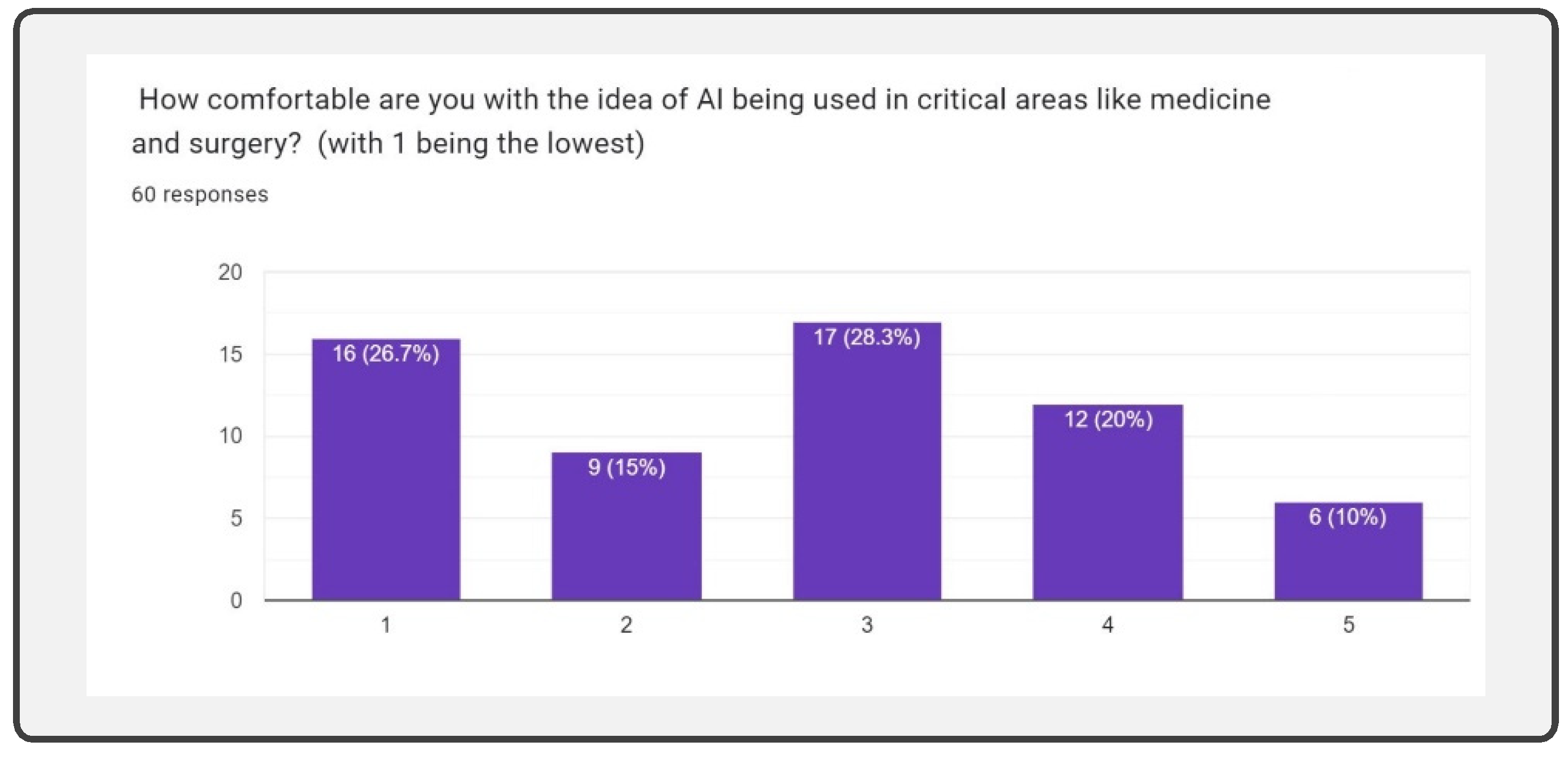

10]. In such a situation, AI again plays its part by providing virtual nurses to overcome the shortage. IBM, a leader in AI because of its notable contributions, and NASEM, a renowned institution providing evidence-based guidance to the government and the public, are both recognized for their reliability and strong credentials in their respective domains.

Furthermore, it is argued that Autonomous Vehicles (AVs), vehicles able to navigate and operate without human control and another product of state-of-the-art advances in AI, lead to fewer accidents. A study published in the Journal of Advanced Transportation, "Safety of Autonomous Vehicles", compared the number of accidents caused by humans with those caused by AI. Over 3.7 kilometers, out of 128 accidents, 63% occurred during autonomous driving. However, only 6% were directly linked to the AV, while 94% were caused by third parties, including pedestrians, cyclists, motorcyclists, and conventional vehicles [11]. This demonstrates that AVs are safer, as 94% of the accidents were caused by humans. The expertise of the study's authors, Jun Wang, Li Zhang, Yanjun Huang, and Jian Zhao, strengthens the validity of their argument. They examine the potential shortcomings of AVs despite statistical evidence of their safety. Moreover, the study does not just highlight problems but also proposes solutions, improving the reliability of the argument. The claim that AI has a positive impact through AVs is also supported by a collaborative study by Swiss Re and Waymo. Swiss Re's baselines, based on 600,000 claims and 125 billion miles, allow effective comparison [

12]. The Waymo Driver, in tests without human intervention, caused no injury claims compared with 1.11 per million miles for human drivers, and had significantly fewer property damage claims at 0.78 per million miles versus 3.26 for human drivers, showing that AVs have a positive impact on society. The report was produced by reputable organizations that have the means to collect relevant data and a duty to uphold their reputation for providing accurate information, which makes their argument more credible. The evidence is also relevant, further highlighting how AVs help reduce accidents, which generates positive social outcomes.

However, critics contend that as AI continues to advance in various fields, it poses a threat to societal safety by facilitating the spread of misinformation. This misinformation can impair decision-making because people can be socially manipulated, highlighting concerns about the potential negative impacts of AI on society. In 2020, researchers at the University of Sussex and an advertising agency made a deepfake video of former U.S. President Barack Obama, highlighting the danger AI poses by spreading misinformation and eroding trust in reliable sources [

13]. This underscores the need for ethical policies and regulations to prevent misuse. The evidence is reliable because it comes from an academic setting and involves collaboration with reputable institutions [14]. Hany Farid, a professor at the University of California, Berkeley, has extensively researched deepfakes and their societal implications, focusing on ways to mitigate their threats. Collaborations with respected institutions such as the Electronic Frontier Foundation (EFF) and the Brookings Institution ensure the reliability and rigor of deepfake research [15]. This shows how the development of Artificial Intelligence can contribute to societal decline, as respected figures have come forward to underscore this danger for the public. Another example is the Facebook/Cambridge Analytica case, which shows how data misuse can affect beliefs and actions [

16]. Data from millions of Facebook users was collected without authorization to build psychographic profiles for targeted political advertisements. This affected the 2016 U.S. election and the Brexit vote, raising concerns about social manipulation, data security, and political influence. The study in which this was published is a strong piece of evidence because of the reliable affiliations and diverse expertise of its authors, its multidisciplinary approach, and its publication in 2019 through a peer-reviewed process, indicating relevance and scholarly validation. What weakens it is that the authors do not fully explore counterarguments or highlight any practical solutions.

Secondly, AI has made hacking easier, threatening our online data, presence, and security. An example is Conficker, also known as Kido, Downadup, and Downup, which became notorious as a worm that infected tens of millions of computers around the world in the late 2000s [

17]. Exploiting vulnerabilities in Microsoft Windows, it spread across systems, creating a botnet controlled by cybercriminals. The worm could disable security software, steal sensitive data, and launch DDoS attacks against websites. Despite efforts to mitigate its impact, Conficker remains active on several systems, posing a persistent threat to security. Conficker highlights the link between cybersecurity risks such as malware and societal security. It underscores the challenge of protecting AI systems from cyber threats [18]. Conficker's continued presence is a clear call for proactive measures and widespread collaboration to confront malicious actors in the digital domain. However, the paper does not address improving reputation-based blacklisting for identifying Conficker, nor does it offer counterarguments. Although it covers 25 million victims and gives valuable insights, it uses no empirical evidence. Overall, the study helps us recognize the significance and seriousness of this situation and the negative impact it has on our society.

Another similar case is a major phishing scheme that targeted Google users in May 2017, luring them into clicking on fraudulent email links [

19]. These emails posed as genuine invitations to collaborate on Google Docs, leading recipients to a fake Google login page where hackers stole their login details. Google recognized this phishing attack as a real security threat after wide media coverage. Thorough examination of the phishing emails and the fake website provided verifiable evidence of the cybercriminals' methods. The severity of the situation was underlined by Google's prompt action, which included suspending malicious accounts and introducing further security measures [

20]. The evidence from the Association for Computing Machinery (ACM) is reliable because of its thorough peer review process, which ensures accuracy and relevance. ACM's reputation as a leading authority in computing reinforces the reliability of its information. Overall, this incident highlights how a single click can cause a person to lose everything, from personal details to accounts, resulting in an adverse outcome for society.

Both perspectives on whether AI poses a threat to societal safety made logical claims backed by credible sources. Those arguing in favor of AI drew on researchers from Germany, France and the United States, which increases generalizability, allows comparative analysis and offers cross-cultural validity. This evidence also shows how AI plays a direct and significant role in helping and supporting patients. The statistical data provided quantitatively validates the large positive impact of AI in medicine and healthcare, strengthening the argument by demonstrating substantial economic growth and relevance within this sector. Qualitative and quantitative evidence showcases the impact AVs have had in reducing accidents. A weakness is evident in generalizing AI acceptance data without accounting for demographics, cultural nuances, or specific circumstances that could affect patient comfort, which diminishes the precision and applicability of the statistics presented. The opposition's concerns about AI risks gain traction from the Facebook/Cambridge Analytica scandal, a compelling real-world example of AI's potential for social manipulation and political influence. Moreover, examples such as the Conficker worm and the Google phishing scheme offer tangible evidence of AI's cybersecurity threats, as specific details enhance persuasiveness and shed light on broader societal implications. A notable shortcoming is the absence of any exploration of existing or proposed AI legislation, which is crucial for understanding regulatory frameworks. Also, both viewpoints lack detailed methodological information, potentially undermining the reliability of their findings. Despite these weaknesses, the compelling evidence from both sides leads to the conclusion that favorable AI outcomes are possible with suitable regulation.