1. Introduction

Plant and crop diseases impose an economic cost on the agricultural and food sectors. Mycotoxins are substances naturally produced by certain types of fungi under particular conditions of humidity and temperature. The presence of mycotoxins in food poses a serious problem for human and animal health. The International Agency for Research on Cancer (IARC) has included aflatoxins in Group 1 of carcinogenic agents [1]. The most common route of mycotoxin exposure is the ingestion of contaminated food. Among the risks to human health, damage to the immune system, kidney disease and a higher probability of some types of liver cancer have been identified [2,3,4]. The fungus Aspergillus flavus, which occurs at temperatures between 12 °C and 27 °C and 85% humidity, multiplies in foods such as maize, peanuts, rice, nuts and figs; although its presence is typical of tropical climates, it also proliferates under some irrigation conditions. In this study, we focus on the proliferation of Aspergillus flavus in fig tree fruits, especially fresh figs.

The cultivation of the fig tree (Ficus carica L.) has its origins in the Carian region of Asia and has spread to other areas such as the Mediterranean region, Africa and the Americas. Today, Spain is the sixth largest producer in the world, accounting for

. Fig cultivation in Spain covered

ha in 2023 (

dry and

irrigated). The region of Extremadura with

ha (

dry and

irrigated) is the largest producing region in Spain [5]. In terms of production [6], Extremadura accounted for

(

tonnes) of national production, which reached

tonnes in 2022 (2023 data are not yet available). The increase in productivity is linked to the adoption of innovative techniques such as fertilization, pruning, soil treatment and irrigation [7,8]. The shift towards irrigation can be observed in the gradual increase in the area of irrigated fig cultivation (

vs ) and the decrease for dried figs (

vs ).

Arid and semi-arid areas offer ideal conditions for fig tree cultivation, but the tree's shallow roots and prolonged droughts result in insufficient water and minerals to ensure fruit quality [9]. To alleviate tree stress, increase productivity and improve fruit quality, supplemental irrigation has emerged as a good alternative for different crops [10], including fig trees [11,12]. However, changes in humidity facilitate the spread of Aspergillus flavus and its mycotoxins, and further research is needed to analyse infected figs and prevent them from entering the human food chain.

The Scientific and Technological Research Center of Extremadura (CICYTEX-“Finca La Orden-Valdesequera”, located in Guadajira, Badajoz) is a reference centre in agri-food research, including fig tree cultivation, where several research works have analysed the proliferation of aflatoxin before and after harvest. To mention a few, the researchers in [13] addressed the effect of temperature during the fig production process, controlling the hygienic and sanitary quality of the figs. In [14], the authors evaluated the application of two treatments at the early post-harvest stages of dried figs to control mycotoxins. The authors of [15] analysed the suitability of two yeast strains (H. opuntiae L479 and H. uvarum L793) to act as biopreservatives, inhibiting A. flavus growth and reducing aflatoxin accumulation.

Even though the detection of Aspergillus flavus and its aflatoxins has been widely addressed, the most commonly used methods are intrusive and involve destruction of the sample. Moreover, innovative methods using AI techniques have mainly been applied to dried figs. However, fresh figs are perishable, have a limited shelf life and are more sensitive to microbial growth [16], which alters product quality and poses a serious risk to human health. The goal of this study is the detection of Aspergillus flavus contamination in fresh figs.

Over the past few decades, hyperspectral imaging (HSI) has been increasingly used as an analytical tool. Its ability to identify chemical composition non-destructively and faster than traditional methods meets the needs of the agricultural sector, among others [17]. Furthermore, the superficial distribution of aflatoxin is an advantage for HSI analysis, which explains the interest it has attracted among researchers in recent decades. However, HSI data are high-dimensional, which is a challenge for classification algorithms. In this light, Deep Learning (DL) has become an innovative, non-intrusive way to analyse and interpret HSI data [18,19].

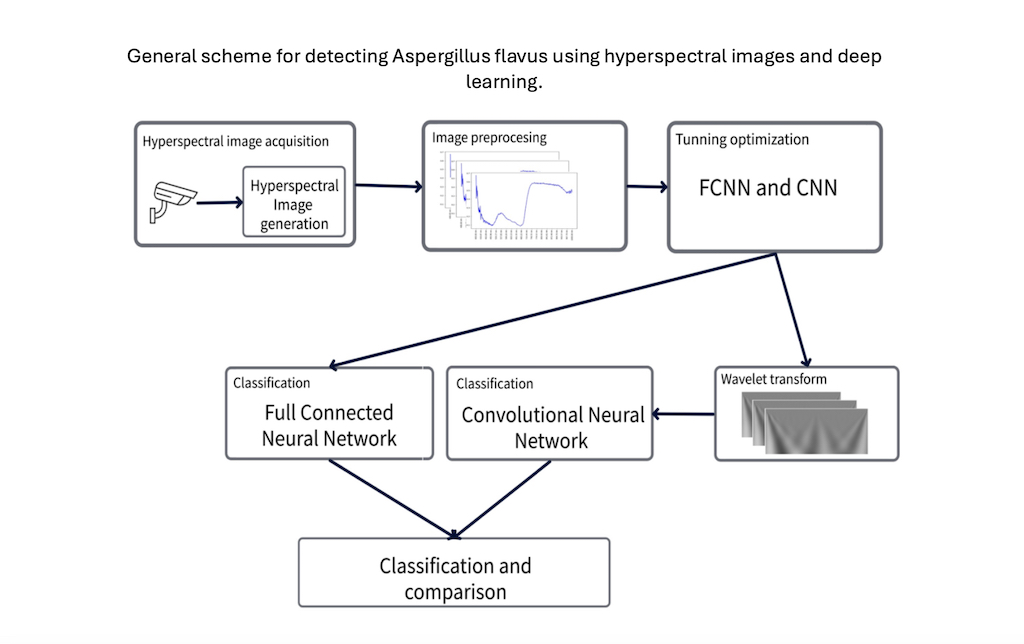

The aim of this study is to develop an accurate method for the early detection of aflatoxin in fresh figs. Our proposal is based on the pixel-level analysis of hyperspectral images using non-intrusive methods such as deep learning. The research work comprises two phases. The first is a parameter tuning phase that finds the best parameter values for the deep learning algorithms applied in the next phase; the second compares two deep learning approaches: (i) a Fully Connected Neural Network (FCNN) that works with the hyperspectral signature of each image pixel, and (ii) a Convolutional Neural Network (CNN) that is fed with images obtained by applying the wavelet transform to the spectral signature of each image pixel.

The rest of the paper is organized as follows. Section 2 reviews related research in the literature. Section 3 presents the methodology applied to perform the analysis. Section 4 presents the results obtained. Finally, the conclusions and future work are presented in Section 5.

2. Background

Precision agriculture is a key instrument to increase productivity, improve crop quality and address problems caused by climate change. The development of IoT systems using remotely controlled sensors, imaging from unmanned vehicles, and the ability to analyse the collected data with AI techniques have made precision agriculture a reality and a dynamic area of research. A wide range of research in this field is reported in recent literature reviews [20,21,22,23].

In this light, HSI has become a popular technique, applied in a wide range of areas to identify internal and external features of the objects under study: to name a few, mineral mapping [24], atmospheric characterization [25], biological and medical issues [26], and food and agriculture [27,28]. HSI can capture fine spectral features through many narrow, contiguous spectral bands [29]; the larger the number of bands, the more detailed the spectral characteristics. However, not all bands contain relevant information or improve accuracy, so removing redundant wavebands facilitates the analysis [30]. Detailed spectral information facilitates the detection of subtle changes and improves accuracy, which is crucial for the early detection of plant diseases [31].

HSI offers significant advantages for monitoring tasks. Its use in the agri-food sector has been driven by the need to improve product quality and to provide automated, non-destructive methods at all stages that identify potential issues, including plant diseases, early enough to provide a solution [32]. In addition, HSI generates huge amounts of complex data that need to be analysed. This is a resource-intensive and time-consuming task for which machine learning (ML) techniques, especially deep learning, are well suited [33,34,35]. During the last few decades, the use of ML in all fields of science has skyrocketed. ML comprises a wide range of algorithms that allow computer systems to learn from data and identify patterns in order to infer outcomes. The authors of [36,37] presented comprehensive reviews of food science and safety applications of neural networks and of general ML techniques, respectively.

With regard to the aim of our study, the identification of Aspergillus flavus is challenging due to its morphological and even genetic variability [3,4]. As previously mentioned, the aflatoxins produced by A. flavus, in particular aflatoxin

, are very harmful to animals and humans. Their detection is essential to prevent contaminated food from entering the food chain [38].

Siedliska et al. [39] applied HSI with two different cameras, VNIR (400–1000 nm) and SWIR (1000–2500 nm), to strawberries inoculated with the fungal pathogens Botrytis cinerea and Colletotrichum acutatum (900 samples of each type) and to healthy controls (900 samples). Images of each set of strawberries were taken every 24 hours after inoculation. Based on the second derivative of the original spectra, they selected 19 wavelengths. They carried out two experiments using FCNN, random forest (RF) and SVM models, obtaining the best results with the FCNN model.

In [40], the authors presented an extensive literature review of studies that used HSI with different classifiers to identify fungal- and mycotoxin-contaminated individual grain kernels. The authors of [41] addressed the detection of aflatoxin in peanuts using a deep convolutional neural network (CNN) and achieved an accuracy of

. In a later work [42], they also included corn, using a dimensional CNN, with accuracies of

and

for peanut and corn, respectively. Similarly, the authors of [43] recently addressed the identification of mycotoxins produced by Aspergillus flavus in peanuts and corn using six ML models. With an accuracy of

in distinguishing between healthy and infected samples, the authors pointed out that better data processing and further analysis are needed.

Kim et al. [44] presented a method based on hyperspectral fluorescence, VNIR or SWIR imaging combined with machine learning algorithms to detect aflatoxin contamination in ground maize. The highest accuracy and generalization were achieved by combining SWIR imaging with an SVM model.

Contamination in figs has mostly been addressed in dried figs. To mention a few recent works, in [45] the authors analysed UV-light images using a deep CNN approach to detect and classify aflatoxin in dried figs. They fine-tuned pre-trained models and obtained a validation accuracy of

. The same authors [46] proposed a real-time method applying pre-trained models for deep feature extraction and classification, with images acquired using light sources of different wavelengths. The best performance was achieved by SVM, with accuracies of

and

for contaminated and non-contaminated samples, respectively.

Our study focuses on fresh figs, which are also consumed and therefore enter the food chain, posing a risk to human health. Moreover, detection before drying eliminates the risk at an earlier stage.

3. Materials and Methods

This section describes the methodology applied to carry out this research work.

Figure 1 represents the general methodology scheme, where the main steps are defined.

This study uses images of figs collected from a plantation of the Calabacita fig variety at the "Finca La Orden-Valdesequera" (38°51′ N, 6°40′ W, altitude 184 m, Guadajira, Spain), where CICYTEX has its headquarters. This area has a Mediterranean climate with dry, hot summers; between 2000 and 2022, the average annual temperature was 16.28 °C, rainfall 447.08 mm and reference evapotranspiration 1296.15 mm. The soil texture was loamy loam and the farm was equipped with an irrigation system. The following section details the procedure used to acquire the hyperspectral images.

3.1. Image Acquisition

The images were taken with a SPECIM FX10 VNIR hyperspectral camera, whose main technical characteristics are: a spatial resolution of 1024 pixels, a spectral range from 400 nm to 1000 nm (VIS and part of the NIR), 448 spectral bands and a spectral sampling interval of .

We worked with a set of 320 figs harvested from the plantation described above. Hyperspectral images were taken over 2 weeks, each week using 160 figs harvested at different stages of maturity. Each week, the 160 figs were divided into four subsets of 40 figs. The first subset corresponds to the healthy controls (class 0); the other three subsets were inoculated with concentrations of 10³ UFC/ml (class 1), 10⁵ UFC/ml (class 2) and 10⁷ UFC/ml (class 3), respectively. Inoculation with A. flavus was performed by immersion, which produces a surface distribution similar to that of natural aflatoxin contamination. Hyperspectral images were taken every 24 hours after inoculation, for five days, on the figs harvested during the current week, according to the following protocol. Each day, one fig from each inoculated class was removed for chemical analysis to test for the presence of the toxin. Thus, the number of figs in each class, except the healthy controls, decreased by one unit per day (from 40 on the first day to 36 on the last). Each class consists of 380 hyperspectral images, giving a total of 1900 hyperspectral images.

Once acquired, the images were pre-processed with black/white correction to remove noise due to particle variation and to compensate for uneven light distribution. The black and white references are acquired with the camera during hyperspectral imaging.
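For readers unfamiliar with this step, black/white correction is usually computed per pixel and band as R = (I − D)/(W − D), where I is the raw image, W the white reference and D the dark (black) reference. The following NumPy sketch assumes that standard formula; the function name, the epsilon guard and the final clipping to [0, 1] are illustrative assumptions rather than details of the authors' pipeline.

```python
import numpy as np


def black_white_correction(raw, white, dark, eps=1e-8):
    """Convert raw sensor counts into relative reflectance.

    raw:   (rows, cols, bands) raw hyperspectral cube
    white: white reference, broadcastable to raw (e.g. shape (1, cols, bands))
    dark:  dark (black) reference, broadcastable to raw
    """
    raw = np.asarray(raw, dtype=np.float64)
    white = np.asarray(white, dtype=np.float64)
    dark = np.asarray(dark, dtype=np.float64)
    # Guard against division by zero where the two references coincide.
    denom = np.maximum(white - dark, eps)
    reflectance = (raw - dark) / denom
    # Assumption: clip to [0, 1] so that noise cannot push values out of range.
    return np.clip(reflectance, 0.0, 1.0)


# Usage with synthetic values (hypothetical, not camera data):
dark = np.full((1, 4, 448), 100.0)
white = np.full((1, 4, 448), 4100.0)
raw = np.full((3, 4, 448), 2100.0)
refl = black_white_correction(raw, white, dark)  # every value is 0.5
```

Broadcasting a single reference line over all rows mirrors how push-broom cameras such as the FX10 usually record references, but this is an assumption about the acquisition setup.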

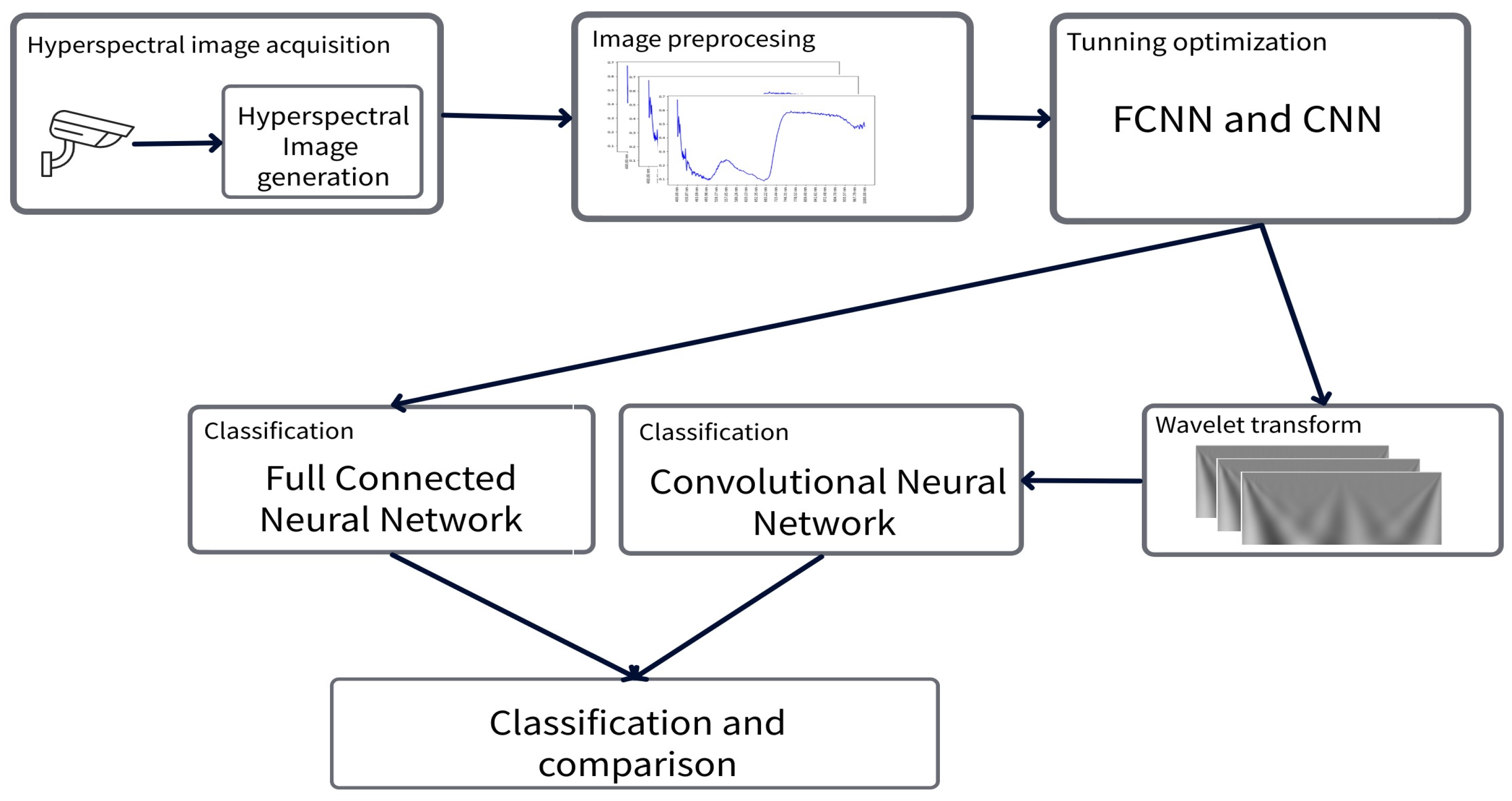

As the inoculation procedure was carried out by immersion, the region of interest (ROI) was not the entire surface of the hyperspectral image, but rather the lower part of each fig image.

Given that our study works at the pixel level, it was necessary to delimit the areas from which pixels were to be extracted. To this end, pixel masking was carried out to identify the infected regions and extract pixels only from these regions. This task was performed with the Labelme software¹, a free and open-source image annotation tool developed in Python and widely used to label different types of images and generate masks.

Figure 2 shows an example of mask generation and labelling for a set of figs.
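Labelme stores each annotation as a JSON file whose `shapes` list contains polygons (`points` given as [x, y] pairs) together with `imageHeight` and `imageWidth`. As a sketch of how such a file can be turned into a binary ROI mask without extra dependencies, the code below rasterises the polygons with the even-odd rule. The helper names are hypothetical, and Labelme provides its own conversion utilities, which may treat boundary pixels slightly differently.

```python
import json

import numpy as np


def polygon_to_mask(points, height, width):
    """Rasterise a polygon given as [[x, y], ...] into a boolean mask.

    Uses the even-odd (ray casting) rule at integer pixel coordinates.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    pts = np.asarray(points, dtype=np.float64)
    inside = np.zeros((height, width), dtype=bool)
    for i in range(len(pts)):
        x1, y1 = pts[i]
        x2, y2 = pts[(i + 1) % len(pts)]
        # Does this edge cross the horizontal line through each pixel?
        crosses = (y1 > ys) != (y2 > ys)
        with np.errstate(divide="ignore", invalid="ignore"):
            x_cross = x1 + (ys - y1) * (x2 - x1) / (y2 - y1)
        # Toggle pixels lying to the left of the crossing point.
        inside ^= crosses & (xs < x_cross)
    return inside


def labelme_mask(annotation, label=None):
    """Union of all polygon shapes (optionally only those with `label`)."""
    h, w = annotation["imageHeight"], annotation["imageWidth"]
    mask = np.zeros((h, w), dtype=bool)
    for shape in annotation["shapes"]:
        if shape.get("shape_type", "polygon") != "polygon":
            continue
        if label is not None and shape["label"] != label:
            continue
        mask |= polygon_to_mask(shape["points"], h, w)
    return mask


def load_labelme(path):
    """Read one Labelme JSON annotation file."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```

A typical call would be `labelme_mask(load_labelme(path))`, where `path` points to the annotation of one fig image.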

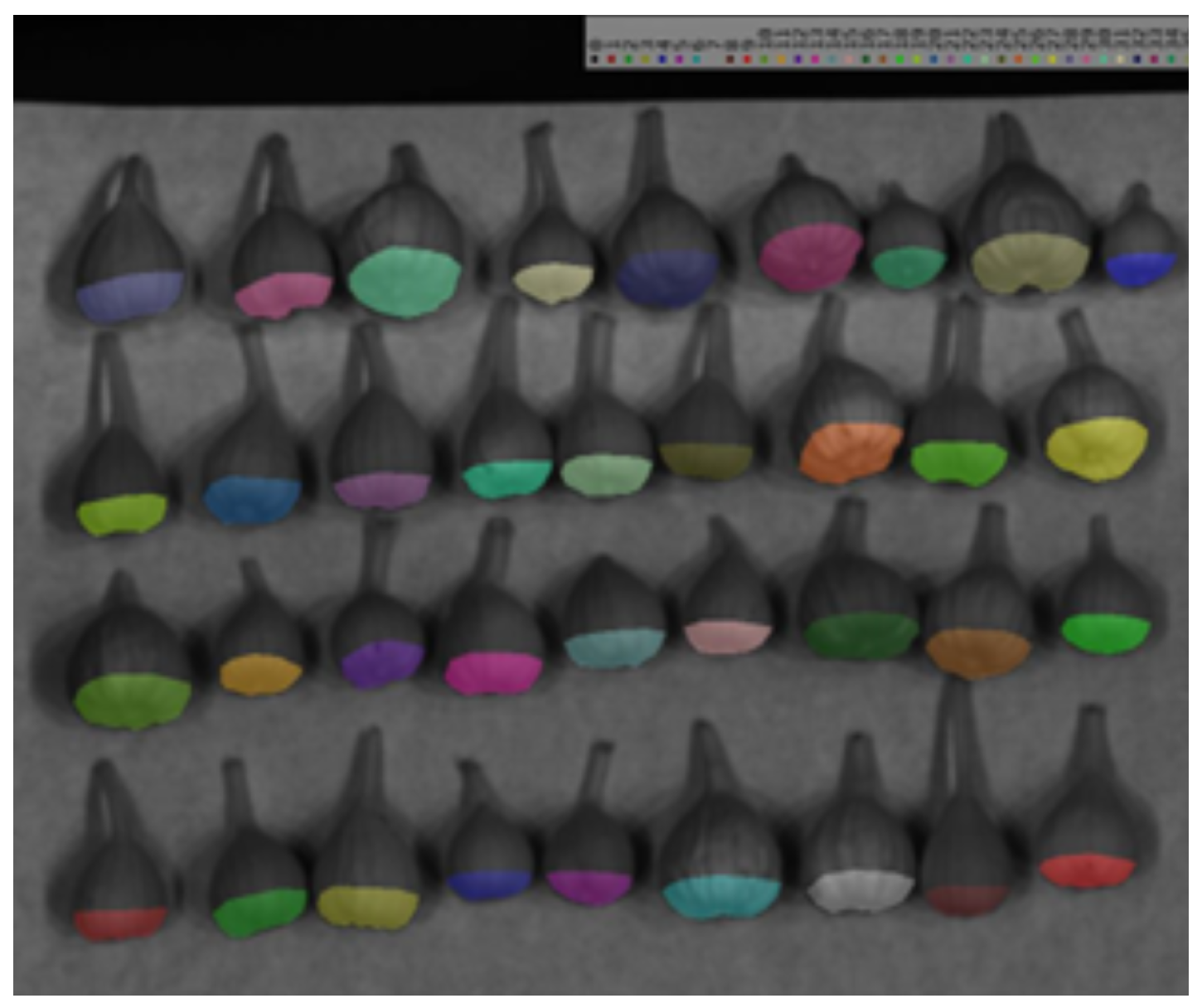

For every image, the pixels in the ROI are extracted using their coordinates. Each pixel was extracted with its 448 spectral bands as features, and a new feature was added indicating the class to which it belongs. Additional information was also included to make it easier to identify each pixel.

Figure 3 shows the shape of a fig pixel and its hyperspectral signature.

As a result, our dataset consists of hyperspectral pixels with 450 features (448 spectral bands, class label and pixel identification).
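The extraction step can be sketched as follows, assuming that each corrected image is held as a NumPy array of shape (rows, columns, 448) and its ROI as a boolean mask. The way the pixel identifier is encoded here (image index followed by row-major position) is a hypothetical choice, since the paper does not specify it.

```python
import numpy as np


def extract_pixels(cube, mask, class_label, image_index):
    """Build rows of [448 band values, class label, pixel id] for masked pixels.

    cube: (rows, cols, bands) reflectance cube; mask: (rows, cols) boolean ROI.
    The pixel id encoding below is a hypothetical choice for illustration.
    """
    height, width, _ = cube.shape
    rows, cols = np.nonzero(mask)
    spectra = cube[rows, cols, :].astype(np.float64)        # (n_pixels, bands)
    labels = np.full((rows.size, 1), float(class_label))     # class column
    # Unique id across images of equal size: image index, then row-major position.
    pixel_ids = (image_index * height * width + rows * width + cols)[:, None]
    return np.hstack([spectra, labels, pixel_ids.astype(np.float64)])
```

Stacking the outputs of all images with `np.vstack` then yields the 450-column dataset described above.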

3.2. Data Analysis

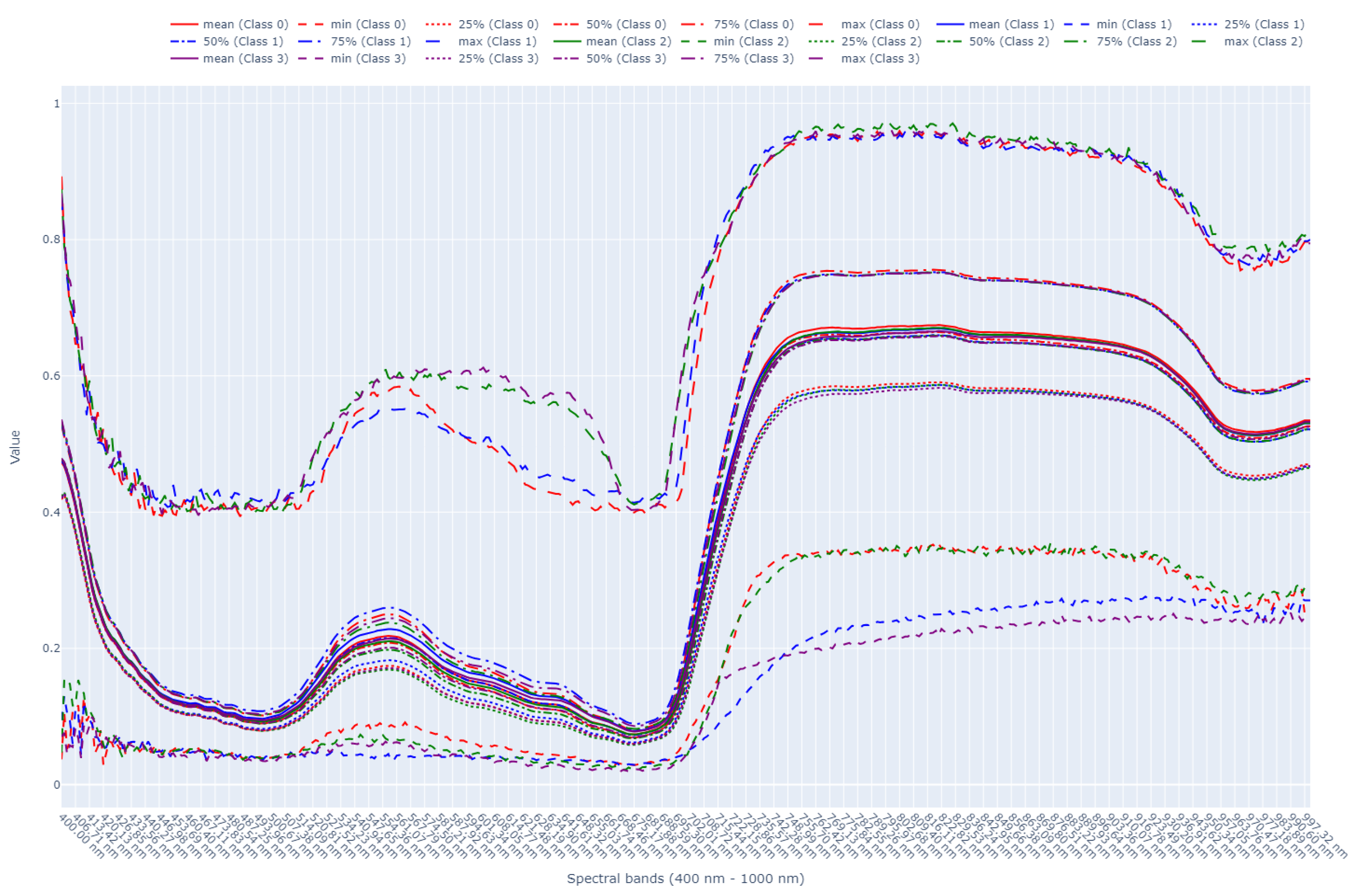

Deep learning (DL) has become an innovative, non-intrusive method for image classification. However, before applying any AI technique, it is necessary to analyse the data being used. This section presents a brief statistical analysis before addressing the main part of this work. Considering each spectral band as a numerical predictor variable, all spectral bands take values between 0 and 1, as expected. Three band ranges stand out from the rest in terms of their standard deviation: bands close to the ultraviolet (400–415 nm), bands between blue and orange (526.17–623 nm), and bands close to the red and infrared channels (700–1000 nm). The distribution in the first spectral bands appears symmetrical, with only a slight difference between the mean and the median. From 500 nm onwards, the distribution is slightly skewed towards high values.

Next, the distribution of each spectral band was analysed by class. To this end, the dataset was separated by category, and the 10th, 25th, 50th (median), 75th and 90th percentiles were calculated along with the maximum and minimum values. The aim was to find differences in the distribution of the bands across classes. We conclude that the maximum values are very similar for all classes, and differences are present between

and

. Regarding minimum values, we observe differences in the previously mentioned band ranges (400–415 nm, 526.17–623 nm and 700–1000 nm).

Figure 4 shows a comparison of these distributions.
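The per-band summary statistics and class-wise percentiles described above can be computed with a few NumPy calls. This is a minimal sketch assuming that the pixels are stored in an array `X` of shape (n_pixels, 448) and the class labels in a vector `y`; these names are illustrative.

```python
import numpy as np

PERCENTILES = [10, 25, 50, 75, 90]


def band_statistics(X):
    """Per-band mean, median and standard deviation; X has shape (n_pixels, n_bands)."""
    return {
        "mean": X.mean(axis=0),
        "median": np.median(X, axis=0),
        "std": X.std(axis=0),
    }


def percentiles_by_class(X, y):
    """Map each class to a (7, n_bands) array: min, P10, P25, P50, P75, P90, max."""
    summary = {}
    for c in np.unique(y):
        Xc = X[y == c]
        q = np.percentile(Xc, PERCENTILES, axis=0)   # shape (5, n_bands)
        summary[int(c)] = np.vstack([Xc.min(axis=0), q, Xc.max(axis=0)])
    return summary
```

Comparing `mean` and `median` band by band gives a quick indication of the asymmetry noted above: a mean above the median suggests skew towards high values.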

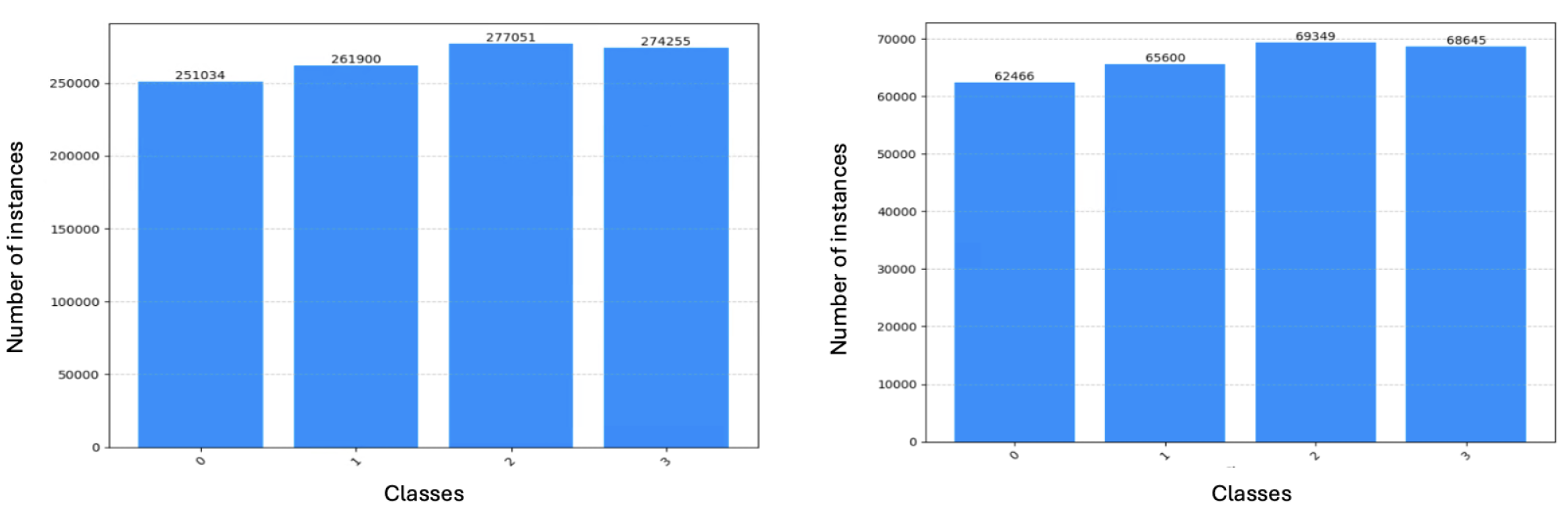

With regard to the number of instances per class, the classes are balanced, with , , and pixels belonging to classes 0, 1, 2 and 3, respectively.

The dataset was randomly divided into two subsets: 80% for the training set (

) and 20% for the test set (

). In addition, 20% of the training instances were randomly selected for validation (

) to avoid over-fitting. Before running the different algorithms, we checked whether the data were balanced in both the training and test sets.

Figure 5 shows the balanced distribution of instances per class in the training and test sets. The next section details the DL techniques used to address this problem from a comparative point of view.
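A minimal sketch of the splitting scheme, assuming a plain random (non-stratified) permutation as described: holding out 20% for testing and then 20% of the remainder for validation leaves 64%, 16% and 20% of the pixels for training, validation and testing, respectively. The seed and function names are hypothetical.

```python
import numpy as np


def split_indices(n, test_frac=0.2, val_frac=0.2, seed=42):
    """Random (non-stratified) train/validation/test split of n instances."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n)
    n_test = int(round(test_frac * n))
    test, train_full = idx[:n_test], idx[n_test:]
    n_val = int(round(val_frac * train_full.size))     # 20% of the training part
    val, train = train_full[:n_val], train_full[n_val:]
    return train, val, test


def class_counts(y, idx):
    """Number of instances per class within a subset, to check the balance."""
    classes, counts = np.unique(y[idx], return_counts=True)
    return dict(zip(classes.tolist(), counts.tolist()))
```

Calling `class_counts` on the training and test indices reproduces the balance check summarised in Figure 5.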

3.3. Deep Learning Techniques

This comparative analysis has been carried out with two types of DL models that have demonstrated their performance in a variety of research works. As DL models are highly sensitive to hyperparameter values, a hyperparameter search was carried out to find the optimal values for each type of model, as detailed in Section 3.4.1. The DL models chosen for this study were:

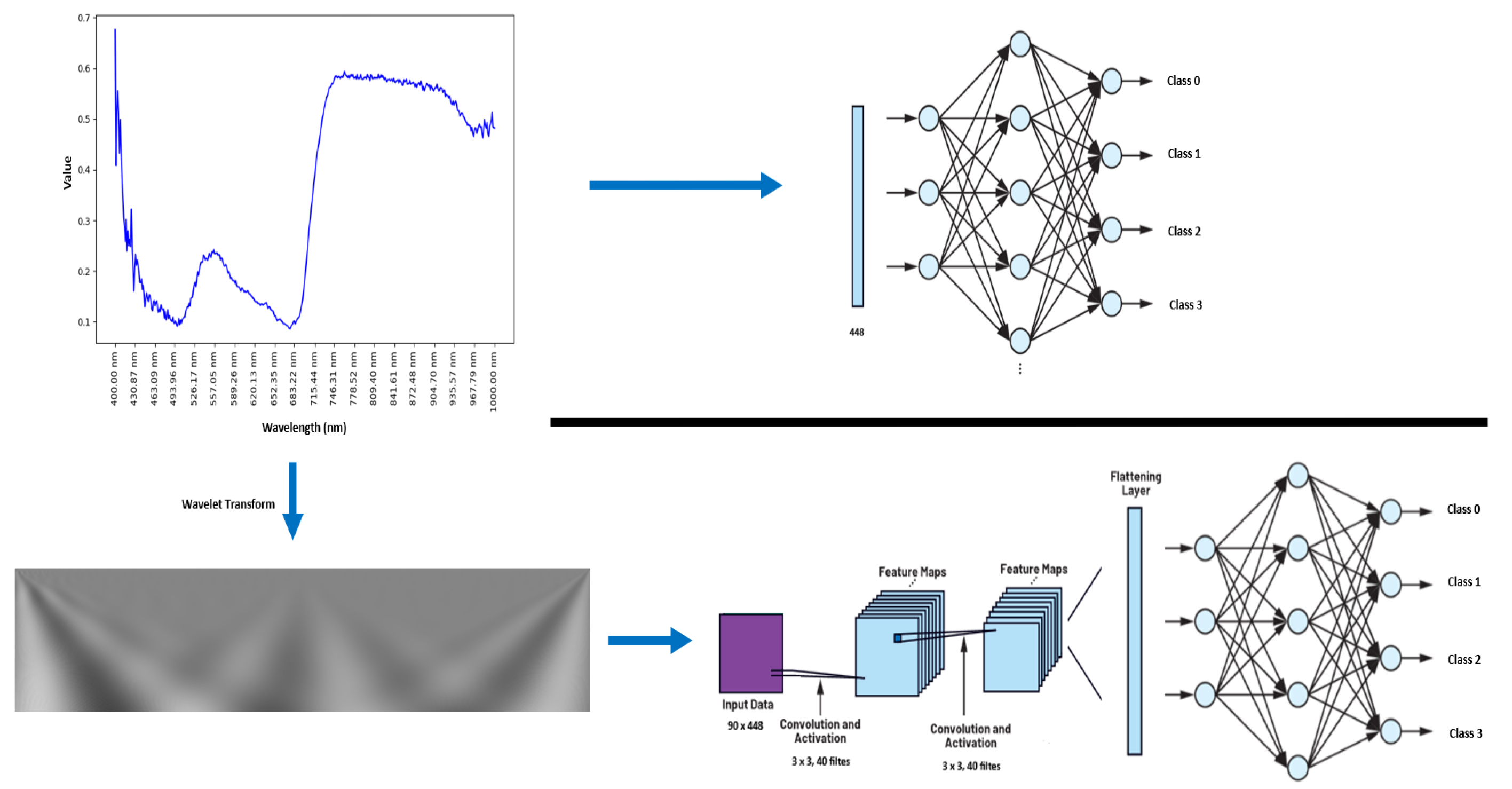

3.3.1. FCNN

This model has an input layer of 448 neurons, one per band of the pixel's spectral signature. The number of hidden layers and the number of neurons in each are determined by the hyperparameter search. The output layer has 4 neurons, one per class, with the softmax activation function, which yields a probability for each class; the predicted class is the one with the highest probability.
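To make the data flow concrete, the sketch below implements the forward pass of such a network in NumPy: 448 inputs, ReLU hidden layers and a 4-neuron softmax output. It is not the authors' TensorFlow model; the hidden-layer sizes in the usage lines are placeholders, since the tuned values come from the hyperparameter search, and dropout is omitted because it only acts during training.

```python
import numpy as np


def softmax(z):
    """Row-wise softmax; subtracting the row maximum avoids overflow."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def init_fcnn(layer_sizes, seed=0):
    """Random weights for a fully connected network, e.g. [448, ..., 4]."""
    rng = np.random.default_rng(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        # He initialisation, a common choice for ReLU hidden layers.
        W = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
        params.append((W, np.zeros(n_out)))
    return params


def fcnn_forward(params, X):
    """X: (n_pixels, 448) spectra -> (n_pixels, 4) class probabilities."""
    h = X
    for W, b in params[:-1]:
        h = np.maximum(h @ W + b, 0.0)   # ReLU hidden layers
    W, b = params[-1]
    return softmax(h @ W + b)            # softmax output layer


# Usage with placeholder hidden sizes (hypothetical, not the tuned architecture):
params = init_fcnn([448, 256, 128, 4])
pixels = np.random.default_rng(1).random((5, 448))   # 5 synthetic spectra
probs = fcnn_forward(params, pixels)                  # shape (5, 4), rows sum to 1
predicted = probs.argmax(axis=1)
```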

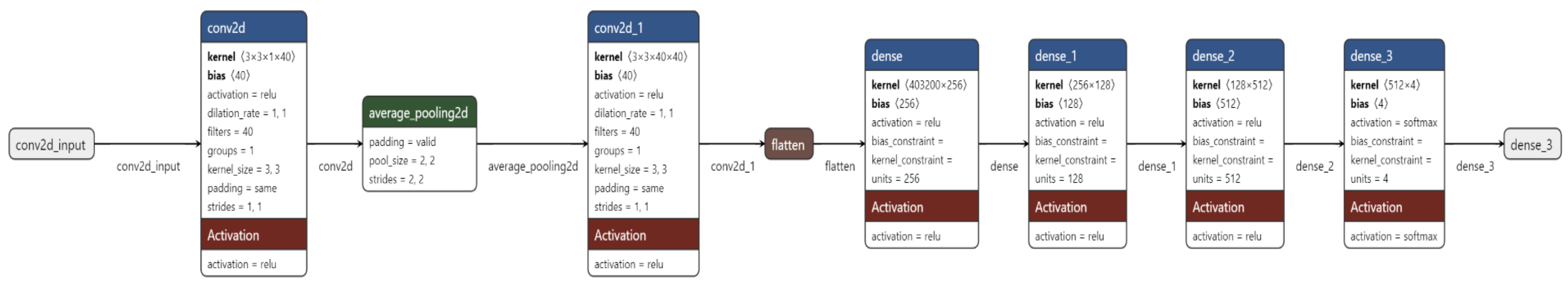

3.3.2. CNN-FCNN Pipeline

In this model, the input layer receives a 2D image (90 px high and 448 px wide) obtained by applying the continuous wavelet transform to each pixel's spectral signature to extract its frequency information. The wavelet transform also facilitates image compression due to its ability to localize information in both time and frequency. The number of intermediate convolutional layers, the number of hidden layers and their neurons, the activation function and the pooling technique are determined by a hyperparameter search. The output layer has 4 neurons with the softmax activation function.

Wavelet analysis can provide frequency-domain information about the hyperspectral pixel, revealing details that are missed in the original (spectral) domain. The selection of the mother wavelet is crucial to obtain smooth continuous wavelet amplitude and phase information. Since the complex Morlet wavelet is a non-orthogonal complex exponential wavelet tapered by a Gaussian, it meets both requirements [47]. The complex Morlet wavelet, represented by Equations (1) and (2), is effective for image processing and was therefore chosen as the mother wavelet.
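As an illustration of how a 90 × 448 image can be obtained from a 448-band spectral signature, the NumPy sketch below computes the magnitude of a continuous wavelet transform with a complex Morlet mother wavelet, using the common textbook form ψ(t) = π^(−1/4) e^(iω0 t) e^(−t²/2) and W(s, b) = s^(−1/2) Σ_t x(t) ψ*((t − b)/s). The value ω0 = 6, the 90 geometrically spaced scales and the use of the magnitude only are assumptions; the authors' implementation and normalisation may differ.

```python
import numpy as np


def complex_morlet(t, w0=6.0):
    """Complex Morlet wavelet: a complex exponential tapered by a Gaussian.

    The small admissibility correction term is omitted, as is usual for w0 >= 5.
    """
    return np.pi ** -0.25 * np.exp(1j * w0 * t) * np.exp(-0.5 * t ** 2)


def cwt_scalogram(signal, scales, w0=6.0):
    """Magnitude of the continuous wavelet transform of a 1-D signal.

    Returns an array of shape (len(scales), len(signal)): one image row per
    scale, so 90 scales and 448 bands give a 90 x 448 image.
    """
    x = np.asarray(signal, dtype=np.float64)
    n = x.size
    image = np.empty((len(scales), n))
    for i, s in enumerate(scales):
        half = int(np.ceil(4 * s))                 # +/- 4 sigma of the Gaussian envelope
        t = np.arange(-half, half + 1) / s
        kernel = np.conj(complex_morlet(t, w0)) / np.sqrt(s)
        # W(s, b) = sum_t x[t] * conj(psi((t - b) / s)) / sqrt(s) is a
        # correlation, computed here as a convolution with the reversed kernel.
        full = np.convolve(x, kernel[::-1], mode="full")
        image[i] = np.abs(full[half:half + n])    # keep outputs aligned with x
    return image


# Usage on a synthetic 448-band signature (hypothetical scales, not the authors'):
scales = np.geomspace(2, 128, 90)
spectrum = np.random.default_rng(0).random(448)
image = cwt_scalogram(spectrum, scales)           # shape (90, 448)
```

Using `mode="full"` and slicing, rather than `mode="same"`, keeps the output length equal to the signal length even when the wavelet at large scales is longer than the 448-band signature.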

Figure 6 shows the architecture models for both approaches.

3.4. Sustainability Analysis

In our view, researchers need to understand the environmental impact of their AI models. Training AI models requires a huge amount of computing power, which can consume large amounts of energy and generate large amounts of carbon dioxide emissions. In this work, we take into account the impact of the proposed solutions during the training phase. CodeCarbon and Green Algorithms are tools that estimate energy consumption and emissions separately for memory, CPU and GPU.

CodeCarbon tracks carbon dioxide (CO2) emissions from machine learning experiments, estimating the carbon footprint in kilograms of CO2 equivalent (kg CO2eq) from the energy consumed (kWh) and the carbon intensity of the electricity used (kg CO2eq per kWh). This tool helps estimate and minimise the environmental impact of AI models by providing data on energy consumption and greenhouse gas emissions [48]. Recent studies [49] confirm that CodeCarbon provides values close to those measured with a wattmeter. In this way, both the energy consumed by memory, CPU and GPU during the training phase of the models and the CO2 emissions were evaluated. The results are presented in Section 4.
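The core of such estimates is a simple product: emissions (kg CO2eq) = energy consumed (kWh) × carbon intensity of the electricity (kg CO2eq/kWh), and the emission rate is the emissions divided by the training time. The sketch below reproduces only this arithmetic with hypothetical figures. In practice CodeCarbon does the bookkeeping itself: its `EmissionsTracker` class provides `start()` and `stop()`, and `stop()` returns the estimated emissions in kg CO2eq.

```python
def emissions_kg(energy_kwh, carbon_intensity_kg_per_kwh):
    """Emissions (kg CO2eq) = energy (kWh) x grid carbon intensity (kg CO2eq/kWh)."""
    return energy_kwh * carbon_intensity_kg_per_kwh


def summarise_run(energy_by_component_kwh, duration_hours, carbon_intensity):
    """Total energy, emissions and hourly emission rate for one training run."""
    total_energy = sum(energy_by_component_kwh.values())
    total_emissions = emissions_kg(total_energy, carbon_intensity)
    return {
        "energy_kwh": total_energy,
        "emissions_kg": total_emissions,
        "emission_rate_kg_per_h": total_emissions / duration_hours,
    }


# Hypothetical figures, not the values reported in Tables 6 and 10:
run = summarise_run({"cpu": 0.5, "gpu": 1.2, "ram": 0.3},
                    duration_hours=2.0, carbon_intensity=0.2)
```

Because the carbon intensity depends on the local electricity mix, the same training run can yield different emissions in different regions or at different times.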

3.4.1. Hyper-Parameter Search

A hyperparameter search was carried out for both network architectures to identify the values yielding the best performance. An accuracy value of

was defined as the threshold for moving to the next level of hyperparameter search within a particular network setting.

Table 1 shows the hyperparameters and values tested for the FCNN and CNN approaches. We used random search over 100 hyperparameter combinations for the FCNN approach and 40 for the CNN; the difference is due to the much longer training time of the CNN.

For both architectures, the Adam algorithm was used as the optimiser and categorical cross-entropy as the loss function.
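A random search of this kind can be sketched as follows. The search space shown is hypothetical (Table 1 lists the values actually tested), and `evaluate` stands for a user-supplied function that trains a network with a given configuration and returns its validation accuracy; configurations reaching the threshold are returned so that they can be refined in a further round.

```python
import random

# Hypothetical search space; Table 1 lists the values actually tested.
SEARCH_SPACE = {
    "n_hidden_layers": [2, 4, 6, 8],
    "units": [64, 128, 256, 512],
    "dropout": [0.1, 0.2, 0.3, 0.4],
    "learning_rate": [1e-2, 1e-3, 1e-4],
    "batch_size": [32, 64, 128],
}


def sample_config(space, rng):
    """Draw one value uniformly at random for every hyperparameter."""
    return {name: rng.choice(values) for name, values in space.items()}


def random_search(evaluate, space, n_trials, threshold, seed=0):
    """Evaluate n_trials random configurations.

    Returns the best (accuracy, config) pair and the configurations whose
    accuracy reaches `threshold`, which can be refined in a further round.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(n_trials):
        config = sample_config(space, rng)
        results.append((evaluate(config), config))
    results.sort(key=lambda item: item[0], reverse=True)
    promising = [config for score, config in results if score >= threshold]
    return results[0], promising


# e.g. random_search(train_and_validate, SEARCH_SPACE, n_trials=100, threshold=...)
# where train_and_validate is a hypothetical training routine.
```

Random search samples each configuration independently, so its cost grows linearly with the number of trials, which is why fewer trials are affordable for the slower CNN.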

4. Experimental Results

This section presents and analyses the results obtained for each model, individually and comparatively. Furthermore, the training time, energy consumption and carbon footprint of the training phase are compared.

The FCNN and CNN models in this research were implemented with the TensorFlow library (version

) and Python (version

) as the programming language.

Table 2 shows the technical information about the computer systems used.

The results are evaluated using a set of well-known metrics. This choice is not arbitrary: it responds to the need to capture different aspects of the performance of the classification models.

Accuracy is the percentage of correct predictions; it is informative when the data are balanced, as ours are.

Precision measures the proportion of instances correctly classified as positive out of all instances predicted as positive. A high precision indicates few false positives.

Recall measures the proportion of actual positive instances that are correctly predicted as positive. A high recall indicates few false negatives.

The F-measure (F1-score) is the harmonic mean of precision and recall, balancing both in a single metric.

In addition, the confusion matrix is provided to help understand how the models perform and the nature of the errors (false positives or false negatives), and gives us a general overview to improve the models.
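All four metrics can be derived from the confusion matrix. The sketch below assumes the layout used in this paper (rows are actual classes, columns predicted classes) and computes per-class precision, recall and F1 together with the overall accuracy; a class that is never predicted would produce a division by zero, which this minimal version does not handle.

```python
import numpy as np


def metrics_from_confusion(cm):
    """Accuracy plus per-class precision, recall and F1 from a confusion matrix.

    cm[i, j] counts instances of actual class i predicted as class j
    (rows = actual class, columns = predicted class).
    """
    cm = np.asarray(cm, dtype=np.float64)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp    # predicted as the class, but actually another class
    fn = cm.sum(axis=1) - tp    # actually the class, but predicted as another class
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)   # harmonic mean
    accuracy = tp.sum() / cm.sum()
    return accuracy, precision, recall, f1
```

For example, with two classes and the matrix [[8, 2], [1, 9]], class 0 has precision 8/9 and recall 0.8, and the overall accuracy is 17/20.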

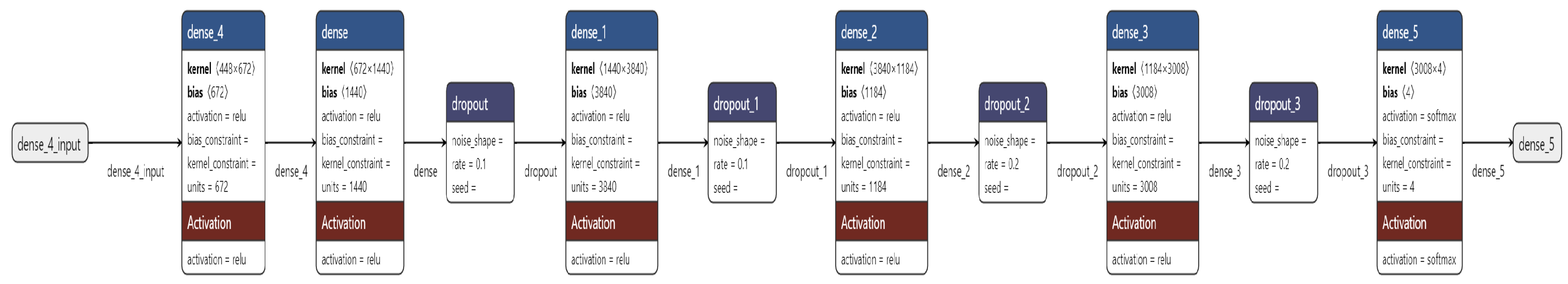

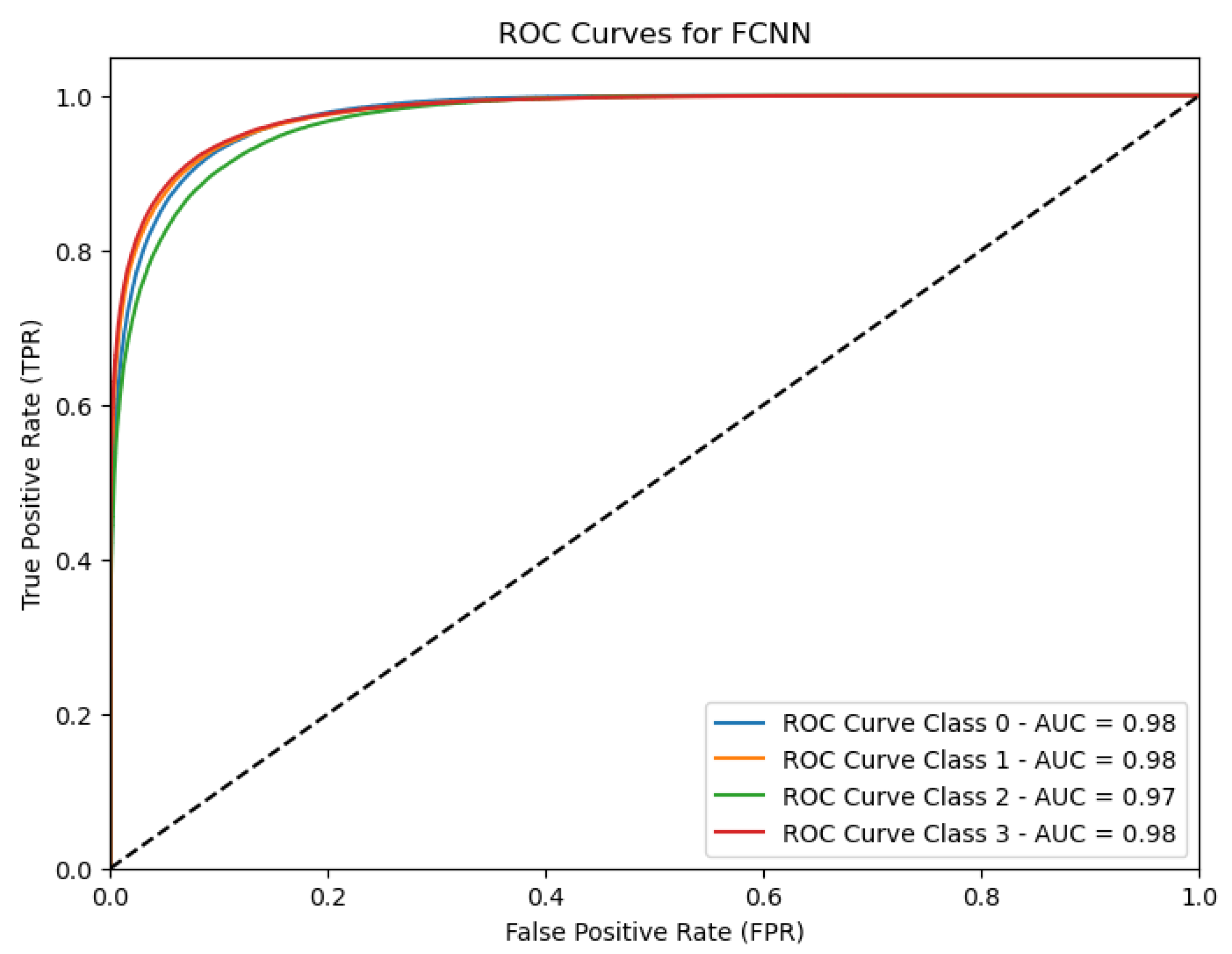

4.1. FCNN Results

As a result of the hyperparameter search, the selected network architecture consists of 8 layers: 1 input layer, 6 hidden layers and 1 output layer. Dropout was applied between the hidden layers, with the dropout rate increasing with network depth, and ReLU was chosen as the activation function.

Figure 7 shows the FCNN settings and Table 3 details additional information about the architecture structure.

The results obtained with this approach are shown in Table 4, which presents the confusion matrix, with columns representing the predicted class and rows the actual class. To clarify the results, Table 5 shows the values of all the metrics used to evaluate the model.

The analysis of the results shows that the FCNN model performs well. On average,

of instances are correctly classified. Next, each metric is analysed individually. For classes 1, 2 and 3, which correspond to different A. flavus inoculum concentrations, precision is high, i.e., there are few false positives. Class 0 represents the healthy controls; its precision is lower and, consequently, it has more false positives than the other classes. Because there are few false negatives for class 0, this class has a higher recall than the others. The F1-measure is the harmonic mean of precision and recall. Classes 1, 2 and 3, which represent the inoculated instances, obtain similarly good results, and only class 0 obtains a lower value due to its low precision. We conclude that our model, based on the spectral signature of individual pixels, identifies inoculated cases with high values for every metric but performs worse for healthy controls. To complete this analysis, we assessed the quality of the classification by generating the ROC curve for each class and computing the AUC of each curve.

Figure 8 plots the ROC curve obtained for each class. Although further research is needed, the FCNN approach obtained high AUC values.
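For a multi-class model, a ROC curve and its AUC are obtained per class in a one-vs-rest fashion, using the softmax probability of that class as the score. The sketch below computes the AUC through its rank interpretation (the probability that a randomly chosen positive instance scores higher than a randomly chosen negative one). It builds an n_pos × n_neg comparison matrix, so for large pixel sets a library routine such as scikit-learn's `roc_auc_score` is preferable.

```python
import numpy as np


def auc_binary(scores, positives):
    """AUC as the probability that a random positive outscores a random negative.

    Equivalent to the normalised Mann-Whitney U statistic; ties count as 1/2.
    """
    scores = np.asarray(scores, dtype=np.float64)
    positives = np.asarray(positives, dtype=bool)
    pos, neg = scores[positives], scores[~positives]
    greater = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    return (greater + 0.5 * ties) / (pos.size * neg.size)


def one_vs_rest_auc(probs, y_true):
    """One AUC per class, scoring each class by its softmax probability."""
    probs = np.asarray(probs, dtype=np.float64)
    y_true = np.asarray(y_true)
    return [auc_binary(probs[:, c], y_true == c) for c in range(probs.shape[1])]
```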

In addition, we analysed the environmental cost of this solution during the training phase.

Table 6 presents the training time in hours, the CO2 emissions in kilograms of carbon dioxide equivalent (kg CO2eq) and the energy consumption (kWh) for RAM, CPU and GPU. The emission rate, measured in kg CO2eq per hour, provides a more detailed view of the environmental impact per unit of time. Finally, energy consumption in kilowatt-hours (kWh) measures the amount of energy consumed during the training process. These metrics are important for assessing the environmental and energy costs associated with developing and training artificial intelligence models.

Although this experiment does not release a large amount of CO2 into the atmosphere, the measurements are an important part of improving the models and reducing their environmental impact.

4.2. CNN Model

The purpose of this experiment was to classify the images obtained after applying the wavelet transform. The network architecture used, shown in Figure 9, is the one that gave the best result in the hyperparameter search. It consists of an input layer whose size equals that of the image (90 px high and 448 px wide), a combination of convolutional and fully connected layers (detailed in Table 7), and an output layer with softmax as the activation function.

Due to its computational requirements, this experiment was run on an NVIDIA A100-PCIE-40GB GPU. The results obtained in the test phase are detailed in the confusion matrix in Table 8, which shows the correctly (main diagonal) and incorrectly predicted instances for each class. Based on these results, the precision, recall and F1-measure are computed in Table 9 to analyse the performance of the model for each class individually.

On average, of the instances are classified correctly, which is a relatively good performance. Next, we analyse each metric individually.

The best precision is obtained for class 3, with of the instances correctly identified. Class 3 corresponds to the highest inoculum concentration. Classes 0, 1 and 2 reached , and , respectively. These results show that higher inoculum concentrations are better identified, with fewer false positives. Regarding recall, all classes obtained a value , indicating that the model is able to identify the relevant instances (recall relates the true positives to the sum of true positives and false negatives). The highest value () is obtained for class 0 (healthy controls). The F1-measure values, i.e., the harmonic mean of precision and recall, are similar, all lying within [ - ]. This metric provides information about model reliability; although this issue needs to be explored further, the results are promising.

As for the FCNN model, the ROC curve is generated for each class and the AUC values are calculated.

Figure 10 shows the results obtained, where the AUC values reveal a high discriminative power of

,

,

and

for classes 0, 1, 2 and 3, respectively.

As for the FCNN model, we have evaluated the environmental impact of this proposal during the training phase.

Table 10 presents the same information as described previously: the training time in hours, the CO2 emissions in kilograms of carbon dioxide equivalent (kg CO2eq), the energy consumption (kWh) for RAM, CPU and GPU, and the emission rate, measured in kg CO2eq per hour.

This experiment releases a significant amount of CO2 into the atmosphere and consumes a lot of energy. Moreover, its emissions and energy consumption are much higher than those of the FCNN, even though the FCNN obtains better classification metrics. This information must be taken into account in further planned research in order to reduce environmental impact.

5. Conclusions

In conclusion, this study demonstrates the feasibility of early detection of Aspergillus flavus toxin in fresh figs using hyperspectral imaging combined with deep learning techniques, without the use of invasive techniques. The FCNN model achieved an accuracy of , while the CNN model performed slightly lower, with accuracy. These results demonstrate the effectiveness of pixel-level analysis in identifying the presence of the fungus, both across different days and different contamination concentrations. This evidence supports the viability of the non-invasive techniques applied in improving food safety.

Although both approaches were relatively successful, the FCNN network exhibited superior performance in classifying samples inoculated with different concentrations, while the CNN encountered difficulties in distinguishing between the different classes. Moreover, the detection of healthy figs was challenging for both models, with a higher number of false positives. This suggests the need for improved algorithms to reduce errors and improve the classification of uncontaminated samples. The models presented in this paper have considerable scope for improvement, but they represent a robust and highly promising starting point.

This work also underscores the need to assess the environmental impact of AI models. During training, the CNN network exhibited considerably higher energy consumption and CO2 emissions than the FCNN network. This highlights the need to strike a balance between technical performance and environmental sustainability in future studies. Further research is therefore suggested into optimising models to enhance their accuracy and reduce their ecological impact.

Author Contributions

Conceptualization, Josefa Díaz-Álvarez and Francisco Chávez de la O; Data curation, Cristian Cruz-Carrasco and Abel Sánchez-Venegas; Formal analysis, Cristian Cruz-Carrasco, Josefa Díaz-Álvarez, Francisco Chávez de la O and Juan Villegas-Cortéz; Funding acquisition, Josefa Díaz-Álvarez and Francisco Chávez de la O; Investigation, Cristian Cruz-Carrasco, Josefa Díaz-Álvarez, Francisco Chávez de la O and Abel Sánchez-Venegas; Methodology, Josefa Díaz-Álvarez and Francisco Chávez de la O; Project administration, Josefa Díaz-Álvarez; Resources, Josefa Díaz-Álvarez and Francisco Chávez de la O; Software, Cristian Cruz-Carrasco; Supervision, Josefa Díaz-Álvarez and Francisco Chávez de la O; Validation, Josefa Díaz-Álvarez, Francisco Chávez de la O and Juan Villegas-Cortéz; Writing – original draft, Cristian Cruz-Carrasco, Josefa Díaz-Álvarez, Francisco Chávez de la O, Abel Sánchez-Venegas and Juan Villegas-Cortéz; Writing – review & editing, Cristian Cruz-Carrasco, Josefa Díaz-Álvarez, Francisco Chávez de la O, Abel Sánchez-Venegas and Juan Villegas-Cortéz.

Funding

PJDA, FCO and JVC are supported by the Spanish Ministry of Science and Innovation under projects PID2023-147409NB-C22 and PID2020-115570GB-C21 funded by MCIN/AEI/10.13039/501100011033, and by Junta de Extremadura, project GR15068. FCO is also supported by the development and innovation project PID2020-117392RR-C41 funded by MCIN/AEI/10.13039/501100011033 and by “ERDF A way of making Europe”, and by the AGROS2022 project.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data and code will be made available once the ongoing research has been completed.

Conflicts of Interest

The authors declare that they have no competing interests.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| IARC | International Agency for Research on Cancer |
| HSI | Hyperspectral imaging |
| DL | Deep learning |
| FCNN | Fully connected neural network |
| CNN | Convolutional neural network |
| ML | Machine learning |
| VNIR | Visible and near-infrared |
| SWIR | Short-wave infrared |
| SVM | Support vector machine |
| UV | Ultraviolet |
| VIS | Visible |
| NIR | Near-infrared |
| UFC | Colony-forming unit |
| ROI | Region of interest |
| CWT | Continuous wavelet transform |
| AI | Artificial intelligence |
| CPU | Central processing unit |
| GPU | Graphics processing unit |
| ReLU | Rectified linear unit |
| AUC | Area under the curve |
| ROC | Receiver operating characteristic |
| CO2 | Carbon dioxide |
| kWh | Kilowatt-hour |
| RAM | Random access memory |

References

- International Agency for Research on Cancer (IARC). Agents Classified by the IARC Monographs. https://publications.iarc.fr/123, 2014. Online. (accessed on 26 May 2024).

- Kowalska, A.; Walkiewicz, K.; Kozieł, P.; Muc-Wierzgoń, M. Aflatoxins: characteristics and impact on human health. Advances in Hygiene and Experimental Medicine 2017, 71, 315–327. [Google Scholar] [CrossRef] [PubMed]

- Arastehfar, A.; Carvalho, A.; Houbraken, J.; Lombardi, L.; Garcia-Rubio, R.; Jenks, J.; Rivero-Menendez, O.; Aljohani, R.; Jacobsen, I.; Berman, J.; others. Aspergillus fumigatus and aspergillosis: From basics to clinics. Studies in Mycology 2021, 100, 100115. [Google Scholar] [CrossRef] [PubMed]

- Garcia-Giraldo, A.M.; Mora, B.L.; Loaiza-Castaño, J.M.; Cedano, J.A.; Rosso, F. Invasive fungal infection by Aspergillus flavus in immunocompetent hosts: A case series and literature review. Medical Mycology Case Reports 2019, 23, 12–15. [Google Scholar] [CrossRef] [PubMed]

- Ministerio de Agricultura. Encuesta sobre Superficies y Rendimientos de Cultivos. https://www.mapa.gob.es/es/estadistica/temas/estadisticas-agrarias/agricultura/esyrce/default.aspx, 2023. Online. Last access: 08/06/2024.

- FAO. FAO statistics. https://www.fao.org/faostat/es/#data/QCL, 2023. Last access: 08/01/2024.

- Jafari, M.; López-Corrales, M.; Galván, A.; Galván, A.; Hosomi, et al. Orchard establishment and management. In Sarkhosh, A., Yavari, A., Ferguson, L. (Eds.); 2022; pp. 184–230. [Google Scholar] [CrossRef]

- Galván, A.; Serradilla, M.; Córdoba, M.; Domínguez, G.; Galán, A.; López-Corrales, M. Implementation of super high-density systems and suspended harvesting meshes for dried fig production: Effects on agronomic behaviour and fruit quality. Scientia Horticulturae 2021, 281, 109918. [Google Scholar] [CrossRef]

- Abdolahipour, M.; Kamgar-Haghighi, A.A.; Sepaskhah, A.R.; Zand-Parsa, S.; Honar, T.; Razzaghi, F. Time and amount of supplemental irrigation at different distances from tree trunks influence on morphological characteristics and physiological responses of rainfed fig trees under drought conditions. Scientia Horticulturae 2019, 253, 241–254. [Google Scholar] [CrossRef]

- García, L.; Parra, L.; Jimenez, J.M.; Lloret, J.; Lorenz, P. IoT-Based Smart Irrigation Systems: An Overview on the Recent Trends on Sensors and IoT Systems for Irrigation in Precision Agriculture. Sensors 2020, 20. [Google Scholar] [CrossRef]

- Abdolahipour, M.; Kamgar-Haghighi, A.; Sepaskhah, A.; Dalir, N.; Shabani, A.; Honar, T.; Jafari, M.; others. Supplemental irrigation and pruning influence on growth characteristics and yield of rainfed fig trees under drought conditions. Fruits 2019, 74, 282–293. [Google Scholar] [CrossRef]

- Khozaie, M.; Sepaskhah, A.; others. Economic analysis of the optimal level of supplemental irrigation for rain-fed figs. Iran Agricultural Research 2018, 37, 17–26. [Google Scholar]

- Galván, A.I.; Rodríguez, A.; Martín, A.; Serradilla, M.; Martínez-Dorado, A.; Córdoba, M. Effect of Temperature During Drying and Storage of Dried Figs on Growth, Gene Expression and Aflatoxin Production. Toxins 2021, 13, 134. [Google Scholar] [CrossRef]

- Galván, A.I.; Hernández, A.; de Guía Córdoba, M.; Martín, A.; Serradilla, M.J.; López-Corrales, M.; Rodríguez, A. Control of toxigenic Aspergillus spp. in dried figs by volatile organic compounds (VOCs) from antagonistic yeasts. International Journal of Food Microbiology 2022, 376, 109772. [Google Scholar] [CrossRef]

- Tejero, P.; Martín, A.; Rodríguez, A.; Galván, A.I.; Ruiz-Moyano, S.; Hernández, A. In Vitro Biological Control of Aspergillus flavus by Hanseniaspora opuntiae L479 and Hanseniaspora uvarum L793, Producers of Antifungal Volatile Organic Compounds. Toxins 2021, 13. [Google Scholar] [CrossRef] [PubMed]

- Özer, K.B. Mycotoxins in Fig. In Advances in Fig Research and Sustainable Production; 2022; Chapter 19, p. 318. [Google Scholar]

- ElMasry, G.; Sun, D.W. Principles of hyperspectral imaging technology. In Hyperspectral imaging for food quality analysis and control; Elsevier, 2010; pp. 3–43.

- Signoroni, A.; Savardi, M.; Baronio, A.; Benini, S. Deep learning meets hyperspectral image analysis: A multidisciplinary review. Journal of Imaging 2019, 5, 52. [Google Scholar] [CrossRef] [PubMed]

- Khan, A.; Vibhute, A.D.; Mali, S.; Patil, C.H. A systematic review on hyperspectral imaging technology with a machine and deep learning methodology for agricultural applications. Ecological Informatics 2022, 69, 101678. [Google Scholar] [CrossRef]

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A compilation of UAV applications for precision agriculture. Computer Networks 2020, 172, 107148. [Google Scholar] [CrossRef]

- Cisternas, I.; Velásquez, I.; Caro, A.; Rodríguez, A. Systematic literature review of implementations of precision agriculture. Computers and Electronics in Agriculture 2020, 176, 105626. [Google Scholar] [CrossRef]

- Liu, W.; Shao, X.F.; Wu, C.H.; Qiao, P. A systematic literature review on applications of information and communication technologies and blockchain technologies for precision agriculture development. Journal of Cleaner Production 2021, 298, 126763. [Google Scholar] [CrossRef]

- Rivera, G.; Porras, R.; Florencia, R.; Sánchez-Solís, J.P. LiDAR applications in precision agriculture for cultivating crops: A review of recent advances. Computers and Electronics in Agriculture 2023, 207, 107737. [Google Scholar] [CrossRef]

- Peyghambari, S.; Zhang, Y. Hyperspectral remote sensing in lithological mapping, mineral exploration, and environmental geology: an updated review. Journal of Applied Remote Sensing 2021, 15, 031501. [Google Scholar] [CrossRef]

- Jia, J.; Wang, Y.; Chen, J.; Guo, R.; Shu, R.; Wang, J. Status and application of advanced airborne hyperspectral imaging technology: A review. Infrared Physics & Technology 2020, 104, 103115. [Google Scholar]

- ul Rehman, A.; Qureshi, S.A. A review of the medical hyperspectral imaging systems and unmixing algorithms’ in biological tissues. Photodiagnosis and Photodynamic Therapy 2021, 33, 102165. [Google Scholar] [CrossRef]

- Lu, Y.; Saeys, W.; Kim, M.; Peng, Y.; Lu, R. Hyperspectral imaging technology for quality and safety evaluation of horticultural products: A review and celebration of the past 20-year progress. Postharvest Biology and Technology 2020, 170, 111318. [Google Scholar] [CrossRef]

- Wang, B.; Sun, J.; Xia, L.; Liu, J.; Wang, Z.; Li, P.; Guo, Y.; Sun, X. The applications of hyperspectral imaging technology for agricultural products quality analysis: A review. Food Reviews International 2023, 39, 1043–1062. [Google Scholar] [CrossRef]

- Sahoo, R.N.; Ray, S.S.; Manjunath, K.R. Hyperspectral remote sensing of agriculture. Current Science 2015, 108, 848–859. [Google Scholar]

- Jia, B.; Wang, W.; Ni, X.; Lawrence, K.C.; Zhuang, H.; Yoon, S.C.; Gao, Z. Essential processing methods of hyperspectral images of agricultural and food products. Chemometrics and Intelligent Laboratory Systems 2020, 198, 103936. [Google Scholar] [CrossRef]

- Ferentinos, K.P. Deep learning models for plant disease detection and diagnosis. Computers and Electronics in Agriculture 2018, 145, 311–318. [Google Scholar] [CrossRef]

- Feng, Y.Z.; Sun, D.W. Application of hyperspectral imaging in food safety inspection and control: a review. Critical Reviews in Food Science and Nutrition 2012, 52, 1039–1058. [Google Scholar] [CrossRef]

- Wang, C.; Liu, B.; Liu, L.; Zhu, Y.; Hou, J.; Liu, P.; Li, X. A review of deep learning used in the hyperspectral image analysis for agriculture. Artificial Intelligence Review 2021, 54, 5205–5253. [Google Scholar] [CrossRef]

- Wieme, J.; Mollazade, K.; Malounas, I.; Zude-Sasse, M.; Zhao, M.; Gowen, A.; Argyropoulos, D.; Fountas, S.; Van Beek, J. Application of hyperspectral imaging systems and artificial intelligence for quality assessment of fruit, vegetables and mushrooms: A review. Biosystems Engineering 2022, 222, 156–176. [Google Scholar] [CrossRef]

- Barbedo, J.G.A. A review on the combination of deep learning techniques with proximal hyperspectral images in agriculture. Computers and Electronics in Agriculture 2023, 210, 107920. [Google Scholar] [CrossRef]

- Zhou, L.; Zhang, C.; Liu, F.; Qiu, Z.; He, Y. Application of deep learning in food: a review. Comprehensive Reviews in Food Science and Food Safety 2019, 18, 1793–1811. [Google Scholar] [CrossRef]

- Wang, X.; Bouzembrak, Y.; Lansink, A.O.; van der Fels-Klerx, H. Application of machine learning to the monitoring and prediction of food safety: A review. Comprehensive Reviews in Food Science and Food Safety 2022, 21, 416–434. [Google Scholar] [CrossRef] [PubMed]

- Kumar, A.; Pathak, H.; Bhadauria, S.; Sudan, J. Aflatoxin contamination in food crops: causes, detection, and management: a review. Food Production, Processing and Nutrition 2021, 3, 1–9. [Google Scholar] [CrossRef]

- Siedliska, A.; Baranowski, P.; Zubik, M.; Mazurek, W.; Sosnowska, B. Detection of fungal infections in strawberry fruit by VNIR/SWIR hyperspectral imaging. Postharvest Biology and Technology 2018, 139, 115–126. [Google Scholar] [CrossRef]

- Femenias, A.; Gatius, F.; Ramos, A.J.; Teixido-Orries, I.; Marín, S. Hyperspectral imaging for the classification of individual cereal kernels according to fungal and mycotoxins contamination: A review. Food Research International 2022, 155, 111102. [Google Scholar] [CrossRef]

- Han, Z.; Gao, J. Pixel-level aflatoxin detecting based on deep learning and hyperspectral imaging. Computers and Electronics in Agriculture 2019, 164, 104888. [Google Scholar] [CrossRef]

- Gao, J.; Zhao, L.; Li, J.; Deng, L.; Ni, J.; Han, Z. Aflatoxin rapid detection based on hyperspectral with 1D-convolution neural network in the pixel level. Food Chemistry 2021, 360, 129968. [Google Scholar] [CrossRef]

- Ma, J.; Guan, Y.; Xing, F.; Eltzov, E.; Wang, Y.; Li, X.; Tai, B. Accurate and non-destructive monitoring of mold contamination in foodstuffs based on whole-cell biosensor array coupling with machine-learning prediction models. Journal of Hazardous Materials 2023, 449, 131030. [Google Scholar] [CrossRef]

- Kim, Y.K.; Baek, I.; Lee, K.M.; Kim, G.; Kim, S.; Kim, S.Y.; Chan, D.; Herrman, T.J.; Kim, N.; Kim, M.S. Rapid Detection of Single-and Co-Contaminant Aflatoxins and Fumonisins in Ground Maize Using Hyperspectral Imaging Techniques. Toxins 2023, 15, 472. [Google Scholar] [CrossRef]

- Kılıç, C.; İnner, B. A novel method for non-invasive detection of aflatoxin contaminated dried figs with deep transfer learning approach. Ecological Informatics 2022, 70, 101728. [Google Scholar] [CrossRef]

- Kılıç, C.; Özer, H.; İnner, B. Real-time detection of aflatoxin-contaminated dried figs using lights of different wavelengths by feature extraction with deep learning. Food Control 2024, 156, 110150. [Google Scholar] [CrossRef]

- Antoine, J.P.; Carrette, P.; Murenzi, R.; Piette, B. Image analysis with two-dimensional continuous wavelet transform. Signal Processing 1993, 31, 241–272. [Google Scholar] [CrossRef]

- CodeCarbon Project (2024). CodeCarbon: Track and reduce your carbon emissions, 2019. Last access: 08/07/2024.

- Bouza, L.; Bugeau, A.; Lannelongue, L. How to estimate carbon footprint when training deep learning models? A guide and review. Environmental Research Communications 2023, 5, 115014. [Google Scholar] [CrossRef] [PubMed]

1.

2. PyWavelets (pywt) library documentation for the Morlet wavelet ("morl").
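The note above points to the PyWavelets Morlet wavelet ("morl"), whose mother wavelet is ψ(t) = exp(−t²/2)·cos(5t). As an illustration of what a continuous wavelet transform of a single pixel spectrum computes, the sketch below implements a direct-sum CWT in NumPy. It is not the authors' code, and the 448 bands and 90 scales are assumptions chosen only to match the 90 × 448 input implied by Table 7. In PyWavelets the corresponding call would be `pywt.cwt(spectrum, scales, "morl")`, whose discretisation and normalisation differ, so its coefficients will not match this sketch exactly.

```python
import numpy as np


def morlet(t):
    """Real Morlet mother wavelet, the form PyWavelets calls "morl"."""
    return np.exp(-t**2 / 2) * np.cos(5 * t)


def cwt_morlet(signal, scales):
    """Direct-sum continuous wavelet transform of a 1-D signal.

    Returns an array of shape (len(scales), len(signal)); row i holds the
    coefficients W(s, b) = sum_t x[t] * psi((t - b) / s) / sqrt(s)
    at scale s = scales[i] for every shift b.
    """
    x = np.asarray(signal, dtype=float)
    idx = np.arange(x.size)
    # offsets[b, t] = t - b: distance of sample t from the wavelet centre b
    offsets = idx[None, :] - idx[:, None]
    coefs = np.empty((len(scales), x.size))
    for i, s in enumerate(scales):
        kernel = morlet(offsets / s) / np.sqrt(s)
        coefs[i] = kernel @ x
    return coefs


# Hypothetical pixel spectrum with 448 bands (random values for illustration).
rng = np.random.default_rng(0)
spectrum = rng.random(448)
scales = np.arange(1, 91)  # 90 scales (assumed)
image = cwt_morlet(spectrum, scales)
print(image.shape)  # (90, 448)
```

Each row of the result is one scale, so a 1-D spectrum becomes a 2-D scale-by-band image that a 2-D convolutional network can consume.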

Figure 1.

General scheme of the methodology followed to carry out the study.

Figure 1.

General scheme of the methodology followed to carry out the study.

Figure 2.

Pixel masking of a set of figs to extract the pixels in the region of interest using the Labelme software.


Figure 3.

Hyperspectral signature of a fig pixel.


Figure 4.

Statistics computed for each class per spectral band.


Figure 5.

Number of instances per class in the training and test sets.


Figure 6.

Architectural models for FCNN and CNN.


Figure 7.

Configuration of the FCNN architecture.


Figure 8.

ROC curves of the FCNN predictive model for the different classes.


Figure 9.

Configuration of the CNN architecture.


Figure 10.

ROC curve for each class and AUC values for the CNN model.


Table 1.

Hyperparameter search for FCNN and CNN approaches.


FCNN

| Hyperparameter | Values | Description |
|---|---|---|
| Activation function | relu, elu, selu | Activation function in the hidden layers |
| Hidden layers | 2-7 | Number of hidden layers in the network |
| Neurons/hidden layer | 64-4096 (in steps of 32) | Number of neurons in each hidden layer |
| Dropout | 0.0-0.3 (in steps of 0.1) | Dropout rate for each hidden layer |

CNN

| Hyperparameter | Values | Description |
|---|---|---|
| Activation function | relu, elu, selu | Activation function in the hidden layers |
| Hidden layers | 1-4 | Number of hidden layers in the network |
| Neurons/hidden layer | 64-1024 (in steps of 32) | Number of neurons in each hidden layer |
| Pooling layer | Max pooling, Average pooling | Type of pooling layer |
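Table 1 defines the search ranges but not the tuning library or search strategy. As a hypothetical illustration, the ranges can be encoded as a plain Python search space and sampled at random. The dictionary keys and the `sample` helper are assumptions, and the sketch draws a single neuron count shared by all hidden layers, which may differ from the authors' setup.

```python
import random

# Search ranges transcribed from Table 1. The key names are illustrative.
FCNN_SPACE = {
    "activation": ["relu", "elu", "selu"],
    "hidden_layers": list(range(2, 8)),                # 2-7 inclusive
    "units": list(range(64, 4096 + 1, 32)),            # 64-4096, step 32
    "dropout": [round(0.1 * k, 1) for k in range(4)],  # 0.0-0.3, step 0.1
}
CNN_SPACE = {
    "activation": ["relu", "elu", "selu"],
    "hidden_layers": list(range(1, 5)),                # 1-4 inclusive
    "units": list(range(64, 1024 + 1, 32)),            # 64-1024, step 32
    "pooling": ["max", "average"],
}


def sample(space, rng):
    """Draw one random-search trial: one value per hyperparameter."""
    return {name: rng.choice(values) for name, values in space.items()}


rng = random.Random(42)  # fixed seed so the draw is reproducible
for label, space in (("FCNN", FCNN_SPACE), ("CNN", CNN_SPACE)):
    print(label, {k: len(v) for k, v in space.items()}, sample(space, rng))
```

Counting the candidate values gives 127 neuron counts for the FCNN (64 to 4096 in steps of 32), 31 for the CNN (64 to 1024), and four dropout rates.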

Table 2.

Systems used to launch the experiments.


| DL model | GPU | Processor | RAM | Operating system |
|---|---|---|---|---|
| FCNN | GeForce RTX 2080 Super | Intel Core i9-9900K | 16 GB | Windows 11 |
| CNN | A100-PCIE-40GB 108 cores | Intel Xeon Silver 4310 | 500 GB | Linux-5.15.0-97 |

Table 3.

FCNN architectural details.


| Layer | Output shape | Parameters |
|---|---|---|
| Layer 1 (Dense) | 672 | |
| Layer 2 (Dense) | | |
| Dropout layer | - | - |
| Layer 4 (Dense) | | |
| Dropout layer | - | - |
| Layer 6 (Dense) | | |
| Dropout layer | - | - |
| Layer 8 (Dense) | | |
| Dropout layer | - | - |
| Layer 10 (Dense) | 4 | |
| Total | - | |

Table 4.

Confusion matrix of the FCNN model.


| Real class \ Predicted class | Class 0 | Class 1 | Class 2 | Class 3 |
|---|---|---|---|---|
| Class 0 | 56,933 | 1,545 | 2,585 | 1,403 |
| Class 1 | 9,271 | 52,593 | 2,280 | 1,456 |
| Class 2 | 11,031 | 1,866 | 54,294 | 2,158 |
| Class 3 | 8,807 | 1,312 | 2,251 | 56,275 |

Table 5.

Classification metrics for the FCNN model.


| | Class 0 | Class 1 | Class 2 | Class 3 |
|---|---|---|---|---|
| Precision | 0.66 | 0.92 | 0.88 | 0.92 |
| Recall | 0.91 | 0.80 | 0.78 | 0.82 |
| F1-measure | 0.77 | 0.86 | 0.83 | 0.87 |

Table 6.

Study of sustainability. FCNN model training time, energy consumption per component, and CO2 emissions.


| Metric | Value |
|---|---|
| Duration (h) | 0.70 |
| Emissions (kg CO2eq) | 0.038 |
| Emission rate (kg CO2eq/h) | 0.054 |
| Energy consumption (kWh) | 0.20 |

Table 7.

CNN architectural details.


| Layer | Output shape | Parameters |
|---|---|---|
| Layer 1 (Convolutional) | 90 × 448 × 40 | 400 |
| Pooling layer | 45 × 224 × 40 | - |
| Layer 2 (Convolutional) | 45 × 224 × 40 | 14,440 |
| Flatten layer | 403,200 | - |
| Layer 3 (Dense) | 256 | |
| Layer 4 (Dense) | 128 | |
| Layer 5 (Dense) | 512 | |
| Layer 6 (Dense) | 4 | |
| Layer 7 (Dense) | 256 | |
| Total | - | |
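The numeric entries in the last column of Table 7 are consistent with standard parameter counts: a 2-D convolution has filters × (kernel height × kernel width × input channels + 1) weights, and a dense layer has units × (inputs + 1). The sketch below reproduces them under stated assumptions that the table does not spell out: 3 × 3 kernels, 40 filters, a single-channel 90 × 448 input, "same" padding and 2 × 2 pooling. The function names are illustrative.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Weights plus one bias per filter for a 2-D convolution."""
    return filters * (kernel_h * kernel_w * in_channels + 1)


def dense_params(inputs, units):
    """Weights plus one bias per unit for a fully connected layer."""
    return units * (inputs + 1)


# Assumed: 3x3 kernels, 40 filters, single-channel input, 2x2 pooling.
print(conv2d_params(3, 3, 1, 40))    # 400    (Layer 1)
print(conv2d_params(3, 3, 40, 40))   # 14440  (Layer 2)
h, w, c = 90 // 2, 448 // 2, 40      # pooling halves height and width
print(h * w * c)                     # 403200 (Flatten)
# If Layer 3 (256 units) directly follows the flatten layer:
print(dense_params(h * w * c, 256))  # 103219456
```

Under these assumptions, the first dense layer after flattening would dominate the parameter count, which is consistent with the much longer CNN training time reported in Table 10.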

Table 8.

Confusion matrix of the CNN model.


| Real class \ Predicted class | Class 0 | Class 1 | Class 2 | Class 3 |
|---|---|---|---|---|
| Class 0 | 50,759 | 4,477 | 4,237 | 2,993 |
| Class 1 | 5,497 | 51,757 | 4,920 | 3,426 |
| Class 2 | 7,091 | 6,501 | 50,437 | 5,320 |
| Class 3 | 5,220 | 5,115 | 6,198 | 52,112 |

Table 9.

Classification metrics for the CNN model.


| | Class 0 | Class 1 | Class 2 | Class 3 |
|---|---|---|---|---|
| Precision | 0.74 | 0.76 | 0.77 | 0.82 |
| Recall | 0.81 | 0.79 | 0.73 | 0.76 |
| F1-measure | 0.77 | 0.78 | 0.75 | 0.79 |
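The per-class metrics in Table 9 follow from the confusion matrix in Table 8 using the usual definitions: precision = TP / (TP + FP), the diagonal entry over its column sum; recall = TP / (TP + FN), the diagonal entry over its row sum; and F1 is their harmonic mean. The plain-Python sketch below recomputes them, assuming rows are real classes 0 to 3 and columns are predicted classes. Applying the same function to the FCNN matrix in Table 4 reproduces Table 5.

```python
# Confusion matrix from Table 8 (CNN): rows = real class, columns = predicted.
cm = [
    [50_759, 4_477, 4_237, 2_993],
    [5_497, 51_757, 4_920, 3_426],
    [7_091, 6_501, 50_437, 5_320],
    [5_220, 5_115, 6_198, 52_112],
]


def per_class_metrics(cm):
    """Return (precision, recall, F1) per class, rounded to two decimals."""
    n = len(cm)
    out = []
    for k in range(n):
        tp = cm[k][k]
        predicted = sum(cm[r][k] for r in range(n))  # column sum = TP + FP
        actual = sum(cm[k])                          # row sum = TP + FN
        precision = tp / predicted
        recall = tp / actual
        f1 = 2 * precision * recall / (precision + recall)
        out.append((round(precision, 2), round(recall, 2), round(f1, 2)))
    return out


for k, (p, r, f) in enumerate(per_class_metrics(cm)):
    print(f"Class {k}: precision={p:.2f} recall={r:.2f} F1={f:.2f}")
```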

Table 10.

Study of sustainability. CNN model training time, energy consumption per component, and emissions.


| Metric | Value |
|---|---|
| Duration (h) | 55.37 |
| Emissions (kg CO2eq) | 3.44 |
| Emission rate (kg CO2eq/h) | 0.0623 |
| Energy consumption (kWh) | 17.75 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).