2.2. PolyModel-Based Time Series Relationship

For any time series of a chosen asset $A$, by PolyModel theory, at any time stamp $t$ we can compute a P-Value Score (for more details, see section 2.2 of [6] and [7]) with respect to each risk factor $RF_j$, $j = 1, \dots, n$; let's denote it as $\mathrm{PV}_{A,j}(t)$. As discussed in the chapter on PolyModel Theory, this number represents the reaction of $A$ to $RF_j$ during a short time period before $t$. The set $\{\mathrm{PV}_{A,j}(t)\}_{j=1}^{n}$ represents how asset $A$ reacted to the whole environment, and can be regarded as an $n$-dimensional characterization of $A$.

For any two assets $A$ and $B$, we have two groups of P-Value Scores: $\{\mathrm{PV}_{A,j}(t)\}_{j=1}^{n}$ and $\{\mathrm{PV}_{B,j}(t)\}_{j=1}^{n}$. Since these numbers originate from p-values, we can set a threshold to filter out the P-Value Scores that are too small. We use the same notation for the filtered groups of P-Value Scores.
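A minimal sketch of the thresholding step, assuming the scores of one asset are stored as a list indexed by risk factor and that "filtering out" a score means setting it to zero, so that every asset keeps a vector of the same length $n$. Both the zeroing convention and the name `filter_scores` are illustrative assumptions, not the procedure of [6] or [7].

```python
from typing import List


def filter_scores(scores: List[float], threshold: float) -> List[float]:
    """Zero out P-Value Scores below `threshold` (hypothetical convention).

    Keeping zeros instead of dropping entries preserves the alignment of
    position j with risk factor RF_j across different assets.
    """
    return [s if s >= threshold else 0.0 for s in scores]


# Usage: scores of one asset against four risk factors at time t.
print(filter_scores([0.4, 2.7, 1.1, 3.5], threshold=1.0))  # [0.0, 2.7, 1.1, 3.5]
```

Zeroing rather than deleting is a design choice: it lets the cosine similarity and rank correlation below be computed coordinate by coordinate over the same $n$ risk factors for every pair of assets.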

Then we can use these two groups to define new types of correlation between $A$ and $B$. Two natural choices are:

- We can treat $\{\mathrm{PV}_{A,j}(t)\}_j$ and $\{\mathrm{PV}_{B,j}(t)\}_j$ as coordinates of two vectors in $\mathbb{R}^n$ and calculate the corresponding cosine similarity, denoted as $\rho_{\cos}(A,B;t)$.
- We can use them to rank the risk factors and calculate the corresponding rank correlation, denoted as $\rho_{\mathrm{rank}}(A,B;t)$.

We use Spearman's $\rho$ as the particular rank correlation. Assume that the risk factors are enumerated as $RF_1, \dots, RF_n$. Then for every asset $A$, we can rank these risk factors at time $t$ according to the values of $\mathrm{PV}_{A,j}(t)$. Let's denote these ranks as $r_{A,1}(t), \dots, r_{A,n}(t)$.
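A minimal sketch of the ranking step and of Spearman's $\rho$, assuming rank 1 goes to the smallest score and tied scores (for example, several zeroed scores after filtering) share their average rank. With ties present, Spearman's $\rho$ is computed as the Pearson correlation of the two rank vectors; the function names and the zero returned for a constant rank vector are hypothetical choices.

```python
from typing import List


def rank_factors(scores: List[float]) -> List[float]:
    """Rank risk factors by score (1 = smallest); ties get their average rank."""
    order = sorted(range(len(scores)), key=lambda j: scores[j])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        k = i
        # Extend k over a run of tied scores.
        while k + 1 < len(order) and scores[order[k + 1]] == scores[order[i]]:
            k += 1
        avg = (i + k) / 2 + 1  # mean of the 1-based positions i+1 .. k+1
        for pos in range(i, k + 1):
            ranks[order[pos]] = avg
        i = k + 1
    return ranks


def spearman(x: List[float], y: List[float]) -> float:
    """Spearman's rho as the Pearson correlation of the two rank vectors."""
    rx, ry = rank_factors(x), rank_factors(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    if vx == 0 or vy == 0:
        return 0.0  # hypothetical convention: all factors tied, no ordering
    return cov / (vx * vy) ** 0.5


print(rank_factors([0.0, 2.7, 0.0, 3.5]))  # [1.5, 3.0, 1.5, 4.0]
```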

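For two assets $A$ and $B$ with associated scores $\{\mathrm{PV}_{A,j}(t)\}_j$, $\{\mathrm{PV}_{B,j}(t)\}_j$ and ranks $\{r_{A,j}(t)\}_j$, $\{r_{B,j}(t)\}_j$,

$$\rho_{\cos}(A,B;t) = \frac{\sum_{j=1}^{n} \mathrm{PV}_{A,j}(t)\,\mathrm{PV}_{B,j}(t)}{\sqrt{\sum_{j=1}^{n} \mathrm{PV}_{A,j}(t)^2}\,\sqrt{\sum_{j=1}^{n} \mathrm{PV}_{B,j}(t)^2}},$$

$$\rho_{\mathrm{rank}}(A,B;t) = 1 - \frac{6\sum_{j=1}^{n} \big(r_{A,j}(t) - r_{B,j}(t)\big)^2}{n(n^2-1)}.$$

(This closed form assumes distinct ranks; with ties, $\rho_{\mathrm{rank}}$ is the Pearson correlation of the two rank vectors.)

$\rho_{\mathrm{rank}}$ is more robust, while $\rho_{\cos}$ may carry more information; thus, we use a weighted sum of them as our final PolyModel-Based time series correlation:

$$\rho(A,B;t) = w\,\rho_{\mathrm{rank}}(A,B;t) + (1-w)\,\rho_{\cos}(A,B;t),$$

where $w \in [0,1]$ sets the trade-off between robustness and information content.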
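A self-contained sketch of the combined correlation for two assets, assuming each asset's (already filtered) P-Value Scores are given as equal-length lists over the same risk factors. Here `w` multiplies the rank term and `1 - w` the cosine term, which is an assumption about the weighting convention; returning 0 for an all-zero score vector is likewise a hypothetical choice.

```python
import math
from typing import List


def _ranks(v: List[float]) -> List[float]:
    # Average ranks (1-based); tied values share the mean of their positions.
    order = sorted(range(len(v)), key=v.__getitem__)
    r = [0.0] * len(v)
    i = 0
    while i < len(v):
        k = i
        while k + 1 < len(v) and v[order[k + 1]] == v[order[i]]:
            k += 1
        for p in range(i, k + 1):
            r[order[p]] = (i + k) / 2 + 1
        i = k + 1
    return r


def _pearson(x: List[float], y: List[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return 0.0 if vx == 0 or vy == 0 else cov / math.sqrt(vx * vy)


def cosine(x: List[float], y: List[float]) -> float:
    nx = math.sqrt(sum(a * a for a in x))
    ny = math.sqrt(sum(b * b for b in y))
    if nx == 0 or ny == 0:
        return 0.0  # hypothetical convention for an all-zero score vector
    return sum(a * b for a, b in zip(x, y)) / (nx * ny)


def polymodel_corr(pv_a: List[float], pv_b: List[float], w: float) -> float:
    """w * Spearman(ranks) + (1 - w) * cosine similarity of the score vectors."""
    if not 0.0 <= w <= 1.0:
        raise ValueError("w must lie in [0, 1]")
    rho_rank = _pearson(_ranks(pv_a), _ranks(pv_b))
    rho_cos = cosine(pv_a, pv_b)
    return w * rho_rank + (1.0 - w) * rho_cos
```

Setting `w = 1` recovers the pure rank correlation and `w = 0` the pure cosine similarity, so the two extremes of the trade-off can be checked directly.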

2.3. Financial Network Construction and Analysis

For any given time stamp $t$, using the innovative correlation proposed in the above section, we can construct the PolyModel-Based network of the assets we are interested in. As mentioned above, given a predetermined order of these assets, this network is equivalent to a symmetric adjacency matrix:

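$$M(t) = \big(m_{ij}(t)\big)_{1 \le i,j \le N},$$

where $A_1, \dots, A_N$ are the assets in that order and $m_{ij}(t) = \rho(A_i, A_j; t)$ if $i \neq j$.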
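A minimal sketch of assembling the matrix at one time stamp, assuming a hypothetical callable `corr(a, b)` that returns the PolyModel-Based correlation of two assets at that time. Setting the diagonal to 0 is an assumption; the text only specifies the off-diagonal entries.

```python
from typing import Callable, List, Sequence


def build_network(assets: Sequence[str],
                  corr: Callable[[str, str], float]) -> List[List[float]]:
    """Symmetric matrix with m[i][j] = corr(assets[i], assets[j]) for i != j."""
    n = len(assets)
    m = [[0.0] * n for _ in range(n)]  # diagonal left at 0 (assumption)
    for i in range(n):
        for j in range(i + 1, n):
            # Each pair is evaluated once and mirrored, so m is symmetric.
            m[i][j] = m[j][i] = corr(assets[i], assets[j])
    return m


# Usage with a toy, made-up correlation table.
toy = {("A", "B"): 0.8, ("A", "C"): 0.1, ("B", "C"): 0.4}
print(build_network(["A", "B", "C"], lambda a, b: toy[(a, b)]))
```

Evaluating only the upper triangle halves the number of correlation computations, which matters when $N$ is the size of an index such as the S&P 500.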

Next, we want to study and characterize the dynamic evolution of the above matrix over time $t$. One popular way is to study some algebraic and topological properties of the network and see how they change. Thus, let's first review some important topological properties of networks.

Definition 3.

Eigenvalues. In this thesis, we always work over the field of real numbers. Given a real $n \times n$ matrix $M$, if there exist a nonzero vector $v \in \mathbb{R}^n$ and a real number $\lambda$ such that

$$Mv = \lambda v,$$

then $v$ is called an eigenvector of $M$ and $\lambda$ is the associated eigenvalue.

If $M$ is real and symmetric, it has exactly $n$ real eigenvalues (counted with multiplicity) and $n$ mutually orthogonal eigenvectors. If we sort these eigenvalues by value, we call the largest one the leading eigenvalue.
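A dependency-free sketch of computing all eigenvalues of a real symmetric matrix, and hence the leading one, using the classical cyclic Jacobi rotation method. This is a swapped-in standard algorithm, not necessarily what the thesis used; in practice `numpy.linalg.eigvalsh`, which returns the eigenvalues of a symmetric matrix in ascending order, does the same job. The tolerance and sweep limit are arbitrary illustrative values.

```python
import math
from typing import List


def symmetric_eigenvalues(m: List[List[float]], tol: float = 1e-12,
                          max_sweeps: int = 100) -> List[float]:
    """All eigenvalues of a real symmetric matrix (cyclic Jacobi), ascending."""
    a = [list(map(float, row)) for row in m]  # work on a copy
    n = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break  # matrix is (numerically) diagonal
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # Rotation chosen so that the (p, q) entry becomes zero.
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                sign = 1.0 if theta >= 0 else -1.0
                t = sign / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A P (update columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p] = c * akp - s * akq
                    a[k][q] = s * akp + c * akq
                for k in range(n):  # A <- P^T A (update rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k] = c * apk - s * aqk
                    a[q][k] = s * apk + c * aqk
    return sorted(a[i][i] for i in range(n))


def leading_eigenvalue(m: List[List[float]]) -> float:
    return symmetric_eigenvalues(m)[-1]
```

Each rotation is orthogonal, so the eigenvalues are preserved while the off-diagonal mass shrinks; the diagonal then holds the eigenvalues.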

Definition 4.

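Degree measures how densely a network is connected. For a network with $N$ nodes, let $e_{ij} = 1$ if nodes $i$ and $j$ are connected by an edge and $e_{ij} = 0$ otherwise. Degree is defined as:

$$D(t) = \frac{1}{N(N-1)} \sum_{i \neq j} e_{ij}.$$

Degree is the fraction of all possible edges that are present in the network: it is the average number of edges per node divided by $N-1$.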
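A minimal sketch of the degree computation, assuming the network is given as a symmetric 0/1 adjacency matrix (how the weighted matrix is turned into edges is not specified in this section, so the 0/1 matrix is taken as input). The code follows the verbal definition: average number of edges per node, divided by $N-1$.

```python
from typing import List


def network_degree(adj: List[List[int]]) -> float:
    """Fraction of possible edges present in a symmetric 0/1 adjacency matrix."""
    n = len(adj)
    if n < 2:
        raise ValueError("need at least two nodes")
    # Number of edges incident to each node, ignoring the diagonal.
    edges_per_node = [sum(adj[i][j] for j in range(n) if j != i) for i in range(n)]
    return sum(edges_per_node) / n / (n - 1)


# Four nodes, three edges forming a triangle on nodes 0, 1, 2.
adj = [[0, 1, 1, 0],
       [1, 0, 1, 0],
       [1, 1, 0, 0],
       [0, 0, 0, 0]]
print(network_degree(adj))  # 0.5 (3 of the 6 possible edges)
```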

Definition 5.

Clustering Coefficient is defined as the probability that a triangular connection occurs in a given network. By a triangular connection, we mean three nodes $a$, $b$ and $c$ such that $a$ and $b$ are connected, $b$ and $c$ are connected, and $c$ and $a$ are connected. For a network with $N$ nodes, the clustering coefficient (CC) is defined as:

$$CC(t) = \frac{\#\{\text{triangular connections}\}}{\binom{N}{3}}.$$

The larger the clustering coefficient, the more likely we are to see tightly connected groups of nodes in the network.
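A minimal sketch that counts triangular connections and divides by the number of node triples, as in the definition above, assuming a symmetric 0/1 adjacency matrix as input. Note that this triangle-density definition differs from the more common transitivity ratio (closed triplets over connected triplets). The example graph is hypothetical, with one triangle among five nodes, and is not the graph in the figure.

```python
from itertools import combinations
from math import comb
from typing import List


def clustering_coefficient(adj: List[List[int]]) -> float:
    """Number of triangles divided by C(N, 3), the number of node triples."""
    n = len(adj)
    triangles = sum(
        1
        for a, b, c in combinations(range(n), 3)
        if adj[a][b] and adj[b][c] and adj[c][a]
    )
    return triangles / comb(n, 3)


# Hypothetical 5-node graph: triangle 0-1-2 plus edges 2-3 and 3-4.
adj = [[0, 1, 1, 0, 0],
       [1, 0, 1, 0, 0],
       [1, 1, 0, 1, 0],
       [0, 0, 1, 0, 1],
       [0, 0, 0, 1, 0]]
print(clustering_coefficient(adj))  # 0.1 (1 triangle / 10 triples)
```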

Definition 6.

Closeness measures the average length of the shortest paths between pairs of nodes in the network. Given two nodes $a$ and $b$, we use $d(a,b)$ to denote the length of the shortest path between them. If there is no path connecting these two nodes, we set $d(a,b)$ to a predetermined finite constant. For a network with $N$ nodes, the closeness is defined as:

$$C(t) = \binom{N}{2}^{-1} \sum_{\{a,b\}} d(a,b),$$

where the sum runs over all unordered pairs of distinct nodes.

Closeness reflects distances within the network and how fast information can be transmitted from one node to another.
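A minimal sketch of closeness, assuming a symmetric 0/1 adjacency matrix in which every edge has length 1, so shortest paths can be found by breadth-first search (for edge lengths other than 1, Dijkstra's algorithm would replace BFS). The parameter `no_path_length`, used for disconnected pairs, is a hypothetical name for the constant in the definition.

```python
from collections import deque
from itertools import combinations
from typing import Dict, List


def shortest_lengths(adj: List[List[int]], source: int) -> Dict[int, int]:
    """BFS hop counts from `source`; unreachable nodes are absent from the dict."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v, connected in enumerate(adj[u]):
            if connected and v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def closeness(adj: List[List[int]], no_path_length: float) -> float:
    """Average shortest-path length over all unordered pairs of distinct nodes."""
    n = len(adj)
    dists = [shortest_lengths(adj, s) for s in range(n)]
    pairs = list(combinations(range(n), 2))
    total = sum(dists[a].get(b, no_path_length) for a, b in pairs)
    return total / len(pairs)


# Path graph 0 - 1 - 2: lengths 1, 2, 1 over three pairs.
print(closeness([[0, 1, 0], [1, 0, 1], [0, 1, 0]], no_path_length=3))  # 1.333...
```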

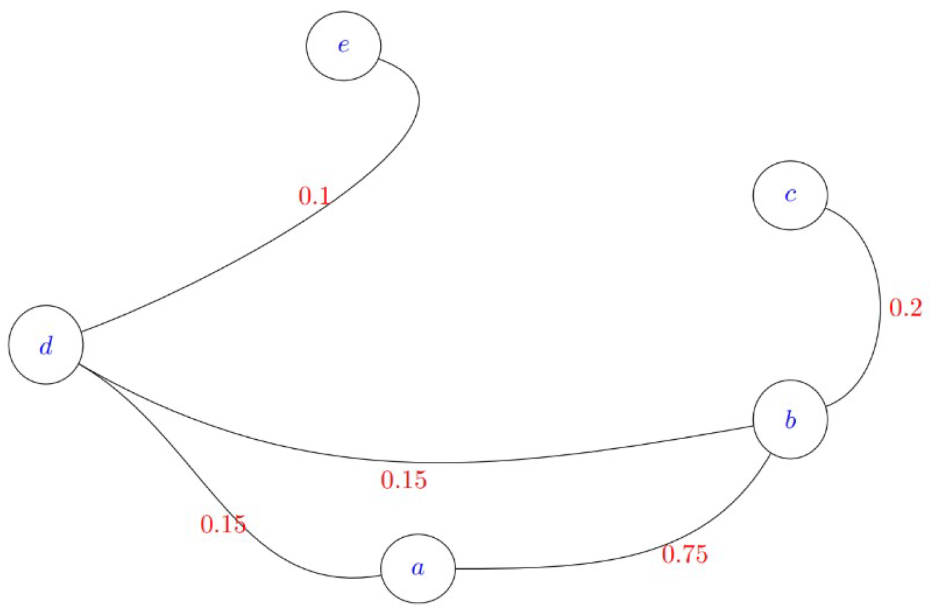

Let’s use a simple example to illustrate the above definitions.

Example The picture above represents the weighted network of five nodes: $a$, $b$, $c$, $d$ and $e$.

Let’s calculate the three topological properties introduced above for this graph example:

Degree The degree of this network is .

Clustering Coefficient There is only one triangular connection in the network. As there are five different nodes, the number of all possible triangular connections is $\binom{5}{3} = 10$. Thus, the clustering coefficient is $1/10 = 0.1$.

Closeness To compute the closeness, let’s first look at the lengths of the shortest paths between each pair of nodes:

The closeness is .

Next, we apply the methodologies and concepts developed and introduced above to study the stability of the financial market. More precisely, the market is represented by an equity index, for instance the S&P 500, the Nasdaq, the Dow 30, and so forth. The group of (target) assets we are interested in is the set of all components of the selected index. We also have a carefully selected list of risk factors that covers almost all major aspects of the real world and can thus be regarded as a good proxy for it.

The group of target assets can vary considerably, since the components of different equity indices can be very different. However, since the group of risk factors is selected as a complete proxy for the real world, its components can be treated as universal, and they change only very slowly. We list some representative risk factors below to illustrate what they look like.

Table 1.

List of the Risk Factors for Network


| Name | Ticker |
|---|---|
| U.S. Treasury | LUATTRUU INDEX |
| U.S. Corp | LUACTRUU INDEX |
| Global High Yield | LG30TRUU INDEX |
| Heating Oil | BCOMHO INDEX |
| Orange Juice | BCOMOJ INDEX |
| Euro (BGN) | EURUSD BGN Curncy |
| Japanese Yen (BGN) | USDJPY BGN Curncy |
| ... | ... |

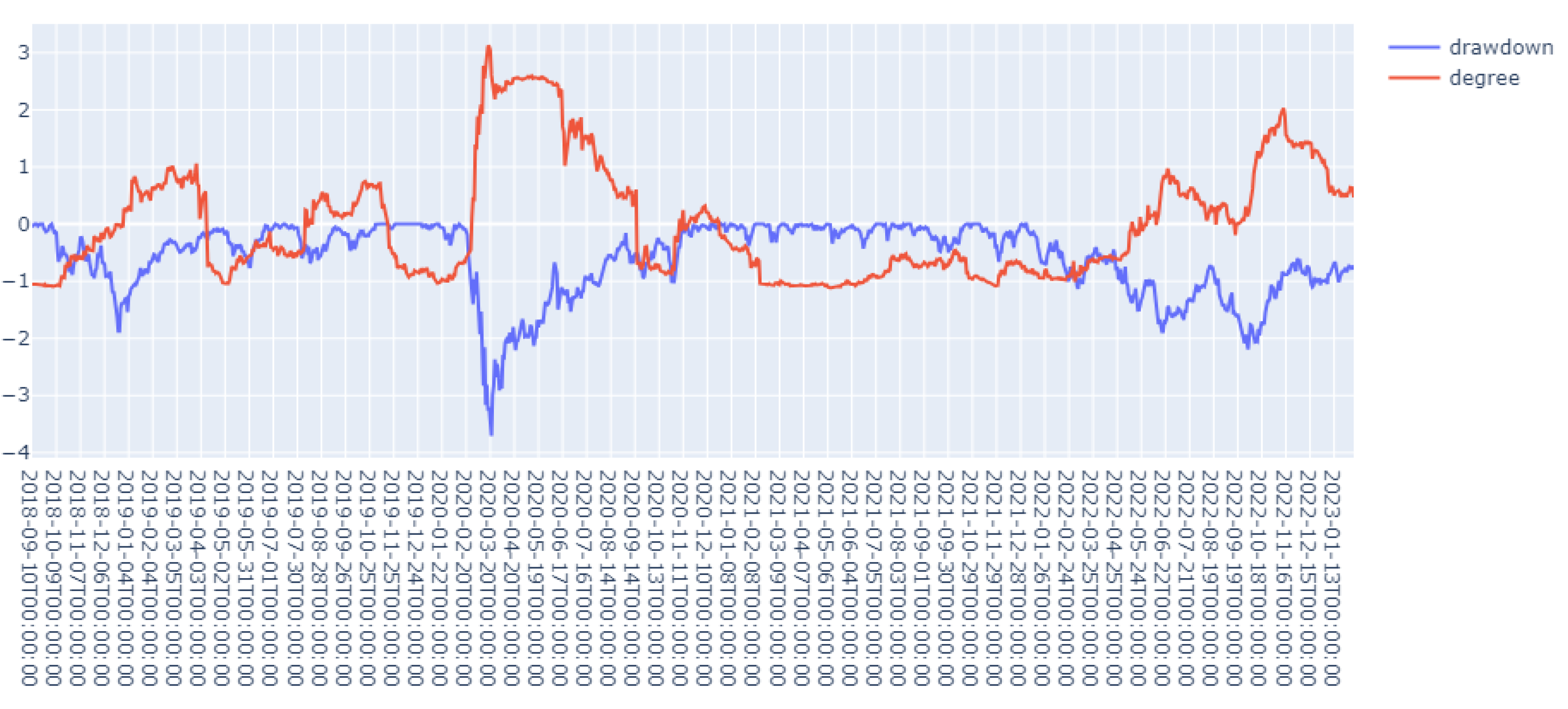

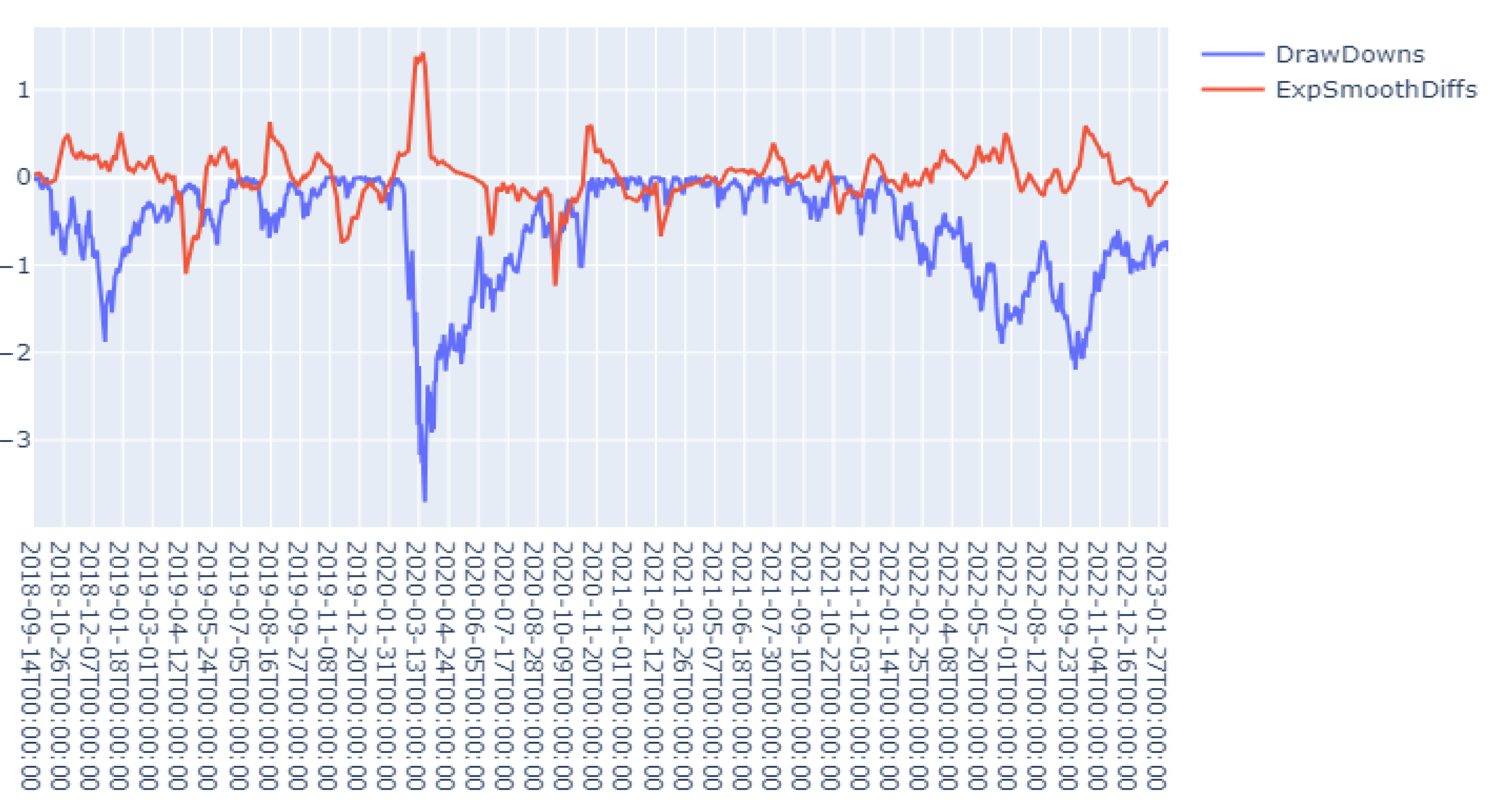

Now we return to the question of how to measure and predict the instability of the chosen equity index. At each time stamp $t$, we generate the PolyModel-Based correlation matrix of the component assets of the chosen index with respect to the universe of selected risk factors, denoted as $M(t)$; we can then calculate the algebraic and topological quantities associated with $M(t)$. Each such quantity yields a time series; to reduce the impact of noise, we can smooth it with a backward moving average or exponential decay. These time series are the features. Sometimes, instead of the features themselves, we can consider their changes (e.g., their first-order differences), which also reflect the underlying network's dynamics.
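A minimal sketch of the feature post-processing, assuming each feature (for example, the daily leading eigenvalue or degree) is a plain list ordered in time. The window length, the decay factor `alpha`, the shorter windows at the start of the series, and the initialization of the exponential average with the first observation are all illustrative assumptions; the text does not fix them.

```python
from typing import List


def moving_average(xs: List[float], window: int) -> List[float]:
    """Backward-looking mean of the last `window` values (shorter at the start)."""
    out = []
    for t in range(len(xs)):
        chunk = xs[max(0, t - window + 1): t + 1]  # uses only past and current values
        out.append(sum(chunk) / len(chunk))
    return out


def exp_decay(xs: List[float], alpha: float) -> List[float]:
    """Exponential smoothing: s_t = alpha * x_t + (1 - alpha) * s_{t-1}, s_0 = x_0."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must lie in (0, 1]")
    out: List[float] = []
    for t, x in enumerate(xs):
        out.append(x if t == 0 else alpha * x + (1 - alpha) * out[-1])
    return out


def first_differences(xs: List[float]) -> List[float]:
    """Discrete analogue of a first-order derivative: x_t - x_{t-1}."""
    return [b - a for a, b in zip(xs, xs[1:])]
```

Both smoothers look only backward in time, which avoids leaking future information into features that are later compared with the index's drawdowns.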

We are interested in the drawdown process of the selected index, which represents the financial market. In particular, we calculate the risk indicators based on PolyModel theory as described above and analyze their relationships with the drawdown time series of the Dow 30. More details are given in the next section.
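For reference, a minimal sketch of a drawdown time series using the common relative definition $DD_t = 1 - P_t / \max_{s \le t} P_s$, where $P_t$ is the index level. This is an assumption about the convention; the precise definition used for the Dow 30 analysis belongs to the next section.

```python
from typing import List


def drawdown_series(prices: List[float]) -> List[float]:
    """Relative drawdown 1 - P_t / max_{s <= t} P_s for each t (0 at new highs)."""
    out, peak = [], float("-inf")
    for p in prices:
        if p <= 0:
            raise ValueError("prices must be positive")
        peak = max(peak, p)  # running maximum up to and including t
        out.append(1.0 - p / peak)
    return out


print(drawdown_series([100, 120, 90, 130]))  # [0.0, 0.0, 0.25, 0.0]
```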