Introduction

The dawn of the Information Age has witnessed the meteoric rise of artificial intelligence (AI), which has become integrated into the very fabric of modern society. It has driven profound transformation, shaping the modalities of communication, work, and broader life experiences. Landmark platforms such as Twitter are shaping the contours of public discourse, while revolutionary AI models like ChatGPT are pioneering advancements in natural language comprehension. In a world where technological advancements occur at a blistering pace, the adoption of novel techniques over classical approaches to address longstanding challenges becomes imperative. The extraordinary potential of AI systems has been demonstrated in a variety of applications in recent years, but bias issues have become a serious barrier to mass applications. AI bias refers to the systematic and unfair discrimination that can be present in the outcomes of AI algorithms, often reflecting existing societal prejudices. Such biases can arise from various sources, including the data used to train these systems, the design of the algorithms themselves, or the objectives for which they are optimized. The impact of AI bias is multifaceted, as it can have real-world effects on individuals’ lives. Biases in AI algorithms might deny people opportunities, such as loans or job interviews, based on unjustified factors (Seyyed-Kalantari et al., 2021). In healthcare, biased medical AI could lead to misdiagnoses or unequal treatment (Yapo & Weiss, 2018). The spread of biased information through AI-generated content can also contribute to the amplification of misinformation and harmful stereotypes. AI bias is a deeply entrenched and multifaceted issue, and traditional computational models, despite their prowess, often fall short of offering comprehensive solutions.
As AI continues its ascent, both in terms of capabilities and societal influence, there is an escalating urgency to find scalable, efficient, and robust techniques to mitigate its pitfalls. An examination of several case studies across industries underscores the multifarious nature of AI bias and the urgent need for mitigative strategies. The COMPAS algorithm, utilized within the criminal justice system, exemplifies such bias. ProPublica, an investigative news organization, highlighted a significant racial bias in this criminal justice algorithm as deployed in Broward County, Florida (Khademi & Honavar, 2020). Their findings revealed that African American defendants were erroneously classified as “high risk” almost twice as frequently as their white counterparts, resulting in potentially unjust treatment within the criminal justice system (Angwin et al., 2016). This misclassification can have grave implications, from sentencing to parole decisions, reflecting the dire need for equity in AI-guided legal processes.

In policing, PredPol, a predictive policing system employed across several US states, has also faced scrutiny. Intended to anticipate crime locations and times, the algorithm has inadvertently steered law enforcement towards communities with a high density of racial minorities. Such targeting risks establishing a pernicious feedback loop that reinforces and magnifies existing racial biases. The domain of facial recognition exemplifies further bias, particularly concerning race and gender. AI systems deployed in law enforcement have been found to accurately identify white males while faltering with dark-skinned females. The implications of misidentification are profound, potentially leading to false accusations and systemic exclusion. Google’s AI algorithms, which power services like image searches and advertising, have not been immune to bias. For instance, image searches for "CEO" returned disproportionately fewer results depicting women, despite the reality of their representation in such roles. Furthermore, an inclination to display high-income job advertisements more frequently to men than to women has also been reported. Instances such as a Palestinian worker’s arrest due to Facebook’s AI mistranslating "good morning" in Arabic to "attack them" in Hebrew highlight the immediate personal repercussions that AI misunderstandings can engender. Similarly, Amazon was compelled to abandon an AI recruitment tool that unfavorably assessed female applicants—a reflection of male predominance in the tech industry’s historical data. In healthcare, algorithmic bias was spotlighted when a system used to forecast patient healthcare needs underestimated the needs of Black patients. The assessment was based on healthcare spending—a metric that does not equitably represent healthcare necessity. 
Microsoft’s chatbot Tay, which adapted its responses from Twitter conversations, spiraled into issuing racist and discriminatory statements, influenced by the platform’s negative interactions. This incident highlights the susceptibility of AI to absorb and replicate the prejudices present in their training data. These instances illuminate the potential perils associated with AI systems and the ethical obligations to be addressed. Biases typically arise from skewed training data, algorithmic design, and the predefined objectives of AI systems. To combat these biases, a multifaceted approach is required: diverse development teams, fairness-aware algorithms, rigorous external audits, and an ongoing review process to ensure AI systems evolve alongside societal values and norms.

Quantum technology, leveraging the mechanics of superposition and entanglement, enables quantum bits or qubits to occupy multiple states at once, unlike the binary states of classical bits. This characteristic allows quantum computers to perform many calculations in parallel, offering a leap in computational power for tasks such as simulating quantum physical processes, factoring large numbers for cryptography, and optimizing complex systems that are intractable for classical computers. The entangled states of qubits also provide a new dimension to processing and transmitting information, promising advancements in secure communication and quantum networking. However, despite the theoretical advantages, quantum systems face practical challenges. Quantum coherence, error rates, and qubit interconnectivity are areas requiring significant innovation to build scalable, fault-tolerant quantum computers. As the field advances, overcoming these obstacles is critical to unlocking the full potential of quantum computing in solving some of the most complex problems in science and engineering.

Classical algorithms may lack the tools needed to address AI bias, a common source of which is the biased datasets used to train machine learning models. Large and complicated datasets may contain subtle biases that are difficult for classical algorithms to identify and mitigate (Alvi, Zisserman & Nellåker, 2019). Integrating the principles of quantum mechanics with the objective of mitigating AI bias offers a possible way forward. In this context, insights from the Zeno effect, a phenomenon triggered by continuous measurement, become relevant: such continuous measurement can impede the evolution of a quantum system (Aftab & Chaudhry, 2017). Translated into the realm of AI, this suggests that by applying persistent and methodical monitoring, we can thwart the progression of biases in AI algorithms. This approach would require a system designed like a vigilant quantum observer, constantly checking and correcting the algorithm’s outputs, essentially ‘freezing’ the state of biases before they can influence outcomes.

In another domain, research demonstrated that when natural language processing models are trained on news articles, they can inadvertently inherit and perpetuate prevailing gender stereotypes. Such instances underline the imperative nature of addressing AI bias, as it can reinforce and amplify societal inequalities.

Quantum technology, inspired by the principles of quantum mechanics, represents a revolutionary change in thinking in the realm of computation and information processing. Unlike classical computers, which rely on bits that exist in a state of either 0 or 1, quantum computers use quantum bits or qubits. These qubits can exist in a superposition of states, allowing them to process vast amounts of information simultaneously (Li et al., 2001). Leveraging unique properties such as superposition and entanglement, quantum computers hold the potential to solve complex problems that are currently beyond the reach of classical machines. From simulating intricate molecular structures to optimizing large-scale systems and potentially revolutionizing fields like cryptography, quantum technology promises transformative breakthroughs across various sectors (Shor, 1998). However, as this technology remains in its nascent stages, challenges related to stability, error rates, and scalability persist, making the journey toward practical quantum computing both fascinating and demanding.

So, why introduce quantum technology to the quest for fairer AI algorithms? The inception of such a proposition can be traced back to an arresting analogy: just as the intricacies and nuances of AI biases are multifaceted, deeply rooted, and span multiple dimensions, the realm of quantum mechanics revels in its unique capacity to exist in superpositions and harness the power of entanglement. These attributes enable quantum systems to capture and process immense volumes of data concurrently. Given the profound complexity and depth of the challenge posed by AI bias, quantum computing, boasting unmatched computational prowess, emerges as a fitting candidate to address it.

In the vast and evolving landscape of technology, quantum computing presents an opportunity not just as an innovative computing model, but as a sentinel, akin to a watchdog, designed to scrutinize biases within other AI algorithms. While one might argue in favor of deploying quantum algorithms directly, replacing the multitude of AI models across diverse domains, such a proposition is riddled with complexities. Quantum computing harnesses quantum-mechanical phenomena, like superposition and entanglement, to perform computations, whereas quantum algorithms are specific computational procedures designed for these quantum systems. While quantum computing is a broader concept encompassing the hardware, principles, and techniques of quantum information processing, quantum algorithms are the specific steps or methods used to solve problems on quantum computers. Adopting quantum algorithms universally across diverse domains is challenging due to theoretical complexities, inflated costs, and large-scale practical implementation issues. In contrast, the broader field of quantum computing, with its potential for sentinel approaches, offers a more pragmatic alternative without replacing every AI model in existence. A watchdog, by its very nature, is characterized by its alertness, vigilance, and ability to respond swiftly to potential threats or deviations. These qualities are essential for monitoring and regulating systems, especially those as intricate as AI. Where a conventional AI system might take seconds to process information, a quantum-based sentinel, embodying the qualities of a watchdog, could assess the AI’s outputs in mere milliseconds. This rapid processing is made possible by quantum principles such as superposition, where a quantum bit (qubit) can exist in multiple states simultaneously, and entanglement, where entangled qubits can remain correlated even when separated by large distances.
Additionally, quantum tunneling allows quantum algorithms to explore multiple solutions at once, optimizing problem-solving. These quantum phenomena, inherent to quantum computers, grant them unparalleled computational capabilities, enabling the rapid processing of vast datasets and the optimization of algorithms. Such real-time checks ensure that biases and other undesired outputs are promptly identified and addressed.

Building on this, in this ever-expanding digital cosmos, big tech companies loom large, wielding enormous influence and resources. Their role is dual-pronged. On one hand, these tech giants, by virtue of deploying AI on a colossal scale, find themselves on the frontline, frequently contending with issues of AI bias. Their abundant resources empower them to delve deep into embryonic technologies like quantum computing. On the other hand, the magnitude of their influence in crafting the digital narrative ensures that any innovative solution they embrace can potentially dictate industry norms. For these behemoths, venturing into quantum technology is not merely about technological prowess; it is about maintaining a vanguard position, persistently pushing the envelope to realize the ideal of a bias-free AI. However, navigating the quantum realm is not without its trials. Quantum technology, in its fledgling state, is yet to witness widespread practical applications. Present-day quantum computers, with their heightened susceptibility to errors, demand exacting, controlled environments for optimal functionality. Furthermore, crafting algorithms that can adeptly exploit quantum principles, particularly for intricate challenges such as AI bias, remains an incipient domain. The fusion of quantum tech with the AI ecosystem is thus twofold - a technical challenge and a strategic conundrum. Companies are pressed to balance the tantalizing long-term prospects against immediate hurdles and financial implications.

But the allure of quantum computing is inescapable. This avant-garde technology heralds a seismic shift, proffering tools uniquely attuned to untangle the complex web of biases in AI systems. Against the backdrop of classical computing’s inherent limitations and the copious resources within big tech’s arsenal, the expedition into quantum-based solutions is not merely defensible, but imperative. The aspiration is clear: as quantum technology advances and its real-world applications become increasingly viable, it is poised to become a pivotal force in championing ethical AI, fostering an era of digital fairness, equity, and justice. Even when quantum concepts are used to increase the capabilities of AI technologies, ethical considerations and oversight remain vital (Miller, 2019).

Following the introductory segment, the paper unfolds its exploration into the nexus of quantum computing and AI bias mitigation through a series of intricately designed sections. It begins with a Literature Review, providing a comprehensive backdrop against which the study’s contributions can be contextualized, highlighting the critical intersections and gaps in current research on AI biases and the quantum computing landscape. The narrative then advances to Metrics to Quantify Bias, where we delineate the metrics developed for quantifying bias in AI systems, establishing the evaluative criteria essential for assessing the efficacy of bias mitigation strategies. The subsequent section, Safe Learning and Quantum Control in AI Systems, delves deep into the mechanics of quantum computing applications in AI, exploring Quantum Superposition, Quantum Entanglement, and the Quantum Zeno effect as pivotal concepts for achieving fair and sustainable learning. This section further unpacks the roles of Quantum Support Vector Machines (QSVM) and Quantum Neural Networks (QNN), showcasing their potential in fostering equitable AI learning environments.

The journey continues with Quantum Quantification of Uncertainty and Risk in AI Systems Units, which examines the application of quantum principles such as probability amplitudes, risk matrices, and quantum entropy in analyzing the nuances of uncertainty and risk associated with biased decisions. In a comprehensive discourse on Decision Making in Quantum AI: Navigating Uncertainty and Limited Information, the paper addresses the intricate process of decision-making within quantum AI frameworks. This includes an exploration of Grover’s Algorithm for bias detection, strategies for ensuring AI robustness against perturbations, and quantum techniques for anomaly detection, among others. It culminates in a discussion on ensuring safe human-quantum-AI interactions and the integration of the Quantum Sentinel with CRISP-DM. The Results section presents the empirical findings that validate the theoretical constructs and methodologies proposed, followed by Conclusions that summarize the study’s implications for the advancement of AI and quantum computing, setting the stage for future research directions.

Building upon the meticulously structured exploration of quantum computing’s capacity to mitigate biases in AI, this study carves out a distinctive niche in the intersection of ethical AI and quantum mechanics. By introducing the Quantum Sentinel, an avant-garde conceptual framework, this research pioneers the application of quantum principles to the realm of AI bias detection and mitigation. It meticulously demonstrates, through rigorous empirical analysis, the superiority of quantum computing techniques—harnessing the power of quantum superposition, entanglement, and the Quantum Zeno effect—over traditional computational methods in identifying and addressing biases within AI algorithms. This not only showcases the potential of quantum computing as a transformative tool for ensuring fairness and equity in AI systems but also significantly advances the discourse on ethical considerations in artificial intelligence.

The contributions of this research extend beyond the development of the Quantum Sentinel, offering profound insights into the ethical and societal ramifications of AI. By delving into the nuanced complexities of AI-induced biases and presenting quantum computing as an essential advancement for tackling these issues, the study enriches the ongoing conversation about the moral responsibilities of AI development and deployment. It paves new avenues for future research at the juncture of quantum computing and artificial intelligence, laying the foundational stones for crafting ethically conscious, equitable AI systems. Through this holistic approach, the research not only addresses the technical challenges posed by AI biases but also highlights the imperative for a balanced digital environment, underscored by fairness, justice, and ethical consideration.

Materials & Methods

An overview of the intersection between quantum computing and artificial intelligence

In recent years, quantum computing and artificial intelligence have become two prominent topics in the field of technology and research. A plethora of studies have been conducted to explore the intricate relationship between quantum computing and artificial intelligence. The significance of quantum computing has been emphasized in the work of Charlesworth (2014), who discussed the comprehensibility theorem and its implications for the foundations of artificial intelligence. Similarly, Mikalef, Conboy & Krogstie (2021) highlighted the potential role of artificial intelligence as an enabler of B2B marketing through a dynamic capabilities micro-foundations approach. Wichert (2016) explored the relationship between artificial intelligence and universal quantum computers, whereas Zhu & Yu (2023) investigated the mutual benefits of quantum and AI technologies.

Quantum artificial intelligence has been approached with caution by the United States, as Taylor (2020) elaborated in his discussion of the precautionary approach adopted by the U.S. towards the development of quantum AI. Nagaraj et al. (2023) conducted a detailed investigation into the potential impact of quantum computing on improving artificial intelligence, revealing promising outcomes. Gigante & Zago (2022) presented the applications of DARQ technologies, which include AI and quantum computing, in the financial sector, emphasizing their utility for personalized banking.

Researchers such as Ahmed & Mähönen (2021) have proposed the use of quantum computing for optimizing AI-based mobile networks. Moret-Bonillo (2014) questioned whether artificial intelligence could benefit from quantum computing and explored the potential advantages. Abdelgaber & Nikolopoulos (2020) provided an overview of quantum computing and its applications in artificial intelligence. Kakaraparty, Munoz-Coreas & Mahbub (2021) discussed the future of mm-wave wireless communication systems for unmanned aircraft vehicles in the era of artificial intelligence and quantum computing.

An application framework for quantum computing using artificial intelligence techniques has been proposed by Bhatia et al. (2020), highlighting the potential synergy between these technologies. Moret-Bonillo (2018) described emerging technologies in artificial intelligence, including quantum rule-based systems. Robson & Clair (2022) discussed the principles of quantum mechanics for artificial intelligence in medicine, with reference to the Quantum Universal Exchange Language (QUEL). Marceddu & Montrucchio (2023) explored a quantum adaptation of the Morra game and its variants, demonstrating the potential of quantum strategies. The major challenges in accelerating the machine learning pipeline with quantum artificial intelligence have been identified in Gabor et al. (2020). Miller (2019) explored the intrinsically linked future for human and artificial intelligence interaction, emphasizing the importance of these technologies. Jannu et al. (2024) proposed energy-efficient quantum-informed ant colony optimization algorithms for industrial Internet of Things.

Gyongyosi & Imre (2019) conducted a comprehensive survey on quantum computing technology, outlining its potential impact on various fields. Chauhan et al. (2022) reviewed how quantum computing can boost AI, highlighting the promise of this technological constructive collaboration. Manju & Nigam (2014) surveyed the applications of quantum-inspired computational intelligence, highlighting its broad range of potential uses. Huang, Qian & Cai (2022) analyzed the recent developments in quantum computer and quantum neural network technology, emphasizing their significance. Gill et al. (2022) discussed emerging trends and future directions for AI in next-generation computing. Sharma & Ramachandran (2021) highlighted the emerging trends of quantum computing in data security and key management. Sridhar, Ashwini & Tabassum (2023) reviewed quantum communication and computing, emphasizing their significance in the current technological landscape.

Bayrakci & Ozaydin (2022) have introduced a novel concept for quantum repeaters in the realm of long-distance quantum communications and the quantum internet. This concept puts forth an entanglement swapping procedure rooted in the quantum Zeno effect (QZE). Remarkably, this approach attains nearly perfect accuracy through straightforward threshold measurements and single-particle rotations. It led to the introduction of quantum Zeno repeaters, which streamline the intricacies of quantum repeater systems and hold promise for enhancing long-range quantum communication and quantum computing in distributed systems.

Shaikh & Ali (2016) surveyed quantum computing in big data analytics, underlining its potential benefits. Singhal & Pathak (2023) explored the future era of computing, emphasizing the role of automatic computing. Long (2012) proposed a novel heuristic differential evolution optimization algorithm based on chaos optimization and quantum computing. Amanov & Pradeep (2023) reviewed the significance of artificial intelligence in the second scientific revolution, emphasizing the role of quantum computing.

Considering the vast body of research, it is evident that scholars from various fields have deeply probed the nexus between quantum computing and artificial intelligence, uncovering significant potential benefits of their integration. This paper aims to further dissect these insights and pinpoint areas still awaiting thorough examination. Despite the rich literature highlighting the constructive collaboration of quantum computing and AI, there are notable areas of concern. A pressing demand exists for research that intertwines quantum mechanics and AI methodologies. Moreover, ethical concerns, especially concerning data security and misuse, have not been sufficiently addressed. As these technologies evolve, the urgency to tackle scalability issues and formulate uniform standards becomes increasingly paramount.

In the rapidly evolving domain of Quantum AI, where quantum algorithms process and predict vast amounts of data, ensuring fairness becomes even more critical. This is especially true when quantum computations, with their potential for exponential speedups, can introduce biases at scales previously unimagined. For instance, consider a quantum-enhanced social media platform that recommends content to users. Using the Disparate Impact (DI) metric, represented by Eq. (1), where Y denotes the model predictions and D the group defined by the sensitive attribute, the platform can gauge whether content recommendations are unfairly skewed towards or against certain demographic groups. The strength of DI is its simplicity, but it does not consider the underlying distribution of true positive and negative instances.

In the realm of quantum-enhanced healthcare, where accurate diagnosis is paramount, the Equal Opportunity Difference (EOD) metric becomes particularly relevant, where True Positive Rate (TPR) calculates the percentage of real positive examples that each group’s classifier properly detected. With its Eq. (2), EOD can help ensure that a quantum diagnostic tool does not miss positive cases more frequently for one demographic than another. While its focus on true positives is commendable, it overlooks the consequences of false positives.

The Statistical Parity Difference (SPD), defined by Eq. (3), where D is the group containing the sensitive attribute and Y are the model predictions, can be applied to a quantum-driven social media advertisement targeting system to ensure that ads are displayed fairly across different user groups. Its direct approach is advantageous, but it does not account for the nuances of true instance distributions.

Lastly, in a quantum healthcare system where both false negatives (missing a diagnosis) and false positives (incorrectly diagnosing a healthy individual) have profound implications, the Average Odds Difference (AOD) metric shines. Eq. (4) offers a comprehensive view of fairness, although it might be more intricate to interpret.
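As a minimal sketch of how these four metrics could be computed, the following Python snippet assumes their commonly used forms: DI as the ratio, and SPD as the difference, of positive-prediction rates between an unprivileged and a privileged group; EOD as the difference in TPR; and AOD as the mean of the differences in false positive rate and TPR. The function name `fairness_metrics`, the 0/1 group encoding, and the toy data are illustrative assumptions rather than part of the study.

```python
import numpy as np

def fairness_metrics(y_true, y_pred, group):
    """Return DI, SPD, EOD and AOD for binary labels and a binary group.

    Assumed encoding (illustrative): group == 0 is the unprivileged
    group and group == 1 is the privileged group.
    """
    y_true, y_pred, group = map(np.asarray, (y_true, y_pred, group))

    def rates(mask):
        pred_pos = y_pred[mask].mean()               # P(Y_hat = 1 | D)
        tpr = y_pred[mask & (y_true == 1)].mean()    # true positive rate
        fpr = y_pred[mask & (y_true == 0)].mean()    # false positive rate
        return pred_pos, tpr, fpr

    pos_u, tpr_u, fpr_u = rates(group == 0)
    pos_p, tpr_p, fpr_p = rates(group == 1)
    return {
        "DI": pos_u / pos_p,
        "SPD": pos_u - pos_p,
        "EOD": tpr_u - tpr_p,
        "AOD": 0.5 * ((fpr_u - fpr_p) + (tpr_u - tpr_p)),
    }

# Toy data: four individuals per group.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]
group = [0, 0, 0, 0, 1, 1, 1, 1]
metrics = fairness_metrics(y_true, y_pred, group)
print(metrics)
```

Under these assumed definitions, a DI of 1 together with SPD, EOD, and AOD values of 0 would indicate parity between the two groups.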

Deciding on the right fairness metric in Quantum AI requires a deep understanding of the application’s context. In sectors like social media, where user satisfaction and engagement are key, metrics like DI and SPD might be more relevant. In contrast, in critical areas like healthcare, where lives are at stake, metrics like EOD and AOD become indispensable. The choice of metric should always align with the specific goals and challenges of the application, ensuring that quantum advancements benefit all equitably.

AI systems, renowned for their adaptive learning prowess, can unfortunately be swayed by biases in their training datasets, potentially resulting in distorted outcomes (Caliskan, Bryson & Narayanan, 2017). By weaving in the principles of quantum mechanics, notably superposition, entanglement, and tunnelling, there is potential to bolster these systems against inherent biases, paving the way for a more steadfast and resilient AI infrastructure. Yet, the journey from quantum-theoretical AI concepts to tangible applications is intricate, calling for breakthroughs in both quantum algorithms and hardware.

Quantum superposition allows a qubit to be in a combination of both |0⟩ and |1⟩ states. This is represented as in Eq. (5),

$$|\psi\rangle = \alpha|0\rangle + \beta|1\rangle, \qquad (5)$$

where $|\alpha|^2$ is the probability of the qubit being in state |0⟩ and $|\beta|^2$ is the probability of being in state |1⟩. In the realm of AI, superposition could mean that a model’s parameter or decision node can be in multiple configurations simultaneously. This allows for more comprehensive exploration during training, which can potentially counterbalance the influence of biased data samples.

Algorithm 1 - Quantum AI Training.

Input: Number of training iterations, N

Parameter: None

Output: Updated AI model

1: Let i = 0.

2: while i < N do

3: Prepare qubit in superposition state.

4: Apply quantum gates to model the AI learning process.

5: Measure the qubit state.

6: Update the AI model based on the measurement.

7: Increment i by 1.

8: end while

9: return AI model

By harnessing superposition, AI models can explore a broader set of potential solutions concurrently. This could help to identify and rectify biases in decisions by comparing outcomes from multiple superimposed states, thus guiding the AI towards more neutral outputs.
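The loop of Algorithm 1 can be sketched as a toy single-qubit statevector simulation in NumPy. Everything specific here is an assumption made for illustration: the "AI model" is reduced to one rotation angle `theta`, the gate in step 4 is a rotation about the y-axis, and the update in step 6 is a hypothetical rule that nudges `theta` until both measurement outcomes are equally likely. It is not the training procedure used in the study.

```python
import numpy as np

HADAMARD = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)

def ry(theta):
    """Single-qubit rotation about the y-axis by angle theta."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

def train(n_iterations, theta=0.8, learning_rate=0.05, seed=0):
    """Toy version of Algorithm 1; theta stands in for the AI model."""
    rng = np.random.default_rng(seed)
    for _ in range(n_iterations):
        state = HADAMARD @ np.array([1.0, 0.0])   # step 3: superposition
        state = ry(theta) @ state                 # step 4: parameterized gate
        p_one = abs(state[1]) ** 2                # probability of outcome 1
        outcome = int(rng.random() < p_one)       # step 5: measure
        theta -= learning_rate * (outcome - 0.5)  # step 6: hypothetical update
    return theta

final_theta = train(2000)
print(final_theta)
```

With this update rule the angle drifts towards zero, the point at which outcomes 0 and 1 occur with equal probability, and then fluctuates around it because each update is driven by a single random measurement.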

Entanglement is a phenomenon where two qubits become interconnected in such a way that the state of one (whether it is |0⟩ or |1⟩) instantly influences the state of the other, represented in Eq. (6).

Considering AI, this interrelation can be harnessed to depict how two features or parameters are interdependent. Recognizing these entangled pairs might aid in understanding hidden relationships and dependencies in the data. Quantum entanglement can be employed to ensure the AI model understands and considers deeply interconnected features cohesively. By recognizing such intricate relationships, the model might be better equipped to resist developing biases based on superficial or isolated data points.

Algorithm 2 - Quantum Entanglement for Cohesive Learning.

Input: Number of training iterations, N

Parameter: None

Output: Updated AI model

1: Initialize two qubits to a separable state.

2: Apply a quantum gate (e.g., a CNOT gate) to entangle them.

3: Let i = 0.

4: while i < N do

5: Measure one qubit.

6: Use the measurement to influence the learning process of the related feature in the AI model.

7: Increment i by 1.

8: end while

9: return AI model
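Steps 1 and 2 of Algorithm 2 can be illustrated with a small NumPy statevector sketch. It assumes the entangling circuit is a Hadamard gate on the first qubit followed by a CNOT, which yields the Bell state (|00⟩ + |11⟩)/√2; the function names are hypothetical, and the pair is re-prepared before every measurement, a simplification of the loop in the algorithm.

```python
import numpy as np

HADAMARD = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)
IDENTITY = np.eye(2)
# CNOT with the first qubit as control; basis order |00>, |01>, |10>, |11>.
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=float)

def bell_state():
    """Steps 1-2: start in |00>, apply H to the first qubit, then CNOT."""
    state = np.array([1.0, 0.0, 0.0, 0.0])
    return CNOT @ np.kron(HADAMARD, IDENTITY) @ state

def sample_pairs(n_shots, seed=0):
    """Measure both qubits of a freshly prepared pair, n_shots times."""
    rng = np.random.default_rng(seed)
    probs = np.abs(bell_state()) ** 2
    probs = probs / probs.sum()          # guard against rounding error
    indices = rng.choice(4, size=n_shots, p=probs)
    return [(int(i) // 2, int(i) % 2) for i in indices]

pairs = sample_pairs(1000)
agreement = sum(a == b for a, b in pairs) / len(pairs)
print(agreement)
```

Each individual outcome is random, yet the two qubits always agree. This perfect correlation is the property the text proposes to use for representing interdependent features.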

The Quantum Zeno Effect (QZE) is rooted in the fundamental principles of quantum mechanics, and it can be utilized for implementing quantum logic operations (Franson & Pittman, 2011). At its core, the QZE posits that by frequently observing a quantum system, its evolution can be inhibited or even halted. This phenomenon can be likened to a watched pot that never boils. In quantum terms, when a system is continuously measured to ascertain if it is in a particular state, the system is "locked" into that state and is prevented from evolving into a different state (Bayrakci & Ozaydin, 2022; Ozaydin et al., 2022; Ozaydin et al., 2023; Bayindir & Ozaydin, 2018; Kraus, 1981; Franson & Pittman, 2011; Ullah, Paing & Shin, 2022; Cacciapuoti et al., 2020; Dotsenko et al., 2011; Zhao & Dong, 2017). This effect arises due to wave function collapse, a fundamental postulate of quantum mechanics, which states that the act of measurement collapses the quantum state into one of the possible eigenstates of the measurement operator.
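The "watched pot" behaviour can be made concrete with the textbook single-qubit illustration of the QZE, sketched below in Python. This is a generic example rather than the simulation used in this study: it assumes a coherent rotation that would carry |0⟩ to |1⟩ over a total angle of π/2, interrupted by equally spaced projective measurements, and the function name is hypothetical.

```python
import numpy as np

def survival_probability(total_angle, n_measurements):
    """Probability that a qubit starting in |0> is found in |0> at every
    one of n equally spaced projective measurements, while a rotation by
    total_angle (in the |0>, |1> plane) tries to move it towards |1>."""
    step = total_angle / n_measurements
    # Rotating |0> by `step` gives cos(step)|0> + sin(step)|1>, so each
    # measurement finds |0> with probability cos(step)**2 and resets it.
    return np.cos(step) ** (2 * n_measurements)

for n in (1, 10, 100, 1000):
    print(n, survival_probability(np.pi / 2, n))
```

With a single measurement at the end, the qubit has left |0⟩ almost with certainty, whereas the survival probability approaches 1 as the number of intermediate measurements grows.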

In the context of AI bias correction using quantum computing, the QZE’s working principle offers a compelling advantage. By frequently measuring the quantum representation of an AI model, one can effectively "lock" the model into a state of fairness, preventing it from drifting into biased configurations (Kraus, 1981). This continuous monitoring and adjustment mechanism could be the best approach because it addresses bias at the quantum level, ensuring that fairness is ingrained into the very fabric of the AI model’s evolution. Traditional methods often tackle bias post-training or during data preprocessing, but the QZE offers a dynamic, real-time correction mechanism that is deeply embedded in the model’s training process. As the undesired evolution of a quantum system is slowed down or even frozen via the quantum Zeno effect (Franson & Pittman, 2011), the system being trained can be made to adhere to the desired fairness in a sustainable way.

QSVMs operate by finding the hyperplane that best divides a dataset into classes. In the quantum version, this process is expedited by leveraging quantum parallelism. To integrate the QZE, during the training phase of the QSVM, frequent measurements can be made to ensure that the quantum state representing the hyperplane remains unbiased. If any bias is detected, quantum logic operations can be applied to correct the trajectory of the hyperplane, ensuring that the final model is fair.

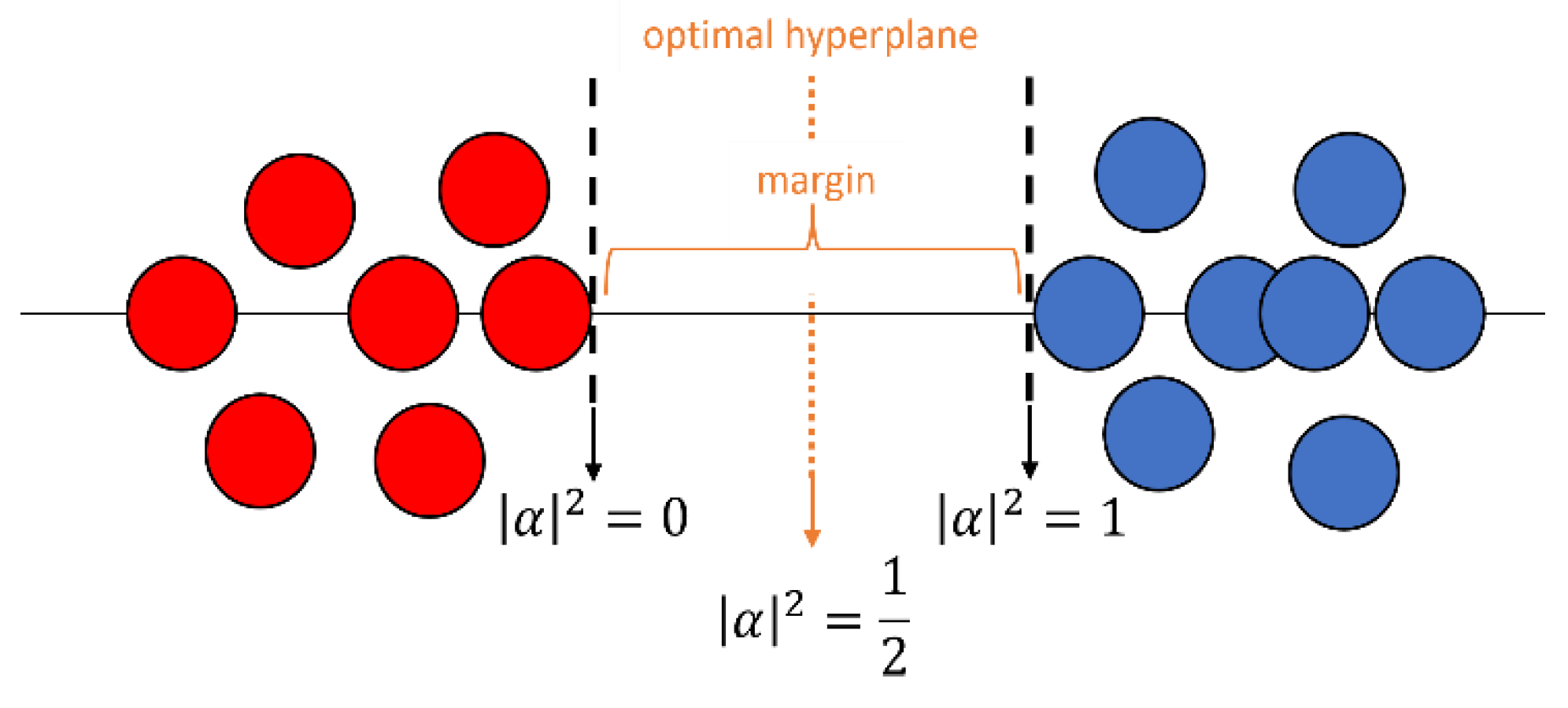

To present a concrete example of how the QZE can inhibit undesired bias, we have developed the following three quantum simulations. We consider a hyperplane that is optimal in maximizing the margin for classification of data, as illustrated in Figure 1, and assume that the hyperplane is defined by the superposition coefficient α of a qubit in the state presented in Eq. (5), such that the hyperplane is optimal for the maximum superposition case at α = 1/√2.

For each simulation, we consider one of the three basic types of bias, where the hyperplane i) drifts towards the blue data points, ii) drifts towards the red data points, or iii) performs a random walk around the optimal point at α = 1/√2. We associate the following physical models, respectively, acting on the qubit that would lead to these bias types: the amplitude damping channel (ADC), which decreases α; the amplitude amplifying channel (AAC), which increases α; and a simple combination of amplitude damping and amplitude amplifying, which randomly increases and decreases α.

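A set of Kraus operators {K_i} acts on the density matrix ρ of a quantum system, describing its evolution as

$$\rho \rightarrow \sum_i K_i \, \rho \, K_i^{\dagger}, \tag{6}$$

where $K_i^{\dagger}$ is the conjugate transpose of the operator $K_i$. The Kraus operators corresponding to the ADC are given as

$$K_0 = \begin{pmatrix} 1 & 0 \\ 0 & \sqrt{1-p} \end{pmatrix}, \qquad K_1 = \begin{pmatrix} 0 & \sqrt{p} \\ 0 & 0 \end{pmatrix}, \tag{7}$$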

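and the Kraus operators corresponding to the AAC are

$$K_0 = \begin{pmatrix} \sqrt{1-p} & 0 \\ 0 & 1 \end{pmatrix}, \qquad K_1 = \begin{pmatrix} 0 & 0 \\ \sqrt{p} & 0 \end{pmatrix}. \tag{8}$$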

Each simulation consists of n iteration steps in which the physical model of the considered bias type applies with a randomly selected value for probability in the range 0 ≤ p ≤ 0.05.

To protect the classifier hyperplane from bias, we consider frequent measurements to implement the QZE as follows. We frequently measure whether the qubit is in the original maximal superposition state with α = 1/√2. Due to bias, the qubit is found outside the initial state with an exceedingly small probability ϵ ≈ 0. With near-unit probability 1−ϵ, however, the qubit is projected back onto the initial superposition state by the collapse of the wavefunction to that state.

For each simulation, we consider four scenarios that reflect the impact of the frequency of the measurement, which is at the heart of the Zeno effect. Taking the iteration steps as the time scale, we consider the case in which no Zeno measurement is performed, i.e., no QZE is implemented, and cases with a measurement period of j, i.e., a Zeno measurement is performed every j steps.

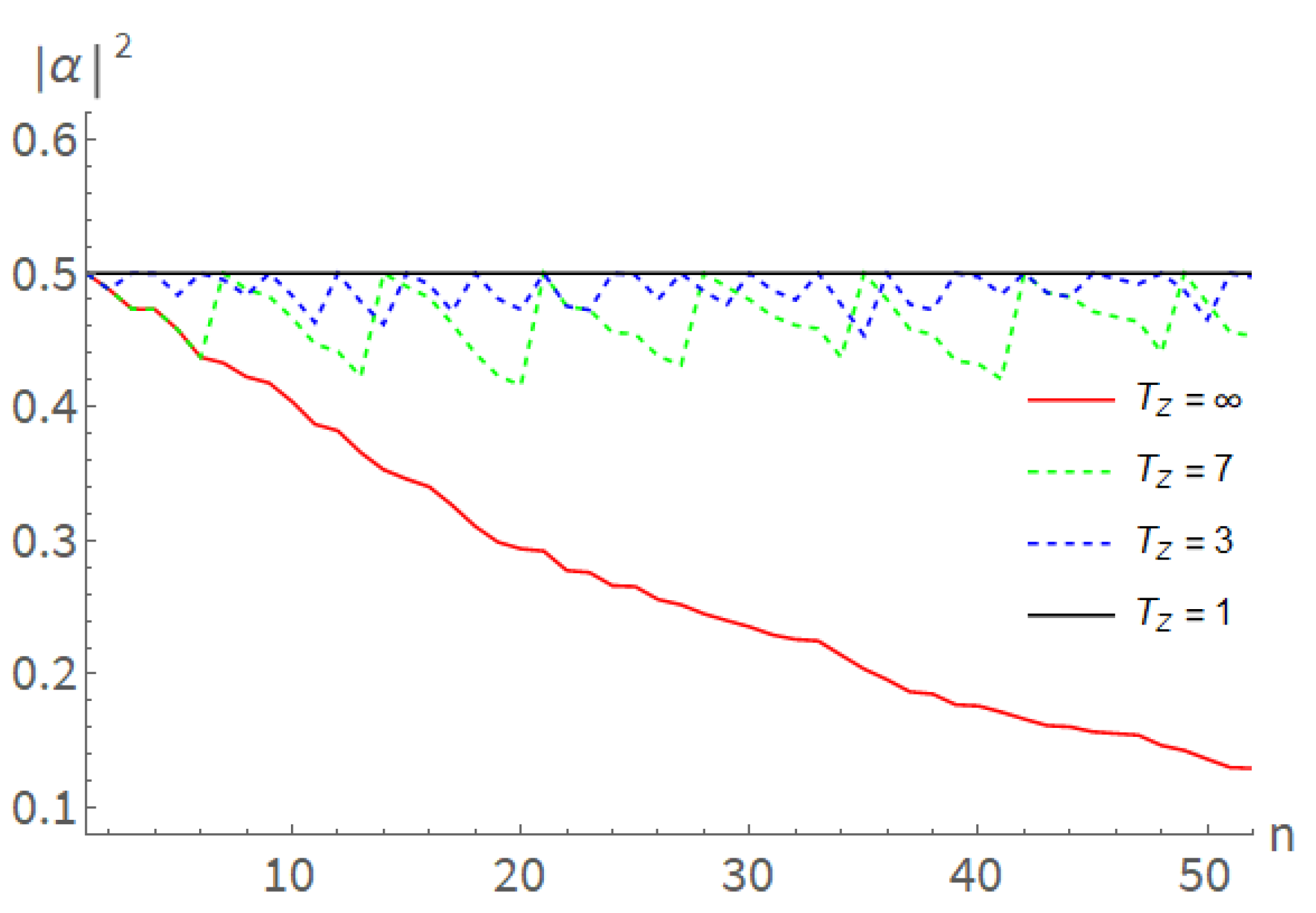

In the first simulation, which applies the ADC with a randomly selected p in each iteration, the superposition coefficient corresponding to the SVM hyperplane approaches 1 if no QZE is implemented, as shown in Figure 2. However, performing frequent measurements limits the bias, and if the measurement is applied in every step, the hyperplane is perfectly protected from the undesired bias.

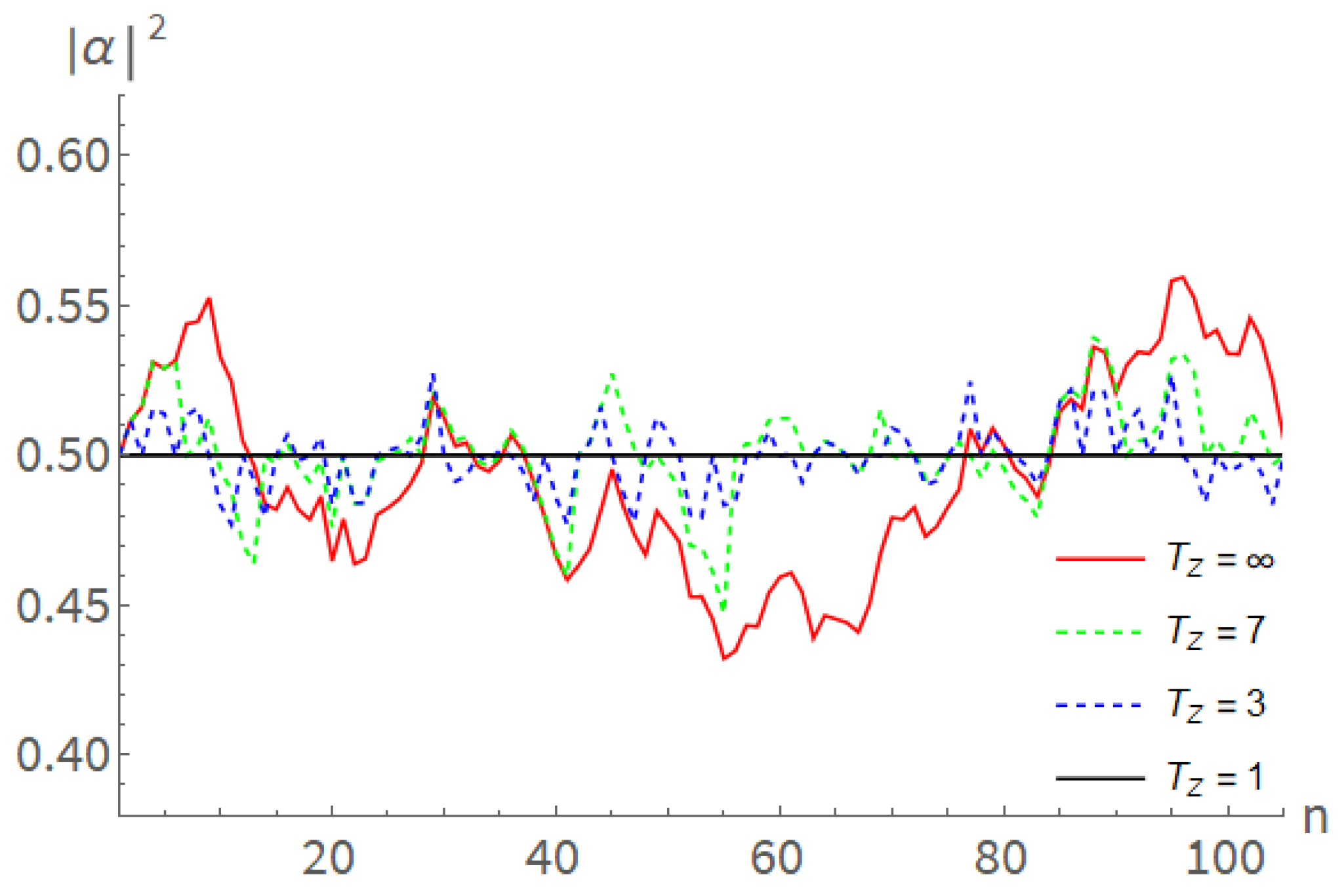

Similarly, Figure 3 and Figure 4 show that the QZE helps keep the hyperplane unbiased in the second simulation, which applies the AAC with a randomly selected p in each iteration and biases the hyperplane towards the red data points, and in the third simulation, which applies both the ADC and the AAC, each with a randomly selected p in each iteration, and results in a random-walk bias of the hyperplane around its optimum, respectively.

In this particular example, the simulation results show how the QZE can inhibit undesired bias in a quantum SVM, while in a broader sense they indicate the potential role of the QZE in sustainable fair learning.
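The three simulations can be approximated classically with a single-qubit density matrix. The following is a minimal sketch, not the simulation code behind the figures. It assumes the standard amplitude damping Kraus operators, takes the AAC to be the mirror image of the ADC, starts from the maximal superposition (|0⟩ + |1⟩)/√2, follows only the success branch of each Zeno measurement, and uses the population of |0⟩ as a proxy for the hyperplane coefficient. The names `simulate`, `period`, and `channel`, as well as the step count and periods in the loop, are illustrative choices.

```python
import numpy as np

PLUS = np.array([1, 1], dtype=complex) / np.sqrt(2)   # maximal superposition
RHO_PLUS = np.outer(PLUS, PLUS.conj())

def adc_kraus(p):
    """Standard amplitude damping: |1> decays to |0> with probability p."""
    return [np.array([[1, 0], [0, np.sqrt(1 - p)]], dtype=complex),
            np.array([[0, np.sqrt(p)], [0, 0]], dtype=complex)]

def aac_kraus(p):
    """Assumed mirror image of the ADC: |0> is pumped to |1> with probability p."""
    return [np.array([[np.sqrt(1 - p), 0], [0, 1]], dtype=complex),
            np.array([[0, 0], [np.sqrt(p), 0]], dtype=complex)]

def apply_channel(rho, kraus):
    """rho -> sum_i K_i rho K_i^dagger."""
    return sum(k @ rho @ k.conj().T for k in kraus)

def simulate(n_steps, period=None, channel="adc", seed=0):
    """Return the |0> population after each step and the cumulative
    probability that every Zeno measurement succeeded.

    period=None means no Zeno measurement; period=j measures every j steps.
    """
    rng = np.random.default_rng(seed)
    rho = RHO_PLUS.copy()
    survival = 1.0
    pop0 = []
    for step in range(1, n_steps + 1):
        p = rng.uniform(0.0, 0.05)            # random strength, 0 <= p <= 0.05
        if channel == "adc":
            kraus = adc_kraus(p)
        elif channel == "aac":
            kraus = aac_kraus(p)
        else:                                  # random-walk bias: pick one at random
            kraus = adc_kraus(p) if rng.random() < 0.5 else aac_kraus(p)
        rho = apply_channel(rho, kraus)
        if period is not None and step % period == 0:
            # Probability of finding the qubit in the initial state.
            survival *= float(np.real(PLUS.conj() @ rho @ PLUS))
            rho = RHO_PLUS.copy()              # success branch: collapse to |+>
        pop0.append(float(np.real(rho[0, 0])))
    return np.array(pop0), survival

for period in (None, 10, 5, 1):
    populations, survival = simulate(200, period, "adc")
    print(period, round(populations[-1], 3), round(survival, 3))
```

Returning the cumulative success probability alongside the population makes it possible to check how strongly the protection relies on every measurement finding the qubit in its initial state.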

QNNs are quantum analogs of classical neural networks. They utilize qubits instead of classical bits and quantum gates instead of classical activation functions. In the context of the QZE, as the QNN evolves and learns from data, continuous quantum measurements can be made on the qubits representing the network’s weights and biases. If any qubit begins to exhibit biased behavior, quantum logic operations can be applied to rectify it. This ensures that the QNN remains fair throughout its training process. Implementing these algorithms in the context of AI bias correction would necessitate a hybrid quantum-classical approach. The quantum computer would manage the quantum aspects of the algorithms, such as the QZE-based measurements and corrections, while the classical computer would manage data preprocessing, results interpretation, and other non-quantum tasks. The iterative process of measurement, coupling, and logic operations would form the core of the quantum component of the implementation.

The Quantum Zeno Effect, when combined with quantum algorithms like QSVM and QNN, offers a robust and dynamic approach to AI bias correction. By addressing bias at its root and providing real-time corrections, this method holds the potential to revolutionize fairness in AI, ensuring that AI models are both accurate and ethically sound.

In conclusion, these quantum principles, while challenging to explicitly implement due to the nascent stage of quantum computing, provide a rich tapestry of concepts that can be metaphorically and, in the future, practically applied to address the persisting challenge of bias in AI systems. By leveraging the multi-dimensional capacities of quantum mechanics, there’s potential for developing AI models that are not only more robust but also ethically sound.

Quantum mechanics, with its inherent probabilistic nature, offers a unique approach to quantify the uncertainty and risk inherent in AI systems. This uncertainty arises from both the model’s inherent limitations and from the data it is trained on. Bias in AI, often seen as a deterministic issue, can be more thoroughly understood when viewed from the probabilistic lens provided by quantum mechanics. Here is an exploration of how quantum principles can be used to understand and mitigate these uncertainties and risks.

Traditional AI models produce probabilities based on training data and the model’s architecture. Quantum systems, however, describe probabilities using wave functions, with the squared modulus of the amplitude giving the likelihood of a particular outcome, as shown in Eq. 9:

$$P(x) = |\psi(x)|^2, \tag{9}$$

where P(x) is the probability density of outcome x and ψ(x) is the wave function for that outcome. This method draws on quantum mechanics to assess uncertainty in AI model predictions. Starting with the AI model’s state represented as a quantum state, the process evaluates each potential prediction outcome, x. For each outcome, it determines its quantum probability amplitude, a complex number whose squared modulus gives the likelihood of that outcome. To derive a tangible probability, the squared modulus of this amplitude is taken, resulting in the probability density P(x) given by Eq. 9. This quantum-derived probability is then contrasted with the traditional probability from a standard AI model. The method concludes by returning a set of comparison results, shedding light on the differences between quantum and classical probability measures.

By using quantum probability amplitudes, AI systems can capture the inherent uncertainties in predictions in a more nuanced manner, potentially providing richer insights into areas of low confidence or high variability in model outputs.
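The comparison described above can be sketched in a few lines. This is a minimal illustration, assuming the amplitudes ψ(x) are already available as a complex vector and the classical model's probabilities as a real vector. The function names and the choice of total variation distance as the comparison measure are assumptions made for the example.

```python
import numpy as np

def born_probabilities(amplitudes):
    """P(x) = |psi(x)|^2, renormalised in case the input is not unit-norm."""
    amps = np.asarray(amplitudes, dtype=complex)
    probs = np.abs(amps) ** 2
    return probs / probs.sum()

def compare_with_classical(amplitudes, classical_probs):
    """Contrast amplitude-derived probabilities with a classical model's output."""
    quantum = born_probabilities(amplitudes)
    classical = np.asarray(classical_probs, dtype=float)
    difference = np.abs(quantum - classical)
    return {
        "quantum": quantum,
        "classical": classical,
        "abs_difference": difference,
        # Total variation distance: one possible summary of the mismatch.
        "total_variation": 0.5 * float(difference.sum()),
    }

result = compare_with_classical([0.6, 0.8j], [0.5, 0.5])
print(result["quantum"], result["total_variation"])
```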

Risk is often seen as a product of likelihood and impact. Quantum mechanics allows for the simultaneous evaluation of multiple possibilities, which can be leveraged to create a quantum risk matrix. This matrix can measure both the likelihood of a biased decision and its potential impact on outcomes.

|

Algorithm 3 - Quantum Risk Matrices for Evaluating Biased Decisions. |

Input: None

Parameter: None

Output: Quantum risk matrix

1: Initialize a 2D quantum register representing likelihood and impact axes.

2: Apply quantum gates to model the AI decision-making process.

3: Measure the register to evaluate the likelihood and impact of biases.

4: Aggregate measurements to construct a quantum risk matrix.

5: return Quantum risk matrix |

The quantum risk matrix offers a novel method for visualizing and understanding the complex interplay between bias, likelihood, and impact in AI decisions. By mapping these on a quantum plane, one can rapidly assess areas of substantial risk and prioritize interventions.
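Steps 1 to 4 of Algorithm 3 can be mimicked with a classical sampler. The sketch below is illustrative only: in place of step 2 (the gates that model the AI decision process) it takes the joint amplitudes over the likelihood and impact registers as a given input, which is an assumption, and it simulates measurement by sampling from the resulting distribution. The function names and the example amplitudes are hypothetical.

```python
import numpy as np

def quantum_risk_matrix(amplitudes, shots=10_000, seed=0):
    """Estimate a likelihood-by-impact risk matrix from repeated measurements.

    amplitudes[l, i] is the joint amplitude of likelihood level l and
    impact level i; it stands in for the output of the decision circuit.
    """
    amps = np.asarray(amplitudes, dtype=complex)
    probs = np.abs(amps) ** 2
    probs = probs / probs.sum()                   # normalise the register state
    rng = np.random.default_rng(seed)
    counts = rng.multinomial(shots, probs.ravel())  # simulated measurement shots
    return counts.reshape(probs.shape) / shots       # aggregated frequencies

def expected_risk(matrix, likelihood_levels, impact_levels):
    """Risk as likelihood times impact, averaged over the estimated matrix."""
    weights = np.outer(likelihood_levels, impact_levels)
    return float((matrix * weights).sum())

# Hypothetical 3x3 register: rows are low/medium/high likelihood,
# columns are low/medium/high impact.
amps = np.array([[0.6, 0.3, 0.1],
                 [0.3, 0.4, 0.2],
                 [0.1, 0.2, 0.45]])
matrix = quantum_risk_matrix(amps)
print(matrix.round(3))
print(expected_risk(matrix, [1, 2, 3], [1, 2, 3]))
```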

In quantum mechanics, entropy measures the uncertainty of a quantum state. By applying this concept to AI models, one can get a better grasp of the inherent uncertainties in the model’s predictions and decisions:

$$S = -\sum_i P(i) \log P(i), \tag{10}$$

where S is the entropy and P(i) is the probability of outcome i. The Quantum Entropy Algorithm for Model Uncertainty is an approach that borrows concepts from quantum mechanics to assess the uncertainty inherent in AI model predictions. Initially, the AI model’s state is translated into a quantum representation. With the entropy initialized to zero, the algorithm analyzes each potential prediction the model might make. For every predicted outcome i, it determines the associated probability P(i). Using this probability, the algorithm calculates the prediction’s contribution to the overall uncertainty using Eq. 10. This individual contribution is then added to the running total. After iterating through all predictions, the cumulative entropy value offers a comprehensive measure of the model’s overall uncertainty.

In conclusion, the algorithm provides a quantum-inspired metric, with higher values indicating greater uncertainty in the model’s predictions, thereby shedding light on the model’s reliability and trustworthiness.

Quantum entropy offers a rigorous metric for gauging the uncertainty inherent in an AI system. By evaluating this, stakeholders can more precisely understand the reliability and confidence level of AI predictions.
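As a minimal sketch of the entropy calculation, the function below accumulates −P(i) log P(i) over the outcomes, and a second function applies the same formula to the eigenvalues of a density matrix. The logarithm base (2 here, giving bits) and the function names are assumptions made for the example.

```python
import numpy as np

def prediction_entropy(probs, base=2.0):
    """S = -sum_i P(i) log P(i); outcomes with P(i) = 0 contribute nothing."""
    p = np.asarray(probs, dtype=float)
    p = p / p.sum()
    nonzero = p[p > 0]
    return float(-(nonzero * np.log(nonzero)).sum() / np.log(base)) + 0.0

def density_matrix_entropy(rho, base=2.0):
    """Same formula applied to the eigenvalues of a density matrix."""
    eigenvalues = np.linalg.eigvalsh(np.asarray(rho, dtype=complex))
    return prediction_entropy(np.clip(eigenvalues, 0.0, None), base)

print(prediction_entropy([0.25, 0.25, 0.25, 0.25]))   # uniform over 4 outcomes
print(prediction_entropy([0.97, 0.01, 0.01, 0.01]))   # confident prediction
print(density_matrix_entropy(np.eye(2) / 2))          # maximally mixed qubit
```

A uniform distribution gives the largest value for a given number of outcomes, while a confident prediction gives a value close to zero.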

To conclude, integrating quantum principles to measure uncertainty and risk in AI systems provides a more holistic and rigorous approach than classical methods. While the practical integration of these concepts remains a significant challenge due to the nascent state of quantum computing, their theoretical implications can reshape our understanding of bias, uncertainty, and risk in AI.

Grover’s algorithm in bias detection

In the context of AI bias, erroneous decisions often arise due to uncertainties or limited data, especially when that data lacks representation from marginalized groups. Because quantum computing can represent many data scenarios in superposition, it may help make decisions more inclusive and reduce the potential for bias.

In the realm of AI, detecting biases can be viewed as searching for an anomalous piece of information in an unsorted dataset. Consider a dataset of N items, with a marked item representing biased data. Grover’s algorithm provides a quantum advantage by searching for this marked item with O(√N) iterations, as opposed to O(N) in a classical scenario. Mathematically, Grover’s algorithm utilizes quantum superposition to prepare a uniform superposition state as shown in Eq. 11:

$$|s\rangle = \frac{1}{\sqrt{N}} \sum_{x=0}^{N-1} |x\rangle. \tag{11}$$

A sequence of Grover operators, each composed of the oracle operator and the Grover diffusion operator, is applied to this state to amplify the amplitude of the marked item. After O(√N) applications, a measurement of the quantum state reveals the marked item with high probability.

In the context of bias detection, this marked item might represent a piece of data or a pattern that introduces bias in AI predictions. Using Grover’s algorithm, one can efficiently detect and isolate these biases, enabling more equitable and reliable AI models.

By harnessing the quadratic speedup provided by Grover’s algorithm, quantum computing promises a more efficient route to navigate the labyrinth of vast datasets in AI, making informed decisions despite missing or uncertain data, and especially locating biases that might otherwise remain hidden in classical computational scenarios.
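The amplitude amplification described above can be illustrated with a small state-vector simulation. This is a sketch under stated assumptions: the "biased" records are given as a list of marked indices, which stands in for an oracle that can recognise bias (constructing such an oracle is the hard part and is not addressed here), and the function name `grover_search` is illustrative.

```python
import numpy as np

def grover_search(n_items, marked):
    """Simulate Grover iterations on a real state vector of n_items amplitudes."""
    marked = list(marked)
    state = np.full(n_items, 1.0 / np.sqrt(n_items))      # uniform superposition
    iterations = int(np.floor(np.pi / 4 * np.sqrt(n_items / len(marked))))
    for _ in range(iterations):
        state[marked] *= -1.0                  # oracle: flip the sign of marked items
        state = 2.0 * state.mean() - state     # diffusion: inversion about the mean
    return state ** 2, iterations              # measurement probabilities

probs, iterations = grover_search(64, [42])
print(iterations, int(np.argmax(probs)), round(float(probs[42]), 4))
```

For 64 items the loop runs only a handful of times, compared with an average of about 32 classical lookups, which is the quadratic speedup in miniature.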

Analogous to the way biases and errors can distort an AI system’s decisions, errors in quantum computations can mislead quantum-based AI models. This is where quantum error-correcting codes, such as the Shor code, become invaluable.

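The Shor code encodes a single logical qubit into nine physical qubits, offering a means to correct both bit-flip and phase-flip errors. The encoding of a qubit state |ψ⟩ can be represented as depicted by Eqs. 12, 13, and 14:

$$|\psi\rangle = \alpha|0\rangle + \beta|1\rangle \;\rightarrow\; \alpha|0_L\rangle + \beta|1_L\rangle, \tag{12}$$

$$|0_L\rangle = \frac{1}{2\sqrt{2}} \left(|000\rangle + |111\rangle\right)^{\otimes 3}, \tag{13}$$

$$|1_L\rangle = \frac{1}{2\sqrt{2}} \left(|000\rangle - |111\rangle\right)^{\otimes 3}. \tag{14}$$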

When a single qubit error occurs, the Shor code uses the redundancy of the encoded state to identify and correct the error, ensuring the quantum state remains intact.

In the context of AI robustness, imagine quantum computations as the underpinnings of an AI’s decision-making process. If these computations are influenced by even minor errors, the resultant decisions can be heavily skewed, amplifying existing biases. By implementing the Shor code, we can protect the quantum computations that inform AI systems, ensuring a level of robustness against perturbations.

Just as error correction is vital in classical computing to maintain data integrity, in a quantum-enhanced AI, the Shor code acts as a guardian against quantum errors, bolstering the reliability and fairness of AI outcomes. Embracing error-correcting methodologies like the Shor code is paramount for the feasibility of quantum-based AI. Without such mechanisms, the potential advantages of quantum computing could be overshadowed by its inherent susceptibility to errors, especially when navigating the intricate challenges of AI biases.
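The single-qubit error correction of the nine-qubit Shor code can be checked with a small state-vector simulation. The sketch below is illustrative and simplified: instead of measuring syndromes with ancilla qubits, it reads each stabilizer's sign directly as an expectation value, which is deterministic for a state carrying at most one Pauli error. All function names are assumptions made for the example.

```python
import numpy as np

N_QUBITS = 9

def apply_x(state, q):
    """Pauli X on qubit q: swap the |0> and |1> slices along axis q."""
    return np.flip(state.reshape((2,) * N_QUBITS), axis=q).reshape(-1)

def apply_z(state, q):
    """Pauli Z on qubit q: negate amplitudes where qubit q is |1>."""
    psi = state.reshape((2,) * N_QUBITS).copy()
    index = [slice(None)] * N_QUBITS
    index[q] = 1
    psi[tuple(index)] *= -1
    return psi.reshape(-1)

def encode(alpha, beta):
    """alpha|0_L> + beta|1_L> with |0_L>, |1_L> built from three GHZ-like blocks."""
    plus = np.zeros(8)
    plus[[0, 7]] = 1 / np.sqrt(2)          # (|000> + |111>)/sqrt(2)
    minus = plus.copy()
    minus[7] *= -1                         # (|000> - |111>)/sqrt(2)
    zero_l = np.kron(np.kron(plus, plus), plus)
    one_l = np.kron(np.kron(minus, minus), minus)
    return (alpha * zero_l + beta * one_l).astype(complex)

def stabilizer_sign(state, pauli, qubits):
    """Sign (+1 or -1) of a product of identical Paulis on the given qubits."""
    out = state
    for q in qubits:
        out = pauli(out, q)
    return int(round(np.vdot(state, out).real))

# Syndrome pair -> position (0, 1, 2) of the faulty qubit or block.
LOCATE = {(1, 1): None, (-1, 1): 0, (-1, -1): 1, (1, -1): 2}

def correct(state):
    """Detect and undo a single bit-flip and/or phase-flip error."""
    for block in range(3):                 # bit flips: Z-type checks per block
        a, b, c = 3 * block, 3 * block + 1, 3 * block + 2
        syndrome = (stabilizer_sign(state, apply_z, [a, b]),
                    stabilizer_sign(state, apply_z, [b, c]))
        if LOCATE[syndrome] is not None:
            state = apply_x(state, 3 * block + LOCATE[syndrome])
    syndrome = (stabilizer_sign(state, apply_x, range(0, 6)),   # phase flips
                stabilizer_sign(state, apply_x, range(3, 9)))
    if LOCATE[syndrome] is not None:
        state = apply_z(state, 3 * LOCATE[syndrome])
    return state

logical = encode(0.6, 0.8j)
damaged = apply_x(apply_z(logical, 4), 4)   # combined bit and phase flip on qubit 4
print(abs(np.vdot(logical, correct(damaged))))
```

The printed overlap between the original and the recovered state indicates whether the correction succeeded.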

Swiftly identifying biases as anomalies in AI outputs is crucial. Quantum techniques excel at analyzing vast datasets for these anomalies, making the process of pinpointing and rectifying biases much faster.

Quantum anomaly detection algorithms can specifically be trained to recognize biases as anomalies, ensuring biases do not go undetected. The quantum phase estimation algorithm can be employed, wherein a unitary operation U is applied to a quantum state, and the phase is estimated. This phase could correspond to potential anomalies in data, allowing for bias detection. Rapid bias detection can prevent unfair decisions from influencing real-world outcomes, ensuring a just application of AI technologies.
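The phase estimation step can be illustrated numerically. This is a minimal sketch that assumes the eigenphase φ of U is simply given. How a dataset or a bias pattern would be encoded into U is not specified here and is left open. The sketch prepares the counting register that the controlled applications of U would produce and applies the inverse quantum Fourier transform via `numpy.fft.fft`. The function name and parameters are illustrative.

```python
import numpy as np

def estimate_phase(phi, n_counting_qubits):
    """Return the most likely phase estimate and the full outcome distribution."""
    m = 2 ** n_counting_qubits
    k = np.arange(m)
    # Counting register after the controlled-U^(2^j) stage for eigenphase phi.
    register = np.exp(2j * np.pi * phi * k) / np.sqrt(m)
    # Inverse QFT: amplitude_y = (1/sqrt(m)) * sum_k register_k * exp(-2*pi*i*k*y/m).
    amplitudes = np.fft.fft(register) / np.sqrt(m)
    probs = np.abs(amplitudes) ** 2
    return int(np.argmax(probs)) / m, probs

estimate, probs = estimate_phase(0.3, 6)
print(estimate, round(float(probs.max()), 3))
```

With t counting qubits the estimate is resolved to within 1/2^t, so more counting qubits give a finer reading of the phase.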

Ensuring AI models are designed with minimal bias from the outset is paramount. Quantum methodologies rigorously evaluate models against bias benchmarks, building trust in their outputs. Quantum validation protocols for AI models can validate models against standards that specifically prioritize fairness and bias minimization. Consider a quantum gate sequence G applied on a state |ψ⟩. Quantum process tomography can be used to verify the accuracy and fairness of this operation, ensuring bias-free model operations. Validating AI models against anti-bias benchmarks ensures that the systems prioritize fairness from their foundation.

Real-time bias detection can prevent the unfair influence of AI recommendations or decisions. Quantum protocols serve as vigilant sentinels, instantly identifying and flagging biases. In this section, the Quantum Fourier Transform (QFT) is presented for enhanced data analysis. The Quantum Fourier Transform is an essential component of many quantum algorithms, including Shor’s algorithm. The QFT, in the quantum realm, serves a similar purpose to the classical Fourier transform – it translates data from the time domain to the frequency domain. Given the vast and intricate nature of data overseen by AI systems, employing QFT could unearth patterns and biases otherwise obscured in the sheer volume of information. For example, recurrent biases might manifest as notable frequencies upon the application of QFT, allowing for clearer identification and subsequent mitigation.
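To make the frequency-domain picture concrete, the following sketch builds the QFT as an explicit unitary matrix and applies it to a state whose amplitudes repeat with a fixed period, a toy stand-in for a "recurrent" pattern in data. The periodic state and the function name are assumptions chosen for illustration.

```python
import numpy as np

def qft_matrix(n):
    """QFT on an n-dimensional register: F[j, k] = exp(2*pi*i*j*k/n) / sqrt(n)."""
    j, k = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    return np.exp(2j * np.pi * j * k / n) / np.sqrt(n)

n = 16
state = np.zeros(n, dtype=complex)
state[::4] = 0.5                  # amplitudes at 0, 4, 8, 12: a pattern with period 4

spectrum = np.abs(qft_matrix(n) @ state) ** 2
peaks = np.flatnonzero(spectrum > 1e-9)
print(peaks, spectrum[peaks].round(3))
```

The transformed state has weight only at multiples of n divided by the period, which is the sense in which a recurring pattern shows up as a notable frequency.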

As users interact with AI models, the outputs need to consistently align with fairness standards. Quantum checks on these interactions can ensure that bias does not creep into real-time recommendations or decisions.

Quantum Volume can also be considered as a measure of a quantum computer’s potential for AI systems. Quantum Volume is a single-number metric that can be used to benchmark the computational capability of quantum computers. It considers gate and measurement errors, qubit connectivity, and crosstalk. A higher quantum volume indicates a more powerful quantum computer.

For AI, understanding the quantum volume of the computational backbone is vital. A high quantum volume means the system can handle more complex AI models and is better suited to tackle the multi-dimensional challenges posed by biases. Thus, ensuring high quantum volume is crucial for the development and validation of AI systems that aim to identify and rectify biases effectively.

The integration of these quantum methods and algorithms offers a promising pathway towards crafting AI models that are both insightful and impartial. They provide the tools to delve deeper, to see clearer, and to act more decisively against biases that might otherwise undermine the fairness and efficacy of AI systems.

Business understanding and data understanding

At this stage, quantum interventions are more about awareness and alerts, and the practical application of bias correction algorithms is not very prominent. However, a preliminary understanding of tools like Synthetic Minority Over-sampling Technique (SMOTE), Adaptive Synthetic Sampling (ADASYN), Generative Adversarial Networks (GAN), and Variational Autoencoders (VAE) can guide the formation of business objectives by clarifying which corrections are possible later.

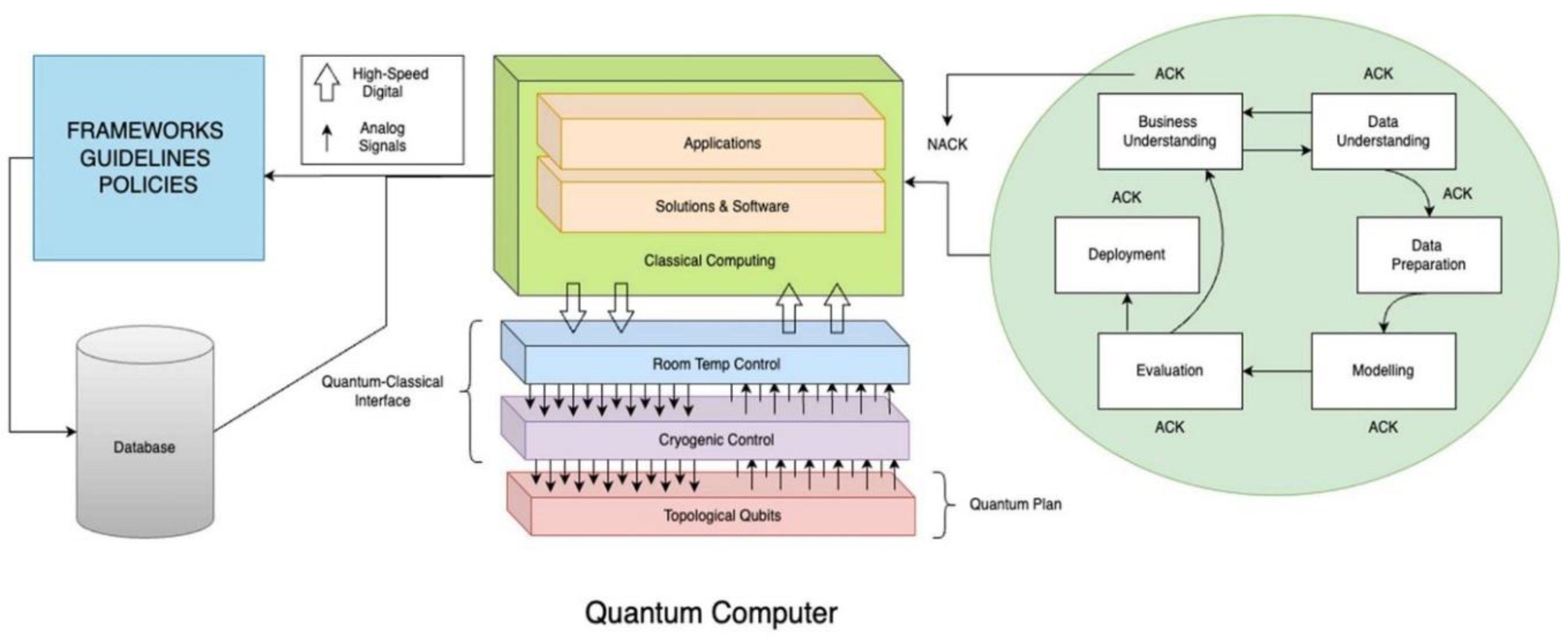

Figure 5 illustrates the integration of a Quantum Sentinel within the widely adopted CRISP-DM architecture, highlighting its high-level structure. In this initial phase, where objectives are defined, and project requirements are understood, the quantum sentinel can be integrated to:

Flag: Alert stakeholders if the project goals have inherent biases or prejudices that might lead to unfair AI outcomes.

Correction: If the objectives are found to be biased, quantum algorithms like Grover’s can help identify the specific bias quickly, enabling stakeholders to adjust the goals accordingly.

ACK/NACK: Once goals are defined or adjusted, the quantum sentinel can provide an acknowledgment (ACK) for bias-free goals or a negative acknowledgment (NACK) if biases persist.

During the phase where data is collected and understood:

Flag: The quantum sentinel examines the data, identifying any biases in data sourcing, sampling, or initial insights. Quantum process tomography can offer insights into the nature and extent of these biases.

Correction: Quantum Fourier Transform (QFT) can be utilized to better understand data distributions and highlight areas that need correction.

ACK/NACK: Post analysis, ACK for unbiased data sets, and NACK for those that require further scrutiny.

While constructing data models:

Flag: If the selected models or algorithms inherently favor certain data patterns or exhibit biases, the quantum sentinel intervenes.

Correction:

- Grover’s algorithm can help in the rapid selection of alternative algorithms that might be more balanced.
- Generative Adversarial Networks (GAN) can be used to generate data that can help in achieving a balanced dataset or to augment data in cases where data is limited.
- Variational Autoencoders (VAE) help in generating new data instances that can balance out biases in the original dataset.
- If data scarcity is the root of the bias, techniques like VAE and GAN can be employed to generate more diverse data.

ACK/NACK: Once models are constructed, the sentinel provides an ACK for those ready for evaluation or a NACK for those that need adjustments.

When the models are being evaluated:

Flag: The quantum sentinel highlights if the evaluation metrics or tests employed overlook certain biases or unfair outcomes.

Correction: Quantum-enhanced techniques, like the Shor code, can be applied to rectify and re-evaluate the model, ensuring its robustness.

ACK/NACK: Post-evaluation, models that meet the fairness criteria receive an ACK, while those falling short receive a NACK. The model can be retrained with corrected data using algorithms like SMOTE, ADASYN, or even GAN and VAE to ensure it is more robust and less biased.

As models are deployed:

Flag: The quantum sentinel monitors real-world model applications, signaling any biases that manifest in real-world scenarios.

Correction: Leveraging Quantum Volume, the sentinel ensures the quantum backbone is robust enough to oversee real-world data, making necessary tweaks in real time. If biases arise in specific scenarios, GAN or VAE can be used to simulate those scenarios better and retrain the model, ensuring that the AI system remains robust against those biases.

ACK/NACK: In the deployment phase, continuous monitoring results in periodic ACKs or NACKs, ensuring the model remains fair throughout its life cycle.

Essentially, integrating a quantum sentinel with the CRISP-DM process ensures a continuous and rigorous check on biases at every step of the data mining process. Also, by integrating this with established algorithms like Synthetic Minority Over-sampling Technique (SMOTE), Generative Adversarial Networks (GAN), Variational Autoencoders (VAE), and Adaptive Synthetic Sampling (ADASYN), this approach provides a multi-layered defense against biases in AI systems. This integrated methodology offers a comprehensive method to ensure fairness and robustness in AI systems by employing quantum systems as sentinels that flag potential issues and then using established algorithms to correct those biases.
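The Flag, Correction, and ACK/NACK cycle can be outlined as a small classical control loop. The sketch below is purely illustrative: the detection and correction steps are plain Python callables standing in for the quantum checks and for techniques such as SMOTE or GAN-based augmentation, and the group-share test, the tolerance, and all names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional

class Verdict(Enum):
    ACK = "ACK"
    NACK = "NACK"

@dataclass
class PhaseCheck:
    name: str                                           # CRISP-DM phase
    detect: Callable[[dict], bool]                      # True means bias is flagged
    correct: Optional[Callable[[dict], dict]] = None    # optional correction step

def run_sentinel(artifact, checks):
    """Flag, optionally correct once, then issue ACK or NACK for each phase."""
    report = {}
    for check in checks:
        flagged = check.detect(artifact)
        if flagged and check.correct is not None:
            artifact = check.correct(artifact)
            flagged = check.detect(artifact)            # re-check after correction
        report[check.name] = Verdict.NACK if flagged else Verdict.ACK
    return artifact, report

def imbalanced(artifact, tolerance=0.2):
    """Hypothetical flag: group shares differ by more than the tolerance."""
    counts = artifact["group_counts"]
    total = sum(counts.values())
    shares = [count / total for count in counts.values()]
    return max(shares) - min(shares) > tolerance

def rebalance(artifact):
    """Stand-in for oversampling: raise every group to the largest group's size."""
    target = max(artifact["group_counts"].values())
    balanced = {group: target for group in artifact["group_counts"]}
    return {**artifact, "group_counts": balanced}

data = {"group_counts": {"group_a": 900, "group_b": 100}}
checks = [PhaseCheck("data understanding", imbalanced, rebalance),
          PhaseCheck("modeling", imbalanced)]
data, report = run_sentinel(data, checks)
print({phase: verdict.value for phase, verdict in report.items()})
```

Keeping detection and correction as separate callables mirrors the division of labour described above, in which the sentinel flags issues and established algorithms perform the correction.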