Submitted: 23 September 2024

Posted: 24 September 2024


Abstract

Keywords:

1. Introduction

2. Physics-Informed Neural Network

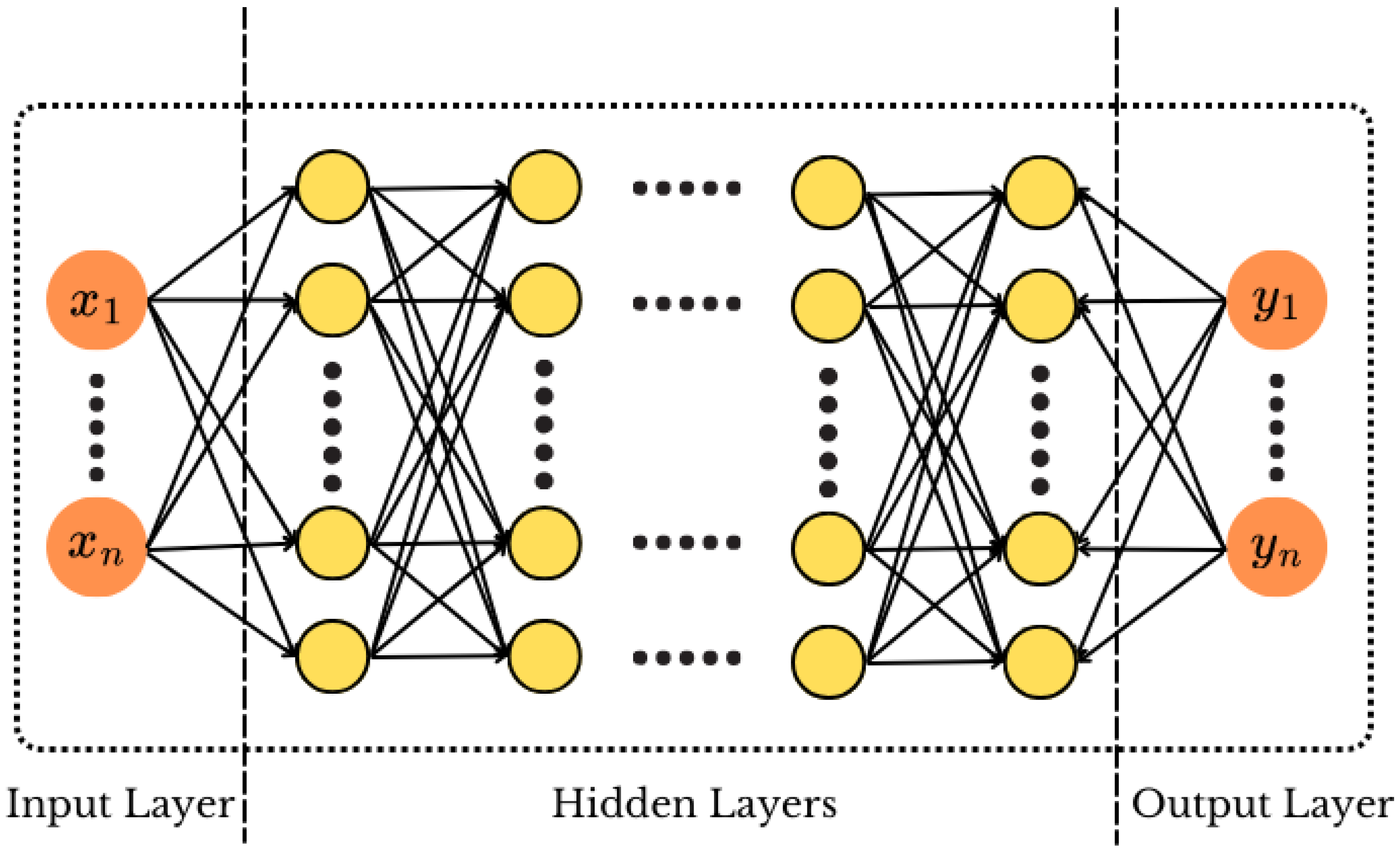

2.1. Artificial Neural Network

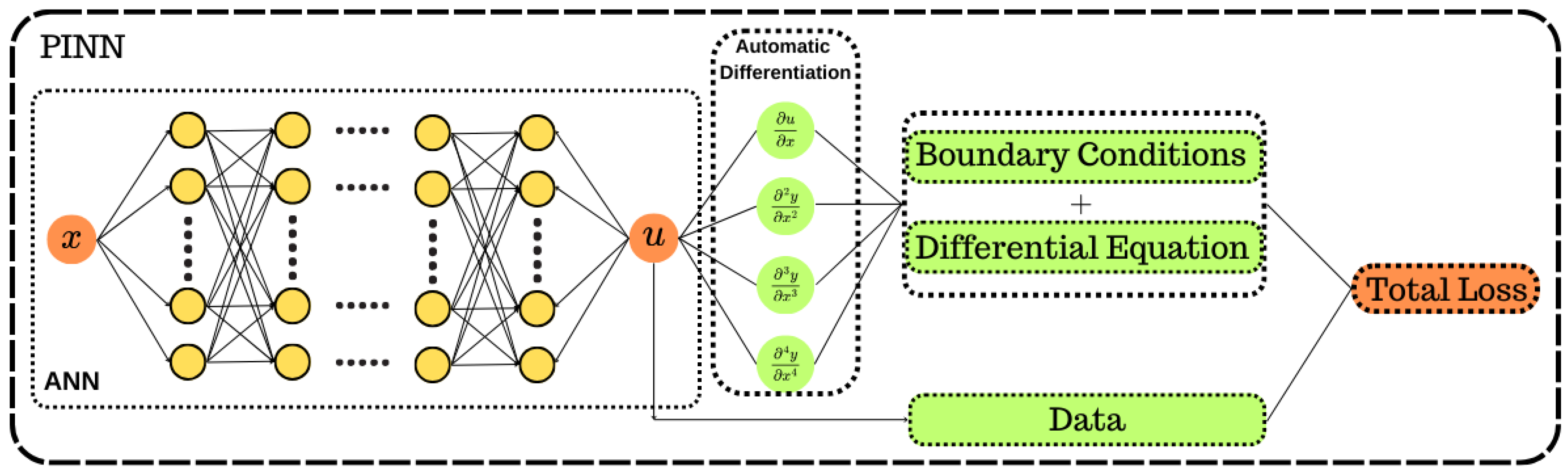

2.2. Physics-Informed Neural Networks (PINNs)

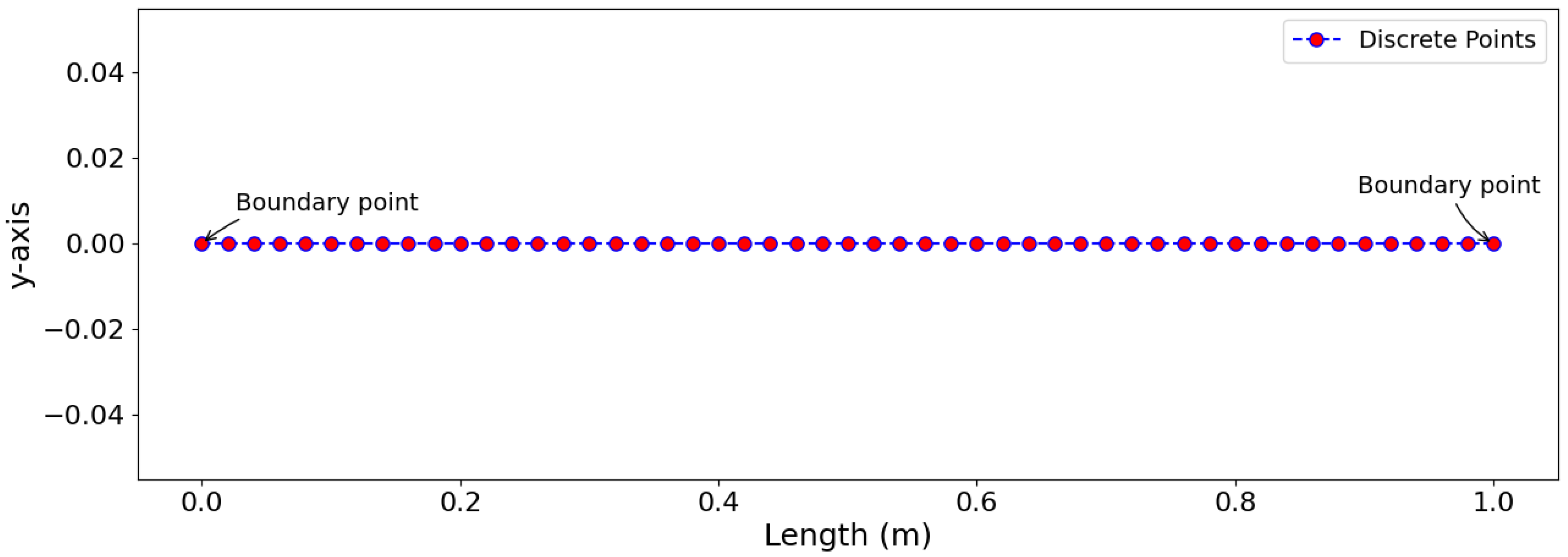

3. PINN for 1D Solid Mechanics Problem

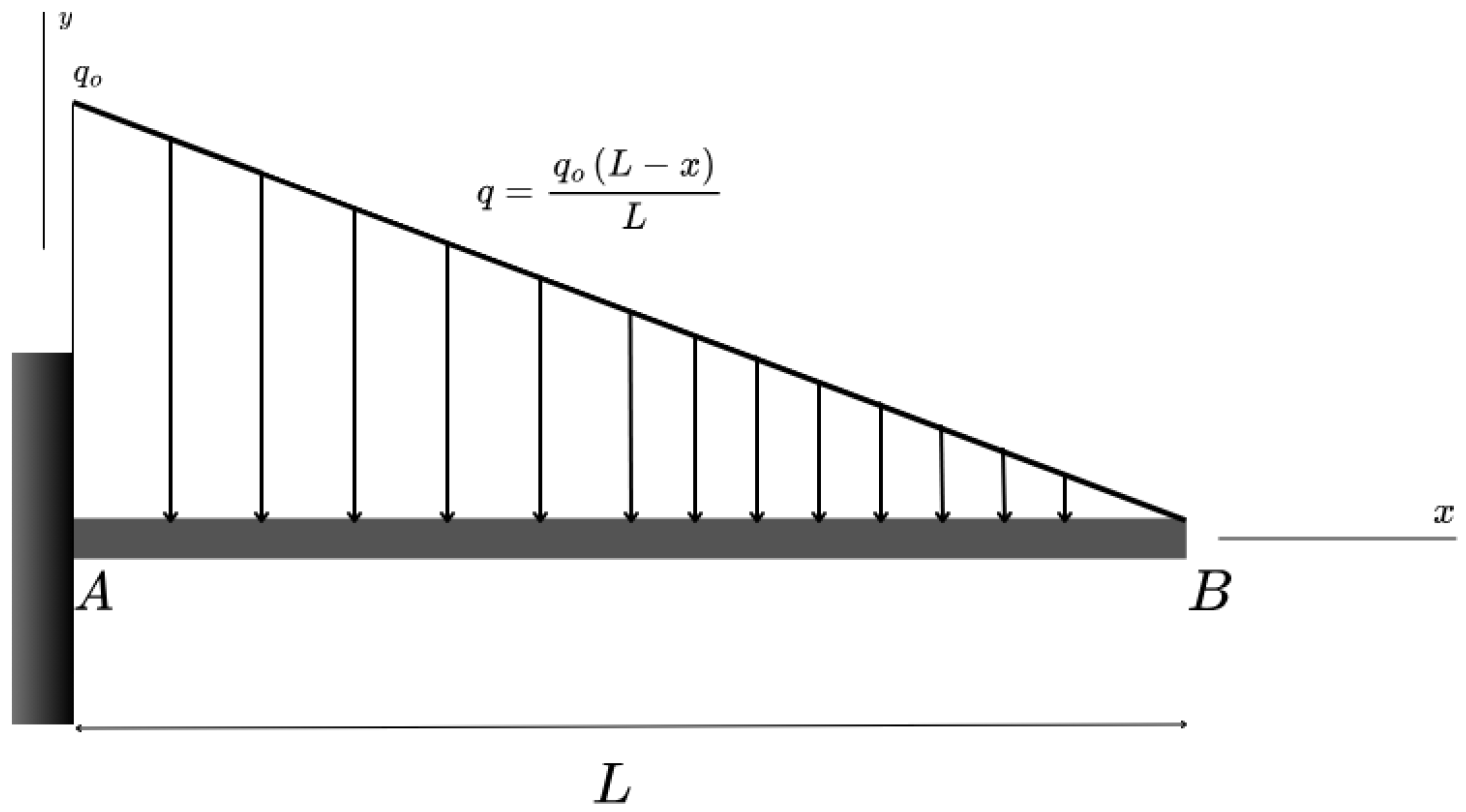

3.1. Problem Definition

3.2. Governing Equations

3.3. Loss Definition

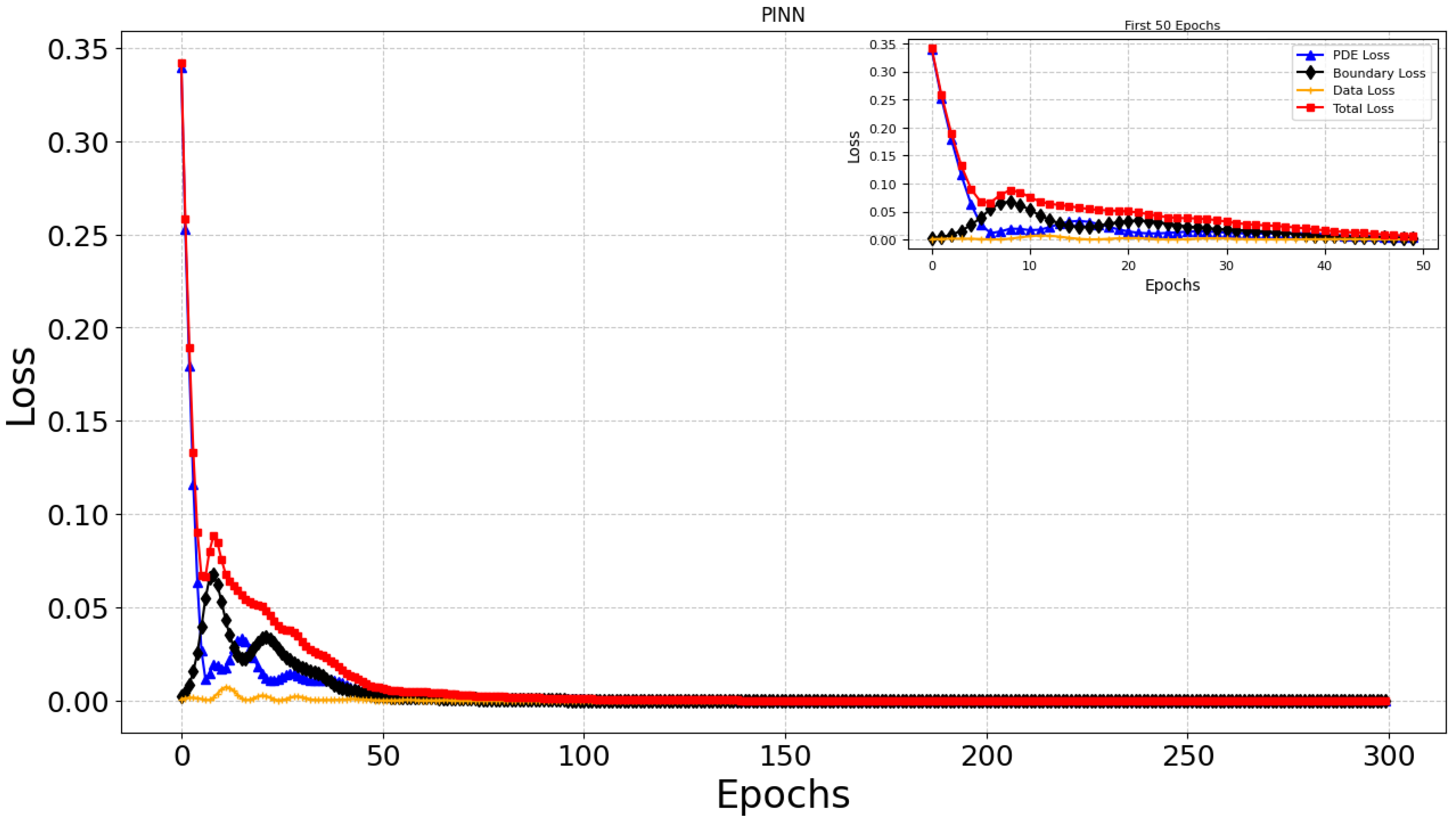

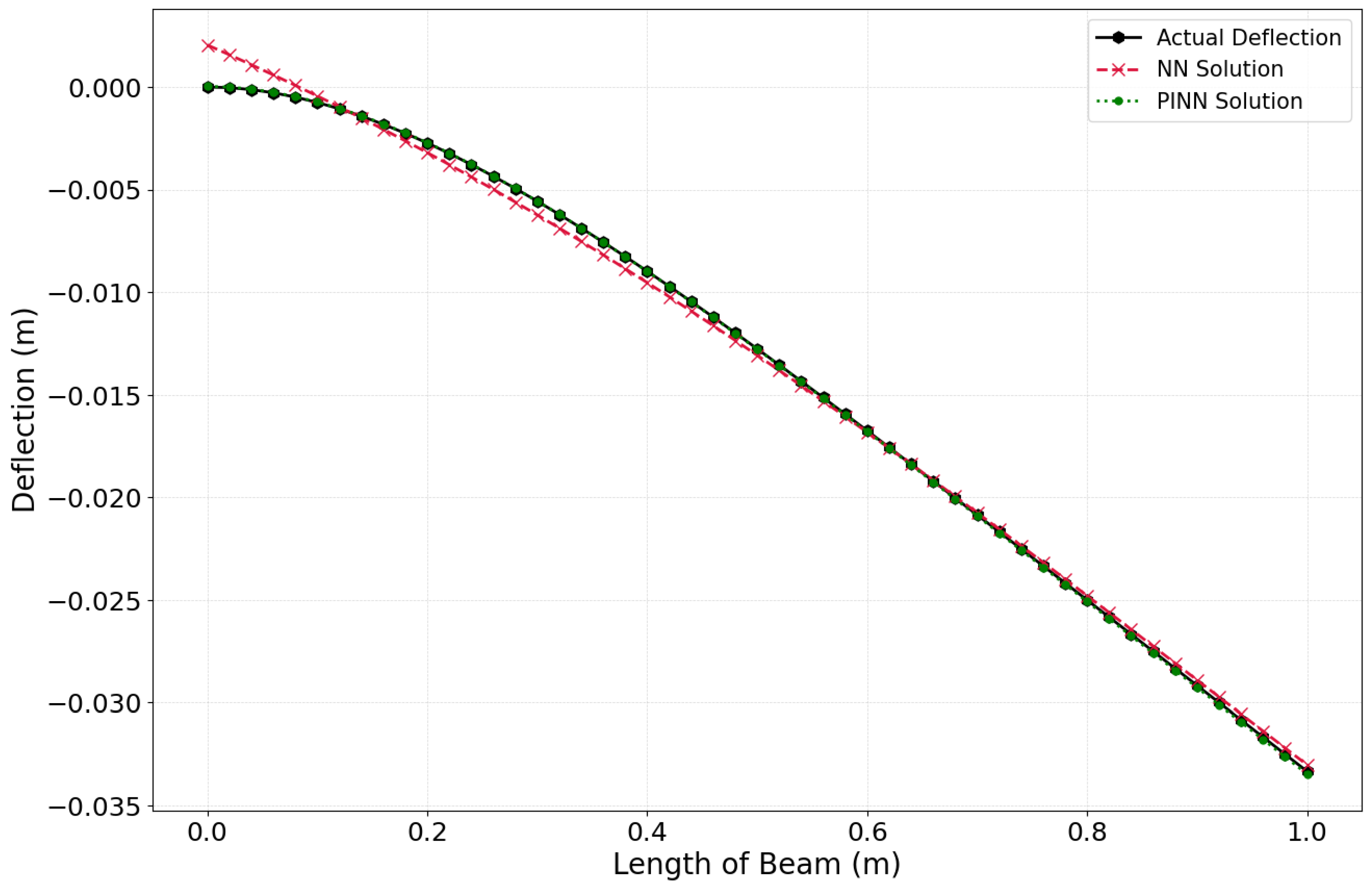

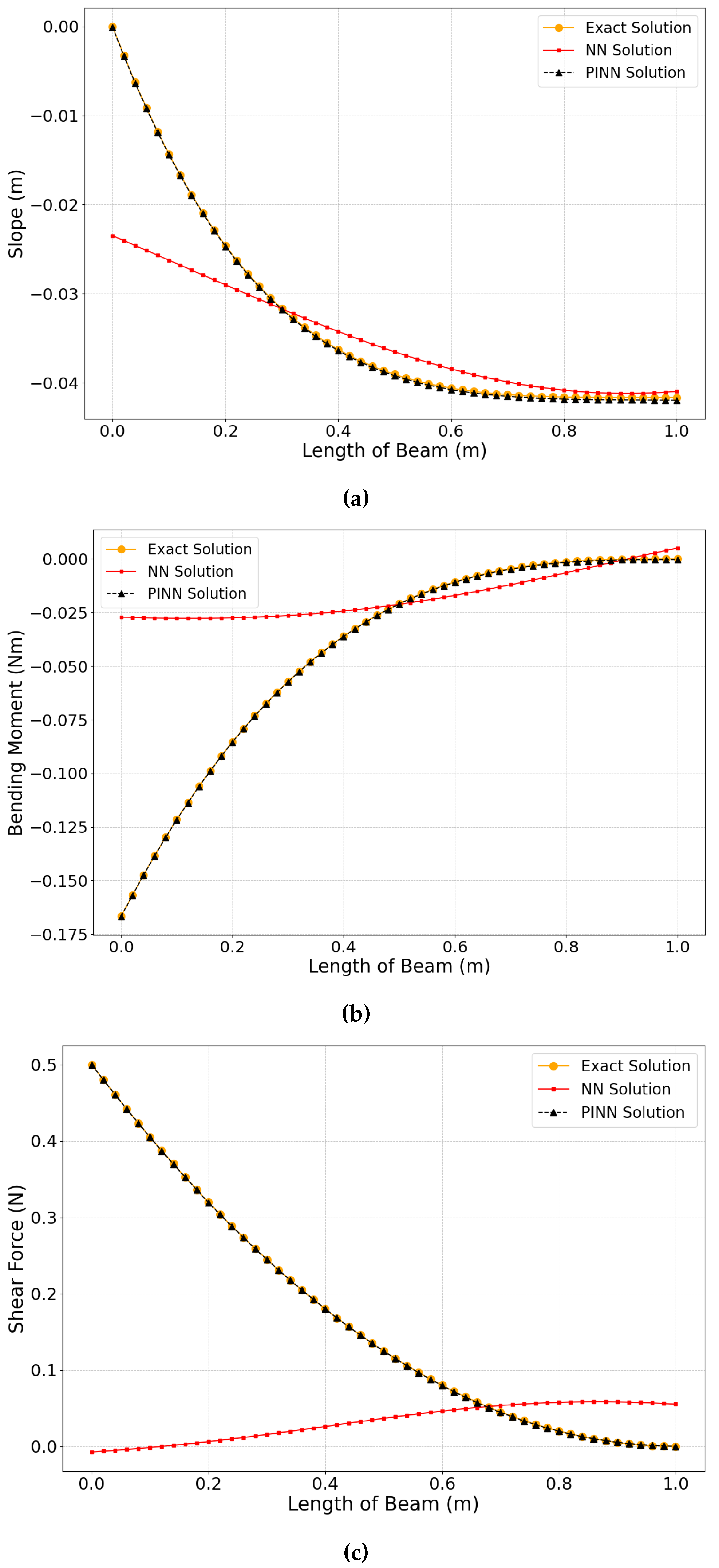

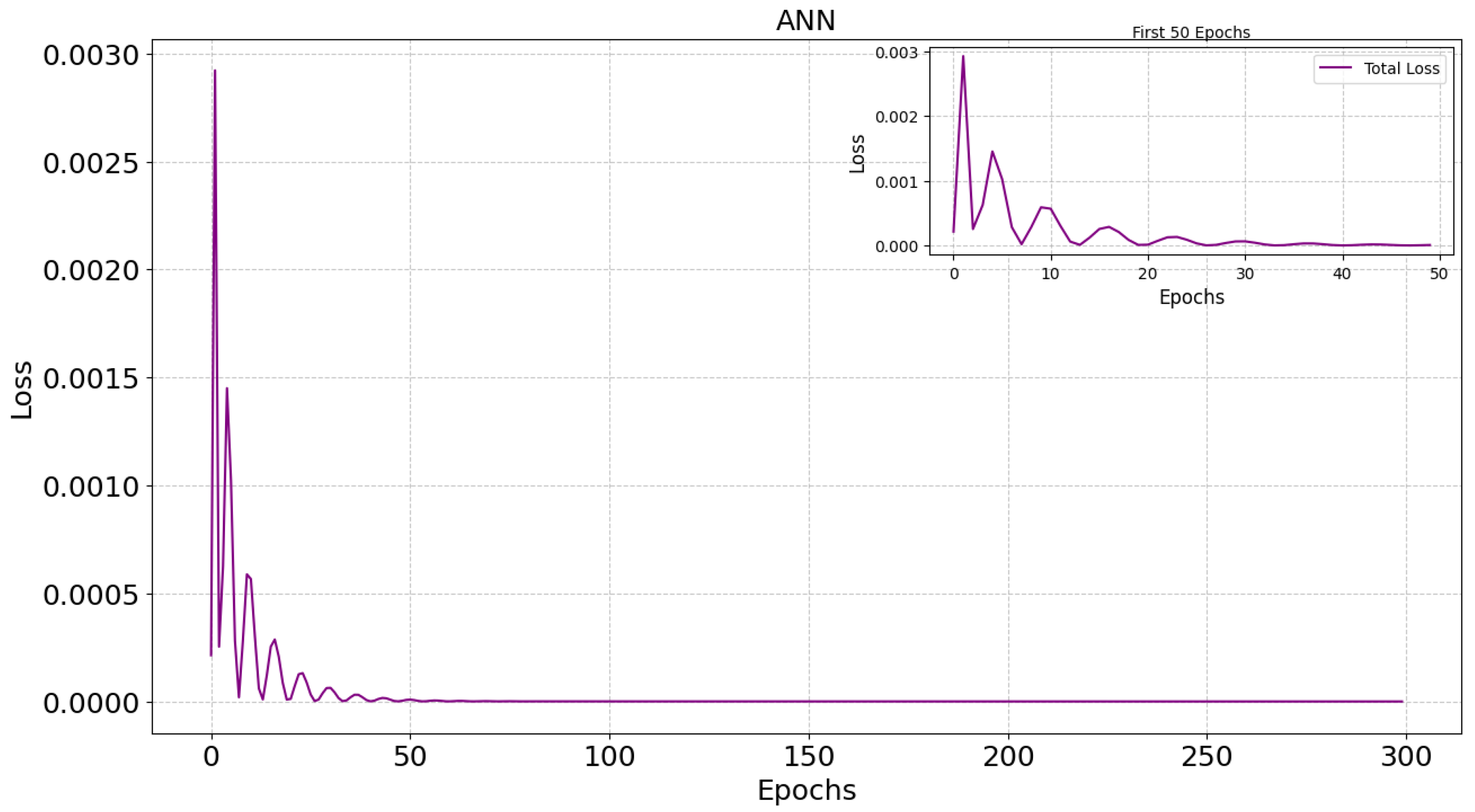

4. Results and Discussion

5. Conclusions

Acknowledgments


| Hyperparameter | Value |
|---|---|
| Epochs | 300 |
| Learning Rate | 0.001 |
| Optimizer | Adam |
| Input Layer | 1 |
| Hidden Layers | 5 |
| Output Layer | 1 |
| Number of Neurons | 50 |
| Activation Function | Tanh |
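The hyperparameter table above can be read as a fully connected network with one input, five hidden layers, and one output. The following is a minimal sketch of that reading in plain Python, not the authors' implementation. It assumes that "Number of Neurons" means 50 neurons in every hidden layer and that Tanh is applied to the hidden layers only. The names `config`, `layer_sizes`, `count_parameters`, `init_network`, and `forward` are hypothetical, and the weight initialisation is an arbitrary choice made only so the forward pass can run.

```python
import math
import random

# Values copied from the hyperparameter table; the dictionary keys are
# hypothetical names chosen for this sketch.
config = {
    "epochs": 300,
    "learning_rate": 1e-3,
    "optimizer": "Adam",
    "input_size": 1,
    "hidden_layers": 5,
    "neurons_per_hidden_layer": 50,  # assumption: 50 neurons in every hidden layer
    "output_size": 1,
    "activation": "tanh",
}


def layer_sizes(cfg):
    """Return the width of every layer, input first and output last."""
    hidden = [cfg["neurons_per_hidden_layer"]] * cfg["hidden_layers"]
    return [cfg["input_size"], *hidden, cfg["output_size"]]


def count_parameters(sizes):
    """Count the weights and biases of a fully connected network."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


def init_network(sizes, seed=0):
    """Create (weights, biases) pairs, one per pair of adjacent layers.

    The uniform initialisation is an assumption made for illustration.
    """
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
                   for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def forward(layers, x):
    """Evaluate the network at a scalar input x (tanh on hidden layers only)."""
    activations = [x]
    for index, (weights, biases) in enumerate(layers):
        z = [sum(w * a for w, a in zip(row, activations)) + b
             for row, b in zip(weights, biases)]
        is_output = index == len(layers) - 1
        activations = z if is_output else [math.tanh(v) for v in z]
    return activations[0]


sizes = layer_sizes(config)
network = init_network(sizes)
print(sizes)                    # [1, 50, 50, 50, 50, 50, 1]
print(count_parameters(sizes))  # 10351
print(forward(network, 0.5))    # untrained prediction at x = 0.5
```

Under these assumptions the network has 10,351 trainable parameters. The epoch count, learning rate, and optimizer entries are carried in `config` but not used here, since the sketch stops short of training.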

| Quantity | ANN | PINN |
|---|---|---|
| Deflection | 3.070e-07 | 3.060e-09 |
| Slope | 4.500e-05 | 2.935e-08 |
| Bending Moment | 0.002 | 5.517e-08 |
| Shear Force | 0.049 | 1.127e-07 |
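The comparison table does not state which metric it reports. Assuming the entries are error values, where smaller is better, the gap between the two models can be expressed as a ratio per quantity. The short sketch below does that arithmetic. The names `results` and `ann_to_pinn_ratio` are hypothetical, and the numbers are copied from the table.

```python
# Values copied from the ANN/PINN comparison table.
# Assumption: each entry is an error measure, so smaller is better.
results = {
    "Deflection": {"ANN": 3.070e-07, "PINN": 3.060e-09},
    "Slope": {"ANN": 4.500e-05, "PINN": 2.935e-08},
    "Bending Moment": {"ANN": 0.002, "PINN": 5.517e-08},
    "Shear Force": {"ANN": 0.049, "PINN": 1.127e-07},
}


def ann_to_pinn_ratio(row):
    """Return how many times larger the ANN value is than the PINN value."""
    return row["ANN"] / row["PINN"]


ratios = {name: ann_to_pinn_ratio(row) for name, row in results.items()}

for name, ratio in ratios.items():
    print(f"{name:15s} ANN/PINN = {ratio:.3g}")
```

Read this way, the ratio is roughly 100 for deflection and grows with each row of the table, reaching the order of 10^5 for shear force.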

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).