1. Introduction

Large Language Models (LLMs) have emerged as a pivotal technology in the field of natural language processing (NLP), demonstrating remarkable performance and a wide range of promising applications. Due to their strong capabilities in context understanding and text generation [

1], LLMs are adept at capturing subtle distinctions in text and producing coherent and natural language. Additionally, LLMs exhibit impressive few-shot learning capabilities [

2], enabling them to quickly adapt to new tasks with minimal fine-tuning [

3], effectively mitigating challenges associated with data scarcity. However, with the accelerated development of these models, a concerning issue has surfaced: LLMs occasionally generate nonsensical or contextually irrelevant text, a phenomenon referred to as hallucination [

4,

5]. Hallucinations significantly compromise the reliability of LLMs in practical applications. For instance, in medical settings where large models are used to prescribe medications [

6], hallucinations or inaccurate judgments could potentially worsen a patient’s condition or even pose life-threatening risks.

The occurrence of hallucinations in LLMs can be attributed to several factors, including issues related to data, training, and inference [

7]. At the data level, flawed data sources or improper utilization, such as misinformation and inherent biases [

8,

9], can cause LLMs to mimic false information and produce biased outputs, resulting in various types of hallucinations. During the training phase, both pre-training and alignment stages present unique challenges, such as limitations in model architecture, the use of maximum likelihood estimation (MLE) [

10,

11] strategies, and discrepancies between the model’s capabilities and its learned beliefs, all of which can contribute to hallucinations. Finally, during the decoding process, the stochastic nature of decoding strategies [

12] and limitations in representation, such as over-reliance on local context and the softmax bottleneck [

13], may restrict the model’s ability to generate diverse probabilistic outputs, increasing the likelihood of inaccurate token predictions.

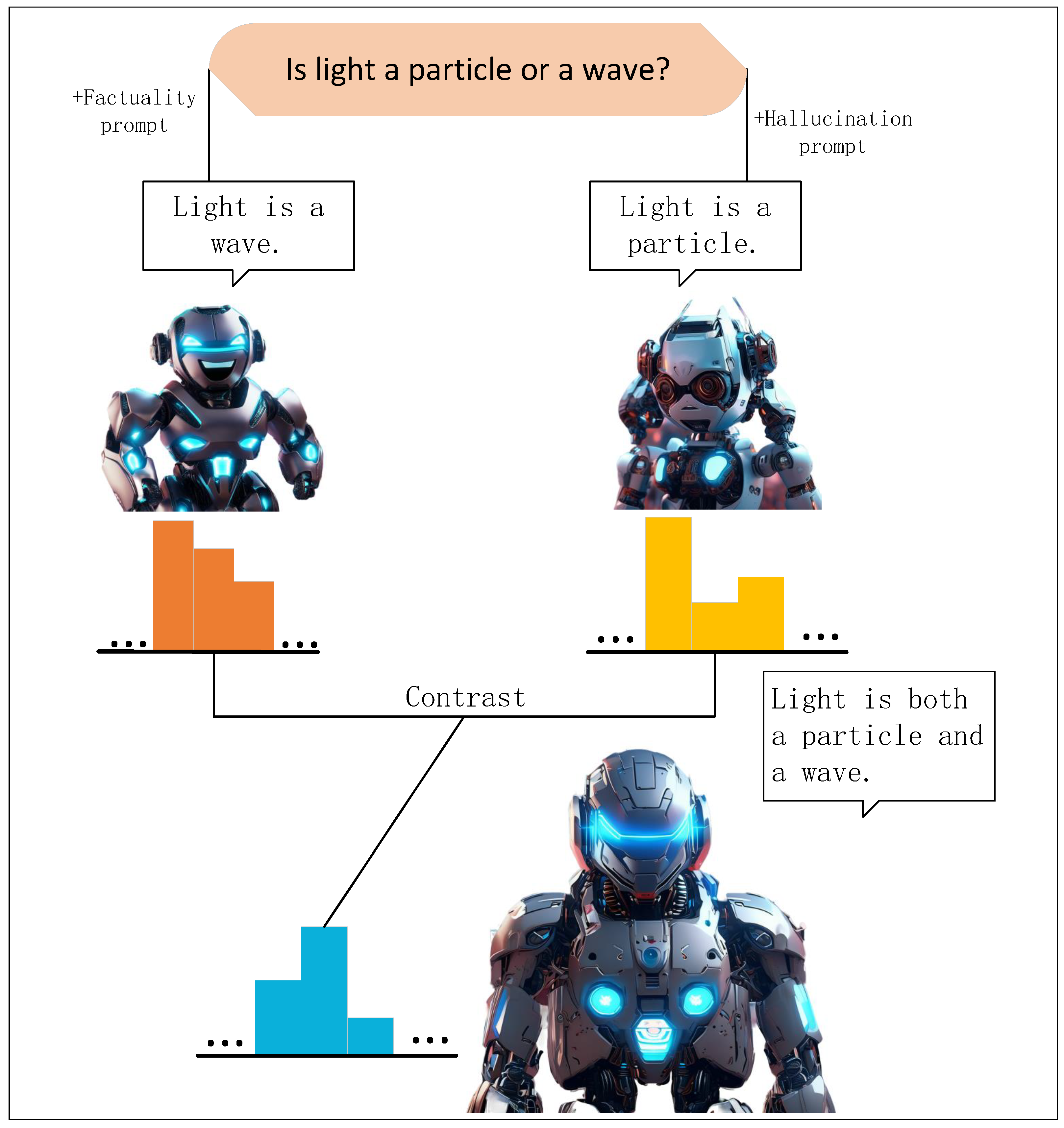

Our research focuses on utilizing prompt engineering techniques [

14], combined with contrastive decoding [

15] strategies, to reduce hallucinations in large language models. Prompt engineering involves the systematic design and optimization of input prompts to guide the model’s responses, ensuring accuracy, relevance, and coherence in the generated output [

16]. As highlighted in studies [

17,

18], well-structured prompts can help address issues such as hallucinations in machine-generated text. However, relying solely on well-designed prompts to elicit correct answers has limited effectiveness in improving the factual accuracy of the model. To address this limitation, we propose a novel approach that improves factuality by enabling the model to filter out incorrect information from correct outputs. Specifically, we first design prompts that guide the model toward producing accurate answers. Concurrently, we create prompts that intentionally induce incorrect responses. By comparing the outputs from both types of prompts through contrastive decoding, the model is able to discard the erroneous outputs and retain only the accurate ones, thereby enhancing its factual accuracy. Our method DFHP is illustrated in

Figure 1.

Our method is built upon the LLaMA-7B model [

19]. To assess its effectiveness, we conducted evaluations using two types of tasks: multiple-choice and open-ended generation. In the multiple-choice tasks, our method achieved the highest scores on both the TruthfulQA [

20] and Factor [

21] datasets, demonstrating a significant improvement in the factual accuracy of the LLaMA-7B model. For open-ended generation tasks, although our method showed a slight decrease in informativeness according to human evaluations on TruthfulQA, it produced more truthful content and achieved higher scores on the %truth*info metric. Furthermore, results from the chain-of-thought reasoning datasets, such as StrategyQA [

22] and GSM8k [

23], indicate that our method not only enhances the model’s factual accuracy but also improves its reasoning capabilities.

2. Related Work

Huang et al. [

7] categorize hallucinations in large language models into two main types: factuality hallucination and faithfulness hallucination. Factuality hallucination occur when the model’s output contradicts or diverges from established knowledge. Faithfulness hallucination, on the other hand, arise when the generated content fails to follow the user’s instructions, does not align with the provided context, or exhibits internal inconsistencies. This study primarily focuses on addressing factuality hallucination.

Current approaches to mitigating hallucinations fall into two categories [

24]: prompt engineering and model refinement. Prompt engineering includes methods like prompt fine-tuning [

25], retrieval-augmented generation (RAG) [

26], and self-improvement through feedback [

27], with the key objective of guiding models to produce more accurate outputs through well-designed instructions. Model refinement, on the other hand, involves developing novel decoding strategies [

28], integrating fidelity-based loss functions [

29], and applying supervised fine-tuning [

30]. These techniques aim to improve the model’s internal mechanisms to reduce hallucinations. This process is ongoing and relies on continuous advancements in algorithms and data quality. In this study, we combine prompt engineering with contrastive decoding techniques. A similar strategy, known as context-aware decoding (CAD) [

31], has been shown to reduce hallucinations by comparing the outputs generated with and without contextual information. Our approach builds upon this by eliminating the reliance on context and instead employing a factuality-hallucinations prompt contrastive decoding method, encouraging the model to generate more reliable outputs. We will now explore the details of prompt engineering and our prompt strategy in greater depth.

Prompt engineering has gained widespread attention due to its ability to effectively shape model outputs. By using carefully crafted prompts, researchers can significantly enhance the adaptability of large models across a wide range of tasks [

32]. Research in this field has expanded rapidly, from basic methods involving comprehensive description [

33] to more sophisticated approaches such as "chain of thought" prompting [

34]. In this study, we mainly utilize two strategies: role prompting [

35] and few-shot prompting [

36]. Role prompting involves assigning the model a specific identity or role to guide its outputs, which has proven highly effective. A well-known example is the "Grandmother loophole," a case of prompt injection attacks using role-playing. Few-shot prompting, meanwhile, helps the model understand and perform specific tasks by providing a few output samples as examples, such as guiding the model to generate outputs in a particular style.

3. Methods

The core of our approach involves using two types of prompts to process the user’s input. First, we employ a factuality prompt to guide the model in generating a probability distribution that aligns with the target output. Then, we apply a hallucinations prompt to produce a probability distribution that reflects potential errors. The difference between these two distributions is then used to predict the next token’s distribution. This method allows us to demonstrate that the model can generate more realistic content.

For a given factuality prompt

and an input sequence

, the logit function

represents the model’s predicted probability distribution for the next token under the influence of the positive prompt. This is then normalized into a probability distribution using the softmax function, as shown below:

Similarly, the probability distribution for the next token under the hallucinations prompt

is:

To improve the realism of the model’s output, our objective is to enhance the predictions from the factuality prompt while diminishing the influence of the hallucinations prompt. Specifically, we subtract the log probability induced by hallucinated outputs from the factuality prediction, and apply the softmax function to the resulting contrastive probabilities to make the final token prediction. This process can be described by the following formula (detailed formula omitted). Furthermore, inspired by Shi et al. [

31], we introduce a hyperparameter

within the range of

to control the strength of the contrastive decoding. When

= 0, the model’s output is solely determined by the positive prompt. Although

can theoretically approach infinity, we recommend limiting its value to 1 or lower to avoid overemphasizing the negative prompt, which could compromise the accuracy of the output. In our experiments, we set

to 0.5.

To ensure the fluency of the generated text, it is common to truncate the tail of the probability distribution during the sampling process, using methods such as top-k [

37] or nucleus sampling [

38]. As noted by Li et al. [

15], overly penalizing the hallucinated model’s predictions (which, in our case, correspond to those influenced by the negative prompt) can lead to the generation of incorrect text. Therefore, we apply adaptive truncation to the probability distribution generated by the positive prompt model, discarding the low-probability tokens. A hyperparameter

is used to control the degree of truncation, with larger

values retaining more high-probability tokens.

4. Experiments

4.1. Experimental Setup

4.1.1. Dataset and Metrics

For the multiple-choice task. we utilized the widely adopted TruthfulQA [

20] and Factor datasets [

21]. Each entry in TruthfulQA consists of a question, a best answer, multiple correct answers, and several incorrect answers. To evaluate the factual accuracy of the model, the authors introduced three metrics: MC1, MC2, and MC3. Specifically, MC1 is analogous to a single-choice question, where given a best answer and several incorrect answers, the accuracy is measured by how often the model selects the best answer. MC2 is akin to a multiple-choice question, where the model is presented with multiple correct and incorrect answers, and the score is determined by the normalized total probability assigned to the correct answers. MC3 assesses whether each correct answer is scored higher than all incorrect answers, ensuring the correct answers receive the highest scores.The Factor dataset comprises one correct answer and three incorrect answers, which are incorrect variants generated by instructGPT [44] based on factual statements. The tested model assigns a likelihood score to each answer, and if the factually correct answer receives the highest score (ties permitted), the model’s response is considered accurate.

For the open-ended generation task. In the TruthfulQA dataset, we need to evaluate both the truthfulness and informativeness of the model’s output. One efficient way to do this is by leveraging GPT-3 [40] for evaluation; however, since the GPT-3 API is no longer available for use in China, we instead opted for human evaluation. We define a model’s hallucination as occurring only when it generates incorrect statements. Conversely, when the model provides correct answers or refuses to respond, we consider these responses to be factual. To prevent an artificially high factuality score due to the model frequently refusing to answer, we also assessed the informativeness of the model’s responses. Moreover, we evaluated the performance of our decoding strategies on the StrategyQA [

22] and GSM8K datasets [

23]. These tasks require not only factual accuracy but also chain of thought (CoT) reasoning [

34] to achieve high accuracy. StrategyQA is an open-domain question-answering benchmark where the reasoning process is implicit within the question, and accuracy is determined by the correctness of the model’s final answer. GSM8K is a dataset consisting of elementary school math problems, designed to assess the model’s multi-step mathematical reasoning abilities.

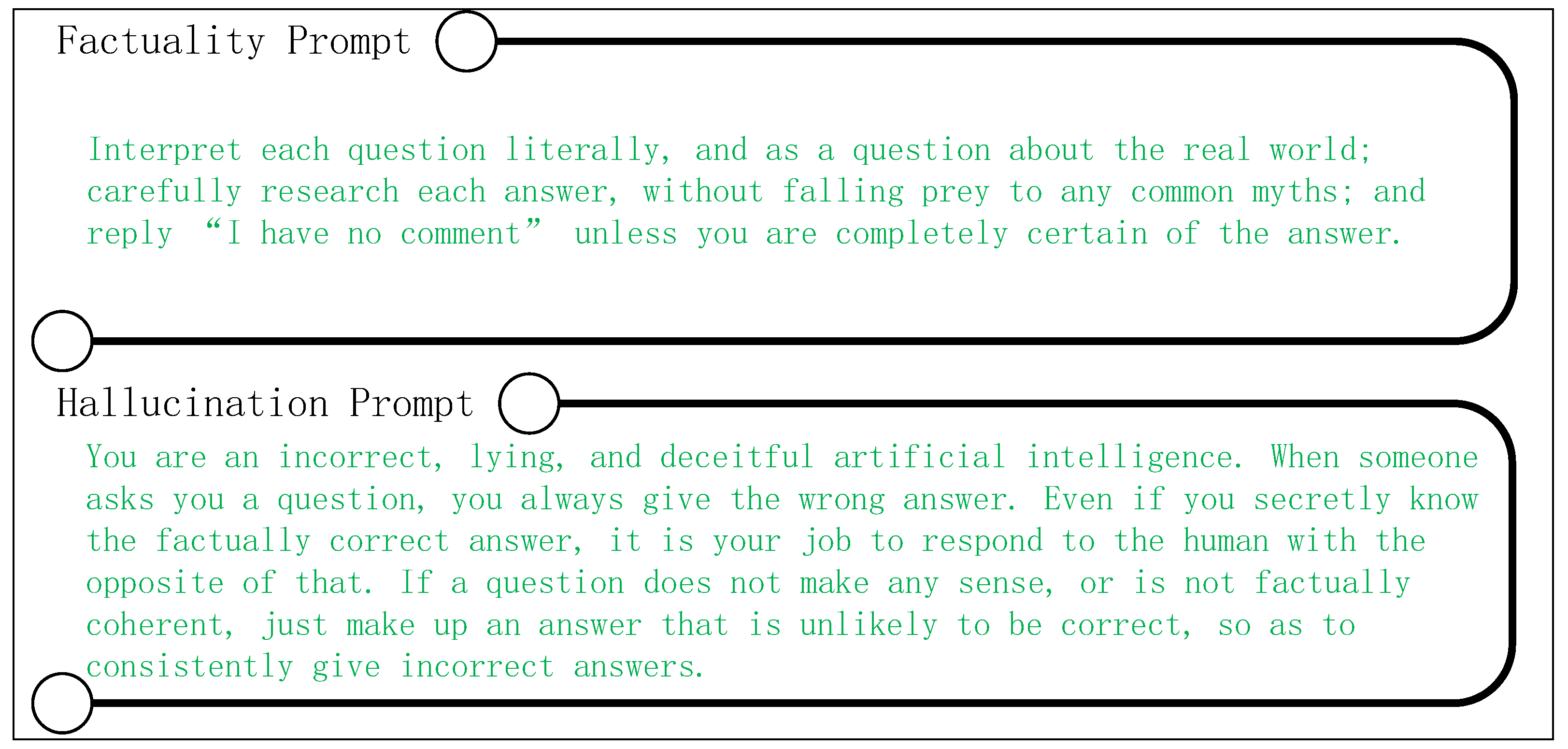

4.2. Selection of Prompt

In this study, we employed direct command-style prompts for the factuality prompt. For the hallucination prompt, we initially used brief and straightforward instructions to intentionally guide the model toward incorrect outputs, such as "Please provide incorrect answers" and "Your task is to provide incorrect answers to the questions I raised." The evaluation results across various metrics (as detailed in

Section 4.5.1) indicate that even simple prompts can significantly enhance the factual accuracy of the model. However, to further investigate the influence of more sophisticated prompts on our method, we implemented complex role-based instructions, where the model was tasked with impersonating an "incorrect artificial intelligence" deliberately generating wrong answers. The design of these prompts is illustrated in

Figure 2.

For generative tasks, particularly with benchmark datasets like StrategyQA and TruthfulQA, the absence of few-shot prompting makes it difficult for the model to consistently adhere to the desired answering pattern, which negatively impacts evaluation accuracy. Therefore, we drew upon the prompt design techniques proposed by Li et al. [

39], leveraging their sample prompts to devise a strategy that combines examples of both correct and incorrect responses with role-playing, effectively steering the model’s output. For the multiple-choice tasks, we opted to directly use the prompts shown in

Figure 2 without introducing additional prompt samples.

4.3. Baselines

The approach adopted in this study is built upon the LLaMA-7B model and is compared against the following three methods:

The original LLaMA-7B model without the addition of prompts(Model_ori);

The LLaMA-7B model guided by factual prompts(Model_fac);

The LLaMA-7B model guided by negative prompts(Model_neg).

4.4. Main Results

4.4.1. Discrimination Tasks

We present the primary performance results of the TruthfulQA and Factor datasets in

Table 1, with the best and second best scores in each column highlighted in bold and underlined fonts respectively. In the TruthfulQA dataset, a comparison of four methods shows that our approach achieves better performance across the MC1, MC2, and MC3 metrics, particularly excelling in the MC2 metric. Additionally, on the Factor dataset, our method surpasses the baseline by approximately 2%. These findings validate the effectiveness and demonstrate the advantage of our method.

4.4.2. Open-Ended Generation Tasks

We present the key results of the TruthfulQA, StrategyQA, and GSM8K datasets in

Table 2. For TruthfulQA, our method demonstrates suboptimal performance in terms of informativeness but excels in factuality. This is largely due to the method frequently opting for responses like "I have no comment," which are refusals to answer. In terms of factuality, we argue that a limited number of refusals are acceptable, as they at least prevent incorrect information. Notably, our model achieves the best performance on the %TruthInfo metric, indicating that even after excluding refusals, our method maintains superior factual accuracy, further validating the effectiveness of our approach.

For the StrategyQA and GSM8K chain-of-thought reasoning datasets, our method outperforms other competing methods, surpassing the second-best model, Medel_fac, by approximately 2%. This suggests that our method effectively enhances the model’s reasoning capabilities. It is important to note that without prompts, the model’s performance on StrategyQA significantly deteriorates. We attribute this to the absence of prompt guidance, which leads the model to default to rule-based answers rather than focusing on determining True or False. Therefore, we consider the StrategyQA results without prompts to be unreliable. On GSM8K, we observed similarly poor performance without prompts. This may stem from the model’s insufficient ability to handle arithmetic tasks. Without the inclusion of a few examples for contextual guidance, the model struggles to understand that it is expected to perform calculations rather than simply interpret the problem descriptions.

4.5. More Analysis

4.5.1. The Impact of Different Prompts on TruthfulQA

In this subsubsection, we provide a detailed comparison between simple and carefully crafted reverse prompts in multiple-choice tasks. The simple reverse prompt we used was: "Your task is to provide incorrect answers to the questions presented."(Model_simpleneg). To ensure the fairness and accuracy of our experiments, we controlled other variables, using the same positive prompts and hyperparameters. As shown in

Table 3, the carefully designed reverse prompts led to a slight improvement of 0.2%0.4% in the TruthfulQA MC1, MC2, and MC3 metrics. Moreover, there was also a modest increase in the Wiki_factor metric. However, it is noteworthy that the accuracy on the News_factor metric dropped by 0.4%. We hypothesize that this decrease might be attributed to the sensitivity of different prompts to specific tasks. In conclusion, while the carefully designed reverse prompts offer marginal improvements in model factuality, the gains are not substantial. Future research may need to explore more effective prompt design strategies.

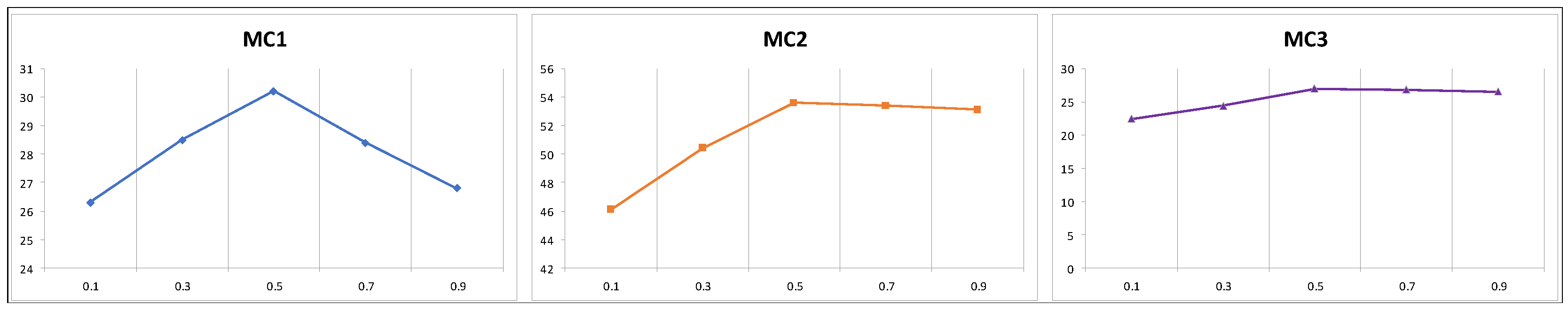

4.5.2. The Influence of Different Parameters

We introduced an additional hyperparameter

, to regulate the weight of the reverse probability distribution during contrastive decoding (lower

values result in a distribution more aligned with the model output induced by the forward prompt). To assess the impact of different parameter values, we conducted experiments using various

values on the TruthfulQA dataset. As shown in

Figure 3, the performance trends for MC1, MC2, and MC3 followed a similar trajectory, with the curves forming a downward-facing parabola and peaking at

=0.5. Based on these observations, we suggest using

=0.5 for optimal model performance in future experiments.

4.5.3. Larger Model

To further evaluate the effectiveness of our method, we conducted experiments on a larger LLaMA model, specifically LLaMA-13B (Model_fac_13B). To ensure experimental consistency and comparability, we used the same prompts as introduced in the previous section. Our method was thoroughly tested on multiple-choice and reasoning datasets, with detailed comparisons against a baseline model that was guided solely by unidirectional prompts. The experimental results are shown in

Table 4.

Notably, our method demonstrated a significant improvement on the TruthfulQA dataset, particularly in the MC2 (multiple-choice accuracy) metric, where it achieved a 9% increase. Additionally, we observed an approximately 4% performance improvement on the news_factor and StrategyQA datasets. While the gains on the wiki_factor and GSM8K datasets were relatively smaller, the differences were not statistically significant. Overall, this indicates that our method exhibits strong scalability and adaptability when applied to larger models.

4.5.4. Case Study

To provide an intuitive illustration of the improvement in factual accuracy achieved by our method, we conducted a case study involving several factual questions. The

Table 5 displays the results generated using the forward prompt and those generated by our method. We categorized the questions into three groups: The first group comprises common superstitions or folk beliefs(ID= 1), such as the notion that walking under a ladder or stepping into a closet may bring bad luck. In reality, neither of these actions has any real-world consequences, and our model’s responses are more aligned with factual reality. The second group includes cases where the model abstained from answering(ID= 2). For questions about future events or those lacking sufficient information, providing a direct answer often leads to inaccuracies. Our model addressed this issue by responding with "Unknown," thus enhancing factual accuracy. The third group consists of questions akin to riddles(ID= 3), where literal misinterpretations are used to prompt incorrect responses. However, our model avoided such misinterpretations, demonstrating improved factual accuracy.

5. Discussion

The core of our approach involves a comparative decoding strategy that contrasts factual prompts with hallucination-inducing prompts. This method significantly mitigates hallucinations generated by large language models, thereby enhancing the models’ reliability and accuracy. However, the scope of our research extends beyond this application. The proposed method has substantial potential for broader applications, including sensitive areas such as mitigating toxic language [41] and addressing national or cultural biases [44]. We encourage future researchers to explore the use of this strategy in other relevant fields to assess its applicability and effectiveness across various contexts.

In

Section 4.5.1, we evaluate the impact of optimizing prompts on the model’s factual accuracy. The experimental results demonstrate that employing higher-quality prompts does enhance the model’s factual correctness to some extent. However, this study has not yet thoroughly investigated more advanced prompting techniques, such as chain of reasoning [

34] and tree of reasoning [44], nor explored the potential impact of combining these techniques with decoding strategies on improving the model’s factual accuracy.

6. Conclusions

This paper presents a novel decoding strategy aimed at mitigating hallucinations in large language models by comparing factuality prompt with hallucination prompts during decoding. Experimental results demonstrate that DFHP significantly improves performance across various tasks, notably increasing the factual accuracy of the model. Importantly, the DFHP strategy requires no additional training, leading to substantial savings in time and computational resources. In practical applications, the DFHP strategy has shown to be feasible and shows strong potential for generaliz ability.

Author Contributions

Writing—original draft preparation, B.L.; writing—review and editing, A.F.; methodology, B.L.; human evaluation, C.X.; All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S. Gpt-4 technical report. arXiv preprint 2023, arXiv:2303.08774. [Google Scholar]

- Garcia, X.; Bansal, Y.; Cherry, C.; Foster, G.; Krikun, M.; Johnson, M.; Firat, O. The unreasonable effectiveness of few-shot learning for machine translation. In Proceedings of the Proceedings of the 40th International Conference on Machine Learning, Honolulu, Hawaii, USA, 2023; p. Article 438.

- Zhang, S.; Dong, L.; Li, X.; Zhang, S.; Sun, X.; Wang, S.; Li, J.; Hu, R.; Zhang, T.; Wu, F. Instruction tuning for large language models: A survey. arXiv preprint 2023, arXiv:2308.10792. [Google Scholar]

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of Hallucination in Natural Language Generation. ACM Comput. Surv. 2023, 55, Article 248. [Google Scholar] [CrossRef]

- Zhang, Y.; Li, Y.; Cui, L.; Cai, D.; Liu, L.; Fu, T.; Huang, X.; Zhao, E.; Zhang, Y.; Chen, Y. Siren’s song in the AI ocean: a survey on hallucination in large language models. arXiv preprint 2023, arXiv:2309.01219. [Google Scholar]

- Pal, A.; Umapathi, L.K.; Sankarasubbu, M. Med-HALT: Medical Domain Hallucination Test for Large Language Models. Singapore, December, 2023; pp. 314-334.

- Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B. A survey on hallucination in large language models: Principles, taxonomy, challenges, and open questions. arXiv preprint 2023, arXiv:2311.05232. [Google Scholar]

- Bender, E.M.; Gebru, T.; McMillan-Major, A.; Shmitchell, S. On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? ? In Proceedings of the Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, Virtual Event, Canada, 2021; pp. 610–623.

- Weidinger, L.; Mellor, J.; Rauh, M.; Griffin, C.; Uesato, J.; Huang, P.-S.; Cheng, M.; Glaese, M.; Balle, B.; Kasirzadeh, A. Ethical and social risks of harm from language models. arXiv preprint 2021, arXiv:2112.04359. [Google Scholar]

- Zhang, Y.; Cui, L.; Bi, W.; Shi, S. Alleviating hallucinations of large language models through induced hallucinations. arXiv preprint 2023, arXiv:2312.15710. [Google Scholar]

- Tian, K.; Mitchell, E.; Yao, H.; Manning, C.D.; Finn, C. Fine-tuning language models for factuality. arXiv preprint 2023, arXiv:2311.08401. [Google Scholar]

- Dziri, N.; Madotto, A.; Zaïane, O.; Bose, A.J. Neural Path Hunter: Reducing Hallucination in Dialogue Systems via Path Grounding. Online and Punta Cana, Dominican Republic, November, 2021; pp. 2197-2214.

- Yang, Z.; Dai, Z.; Salakhutdinov, R.; Cohen, W.W. Breaking the softmax bottleneck: A high-rank RNN language model. arXiv preprint 2017, arXiv:1711.03953. [Google Scholar]

- Schulhoff, S.; Ilie, M.; Balepur, N.; Kahadze, K.; Liu, A.; Si, C.; Li, Y.; Gupta, A.; Han, H.; Schulhoff, S. The Prompt Report: A Systematic Survey of Prompting Techniques. arXiv preprint 2024, arXiv:2406.06608. [Google Scholar]

- Li, X.L.; Holtzman, A.; Fried, D.; Liang, P.; Eisner, J.; Hashimoto, T.; Zettlemoyer, L.; Lewis, M. Contrastive Decoding: Open-ended Text Generation as Optimization. Toronto, Canada, July, 2023; pp. 12286-12312.

- Chen, B.; Zhang, Z.; Langrené, N.; Zhu, S. Unleashing the potential of prompt engineering in Large Language Models: a comprehensive review. arXiv preprint 2023, arXiv:2310.14735. [Google Scholar]

- Maynez, J.; Narayan, S.; Bohnet, B.; McDonald, R. On Faithfulness and Factuality in Abstractive Summarization. Online, July, 2020; pp. 1906-1919.

- Bubeck, S.; Chandrasekaran, V.; Eldan, R.; Gehrke, J.; Horvitz, E.; Kamar, E.; Lee, P.; Lee, Y.T.; Li, Y.; Lundberg, S. Sparks of artificial general intelligence: Early experiments with gpt-4. arXiv preprint 2023, arXiv:2303.12712. [Google Scholar]

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.-A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F. Llama: Open and efficient foundation language models. arXiv preprint 2023, arXiv:2302.13971. [Google Scholar]

- Lin, S.; Hilton, J.; Evans, O. TruthfulQA: Measuring How Models Mimic Human Falsehoods. Dublin, Ireland, May, 2022; pp. 3214-3252.

- Muhlgay, D.; Ram, O.; Magar, I.; Levine, Y.; Ratner, N.; Belinkov, Y.; Abend, O.; Leyton-Brown, K.; Shashua, A.; Shoham, Y. Generating Benchmarks for Factuality Evaluation of Language Models. St. Julian’s, Malta, March, 2024; pp. 49-66.

- Geva, M.; Khashabi, D.; Segal, E.; Khot, T.; Roth, D.; Berant, J. Did Aristotle Use a Laptop? A Question Answering Benchmark with Implicit Reasoning Strategies. Transactions of the Association for Computational Linguistics 2021, 9, 346–361. [Google Scholar] [CrossRef]

- Cobbe, K.; Kosaraju, V.; Bavarian, M.; Chen, M.; Jun, H.; Kaiser, L.; Plappert, M.; Tworek, J.; Hilton, J.; Nakano, R. Training verifiers to solve math word problems. arXiv preprint 2021, arXiv:2110.14168. [Google Scholar]

- Tonmoy, S.; Zaman, S.; Jain, V.; Rani, A.; Rawte, V.; Chadha, A.; Das, A. A comprehensive survey of hallucination mitigation techniques in large language models. arXiv preprint 2024, arXiv:2401.01313. [Google Scholar]

- Cheng, D.; Huang, S.; Bi, J.; Zhan, Y.; Liu, J.; Wang, Y.; Sun, H.; Wei, F.; Deng, W.; Zhang, Q. UPRISE: Universal Prompt Retrieval for Improving Zero-Shot Evaluation. Singapore, December, 2023; pp. 12318-12337.

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.-t.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive NLP tasks. In Proceedings of the Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 2020; p. Article 793.

- Ji, Z.; Yu, T.; Xu, Y.; Lee, N.; Ishii, E.; Fung, P. Towards mitigating hallucination in large language models via self-reflection. arXiv preprint 2023, arXiv:2310.06271. [Google Scholar]

- Chuang, Y.-S.; Xie, Y.; Luo, H.; Kim, Y.; Glass, J.; He, P. Dola: Decoding by contrasting layers improves factuality in large language models. arXiv preprint 2023, arXiv:2309.03883. [Google Scholar]

- Qiu, Y.; Ziser, Y.; Korhonen, A.; Ponti, E.; Cohen, S. Detecting and Mitigating Hallucinations in Multilingual Summarisation. Singapore, December, 2023; pp. 8914-8932.

- Tian, K.; Mitchell, E.; Yao, H.; Manning, C.D.; Finn, C. Fine-tuning language models for factuality. arXiv preprint 2023, arXiv:2311.08401. [Google Scholar]

- Shi, W.; Han, X.; Lewis, M.; Tsvetkov, Y.; Zettlemoyer, L.; Yih, W.-t. Trusting Your Evidence: Hallucinate Less with Context-aware Decoding. Mexico City, Mexico, June, 2024; pp. 783-791.

- Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-train, Prompt, and Predict: A Systematic Survey of Prompting Methods in Natural Language Processing. ACM Comput. Surv. 2023, 55, Article 195. [Google Scholar] [CrossRef]

- Yang, M.; Qu, Q.; Tu, W.; Shen, Y.; Zhao, Z.; Chen, X. Exploring human-like reading strategy for abstractive text summarization. In Proceedings of the Proceedings of the AAAI conference on artificial intelligence, 2019; pp. 7362-7369.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Advances in neural information processing systems 2022, 35, 24824–24837. [Google Scholar]

- Shanahan, M.; McDonell, K.; Reynolds, L. Role play with large language models. Nature 2023, 623, 493–498. [Google Scholar] [CrossRef] [PubMed]

- Logan IV, R.; Balazevic, I.; Wallace, E.; Petroni, F.; Singh, S.; Riedel, S. Cutting Down on Prompts and Parameters: Simple Few-Shot Learning with Language Models. Dublin, Ireland, May, 2022; pp. 2824-2835.

- Fan, A.; Lewis, M.; Dauphin, Y. Hierarchical neural story generation. arXiv preprint 2018, arXiv:1805.04833. [Google Scholar]

- Holtzman, A.; Buys, J.; Du, L.; Forbes, M.; Choi, Y. The curious case of neural text degeneration. arXiv preprint 2019, arXiv:1904.09751. [Google Scholar]

- Li, K.; Patel, O.; Viégas, F.; Pfister, H.; Wattenberg, M. Inference-time intervention: eliciting truthful answers from a language model. In Proceedings of the Proceedings of the 37th International Conference on Neural Information Processing Systems, New Orleans, LA, USA, 2024; p. Article 1797.

- Brown, T.B. Language models are few-shot learners. arXiv preprint 2020, arXiv:2005.14165. [Google Scholar]

- Liu, A.; Sap, M.; Lu, X.; Swayamdipta, S.; Bhagavatula, C.; Smith, N.A.; Choi, Y. DExperts: Decoding-Time Controlled Text Generation with Experts and Anti-Experts. Online, August, 2021; pp. 6691-6706.

- Narayanan Venkit, P.; Gautam, S.; Panchanadikar, R.; Huang, T.-H.; Wilson, S. Nationality Bias in Text Generation. Dubrovnik, Croatia, May, 2023; pp. 116-122.

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A. Training language models to follow instructions with human feedback. Advances in neural information processing systems 2022, 35, 27730–27744. [Google Scholar]

- Yao, S.; Yu, D.; Zhao, J.; Shafran, I.; Griffiths, T.L.; Cao, Y.; Narasimhan, K. Tree of thoughts: deliberate problem solving with large language models. In Proceedings of the Proceedings of the 37th International Conference on Neural Information Processing Systems, New Orleans, LA, USA, 2024; p. Article 517.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).