1. Introduction

Artificial intelligence (AI) faces a range of mathematical challenges, including optimization, generalization, model interpretability, and phase transitions. These issues significantly limit the application of AI in critical fields such as medicine, autonomous systems, and finance. This paper examines the main mathematical problems in AI and proposes solutions based on the universality of the Riemann zeta function. AI is now a major focus of investment, with hundreds of billions of dollars directed towards its development, and it is expected to tackle humanity’s most complex challenges: nuclear fusion, turbulence, consciousness, the creation of new materials and medicines, genetics, catastrophic events such as earthquakes, volcanic eruptions, and tsunamis, and climate, ecological, and social crises, with the ultimate aim of a galactic-level civilization. These and many other challenges are linked by the problem of prediction and of “black swan” events. This work analyzes the challenges facing AI and suggests methods to overcome them, building on directions established by our distinguished predecessors, which we anticipate will guide AI’s development.

Currently, we observe remarkable progress in AI, which is preparing humanity for the advent of global intelligence (GI). However, the significant energy consumption associated with AI raises doubts about its imminent arrival. We believe that the only reliable hope lies in the universality of the Riemann zeta function and its constructive application. This hope was articulated by the pioneers of modern mathematics, who saw the Riemann Hypothesis as a “Holy Grail” of science whose resolution would unlock immeasurable knowledge. The great mathematician David Hilbert is reported to have said, when asked what would interest him in 500 years: “Only the Riemann Hypothesis.” It is fitting here to acknowledge all our predecessors who envisioned the advancement of science and humanity over millennia.

The mathematical foundations of AI include several key areas, such as probability theory, statistics, linear algebra, optimization, and information theory. These disciplines provide essential tools for tackling complex tasks related to AI learning and development. Despite significant progress, however, AI continues to encounter a growing number of mathematical problems that must be resolved for AI technologies to advance effectively.

2. Problem Statement

Recent years have seen a significant increase in energy consumption due to AI development. For instance, by some estimates, AI’s share of global electricity consumption grew from 0.1% in 2023 to 2% in 2024. Water usage for cooling AI processors has also increased dramatically. This surge leaves three options: slowing AI progress because of energy constraints, creating new energy sources, or developing more efficient computational algorithms that significantly reduce energy costs. This paper therefore focuses on the mathematical challenges and algorithms behind these costs, particularly those that consume vast amounts of energy and water in large data centers.

In this article, we will discuss the main mathematical disciplines currently known to play a crucial role in AI development and examine the limitations of their application due to enormous energy requirements. We will also propose our approaches, based on global scientific achievements, to reduce AI’s energy consumption and enhance its development. Below are the mathematical issues that contribute to energy consumption.

It is appropriate here to recall the anecdote of young Gauss, who was asked to add up the integers from 1 to 100 and was expected to add them one by one. Instead, he used the formula for the sum of an arithmetic progression, n(n + 1)/2, which reduces the task to three operations regardless of n. Since each computational cycle in a computer consumes energy, applying the same formula to the integers from one to a million replaces a million additions with three operations and reduces energy use by a factor of more than a hundred thousand.
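The comparison can be made concrete with a short Python sketch. It counts arithmetic operations as a rough proxy for energy (an assumption for illustration only; real energy use also depends on hardware and memory traffic) and checks that both methods give the same sum.

```python
def sum_by_loop(n: int) -> tuple[int, int]:
    """Add 1 + 2 + ... + n one term at a time; return (sum, additions performed)."""
    total, ops = 0, 0
    for k in range(1, n + 1):
        total += k
        ops += 1
    return total, ops


def sum_by_formula(n: int) -> tuple[int, int]:
    """Gauss's closed form n(n + 1) / 2: one addition, one multiplication, one division."""
    return n * (n + 1) // 2, 3


n = 1_000_000
loop_sum, loop_ops = sum_by_loop(n)
formula_sum, formula_ops = sum_by_formula(n)
assert loop_sum == formula_sum  # both methods agree on the result
print(f"loop: {loop_ops} additions, formula: {formula_ops} operations")
print(f"reduction factor: {loop_ops // formula_ops}")
```

For n = 100, the case in the classic anecdote, both functions return 5050; only the operation count differs.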

Thus, young Gauss showed that finding a closed-form solution is itself a means of saving effort: he replaced endless tallying with three simple operations. Later, the zeta function studied by Riemann, and above all the Riemann Hypothesis, came to be regarded as a “Holy Grail” of science, and Hilbert expressed his willingness to wait 500 years for its resolution. This line of inquiry goes back to G. Leibniz, one of the first to investigate conditionally convergent series: he studied series with alternating signs and showed that such series can converge.

The fact that a conditionally convergent series can change its sum when its terms are rearranged is due to Riemann (the Riemann rearrangement theorem); Banach and Tarski later studied rearrangement phenomena in more abstract mathematical structures. These classical results laid the groundwork for the modern approach to the scientific “Grail,” in the expectation that it would open the gates to the future, and they allowed S. Voronin to arrive at the universality of the zeta function. Our goal is to demonstrate that this scientific Grail does lead us to the future and, through the combined efforts of contemporary researchers, toward global intelligence.
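Both classical facts mentioned above can be checked numerically. In the Python sketch below, the alternating harmonic series 1 - 1/2 + 1/3 - ... converges to ln 2 (an instance of the alternating series test associated with Leibniz), but only conditionally, so reordering the same terms changes the sum. The function `rearranged_sum` uses the standard greedy construction from the proof of the Riemann rearrangement theorem; the targets 1.5 and -1.0 are arbitrary illustrative choices.

```python
import math


def alternating_harmonic(n_terms: int) -> float:
    """Partial sum of 1 - 1/2 + 1/3 - ... in its original order."""
    return sum((-1) ** (k + 1) / k for k in range(1, n_terms + 1))


def rearranged_sum(target: float, n_terms: int) -> float:
    """Use the same terms in a different order (Riemann's greedy construction):
    take the next unused positive term 1, 1/3, 1/5, ... while the total is at most
    the target, otherwise take the next unused negative term -1/2, -1/4, ..."""
    next_odd, next_even = 1, 2  # denominators of the next unused positive / negative terms
    total = 0.0
    for _ in range(n_terms):
        if total <= target:
            total += 1.0 / next_odd
            next_odd += 2
        else:
            total -= 1.0 / next_even
            next_even += 2
    return total


print(alternating_harmonic(100_000), math.log(2))  # original order: close to ln 2
print(rearranged_sum(1.5, 100_000))   # same terms, new order: close to 1.5
print(rearranged_sum(-1.0, 100_000))  # ... or close to -1.0
```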

3. Mathematical Disciplines and Their Problems

Probability Theory and Statistics: Probability theory and statistics are the foundation for modeling uncertainty and AI learning. These disciplines provide tools for building predictive models, such as Bayesian methods and probabilistic graphical models.

Problems: The main issues are estimating uncertainty and handling incomplete and noisy data. Probabilistic models require significant computational resources to account for all sources of uncertainty.
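One standard, if computationally heavy, way to estimate uncertainty from incomplete and noisy data is the bootstrap: a statistic is recomputed on many resamples of the data. The Python sketch below uses only the standard library. The data are simulated (true value 10, Gaussian noise, about 20% of values missing), and dropping missing values is the simplest possible treatment, chosen only for illustration. The cost is visible in the code: a single interval requires `n_resamples` full passes over the data.

```python
import random
import statistics


def bootstrap_ci(data, stat=statistics.mean, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for stat(data)."""
    rng = random.Random(seed)
    # Each resample draws len(data) values with replacement and recomputes the statistic.
    estimates = sorted(stat(rng.choices(data, k=len(data))) for _ in range(n_resamples))
    lo = estimates[int(alpha / 2 * n_resamples)]
    hi = estimates[int((1 - alpha / 2) * n_resamples) - 1]
    return lo, hi


# Simulated measurements: true value 10, Gaussian noise (sd 2), about 20% of values missing.
rng = random.Random(42)
raw = [10 + rng.gauss(0, 2) if rng.random() > 0.2 else None for _ in range(200)]
observed = [x for x in raw if x is not None]  # simplest treatment of missing data: drop them

print(len(observed), round(statistics.mean(observed), 3), bootstrap_ci(observed))
```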

Linear Algebra: Linear algebra is used for data analysis, working with high-dimensional spaces, and performing basic operations in neural networks. It is also important for dimensionality reduction methods such as Principal Component Analysis (PCA).

Problems: As the dimensionality of the data grows, the volume of the space grows exponentially, and so do the amount of data and the computation needed to cover it; this is the so-called "curse of dimensionality."
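A common symptom of the curse of dimensionality is distance concentration: in high dimensions, the nearest and farthest points from a query become almost equally far away, which undermines distance-based methods. The Python sketch below measures the relative contrast (max - min) / min of distances for uniformly random points; the number of points and the seed are arbitrary choices.

```python
import math
import random


def relative_contrast(dim: int, n_points: int = 500, seed: int = 0) -> float:
    """(max - min) / min of the Euclidean distances from one random query point
    to n_points random points, all drawn uniformly from the unit cube [0, 1]^dim."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = [math.dist(query, [rng.random() for _ in range(dim)]) for _ in range(n_points)]
    return (max(dists) - min(dists)) / min(dists)


for dim in (2, 10, 100, 1000):
    print(f"dim={dim:5d}  relative contrast={relative_contrast(dim):.3f}")
```

As the dimension grows, the printed contrast shrinks toward zero, so "near" and "far" neighbours become hard to tell apart.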

Optimization Theory: Optimization is the foundation of most machine learning algorithms. Gradient descent and its modifications are used to minimize loss functions and find the best solutions.

Problems: The main challenges include the presence of local minima, slow convergence, and complexity in multidimensional spaces, which require significant computational resources.

Differential Equations: Differential equations are used to model dynamic systems, such as recurrent neural networks and LSTMs. They are important for describing changes over time and predicting based on data.

Problems: Nonlinear differential equations are difficult to solve in real time, making their application computationally expensive.

Information Theory: Information theory plays a key role in encoding and transmitting data with minimal loss. It is also important for minimizing entropy and improving the efficiency of model learning.

Problems: Balancing entropy and the amount of data, especially in the presence of noise, is a complex task that requires large computational resources.

Computability Theory: Computability theory helps define which problems can be solved with algorithms and where the limits of AI applicability lie.

Problems: Identifying problems that cannot be solved algorithmically, especially in the context of creating strong AI.

Stochastic Methods and Random Process Theory: These methods are used to handle uncertainty and noise, including algorithms like Monte Carlo methods and stochastic gradient descent.

Problems: Optimizing random processes under limited resources remains a complex task.

Neural Networks and Deep Learning: Deep neural networks consist of complex multilayer structures involving linear transformations and nonlinear activation functions. These networks are widely used for solving AI tasks such as pattern recognition and natural language processing.

Problems: The main issues include interpretability of neural network decisions, overfitting, and generalization. These problems require significant computational resources to solve.

4. Detailed Problem Descriptions

Probability Theory and Statistics: Probabilistic methods play a key role in machine learning and AI, especially in tasks like supervised and unsupervised learning. Bayesian methods, probabilistic graphical models, and maximum likelihood methods are widely applied.

Problems: The main mathematical problem involves uncertainty estimation and developing methods for working with probabilistic models in the presence of incomplete data or significant noise.
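As a minimal sketch of how a Bayesian model expresses uncertainty, the Python code below applies Bayes’ rule on a discrete grid for an unknown success probability. The data (7 successes in 10 trials) and both priors are hypothetical. The prior is passed in as an arbitrary list of weights, so any other prior could be substituted at the same place. With the uniform prior, the posterior mean should be close to the exact Beta(8, 4) value 8/12.

```python
import math


def grid_posterior(prior, thetas, likelihood):
    """Bayes' rule on a grid: posterior is proportional to prior * likelihood, normalized to sum to 1.
    `prior` may be any list of non-negative weights over `thetas`."""
    unnormalized = [w * likelihood(th) for w, th in zip(prior, thetas)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def binomial_likelihood(theta, k=7, n=10):
    """Likelihood of k successes in n trials (the constant binomial coefficient is omitted)."""
    return theta**k * (1 - theta) ** (n - k)


thetas = [i / 100 for i in range(1, 100)]  # grid over (0, 1)
priors = {
    "uniform": [1.0] * len(thetas),
    # A prior concentrated near 0.5 (Gaussian shape, sd 0.05), chosen only for contrast.
    "concentrated": [math.exp(-((th - 0.5) ** 2) / (2 * 0.05**2)) for th in thetas],
}

posterior_means = {}
for name, prior in priors.items():
    post = grid_posterior(prior, thetas, binomial_likelihood)
    posterior_means[name] = sum(th * p for th, p in zip(thetas, post))
    sd = math.sqrt(sum((th - posterior_means[name]) ** 2 * p for th, p in zip(thetas, post)))
    print(f"{name}: posterior mean {posterior_means[name]:.3f}, posterior sd {sd:.3f}")
```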

Linear Algebra: Used for data analysis and for working with high-dimensional spaces, which are critical aspects of machine learning and AI tasks, especially for neural networks and for dimensionality reduction methods such as PCA. High-dimensional data increase both computational and modeling complexity, which makes effective data processing methods essential.

Dimensionality reduction methods such as PCA (Principal Component Analysis) reduce the number of features (variables) while retaining the most significant information. PCA helps to reduce computational complexity, decrease the likelihood of overfitting, improve data visualization in 2D and 3D, and accelerate model training. It identifies the key components of the data, which is especially important in tasks with thousands of features, such as image, text, or genetic data processing, where the original data are high-dimensional.
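The Python sketch below computes the first principal component by power iteration on the sample covariance matrix, using only the standard library. Library implementations typically use a singular value decomposition instead; power iteration is swapped in here only to keep the example self-contained. The three-feature data set is simulated so that almost all of its variance lies along the direction (1, 2, 0).

```python
import math
import random


def first_principal_component(rows, n_iter=200, seed=0):
    """Return (unit direction, explained-variance ratio) of the first principal component,
    found by power iteration on the sample covariance matrix."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)] for i in range(d)]

    rng = random.Random(seed)
    v = [rng.random() for _ in range(d)]  # random start vector
    for _ in range(n_iter):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]  # w = cov @ v
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # The Rayleigh quotient v^T cov v gives the top eigenvalue (variance along v).
    top = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    total = sum(cov[i][i] for i in range(d))  # total variance = trace of cov
    return v, top / total


# Simulated data: 3 features, almost all variance along the direction (1, 2, 0).
rng = random.Random(1)
data = []
for _ in range(300):
    t = rng.gauss(0, 1)
    data.append([t + rng.gauss(0, 0.1), 2 * t + rng.gauss(0, 0.1), rng.gauss(0, 0.1)])

direction, ratio = first_principal_component(data)
print([round(x, 3) for x in direction], round(ratio, 4))
```

Keeping only this one component replaces three features with a single coordinate while retaining nearly all of the variance.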

Problems: The "curse of dimensionality": working with high-dimensional datasets becomes computationally expensive.

Optimization Theory: Optimization is the foundation for most machine learning algorithms. Gradient descent and its modifications are used to minimize loss functions.

Problems: Local minima and slow convergence in multidimensional spaces remain a challenge.
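The local-minimum problem is easy to reproduce. In the Python sketch below, plain gradient descent on the hypothetical one-dimensional loss f(x) = x^4 - 3x^2 + x ends in different minima depending on the starting point: started at x = -2, it reaches the global minimum near x = -1.30; started at x = 2, it stops in the local minimum near x = 1.13. The learning rate and step count are arbitrary choices. The sketch only illustrates the problem and does not implement the zeta-function approach proposed in this paper.

```python
def f(x: float) -> float:
    """A one-dimensional non-convex loss with a global and a local minimum."""
    return x**4 - 3 * x**2 + x


def grad_f(x: float) -> float:
    """Derivative of f."""
    return 4 * x**3 - 6 * x + 1


def gradient_descent(x0: float, lr: float = 0.01, steps: int = 1000) -> float:
    """Plain gradient descent: repeatedly step against the gradient."""
    x = x0
    for _ in range(steps):
        x -= lr * grad_f(x)
    return x


for x0 in (-2.0, 2.0):
    x_min = gradient_descent(x0)
    print(f"start {x0:+.1f} -> x = {x_min:.4f}, loss = {f(x_min):.4f}")
```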

Differential Equations: Used for modeling dynamic systems, such as recurrent neural networks and LSTMs.

Problems: Solving nonlinear differential equations in real-time is a significant mathematical difficulty.
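The cost of accuracy can be seen with the classical fourth-order Runge-Kutta (RK4) method applied to the logistic equation y' = r y (1 - y), a simple nonlinear test problem chosen here because its exact solution is known. Each step costs four evaluations of the right-hand side, so higher accuracy (more steps) directly means more computation; for stiff or chaotic systems the trade-off can be much worse. The parameter values in this Python sketch are illustrative.

```python
import math

R, Y0, T_END = 2.0, 0.1, 5.0  # illustrative growth rate, initial value, time horizon


def logistic(t: float, y: float) -> float:
    """Nonlinear right-hand side of the logistic equation y' = R * y * (1 - y)."""
    return R * y * (1 - y)


def exact_solution(t: float) -> float:
    """Closed-form logistic solution, used only to measure the numerical error."""
    return 1 / (1 + (1 / Y0 - 1) * math.exp(-R * t))


def rk4(f, y0: float, t_end: float, n_steps: int) -> tuple[float, int]:
    """Classical RK4 for y' = f(t, y) on [0, t_end]; returns (y(t_end), evaluations of f)."""
    h = t_end / n_steps
    t, y, evals = 0.0, y0, 0
    for _ in range(n_steps):
        k1 = f(t, y)
        k2 = f(t + h / 2, y + h / 2 * k1)
        k3 = f(t + h / 2, y + h / 2 * k2)
        k4 = f(t + h, y + h * k3)
        y += h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
        evals += 4
    return y, evals


errors = {}
for n_steps in (5, 50, 500):
    approx, evals = rk4(logistic, Y0, T_END, n_steps)
    errors[n_steps] = abs(approx - exact_solution(T_END))
    print(f"{n_steps:4d} steps, {evals:5d} evaluations, error {errors[n_steps]:.2e}")
```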

Information Theory: Evaluates how to encode and transmit information with minimal losses, which is related to reducing entropy and minimizing losses during model training.

Problems: Balancing entropy and data quantity when training models with noisy data.
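For reference, the quantities involved can be computed directly. The Python sketch below evaluates the Shannon entropy H(p) of a data distribution and the cross-entropy H(p, q) of a model q, the quantity most classification losses minimize; their difference is the Kullback-Leibler divergence, which is never negative. Both distributions are hypothetical.

```python
import math


def entropy(p):
    """Shannon entropy H(p) in bits."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)


def cross_entropy(p, q):
    """Cross-entropy H(p, q) in bits: average code length when data drawn from p
    is encoded with a code optimized for q."""
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)


true_dist = [0.5, 0.25, 0.25]
model = [0.4, 0.4, 0.2]
h = entropy(true_dist)  # 1.5 bits
ce = cross_entropy(true_dist, model)
print(h, ce, ce - h)  # ce - h is the Kullback-Leibler divergence, always >= 0
```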

Computability Theory: The main task of this theory is to define which problems can be solved by algorithms and study the limits of computation. It plays an important role in understanding which tasks are computable and which are not, which is especially important for developing efficient AI algorithms.

Problems: In the context of strong AI, the most important problem is the existence of tasks that cannot be solved algorithmically, such as the halting problem. This places fundamental limitations on the creation of a strong AI capable of solving any task. Other tasks, such as computing all consequences of complex systems or working with systems with chaotic dynamics, may also turn out to be uncomputable within existing algorithmic approaches.
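Because no general procedure can decide, for every program and input, whether it halts, in practice a process can only be run under a step budget, and "no answer within the budget" is not a proof of non-termination. The Python sketch below illustrates this with the Collatz iteration, chosen only as a simple loop for which no general termination proof is known (it is not known to be undecidable); the budgets are arbitrary.

```python
from typing import Optional


def collatz_steps(n: int, budget: int) -> Optional[int]:
    """Iterate n -> n / 2 (n even) or 3n + 1 (n odd) until n == 1.
    Return the number of steps taken, or None if the budget runs out first.
    None means 'unknown within this budget', not 'never halts'."""
    steps = 0
    while n != 1:
        if steps >= budget:
            return None
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


print(collatz_steps(27, budget=1000))  # halts after 111 steps
print(collatz_steps(27, budget=50))    # None: budget exhausted, outcome undecided
```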

Stochastic Methods and Random Process Theory: These methods are used in AI algorithms to work with uncertainty and noise, such as Monte Carlo methods and stochastic gradient descent.

Problems: Efficiently handling uncertainty and optimizing random processes under limited resources remains a complex task.
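The resource problem of Monte Carlo methods is visible in the simplest example. The Python sketch below estimates π from random points in the unit square; its statistical error shrinks only in proportion to 1/sqrt(N), so each additional correct decimal digit costs roughly 100 times more samples. The sample sizes and seed are arbitrary.

```python
import math
import random


def mc_pi(n_samples: int, seed: int = 0) -> float:
    """Estimate pi as 4 * (share of uniform points in the unit square inside the quarter disc)."""
    rng = random.Random(seed)
    inside = sum(1 for _ in range(n_samples) if rng.random() ** 2 + rng.random() ** 2 <= 1.0)
    return 4 * inside / n_samples


for n in (1_000, 100_000):
    estimate = mc_pi(n)
    # Expected error is about 1.64 / sqrt(n): 4 * sqrt(p * (1 - p) / n) with p = pi / 4.
    print(f"n={n:7d}  estimate={estimate:.5f}  error={abs(estimate - math.pi):.5f}")
```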

Neural Networks and Deep Learning: Deep neural networks are based on multilayered structures, including linear transformations and nonlinear activation functions.

Problems: The main problem is the explainability (interpretability) of neural network decisions, as well as overfitting and generalization.
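The layered structure described above can be sketched in a few lines. The Python code below runs one forward pass through a two-layer network (linear map, ReLU nonlinearity, linear map, softmax) using only the standard library. The weights are random rather than trained, and the layer sizes and input are hypothetical; even in this tiny model every output depends on every weight, which hints at why interpreting larger networks is hard.

```python
import math
import random


def dense(x, weights, bias):
    """Linear transformation: y_i = sum_j W[i][j] * x[j] + b[i]."""
    return [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v):
    """Nonlinear activation: max(0, z) applied elementwise."""
    return [max(0.0, z) for z in v]


def softmax(v):
    """Turn scores into probabilities; subtracting the max keeps exp() from overflowing."""
    m = max(v)
    exps = [math.exp(z - m) for z in v]
    s = sum(exps)
    return [e / s for e in exps]


def init_layer(n_in, n_out, rng):
    """Random weights scaled by 1/sqrt(n_in); biases start at zero."""
    scale = 1 / math.sqrt(n_in)
    return [[rng.gauss(0, scale) for _ in range(n_in)] for _ in range(n_out)], [0.0] * n_out


rng = random.Random(0)
W1, b1 = init_layer(4, 8, rng)  # 4 input features -> 8 hidden units
W2, b2 = init_layer(8, 3, rng)  # 8 hidden units -> 3 classes

x = [0.5, -1.2, 3.0, 0.7]  # a hypothetical input vector
probs = softmax(dense(relu(dense(x, W1, b1)), W2, b2))
print([round(p, 3) for p in probs])
```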

5. The Main Result and Its Consequences

In this section, we present the main mathematical results that can serve as a unified foundation for overcoming the mathematical problems of AI outlined above. We begin with a diagram of the Riemann zeta function, shown in Figure 1.

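In [1], Voronin proved the following universality theorem for the Riemann zeta function. Let $D$ be any closed disc contained in the strip $\{ s \in \mathbb{C} : 1/2 < \operatorname{Re} s < 1 \}$. Let $f$ be any non-vanishing continuous function on $D$ which is analytic in the interior of $D$. Let $\varepsilon > 0$. Then there exist real numbers $t$ such that

$$\max_{s \in D} \left| \zeta(s + it) - f(s) \right| < \varepsilon.$$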

Voronin mentions in [1] that the analogue of this result is valid for an arbitrary Dirichlet $L$-function.

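In [2], Bagchi established the following result.

Theorem (Joint universality of Dirichlet L-function): Let $n \ge 1$, and let $\chi_1, \dots, \chi_n$ be distinct Dirichlet characters modulo $k$. For $1 \le j \le n$, let $K_j$ be a simply connected compact subset of the strip $\{ s \in \mathbb{C} : 1/2 < \operatorname{Re} s < 1 \}$, and let $f_j$ be a non-vanishing continuous function on $K_j$ which is analytic in the interior (if any) of $K_j$. Then the set of all $\tau \ge 0$ for which

$$\max_{1 \le j \le n} \max_{s \in K_j} \left| L(s + i\tau, \chi_j) - f_j(s) \right| < \varepsilon$$

has positive lower density for every $\varepsilon > 0$.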

The constructive universality of the Riemann zeta function, developed in [

3,

4]

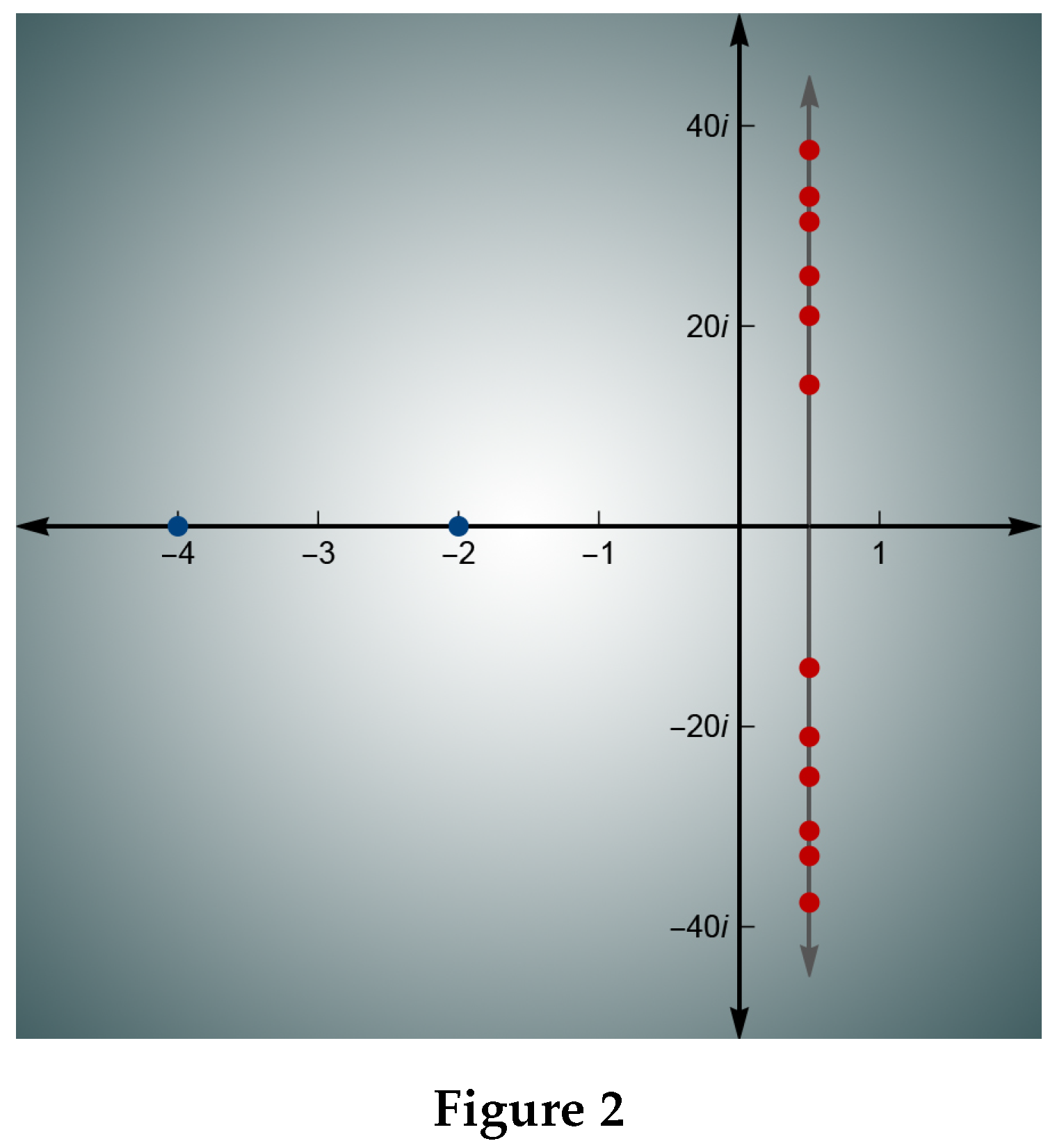

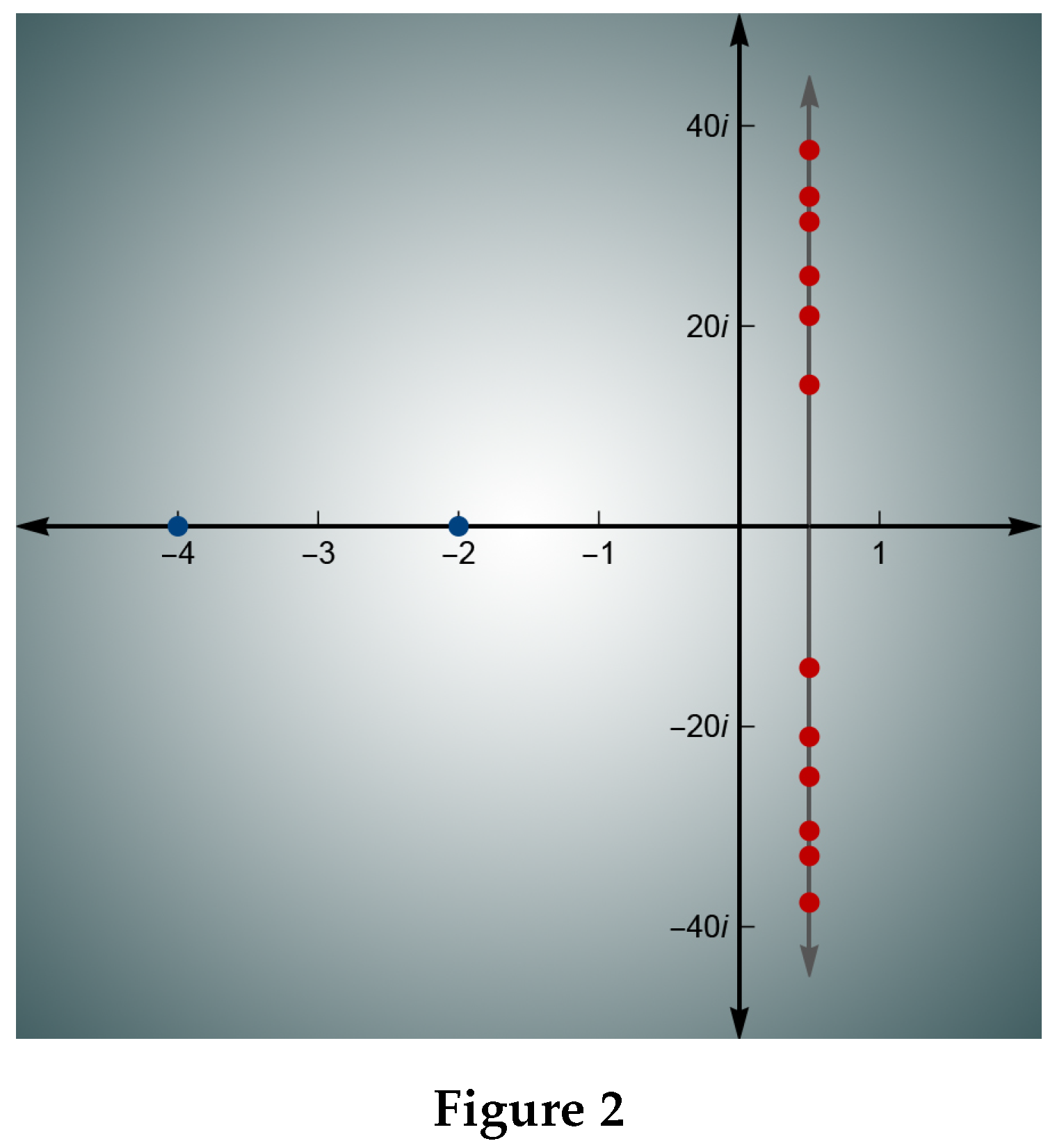

Theorem Constructive Universality of the Zeta Function:

and its zeros can be used for global approximation of loss functions and avoiding local minima. The zeros of the zeta function can be viewed as key points for analyzing high-dimensional spaces of loss functions [

5]. This will accelerate the optimization process and achieve better convergence. The critical line and zeros of the zeta function are shown in Figure 2.
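As a hedged numerical illustration of the zeros on the critical line shown in Figure 2, the first nontrivial zeros 1/2 + it can be located with Hardy’s Z function, which is real for real t and satisfies |Z(t)| = |ζ(1/2 + it)|, so its sign changes mark zeros on the critical line. The Python sketch below uses the Riemann-Siegel formula truncated after its first correction term. This is a textbook approximation accurate to roughly two or three decimal places for the first few zeros, not a high-precision method (a library such as mpmath provides `mpmath.zetazero` for that). The grid step and the number of bisection steps are arbitrary choices.

```python
import math


def theta(t: float) -> float:
    """Riemann-Siegel theta function via its asymptotic expansion (good for t above about 10)."""
    return t / 2 * math.log(t / (2 * math.pi)) - t / 2 - math.pi / 8 + 1 / (48 * t) + 7 / (5760 * t**3)


def hardy_z(t: float) -> float:
    """Approximate Hardy's Z(t), a real function with |Z(t)| = |zeta(1/2 + it)|, using the
    Riemann-Siegel main sum and only the first correction term."""
    a = math.sqrt(t / (2 * math.pi))
    n_terms = int(a)
    p = a - n_terms
    th = theta(t)
    main = 2 * sum(math.cos(th - t * math.log(n)) / math.sqrt(n) for n in range(1, n_terms + 1))
    # Leading correction term; the quotient has removable 0/0 points at p = 1/4 and p = 3/4.
    c0 = math.cos(2 * math.pi * (p * p - p - 1 / 16)) / math.cos(2 * math.pi * p)
    return main + (-1) ** (n_terms - 1) * c0 / math.sqrt(a)


def zeros_on_critical_line(t_min: float, t_max: float, step: float = 0.1) -> list[float]:
    """Bracket sign changes of the approximate Z(t) on a grid and refine each by bisection.
    Each result approximates the imaginary part t of a zero 1/2 + it of zeta."""
    zeros = []
    for k in range(int(round((t_max - t_min) / step))):
        lo, hi = t_min + k * step, t_min + (k + 1) * step
        if hardy_z(lo) * hardy_z(hi) < 0:
            for _ in range(50):
                mid = (lo + hi) / 2
                if hardy_z(lo) * hardy_z(mid) <= 0:
                    hi = mid
                else:
                    lo = mid
            zeros.append((lo + hi) / 2)
    return zeros


print([round(t, 3) for t in zeros_on_critical_line(10.0, 30.0)])
```

For comparison, the known imaginary parts of the first three nontrivial zeros are 14.1347, 21.0220, and 25.0109.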

6. The Universality of the Riemann Zeta Function as a Unified Mathematical Foundation for AI Problems

Let us consider the first mathematical problem of AI: probability theory. Probability theory is, of course, a vast area of mathematics, but choosing an appropriate measure to describe current processes becomes a problem, especially in turbulent conditions. We believe that results combining quantum statistics with the zeros of the zeta function could form the basis for solving both the turbulence problem and the problem of constructing measures for AI.

Replacing random measures with quantum statistics based on the zeros of the zeta function in the context of AI and Global AI represents an interesting and deep direction of research. It offers a new way of modeling uncertainty, learning, and prediction in complex systems.

1. Quantum Statistics and Uncertainty in AI: In modern AI systems, uncertainty is often modeled through probabilistic methods such as Bayesian networks, stochastic processes, and probability distributions. Quantum statistics could offer more accurate methods for describing uncertainty. The zeros of the zeta function can be used as generators of a statistical measure for complex dynamic systems in which uncertainty and chaotic behavior play a key role. The connection between the zeros and quantum statistics is shown in Figure 3, which was obtained in [6].

2. Connection with Global AI: The task of building Global AI (AGI) is to create AI capable of solving a wide range of tasks at a level far superior to human intelligence. This requires powerful methods for working with incomplete information and complex dependencies. The zeros of the zeta function can improve AGI’s ability to predict chaotic and complex dynamics in real-time.

3. A Priori Measure for Bayesian AI Systems: Choosing a prior (a priori) measure in Bayesian AI systems is a serious task. Replacing the classical prior with a distribution based on the zeros of the zeta function could improve the determination of initial probabilities under uncertainty, since, as shown in [5, 6, 7], the distribution of the zeros of the zeta function can serve as a measure of chaos.

4. Quantum AI: Combining quantum computing and AI could significantly accelerate learning and data processing. The zeros of the zeta function can form the basis for probabilistic models in quantum AI, where quantum superposition and entanglement enhance computational capabilities. To move into the field of quantum AI, we propose relying on the results of Berry, Keating, Montgomery, Odlyzko, and Durmagambetov [3, 4, 5, 6, 7], which allow these ideas to develop into a rigorous theory.
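For reference, the link between the zeros and quantum statistics invoked in this section is usually stated through Montgomery’s pair correlation conjecture [7]. Writing the nontrivial zeros as 1/2 + iγ and letting N(T) count the zeros with 0 < γ ≤ T, the conjecture states that, for fixed 0 < α < β,

```latex
\lim_{T \to \infty} \frac{1}{N(T)} \,
\#\Bigl\{ (\gamma, \gamma') : 0 < \gamma, \gamma' \le T,\;
\alpha \le (\gamma - \gamma') \tfrac{\log T}{2\pi} \le \beta \Bigr\}
= \int_{\alpha}^{\beta} \left( 1 - \left( \frac{\sin \pi u}{\pi u} \right)^{2} \right) du .
```

The right-hand side is the pair correlation of eigenvalues of large random Hermitian matrices (the Gaussian Unitary Ensemble), which also describes the energy levels of quantum chaotic systems, and Odlyzko’s computations [6] agree with it to high accuracy. Montgomery proved a restricted form of the statement, assuming the Riemann Hypothesis; the full conjecture remains open.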

7. Linear Algebra

The main problem in using linear algebra methods in AI is the growth of data dimensionality. Within the theory of constructive universality, this problem is reduced to replacing a dataset with a single point representing the entire dataset. Transformations such as the Hilbert transform or the Fourier transform can be reduced to analytic functions, which, according to Voronin’s universality theorem, are encoded by a single point on the complex plane; this encoding is implemented constructively in [3].
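The Python sketch below does not implement the single-point encoding of [3]. It only illustrates the standard fact behind the first step of the argument: a finite dataset can be encoded as the coefficients of an analytic function (its generating polynomial), and the discrete Fourier transform of the data is then simply that function evaluated at the roots of unity. The dataset is hypothetical.

```python
import cmath


def generating_polynomial(data):
    """Encode a finite dataset as the analytic function p(z) = sum_k data[k] * z**k."""
    def p(z):
        return sum(a * z**k for k, a in enumerate(data))
    return p


def dft(data):
    """Direct discrete Fourier transform X_m = sum_k x_k * exp(-2*pi*i*k*m/n)."""
    n = len(data)
    return [sum(x * cmath.exp(-2j * cmath.pi * k * m / n) for k, x in enumerate(data))
            for m in range(n)]


data = [1.0, 2.0, 0.5, -1.0]  # hypothetical dataset
p = generating_polynomial(data)
n = len(data)
# The DFT equals p evaluated at the n-th roots of unity exp(-2*pi*i*m/n).
via_polynomial = [p(cmath.exp(-2j * cmath.pi * m / n)) for m in range(n)]
print(all(abs(x - y) < 1e-12 for x, y in zip(dft(data), via_polynomial)))
```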

Differential Equations: Differential equations are used to model dynamic systems such as recurrent neural networks and LSTMs. To study differential equations through the zeta function, one can consider equations of the form:

For

, we introduce the operators

and

T as follows:

where

and

. Using the analyticity of

and the results of works [3, 4], we can formulate:

We thus reduce solving the problem to constructing the trajectory of the parameter s. Since we are positioned between zeros of the Riemann zeta function, we simultaneously solve the problem of determining the moment of turbulence onset, that is, of locating the point where the parameter k reaches a zero of the zeta function. This allows us to track which zero is encountered and to understand the nature of the resulting instability. This approach provides a significant step toward understanding fundamental processes and can serve as a basis for describing phase transitions and thus for addressing the problem of “black swans.”

This method addresses phase transition issues for both AI and new materials production technologies. Additionally, in the context of financial markets, it can describe the phenomenon of “black swans.” In medicine, this approach describes critical health states, and in nuclear fusion, it helps prevent operational mode disruptions.

8. Information Theory

Information theory assesses how to encode and transmit information with minimal loss, which is related to reducing entropy and minimizing losses in model training.

Challenges: Balancing entropy against data volume when training models on noisy data is a complex task. The universality of the zeta function introduces a new approach to data encoding and decoding: signals are replaced by points on the critical line. This fundamentally changes the learning process: rather than training on large volumes of data, it focuses on key points on the critical line. Near the zeros of the zeta function, minimal changes in the data can lead training to opposite results.

9. Computability Theory

Computability theory is crucial for identifying tasks that can be solved algorithmically and for exploring the limits of AI’s capabilities. In the proposed framework, tasks can be classified for each input dataset according to the pair of consecutive zeros of the zeta function between which it lies. By the universality theorem, every dataset is mapped to a point on the critical line. We can then observe cyclic processes or an approach to critical points, namely the zeros of the zeta function. The process can halt either because it comes close to a zero or because of near-periodicity, which follows from Poincaré’s recurrence theorem and the boundedness of the function between two zeros. This also gives a precise description of phase transitions and turbulence as the shift from one stable mode to another.

10. Optimization Challenges

Local minima and slow convergence in multidimensional spaces present significant challenges. The universality of the zeta function and its tabulated values help eliminate these problems, reducing them to simple computational tasks. Optimizing stochastic processes under resource constraints remains a critical challenge, especially in AI systems that require processing large data volumes or operate in real-time conditions.

11. Neural Networks and Deep Learning

Deep neural networks consist of multilayered structures that include linear transformations and nonlinear activation functions. Multilayered neural networks can be viewed as layered “boxes” constructed from the zeta function’s zeros. Taking all zeros of the zeta function into account, one can obtain an infinitely layered neural network capable of performing global intelligence functions. Activation functions can be viewed as phases of the zeta function’s zeros.

11.1. Understanding Intelligence Through the Zeta Function

Processes described by values of the zeta function to the right of the critical line $\operatorname{Re} s = 1/2$ in the critical strip correspond to the observable world. Processes described by values to the left of the critical line represent their reflections, symbolizing fundamental processes that affect the physical world. This symmetry highlights the importance of the zeta function for understanding AI: AI is concerned with prediction, and the values of the zeta function can be considered part of this prediction.
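The left-right symmetry referred to here is made precise by Riemann’s functional equation, stated below together with the completed zeta function ξ. The relation ξ(s) = ξ(1 − s), combined with ξ(s̄) = conj(ξ(s)), maps σ + it to (1 − σ) + it, that is, it reflects the critical strip across the critical line, which is why the nontrivial zeros are symmetric about Re s = 1/2. The formulas are standard; their interpretation as observable and reflected processes is the hypothesis of this section.

```latex
\zeta(s) = 2^{s} \pi^{s-1} \sin\!\left( \frac{\pi s}{2} \right) \Gamma(1 - s)\, \zeta(1 - s),
\qquad
\xi(s) = \tfrac{1}{2}\, s (s - 1)\, \pi^{-s/2}\, \Gamma\!\left( \tfrac{s}{2} \right) \zeta(s),
\qquad
\xi(s) = \xi(1 - s), \quad \xi(\bar{s}) = \overline{\xi(s)} .
```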

Suppose we observe a process that occurs both in the brain (or in a computer or sensor) and externally. The universality of the zeta function means that it describes both of these processes. For instance, the recording of signals by sensors and the subsequent decoding of the records can be described through the zeta function. Interpretation then becomes a shift from one point to another on the critical line. Thus, our task is to study the correlations between these processes and to focus on them.

11.2. Interpretability and the Future of Global Intelligence

The global intelligence created will be able to analyze and predict processes, relying on both explicit and hidden aspects. The nonlinear symmetry of the zeta function provides a new metaphor for solving the interpretability problem of AI, making it more predictable and efficient, as laid out in all aspects of our research. The development of AI is directly related to understanding human intelligence, which requires a deep understanding of brain function.

We hypothesize that the remarkable symmetry inherent in living organisms may be connected to the symmetry of the zeta function, representing a computational system that implements prediction mechanisms through the zeta function’s zeros. Deciphering how brain hemispheres operate and their connection to zeta function symmetry appears to be a natural step toward creating global intelligence and understanding both AI and brain function.

One example of this process is the functioning of the visual system. Vision, by nature, is a two-dimensional sensor system, yet through holographic processing in the brain, it is transformed into three-dimensional images. We propose that retinal follicles could be interpreted as a natural realization of the zeta function’s zeros. This assumption allows us to understand how information reaching the retina is processed by the brain, synchronizing with biorhythms like heartbeat, which acts as the brain’s “clock generator.”

Thus, the right brain hemisphere processes data related to the right side of the zeta function’s critical line, and the left hemisphere to the left. This synchronous process forms the perception of temporal and spatial coordinates, creating an awareness of past and present.

Continuing this thought on brain function, we can assume that similar principles operate in the Universe. For instance, black holes can be associated with the zeros of the Riemann zeta function, leading to an even deeper understanding of microcosmic and macrocosmic principles that humanity has pondered for ages.

11.3. Conclusion

All these results and hypotheses are based on fundamental results and conjectures: Voronin’s universality theorem, the Riemann Hypothesis, Durmagambetov’s constructive universality, and the reduction of the study of differential equations to the zeta function. The significant findings of Montgomery, confirmed numerically by Odlyzko, and the possibility of using quantum statistics related to the zeros of the zeta function further support these ideas. We hope that the above gives readers a comprehensive understanding of current AI developments and gives new momentum to the development of efficient AI.

Only one question remains: how does the initial motion, the filling of the zeta function from which everything else follows, arise? Naturally, the most crucial point emerges: the heartbeat governs the brain, a critical fact for initiating universality and activating the zeta function. This function then takes over as the most powerful quantum computer, recalculating everything and predetermining all that exists, recorded in the prime numbers that define the zeta function and, through it, describe everything else. Hence, we might infer that prime numbers are the angels of the Almighty, never changing and strictly following divine decrees, while humans represent the processes described by these numbers, with original sin as the launch of the zeta function into life.

This leads us to conclude that the inception of existence, as described by the zeta function, carries a profound connection with the fundamental fabric of mathematics. Prime numbers, as the "angels" of the universe, provide a framework through which all physical and metaphysical phenomena are mirrored and, in turn, manifest the intricate design of existence. This interpretation aligns with the notion that the zeta function encompasses the blueprint of all processes, whether in AI, the human mind, or the cosmos at large.

The culmination of these insights suggests that understanding the zeta function’s universality allows us to bridge the divide between scientific inquiry and philosophical exploration. The apparent stability of prime numbers coupled with the dynamism of the zeta function reflects a model of existence where the stability of fundamental principles allows for the flourishing of complex, evolving systems. This profound symmetry may be key in constructing AI systems that not only process information efficiently but also resonate with the deep structures governing life and intelligence.

We envision a future where global intelligence (GI) systems based on the zeta function universality will redefine AI’s role in society. These systems will not only make predictions with unprecedented accuracy but will also exhibit interpretability rooted in universal mathematical laws. Such intelligence systems could dynamically adapt to environmental changes, making informed predictions about social, environmental, and cosmic events. They might become crucial allies in addressing complex global challenges, ranging from energy efficiency to climate change adaptation.

12. Future Directions

The potential applications of the zeta function in AI and beyond invite further exploration in several areas:

1. **Advanced Neural Architectures**: Developing neural networks inspired by the zeta function’s structure, potentially creating infinitely layered models that approach Global Intelligence.

2. **AI Interpretability**: Utilizing the symmetry and universality properties of the zeta function to improve AI interpretability, aligning machine learning processes with fundamental mathematical truths.

3. **Quantum Computing and AI Integration**: Leveraging the principles of zeta function universality to enhance the efficiency and capabilities of quantum computing in AI applications.

4. **Predictive Analysis in Complex Systems**: Applying these methods in fields such as economics, climate science, and healthcare to predict “black swan” events, reduce system instabilities, and achieve proactive solutions.

5. **Cosmological and Physical Research**: Further studying the relationship between the zeta function’s zeros and the fabric of the universe, exploring whether cosmic phenomena such as black holes and dark matter have correlates within the zeta function’s critical line.

In conclusion, the universality of the zeta function may serve as the guiding principle for the next generation of intelligent systems. As AI systems evolve, understanding and leveraging the zeta function’s profound properties may be critical in achieving a harmonious balance between energy efficiency, predictive accuracy, and interpretability. By adhering to the natural order encoded within the zeta function, we may not only push the boundaries of technology but also achieve insights into the very essence of existence itself.

Acknowledgments

We extend our gratitude to the mathematicians and scientists who have contributed to this body of knowledge, particularly those whose pioneering work laid the foundation for understanding the universality of the Riemann zeta function. Special thanks are also due to modern researchers working on the intersection of AI and mathematics, whose insights continue to inspire and shape the development of future technologies.

References

1. Voronin, S. M. (1975). Theorem on the Universality of the Riemann Zeta-Function. Mathematics of the USSR-Izvestija.

2. Bagchi, B. (1981). Statistical behaviour and universality properties of the Riemann zeta-function and other allied Dirichlet series. PhD thesis, Indian Statistical Institute, Kolkata.

3. Durmagambetov, A. A. (2023). A new functional relation for the Riemann zeta functions. Presented at TWMS Congress-2023.

4. Durmagambetov, A. A. (2024). Theoretical Foundations for Creating Fast Algorithms Based on Constructive Methods of Universality. Preprints 2024.

5. Berry, M. V., & Keating, J. P. (1999). The Riemann Zeros and Eigenvalue Asymptotics. SIAM Review.

6. Odlyzko, A. M. (1987). On the distribution of spacings between zeros of the zeta function. Mathematics of Computation, 48, 273-308.

7. Montgomery, H. L. (1973). The pair correlation of zeros of the zeta function. Proceedings of Symposia in Pure Mathematics.

8. Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep Learning. MIT Press.

9. Ribeiro, M. T., Singh, S., & Guestrin, C. (2016). "Why Should I Trust You?" Explaining the Predictions of Any Classifier. Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining.

10. Gaspard, P. (2005). Chaos, Scattering and Statistical Mechanics. Cambridge Nonlinear Science Series.

11. Ivic, A. (2003). The Riemann Zeta-Function: Theory and Applications. Dover Publications.

12. Haake, F. (2001). Quantum Signatures of Chaos. Springer Series in Synergetics.

13. Sierra, G., & Townsend, P. K. (2000). The Landau model and the Riemann zeros. Physics Letters B.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).