Preprint

Article

Situational Awareness Classification Based on EEG Signals and Spiking Neural Network

A peer-reviewed article of this preprint also exists.

This version is not peer-reviewed

Submitted:

27 September 2024

Posted:

29 September 2024

Abstract

Situational awareness detection and the characterization of mental states play a vital role in medicine and many other fields. Electroencephalography (EEG) is one of the most effective tools for identifying and analyzing cognitive stress. Yet the measurement, interpretation, and classification of EEG signals are challenging tasks. This study introduces a novel machine learning-based approach to situational awareness detection using EEG signals and spiking neural networks (SNNs) based on a unique spike-continuous-time-neuron (SCTN). The implemented biologically inspired SNN architecture performs EEG feature extraction by applying time-frequency analysis techniques, enabling detection and analysis of the various frequency components embedded in the different EEG sub-bands. The EEG signal is encoded into spikes and then fed into an SNN model, which is well suited to the sequential order of the EEG data. We utilize the SCTN-based resonator for EEG feature extraction in the frequency domain, which demonstrates a high correlation with the classical FFT features. A new SCTN-based 2-D neural network is introduced for efficient EEG feature mapping, aiming to achieve a spatial representation of each EEG sub-band. To validate and evaluate the performance of the proposed approach, a common publicly available EEG dataset is used. Experimental results show that, using the extracted EEG frequency features and the SCTN-based SNN classifier, the mental state can be classified with an average accuracy of 96.8% on this dataset. Our proposed method outperforms existing machine learning-based methods and demonstrates the advantages of using SNNs for situational awareness detection and mental state classification.

Keywords:

Subject: Computer Science and Mathematics - Computer Science

1. Introduction

The advancement of neuromorphic computing has amplified the interest in utilizing biologically plausible networks for classifying physiological signals [1,2,3,4]. SNNs are characterized by low energy consumption and low computational cost, and are therefore well suited to machine learning in edge applications [2]. The physiological signals of interest include indicators of emotional arousal states such as respiratory activity, cardiac activity, EEG signals, and electrodermal activity response [5,6]. Neural signals can be obtained using a variety of techniques, including electroencephalography (EEG) and functional magnetic resonance imaging, providing insights into cognitive and mental states [7,8].

Situational Awareness (SA) pertains to an individual’s understanding of their complex operating environment. It involves comprehending what has happened, what is currently happening, and what might happen based on the surrounding context. Evaluation of SA is essential in tasks that require processing multiple streams of information, as it helps human operators navigate and respond effectively to their environment [9,10].

EEG brain activity recordings are highly promising for assessing SA. EEG captures activity related to all sensory inputs, providing deep insights into an individual’s physiological state. It is widely used to monitor and predict mental states such as workload, mental fatigue, sleepiness, and drowsiness. Given the close relationship between these mental states and SA, many studies utilize EEG to evaluate situational awareness [11,12].

EEG analysis involves a variety of methods to process and interpret the electrical activity of the brain. These methods can be categorized into time-domain [13], frequency-domain [14,15], and time-frequency domain analyses [16,17], along with advanced techniques involving Deep Neural Networks (DNNs), machine learning, and statistical modeling [18,19]. Time-domain analysis includes Event-Related Potentials (ERPs) and EEG amplitude and latency measurements [20]. Frequency-domain techniques include power spectral density estimation methods based on time-varying parameter modeling [21,22], while time-frequency analysis mainly includes the Short-Time Fourier Transform (STFT) and the Wavelet Transform [23]. Filtering and preprocessing techniques are used to remove noise and artifacts from the EEG signal.

Kexin et al. propose a classification network for EEG signals based on cross-domain features related to brain activities, using a multi-domain attention mechanism [7]. Cigdem et al. utilize a Support Vector Machine (SVM) method for monitoring mental attention states (focused, unfocused, and drowsy) using EEG brain activity imaging [24].

Evelina et al. evaluate various spike encoding techniques for time-dependent signal classification through a Spiking Convolutional Neural Network, including signal preprocessing consisting of a bank of filters [25,26]. S. A. Nasrollahi [27] proposes using a well-known encoding scheme in the design of the SNN input layer to convert analog sensor voltages into spike trains with firing rates that are linearly proportional to the input voltage.

Pulse-density modulation (PDM), also known as Delta-sigma modulation (DSM), is a form of modulation used to represent an analog signal by a binary signal. The analog signal’s amplitude is converted into binary sequences corresponding to the amplitude [28]. A PDM bitstream is encoded from an analog signal through one-bit delta-sigma modulation. This process uses a one-bit quantizer that produces either a 1 or a 0 depending on the analog signal’s amplitude. For a sine wave input, a high density of 1s occurs at the peaks, while a low density of 1s occurs at the troughs.

Leu et al. present a novel approach utilizing SNN and EEG processing techniques for emotion state recognition. The method employs the discrete wavelet transform (DWT) and the fast Fourier transform (FFT) for extracting features from EEG signals [29]. Results show an average accuracy of about 80% for the classification of four emotion states (arousal, valence, dominance, and liking). L. Devnath et al. [30] suggest using the DWT to remove noise from the EEG signals while effectively retaining the ECG signal morphology. D. Zhang et al. [31] present a deep learning-based EEG decoding method to read mental states. They propose a 1D CNN with different filter lengths to capture information from different frequency bands, demonstrating 96% accuracy.

Numerous studies have been conducted to classify cognitive load using various methods, including EEG [32,33], ECG [34], and gaze detection [35,36]. Moreover, there is a growing demand for executing cognitive load estimation on edge devices across diverse fields, including healthcare [37,38] and the automotive industry [39]. The ability to classify physiological signals using machine learning tools with limited hardware resources and low power enables their integration, especially in wearable sensors [39,40].

A configurable parametric model of a digital Spike-Continuous-Time-Neuron (SCTN) is introduced in [41]. The efficiency of the SCTN neuron for sound signal processing is presented in [42]. In this paper, we adapt the basic architecture of the published SCTN neuron and propose using an SCTN-based bio-inspired network for classifying situational awareness based on EEG signals. First, we introduce the use of SCTN-based resonators for feature extraction in the frequency domain. A supervised spike-timing-dependent plasticity (STDP) learning algorithm is used for training the network and adjusting the weights to detect the frequencies of the EEG sub-bands. Then, we propose a method for integrating the extracted features via spatial mapping, leveraging the interrelationships between the various EEG channels using a two-dimensional structure of spiking neurons. Finally, an SCTN-based 3-layer network is used for the mental state classification.

Our main contributions are listed below:

1. We propose a novel approach for mental states and situation awareness classification based on a biologically inspired Spiking Neural Network using EEG signals.
2. A new SCTN-based 2D Neural Network is introduced for efficient feature mapping aiming to achieve a spatial representation of each EEG sub-band.
3. We utilize the SCTN-based resonator for feature extraction in the frequency domain and demonstrate a high correlation with the known FFT features.

2. SCTN-Based Resonator

This section describes the SCTN neuron mathematical model and the SCTN-based resonator used for frequency detection.

2.1. SCTN-Spike Continuous Time Neuron Model

The SCTN model presented in [41], is given by Equation (1).

The membrane potential of a neuron with N input synapses is the weighted sum of its synapse inputs, each multiplied by its corresponding weight, plus the neuron bias. The SCTN cell includes a leaky integrator characterized by a time constant [41], which allows the input signals to be delayed. The Leakage Period (LP) corresponds to the neuron’s operation rate, while the Leakage Factor (LF) corresponds to the integrator’s leakage rate. The SCTN emits a spike whenever the membrane potential surpasses the threshold, similar to the behavior observed in biological neurons.

The SCTN may postpone the incoming signals according to an expected leakage time constant. For an appropriate choice of the LF and LP parameters, the SCTN can generate a prescribed phase shift at a given frequency [42]:

2.2. Frequency Detection Using SCTN-Based Resonator

The following section introduces the resonator circuit, designed as a frequency detector that utilizes the fundamental phase-shifting capability of the spiking neuron. We propose employing an SNN architecture to identify the sub-band frequencies embedded in an EEG signal by exploiting the inherent phase-shifting feature.

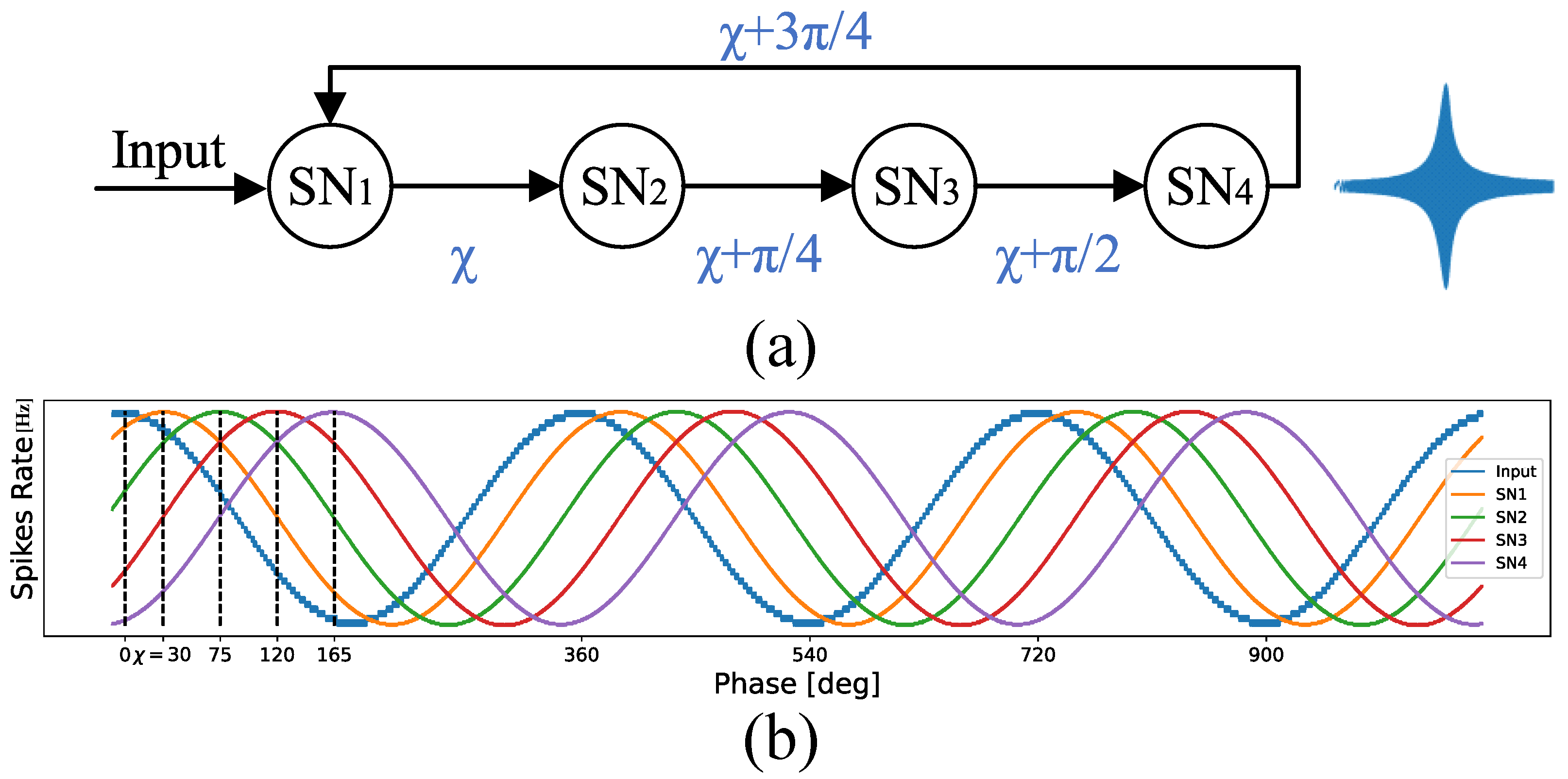

Figure 1a shows the resonator circuit composed of the SCTN-based network. This circuit consists of four SCTNs for which each neuron introduces a phase shift of degrees. The first neuron’s phase shift is denoted as . Figure 1b illustrates the output of each of the four neurons in the proposed architecture (for ). The amplitude expresses the spike rate within a predefined time window. The spike rate at the output of each SCTN neuron, in a predefined time window, represents the phase shift and therefore the signal amplitude as depicted in Figure 1. For a one-second normalized time window, the spike rate is given in Hz. The fourth neuron’s output is fed back to the first neuron, resulting in a feedback path with a phase shift of . Thus, the first neuron receives two input signals: (a) the original input signal and (b) a shifted signal ().

The resonator is designed to identify a wide range of frequencies within a time-series signal by leveraging the neuron’s intrinsic phase-shifting capability. The leakage factor and the leakage period are adjusted to target a specific frequency. The output neuron emits a train of pulses whenever a frequency within the range of is detected. We employed a supervised STDP algorithm to adjust the network parameters for the desired frequency [43].

A modified supervised STDP learning rule is used for training the SCTN-based resonator circuits. To achieve the desired frequency response, the SCTN’s weights and biases are adjusted during the training phase.

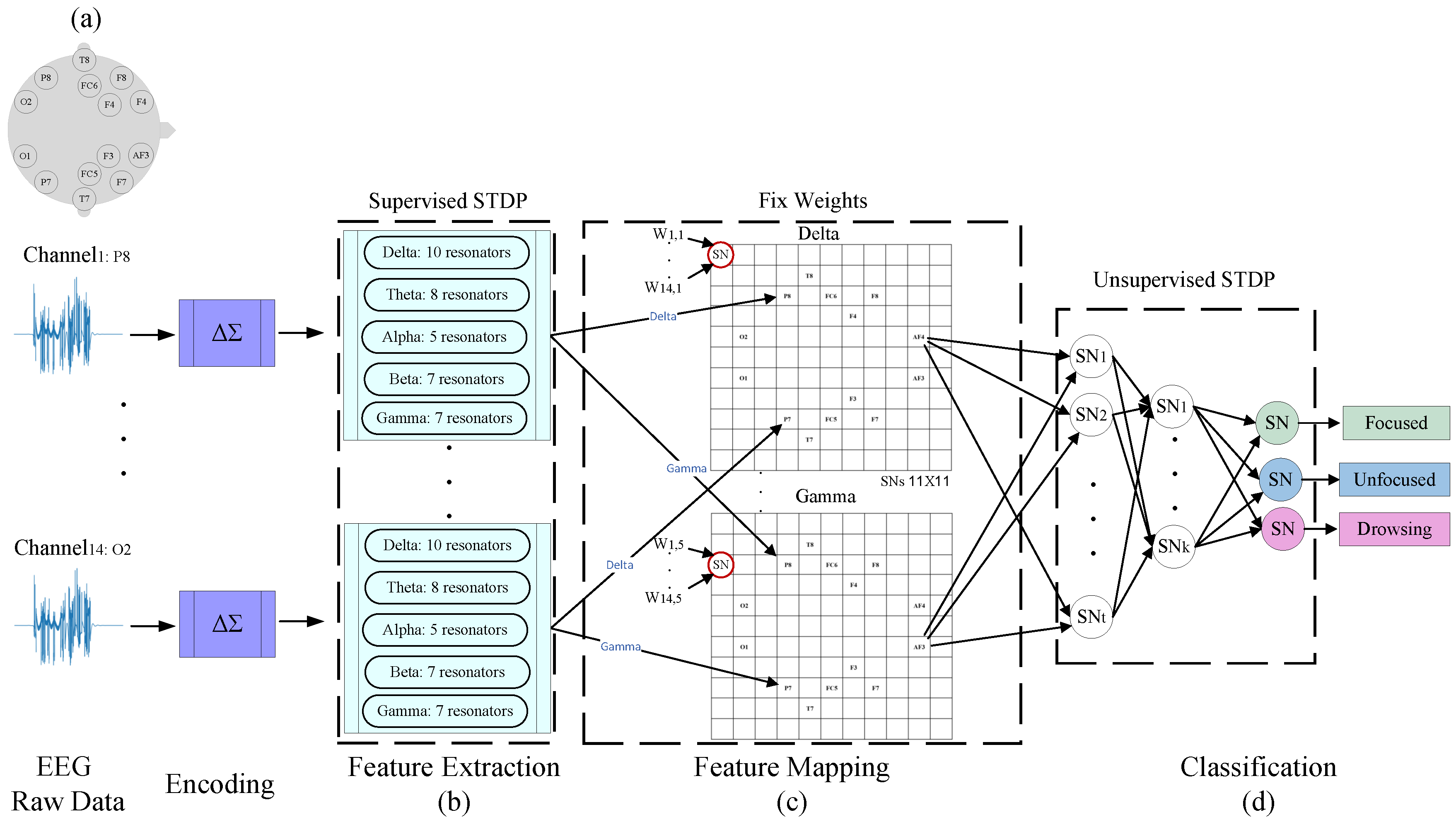

3. Proposed Method

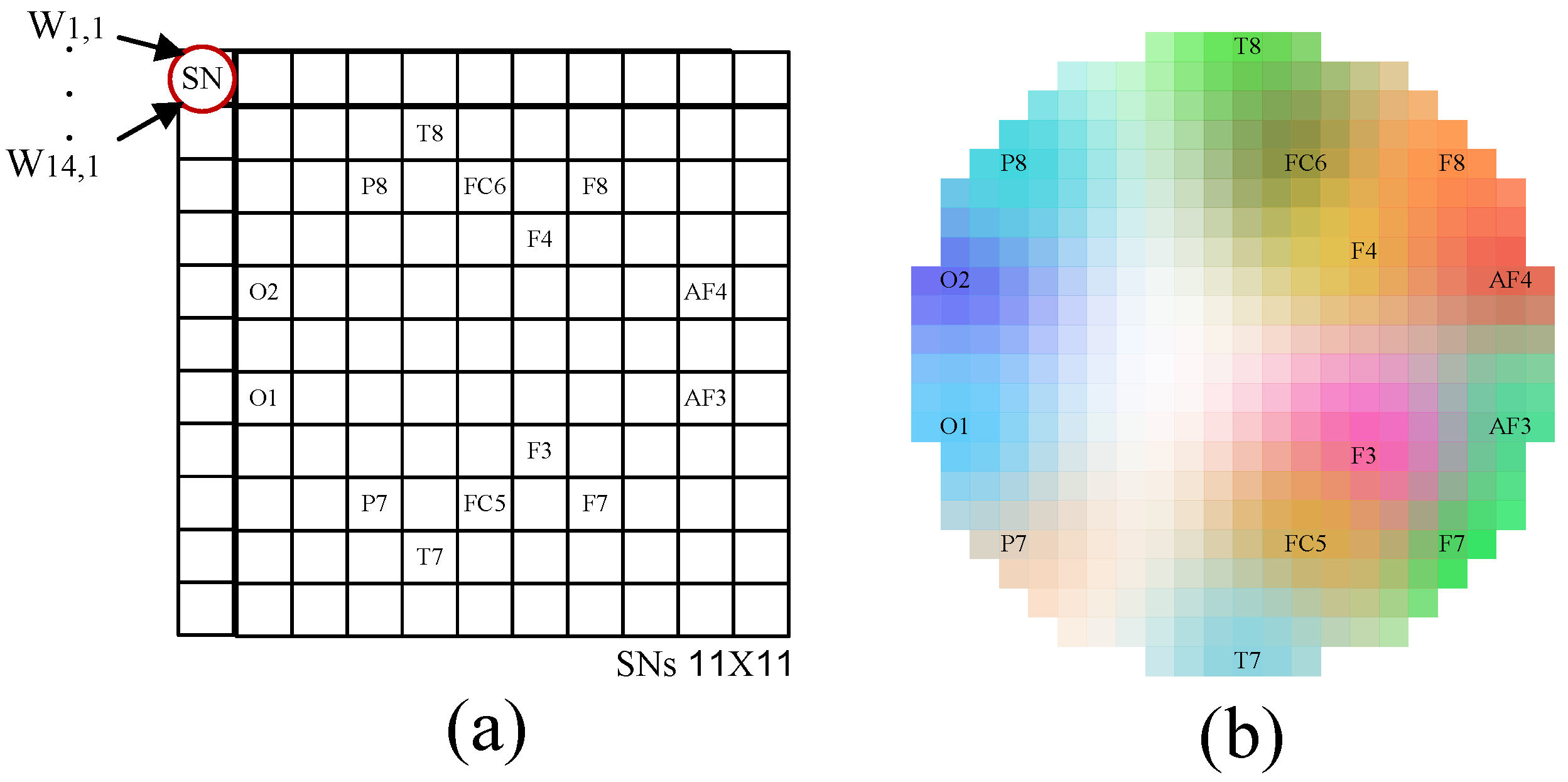

Figure 2 illustrates the proposed architecture for classifying situational awareness based on EEG signals. Classifying an EEG signal using an SNN requires encoding the raw EEG data into spikes. In the first step, the signals sampled from fourteen electrodes located on the subject’s head (as depicted in Figure 2a) are converted into pulse trains using Delta-sigma modulation (DSM) coding. We utilize the well-known Delta-sigma modulation and an SCTN-based Integrate-and-Fire (IF) neuron to convert the analog input sensor voltages into binary spike trains with firing rates that are linearly proportional to the input voltage [27]. Next, the frequency characteristics are extracted for each channel using a dedicated array of resonators. The feature extraction phase is carried out using the SNN-based resonator architecture and a supervised STDP learning rule [43]. The weights and biases of the resonator networks are adjusted using the proposed supervised algorithm to detect the targeted EEG sub-band frequencies. In the next step, we propose a unique mapping of the extracted features using five SCTN-based networks. Finally, another network is used for classification. The proposed process for feature extraction, feature mapping, and classification is detailed below.
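The spike-encoding step can be illustrated with a generic first-order, one-bit delta-sigma modulator. This is a minimal sketch under stated assumptions, not the SCTN-based IF neuron used in the paper: the function name `delta_sigma_encode`, the input range of [-1, 1], and the test signal are all hypothetical choices made for illustration.

```python
import numpy as np


def delta_sigma_encode(signal):
    """Encode samples in [-1, 1] as a 0/1 spike train (first-order, one-bit DSM).

    Generic textbook modulator; an assumption for illustration, not the
    paper's SCTN-based encoder.
    """
    integrator = 0.0   # running sum of the quantization error
    feedback = 0.0     # previous output mapped to -1 / +1
    bits = np.zeros(len(signal), dtype=np.uint8)
    for n, x in enumerate(signal):
        integrator += x - feedback
        bit = 1 if integrator >= 0.0 else 0   # one-bit quantizer
        bits[n] = bit
        feedback = 1.0 if bit else -1.0
    return bits


# Hypothetical test input: one period of a sine wave, 1000 samples.
t = np.arange(1000) / 1000.0
sine_bits = delta_sigma_encode(0.9 * np.sin(2 * np.pi * t))

# The density of 1s is higher around the peak than around the trough.
density_positive_half = sine_bits[:500].mean()
density_negative_half = sine_bits[500:].mean()
```

For a constant input `x`, the long-run density of 1s approaches `(x + 1) / 2`, which is the linear relation between input voltage and firing rate that the text describes.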

3.1. EEG Feature Extraction

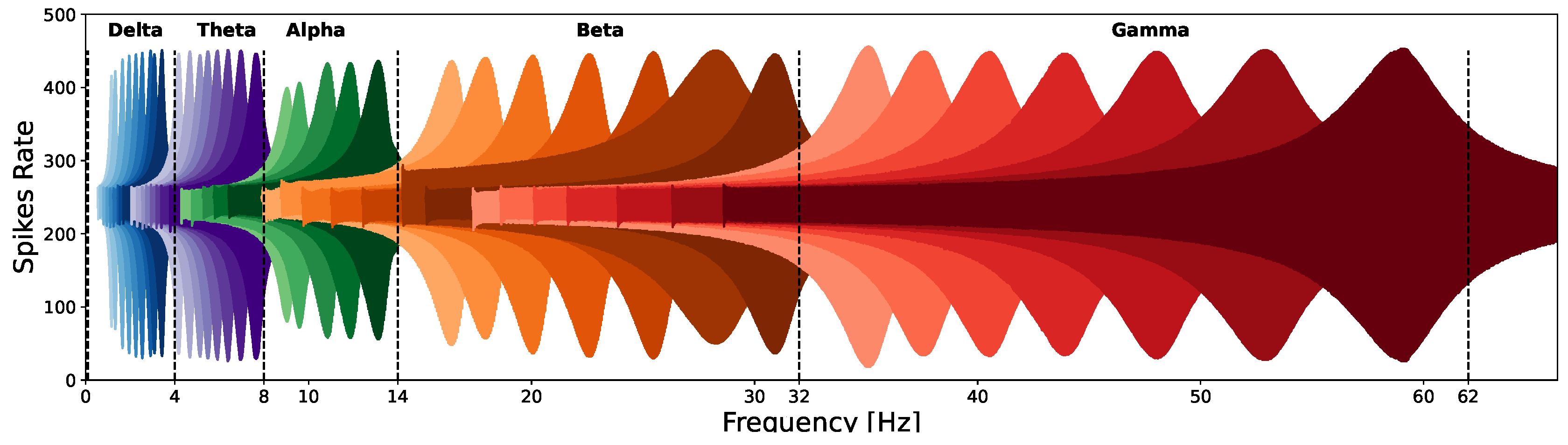

Figure 2b depicts the preprocessing stage, which includes feature extraction in the frequency domain. The EEG signal is composed of five sub-bands: Delta (0.1-4 Hz), Theta (4-8 Hz), Alpha (8-14 Hz), Beta (14-32 Hz), and Gamma (32-60 Hz). The frequency characteristics are extracted for each channel using a dedicated array of resonators. To cover the whole frequency range, each of the five EEG sub-bands is further divided into several specific frequencies. A different number of resonators is therefore assigned to each sub-band: 10, 8, 5, 7, and 7 resonators for Delta, Theta, Alpha, Beta, and Gamma, respectively. In total, we propose an array of 37 SNN-based resonators for each of the fourteen EEG channels to detect all EEG frequency components. Since each resonator is tuned to detect only a limited range of frequencies, the number of required resonators depends not only on the width of the specific EEG sub-band but also differs between the low and high frequency ranges, as can be seen in Figure 3.

The EEG signal is encoded into spikes using Delta-sigma modulation coding and is then fed into the resonator circuit as a binary stream over time. To detect a specific EEG frequency within the different sub-bands, the neurons’ parameters (LP and LF) are determined by Equation (3). The resonator’s frequency response to a chirp signal is depicted in Figure 3. The response signal is normalized in terms of amplitude. To capture the energy within a specified frequency band and detect the different frequencies in each EEG sub-band, we propose using an array of SCTN-based resonators.

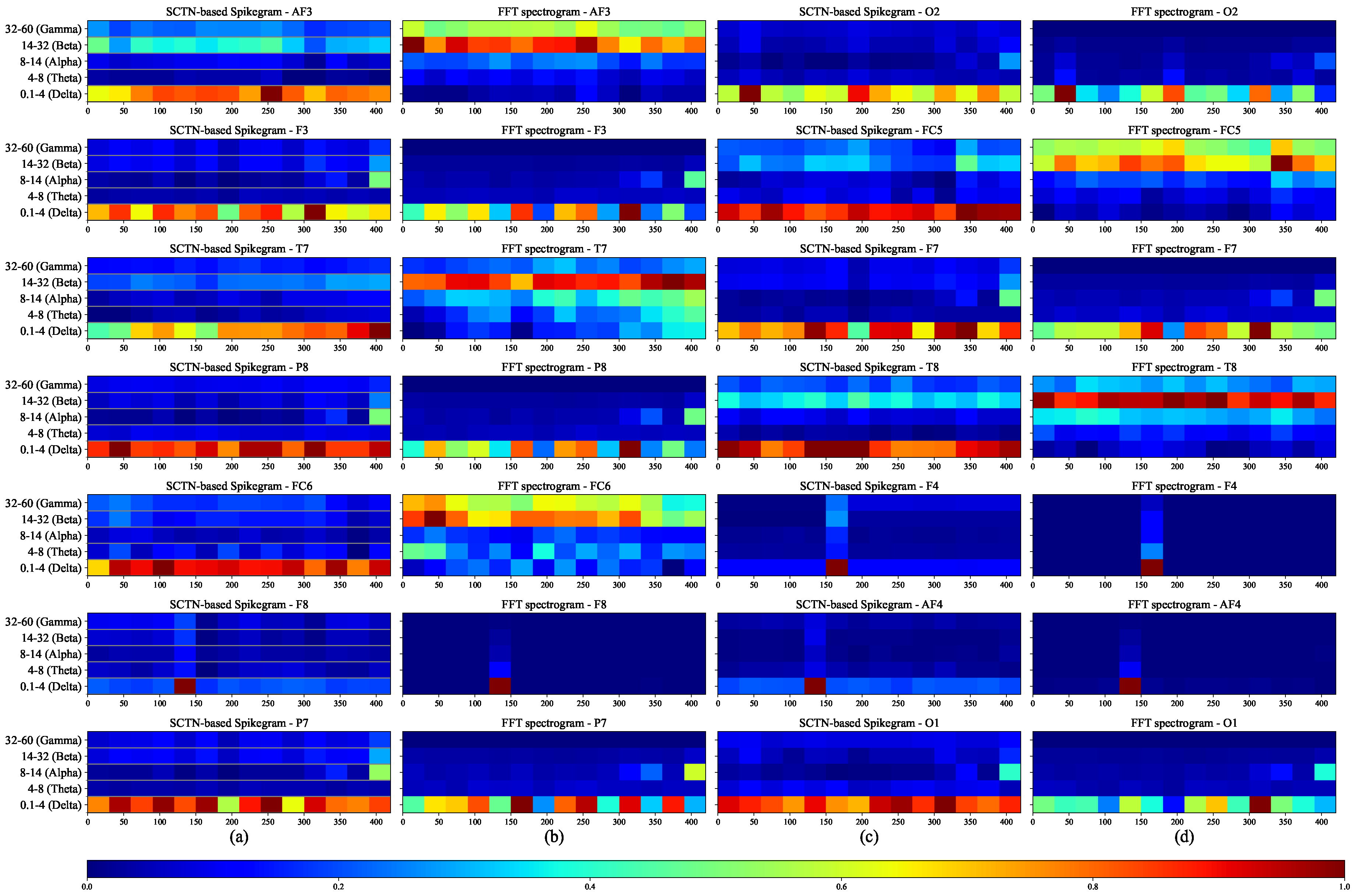

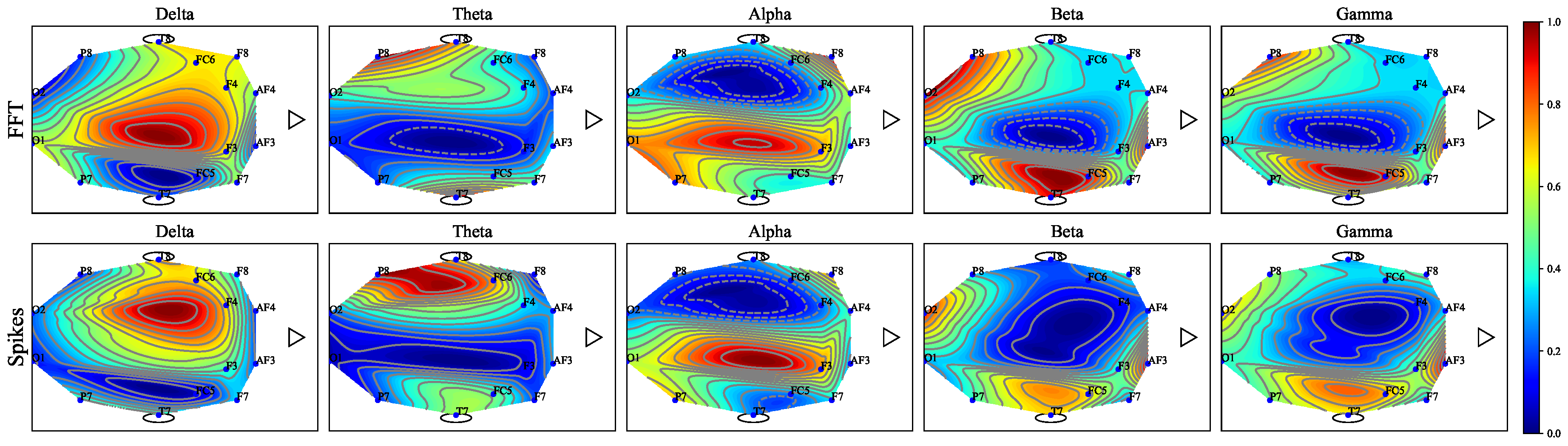

Figure 4a,c depict the frequency outputs of the resonators using a Spikegram representation method, which demonstrates the occurrence of spikes over time for each EEG sub-band. Each time bin represents the aggregated spikes from each group of resonators reflecting the power within that bin. The strength of the signal is shown in color, where red represents the maximum spike rate. To evaluate the quality of the proposed frequency features, we compare them to the features extracted in the Fourier domain. Figure 4b,d depict the spectrogram of the FFT transform of the same under-test EEG signal for the five sub-bands. A comparison between the SNN-based Spikegram and the FFT spectrogram demonstrates a high correlation between the SNN-based extracted features and the FFT features.
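The FFT reference features used in such a comparison can be sketched as band powers over the five sub-bands listed earlier, followed by a Pearson correlation against another feature vector. This is only an illustrative sketch: the function names, the 128 Hz sampling rate, and the synthetic 10 Hz test tone are assumptions, and the stand-in "SNN feature" vector is synthetic rather than resonator output.

```python
import numpy as np

# Sub-band edges in Hz, as listed in Section 3.1.
BANDS = {
    "Delta": (0.1, 4.0),
    "Theta": (4.0, 8.0),
    "Alpha": (8.0, 14.0),
    "Beta": (14.0, 32.0),
    "Gamma": (32.0, 60.0),
}


def fft_band_power(signal, fs):
    """Sum the FFT power spectrum inside each EEG sub-band."""
    power = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    return np.array(
        [power[(freqs >= lo) & (freqs < hi)].sum() for lo, hi in BANDS.values()]
    )


def pearson(a, b):
    """Pearson correlation coefficient between two feature vectors."""
    return float(np.corrcoef(a, b)[0, 1])


# Hypothetical example: a 10 Hz tone sampled at an assumed 128 Hz for 4 s.
fs = 128.0
t = np.arange(0, 4.0, 1.0 / fs)
band_power = fft_band_power(np.sin(2 * np.pi * 10.0 * t), fs)
dominant_band = list(BANDS)[int(np.argmax(band_power))]

# Synthetic stand-in for spike-rate features: a scaled, offset copy.
stand_in_features = 2.0 * band_power + 1.0
correlation = pearson(band_power, stand_in_features)
```

With real data, `stand_in_features` would be replaced by the aggregated spike counts of each resonator group over the same time bins.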

3.2. EEG Feature Mapping

This section describes a unique mapping of the extracted features using five SCTN-based networks (one for each EEG sub-band). We introduce a novel approach for mapping each of the five EEG sub-bands into a unique spatial map using an SNN. Each of the fourteen EEG channels is characterized by five sub-bands. For each sub-band, we construct a unique spiking neural network that maintains the EEG topographic map, as depicted in Figure 5a. Each network is composed of an 11 × 11 matrix of SCTN-type neurons (121 neurons per map). Every neuron has fourteen inputs representing the relevant frequency band derived from each of the 14 channels.

The contribution of each channel to a specific EEG sub-band (i.e., Delta, Theta, Alpha, Beta, and Gamma) is represented by a unique spatial topographic map. Within each map, 14 specific neurons are assigned in accordance with the placement of the fourteen electrodes on the test subject’s head, according to the EEG 10-20 system standard [44]. This representation captures the mutual influences between the channels and their spatial correlation.

The network weights are determined, without any learning process, according to a Gaussian kernel centered around each of the specific neurons (each of which represents a specific channel). Each of these neurons receives the maximal weight for the channel it represents. Therefore, the proposed topographic mapping reflects the spatial correlation and mutual influence among the fourteen EEG channels.

The SCTN network weights are given by:

where represents the coordinates of the specific neuron assigned for a specific electrode and for . Figure 5b depicts the weight distribution for each channel for a given sub-band according to the EEG channels map (Figure 2a).
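A Gaussian-kernel weight assignment of this kind can be sketched as follows. The grid size of 11 × 11 follows from the 121 neurons per map; the kernel width `SIGMA` and the three electrode coordinates are hypothetical values chosen only to make the sketch runnable, and the exact kernel form used by the authors may differ.

```python
import numpy as np

GRID = 11     # 11 x 11 = 121 neurons per sub-band map
SIGMA = 1.5   # assumed kernel width (not given in the text)


def gaussian_map_weights(electrode_rc, grid=GRID, sigma=SIGMA):
    """Return weights of shape (n_channels, grid, grid).

    weights[c, r, k] is the weight from channel c to the map neuron at
    row r, column k; it peaks at the neuron assigned to channel c.
    """
    rows, cols = np.mgrid[0:grid, 0:grid]
    maps = []
    for r0, c0 in electrode_rc:
        dist_sq = (rows - r0) ** 2 + (cols - c0) ** 2
        maps.append(np.exp(-dist_sq / (2.0 * sigma ** 2)))
    return np.stack(maps)


# Hypothetical grid positions for three electrodes (illustration only).
example_positions = [(2, 3), (2, 7), (8, 5)]
weights = gaussian_map_weights(example_positions)

# Each channel's weight map peaks (value 1.0) at its own neuron.
peak_of_first_channel = np.unravel_index(np.argmax(weights[0]), weights[0].shape)
```

Because the weights are fixed by geometry, no training is involved at this stage, which matches the statement that they are determined without any learning process.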

Figure 6 shows the five SCTN-based topographic maps (one for each sub-band) compared to the spatial maps created using FFT. Again, a high correlation can be observed between the two feature maps.

3.3. SNN Classification

Figure 2d depicts the final classification phase. The classifier is constructed from an SCTN network comprising three layers. The input layer consists of 121 neurons, representing the spatial mapping of each sub-channel. Each neuron in the input layer receives 121 inputs from each of the five EEG sub-band maps, aggregating to 605 input synapses (5 × 121). The two fully connected hidden layers contain 64 and 32 SCTN-type neurons, respectively. The ReLU activation function is implemented for all neurons across all layers. The output layer contains three neurons that represent the three mental states: focused, unfocused, and drowsy [24]. The training process is based on an unsupervised STDP learning algorithm [45], where the weights of each SCTN neuron are updated according to the following equation:

where A represents the learning rate, and is the time constant used to control the synaptic potentiation and depression. The magnitude of the learning rate decreases exponentially with the absolute value of the timing difference. When multiple spikes are simultaneously fired, the required weight change is calculated by summing the individual changes derived from all possible spike pairs. and are sets of spikes representing the pre and post-synaptic neurons, respectively, where stands for the timing of spike k (from set), and represents the timing of spike l (from set).
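The verbal description above (exponential decay with the absolute timing difference, summed over all spike pairs) matches the common pair-based form of STDP, which can be sketched as below. This is an assumption-laden illustration rather than the authors’ update rule: the function name, the default values of `a` and `tau`, and the sign convention (pre-before-post potentiates, post-before-pre depresses) are all hypothetical.

```python
import math


def stdp_weight_change(pre_spikes, post_spikes, a=0.01, tau=20.0):
    """Pair-based STDP: sum the contribution of every (pre, post) spike pair.

    a   -- learning rate (assumed value)
    tau -- time constant controlling potentiation and depression (assumed)
    """
    delta_w = 0.0
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            dt = t_post - t_pre
            if dt > 0:      # pre fires before post: potentiation
                delta_w += a * math.exp(-dt / tau)
            elif dt < 0:    # post fires before pre: depression
                delta_w -= a * math.exp(dt / tau)
    return delta_w


# Hypothetical spike times in milliseconds.
potentiation = stdp_weight_change([10.0], [15.0])
depression = stdp_weight_change([15.0], [10.0])
```

The magnitude of each pair’s contribution shrinks as the two spikes move further apart in time, and multiple spikes simply add their pairwise contributions.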

Each neuron in the final layer is assigned to a specific category and, through the unsupervised STDP training, is automatically adjusted to detect the patterns associated with its assigned category. All the weights in the classification SNN are initialized randomly.

The implementation of the proposed SNN-based architecture consists of only 2776 spiking SCTN neurons. A total of 2072 (14 × 37 × 4) neurons are required to implement the feature extraction network (fourteen channels, each connected to thirty-seven resonators composed of four neurons each). The feature mapping network consists of 605 (5 × 11 × 11) neurons, while the classification network is composed of 99 (64 + 32 + 3) neurons.

4. Experimental Results

4.1. Dataset

The dataset used in this study comprises a total of 25 hours of EEG recordings collected from five healthy volunteer participants, all of whom were students, engaged in a low intensity control task [24]. This task involved controlling a computer-simulated train using the "Microsoft Train Simulator" program. Each experiment required participants to control the train for 35 to 55 minutes along a primarily featureless route. The study focused on three mental states: focused but passive attention, unfocused or detached but awake, and drowsy.

The first mental state, "focused," involved participants passively supervising the train while maintaining continuous concentration and focus, without active engagement. The second state, "unfocused," was characterized by participants being awake but not paying attention to the screen, representing a potentially dangerous state that should trigger an alert. This state, difficult to detect through external cues such as video monitoring, requires sophisticated discrimination methods. The third state involved explicit drowsing [24].

Each participant controlled the simulated train for three distinct 10-minute phases during each experiment. Initially, participants focused closely on the simulator’s controls. In the second phase, they stopped providing control inputs and ceased paying attention to the screen while remaining awake. In the final phase, participants were allowed to relax, close their eyes, and doze off. Each participant completed seven experiments, with a maximum of one per day. The first two experiments were for habituation, and data collection was conducted during the last five trials. To facilitate the transition to the drowsy state, all experiments were conducted between 7 pm and 9 pm. The raw data from these experiments is available to researchers on the Kaggle website.

4.2. Simulation Results

Table 1 shows the classification accuracy obtained with an open-source classification tool for six different classification models [46]. The performance of the classifiers was evaluated for both the SCTN-based and the FFT features. The FFT features were extracted for various frame durations (5, 10, 15, and 20 ms). The feature set that yields the best classification was selected for comparison. Results show that EEG classification based on our proposed approach (SCTN) outperforms the FFT-based classification for all five models and demonstrates an accuracy improvement of up to 6.9%.

We employed two evaluation methods for situational awareness detection: subject-specific and group-level classification. For the subject-specific classification, the classification network was trained individually for each participant using only that participant’s data. A random 80% of the samples from the specific subject’s EEG data were used for training, and the remaining 20%, which were not used for training, were used for testing.

An 80-20 data split was also used for the group-level classification. In this scenario, the training set combined data from all participants, consisting of a randomly selected 80% of the joint EEG recordings. Testing was carried out on the remaining 20% of the mixed dataset, which had not been used during the training phase.
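The two splitting schemes can be sketched as follows. This is a minimal illustration assuming samples are addressed by index; the function names, the random seed, and the per-subject sample counts are hypothetical and do not come from the dataset.

```python
import numpy as np


def split_80_20(n_samples, rng, train_fraction=0.8):
    """Randomly split sample indices into disjoint train and test sets."""
    order = rng.permutation(n_samples)
    cut = int(round(train_fraction * n_samples))
    return order[:cut], order[cut:]


def subject_specific_splits(samples_per_subject, seed=0):
    """One independent 80-20 split per participant."""
    rng = np.random.default_rng(seed)
    return {
        subject: split_80_20(count, rng)
        for subject, count in samples_per_subject.items()
    }


def group_level_split(samples_per_subject, seed=0):
    """Pool all participants' samples, then apply a single 80-20 split."""
    rng = np.random.default_rng(seed)
    pooled = [
        (subject, index)
        for subject, count in samples_per_subject.items()
        for index in range(count)
    ]
    train_idx, test_idx = split_80_20(len(pooled), rng)
    return [pooled[i] for i in train_idx], [pooled[i] for i in test_idx]


# Hypothetical sample counts for two participants.
counts = {"P0": 100, "P1": 50}
per_subject = subject_specific_splits(counts)
group_train, group_test = group_level_split(counts)
```

In the subject-specific case each classifier only ever sees one participant’s data, whereas the group-level test set mixes samples from all participants.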

To further evaluate the SCTN-based classifier, we compare our proposed approach with previous related work applied to EEG classification. Table 2 summarizes the results of four previous studies on detecting mental attention states as described in [24] compared to the result of our proposed SCTN-based classifier. As seen in the table, our method demonstrates better results than all previous studies which used the SVM classifier. An average classification accuracy of 96.8% and 91.7% is achieved for classifying the three mental states while using our method (SCTN) and the SVM method [24], respectively.

The proposed architecture has been evaluated both with individual training for each participant and with a common-subject paradigm in which a single generic classifier is jointly trained for all participants. Results show that the generic mental state detector performs slightly worse than the subject-specific detectors, demonstrating an accuracy degradation of about 4-6% compared to training with individual subjects. The good generalization ability is achieved due to the proposed feature extraction method and the EEG feature mapping, which successfully mitigate the differences between users and highlight relevant characteristics.

The performance is reported for each subject for the subject-specific model, the transfer learning model, and the generic model.

Table 3 depicts the results of applying a transfer learning (TL) approach (training on one subject and testing on another) for the five participants (P0 to P4). Additionally, the table also provides the results of the generic model (training with samples from all participants and testing on a specific participant).

Results show a success rate in the range of 94.7% to 98.3% for the subject-specific case (indicated on the table diagonal) and an average accuracy of 92.53% for the transfer learning model. The generic model demonstrates comparable results with a 92.64% success rate on average. These findings underscore the model’s ability to effectively classify situational awareness based on EEG data for both training paradigms.

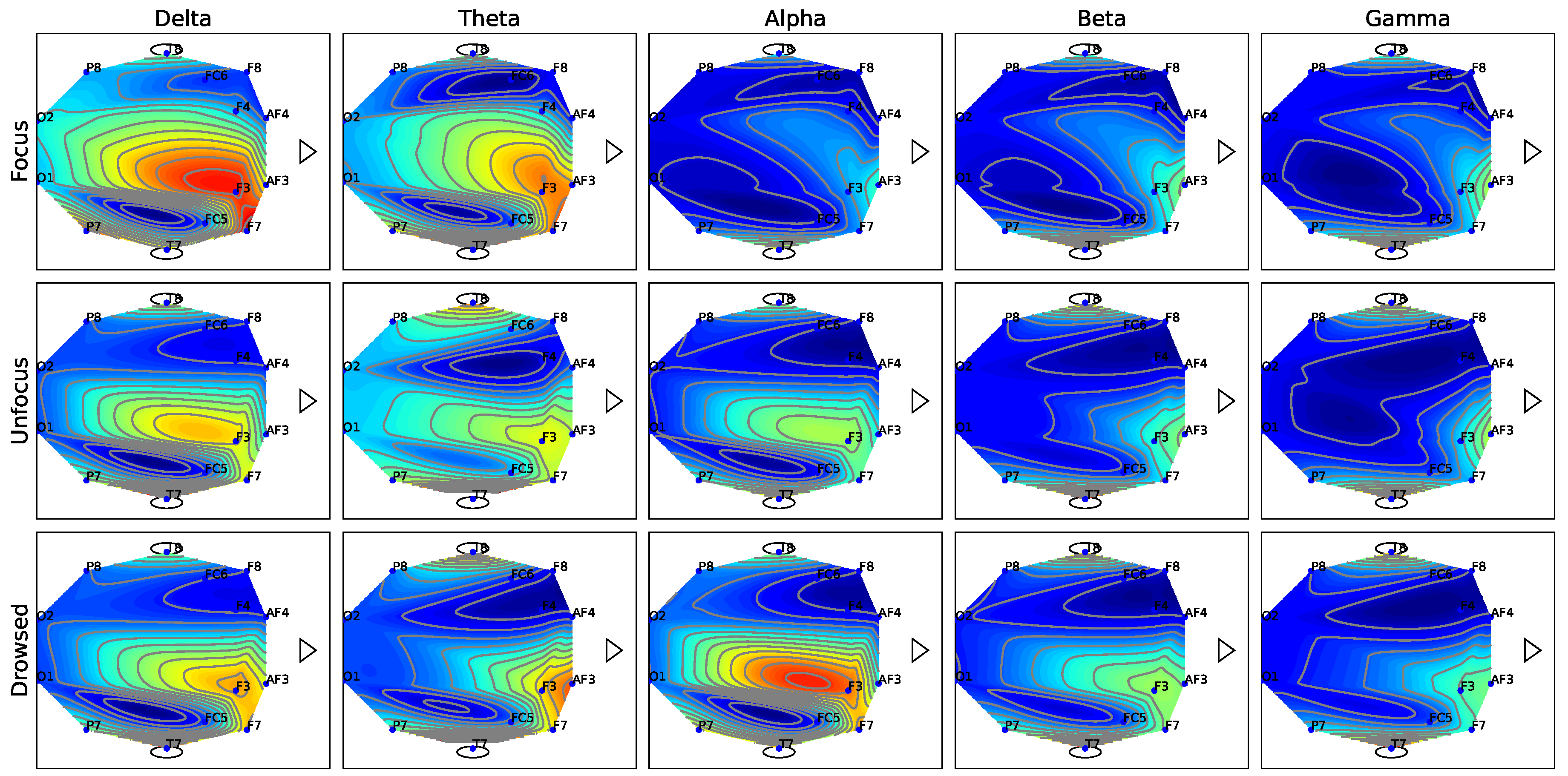

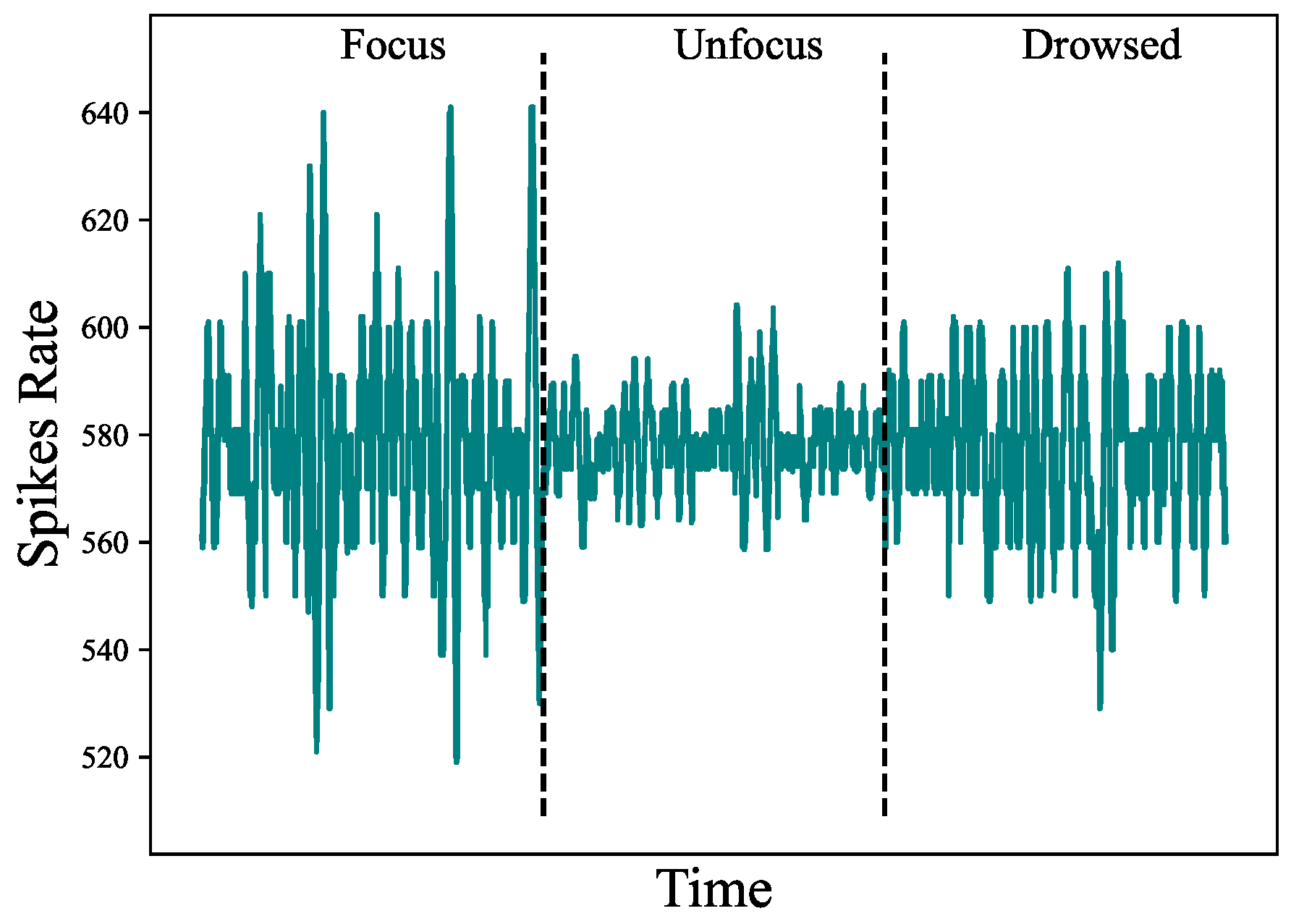

Figure 7 depicts the topography maps of EEG signal magnitude divided into five frequency bands (Delta, Theta, Alpha, Beta, and Gamma) for each of the three mental states (focused, unfocused, and drowsy). It can be seen that both the focused and unfocused mental states are mainly characterized by the Delta and Theta sub-bands (i.e., by low-frequency EEG activity, specifically within the 1 to 8 Hz range). However, while the focused state shows increased activity in the frontal lobe, the unfocused state shows decreased activity in this area. The drowsy state is characterized mainly by the Alpha sub-band (8-14 Hz). This outcome is consistent with existing literature findings on the correlation between the Alpha EEG band and the drowsy mental state [24]. Figure 8 depicts the activity over time in the F3 EEG channel, located in the frontal lobe, for the Delta sub-band. The spike rate corresponds to the three mental states and demonstrates the decrease in activity for the unfocused state compared to the focused state.

We evaluate the relative contribution of the various EEG electrodes in discerning the three mental states (focused, unfocused, and drowsy). Remarkably, we found that the best classification performance could be achieved using only 5 EEG electrodes: F7, F3, AF3, F4, and AF4. Notably, three of those five electrodes are situated over the frontal lobe.

Table 4 shows the mental state classification results achieved with a reduced set of only five EEG electrodes compared to the 14 available EEG electrodes, while employing either all five EEG sub-bands or only the three low-frequency sub-bands (Delta, Theta, and Alpha). Although using all 14 EEG channels and the five sub-bands results in the best performance with 96.8% accuracy, the accuracy loss when using only 5 EEG channels and three sub-bands is minor, with a degradation of only 3.2%. The benefit is reduced area and power, since only 1202 neurons are required compared to 2776 neurons for the best-case scenario. Applying only the three dominant sub-bands (Delta, Theta, and Alpha) for mental state classification results in 94.1% accuracy compared to 96.8% with all five available sub-bands, while reducing the number of required neurons by about 9%. Using all the frequency sub-bands with only five EEG electrodes results in a slight accuracy loss of 1.9% but saves about 50% of the required neurons.
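The neuron counts quoted above can be reproduced with a short calculation. The sketch below assumes that dropping sub-bands removes only the corresponding 11 × 11 mapping networks while each channel keeps its full array of 37 resonators; this assumption is inferred from the reported figures (1202 neurons and a saving of about 9%), not stated explicitly in the text, and the function name is hypothetical.

```python
NEURONS_PER_RESONATOR = 4
RESONATORS_PER_CHANNEL = 37      # 10 + 8 + 5 + 7 + 7
MAP_NEURONS = 11 * 11            # one 11 x 11 map per sub-band
CLASSIFIER_NEURONS = 64 + 32 + 3


def neuron_count(n_channels, n_subband_maps):
    """Total SCTN neurons for a given electrode and sub-band configuration."""
    extraction = n_channels * RESONATORS_PER_CHANNEL * NEURONS_PER_RESONATOR
    mapping = n_subband_maps * MAP_NEURONS
    return extraction + mapping + CLASSIFIER_NEURONS


full = neuron_count(14, 5)               # 2776
three_bands = neuron_count(14, 3)        # 2534
five_electrodes = neuron_count(5, 5)     # 1444
smallest = neuron_count(5, 3)            # 1202

saving_three_bands = 1 - three_bands / full          # about 0.09
saving_five_electrodes = 1 - five_electrodes / full  # about 0.48
```

Under this assumption the calculation agrees with the totals of 2776 and 1202 neurons, the roughly 9% saving for three sub-bands, and the roughly 50% saving for five electrodes.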

4.3. Performance Evaluation

The performance evaluation of the proposed method is carried out using the four following metrics:

1. Accuracy: calculated as the ratio of the sum of the true positive (TP) and true negative (TN) predictions to the total number of predictions (TP, TN, false positive (FP), and false negative (FN)) made by the model:

   $$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

2. Sensitivity: determined as the ratio of true positive (TP) predictions to the sum of true positive (TP) and false negative (FN) predictions.
3. Specificity: calculated as the ratio of true negative (TN) predictions to the sum of true negative (TN) and false positive (FP) predictions.
4. False Positive Rate (FPR): determined as the ratio of false positives (FP) to the sum of false positives (FP) and true negatives (TN).
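For a three-class problem, these four metrics are typically computed per class in a one-vs-rest fashion from the confusion matrix. The sketch below illustrates this under stated assumptions: the function name is hypothetical, rows are taken as true classes and columns as predicted classes, and the confusion matrix contains made-up numbers, not the results of Table 6.

```python
import numpy as np


def one_vs_rest_metrics(confusion):
    """Per-class accuracy, sensitivity, specificity and FPR.

    confusion[i, j] = number of samples of true class i predicted as class j.
    """
    cm = np.asarray(confusion, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp   # true class i, predicted as another class
    fp = cm.sum(axis=0) - tp   # predicted class i, true class is another
    tn = total - tp - fn - fp
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "fpr": fp / (fp + tn),
    }


# Hypothetical confusion matrix for (focused, unfocused, drowsy).
example_cm = [
    [95, 3, 2],
    [4, 94, 2],
    [1, 2, 97],
]
metrics = one_vs_rest_metrics(example_cm)
```

By construction, specificity and FPR sum to one for every class, which provides a quick consistency check on reported values.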

Table 5 shows the proposed method’s performance in terms of the four metrics including accuracy, sensitivity, specificity, and False Positive Rate (FPR). An average accuracy of 96.8% is demonstrated for the subject-specific model and a precise identification of all three EEG-based mental states is achieved.

The high average sensitivity (96.9%) highlights the model’s robust ability to detect true positive cases, while an average specificity of 98.33% underscores its proficiency in correctly identifying true negative cases, thereby reducing the risk that normal brain activity leads to a wrong situational awareness classification.

Furthermore, the low FPR average of 1.63% indicates a low incidence of false positive predictions, ensuring reliable differentiation between different mental states based on EEG recordings. Table 6 depicts a confusion matrix to evaluate the classification of the three mental states, demonstrating highly accurate classification results.

5. Conclusions

This article suggests utilizing a biologically inspired SNN-based architecture for feature extraction in the frequency domain. We propose an original approach for classifying mental states and situation awareness based on EEG signals and a biologically inspired SNN using an array of unique SCTN-based resonators and an SNN classification network.

Narrowing the frequency bands to three sub-bands achieves area savings with classification results comparable to the best scenario. Moreover, using only five out of the fourteen EEG electrodes yields accuracies of 94.9% and 93.6% with five and three EEG sub-bands, respectively, while reducing the number of required neurons to about 50%.

Results demonstrate a strong correlation between the SCTN-based bio-inspired features and the FFT-extracted features. This suggests that the proposed method could be effectively applied to diverse applications. Simulations show that our approach outperforms previous related work, demonstrating 96.8% accuracy in classifying the three mental states (focused, unfocused, and drowsy). Moreover, we achieved 93.6% accuracy with only five EEG electrodes compared to 91.7% with 14 electrodes in the work of [24].

The proposed SNN-based classifier has a particularly good generalization ability, which makes it possible to train the model on an individual participant and then successfully apply it to other participants. The proposed approach has been evaluated using a limited number of subjects. We therefore believe that evaluating it on a larger number of subjects and on additional awareness states would better demonstrate the power of the suggested method.

This study describes the potential usage of spiking neural networks for EEG signal analysis. Future work may expand the proposed approach to apply the neuromorphic SCTN-based classification network to further EEG-based applications like early detection of epileptic seizures and more.

Author Contributions

Conceptualization, M.B. and S.G.; methodology, M.B. and S.G.; software, Y.H.; validation, Y.H. and M.B.; formal analysis, Y.H. and M.B.; investigation, Y.H. and M.B.; writing—original draft preparation, M.B., S.G. and Y.B.; writing—review and editing, M.B., S.G. and Y.B.; visualization, Y.H.; supervision, S.G. and Y.B.; project administration, S.G. and Y.B.; All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The source code and dataset can be found in the following GitHub Repository: https://github.com/NeuromorphicLabBGU/Situational-Awareness-Classification.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| SCTN | Spike-Continuous-Time-Neuron |
| SNN | Spiking Neural Network |
| SN | Spiking Neuron |
| IF | Integrate-and-Fire |
| SA | Situational Awareness |
| LF | Leakage Factor |
| LP | Leakage Period |
| TL | Transfer Learning |
| STDP | Spike-Timing-Dependent Plasticity |
| FFT | Fast Fourier Transform |
| DWT | Discrete Wavelet Transform |
| DSM | Delta-Sigma Modulation |
| PDM | Pulse-Density Modulation |

References

1. Clark, K.; Wu, Y. Survey of Neuromorphic Computing: A Data Science Perspective. 2023 IEEE 3rd International Conference on Computer Communication and Artificial Intelligence (CCAI). IEEE, 2023, pp. 81–84.
2. Shrestha, A.; Fang, H.; Mei, Z.; Rider, D.P.; Wu, Q.; Qiu, Q. A survey on neuromorphic computing: Models and hardware. IEEE Circuits and Systems Magazine 2022, 22, 6–35.
3. Cai, L.; Yu, L.; Yue, W.; Zhu, Y.; Yang, Z.; Li, Y.; Tao, Y.; Yang, Y. Integrated Memristor Network for Physiological Signal Processing. Advanced Electronic Materials 2023, 9, 2300021.
4. Yang, G.; Liu, K.; Yu, H.; Li, C.; Zeng, M. Examination and Repair of Technology of Equipment Status Based on SNN in Intelligent Substation. Journal of Physics: Conference Series. IOP Publishing, 2023, Vol. 2666, p. 012037.
5. Desai, U.; Shetty, A.D. Electrodermal activity (EDA) for treatment of neurological and psychiatric disorder patients: a review. 2021 7th International Conference on Advanced Computing and Communication Systems (ICACCS). IEEE, 2021, Vol. 1, pp. 1424–1430.
6. Yang, Y.; Eshraghian, J.K.; Truong, N.D.; Nikpour, A.; Kavehei, O. Neuromorphic deep spiking neural networks for seizure detection. Neuromorphic Computing and Engineering 2023, 3, 014010.
7. Zhu, K.; Zhang, X.; Wang, J.; Cheng, N.; Xiao, J. Improving EEG-based Emotion Recognition by Fusing Time-Frequency and Spatial Representations. ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2023, pp. 1–5.
8. Xu, Q.; Shen, J.; Ran, X.; Tang, H.; Pan, G.; Liu, J.K. Robust transcoding sensory information with neural spikes. IEEE Transactions on Neural Networks and Learning Systems 2021, 33, 1935–1946.
9. Samuel, S.; Borowsky, A.; Zilberstein, S.; Fisher, D.L. Minimum time to situation awareness in scenarios involving transfer of control from an automated driving suite. Transportation Research Record 2016, 2602, 115–120.
10. Endsley, M.R. Toward a theory of situation awareness in dynamic systems. Human Factors 1995, 37, 32–64.
11. Kästle, J.L.; Anvari, B.; Krol, J.; Wurdemann, H.A. Correlation between Situational Awareness and EEG signals. Neurocomputing 2021, 432, 70–79.
12. Catherwood, D.; Edgar, G.K.; Nikolla, D.; Alford, C.; Brookes, D.; Baker, S.; White, S. Mapping brain activity during loss of situation awareness: an EEG investigation of a basis for top-down influence on perception. Human Factors 2014, 56, 1428–1452.
13. Iqbal, S.; Muhammed Shanir, P.; Khan, Y.U.; Farooq, O. Time domain analysis of EEG to classify imagined speech. Proceedings of the Second International Conference on Computer and Communication Technologies: IC3T 2015, Volume 2. Springer, 2016, pp. 793–800.
14. Qin, X.; Zheng, Y.; Chen, B. Extract EEG features by combining power spectral density and correntropy spectral density. 2019 Chinese Automation Congress (CAC). IEEE, 2019, pp. 2455–2459.
15. Elgandelwar, S.M.; Bairagi, V.K. Power analysis of EEG bands for diagnosis of Alzheimer disease. International Journal of Medical Engineering and Informatics 2021, 13, 376–385.
16. Al-Fahoum, A.S.; Al-Fraihat, A.A. Methods of EEG Signal Features Extraction Using Linear Analysis in Frequency and Time-Frequency Domains. International Scholarly Research Notices 2014, 2014, 730218.
17. Zheng, J.; Liang, M.; Sinha, S.; Ge, L.; Yu, W.; Ekstrom, A.; Hsieh, F. Time-frequency analysis of scalp EEG with Hilbert-Huang transform and deep learning. IEEE Journal of Biomedical and Health Informatics 2021, 26, 1549–1559.
18. Altaheri, H.; Muhammad, G.; Alsulaiman, M.; Amin, S.U.; Altuwaijri, G.A.; Abdul, W.; Bencherif, M.A.; Faisal, M. Deep learning techniques for classification of electroencephalogram (EEG) motor imagery (MI) signals: A review. Neural Computing and Applications 2023, 35, 14681–14722.
19. Pahuja, S.; Veer, K. Recent approaches on classification and feature extraction of EEG signal: A review. Robotica 2022, 40, 77–101.
20. Light, G.A.; Williams, L.E.; Minow, F.; Sprock, J.; Rissling, A.; Sharp, R.; Swerdlow, N.R.; Braff, D.L. Electroencephalography (EEG) and event-related potentials (ERPs) with human participants. Current Protocols in Neuroscience 2010, 52, 6–25.
21. Sahu, R.; Dash, S.R.; Cacha, L.A.; Poznanski, R.R.; Parida, S. Epileptic seizure detection: a comparative study between deep and traditional machine learning techniques. Journal of Integrative Neuroscience 2020, 19, 1–9.
22. Qing-Hua, W.; Li-Na, W.; Song, X. Classification of EEG signals based on time-frequency analysis and spiking neural network. 2020 IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC). IEEE, 2020, pp. 1–5.
23. Zhang, Z. Spectral and time-frequency analysis. EEG Signal Processing and Feature Extraction 2019, pp. 89–116.
24. Aci, Ç.İ.; Kaya, M.; Mishchenko, Y. Distinguishing mental attention states of humans via an EEG-based passive BCI using machine learning methods. Expert Systems with Applications 2019, 134, 153–166.
25. Forno, E.; Fra, V.; Pignari, R.; Macii, E.; Urgese, G. Spike encoding techniques for IoT time-varying signals benchmarked on a neuromorphic classification task. Frontiers in Neuroscience 2022, 16, 999029.
26. Shamma, S.A.; Elhilali, M.; Micheyl, C. Temporal coherence and attention in auditory scene analysis. Trends in Neurosciences 2011, 34, 114–123.
27. Nasrollahi, S.A.; Syutkin, A.; Cowan, G. Input-Layer Neuron Implementation Using Delta-Sigma Modulators. 2022 20th IEEE Interregional NEWCAS Conference (NEWCAS). IEEE, 2022, pp. 533–537.
28. Park, S. Principles of sigma-delta modulation for analog-to-digital converters 1999.
29. Luo, Y.; Fu, Q.; Xie, J.; Qin, Y.; Wu, G.; Liu, J.; Jiang, F.; Cao, Y.; Ding, X. EEG-based emotion classification using spiking neural networks. IEEE Access 2020, 8, 46007–46016.
30. Devnath, L.; Kumer, S.; Nath, D.; Das, A.; Islam, R. Selection of wavelet and thresholding rule for denoising the ECG signals. Annals of Pure and Applied Mathematics 2015, 10, 65–73.
31. Zhang, D.; Cao, D.; Chen, H. Deep learning decoding of mental state in non-invasive brain computer interface. Proceedings of the International Conference on Artificial Intelligence, Information Processing and Cloud Computing, 2019, pp. 1–5.
32. Friedman, N.; Fekete, T.; Gal, K.; Shriki, O. EEG-based prediction of cognitive load in intelligence tests. Frontiers in Human Neuroscience 2019, 13, 191.
33. Xia, L.; Feng, Y.; Guo, Z.; Ding, J.; Li, Y.; Li, Y.; Ma, M.; Gan, G.; Xu, Y.; Luo, J.; et al. MuLHiTA: A novel multiclass classification framework with multibranch LSTM and hierarchical temporal attention for early detection of mental stress. IEEE Transactions on Neural Networks and Learning Systems 2022.
34. Xiong, R.; Kong, F.; Yang, X.; Liu, G.; Wen, W. Pattern recognition of cognitive load using EEG and ECG signals. Sensors 2020, 20, 5122.
35. Souchet, A.D.; Philippe, S.; Lourdeaux, D.; Leroy, L. Measuring visual fatigue and cognitive load via eye tracking while learning with virtual reality head-mounted displays: A review. International Journal of Human–Computer Interaction 2022, 38, 801–824.
36. Belwafi, K.; Gannouni, S.; Aboalsamh, H. Embedded brain computer interface: State-of-the-art in research. Sensors 2021, 21, 4293.
37. Venkatesan, C.; Karthigaikumar, P.; Paul, A.; Satheeskumaran, S.; Kumar, R. ECG signal preprocessing and SVM classifier-based abnormality detection in remote healthcare applications. IEEE Access 2018, 6, 9767–9773.
38. Zayim, N.; Yıldız, H.; Yüce, Y.K. Estimating Cognitive Load in a Mobile Personal Health Record Application: A Cognitive Task Analysis Approach. Healthcare Informatics Research 2023, 29, 367.
39. Prabhakar, G.; Mukhopadhyay, A.; Murthy, L.; Modiksha, M.; Sachin, D.; Biswas, P. Cognitive load estimation using ocular parameters in automotive. Transportation Engineering 2020, 2, 100008.
40. Gjoreski, M.; Mahesh, B.; Kolenik, T.; Uwe-Garbas, J.; Seuss, D.; Gjoreski, H.; Luštrek, M.; Gams, M.; Pejović, V. Cognitive load monitoring with wearables–lessons learned from a machine learning challenge. IEEE Access 2021, 9, 103325–103336.
41. Bensimon, M.; Greenberg, S.; Ben-Shimol, Y.; Haiut, M. A New SCTN Digital Low Power Spiking Neuron. IEEE Transactions on Circuits and Systems II: Express Briefs 2021, 68, 2937–2941.
42. Bensimon, M.; Greenberg, S.; Haiut, M. Using a low-power spiking continuous time neuron (SCTN) for sound signal processing. Sensors 2021, 21, 1065.
43. Bensimon, M.; Hadad, Y.; Ben-Shimol, Y.; Greenberg, S. Time-Frequency Analysis for Feature Extraction Using Spiking Neural Network. Authorea Preprints 2023.
44. Malmivuo, J.; Plonsey, R. Bioelectromagnetism: Principles and Applications of Bioelectric and Biomagnetic Fields; Oxford University Press, USA, 1995.
45. Song, S.; Miller, K.D.; Abbott, L.F. Competitive Hebbian learning through spike-timing-dependent synaptic plasticity. Nature Neuroscience 2000, 3, 919–926.
46. Erickson, N.; Mueller, J.; Shirkov, A.; Zhang, H.; Larroy, P.; Li, M.; Smola, A. AutoGluon-Tabular: Robust and accurate AutoML for structured data. arXiv 2020, arXiv:2003.06505.
47. Myrden, A.; Chau, T. A passive EEG-BCI for single-trial detection of changes in mental state. IEEE Transactions on Neural Systems and Rehabilitation Engineering 2017, 25, 345–356.
48. Alirezaei, M.; Sardouie, S.H. Detection of human attention using EEG signals. 2017 24th National and 2nd International Iranian Conference on Biomedical Engineering (ICBME). IEEE, 2017, pp. 1–5.
49. Nuamah, J.; Seong, Y. Support vector machine (SVM) classification of cognitive tasks based on electroencephalography (EEG) engagement index. Brain-Computer Interfaces 2018, 5, 1–12.

Figure 1.

(a) SNN-based resonator architecture and (b) the neurons’ output.

Figure 2.

Overall SNN-based architecture: (a) EEG electrode positions according to the 10-20 standard, (b) SNN-based resonators used for feature extraction using supervised STDP learning, (c) feature mapping: an EEG topological map consisting of SCTN neurons, and (d) SCTN-based classification network trained with unsupervised STDP.

Figure 3.

SCTN-based resonator frequency response to a chirp signal across the EEG sub-band ranges.

Figure 4.

EEG frequency features: (a,c) SNN-based spikegram and (b,d) FFT spectrogram.

Figure 5.

EEG feature mapping: (a) EEG topological map consisting of SCTN neurons for each sub-band, and (b) weight distribution for the 14 synapses of each SCTN neuron, according to the EEG electrode position map.

Figure 6.

EEG sub-band topography maps: FFT vs. SCTN. SCTN-based topographic maps (one for each sub-band) compared to the spatial maps created using FFT.

Figure 7.

EEG topography maps for the five EEG sub-bands (Delta, Theta, Alpha, Beta, and Gamma).

Figure 8.

The activity measured in the F3 EEG electrode for the Delta sub-band.

Table 1.

Average classification results.

| Model | Frame Duration [ms] | FFT | SCTN |
|---|---|---|---|
| Weighted Ensemble | 10 | 90.10% | 93.86% |
| SVM | 20 | 91.51% | 93.18% |
| XGBoost | 10 | 88.82% | 92.64% |
| Random Forest | 5 | 86.81% | 92.46% |
| LightGBM | 15 | 89.42% | 91.96% |
| KNN | 20 | 63.77% | 68.98% |

Table 2.

A comparison of mental state classification results.

| Reference | Mental states | Accuracy |
|---|---|---|
| Myrden and Chau [47] | 3 (fatigue, frustration, attention) | 84.8% |
| Alirezaei and Sardouie [48] | 2 (attentive or inattentive) | 92.8% |
| Nuamah and Seong [49] | 2 (attentive or inattentive) | 93.33% |
| Acı et al. [24] | 3 (focused, unfocused, drowsy) | 91.72% |
| Ours | 3 (focused, unfocused, drowsy) | 96.8% |

Table 3.

Transfer Learning Results: This table summarizes the simulation results where the network was trained on data from one subject and tested across all participants. The right column shows the performance of the generic model, which was trained using data collected from all participants and then tested using each of the subjects separately.

| Tested on (rows) / Trained on (columns) | P0 | P1 | P2 | P3 | P4 | Avg TL | Generic Model |
|---|---|---|---|---|---|---|---|
| P0 | 98.3% | 91.1% | 88.5% | 93.8% | 97.3% | 92.68% | 93.4% |
| P1 | 91.3% | 94.7% | 87.3% | 92.4% | 90.2% | 90.3% | 89.8% |
| P2 | 93.9% | 85.1% | 95.9% | 88.1% | 89.3% | 89.1% | 94.3% |
| P3 | 91.2% | 92.8% | 95.2% | 97.2% | 96.3% | 93.88% | 93.5% |
| P4 | 89.9% | 87.5% | 92.5% | 96.8% | 97.9% | 91.68% | 92.2% |
| Avg | 92.92% | 90.24% | 91.88% | 93.66% | 94.2% | 92.53% | 92.64% |
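The per-participant averages in Table 3 can be recomputed from the 5×5 accuracy grid. The sketch below assumes that "Avg TL" is the mean over the four models trained on other participants (the self-trained diagonal entry excluded) and that the "Avg" row is the plain mean of each column; this reading is inferred from the numbers and is not stated in the text. The variable names are illustrative.

```python
import numpy as np

participants = ["P0", "P1", "P2", "P3", "P4"]

# Rows: participant the model is tested on; columns: participant it was trained on.
acc = np.array([
    [98.3, 91.1, 88.5, 93.8, 97.3],
    [91.3, 94.7, 87.3, 92.4, 90.2],
    [93.9, 85.1, 95.9, 88.1, 89.3],
    [91.2, 92.8, 95.2, 97.2, 96.3],
    [89.9, 87.5, 92.5, 96.8, 97.9],
])

# Transfer-learning average per tested participant: skip the diagonal,
# where training and test data come from the same participant.
off_diagonal = ~np.eye(len(participants), dtype=bool)
avg_tl = (acc * off_diagonal).sum(axis=1) / off_diagonal.sum(axis=1)

# Average accuracy of each single-participant model over all tested participants.
avg_per_trained = acc.mean(axis=0)

for name, tl in zip(participants, avg_tl):
    print(f"tested on {name}: Avg TL = {tl:.2f}%")
for name, avg in zip(participants, avg_per_trained):
    print(f"trained on {name}: Avg = {avg:.2f}%")
```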

Table 4.

A comparison of EEG classification accuracy with five electrodes and three sub-bands.

| EEG Electrodes | Frequency Bands | Neurons | Accuracy |
|---|---|---|---|
| All (14) | All (5) | 2776 | 96.8% |
| All (14) | Delta, Theta, Alpha | 2534 | 94.1% |
| F7, F3, AF3, F4, and AF4 | All (5) | 1444 | 94.9% |
| F7, F3, AF3, F4, and AF4 | Delta, Theta, Alpha | 1202 | 93.6% |
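The trade-off in Table 4 between network size and accuracy can be made explicit with a few lines of arithmetic. The sketch below uses only the neuron counts and accuracies from the table; the configuration labels and variable names are illustrative.

```python
# (neurons, accuracy in %) for each configuration in Table 4.
configs = {
    "14 electrodes, 5 bands": (2776, 96.8),
    "14 electrodes, 3 bands": (2534, 94.1),
    "5 electrodes, 5 bands": (1444, 94.9),
    "5 electrodes, 3 bands": (1202, 93.6),
}

baseline_neurons, baseline_acc = configs["14 electrodes, 5 bands"]

savings = {}
for name, (neurons, acc) in configs.items():
    # Fraction of neurons saved relative to the full configuration.
    saved = 100.0 * (1.0 - neurons / baseline_neurons)
    savings[name] = saved
    print(f"{name}: {neurons} neurons ({saved:.1f}% fewer), "
          f"accuracy change {acc - baseline_acc:+.1f} points")
```

Under this calculation, the five-electrode configurations use about 48% and 57% fewer neurons than the full network, at a cost of 1.9 and 3.2 accuracy points, respectively.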

Table 5.

Performance evaluation in terms of the four metrics: accuracy, sensitivity, specificity, and False Positive Rate (FPR).

| Mental state | Accuracy | Sensitivity | Specificity | FPR |
|---|---|---|---|---|
| Focused | 96.8% | 98.3% | 99.4% | 0.9% |
| Unfocused | 96.8% | 96.4% | 97.4% | 1.8% |
| Drowsy | 96.8% | 95.7% | 98.2% | 2.2% |

Table 6.

Confusion Matrix.

| | Focused | Unfocused | Drowsy |
|---|---|---|---|
| Focused | | | |
| Unfocused | | | |
| Drowsy | | | |
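For reference, the metrics of Table 5 are conventionally derived from a confusion matrix in a one-vs-rest fashion. The sketch below uses the standard textbook definitions; the counts are hypothetical placeholders (they are not the values of Table 6), the function name is illustrative, and the authors' exact computation may differ.

```python
import numpy as np

def one_vs_rest_metrics(confusion):
    """Per-class metrics from a confusion matrix (rows: true, columns: predicted)."""
    cm = np.asarray(confusion, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp      # true class missed
    fp = cm.sum(axis=0) - tp      # other classes predicted as this class
    tn = total - tp - fn - fp
    return {
        "accuracy": tp.sum() / total,       # overall accuracy
        "sensitivity": tp / (tp + fn),      # true positive rate
        "specificity": tn / (tn + fp),      # true negative rate
        "fpr": fp / (fp + tn),              # false positive rate
    }

labels = ["focused", "unfocused", "drowsy"]
# Hypothetical counts, for illustration only.
example_cm = [
    [95, 3, 2],
    [4, 90, 6],
    [1, 5, 94],
]

metrics = one_vs_rest_metrics(example_cm)
print(f"overall accuracy: {metrics['accuracy']:.3f}")
for i, label in enumerate(labels):
    print(f"{label}: sensitivity={metrics['sensitivity'][i]:.3f}, "
          f"specificity={metrics['specificity'][i]:.3f}, "
          f"FPR={metrics['fpr'][i]:.3f}")
```

With these definitions, the false positive rate of each class is the complement of its specificity.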

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Copyright: This open access article is published under a Creative Commons CC BY 4.0 license, which permits free download, distribution, and reuse, provided that the author and preprint are cited in any reuse.
