1. Introduction and Motivation

When topics related to digitization and technological innovation in industrial systems are discussed, Industry 4.0 is frequently mentioned. Industry 4.0 pertains to the intelligent interconnection of machines and processes in the industrial sector through Cyber-Physical Systems (CPS). CPS is a technology that enables intelligent control by integrating embedded networked systems [1]. The concept of Industry 4.0 is subject to various interpretations. Vogel-Heuser and Hess (2016) examined the primary design principles of Industry 4.0, which include a service-oriented reference architecture, intelligent and self-organizing cyber-physical production systems (CPPS), interoperability between CPPS and humans, and secure data integration across disciplines and the entire life cycle.

Digital Twin (DT) has become increasingly prominent in recent years, being widely discussed in the literature on Industry 4.0 and in conversations within large companies, particularly in the IT and digital sectors. The term "Digital Twin" is evocative and partially self-explanatory, although not entirely accurate. As a result, two outcomes have emerged. Firstly, the concept has gained significant popularity across various application areas, surpassing its initial domains, such as aerospace engineering, robotics, manufacturing, and IT [2]. Secondly, there is a lack of consensus concerning the exact definition of the term, its areas of application, and the minimum requirements to distinguish it from other technologies [3].

Although there is no consensus on the definition of DT, the following definition is comprehensive enough for the manufacturing environment: the Digital Twin consists of a virtual representation of a production system that is able to run on different simulation disciplines and is characterized by the synchronization between the virtual and real system, thanks to sensed data and connected smart devices, mathematical models, and real-time data elaboration [4].

Compared to Grieves' (2005) initial idea [

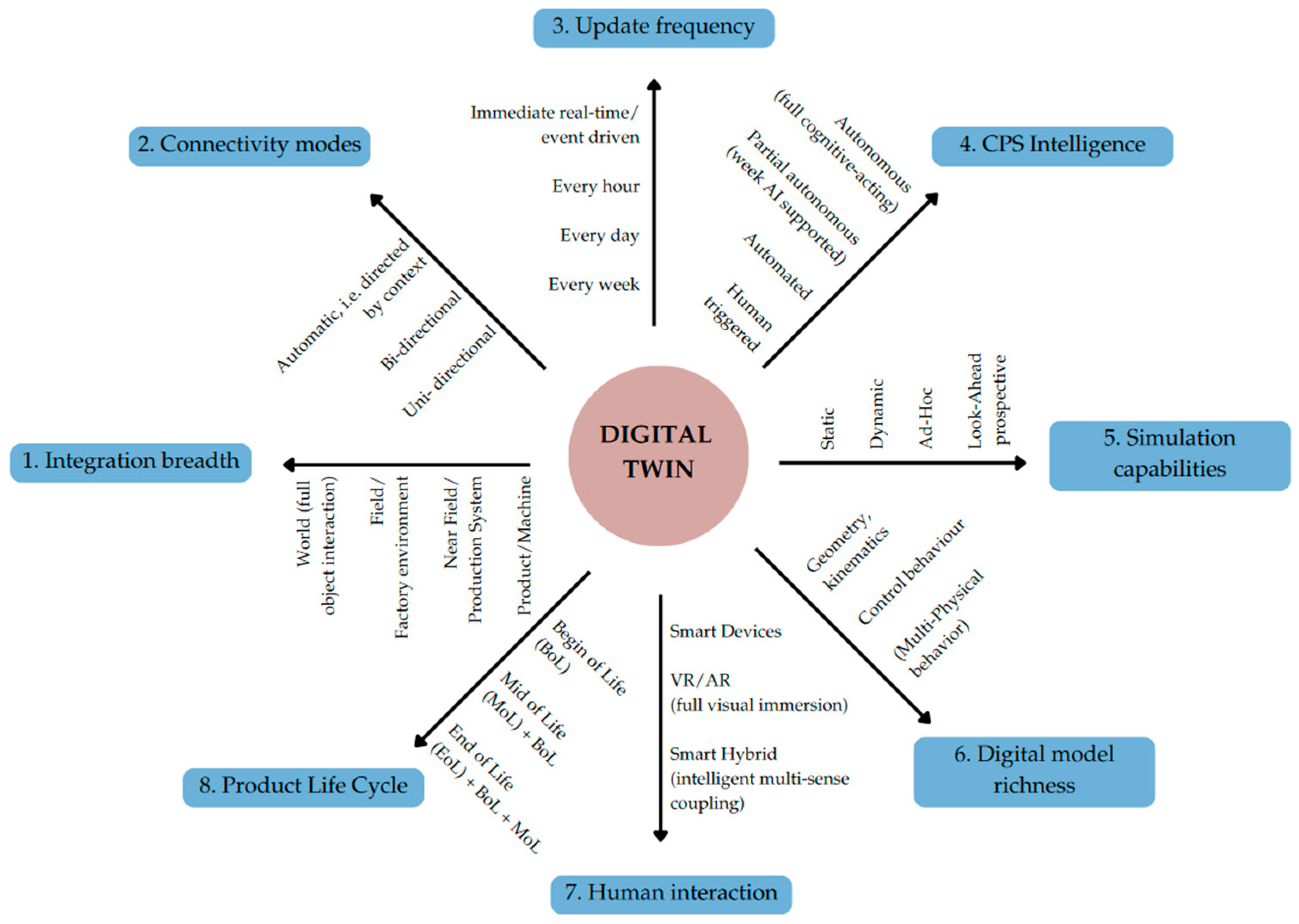

5], it is important to highlight that a fundamental concept remains intact. This concept emphasizes that a DT is not simply a digital representation of a physical system. What sets a digital twin apart from a basic digital model is its ability to engage in a bidirectional exchange of information. It is also possible to identify some of the most common dimensions and peculiar axes that can be used to capture the nature of a DT [

6]: the integration breadth; the connectivity mode, the update frequency, the simulation capabilities, the human interactions, the digital model richness.

When examining the concept of DT in the industrial domain, specifically in manufacturing, several factors must be considered to characterize the work direction accurately. Relevant examples include the scope of the area that the digital twin needs to cover, the time horizon it needs to capture and simulate, the information systems it must interact with to generate additional value, the technology employed to create the digital component of the digital twin, and the reference framework [7,8].

Numerous studies and industrial applications have demonstrated that DTs are useful at various decision-making levels. For instance, in manufacturing processes, they can be employed to improve production schedules, detect quality control problems, and anticipate equipment malfunctions.

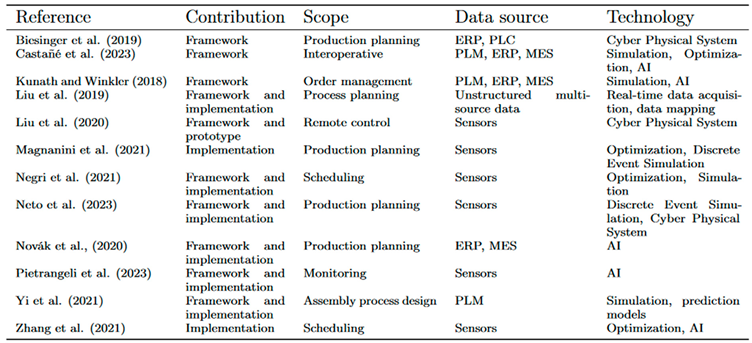

Starting from a strategic point of view, Kunath and Winkler [9] introduced a DT model tailored for manufacturing systems, functioning as decision support for order management within the framework of Cyber-Physical Systems (CPS). Liu et al. [10] pioneered the application of DT in Computer-Aided Process Planning, specifically addressing the evaluation of optimal machining process routes. Complementing this, Yi et al. [11] undertook an extensive case study centering on the intelligent design of a smart assembly process.

Taking a tactical approach, Biesinger et al. [12] developed a CPS-rooted DT for production planning. Novák et al. [13] examined the integration of the DT with production planners that employ artificial intelligence methods for smart production planning. There are also examples of DT applications that facilitate the optimization of short-term production planning [14,15].

Regarding operations, Zhang et al. [16] proposed a tailored DT for dynamic job shop scheduling, providing a responsive solution to the evolving demands of manufacturing environments. Previously, Fang et al. [17] had formulated a DT framework supporting scheduling activities in a job shop scenario. Negri et al. [18] presented a holistic DT framework designed to tackle scheduling challenges in the presence of uncertainty, enhancing adaptability in operational scenarios.

Turning to control and monitoring activities, Zhuang et al. [19] introduced a DT designed to enhance traceability in the manufacturing process of complex products. Beyond traceability, Pietrangeli et al. [20] structured a DT model leveraging Artificial Neural Networks to monitor the behavior of a specific mechanical component. Liu et al. [21] contributed by implementing a DT for the remote control of a Cyber-Physical Production System (CPPS). This diverse set of contributions, summarized in Table 1, highlights the broad applicability of Digital Twins across various phases and facets of industrial operations.

This work aims to provide a framework for the integration of a DT supporting decision-making for production planning in a discrete manufacturing context, working with a specific company in Italy. The scope of the decision-making will be related to operation monitoring and management to make the production planning decisions more informed and resilient in case of delays and/or disruptive events.

Table 1. Contributions on DT in industry.

The paper is structured as follows: Section 2 presents the methodology followed for the study; Section 3 defines the DT objectives and functional requirements; Section 4 presents the peculiarities of the information systems to be worked with and proposes integration requirements for the DT; Section 5 presents the results; Section 6 discusses the main points of the framework; conclusions follow in Section 7.

2. Materials and Methods

The following is a description of the working method used in the drafting and proposal of a framework for a DT, fed by MES data, to support decision-making in production planning for a specific company in northern Italy.

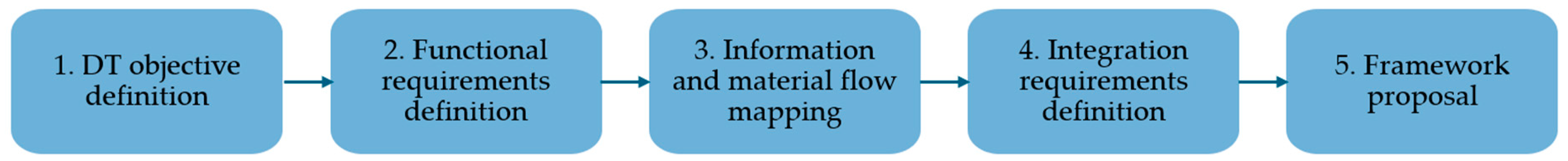

The framework development proposed in this paper is carried out incrementally in five main phases, presented in Figure 1:

DT objective definition

Functional requirements definition

Information and material flow mapping

Integration requirements definition

Framework proposal

These phases do not exhaustively test the technical feasibility of an implementation. However, they provide an initial benchmark against which to compare the steps that follow the project definition. Adopting this approach allowed the development of a solution tailored to the specific business context.

The first phase is the definition of strategic and operational objectives by the company, which expressed the need for decision-making support in production management in order to improve the internal punctuality of the production department and alleviate material accumulation in the inter-operational buffers. This is followed by gathering the technical and functional requirements of the DT. Stark's work [6], which presents an 8-dimensional model of the Digital Twin (Figure 2), is helpful here. Notably, developing a DT does not require an a priori effort toward increasing or improving all dimensions. At this framework proposal stage, it is necessary to identify the critical and most relevant ones and the right trade-offs. The output of this phase is a list of system requirements and the identification of the most suitable software to develop the DT.

The third phase focused on mapping the information and material flows within the company. Data from existing machinery, control systems, sensors, and management software were analyzed. This phase is essential to understand how to seamlessly integrate real data from the production system within the virtual model of the Digital Twin. The mapping of material flows involved an analysis of existing production processes and the technological infrastructure already in place in the company. Once the information flow was mapped, the requirements analysis for integration was conducted, covering both the enterprise information systems and the techniques and technologies already used in the company. The last step is the framework proposal, which is presented in the Results section.

3. DT Objective and Functional Requirements Definition

The first two phases of the work were related to the identification of the objectives of the DT and the definition of the depth of functional requirements.

3.1. DT Objective Definition

To identify the objectives of the DT for the company, it was first necessary to understand why the company opted for such a solution. The company has two kinds of goals: operational and organizational. The operational goals focus on enhancing on-time demand satisfaction. The organizational goals aim to enhance the efficiency and accuracy of updates to processing times, cycle times, and makespans for different codes. The Digital Twin will autonomously acquire some of this information and adapt itself, eliminating the need for explicit efforts from the company.

The DT will serve two different purposes that align with business operational goals. The DT will need to monitor production progress from a predictive process monitoring perspective, alerting appropriately when a batch is expected to be completed late, and it will need to propose rescheduling options should it be decided to take action to avoid such a reported delay. This second function requires optimization through the simulation of what-if scenarios, and it is the core functionality supporting and improving decision-making.

The overall objective of the DT is to facilitate planning activities and to enable comprehensive, sophisticated, and responsive analysis in response to the presented scenarios. An illustration of this analysis is simulation optimization: utilizing an appropriate Digital Twin framework, one could effectively assess rescheduling alternatives in the event of a disruptive occurrence, such as extended machine downtimes, significant defects in previously synchronized assembly lots, or supplier delays in inputs; furthermore, the optimization objective could differ: it may be designated as the aim to minimize inventory buildup or to reduce subsequent delays to the end customer.
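As a minimal sketch of the idea of interchangeable optimization objectives, the following Python fragment ranks a few what-if scenarios under either objective mentioned above. The `Scenario` fields, the objective names, and all numbers are illustrative assumptions; in practice, each scenario's metrics would be produced by a simulation run rather than typed in by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """Outcome of one simulated what-if scenario (hypothetical fields)."""
    name: str
    avg_buffer_units: float   # average inventory in inter-operational buffers
    total_delay_days: float   # summed delay toward end customers


# Each objective maps a scenario to a cost that should be minimized.
OBJECTIVES = {
    "inventory": lambda s: s.avg_buffer_units,
    "customer_delay": lambda s: s.total_delay_days,
}


def best_scenario(scenarios, objective):
    """Return the scenario with the lowest cost under the chosen objective."""
    return min(scenarios, key=OBJECTIVES[objective])


# Illustrative values only, not results from the case study.
scenarios = [
    Scenario("keep current plan", avg_buffer_units=120.0, total_delay_days=9.0),
    Scenario("postpone lot B", avg_buffer_units=80.0, total_delay_days=12.0),
    Scenario("reroute to spare machine", avg_buffer_units=150.0, total_delay_days=4.0),
]

print(best_scenario(scenarios, "inventory").name)
print(best_scenario(scenarios, "customer_delay").name)
```

The same set of scenarios yields a different recommendation depending on the objective selected, which is why the objective should be an explicit input to the analysis.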

3.2. Functional Requirements Definition

After conducting a software/tool selection process, a Discrete Event Simulation (DES) model has been chosen as the virtual counterpart of the Digital Twin. The DES model will be implemented using open-source software to enhance integration across various technologies and tools already present in the company.

The specific characteristics of the environment under analysis determine the selection of a discrete-event simulator. In this case, the company operates a large number of machines and processes batched orders, making a discrete-event simulation model the most suitable approach to describe the system accurately. It is important to underline that the data fed into the DES model will be prepared and preprocessed using process mining techniques. The decision to opt for an open-source tool is motivated by the necessity to integrate other existing functions within the company seamlessly and to avoid the substantial initial costs associated with the project, reflecting a cautious approach during these early stages.
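To illustrate what a discrete-event model does, the following is a minimal sketch of an event-driven simulation of batches queuing for a single machine, written with the Python standard library only. It is not the model adopted in this work: the single machine, the FIFO rule, the deterministic processing times, and the function name `simulate_single_machine` are all simplifying assumptions.

```python
import heapq
from collections import deque


def simulate_single_machine(batches):
    """Simulate FIFO processing of batches on one machine.

    batches: list of (batch_id, arrival_time, processing_time).
    Returns {batch_id: completion_time}.
    """
    # Future event list ordered by (time, sequence number).
    events = []
    processing = {}
    for seq, (batch_id, arrival, duration) in enumerate(batches):
        processing[batch_id] = duration
        heapq.heappush(events, (arrival, seq, "arrival", batch_id))
    seq = len(batches)

    queue = deque()   # batches waiting for the machine
    busy = False
    completion = {}

    while events:
        now, _, kind, batch_id = heapq.heappop(events)
        if kind == "arrival":
            queue.append(batch_id)
        else:  # "departure": the machine finishes the batch
            completion[batch_id] = now
            busy = False
        # Start the next batch whenever the machine is idle and work is waiting.
        if not busy and queue:
            nxt = queue.popleft()
            busy = True
            heapq.heappush(events, (now + processing[nxt], seq, "departure", nxt))
            seq += 1
    return completion


result = simulate_single_machine([("A", 0.0, 5.0), ("B", 1.0, 3.0), ("C", 10.0, 2.0)])
print(result)
```

The simulated clock jumps from one event to the next, so the state of the system only changes at arrivals and departures; this is what makes the approach suitable for batched orders moving through many machines.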

With reference to the dimensions proposed by Stark [6] (Figure 2), the following hold for the DT:

Integration breadth: the model has to be integrated into the whole production system.

Connectivity mode: the interconnection between the real and virtual worlds must be bidirectional; however, during the initial usage phase, system decision-makers will scrutinize and filter any insights gained from simulation and forecasting. The goal for this dimension is to shift to a more automated mode of connection gradually.

Update frequency: while consistent with the Industry 4.0 philosophy, a completely real-time data flow is currently impractical and would contain too much detail for effective planning. The frequency of updates is primarily determined by the time required to extract data from the MES system and have them preprocessed and cleaned to be fed into the DES model. It is prudent to monitor data updates and analyses regarding order progression daily and to implement corrective measures judiciously over time to avoid excessive interventions or re-scheduling activities, which can be detrimental if too frequent.

Cyber-Physical System intelligence: in the first phase of the work, the interconnections from the virtual world to the real one will be partially automated and occasionally human-triggered.

Simulation capabilities: Discrete Event Simulation is a specialized method for modeling stochastic, dynamic, and discretely evolving systems. This technique facilitates dynamic analysis of production progress and enables timely deviation assessment, thereby enhancing the ability to identify underlying causes. Besides assessing deviations, it will simulate diverse order distributions to facilitate more thorough optimization regarding business objectives and overall service levels.

Digital model richness: the digital model will be sufficiently expressive of the intricate relationships among the batches, the orders, and the availability of the machines; its level of complexity will depend on the quality of the data that can be extracted from the company information system.

Human interaction: this dimension will be the least developed one, given that only highly trained decision-makers will interact with the DT, and there will not be a deeply evolved visualization for the digital model.

Product Life Cycle: the represented phase of the products will be the mid-life.

4. Information Flow Mapping and Integration Requirements Definition

The third and fourth phases were dedicated to analyzing the integration requirements for the “as is” company scenario.

4.1. Information and Material Flow Mapping

Regarding the mapping of information and material flows, it is important to briefly describe the reference system. The company under consideration is a manufacturing company that produces final units in batches and has a mixed market response system: it produces both to order and based on the forecasts of its distributors. There are priority treatments for certain types of orders, but there is no explicit rule of customer differentiation since production campaigns can last from a few days to several months. The production logic is a job shop with hundreds of machines divided into departments within the production floor. Given the breadth of the range, the extremely volatile production mix, and the time extension of production campaigns, not only can it be challenging to plan monthly production or schedule weekly and daily production, but also the data that can be acquired from information systems can be very fragmented and complex to aggregate.

The way to track material flows in the company was identified by analyzing historical data from the company's main information systems, using data analysis techniques, data mining and process mining.

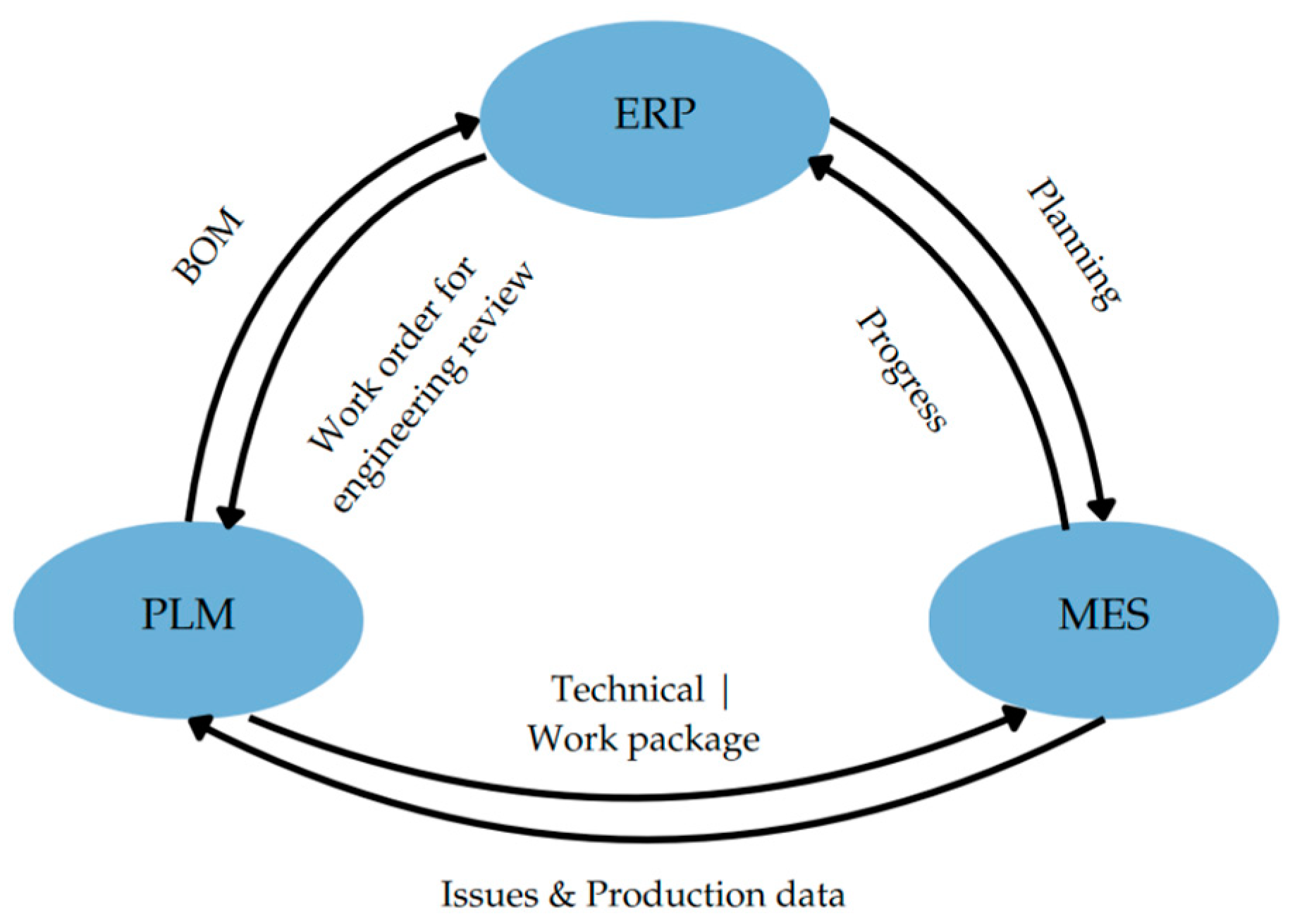

The reference information systems in a manufacturing company are Product Lifecycle Management (PLM), Enterprise Resource Planning (ERP), and the aforementioned Manufacturing Execution System (MES).

These three systems are independent but intrinsically interconnected. We can define them as follows: PLM is a strategic methodology for managing data, processes, documents, drawings, and resources throughout the life cycle of products and services, from conception through development and production to recall; ERP consolidates all business processes, such as sales, purchasing, inventory management, finance, and accounting, into a unified system to improve managerial support; MES is a system designed to manage and control a company's production processes: order dispatch, quantity and time optimization, inventory warehousing, and direct machinery connectivity. These three systems integrate different information sources: PLM integrates information from different sources throughout the life of a product, ERP integrates different business functions, and MES integrates heterogeneous information that may come from the production unit.

Specifically, the MES collects highly accurate and timely information on machine utilization, resource utilization, and batch progress: recorded times can be close to real time thanks to the direct connection to the machines via PLC and SCADA. However, it is important to point out that not all machines are automatically connected to the MES: there are many manual pouring machines in the company, so the quality, veracity, and synchronization of the data depend to a large extent on the accuracy of the operator.

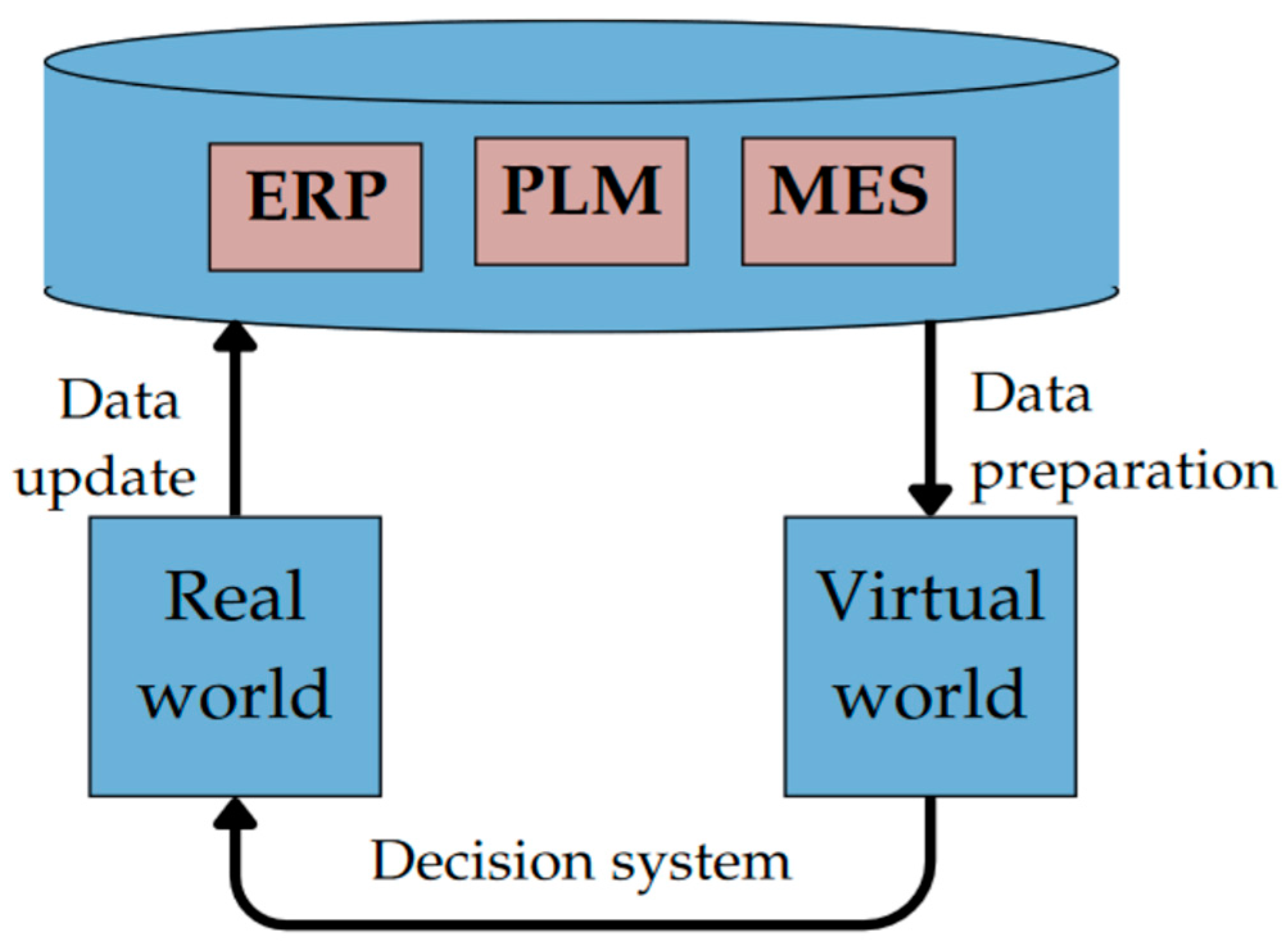

The points of contact among these information systems, which, as explained, already consolidate data, are depicted in Figure 3. It is evident that these systems need to be frequently updated and checked, and that some data are present, albeit for different purposes, in more than one of the information systems. In general, PLM contains the most time-stable information, while MES contains the most rapidly changing information.

Looking at the relationship between PLM and ERP, the PLM provides the ERP with information related to product master data, the bills of material, and technical specifications. From the ERP, on the other hand, it is possible to identify revision orders to the PLM if products are discontinued, components are discontinued, suppliers are changed, or products undergo reengineering changes.

Finally, between ERP and MES, mainly data related to production and planning are exchanged: from MES, the progress of specifications is derived. ERP, on the other hand, supports the drafting of production planning in an aggregate sense.

The communication among these three elements is frequently not seamless and necessitates considerable effort to acquire, extract, cleanse, and transfer data for usability. This is often performed during periods when the plant operates at its lowest productivity, such as overnight.

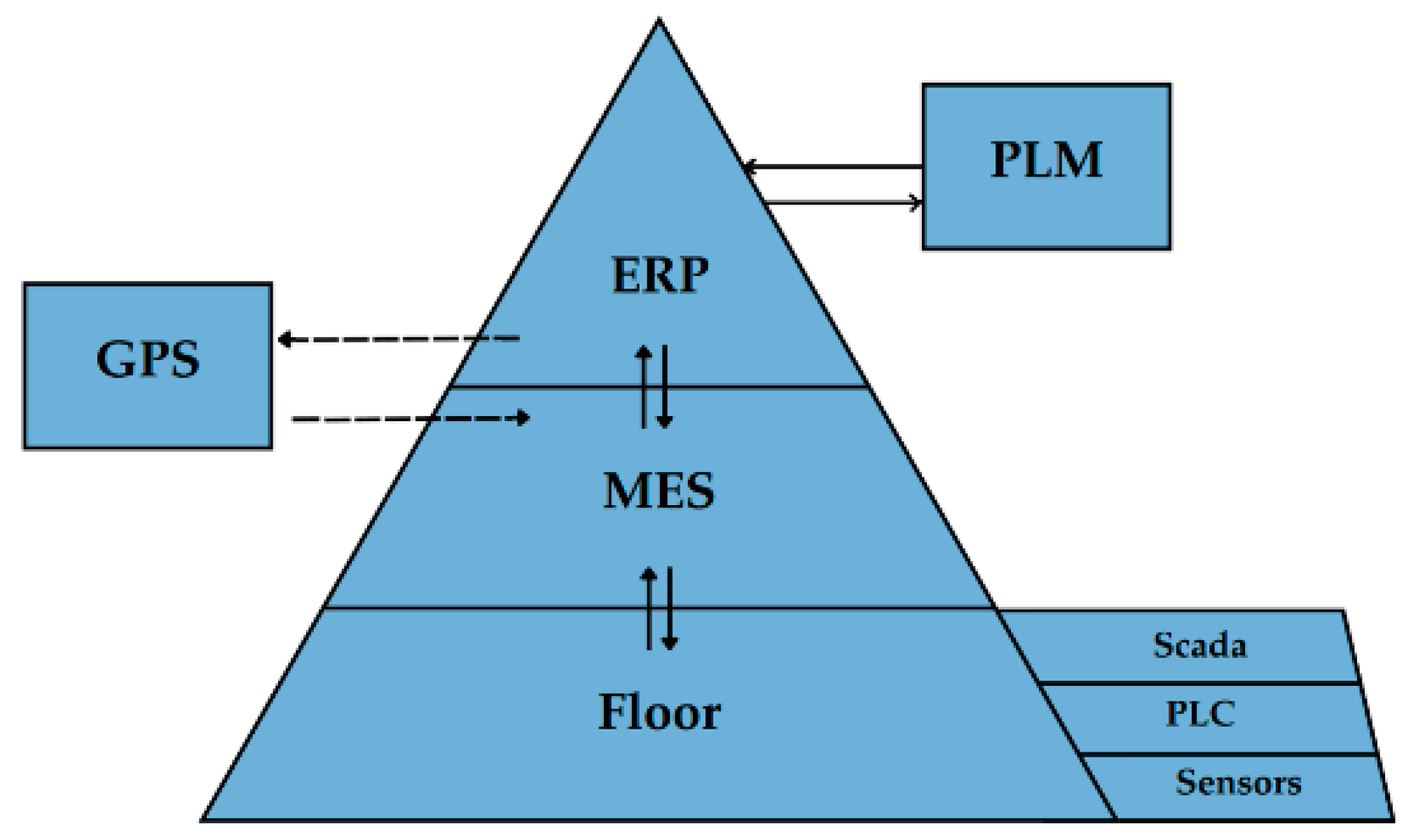

These are not the only information systems that can be found in the enterprise. The Pyramid of Industrial Automation is shown in Figure 4, defined as “the hierarchical representation of the different levels of automation and control available in the industrial manufacturing process” by ANSI/ISA-95.

Integrating the Pyramid of Automation into the relevant business context makes it possible to give a more precise interpretation of the relationships between the various information systems, emphasizing their hierarchical dependencies.

With respect to the previous information, it is possible to identify ERP as the top of this hierarchical pyramid. Consequently, all other information systems depend on it. It is supported, however, as a data source by PLM.

The MES operates at the operational level at the second level of the hierarchy. Interestingly, the link between ERP and MES is mediated by the Global Planning System, a business decision-support information system that, through built-in features, can support decision-makers in the planning arena. SCADA, PLCs, and sensors represent the supervisory, control, and field-level devices beneath the MES.

Although the ANSI/ISA-95 standard was developed specifically to guide the integration of the various enterprise information systems in response to an industry challenge, the complex problem of managing data flows and data-passing structures remains.

4.2. Integration Requirements Definition

The objectives of integrating the DT's virtual counterpart with its real reference system must be clear. In this case, evaluating the business information systems and the other techniques used to link these systems together was important.

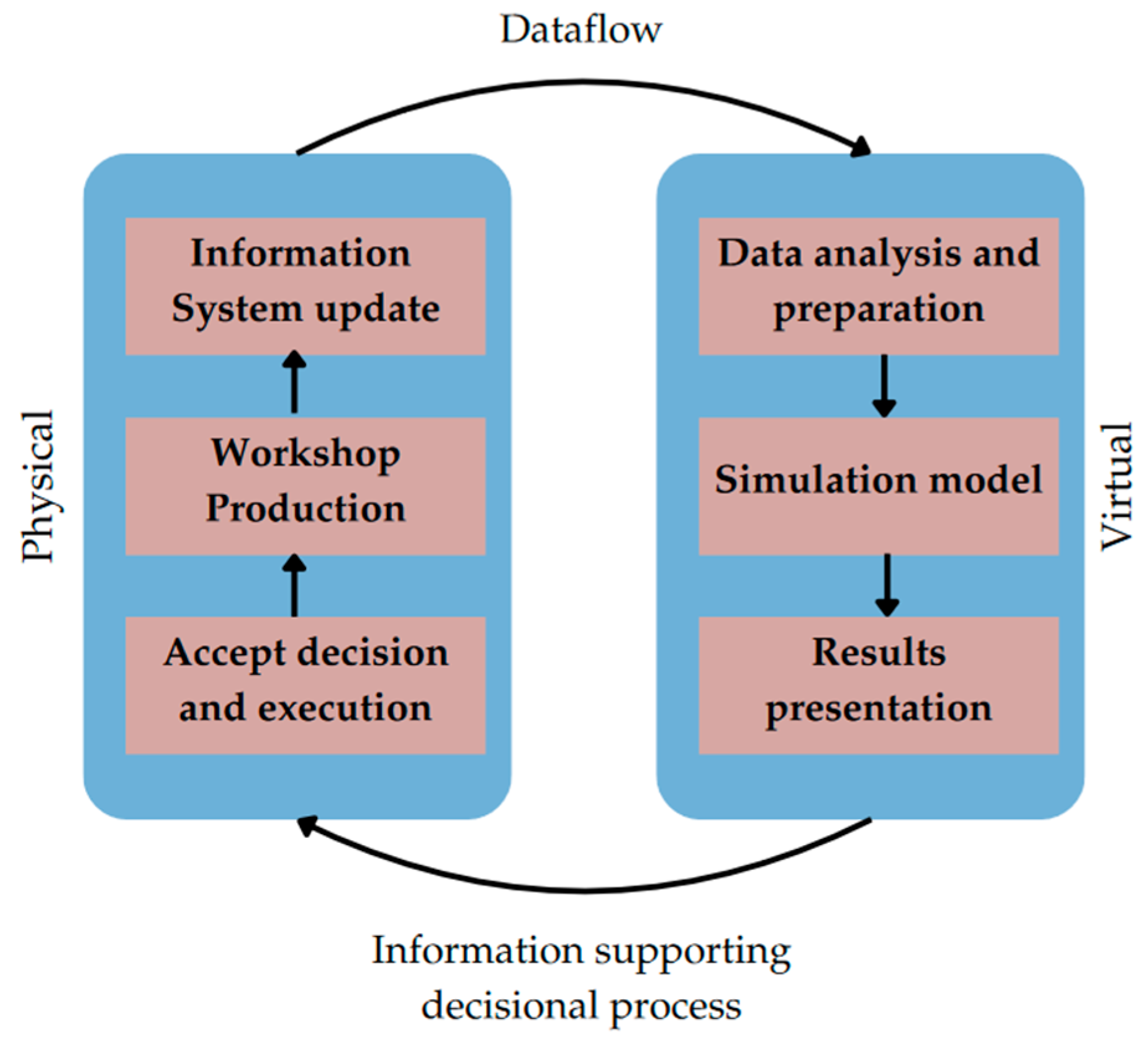

The functional objective of the DT's two-way linkage is shown in Figure 5. The data exchange process must be appropriately structured to allow information systems to be accessed from the real world. Through data analysis, data mining, and process mining, the data must then be adequately prepared for use within the DES model.

The results of the DES model must provide two categories of data: monitoring the progress of production and providing predictive process monitoring to signal when and how much actual production will deviate from planned production by presenting appropriate alerts and flags. From there, if the decision maker triggers the command, the simulation system must be able to provide proposals for managing the delay, which may be rescheduling or adjustments in production planning for a larger period.
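The alerting logic described above can be sketched as a comparison between planned due dates and predicted completion dates. The function name `lateness_alerts`, the `tolerance_days` parameter, and the batch identifiers are hypothetical; the predicted dates are assumed to come from a simulation run.

```python
from datetime import date


def lateness_alerts(planned_due, predicted_completion, tolerance_days=0):
    """Return {batch_id: days_late} for batches predicted to finish after their due date."""
    alerts = {}
    for batch_id, due in planned_due.items():
        predicted = predicted_completion.get(batch_id)
        if predicted is None:
            continue  # no prediction available for this batch
        days_late = (predicted - due).days
        if days_late > tolerance_days:
            alerts[batch_id] = days_late
    return alerts


# Illustrative dates only.
planned = {"B-101": date(2024, 3, 10), "B-102": date(2024, 3, 12)}
predicted = {"B-101": date(2024, 3, 9), "B-102": date(2024, 3, 15)}

alerts = lateness_alerts(planned, predicted)
print(alerts)
```

Reporting the size of the deviation, and not only a flag, gives the decision-maker the "when and how much" information needed before triggering a what-if analysis.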

Should the proposed options be accepted, their effects would return to the physical world with an impact on the production system. Should a decision be made to accept the delay, the decision-maker would still have chosen with better information than before and would be better placed to assess the costs and benefits of the choices to be made.

The exchange cycle would then begin again, with data collected from the physical world passed on to the virtual world and vice versa.

The DT unit, therefore, can be seen as a structure with autonomous functionality (e.g., the DES model, the possibility to provide what-if analyses), but not independent of its own reference system, nor other information systems, nor even of data filtering, cleaning, and preprocessing techniques, which should remain external to the simulation model itself.

Given that the data structure and the simulation scenarios obtained through the DES model will rely on the historical data obtained from the MES, and given the difficulties in handling such a large volume of data, data analysis techniques such as process mining are required to appropriately filter, prepare, and preprocess the data that will be fed into the DES model.
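A minimal sketch of this kind of preprocessing is given below, assuming a simplified event log in which each record carries a batch, a machine, an event type (`start` or `end`), and an ISO timestamp. The record layout and the function name `operation_durations` are assumptions for illustration, not the schema of the company's MES.

```python
from datetime import datetime


def operation_durations(records):
    """Pair start/end events per (batch, machine) and return durations in minutes.

    Operations with a missing start or end, or with a non-positive duration,
    are discarded as unusable for simulation input.
    """
    starts, ends = {}, {}
    for rec in records:
        key = (rec["batch"], rec["machine"])
        ts = datetime.fromisoformat(rec["timestamp"])
        if rec["event"] == "start":
            starts[key] = ts
        elif rec["event"] == "end":
            ends[key] = ts

    durations = {}
    for key, start in starts.items():
        end = ends.get(key)
        if end is None:
            continue  # operation never closed in the log
        minutes = (end - start).total_seconds() / 60
        if minutes > 0:
            durations[key] = minutes
    return durations


# Illustrative log: one clean operation, one unfinished, one with inverted timestamps.
log = [
    {"batch": "B1", "machine": "M1", "event": "start", "timestamp": "2024-03-01T08:00:00"},
    {"batch": "B1", "machine": "M1", "event": "end", "timestamp": "2024-03-01T08:45:00"},
    {"batch": "B2", "machine": "M1", "event": "start", "timestamp": "2024-03-01T09:00:00"},
    {"batch": "B3", "machine": "M2", "event": "start", "timestamp": "2024-03-01T10:30:00"},
    {"batch": "B3", "machine": "M2", "event": "end", "timestamp": "2024-03-01T10:00:00"},
]

durations = operation_durations(log)
print(durations)
```

Keeping this step outside the simulation model means the filtering rules can be tightened or relaxed without touching the DES logic.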

It is important to note that historical data from the MES will also be used to have benchmark metrics regarding processing times, cycle times, delivery times, and downtime. The simulation model will then be validated on the appropriately processed historical data, and the real situation will be progressively simulated with the updated data that the MES provides.
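One simple way to express such a validation, offered here only as a sketch, is to compare each simulated metric with its historical benchmark and accept the model when every relative error stays within a tolerance. The metric names, the 10% default tolerance, and the numbers are assumptions and not values from the case study.

```python
def relative_errors(historical, simulated):
    """Relative error |sim - hist| / hist for each metric present in both dicts."""
    return {
        name: abs(simulated[name] - hist) / hist
        for name, hist in historical.items()
        if name in simulated and hist != 0
    }


def is_validated(historical, simulated, tolerance=0.10):
    """True when at least one metric is comparable and all errors are within tolerance."""
    errors = relative_errors(historical, simulated)
    return bool(errors) and all(err <= tolerance for err in errors.values())


# Illustrative benchmark and simulation outputs.
historical = {"cycle_time_min": 40.0, "delivery_time_days": 10.0, "downtime_h": 5.0}
simulated = {"cycle_time_min": 42.0, "delivery_time_days": 10.5, "downtime_h": 6.0}

errors = relative_errors(historical, simulated)
print(errors)
print(is_validated(historical, simulated))
```

In this example the downtime estimate deviates by about 20%, so the model would not pass a 10% tolerance and the downtime logic would need to be revisited before the model is used for monitoring.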

The connection between virtual and real counterparts is thus not only intricate because of the number of information systems involved and the large amount of heterogeneous data that must be managed, but it is also complex in that data from the same sources and recorded at different times can be used for distinct purposes, increasing the number of logical links between the systems under consideration.

This again highlights that the DT cannot replace any enterprise information system. It also highlights that considering how the flow of enterprise data can be adapted to integrate a DT can have positive effects on the entire enterprise IT management, as it requires attention to up-to-date dataflow management and explicit efforts to aggregate and consolidate the various data sources (Figure 6).

5. Results

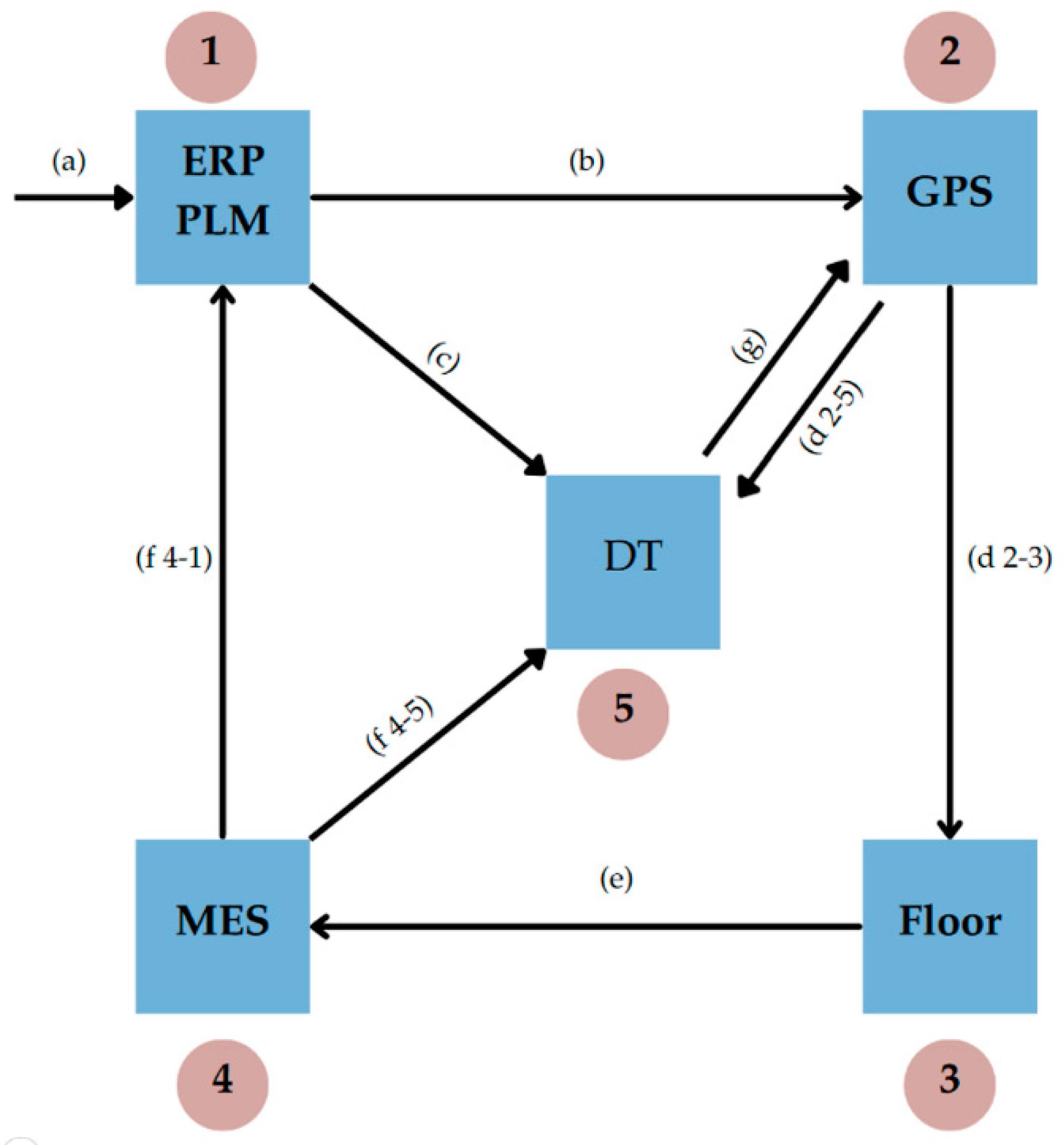

The results shown summarize the proposed DT integration framework (Figure 7).

Nodes (1) and (4) represent the already described information systems: PLM, ERP, and MES. It is shown that MES is still directly connected with PLM and ERP, while ERP transmits data through other nodes.

Node (2) represents the GPS (Global Planning System). It is an active decision-making hub: the production plan decided through (and with the help of) GPS is transmitted to the floor level (3), whose advances, both automatic and manual, are recorded on the MES (4). Passing these advances to the ERP (1) closes the external cycle of the system.

Integration with the DT (5), whose virtual counterpart will involve a DES model, requires the following interactions, described on the arcs:

The arc (c) represents the structural information needed for the boundary conditions for any type of simulator: the incoming orders, inventory levels, product master data, production cycles, bill of materials, suppliers for external processing, customer master data, assembly cycles, machinery involved in the department, and machinery specifications. These are the starting data to describe and contextualize the reference environment and begin conceptually modeling the DES model to implement.

The arc (d 2-5) represents the production planning processed by the decision-makers with the support of GPS. Using this benchmark, the DT will monitor production and analyze deviations.

As anticipated, the arc (f 4-5) is a single link that carries two logically different data flows: historical data and update data.

The historical data are those related to previous productions and will be processed with appropriate data mining systems to be able to derive the necessary information for subsequent analysis, such as cycle times, through times, punctuality by order or product category, queue waits, and the impact of rework on the system. These final data will be the basis for factory times and will be kept up to date, so that truthful data is always available to measure the process.

Update data, on the other hand, are those collected by the system at every reasonable takt time, and with which the DT simulation system will perform monitoring and predictive process monitoring.

While the historical data will require periodic, schedulable updating, the latency of the update-data link must be reduced as much as possible, within the limits of data extraction and simulation time, so that timely action can be taken should the need for decision-making arise.

The arc (g) represents the information gained to support the decision-maker: the simulation system thus structured will allow an immediate and in-depth presentation of production progress, providing monitoring and predictive monitoring, signaling in a timely manner if deviations from the initial formulated plan occur. Upon triggers from decision-makers, the DT simulation system will propose options for dealing with delays or other disruptive events that could cause deviations from the plan (e.g., delivery delays, extraordinary machinery maintenance, sudden increase in defects).
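For the update data carried on arc (f 4-5), the shortest sustainable refresh interval is bounded from below by the time needed to extract, preprocess, and simulate. The following sketch makes that bound explicit; the function name, the `safety_factor` parameter, and the durations are illustrative assumptions.

```python
def min_update_interval(extraction_min, preprocessing_min, simulation_min, safety_factor=1.0):
    """Shortest sustainable interval (minutes) between two update-data refreshes."""
    if min(extraction_min, preprocessing_min, simulation_min) < 0:
        raise ValueError("durations must be non-negative")
    if safety_factor < 1.0:
        raise ValueError("safety_factor must be at least 1.0")
    return safety_factor * (extraction_min + preprocessing_min + simulation_min)


# Illustrative durations: 30 min extraction, 15 min preprocessing, 5 min simulation.
interval = min_update_interval(30, 15, 5, safety_factor=1.5)
print(interval)
```

Any refresh schedule shorter than this bound would start a new cycle before the previous one has delivered its results to the decision-maker.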

Thus, this integrated system is the implementation framework that will guide the following steps: the DT, in continuous connection with the lower levels of the automation pyramid, will prove to be supportive to top decision-makers.

6. Discussion

The presentation of this framework raises two themes inherent to DT: the use of data and the potential for industry solutions.

Regarding the use of data, it should be noted that the historical data from the MES, properly cleaned and preprocessed, will also provide the reference metrics to validate the simulation model (processing times, through times, cycle times); the simulation model of the DT will then have to progressively simulate the real situation with the updated data that the MES provides.

The link between DT and big data is addressed within the Industry 4.0 literature, and several contributions show the possible positive synergies [22,23]. However, it is important to note that big data management techniques, such as data mining and process mining, are not a sufficient shield against possible human error. Indeed, operators manually load MES data for those steps that are difficult to automate, too sensitive to be automated, or uneconomical to automate.

A particular adaptation of the real system to ensure data quality and a continuous dataflow is necessary due to challenges that cannot be resolved through process mining alone. Relying only on historical data as the reference for the scenario analysis obtained through the DES model is inherently fragile, and certain types of data corruption or lack thereof can make the entire process unreliable. Therefore, it is crucial to establish an unambiguous standard for the quality of the required data and the collection method.
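An unambiguous data standard can be expressed as a small set of explicit rules that every record must satisfy before it is used. The sketch below assumes a simplified record with start and end times in minutes from the beginning of the shift and a `source` field distinguishing automatic from manual entries; the field names and rules are hypothetical examples, not the company's standard.

```python
REQUIRED_FIELDS = ("batch", "machine", "start", "end", "source")


def quality_issues(record):
    """Return a list of rule violations for one record (empty list = acceptable)."""
    issues = [f"missing {f}" for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
    if issues:
        return issues  # further checks need all fields to be present
    if record["end"] <= record["start"]:
        issues.append("end not after start")
    if record["source"] not in ("automatic", "manual"):
        issues.append("unknown source")
    return issues


# Illustrative records: one clean, one with inverted times, one with a blank machine.
records = [
    {"batch": "B1", "machine": "M1", "start": 0, "end": 30, "source": "automatic"},
    {"batch": "B2", "machine": "M2", "start": 50, "end": 40, "source": "manual"},
    {"batch": "B3", "machine": "", "start": 10, "end": 20, "source": "manual"},
]

report = {rec["batch"]: quality_issues(rec) for rec in records}
print(report)
```

Tracking violations per source would also show whether manually loaded records are the main origin of unusable data, which in turn indicates where adapting the real system is most worthwhile.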

As for industry application, it is well known that in human-managed decision-making, the quality of the solution identified depends on the experience and information of the person making the decision. Employees with greater experience may more readily anticipate the consequences of their actions than those with less experience; however, regardless of the experience level, the accuracy of independently calculated estimates or probabilistic predictions of events remains inadequate [24].

This is compounded by the fact that disruptive events, such as extraordinary maintenance and significant changes in order mix and quantity, make it increasingly challenging to organize demand management in a defined and unsophisticated way.

In addition, a certain lack of transparency in the management of orders (from their receipt to their fulfillment) is a problem that all systems, not just manufacturing ones, face; especially in small and medium-sized enterprises, data are often not sufficiently digitized, integrated, coordinated, or updated.

To manage demand volatility and system dynamism, many companies are starting to invest in optimization systems. However, problems are often evaluated locally rather than globally, which misleads decision-makers instead of helping them and leads them to prefer locally optimal solutions over globally optimal solutions that better fit the overall business goals [9].

Although an effort toward digitization to integrate a DT in the company certainly has risk factors and downsides (technologies present in the company that are not yet mature enough, employees not ready for the technology transition, initial costs and system maintenance costs), it also represents an explicit commitment to improving the company's internal data management and updating system, which is one of the challenges of digitalization [25].

The benefits of the DT stem from its ability to enhance the stability of planning performance amidst unforeseen circumstances: as mentioned earlier, there is a need for decision support systems that are more transparent, more traceable, and more reliable, and that can propose solutions with sustainable impacts on the entire evaluated system, not just on the production line or a specific machine.

7. Conclusions

This study explored the first steps required for the implementation and integration of a Digital Twin (DT) in a discrete manufacturing context, with a focus on synergy with existing information systems, such as Manufacturing Execution System (MES) and Enterprise Resource Planning (ERP).

The importance of a well-defined integration framework that supports data flow synchronization and facilitates monitoring and control within the manufacturing department was highlighted.

Data management proves crucial to the success of DT implementation, as the quality and reliability of information directly affect the reliability of monitoring, simulations, and what-if analyses, and thus the ability to make informed decisions. Integrating existing enterprise information systems is critical to ensure operational cohesion and continuity of information, enabling the DT to support decision-making transparently and effectively. Thanks to its virtual representation of the real reference system and its bidirectional interaction with it, which approaches real time as closely as possible, the DT can contribute to a more timely and better-informed management of any warning signals that are identified.

In the proposed case scenario, the reality of complex production requires an explicit effort to create data sharing, management, and processing structures. Without these, it is impossible to structure a DT with a Discrete Event Simulator model as the core of the virtual part.

The main limitation of this work lies in the fact that it is based on a particular case study in a discrete manufacturing context, which may limit the transferability of the results to other companies or industries. In addition, the work is still in the early stages of development; therefore, the conclusions may only partially reflect the complexities and challenges that arise during a full DT implementation.

To further develop the research, it is critical to first focus on structuring the simulation system while creating, from the outset, the infrastructure for integration with business information systems, especially the link to historical MES data, which must be properly cleaned and preprocessed.

Author Contributions

Conceptualization, L.M. and S.Z.; methodology, F.I., S.Z. and L.M.; formal analysis, L.M.; investigation, L.M.; resources, S.Z., F.I. and L.M.; data curation, L.M.; writing—original draft preparation, L.M.; writing—review and editing, S.Z., F.I. and L.M.; visualization, L.M.; supervision, S.Z.; project administration, S.Z.; funding acquisition, S.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

This project has been developed in collaboration with Fabbrica d'Armi Pietro Beretta S.p.A., Gardone V/T, Brescia, Italy.

References

- Zhong, Ray Y., Xun Xu, Eberhard Klotz, and Stephen T. Newman. 2017. “Intelligent Manufacturing in the Context of Industry 4.0: A Review.” Engineering 3 (5): 616–30. [CrossRef]

- Botín-Sanabria, Diego M., Adriana-Simona Mihaita, Rodrigo E. Peimbert-García, Mauricio A. Ramírez-Moreno, Ricardo A. Ramírez-Mendoza, and Jorge de J. Lozoya-Santos. 2022. “Digital Twin Technology Challenges and Applications: A Comprehensive Review.” Remote Sensing 14 (6): 1335. [CrossRef]

- Lanzini, Michela, Nicola Adami, Sergio Benini, Ivan Ferretti, Gianbattista Schieppati, Calogero Spoto, and Simone Zanoni. 2023. “Implementation and Integration of a Digital Twin for Production Planning in Manufacturing.” In Proceedings of the 35th European Modeling & Simulation Symposium, EMSS. CAL-TEK srl. [CrossRef]

- Negri, Elisa, Luca Fumagalli, and Marco Macchi. 2017. “A Review of the Roles of Digital Twin in CPS-Based Production Systems.” Procedia Manufacturing 11: 939–48. [CrossRef]

- Grieves, Michael W. 2005. “Product Lifecycle Management: The New Paradigm for Enterprises.” International Journal of Product Development 2 (1/2): 71. [CrossRef]

- Stark, Rainer, Carina Fresemann, and Kai Lindow. 2019. “Development and Operation of Digital Twins for Technical Systems and Services.” CIRP Annals 68 (1): 129–32. [CrossRef]

- Salini, S., and B. Persis Urbana Ivy. 2023. “Digital Twin and Artificial Intelligence in Industries.” In Digital Twin for Smart Manufacturing, 35–58. Elsevier. [CrossRef]

- Galli, Erica, Virginia Fani, Romeo Bandinelli, Sylvain Lacroix, Julien le Duigou, Benoit Eynard, and Xavier Godart. 2023. “Literature Review and Comparison of Digital Twin Frameworks in Manufacturing.” In ECMS 2023 Proceedings, edited by Enrico Vicario, Romeo Bandinelli, Virginia Fani, and Michele Mastroianni, 428–34. ECMS. [CrossRef]

- Kunath, Martin, and Herwig Winkler. 2018. “Integrating the Digital Twin of the Manufacturing System into a Decision Support System for Improving the Order Management Process.” Procedia CIRP 72: 225–31. [CrossRef]

- Liu, Jinfeng, Honggen Zhou, Xiaojun Liu, Guizhong Tian, Mingfang Wu, Liping Cao, and Wei Wang. 2019. “Dynamic Evaluation Method of Machining Process Planning Based on Digital Twin.” IEEE Access 7: 19312–23. [CrossRef]

- Yi, Yang, Yuehui Yan, Xiaojun Liu, Zhonghua Ni, Jindan Feng, and Jinshan Liu. 2021. “Digital Twin-Based Smart Assembly Process Design and Application Framework for Complex Products and Its Case Study.” Journal of Manufacturing Systems 58 (January): 94–107. [CrossRef]

- Biesinger, Florian, Davis Meike, Benedikt Kraß, and Michael Weyrich. 2019. “A Digital Twin for Production Planning Based on Cyber-Physical Systems: A Case Study for a Cyber-Physical System-Based Creation of a Digital Twin.” Procedia CIRP 79: 355–60. [CrossRef]

- Neto, Anis Assad, Elias Ribeiro da Silva, Fernando Deschamps, Laercio Alves do Nascimento Junior, and Edson Pinheiro de Lima. 2023. “Modeling Production Disorder: Procedures for Digital Twins of Flexibility-Driven Manufacturing Systems.” International Journal of Production Economics 260 (June): 108846. [CrossRef]

- Novák, Petr, Jiří Vyskočil, and Bernhard Wally. 2020. “The Digital Twin as a Core Component for Industry 4.0 Smart Production Planning.” IFAC-PapersOnLine 53 (2): 10803–9. [CrossRef]

- Magnanini, Maria Chiara, Oleksandr Melnychuk, Anteneh Yemane, Hakan Strandberg, Itziar Ricondo, Giovanni Borzi, and Marcello Colledani. 2021. “A Digital Twin-Based Approach for Multi-Objective Optimization of Short-Term Production Planning.” IFAC-PapersOnLine 54 (1): 140–45. [CrossRef]

- Zhang, Meng, Fei Tao, and A.Y.C. Nee. 2021. “Digital Twin Enhanced Dynamic Job-Shop Scheduling.” Journal of Manufacturing Systems 58 (January): 146–56. [CrossRef]

- Fang, Weiguang, Hao Zhang, Weiwei Qian, Yu Guo, Shaoxun Li, Zeqing Liu, Chenning Liu, and Dongpao Hong. 2023. “An Adaptive Job Shop Scheduling Mechanism for Disturbances by Running Reinforcement Learning in Digital Twin Environment.” Journal of Computing and Information Science in Engineering 23 (5). [CrossRef]

- Negri, Elisa, Vibhor Pandhare, Laura Cattaneo, Jaskaran Singh, Marco Macchi, and Jay Lee. 2021. “Field-Synchronized Digital Twin Framework for Production Scheduling with Uncertainty.” Journal of Intelligent Manufacturing 32 (4): 1207–28. [CrossRef]

- Zhuang, Cunbo, Tian Miao, Jianhua Liu, and Hui Xiong. 2021. “The Connotation of Digital Twin, and the Construction and Application Method of Shop-Floor Digital Twin.” Robotics and Computer-Integrated Manufacturing 68 (April): 102075. [CrossRef]

- Pietrangeli, Ilaria, Giovanni Mazzuto, Filippo Emanuele Ciarapica, and Maurizio Bevilacqua. 2023. “Artificial Neural Networks Approach for Digital Twin Modelling of an Ejector.” In Proceedings of the 35th European Modeling & Simulation Symposium, EMSS. CAL-TEK srl. [CrossRef]

- Liu, Chao, Pingyu Jiang, and Wenlei Jiang. 2020. “Web-Based Digital Twin Modeling and Remote Control of Cyber-Physical Production Systems.” Robotics and Computer-Integrated Manufacturing 64 (August): 101956. [CrossRef]

- Qi, Qinglin, and Fei Tao. 2018. “Digital Twin and Big Data Towards Smart Manufacturing and Industry 4.0: 360 Degree Comparison.” IEEE Access 6: 3585–93. [CrossRef]

- Tao, Fei, Jiangfeng Cheng, Qinglin Qi, Meng Zhang, He Zhang, and Fangyuan Sui. 2018. “Digital Twin-Driven Product Design, Manufacturing and Service with Big Data.” The International Journal of Advanced Manufacturing Technology 94 (9–12): 3563–76. [CrossRef]

- Takemura, Kazuhisa. 2021. Behavioral Decision Theory. Singapore: Springer Singapore. [CrossRef]

- Silva Mendonça, Rafael da, Sidney de Oliveira Lins, Iury Valente de Bessa, Florindo Antônio de Carvalho Ayres, Renan Landau Paiva de Medeiros, and Vicente Ferreira de Lucena. 2022. “Digital Twin Applications: A Survey of Recent Advances and Challenges.” Processes 10 (4): 744. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).