1. Introduction

Ever since James Clerk Maxwell formulated his unified theory of electromagnetism (Maxwell 1865, Mahon 2003), physicists have been on the hunt for a broader theoretical framework embracing gravity – the original force of nature, as deduced by Isaac Newton (Newton 1687, Westfall 1980). Since Maxwell’s day, we have become aware of additional forces, notably the subatomic weak and strong forces (Perkins 2000, Griffiths 2008). Over the course of the twentieth century, progress was made in bringing electromagnetism and these subatomic forces together within what has become known as the Standard Model of Particle Physics (Quigg 2013, Close 2011). At the same time, our comprehension of the forces of nature has fundamentally changed. Albert Einstein’s general theory of relativity has altered how we understand gravity (Einstein 1915, Wheeler and Taylor 2000), while quantum mechanics has given us a completely new perspective on the nature of matter and how particles of matter interact (Heisenberg 1927, Whitaker 2015).

Quantum field theory is a theoretical framework which combines classical field theory, special relativity and quantum mechanics to describe the interactions of subatomic particles (Peskin and Schroeder 1995, Weinberg 1995). Through several iterations over the last century, a single construct has been formulated, which combines electromagnetism with the strong and weak forces, giving rise to the Standard Model (Quigg 2013, Griffiths 2008). This has been highly successful in predicting the behaviour of subatomic particles. It does not, however, include gravity (Glashow 1980, Zee 2010). One of the major challenges for integrating gravity with the other forces is that gravity dominates only over very large distances and is extremely weak relative to them (Kaku and Trainer 1987, Hawking and Mlodinow 2011).

The search for a grand unified theory has to date been tackled in various ways (Ross 1984), including but not limited to:

Supersymmetry (referred to as SUSY) attempts to extend the success of the Standard Model. This approach proposes a symmetry between fermions (matter particles) and bosons (force carriers) (Kane 2001). It involves postulating the existence of a higher symmetry group and treating the strong, weak and electromagnetic forces as a single force, which would then break down into the known combination of forces at lower energies (Martin 1997).

Quantum Gravity (most notably Loop Quantum Gravity) builds upon the general success of quantum mechanics and seeks to treat spacetime itself as quantised (Rovelli 2004, Ashtekar and Lewandowski 2004).

Theories of Everything represent substantially different approaches, with String Theory (Green et al 2012) the most notable contender. String Theory encompasses various formulations, all of which are based on the idea that particles are vibrating strings. M-theory (Witten 1995) seeks to unify these string theories within an 11-dimensional framework.

Holographic Theory emerges from a combination of string theory and black hole physics, suggesting that all information within a volume of space can be described by information on the boundary of that space (Susskind 1995).

Emergent Gravity explores whether gravity is an emergent phenomenon arising from more fundamental quantum processes (Verlinde 2011, Padmanabhan 2010).

None of the models noted above have been experimentally confirmed (Peskin and Schroeder 1995). A solution remains elusive, and the search continues to integrate gravity into some wider framework (Weinberg 1995, Smolin 2000, Greene 2003). Given the success to date in formulating the Standard Model, the quest to find a broader theory tends to be tackled with mathematics, seeking out new or extended mathematical constructs which can accommodate all the forces. Fundamentally, all these approaches, including the Emergent Gravity concepts, fall within the ontological framework of mechanistic reductionist materialism. They assume that the universe consists of particles, fields and forces that follow deterministic laws of physics (Weinberg 1992, Toretti 1999, Smolin 2006, Bohm 1980).

Other concepts have been proposed over the years, including vitalism, panpsychism, process philosophy and animism, and, of course, a variety of historic religious narratives. But none of these are deemed to be truly scientific. However, there may be another way of looking at this problem, which, whilst grounded in 19th and 20th century science, is not wholly deterministic. In this alternative construct, the universe we observe today has been forged through evolutionary processes, with the particles and their interactions emerging through natural selection, just like life on Earth. The universe we know was not pre-determined.

The ideas proposed in this paper represent a radically different approach, seemingly unlike any other tried so far. A provocative alternative solution is derived, which can explain much more than the unification of the forces of nature. It can also neatly resolve some other equally confounding mysteries that have emerged over the last hundred years, such as what causes entropy, why wave/particle duality arises (Rovelli 2021), and how to reconcile the physical with the social and life sciences (Zimmer 2021, Davies 2019, Schneider and Sagan 2005, Volodyaev 2005).

Addicted to Atomism and Reductionism

The groundwork for 20th century physics and the formulation of quantum mechanics was laid during the 19th century. Scientists of that era inhabited an atomistic, materialistic, reductionist universe. They saw everything around them in terms of objects and forces following deterministic laws (Houghton 1977, Camprubi 2022, de Waal and Kluwick 2022, Marshall 2010). As reductionists, they could see that if you grind down any solid, you just get smaller stuff – rocks, stones, gravel, sand, dust, powder – until you end up with atoms, the smallest Lego bricks from which to build things. Atoms were seen as miniature ball-bearings – small, indivisible particles – as originally envisaged by the Ancient Greek philosophers Democritus and Leucippus. Layered onto this was Isaac Newton’s idea that these particles could be attracted to each other through an invisible force, called gravity. Once a better understanding of electricity had been gained through the contributions of Maxwell amongst others, the notion of electric charge, with positives and negatives attracting, became a further layer in this construct (Shore 2008), providing the basis for short-range forces which hold those particles tightly together, thereby creating molecules and solid substances.

Winding forward to today, despite the best endeavours of our cosmos to tell us otherwise, we remain addicted to the same deterministic approach. We continue to see atoms, and particles generally, as objects bound by invisible springs (however conceived), which we call forces (see Figure 1). Object (mass) and spring (force) are envisaged as conceptually distinct phenomena, where the motions of objects are dictated by the forces applied to them.

One of the key reasons why we continue to think of the universe in this way is the ground-breaking work of the Austrian physicist Ludwig Boltzmann, whose contributions came in the late 19th century, presaging the formulation of quantum mechanics.

Entropy

As they gained expertise in converting heat energy into motion through steam engines, one of the most intractable puzzles for 19th century scientists became how to explain the expansion and mixing of gases, and the concomitant loss of useful energy. To them, atoms (those indivisible, sometimes sticky, ball-bearings) obeyed Newton’s laws of motion, undergoing elastic collisions. But applying Newton’s laws to large populations of atoms does not lead to rapidly mixing or expanding gases, from which the original energy is seemingly lost. Various attempts were made to explain these observations. This mystery was eventually resolved in a rather unconventional way by Boltzmann.

Boltzmann decided to apply statistics to the problem and, in doing so, formulated what is now known as statistical mechanics (Pathria and Beale 2011). His statistical formulation of entropy, generally denoted by the symbol ‘S’, provides both a quantification of and a seeming rationale for the loss of useful energy in a system. But entropy cannot be measured directly, as can other state variables such as temperature, pressure and volume; S can only be deduced.
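To make the statistical idea more concrete, the short Python sketch below uses the standard textbook toy model of N identical particles shared between the two halves of a box. It assumes Boltzmann's relation S = k_B ln W, where W counts the microstates compatible with a macrostate (here, W = C(N, n) ways of placing n particles in the left half). The function names are illustrative only; the point is that the evenly split macrostate has by far the most microstates, and hence the highest entropy.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def microstates(n_total: int, n_left: int) -> int:
    """Number of ways to place n_left of n_total distinguishable particles in the left half."""
    return math.comb(n_total, n_left)

def boltzmann_entropy(n_total: int, n_left: int) -> float:
    """S = k_B ln W for the macrostate 'n_left particles in the left half'."""
    return K_B * math.log(microstates(n_total, n_left))

N = 100
# Entropy of every macrostate, from all particles on the right (n=0) to all on the left (n=N).
entropies = [boltzmann_entropy(N, n) for n in range(N + 1)]
most_probable = max(range(N + 1), key=lambda n: entropies[n])
print(most_probable)        # 50: the evenly split macrostate
print(microstates(N, 0))    # 1: only one way to have every particle on one side
```

Working with ln W rather than W itself keeps the numbers manageable; C(100, 50) is already about 10^29.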

Working shortly before the birth of quantum mechanics, Boltzmann started out with the assumption that atoms were in essence miniature inanimate ball-bearings. He then crafted a mathematical construct which can provide a quantifiable estimate of the difference between two states, say Temperature 1 and Temperature 2, for an isolated gaseous system (that is, a system which is, as far as is possible, closed off with no inputs or outputs of energy or matter – absolutely no interaction with its surroundings). His solution could be likened to time-lapse photography, providing a reel of snapshot solutions for each incremental change in temperature or pressure, showing a system transitioning from an initial state towards a final equilibrium, in which all parts of the chosen system have the same temperature, or pressure, or level of mixing of atoms, or all of these. But whilst the mathematics provides a means to calculate a function that fits the observed availability (or apparent loss) of energy very well, especially in nearly isolated systems, it does not in any way explain the process by which a system progresses from one state to the next.
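The "reel of snapshots" can be imitated with the Ehrenfest urn model, a standard teaching model of relaxation to equilibrium (it is a later illustration, not Boltzmann's own derivation). The sketch below assumes N particles that all start in the left half of a box; at each step one randomly chosen particle hops to the other half. Recording the left-hand fraction every so often gives a time-lapse sequence that drifts from 1.0 towards about 0.5 and then fluctuates there. The function and parameter names are hypothetical.

```python
import random

def ehrenfest_snapshots(n_particles: int, n_steps: int, every: int, seed: int = 0) -> list[float]:
    """Simulate the Ehrenfest urn model and return the fraction of particles in the
    left half, recorded at the start and then every `every` steps (the 'snapshots')."""
    rng = random.Random(seed)          # fixed seed so runs are reproducible
    left = n_particles                 # initial state: every particle on the left
    snapshots = [left / n_particles]
    for step in range(1, n_steps + 1):
        # Pick one particle uniformly at random; it is on the left with probability left/N.
        if rng.random() < left / n_particles:
            left -= 1                  # the chosen particle hops from left to right
        else:
            left += 1                  # the chosen particle hops from right to left
        if step % every == 0:
            snapshots.append(left / n_particles)
    return snapshots

frames = ehrenfest_snapshots(n_particles=1000, n_steps=20000, every=2000)
print([round(f, 3) for f in frames])   # starts at 1.0, then hovers near 0.5
```

Like the statistical treatment described above, the model reproduces the approach to equilibrium without specifying any mechanism for why an individual particle moves.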

Though Boltzmann’s work was originally received with scepticism, it has now become a mainstay of modern science. It represents the theory that is seen to explain the observed dispersal of energy and gaseous matter in simple systems, providing the mathematical foundations for the 2nd Law of Thermodynamics. It has proven to be an incredibly useful tool, contributing to many collective successes through the 20th century, allowing us to manipulate elements and compounds through all sorts of useful chemical processes and thence to produce materials ranging from plastics to medicines to silicon chips. Yet it is not a simple concept to comprehend, and many people tie themselves in knots trying to understand how best to apply it (Popovic 2018).

Statistical mechanics is now used to describe the banal to the fantastic, from cups of tea cooling to black holes hypothetically evaporating. The concept is so powerful that many have construed it to provide the arrow of time itself (Carroll 2010, Price 1996). And through extrapolating the 2nd Law from discrete isolated systems to everything (the whole cosmos), it is now generally thought that the universe will ultimately undergo some form of heat death as all energy and matter eventually disperse and mix (Davies 1994).

By dint of its success, Boltzmann’s work has locked in our atomistic and reductionist expectations that all matter deterministically obeys some peculiar cosmic law, in which energy always escapes and things always become more mixed up, messy and disordered, the universe travelling unerringly towards ubiquitous uniformity. It is as ever-present as the gravity keeping our feet firmly planted on the ground. Except that this is not what we always observe. Each spring we see new greenery sprouting forth from every tree and plant, all seemingly disobeying these universal rules.

Much research was done over the latter half of the 20th century to try to resolve this obvious discord between the life and physical sciences. The problem was first written about by none other than Erwin Schrodinger, one of the fathers of quantum mechanics (Schrodinger 1944). He introduced the idea of ‘negative entropy’, later shortened to negentropy, and concluded that, given the solidity of our belief in entropy, we simply haven’t yet found the science to explain life. The gauntlet was picked up by another Nobel Laureate, Ilya Prigogine (Kondepudi et al 2017), who spent a considerable amount of time trying to marry Boltzmann’s mathematics with systems that are construed to be far-from-equilibrium, through which energy and/or materials flow and which explicitly do interact with their surroundings. Such systems are not isolated from their environment and include all living systems (Schneider and Sagan 2005). From Prigogine’s work, and the research it inspired, arose the concepts of entropy export and maximum entropy production, suggesting that an energetic system (such as a living organism) could internally become more ordered if things around it became commensurately more disordered, and that such systems will move towards states in which they maximise the production of entropy within the wider universe (Prigogine and Stengers 1984).
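The notion of entropy export is usually expressed through Prigogine's split of an open system's entropy change into an exchange term and an internal production term. The standard textbook form is given below for illustration; the symbols follow common usage rather than anything defined earlier in this paper, with $d_eS$ the entropy exchanged with the surroundings and $d_iS$ the entropy produced inside the system by irreversible processes.

```latex
dS = d_eS + d_iS, \qquad d_iS \ge 0
% An open system can become internally more ordered (dS < 0) only if it
% exports entropy faster than it produces it:
dS < 0 \iff d_eS < -\,d_iS
```

For an isolated system $d_eS = 0$, which recovers the familiar 2nd Law statement $dS \ge 0$.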

Despite the logic of Prigogine’s approach, it has proven, unlike Boltzmann’s mathematics, extremely challenging to use in any quantifiable way. Consequently, notions of entropy export and maximum entropy production remain matters of intense scientific debate, with numerous scientists seeking (and generally failing) to find ways to make practical use of them (Kleidon 2009, Roach 2020, Endres 2017, Jorgensen and Svirezhev 2004, Volk and Pauluis 2010, Aristov et al 2022, Jaynes 1980, Chakrabarti and Ghosh 2010).

The reality is that we have not yet been able to truly reconcile our physical sciences with our biological sciences. There is an unavoidable disjunction between the material world of atoms and particles, whose motions we consider to be dictated by the forces applied to them, and the biological world, from bacteria to blue whales, which we see as energetic systems that intrinsically respond to their energetic and material environments.

So, where do we go next? This is treacherous ground; many have been shipwrecked trying to challenge or re-think entropy. Boltzmann’s mathematics has proven its worth time and time again. Furthermore, elements of Boltzmann’s work now form a mainstay of quantum mechanics, which is likewise strongly grounded in statistics. The answer may lie not in trying to dispute entropy, but rather in understanding afresh the process behind it. And, if we can deduce that, then perhaps some of the other intractable enigmas that we now face might fall into place. This may seem a circuitous route to addressing the challenge to unify the forces of nature; but the journey is worth it.

Thought Experiment

Imagine two systems. One is a small canister of a single gas sitting within a larger, sealed box, filled with a variety of other gases. Gas pressure inside both is the same. The second system is a shipping container full of tigers sited within a large game reserve – a vibrant jungle ecosystem, filled with all the normal flora and fauna that you would expect, except tigers (see Figure 2).

Focusing on the atomic system, if we puncture the canister, we know that the atoms within will spill out and spread throughout the larger box of gases. In accordance with the 2nd Law of Thermodynamics, the system will rapidly move towards a state of uniformity with the newly introduced gas evenly dispersed throughout the larger gaseous mix. Whilst at a microscopic level the whole subsequent system will undergo continuous dynamic change, the gaseous atoms all moving around chaotically, macroscopically it will remain in that fully mixed state forever after. We refer to this observed unchanging final state as the system reaching equilibrium (also referred to as maximum entropy).
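The conventional account of the canister case can be quantified with the ideal entropy of mixing, ΔS = −R Σ nᵢ ln xᵢ, which applies to ideal gases that start separated at the same temperature and pressure (as in the thought experiment). The sketch below assumes hypothetical amounts: 1 mol of canister gas released into 9 mol of surrounding gas. Treating the surrounding mixture as a single component gives the same ΔS here, because its own internal composition does not change when the new gas arrives.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)

def mixing_entropy(moles: list[float]) -> float:
    """Ideal entropy of mixing, dS = -R * sum(n_i * ln x_i), for ideal gases that
    start separated at the same temperature and pressure."""
    total = sum(moles)
    return -R * sum(n * math.log(n / total) for n in moles if n > 0)

# Hypothetical amounts: 1 mol of canister gas released into 9 mol of surrounding gas.
dS = mixing_entropy([1.0, 9.0])
print(f"{dS:.2f} J/K")   # 27.03 J/K
```

The result is always positive, which is the formal counterpart of the observation that the mixed state, once reached, persists.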

Turning to the tiger system, we release all the tigers at the same moment. The tigers hastily spread out across the game reserve until they are evenly distributed across the whole area. And they, too, will remain that way indefinitely thereafter, in dispersed dynamic equilibrium.

So, what’s the difference?

Observationally, these two systems are behaving the same.

Macroscopically, these two systems are behaving the same.

Mathematically, these two systems are behaving the same.

But, according to modern science, these are two very different systems. One involves a population of inanimate objects behaving deterministically according to the 2nd Law of Thermodynamics, moving unerringly towards a state of maximum entropy. The other involves a population of energetic systems, living organisms, whose innate competitiveness for food drives them to spread out across the habitat.

Let’s take a deeper look. If we are willing to be open-minded, could it be that they are not so different after all?

What Do We Know About Atoms?

Since Boltzmann’s time, we have learnt a lot more about atoms. And they are very definitely not akin to miniature ball-bearings. Firstly, thanks to Rutherford’s experiments firing alpha particles at gold foil, we know that atoms have internal structure – a nucleus surrounded by a shell of electrons (Campbell 1999). Secondly, thanks to various contributors including Schrodinger, through quantum mechanics we now appreciate the configurations of that electronic shell (Griffiths and Schroeter 2018). This has aided us in understanding the structure and characteristics of objects that we can see and touch, such as snowflakes and diamonds. And, thirdly, we know that atoms are continuously interacting with their environment, as evidenced through observations such as black body radiation (Kittel and Kroemer 1980). Your ability to see the world around you is dependent on every atom on every surface within your field of vision constantly absorbing and emitting energy.
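For an idealised black body, the constant emission and absorption mentioned above can be quantified with the Stefan-Boltzmann law, P = εσAT⁴. The sketch below assumes a grey body of emissivity ε (1 for a perfect emitter) and area A at temperature T, surrounded by an environment at T_env; it returns both the gross power emitted and the net exchange. The function names are hypothetical.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def emitted_power(area_m2: float, temp_k: float, emissivity: float = 1.0) -> float:
    """Gross power radiated by a grey body: P = e * sigma * A * T^4."""
    return emissivity * SIGMA * area_m2 * temp_k ** 4

def net_power(area_m2: float, temp_k: float, env_k: float, emissivity: float = 1.0) -> float:
    """Net power lost to surroundings at env_k (positive = net emission).
    Assumes absorptivity equals emissivity (Kirchhoff's law)."""
    return emitted_power(area_m2, temp_k, emissivity) - emitted_power(area_m2, env_k, emissivity)

print(round(emitted_power(1.0, 300.0), 1))   # 459.3 W radiated from 1 m^2 at 300 K
print(net_power(1.0, 300.0, 300.0))          # 0.0: emission and absorption balance
```

Note that at thermal equilibrium the net exchange is zero while the gross emission is not: the surface keeps radiating and absorbing, which is the sense in which atoms "continuously interact" even when nothing appears to change.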

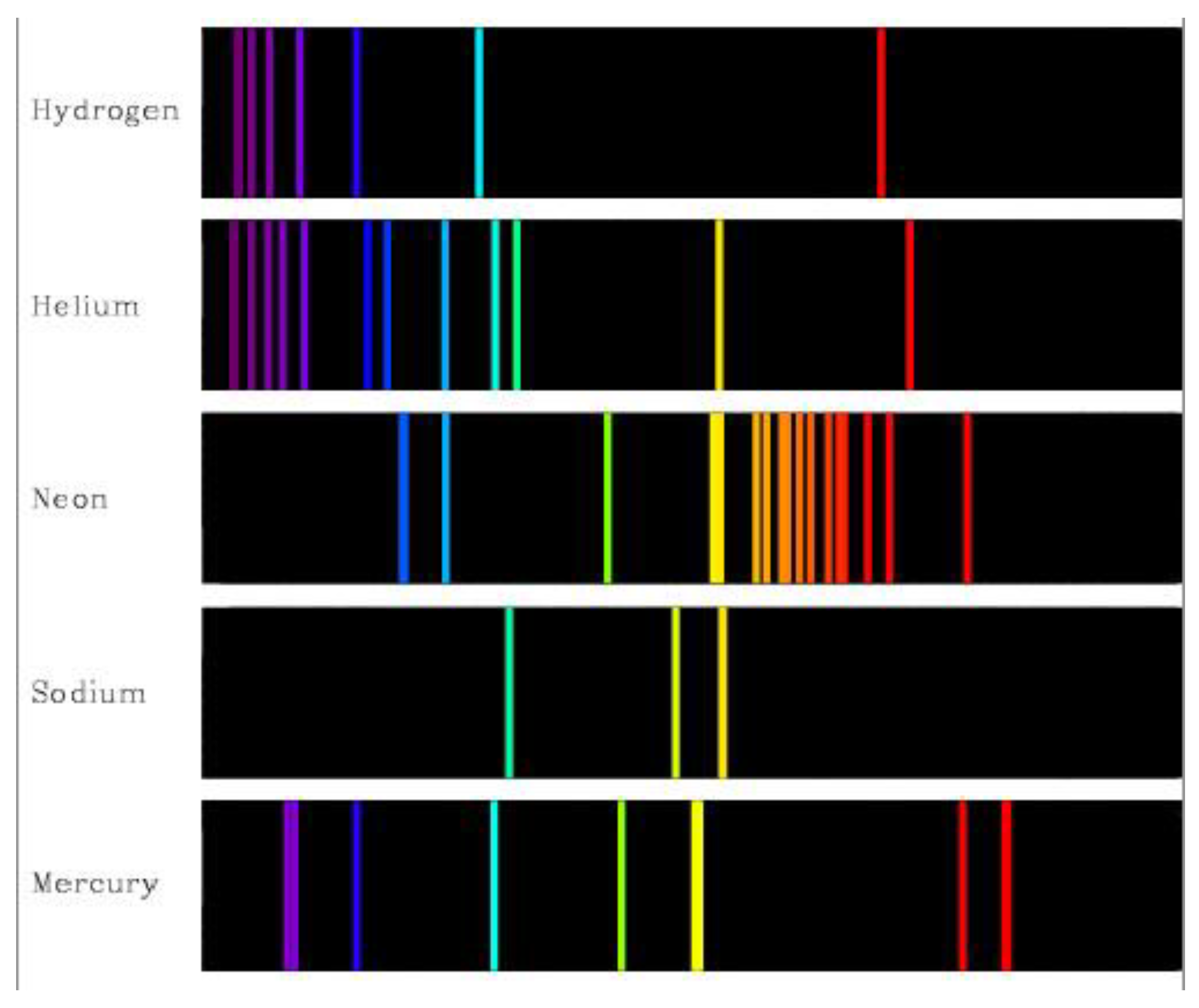

It was Albert Einstein who realised that light energy comes in discrete packets, later called photons, which behave as particles rather than simply as the continuous waves his predecessors envisaged. Each photon has its own discrete, quantifiable amount of energy. This paved the way for the formulation of quantum mechanics (Isaacson 2007). And, in a nutshell, the original conception of quantum mechanics arose from appreciating that atoms are rather choosy about which photons they absorb and emit (Rovelli 2021), as exhibited through their electromagnetic spectra (Figure 3). Furthermore, for each type of free gaseous atom or molecule, above a certain wavelength (or below a certain energy) any radiation will simply pass straight through and cannot be absorbed (Eisberg and Resnick 1985).
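The link between wavelength and photon energy is E = hc/λ, so a lowest transition energy translates directly into a longest absorbable wavelength. The sketch below assumes single-photon electronic transitions of a free atom in its ground state and uses hydrogen's first transition (about 10.2 eV, Lyman-alpha) as the example; it ignores fine structure and multi-photon processes, and molecules also have rotational and vibrational lines at much longer wavelengths. The function names are hypothetical.

```python
HC_EV_NM = 1239.84198  # Planck constant times speed of light, in eV * nm

def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of a single photon, E = hc / lambda."""
    return HC_EV_NM / wavelength_nm

def threshold_wavelength_nm(lowest_transition_ev: float) -> float:
    """Longest wavelength whose photons still carry the given transition energy."""
    return HC_EV_NM / lowest_transition_ev

# Hydrogen's lowest electronic transition (n=1 to n=2) is about 10.2 eV.
print(round(threshold_wavelength_nm(10.2), 1))   # 121.6 nm, in the ultraviolet
print(round(photon_energy_ev(500.0), 2))         # 2.48 eV for a green visible photon
```

On these assumptions, visible light (roughly 2 to 3 eV per photon) carries too little energy per photon to excite ground-state hydrogen, consistent with the transparency described above.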

What else do we now know about matter?

Building on the foundations created by our 19th century forefathers, we now know that there are three constitutional impossibilities within our universe:

energy (whether in the form of matter or radiation) cannot be created or destroyed (1st Law of Thermodynamics);

no system can be cooled to absolute zero, zero kelvin, let alone below it (3rd Law of Thermodynamics); and

matter cannot reach or exceed the speed of light (one of Einstein’s most notable contributions).

These three principles have a fundamental connection. From them, one can infer that it is impossible to completely isolate any particle or lump of matter from the wider universe (Kondepudi and Prigogine 1998, Reif 1965). Even the 19th century scientists knew this experimentally, despite their precise theoretical definition of the 2nd Law of Thermodynamics as pertaining specifically to an isolated system.

The notion of complete isolation can be likened to a variation on Schrodinger’s Cat thought experiment. If you were to create a truly isolated system, a perfect thermos flask, you would have effectively removed an item of matter from the universe, thereby violating the 1st Law of Thermodynamics. Such an isolated system would have to involve the particle(s) of matter floating in a vacuum surrounded by zero kelvin with no physical contact, no interaction with any fields and no black body radiation to or from the outside world – absolutely no interaction, effectively removed from the universe. When you peeked back inside this perfect thermos, would your tea still be there?

Turning this inference on its head, we must conclude that all particles of matter MUST constantly interact with their surroundings. It is inherent in the nature of matter to interact continuously, either through direct contact with other matter or through directly absorbing/emitting radiation. No particle in the universe can truly stand alone and be isolated … ever (Griffiths 2004, Cohen-Tannoudji et al. 1977, Haroche and Raimond 2006, Callen 1985, Prigogine 1980, Bertalanffy 1972, Capra 1996, Heidegger 1962, Bohm 1980).

Energy particles, Einstein’s photons, get around this. In travelling at the speed of light, they experience no time. So, from their frame of reference, despite us observing them travelling as individual particles or waves across billions of light years, photons never in fact lose contact with the universe of which they are a part (from the photon’s perspective, emission by a star and absorption by a human-built telescope are simultaneous). Further, we know that photons are always an interacting part of the universe because their paths bend round massive objects, such as galaxies. But as soon as any energy becomes effectively stationary, wrapped up as a piece of matter, it experiences time and, so it would seem, must in some way remain in constant contact with ambient energy or other local matter, and thereby the wider universe.
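The statement that photons "experience no time" is usually understood as a limit in special relativity: a massive traveller covering distance d at speed v = βc (both measured in the frame of the start and end points) ages only τ = (d / βc)·√(1 − β²), which tends to zero as β approaches 1. A photon itself has no rest frame, so this is a limiting argument rather than a literal clock reading. The sketch below works in light-years and years; the function name is hypothetical.

```python
import math

def traveller_proper_time_years(distance_ly: float, beta: float) -> float:
    """Proper time (years) for a traveller at speed beta*c covering distance_ly light-years,
    where the distance is measured in the frame of the start and end points."""
    if not 0.0 < beta < 1.0:
        raise ValueError("beta must lie strictly between 0 and 1 for a massive traveller")
    coordinate_time = distance_ly / beta           # years elapsed for the observers
    return coordinate_time * math.sqrt(1.0 - beta ** 2)

# The traveller's elapsed time over 6 light-years shrinks as beta approaches 1.
for beta in (0.6, 0.99, 0.999999):
    print(beta, round(traveller_proper_time_years(6.0, beta), 5))
```

At β = 0.6 the observers see the trip take 10 years while the traveller ages 8; as β approaches 1 the traveller's elapsed time approaches zero.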

Following this line of thinking, our universe can be viewed as being an immense network of interactions and material connections – “everything is connected”. Anything not coupled in some way with that web is not part of our universe. And everything which is part of that network, whilst able to change from one thing to another (matter to energy, etc), must forever remain a part of the whole – energy cannot be created or destroyed. What is part of the universe cannot cease to be part of the universe.

What, Then, Are Atoms?

This question can be asked of any particle at the molecular through to subatomic scales. But for simplicity, we’ll stick with atoms.

Atoms are very definitely not the equivalent of miniature ball-bearings. They have internal structure and necessarily constantly interact with their surroundings – absorbing and emitting energy. They are, by deduction, energetic systems. In our everyday lives, we encounter many other, more familiar, energetic systems – all those living things around us including houseplants, pets, other people, and, of course, tigers. These larger energetic systems are also all completely dependent on continuously interacting with their surroundings in order to survive and remain coherent entities within our universe – to exist as part of that universal web of interactions.

Let’s put aside for a moment the concepts of animate and inanimate as being distracting notions. Instead, let’s be radical and try thinking purely in terms of energetic systems, which must interact with their surroundings. With this alternative mindset, animation is a matter of degree rather than a binary, is or isn’t. We already recognise that there is a wide variety of living systems, from ourselves, capable of seemingly complex thought and intentions, all the way down to bacteria, which we perceive instead as energetic systems that simply respond to their energetic and chemical environments, such as by swimming up a sugar gradient. From this point of view, atoms and molecules are just simpler still – energetic systems which respond spontaneously to their material and energetic environment.

What, Then, Are Forces?

Seeing atoms as inherently energetic systems gives us a new way to understand forces – those invisible connecting springs, which we construe to bind particles together. The easiest way to explain an alternative approach to forces is to dip into the human domain.

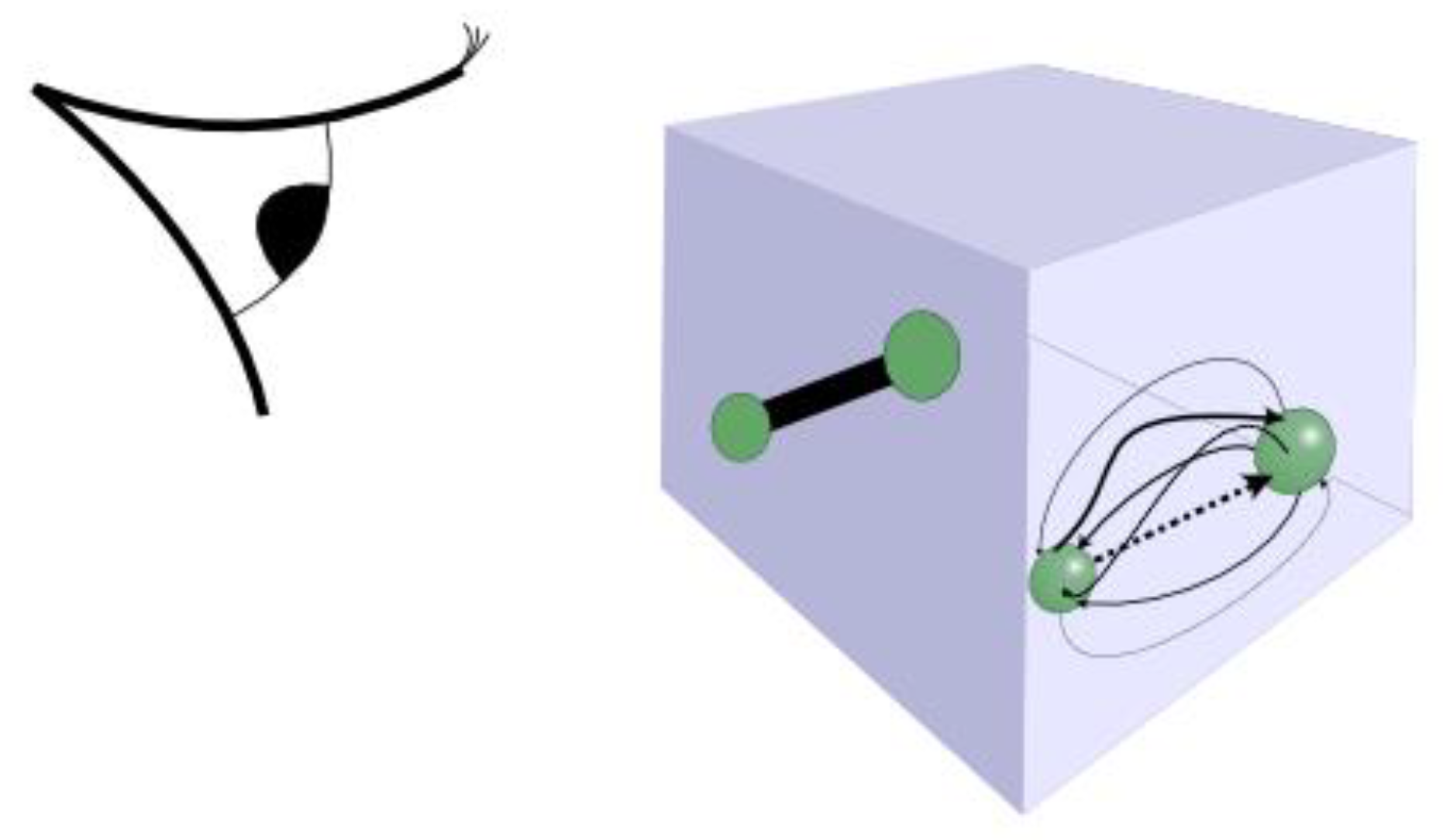

Relationships, such as that within a married couple, are a peculiar thing. What we see on the outside is very different to what takes place on the inside (see Figure 4). From afar, we see two people hook up and think of them functioning as a couple. In a social sense, they are now bound together, such that their movements and activities in the real world are correlated – say, sharing a home, going on dates, seeing other friends, all as a duo. Only their closest friends are party to the internal workings of the relationship. To everyone else, these two people are now bound together as a pair, until suddenly one day, through death or divorce, they may not be.

If you were to study a relationship in detail on the inside, then it is a completely different matter. Putting aside for a moment all those emotions and feelings experienced by the individuals involved (the qualitative aspect), from an observationally quantifiable perspective a relationship boils down to energy and information continually flowing to and fro between the pair of people, each as individuals. There is no specific or separately existing bond or force, as such. Rather, there is a continual process of reciprocation, taking turns to expend energy on each other, whether that’s through active listening, some tender care and attention, doing household chores or the gardening, and much more besides. When one or other of the parties involved stops pulling their weight, then the relationship struggles, the so-called bond weakens and eventually fails.

Applying these observations to the material world, we might think we see a pair of atoms, which form a molecule, as two objects bound together by a force to create a new whole. But, perhaps, that internal force is simply the manifestation of a process going on inside? Rather than any real force involved, we can conceive that each atom is itself responding to its energetic environment. The externally observed bond is instead a constant energetic interaction between the two particles causing them to correlate their movements in space. (Is that any more ludicrous than imagining that the two objects are held together by some invisible force?)

This other way of thinking about bonds is already embedded in the Standard Model of particle physics. Over the course of the last 100 years, as quantum mechanics has matured both theoretically and practically, through iterations such as Quantum Electrodynamics (QED) and Quantum Chromodynamics (QCD), the fundamental forces of nature have indeed come to be seen in terms of particle exchanges. In quantum field theory, the three non-gravitational forces (electromagnetic, weak and strong) are all described as being mediated by force-carrying particles known as gauge bosons (Peskin and Schroeder 1995, Lancaster and Blundell 2014). The perceived forces arise from the emission and absorption of these force-carrying particles by the particles of matter (quarks, nucleons, electrons, etc), which allows for a transfer of energy and momentum. These force-carrying particles include gluons, W and Z bosons, and, of course, photons.
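One common back-of-envelope way to connect a force carrier to the reach of its force is the Yukawa estimate, range ≈ ħ/(mc) = ħc/(mc²). The sketch below applies it to the massless photon (no cut-off), the pion exchanged between nucleons in Yukawa's original picture of the nuclear force (about 139.6 MeV), and the W boson (taken here as roughly 80.4 GeV). It is an order-of-magnitude heuristic only: it does not apply to gluons, which are massless yet confined, so the short reach of the strong force has a different origin.

```python
import math

HBAR_C_MEV_FM = 197.327  # reduced Planck constant times c, in MeV * femtometres

def yukawa_range_fm(mass_mev: float) -> float:
    """Rough range (fm) of a force carried by a boson with the given rest energy (MeV)."""
    if mass_mev == 0:
        return math.inf  # massless carrier: no exponential cut-off on the force
    return HBAR_C_MEV_FM / mass_mev

# Approximate rest energies in MeV (illustrative values).
carriers = {"photon": 0.0, "pion": 139.57, "W boson": 80_400.0}
for name, mass in carriers.items():
    print(f"{name}: {yukawa_range_fm(mass):.3g} fm")
```

The heavier the exchanged particle, the shorter the estimated range, which is why the weak force acts only over distances far smaller than a nucleus.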

The Standard Model has proven to be very effective in explaining experimental results in particle accelerators and has enabled various predictions, which have then proven correct (Coughlan and Dodd 1991). However, it embeds a fundamental change in our perception of the forces of nature. If the forces involved are actually the manifestation of energetic interactions, then the particles involved, even electrons, can no longer be reduced to indivisible atomistic objects of matter. They are inherently energetic systems, capable of absorbing and emitting energy and constantly interacting with their environment. This is a completely different starting point to the one originally envisaged by Boltzmann. If atoms, and for that matter any particle, are indeed energetic systems, then there is scope to consider their behaviour in a completely different way, one that is not inherently deterministic.

A New Physics

Let us imagine that atoms are indeed energetic systems. Further, as a constitutional requirement of the universe, each particle of matter must constantly interact with its environment through absorption and emission of energy. That is fine when there are plenty of ambient photons of sufficiently short wavelength with which each atom in a gas can interact to maintain the necessary frequency of interaction to remain a part of the universe. But what happens when there is not enough energy and an atom in a gas finds itself at risk of becoming effectively detached from the universe through a lack of suitable passing photons with which to interact? How might the atom spontaneously respond to such a situation to avoid isolation? We can find an answer to this in our modern biological sciences.

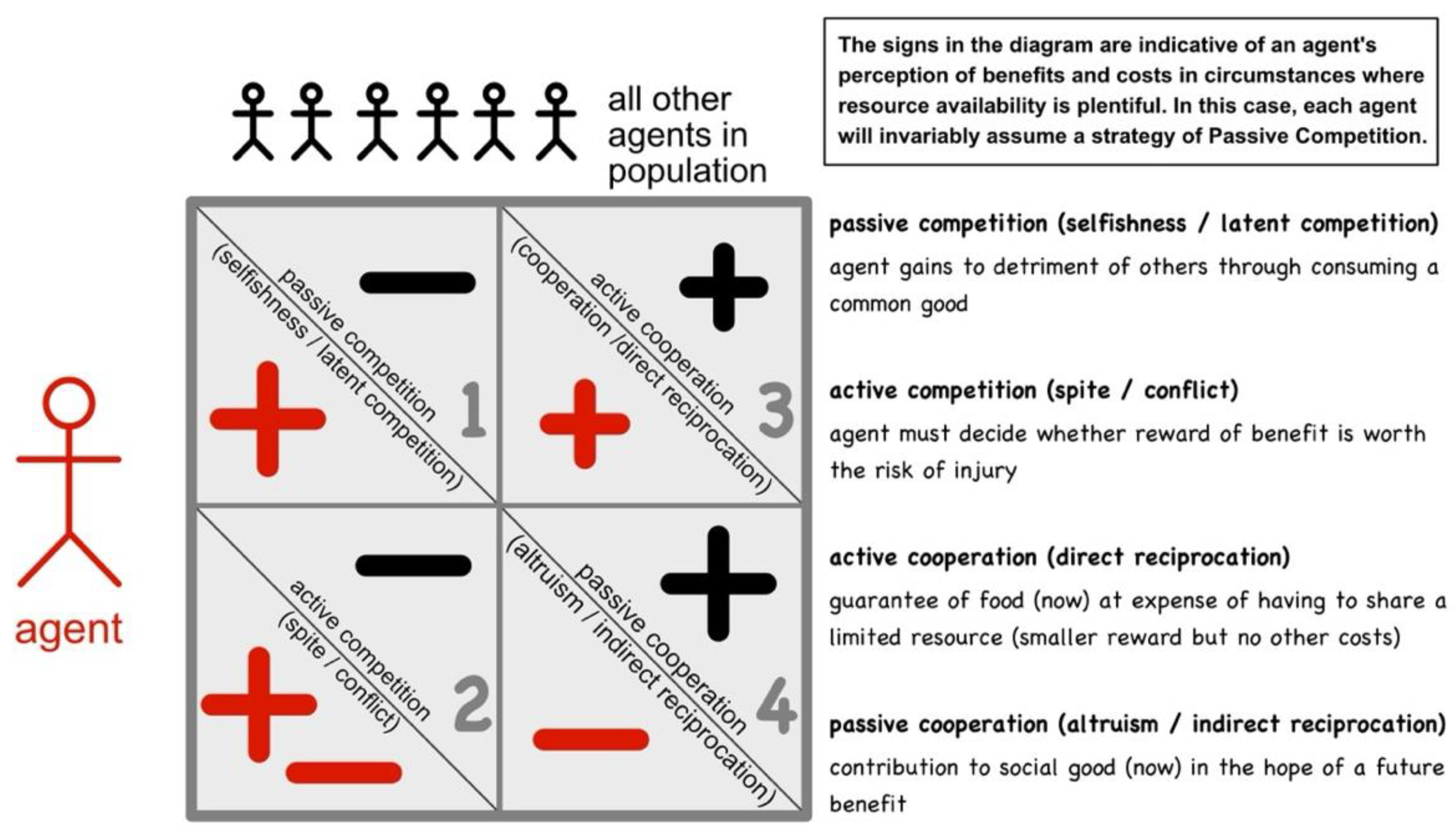

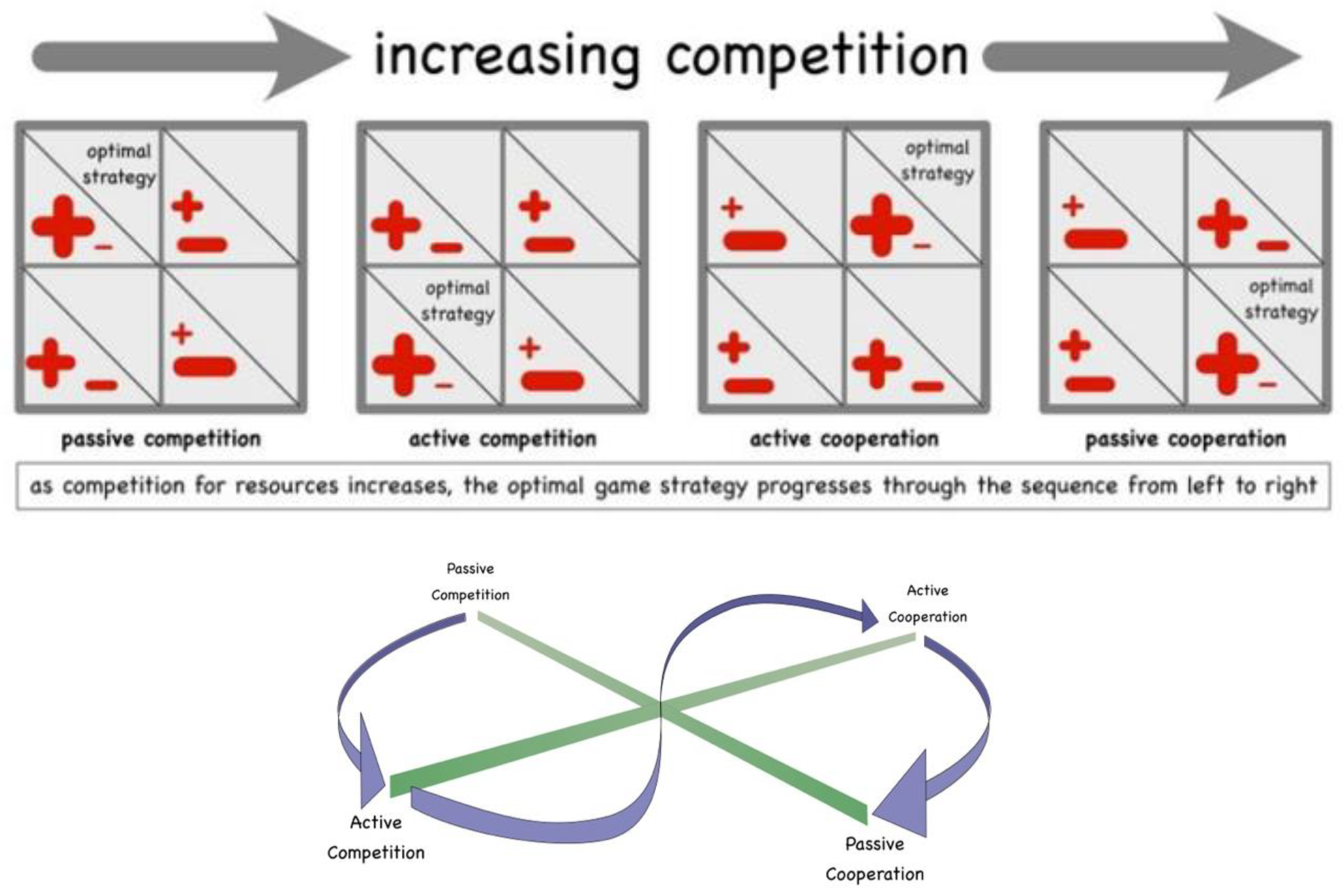

There is now a growing body of knowledge concerning interactions between conspecific organisms (that is, members of the same species). Being identical living systems, conspecifics are, according to Darwin (Darwin 1859), inherently competitive, especially when available food is limited. Through a field of study called evolutionary game theory, it has become established that there are four specific ways in which self-same energetic systems (organisms, agents, atoms(?)) can interact, as depicted in Figure 5 and explained below (Maynard Smith 1972, Maynard Smith 1974, Maynard Smith 1982, Nowak 2006, Nowak 2006, Nowak 2012). These are hereafter referred to as Forms of Interaction. They have been deduced through the mathematics of game theory (Axelrod 1984). We’ll start by considering how this framework can be applied to gaseous systems and then progress to other phases of matter.

1) Passive Competition (also denoted selfishness or latent competition) applies where one agent (atom) acts independently, without any consequences to itself, but its behaviour is nonetheless detrimental to one or all of the other parties. This equates to the selfish party consuming a limited common good (using the economic interpretation of the term common good), reducing its availability to all others. An example would be picking all the blackberries from the side of a footpath, thereby denying the next passer-by any tasty morsels, or a gaseous atom absorbing a passing photon, thereby preventing absorption of that photon by another nearby atom.

2) Active Competition (also referred to as spite or conflict) corresponds to circumstances where the chosen course of action carries a potential disadvantage for each party. Typically, this represents deciding whether to enter into conflict (such as seeking to steal food) at the risk of injury. However, the reward of being able to eat may make the risk worthwhile despite the possible consequences. In the physical sciences, this corresponds to particles colliding and thereby, in effect, stealing momentum off each other. But when collisions occur, there is a risk that a particle, say a molecule, may break apart.

3) Active Cooperation happens where agents choose to cooperate because they can see a benefit arising. This can be expressed through sharing a limited common good or direct reciprocation (which equate to the same thing). This results in both or all parties obtaining a guaranteed, smaller immediate gain (dividing the resource between them) than each could potentially have achieved from a competitive course of action, but now without any consequences such as injury. An example from our human world would be two people peacefully picking blackberries side-by-side from the same bush. As will be discussed below, the effect of this in the atomic world is the aggregation of particles to create larger particles (say, atoms to molecules).

- 4)

-

Passive Cooperation is often referred to as indirect reciprocation or altruism, where one party seemingly contributes to another’s benefit without an obvious immediate return. However, more detailed analysis has shown that there is usually an expectation of some reward from another agent or later (say, the altruism cycles round a community, everyone helping and being helped at various times according to their changing circumstances). This can then be accompanied by behavioural changes, including:

▪ exchanging different foodstuffs with the same calorific value, such that each agent benefits from consuming a wider range of nutrients but there is no net flow of energy between them; or

▪ everyone contributing to the creation of a social good (say, a collective store of food) in the expectation that each agent will be able to draw from it later.

This latter cooperative behaviour is only beneficial for any individual if most or preferably all other agents also participate in a similar cooperative way. The implications for the physical sciences are explored further below.
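The condition in the last sentence can be illustrated with a linear public goods game, a standard model from experimental economics that we borrow here as an assumption; it is not the author’s formalism. In this minimal sketch each of N agents holds an endowment, contributors pay a fixed amount into a collective store, the store is multiplied by a hypothetical return factor `r` (standing in for whatever benefit pooling brings) and is then shared equally. A contributor ends up better off than with no store at all only when the number of contributors exceeds N/r.

```python
def contributor_payoff(n_agents, n_contributors, endowment=10.0, contribution=10.0, r=3.0):
    """Payoff to one contributing agent in a linear public goods game.

    Hypothetical set-up: every contributor pays `contribution` into a
    collective store, the store is multiplied by `r` (the assumed benefit
    of pooling) and then shared equally among all `n_agents`.
    """
    if not 1 <= n_contributors <= n_agents:
        raise ValueError("n_contributors must be between 1 and n_agents")
    store = r * contribution * n_contributors
    return endowment - contribution + store / n_agents


def participation_pays(n_agents, n_contributors, endowment=10.0, contribution=10.0, r=3.0):
    """True if a contributor ends up better off than with no store at all."""
    return contributor_payoff(n_agents, n_contributors, endowment, contribution, r) > endowment


if __name__ == "__main__":
    n = 12
    for k in (2, 4, 6, 12):
        print(k, "contributors:", contributor_payoff(n, k), participation_pays(n, k))
```

With 12 agents and r = 3, contributing only pays once more than 12/3 = 4 agents take part. In this linear set-up a non-contributor still receives the same share without paying, which is the free-rider problem such models are usually used to study.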

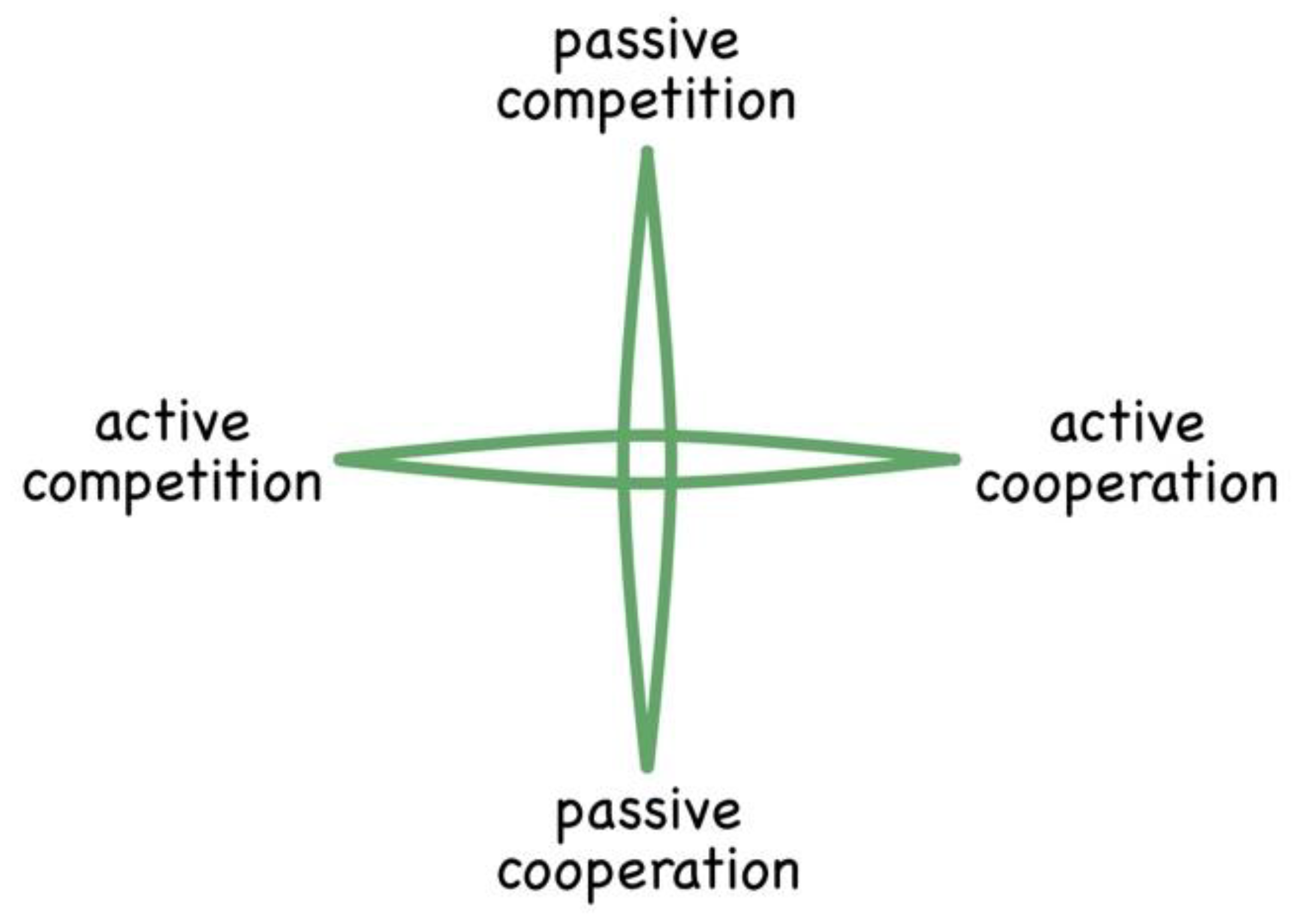

Critically, in all the above interactions, competition always represents a one-way flow of energy. In contrast, cooperation (such as sharing or exchanging) is inherently fair and equitable, there being no net directional flow of energy between the interacting parties. Consequently, the agents involved in cooperative interactions return repeatedly to interact, thereby forming relationships. For ease of depiction, these four strategies can be laid out on a cross-diagram (see Figure 6).

In the ecological sciences, Passive Competition gives rise to the dispersal of organisms throughout a habitat. With each organism seeking to operate independently, to maximise its access to food by minimising the competition it experiences from its conspecifics, and to avoid direct conflict, the population spreads out to all areas where the organisms can acquire the food they need to survive. The end result is that each agent is physically as far apart as possible from every other self-same agent (Clobert et al 2012). If, however, food becomes scarce, agents change their behaviour and express Active Competition. This manifests as mobbing behaviour (fluctuating dispersal and concentration): for example, seagulls or pigeons mobbing a food source and stealing fish or breadcrumbs from each other (Cheng et al 2020, Ward et al 2002).

Applying this construct to the physical sciences would see Passive Competition expressed as self-same gaseous atoms dispersing when there is sufficient ambient radiation. Given how selective gaseous particles are for specific wavelengths of radiation, as their absorption/emission spectra show, each atom will be able to absorb the maximum number of suitable passing photons by being physically as far apart as possible from any other identical atoms (those of the same element). If several gases were present, this construct would predict that each type of gas disperses independently into the volume, the combination thereby mixing to create a gaseous ecosystem, each gas expressing its own partial pressure (as observed).
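The observation that each gas in a mixture expresses its own partial pressure is Dalton’s law of partial pressures. For ideal gases, each component behaves as if it alone filled the volume, p_i = n_i R T / V, and the total pressure is the sum of the partial pressures. The short sketch below simply evaluates that; the air-like mixture in the usage block is an illustrative assumption, not data from this paper.

```python
R = 8.314462618  # molar gas constant, J/(mol K)


def partial_pressures(moles: dict[str, float], temperature_k: float, volume_m3: float) -> dict[str, float]:
    """Ideal-gas partial pressure (Pa) of each component: p_i = n_i R T / V."""
    return {gas: n * R * temperature_k / volume_m3 for gas, n in moles.items()}


def total_pressure(moles: dict[str, float], temperature_k: float, volume_m3: float) -> float:
    """Dalton's law: the total pressure is the sum of the partial pressures."""
    return sum(partial_pressures(moles, temperature_k, volume_m3).values())


if __name__ == "__main__":
    mix = {"N2": 0.78, "O2": 0.21, "Ar": 0.01}  # illustrative: 1 mol in total
    for gas, p in partial_pressures(mix, 273.15, 0.022414).items():
        print(f"{gas}: {p:.0f} Pa")
    print(f"total: {total_pressure(mix, 273.15, 0.022414):.0f} Pa")
```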

Active Competition would manifest as atoms effectively stealing energy from each other, imparting momentum outwards away from any energy source. Wherever there is an imbalance of energy, Active Competition would naturally cause it to dissipate, energy flowing from those atoms that have to those that have not, dispersing throughout a system and outwards to the environs. This can readily be modelled in any agent-based modelling programme (without any requirement for agents to operate with intent), through which it can be shown that, in a contained system, competition has an equalising effect, eventually leaving all agents with a similar amount of energy.
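The agent-based claim above can be sketched in a few lines. The transfer rule is our own assumption, chosen to mirror energy "flowing from those atoms that have to those that have not": at each step two random agents meet, and the poorer one takes a fixed fraction of the energy difference from the richer one. Total energy is conserved and the spread of energies shrinks towards zero. The outcome depends on the rule chosen; for example, splitting the pooled energy of each pair at random does not equalise in this way.

```python
import random
import statistics


def simulate_competition(n_agents=100, steps=20_000, steal_fraction=0.25, seed=1):
    """Hypothetical closed-system model of pairwise 'energy stealing'.

    At each step two distinct agents meet at random and the one with less
    energy takes `steal_fraction` of the energy difference from the other.
    No agent has intent; the rule is applied mechanically.
    Returns (initial_energies, final_energies).
    """
    if not 0 < steal_fraction <= 0.5:
        raise ValueError("steal_fraction must be in (0, 0.5]")
    rng = random.Random(seed)
    # Deliberately unequal start: a tenth of the agents hold all the energy.
    energies = [10.0 if i < n_agents // 10 else 0.0 for i in range(n_agents)]
    initial = list(energies)
    for _ in range(steps):
        i, j = rng.sample(range(n_agents), 2)
        # Positive transfer moves energy from i to j, negative from j to i;
        # either way it flows from the richer agent to the poorer one.
        transfer = steal_fraction * (energies[i] - energies[j])
        energies[i] -= transfer
        energies[j] += transfer
    return initial, energies


if __name__ == "__main__":
    before, after = simulate_competition()
    print(f"total energy before {sum(before):.6f}, after {sum(after):.6f}")
    print(f"spread (std dev) before {statistics.pstdev(before):.3f}, after {statistics.pstdev(after):.2e}")
```

Capping `steal_fraction` at 0.5 means a single encounter can at most equalise the pair, never reverse who is richer.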

Taken together, both Forms of Competition amount to repeated zero-sum games for all the agents involved. The outcome for a whole system of agents/atoms would be complete mixing of all the different elementary conspecifics together with dispersal of energy. This produces the same end result that we currently describe with the terms equilibrium and maximum entropy, and explain through the Second Law of Thermodynamics by means of Boltzmann’s statistical mechanics.

Turning to cooperation, when available energy reduces further and direct competition becomes more intense, game theory shows that the cost-benefit equation for each agent tips in favour of cooperation (Axelrod 1984). Active Competition flips over to Active Cooperation. In ecology, this gives rise to the aggregation of conspecifics, creating herds, troops, packs, pods and other groupings of organisms, which roam the landscape as units, peacefully sharing food within their groups while competing at group level against other similar groups.
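One way to picture this cost-benefit shift is the Hawk-Dove game, a standard model from evolutionary game theory that we use here as an assumed stand-in for the Forms of Interaction; it is not the formalism of this paper. A Hawk contests a resource of value V and risks an injury cost C, while a Dove shares peacefully. When V < C, the evolutionarily stable share of Hawks is V/C, so as the value of the contested resource falls relative to the cost of conflict, fewer agents fight and more share.

```python
from fractions import Fraction


def payoffs(v, c):
    """Row player's payoffs in the Hawk-Dove game for resource value v and injury cost c."""
    v, c = Fraction(v), Fraction(c)
    return {
        ("hawk", "hawk"): (v - c) / 2,  # fight: win half the time, get injured half the time
        ("hawk", "dove"): v,            # the dove retreats and the hawk takes everything
        ("dove", "hawk"): Fraction(0),  # the dove retreats: nothing gained, no injury
        ("dove", "dove"): v / 2,        # peaceful sharing of the resource
    }


def expected_payoff(strategy, hawk_share, v, c):
    """Expected payoff of `strategy` in a population where `hawk_share` of agents play hawk."""
    p = payoffs(v, c)
    return hawk_share * p[(strategy, "hawk")] + (1 - hawk_share) * p[(strategy, "dove")]


def stable_hawk_share(v, c):
    """Evolutionarily stable share of hawks: v/c when v < c, otherwise 1 (all hawks)."""
    return min(Fraction(1), Fraction(v) / Fraction(c))


if __name__ == "__main__":
    cost = 10
    for value in (8, 4, 2, 1):  # shrinking value of the contested resource
        share = stable_hawk_share(value, cost)
        print(f"V={value}, C={cost}: stable hawk share {share}, dove share {1 - share}")
```

At the stable share, Hawks and Doves earn the same expected payoff, which is why neither strategy can invade the mix; exact fractions are used so that this equality can be checked directly.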

Translating this over to the physical sciences, we would predict gaseous atoms aggregating to create free-roaming bound groups of atoms, which we otherwise know as molecules. As the temperature falls, photons within the black body radiation that the original atoms could absorb would become rare. Free atoms in a gas would no longer be able to interact adequately with their environment on their own. Consequently, they would spontaneously join up with other atoms, the resulting molecules being large enough to continue to absorb and emit the more readily available, longer-wavelength ambient photons.
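The shift towards longer-wavelength ambient photons as the temperature falls is captured quantitatively by Wien’s displacement law, λ_max = b/T, with b ≈ 2.898 × 10⁻³ m·K. The sketch below simply evaluates it. The temperatures in the usage loop are illustrative choices; 5772 K is roughly the Sun’s effective surface temperature.

```python
WIEN_B = 2.897771955e-3  # Wien's displacement constant, m K


def peak_wavelength_um(temperature_k: float) -> float:
    """Wavelength (micrometres) at which black body spectral radiance per unit wavelength peaks."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive")
    return WIEN_B / temperature_k * 1e6


if __name__ == "__main__":
    for t in (5772, 3000, 300, 30, 3):
        print(f"T = {t:>5} K  ->  peak at {peak_wavelength_um(t):10.2f} um")
```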

As explored in any undergraduate chemistry course, molecules have additional degrees of freedom (vibration and rotation) and can absorb longer wavelengths of radiation than their individual constituent atoms. Within these molecules, as per the Standard Model, the atoms would be constantly passing photons to and fro between each other, giving rise to an apparent bond. To achieve that necessary contact with the wider universe, each atom is now better off being part of some larger system (part of a molecule) than roaming around freely on its own, the new whole being better able to absorb energy from the ambient, lower-temperature environment than the elemental parts alone.

That’s all very simple: straightforward alternative explanations for the physics and chemistry learnt at high school and in first-degree university courses. Now, here’s the interesting bit that takes us into new territory.

In the biological world, the reason why a monkey, say, must stay part of its actively cooperating group is that it will only benefit from sharing food (Active Cooperation - direct reciprocation) if it is ever-present. None of its peers will save up some spare fruit and hand it over later. Every monkey must be there with all others in the group to benefit from the collective cooperation. So, they all stick together out of energetic necessity, roaming the landscape as a group hunting for stuff to eat.

Passive Cooperation does not require the continuous localised presence that Active Cooperation does. Indirect reciprocation can involve instantaneous exchanges of energy (such as trading) or delayed reciprocation, such as contributing to and later drawing on a store of food. But, for this game strategy to be successful for the entire population, there must be a focal point of collective interaction. A good example might be leafcutter ants, going out foraging and bringing leaf cuttings back to the nest, in exchange for which they get food (fungus grown on the previously mulched and decomposing leaves). Essentially, by being a source of energy (a place to exchange the efforts of their labours for some hard-earned food), the nest provides a centre of attraction for the whole society of ants, to which each ant must constantly return for its own survival. In the human world, our equivalents are marketplaces, foci of exchange interactions that lead to agglomeration economics and determine our human and economic geography, with towns and cities forming around such trading centres (Jacobs 1969, Krugman 1991, Glaeser 2011, Wengrow 2018, Kim and Conte 2024).

Remember that, in this new physics, apparent forces are the manifestation of the behaviour of atoms: the consequence, not the cause. So, translating the behaviour of Passive Cooperation at a population level to the physical sciences, we find ourselves with a simple explanation for the force of gravity! In this alternative way of seeing things, gravity arises from indirect reciprocation, not direct. It occurs within large populations and not between pairs of agents. Consequently, it ends up being expressed as a relatively feeble, nebulous force – far weaker than those which appear to hold atoms together and bind molecules (roaming, sharing groups). Furthermore, being a shared locus of exchange for the entire population, gravitational focal points become pegs on the physical map – fixed points in space with an inertia created by all the local participating population of particles.

Whereas, in the example of leafcutter ants, each creature needs to return to the heart of the nest to obtain food, we know from the human domain of cities that a city’s gravitational effect extends across its hinterland, through factors such as land value and the ability to participate in the economy, without requiring every person to travel continually to and from the town centre. The gravitational tension created by a major city therefore exerts a weak, indistinct influence on the behaviour of the whole surrounding human population, giving rise to the well-appreciated and documented mapping of human societies on the landscape, with identifiable towns and cities surrounded by more rural hinterlands. (And when energy, such as oil and gas, is added to this agent system, it naturally spreads out, giving rise to suburban sprawl (Hart 2015).) Transferring this analogy to the physical sciences, it is not surprising that gravity appears to distort space and time for all the matter in the vicinity of a gravitational well.

To date, we have interpreted the force of gravity as a weak universal force applying to all particles of matter: Newton’s original equation, subsequently recast by Einstein. Star formation is then attributed to this weak force gradually causing large clouds of hydrogen to collapse.
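For comparison with the alternative account that follows, the conventional criterion for when a gas cloud collapses under its own gravity is the Jeans mass, M_J = (5 k_B T / (G μ m_H))^(3/2) (3 / (4π ρ))^(1/2). A uniform, isothermal cloud more massive than M_J is expected to collapse. The sketch below evaluates it. The mean molecular weight μ = 2.33 and the number density used in the usage block are illustrative assumptions, roughly typical of cold molecular gas.

```python
import math

K_B = 1.380649e-23     # Boltzmann constant, J/K
G = 6.67430e-11        # gravitational constant, m^3 kg^-1 s^-2
M_H = 1.6735575e-27    # mass of a hydrogen atom, kg
M_SUN = 1.98847e30     # solar mass, kg


def jeans_mass(temperature_k: float, number_density_m3: float, mu: float = 2.33) -> float:
    """Jeans mass (kg) for a uniform, isothermal cloud.

    mu is the assumed mean molecular weight (2.33 is a common choice for
    molecular hydrogen mixed with helium); mass density rho = mu * m_H * n.
    """
    rho = mu * M_H * number_density_m3
    thermal = (5 * K_B * temperature_k / (G * mu * M_H)) ** 1.5
    return thermal * math.sqrt(3 / (4 * math.pi * rho))


if __name__ == "__main__":
    n = 1e10  # particles per m^3 (1e4 per cm^3), illustrative
    for t in (100, 30, 10):
        print(f"T = {t:>3} K: M_J = {jeans_mass(t, n) / M_SUN:.1f} solar masses")
```

Because M_J scales as T^(3/2), a colder cloud needs less mass to collapse in the conventional picture.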

This new physics sees things differently. A large cloud of hydrogen atoms/molecules, all at a similar temperature, would experience next to no internal gravitational force. Only when the inner volume of such a cloud becomes sufficiently cold (with the particles in the cloud centre starved of energy, denied any suitable incoming photons because these have all been absorbed by the outer layers), and the energy differential between the exterior and interior of the cloud has reached a critical point, do Passive Cooperation interactions take effect at scale. This causes a gravitational force to start manifesting, setting off a positive feedback process which ultimately causes implosion of the cloud and formation of a star. Thereafter, the star represents a source of energy for all surrounding matter within that emergent solar system and beyond. It is precisely the same evolutionary process as that which gives rise to thunderstorms and cities, and which originally caused the evolution of societal nests in the insect world. All this is explained without resort to any undetectable gravitons.

So, we have our first few explanations from this new physics:

▪ Passive and Active Competition give rise to Entropy. Entropy, as we understand it, would arise from competition between self-same energetic systems. In this construct, Boltzmann’s ‘S’ would essentially be a measure of, or proxy for, the level of competition between all identical agents within a system. It would thereby be a function of the system in question and not something that can be exported. The mathematics is entirely correct, but we now also have an explanation for the process behind it.

▪ Active Cooperation causes Molecular Bonding. Molecular bonding would arise from direct reciprocation between atoms. All other short-range forces likewise arise from direct reciprocation interactions, enabling participating energetic systems to maximise their interaction with the surrounding matter and energy environment.

▪ Passive Cooperation leads to Gravity. Gravity would arise from indirect reciprocation between atoms (and particles generally). This would explain why it is so different from, and so much weaker than, those other apparent forces of nature. Furthermore, this way of understanding gravity helps explain why it often appears as an opposite or counterforce, of sorts, to entropy – concentration versus dispersal of populations (and hence the cruciform depiction in Figure 6).

But, if there were no originating gravitational force, this seriously messes with our deterministic mindsets: everything we have been taught at school and university is suddenly not quite so … grounded. In this construct, the observation of gravity arises from the behaviour of umpteen particles, all wanting to be part of the action and to participate in exchange interactions so as to avoid becoming isolated, in just the same way that human beings gravitate towards cities as a means to generate an income to survive (where money is arguably just a virtual proxy for energy).

The sequence of game strategies (Forms of Interaction) described above has a natural order correlating with the available ambient energy. When you reduce the temperature of a system of gaseous self-same particles, the experienced competition for available energy rises. So, according to game theory, the system transitions from Passive Competition, through Active Competition and Active Cooperation, finally to Passive Cooperation. This is portrayed in Figures 7a and 7b.

Recognising the black body radiation distribution for different temperatures, the principle emerges that larger matter systems form out of component parts when the energy of the ambient photons drops (that is, when the temperature falls). Conversely, when the temperature rises again, exposing the atoms or molecules to increasingly higher-energy photons (with shorter and shorter wavelengths), the constituent parts (atoms, nucleons and electrons) find that it is no longer necessary to be part of a larger system, and spontaneously escape, now able to interact directly in the vacuum with those higher-energy ambient photons without being part of a larger whole. The splintering of the atoms or molecules arises not because of breaking forces, but from the component particles responding spontaneously to the new circumstances: the prior bond simply ceases to exist.
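The temperature dependence described here can be made concrete with Planck’s law. Writing x = hν/(k_B T), the number of black body photons per unit x is proportional to x²/(eˣ − 1), so the fraction of photons with energy above a threshold E is the tail of that integral from E/(k_B T), divided by the full integral, 2ζ(3). The sketch integrates numerically with the trapezium rule. The 10.2 eV threshold (the hydrogen Lyman-alpha transition energy) and the temperatures are illustrative choices, not values taken from this paper.

```python
import math

ZETA_3 = 1.2020569031595942  # Apery's constant, zeta(3)
K_B_EV = 8.617333262e-5      # Boltzmann constant, eV/K


def _photon_density(x: float) -> float:
    """Planck photon-number density per unit x = h*nu/(k_B*T), up to a constant: x^2/(e^x - 1)."""
    if x <= 0.0:
        return 0.0
    # Same value as x^2/(e^x - 1), written to avoid overflow at large x.
    return x * x * math.exp(-x) / -math.expm1(-x)


def fraction_above(threshold_ev: float, temperature_k: float,
                   width: float = 60.0, steps: int = 20_000) -> float:
    """Fraction of black body photons with energy above threshold_ev at temperature_k."""
    x0 = threshold_ev / (K_B_EV * temperature_k)
    h = width / steps
    # Trapezium rule over [x0, x0 + width]; the integrand is negligible beyond that.
    total = 0.5 * (_photon_density(x0) + _photon_density(x0 + width))
    total += sum(_photon_density(x0 + k * h) for k in range(1, steps))
    return total * h / (2 * ZETA_3)


if __name__ == "__main__":
    for t in (300, 3000, 5772, 10000):
        print(f"{t:>6} K: fraction above 10.2 eV = {fraction_above(10.2, t):.3e}")
```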

Within the above discussion, the words compete and cooperate may convey an unintended meaning, that of operating with intent. Whilst there are alternative words for cooperate, such as correlate and aggregate, there is no good alternative pair of opposite terms in the English language. And, in any event, we are happy to think of bacteria in terms of competing and cooperating, so why not something even smaller? Let’s, then, stick with compete and cooperate, recognising that the systems under consideration, the particles, are behaving entirely spontaneously. There is no need to construe intent, animation or consciousness. This terminology also makes it easier to appreciate that this new physics construct is equally applicable to the biological and social sciences.

Lowering the Temperature Further

So far, the focus has been on gaseous systems. What happens when the temperature is lowered further, and ambient (black body) radiation comprises mostly photons with longer and longer wavelengths? At face value, one might expect the formation of larger and larger atomic systems or molecules. But it’s not quite that simple, because the atoms of the Periodic Table are highly stable energetic systems, effective at absorbing and emitting the type of radiation found around the universe outside the interiors of stars. The heavier atomic nuclei are themselves forged in stellar environments. Once outside, they can’t simply merge and convert into larger and larger atoms, any more than two organisms could merge to create some monstrosity. And when molecules get too big, they don’t have the structural coherence to exist in a gaseous state.

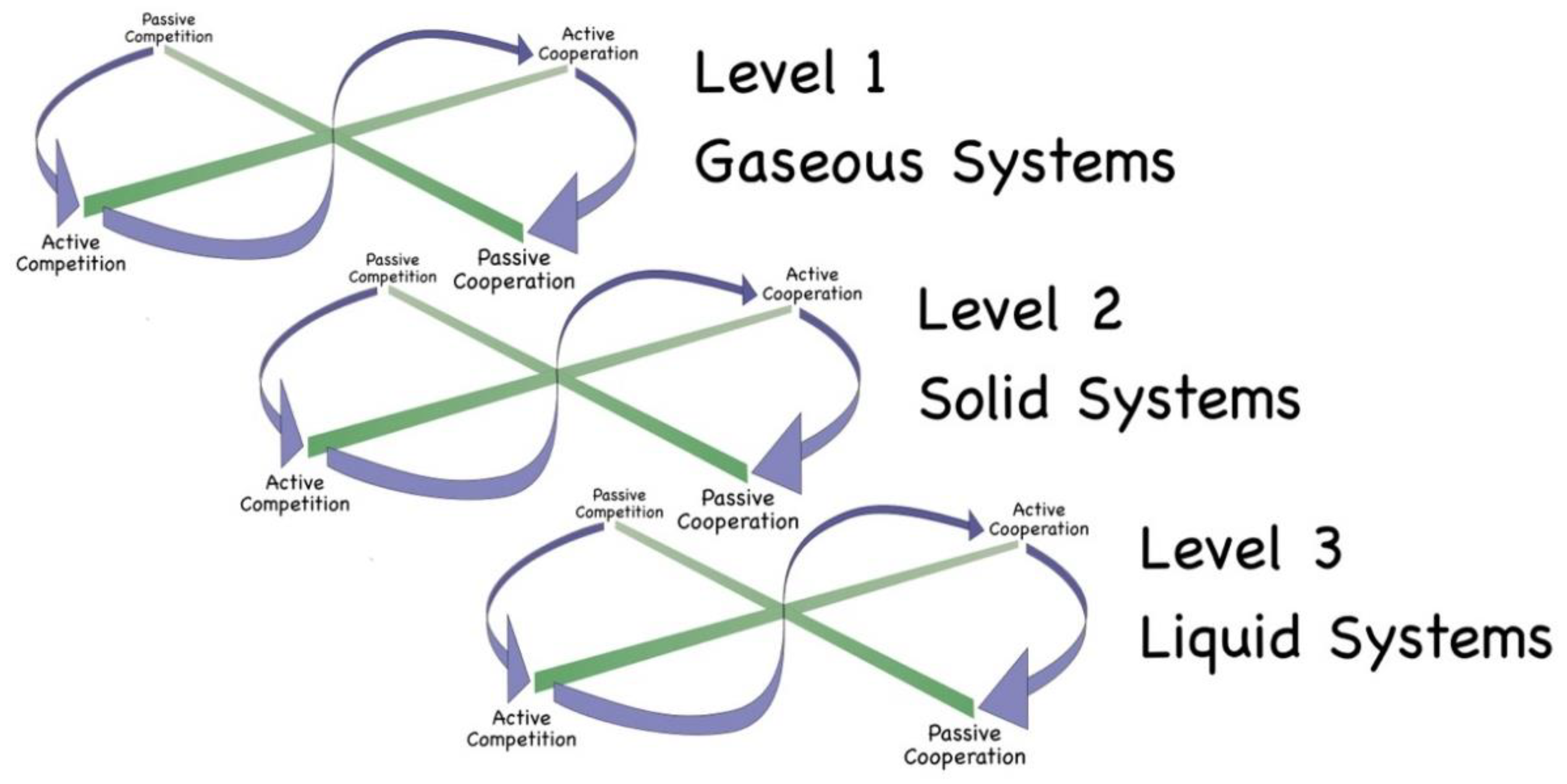

Being energetic systems, self-same atoms or molecules are seen as innately competitive. So, when the temperature drops, rather than forming larger atomic or molecular systems, they find interesting new ways to compete and cooperate for available energy. They create composite energetic systems, resulting in the variety of matter structures that we observe in the world around us.

In the biological sciences, this construct is expressed through living systems having a discrete set of needs, giving rise to a hierarchy of needs: Level 1 = survival, Level 2 = safety/security, Level 3 = health maintenance, and Level 4 = information needs. It is difficult to think of atoms or molecules as having needs as such. However, these concepts can be translated into the physical sciences as competition or cooperation between particles with respect to: Level 1 – frequency of acquisition of intermittent energy, Level 2 – flow of energy, Level 3 – total quantum of energy, and Level 4 – spatial information about available energy. These basic energetic requirements give rise to Ideal Type interactions (Level 1 to Level 1, Level 2 to Level 2, etc.) and manifest through the basic states of matter (gas, solid and liquid) and through dynamic fluid systems:

▪ Level 1 - gases occur when there is sufficient ambient radiation for atoms and molecules to operate as free agents, absorbing and emitting photons directly to and from the surrounding vacuum;

▪ Level 2 - solids occur when the wavelengths of most of the radiation are too long for individual free atoms/molecules to interact with; they can only achieve sufficient interaction by being part of some larger object, which is able to present a surface onto which those long-wavelength photons can be incident; the atoms within solids access energy through direct contact with other atoms, energy passing from one atom directly to its neighbours or being shared/exchanged across multiple atoms in the form of vibrations;

▪ Level 3 - liquids occur when the particles can, in part, obtain energy directly from ambient radiation, but need to top this up with intermittent direct-contact interactions with other atoms; hence liquids sit at the interface between gases and solids, combining interactions observed in both gases and solids; and

▪ Level 4 – dynamic fluid systems occur when there is a flow of energy through a liquid (or gaseous) system, leading the matter particles to keep responding spontaneously to an ever-changing energetic environment.

When the temperature is significantly lowered, aggregations of atoms/molecules must come together to create larger objects (still being energetic systems) for each atom to continue to interact with the wider universe. Within such larger systems, the particles compete or cooperate for energy in the same way as already discussed in a gaseous context; hence, for each type of competition/cooperation (Levels 1 to 4), the same sequence of Forms of Interaction is seen, running from Passive Competition through to Passive Cooperation (see Figure 8). By way of example, in some solid systems the atoms can be construed as internally competing, whereas in other solid structures the component atoms are cooperating. The internal competition or cooperation between atoms/molecules manifests in terms of macroscopic properties, such as insulation and conduction.

The Forms of Interaction are evident within matter systems (gas, solid, liquid) as four categories of material at each level, as set out in Table 1. These various systems or structures have been deduced by looking at how the same competitive and cooperative processes operate within the biological and social sciences. The fourth level of this framework corresponds to behaviours of matter systems that lie beyond what a purely atomistic and reductionist mindset of objects and forces can explain.

Through this new physics, the reason for the existence of different states of matter becomes readily explicable and, further, it becomes understandable why phase changes take place at such precise temperatures. Essentially, each atom is constantly responding to its energetic environment. When the local energetic environment changes, whether through the radiation or through the energy obtained by direct contact with neighbouring atoms, all particles spontaneously switch both their type of interaction (Levels 1 to 4) and whether it is optimal to correlate or not correlate, aggregate or disaggregate (compete or cooperate) with their neighbours.

If all the above were true, then constructs that we have invented through our deterministic physics to explain the existence of forces, such as the electromagnetic force, may become unnecessary. By way of example, the concept of electric charge can alternatively be explained by appreciating energetic systems as competing or cooperating. For instance, electrons compete against other electrons, thereby spontaneously dispersing. The notion that they have a negative charge and thereby repel each other becomes redundant. Within this new physics, attraction between electrons and protons is better understood as a symbiotic relationship, enabling each particle type to achieve greater interaction with its environment at low temperatures, than as positives and negatives attracting. In addition, the long-standing conundrum of magnetic monopoles can be resolved. Magnetism is the manifestation of direct reciprocation between particles. As it takes two to tango, true monopoles are an impossibility.

Wave-Particle Duality

So, what about that most intractable of quantum mysteries: wave-particle duality? This is a phenomenon which is indubitably observed yet remains inexplicable (Rovelli 2021). With this new physics, the answer becomes trivial.

Energetic systems come across as particles when they are competing as separate entities in this universe. They appear as waves when cooperating with other energetic systems, forming part of something larger. An analogous example, at a scale we can understand, would be a shoal of fish: the identity of each fish becomes subsumed within some larger whole, yet each fish remains a unique entity. For particles, that larger whole may be other photons or electrons, or perhaps the experimental apparatus itself. Whilst particles can lose themselves in the crowd and function like waves, they remain unique, innately competitive entities, which helps to explain concepts such as the Pauli Exclusion Principle: no two identical fermions, such as electrons, can occupy the same quantum state simultaneously within a quantum system.

Origins of Life

In the aftermath of the theoretical success, but practical failure, of Prigogine’s work on entropy export, there was a flurry of other activity during the 1980s and 1990s, looking to reconcile the physical and life sciences. The new mathematical fields of chaos theory, complexity theory and non-linear dynamics gave hope that an alternative explanation for life might be forthcoming. The expectation was that these new mathematical disciplines might show how life arose from complex, otherwise deterministic, systems (Mitchell 2009). This gave rise to the notion of emergence.

Despite much number crunching by computers since then, this line of thought has stalled. A satisfactory explanation has not been forthcoming. The elephant in the room, that discord between the physical and life sciences, remains unresolved. Even with the huge amounts of computing power now available to us, the truth is that we still haven’t quite cracked the question of how life emerges from atomistic, Boltzmannian physical systems.

But, perhaps, the honest answer is that, starting with an atomistic mindset, it will never be possible to explain life. Clearly the existing objects-forces approach has generated a host of clever explanations for the behaviour of relatively simple systems – gases, solids and liquids containing a few different component types – using constructs such as a universal tendency towards maximum entropy, counterposed by invisible forces holding things together, supported by ideas such as electric charge. However, this will always break down when seeking to explain why and how bacteria exist, replicate or can swim upstream towards higher sugar concentrations. Explanations for more complex organic systems, such as the growth of plants and the formation of human society, will always fall out of the reach of pure determinists.

Treat atoms and any other particles as energetic systems, which respond spontaneously to their environment, and everything falls into place. The actions of bacteria (or humans, even) follow the same patterns as those of atoms. Life emerges as a natural consequence of the behaviour of basic physical systems, component parts, atoms and increasingly larger molecules, competing and cooperating to give rise to ever-more complicated life forms. Darwin’s process of natural selection applies as much to simple and complex molecules as it does to whole life forms.

Life itself emerges on the knife edge between competition and cooperation – push too far one way and things evaporate into chaotic competitive gaseous freedom (Level 1 competitive systems), push too far the other and you end up with seemingly inanimate rigid solidity (Level 2 systems). Life naturally arises in the fluid universe (Level 3 and 4 systems), where there is scope for both structure and change, order and disorder. And the increasing complexity of organisms and ecosystems is seen as a direct consequence of the innate competitiveness of each and every energetic entity driving progressive evolution, at all scales.

Through their notion of Yin and Yang (setting aside some of the misogynistic aspects of these old ideas), the Ancient Chinese appreciated the (living) universe for what it really is – a perpetual dance between competition and cooperation, non-correlation and correlation, disaggregation and aggregation, dispersion and concentration – all just expressions of what we already see in the physical universe, albeit currently described otherwise.

Our Universe … But not as We Know It

Our 19th-century forefathers made it their business to deduce the universal laws and constants that make the universe tick, so to speak. When thinking about the beginning of things, such as the Big Bang, such determinists conceive of the rules governing the universe as pre-scripted. Before the universe began, those important constants, such as the gravitational constant, the mass and electric charge of an electron, and numerous others, were all pre-defined. It’s an engineer’s way of thinking about the universe. This has led a variety of leading thinkers to wonder why these constants are what they are: as they all note, if these were altered by even the merest fraction, the universe would be a very different place, and we would probably not be part of it (Barrow and Tipler 1986, Hawking 1988, Penrose 2004, Barrow 2002). This takes some authors down the road of thinking that there must have been purposive design to the universe to enable us to exist (McGrath 2009, Rees 2000).

If you are willing to let go of such a deterministic mindset, then you must also leave behind this idea about how things began. Rather, everything started with a completely blank sheet, just as life on Earth did. From there, the universe evolved. Everything that we now see arose because of paths being taken. None of the universal constants that we have measured existed at the start of the process, any more than our human DNA was anything more than a theoretical possibility when life on Earth first appeared.

By way of example, we don’t question that all life on Earth is based on certain chirality rules (chiral molecules exist in two mirror-image forms: most biological amino acids are left-handed and most biological sugars are right-handed). We appreciate that this arose because of forks in our evolutionary history. Thereafter, the incorrect chirality always caused things to go wrong within biological systems, so evolutionary processes selected for only those chemical reactions and processes which lead to the correct chirality. Humankind, of course, had to learn the hard way not to make chemicals with the wrong chirality (witness the thalidomide disaster). Turning to the physical sciences, the question is regularly posed in the popular science press: ‘where’s all the anti-matter?’ The answer is simple. The universe chose a path. Thereafter, putting anti-matter into the equation just made a mess of things, tending to destroy all structure. And so (using Darwinian jargon), particles and particle interactions that keep it out have been selected for.

In putting aside our addiction to determinism in the physical sciences, and instead seeing matter in terms of energetic systems evolving, there is scope at last to properly reconcile and integrate the physical, life and social sciences into a singular process-oriented understanding of our universe (Nicholson and Dupre 2018).

In a universe understood in terms of energetic systems at all scales competing and cooperating for available energy, the end of things is not some form of heat death. Rather the universe is a wonderfully complicated interconnected ecosystem, forever adapting. Objects within – atoms, life, ourselves, stars and galaxies – are born, survive (competing and cooperating as required) and die. Species of systems at all scales, from atoms to galaxies, gradually evolve. We inhabit a truly living universe; nothing is pre-determined; nothing therein is completely inanimate; and we (or something akin to us) are a very natural and inevitable part of it.

Conclusions

We return at the end to the question of entropy and Boltzmann’s statistical mechanics. His mathematical construct has been incredibly useful for the last 120 years, helping us to solve all sorts of challenges. But Boltzmann’s mathematics is actually a statistical model for a population of competing agents, predicting that all agents in a system are driven by competition to differentiate and end up evenly dispersed across all the available microstates. Maximum entropy thereby represents the outcome of countless repetitive zero-sum competitive games, leading to complete spatial dispersal of agents and even distribution of energy.
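The statement that maximum entropy corresponds to agents being evenly dispersed across all the available microstates is the standard result that the Gibbs entropy is maximised by a uniform distribution. A short derivation with a Lagrange multiplier λ, for an isolated system with W accessible microstates occupied with probabilities p_i:

```latex
\begin{aligned}
S &= -k_B \sum_{i=1}^{W} p_i \ln p_i, \qquad \text{subject to } \sum_{i=1}^{W} p_i = 1,\\
\mathcal{L} &= -k_B \sum_{i=1}^{W} p_i \ln p_i - \lambda \Big( \sum_{i=1}^{W} p_i - 1 \Big),\\
\frac{\partial \mathcal{L}}{\partial p_i} &= -k_B \left( \ln p_i + 1 \right) - \lambda = 0
\;\Rightarrow\; p_i = e^{-1-\lambda/k_B} \ \text{(the same for every } i\text{)},\\
p_i &= \frac{1}{W} \;\Rightarrow\; S_{\max} = -k_B \sum_{i=1}^{W} \frac{1}{W} \ln \frac{1}{W} = k_B \ln W.
\end{aligned}
```

Because S is a concave function of the p_i, this stationary point is the global maximum, and it recovers Boltzmann’s S = k_B ln W. The reading of this result as the outcome of competition between agents is the interpretation proposed in this paper.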

The mathematics is genius. It is a wholly correct approach to modelling a population of competing agents and consequently provides an extremely good fit to measured observation for isolated (as far as is possible) gaseous systems. But could it be, despite all this (and it seems ridiculous to write this), that we have all been caught up in the biggest questionable-cause logical fallacy since the Enlightenment? Boltzmann’s concept of entropy correlates with what we observe. It is not the cause. The cause is so much simpler.

Now, if entropy as currently construed is a correlation, not a cause, then many everyday phenomena would be far easier to explain to children and undergraduate students than by relying on concepts in statistical mechanics such as macrostates and microstates. Rather, it turns thermodynamics into fun: “Wonder why your tea cools?” “It’s because those cheeky air molecules literally steal the heat away.” And, in one fell swoop, in the spirit of Copernicus’ heliocentric theory of five hundred years ago, all that was previously complicated becomes simple. And all those other constructs, such as electric charge, which we have collectively invented, suddenly become redundant.

Clearly, if this new physics has any validity, then other questions come to the fore, such as: “how frequent must the interactions of a lone particle be for it not to become isolated?” Furthermore, if gravity isn’t constant, then our whole comprehension of the cosmos will be thrown into disarray. Notwithstanding such challenges and disruptions to existing ways of thinking, the potential benefit gained from unifying the sciences would be worth it … and without resort to any hidden dimensions, undetectable particles or dark energy.

Rather, this alternative approach to physics provides scope for many new insights into how our universe works. Within the social sciences, the collective interactions of agents, producing macroscopic social processes, can be shown to give form to virtual phenomena such as money, laws, religions and the multiverse (Hart 2024). When applied to the physical sciences, the same construct has the potential to provide insight into currently inexplicable processes, such as why thunderstorms form, how snowflakes grow, why vortices arise, and much more besides. By better understanding the underlying processes at work in physics, we could improve our ability to manipulate the material world yet further.

If you are willing to think the unthinkable and treat particles as being not quite so inanimate – rather as energetic systems responding spontaneously to their environment – then an alternative non-deterministic construct for understanding our universe is possible. In this alternative construct, all the forces that we perceive, and thence all the structures that we experience in space or time, are manifestations of underlying processes. It may be that the only way to unify all the forces of nature into a theory of everything is to recognise that there are no fundamental forces after all.

THE END

Non-financial Interests

None

Declarations of interest

None

Author contributions

Julian Hart is the sole author and protagonist of this work

Ethics Approval Statement

Not applicable

Open Research Statement/Data Availability Statement

This is a theory paper. No supporting data or modelling is provided.

Conflict of interest

None

References

- Aristov, V. V., Buchelnikov, A. S., & Nechipurenko, Y. D. (2022). “The Use of the Statistical Entropy in Some New Approaches for the Description of Biosystems”. Entropy, 24(2), 172.

- Ashtekar, A., & Lewandowski, J. (2004). “Background Independent Quantum Gravity: A Status Report”. Classical and Quantum Gravity, 21, R53-R152.

- Axelrod, R. (1984). The Evolution of Cooperation. Basic Books.

- Barrow, J. D. (2002). The Constants of Nature: The Numbers That Encode the Deepest Secrets of the Universe. Pantheon Books.

- Barrow, J. D., & Tipler, F. J. (1986). The Anthropic Cosmological Principle. Oxford University Press.

- Bertalanffy, L. (1972). General System Theory: Foundations, Development, Applications. Allen Lane.

- Bohm, D. (1980). Wholeness and the Implicate Order. Routledge.

- Callen, H. B. (1985). Thermodynamics and an Introduction to Thermostatistics (2nd ed.). Wiley.

- Campbell, J. (1999). Rutherford: Scientist Supreme. AAS Publications.

- Camprubí, L. (2022). “Materialism and the History of Science”. In G. E. Romero, J. Pérez-Jara, & L. Camprubí (Eds.), Contemporary Materialism: Its Ontology and Epistemology (Synthese Library, Vol. 447). Springer.

- Capra, F. (1996). The Web of Life: A New Scientific Understanding of Living Systems. Anchor Books.

- Carroll, S. (2010). From Eternity to Here: The Quest for the Ultimate Theory of Time. Dutton.

- Chakrabarti, C. G., & Ghosh, K. (2010). “Maximum-entropy principle: ecological organization and evolution”. Journal of Biological Physics, 36(2), 175-183. https://doi.org/10.1007/s10867-009-9170-z

- Cheng, L., Zhou, L., Bao, W., & Mahtab, N. (2020). “Effect of conspecific neighbors on the foraging activity levels of the wintering Oriental Storks (Ciconia boyciana): Benefits of social information”. Ecology and Evolution, 10(19), 10384-10394.

- Clobert, J., Baguette, M., Benton, T. G., & Bullock, J. M. (Eds.). (2012). Dispersal Ecology and Evolution. Oxford University Press.

- Close, F. (2011). The Infinity Puzzle: Quantum Field Theory and the Hunt for an Orderly Universe. Basic Books.

- Cohen-Tannoudji, C., Diu, B., & Laloë, F. (1977). Quantum Mechanics (Vol. 1). Wiley-VCH.

- Coughlan, G. D., & Dodd, J. E. (1991). The Ideas of Particle Physics: An Introduction for Scientists (2nd ed.). Cambridge University Press.

- Darwin, C. (1859). On the Origin of Species by Means of Natural Selection, or the Preservation of Favoured Races in the Struggle for Life. John Murray.

- Davies, P. (1994). The Last Three Minutes: Conjectures About the Ultimate Fate of the Universe. Basic Books.

- Davies, P. (2019). The Demon in the Machine: How Hidden Webs of Information Are Solving the Mystery of Life. Allen Lane.

- de Waal, A., & Kluwick, U. (2022). “Victorian materialisms: approaching nineteenth-century matter”. European Journal of English Studies, 26(1), 1-13.

- Einstein, A. (1915). “Die Feldgleichungen der Gravitation”. Preussische Akademie der Wissenschaften, Sitzungsberichte (Part 2), 844-847.

- Eisberg, R., & Resnick, R. (1985). Quantum Physics of Atoms, Molecules, Solids, Nuclei, and Particles (2nd ed.). Wiley.

- Endres, R. G. (2017). “Entropy production selects nonequilibrium states in multistable systems”. Scientific Reports, 7(1), 14437.

- Glaeser, E. (2011). Triumph of the City: How Our Greatest Invention Makes Us Richer, Smarter, Greener, Healthier, and Happier. Penguin Press.

- Glashow, S. L. (1980). “Towards a Unified Theory: Threads in a Tapestry”. Reviews of Modern Physics, 52(3), 539-541.

- Green, M. B., Schwarz, J. H., & Witten, E. (2012). Superstring Theory, Volume 1: Introduction. Cambridge University Press.

- Greene, B. (2003). The Elegant Universe: Superstrings, Hidden Dimensions, and the Quest for the Ultimate Theory. W. W. Norton & Company.

- Griffiths, D. (2008). Introduction to Elementary Particles (2nd revised ed.). Wiley-VCH.

- Griffiths, D. J., & Schroeter, D. F. (2018). Introduction to Quantum Mechanics (3rd ed.). Cambridge University Press.

- Haroche, S., & Raimond, J. M. (2006). Exploring the Quantum: Atoms, Cavities, and Photons. Oxford University Press.

- Hart, J. (2015). Towns and Cities: Function in Form: Urban Structures, Economics and Society (1st ed.). Routledge.

- Hart, J. (2024). Our Universe but not as we know it. Kindle.

- Hawking, S. (1988). A Brief History of Time. Bantam Books.

- Hawking, S., & Mlodinow, L. (2011). The Grand Design. Bantam Books.

- Heidegger, M. (1962). Being and Time (J. Macquarrie & E. Robinson, Trans.). Harper & Row.

- Heisenberg, W. (1927). “Über den anschaulichen Inhalt der quantentheoretischen Kinematik und Mechanik”. Zeitschrift für Physik, 43(3), 172-198.

- Houghton, W. E. (1977). The Victorian Frame of Mind, 1830-1870 (13th ed.). Yale University Press.

- Isaacson, W. (2007). Einstein: His Life and Universe. Simon & Schuster.

- Jacobs, J. (1969). The Economy of Cities. Vintage Books.

- Jaynes, E. T. (1980). “The Minimum Entropy Production Principle”. Annual Review of Physical Chemistry, 31(1), 579-601.

- Jørgensen, S. E., & Svirezhev, Y. M. (2004). Towards a Thermodynamic Theory for Ecological Systems. Elsevier.

- Kaku, M., & Trainer, J. (1987). Beyond Einstein: The Cosmic Quest for the Theory of the Universe. Bantam Books.

- Kane, G. L. (2001). Supersymmetry: Unveiling the Ultimate Laws of Nature (reprint ed.). Basic Books.

- Kelvin, W. T. (1867). “On Vortex Atoms”. Philosophical Magazine, 34, 15-24.

- Kim, J., Conte, M., Oh, Y., & Park, J. (2024). “From barter to market: an Agent-Based Model of Prehistoric Market Development”. Journal of Archaeological Method and Theory.

- Kittel, C., & Kroemer, H. (1980). Thermal Physics (2nd ed.). W. H. Freeman.

- Kleidon, A. (2009). “Nonequilibrium thermodynamics and maximum entropy production in the Earth system”. Naturwissenschaften, 96, 653-677.

- Kondepudi, D., Petrosky, T., & Pojman, J. A. (2017). “Dissipative structures and irreversibility in nature: Celebrating 100th birth anniversary of Ilya Prigogine (1917-2003)”. Chaos, 27(10).

- Kondepudi, D., & Prigogine, I. (1998). Modern Thermodynamics: From Heat Engines to Dissipative Structures. Wiley.

- Krugman, P. (1991). Geography and Trade. MIT Press.

- Lancaster, T., & Blundell, S. J. (2014). Quantum Field Theory for the Gifted Amateur. Oxford University Press.

- Mahon, B. (2003). The Man Who Changed Everything: The Life of James Clerk Maxwell. Wiley.

- Marshall, C. (2010). The Metaphysical Society (1869-1880): Intellectual Life in Mid-Victorian England. Oxford University Press.

- Martin, S. P. (1997). A Supersymmetry Primer. arXiv:hep-ph/9709356.

- Maxwell, J. C. (1865). “A Dynamical Theory of the Electromagnetic Field”. Philosophical Transactions of the Royal Society of London, 155, 459-512.

- Maynard Smith, J. (1972). “Game Theory and the Evolution of Fighting”. In J. Maynard Smith (Ed.), On Evolution (pp. 55-77). Edinburgh University Press.

- Maynard Smith, J. (1974). “The Theory of Games and the Evolution of Animal Conflicts”. Journal of Theoretical Biology, 47(1), 209-221.

- Maynard Smith, J. (1982). Evolution and the Theory of Games. Cambridge University Press.

- McGrath, A. E. (2009). The Fine-Tuned Universe: The Quest for God in Science and Theology. Westminster John Knox Press.

- Mitchell, M. (2009). Complexity: A Guided Tour. Oxford University Press.

- Newton, I. (1687). Mathematical Principles of Natural Philosophy (commonly known as Principia). Cambridge University Press.

- Nicholson, D. J., & Dupré, J. (2018). Everything Flows: Towards a Processual Philosophy of Biology. Oxford University Press.

- Nowak, M. A. (2006). Evolutionary Dynamics: Exploring the Equations of Life. Belknap Press.

- Nowak, M. A. (2006). “Five Rules for the Evolution of Cooperation”. Science, 314(5805), 1560-1563.

- Nowak, M. A. (2012). “Evolving cooperation”. Journal of Theoretical Biology, 299, 1-8.

- Padmanabhan, T. (2010). “Thermodynamical Aspects of Gravity: New Insights”. Reports on Progress in Physics, 73, 046901.

- Pathria, R. K., & Beale, P. D. (2011). Statistical Mechanics (3rd ed.). Academic Press.

- Penrose, R. (2004). The Road to Reality: A Complete Guide to the Laws of the Universe. Alfred A. Knopf.

- Perkins, D. H. (2000). Introduction to High Energy Physics. Cambridge University Press.

- Peskin, M. E., & Schroeder, D. V. (1995). An Introduction to Quantum Field Theory (1st ed.). CRC Press.

- Popovic, M. E. (2018). “Research in entropy wonterland: A review of the entropy concept”. Thermal Science, 22(2), 1163-1178.

- Price, H. (1996). Time’s Arrow and Archimedes’ Point: New Directions for the Physics of Time. Oxford University Press.

- Prigogine, I. (1980). From Being to Becoming: Time and Complexity in the Physical Sciences. W. H. Freeman.

- Prigogine, I., & Stengers, I. (1984). Order Out of Chaos: Man’s New Dialogue with Nature. Bantam Books.

- Quigg, C. (2013). Gauge Theories of the Strong, Weak, and Electromagnetic Interactions (2nd ed.). Princeton University Press.

- Rees, M. (2000). Just Six Numbers: The Deep Forces that Shape the Universe. Basic Books.

- Reif, F. (1965). Fundamentals of Statistical and Thermal Physics. McGraw-Hill.

- Roach, T. N. F. (2020). “Use and Abuse of Entropy in Biology: A Case for Caliber”. Entropy, 22(12), 1335. https://doi.org/10.3390/e22121335

- Ross, G. G. (1984). Grand Unified Theories (Frontiers in Physics). Benjamin-Cummings.

- Rovelli, C. (2004). Quantum Gravity. Cambridge University Press.

- Rovelli, C. (2021). Helgoland: Making Sense of the Quantum Revolution (E. Segre & S. Carnell, Trans.). Riverhead Books.

- Schneider, E. D., & Sagan, D. (2005). Into the Cool: Energy Flow, Thermodynamics, and Life. University of Chicago Press.

- Schrödinger, E. (1944). What Is Life? Cambridge University Press.

- Shore, S. N. (2008). Forces in Physics: A Historical Perspective. Bloomsbury Academic.

- Smolin, L. (2000). Three Roads to Quantum Gravity. Weidenfeld & Nicolson.

- Smolin, L. (2006). The Trouble with Physics: The Rise of String Theory, the Fall of a Science, and What Comes Next. Houghton Mifflin Harcourt.

- Susskind, L. (1995). “The World as a Hologram”. Journal of Mathematical Physics, 36(11), 6377-6396.

- Torretti, R. (1999). The Philosophy of Physics (The Evolution of Modern Philosophy). Cambridge University Press.

- Verlinde, E. (2011). “On the origin of gravity and the laws of Newton”. Journal of High Energy Physics, 2011, 29.

- Volk, T., & Pauluis, O. (2010). “It is not the entropy you produce, rather, how you produce it”. Philosophical Transactions of the Royal Society B: Biological Sciences, 365(1545), 1317-1322.

- Volodyaev, I. (2005). “Bridging the gap between physics and biology”. Rivista di Biologia, 98(2), 237-264.

- Ward, P., Zahavi, A., & Feare, C. (2002). “The role of conspecifics in the acquisition of new feeding sites by foraging great skuas Catharacta skua”. Behavioral Ecology and Sociobiology, 52(5), 289-293.

- Weinberg, S. (1992). Dreams of a Final Theory. Pantheon Books.

- Weinberg, S. (1995). The Quantum Theory of Fields: Volume 1, Foundations. Cambridge University Press.

- Wengrow, D. (2018). What Makes Civilisation? Oxford University Press.

- Westfall, R. S. (1980). Never at Rest: A Biography of Isaac Newton. Cambridge University Press.

- Wheeler, J. A., & Taylor, E. F. (2000). Exploring Black Holes: Introduction to General Relativity (1st ed.). Pearson.

- Whitaker, A. (2015). The New Quantum Age: From Bell’s Theorem to Quantum Computation and Teleportation (reprint ed.). Oxford University Press.

- Witten, E. (1995). “String Theory Dynamics in Various Dimensions”. Nuclear Physics B, 443(1), 85-126.

- Zee, A. (2010). Quantum Field Theory in a Nutshell. Princeton University Press.

- Zimmer, C. (2021). Life’s Edge: The Search for What It Means to Be Alive. Dutton.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).