Submitted: 02 October 2024

Posted: 02 October 2024


Abstract

Keywords:

1. Introduction

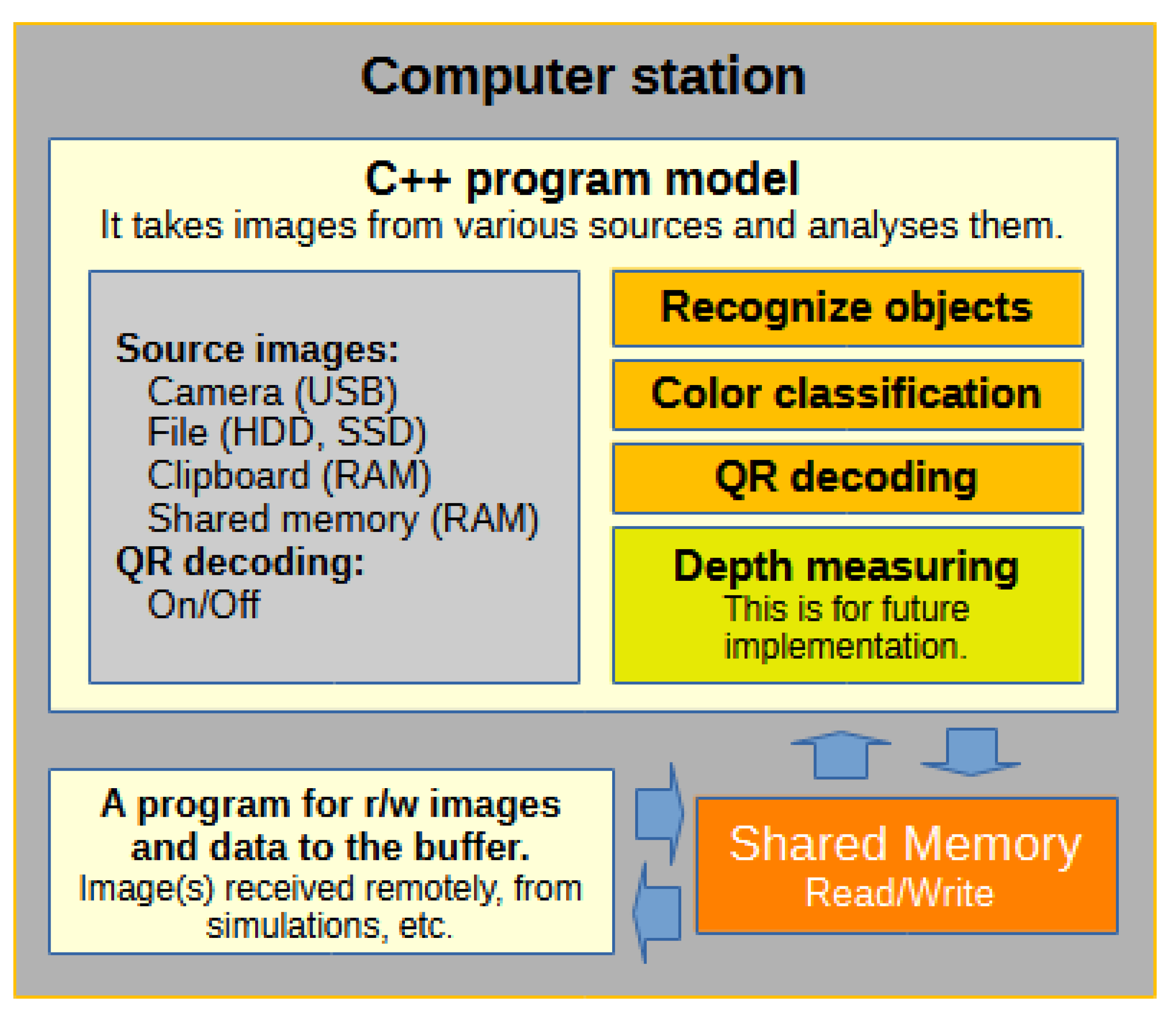

2. Program Model

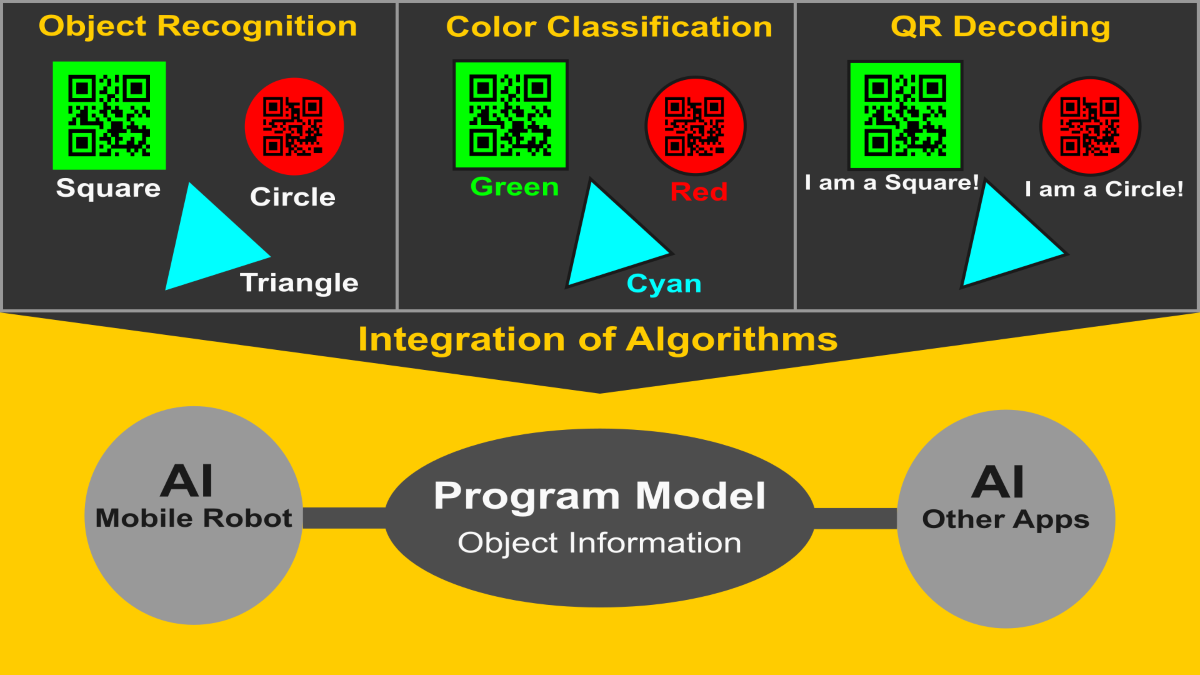

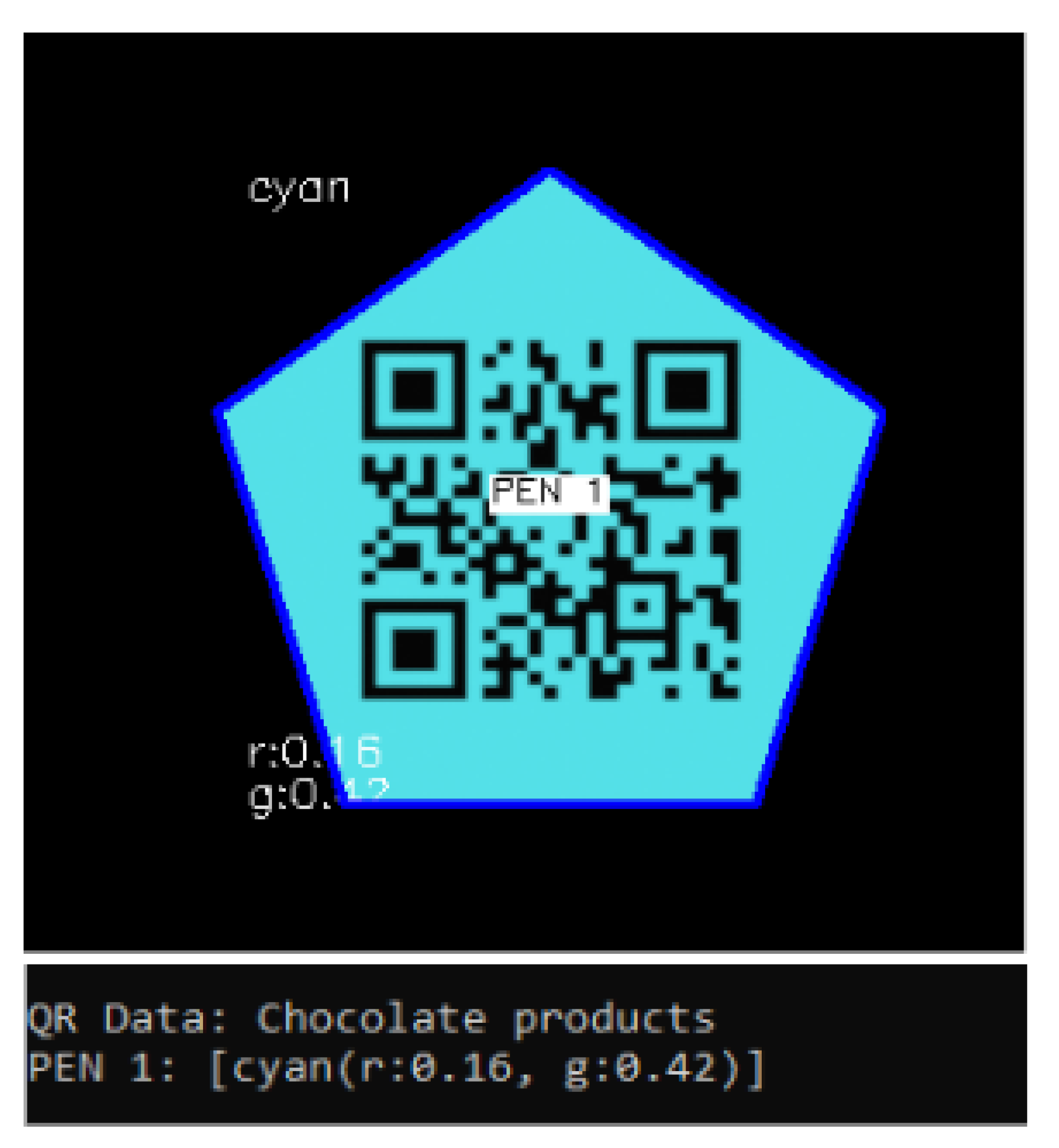

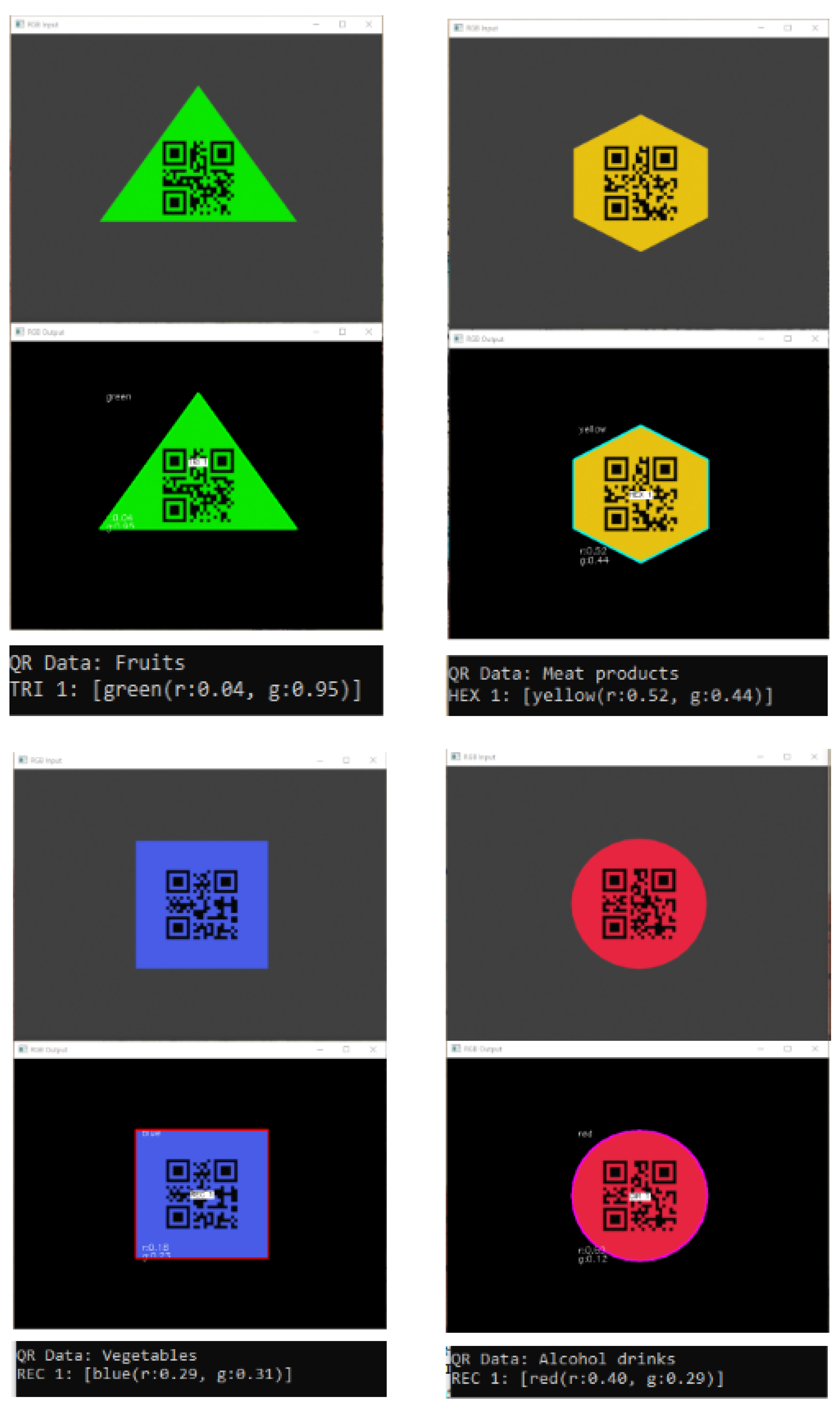

- Object recognition: identification and localization of various types of objects within the input images.

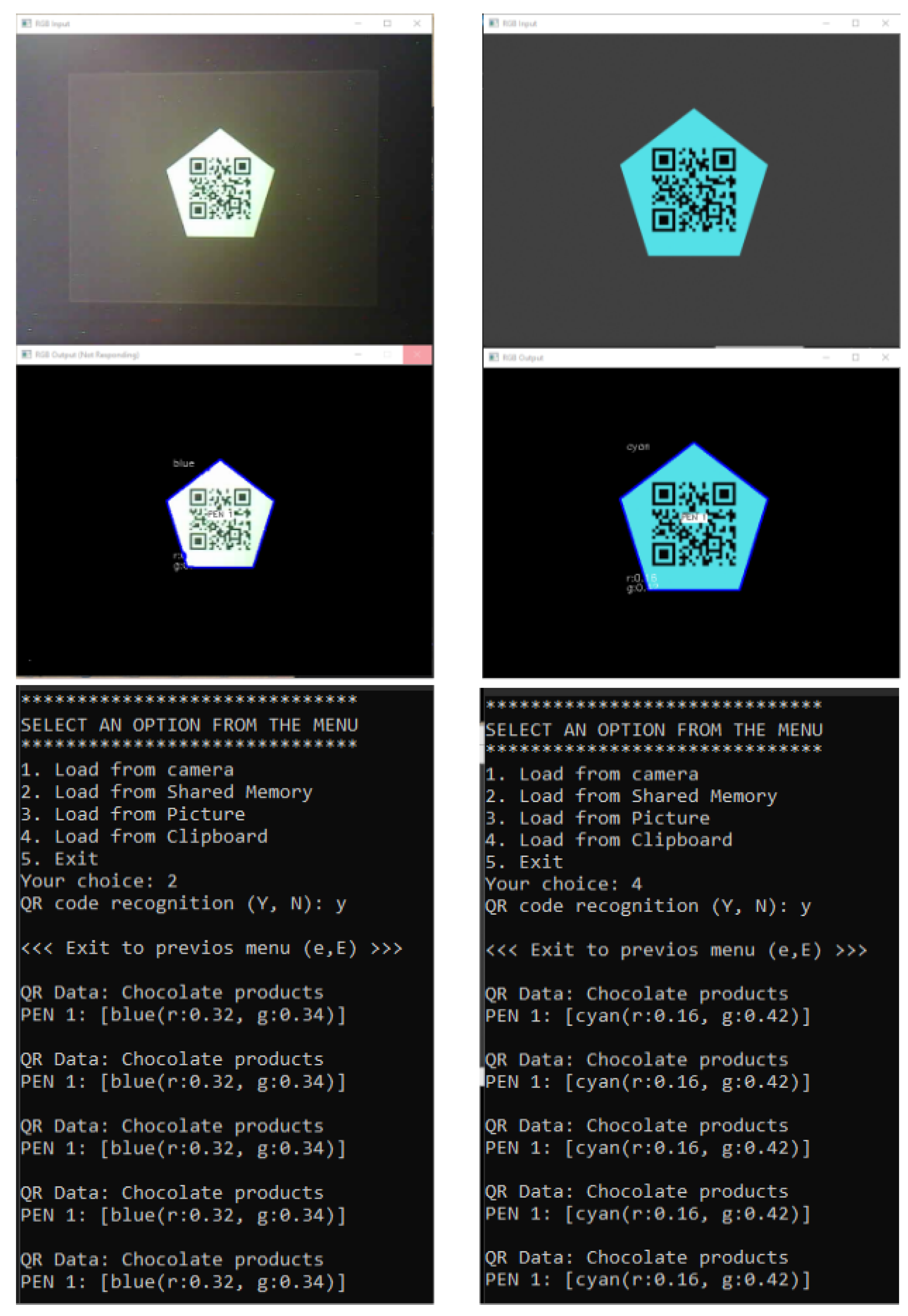

- Color classification of objects: color analysis of the recognized objects to assign each of them to one of six color categories.

- QR decoding: extracting information from QR codes located within the recognized objects.

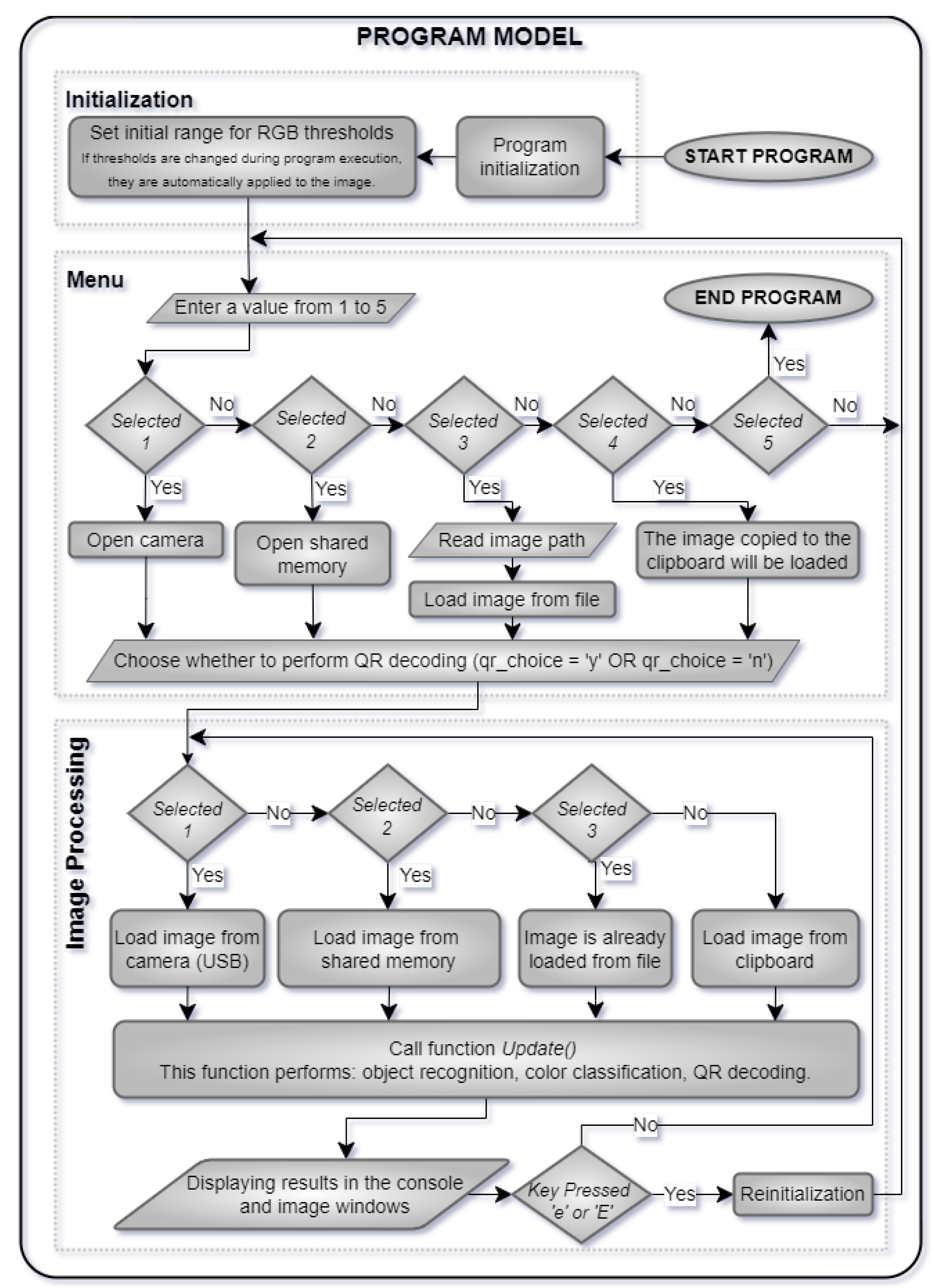

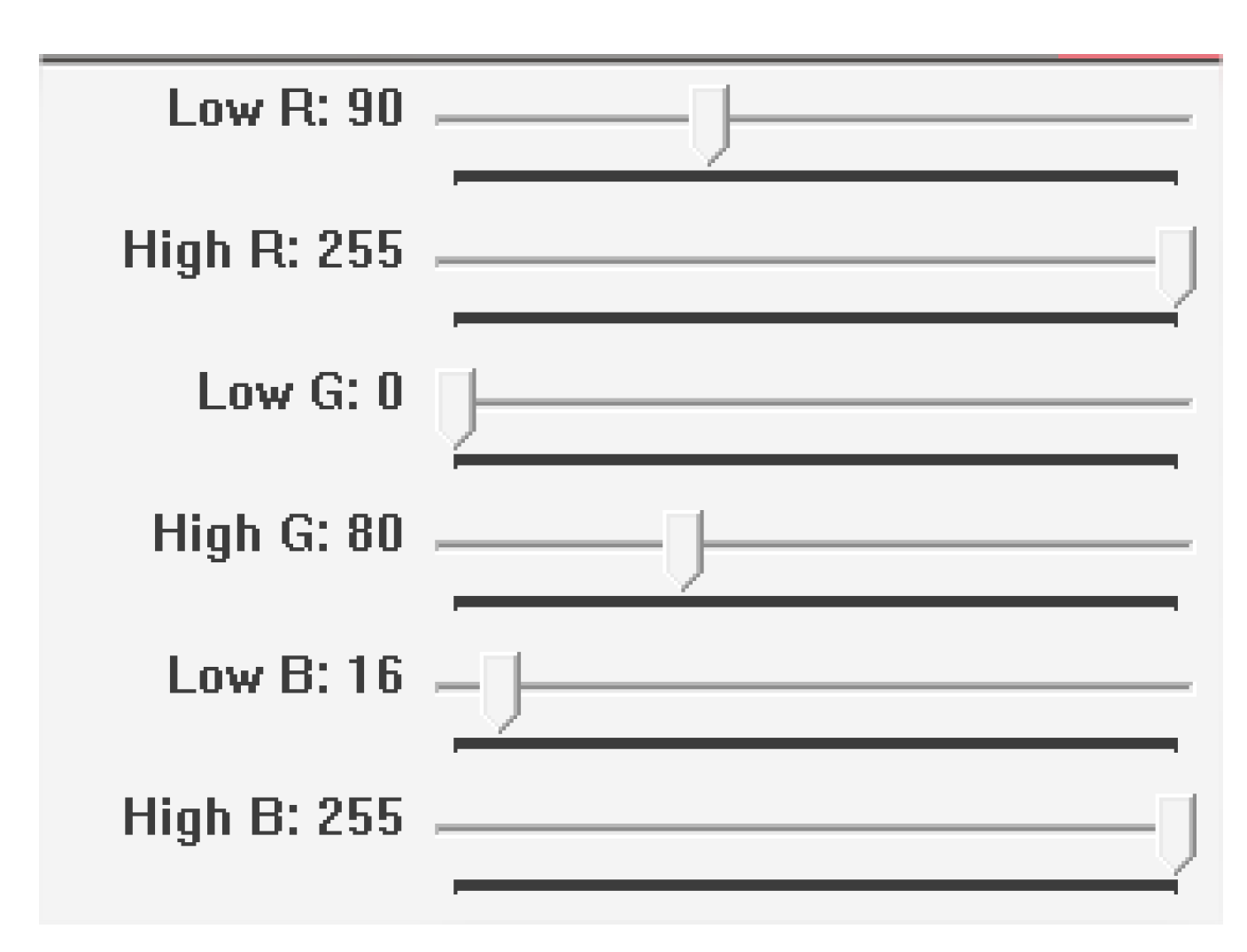

- Initialization: Creation of variables, windows for displaying images, and a trackbar window for setting the RGB thresholds used to filter the input image, followed by camera initialization.

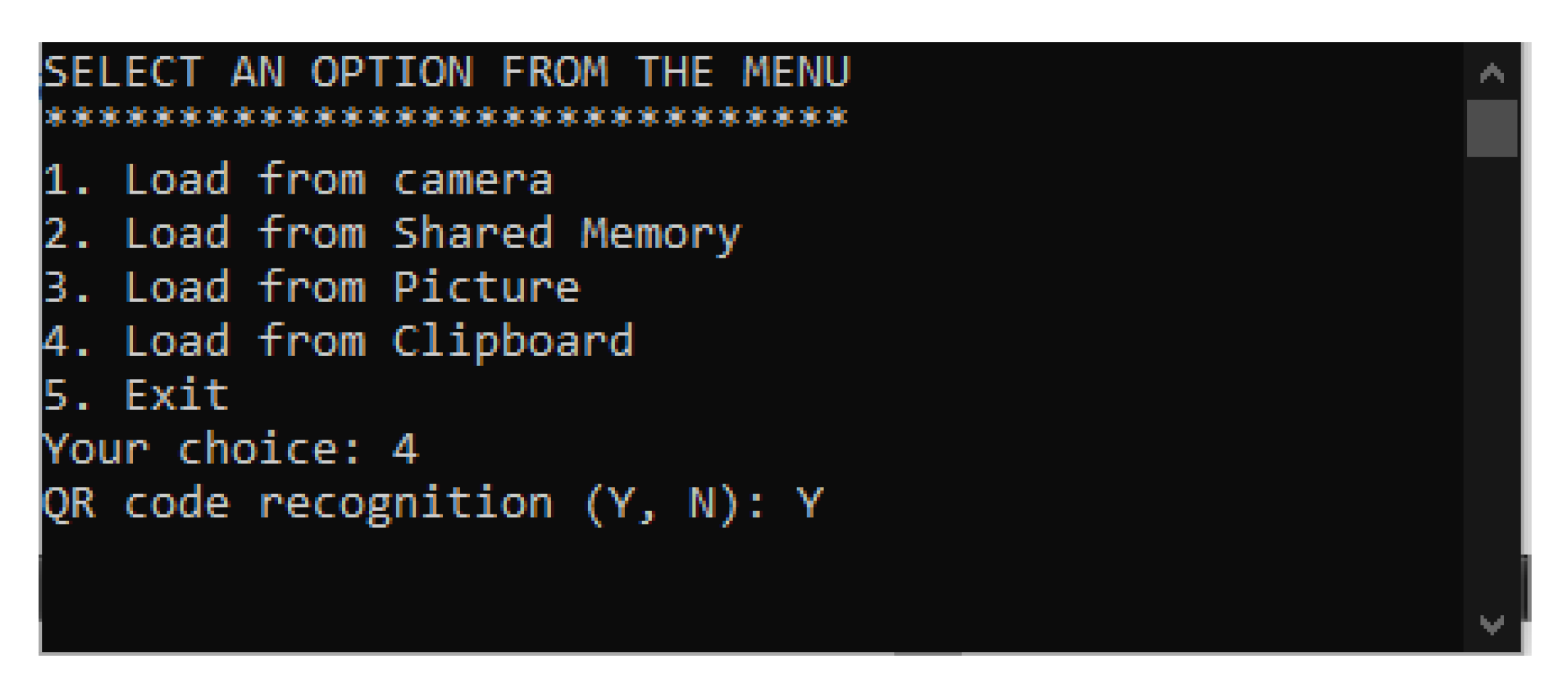

- Selection menu: The program displays a menu from which the user selects one of several alternative image sources or exits the program.

- Cyclic image processing: The loop continuously updates the images, which are processed using OpenCV methods: setting a color threshold, finding contours, approximating the detected contours, and determining the objects present in the image. A dedicated function then performs color classification of the recognized objects, and each recognized object is labeled with an appropriate name and number. After recognition and color classification, the program can also decode QR codes located within the recognized objects. The program repeats this cycle until the user presses the "e/E" key.

- End of program: The program terminates when the user chooses to exit by pressing the "e/E" key.

- Capturing a frame (image) from a source selected from the menu;

- Extracting information from the frame and recognizing objects;

- Color classification of the recognized objects;

- Assigning identifiers to the recognized objects;

- Decoding QR codes within the objects, if any;

- Displaying results in windows.
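The processing cycle listed above can be sketched as a loop that runs a fixed sequence of steps on each captured frame until "e" or "E" is pressed. This is a minimal, hypothetical sketch and not the authors' code. The names `run_cycle`, `grab_frame`, `steps` and `read_key` are illustrative assumptions. In an OpenCV program, `read_key` would typically wrap something like `chr(cv2.waitKey(1) & 0xFF)`.

```python
from typing import Callable, Dict, List, Optional

State = Dict[str, object]  # shared data passed between processing steps


def run_cycle(
    grab_frame: Callable[[], Optional[object]],
    steps: List[Callable[[State], State]],
    read_key: Callable[[], str],
) -> int:
    """Repeat capture -> processing until the user presses 'e' or 'E'.

    grab_frame returns the next frame from the selected source, or None if no
    frame is available; each step receives and returns the shared state dict
    (e.g. recognize, classify colors, assign IDs, decode QR, display);
    read_key returns the last pressed key ('' if none).
    Returns the number of frames that went through the full pipeline.
    """
    processed = 0
    while True:
        frame = grab_frame()
        if frame is not None:
            state: State = {"frame": frame}
            for step in steps:  # run the processing steps in order
                state = step(state)
            processed += 1
        if read_key() in ("e", "E"):  # exit key checked once per iteration
            return processed
```

Keeping the steps as a list makes it easy to enable or disable QR decoding, for example by appending the decoding step only when the user answers "Y".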

3. Object Recognition

3.1. Presentation of the Algorithm
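The contour-approximation step mentioned in Section 2 is commonly done in OpenCV with `cv2.approxPolyDP`, which implements the Douglas-Peucker algorithm. A common choice for its tolerance is a small fraction of the contour perimeter, such as `0.02 * cv2.arcLength(contour, True)`. The pure-Python sketch below illustrates the same idea on a closed contour. Naming objects by their vertex count (`shape_name`) is an assumption for illustration only. It is not necessarily how the program labels its objects.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _dist_to_line(p: Point, a: Point, b: Point) -> float:
    """Perpendicular distance from p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    return abs(dy * (px - ax) - dx * (py - ay)) / math.hypot(dx, dy)


def rdp(points: Sequence[Point], epsilon: float) -> List[Point]:
    """Ramer-Douglas-Peucker simplification of an open polyline."""
    if len(points) < 3:
        return list(points)
    a, b = points[0], points[-1]
    idx, dmax = 0, -1.0
    for i in range(1, len(points) - 1):
        d = _dist_to_line(points[i], a, b)
        if d > dmax:
            idx, dmax = i, d
    if dmax <= epsilon:
        return [a, b]  # all intermediate points are close enough to the chord
    left = rdp(points[: idx + 1], epsilon)
    right = rdp(points[idx:], epsilon)
    return left[:-1] + right  # the split point appears only once


def approx_closed(contour: Sequence[Point], epsilon: float) -> List[Point]:
    """Simplify a closed contour by splitting it at the point farthest from contour[0]."""
    start = contour[0]
    far = max(
        range(len(contour)),
        key=lambda i: math.hypot(contour[i][0] - start[0], contour[i][1] - start[1]),
    )
    first = rdp(list(contour[: far + 1]), epsilon)
    second = rdp(list(contour[far:]) + [start], epsilon)
    return first[:-1] + second[:-1]  # drop duplicated junction points


def shape_name(vertices: Sequence[Point]) -> str:
    """Hypothetical naming rule based on the number of polygon vertices."""
    names = {3: "triangle", 4: "quadrilateral", 5: "pentagon", 6: "hexagon"}
    n = len(vertices)
    return names.get(n, "circle" if n > 6 else "unknown")
```

For example, an eight-point contour traced along the edges of a 10 x 10 square reduces to its four corners with a tolerance of 1.0.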

3.2. Specifics of the Algorithm

- The quality of the images: Images from the sensors often contain noise caused by, for example, a low-quality sensor, a poor WiFi signal, or inadequate lighting. This noise makes real-time image processing more difficult. The problem can largely be mitigated by using high-quality sensors (cameras) with adequate resolution and frame rate, appropriate lenses and focal lengths, and good lighting. To improve image quality at the pixel level, various filters can be applied, such as the Gaussian filter [10], deep local parametric filters based on neural networks [11], and others.

- Visibility of objects. The visibility and size of the objects captured in the images depend on many factors: the technical equipment, the distance of the objects from the camera, shading, light reflections, etc. The lighting and camera must therefore be positioned so that the objects in the image can be analyzed reliably [12,13]. For static robots, the lighting and cameras can be selected and placed at suitable positions and distances relative to the working objects in the environment. For mobile robots, the situation is more complex, because the choice of lighting and cameras depends on many factors in the robot-environment relationship: the tasks the robot performs, the dynamics of the environment, and more. In partially observable environments, where certain properties of the surroundings can only be perceived under specific conditions and from particular viewpoints, various approaches can be applied to adapt sensor information to dynamic and changing conditions [14]. When the image context is complex or incomplete, techniques that recognize the attributes of objects can be particularly effective for object recognition with insufficient information [15].

- Determining Color Threshold Values. The color threshold values are used to filter the input image, separating the target object from irrelevant information in the image. Setting these values with a trackbar or another manual method complicates the algorithm and makes it unreliable under changes in lighting, shading from objects, reflections, background changes, and other external influences.

- To minimize these issues, various approaches can be used. For example, where possible, a colored background that is easy to remove can be used. Problems related to changes in lighting can be addressed by ensuring good illumination and by using an additional sensor that measures the light level. Based on its readings, appropriate color threshold values can be predefined and applied automatically. Because these solutions are not always applicable, algorithms that adapt the color thresholds automatically can be used instead. For instance, threshold values can be computed automatically from local regions through an iterative process, without predefined thresholds or parameters [16]. Another approach, multilevel thresholding based on the Emperor Penguin Optimizer (EPO), determines threshold values automatically through optimization, enabling efficient and precise image segmentation [17].
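The two threshold strategies discussed above can be contrasted in a short sketch. `in_range_mask` applies six manually chosen limits (minimum and maximum per channel, as set with a trackbar). It follows the output convention of OpenCV's `cv2.inRange`: 255 inside the range and 0 outside. `otsu_threshold` is Otsu's classic method, included here only as a well-known example of computing a threshold automatically from the image histogram. It is not the adaptive local method of [16] or the EPO approach of [17]. Pixels are assumed to be (R, G, B) tuples, whereas OpenCV stores images in BGR order by default.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]


def in_range_mask(pixels: Sequence[Pixel], lower: Pixel, upper: Pixel) -> List[int]:
    """255 where every channel lies in [lower, upper] (inclusive), else 0."""
    return [
        255 if all(lo <= c <= hi for c, lo, hi in zip(p, lower, upper)) else 0
        for p in pixels
    ]


def otsu_threshold(gray: Sequence[int]) -> int:
    """Otsu's method: the threshold t (pixels <= t form the background) that
    maximizes the between-class variance of an 8-bit grayscale histogram."""
    hist = [0] * 256
    for v in gray:
        hist[v] += 1
    total = len(gray)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w_b = 0      # number of background pixels so far
    sum_b = 0    # intensity sum of background pixels so far
    best_t, best_var = 0, -1.0
    for t in range(256):
        w_b += hist[t]
        if w_b == 0:
            continue
        w_f = total - w_b
        if w_f == 0:
            break
        sum_b += t * hist[t]
        m_b = sum_b / w_b
        m_f = (sum_all - sum_b) / w_f
        var_between = w_b * w_f * (m_b - m_f) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t
```

On a bimodal image, the automatic threshold separates the two modes without any trackbar input. Pixels above the returned value are then treated as foreground.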

4. Classification of Objects by Colors

4.1. A Color Classification Model

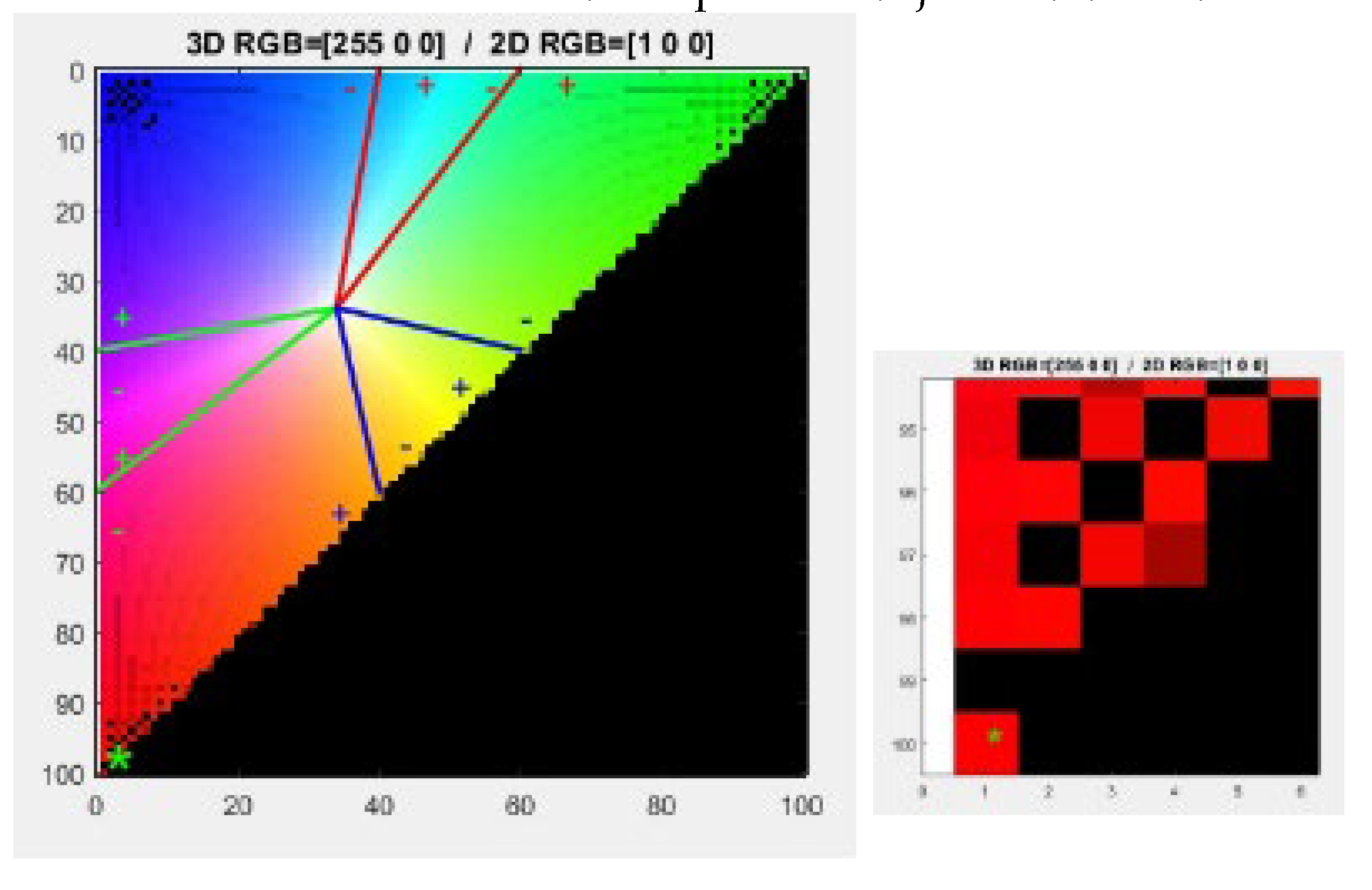

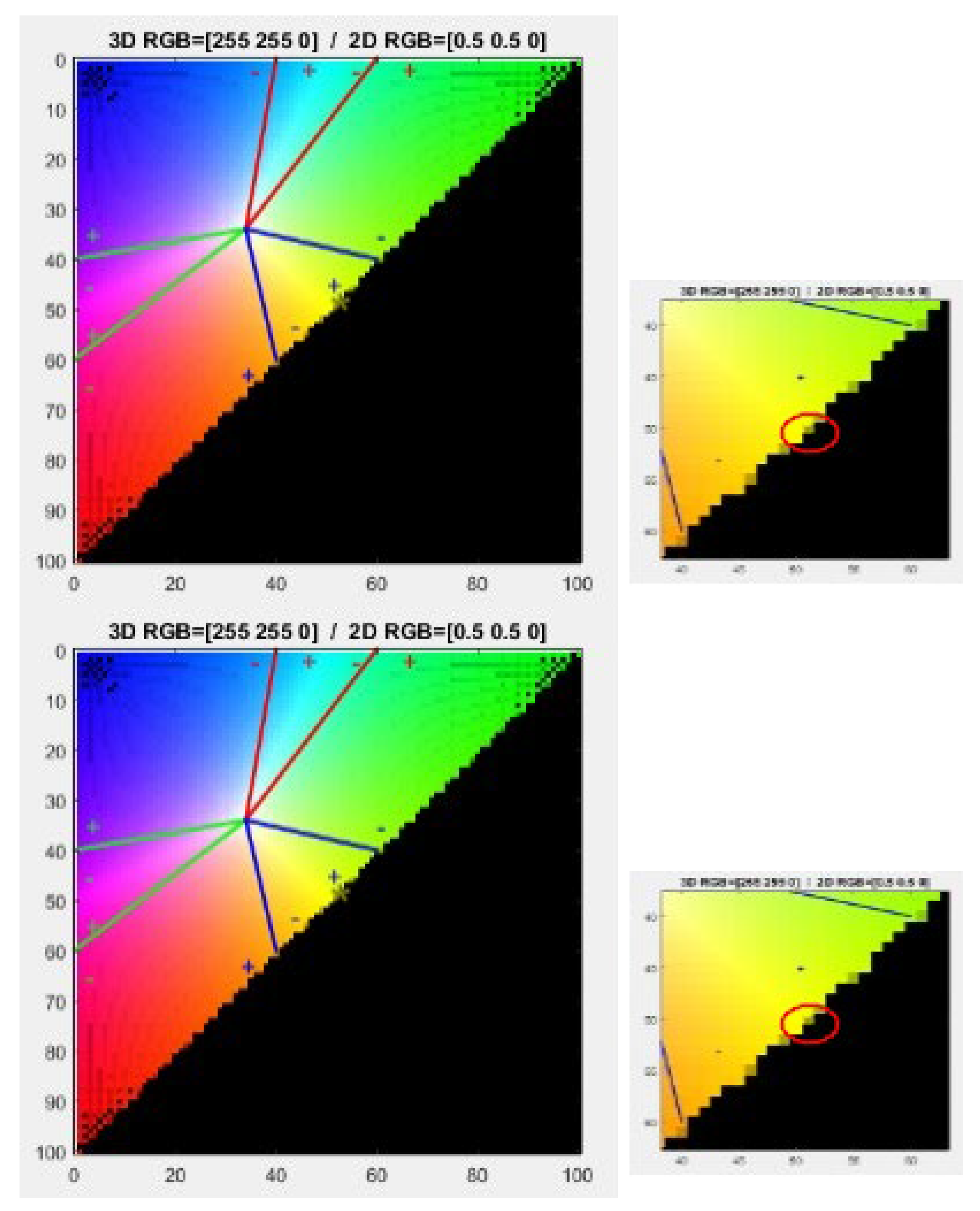

- Construction of a 3D color model in RGB space;

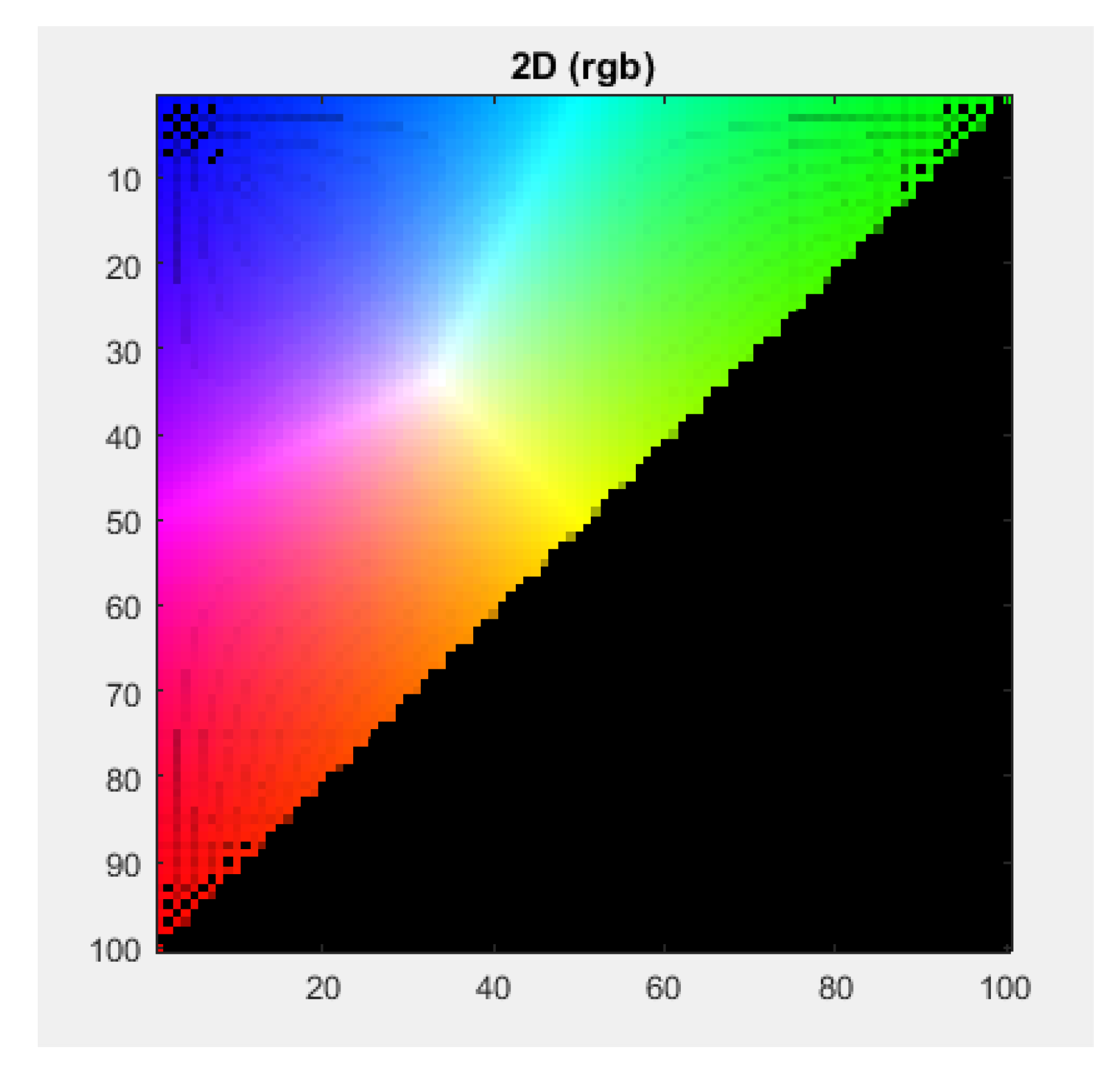

- Transformation of the 3D model into a 2D color model;

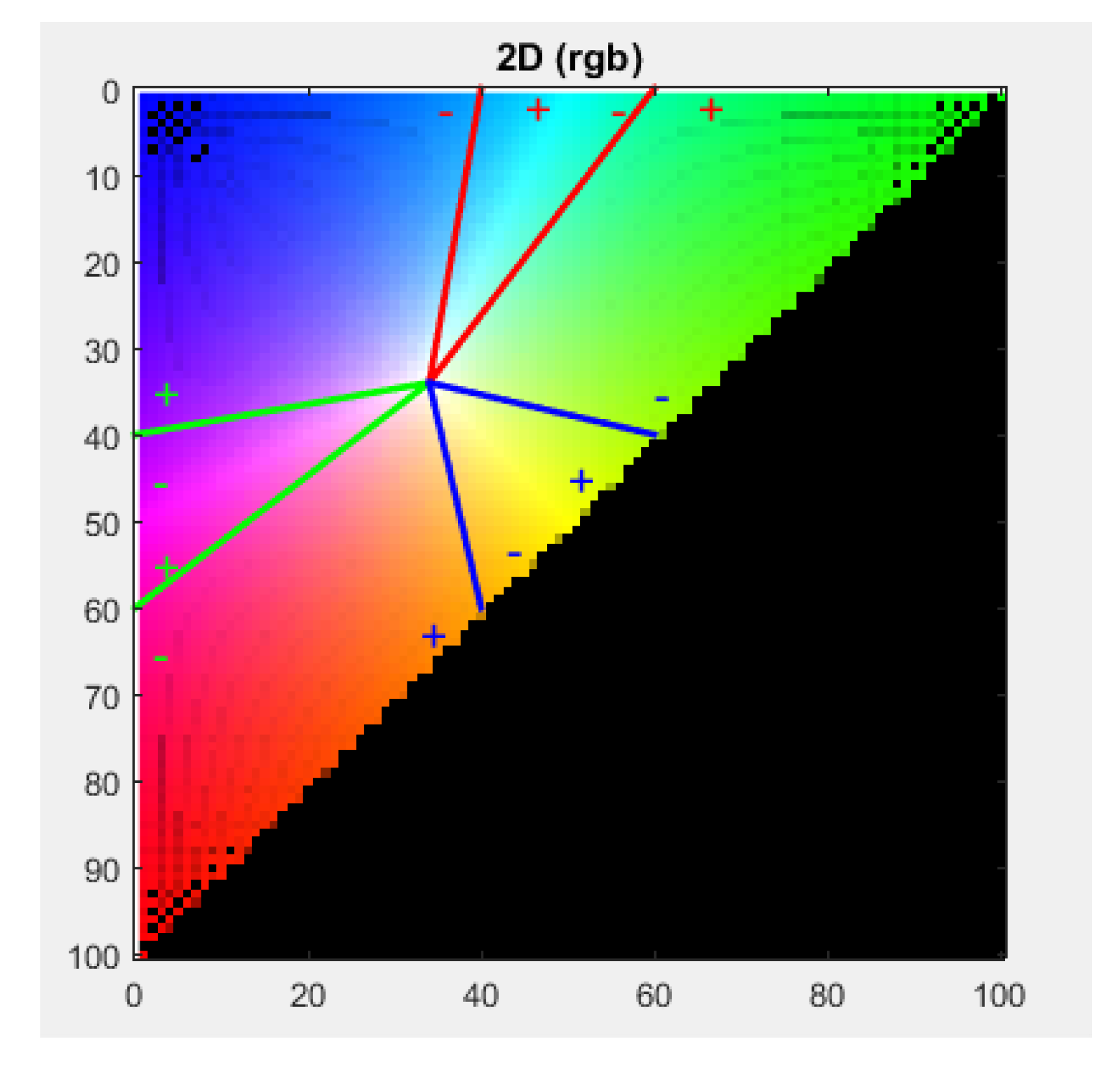

- Construction of lines for color separation in the 2D space;

- Testing of the model.

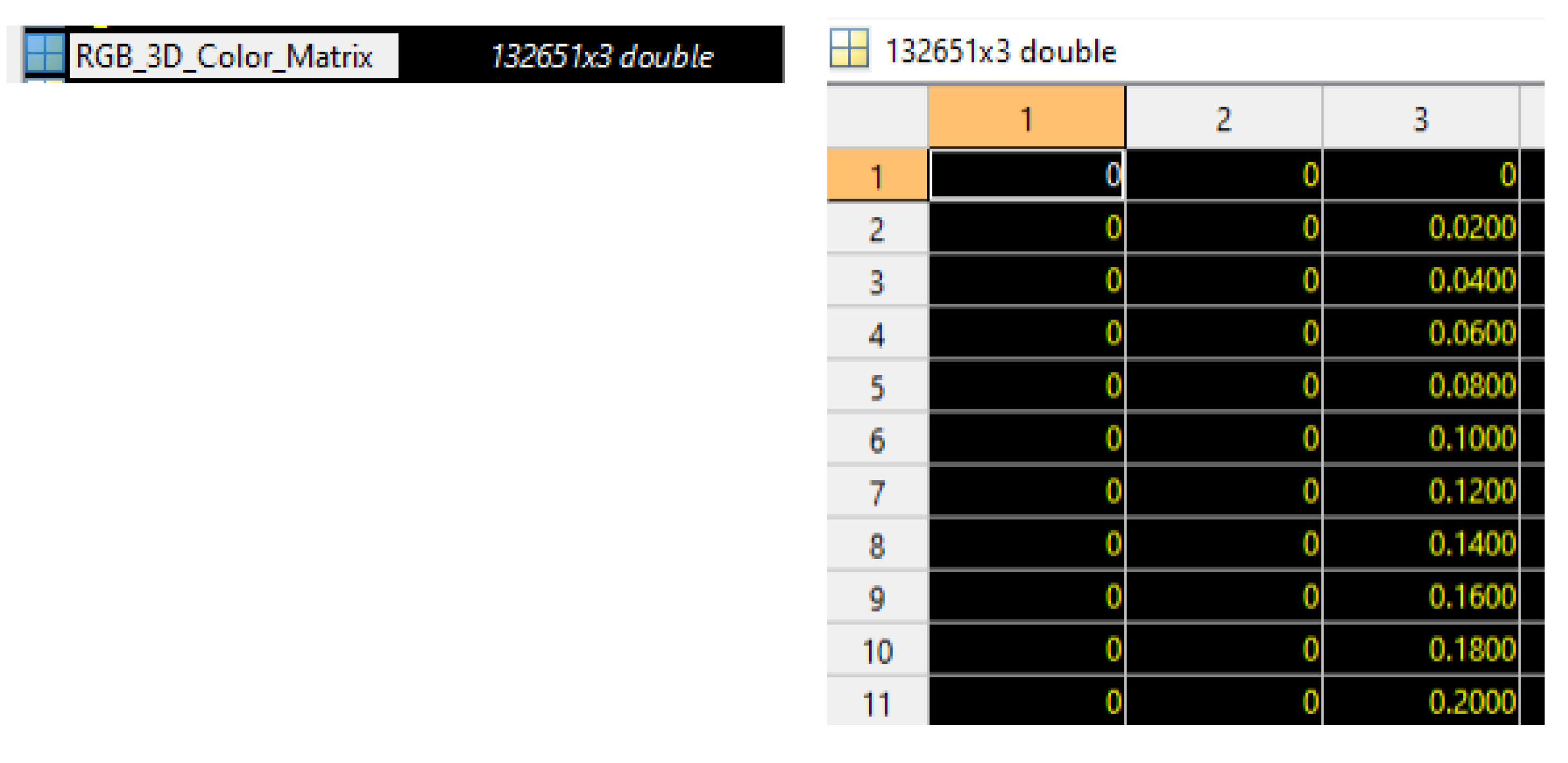

4.1.1. Building a 3D Color Model in the RGB Space

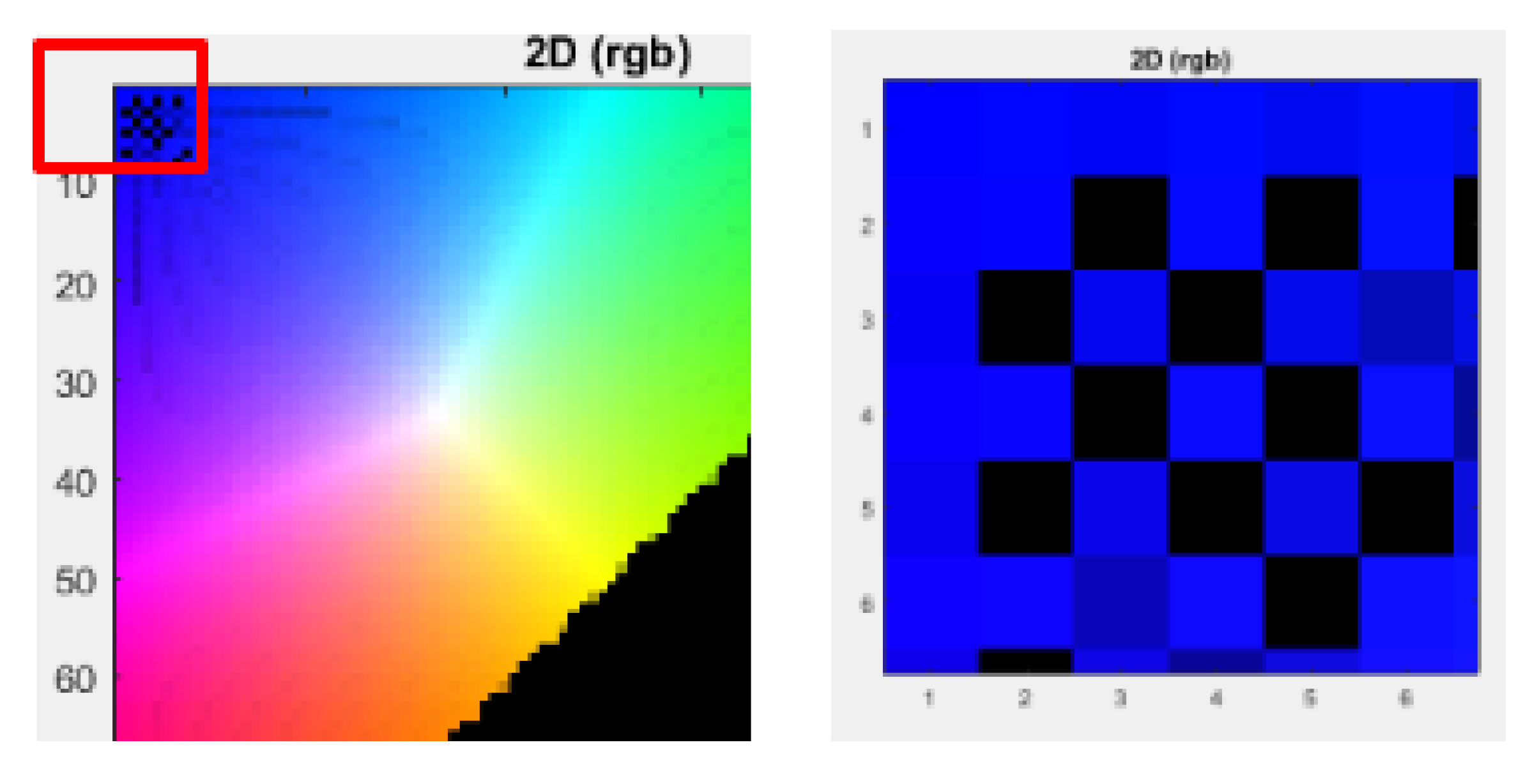

4.1.2. Converting the 3D RGB Color Space to the 2D rgb Space

4.1.3. Construction of Lines for Color Separation in the 2D Space

- The average color value for the recognized object in the three-dimensional RGB space is determined, with values in the range [0, 255].

- The resulting RGB color is converted to the two-dimensional rgb space, with values in the range [0, 1]. The r and g values are then used to compute two coordinates, x_green = 1 + g * 99 and y_red = 1 + r * 99, where x_green and y_red are the color values of the recognized object in the two-dimensional space of the classification model.

- The obtained values of x_green and y_red are substituted into the line equations Zbr1, Zbr2, Zbg1, Zbg2, Zrg1, and Zrg2 shown below, and only the sign of each result is considered.
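The classification steps above can be sketched as follows. The sketch assumes that the rgb conversion is the standard normalized (chromaticity) form r = R/(R+G+B), g = G/(R+G+B). The six line equations Zbr1 to Zrg2 are not reproduced in this section. The sketch therefore assumes the generic form a·x + b·y + c and uses placeholder coefficients supplied by the caller. Mapping the resulting sign patterns to the six color categories is left out, because those rules are not given here.

```python
from typing import Dict, Sequence, Tuple

RGB = Tuple[float, float, float]
Line = Tuple[float, float, float]  # assumed form: a*x + b*y + c


def mean_rgb(pixels: Sequence[RGB]) -> RGB:
    """Average R, G, B over the pixels of one recognized object (values 0..255)."""
    n = len(pixels)
    if n == 0:
        raise ValueError("object has no pixels")
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))


def to_chromaticity(rgb: RGB) -> RGB:
    """Normalized rgb: each channel divided by R + G + B, so r + g + b = 1."""
    total = sum(rgb)
    if total == 0:
        return (1 / 3, 1 / 3, 1 / 3)  # assumption: treat pure black as neutral
    return tuple(c / total for c in rgb)


def model_coords(rgb: RGB) -> Tuple[float, float]:
    """Map g and r from [0, 1] to the model axes in [1, 100]: (x_green, y_red)."""
    r, g, _ = to_chromaticity(rgb)
    return 1 + g * 99, 1 + r * 99


def sign_pattern(x: float, y: float, lines: Dict[str, Line]) -> Dict[str, int]:
    """Sign (+1, -1 or 0) of a*x + b*y + c for every separating line."""
    out = {}
    for name, (a, b, c) in lines.items():
        v = a * x + b * y + c
        out[name] = (v > 0) - (v < 0)
    return out
```

For example, a pure red object (255, 0, 0) has r = 1 and g = 0. It therefore maps to x_green = 1 and y_red = 100, the top-left corner of the 2D model.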

4.2. Testing the Classification Model

5. QR decode

5.1. Description

- Detection of the QR code: The program uses the QRDetector function to identify the area of the QR code. In the current implementation, the QR code must be located within an object already recognized by the program.

- Decoding the information: The code recognition algorithm decodes the information using the detectAndDecode method. This information can include plain text, numbers, URLs, or other data.

5.2. Implementation

- inputImage is the input image on which the decoding attempt is made.

- bbox (bounding box) is an output parameter that receives the vertices of the area where the code was detected.

- rectifiedImage is an output parameter that receives a rectified (perspective-corrected) image of the QR code produced during decoding.
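In OpenCV's Python binding, the call described above takes the form `text, points, straight = cv2.QRCodeDetector().detectAndDecode(img)`. It returns the decoded string, the corner points (the bbox above) and the rectified image. Decoding is restricted to the inside of a recognized object, as the current implementation requires. A minimal sketch of this restriction crops the image to the object's bounding rectangle `(x, y, w, h)` and shifts the detected corners back to full-image coordinates. The helper names are hypothetical, and the image is assumed to be a NumPy array as returned by OpenCV.

```python
from typing import Iterable, List, Optional, Tuple

Rect = Tuple[int, int, int, int]  # x, y, width, height of a recognized object


def clamp_rect(rect: Rect, img_w: int, img_h: int) -> Optional[Rect]:
    """Intersect the object's bounding rectangle with the image; None if empty."""
    x, y, w, h = rect
    x0, y0 = max(0, x), max(0, y)
    x1, y1 = min(img_w, x + w), min(img_h, y + h)
    if x1 <= x0 or y1 <= y0:
        return None
    return (x0, y0, x1 - x0, y1 - y0)


def shift_points(points: Iterable, dx: float, dy: float) -> List[Tuple[float, float]]:
    """Translate ROI-relative corner points back to full-image coordinates."""
    return [(float(px) + dx, float(py) + dy) for px, py in points]


def decode_qr_in_object(image, rect: Rect):
    """Try to decode a QR code inside one recognized object (needs opencv-python)."""
    import cv2  # imported lazily so the pure helpers above work without OpenCV

    img_h, img_w = image.shape[:2]
    r = clamp_rect(rect, img_w, img_h)
    if r is None:
        return "", None, None
    x, y, w, h = r
    roi = image[y:y + h, x:x + w]  # search only inside the recognized object
    text, points, rectified = cv2.QRCodeDetector().detectAndDecode(roi)
    if points is None:  # no QR code found in this object
        return text, None, rectified
    return text, shift_points(points.reshape(-1, 2), x, y), rectified
```

An empty `text` with non-empty corner points means that a code was located but could not be decoded.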

6. Testing the Program Model

- Load from camera - load images from a USB camera.

- Load from Shared Memory - load images from shared memory. The program reads images from shared memory, where images from various sources can be stored: video, cameras (USB, ESP32CAM, etc.), simulation programs, and more. The images are written to shared memory by additional programs external to the program model.

- Load from Picture - load an image saved on disk.

- Load from Clipboard - load images from the Clipboard. This option is convenient when the test images change frequently.

- Exit - exit the program.

- Decoding QR codes - this functionality is enabled or disabled at the prompt "QR code recognition (Y, N):".

- Option 1 - a standard USB camera was used.

- Option 2 - an ESP32CAM camera was used, as shown in Figure 11. An additional program was created to copy the images received from the WiFi camera into shared memory, from which the program model reads them.

- Option 3 - various test images saved on disk were used.

- Option 4 - images created in Blender were copied to the Clipboard, from which the program loaded them.
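The shared-memory source relies on an external program that writes frames and on the program model, which reads them. Reference [4] describes Windows file mapping for this purpose. The sketch below instead uses Python's cross-platform `multiprocessing.shared_memory` module. Its layout is a hypothetical convention, not the authors' format: a small header (frame counter, width, height, channel count) followed by raw pixel bytes. Synchronization is omitted. A real writer and reader would also need a lock or event so that a half-written frame is never read.

```python
import struct
from multiprocessing import shared_memory

# Hypothetical header: frame counter (uint32), width, height (uint16), channels (uint8)
HEADER = struct.Struct("<IHHB")


def write_frame(shm, frame_id: int, width: int, height: int, channels: int, pixels: bytes) -> None:
    """Writer side (e.g. the program that receives ESP32CAM frames)."""
    n = width * height * channels
    if len(pixels) != n or HEADER.size + n > shm.size:
        raise ValueError("frame does not fit the shared-memory layout")
    HEADER.pack_into(shm.buf, 0, frame_id, width, height, channels)
    shm.buf[HEADER.size:HEADER.size + n] = pixels


def read_frame(shm, last_id=None):
    """Reader side: return (id, w, h, c, pixels), or None if the frame is not new."""
    frame_id, width, height, channels = HEADER.unpack_from(shm.buf, 0)
    if frame_id == last_id:
        return None  # the writer has not published a new frame yet
    n = width * height * channels
    pixels = bytes(shm.buf[HEADER.size:HEADER.size + n])  # copy out of shared memory
    return frame_id, width, height, channels, pixels
```

In practice, the writer creates the block with `shared_memory.SharedMemory(name=..., create=True, size=...)`, and the reader attaches to it by the same name.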

7. Application of the Program Model

7.1. Context for the Use of the Program Model

7.2. Integration of the Program Model with Other Types of Sensors

8. Discussion

- Capabilities for measuring distance to objects using a depth camera;

- Structuring and storing information about recognized objects.

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Rubio, F.; Valero, F.; Llopis-Albert, C. A review of mobile robots: Concepts, methods, theoretical framework, and applications. Int. J. Adv. Robot. Syst. 2019, 16.

2. Holz, D.; Holzer, S.; Rusu, R.B.; Behnke, S. Real-time plane segmentation using RGB-D cameras. In Robot Soccer World Cup XV, 18 June 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 306–317.

3. Coradeschi, S.; Saffiotti, A. Perceptual Anchoring of Symbols for Action. In Proceedings of the 17th International Joint Conference on Artificial Intelligence (IJCAI'01), Seattle, WA, USA, 4 August 2001; Volume 1, pp. 407–412.

4. Microsoft. Using File Mapping. Available online: https://learn.microsoft.com/en-us/windows/win32/memory/using-file-mapping (accessed on 15 January 2024).

5. OpenCV: Open Source Computer Vision Library. Available online: https://github.com/opencv/opencv (accessed on 21 January 2023).

6. Amit, Y.; Felzenszwalb, P.; Girshick, R. Object Detection. In Computer Vision: A Reference Guide; Ikeuchi, K., Ed.; Springer International Publishing: Cham, Switzerland, 2021; pp. 875–883.

7. Tkachuk, A.; Bezvesilna, O.; Dobrzhanskyi, O.; Pavlyuk, D. Object Identification Using the Color Range in the HSV Scheme. The Scientific Heritage 2021, 1, 50–56.

8. Hema, D.; Kannan, S. Interactive Color Image Segmentation Using HSV Color Space. Sci. Technol. J. 2019, 7, 37–41.

9. Gong, X.Y.; Su, H.; Xu, D.; et al. An Overview of Contour Detection Approaches. Int. J. Autom. Comput. 2018, 15, 656–672.

10. Yu, J. Based on Gaussian Filter to Improve the Effect of the Images in Gaussian Noise and Pepper Noise. In Proceedings of the 3rd International Conference on Signal Processing and Machine Learning (CONF-SPML 2023), Oxford, UK, 25 February 2023; IOP Publishing: Oxford, UK, 2023.

11. Moran, S.; Marza, P.; McDonagh, S.; Parisot, S.; Slabaugh, G. DeepLPF: Deep Local Parametric Filters for Image Enhancement. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; IEEE: 2020; pp. 12826–12835.

12. Fernández, I.; Mazo, M.; Lázaro, J.L.; Pizarro, D.; Santiso, E.; Martín, P.; Losada, C. Guidance of a mobile robot using an array of static cameras located in the environment. Autonomous Robots 2007, 23, 305–324.

13. Hanel, M.L.; Kuhn, S.; Henrich, D.; Grüne, L.; Pannek, J. Optimal Camera Placement to Measure Distances Regarding Static and Dynamic Obstacles. International Journal of Sensor Networks 2012, 12, 25–36.

14. Lamanna, L.; Faridghasemnia, M.; Gerevini, A.; Saetti, A.; Saffiotti, A.; Serafini, L.; Traverso, P. Learning to Act for Perceiving in Partially Unknown Environments. In Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence (IJCAI-23), August 2023.

15. Farhadi, A.; Endres, I.; Hoiem, D.; Forsyth, D. Describing objects by their attributes. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009; pp. 2007–2014.

16. Navon, E.; Miller, O.; Averbuch, A. Color image segmentation based on adaptive local thresholds. Image Vis. Comput. 2005, 23, 69–85.

17. Xing, Z. An Improved Emperor Penguin Optimization Based Multilevel Thresholding for Color Image Segmentation. Knowledge-Based Systems 2020, 194, 105570.

18. Gochev, G. Kompyutarno zrenie i nevronni mrezhi [Computer Vision and Neural Networks]; Technical University – Sofia: Sofia, Bulgaria, 1998; pp. 83–84.

19. Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 4th ed.; Pearson: New York, NY, USA, 2018; pp. 400–408.

20. Wikipedia. QR code. Available online: https://en.wikipedia.org/wiki/QR_code (accessed on 25 June 2024).

21. Zhang, H.; Zhang, C.; Yang, W.; Chen, C.-Y. Localization and navigation using QR code for mobile robot in indoor environment. In 2015 IEEE International Conference on Robotics and Biomimetics (ROBIO), Zhuhai, China, 6–9 December 2015; IEEE: 2015; pp. 741–9715.

22. Sneha, A.; Sai Lakshmi Teja, V.; Mishra, T.K.; Satya Chitra, K.N. QR Code Based Indoor Navigation System for Attender Robot. EAI Endorsed Trans. Internet Things 2020, 6, e3.

23. Bach, S.-H.; Khoi, P.-B.; Yi, S.-Y. Application of QR Code for Localization and Navigation of Indoor Mobile Robot. IEEE Access 2023, 11, 28384–28390.

24. Aman, A.; Singh, A.; Raj, A.; Raj, S. An Efficient Bar/QR Code Recognition System for Consumer Service Applications. In Proceedings of the 2020 Zooming Innovation in Consumer Technologies Conference (ZINC), Novi Sad, Serbia, 26–27 May 2020; IEEE: 2020; pp. 127–131.

25. Wang, J.; Olson, E. AprilTag 2: Efficient and robust fiducial detection. In 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea (South), 09–14 October 2016; IEEE: 2016.

26. Krogius, M.; Haggenmiller, A.; Olson, E. Flexible Layouts for Fiducial Tags. In 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 03–08 November 2019; IEEE: 2019.

27. Wang, X. High Availability Mapping and Localization. Ph.D. Dissertation, University of Michigan, 2019. Available online: https://hdl.handle.net/2027.42/151428.

28. Bonci, A.; Cheng, P.D.C.; Indri, M.; Nabissi, G.; Sibona, F. Human-Robot Perception in Industrial Environments: A Survey. Sensors 2021, 21, 1571.

29. Wikipedia. Dijkstra's algorithm. Available online: https://en.wikipedia.org/wiki/Dijkstra%27s_algorithm (accessed on 8 August 2024).

30. GeeksforGeeks. How to Find Shortest Paths from Source to All Vertices Using Dijkstra's Algorithm. Available online: https://www.geeksforgeeks.org/dijkstras-shortest-path-algorithm-greedy-algo-7/ (accessed on 8 August 2024).

31. Alshammrei, S.; Boubaker, S.; Kolsi, L. Improved Dijkstra Algorithm for Mobile Robot Path Planning and Obstacle Avoidance. Computers, Materials and Continua 2022, 72, 5939–5954.

32. Kester, W. Section 6: Position and Motion Sensors. Available online: https://www.analog.com/media/en/training-seminars/design-handbooks/Practical-Design-Techniques-Sensor-Signal/Section6.PDF (accessed on 6 August 2024).

33. Zhmud, V.A.; Kondratiev, N.O.; Kuznetsov, K.A.; Trubin, V.G.; Dimitrov, L.V. Application of ultrasonic sensor for measuring distances in robotics. J. Phys. Conf. Ser. 2018, 1015, 032189.

34. Suh, Y.S. Laser Sensors for Displacement, Distance and Position. Sensors 2019, 19, 1924.

35. Lee, C.; Song, H.; Choi, B.P.; Ho, Y.-S. 3D scene capturing using stereoscopic cameras and a time-of-flight camera. IEEE Transactions on Consumer Electronics 2011, 57, 1370–1376.

| Function | Application | Integration scenarios |
|---|---|---|
| Determination of geographic position | Using GPS modules to accurately determine the location of the robot on a global scale. | Using a GPS module to determine the current geographic position of the robot. When certain objects are recognized, their coordinates can be saved, which is useful for creating maps and marking specific points on them. |
| Orientation and navigation in space | Using inertial sensors, namely accelerometers (motion) and gyroscopes (rotation), to read the robot's orientation and motion. | Using the output of the gyroscope and accelerometer to determine the orientation of the robot and to navigate it. Upon recognition of certain objects, the robot can automatically navigate the environment and take appropriate actions. |
| Obstacle detection, distance measurement to objects | Using distance sensors, such as ultrasonic and infrared sensors, to measure the distance to nearby obstacles. | Using ultrasonic or infrared sensors to measure the distance to nearby objects. During object recognition, the measured distances are used to optimize navigation or prevent collisions, so that the robot can respond dynamically to the recognized objects (obstacles). |
| Measurement of physical parameters in the environment | Using sensors to measure temperature, humidity, illumination, and other physical parameters. | Using sensors to measure temperature, humidity, atmospheric pressure, and other physical parameters in the environment. When certain objects are recognized, their possible effects on the robot or the environment can be analyzed. |
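The integration scenarios in the table amount to attaching other sensor readings to each recognized object and acting on the combined record. The sketch below shows one possible data structure. Everything in it is hypothetical and not taken from the program model: the field names, the `ObjectMap` store (which keeps only objects with a GPS position, for map building) and the 0.3 m stopping distance in `react`.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class RecognizedObject:
    """One recognized object plus optional readings from other sensors (hypothetical schema)."""
    obj_id: int
    name: str
    color: str
    qr_text: str = ""
    gps: Optional[Tuple[float, float]] = None  # (latitude, longitude) from a GPS module
    distance_m: Optional[float] = None         # from an ultrasonic or infrared range sensor


@dataclass
class ObjectMap:
    """Stores objects that have a geographic position, e.g. for building a map."""
    entries: List[RecognizedObject] = field(default_factory=list)

    def add(self, obj: RecognizedObject) -> bool:
        if obj.gps is None:
            return False  # nothing to place on the map
        self.entries.append(obj)
        return True


def react(obj: RecognizedObject, stop_distance_m: float = 0.3) -> str:
    """Hypothetical rule: stop in front of a close obstacle, otherwise continue."""
    if obj.distance_m is not None and obj.distance_m < stop_distance_m:
        return "stop"
    return "continue"
```

Keeping the sensor fields optional lets the same record serve robots that have only some of the sensors listed in the table.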

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).