1. Introduction

Chatbots are software systems designed to hold human-like conversations and offer automated support and guidance [1]. Chatbots are now widely adopted across business sectors, including banking, manufacturing, law, healthcare, education, and other fields [2]. The number of research works investigating the application of chatbots in the education domain is rising [3]. To highlight the importance of this phenomenon, the authors of [4] claim that implementing chatbot technology in education could have a notable impact on the way educational institutions are administered. Moreover, regarding administrative chatbots specifically, the authors of [5] state that incorporating AI technologies such as chatbots into educational environments is of significant importance: it enables the dissemination of academic information, alleviates administrative responsibilities, enhances the overall user experience, and ultimately fosters greater participation and engagement within these environments. While researchers have explored the use of chatbots in education for teaching and learning, their potential to improve the effectiveness of educational administration remains underexplored.

Existing literature reviews, such as [6,7,8,9,10,11], have mainly focused on chatbot utilization in the education sector for teaching and learning purposes. Despite the importance of the administrative aspects of education provision, the number of studies focusing on them is limited. In support of this claim, investigations such as [6] reveal that chatbots are deployed in educational institutions for various purposes, predominantly for teaching and learning (66%) and research and development (19%). The authors report that only 5% of developed or proposed bots are geared toward administration-related tasks. This lack of emphasis on administrative chatbots has led to significant shortcomings, including the absence of a standardized framework, varied implementation technologies, and inconsistent evaluation approaches.

Our research contributes to the field by conducting a comprehensive review to examine the current state of administrative chatbots in education. In this review, we aim to answer questions in five specific research areas: the tasks and functionalities of administrative chatbots, the data and technologies used, evaluation methods, and future challenges.

The outcome of this review has uncovered the main characteristics of a potential administrative chatbot framework, including the data used to train chatbot models, the technologies employed in their development, the specific administrative tasks they address, the evaluation methods used, and the challenges associated with their future development. Generative AI models like ChatGPT have garnered significant attention across academia, research, and various industries for their applications in areas such as customer service, healthcare, and education [12]. The review highlights the need to integrate generative AI into educational administrative chatbots to enhance their functionalities and effectiveness.

This paper is structured as follows:

Section 2 presents the literature review.

Section 3 discusses the methodology employed for conducting the systematic review.

Section 4 outlines the research results, including the data analysis and findings.

Section 5 examines the implications of the findings and discusses their significance.

Finally, Section 6 concludes the research by summarizing the key findings, discussing their implications, and proposing directions for future research.

2. Literature Review

The main purposes of administrative chatbots in educational institutions can be classified into three categories: (1) providing information, (2) supporting administration, and (3) evaluating technologies. Information provision dominates as the primary purpose of educational administrative chatbots in the reviewed literature. This category encompasses chatbots designed to answer frequently asked questions (FAQs) and offer users general information, including details about educational institutes, tuition fees, admission procedures, and academic departments. Chatbots in this category offer automated assistance to both internal and external users of academic institutions. The category can be further divided into six types:

Institutions: This type of chatbot provides users with information regarding institutes, faculties, and departments. FAQ bot [13], ADPOLY [14], HIVA bot [15], KUSE-ChatBOT [16], Erasmus [17], and EduChat [18] are some examples of this type.

Activities: This type handles queries regarding various activities that occur within educational institutions. Some examples of this type are AVA [19], FIT-Ebot [20,21], FAQ bot [13], Educational Assistant [22], and HIVA bot [15].

Admission: This type of chatbot processes admission-related queries such as admission dates, admission requirements, and online admission links. Examples of this type are Ana Chatbot [23], Educational Assistant [22], NEU-chatbot [24], DINA [25], Jooka [26], Virtual-Assistant [5], EduChat [18], Admission Bot [27], FAQ bot [13], and HIVA bot [15].

Academic: This type of chatbot provides students with information about academic courses such as course names, course grades, course registration, and schedules. ParichartBOT [28], Edubot [29], Nabiha [30], EduChat [18], University Bot [31], Virtual-Assistant [5], SOCIO Chatbot [32], and AVA [19] are some examples of this type.

Library: BCNPYLIB CHATBOT [33], Library Bot [34], and HIVA bot [15] are examples of chatbots designed for library services. They can respond to inquiries regarding book location, categorization, and library operating hours. This capability saves time and allows librarians to allocate their time to other duties.

Other purpose: CSM chatbot [35] addresses educational administrative tasks related to student inquiries about registration procedures and tuition payments at the University of Guayaquil. EduChat [18] and HIVA bot [15] provide information about student life, including the canteen, human resources, the library, and events.

The second category of chatbots is designed to support administration, aiming to reduce workload and enhance user experience through automation. These chatbots fall into one of the following eight types:

Save time: Several studies in the literature have proposed and implemented chatbots to automate administrative tasks, thereby saving time for stakeholders such as students, educators, and administrative staff. By handling routine inquiries and processes efficiently, chatbots enable stakeholders to focus on more value-added activities, improving overall productivity and efficiency within educational institutions. UNIBOT [36] and CollegeBot [37] are examples of this type.

Reduce labor cost: This type of chatbot is designed to lower expenses related to human labor. This is achieved by automating tasks or processes, thus decreasing the need for manual intervention or reducing the number of personnel required for specific functions. NEU-chatbot [24] and Edubot [29] are two notable examples of this type.

Reduce burden: This type of chatbot aims to alleviate the workload or strain on individuals within an organization. These chatbots automate tasks or processes, thereby lessening the burden of manual work or administrative responsibilities on employees. Examples include ArabicBot [38], FAQChatbot [39], Ava [40], and Jooka [26], which have been developed to alleviate administrative burdens within educational institutions.

24/7 availability: This type of chatbot is designed to offer uninterrupted access to information or services, catering to users’ needs whenever they arise. By being available 24/7, these chatbots ensure that users receive prompt responses to their inquiries or requests, enhancing convenience and accessibility. Examples include LiSA [41], GraduateBot [42], and EduChat [18], which have been implemented to provide continuous support to users within educational institutions, regardless of the time of day.

User engagement: This type of chatbot is designed to actively involve and interact with users, fostering meaningful connections and participation. Equipped with features to capture users’ interest and encourage involvement, these chatbots enhance satisfaction and retention. Examples include LiSA [41], Nabiha [30], and Virtual-Assistant [5], which engage users in educational settings through interactive dialogue and tailored support.

User experience: This type aims to prioritize seamless interactions and user satisfaction. These bots focus on usability and responsiveness, aiming to provide an intuitive interface. AIBot [43] and EnrollmentBot [44] are two examples of such chatbots. They enhance user experiences in educational settings through intuitive design and personalized interactions.

Facilitate Communication: This type of chatbot streamlines administrative processes and facilitates efficient communication between students, parents, and staff. These chatbots enhance support through personalized assistance, automated notifications, and real-time feedback collection. Assisting Chatbot [21], SOCIO Chatbot [32], AVA [19], and Lilo [45] are some examples of this type.

Automate admission process: This type of chatbot is designed to automate traditional admission procedures. Admission test bot [46] is an example of this type.

Evaluating technology is the purpose of the third category of chatbots. Some studies in the literature apply different technologies and platforms to assess how reliably they can be used to build chatbots within the education domain. For instance, [47] investigates the potential benefits and challenges of employing conversational agents to gather course evaluations across three European universities located in the UK, Spain, and Croatia. [48] contrasts the intent classification outcomes of two commonly used chatbot frameworks with those of a cutting-edge Sentence BERT (SBERT) model, which can be used to construct a resilient chatbot.

Existing literature on administrative chatbots in education suffers from three serious shortcomings: (1) A lack of standardized frameworks, which has led to significant variations in research approaches. This results in ambiguity regarding data characteristics, collection practices for chatbot training, the implementation technologies used, and, ultimately, inconsistent evaluation methods. (2) Despite the widespread adoption of generative AI across various sectors, its application in educational administration remains limited. Integrating these powerful techniques could potentially enhance chatbot capabilities in the domain. (3) While publications on educational administrative chatbots have seen some increase in recent years, this area of research remains relatively understudied.

3. Materials and Methods

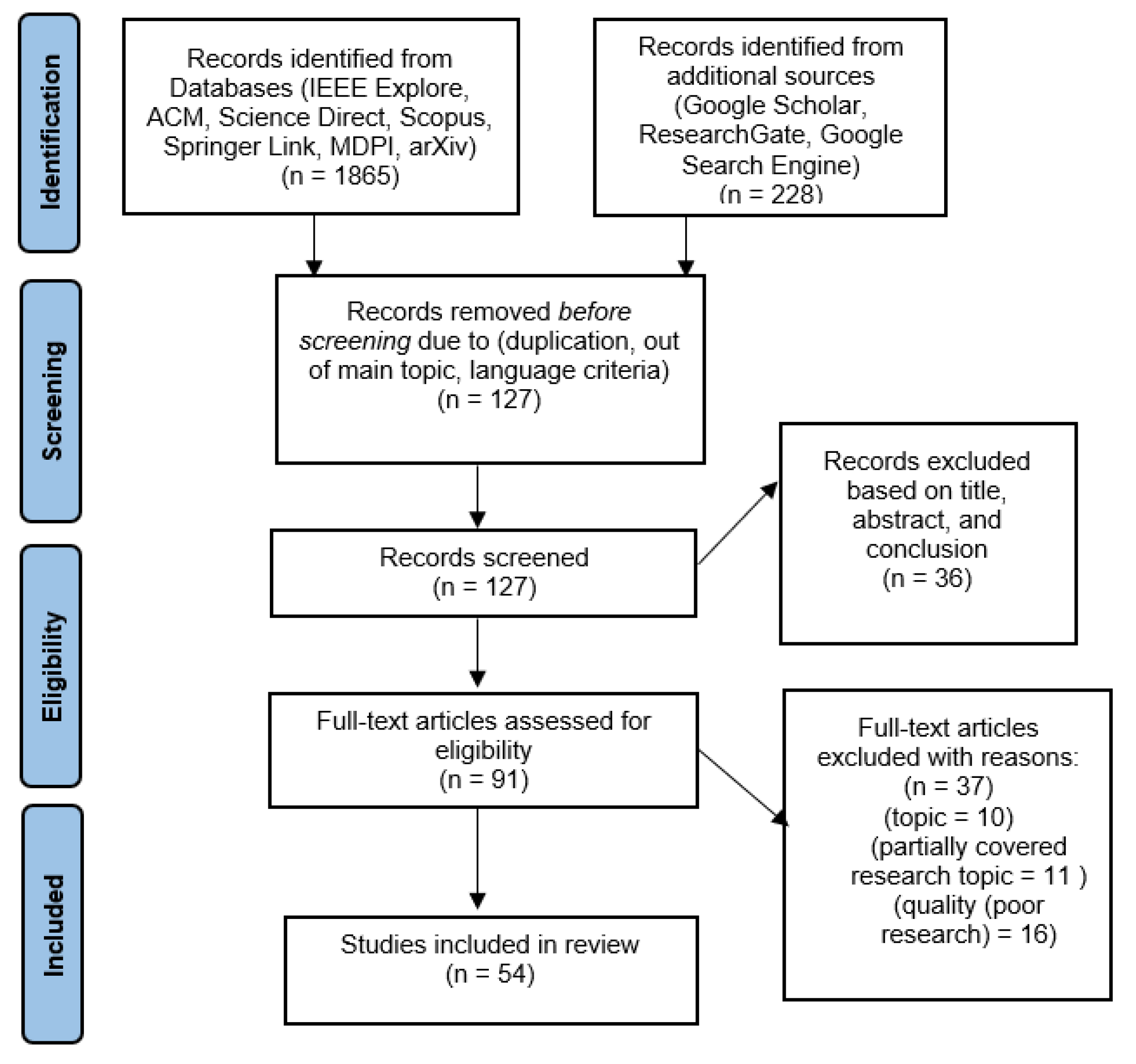

This research is conducted through a systematic review of the literature. As stated by [49], a systematic literature review involves a rigorous search method aimed at identifying pertinent findings on a specific research topic. To enhance the review process, the researchers adhered to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) framework. The PRISMA framework comprises a checklist containing 27 items and a four-phase flowchart, designed to enhance the clarity and transparency of systematic reviews and meta-analyses. However, it is essential to note that PRISMA is not intended to be used as a quality assessment tool [50]. To obtain relevant articles for this study, a comprehensive search was conducted using databases such as IEEE Xplore, ACM, Scopus, Springer Link, Science Direct, MDPI, and arXiv as the primary sources. To ensure comprehensive coverage of suitable articles, additional sources like ResearchGate, Google Scholar, and the Google search engine were also considered. However, undergraduate, master’s, and doctoral theses were excluded from this study. The research process comprises five distinct steps: (1) Identify research questions, (2) Search for relevant articles in scientific journals and conferences, (3) Apply the PRISMA protocol to select literature, (4) Extract information from the selected studies, and (5) Formulate conclusions and outline future research directions.

3.1. Research Questions

To align with the main objectives of this study and explore the effective administrative uses of chatbots in educational institutions, we have formulated the following research questions to gather the necessary information:

RQ1. What educational administrative tasks (functionalities) do existing studies propose or implement?

RQ2. What are the data characteristics and data collection practices for chatbot training in educational institutions?

RQ3. What are the main chatbot implementation technologies used by researchers?

RQ4. How are implemented chatbots evaluated?

RQ5. What are the challenges faced during chatbot implementations?

Each research question has been chosen to explore a different aspect of chatbot effectiveness, which ultimately contributes to effective educational administration. High-quality data and relevant information train the chatbot to give accurate responses [51], whereas poor data leads to misunderstandings and frustration [52]. Technologies like Natural Language Processing (NLP) allow for natural conversation, while Machine Learning helps the chatbot learn and improve over time [1]; choosing the wrong technology hinders its effectiveness [53]. Clearly defined tasks, like answering registration questions or providing deadline reminders, ensure the chatbot excels in its role. In addition, the ability of chatbots to automate routine tasks frees up time for administrative staff and enhances efficiency in educational institutions [54]. Regular evaluation using user feedback, data analysis, and automatic approaches helps identify areas for improvement and ensures the chatbot remains helpful [55]. Finally, addressing the current challenges ensures the effectiveness of the proposed model [56].

3.2. Literature Search Strategy

To gather the essential literature for this study, we employed a systematic search strategy, which can be summarized in the following steps:

(1) Study and identify possible search keywords. Based on preliminary literature searches, the most frequently used terms were identified to create the query for the literature search. The query ((Chatbot* OR Conversational Agents OR AI Assistants) AND (Educational Administration OR Educational Management OR Educational Technology)) was run on all databases on 21/03/2024 and revised on 09/07/2024.

(2) Use the identified keywords to search for possible literature available from reputable publishers, such as those mentioned earlier. To minimize the number of irrelevant studies that could appear in the search process, the search domains were limited to computer science, education, and management.

(3) To ensure the completeness and correctness of the search, manual searches were also performed on each of the databases. The search was performed on the article title, abstract, and keywords. The retrieved articles span the period from 2018 to 2023.
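The Boolean query in the first step can be assembled programmatically, which makes later revisions of the term lists easier to track. The following is a minimal sketch, not the authors' tooling: the helper name `build_query` is hypothetical, and individual databases may require their own field tags or quoting rules, which are not modeled here.

```python
def build_query(topic_terms, domain_terms):
    """Join each term group with OR, then combine the two groups with AND."""
    def group(terms):
        return "(" + " OR ".join(terms) + ")"
    return "(" + group(topic_terms) + " AND " + group(domain_terms) + ")"


# Term lists taken from the query reported in the search strategy.
topic_terms = ["Chatbot*", "Conversational Agents", "AI Assistants"]
domain_terms = [
    "Educational Administration",
    "Educational Management",
    "Educational Technology",
]

query = build_query(topic_terms, domain_terms)
print(query)
```

Keeping the two term groups as separate lists means a revision such as the one made on 09/07/2024 would only require editing a list entry rather than the full query string.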

3.3. Inclusion/Exclusion Criteria

For article selection, several inclusion criteria are used and searches for those criteria are performed on article titles, abstracts, keywords, and the main content body. The inclusion criteria are as follows: a) Studies presented in English only; b) Studies that cover the main domain which is administrative chatbots in educational institutes; c) Studies published in reputable databases as named previously. The exclusion criteria are a) Studies presented in other languages; b) Studies covering other topics such as teaching, learning, consultation, and recommendation; c) Undergraduate, master’s, and PhD theses, as they typically do not undergo the peer review process.

4. Results

The initial search yielded 2093 articles. Filtering for duplicates, non-English articles, and those outside the research scope reduced the pool to 127. Further screening based on titles, abstracts, and conclusions narrowed the selection to 91. Finally, a thorough review of full texts for quality, topic relevance, and context resulted in a final sample of 54 articles.

Figure 1 shows the study flow diagram. Among all the exclusion criteria, only the quality criteria have the potential to introduce bias. To maintain objectivity, after selecting articles published in reputable journals and conference proceedings with ≥10 citations, a quality evaluation was conducted as shown in Table 1. This evaluation is based on the five research questions listed in Section 3.1 and rates each aspect of the articles using a Likert scale from 0 to 3 (0 = not mentioned, 1 = poorly presented, 2 = fairly presented, 3 = well presented). Articles scoring ≥8 out of a possible 15 were included.
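The quality threshold described above can be expressed as a small scoring routine. This is an illustrative sketch only: the function names and the two example rating sets are hypothetical and do not correspond to any article in the review; only the 0 to 3 scale, the five research questions, and the inclusion threshold of 8 out of 15 come from the text.

```python
RESEARCH_QUESTIONS = ("RQ1", "RQ2", "RQ3", "RQ4", "RQ5")
ALLOWED_RATINGS = (0, 1, 2, 3)   # 0 = not mentioned ... 3 = well presented
MAX_SCORE = max(ALLOWED_RATINGS) * len(RESEARCH_QUESTIONS)  # 15
INCLUSION_THRESHOLD = 8


def quality_score(ratings):
    """Sum the per-question ratings after checking they are on the 0-3 scale."""
    for rq in RESEARCH_QUESTIONS:
        if ratings[rq] not in ALLOWED_RATINGS:
            raise ValueError(f"{rq} rating must be one of {ALLOWED_RATINGS}")
    return sum(ratings[rq] for rq in RESEARCH_QUESTIONS)


def is_included(ratings):
    """An article is retained when its total reaches the threshold."""
    return quality_score(ratings) >= INCLUSION_THRESHOLD


# Hypothetical ratings for two imaginary articles.
article_a = {"RQ1": 3, "RQ2": 2, "RQ3": 3, "RQ4": 1, "RQ5": 0}
article_b = {"RQ1": 2, "RQ2": 0, "RQ3": 2, "RQ4": 0, "RQ5": 1}

print(quality_score(article_a), is_included(article_a))
print(quality_score(article_b), is_included(article_b))
```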

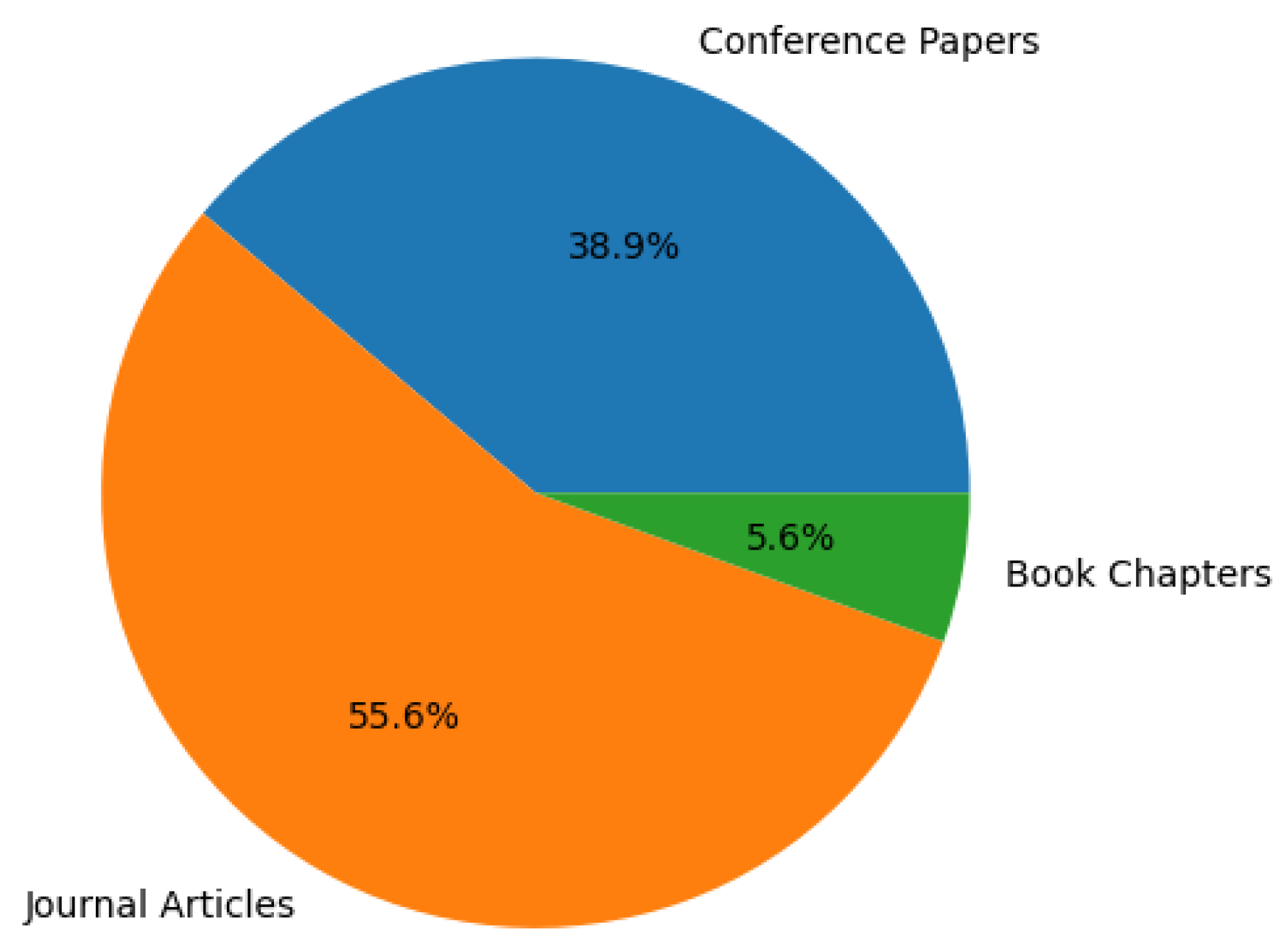

Some statistical analyses of the results have been presented to provide a deeper understanding of the literature landscape. The analysis categorizes the types of literature reviewed to give a clear picture of the sources contributing to the field. Out of the total of 54 articles analyzed, the majority, comprising 30, were journal articles, reflecting their dominant role in disseminating research findings. Conference papers made up a significant portion as well, with 21 papers indicating the active engagement of researchers in presenting and discussing their work at various conferences. Additionally, 3 book chapters were included, showing contributions to more comprehensive, edited volumes that provide broader context and synthesis of the research.

Figure 2 illustrates the relative percentages of these types of literature, highlighting the predominance of journal articles and conference papers in the research landscape.
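The relative shares can be recomputed directly from the counts reported above. The short sketch below uses only those counts (30 journal articles, 21 conference papers, 3 book chapters); rounding to one decimal place is a presentation choice made here, not something stated in the review.

```python
# Counts of each literature type as reported in the text.
counts = {
    "Journal articles": 30,
    "Conference papers": 21,
    "Book chapters": 3,
}

total = sum(counts.values())

# Share of each type as a percentage of all reviewed articles.
shares = {kind: round(100 * n / total, 1) for kind, n in counts.items()}

for kind, share in shares.items():
    print(f"{kind}: {counts[kind]} of {total} ({share}%)")
```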

The distribution of articles based on their publication years is presented in

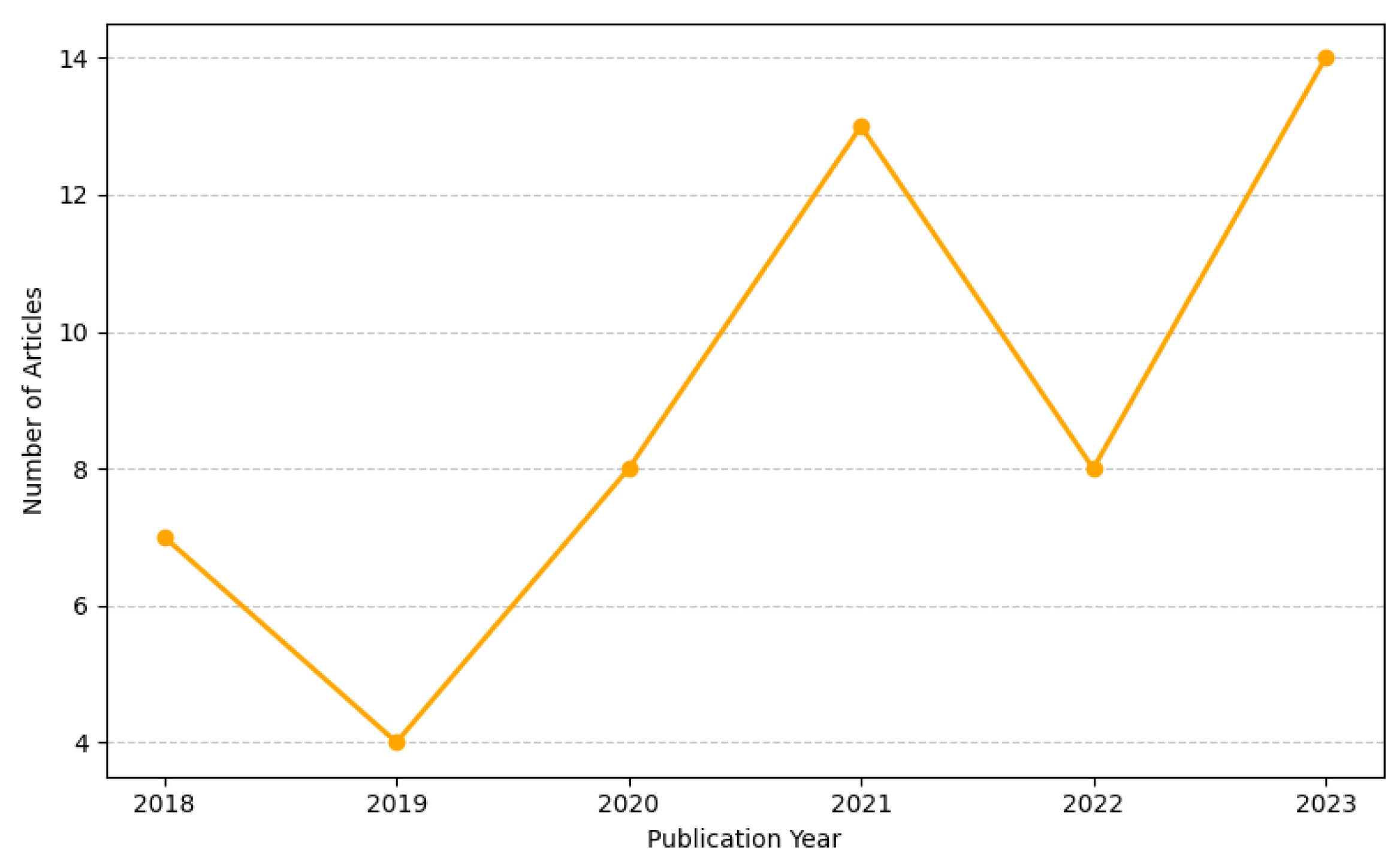

Figure 3. The number of publications per year demonstrates notable trends from 2018 to 2023. In 2018, there were 7 publications, which slightly decreased to 4 in 2019. However, this was followed by a substantial increase in 2020 with 8 publications. The upward trend continued significantly in 2021, reaching a peak of 13 publications. This surge in 2021 can be attributed to the impact of the COVID-19 pandemic, which stimulated a considerable amount of research and innovation in response to new challenges. After a minor dip to 8 publications in 2022, there was another surge in 2023, culminating in 14 publications, the highest in the observed period. This increase in 2023 reflects a rising interest and expanding research activity in this area, indicating sustained growth and the importance of the field.

4.1. Chatbot-based Implemented Tasks/Functionalities

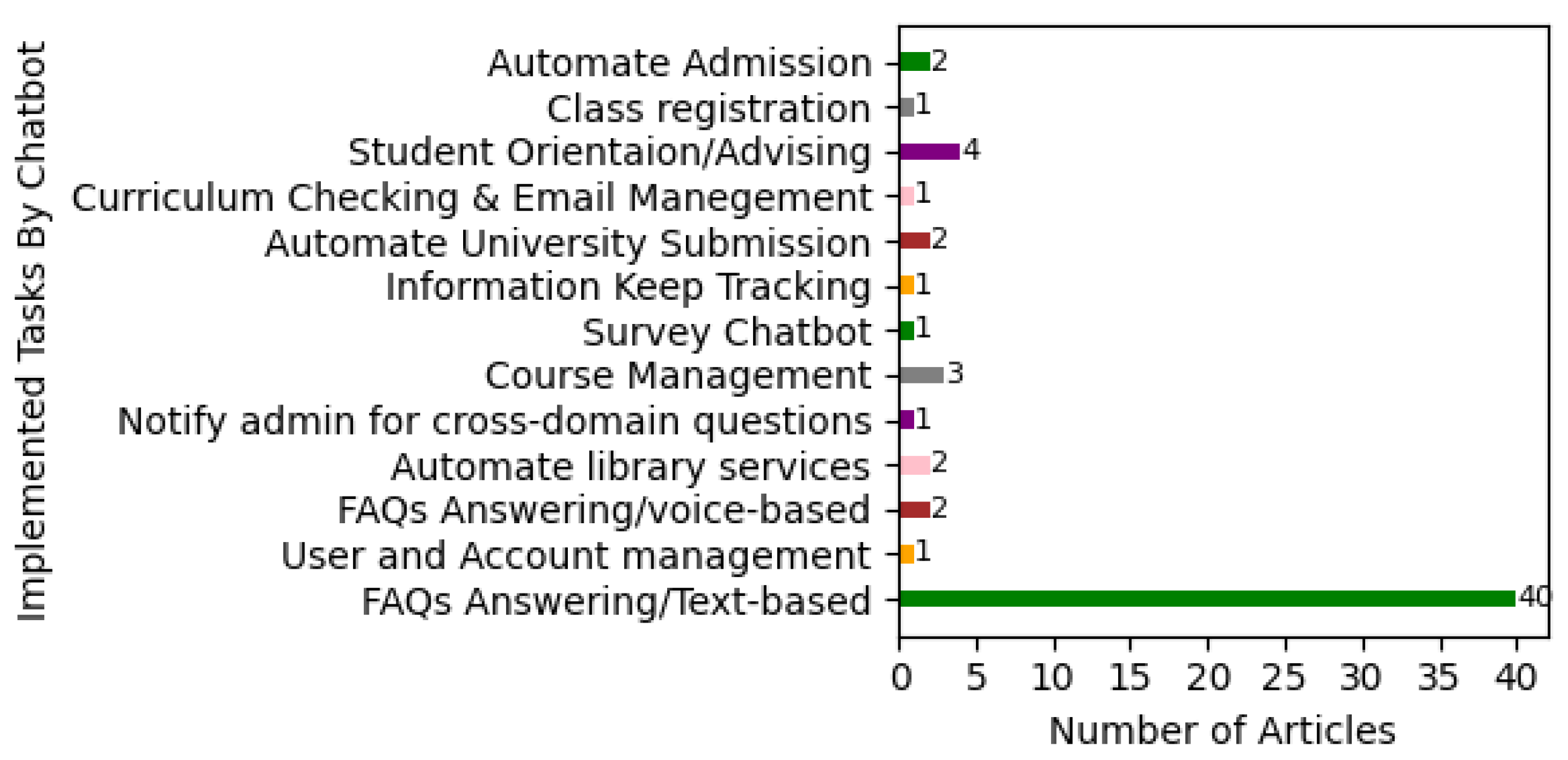

Our review of chatbot applications in educational settings, as addressed in RQ1, has revealed a diverse landscape of implemented tasks or functionalities. These tasks, summarized in Table 2 and visually represented in Figure 4, offer valuable insights into the current capabilities and potential future directions of educational chatbots. The most dominant application, accounting for 74% of the reviewed studies, is answering user FAQs through text-based conversation. This encompasses a wide range of queries, from general information about institutions and academics to specific details about admissions, placements, schedules, and courses. This strong preference for FAQ handling highlights the potential of chatbots to alleviate administrative burdens and free up human resources for more complex tasks [39]. Beyond this core functionality, a range of additional tasks emerges. Notably, 19% of studies reported chatbots managing user and account-related functions, suggesting their potential for personalized access and streamlined administrative processes. Furthermore, some studies like [38] implemented mechanisms to handle unanswered queries, such as pinging administrators or forwarding questions for later resolution. This ability to adapt and learn from unanswered queries demonstrates the growing sophistication of chatbot technology and its potential to continuously improve user experience. Multi-modal communication is also represented, with 31% of studies reporting chatbots capable of answering user queries through voice, image, and cards. This move beyond text-based interactions opens possibilities for accessibility and caters to diverse learning styles. For instance, the chatbot described in [40] utilizes speech recognition to handle voice-based queries, potentially benefiting users with visual impairments or those who prefer more natural interaction.

Moving beyond basic information dissemination, chatbots are also venturing into educational support and administrative automation. The chatbot in [41] assists students in selecting elective courses through advice-based conversation, demonstrating the potential for personalized guidance and decision-making support. Other chatbots, like the one in [23], replace traditional online submission systems with conversational interfaces, streamlining administrative processes and potentially enhancing user engagement. However, it is crucial to acknowledge the limitations of the current data. The focus on text-based interactions in many studies may underrepresent the potential of multi-modal chatbots. Additionally, the lack of information on chatbot effectiveness in real-world educational settings presents an area for future research.

Table 2. Identified chatbot-based implemented tasks/functionalities.


| Tasks | References |
| --- | --- |
| FAQs answering/text-based | [5,13,14,15,16,17,18,20,22,24,25,26,27,28,30,31,32,35,36,37,38,39,40,42,43,48,57,59,61,62,63,65,66,67,68,69,70,71,74] |
| User and account management | [58] |
| FAQs answering/voice-based | [21,36] |
| Automate library services | [33,34] |
| Notify admin for cross-domain questions | [38] |
| Course management | [32,39,48] |
| Survey chatbot | [41] |
| Keeps track of the applicant’s information | [26] |
| Automate university submission | [42,44] |
| Curriculum detail checking and email management | [40] |
| Student orientation/advising | [19,45,72,73] |
| Class registration | [73] |
| Automate admission | [23,46] |

4.2. Data Used to Train the Bot

Regarding RQ2, the articles were analyzed, and the results of the analysis are shown in Table 3. Only 57% of the surveyed articles openly declared the source of data used to train their administrative chatbots. This lack of transparency makes it difficult to assess the reliability and potential biases of the information provided, hindering user trust and confidence in the chatbot’s accuracy.

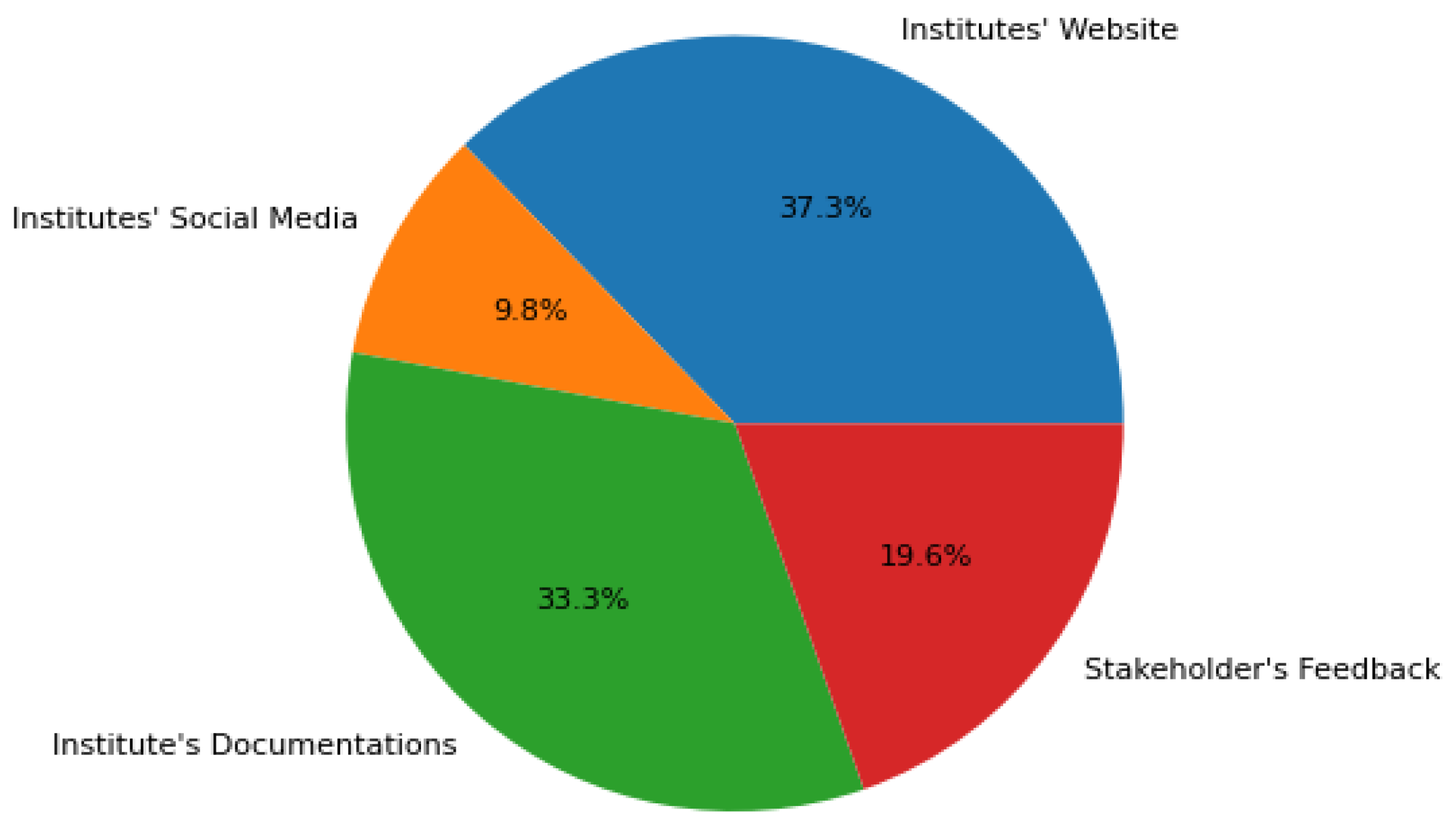

Figure 5 presents the identified data sources in the literature. The declared sources primarily consisted of institutes’ websites (37.3%), social media accounts (9.8%), available documents from the institutes (33.3%), and feedback/questionnaires from stakeholders which include students, educators, and admission staff (19.6%). This suggests a reliance on readily available but potentially incomplete or subjective data sources.

Disclosing the size of the chatbot’s knowledge base, typically measured in conversations, question-answer pairs, user messages, or examples, is crucial for understanding its potential comprehensiveness and depth.

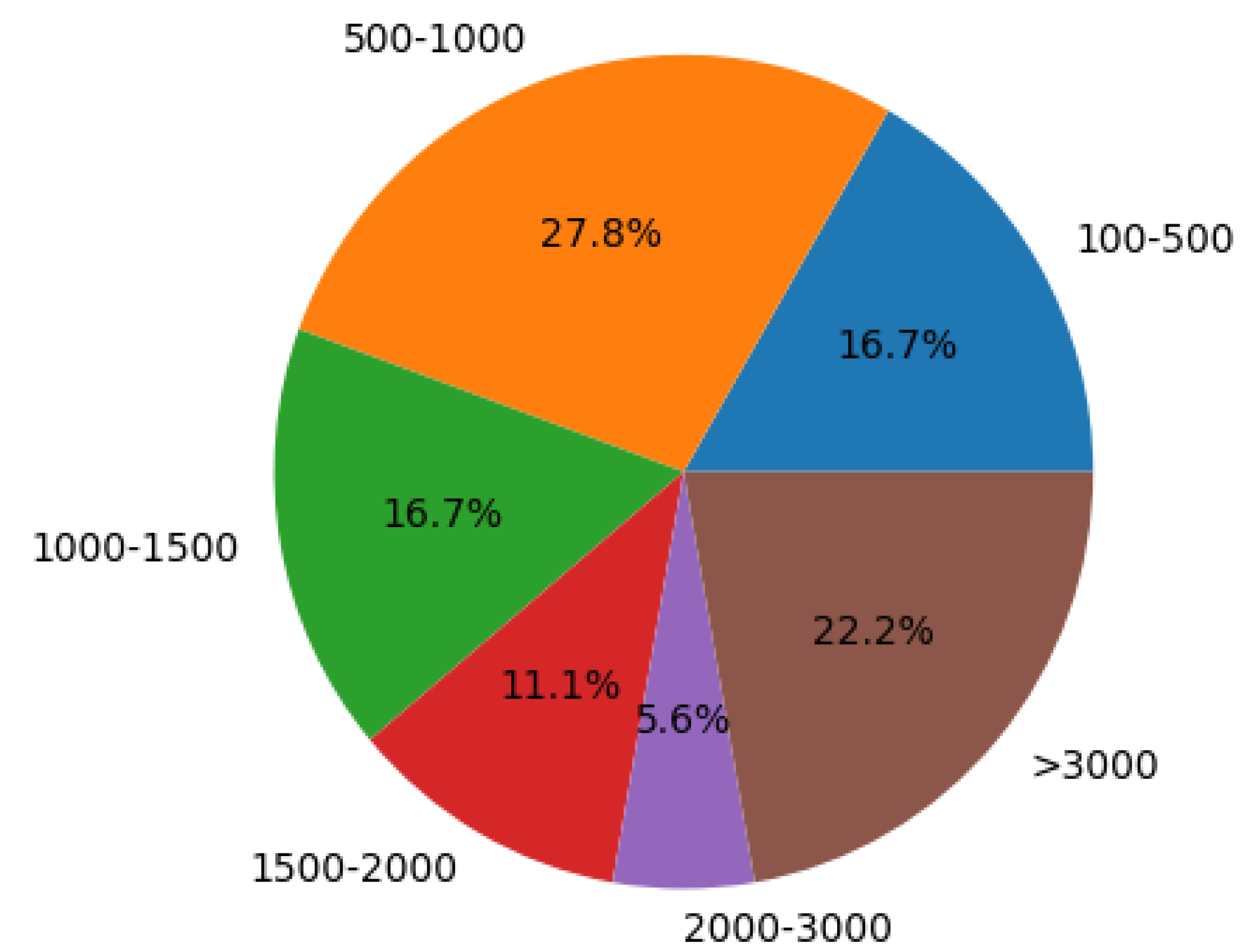

Figure 6 shows the different data sizes used in the literature. However, only about 29% of the articles mentioned data size. This lack of information makes it difficult to compare the capabilities of different chatbots and assess their suitability for handling complex inquiries. Only [22] utilized two large datasets: the CTUBot dataset, which consists of 35,702 question-answer pairs for the closed-domain chatbot, and the OpenSubViet dataset of over 419,712 dialogue pairs for the open-domain chatbot. The other reported sizes ranged from 210 to 6500 examples, indicating significant variation and potential gaps in knowledge coverage across different implementations.

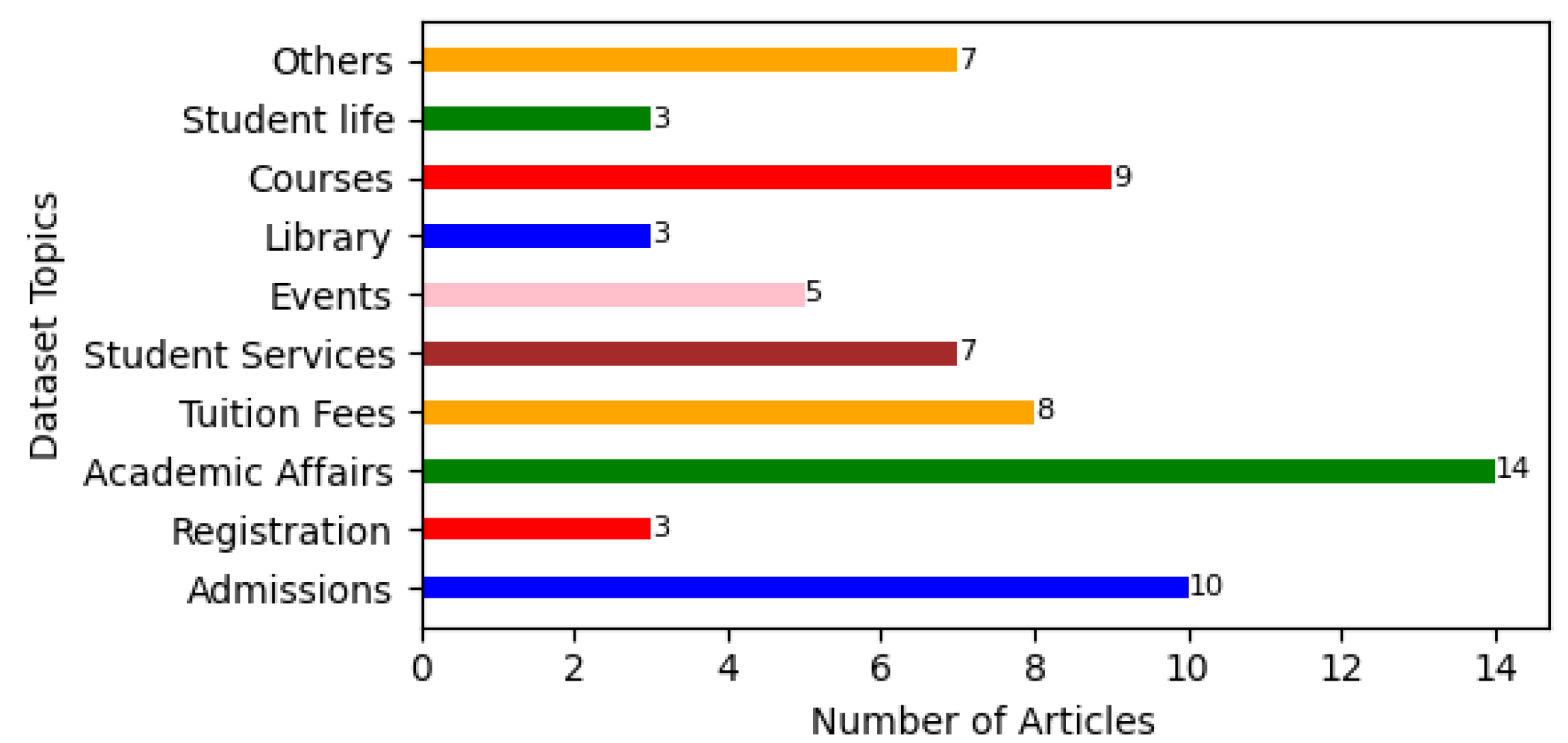

About 31% of the literature reported the topics of the data used in training the chatbots.

Figure 7 depicts the topic focus and coverage, with Academic Affairs (25%), Admissions (19%), Courses (16%), Tuition Fees (15%), Student Services (13%), Events (9%), and Registration, Library, and Student Life (5%) dominating the landscape. This highlights a potential under-representation of other crucial administrative areas, such as library services, financial aid, and accommodations, which could limit the chatbot’s ability to fully support users’ diverse needs.

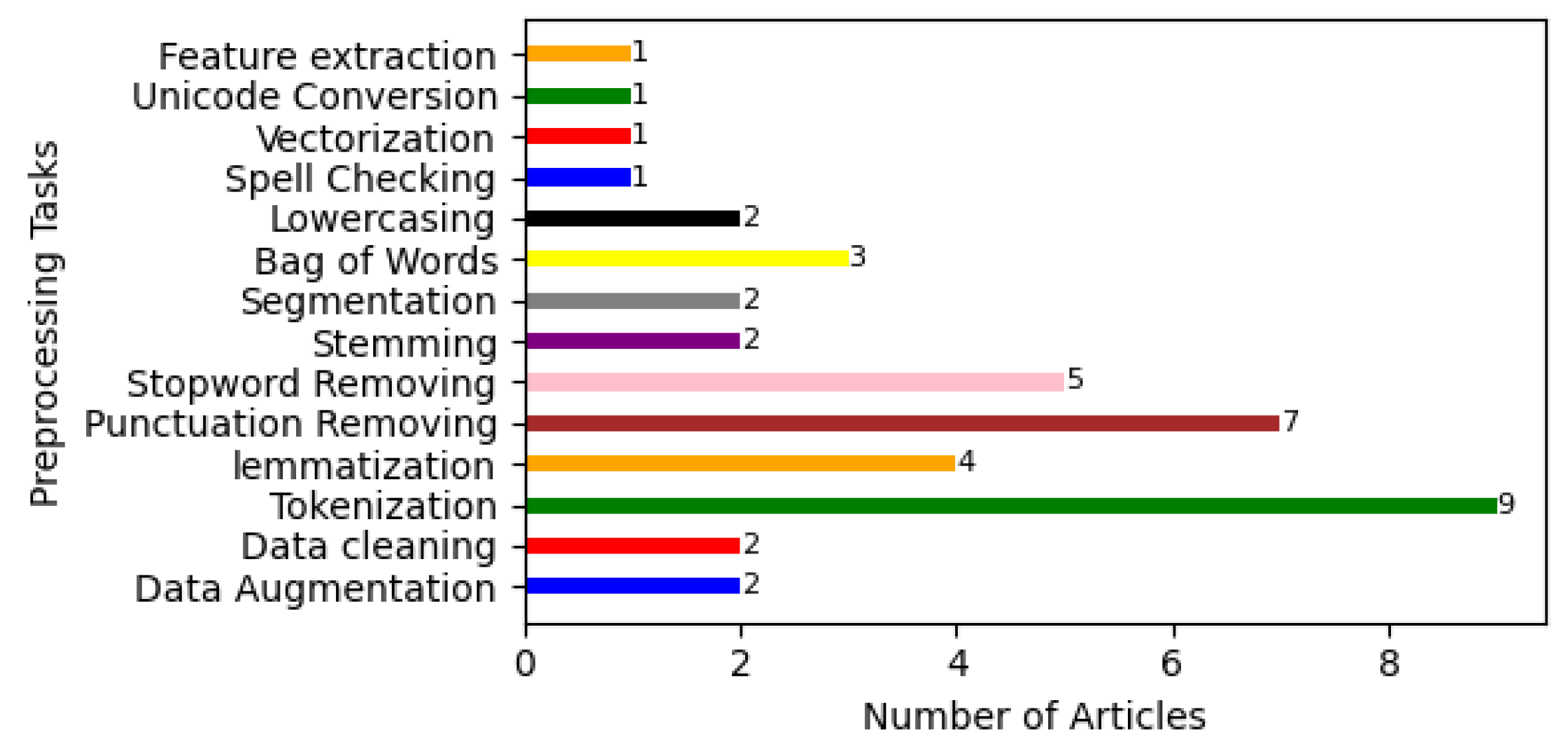

Pre-processing data is essential for maintaining high data quality [75]. About 31% of the studies discussed the data preprocessing techniques applied to the collected information (Figure 8). While common tasks like tokenization, punctuation removal, and stop word removal were observed, the lack of further details raises concerns about potential biases and inconsistencies introduced during the preparation process. Transparency in data preprocessing is essential for ensuring the accuracy and fairness of the chatbot’s responses.
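The three preprocessing tasks named above can be illustrated with a few lines of standard-library Python. This is a minimal sketch under stated assumptions: the stop word list is a tiny hand-picked set for illustration, lowercasing is an added normalization step not mentioned in the reviewed studies, and whitespace splitting stands in for whatever tokenizer a given study actually used.

```python
import string

# Illustrative stop word list; real pipelines typically use a much larger one.
STOP_WORDS = {"a", "an", "the", "is", "are", "what", "for", "of", "to", "in"}


def preprocess(text):
    """Lowercase, strip punctuation, tokenize on whitespace, drop stop words."""
    lowered = text.lower()
    # Punctuation removal: delete every ASCII punctuation character.
    no_punct = lowered.translate(str.maketrans("", "", string.punctuation))
    # Tokenization: a simple whitespace split.
    tokens = no_punct.split()
    # Stop word removal.
    return [token for token in tokens if token not in STOP_WORDS]


print(preprocess("What is the deadline for admission?"))
```

Reporting choices such as the stop word list and the tokenizer is exactly the kind of detail whose absence the paragraph above identifies as a transparency gap.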

Regarding data collection, manual collection was predominant in the reviewed studies (all except two, which used automatic tools). Manual collection can be time-consuming and resource-intensive, limiting the scalability and efficiency of chatbot development. Exploring and adopting automated data collection and processing tools could significantly improve efficiency and potentially allow for incorporating wider and more diverse datasets, leading to more comprehensive and reliable chatbot experiences.

Table 3. Identified data source, size, intents, and preprocessing tasks in the literature.


| Data-related questions | References |
| --- | --- |
| Data source | [13,15,16,17,18,19,20,21,22,23,26,27,28,30,32,35,36,37,40,45,46,57,62,65,66,68,69,70,71,72,73] |
| Data size | [15,19,20,22,24,30,35,37,57,61,62,66,68,69,70,73] |
| Data intents | [13,15,18,19,21,22,23,26,27,32,35,42,45,70,72,76] |
| Data preprocessing | [13,15,16,18,19,22,24,26,32,35,37,44,48,66,68,69,72] |

4.3. Technologies Shaping Educational Chatbots

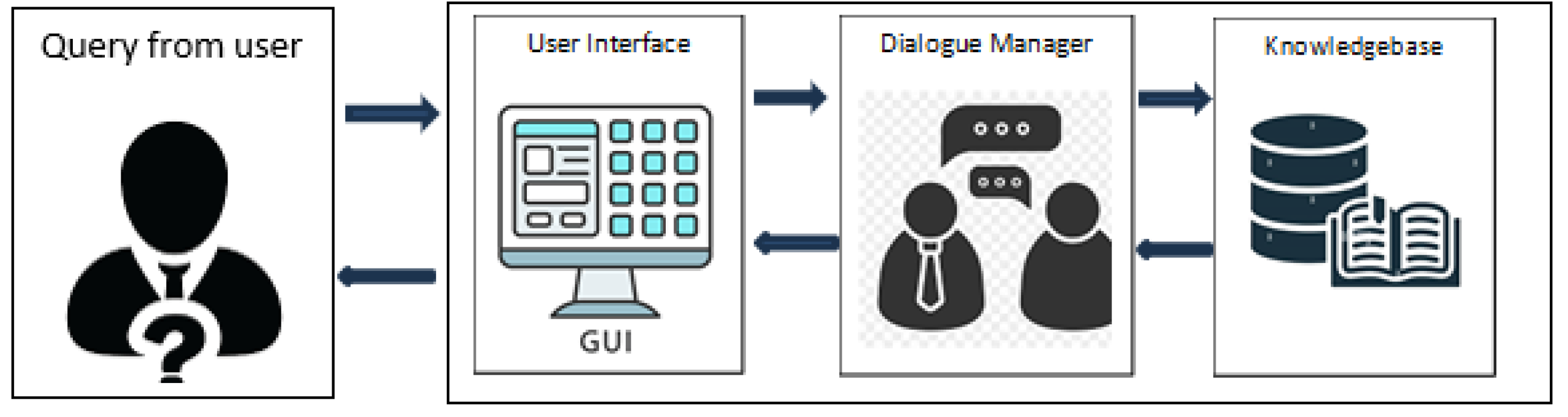

Table 4 summarizes the findings for RQ3. Chatbot architecture generally consists of three main parts: User Interface, Dialogue Manager, and Knowledge Base (Figure 9). When a user submits a query through an interface, such as a website or a social app messenger, the dialogue manager receives the message, comprehends it, and provides an appropriate response to the user.
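The three-part architecture can be sketched as three small components that pass a message along. This is a toy illustration, not a design taken from any reviewed study: the class names, the keyword-based intent matching, the fallback text, and the sample answers are all hypothetical.

```python
FALLBACK = "Sorry, I could not find an answer to that question."


class KnowledgeBase:
    """Stores one canned answer per intent (illustrative content only)."""

    def __init__(self, answers):
        self._answers = dict(answers)

    def lookup(self, intent):
        return self._answers.get(intent)


class DialogueManager:
    """Receives a message, works out the intent, and selects a response."""

    def __init__(self, knowledge_base, intent_keywords):
        self._knowledge_base = knowledge_base
        self._intent_keywords = intent_keywords

    def understand(self, message):
        # Naive comprehension step: first intent with a keyword in the message.
        text = message.lower()
        for intent, keywords in self._intent_keywords.items():
            if any(keyword in text for keyword in keywords):
                return intent
        return None

    def respond(self, message):
        intent = self.understand(message)
        if intent is None:
            return FALLBACK
        return self._knowledge_base.lookup(intent) or FALLBACK


def user_interface(dialogue_manager, message):
    """Stand-in for a web page or messenger front end."""
    return dialogue_manager.respond(message)


kb = KnowledgeBase({
    "tuition": "Tuition details are listed on the fees page.",
    "admission": "Admission requirements are listed on the admissions page.",
})
manager = DialogueManager(kb, {
    "tuition": ["tuition", "fee"],
    "admission": ["admission", "apply"],
})

print(user_interface(manager, "How much is the tuition fee?"))
print(user_interface(manager, "Where can I park?"))
```

Separating the three parts this way means the interface, the understanding logic, and the stored answers can each be swapped independently, which mirrors how the following subsections treat them one at a time.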

4.3.1. User Interfaces

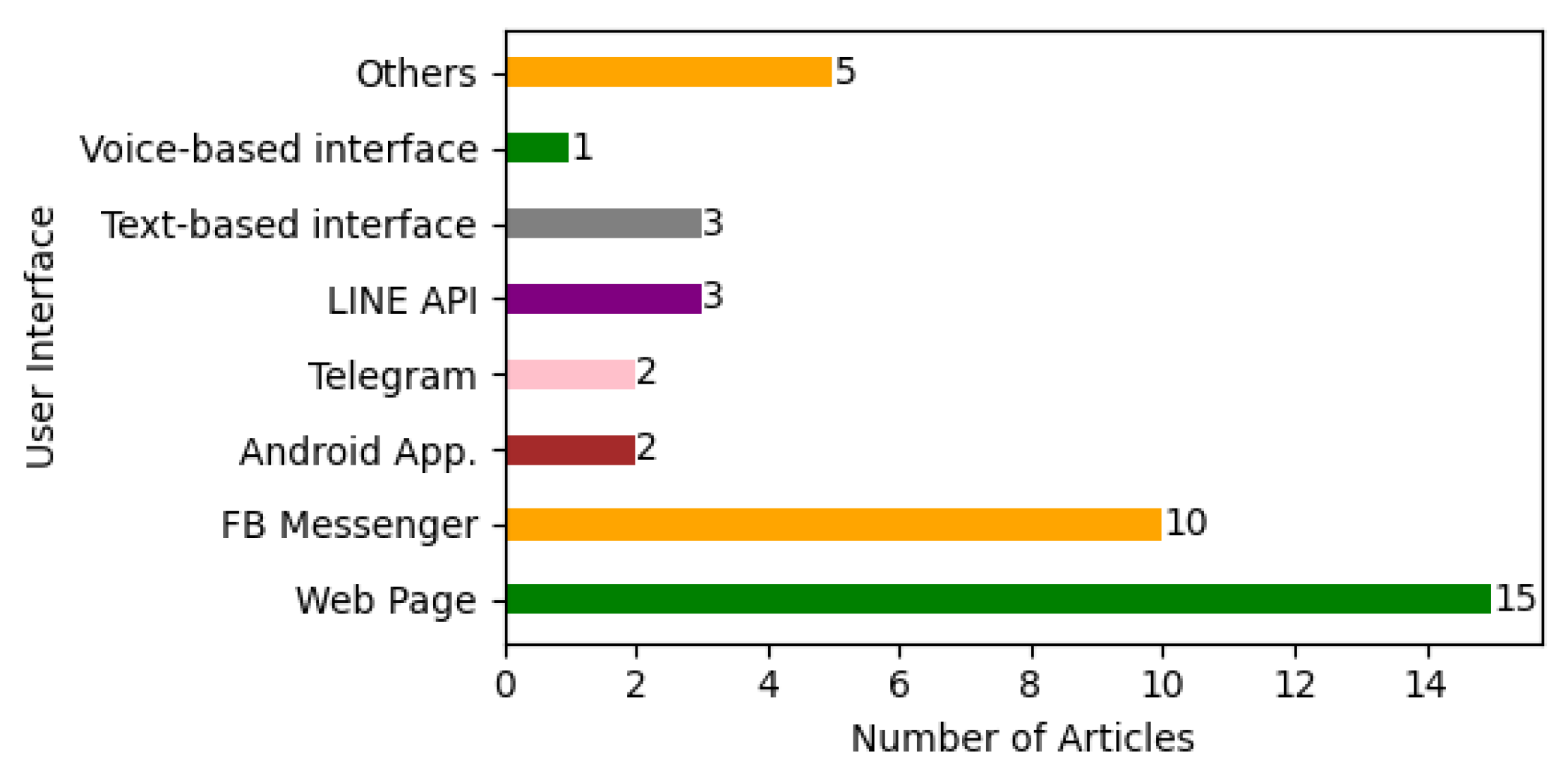

Figure 10 visually presents the distribution of user interfaces. The dominance of web interfaces (37%) reflects a focus on accessibility and integration with existing institutional websites. This ensures broad reach for users accustomed to online resources, but limitations in engagement compared to mobile apps or chat-specific platforms like Facebook Messenger (24%) cannot be ignored. Recognizing these diverse preferences, future research could explore hybrid solutions that leverage web-based accessibility while incorporating personalization features found in mobile or chat-specific platforms.

4.3.2. Dialogue Management

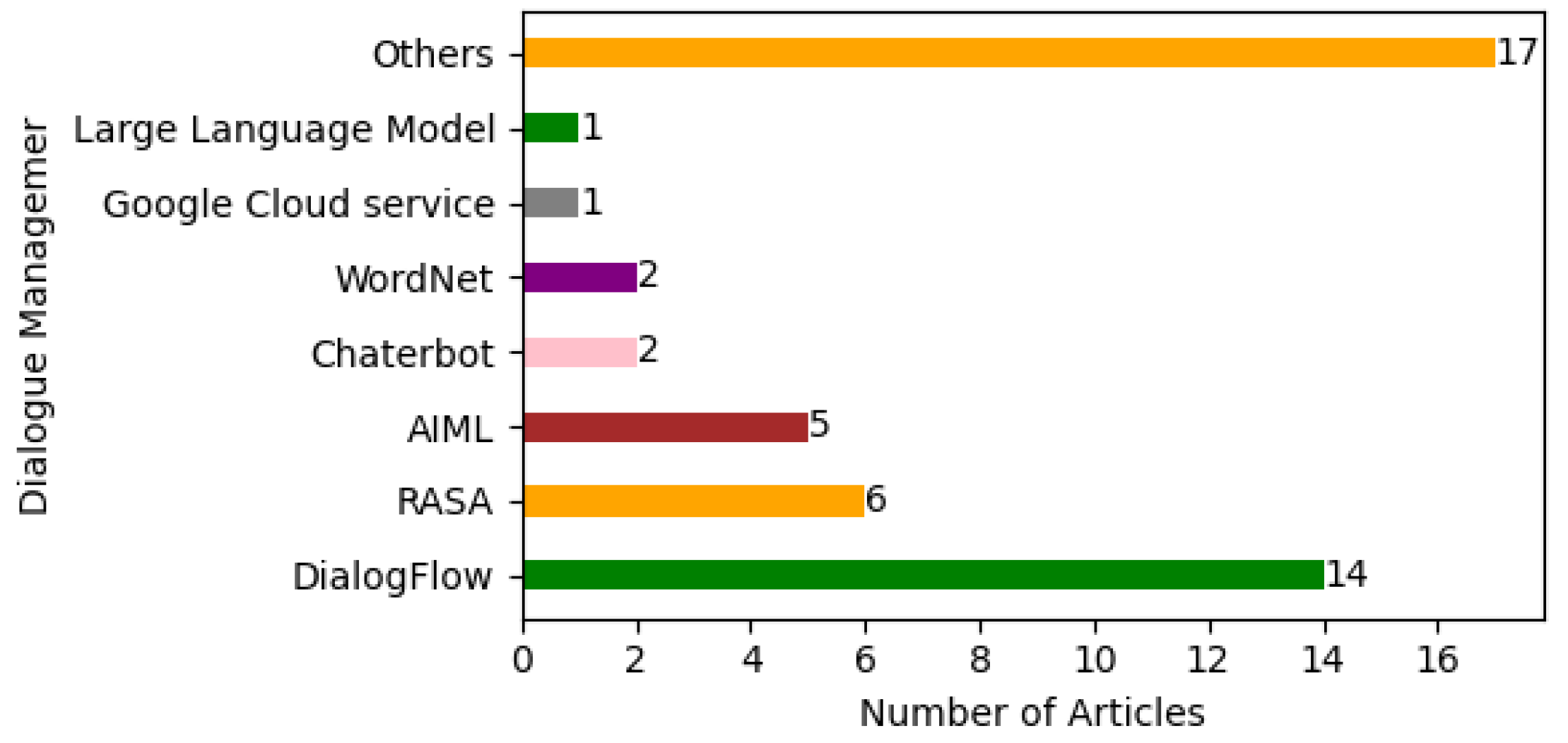

The identified dialogue managers are shown in Figure 11. The popularity of Dialogflow highlights its ease of use and pre-built templates, catering to efficient development for well-defined questions. However, its reliance on predefined rules necessitates the exploration of alternative approaches. The rise of NLP-powered platforms like RASA and GPT-based models showcases a future where chatbots can dynamically adapt to conversation, handle complex queries, and personalize responses. Research in this area should focus on optimizing NLP integration within dialogue management while ensuring data privacy and mitigating potential biases.

4.3.3. Knowledge Bases

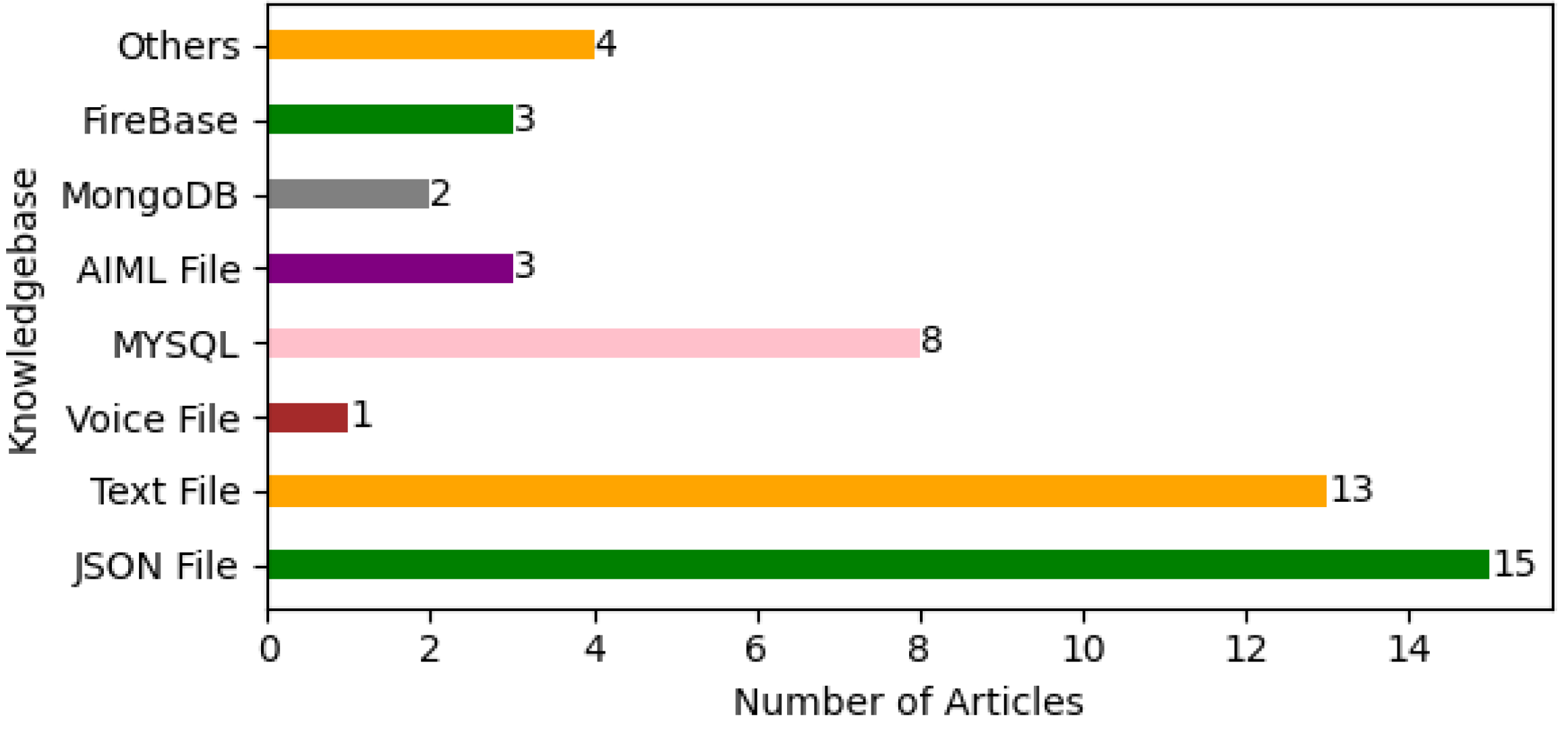

The knowledge base distribution is depicted in Figure 12. While JSON files (30%) are the most common knowledge base format, their simplicity comes at the cost of limited organization and scalability. Structured databases like MySQL (16%) and MongoDB (4%) offer significant advantages in data organization and future expansion. Additionally, integrating external knowledge sources, such as APIs or ontologies, holds considerable potential for enriching the knowledge base and broadening the chatbot’s capabilities. Moving forward, research efforts should explore best practices for designing and evolving knowledge bases, considering factors like data format, organization efficiency, and integration with external knowledge sources.

Regarding responses to user queries, chatbots operate through various models tailored to their functionality. These models encompass rule-based, retrieval-based, and generative-based approaches [1].

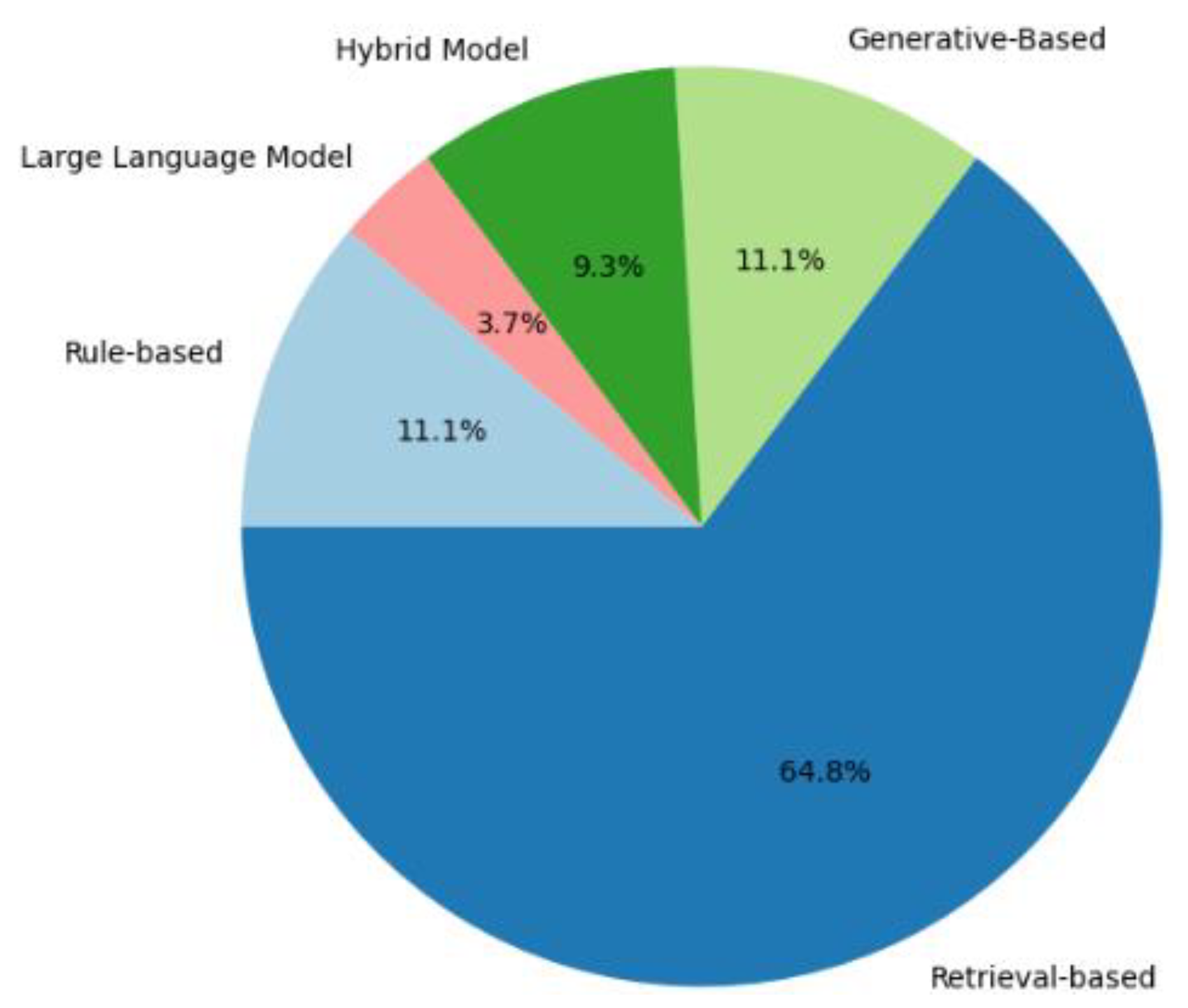

Figure 13 shows the different models of chatbots, each offering distinct advantages in conversational AI. Rule-based chatbots rely on predefined rules and patterns to generate responses, suitable for straightforward queries with predictable outcomes. Retrieval-based models retrieve pre-existing responses from a database based on similarity metrics, effectively handling a broader range of inquiries by matching user inputs with stored knowledge. Generative-based models, on the other hand, employ deep learning techniques to generate responses dynamically, allowing for more nuanced and contextually appropriate interactions.
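The difference between the first two model types can be made concrete with a short sketch. It is illustrative only: the rule pattern, the stored question-answer pairs, the use of Jaccard token overlap as the similarity metric, and the 0.3 threshold are all assumptions chosen for brevity rather than methods reported in the reviewed studies. A generative model is not shown because it would require a trained language model.

```python
import re

# Rule-based: a predefined pattern mapped to a fixed reply.
RULES = [
    (re.compile(r"\b(hi|hello)\b"), "Hello! How can I help you?"),
]

# Retrieval-based: stored questions paired with pre-written answers.
STORED_ANSWERS = {
    "When does admission open?": "Admission dates are on the admissions page.",
    "What are the library opening hours?": "Library hours are on the library page.",
}


def rule_based_reply(message):
    """Return the reply of the first rule whose pattern matches, else None."""
    for pattern, reply in RULES:
        if pattern.search(message.lower()):
            return reply
    return None


def tokenize(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Token-set overlap: |intersection| / |union|."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def retrieval_based_reply(message, threshold=0.3):
    """Return the stored answer whose question is most similar to the message."""
    query = tokenize(message)
    best_question = max(STORED_ANSWERS, key=lambda q: jaccard(query, tokenize(q)))
    if jaccard(query, tokenize(best_question)) < threshold:
        return None
    return STORED_ANSWERS[best_question]


print(rule_based_reply("Hello there"))
print(retrieval_based_reply("What are the opening hours of the library?"))
print(retrieval_based_reply("Can I bring my dog?"))
```

The retrieval path tolerates rephrasing because it scores overlap rather than requiring an exact pattern, but it still returns nothing for a question with no close stored counterpart.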

The prevalence of retrieval-based models (65%) stems from their ease of implementation for well-defined questions. However, their limitations with novel or complex queries necessitate the exploration of other approaches. Rule-based models (11%), though less prevalent, offer potential for specific tasks requiring logic or decision-making. While data requirements and potential biases remain challenges, generative models hold promise for dynamically generating creative responses based on context and understanding. Only five studies in the literature combined more than one model type to construct a hybrid approach. The combinations included rule-based and retrieval-based methods together as in [38], rule-based and generative-based methods as in [57], and rule-based methods with large language models as in [18]. Future research could explore more hybrid model combinations, dynamically switching between retrieval, rule-based, and generative approaches depending on user intent and context.

Figure 14 shows the distribution of Chatbot Models.

4.4. Chatbot Evaluation

Regarding RQ4, the findings are summarized in

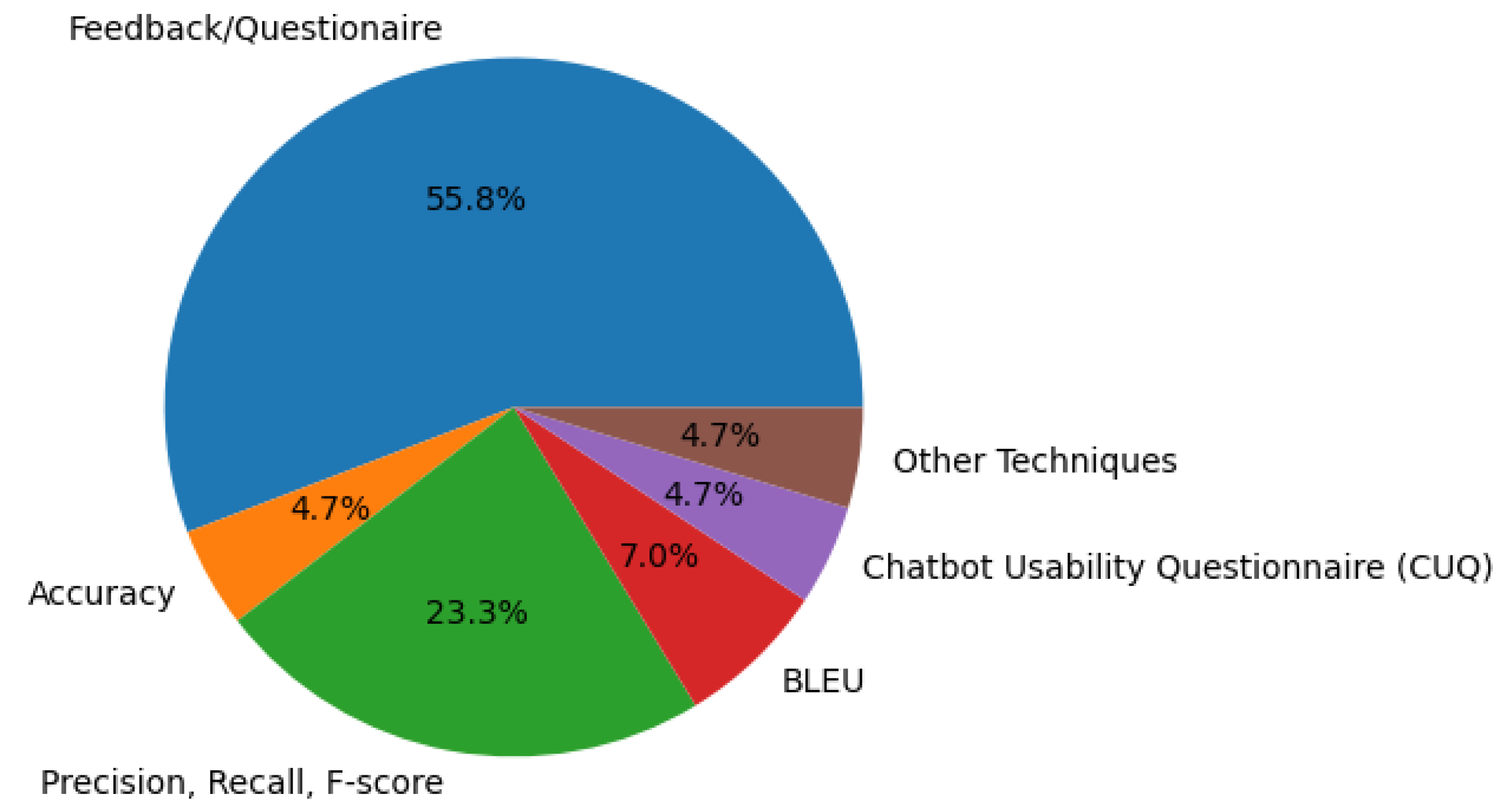

Table 5. Our exploration of RQ4, focusing on chatbot evaluation practices in education, reveals a surprisingly fragmented landscape. About 28% of the reviewed studies don’t conduct any form of evaluation, leaving a significant portion veiled in uncertainty. While this begs the question of why evaluation remains an optional exercise, it also presents an opportunity to define best practices for assessing the effectiveness of these promising educational tools.

Figure 15 illustrates the distribution of each evaluation approach. Among the studies that evaluated their chatbots, a diverse set of methods emerged, each offering unique insights. Automatic techniques like BLEU (7.0%), a metric originally developed for machine translation, provide objective, reference-based measures of response quality. Precision, recall, and F-score (23.3%) offer valuable data on the chatbot’s accuracy in handling specific tasks. However, the most popular technique (55.8%) involves user questionnaires, capturing subjective experiences and satisfaction levels. These evaluation efforts primarily focused on user satisfaction, usability, and efficiency, followed by aspects like fluency, reliability, and maintainability. Interestingly, the information retrieval rate, task completion rate, and user workload received less attention, potentially indicating gaps in current assessment practices. As [72] points out, focusing solely on effectiveness, usefulness, and user engagement might not paint the full picture. Considering the limited adoption of evaluation practices and the potential shortcomings of commonly used methods, future research needs to embrace more comprehensive approaches. One direction involves adopting mixed-method evaluations, combining objective data on performance with subjective user experiences. Additionally, researchers should explore context-specific metrics tailored to the educational tasks chatbots perform, ensuring a nuanced understanding of their impact.
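As a reminder of what the precision, recall, and F-score figures measure, the sketch below computes them for one intent class from expected and predicted intent labels. The intent names and the label lists are hypothetical test data, not results from any reviewed study.

```python
from typing import Sequence, Tuple


def precision_recall_f1(
    expected: Sequence[str], predicted: Sequence[str], positive: str
) -> Tuple[float, float, float]:
    """Compute precision, recall, and F1 for one intent label.

    precision = TP / (TP + FP), recall = TP / (TP + FN),
    F1 = harmonic mean of precision and recall.
    """
    pairs = list(zip(expected, predicted))
    tp = sum(1 for e, p in pairs if e == positive and p == positive)
    fp = sum(1 for e, p in pairs if e != positive and p == positive)
    fn = sum(1 for e, p in pairs if e == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Hypothetical evaluation set: the intent a human annotator expected
# versus the intent the chatbot predicted for the same five queries.
expected = ["admission", "fees", "admission", "library", "admission"]
predicted = ["admission", "admission", "admission", "library", "fees"]

p, r, f1 = precision_recall_f1(expected, predicted, positive="admission")
```

For the "admission" intent in this toy data there are two true positives, one false positive, and one false negative, so precision, recall, and F1 all equal 2/3. Such objective scores could be reported alongside questionnaire results in a mixed-method evaluation.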

4.5. Challenges and Future Functionalities of Chatbot Implementation

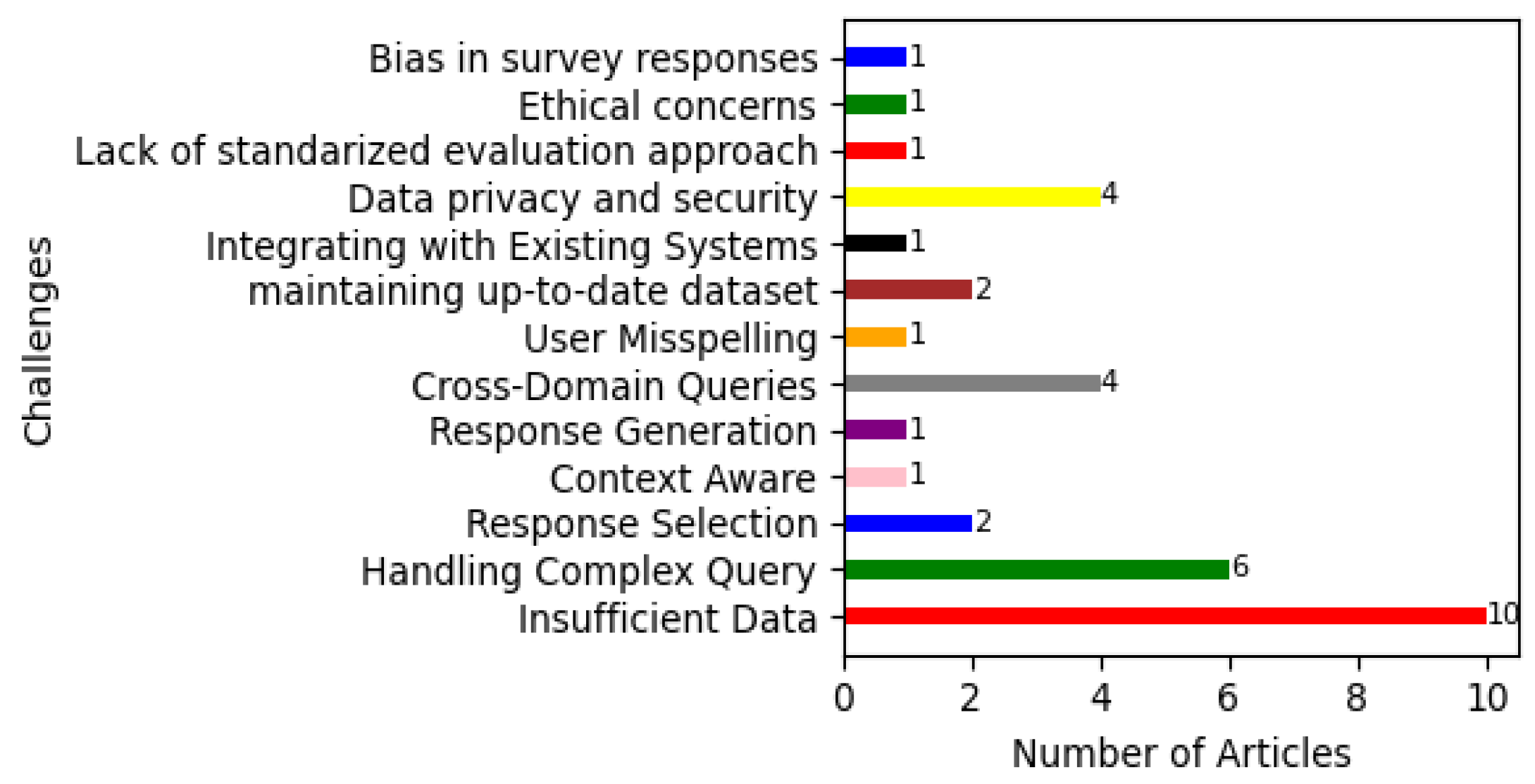

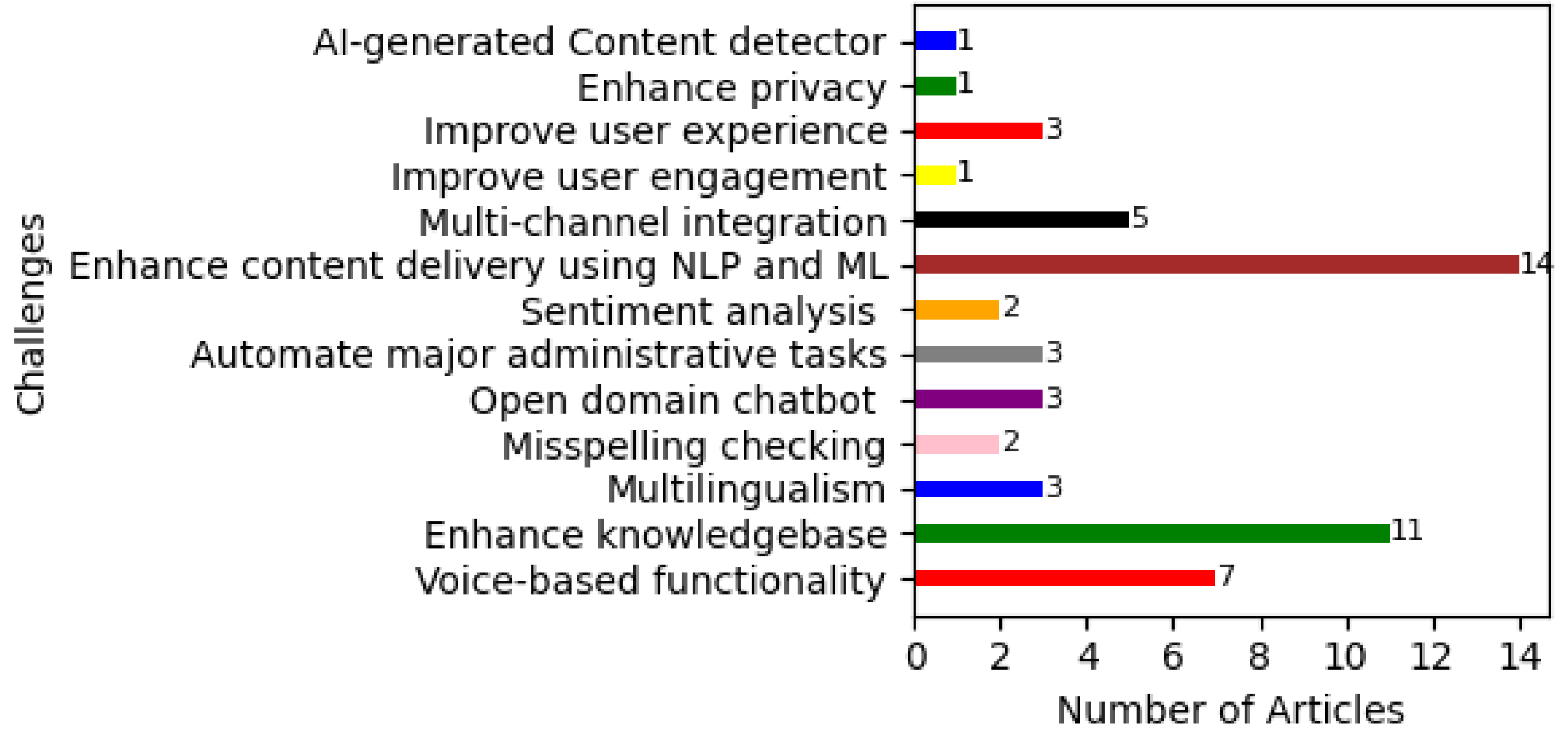

Regarding RQ5, the literature was analyzed to identify the current challenges encountered during chatbot implementation and to explore potential future functionalities. These results are presented in Table 6, and the distribution of articles about each challenge is depicted in Figure 16. Among the critical challenges, insufficient data, handling complex queries, cross-domain queries, and data privacy and security stand as formidable roadblocks. These limitations often lead to response challenges, hinder context awareness, and impede the ability to reduce administrative overhead. Additionally, challenges like response selection in retrieval-based chatbots, response generation in generative-based chatbots, maintaining up-to-date datasets, and the lack of a standardized chatbot evaluation approach further highlight the need for continued development.

Looking towards the future, several functionalities hold considerable potential. The functionalities have been consolidated in Table 7, and Figure 17 provides a graphical representation of the distribution of articles based on the functionalities they mention. As harnessing cutting-edge NLP and machine learning techniques is crucial for advancing chatbot capabilities and response accuracy, enhancing content delivery using NLP and ML is the functionality addressed by the largest share of studies in the literature (25%). Enhancing knowledge bases to incorporate qualified data is the second most prominent future functionality (18%). Voice-based interaction promises enhanced accessibility and natural user experiences (13%). Multilingual support can unlock broader information access and cater to diverse educational environments. Open-domain chatbots offer the potential for more natural and unrestricted interactions, while auto-correct features and sentiment analysis can improve user experience and personalize chatbot responses. Automating administrative tasks streamlines processes, and multi-channel integration provides seamless omnichannel support. The prioritization of these functionalities needs to consider their impact on key stakeholders. However, ethical considerations surrounding user information capture and sentiment analysis must be carefully addressed to ensure privacy and responsible data use.

5. Discussion

In this section, we discuss the implications of our findings in the context of the research questions. We explore how our results relate to the existing literature and address the research questions. We also identify any limitations of the study and suggest directions for future research to further develop the educational administration chatbot framework.

5.1. Research Questions Discussion

In this sub-section, all five research questions are discussed in terms of the findings.

5.1.1. Administrative Tasks/Functionalities of Chatbots in Education (RQ1)

Our exploration of RQ1, focusing on chatbot tasks in educational institutes, reveals a promising landscape ripe for expansion. As expected, the most dominant task is FAQ handling (74%), with chatbots answering user queries about institutions, academics, admissions, and more. This automation frees administrative staff for deeper interactions [54]. However, the potential of chatbots goes beyond information provision. They also reach into administrative support, demonstrating capabilities in tasks like managing user accounts, handling unanswered queries, and even basic process automation. Two key limitations become apparent. First, the current coverage of administrative objectives remains limited. The literature primarily focuses on well-defined, easily automated tasks, neglecting broader administrative domains within educational institutions. Many opportunities await exploration, from automated scheduling and enrollment management to student support, resource allocation, and even automated tuition fee payment. Second, the implementation of advanced administrative tasks remains in its infancy. Existing chatbots primarily excel at basic automation, leaving more complex workflows untouched. Streamlining course registration, offering personalized academic guidance, and managing student financial matters are just glimpses of the untapped potential. To address these limitations, future research should pursue two distinct paths. Firstly, comprehensive assessments of various administrative domains within educational organizations are crucial. These assessments should map administrative processes, identify bottlenecks, and pinpoint suitable tasks for chatbot intervention. This will expand the horizon beyond the current narrow focus to wider opportunities for optimization. Secondly, researchers and developers need to advance the design and implementation of functionalities for managing complex administrative tasks. This requires exploring sophisticated technologies like natural language processing and machine learning [1], combined with innovative design approaches that prioritize user trust and ethical considerations [56].

5.1.2. Data Used (RQ2)

By overlooking crucial data-related questions like data source transparency, data size, data intents, and data preprocessing, existing research hinders our understanding of potential biases and limitations in chatbot knowledge bases. This lack of transparency can pose significant risks, as users might be unaware of the sources influencing the chatbot’s responses, potentially breeding distrust and reducing the perceived accuracy of the information provided [51]. In their work, the authors of [52] emphasize the role of high-quality training data in establishing grounding. Limited or irrelevant data can hinder the chatbot’s ability to understand user intent and context, leading to misunderstandings and frustration. Our analysis revealed that about 57% of the reviewed studies mentioned the data sources and collection process, and about 30% addressed the data size and intent or topic of their chatbot knowledge bases. Data collection and management is considered by [75] as a major component of data quality. With limited information on the scope and depth of the data used, it is difficult to assess the chatbot’s ability to handle complex inquiries or address a wider range of topics. Future research efforts need to focus on developing frameworks for comprehensive data collection and utilization in educational chatbot development. This might involve exploring diverse data sources beyond institute websites, investigating effective data preprocessing techniques to mitigate biases, and integrating user feedback mechanisms to personalize the chatbot’s knowledge base and ensure its ongoing relevance and accuracy.
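To illustrate what a documented preprocessing step might look like, the sketch below normalizes, anonymizes, and de-duplicates raw text records before they enter a knowledge base. It is a minimal example under stated assumptions: the student ID format (the letter S followed by seven digits) and the placeholder tokens are hypothetical, and the e-mail pattern is a simplification that will not cover every valid address.

```python
import re
from typing import Iterable, List

# Simplified e-mail pattern (an assumption; not a full address validator).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Hypothetical ID format for illustration only: "S" followed by seven digits.
STUDENT_ID = re.compile(r"\bS\d{7}\b", re.IGNORECASE)


def normalize(text: str) -> str:
    """Collapse runs of whitespace, trim the ends, and lowercase."""
    return re.sub(r"\s+", " ", text).strip().lower()


def anonymize(text: str) -> str:
    """Replace e-mail addresses and student IDs with placeholder tokens."""
    text = EMAIL.sub("<EMAIL>", text)
    return STUDENT_ID.sub("<STUDENT_ID>", text)


def preprocess(records: Iterable[str]) -> List[str]:
    """Normalize, anonymize, and de-duplicate records, preserving order."""
    seen = set()
    cleaned = []
    for record in records:
        item = anonymize(normalize(record))
        if item and item not in seen:
            seen.add(item)
            cleaned.append(item)
    return cleaned
```

Reporting steps like these, together with the data source and size, would let readers judge how the knowledge base was built and what personal information was removed.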

5.1.3. Technologies Shaping Educational Chatbots (RQ3)

RQ3 examined the technologies used in chatbot architecture. The analysis of chatbot architecture in educational settings can be summarized as follows. Web platforms are the most common user interface (37%), reflecting a focus on accessibility and integration with existing institutional websites. This ensures broad reach but risks sacrificing engagement compared to mobile apps or platforms like Facebook Messenger (24%). Notably, 25% of studies did not mention their user interface choice, highlighting a potential area for improved transparency in future research. Dialogflow emerges as the most popular dialogue manager platform (29%), likely due to its ease of use and pre-built templates. However, 63% of studies remained silent on the rationale behind their dialogue manager selection, whether opting for existing platforms or building their own using NLP/Neural networks. Understanding the driving force behind these choices is crucial for evaluating their appropriateness and effectiveness [77]. JSON files (30%) dominate as knowledge bases, likely due to their simplicity. However, limitations in organization and scalability necessitate exploration of databases like MySQL (16%) and MongoDB (4%). Furthermore, 10% of studies did not specify their knowledge base format, indicating another area requiring greater transparency in reporting technologies.
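The appeal of a JSON knowledge base, and its scalability limits, can be seen in a small sketch. The layout below (an `intents` list with `tag`, `patterns`, and `responses` fields) is an assumed, commonly seen arrangement used here for illustration; the reviewed studies do not prescribe it, and the sample content is hypothetical.

```python
import json
from typing import Dict, Optional

# Hypothetical knowledge base; field names and content are assumptions.
KNOWLEDGE_BASE_JSON = """
{
  "intents": [
    {
      "tag": "admission_deadline",
      "patterns": ["admission deadline", "last date to apply"],
      "responses": ["Applications close at the end of June."]
    },
    {
      "tag": "library_hours",
      "patterns": ["library hours", "library open"],
      "responses": ["The library is open from 8:00 to 20:00."]
    }
  ]
}
"""


def load_intents(raw: str) -> Dict[str, dict]:
    """Parse the JSON text and index the intents by their tag."""
    data = json.loads(raw)
    return {intent["tag"]: intent for intent in data["intents"]}


def find_response(intents: Dict[str, dict], query: str) -> Optional[str]:
    """Return the first response of the first intent whose pattern
    occurs as a substring of the lowercased query."""
    text = query.lower()
    for intent in intents.values():
        if any(pattern in text for pattern in intent["patterns"]):
            return intent["responses"][0]
    return None


intents = load_intents(KNOWLEDGE_BASE_JSON)
```

The whole file is loaded into memory and every pattern is scanned on each query, which is simple for a few dozen intents but is one reason larger deployments may prefer a database with indexed lookups.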

The literature leans heavily towards retrieval-based chatbot models (65%), likely due to their relative ease of implementation for well-defined questions. However, the absence of clear justifications for choosing this model over generative (11%) or rule-based (11%) models leaves a critical gap in understanding. Additionally, only five studies combined more than one model type, so opportunities to leverage the strengths of multiple approaches remain largely unexplored. To enhance future research in this area, studies need to provide detailed explanations for:

- Selecting dialogue manager platforms (e.g., Dialogflow, RASA) or building their own using NLP/Neural networks, considering factors like performance, scalability, ease of implementation, and suitability for specific educational needs.

- Choosing specific chatbot models (retrieval, generative, rule-based) based on task complexity, user intent, and desired level of personalization.

- Exploring the potential benefits and challenges of hybrid models that combine different techniques.

In their recently published work, the authors of [53] mentioned that despite notable advancements in natural language processing in recent years, the underlying technology of chatbots remains partially developed, leading to frequent errors in user interactions. Their research revealed that errors made by chatbots adversely impact users’ perceptions of Ease of Use, Usefulness, Enjoyment, and Social Presence. The authors of [78] support the same claim and state that while the adoption of AI-powered chatbots has been driven by the promise of cost and time savings, they often fall short of meeting customer expectations, potentially leading to reduced user compliance with chatbot requests. Addressing these shortcomings will lead to a more comprehensive understanding of chatbot implementation in educational settings, paving the way for the development of engaging and effective learning experiences. Large language models such as ChatGPT or Gemini can be part of these solutions. As a recent study noted, ChatGPT has quickly gained significant interest from academics, researchers, and businesses [12]. However, the analysis of the literature revealed that the utilization of generative AI in educational administration is still limited.

5.1.4. Chatbot Evaluation in Education (RQ4)

Our exploration of RQ4 reveals a perplexing situation in educational chatbot evaluation. Dedicated tools and standardized evaluation frameworks are absent [1]. Reliable human evaluation is crucial for understanding these models, but it is hampered by the absence of standard testing procedures and the secrecy surrounding the models’ code and parameters [79]. This evaluation gap hinders our understanding of chatbot effectiveness and impedes their potential to revolutionize education. While numerous models rely on human assessment, this method is costly, labor-intensive, difficult to scale, prone to biases, and inconsistent. To address these limitations, there is a call for the development of a novel, dependable automatic evaluation method. In support of the importance of the evaluation process, the authors of [77] stated that numerous institutions continue to overlook the potential of Conversational Agents (CAs) due to a deficiency in understanding how to assess and enhance their quality, hindering their sustainable integration into organizational functions. This challenge also presents an opportunity, and three key solutions have been proposed:

- Forging Specialized Tools: Instead of relying on repurposed metrics from other domains, researchers should develop specialized evaluation tools tailored to the unique functionalities and contexts of educational chatbots. These tools should offer automated and reliable assessments of performance, accuracy, and user satisfaction, providing crucial insights to researchers and developers.

- Building a Common Framework: Collaboration is key to crafting a shared language for chatbot evaluation. By establishing a common set of criteria and methodologies, encompassing user satisfaction, response accuracy, and system usability, we can ensure consistent and comparable evaluations across studies. Standardized questionnaires and evaluation mechanisms would empower researchers and inform stakeholders.

- Illuminating the Details: Transparency is vital. Researchers need to embrace meticulous reporting practices, explicitly documenting sample sizes and participant demographics. This fosters trust in evaluation results, paving the way for reproducibility and robust research advancements.
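As one example of what a standardized questionnaire score could look like, the sketch below computes the System Usability Scale (SUS) score. SUS is named here purely as an illustration of a widely used standardized usability instrument; the passage above does not prescribe it, and the participant responses are hypothetical. The scoring rule itself is the standard one: ten items rated 1 to 5, odd-numbered items contribute (rating − 1), even-numbered items contribute (5 − rating), and the sum is multiplied by 2.5 to give a 0 to 100 score.

```python
from statistics import mean
from typing import List, Sequence


def sus_score(ratings: Sequence[int]) -> float:
    """Compute the SUS score (0-100) from ten ratings on a 1-5 scale."""
    if len(ratings) != 10:
        raise ValueError("SUS requires exactly ten item ratings")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("each rating must be between 1 and 5")
    total = 0
    for index, rating in enumerate(ratings, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (rating - 1) if index % 2 == 1 else (5 - rating)
    return total * 2.5


def mean_sus(all_ratings: List[Sequence[int]]) -> float:
    """Average SUS score over all participants."""
    return mean(sus_score(r) for r in all_ratings)


# Hypothetical responses from two participants.
participants = [
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],  # best possible rating pattern
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],  # neutral on every item
]
```

Reporting such a score together with the sample size and participant demographics would make results comparable across studies.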

In response to these limitations, the authors of [80] explore mathematical frameworks that connect individual model metrics to an answerability metric and present modeling approaches for predicting a chatbot’s answerability to user queries. The authors of [79] introduce ChatEval, a tool designed for the evaluation of chatbots. It addresses the challenges in evaluating open-domain dialog systems by providing a unified framework for human evaluation. Such responses to the problem remain limited, and addressing these limitations holds considerable potential. Specialized tools can streamline evaluations, standardized frameworks can ensure consistency, and improved reporting can build trust. According to [6], the evaluation aspect poses a significant challenge in the implementation of Chatbot systems in education. They highlight the importance of conducting appropriate evaluations to test the system’s usefulness, recommending the use of a larger and more representative sampling population. Our review findings are consistent with this perspective on evaluation.

5.1.5. Challenges and Future Functionality (RQ5)

To identify current practices and future functionalities, [56] refers to the importance of analyzing the challenges that are faced in developing effective chatbots. Therefore, RQ5 investigated the literature to identify the current challenges and future functionalities in educational institutes regarding chatbot implementation. Insufficient data, complex user queries, and language dependence emerge as critical challenges, often leading to limitations in response generation, context awareness, and administrative support. These hurdles can hinder chatbot effectiveness and adoption within educational institutions. To address these challenges and unlock the full potential of chatbots, several promising functionalities can be implemented. Extensive and diverse knowledge bases informed by various sources can minimize “out-of-knowledge” situations. As highlighted by [74], voice-based interaction enhances accessibility, catering to diverse user needs. Additionally, harnessing cutting-edge NLP and ML techniques can improve response quality in both retrieval-based and generative chatbots [16]. Breaking down language barriers is crucial. We propose developing multilingual chatbots, trained on multi-language data, as only 5% of existing chatbots can interact in more than one language. Furthermore, implementing login functionality enables personalized information delivery based on user level. Transitioning from closed-domain to open-domain chatbots can help tackle cross-domain query challenges. To provide a seamless user experience, integrating chatbots across multiple communication channels like social media is valuable. Finally, automating administrative tasks such as submissions, enrollments, and notifications streamlines processes and reduces overhead [54]. The prioritization of these functionalities should consider their impact on key stakeholders. Students benefit from personalized learning support, efficient administrative processes, and accessible information. Educators gain valuable time through task automation, while administrators enjoy improved efficiency and data-driven insights. However, ethical considerations surrounding user data collection and analysis must be carefully addressed to ensure privacy and responsible data use. By overcoming the identified challenges and implementing these functionalities, the full potential of educational chatbots could be unlocked. These intelligent assistants have the power to personalize learning, streamline administration, and empower all stakeholders in the educational ecosystem.

5.2. Implications

Our findings reveal the potential of administrative chatbots in educational institutions, with significant implications for both future research and practical applications. Regarding future research directions, the implications that need to be considered are:

- Standardized Framework Development: Existing research on administrative chatbot development suffers from a lack of standardization. Future studies should work towards establishing a standardized framework for chatbot implementation. This framework should define consistent data characteristics, data collection practices [75], implementation technologies [1], and evaluation methods [77].

- Exploring Generative AI Applications: Given the limited application of Generative AI in educational administration (as highlighted in our review), future research should investigate how these advanced AI technologies can be integrated into administrative chatbots to enhance their capabilities and effectiveness. This could involve exploring Generative AI for administrative tasks that are more complex than tasks addressed in the literature.

- Comprehensive Evaluation Methodologies: The full potential of chatbots remains unrealized in many organizations due to the lack of knowledge about how to assess and enhance their quality for ongoing use within the organization [77]. Therefore, developing effective and consistent evaluation methodologies is crucial. These methodologies should incorporate diverse user groups (students, faculty, staff) and assess task completion rates, user satisfaction, ethical considerations such as data privacy [56], and impacts on learning outcomes [8].

- Ethical Considerations: The ethical implications of chatbot technology in education require deeper exploration. This includes addressing issues such as data privacy and security [32], user consent [45], potential biases in chatbot responses [35], and the potential for job displacement in administrative roles.

Regarding the practical applications for educational institutions, the potential implications are:

- Identify Key Tasks: Identify the administrative tasks/functionalities that can benefit from automation through chatbots. Prioritize tasks with high repetition, clear procedures, and readily available data.

- User-Centered Design: Employ accessible and user-friendly interface designs that cater to diverse user populations, including students, parents, faculty, and staff. Integrate real-time feedback mechanisms to gather continuous user input and refine the chatbot’s performance.

- Comprehensive Data Collection: Ensure access to robust and well-curated datasets relevant to the identified tasks. Use reliable sources, employ appropriate preprocessing techniques such as data cleaning and normalization, and address ethical considerations throughout data collection and use, for example through anonymization.

- Targeted Evaluation: Conduct rigorous evaluations using methodologies that incorporate diverse user groups and go beyond task completion rates. Measure user satisfaction, identify areas for improvement, and assess the impact on learning outcomes such as student performance and course completion rates.

- Stakeholder Involvement: Involve all stakeholders, including students, faculty, and administrators, throughout the chatbot development and implementation process. This ensures their needs are addressed, concerns are mitigated, and the chatbot aligns with institutional goals.

- Continuous Improvement: Implement a feedback loop to gather user input, identify shortcomings, and make data-driven improvements to the chatbot’s performance over time.

In summary, by acting on these implications and actively researching the identified areas, educational institutions can harness the potential of administrative chatbots to streamline processes, enhance user experience, and contribute to a more efficient and personalized learning environment.

6. Conclusion

This study investigated the effective use of chatbots for administrative tasks in educational institutions through a systematic review of 54 articles. To uncover the key factors of a potential chatbot framework for educational administration, we analyzed the tasks/functionalities, data sources, technologies, evaluation methods, and implementation challenges of existing chatbots. A critical gap was found: the lack of a general framework specifically designed for developing administrative chatbots in educational settings. Additionally, several other critical gaps including limited research in this domain and the lack of integration of generative AI, such as ChatGPT, in educational administration were identified as important areas for further research. The findings hold significant implications for both future research and practical applications. Researchers can explore the main characteristics of a comprehensive framework for educational administration chatbots. Institutions, on the other hand, can employ generative AI to implement effective administrative chatbots tailored to their requirements. Ultimately, this research contributes to a future where chatbots easily support educational workflows, fostering an enhanced learning experience for all stakeholders.

Author Contributions

KA: Conceptualization, Methodology, Writing the Initial Draft, and Revision; CO: Conceptualization and Supervision; HM: Revision and Restructuring the Initial Draft; TA: Methodology, Restructuring the Initial Draft, and Revision. All authors have reviewed and approved the final manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data used and analyzed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- G. Caldarini, S. Jaf, and K. McGarry, “A Literature Survey of Recent Advances in Chatbots,” Inf., vol. 13, no. 1, 2022, doi: 10.3390/info13010041. [CrossRef]

- P. A. Olujimi and A. Ade-Ibijola, “NLP techniques for automating responses to customer queries: a systematic review,” Discov. Artif. Intell., vol. 3, no. 1, 2023, doi: 10.1007/s44163-023-00065-5. [CrossRef]

- C. W. Okonkwo and A. Ade-Ibijola, “Chatbots applications in education: A systematic review,” Comput. Educ. Artif. Intell., vol. 2, 2021, doi: 10.1016/j.caeai.2021.100033. [CrossRef]

- L. Chen, P. Chen, and Z. Lin, “Artificial Intelligence in Education: A Review,” IEEE Access, vol. 8, pp. 75264–75278, 2020, doi: 10.1109/ACCESS.2020.2988510. [CrossRef]

- W. Villegas-ch, J. Garc, K. Mullo-ca, S. Santiago, and M. Roman-cañizares, “Implementation of a Virtual Assistant for the Academic Management of a University with the Use of Artificial Intelligence,” Futur. Internet, vol. 13, no. 4, p. 97, 2021, doi: 10.3390/fi13040097. [CrossRef]

- C. W. Okonkwo and A. Ade-Ibijola, “Chatbots applications in education: A systematic review,” Comput. Educ. Artif. Intell., vol. 2, p. 100033, 2021, doi: 10.1016/j.caeai.2021.100033. [CrossRef]

- S. Wollny, J. Schneider, D. Di Mitri, J. Weidlich, M. Rittberger, and H. Drachsler, “Are We There Yet? - A Systematic Literature Review on Chatbots in Education,” in Frontiers in Artificial Intelligence, 2021, vol. 4, no. July, pp. 1–18. doi: 10.3389/frai.2021.654924. [CrossRef]

- M. A. Kuhail, N. Alturki, S. Alramlawi, and K. Alhejori, “Interacting with educational chatbots: A systematic review,” Educ. Inf. Technol., vol. 28, no. 1, pp. 973–1018, 2022, doi: 10.1007/s10639-022-11177-3. [CrossRef]

- J. Q. Pérez, T. Daradoumis, and J. M. M. Puig, “Rediscovering the use of chatbots in education: A systematic literature review,” Comput. Appl. Eng. Educ., vol. 28, no. 6, pp. 1549–1565, 2020, doi: 10.1002/cae.22326. [CrossRef]

- R. Winkler and M. Soellner, “Unleashing the Potential of Chatbots in Education: A State-Of-The-Art Analysis,” Acad. Manag. Proc., vol. 2018, no. 1, p. 15903, 2018, doi: 10.5465/AMBPP.2018.15903abstract. [CrossRef]

- D. T. T. Mai, C. Van Da, and N. Van Hanh, “The use of ChatGPT in teaching and learning: a systematic review through SWOT analysis approach,” Front. Educ., vol. 9, no. February, pp. 1–17, 2024, doi: 10.3389/feduc.2024.1328769. [CrossRef]

- P. P. Ray, “ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope,” Internet Things Cyber-Physical Syst., vol. 3, no. March, pp. 121–154, 2023, doi: 10.1016/j.iotcps.2023.04.003. [CrossRef]

- K. Ramalakshmi, D. J. David, M. Selvarathi, and T. J. Jebaseeli, “Using Artificial Intelligence Methods to Create a Chatbot for University Questions and Answers,” Eng. Proc., vol. 59, no. 1, pp. 1–9, 2023, doi: 10.3390/engproc2023059016. [CrossRef]

- N. Alzaabi, M. Mohamed, H. Almansoori, M. Alhosani, and N. Ababneh, “ADPOLY Student Information Chatbot,” 2022 5th Int. Conf. Data Storage Data Eng., pp. 108–112, 2022, doi: 10.1145/3528114.3528132. [CrossRef]

- R. Isaev, R. Gumerov, G. Esenalieva, R. R. Mekuria, and E. Doszhanov, “HIVA: Holographic Intellectual Voice Assistant,” Proc. - 2023 17th Int. Conf. Electron. Comput. Comput. ICECCO 2023, pp. 1–6, 2023, doi: 10.1109/ICECCO58239.2023.10146600. [CrossRef]

- N. Muangnak, N. Thasnas, T. Hengsanunkul, and J. Yotapakdee, “The neural network conversation model enables the commonly asked student query agents,” Int. J. Adv. Comput. Sci. Appl., vol. 11, no. 4, pp. 154–164, 2020, doi: 10.14569/IJACSA.2020.0110421. [CrossRef]

- J. Thakkar, P. Raut, Y. Doshi, and K. Parekh, “Erasmus AI Chatbot,” Int. J. Comput. Sci. Eng., vol. 6, no. 10, pp. 498–502, 2018, doi: 10.26438/ijcse/v6i10.498502. [CrossRef]

- H. Dinh and T. K. Tran, “EduChat: An AI-Based Chatbot for University-Related Information Using a Hybrid Approach,” Appl. Sci., vol. 13, no. 22, p. 12446, 2023, doi: 10.3390/app132212446. [CrossRef]

- R. Lucien and S. Park, “Design and Development of an Advising Chatbot as a Student Support Intervention in a University System,” TechTrends, vol. 68, no. 1, pp. 79–90, 2024, doi: 10.1007/s11528-023-00898-y. [CrossRef]

- H. T. Hien, P.-N. Cuong, L. N. H. Nam, H. L. T. K. Nhung, and L. D. Thang, “Intelligent Assistants in Higher-Education Environments: The FIT-EBot, a Chatbot for Administrative and Learning Support,” in Proceedings of the 9th International Symposium on Information and Communication Technology (SoICT ’18), 2018, pp. 69–76. doi: 10.1145/3287921.3287937. [CrossRef]

- S. Mendoza, L. M. Sánchez-adame, J. F. Urquiza-yllescas, B. A. González-beltrán, and D. Decouchant, “A Model to Develop Chatbots for Assisting the Teaching and Learning Process,” pp. 1–21, 2024.

- K. N. Lam, L. H. Nguy, V. L. Le, and J. Kalita, “A Transformer-Based Educational Virtual Assistant Using Diacriticized Latin Script,” IEEE Access, vol. 11, no. August, pp. 90094–90104, 2023, doi: 10.1109/ACCESS.2023.3307635. [CrossRef]

- S. C. Man, O. Matei, T. Faragau, L. Andreica, and D. Daraba, “The Innovative Use of Intelligent Chatbot for Sustainable Health Education Admission Process: Learnt Lessons and Good Practices,” Appl. Sci., vol. 13, no. 4, 2023, doi: 10.3390/app13042415. [CrossRef]

- T. T. Nguyen, A. D. Le, H. T. Hoang, and T. Nguyen, “NEU-chatbot: Chatbot for admission of National Economics University,” Comput. Educ. Artif. Intell., vol. 2, p. 100036, 2021, doi: 10.1016/j.caeai.2021.100036. [CrossRef]

- H. Agus Santoso et al., “Dinus Intelligent Assistance (DINA) Chatbot for University Admission Services,” Proc. - 2018 Int. Semin. Appl. Technol. Inf. Commun. Creat. Technol. Hum. Life, iSemantic 2018, pp. 417–423, 2018, doi: 10.1109/ISEMANTIC.2018.8549797. [CrossRef]

- W. El Hefny, Y. Mansy, M. Abdallah, and S. Abdennadher, “Jooka: A Bilingual Chatbot for University Admission,” in Trends and Applications in Information Systems and Technologies, 2021, pp. 671–681. doi: 10.1007/978-3-030-72660-7. [CrossRef]

- A. Aloqayli and H. Abdelhafez, “Intelligent Chatbot for Admission in Higher Education,” Int. J. Inf. Educ. Technol., vol. 13, no. 9, pp. 1348–1357, 2023, doi: 10.18178/ijiet.2023.13.9.1937. [CrossRef]

- S. Sakulwichitsintu, “ParichartBOT: a chatbot for automatic answering for postgraduate students of an open university,” Int. J. Inf. Technol., vol. 15, no. 3, pp. 1387–1397, 2023, doi: 10.1007/s41870-023-01176-z. [CrossRef]

- R. B. K. Md. Abdullah Al Muid, Md. Masum Reza, M. M. Reza, R. Bin Kalim, N. Ahmed, M. T. Habib, and M. S. Rahman, “Edubot: An unsupervised domain-specific chatbot for educational institutions,” Lect. Notes Networks Syst., vol. 144, pp. 166–174, 2021, doi: 10.1007/978-3-030-53970-2_16. [CrossRef]

- D. Al-ghadhban and N. Al-twairesh, “Nabiha: An Arabic Dialect Chatbot,” Int. J. Adv. Comput. Sci. Appl., vol. 11, no. 3, pp. 452–459, 2020, doi: 10.14569/IJACSA.2020.0110357. [CrossRef]

- P. Udupa, “Application of artificial intelligence for university information system,” Eng. Appl. Artif. Intell., vol. 114, p. 105038, 2022, doi: 10.1016/j.engappai.2022.105038. [CrossRef]

- R. Ren, S. Perez-Soler, J. W. Castro, O. Dieste, and S. T. Acuna, “Using the SOCIO Chatbot for UML Modeling: A Second Family of Experiments on Usability in Academic Settings,” IEEE Access, vol. 10, no. November, pp. 130542–130562, 2022, doi: 10.1109/ACCESS.2022.3228772. [CrossRef]

- N. Thalaya and K. Puritat, “BCNPYLIB CHAT BOT: The artificial intelligence Chatbot for library services in college of nursing,” in 2022 Joint International Conference on Digital Arts, Media and Technology with ECTI Northern Section Conference on Electrical, Electronics, Computer and Telecommunications Engineering (ECTI DAMT & NCON), 2022, pp. 247–251. doi: 10.1109/ECTIDAMTNCON53731.2022.9720367. [CrossRef]

- S. Rodriguez and C. Mune, “Uncoding library chatbots: deploying a new virtual reference tool at the San Jose State University library,” Ref. Serv. Rev., vol. 50, no. 3–4, pp. 392–405, 2022, doi: 10.1108/RSR-05-2022-0020. [CrossRef]

- J. Barzola-Monteses and J. Guillén-Mirabá, “CSM: a Chatbot Solution to Manage Student Questions About payments and,” IEEE Access, vol. 4, pp. 1–12, 2024, doi: 10.1109/ACCESS.2024.3404008. [CrossRef]

- R. Parkar, Y. Payare, K. Mithari, J. Nambiar, and J. Gupta, “AI and Web-Based Interactive College Enquiry Chatbot,” Proc. 13th Int. Conf. Electron. Comput. Artif. Intell., pp. 1–5, 2021, doi: 10.1109/ECAI52376.2021.9515065. [CrossRef]

- A. K. Nikhath et al., “An Intelligent College Enquiry Bot using NLP and Deep Learning based techniques,” 2022 Int. Conf. Adv. Technol. (ICONAT), pp. 1–6, 2022, doi: 10.1109/ICONAT53423.2022.9725865. [CrossRef]

- N. A. Al-Madi, K. A. Maria, M. A. Al-Madi, M. A. Alia, and E. A. Maria, “An Intelligent Arabic Chatbot System Proposed Framework,” 2021 Int. Conf. Inf. Technol., pp. 592–597, 2021, doi: 10.1109/ICIT52682.2021.9491699. [CrossRef]

- K. Lee, J. Jo, and J. Kim, “Can Chatbots Help Reduce the Workload of Administrative Officers? - Implementing and Deploying FAQ Chatbot Service in a University,” in 21st International Conference on Human-Computer Interaction, HCI International 2019, 2019, pp. 348–354. doi: 10.1007/978-3-030-23522-2. [CrossRef]

- A. Agung and S. Gunawan, “Ava: knowledge-based chatbot as virtual assistant in university,” ICIC Express Lett. Part B Appl., vol. 13, no. 4, pp. 437–444, 2022, doi: 10.24507/icicelb.13.04.437. [CrossRef]

- M. Dibitonto, K. Leszczynska, F. Tazzi, and C. M. Medaglia, “Chatbot in a Campus Environment: Design of LiSA, a Virtual Assistant to Help Students in Their University Life,” in Human-Computer Interaction. Interaction Technologies, M. Kurosu, Ed. Cham: Springer International Publishing, 2018, pp. 103–116. doi: 10.1007/978-3-319-91250-9. [CrossRef]

- M. Rukhiran and P. Netinant, “Automated information retrieval and services of graduate school using chatbot system,” Int. J. Electr. Comput. Eng., vol. 12, no. 5, pp. 5330–5338, 2022, doi: 10.11591/ijece.v12i5.pp5330-5338. [CrossRef]

- T. Lalwani, S. Bhalotia, A. Pal, S. Bisen, and V. Rathod, “Implementation of a Chat Bot System using AI and NLP,” Int. J. Innov. Res. Comput. Sci. Technol., vol. 6, no. 3, pp. 26–30, 2018, doi: 10.21276/ijircst.2018.6.3.2. [CrossRef]

- L. Galko, J. Porubän, and J. Senko, “Improving the user experience of electronic university enrollment,” in 2018 16th International Conference on Emerging eLearning Technologies and Applications (ICETA), 2018, pp. 179–184. doi: 10.1109/ICETA.2018.8572054. [CrossRef]

- H. Nguyen, J. Lopez, B. Homer, A. Ali, and J. Ahn, “Reminders, reflections, and relationships: insights from the design of a chatbot for college advising,” Inf. Learn. Sci., vol. 124, no. 3–4, pp. 128–146, 2023, doi: 10.1108/ILS-10-2022-0116. [CrossRef]

- P. Giannos and O. Delardas, “Performance of ChatGPT on UK Standardized Admission Tests: Insights from the BMAT, TMUA, LNAT, and TSA Examinations,” JMIR Med. Educ., vol. 9, pp. 1–7, 2023, doi: 10.2196/47737. [CrossRef]

- “University Student Surveys Using Chatbots: Artificial Intelligence Conversational Agents,” in Learning and Collaboration Technologies: Games and Virtual Environments for Learning, 2021, pp. 155–169. doi: 10.1007/978-3-030-77943-6_10. [CrossRef]

- K. Peyton and S. Unnikrishnan, “A comparison of chatbot platforms with the state-of-the-art sentence BERT for answering online student FAQs,” Results Eng., vol. 17, no. October 2022, p. 100856, 2023, doi: 10.1016/j.rineng.2022.100856. [CrossRef]

- H. Snyder, “Literature review as a research methodology: An overview and guidelines,” J. Bus. Res., vol. 104, no. July, pp. 333–339, 2019, doi: 10.1016/j.jbusres.2019.07.039. [CrossRef]

- H. Kamioka, “Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement,” Japanese Pharmacol. Ther., vol. 47, no. 8, pp. 1177–1185, 2019, doi: 10.1186/2046-4053-4-1. [CrossRef]

- H. Jiang, Y. Cheng, J. Yang, and S. Gao, “AI-powered chatbot communication with customers: Dialogic interactions, satisfaction, engagement, and customer behavior,” Comput. Human Behav., vol. 134, no. May, p. 107329, 2022, doi: 10.1016/j.chb.2022.107329. [CrossRef]

- R. Irvine et al., “Rewarding Chatbots for Real-World Engagement with Millions of Users,” 2023, [Online]. Available: http://arxiv.org/abs/2303.06135.

- M. A. de Sá Siqueira, B. C. N. Müller, and T. Bosse, “When Do We Accept Mistakes from Chatbots? The Impact of Human-Like Communication on User Experience in Chatbots That Make Mistakes,” Int. J. Hum. Comput. Interact., vol. 0, no. 0, pp. 1–11, 2023, doi: 10.1080/10447318.2023.2175158. [CrossRef]

- G. Attigeri and A. Agrawal, “Advanced NLP Models for Technical University Information Chatbots: Development and Comparative Analysis,” IEEE Access, vol. 12, no. February, pp. 29633–29647, 2024, doi: 10.1109/ACCESS.2024.3368382. [CrossRef]

- N. M. Radziwill and M. C. Benton, “Evaluating Quality of Chatbots and Intelligent Conversational Agents,” 2017, doi: 10.48550/arXiv.1704.04579. [CrossRef]

- C. Kooli, “Chatbots in Education and Research: A Critical Examination of Ethical Implications and Solutions,” Sustain., vol. 15, no. 7, 2023.

- N. Teckchandani, A. Santokhee, and G. Bekaroo, “AIML and Sequence-to-Sequence Models to Build Artificial Intelligence Chatbots: Insights from a Comparative Analysis,” Lect. Notes Electr. Eng., vol. 561, no. May, pp. 323–333, 2019, doi: 10.1007/978-3-030-18240-3_30. [CrossRef]

- M. Mekni, Z. Baani, and D. Sulieman, “A Smart Virtual Assistant for Students,” in Proceedings of the 3rd International Conference on Applications of Intelligent Systems (APPIS 2020), 2020, pp. 1–6. doi: 10.1145/3378184.3378199. [CrossRef]

- S. S. Ranavare and R. S. Kamath, “Artificial Intelligence based Chatbot for Placement Activity at College Using DialogFlow,” Our Herit., vol. 68, no. 30, pp. 4806–4814, 2020, [Online]. Available: https://www.researchgate.net/publication/347948058.

- G. S. Ramesh, G. Nagaraju, V. Harish, and P. Kumaraswamy, “Chatbot for College Website,” in Proceedings of International Conference on Advances in Computer Engineering and Communication Systems, Learning and Analytics in Intelligent Systems, 2020, vol. 20, pp. 511–521. doi: 10.1007/978-981-15-9293-5_47. [CrossRef]

- M. Jaiwai, K. Shiangjen, S. Rawangyot, S. Dangmanee, T. Kunsuree, and A. Sa-Nguanthong, “Automatized Educational Chatbot using Deep Neural Network,” 2021 Jt. 6th Int. Conf. Digit. Arts, Media Technol. with 4th ECTI North. Sect. Conf. Electr. Electron. Comput. Telecommun. Eng. ECTI DAMT NCON 2021, pp. 85–89, 2021, doi: 10.1109/ECTIDAMTNCON51128.2021.9425716. [CrossRef]

- M. T. Nguyen, M. Tran-Tien, A. P. Viet, H. T. Vu, and V. H. Nguyen, “Building a Chatbot for Supporting the Admission of Universities,” 2021 13th Int. Conf. Knowl. Syst. Eng., pp. 1–6, 2021, doi: 10.1109/KSE53942.2021.9648677. [CrossRef]

- S. Meshram, N. Naik, V. R. Megha, T. More, and S. Kharche, “College Enquiry Chatbot using Rasa Framework,” 2021 Asian Conf. Innov. Technol., pp. 1–8, 2021, doi: 10.1109/ASIANCON51346.2021.9544650. [CrossRef]

- C. Chun Ho, H. L. Lee, W. K. Lo, and K. F. A. Lui, “Developing a Chatbot for College Student Programme Advisement,” Proc. - 2018 Int. Symp. Educ. Technol. ISET 2018, pp. 52–56, 2018, doi: 10.1109/ISET.2018.00021. [CrossRef]

- L. Fauzia, R. B. Hadiprakoso, and Girinoto, “Implementation of Chatbot on University Website Using RASA Framework,” 2021 4th Int. Semin. Res. Inf. Technol. Intell. Syst. ISRITI 2021, pp. 373–378, 2021, doi: 10.1109/ISRITI54043.2021.9702821. [CrossRef]

- N. N. Khin and K. M. Soe, “Question Answering based University Chatbot using Sequence to Sequence Model,” 2020 23rd Conf. Orient. COCOSDA Int. Comm. Co-ord. Stand. Speech Databases Assess. Tech., pp. 55–59, 2020, doi: 10.1109/O-COCOSDA50338.2020.9295021. [CrossRef]

- Y. Windiatmoko, A. F. Hidayatullah, and ..., “Developing FB chatbot based on deep learning using RASA framework for university enquiries,” in IOP Conf. Ser.: Mater. Sci. Eng., 2020, vol. 1077, no. 1, p. 012060. doi: 10.1088/1757-899X/1077/1/012060. [CrossRef]

- Y. W. Chandra and S. Suyanto, “Indonesian chatbot of university admission using a question answering system based on sequence-to-sequence model,” Procedia Comput. Sci., vol. 157, pp. 367–374, 2019, doi: 10.1016/j.procs.2019.08.179. [CrossRef]

- S. Sinha, S. Basak, Y. Dey, and A. Mondal, “An Educational Chatbot for Answering Queries,” in Emerging Technology in Modelling and Graphics, 2020, pp. 55–60. doi: 10.1007/978-981-13-7403-6. [CrossRef]

- N. N. Khin and K. M. Soe, “University Chatbot using Artificial Intelligence Markup Language,” 2020 IEEE Conf. Comput. Appl., pp. 1–5, 2020, doi: 10.1109/ICCA49400.2020.9022814. [CrossRef]

- T. Thanh, S. Nguyen, D. Huu, T. Ho, N. Tram, and A. Nguyen, “An Ontology-Based Question Answering System for University Admissions Advising,” Intell. Autom. Soft Comput., vol. 36, no. 1, pp. 601–616, 2023, doi: 10.32604/iasc.2023.032080. [CrossRef]

- V. Oguntosin and A. Olomo, “Development of an E-Commerce Chatbot for a University Shopping Mall,” Appl. Comput. Intell. Soft Comput., vol. 2021, no. 1, 2021, doi: 10.1155/2021/6630326. [CrossRef]

- A. Nurshatayeva, L. C. Page, C. C. White, and H. Gehlbach, “Are Artificially Intelligent Conversational Chatbots Uniformly Effective in Reducing Summer Melt? Evidence from a Randomized Controlled Trial,” Res. High. Educ., vol. 62, no. 3, pp. 392–402, 2021, doi: 10.1007/s11162-021-09633-z. [CrossRef]

- G. S. Ramesh, G. Nagaraju, V. Harish, and P. Kumaraswamy, “ChatBot for college website,” Int. J. Innov. Technol. Explor. Eng., vol. 8, no. 10, pp. 566–569, 2020, doi: 10.35940/ijitee.J8867.0881019. [CrossRef]

- A. Aldoseri, K. N. Al-Khalifa, and A. M. Hamouda, “Re-Thinking Data Strategy and Integration for Artificial Intelligence: Concepts, Opportunities, and Challenges,” Appl. Sci., vol. 13, no. 12, 2023, doi: 10.3390/app13127082. [CrossRef]

- S. Alqaidi, W. Alharbi, and O. Almatrafi, “A support system for formal college students: A case study of a community-based app augmented with a chatbot,” 2021 19th Int. Conf. Inf. Technol. Based High. Educ. Train. (ITHET), vol. 978, no. 1, 2021, doi: 10.1109/ITHET50392.2021.9759796. [CrossRef]

- T. Lewandowski, E. Kučević, S. Leible, M. Poser, and T. Böhmann, “Enhancing conversational agents for successful operation: A multi-perspective evaluation approach for continuous improvement,” Electron. Mark., vol. 33, no. 1, pp. 1–20, 2023, doi: 10.1007/s12525-023-00662-3. [CrossRef]

- M. Adam, M. Wessel, and A. Benlian, “AI-based chatbots in customer service and their effects on user compliance,” Electron. Mark., vol. 31, no. 2, pp. 427–445, 2021.

- J. Sedoc, D. Ippolito, A. Kirubarajan, J. Thirani, L. Ungar, and C. Callison-Burch, “ChatEval: A tool for chatbot evaluation,” NAACL HLT 2019 - 2019 Conf. North Am. Chapter Assoc. Comput. Linguist. Hum. Lang. Technol. - Proc. Demonstr. Sess., pp. 60–65, 2019.

- P. Gupta et al., “Answerability: A custom metric for evaluating chatbot performance,” GEM 2022 - 2nd Work. Nat. Lang. Gener. Eval. Metrics, Proc. Work., pp. 316–325, 2022, doi: 10.18653/v1/2022.gem-1.27. [CrossRef]

Figure 1.

PRISMA flowchart

Figure 2.

Proportion of conference papers and journal articles.

Figure 3.

Number of articles per publication year

Figure 4.

Number of articles per administrative task/functionality.

Figure 5.

Proportion of identified data sources.

Figure 6.

Proportion of identified dataset sizes.

Figure 7.

Frequency of identified topics in the literature

Figure 8.

Frequency of identified preprocessing tasks in the literature.

Figure 9.

Simple chatbot architecture.

Figure 10.

Frequency of recognized user interfaces in the literature.

Figure 11.

Frequency of recognized dialogue managers in the literature.

Figure 12.

Frequency of recognized knowledge bases in the literature.

Figure 13.

Chatbot Models Classification.

Figure 14.

The proportion of chatbot models in literature.

Figure 15.

Proportion of identified evaluation approaches.

Figure 16.

Challenges for chatbot development

Figure 17.

Chatbot’s Future Functionalities

Table 1.

Quality evaluation results.

| Reference | RQ1 | RQ2 | RQ3 | RQ4 | RQ5 | Total score |
|---|---|---|---|---|---|---|
| [5] | 3 | 3 | 3 | 3 | 3 | 15 |
| [13] | 3 | 2 | 2 | 2 | 1 | 10 |
| [14] | 3 | 0 | 3 | 3 | 0 | 9 |
| [15] | 3 | 3 | 2 | 1 | 3 | 12 |
| [16] | 3 | 0 | 3 | 3 | 0 | 9 |

[17]

[18]

[19] |

3

3

3 |

1