Submitted: 07 October 2024

Posted: 07 October 2024


Abstract

Keywords:

1. Introduction and Background

- They provide an end-to-end ML pipeline in a generic and structured way.

- They contain technical details and application scenarios and thereby allow use in arbitrary ML tasks.

- They report performance measurements for the resulting models.

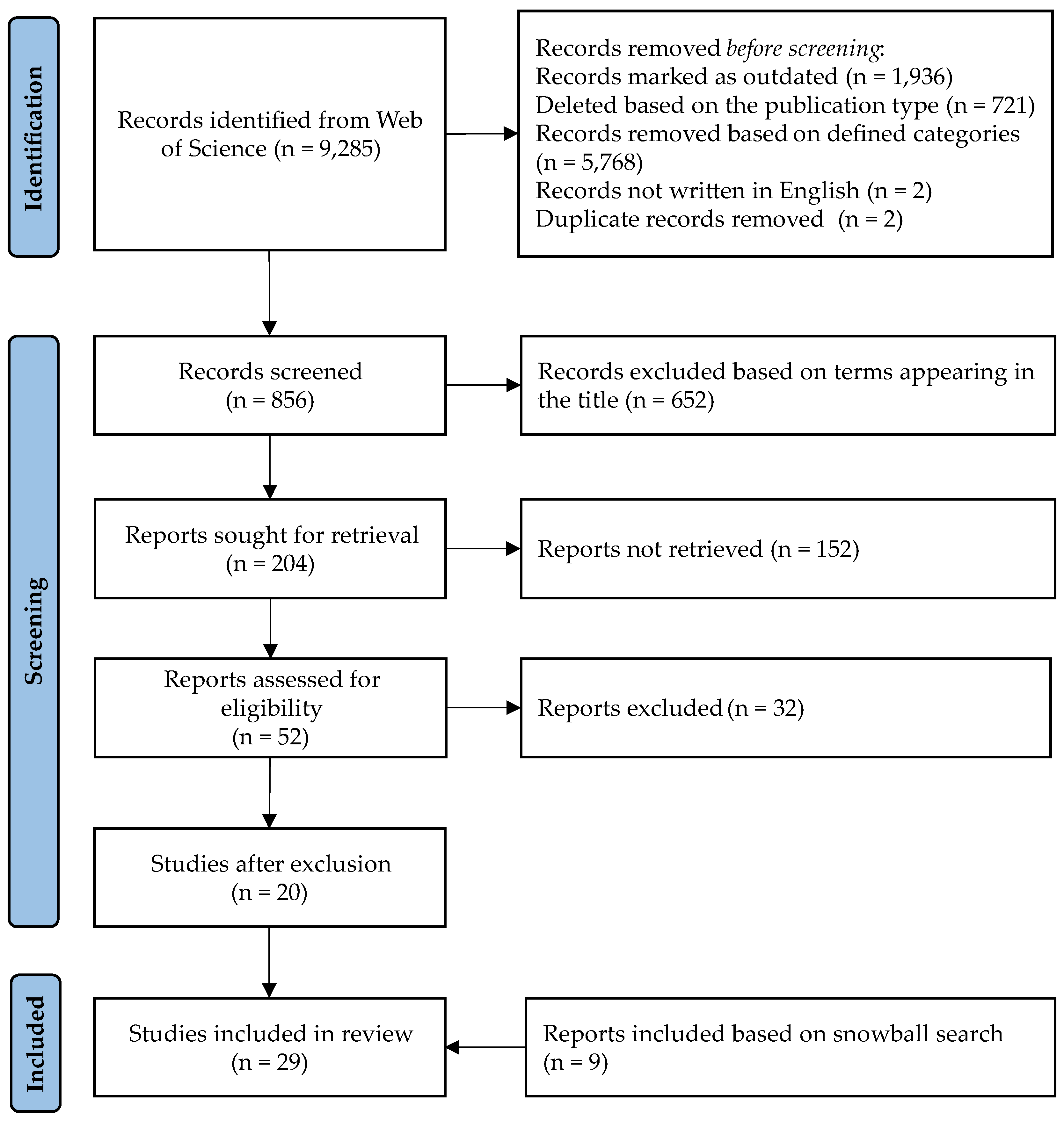

2. Systematic Literature Review Methodology

3. Results of the Review

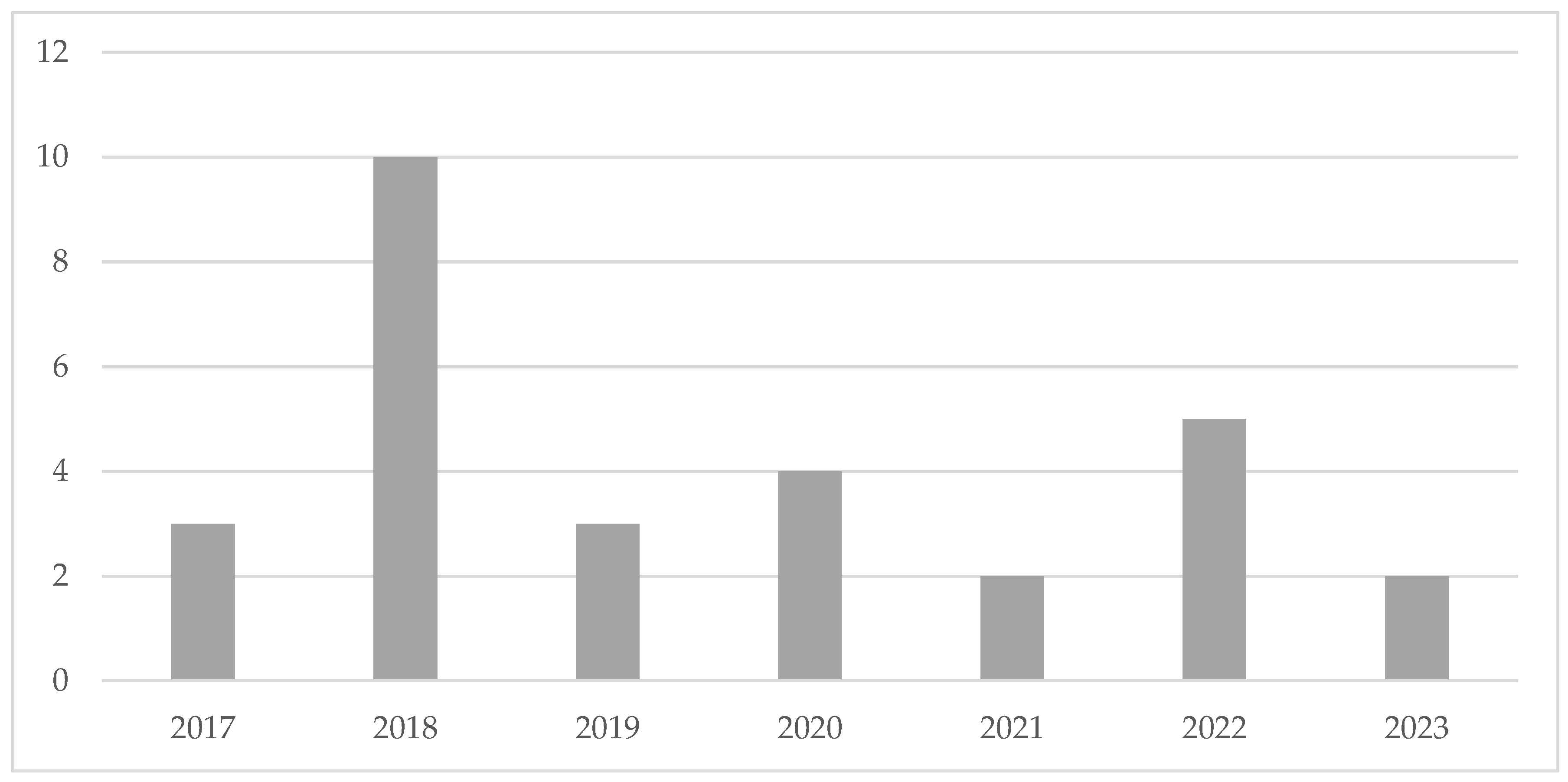

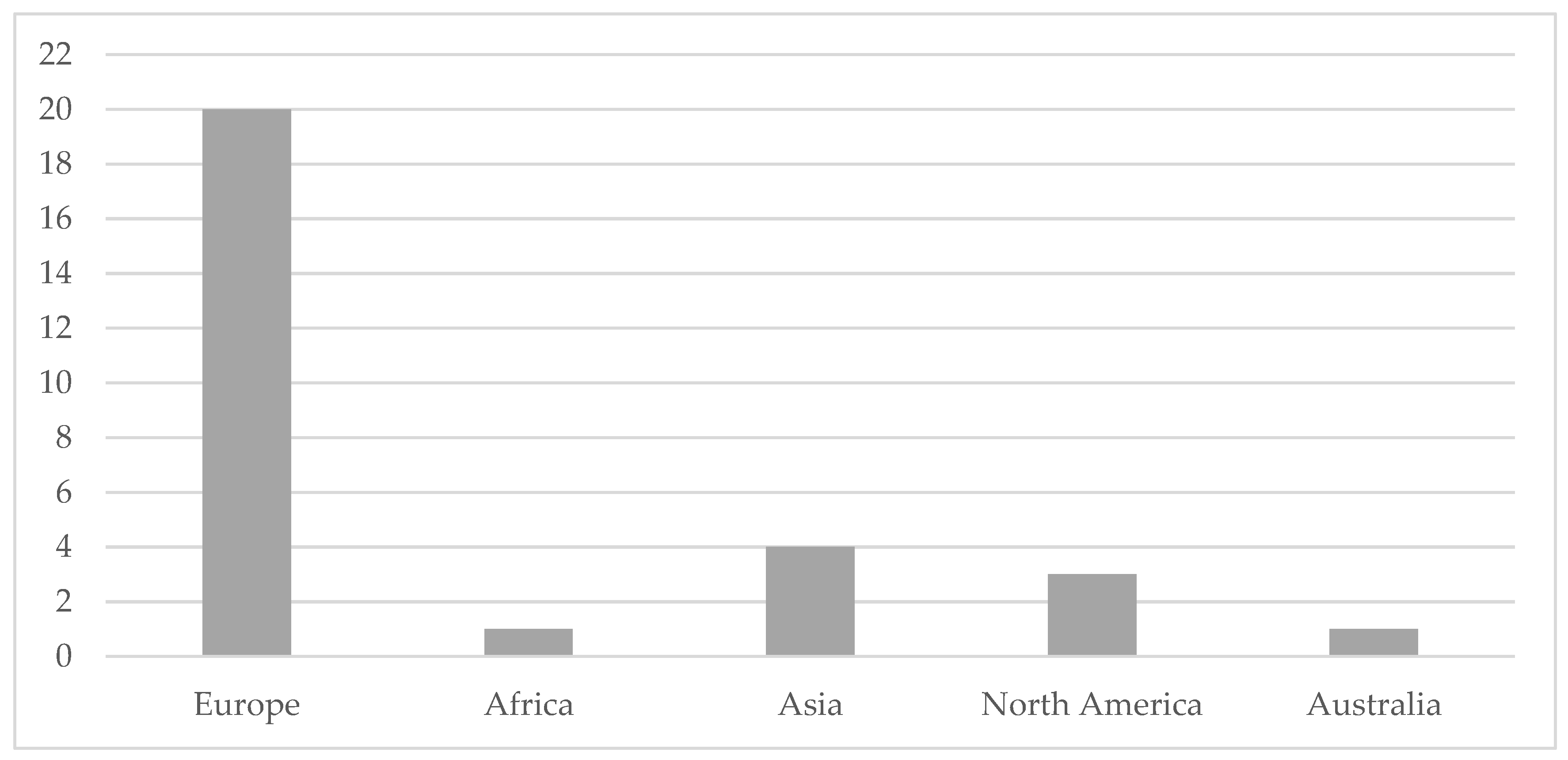

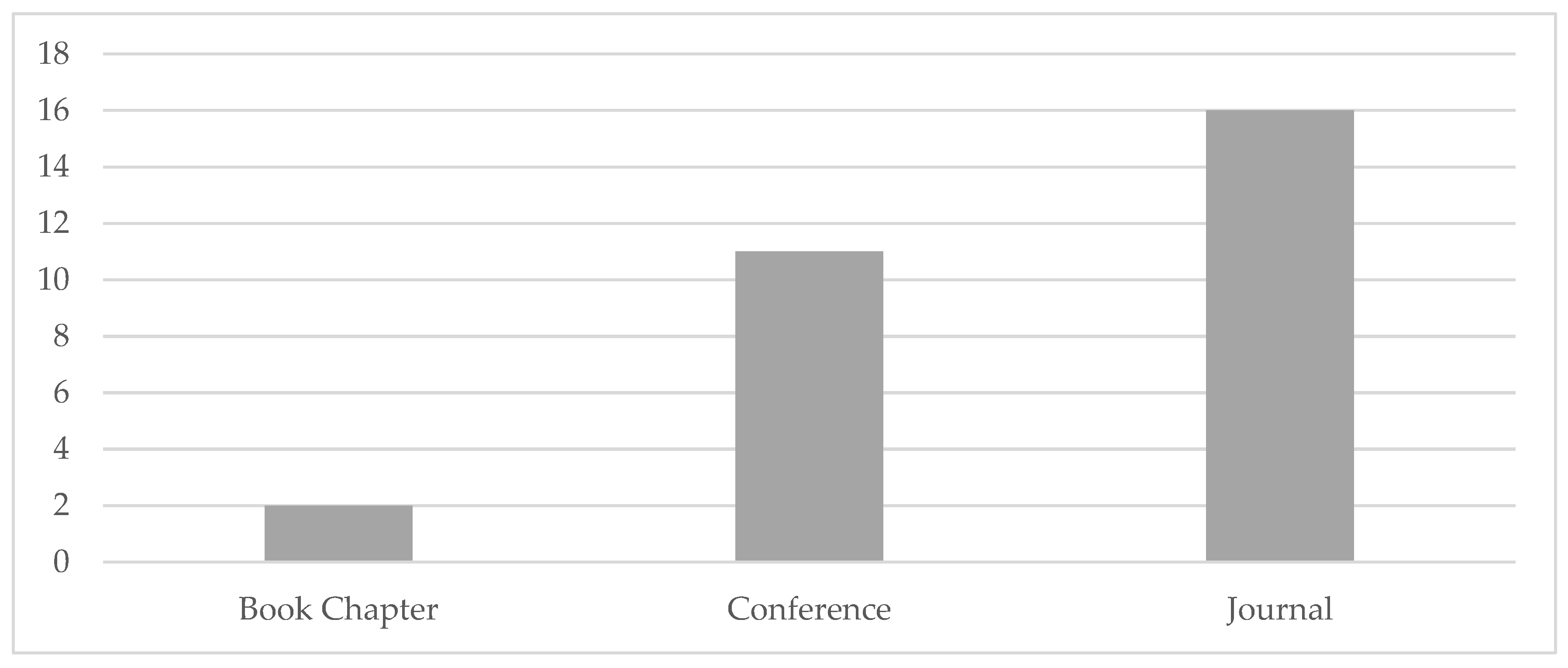

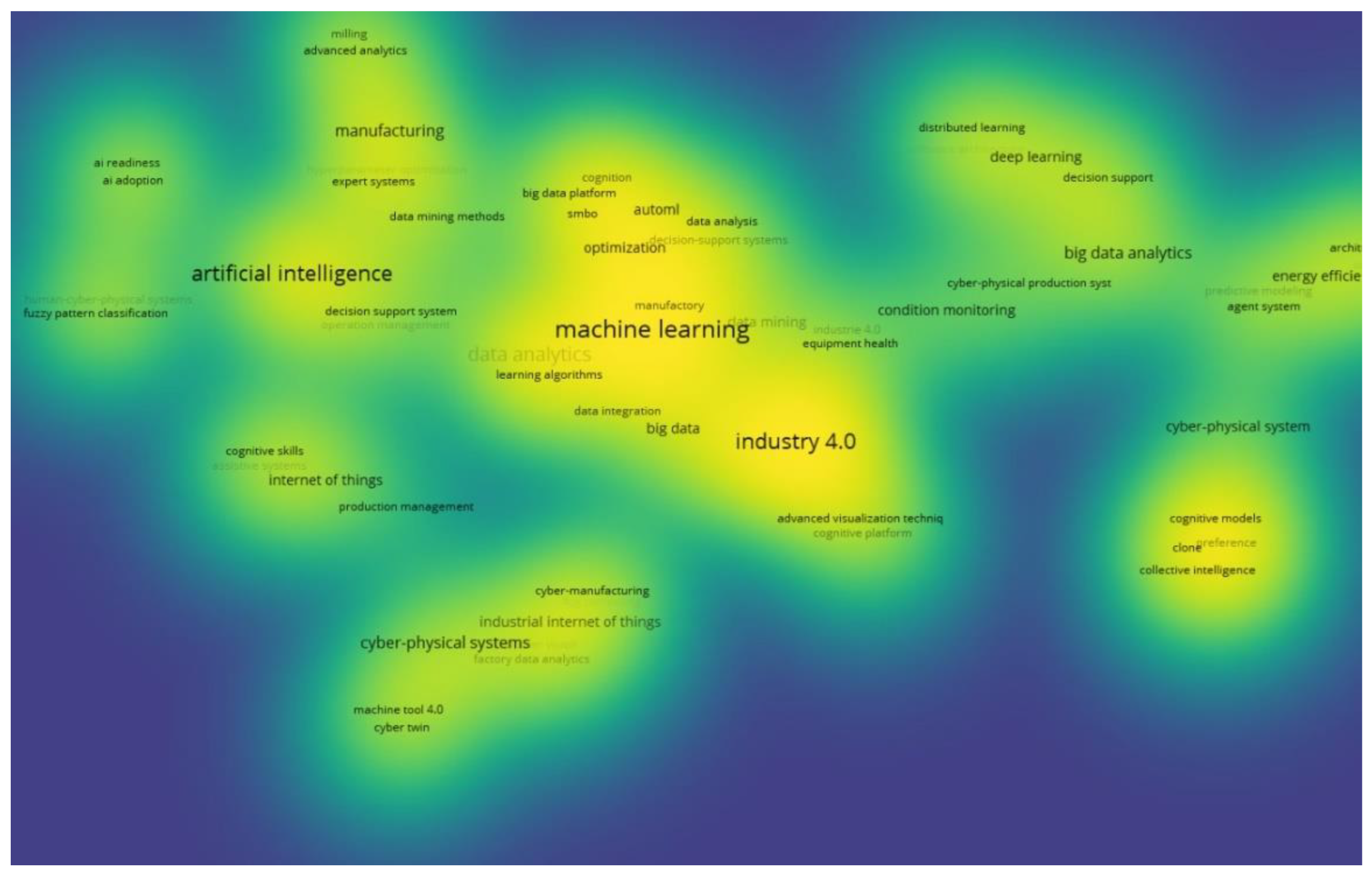

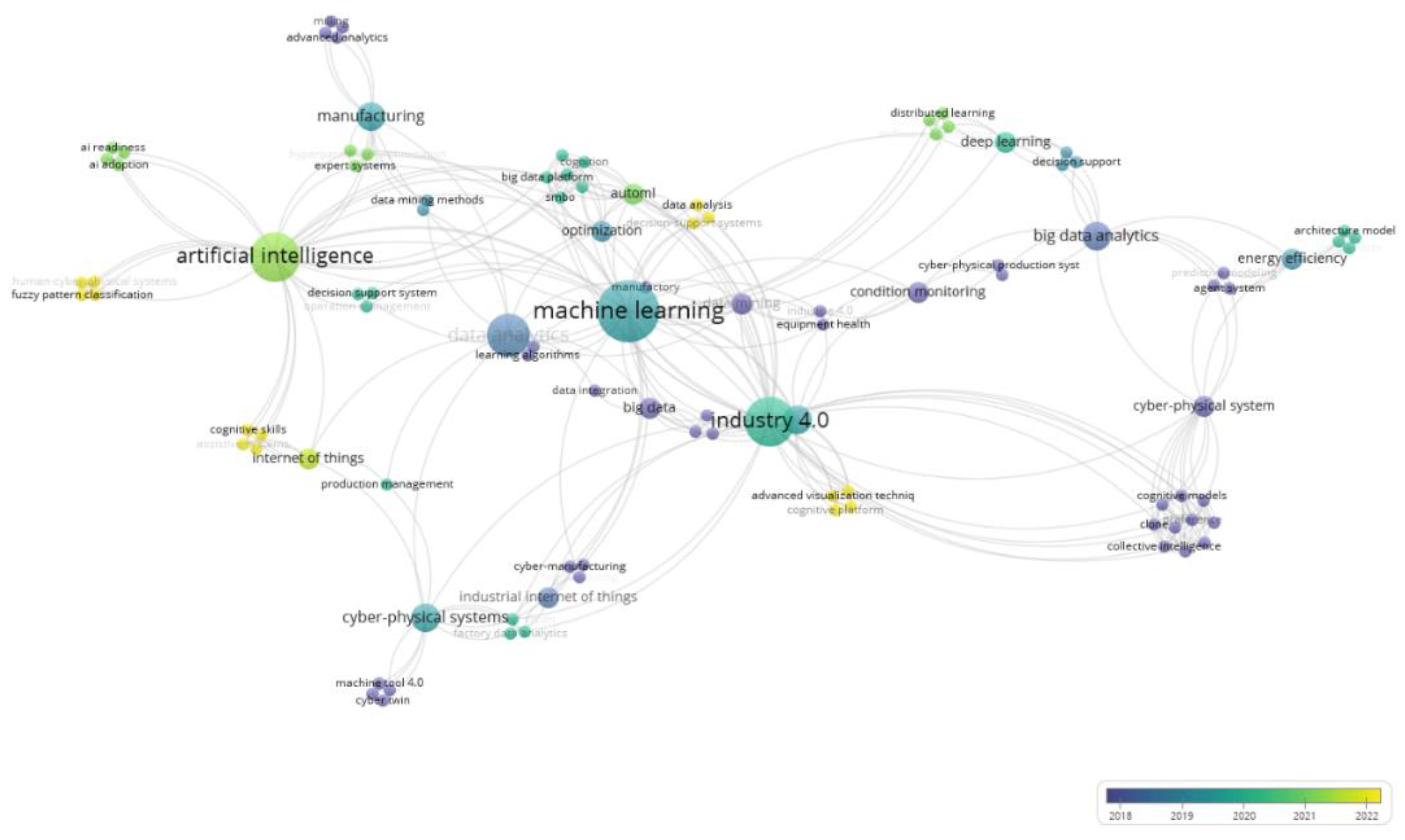

3.1. Descriptive Analysis

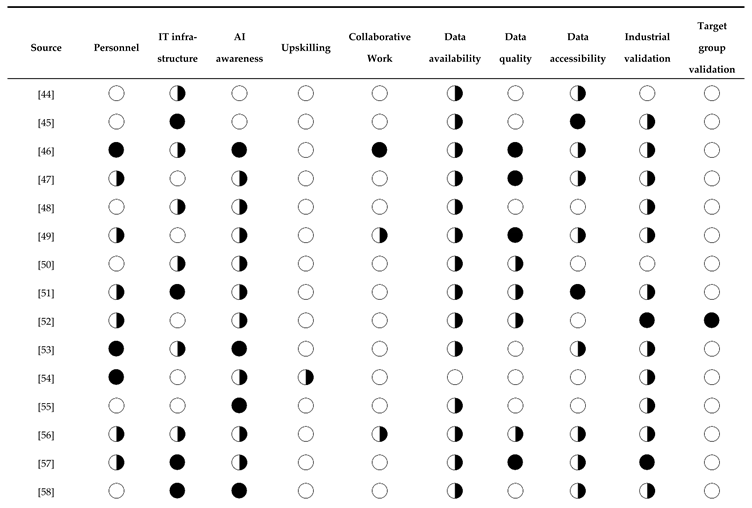

3.2. Content Analysis

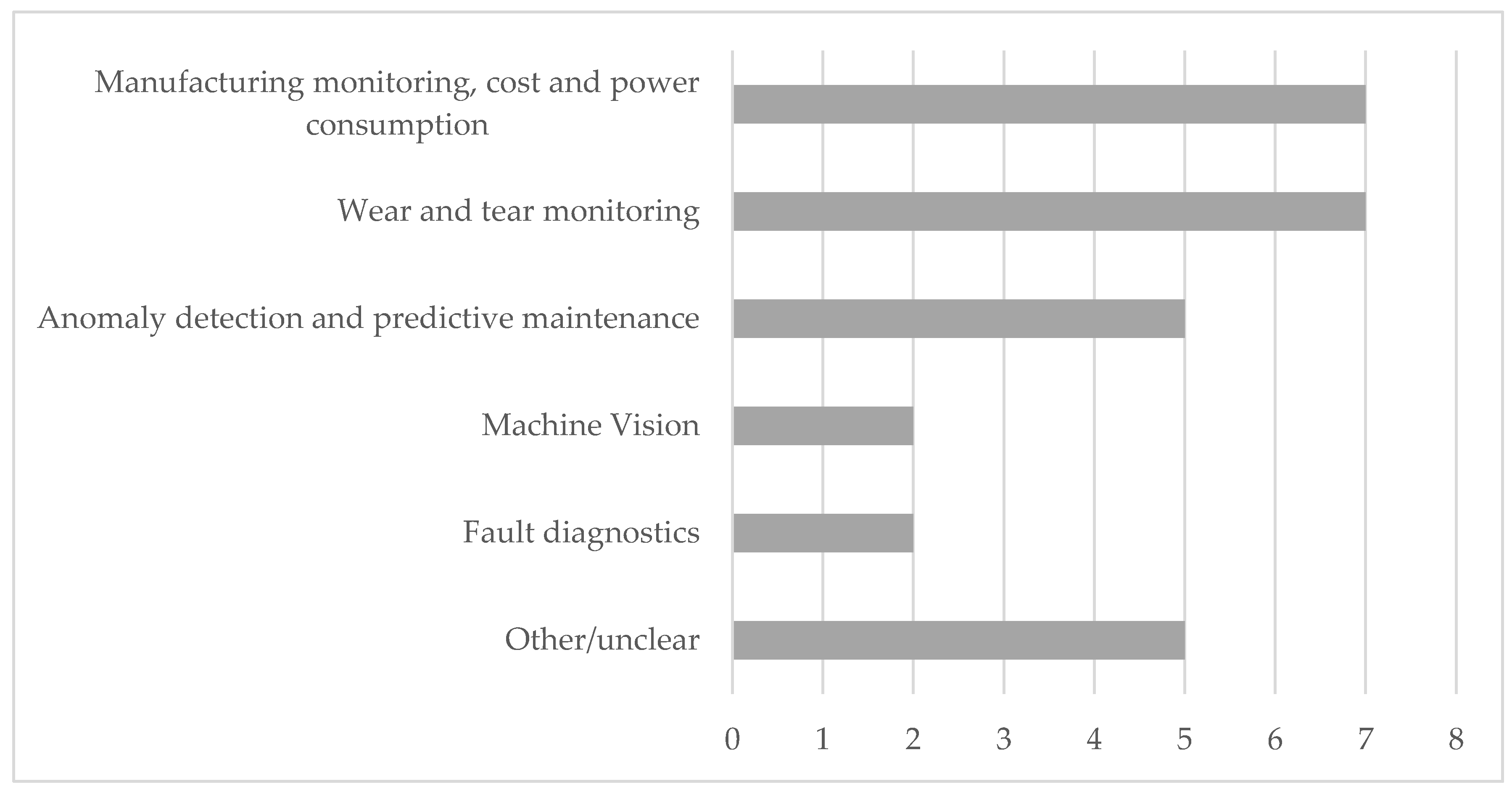

Results of RQ1

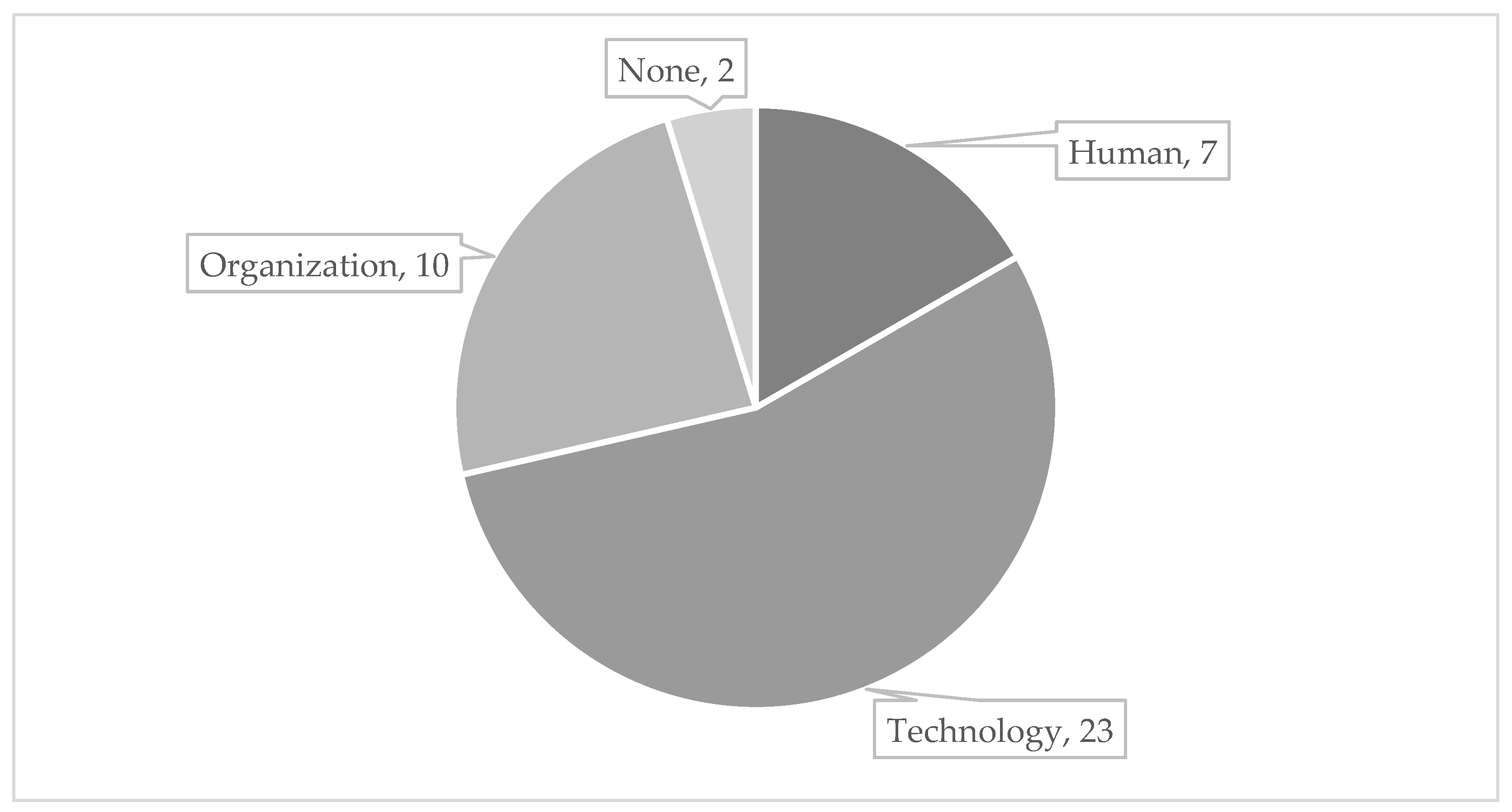

Results of RQ2

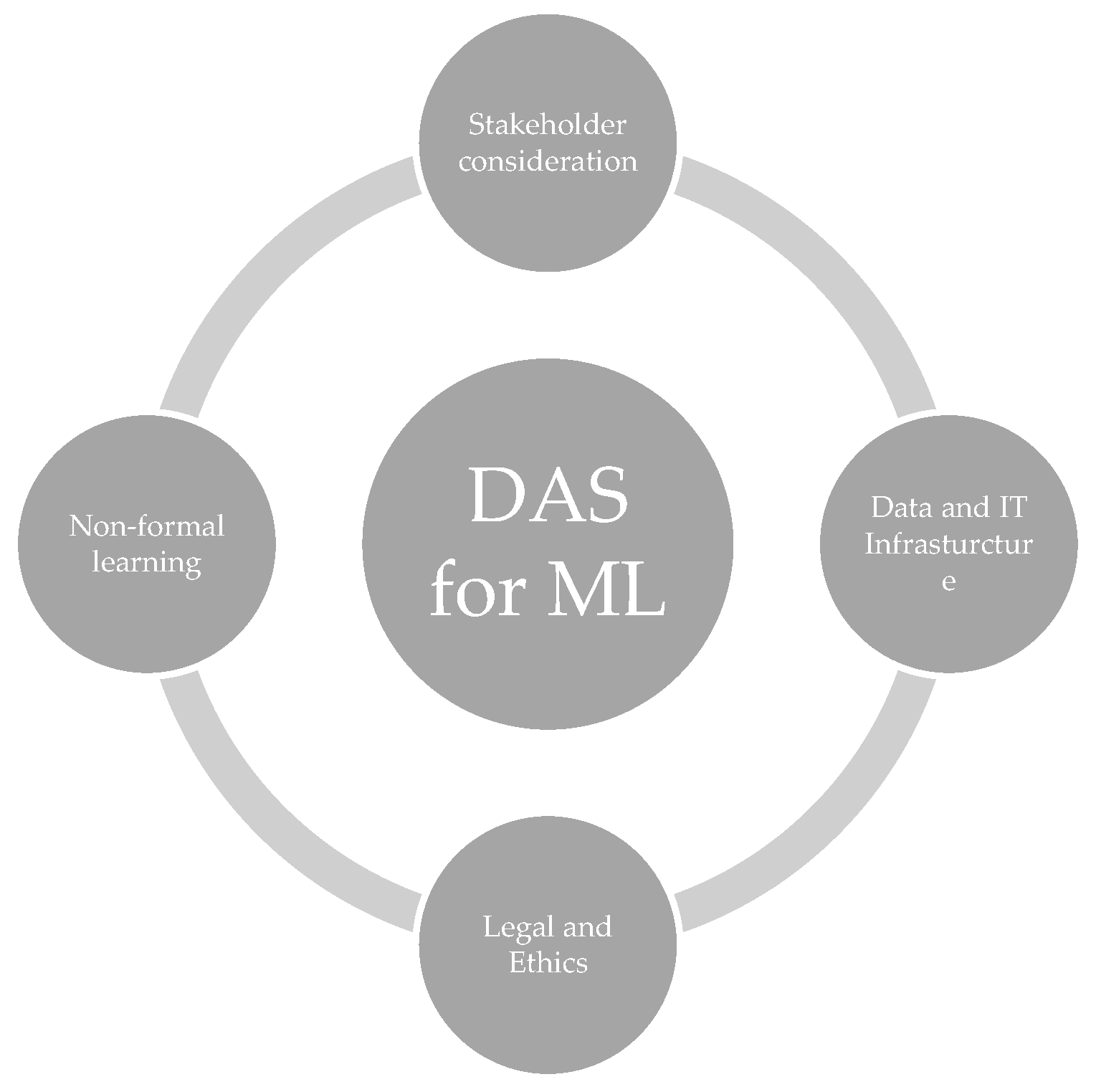

Results of RQ3

4. Discussion of the Results and Research Outlook

5. Conclusions and Outlook

Author Contributions

Funding

Conflicts of Interest


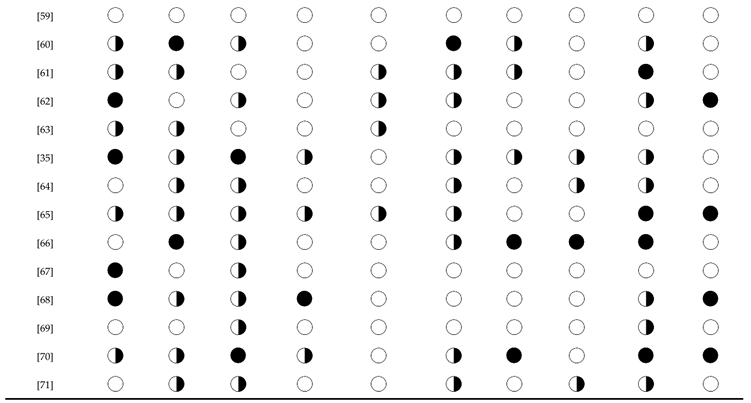


| Inclusion criteria | Exclusion criteria |
|---|---|
|  |  |

| Publication medium | Number of publications |
|---|---|
| Journal of Manufacturing Systems | 4 |
| The International Journal of Advanced Manufacturing Technology | 3 |
| Procedia CIRP | 3 |
| Computers in Industry | 2 |
| Journal of Intelligent Manufacturing | 2 |
| IFIP Advances in Information and Communication Technology | 2 |
| Procedia Manufacturing | 2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).