1. Introduction

With the rapid adoption of Internet of Things (IoT) devices and the continuous advancement of artificial intelligence (AI), edge computing is becoming a trend that cannot be ignored. The combination of edge computing and AI not only changes the way data is processed but also creates new opportunities for many application scenarios. This paper discusses the integration of edge computing and AI: its current applications and challenges, several practical cases, and future directions. Edge computing is a distributed computing paradigm that moves data processing and data storage from centralized data centers to the edge of the network, close to where the data is generated. These data sources include IoT devices, smart home devices, industrial sensors, smartphones, and so on. In the traditional cloud computing model, all data is first transmitted to the cloud for processing, which not only introduces latency but also brings privacy and security risks. Edge computing, by contrast, can significantly reduce latency and save bandwidth while improving data privacy and security.

As AI technology is applied more and more widely, data processing and decision-making have become key targets for deploying AI algorithms at the edge: edge devices can analyze data and make decisions independently, reducing their dependence on the cloud. This combination also makes many traditional applications that were previously hard to implement relatively simple. The most prominent everyday example is real-time perception and analysis in autonomous driving. With AI models running on the vehicle, driving decisions can be made at the edge from camera, radar, and lidar data, which improves safety and speeds up data analysis. Second, many smart home devices, including smart speakers, cameras, and home robots, combine AI with edge computing to provide more personalized services and better security. The main advantage of edge computing on these devices is that speech recognition and image analysis can be processed locally, reducing dependence on the cloud, improving user data privacy, and processing data more quickly. In addition, in industrial IoT environments, combining edge computing and AI can play a major role on the production line, including monitoring equipment, predicting equipment failures, and enabling predictive maintenance. These applications show that the combination of edge computing and AI not only improves industrial productivity but also reduces maintenance costs. At the same time, rapid data processing makes it possible to identify risks quickly and make the relevant decisions.
Although the combination of edge computing and AI already shows a wide range of applications and, in our view, clear comparative advantages, many challenges remain in actual deployment.

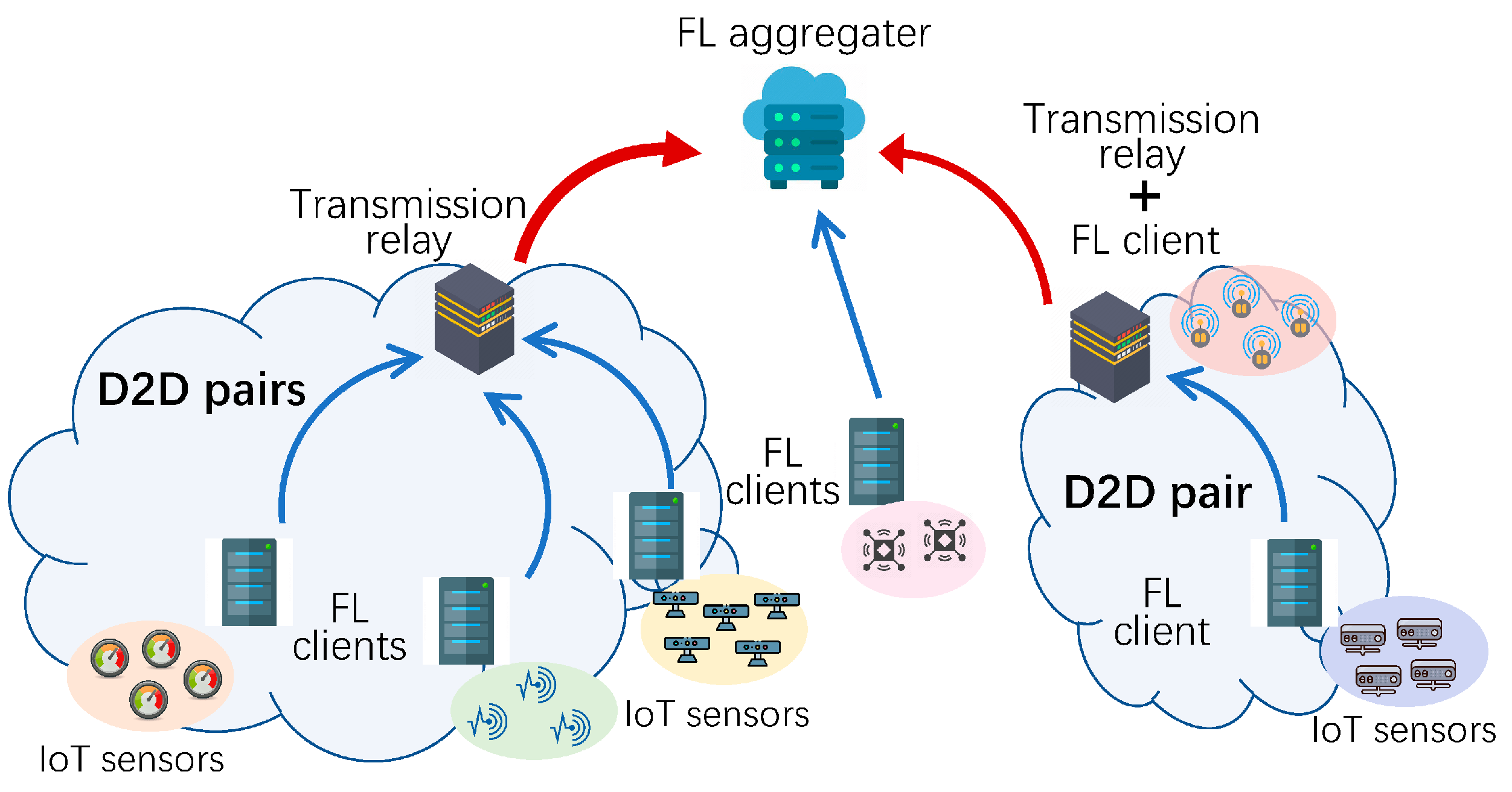

At present, there are four major challenges. The first is limited computing resources: the computing power of edge devices is generally lower than that of cloud servers, and they often lack powerful hardware accelerators, which makes it difficult to run large, complex AI models. For AI algorithms to be deployed on edge devices, lighter-weight and more efficient models must therefore be developed. The second is energy efficiency, since many edge devices operate under tight power budgets. The third is security: ensuring the security of edge AI systems and preventing data leakage is one of the most important research issues in edge computing. The last challenge is model update and maintenance. Because edge devices are widely distributed, maintaining them effectively is difficult, so day-to-day maintenance needs to combine distributed update mechanisms with technologies such as federated learning.
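Federated learning, mentioned above as one option for updating models across widely distributed devices, is commonly built around federated averaging (FedAvg): each device trains locally and a server combines the resulting weights, weighting each device by how many samples it trained on. The sketch below shows only that aggregation step and assumes each client's model is a flat list of floats; the function name and the example values are hypothetical and not part of the framework described in this paper.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Combine client model weights with FedAvg-style weighting.

    Each client's contribution is proportional to the number of local
    training samples it used (client_sizes).
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client model")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all client models must have the same shape")

    averaged = [0.0] * dim
    for weights, n in zip(client_weights, client_sizes):
        share = n / total  # fraction of all training data held by this client
        for i, w in enumerate(weights):
            averaged[i] += share * w
    return averaged


if __name__ == "__main__":
    # Two hypothetical edge devices; the second trained on three times as much data.
    print(federated_average([[0.0, 1.0], [4.0, 5.0]], [100, 300]))  # [3.0, 4.0]
```

Only weights leave the devices in this scheme, which is why it pairs naturally with the privacy goals discussed above; a real deployment would also need secure transport and handling of stragglers.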

Figure 1 below shows an architecture diagram of the current challenges facing edge computing devices.

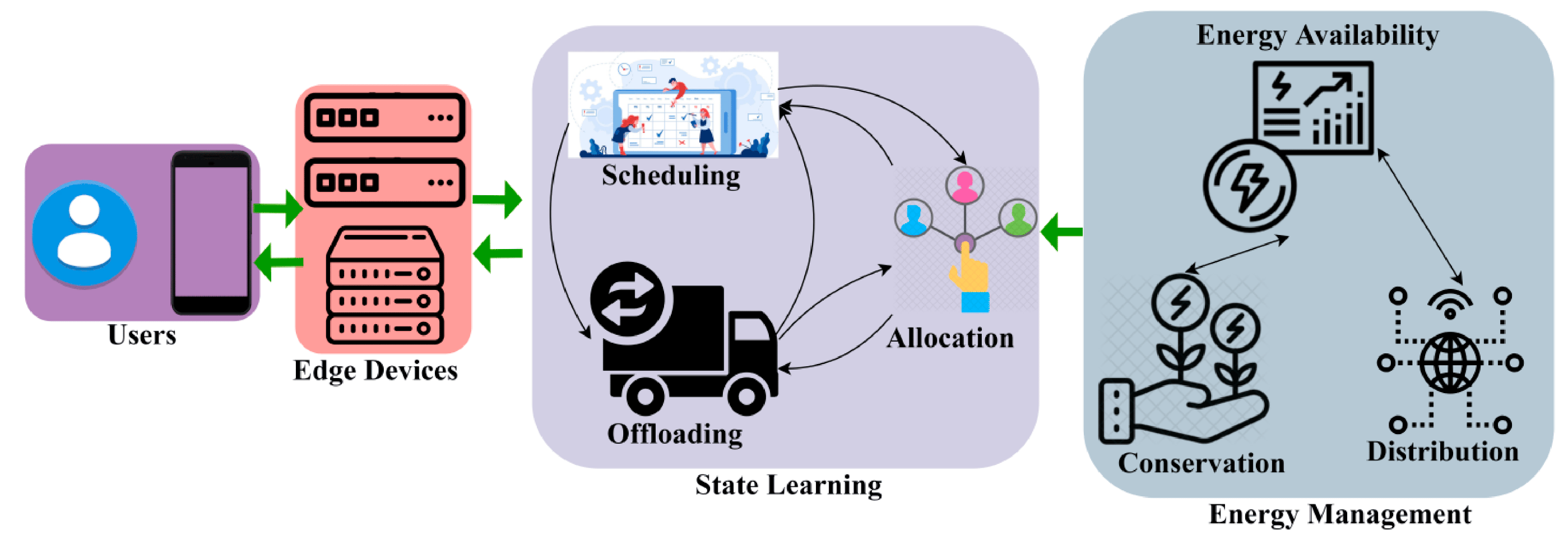

However, the current direction of combining AI and edge computing points to several important developments. The first is hardware accelerators for edge devices: hardware designed for AI workloads, such as TPUs and NPUs, is emerging to enhance the computing power of edge devices. Another is AIoT, the deep integration of AI and the Internet of Things, which will drive a new generation of intelligent devices, form highly interconnected and autonomous intelligent network systems, and accelerate the development of intelligent applications. The specific implementation architecture is shown in Figure 2 below.

As the above shows, the combination of edge computing and AI is changing traditional data processing and decision-making. Deploying AI algorithms on edge devices makes it possible to achieve low latency, high performance, and strong privacy protection. However, major challenges remain in computing resources, energy efficiency, and security. The combination of edge devices and AI is expected to extend to more fields and further advance the intelligence of society. It will not only improve device efficiency and reduce latency but also address data privacy and security, the issues people are most concerned about. As applications spread across fields such as autonomous driving, smart homes, and industrial automation, the need for optimized AI models and strong security measures will become increasingly important. This paper therefore explores in depth the specific impact of AI-driven edge computing on the development of the Internet of Things, analyzes its existing applications and challenges, and discusses its future prospects.

2. Related Work

2.1. Edge Computing on the Internet of Things

With the continued global growth of the Internet of Things, countless devices and sensors are becoming interconnected, so intelligent decision-making and optimized management have become core concerns of the IoT. As the number of IoT devices grows, the demand for data processing and transmission keeps increasing. This growth brings new challenges and has also driven the rise of edge computing, which plays a crucial role in IoT architectures: it processes data on or near the IoT devices themselves, shortening response times. This reduces the burden on central servers and brings data storage and services to the edge while keeping data processing timely and secure. In other words, edge computing reduces latency and saves bandwidth to provide faster, more efficient data processing. In the IoT, edge computing addresses the problem that central servers cannot process massive amounts of data in a timely manner. For example, self-driving cars must process data from onboard sensors in real time, and delays or interruptions could be disastrous. Edge computing ensures that such data is processed quickly at the point of generation to support real-time decisions.

The implementation of edge computing in IoT systems brings the following significant advantages:

1. Low latency: processing data at edge nodes greatly reduces response times, which is critical for applications that require real-time feedback.
2. Bandwidth optimization: pre-processing data at the edge and sending only the necessary information to the cloud or a central server can significantly reduce data traffic.
3. Fault tolerance for interruptions: processing data locally gives the system a degree of autonomy, even when cloud services are unavailable.
4. Privacy and security: edge computing enables localized data processing, which reduces the transmission of sensitive data and thus improves data security.
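The bandwidth-optimization point can be made concrete with a small sketch: instead of forwarding every raw reading, an edge node summarizes each fixed-size window into a count, minimum, mean, and maximum and sends only that summary upstream. The window size and the choice of summary fields are illustrative assumptions, not parameters taken from this paper.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass
class WindowSummary:
    count: int
    minimum: float
    mean: float
    maximum: float


def summarize_windows(readings: Iterable[float], window: int) -> Iterator[WindowSummary]:
    """Yield one summary per `window` readings (the last window may be shorter)."""
    if window <= 0:
        raise ValueError("window must be positive")
    buffer: List[float] = []
    for value in readings:
        buffer.append(value)
        if len(buffer) == window:
            yield _summarize(buffer)
            buffer = []
    if buffer:  # flush a partial final window
        yield _summarize(buffer)


def _summarize(values: List[float]) -> WindowSummary:
    return WindowSummary(len(values), min(values), sum(values) / len(values), max(values))


if __name__ == "__main__":
    raw = [20.0, 21.0, 22.0, 23.0, 30.0, 20.0]
    # Six raw readings become two summary records sent to the cloud.
    for summary in summarize_windows(raw, window=4):
        print(summary)
```

Larger windows save more bandwidth but delay what the cloud sees, so the window size is a latency/traffic trade-off that would be tuned per application.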

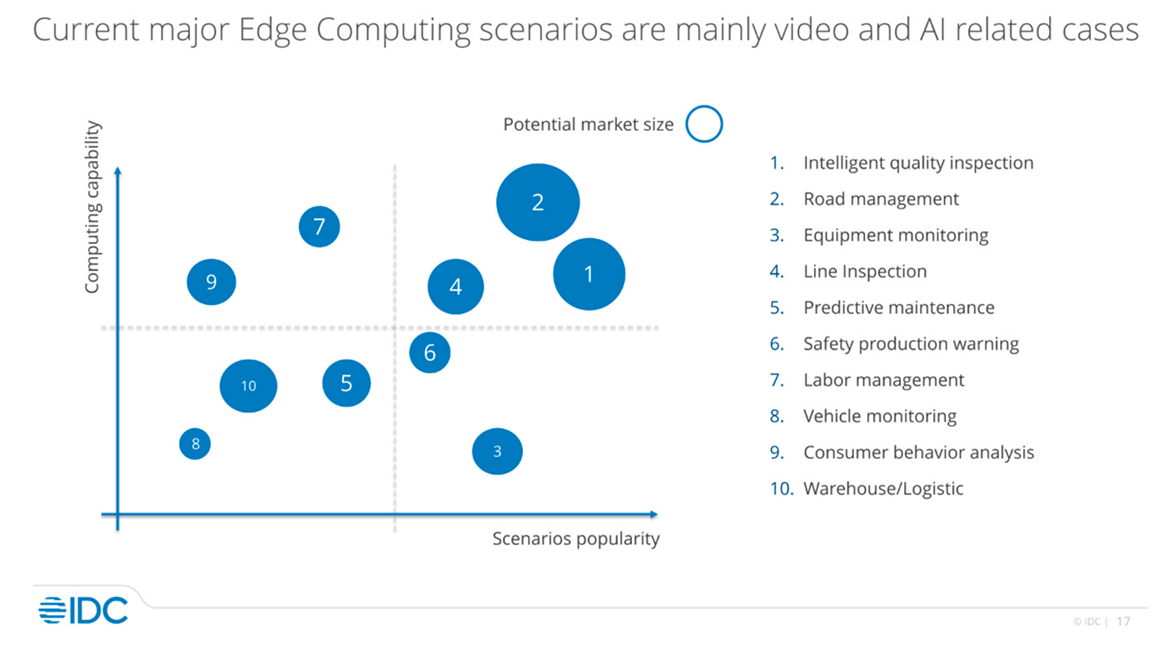

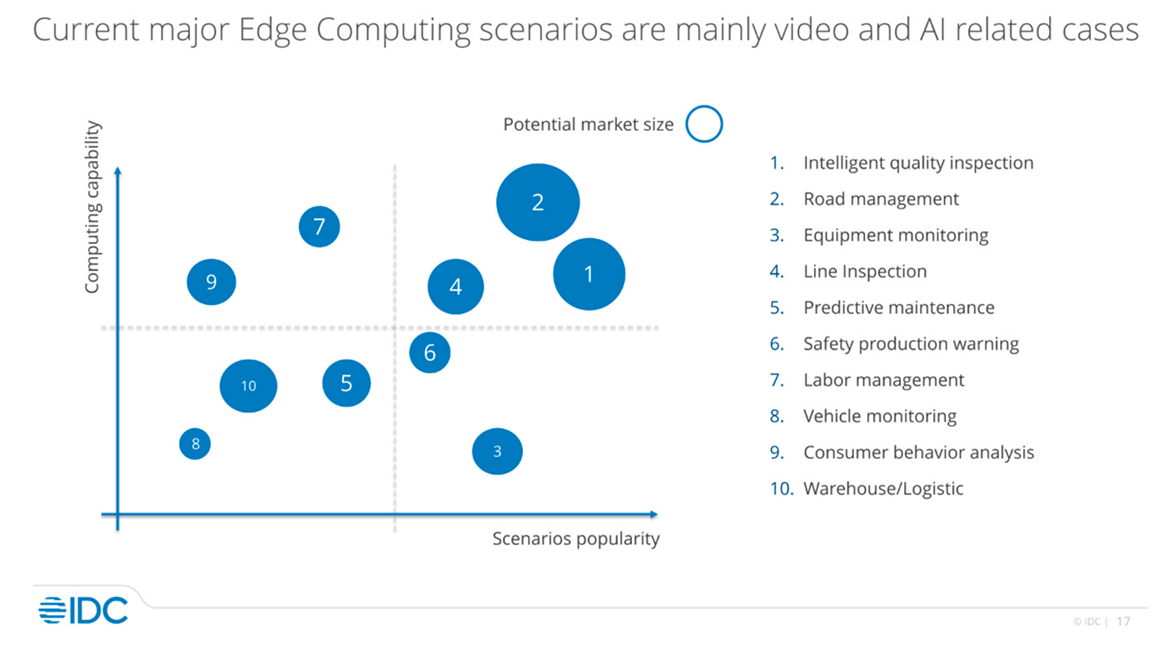

2.2. AI-Assisted Edge Computing – Tesla

At Tesla's Shanghai Gigafactory, AI-enabled edge computing plays a major role in the production process. Indeed, integrating the two has become a general trend. Last year, International Data Corporation (IDC) released the "China Semi-annual Edge Computing Server Market (First Half of 2021) Tracking Report", which summarized the top ten scenarios for edge computing. These include intelligent quality inspection, road management, equipment monitoring, line inspection, preventive maintenance, early warning for safe production, workforce management, consumer behavior analysis, and warehouse/logistics management, most of which are closely related to video and AI.

In addition, when a device is operating as expected, its raw monitoring data simply indicates normal behavior and is of little use. With edge computing, there is no need to send large amounts of such data to the cloud. Edge computing is not strictly required to implement condition-based monitoring, but it can provide substantial benefits. Stratus Technologies is one example of how this works in practice today.
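The argument that readings showing normal behavior need not leave the device can be sketched as a simple exception-reporting filter for condition-based monitoring. The normal operating band below is a hypothetical example; in practice the limits would come from the equipment's specification or a learned model.

```python
from typing import Iterable, List, Tuple

# Hypothetical normal operating band for a monitored sensor value.
NORMAL_LOW = 0.5
NORMAL_HIGH = 7.1


def readings_to_forward(readings: Iterable[Tuple[int, float]],
                        low: float = NORMAL_LOW,
                        high: float = NORMAL_HIGH) -> List[Tuple[int, float]]:
    """Keep only (timestamp, value) pairs that fall outside the normal band.

    In-band readings indicate the machine is behaving as expected, so the
    edge node drops them instead of sending them to the cloud.
    """
    return [(t, v) for t, v in readings if v < low or v > high]


if __name__ == "__main__":
    stream = [(0, 2.0), (1, 2.1), (2, 9.3), (3, 2.2), (4, 0.1)]
    print(readings_to_forward(stream))  # [(2, 9.3), (4, 0.1)]
```

A production filter would usually also send a periodic heartbeat, so the cloud can distinguish "everything is normal" from "the device went silent".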

Network topology refers to the arrangement of devices interconnected by transmission media, that is, a specific physical (i.e., real) or logical (i.e., virtual) arrangement of network members. If two networks have the same connection structure, they have the same network topology, even though their internal physical connections and the distances between nodes may differ. Compared with traditional computing, edge computing also offers advantages in the security and management of distributed computing resources. When adopting edge computing, organizations should carefully consider their needs and classify and optimize their data, so that they can decide how much weight edge computing should carry in their future computing strategy. The key to edge computing is getting closer to the data source so that data can be processed and analyzed quickly. By placing resources at the edge of the network, edge computing shortens transmission distances, which reduces latency and speeds up data processing, allowing time-sensitive data to be handled more responsively.

Second, edge computing improves reliability and reduces bandwidth usage by processing data locally. For example, in some remote or rural areas, bandwidth is limited and network conditions are poor, so transmitting data from one point to another over the network takes a long time, which degrades performance and user satisfaction. Edge computing can reduce this delay in a variety of ways, providing low-latency, efficient processing and improving user satisfaction. Finally, edge computing can also improve network security by reducing the amount of data sent over the network. When edge computing handles sensitive data locally, the risk of leakage and other security threats is reduced, so placing resources closer to the data source can open up new security possibilities while improving the user experience.

2.3. Edge Computing Architecture Model for Edge Devices

Edge computing architecture refers to the structure of the frameworks and components deployed at the edge of the network. Its core idea is to place computing resources closer to the data source, enabling more efficient data processing and decision-making. The architecture typically includes edge cloud servers and edge devices, the latter being small, low-power devices. Taking KubeEdge as an example, its core components are distributed as follows. First, the Kubernetes control node in the cloud keeps its original data model and its original control and data flows unchanged; that is, a node running KubeEdge appears to Kubernetes as an ordinary node, and Kubernetes can manage it just like any other node.
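Because a KubeEdge node appears to Kubernetes as an ordinary node, a standard Deployment can be pinned to edge nodes with a `nodeSelector`. The sketch below builds such a manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` also accepts. It assumes the edge nodes carry the `node-role.kubernetes.io/edge` label that KubeEdge's `keadm join` normally applies (check this for your KubeEdge version with `kubectl get nodes --show-labels`); the application name and image are hypothetical.

```python
import json
from typing import Any, Dict


def edge_deployment(name: str, image: str, replicas: int = 1) -> Dict[str, Any]:
    """Build a Deployment manifest that schedules pods only onto edge nodes."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Assumed label on KubeEdge edge nodes; verify before relying on it.
                    "nodeSelector": {"node-role.kubernetes.io/edge": ""},
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical ETL service image; the output could be piped to `kubectl apply -f -`.
    print(json.dumps(edge_deployment("etl-service", "example.com/etl:0.1"), indent=2))
```

Nothing in the manifest is KubeEdge-specific apart from the node label, which illustrates the point above: the cloud side keeps using the ordinary Kubernetes API.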

KubeEdge can run on edge nodes with limited resources and unreliable network quality because, building on the Kubernetes control node, it extends Kubernetes' orchestration of containerized applications down to the edge through two components: CloudCore in the cloud and EdgeCore at the edge.

CloudCore in the cloud watches the instructions and events issued by the Kubernetes control node and forwards them to EdgeCore at the edge; it also submits the status and event information reported by EdgeCore back to the Kubernetes control node. EdgeCore at the edge receives commands and events from CloudCore, executes the commands, maintains the workloads running at the edge, and reports edge status and event information back to CloudCore.

In addition, EdgeCore is a tailored version of the Kubelet component: it removes Kubelet features that are not needed at the edge and, given the limited resources and poor network quality typical of edge environments, adds support for offline operation. As a result, EdgeCore is well adapted to the edge environment. In general, edge computing and network architectures are complex and multifaceted. Data processing and architecture design require careful planning and management of data from multiple parties to ensure that processing is effective and reliable. At the same time, edge platforms and their components continue to evolve. These innovations not only improve data processing but also enable faster analysis and real-time decision-making.
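The division of labor between CloudCore and EdgeCore, including EdgeCore's ability to keep working while the cloud link is down, can be illustrated with a deliberately simplified model. The class and its methods below are hypothetical and do not reflect KubeEdge's actual code or wire protocol; they only show the idea of caching desired state locally and queuing status reports until connectivity returns.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class ToyEdgeCore:
    """Hypothetical, simplified stand-in for an edge agent with offline autonomy."""

    def __init__(self) -> None:
        self.desired: Dict[str, str] = {}   # locally cached desired state: workload -> image
        self.running: Dict[str, str] = {}   # what the edge node is actually running
        self.outbox: Deque[Tuple[str, str]] = deque()  # status reports awaiting upload
        self.online = True

    def receive_command(self, workload: str, image: str) -> None:
        """Apply a command relayed from the cloud and cache it locally."""
        self.desired[workload] = image
        self.reconcile()

    def reconcile(self) -> None:
        """Make running workloads match the cached desired state (works offline)."""
        for workload, image in self.desired.items():
            if self.running.get(workload) != image:
                self.running[workload] = image
                self.outbox.append((workload, f"running {image}"))

    def restart(self) -> None:
        """Simulate a node reboot: workloads stop but the local cache survives."""
        self.running.clear()
        self.reconcile()

    def flush_reports(self) -> List[Tuple[str, str]]:
        """Upload queued status reports to the cloud, but only when connected."""
        if not self.online:
            return []
        sent = list(self.outbox)
        self.outbox.clear()
        return sent


if __name__ == "__main__":
    edge = ToyEdgeCore()
    edge.receive_command("etl", "etl:1.0")
    print(edge.flush_reports())  # [('etl', 'running etl:1.0')]
    edge.online = False
    edge.restart()               # recovers from the local cache without the cloud
    print(edge.running)          # {'etl': 'etl:1.0'}
    print(edge.flush_reports())  # []
    edge.online = True
    print(edge.flush_reports())  # [('etl', 'running etl:1.0')]
```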

3. Testing of the Framework

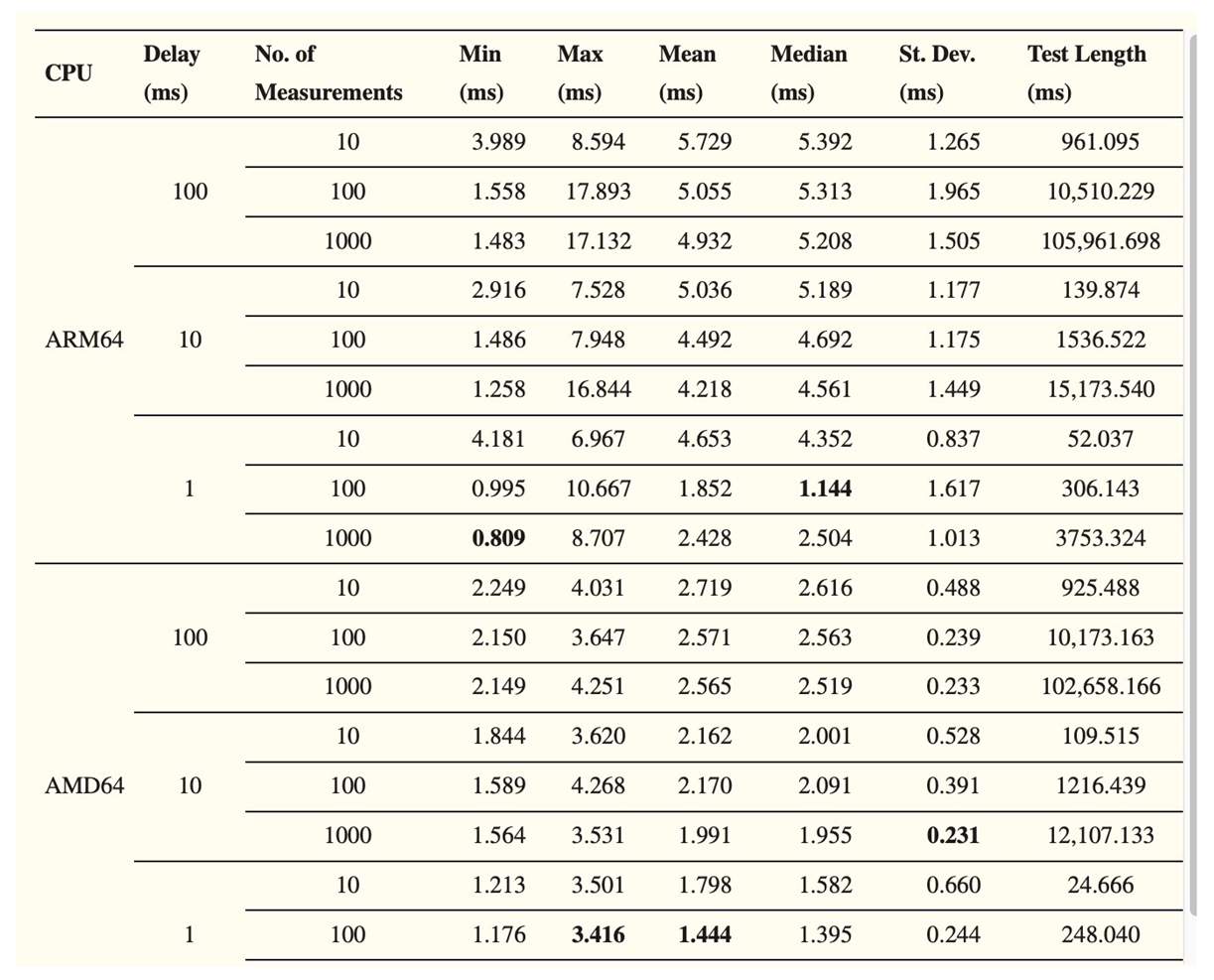

In this experimental evaluation, we conducted a comprehensive analysis of the edge device framework, focusing on the round-trip time (RTT) of its ETL services. We tested different data volumes (from 10 to 1000 values) and different delays (1 ms, 10 ms, and 100 ms) to simulate different scenarios. For example, a short burst of 10 values and a long burst of 1000 values, each with a 1 ms delay, represent industrial sensors that require an immediate response. In contrast, longer delays represent commercial IoT devices such as temperature sensors.

Table 1.

Testing Parameters for Edge Device Framework Evaluation.


| Test Scenario | Data Volume (values) | Latency | Hardware Platform | CPU Cores | RAM |
|---|---|---|---|---|---|
| Short Burst | 10 | 1 ms | 64-bit ARM | 8 | 8192 MB |
| Long Burst | 1000 | 1 ms | 64-bit ARM | 8 | 8192 MB |
| Short Burst | 10 | 1 ms | 64-bit x86 | 8 | 8192 MB |
| Long Burst | 1000 | 1 ms | 64-bit x86 | 8 | 8192 MB |
| Long Delay | 1000 | 10 ms | 64-bit ARM | 8 | 8192 MB |
| Long Delay | 1000 | 100 ms | 64-bit x86 | 8 | 8192 MB |

To verify the efficiency and performance of the framework on different modern hardware platforms, we ran the tests on two platforms: first on a 64-bit ARM processor and then on a 64-bit x86 processor. Each test environment was allocated eight CPU cores and 8192 MB of RAM, so that the framework's performance could be compared across platforms. Because the experiments focused on the ETL services within our proposed edge device framework, and using real IoT devices would have introduced problems such as insufficient computing resources and limited control over data transfer rates, we excluded physical IoT devices from the test environment. Instead, we designed a test container that simulates IoT devices and allows the data transfer rate and volume to be adjusted easily.
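The test setup, a container that emulates an IoT device with an adjustable number of values and inter-value delay, can be approximated in a few lines. The sketch below runs the simulated device and a stand-in ETL stage as threads connected by in-process queues, timestamps each value when it is sent, records the round-trip time when the acknowledgement returns, and reports the median, mean, and standard deviation used in the results that follow. The queue-based transport and the trivial transform step are assumptions made for illustration; the actual framework communicates between containers, so absolute RTT values from this sketch are not comparable with the paper's measurements.

```python
import queue
import statistics
import threading
import time
from typing import Dict, List


def etl_stage(inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Stand-in ETL service: transform each value and acknowledge it."""
    while True:
        item = inbox.get()
        if item is None:  # sentinel: the simulated device has finished
            break
        seq, sent_at, value = item
        transformed = value * 2  # placeholder transform step
        outbox.put((seq, sent_at, transformed))


def simulate_device(n_values: int, delay_s: float) -> List[float]:
    """Send n_values with delay_s between them; return RTTs in milliseconds."""
    to_etl: queue.Queue = queue.Queue()
    from_etl: queue.Queue = queue.Queue()
    worker = threading.Thread(target=etl_stage, args=(to_etl, from_etl), daemon=True)
    worker.start()

    rtts: List[float] = []
    for seq in range(n_values):
        to_etl.put((seq, time.perf_counter(), float(seq)))
        _, sent_at, _ = from_etl.get()  # wait for the acknowledgement
        rtts.append((time.perf_counter() - sent_at) * 1000.0)
        time.sleep(delay_s)  # configurable inter-value delay, as in Table 1
    to_etl.put(None)
    worker.join()
    return rtts


def summarize(rtts: List[float]) -> Dict[str, float]:
    """Median, mean and sample standard deviation of the RTTs (ms)."""
    return {
        "median": statistics.median(rtts),
        "mean": statistics.mean(rtts),
        "stdev": statistics.stdev(rtts) if len(rtts) > 1 else 0.0,
    }


if __name__ == "__main__":
    # Short burst: 10 values, 1 ms apart.
    print(summarize(simulate_device(10, 0.001)))
```

Reporting the median alongside the mean, as the results below do, makes it easier to spot skew caused by occasional slow round trips.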

3.1. The Test Environment

To fully evaluate our framework, we compared its effectiveness and performance on two hardware platforms: 64-bit ARM CPUs and 64-bit x86 CPUs. We chose these two platforms because they represent the two most common CPU architectures in edge computing, which lets us check the framework's compatibility. In addition, we wanted to test whether the framework's performance differs significantly between hardware platforms.

During testing, we designed several scenarios to see how these platforms perform under different workloads. These include short-burst data streams and long-delay scenarios that simulate the variety of situations encountered in real-world applications. In this way, we gain insight into how the framework performs under different conditions.

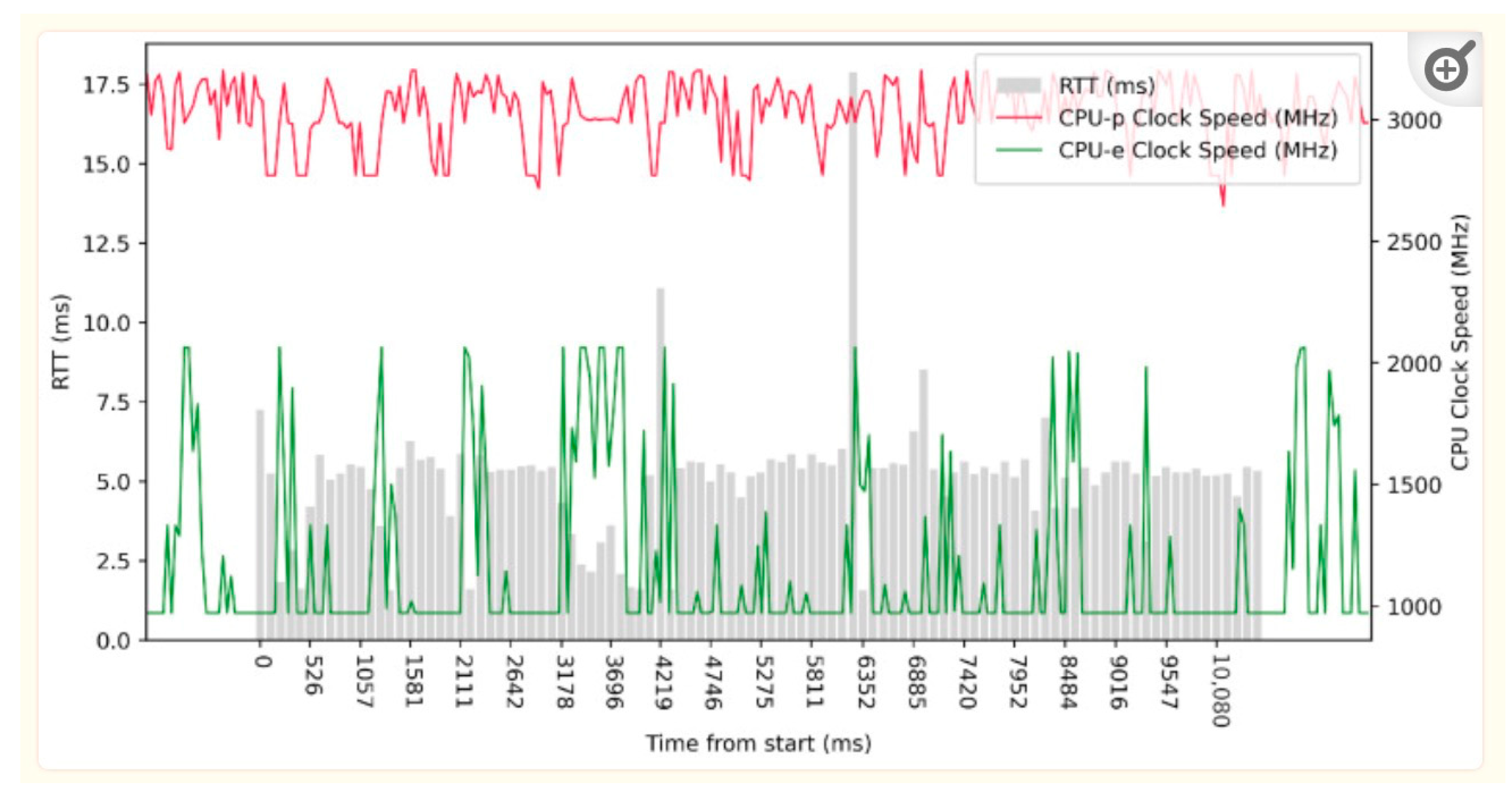

Table 2 shows the different platforms used in our tests. The Apple M1 CPU is based on the ARM architecture and uses a big.LITTLE-style heterogeneous design that combines high-performance Firestorm cores (CPU-P) with energy-efficient Icestorm cores (CPU-E). This design allows tasks to move between core types to balance performance and energy consumption. We also considered other hardware platforms to ensure that the test results are broadly applicable. Through these tests, we aim to determine how the framework performs under various workloads and to provide a basis for future optimization and improvement.

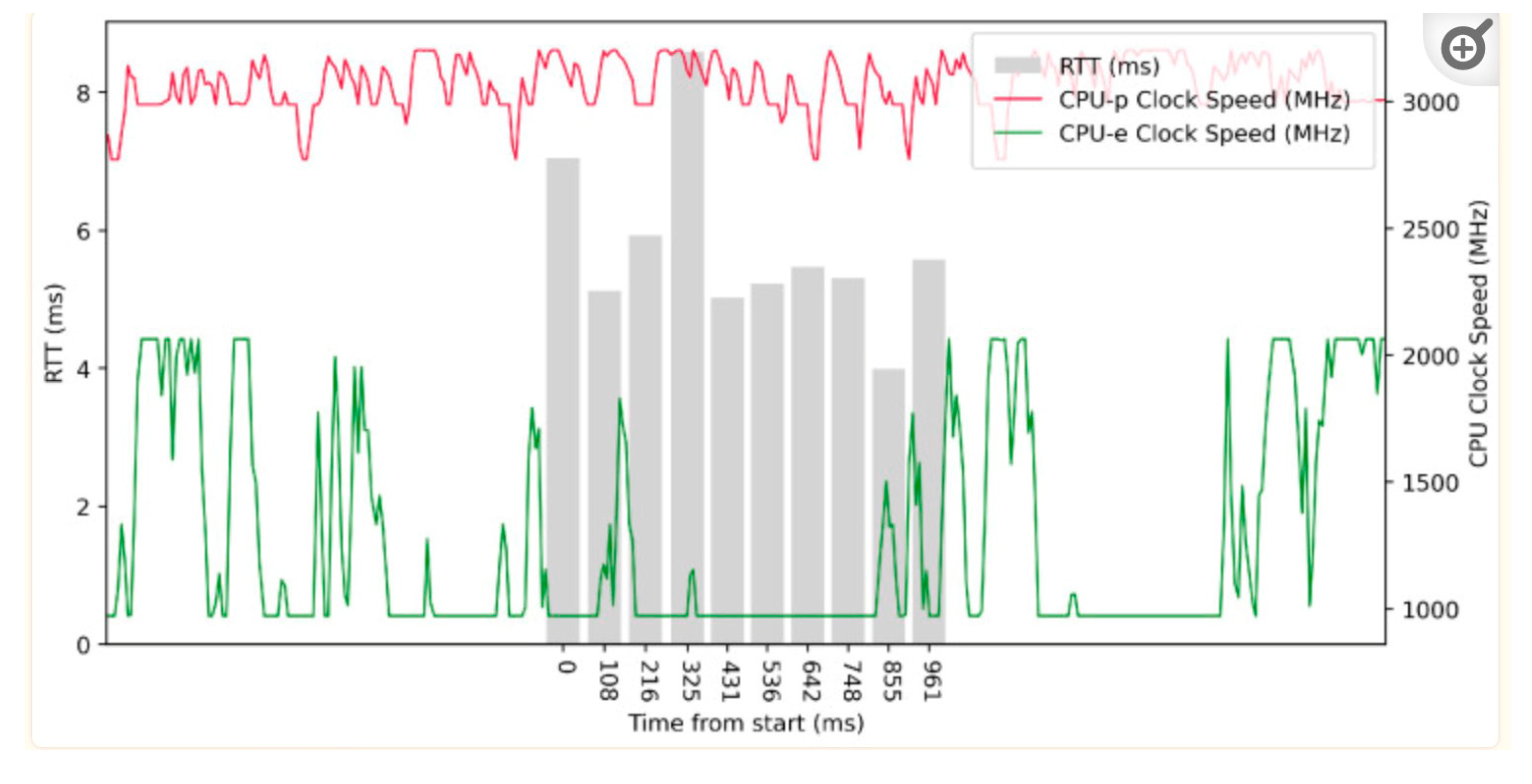

3.2. Tests on ARM64 with 100 ms Delay

In the first test, 10 RTT measurements were taken across the ETL servers with a 100 ms delay between values. The median was 5.392 ms and the mean 5.729 ms, indicating a roughly symmetric distribution, and the standard deviation was 1.265 ms. The detailed results are shown in Figure 8. Throughout the test, the efficiency cores showed no significant increase in activity, whereas the performance cores varied considerably at high frequency.

In the second test, 100 RTT measurements were taken across the ETL servers, again with a 100 ms delay. The median was 5.313 ms and the mean only 5.055 ms, indicating that most measurements lie close to the central value. The standard deviation was 1.965 ms, again consistent with a roughly symmetric distribution, as shown in Table 3. The results show that RTT decreases significantly when the efficiency cores process the data at their maximum speed, while the performance cores consistently maintain a high frequency.

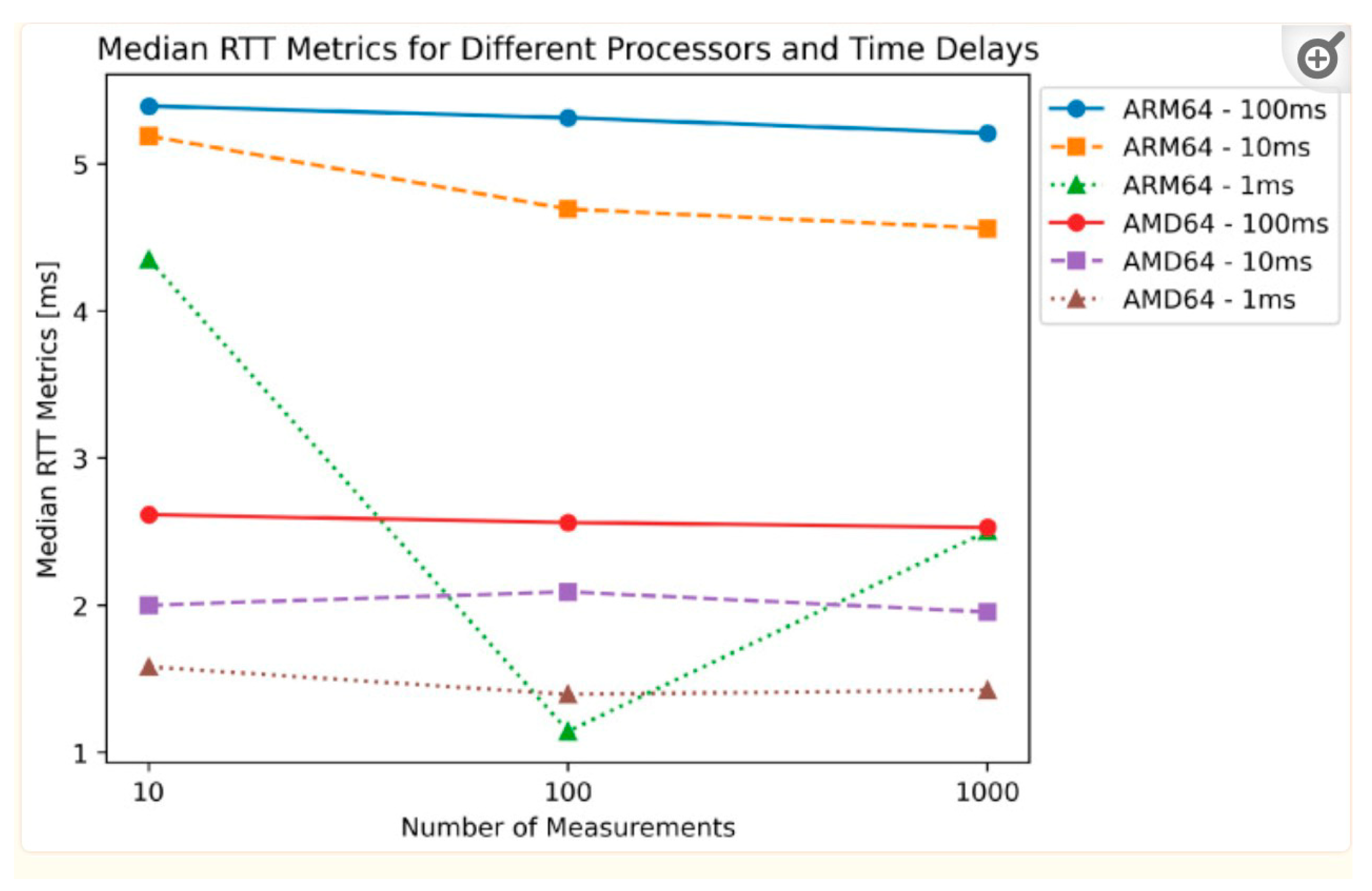

3.4. Tests on ARM64 with 10 ms Delay

In the remaining tests of this experiment, we performed multiple RTT measurements on the ARM64 platform with a 10 ms delay. Compared with the first test, the median decreased by 0.203 ms, indicating an improvement in RTT. The fifth and sixth tests showed a similar trend, with medians of 4.692 ms and 4.561 ms, respectively, suggesting that RTT tends to decrease further as the number of measurements increases.

These results have important implications for edge computing and IoT applications. As data transfers between edge devices and the cloud become more efficient, low RTT supports real-time data processing and meets the need of smart devices for rapid responses. For example, in industrial IoT scenarios, monitoring and analyzing data in real time can significantly improve operational efficiency and response speed. These tests therefore provide data to support future optimization of the edge computing framework, helping ensure that it can handle the diverse needs of IoT applications.

3.5. Tests on AMD64 with 10 ms Delay

In this experiment, we made several measurements of RTT (round trip time), focusing specifically on the performance at a 10-millisecond delay. In the fourth test, 10 measurements were taken, and the results showed a mean RTT of 2.162 ms, a median of 2.001 ms, and a standard deviation of 0.538 ms. This shows that the shorter delay significantly reduces the RTT value compared to the 100 ms delay of the first test.

As with the ARM64 results, these findings matter for edge computing and IoT applications. With lower RTT, the system can process data from various IoT devices more quickly, which is critical for application scenarios that require real-time reactions. For example, in intelligent manufacturing and automated monitoring, fast data transmission and processing can significantly improve the system's response speed and efficiency. This experiment therefore provides empirical support for optimizing the edge computing framework so that it maintains efficient and stable performance across diverse IoT applications.

3.6. Discussion

Our test results provide important insights into ETL service performance and efficiency across different hardware platforms and configurations. By running simulated IoT devices in test containers, we were able to focus on data processing speed and on communication between ETL services while controlling the transfer speed and other test parameters. In addition, testing the framework on ARM64 and x86-64 processors allowed us to evaluate its performance and compatibility on modern hardware architectures.

The results show that the proposed framework performs well under different conditions (see Figure 10), and RTT remained within an acceptable range throughout the tests. The tests also showed that the framework can handle multiple data transfer speeds and measurement counts, demonstrating its scalability and adaptability in various IoT deployment scenarios. This is especially important for edge computing, where devices often need to process large amounts of data in real time for fast response and decision-making.

However, although our test environment is relatively comprehensive, it does not cover all possible scenarios. Further testing with real-world network devices and different network conditions may reveal additional challenges and directions for optimization. Comparing the RTT performance of ARM64 and AMD64 processors, we found that the median RTT on ARM64 decreased more as the number of measurements increased, while AMD64 maintained consistently low latency across all test scenarios, underscoring its suitability for real-time applications that require low latency. These results provide a useful reference for selecting hardware for specific use cases in edge computing and IoT.

The final test showed that even at the highest CPU frequency, the processing speed was not sufficient to finish processing existing data before new data arrived, so the mean and median RTT increased as the processing queue and processing time grew. While the proposed framework shows good efficiency and performance in data processing and communication between ETL services, further testing with real IoT devices, networks, and analytics services is needed to better understand its potential in different IoT scenarios. In addition, because single-board computers (SBCs) do not share a uniform CPU architecture, our tests on ARM64 and AMD64 CPUs show that it is critical to ensure the framework works on both architectures. Future research should explore whether the differences between architectures also apply to smaller devices.
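The effect described above, where values arrive faster than they can be processed so that the queue and the measured latencies keep growing, follows from a basic single-server FIFO queue. The sketch below applies Lindley's recursion for waiting times, W(n+1) = max(0, W(n) + S - A), where S is the processing time per value and A the gap between arrivals. The constant timings are hypothetical and chosen only to contrast a stable case with an overloaded one; this is not a model of the framework's internals.

```python
from typing import List


def waiting_times(n: int, interarrival_ms: float, service_ms: float) -> List[float]:
    """Waiting time (ms) of each of n values in a FIFO single-server queue.

    Lindley's recursion with constant interarrival and service times:
    W[0] = 0, W[k+1] = max(0, W[k] + service - interarrival).
    """
    if n <= 0:
        return []
    waits = [0.0]
    for _ in range(n - 1):
        waits.append(max(0.0, waits[-1] + service_ms - interarrival_ms))
    return waits


def latencies(n: int, interarrival_ms: float, service_ms: float) -> List[float]:
    """End-to-end latency = time spent waiting in the queue + processing time."""
    return [w + service_ms for w in waiting_times(n, interarrival_ms, service_ms)]


if __name__ == "__main__":
    # Stable: a value arrives every 10 ms and takes 4 ms to process.
    print(latencies(5, 10.0, 4.0))  # [4.0, 4.0, 4.0, 4.0, 4.0]
    # Overloaded: a value arrives every 1 ms but takes 1.5 ms to process.
    print(latencies(5, 1.0, 1.5))   # [1.5, 2.0, 2.5, 3.0, 3.5]
```

Whenever processing time exceeds the inter-arrival gap, latency grows linearly with the number of values, which raises both the mean and the median of a test run.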

Several research challenges remain in edge computing. The use of a wide variety of devices complicates task offloading and load balancing. For latency-sensitive tasks, the offloading mechanism must consider the distance to available nodes, their current load, and their potential performance. Differences in device performance make load balancing more difficult, and compatibility must also be considered, because not all devices can perform the required tasks and the system must adapt to the capabilities of the remaining nodes. In mobile edge computing, the system must anticipate the movement of devices or users and ensure that services are deployed in time, especially where users gather, so services need to be scaled up and down dynamically. The security of edge computing also needs attention: some devices may not support the security tools used by others, and diverse devices require specific protection measures. A single vulnerable device can compromise the entire network, so data must be protected throughout its journey from the sensor to the cloud to prevent interception at any step.
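The offloading considerations listed above (distance, current load, node performance, and whether a node can run the task at all) can be combined into a simple greedy rule: among capable nodes, pick the one with the lowest estimated completion time. The cost model below, network round-trip time plus queued and new work divided by compute speed, is a hypothetical simplification rather than a mechanism proposed in this paper, and the node names and numbers are illustrative.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, Optional, Sequence


@dataclass
class Node:
    name: str
    rtt_ms: float        # network round-trip time to the node ("distance")
    queued_work: float   # work already waiting on the node (abstract units)
    speed: float         # work units the node completes per millisecond
    capabilities: FrozenSet[str] = field(default_factory=frozenset)


def estimated_completion_ms(node: Node, task_work: float) -> float:
    """Network latency + time to drain existing load + time to run the task."""
    return node.rtt_ms + (node.queued_work + task_work) / node.speed


def choose_node(nodes: Sequence[Node], task_work: float, required: str) -> Optional[Node]:
    """Return the capable node with the lowest estimated completion time, or None."""
    capable = [n for n in nodes if required in n.capabilities and n.speed > 0]
    if not capable:
        return None
    return min(capable, key=lambda n: estimated_completion_ms(n, task_work))


if __name__ == "__main__":
    nodes = [
        Node("gateway", 1.0, 80.0, 10.0, frozenset({"cpu"})),
        Node("edge-server", 5.0, 20.0, 40.0, frozenset({"cpu", "gpu"})),
        Node("cloud", 60.0, 0.0, 200.0, frozenset({"cpu", "gpu"})),
    ]
    best = choose_node(nodes, task_work=40.0, required="gpu")
    print(best.name if best else "no capable node")  # edge-server
```

A greedy rule like this ignores future arrivals and user mobility, which is exactly where the harder research questions raised above begin.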

4. Conclusion

Edge computing is an innovative computing model whose core idea is to move data processing and data storage to the edge of the network, where data is generated. This model not only significantly reduces the delay caused by data transmission distance but also improves the timeliness and efficiency of data processing. In traditional cloud computing architectures, data often has to be transferred to remote data centers for processing, which inevitably introduces delay because of physical distance. Edge computing avoids this problem by performing computation near the data source. The rise of edge computing stems from the urgent need for real-time data processing and low-latency operation. With the proliferation of IoT devices and sensors, traditional cloud computing infrastructure is struggling to cope with the sheer volume of data generated at the edge.

Edge computing provides an efficient solution that enables data to be processed immediately where it is generated, speeding up the decision-making process and optimizing the use of network resources. With the advancement of technology, edge computing has evolved into a variety of forms to adapt to different application scenarios and industry needs. It plays a vital role in smart cities, autonomous driving, industrial automation, telemedicine and other fields. The architecture of edge computing is also evolving, including edge servers, fog computing, and the integration of distributed computing models.

Because edge computing is a rapidly growing field, the lack of standardization in practical applications has hindered its development, and the diversity of edge devices that collect and generate data is also proving to be a barrier. In this work, we first examined tools that can help develop and deploy edge computing solutions, such as Docker, Kubernetes, and Terraform. We then described the architecture of our data processing pipeline and the framework that ties the pipeline together. Containerization makes it easy to deploy, scale, and monitor tasks using an orchestrator, and different parts of the pipeline can be written in different languages or in different versions of the same language. We also created a unified test platform that can be used to evaluate and compare the performance of edge devices.

The flexibility of edge computing devices and AI model architectures enables organizations to combine the centralized benefits of cloud computing with the decentralized nature of edge computing to build more efficient and agile computing environments. The rise of edge computing reflects a central requirement of our highly connected, data-driven era: the constant pursuit of efficient, real-time data processing. Ongoing technological innovation, together with the rapid growth of IoT devices and the steady emergence of new applications, continues to drive the advancement of edge computing as a key element of modern computing architectures.

References

- Huh, Jun-Ho, and Yeong-Seok Seo. "Understanding edge computing: Engineering evolution with artificial intelligence." IEEE Access 7 (2019): 164229-164245.

- Fragkos, Georgios, Sean Lebien, and Eirini Eleni Tsiropoulou. "Artificial intelligent multi-access edge computing servers management." IEEE Access 8 (2020): 171292-171304.

- Slama, Sami Ben. "Prosumer in smart grids based on intelligent edge computing: A review on Artificial Intelligence Scheduling Techniques." Ain Shams Engineering Journal 13.1 (2022): 101504.

- Gong, Chao, et al. "Intelligent cooperative edge computing in internet of things." IEEE Internet of Things Journal 7.10 (2020): 9372-9382.

- Wen, X., Shen, Q., Zheng, W., & Zhang, H. (2024). AI-Driven Solar Energy Generation and Smart Grid Integration A Holistic Approach to Enhancing Renewable Energy Efficiency. International Journal of Innovative Research in Engineering and Management, 11(4), 55-55.

- Lou, Q. (2024). New Development of Administrative Prosecutorial Supervision with Chinese Characteristics in the New Era. Journal of Economic Theory and Business Management, 1(4), 79-88.

- Liu, Y., Tan, H., Cao, G., & Xu, Y. (2024). Enhancing User Engagement through Adaptive UI/UX Design: A Study on Personalized Mobile App Interfaces.

- Xu, H., Li, S., Niu, K., & Ping, G. (2024). Utilizing Deep Learning to Detect Fraud in Financial Transactions and Tax Reporting. Journal of Economic Theory and Business Management, 1(4), 61-71.

- Carvalho, G., Cabral, B., Pereira, V., & Bernardino, J. (2020). Computation offloading in edge computing environments using artificial intelligence techniques. Engineering Applications of Artificial Intelligence, 95, 103840.

- Liu, Y., Tan, H., Cao, G., & Xu, Y. (2024). Enhancing User Engagement through Adaptive UI/UX Design: A Study on Personalized Mobile App Interfaces.

- Huang, D., Yang, M., Wen, X., Xia, S., & Yuan, B. (2024). AI-Driven Drug Discovery: Accelerating the Development of Novel Therapeutics in Biopharmaceuticals. Journal of Knowledge Learning and Science Technology ISSN: 2959-6386 (online), 3(3), 206-224.

- Li, S., Xu, H., Lu, T., Cao, G., & Zhang, X. (2024). Emerging Technologies in Finance: Revolutionizing Investment Strategies and Tax Management in the Digital Era. Management Journal for Advanced Research, 4(4), 35-49.

- Shi J, Shang F, Zhou S, et al. Applications of Quantum Machine Learning in Large-Scale E-commerce Recommendation Systems: Enhancing Efficiency and Accuracy[J]. Journal of Industrial Engineering and Applied Science, 2024, 2(4): 90-103.

- Ji, H., Alfarraj, O., & Tolba, A. (2020). Artificial intelligence-empowered edge of vehicles: architecture, enabling technologies, and applications. IEEE Access, 8, 61020-61034.

- Yang, M., Huang, D., Zhang, H., & Zheng, W. (2024). AI-Enabled Precision Medicine: Optimizing Treatment Strategies Through Genomic Data Analysis. Journal of Computer Technology and Applied Mathematics, 1(3), 73-84.

- Wang, S., Zheng, H., Wen, X., & Fu, S. (2024). Distributed High-Performance Computing Methods for Accelerating Deep Learning Training. Journal of Knowledge Learning and Science Technology, 3(3), 108-126.

- Lei, H., Wang, B., Shui, Z., Yang, P., & Liang, P. (2024). Automated Lane Change Behavior Prediction and Environmental Perception Based on SLAM Technology. arXiv preprint arXiv:2404.04492.

- Singh, S. K., Rathore, S., & Park, J. H. (2020). BlockIoTIntelligence: A blockchain-enabled intelligent IoT architecture with artificial intelligence. Future Generation Computer Systems, 110, 721-743.

- Ahmed, I., Zhang, Y., Jeon, G., Lin, W., Khosravi, M. R., & Qi, L. (2022). A blockchain- and artificial intelligence-enabled smart IoT framework for sustainable city. International Journal of Intelligent Systems, 37(9), 6493-6507.

- Ghosh, A., Chakraborty, D., & Law, A. (2018). Artificial intelligence in Internet of things. CAAI Transactions on Intelligence Technology, 3(4), 208-218.

- Xiao, J., Wang, J., Bao, W., Deng, T., & Bi, S. (2024). Application progress of natural language processing technology in financial research. Financial Engineering and Risk Management, 7(3), 155-161.

- Li, J., Wang, Y., Xu, C., Liu, S., Dai, J., & Lan, K. (2024). Bioplastic derived from corn stover: Life cycle assessment and artificial intelligence-based analysis of uncertainty and variability. Science of The Total Environment, 174349.

- Wang, S., Zhu, Y., Lou, Q., & Wei, M. (2024). Utilizing Artificial Intelligence for Financial Risk Monitoring in Asset Management. Academic Journal of Sociology and Management, 2(5), 11-19.

- Shen, Q., Wen, X., Xia, S., Zhou, S., & Zhang, H. (2024). AI-Based Analysis and Prediction of Synergistic Development Trends in US Photovoltaic and Energy Storage Systems. International Journal of Innovative Research in Computer Science & Technology, 12(5), 36-46.

- Zhu, Y., Yu, K., Wei, M., Pu, Y., & Wang, Z. (2024). AI-Enhanced Administrative Prosecutorial Supervision in Financial Big Data: New Concepts and Functions for the Digital Era. Social Science Journal for Advanced Research, 4(5), 40-54.

- Li, H., Zhou, S., Yuan, B., & Zhang, M. (2024). Optimizing Intelligent Edge Computing Resource Scheduling Based on Federated Learning. Journal of Knowledge Learning and Science Technology, 3(3), 235-260.

- Pu, Y., Zhu, Y., Xu, H., Wang, Z., & Wei, M. (2024). LSTM-Based Financial Statement Fraud Prediction Model for Listed Companies. Academic Journal of Sociology and Management, 2(5), 20-31.

- Wen, X., Shen, Q., Zheng, W., & Zhang, H. (2024). AI-Driven Solar Energy Generation and Smart Grid Integration: A Holistic Approach to Enhancing Renewable Energy Efficiency. International Journal of Innovative Research in Engineering and Management, 11(4), 55-55.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).