Submitted: 09 October 2024

Posted: 10 October 2024


Abstract

Keywords:

1. Introduction

Contributions of This Study

- Application of Deep Learning Models: This study provides an initial analysis of how effective deep learning models are at exchange rate prediction, using mean squared error (MSE) and mean absolute error (MAE) as the key metrics for identifying the best-performing models.

- Enhancement of Predictive Performance: To improve the accuracy of the forecasting models, this study employs several techniques, including feature selection, which reduces redundancy among the candidate indicators and retains the subset of features most relevant to exchange rate forecasting.

- Analysis of Influential Factors Over Time: By applying attention mechanisms, this study enhances the interpretability of machine learning models, offering insights into how different factors influence exchange rate predictions across different periods. This analysis aims to uncover which aspects of economic data the models prioritize during the prediction process, thereby providing a more nuanced understanding of the underlying dynamics.
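
The attention-based interpretability described in the last contribution rests on attention weights: for a given query, each past time step receives a softmax-normalized score, and larger weights mark the steps the model draws on most. The following is a minimal sketch of standard scaled dot-product attention weights in plain Python. The function name and the toy vectors are hypothetical; in a trained model the queries and keys would come from learned projections of the input features, which are omitted here.

```python
from math import exp, sqrt


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over a sequence of keys.

    query: list of floats of dimension d.
    keys:  list of key vectors, one per time step, each of dimension d.
    Returns softmax(q . k_t / sqrt(d)) for every time step t; the weights sum to 1.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / sqrt(d) for key in keys]
    # Subtract the maximum score before exponentiating for numerical stability.
    peak = max(scores)
    exps = [exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical toy vectors standing in for three encoded past time steps.
weights = attention_weights([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
print([round(w, 3) for w in weights])
```

The time step whose key is most aligned with the query receives the largest weight, which is what makes these weights usable as a rough indicator of which periods the model emphasizes.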

2. Methods

2.1. Data Collection and Preprocessing

| Indicator | Description | Indicator Name |
|---|---|---|
| Exchange Rate | Offshore Spot RMB Closing Price | rate |
| Long-term Bond Yield Differential (U.S.) | 10-Year Government Bond Yield - U.S. Federal Funds Rate | udr |
| CPI (U.S.) | U.S. Consumer Price Index | cpiu |
| CSI 300 Index | CSI 300 Index | HS300 |
| Import and Export Value | Current Month Import and Export Value | trade |
| Import Value | Current Month Import Value | inputu |
| M2 (U.S.) | M2 in the United States | m2u2 |
| EUR/USD | EUR/USD Exchange Rate | EURUSD |
| AUD/USD | AUD/USD Exchange Rate | AUDUSD |
| USDX | U.S. Dollar Index Published by ICE | USDX |

| Category | Indicator | Description | Indicator Name |
|---|---|---|---|
| Fundamental Data | Exchange Rate | Offshore Spot RMB Closing Price | rate |
| | Interest Rate | U.S. Federal Funds Rate | rusa |
| | Interest Rate | PBoC Benchmark Deposit Rate | rchn |
| | Interest Rate Differential | Interest Rate Differential Between Two Countries | dr |
| | Long-term Bond Yield Differential (China) | 10-Year Government Bond Yield - Bank Lending Rate | ydr |
| | Long-term Bond Yield Differential (U.S.) | 10-Year Government Bond Yield - U.S. Federal Funds Rate | udr |
| | CPI (U.S.) | U.S. Consumer Price Index | cpiu |
| | CPI (China) | China Consumer Price Index | cpic |
| | Consumer Confidence Index | China Consumer Confidence Index | ccp |
| Stock Index Data | Dow Jones Index | Dow Jones Index | dowjones |
| | MSCI China Index | MSCI China Index | MSCIAAshare |
| | CSI 300 Index | CSI 300 Index | HS300 |
| | S&P 500 China Index | S&P 500 China Index | sprd30.ci |
| | Nasdaq China Index | Nasdaq China Index | nyseche |
| | Hang Seng China Enterprises Index | Hang Seng China Enterprises Index | hscei.hi |
| Current Account | Import and Export Value | Current Month Import and Export Value | trade |
| | Export Value | Current Month Export Value | output |
| | Import Value | Current Month Import Value | inputu |
| Capital Account | FDI | Foreign Direct Investment | fdix |
| | FDI | Actual Foreign Investment in the Current Month | fdi |
| | PI | Portfolio Investment | PI |
| | OI | Other Investment | OI |
| | Short-term International Capital Flow | Foreign Exchange Reserves Change in the Current Month Net Current Account Balance | cf |
| Currency Market | M2 (China) | M2 in China | cm2 |
| | M2 Growth (China) | M2 Growth in China | dm2 |
| | M2 (U.S.) | M2 in the United States | um2 |
| Exchange Rates | USD/JPY | USD/JPY Exchange Rate | USDJPY |
| | GBP/USD | GBP/USD Exchange Rate | GBPUSD |
| | EUR/CHN | EUR/CHN Exchange Rate | EURCHN |
| | EUR/USD | EUR/USD Exchange Rate | EURUSD |
| | AUD/USD | AUD/USD Exchange Rate | AUDUSD |
| | USD/CAD | USD/CAD Exchange Rate | USDCAD |
| | USD/CHF | USD/CHF Exchange Rate | USDCHF |
| U.S. Dollar Index | USDX | U.S. Dollar Index Published by ICE | USDX |

| Category | Indicator | Description | Indicator Name |
|---|---|---|---|
| Fundamental Data | Exchange Rate | Offshore Spot RMB Closing Price | rate |
| | Long-term Bond Yield Differential (U.S.) | 10-Year Government Bond Yield - U.S. Federal Funds Rate | udr |
| | CPI (U.S.) | U.S. Consumer Price Index | cpiu |
| Stock Index Data | CSI 300 Index | CSI 300 Index | HS300 |
| Current Account | Import and Export Value | Current Month Import and Export Value | trade |
| | Import Value | Current Month Import Value | inputu |
| Currency Market | M2 (U.S.) | M2 in the United States | um2 |
| Exchange Rates | EUR/USD | EUR/USD Exchange Rate | EURUSD |
| | AUD/USD | AUD/USD Exchange Rate | AUDUSD |
| U.S. Dollar Index | USDX | U.S. Dollar Index Published by ICE | USDX |

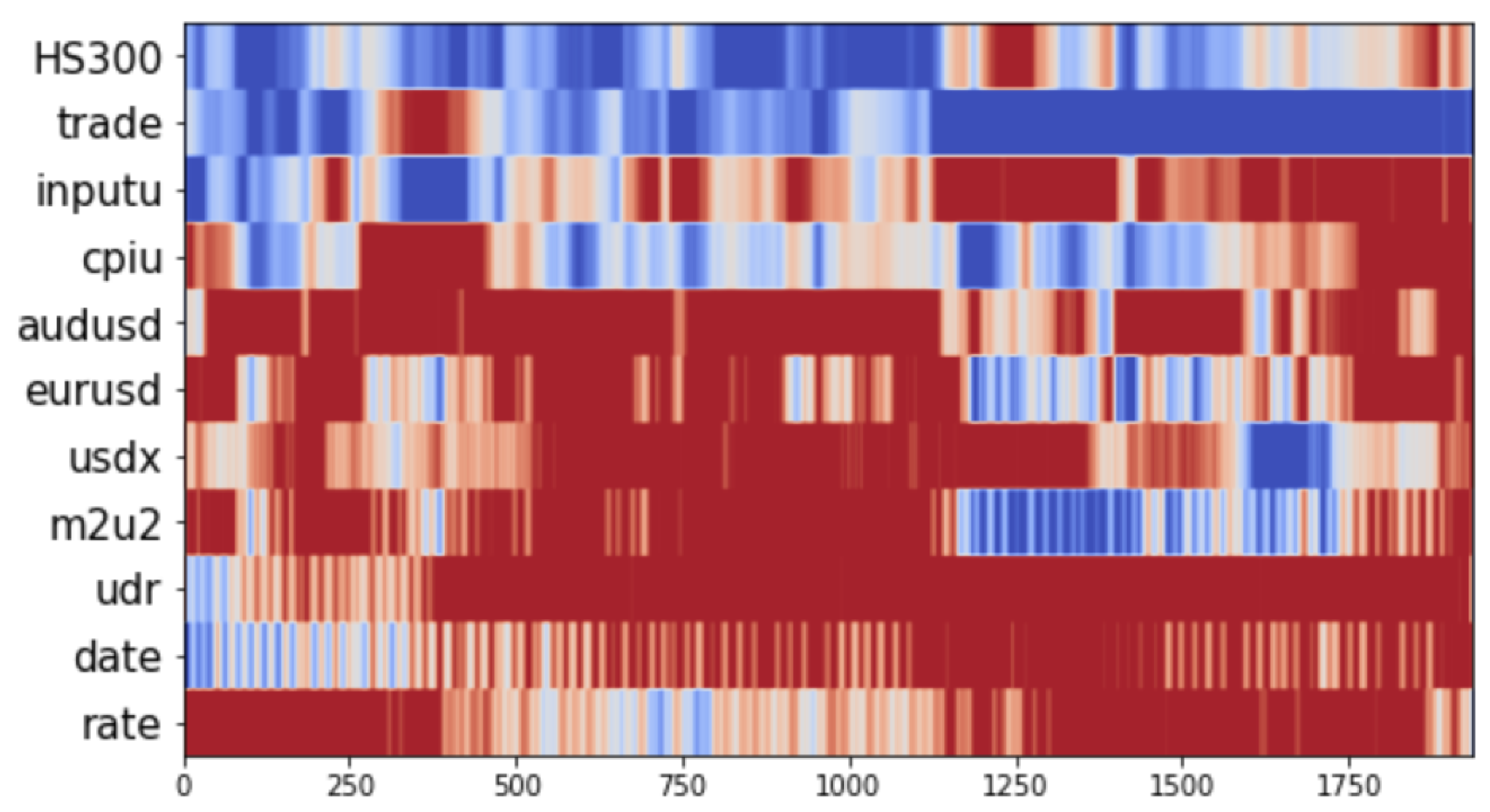
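
The preprocessing steps themselves are not described in this excerpt. Since the results are reported for prediction lengths of 16 to 64 steps, one standard way to prepare such data, assumed here rather than taken from the paper, is to slice the aligned multivariate indicator series into overlapping input/target windows. In the sketch below, `make_windows`, the lookback length, and placing the forecast variable in column 0 are all hypothetical choices.

```python
def make_windows(rows, lookback, horizon, target_col=0):
    """Split a multivariate series into (input window, target window) pairs.

    rows:       list of per-time-step feature vectors (lists of floats).
    lookback:   number of past steps fed to the model.
    horizon:    number of future steps to predict (the prediction length).
    target_col: index of the forecast variable inside each feature vector.
    """
    if lookback < 1 or horizon < 1:
        raise ValueError("lookback and horizon must be positive")
    pairs = []
    # Each window start yields `lookback` inputs followed by `horizon` targets.
    for start in range(len(rows) - lookback - horizon + 1):
        inputs = rows[start:start + lookback]
        future = rows[start + lookback:start + lookback + horizon]
        targets = [step[target_col] for step in future]
        pairs.append((inputs, targets))
    return pairs


# Hypothetical toy series: column 0 plays the exchange rate, column 1 a covariate.
series = [[float(t), 10.0 * t] for t in range(10)]
windows = make_windows(series, lookback=4, horizon=2)
print(len(windows))     # → 5
print(windows[0][1])    # → [4.0, 5.0]
```

In a real pipeline the windows would typically be split chronologically into training, validation, and test sets so that no future values leak into training.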

2.2. Feature Selection
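
This excerpt does not state which algorithm reduced the full indicator set in Section 2.1 to the selected indicators. As an illustration only, assuming a correlation-based redundancy filter (a common choice, not confirmed as the authors' method), the sketch below ranks candidates by absolute Pearson correlation with the target and greedily drops any candidate that is highly correlated with a feature already kept. The 0.9 threshold, the function names, and the toy data are hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0.0 or sd_y == 0.0:
        return 0.0
    return cov / (sd_x * sd_y)


def select_features(features, target, threshold=0.9):
    """Greedy redundancy filter (hypothetical illustration).

    features: dict mapping indicator name -> list of values.
    target:   list of target values (e.g. the exchange rate).
    Candidates are visited in order of decreasing |correlation with the target|;
    a candidate is kept only if its |correlation| with every feature already
    kept is below `threshold`.
    """
    ranked = sorted(features, key=lambda name: abs(pearson(features[name], target)), reverse=True)
    kept = []
    for name in ranked:
        if all(abs(pearson(features[name], features[other])) < threshold for other in kept):
            kept.append(name)
    return kept


# Hypothetical toy data: "b" nearly duplicates "a", so it is filtered out.
target = [1.0, 2.0, 3.0, 4.0, 5.0]
candidates = {
    "a": [2.0, 4.0, 6.0, 8.0, 10.0],
    "b": [1.0, 2.0, 3.0, 4.0, 6.0],
    "c": [5.0, 3.0, 4.0, 1.0, 2.0],
}
print(select_features(candidates, target))  # → ['a', 'c']
```

A linear filter like this only catches linear redundancy; model-based or mutual-information criteria are alternatives when relationships are nonlinear.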

2.3. Models

3. Experiments

3.1. Evaluation Metrics

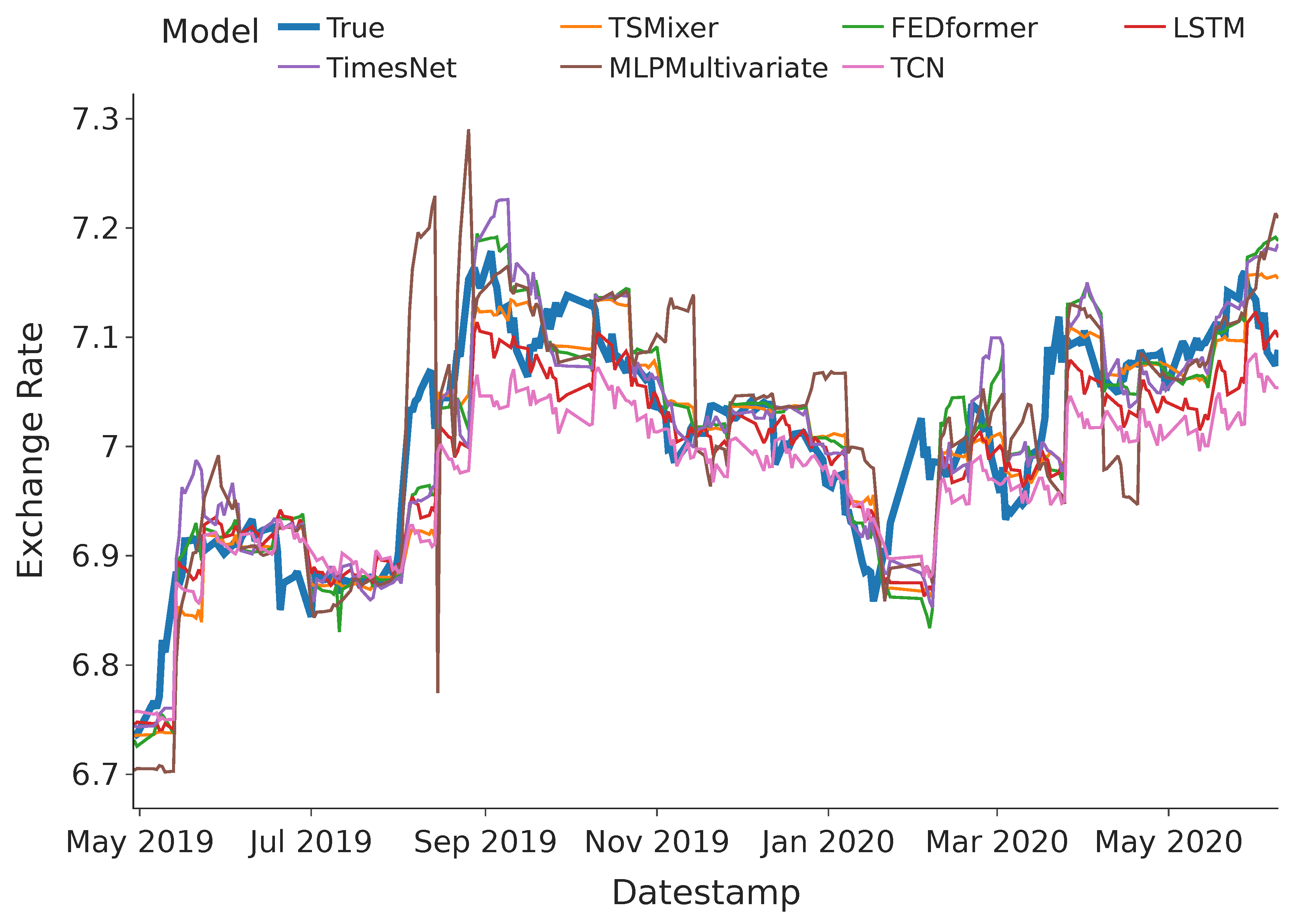
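
The two metrics named in the contributions have standard definitions over n forecast points: MAE = (1/n) Σ |y_i − ŷ_i| and MSE = (1/n) Σ (y_i − ŷ_i)². A minimal plain-Python sketch of both follows; the function names are illustrative and not taken from the authors' code.

```python
def _check(y_true, y_pred):
    """Reject empty or length-mismatched inputs."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")


def mean_absolute_error(y_true, y_pred):
    """MAE = (1/n) * sum(|y_i - yhat_i|)."""
    _check(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    """MSE = (1/n) * sum((y_i - yhat_i)^2)."""
    _check(y_true, y_pred)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


actual = [1.0, 2.0, 3.0]
forecast = [1.0, 3.0, 5.0]
print(mean_absolute_error(actual, forecast))  # → 1.0
print(mean_squared_error(actual, forecast))   # → 1.6666666666666667
```

Because MSE squares each error, it weights occasional large misses more heavily than MAE does. scikit-learn's `sklearn.metrics.mean_absolute_error` and `sklearn.metrics.mean_squared_error` compute the same quantities.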

3.2. Performance Analysis

3.3. Gradient-Based Feature Importance Analysis
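
The exact gradient-based procedure is not given in this excerpt. A common variant, assumed here, scores each input feature by the mean absolute gradient of the model output with respect to that feature (a saliency score). So that the sketch runs without a deep learning framework, it estimates gradients by central finite differences; in practice a framework's automatic differentiation would supply exact gradients. The toy model is hypothetical, and the feature names are borrowed from the selected-indicator table purely as labels.

```python
def input_gradient(model, x, eps=1e-5):
    """Central finite-difference estimate of d model(x) / d x_i for each input i."""
    grads = []
    for i in range(len(x)):
        up = list(x)
        down = list(x)
        up[i] += eps
        down[i] -= eps
        grads.append((model(up) - model(down)) / (2 * eps))
    return grads


def gradient_importance(model, samples, names, eps=1e-5):
    """Mean absolute input gradient per feature over a set of samples."""
    totals = [0.0] * len(names)
    for x in samples:
        for i, g in enumerate(input_gradient(model, x, eps)):
            totals[i] += abs(g)
    return {name: total / len(samples) for name, total in zip(names, totals)}


def toy_model(x):
    """Hypothetical stand-in for a trained forecaster with three inputs."""
    return 3.0 * x[0] - 0.5 * x[1] + x[2] ** 2


samples = [[1.0, 1.0, 0.1], [2.0, 0.0, 0.2]]
scores = gradient_importance(toy_model, samples, ["udr", "cpiu", "HS300"])
print({name: round(value, 3) for name, value in scores.items()})
```

Computing the scores separately over samples drawn from different periods is one way to see how the relative influence of each indicator shifts over time.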

4. Discussion

4.1. Modern Deep Learning Models on Exchange Rate Prediction

4.2. Theoretical and Real-World Implications

5. Limitations and Future Research

6. Conclusions


| Model | MAE (16) | MAE (24) | MAE (32) | MAE (48) | MAE (64) | MSE (16) | MSE (24) | MSE (32) | MSE (48) | MSE (64) |
|---|---|---|---|---|---|---|---|---|---|---|
| TSMixer | 0.032 | 0.039 | 0.045 | 0.054 | 0.063 | 0.002 | 0.003 | 0.004 | 0.006 | 0.007 |
| FEDformer | 0.033 | 0.042 | 0.049 | 0.063 | 0.086 | 0.002 | 0.004 | 0.004 | 0.007 | 0.012 |
| iTransformer | 0.035 | 0.054 | 0.072 | 0.089 | 0.064 | 0.002 | 0.005 | 0.008 | 0.011 | 0.008 |
| PatchTST | 0.039 | 0.060 | 0.056 | 0.082 | 0.125 | 0.003 | 0.011 | 0.006 | 0.012 | 0.034 |
| TimesNet | 0.038 | 0.052 | 0.063 | 0.084 | 0.104 | 0.003 | 0.005 | 0.008 | 0.012 | 0.020 |
| Transformer | 0.042 | 0.057 | 0.067 | 0.088 | 0.095 | 0.004 | 0.007 | 0.008 | 0.014 | 0.015 |
| MLP | 0.053 | 0.074 | 0.124 | 0.103 | 0.233 | 0.006 | 0.013 | 0.043 | 0.017 | 0.108 |
| TCN | 0.049 | 0.080 | 0.149 | 0.128 | 0.063 | 0.004 | 0.009 | 0.026 | 0.020 | 0.007 |
| LSTM | 0.047 | 0.056 | 0.064 | 0.098 | 0.132 | 0.005 | 0.006 | 0.007 | 0.015 | 0.027 |

Column headers give the metric and, in parentheses, the prediction length.
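
The MAE columns of this table can also be summarized programmatically. The sketch below transcribes those values and ranks the models by mean MAE across the five prediction lengths, and it lists the lowest-MAE model(s) at each length with ties kept. Averaging across horizons is a summary choice made here for illustration, not necessarily the authors' criterion.

```python
# MAE values transcribed from the results table, for prediction lengths 16, 24, 32, 48, 64.
HORIZONS = [16, 24, 32, 48, 64]
MAE = {
    "TSMixer": [0.032, 0.039, 0.045, 0.054, 0.063],
    "FEDformer": [0.033, 0.042, 0.049, 0.063, 0.086],
    "iTransformer": [0.035, 0.054, 0.072, 0.089, 0.064],
    "PatchTST": [0.039, 0.060, 0.056, 0.082, 0.125],
    "TimesNet": [0.038, 0.052, 0.063, 0.084, 0.104],
    "Transformer": [0.042, 0.057, 0.067, 0.088, 0.095],
    "MLP": [0.053, 0.074, 0.124, 0.103, 0.233],
    "TCN": [0.049, 0.080, 0.149, 0.128, 0.063],
    "LSTM": [0.047, 0.056, 0.064, 0.098, 0.132],
}


def rank_by_mean(scores):
    """Return (model, mean error) pairs sorted from lowest to highest mean error."""
    means = {model: sum(values) / len(values) for model, values in scores.items()}
    return sorted(means.items(), key=lambda item: item[1])


def best_per_horizon(scores, horizons):
    """Map each prediction length to the model(s) with the lowest error; ties are all kept."""
    best = {}
    for j, horizon in enumerate(horizons):
        lowest = min(values[j] for values in scores.values())
        best[horizon] = [model for model, values in scores.items() if values[j] == lowest]
    return best


for model, mean in rank_by_mean(MAE):
    print(f"{model:<13} {mean:.4f}")
print(best_per_horizon(MAE, HORIZONS))
```

On the transcribed values, TSMixer has the lowest mean MAE (about 0.047), followed by FEDformer (about 0.055), and it attains the lowest MAE at every prediction length, sharing the 64-step minimum of 0.063 with TCN.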

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).