1. Introduction

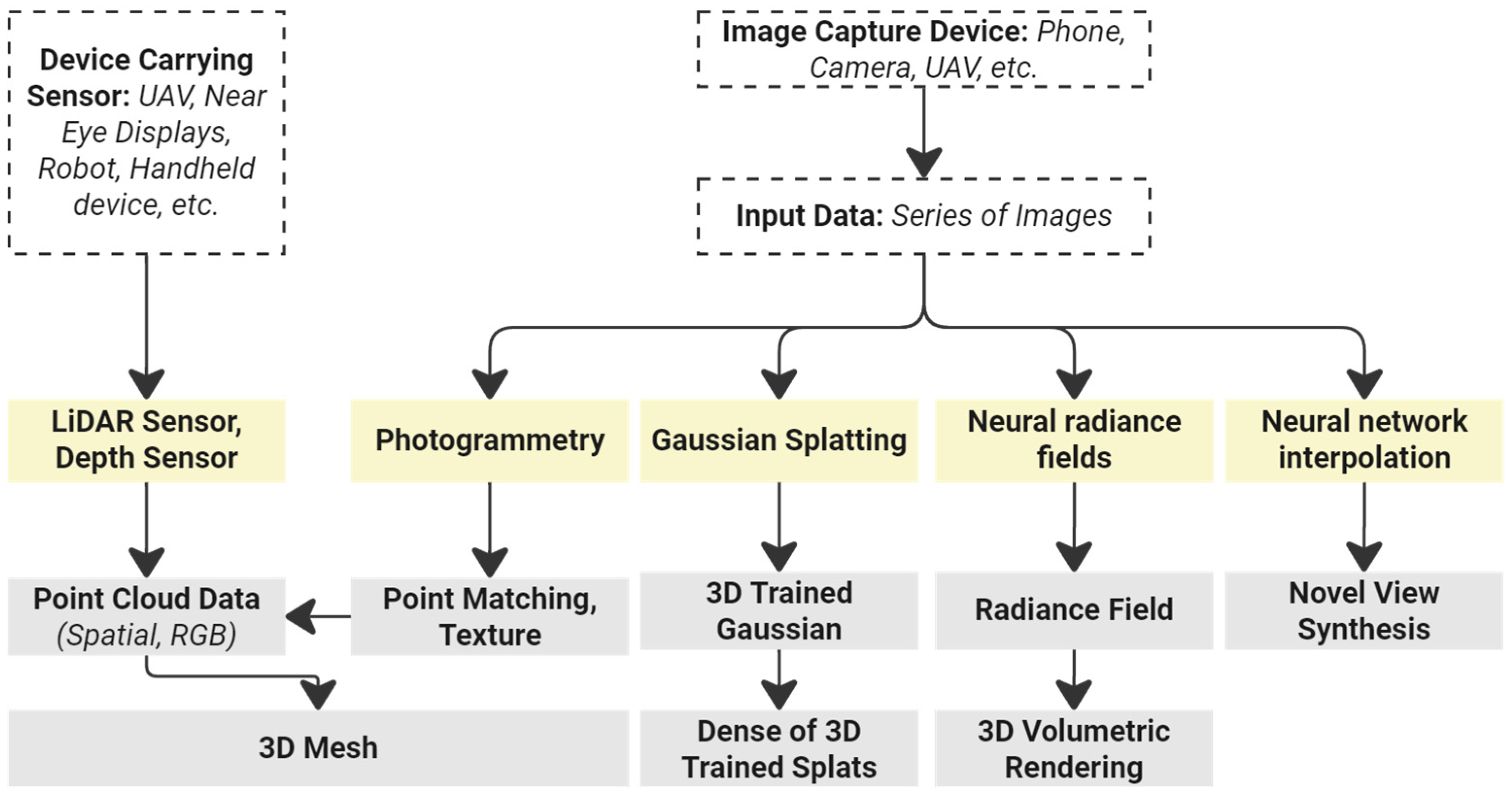

Three-dimensional (3D) reconstructions of real-world environments are valuable for many applications in architecture and urban planning, including urban and construction management, site surveying, heritage preservation, data simulation, and the modeling of urban and architectural spaces [1,2,3,4,5,6]. 3D reconstructions also support collaborative design and the development of digital twins. The process involves converting real-world data, such as images and spatial information, into a corresponding 3D digital replica within a virtual environment [2,3,7]. This virtual twin allows users to interact with and use the replicated spaces for various purposes. In the current era of urban development, digital twin technology is a key research focus for addressing urban challenges and enhancing urban management through smart development strategies [1,7]. Recent advances in computing and artificial intelligence have produced new methods and algorithms that improve the quality of 3D reconstruction, particularly in architecture and urbanism. These methods are being refined to deliver better results with cost-effective tools and software, increasing their accessibility and commercial viability. Common techniques for 3D reconstruction in architecture and urbanism include light detection and ranging (LiDAR), depth sensors, photogrammetry, neural radiance fields (NeRF), Gaussian splatting, and novel view synthesis with data interpolation [2] (Figure 1).

Among these methods, LiDAR and depth sensors capture spatial data directly, creating a point cloud in which each point includes spatial coordinates and RGB color information. These sensors are commonly integrated into devices with navigation capabilities, including unmanned aerial vehicles (UAVs), handheld devices, near-eye displays, and robots. The collected point-cloud data are then processed with specialized software to construct a 3D mesh [8]. However, collecting data with these tools demands specific expertise and access to specialized equipment, which leads to relatively high costs. Other methods instead rely on raster data as input, typically images captured by recording devices ranging from smartphones to UAVs. Photogrammetry, a method researched extensively for decades and now widely used, employs point matching to generate 3D meshes [4,9,10]. Because its output is a mesh, photogrammetry can be applied across many scenarios. However, it has limitations such as long processing times, potential over- or underestimation in the reconstructed data, and difficulties with reflective surfaces, water, glass, and dark-colored materials [4,8]. Advances in neural networks have led to methods such as NeRF [11,12] and neural network interpolation [13,14,15], which produce superior 3D reconstruction results compared with traditional techniques. However, these methods do not directly generate 3D meshes. Gaussian splatting, the most recent 3D reconstruction method, is currently being explored with the aim of achieving the highest-quality results to date [16,17].

Gaussian splatting is an advanced 3D reconstruction and rendering technique that represents 3D scenes with Gaussians and renders them in real time. Unlike traditional methods that rely on discrete point clouds, meshes, or voxel grids, Gaussian splatting represents a scene as a set of anisotropic Gaussian kernels distributed in space [16,18]. This approach allows nearly all viewpoints captured during data collection to be represented accurately in the final rendering. The collected data serve as reference images for the Gaussian splatting algorithm, enabling real-time rendering from various perspectives with high fidelity and detail. After training, each Gaussian represents a small region of the scene with associated attributes such as color, size, density, and spatial location [18]. A primary limitation of Gaussian splatting is its computational intensity, which necessitates powerful graphics processing units (GPUs), especially for rendering large-area scenes. Moreover, like NeRF and neural network interpolation methods, Gaussian splatting does not generate a 3D mesh. Nevertheless, recent studies consistently report higher 3D reconstruction quality for Gaussian splatting than for NeRF and photogrammetry, indicating its potential as a cutting-edge 3D reconstruction technology [16,19,20]. Neural network interpolation can achieve near-perfect novel view synthesis, producing highly realistic images from new perspectives. However, this method requires precise image data collection, which is typically feasible only for small scenes [13,21]. Hence, this study focuses solely on analyzing the outcomes of Gaussian splatting.
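To make the Gaussian representation described above more concrete, the following minimal sketch shows how a single pixel color can be accumulated from many projected Gaussians. It assumes the Gaussians have already been projected to screen space and applies front-to-back alpha blending, $C = \sum_i c_i \alpha_i \prod_{j<i} (1 - \alpha_j)$, where $\alpha_i$ is a Gaussian's opacity scaled by its 2D falloff at that pixel. The `Splat` container, the 0.99 alpha clamp, and the early-termination threshold are illustrative assumptions, not the 3DGS reference code.

```python
import math
from dataclasses import dataclass


@dataclass
class Splat:
    """Hypothetical container for one Gaussian already projected to the screen."""
    depth: float                         # view-space depth, used for sorting
    mean: tuple[float, float]            # 2D center in pixels
    cov: tuple[float, float, float]      # 2D covariance [[a, b], [b, c]] stored as (a, b, c)
    opacity: float                       # learned opacity in [0, 1]
    color: tuple[float, float, float]    # RGB in [0, 1]


def falloff(px: float, py: float, s: Splat) -> float:
    """Evaluate exp(-0.5 * d^T Sigma^-1 d) for the splat's 2D covariance."""
    a, b, c = s.cov
    det = a * c - b * b
    dx, dy = px - s.mean[0], py - s.mean[1]
    # Inverse of [[a, b], [b, c]] is [[c, -b], [-b, a]] / det.
    q = (c * dx * dx - 2.0 * b * dx * dy + a * dy * dy) / det
    return math.exp(-0.5 * q)


def shade_pixel(px: float, py: float, splats: list[Splat]) -> tuple[float, float, float]:
    """Front-to-back alpha compositing of depth-sorted splats at one pixel."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0                  # fraction of light not yet blocked
    for s in sorted(splats, key=lambda g: g.depth):   # nearest splat first
        alpha = min(0.99, s.opacity * falloff(px, py, s))
        for k in range(3):
            color[k] += transmittance * alpha * s.color[k]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:         # pixel is effectively opaque
            break
    return (color[0], color[1], color[2])


if __name__ == "__main__":
    red_front = Splat(1.0, (0.0, 0.0), (1.0, 0.0, 1.0), 0.5, (1.0, 0.0, 0.0))
    blue_back = Splat(2.0, (0.0, 0.0), (1.0, 0.0, 1.0), 1.0, (0.0, 0.0, 1.0))
    print(shade_pixel(0.0, 0.0, [blue_back, red_front]))
```

In the usage example, the half-transparent red splat in front contributes 0.5 to the red channel, and the blue splat behind it is attenuated by the remaining transmittance, contributing 0.495 to the blue channel. Sorting by depth is what makes the result independent of the input order.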

Some experts argue that the inability of Gaussian splatting to generate a 3D mesh poses a significant challenge for downstream applications, particularly virtual reality (VR), augmented reality (AR), and 3D modeling [16,19], which are vital to architecture and urban planning. To address this challenge, this study proposes holograms as a visualization application that extends the capabilities of Gaussian splatting. This application could serve as an ideal technological advancement, enabling broader and more efficient use across various domains. Hologram technology represents a state-of-the-art development in 3D visualization, enabling users to view and interact with 3D content directly without specialized equipment [22,23,24]. Holograms use light diffraction and stereoscopic visualization to create ultra-realistic replicas of objects in real-world environments. Recent progress has enabled holograms to be printed with CHIMERA hologram printers at speeds of up to 25 Hz for holograms with a 500 μm hogel resolution and 50 Hz for those with a 250 μm hogel resolution [22]. Similar to pixels in 2D images, hogels (holographic elements) are the smallest units of a hologram, each containing multiple viewpoints depending on the selected viewing angle. Previous studies have shown that hologram visualization can be integrated seamlessly into architectural and urban projects, enhancing immersive and interactive design processes and enabling collaborative decision-making based on digital twins of real projects [23]. One key advantage of holograms as visualization tools for the 3D reconstruction output of Gaussian splatting is that they do not require a 3D mesh as input data. Instead, holograms rely on a stereoscopic visualization mechanism that aligns well with the continuous, volumetric radiance field representation used in Gaussian splatting.

Since late 2023, substantial research has focused on Gaussian splatting [16,25]. The main goals of these studies include optimizing the Gaussian splatting algorithm to improve output quality, reduce processing time, and minimize GPU requirements. Research has also aimed to expand the applications of Gaussian splatting across various fields and to integrate it with emerging technologies to enhance its utility and effectiveness [19,25]. While some studies have explored applications of Gaussian splatting in urban and architectural domains [26,27], none have fully integrated it with downstream applications to establish a complete workflow. In this study, experiments were conducted that involved collecting data from large real-world spaces, processing the original data with the Gaussian splatting method, and using the final rendering output to compute and produce holograms. In addition to Gaussian splatting, photogrammetry was applied to the same data source. Photogrammetry was chosen owing to its established commercial development, accessibility, and widespread application in architecture and urban planning [6,10,28,29]. By comparing the outcomes of Gaussian splatting and photogrammetry, this study aims to explore broader applications of Gaussian splatting in architecture and urban planning across various contexts. The research objectives were as follows:

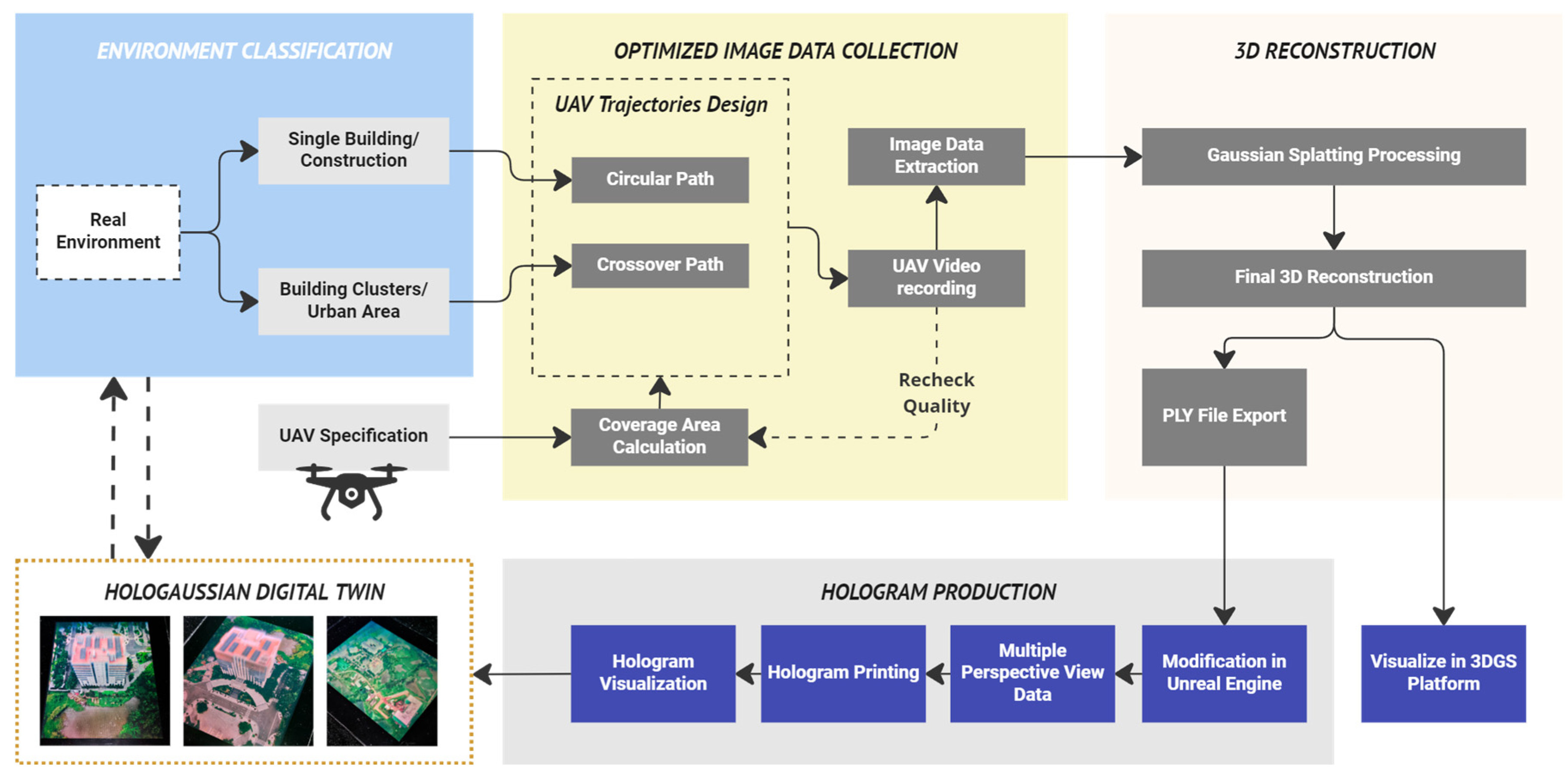

(O1) to validate the usage of Gaussian splatting for urban and architectural 3D reconstruction;

(O2) to demonstrate optimized UAV data collection for Gaussian splatting in different scenarios; and

(O3) to develop a workflow for creating HoloGaussian digital twins of real urban and architectural environments.

2. Materials and Methods

2.1. Experiment Environments

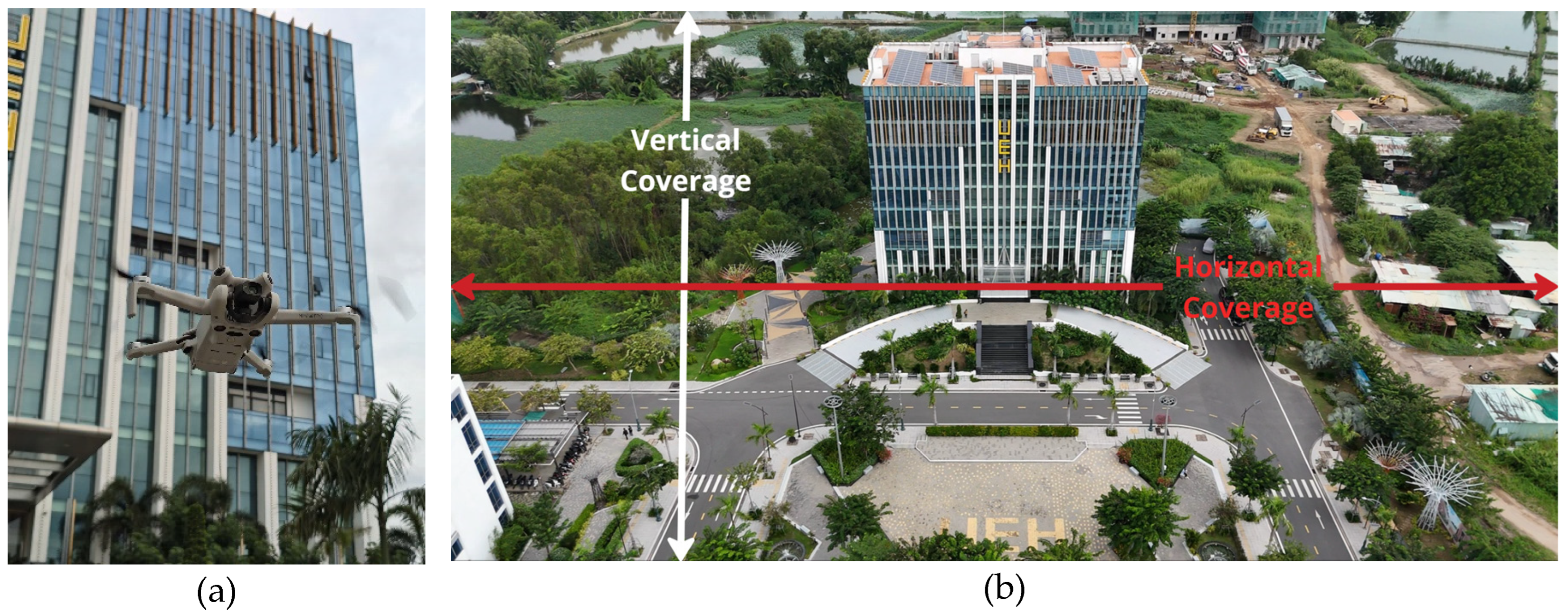

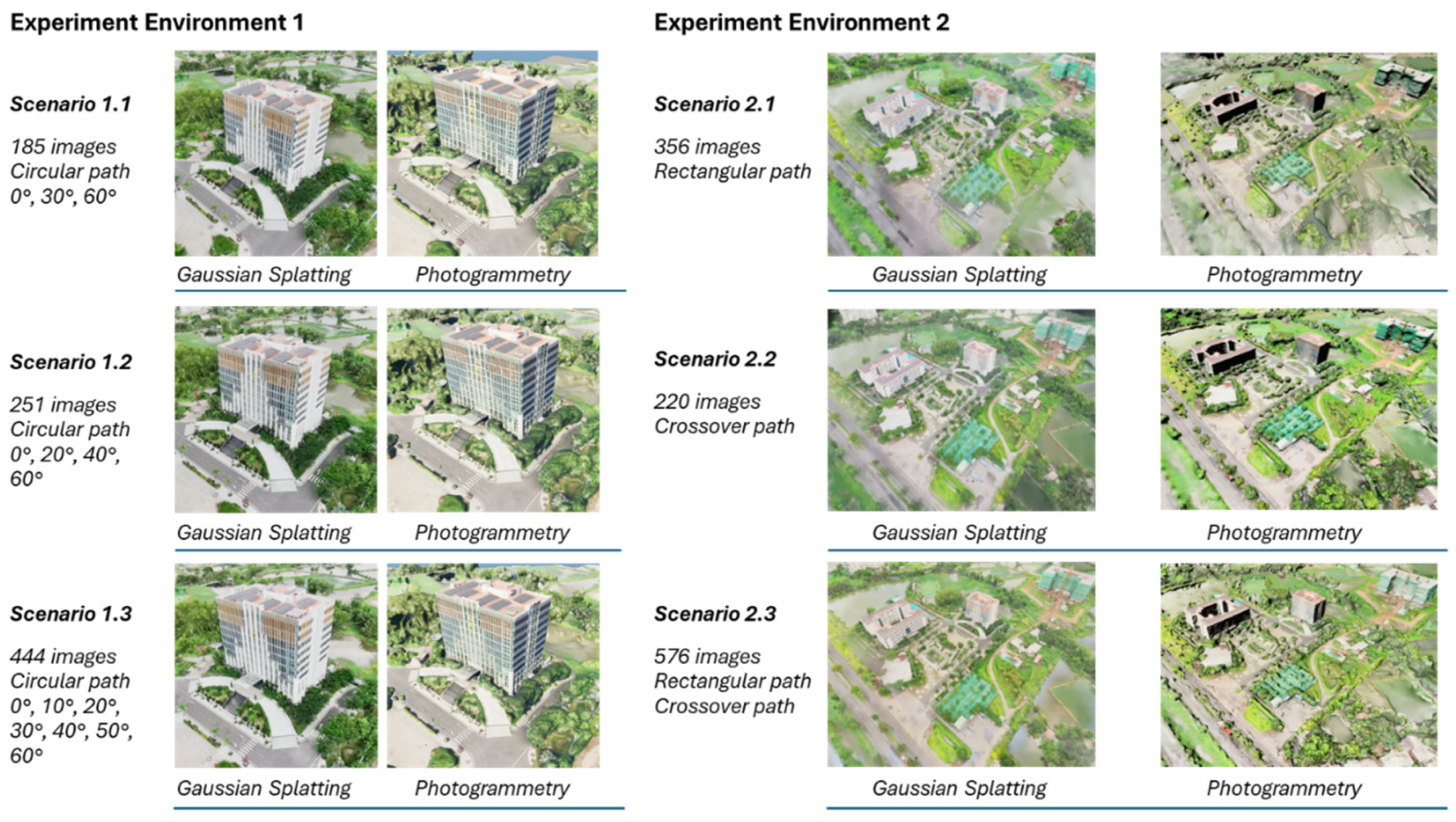

The experiments were conducted on the campus of the University of Economics, Ho Chi Minh City (UEH), where UAV data collection was fully authorized. Choosing the campus gave the research team better control over environmental variables and allowed flexible scheduling of experiments. The campus comprises a main building measuring 44 m × 32.7 m × 37.2 m, a classroom block, various technical infrastructure, open spaces such as squares, parking lots, and sports fields, and dormitories currently under construction. Considering the construction and landscape features of the survey area, the study site was divided into two experimental environments. Experimental environment 1 included only the campus headquarters building and its immediate surroundings, whereas experimental environment 2 encompassed a larger area of 300 m × 400 m, including the entire campus and all buildings and amenities within it (Figure 2).

In experiment environment 1, the moderate size and height of the studied building made it a suitable model for high-rise buildings in urban settings, and the sparse surrounding structures gave the research team greater flexibility to test different UAV-based data collection techniques. In experiment environment 2, the diverse landscape of buildings, green spaces, and water surfaces posed a challenge for comparing the quality of the 3D reconstructions generated by photogrammetry and Gaussian splatting, given the difficulty of reconstructing these elements accurately.

2.2. 3D Gaussian Splatting and Rendering Devices

Numerous Gaussian splatting solutions have been proposed for various optimization applications [19]. For the experiments in this study, however, the research team selected the 3D Gaussian splatting (3DGS) technique of Kerbl et al. (Figure 3) [18]. This choice was made because it is a foundational study and one of the first widely recognized Gaussian splatting solutions, and it has gained prominence over the past year owing to its high stability and adaptability across multiple operating systems. This work also served as the basis for many subsequent advances in Gaussian splatting [19,25].

First, a structure-from-motion (SfM) point cloud is generated from the image sequence, which is captured to provide multiview stereo (MVS) coverage. Next, the 3D Gaussians are trained using the algorithm's adaptive density control, which optimizes the scene representation by adjusting the density of Gaussians to capture details accurately. Finally, the complete 3D scene reconstruction is projected and rendered in real time from multiple perspectives, and the results are displayed in the 3DGS interface. The computing environment for Gaussian splatting was built following a previous study [18] and the associated GitHub repository [30], which provide the essential information and codebase for implementing and experimenting with Gaussian splatting. 3DGS allows real-time rendering at 1080p resolution at 100 frames per second (fps). The computer used for Gaussian splatting in this study had an AMD Ryzen 9 5900HX central processing unit (CPU), an AMD Radeon™ GPU (GPU 1), an NVIDIA GeForce RTX 3080 GPU (GPU 2), and 32 GB of random-access memory (RAM). After testing various scenarios with the collected data, the research team concluded that, to optimize processing time and achieve the best possible outcome within the GPU's capabilities, the number of input images should be capped at 800. This cap directly shaped the experimental implementation plan of this study.
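The adaptive density control step mentioned above decides, at regular intervals during training, whether each Gaussian should be kept, duplicated, split, or removed. The following sketch illustrates that decision rule for a single Gaussian. The threshold values are assumptions chosen to resemble the defaults we believe the public 3DGS code uses (a positional-gradient threshold, a scene-extent fraction separating small from large Gaussians, a minimum opacity, and a 1.6 scale divisor for split children); they should be treated as tunable parameters, and the `GaussianStats` container is hypothetical.

```python
from dataclasses import dataclass

# Assumed, tunable thresholds (illustrative values, not authoritative defaults).
GRAD_THRESHOLD = 0.0002      # mean view-space positional gradient that triggers densification
PERCENT_DENSE = 0.01         # fraction of scene extent separating "small" from "large" Gaussians
MIN_OPACITY = 0.005          # Gaussians below this opacity are pruned
SPLIT_SCALE_DIVISOR = 1.6    # scale reduction applied to each child of a split


@dataclass
class GaussianStats:
    """Hypothetical per-Gaussian statistics gathered between densification steps."""
    mean_grad: float   # average positional gradient magnitude
    max_scale: float   # largest axis length of the Gaussian
    opacity: float     # current opacity in [0, 1]


def density_action(g: GaussianStats, scene_extent: float) -> str:
    """Return 'prune', 'clone', 'split', or 'keep' for one Gaussian."""
    if g.opacity < MIN_OPACITY:
        return "prune"                 # nearly transparent: remove it
    if g.mean_grad >= GRAD_THRESHOLD:
        if g.max_scale <= PERCENT_DENSE * scene_extent:
            return "clone"             # small Gaussian in an under-reconstructed region
        return "split"                 # large Gaussian covering too much detail
    return "keep"


def split_child_scale(scale: float) -> float:
    """Scale of each of the two smaller Gaussians that replace a split one."""
    return scale / SPLIT_SCALE_DIVISOR


if __name__ == "__main__":
    extent = 10.0
    for stats in (GaussianStats(0.001, 0.05, 0.5), GaussianStats(0.001, 0.5, 0.5),
                  GaussianStats(0.0, 0.5, 0.001), GaussianStats(0.00001, 0.5, 0.5)):
        print(stats, "->", density_action(stats, extent))
```

Densification adds Gaussians where the optimizer keeps pushing positions (high gradients), while pruning removes Gaussians that contribute almost nothing, which is one reason the number of Gaussians, and hence GPU memory use, grows with scene size and image count.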

Dense 3D Gaussian splat products can be inspected with real-time rendering in the 3DGS interface (Figure 2). However, the research team identified a significant challenge for the practical application of Gaussian splatting: real-time rendering is limited to the 3DGS platform. Once the results are exported as polygon file format (PLY) files for use in other software, such as Unreal Engine or Blender, the real-time rendering capability is lost. Furthermore, PLY files opened in alternative applications such as Unreal Engine display notably lower quality than the continuous, high-quality rendering achieved within the 3DGS environment.
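To clarify what an exported PLY file contains, the sketch below decodes one vertex record into display-ready values. It assumes the attribute layout we believe the public 3DGS code writes: position (x, y, z), zeroth-order spherical-harmonic color coefficients (f_dc_0 to f_dc_2), higher-order coefficients (f_rest_*), opacity stored as a logit, log-scales (scale_0 to scale_2), and a rotation quaternion (rot_0 to rot_3, assumed to be in w, x, y, z order). Any viewer must apply these conversions, and evaluate the view-dependent f_rest_* terms, to approximate the 3DGS rendering; this sketch decodes only the view-independent base color, and `EXAMPLE_VERTEX` is a made-up record.

```python
import math

SH_C0 = 0.28209479177387814  # zeroth-order spherical-harmonic constant, 1 / (2 * sqrt(pi))


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def decode_vertex(v: dict) -> dict:
    """Convert one stored 3DGS PLY vertex (assumed layout) into usable values."""
    # Base color from the DC spherical-harmonic term, clamped to [0, 1].
    rgb = tuple(min(1.0, max(0.0, 0.5 + SH_C0 * v[f"f_dc_{i}"])) for i in range(3))
    scale = tuple(math.exp(v[f"scale_{i}"]) for i in range(3))   # stored as log-scale
    q = [v[f"rot_{i}"] for i in range(4)]                        # quaternion, (w, x, y, z) assumed
    norm = math.sqrt(sum(c * c for c in q))
    rotation = tuple(c / norm for c in q)                        # normalize to a unit quaternion
    return {
        "position": (v["x"], v["y"], v["z"]),
        "rgb": rgb,                        # view-independent color only; f_rest_* ignored
        "opacity": sigmoid(v["opacity"]),  # stored as a logit
        "scale": scale,
        "rotation": rotation,
    }


# Hypothetical vertex record used for illustration.
EXAMPLE_VERTEX = {
    "x": 1.0, "y": 2.0, "z": 3.0,
    "f_dc_0": 0.0, "f_dc_1": 0.0, "f_dc_2": 0.0,
    "opacity": 0.0,
    "scale_0": 0.0, "scale_1": math.log(2.0), "scale_2": 0.0,
    "rot_0": 2.0, "rot_1": 0.0, "rot_2": 0.0, "rot_3": 0.0,
}

if __name__ == "__main__":
    print(decode_vertex(EXAMPLE_VERTEX))
```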

2.3. DJI Mini 4 Pro UAV

UAVs have become a popular and easily accessible means of collecting spatial data, serving as a practical alternative to remote sensing when tasks require close proximity to the ground or to structures. Owing to their flexibility in configuring flight trajectories and experiments, UAVs can be used in a wide range of experimental environments, particularly in urban areas [9,10,31,32]. The device used in this experiment was the DJI Mini 4 Pro, a mini-UAV well suited to combining aerial and terrestrial close-range recording as well as performing flight tasks [9,32] (Figure 4). To ensure efficient data processing for Gaussian splatting, the image data were collected sequentially using a scheme that guaranteed full MVS coverage of each experimental environment, capturing all perspectives necessary for accurate and comprehensive 3D reconstruction. The acquired image data must be processed appropriately to avoid excessive redundancy: accumulating irrelevant data, particularly data from outside the experimental environment, places unnecessary strain on computational resources during the rendering and visualization phases. The UAV camera has a fixed aperture of f/1.7 and recorded video of the experimental environment at 60 fps to collect raster data. The focal length of the camera was fixed at 24 mm, and the video was recorded at a resolution of 1920 × 1080 px. To optimize the data collection process, the UAV coverage area was calculated from the camera's field of view (FOV). Although various sources report different values for this parameter, the team adopted the values from [33]: a horizontal FOV of 69.7° and a vertical FOV of 42.8° (Table 1).

The UAV flight altitude and camera angle were adjusted to match each predetermined waypoint and flight path in the experimental plan. In this study, UAV raster data collection used different methods tailored to each experimental environment.

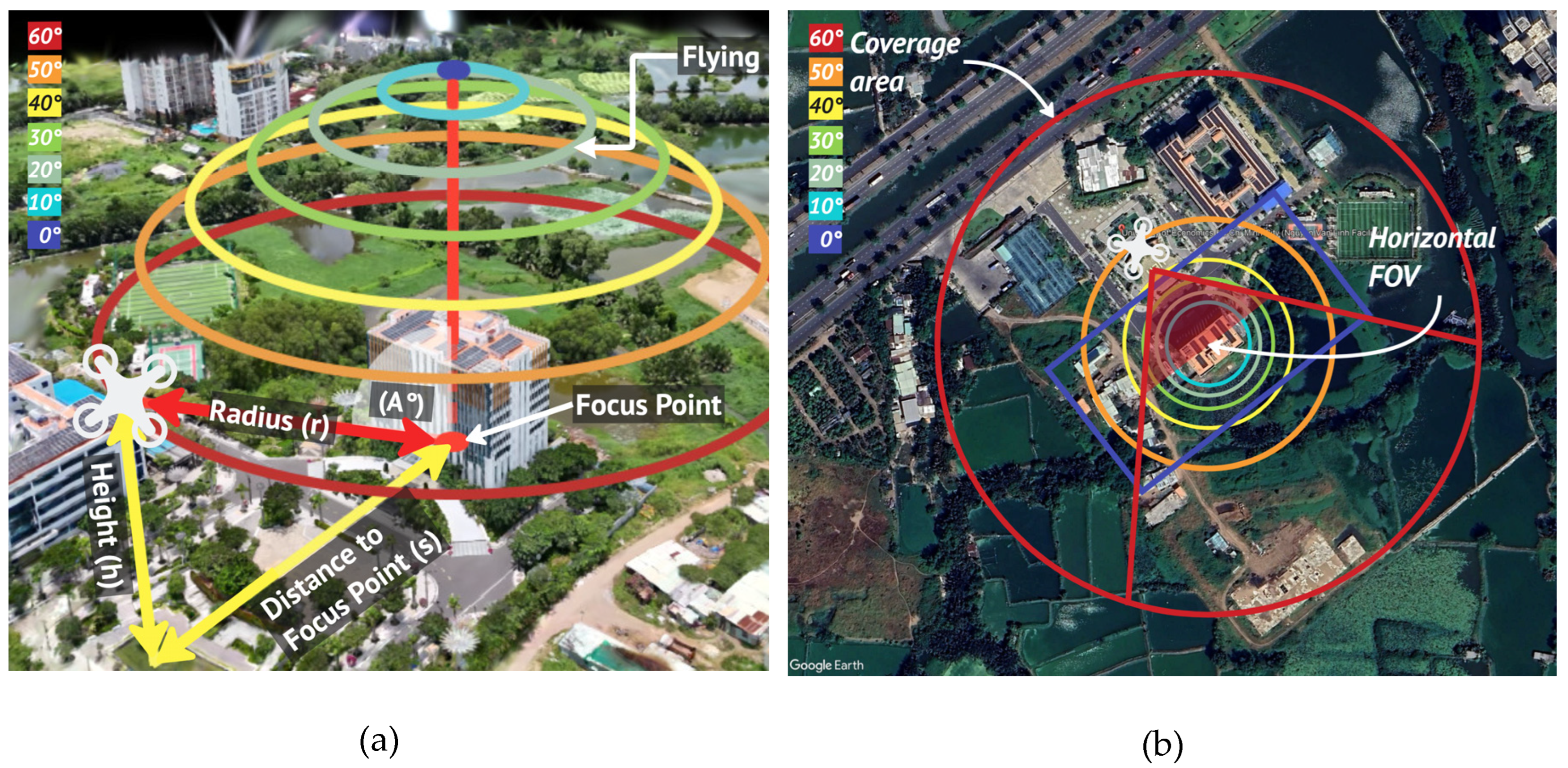

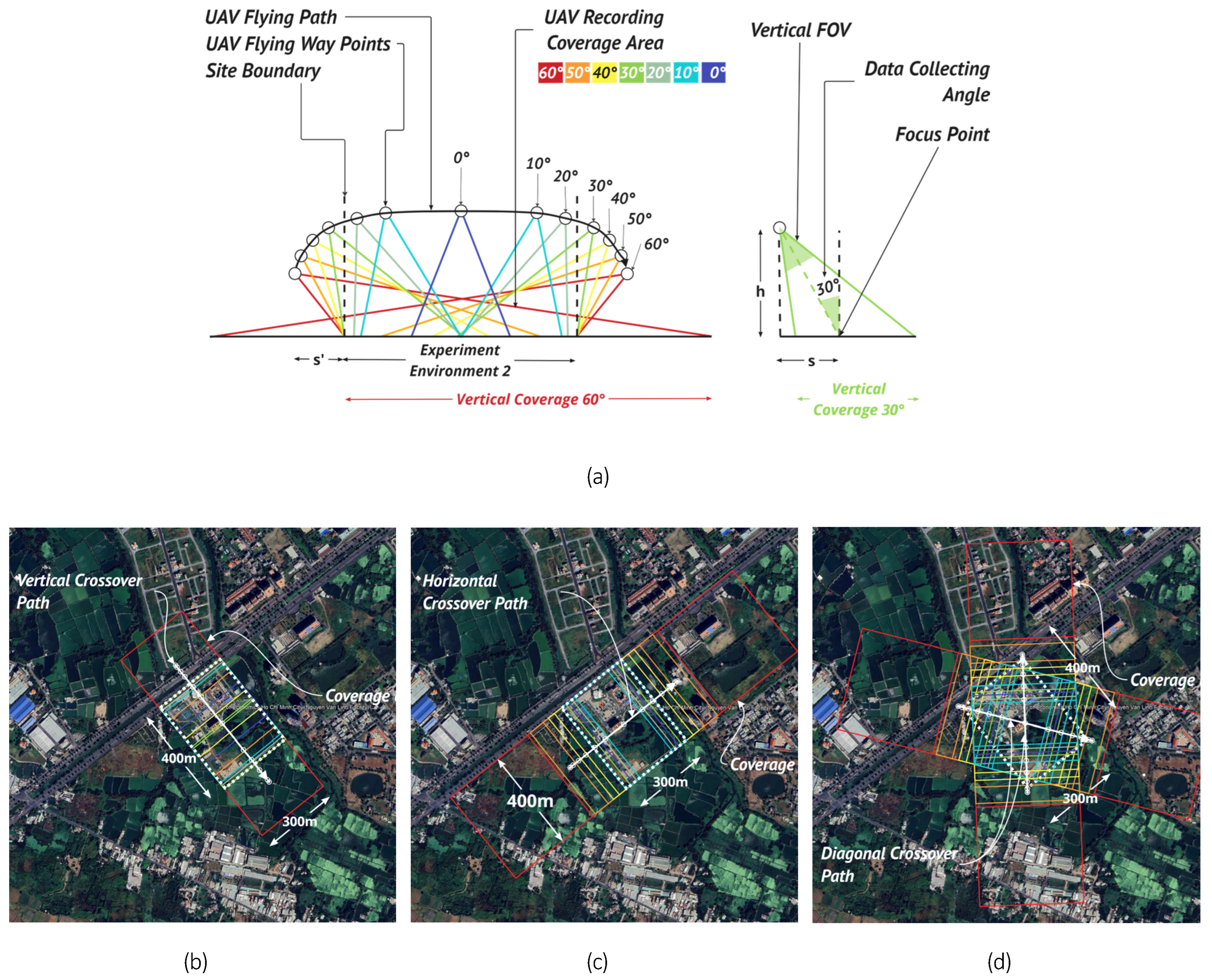

For experiment environment 1, the study focused on the campus headquarters, which has a height of approximately 35 m. The researchers devised UAV flight paths that followed circular trajectories forming a half-sphere around the building (Figure 5a). This method ensured optimal coverage and viewing angles around the structure and prevented data gaps. The half-sphere was centered at the midpoint of the building's ground floor, had a radius of 150 m, and was divided into seven rotational angles corresponding to distinct flight paths (Table 2). For each flight route or waypoint, the UAV camera setup was determined by two parameters: the radius (r), which is the distance between the camera and the focal point and, in this case, also the radius of the half-sphere; and the angle (A), which is the angle at the camera's focal point on the ground between the line passing through the camera and the line perpendicular to the ground (Figure 5a). Using the angle (A) and radius (r), the researchers computed the horizontal coverage (CH) and vertical coverage (CV) from the UAV camera's FOV parameters using the following equations:

$$C_H = 2r\tan\left(\frac{FOV_H}{2}\right) \tag{1}$$

$$C_V = 2r\tan\left(\frac{FOV_V}{2}\right) \tag{2}$$

where $FOV_H$ and $FOV_V$ are the horizontal and vertical FOV of the camera, respectively; $C_H$ and $C_V$ are the horizontal and vertical coverage lengths (CH and CV) corresponding to these FOVs; and $r$ is the distance between the camera and the focal point.
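The coverage calculation can be sketched in a few lines. This assumes an ideal pinhole camera and measures the footprint on a plane perpendicular to the optical axis at distance r, which is the form given by Equations (1) and (2); the function name is hypothetical.

```python
import math


def coverage_lengths(r: float, fov_h_deg: float, fov_v_deg: float) -> tuple[float, float]:
    """Return (C_H, C_V): footprint width and height in meters at distance r.

    Assumes a pinhole camera and a target plane perpendicular to the optical
    axis at distance r, so each length is 2 * r * tan(FOV / 2).
    """
    c_h = 2.0 * r * math.tan(math.radians(fov_h_deg) / 2.0)
    c_v = 2.0 * r * math.tan(math.radians(fov_v_deg) / 2.0)
    return c_h, c_v


if __name__ == "__main__":
    # Radius and FOV values stated for experiment environment 1.
    c_h, c_v = coverage_lengths(150.0, 69.7, 42.8)
    print(f"C_H = {c_h:.1f} m, C_V = {c_v:.1f} m")
```

With r = 150 m and the adopted FOV values, the footprint is about 209 m wide and 118 m high, comfortably larger than the 44 m × 32.7 m plan of the main building; the width-to-height ratio of roughly 16:9 matches the 1920 × 1080 px video format.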

By calculating the coverage area, the optimal radius for recording from all angles was determined, ensuring that the whole of experimental environment 1 fell within the data collection range while avoiding excessive irrelevant data (Figure 5b). The radius (r) was fixed at 150 m in experiment environment 1 (Table 2). Equations (3) and (4), which give the horizontal distance (s) and the height (h), follow from the properties of a right triangle whose hypotenuse is the radius (r), fixed at 150 m, and in which the recording angle (A) is known. The flight route length (C) is the total distance the UAV travels while recording one dataset; for the circular paths, C is the circumference of the UAV's flight path, as given by Equation (5). The number of images extracted from each flight path's video was chosen so that consecutive images overlapped by more than 90%. The research team used the UAV to record videos while following predefined flight paths, and images were extracted at intervals corresponding to the UAV's movement, with one image selected every 7 m of travel. Limiting the number of images kept the computational load on the graphics card during Gaussian splatting within manageable limits, which was necessary given the constraints of the data-processing equipment available for the experiment. The one exception is the camera angle A = 0°, for which the UAV captures only a single bird's-eye image directly above the building.

$$s_A = r_A \sin A \tag{3}$$

$$h_A = r_A \cos A \tag{4}$$

$$C_A = 2\pi s_A \tag{5}$$

where $r_A$ is the distance between the camera and the focal point in the flight path of angle A; $s_A$ is the horizontal distance between the UAV and the focal point in the circular flight path at angle A; $h_A$ is the height of the UAV in the flight path at angle A; and $C_A$ is the total length of the circular flight path of angle A in experiment environment 1.
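The circular-path geometry of Equations (3) to (5) and the 7 m extraction interval can be combined into a small planning helper. This is a sketch under stated assumptions: the path is an ideal circle at constant radius, and the image count is rounded up (the ceiling rule is our assumption), so the counts may differ slightly from those obtained from the actual flights. The function and constant names are hypothetical.

```python
import math

RADIUS_M = 150.0          # half-sphere radius r used in experiment environment 1
IMAGE_INTERVAL_M = 7.0    # one extracted frame per 7 m of travel


def circular_path(angle_deg: float, r: float = RADIUS_M) -> dict:
    """Geometry of one circular flight path at recording angle A (measured from the vertical)."""
    a = math.radians(angle_deg)
    s = r * math.sin(a)        # Eq. (3): horizontal distance to the focal point
    h = r * math.cos(a)        # Eq. (4): height above the focal point
    c = 2.0 * math.pi * s      # Eq. (5): length of the circular path
    # A = 0 degrees collapses the circle to a point: a single bird's-eye image.
    n_images = 1 if angle_deg == 0 else math.ceil(c / IMAGE_INTERVAL_M)
    return {"A": angle_deg, "s": s, "h": h, "C": c, "images": n_images}


if __name__ == "__main__":
    for angle in range(0, 70, 10):
        p = circular_path(angle)
        print(f"A={p['A']:>2} deg  s={p['s']:6.1f} m  h={p['h']:6.1f} m  "
              f"C={p['C']:6.1f} m  images={p['images']}")
```

For example, the 60° path lies about 130 m from the focal point horizontally at a height of 75 m, giving a circumference of roughly 816 m.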

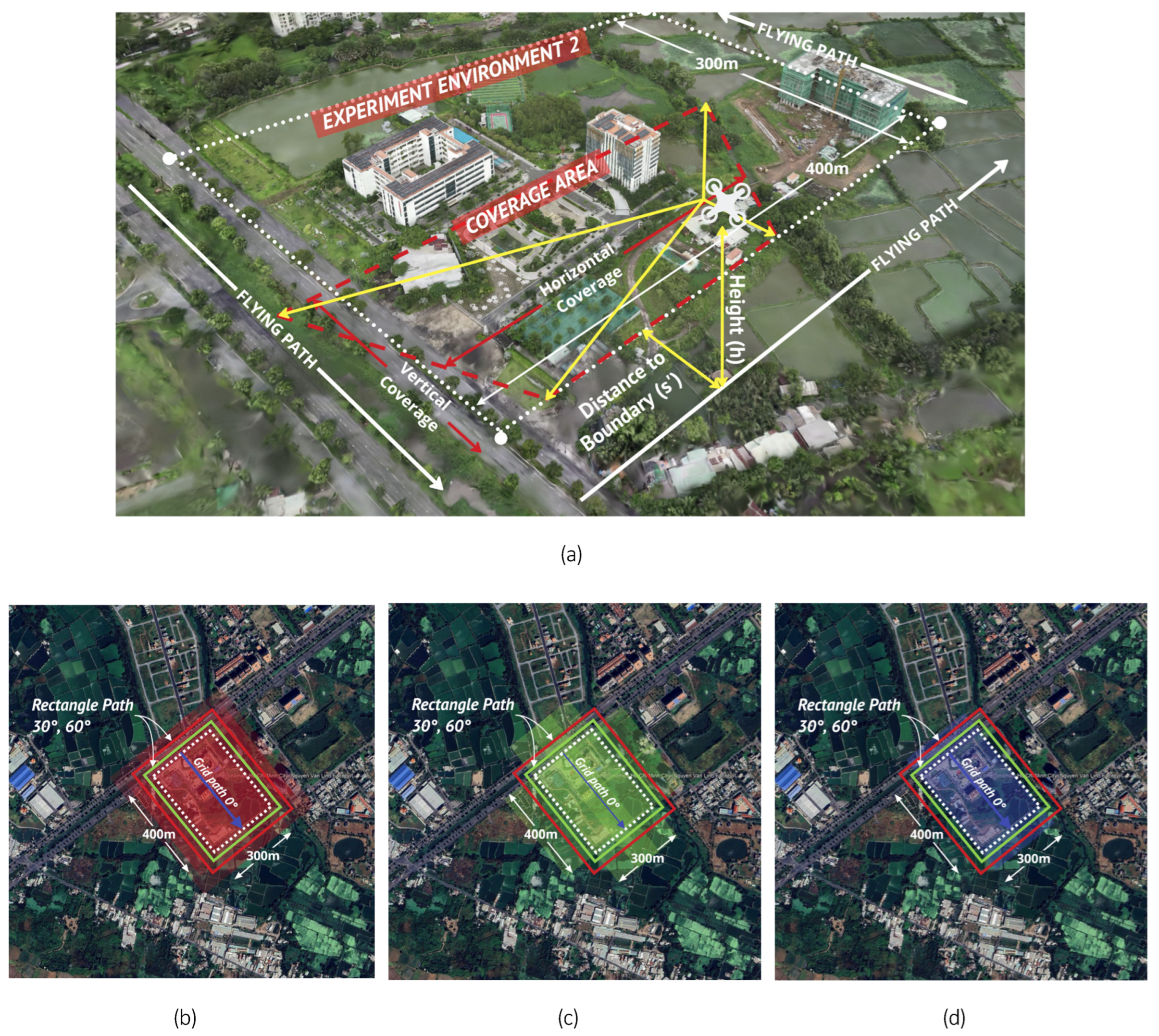

For experiment environment 2, data had to be collected over a significantly larger area than in the first environment: a 300 m × 400 m site that included all campus facilities. Applying the circular route method in this scenario was problematic because the UAV had to be positioned far from the recording area. As a result, image quality was compromised, small objects could not be captured clearly, and the flight route length increased substantially. During the preliminary research stage, the research team initially used the grid path and double grid path methods to collect data for Gaussian splatting. Although these conventional methods are used to collect aerial image data over extensive areas, they did not yield successful 3D reconstructions. The team observed that Gaussian splatting requires panoramic image data, rather than views focused on specific areas, to define a 3D reconstruction space effectively. To ensure comprehensive coverage, the research team therefore devised two alternative UAV flight path approaches: rectangular and crossover paths.

In the rectangular path method, the UAV flew along paths parallel to the boundary of the experimental area (Figure 6a). The radius (r) was determined from the coverage capability of the UAV's camera to ensure efficient image data collection (Figure 6b–d). The UAV's position is characterized by two main parameters: s', the horizontal distance between the UAV's flight path and the boundary of the research area, projected onto the ground; and h, the UAV's flight altitude. The distance s' is calculated using Equation (6). For experiment environment 2 with the rectangular path method, the radius (r) was 216 m for recording angles of 30° and 60° and 150 m for the 0° angle (Table 3). To achieve over 90% image overlap, one image was extracted from the UAV video per 10 m of movement along the rectangular flight paths, with this interval determined from the desired overlap and coverage requirements.

where $FOV_V$ is the vertical FOV of the camera; $r_A$ is the distance between the camera and the focal point at recording angle A; $s'_A$ is the horizontal distance between the UAV at recording angle A and the boundary of experiment environment 2, where a positive value indicates that the UAV is outside the boundary of the experimental area and a negative value indicates that it is inside; $s_A$ is the horizontal distance between the UAV and the focal point of the camera at recording angle A; and $h_A$ is the height of the UAV at recording angle A.
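The over-90% overlap targets for the circular (7 m interval, r = 150 m) and rectangular (10 m interval, r = 216 m) paths can be sanity-checked with a rough estimate. The sketch assumes the camera translates sideways, perpendicular to its optical axis, between extracted frames while facing the target, and that the scene lies roughly at distance r; the overlap is then about 1 − d / C_H for an extraction interval d. These assumptions, and the function names, are ours; the estimate ignores terrain relief and camera rotation along curved paths.

```python
import math


def footprint_width(r: float, fov_h_deg: float = 69.7) -> float:
    """Horizontal coverage C_H = 2 r tan(FOV_H / 2) at distance r."""
    return 2.0 * r * math.tan(math.radians(fov_h_deg) / 2.0)


def sideways_overlap(interval_m: float, r: float, fov_h_deg: float = 69.7) -> float:
    """Approximate overlap between consecutive frames for sideways camera motion."""
    return max(0.0, 1.0 - interval_m / footprint_width(r, fov_h_deg))


if __name__ == "__main__":
    cases = [("circular path, environment 1", 7.0, 150.0),
             ("rectangular path, environment 2", 10.0, 216.0)]
    for label, d, r in cases:
        print(f"{label}: r = {r:.0f} m, step = {d:.0f} m, "
              f"overlap = {sideways_overlap(d, r):.1%}")
```

Under these assumptions, both configurations give roughly 97% overlap, which is consistent with the stated target of more than 90%.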

In the crossover path method, determining the UAV flight path is more complex because the UAV traverses the experimental environment rather than circling it. The crossover path comprised a vertical path, a horizontal path, and two diagonal paths. To improve the efficiency of image data collection, the UAV followed a curved flight trajectory, adjusting both its altitude and its camera angle at different waypoints (Figure 7a). The radius (r) at each data collection angle (A) must be calculated to ensure optimal coverage (Figure 7b–d). The research team computed the horizontal distance (s') from the UAV to the nearest ground-projected boundary and the flight altitude (h) for each camera angle. For the crossover paths in experimental environment 2, the radius (r) was 216 m for the vertical crossover path and 290 m for both the horizontal and diagonal crossover paths (Table 3). As with the rectangular paths, over 90% image overlap was achieved by extracting one image from the UAV video per 10 m of movement along the crossover flight paths, with the exact interval determined by the desired overlap and coverage requirements.

2.4. Photogrammetry

Owing to advances in camera technology and computer vision, photogrammetry has become a widely used tool in architecture and urbanism. Various applications, both for computers (such as Agisoft Metashape, RealityCapture, and Meshroom) and for smartphones (such as Polycam, Kiri Engine, and Scaniverse), enable users to create 3D meshes from image sequences or videos. Photogrammetry is recognized as a cost-effective and flexible 3D reconstruction method that provides results of sufficient quality for a range of scenarios [8,28]. In this study, the experimental team applied photogrammetry to multiple scenarios using the same data source as that used for Gaussian splatting. The software used was the latest version of Agisoft Metashape available at the time of the experiment, and the final 3D meshes produced by photogrammetry were viewed in the Agisoft Metashape interface. To allow adjustments, comparisons, and integration with downstream software such as Unreal Engine and Blender, the 3D meshes can be exported as Wavefront OBJ files, which are widely supported for further processing and visualization.

2.5. Unreal Engine and External Plugins

The research team used Unreal Engine 5.3 as middleware for the post-processing steps performed before hologram printing. This choice was influenced by several factors: the engine is freely accessible, its level of detail (LOD) technique handles large mesh files efficiently, and its real-time ray-tracing capability enables high-speed rendering, making it well suited to generating input data for hologram production [34,35,36]. The outputs of Gaussian splatting processed with 3DGS are viewable only within the 3DGS interface, and at the time of the research, the use of 3DGS for post-processing tasks in architectural and urban fields was limited. To overcome this limitation, the research team exported PLY files from the 3DGS environment and used Unreal Engine for post-processing before hologram printing (Figure 8a). Because Unreal Engine 5.3 does not natively open these PLY files, the team used the LUMA plugin (version 0.4.1) [37]. When converting Gaussian splatting PLY files into blueprints with the LUMA plugin, users can choose from four styles: (1) with environmental lighting and temporal anti-aliasing, (2) with environmental lighting but without temporal anti-aliasing, (3) influenced by the Unreal Engine environment with temporal anti-aliasing, and (4) influenced by the Unreal Engine environment without temporal anti-aliasing. These options provide flexible rendering configurations for the specific visual and performance requirements of a project. OBJ files exported from Agisoft Metashape by the photogrammetry method can be imported directly into Unreal Engine without additional plugins (Figure 8b).

To capture scenes and create multi-perspective view data for hologram printing, the research team used a custom plugin designed for full-parallax tabletop holograms. Within Unreal Engine, this plugin generates an image sequence from viewpoints forming a hemisphere above the 3D model, with adjustable parameters such as the center of the half-sphere, the hologram FOV, and the capture distance [23]. These parameters allow precise control over the visual characteristics of the hologram, ensuring careful data acquisition for high-quality hologram production.
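The plugin's internal sampling scheme is not described here, so the following is only a hypothetical illustration of the capture geometry: cameras placed on a spherical cap above the model center at a fixed capture distance, limited to the 120° viewing angle stated for the tabletop holograms, with every camera aimed at the center. The ring and azimuth counts are arbitrary assumptions; an actual hologram capture would likely derive its view grid from the hogel layout and printer optics.

```python
import math


def hemisphere_viewpoints(center, distance, viewing_angle_deg=120.0, rings=5, per_ring=24):
    """Hypothetical camera positions on a spherical cap above `center`.

    Returns a list of (position, unit view direction) pairs. The cap spans
    viewing_angle_deg (full cone angle) around the vertical axis, and the
    first camera sits at the zenith looking straight down.
    """
    cx, cy, cz = center
    half = math.radians(viewing_angle_deg) / 2.0
    views = [((cx, cy, cz + distance), (0.0, 0.0, -1.0))]   # zenith view
    for i in range(1, rings + 1):
        polar = half * i / rings                    # angle from the vertical axis
        for j in range(per_ring):
            azimuth = 2.0 * math.pi * j / per_ring
            x = cx + distance * math.sin(polar) * math.cos(azimuth)
            y = cy + distance * math.sin(polar) * math.sin(azimuth)
            z = cz + distance * math.cos(polar)
            # Unit vector from the camera toward the center (|position - center| == distance).
            direction = ((cx - x) / distance, (cy - y) / distance, (cz - z) / distance)
            views.append(((x, y, z), direction))
    return views


if __name__ == "__main__":
    views = hemisphere_viewpoints((0.0, 0.0, 0.0), distance=10.0)
    print(len(views), "viewpoints; lowest camera height:",
          round(min(p[2] for p, _ in views), 3))
```

With the default arguments, this produces one zenith view plus five rings of 24 views, the lowest ring sitting 60° from the vertical.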

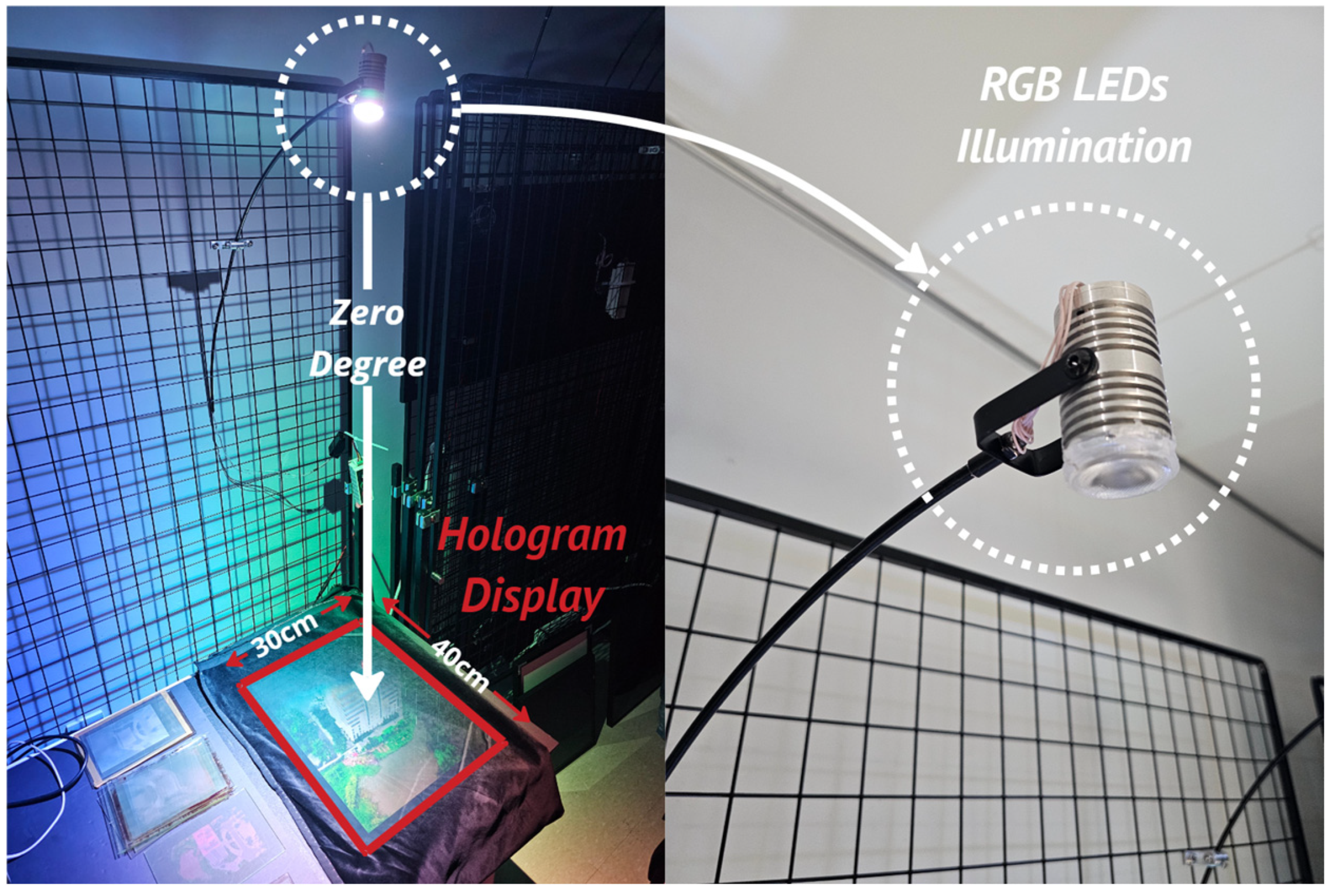

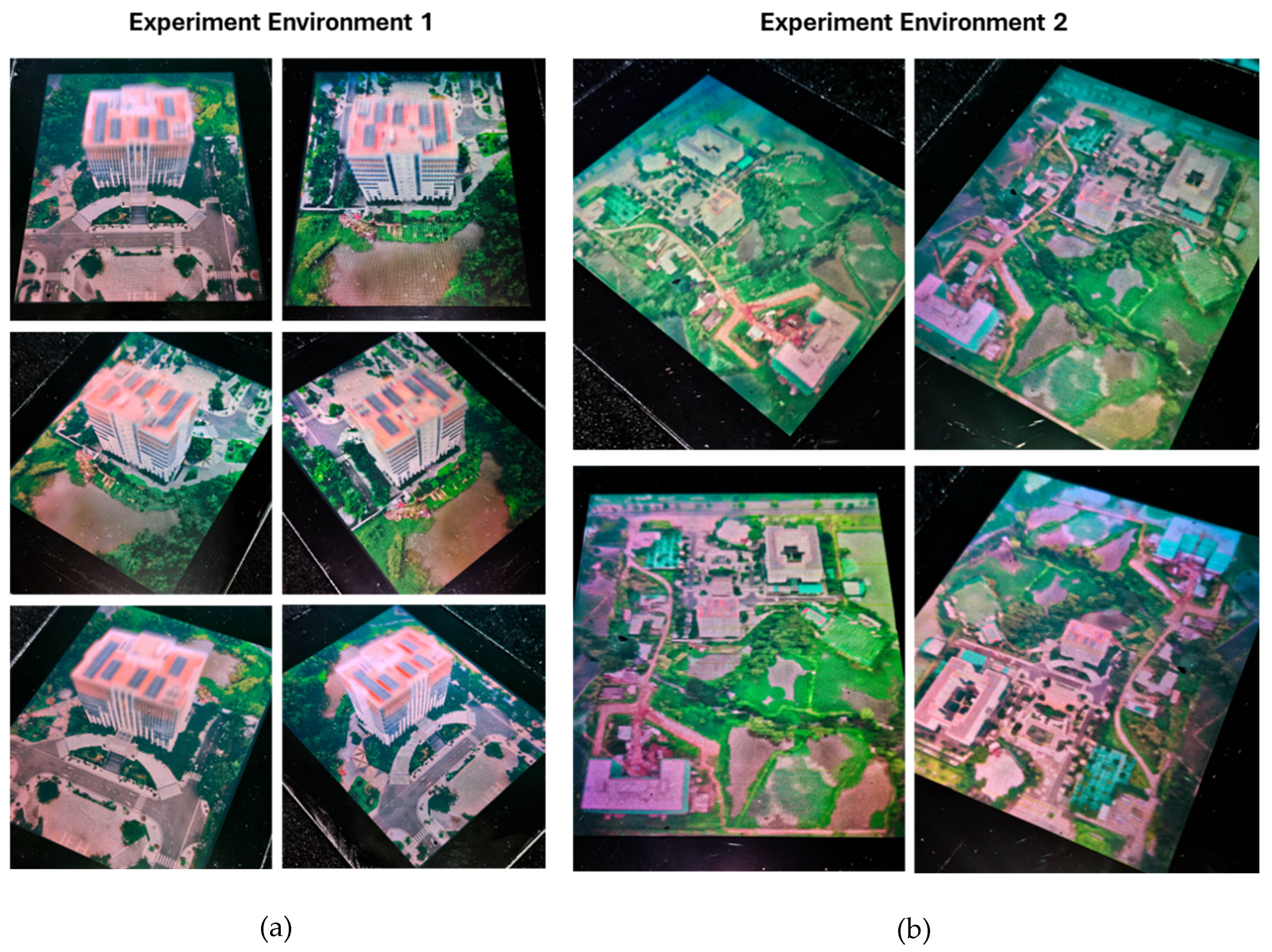

2.6. Tabletop Hologram Production

Tabletop holography offers a bird's-eye visual representation of the object under observation and serves as an alternative to conventional methods such as physical 3D models, VR, and AR in architecture and urban planning. It is used for architectural projects, expansive landscapes, and urban settings [23]. Holography is an optimal downstream visualization tool for Gaussian splatting because it takes rendered novel views as input, eliminating the need for 3D mesh generation. This compatibility allows Gaussian splatting results to be integrated seamlessly into holographic representations. The tabletop holograms generated in this study are zero-degree full-parallax holograms with a 360° FOV and a 120° viewing angle. Using CHIMERA hologram printing technology, a full-parallax hologram measuring 30 cm × 40 cm with a 250 μm resolution can be produced in approximately 8.8 h. Two tabletop holograms were produced in this study, one for each experimental environment.

The tabletop holograms were recorded on U04 silver halide holographic glass plates. After printing, the holograms underwent chemical post-processing and were sealed for protection. To display the final zero-degree tabletop hologram optimally, a suitable RGB LED lighting system must be installed [22,23], as shown in Figure 9.

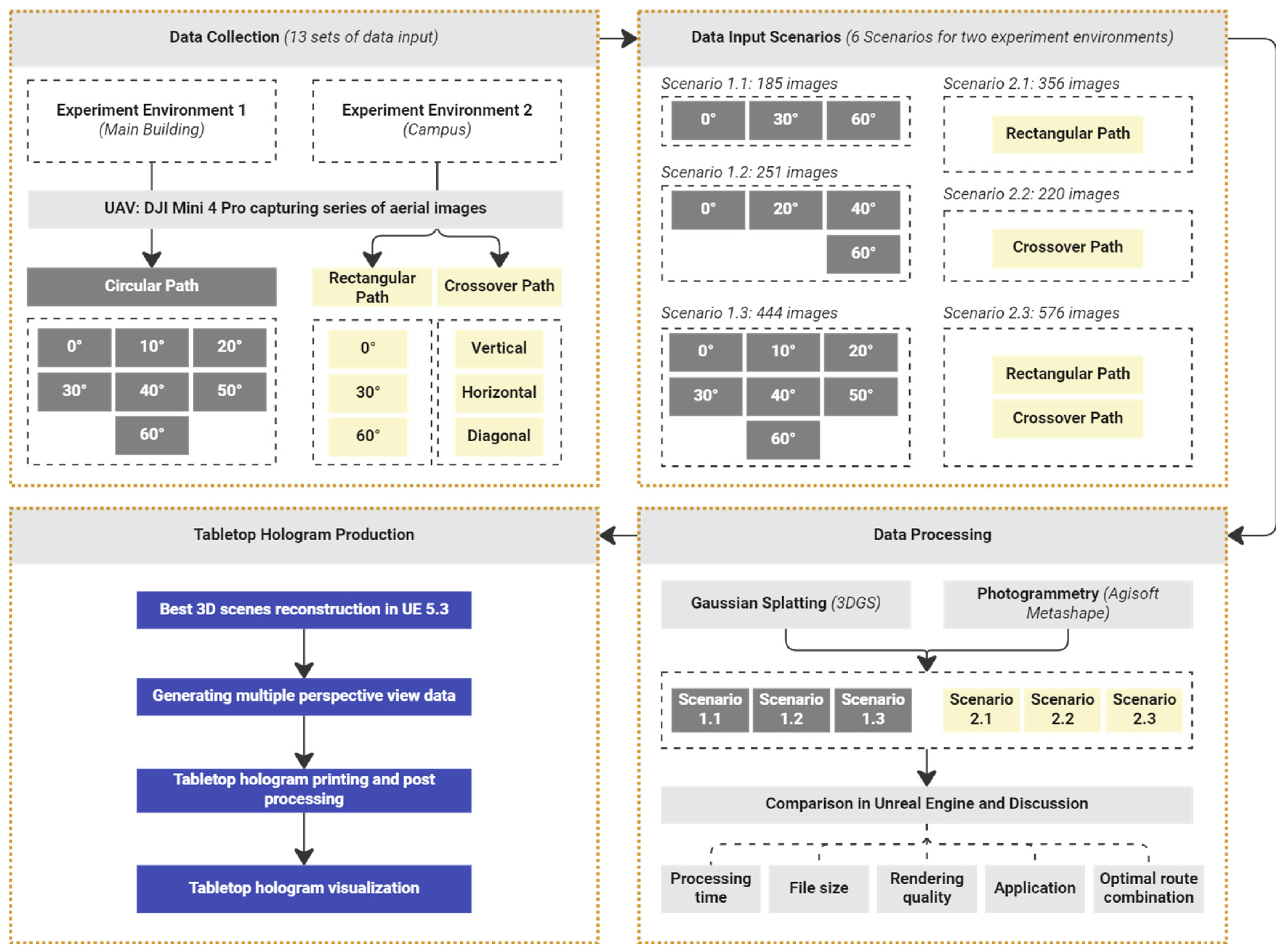

2.7. The Experiment Methodology

The experiment was designed to produce results that address the proposed research objectives. It comprised four main phases: (1) data collection, in which spatial data were gathered using UAVs and other tools; (2) data input scenarios, in which different input scenarios were created from the collected data; (3) data processing, in which the inputs were processed with Gaussian splatting and photogrammetry; and (4) tabletop hologram production, in which the final 3D outputs were used to create holographic visualizations (Figure 10).

Data collection: A total of 13 datasets were gathered using the mini-UAV. In experiment environment 1, seven datasets were recorded at angles ranging from 0° to 60° using the circular path technique. In experiment environment 2, six datasets were collected using rectangular and crossover paths.

Data input scenarios: The research team compiled the 13 collected datasets into six scenarios, three for each experimental environment. These scenarios were designed to determine the level of data collection required for 3D reconstruction of acceptable quality. The number of images extracted from the UAV videos was limited to 800 per scenario owing to GPU processing constraints, as detailed in Section 2.2.

Data processing: Using identical data sources for all scenarios in both experimental environments, the research team performed 3D reconstructions with Gaussian splatting (using 3DGS) and photogrammetry (using Agisoft Metashape). The results of both techniques were saved in formats compatible with Unreal Engine for further analysis. The team then evaluated the quality and potential of the Gaussian splatting 3D reconstructions against the well-established standard of photogrammetry using the following criteria:

Processing time: Duration of each method for completing the 3D reconstruction.

File size: The size of the output files generated by each method.

Reconstruction quality: A qualitative evaluation of the accuracy and visual fidelity of 3D visualization.

Application: Practical applications provided by each method, particularly in architecture and urban planning.

Optimal route combination: Evaluation of the most effective data collection scenarios for each method based on the findings.

Tabletop hologram production: After identifying the optimal Gaussian splatting 3D reconstruction output in each experimental environment, the research team created tabletop holograms to evaluate the feasibility and potential of integrating these technologies. The process involved converting the 3D models into holographic content using Unreal Engine and specialized hologram-capture plugins. The holographic visualizations were then printed with the CHIMERA hologram printer, yielding a physical representation of the 3D reconstruction output. This phase made it possible to assess how well the Gaussian splatting data translate into holographic form, focusing on accuracy, depth perception, clarity, color, level of detail, and suitability for architectural and urban applications. Through this work, the team sought to investigate the potential of combining Gaussian splatting and hologram technology as a novel visualization tool for real-world architectural and urban projects.

3. Results

3.1. Data Collection Using the UAV and Data Input Scenarios for Both Experiment Environments

In addition to providing a new and unique dataset, the original field data collection highlighted practical challenges. Although the survey area was relatively open, the research team had to choose the timing carefully to ensure high-quality image data. Both experimental environments were surveyed on overcast days with minimal sunlight to reduce lighting variability. Data collection was conducted on weekends to minimize the presence of people and vehicles that could disrupt the 3D reconstruction results.

For experimental environment 1, data collection followed the structured methodology outlined in the previous section and comprised seven distinct datasets recorded at various angles relative to the building (Figure 11). The number of images extracted during post-processing matched the calculated value for each circular flight path. The UAV maintained a constant speed along each planned flight route while recording video, and images were extracted at an average spacing of 7 m along the route, ensuring a uniform data distribution for subsequent analysis and 3D reconstruction. As anticipated by the research team, the preplanned UAV flight paths enabled relatively accurate data collection. However, mini UAVs are susceptible to wind at higher altitudes, which caused slight deviations from the planned centers of the circular flight routes, and the number of mission points on each flight route was not sufficiently dense. Despite these technological constraints of the UAV, the collected data aligned closely with the pre-established flight plan. The seven image datasets collected using the circular path method were grouped into three scenarios: (0°, 30°, 60°), (0°, 20°, 40°, 60°), and (0°, 10°, 20°, 30°, 40°, 50°, 60°).
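Because each route was flown at a constant speed, the 7 m image spacing corresponds to a fixed stride between video frames. The sketch below, a minimal illustration rather than the authors' tooling, picks evenly spaced frame indices for one closed circular route. It assumes the 60 fps frame rate listed in Table 1 and a constant ground speed; the function name `frame_indices` and the 10 m/s example speed are hypothetical (Table 1 reports only a 6–16 m/s range).

```python
def frame_indices(route_length_m: float, speed_mps: float,
                  fps: float = 60.0, spacing_m: float = 7.0) -> list[int]:
    """Frame indices to keep from the video of one closed route.

    Assumes a constant ground speed, so distance along the route is
    proportional to frame number. Kept frames are spread evenly over the
    lap so that consecutive stills are roughly spacing_m apart.
    """
    if speed_mps <= 0:
        raise ValueError("speed must be positive")
    n_images = max(1, round(route_length_m / spacing_m))  # at least one still
    total_frames = int(route_length_m / speed_mps * fps)   # frames in one lap
    if n_images == 1 or total_frames == 0:
        return [0]                                         # e.g. the 0° nadir shot
    stride = total_frames / n_images                       # frames between stills
    return [int(k * stride) for k in range(n_images)]


if __name__ == "__main__":
    # 60° ring of Table 2 (816.2 m) at a hypothetical 10 m/s
    idx = frame_indices(816.2, speed_mps=10.0)
    print(len(idx), idx[:3])  # 117 [0, 41, 83]
```

The returned indices could then be handed to any video decoder; for example, OpenCV's `cv2.VideoCapture` can seek to a frame by setting `cv2.CAP_PROP_POS_FRAMES` before calling `read()`. Spreading the stills evenly over the whole lap, rather than stepping a fixed number of frames, keeps the last image from crowding the first on a closed ring.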

In experiment environment 2, the six datasets were collected using both the rectangular path and crossover path methods, which were then divided into three scenarios. The first scenario involved 3D reconstruction solely based on the data from the rectangular path. The second scenario focused on 3D reconstruction using only the data from the crossover path. The third scenario utilized a combination of data from both methods for 3D reconstruction.

Figures 11 and 12 show selected images from the datasets used for 3D reconstruction, illustrating the range of shooting angles. The images do not represent the complete datasets but visually demonstrate the diversity of capture angles during data collection. The scenarios varied in the number of images used and the angles recorded around the structures. The objective was to determine an optimal configuration that balances high-quality 3D reconstruction against a minimal number of images, ensuring efficient data utilization while maintaining the quality of the final Gaussian splatting 3D reconstruction.

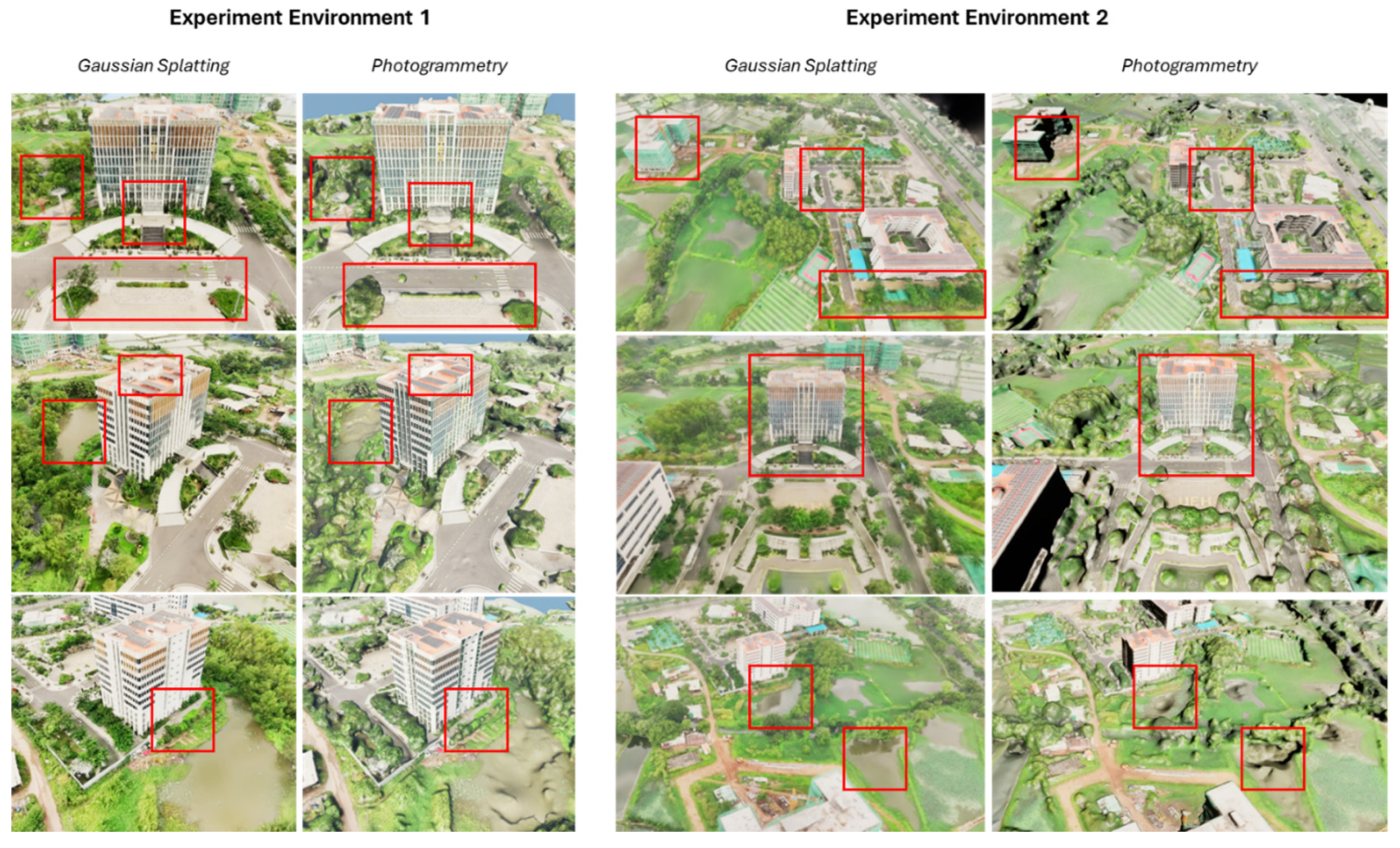

3.2. 3D Reconstruction Using Gaussian Splatting and Photogrammetry

All scenarios generated from the same image data source in the two experiment environments underwent 3D reconstruction using both Gaussian splatting and photogrammetry. The reconstruction times and file sizes for Gaussian splatting with 3DGS and for photogrammetry with Agisoft Metashape are detailed in Table 4. These factors are crucial when evaluating the feasibility and suitability of each method for specific applications in the subsequent research phase. The resulting 3D model files were compared for quality within the Unreal Engine environment.

In terms of processing time, in experiment environment 1 the duration of both methods increased with the volume of data analyzed in each scenario. In that environment, photogrammetry was considerably faster, with Gaussian splatting taking approximately 1.5–2.3 times as long (41–62 min versus 18–41 min); in experiment environment 2, the processing times of the two methods were approximately the same. The Gaussian splatting outputs were also significantly larger, approximately 4–14 times the file size of the photogrammetry outputs. A notable finding from the Gaussian splatting reconstructions is that a larger file size corresponds to denser splats, indicating a more comprehensive capture of detail as the number of input images increases. In contrast, the photogrammetry products in experiment environment 1 maintained a nearly constant file size and number of faces across all three scenarios. This implies that, within the scope of this study, adding image data or introducing different angles had minimal impact on the final photogrammetric 3D reconstruction.
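The ratios above can be checked directly against Table 4. The short script below hard-codes the Table 4 values (the names `TABLE4` and `ratios` are ours, not from the study) and reports, for each scenario, how many times longer Gaussian splatting took and how many times larger its output was than the photogrammetry result.

```python
# Values transcribed from Table 4:
# scenario -> (3DGS time [min], 3DGS size [MB], Metashape time [min], Metashape size [MB])
TABLE4 = {
    "1.1": (41, 477, 18, 40),
    "1.2": (45, 508, 23, 42),
    "1.3": (62, 577, 41, 41),
    "2.1": (56, 831, 64, 151),
    "2.2": (40, 470, 36, 48),
    "2.3": (49, 589, 45, 158),
}


def ratios(row: tuple[int, int, int, int]) -> tuple[float, float]:
    """Return (time ratio, size ratio) of Gaussian splatting to photogrammetry."""
    gs_min, gs_mb, pg_min, pg_mb = row
    return gs_min / pg_min, gs_mb / pg_mb


if __name__ == "__main__":
    for scenario, row in TABLE4.items():
        t, s = ratios(row)
        print(f"{scenario}: 3DGS time x{t:.2f}, file size x{s:.1f}")
```

For experiment environment 1 the time ratios fall between about 1.5 and 2.3, and across all six scenarios the file-size ratios range from about 3.7 (scenario 2.3) to 14.1 (scenario 1.3).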

When comparing rendering quality, the 3D reconstructions produced by Gaussian splatting consistently preserved significantly more detail than photogrammetry from the same dataset across all scenarios. Photogrammetry struggled with reflective surfaces, water bodies, and intricate details such as light poles, steps, manhole covers, water tanks, tensile structures, shadows, and vegetation, whereas Gaussian splatting captured all these features with relatively high fidelity and particularly excelled at rendering water-surface reflections from various viewing angles. Because photogrammetry algorithms must construct a 3D mesh, they often distort fine details, particularly in the absence of close-range image data. In experiment environment 2, where UAV flight missions were conducted at higher altitudes over an extensive recording area, photogrammetry had difficulty performing the 3D reconstruction, and the Gaussian splatting model provided a significantly higher level of detail (Figure 13).

Table 4.

Performance comparison between Gaussian splatting and photogrammetry in terms of processing time and file size.


| Scenario | Recording angles / path | Collected images | Gaussian splatting (3DGS) processing time (min) | Gaussian splatting (3DGS) file size (MB) | Photogrammetry (Agisoft Metashape) processing time (min) | Photogrammetry (Agisoft Metashape) file size (MB) |
|---|---|---|---|---|---|---|
| **Experiment environment 1** | | | | | | |
| 1.1 | 0°, 30°, 60° | 185 | 41 | 477 | 18 | 40 |
| 1.2 | 0°, 20°, 40°, 60° | 251 | 45 | 508 | 23 | 42 |
| 1.3 | 0°, 10°, 20°, 30°, 40°, 50°, 60° | 444 | 62 | 577 | 41 | 41 |
| **Experiment environment 2** | | | | | | |
| 2.1 | Rectangular path | 356 | 56 | 831 | 64 | 151 |
| 2.2 | Crossover path | 220 | 40 | 470 | 36 | 48 |
| 2.3 | Rectangular and crossover paths | 576 | 49 | 589 | 45 | 158 |

To determine the most efficient dataset in each research environment, the research team assessed whether the collected data volume was sufficient to generate a high-quality 3D reconstruction, balancing data quantity against model detail so that fidelity is preserved without excessive data collection. In experiment environment 1, the photogrammetry reconstructions of scenarios 1.1, 1.2, and 1.3 showed no significant differences in file size, face count, or overall quality and detail. A similar trend was observed in reconstruction quality across the three scenarios using Gaussian splatting. These results indicate that the dataset variations did not produce significant differences in reconstruction quality across the three scenarios in experiment environment 1. Based on the outcomes of both the Gaussian splatting and photogrammetry approaches, the research team concluded that 3D reconstruction in this study is entirely feasible with data collected at angles of 0°, 30°, and 60° and a total dataset of 185 images, and that the resulting quality was nearly optimal given the available data. This indicates that an efficiently structured image data collection is crucial for achieving high-quality 3D reconstruction (Figure 14).

In experiment environment 2, despite the relatively small number of images, the 3D reconstruction results obtained with the crossover path method were significantly superior to those obtained with the rectangular path method for both Gaussian splatting and photogrammetry. Combining the image data from both methods did not notably improve on the crossover method alone, and the execution times and file sizes of scenarios 2.1 and 2.3 were considerably higher than those of scenario 2.2. The research team therefore judged the crossover method to be the optimal image data collection method in the selected environment for this study, for 3D reconstruction via either Gaussian splatting or photogrammetry (Figure 14).

Within the scope of this study, following the outlined experimental process, the 3D reconstruction with the best quality in each experimental environment was selected as input data for hologram printing. In experimental environment 1, because the quality of the Gaussian splatting model did not differ significantly across the three scenarios, the research team selected the product of scenario 1.1, which used 185 images (Figure 15a and Video S1). For experiment environment 2, the 3D reconstruction model of scenario 2.2, with 220 images, was chosen as the input data for the hologram (Figure 15b and Video S2). For each research environment, a 30 cm × 40 cm holographic display was printed. The main building of the university campus in experiment environment 1 was displayed at a scale of 1:300, whereas experiment environment 2 was displayed at a scale of 1:1000. In this experimental study, the input data for hologram printing were captured directly in Unreal Engine to produce a full-parallax tabletop hologram with a 120° FOV around the model [23].
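The plate size and scales above determine how much of each site a hologram shows. The arithmetic below converts the 30 cm × 40 cm plate into real-world extents at 1:300 and 1:1000, and estimates the hogel grid under the assumption that the 250 μm resolution reported in the Conclusion is the hogel pitch of the print; the helper names are hypothetical.

```python
def plate_extent_m(plate_cm: tuple[float, float], scale_denominator: int) -> tuple[float, ...]:
    """Real-world extent in metres shown by a plate printed at 1:scale_denominator."""
    # plate side (cm) x scale gives real-world cm; divide by 100 for metres
    return tuple(side * scale_denominator / 100 for side in plate_cm)


def hogel_grid(plate_cm: tuple[float, float], hogel_um: float = 250.0) -> tuple[int, ...]:
    """Hogels per side, ASSUMING hogel_um is the hogel pitch (hypothetical reading)."""
    # 1 cm = 10,000 um
    return tuple(round(side * 10_000 / hogel_um) for side in plate_cm)


PLATE_CM = (30.0, 40.0)  # plate size stated in the text

if __name__ == "__main__":
    print(plate_extent_m(PLATE_CM, 300))   # (90.0, 120.0): environment 1 at 1:300
    print(plate_extent_m(PLATE_CM, 1000))  # (300.0, 400.0): environment 2 at 1:1000
    print(hogel_grid(PLATE_CM))            # (1200, 1600)
```

Under these assumptions, each plate spans about 90 m × 120 m of experiment environment 1 and 300 m × 400 m of experiment environment 2, written as a 1200 × 1600 grid of hogels.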

By examining the hologram products in both experimental environments, the research team confirmed that the input data derived from Gaussian splatting were effectively incorporated into the hologram. This integration produced a high-quality urban digital twin of the project and campus. The hologram clearly captures and displays various aspects of the building, along with additional details such as plants, water features, shadows, lighting poles, and tensile structures. Videos S1 (experiment environment 1) and S2 (experiment environment 2) demonstrate these two zero-degree full-parallax holograms.

4. Discussion

This section revisits the research objectives in light of the results. Each experimental result was evaluated against the study's aims, offering insights into the efficacy of the methods used, the challenges encountered, and the relevance of the findings.

(O1) Validation of the use of Gaussian splatting for urban and architectural 3D reconstruction: During the 3D reconstruction process using 3DGS and primary data from a large area in this study, it was observed that Gaussian splatting poses challenges for large-scale environments owing to optimization constraints and the absence of user-friendly data collection and processing platforms. Moreover, Gaussian splatting tends to generate larger 3D reconstruction files as the data volume grows, even without a noticeable enhancement in reconstruction quality. However, with a more standardized workflow, Gaussian splatting holds significant promise for architecture and urban planning. Importantly, the quality of reconstruction achieved with Gaussian splatting exceeded that of photogrammetry using the same datasets. Following the research, the team identified three critical issues that need attention for the broader application of Gaussian splatting in large-scale environments. First, the image data collection process should be standardized and precisely executed in the field to optimize data for Gaussian splatting and reduce unnecessary data redundancy. Second, an enhanced version of the 3DGS platform should be developed to offer users more flexibility in configuring camera views and editing 3D models. Lastly, the integration of 3DGS with advanced visualization applications and tools for creating virtual environments and lighting is essential to enhance the platform’s overall capabilities.

(O2) Demonstration of optimized UAV data collection for Gaussian splatting in different scenarios: In this study, the research team conducted 3D reconstructions in two experimental environments, one with a single building and the other with multiple buildings along with traffic and landscape elements. Various data collection scenarios were tested. The experiments show that the Gaussian splatting method is highly effective when the collected image data provide a panoramic view of the scene. Effective data collection methods include circular and crossover flight paths. For a single building, the circular method proved particularly efficient because the circular UAV flight paths formed a half-sphere around the building, capturing comprehensive data from above. Even with data collected at only three angles (0°, 30°, and 60°), the image data were sufficient for successful 3D reconstruction using Gaussian splatting. For aerial data collection in large environments, the research team recommends crossover path methods, adapted to the specific circumstances. One limitation of the crossover path is that the UAV flight paths must be calculated carefully to ensure accurate coverage and optimal data collection across the entire area.
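The half-sphere produced by the circular flight paths can be sketched in a few lines. Assuming, as in Table 2, a sphere of radius r = 150 m centred on the building, a data-collection angle A measured from the vertical (so h = r cos A and s = r sin A), and one image about every 7 m along each ring, the code below generates camera positions whose heading and gimbal pitch aim each shot at the centre. The `Waypoint` structure and the aiming rule are illustrative assumptions, not the mission format or planning software used in the study.

```python
import math
from dataclasses import dataclass


@dataclass
class Waypoint:
    """One camera position (hypothetical structure, not a DJI or Litchi format)."""
    x: float        # metres east of the hemisphere centre
    y: float        # metres north of the hemisphere centre
    z: float        # metres above the hemisphere centre
    heading: float  # degrees clockwise from north, facing the centre
    pitch: float    # gimbal pitch in degrees, negative = below the horizon


def ring_waypoints(angle_deg: float, radius_m: float = 150.0,
                   spacing_m: float = 7.0) -> list[Waypoint]:
    """Evenly spaced shots on the ring at data-collection angle A.

    A is measured from the vertical axis, so h = r*cos(A) and s = r*sin(A)
    as in Table 2; A = 0 collapses the ring to a single nadir shot.
    """
    a = math.radians(angle_deg)
    h = radius_m * math.cos(a)                      # height of the ring
    s = radius_m * math.sin(a)                      # horizontal ring radius
    n = max(1, round(2 * math.pi * s / spacing_m))  # images on this ring
    pitch = -(90.0 - angle_deg)                     # aim the camera at the centre
    ring = []
    for k in range(n):
        phi = 2 * math.pi * k / n                   # azimuth of the k-th shot
        x, y = s * math.sin(phi), s * math.cos(phi)
        heading = math.degrees(math.atan2(-x, -y)) % 360.0
        ring.append(Waypoint(x, y, h, heading, pitch))
    return ring


if __name__ == "__main__":
    plan = {a: ring_waypoints(a) for a in (0, 30, 60)}
    print({a: len(r) for a, r in plan.items()})  # {0: 1, 30: 67, 60: 117}
    print(sum(len(r) for r in plan.values()))    # 185, as in scenario 1.1
```

With these assumptions the per-ring image counts match the Collected images column of Table 2 (1, 23, 46, 67, 87, 103, and 117 for 0° to 60°), and the three rings of scenario 1.1 give 185 images.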

(O3) Development of a workflow for creating a HoloGaussian digital twin for real urban and architectural environments: High-quality Gaussian splatting reconstructions built from a relatively small number of input images serve as excellent input data for hologram production, significantly reducing the time required to prepare data for holograms from multiple perspective views. To present a vision for a standardized modeling framework for HoloGaussian digital twins, particularly for applications in architecture and urban planning, the research team proposed a comprehensive workflow. This workflow outlines the steps required to streamline the integration of Gaussian splatting with hologram technology, paving the way for further applications and research in this field (Figure 16). In the first stage, the primary task is to evaluate the characteristics of the actual environment, such as whether the area consists of a single building or multiple building clusters and whether it is densely or sparsely populated. This evaluation forms the foundation for an optimized data collection framework built on effective UAV flight programs. The exact locations of the waypoints and flight trajectories are calculated from the coverage area provided by the FOV of the UAV. This process may not succeed on the first attempt: if poor-quality data are observed after the waypoints are established in the field, practitioners can recalibrate the flight trajectory as required. Once satisfactory video recordings are obtained from the UAV, the image datasets can be extracted as inputs for 3D reconstruction. The Gaussian splatting process provides users with a visualized version of the environment on the 3DGS platform. To produce holograms, practitioners can export the 3D reconstruction as a PLY file and modify it in Unreal Engine using a plugin designed for Gaussian splatting. In Unreal Engine, multiple perspective views of the reconstructed scene are generated and then used as input for hologram printing. The resulting hologram is a high-quality digital replica of the real-world scene. Once a HoloGaussian digital twin has been created from the original data, it can be replicated without limit using the hologram copy machine [23]. This presents a significant opportunity for applications in education, research, conservation, and exhibition.
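The waypoint calculation in the workflow depends on the ground area covered by the UAV's FOV. A minimal sketch of that calculation is shown below, assuming flat ground, a camera on a 150 m hemisphere aimed at its centre, and the FOV values from Table 1. The CV formula is our reconstruction from the tabulated values rather than an equation stated in the paper; with these assumptions it reproduces the coverage columns of Table 2 to within about 0.1 m.

```python
import math

HFOV_DEG = 69.7  # horizontal FOV from Table 1
VFOV_DEG = 42.9  # vertical FOV from Table 1


def footprint(angle_deg: float, radius_m: float = 150.0,
              hfov_deg: float = HFOV_DEG,
              vfov_deg: float = VFOV_DEG) -> tuple[float, float]:
    """Return (vertical coverage CV, horizontal coverage CH) in metres.

    angle_deg is the off-nadir angle A of the line of sight (0 = straight
    down); the camera sits on a hemisphere of radius radius_m and aims at
    its centre, which lies on a flat ground plane (assumption).
    """
    a = math.radians(angle_deg)
    half_v = math.radians(vfov_deg) / 2
    half_h = math.radians(hfov_deg) / 2
    if a + half_v >= math.pi / 2:
        raise ValueError("upper edge of the frame would reach the horizon")
    h = radius_m * math.cos(a)  # camera height above the aim point
    # Near and far frame edges meet the ground at h*tan(A - half_v) and
    # h*tan(A + half_v), measured from the point directly below the camera.
    cv = h * (math.tan(a + half_v) - math.tan(a - half_v))
    # Frame width across the line of sight at the aim point (slant range = radius).
    ch = 2 * radius_m * math.tan(half_h)
    return cv, ch


if __name__ == "__main__":
    for angle in range(0, 61, 10):
        cv, ch = footprint(angle)
        print(f"A={angle:2d} deg  CV={cv:6.1f} m  CH={ch:6.1f} m")
```

The guard against the upper frame edge reaching the horizon matters for steep angles: at A ≥ 68.55° (90° minus half of the 42.9° vertical FOV), the far edge of the frame no longer intersects the ground, so a finite CV is undefined.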

However, this workflow has several limitations. Although current holograms provide high-quality 3D content to multiple viewers simultaneously, with ultra-realistic visuals and without supporting devices such as computers or near-eye displays, interactivity remains limited: users cannot interact with holograms in real time. Moreover, generating multiple perspective views of a Gaussian splatting reconstruction requires Unreal Engine, even though the 3DGS platform can render splats in real time. This adds a step to the workflow and may complicate the process.

5. Conclusion

In this study, experiments were conducted to create digital twin versions of two real environments, one involving a single building and the other a university campus with multiple building clusters and surrounding landscapes. Two zero-degree full-parallax HoloGaussian digital twins were produced, each measuring 30 cm × 40 cm with a resolution of 250 μm. Based on the experimental results, the research team concluded that Gaussian splatting is a highly promising alternative method for 3D reconstruction in the fields of architecture and urbanism. However, image data collection must be optimized, because the Gaussian splatting algorithm is still in its early stages of development and can impose a significant computational burden when handling large datasets. This study also identifies effective methods for optimizing image data collection for Gaussian splatting, including circular paths (forming a half-sphere) and crossover paths, depending on the characteristics of the implementation area. Notably, the collected images should capture a comprehensive view of the environment rather than focus on fine details. Therefore, when designing a flight trajectory, the coverage area must be calculated carefully based on the FOV of the UAV. The 3D reconstruction output from Gaussian splatting is of high quality from multiple perspectives, making it an ideal input for building multiview datasets for hologram production.

The research team developed a comprehensive workflow to create a HoloGaussian digital twin, converting real-world environments into precise digital counterparts. The twin can be rapidly duplicated with a hologram copy machine to produce an unlimited number of copies, enabling diverse applications in fields such as education, research, conservation, and exhibition. This contribution is valuable to architecture and urban planning, offering a promising methodology that warrants further study for broader applications. As Gaussian splatting and hologram technologies evolve, supporting technologies such as GPU processing capabilities, Internet speed, UAV technology, GPS, and integrated downstream software will be essential for enhancing workflow efficiency and effectiveness. Based on the limitations encountered during the experimental process, we propose several future research directions: the integration of Gaussian splatting with downstream applications and camera navigation, real-time interactive hologram visualization, and the expansion of the image data collection framework for urban contexts to better support Gaussian splatting.

Supplementary Materials

The following supporting information can be downloaded at the website of this paper posted on Preprints.org. Video S1: The Full Parallax Tabletop Hologram of Experiment Environment 1, Video S2: The Full Parallax Tabletop Hologram of Experiment Environment 2.

Author Contributions

Conceptualization, Tam Do; Data curation, Tam Do, Jinwon Choi, Viet Le and Philippe Gentet; Formal analysis, Tam Do; Funding acquisition, Seunghyun Lee; Investigation, Tam Do and Viet Le; Methodology, Tam Do; Project administration, Tam Do, Leehwan Hwang and Seunghyun Lee; Resources, Tam Do and Seunghyun Lee; Software, Tam Do, Jinwon Choi and Philippe Gentet; Supervision, Tam Do and Leehwan Hwang; Validation, Tam Do; Visualization, Tam Do and Philippe Gentet; Writing – original draft, Tam Do; Writing – review & editing, Tam Do.

Funding

This research was supported by the MSIT (Ministry of Science and ICT), Korea, under the ITRC (Information Technology Research Center) support program (IITP-xxxxxx) supervised by the IITP (Institute for Information & Communications Technology Planning & Evaluation). This research is also supported by the Ministry of Culture, Sports and Tourism and the Korea Creative Content Agency (Project Number: RS-xxxxxxx). The present research has been conducted by the Excellent researcher support project of Kwangwoon University in 202x.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding authors.

Acknowledgments

The research team extends its gratitude to Ho Chi Minh City University of Economics (UEH) for providing the opportunity to conduct experiments on the university campus.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Deng, T., K. Zhang, and Z.-J.M. Shen, A systematic review of a digital twin city: A new pattern of urban governance toward smart cities. Journal of Management Science and Engineering, 2021. 6(2): p. 125-134.

2. Kamra, V., et al., Lightweight reconstruction of urban buildings: Data structures, algorithms, and future directions. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2022. 16: p. 902-917.

3. Gonizzi Barsanti, S., M. Guagliano, and A. Rossi, Digital (re)construction for Structural Analysis. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 2023. 48: p. 685-692.

4. Xu, W., Y. Zeng, and C. Yin, 3D city reconstruction: a novel method for semantic segmentation and building monomer construction using oblique photography. Applied Sciences, 2023. 13(15): p. 8795.

5. Obradović, M., et al., Virtual reality models based on photogrammetric surveys—A case study of the iconostasis of the Serbian Orthodox Cathedral Church of Saint Nicholas in Sremski Karlovci (Serbia). Applied Sciences, 2020. 10(8): p. 2743.

6. Ma, Z. and S. Liu, A review of 3D reconstruction techniques in civil engineering and their applications. Advanced Engineering Informatics, 2018. 37: p. 163-174.

7. Kavouras, I., et al., A low-cost gamified urban planning methodology enhanced with co-creation and participatory approaches. Sustainability, 2023. 15(3): p. 2297.

8. Do, T.L.P., et al., Real-Time Spatial Mapping in Architectural Visualization: A Comparison among Mixed Reality Devices. Sensors, 2024. 24(14): p. 4727.

9. Carnevali, L., et al., Close range mini UAVs photogrammetry for architecture survey. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 2018. 42: p. 217-224.

10. Achille, C., et al., UAV-based photogrammetry and integrated technologies for architectural applications—methodological strategies for the after-quake survey of vertical structures in Mantua (Italy). Sensors, 2015. 15(7): p. 15520-15539.

11. Jia, Z., B. Wang, and C. Chen, Drone-NeRF: Efficient NeRF based 3D scene reconstruction for large-scale drone survey. Image and Vision Computing, 2024. 143: p. 104920.

12. Croce, V., et al., Neural radiance fields (NeRF): Review and potential applications to digital cultural heritage. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 2023. 48: p. 453-460.

13. Gentet, P., et al., Outdoor Content Creation for Holographic Stereograms with iPhone. Applied Sciences, 2024. 14(14): p. 6306.

14. Reda, F., et al., FILM: Frame interpolation for large motion. In European Conference on Computer Vision. 2022. Springer.

15. Jiang, H., et al., SENSE: A shared encoder network for scene-flow estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 2019.

16. Dalal, A., et al., Gaussian Splatting: 3D Reconstruction and Novel View Synthesis, a Review. IEEE Access, 2024.

17. Wu, G., et al., 4D Gaussian splatting for real-time dynamic scene rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2024.

18. Kerbl, B., et al., 3D Gaussian Splatting for Real-Time Radiance Field Rendering. ACM Transactions on Graphics, 2023. 42(4): p. 139:1-139:14.

19. Wu, T., et al., Recent advances in 3D Gaussian splatting. Computational Visual Media, 2024: p. 1-30.

20. Guo, C., et al., RD-SLAM: Real-Time Dense SLAM Using Gaussian Splatting. Applied Sciences, 2024. 14(17): p. 7767.

21. Gentet, P., Y. Gentet, and S. Lee, An in-house-designed scanner for CHIMERA holograms. In Practical Holography XXXVII: Displays, Materials, and Applications. 2023. SPIE.

22. Gentet, Y. and P. Gentet, CHIMERA, a new holoprinter technology combining low-power continuous lasers and fast printing. Applied Optics, 2019. 58(34): p. G226-G230.

23. Do, T.L.P., et al., Development of a Tabletop Hologram for Spatial Visualization: Application in the Field of Architectural and Urban Design. Buildings, 2024. 14(7): p. 2030.

24. Saxby, G., Practical Holography. 2003: CRC Press.

25. Fei, B., et al., 3D Gaussian splatting as new era: A survey. IEEE Transactions on Visualization and Computer Graphics, 2024.

26. Cai, Z., et al., 3D Reconstruction of Buildings Based on 3D Gaussian Splatting. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 2024. 48: p. 37-43.

27. Ham, Y., M. Michalkiewicz, and G. Balakrishnan, DRAGON: Drone and Ground Gaussian Splatting for 3D Building Reconstruction. In 2024 IEEE International Conference on Computational Photography (ICCP). 2024. IEEE.

28. Palestini, C. and A. Basso, The photogrammetric survey methodologies applied to low cost 3D virtual exploration in multidisciplinary field. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 2017. 42: p. 195-202.

29. Ozimek, A., et al., Digital modelling and accuracy verification of a complex architectural object based on photogrammetric reconstruction. Buildings, 2021. 11(5): p. 206.

30. Kerbl, B., et al., 3D Gaussian Splatting for Real-Time Radiance Field Rendering, in GitHub. 2023.

31. Gerke, M., Developments in UAV-photogrammetry. Journal of Digital Landscape Architecture, 2018. 3: p. 262-272.

32. Zheng, X., F. Wang, and Z. Li, A multi-UAV cooperative route planning methodology for 3D fine-resolution building model reconstruction. ISPRS Journal of Photogrammetry and Remote Sensing, 2018. 146: p. 483-494.

33. Barris, W., Horizontal and Vertical FOV Calculator. 2023 [cited 2024]. Available from: https://www.litchiutilities.com/fov-1.php.

34. Basso, A., et al., Evolution of Rendering Based on Radiance Fields. The Palermo Case Study for a Comparison Between NeRF and Gaussian Splatting. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 2024. 48: p. 57-64.

35. González, E.R., J.R.C. Ausió, and S.C. Pérez, Application of real-time rendering technology to archaeological heritage virtual reconstruction: The example of Casas del Turuñuelo (Guareña, Badajoz, Spain). Virtual Archaeology Review, 2023. 14(28): p. 38-53.

36. Shannon, T., Unreal Engine 4 for design visualization: Developing stunning interactive visualizations, animations, and renderings. 2017: Addison-Wesley Professional.

37. LumaAI, Luma Unreal Engine Plugin (0.41). 2024, Notion.

Figure 1.

Common 3D reconstruction methods for real-world environments.


Figure 2.

Locations of experiment environments 1 and 2 on the university campus.


Figure 3.

3DGS practice interface displaying the data set of the research experiment.


Figure 4.

DJI Mini 4 Pro performing a flight mission for data collection in the selected experiment environment. (a) DJI Mini 4 Pro in operation. (b) FOVs of the UAV and the coverage area.


Figure 5.

Circular paths for data collection in experiment environment 1. (a) Detailed circular flight paths of the UAV. (b) Calculated coverage area of the data collection based on different recording angles.


Figure 6.

Data collection along rectangular paths in experiment environment 2. (a) Detailed rectangular flight paths of the UAV, with calculated coverage areas for different recording angles: (b) 60°, (c) 30°, and (d) 0°.


Figure 7.

Crossover data collection paths for experiment environment 2. (a) Detailed crossover flight paths of the UAV, with calculated coverage areas for the different crossover paths: (b) vertical path, (c) horizontal path, and (d) diagonal paths 1 and 2.


Figure 8.

Operations with Unreal Engine when displaying data and performing novel view synthesis rendering for hologram printing. (a) PLY file opened in the Unreal Engine interface and (b) OBJ file opened in the Unreal Engine interface.


Figure 9.

Setup of the exhibited zero-degree tabletop hologram.


Figure 10.

Flow chart of the experiment used in this study. The method comprises four main phases, including data collection, data input scenarios, data processing, and tabletop hologram production.


Figure 11.

Representation of images extracted from the collected data in experiment environment 1.


Figure 12.

Representation of images extracted from the collected data in experiment environment 2.


Figure 13.

Comparison of 3D reconstruction quality between Gaussian splatting and photogrammetry in both experiment environments.


Figure 14.

3D reconstruction of data from six scenarios using Gaussian splatting and photogrammetry.


Figure 15.

Hologram visualization for (a) experiment environment 1 and (b) experiment environment 2.


Figure 16.

Workflow of the urban and architectural HoloGaussian digital twin.


Table 1.

DJI Mini 4 Pro specifications for the experiment data collection.


| No. | Specification | Value | Unit |
|---|---|---|---|
| 1 | Take-off weight | 249 | g |
| 2 | Take-off dimensions (length × width × height) | 298 × 373 × 101 | mm |
| 3 | Flying speed during experiment | 6–16 | m/s |
| 4 | Camera sensor | 1/1.3-inch CMOS, 48 MP | N/A |
| 5 | Focal length | 24 | mm |
| 6 | Aperture | f/1.7 | N/A |
| 7 | Video resolution | 1920 × 1080 (FHD) | px |
| 8 | Frame rate | 60 | fps |
| 9 | ISO | Automatic | N/A |
| 10 | Vertical field of view (FOV) | 42.9 | degree |
| 11 | Horizontal field of view (FOV) | 69.7 | degree |

Table 2.

Parameters of the UAV’s circular flight routes for data collection in experiment environment 1.

| Data Collecting Angle (A) | Radius (r) | Height (h) | Distance (s) | Route (C) | Vertical Coverage (CV) | Horizontal Coverage (CH) | Collected Images (I) |
|---|---|---|---|---|---|---|---|
| Experiment environment 1 – Circular path | | | | | | | |
| 60° | 150 m | 75.0 m | 129.9 m | 816.2 m | 439.1 m | 208.9 m | 117 |
| 50° | 150 m | 96.4 m | 114.9 m | 722.0 m | 234.9 m | 208.9 m | 103 |
| 40° | 150 m | 114.9 m | 96.4 m | 605.8 m | 172.6 m | 208.9 m | 87 |
| 30° | 150 m | 129.9 m | 75.0 m | 471.2 m | 143.5 m | 208.9 m | 67 |
| 20° | 150 m | 141.0 m | 51.3 m | 322.3 m | 128.1 m | 208.9 m | 46 |
| 10° | 150 m | 147.7 m | 26.0 m | 163.7 m | 120.3 m | 208.9 m | 23 |
| 0° | 150 m | 150.0 m | 0.0 m | 0.0 m | 117.9 m | 208.9 m | 1 |
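
The values in Table 2 appear consistent with a simple viewing geometry: the camera sits at slant distance r from the target centre on a line tilted by A from the vertical, so h = r cos A, s = r sin A, and C = 2πs; the vertical coverage is the ground footprint between the near and far edges of the vertical FOV, and the horizontal coverage is the view width 2r tan(HFOV/2). The sketch below reproduces the table under that interpretation. The helper name `circular_route` and the geometric interpretation itself are our assumptions, not the authors' published procedure, and the formula for CV only holds while A + VFOV/2 < 90°.

```python
import math

# Field-of-view values listed in Table 1 (degrees).
VFOV_DEG = 42.9
HFOV_DEG = 69.7


def circular_route(r: float, angle_deg: float,
                   vfov_deg: float = VFOV_DEG, hfov_deg: float = HFOV_DEG) -> dict:
    """Hypothetical helper: geometry of one orbit around the target centre.

    Assumes the camera sits at slant distance r from the target centre, on a
    line tilted angle_deg away from the vertical, and points at that centre.
    Valid only while angle_deg + vfov_deg / 2 < 90 (far edge below the horizon).
    """
    a = math.radians(angle_deg)
    half_v = math.radians(vfov_deg) / 2
    half_h = math.radians(hfov_deg) / 2

    h = r * math.cos(a)          # flight height above the target plane
    s = r * math.sin(a)          # horizontal distance to the target centre
    c = 2 * math.pi * s          # length of the circular route
    # Ground footprint along the viewing direction: far edge minus near edge.
    cv = h * (math.tan(a + half_v) - math.tan(a - half_v))
    # Width of the view across the viewing direction at slant distance r.
    ch = 2 * r * math.tan(half_h)
    return {"h": h, "s": s, "C": c, "CV": cv, "CH": ch}


if __name__ == "__main__":
    for angle in (60, 50, 40, 30, 20, 10, 0):
        g = circular_route(150.0, angle)
        print(f"A={angle:2d} deg  h={g['h']:6.1f}  s={g['s']:6.1f}  "
              f"C={g['C']:6.1f}  CV={g['CV']:6.1f}  CH={g['CH']:6.1f}")
```

With r = 150 m, the printed rows agree with Table 2 after rounding to 0.1 m. Calling the same helper with r = 216 m or r = 290 m gives the 300.8 m and 403.9 m horizontal coverages that appear in Table 3.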

Table 3.

Parameters of the UAV’s rectangular and crossover flight routes for data collection in experiment environment 2.

| Flying Direction | Data Collecting Angle (A) | Radius (r) | Height (h) | Distance (s) | Distance to Boundary (s′) | Route (C) | Vertical Coverage (CV) | Horizontal Coverage (CH) | Collected Images (I) |
|---|---|---|---|---|---|---|---|---|---|
| Experiment environment 2 – Rectangular path | | | | | | | | | |
| N/A | 60° | 216 m | 75.0 m | 129.9 m | 59.8 m | 1639 m | 439.1 m | 208.9 m | 164 |
| N/A | 30° | 216 m | 187.1 m | 108.0 m | 28.1 m | 1512 m | 206.6 m | 300.8 m | 152 |
| N/A | 0° | 150 m | 216.0 m | 0.0 m | 0.0 m | 400 m | 169.7 m | 300.8 m | 40 |
| Experiment environment 2 – Crossover path | | | | | | | | | |
| Vertical | 60° | 216 m | 145.0 m | 251.1 m | 115.5 m | 572 m | 632.3 m | 300.8 m | 58 |
| Vertical | 50° | 216 m | 186.4 m | 222.2 m | 101.4 m | | 338.2 m | 300.8 m | |
| Vertical | 40° | 216 m | 222.2 m | 186.4 m | 74.5 m | | 248.6 m | 300.8 m | |
| Vertical | 30° | 216 m | 251.1 m | 145.0 m | 37.8 m | | 206.6 m | 300.8 m | |
| Vertical | 20° | 216 m | 272.5 m | 99.2 m | -6.9 m | | 184.4 m | 300.8 m | |
| Vertical | 10° | 216 m | 285.6 m | 50.4 m | -57.8 m | | 173.2 m | 300.8 m | |
| Vertical | 0° | 216 m | 290.0 m | 0.0 m | -150.0 m | | 169.7 m | 300.8 m | |
| Horizontal/Diagonal 1 and 2 | 60° | 290 m | 145.0 m | 251.1 m | 115.5 m | 531 m for each path | 848.9 m | 403.9 m | 54 for each path |
| Horizontal/Diagonal 1 and 2 | 50° | 290 m | 186.4 m | 222.2 m | 101.4 m | | 454.1 m | 403.9 m | |
| Horizontal/Diagonal 1 and 2 | 40° | 290 m | 222.2 m | 186.4 m | 74.5 m | | 333.8 m | 403.9 m | |
| Horizontal/Diagonal 1 and 2 | 30° | 290 m | 251.1 m | 145.0 m | 37.8 m | | 277.4 m | 403.9 m | |
| Horizontal/Diagonal 1 and 2 | 20° | 290 m | 272.5 m | 99.2 m | -6.9 m | | 247.6 m | 403.9 m | |
| Horizontal/Diagonal 1 and 2 | 10° | 290 m | 285.6 m | 50.4 m | -57.8 m | | 232.5 m | 403.9 m | |
| Horizontal/Diagonal 1 and 2 | 0° | 290 m | 290.0 m | 0.0 m | -150.0 m | | 227.9 m | 403.9 m | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).