Submitted: 15 October 2024

Posted: 16 October 2024


Abstract

Keywords:

1. Introduction

- Poor road infrastructure and management

- Non-roadworthy vehicles

- Unenforced or non-existent traffic laws

- Unsafe road user behaviors

- Inadequate post-crash care.

2. Literature Review

2.1. Robotic Perception and Machine Vision

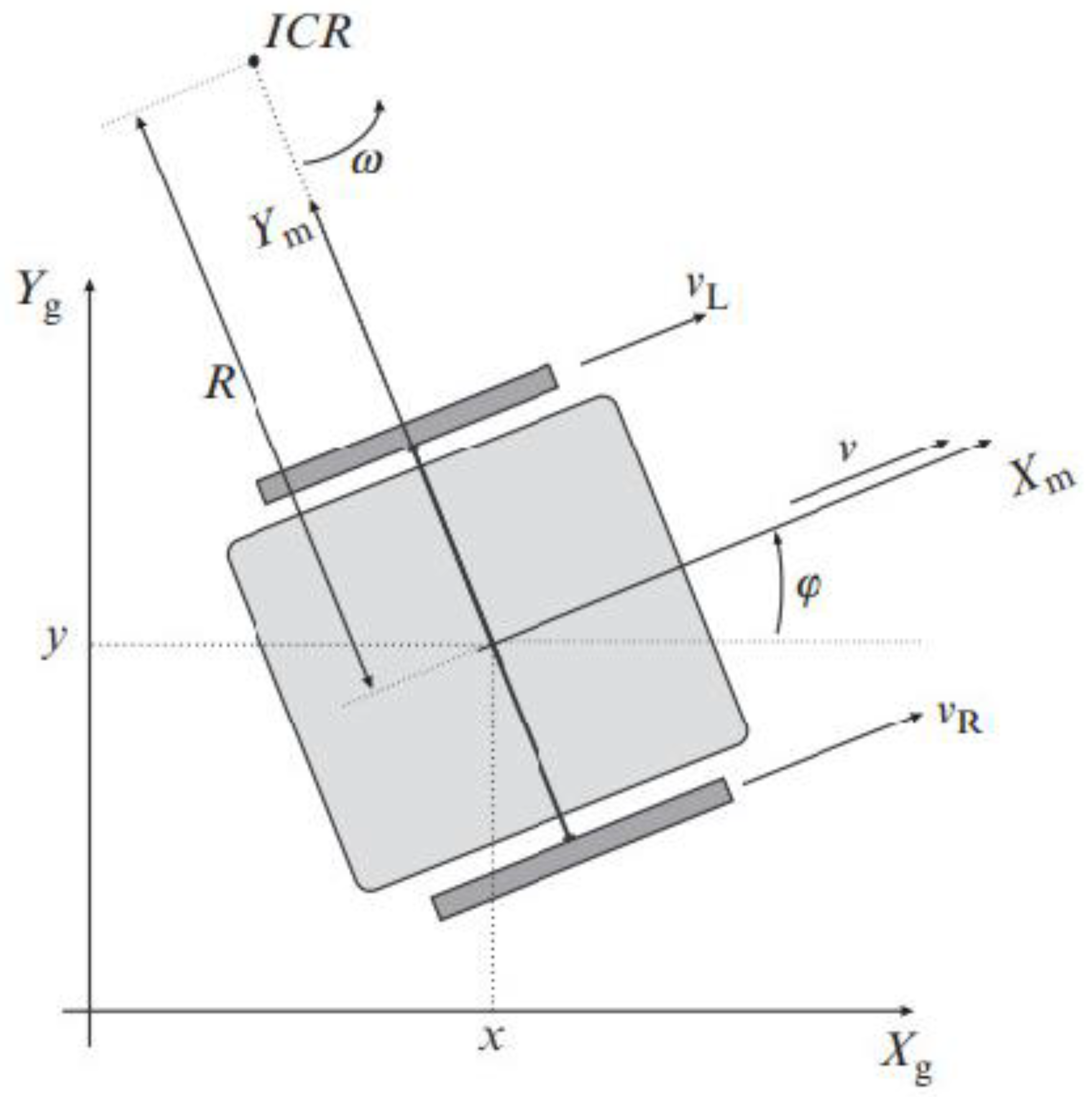

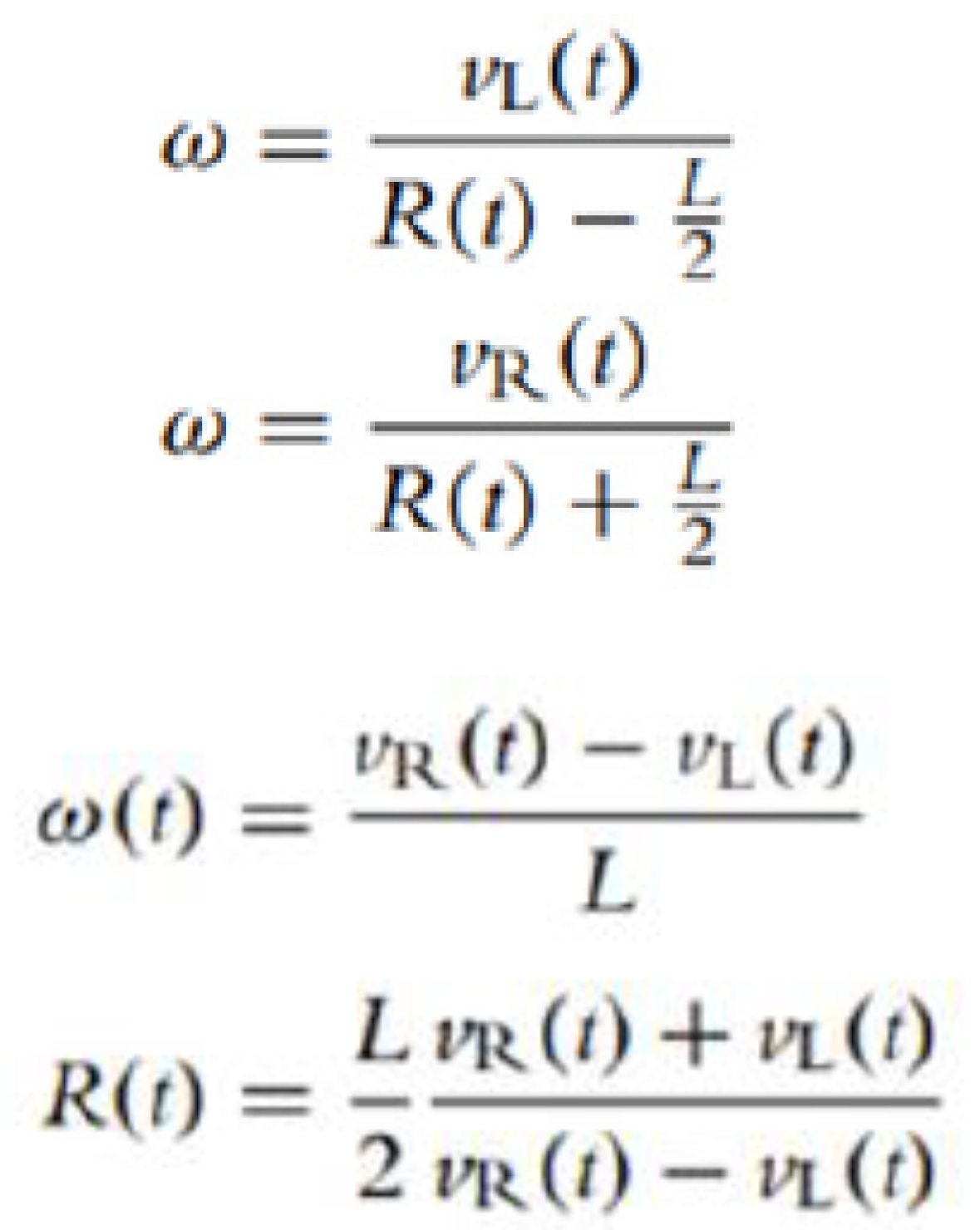

2.2. Robotic Control

2.3. Robotic Design and Construction

2.4. University Marking Robot Projects

2.5. Marking Robot Products

| UI | User Interface |

| RPI | Raspberry Pi |

| SSH | Secure Shell Protocol |

| VNC | Virtual Network Computing |

| GUI | Graphical User Interface |

| HVLP | High Volume Low Pressure |

| PLA | Polylactic Acid |

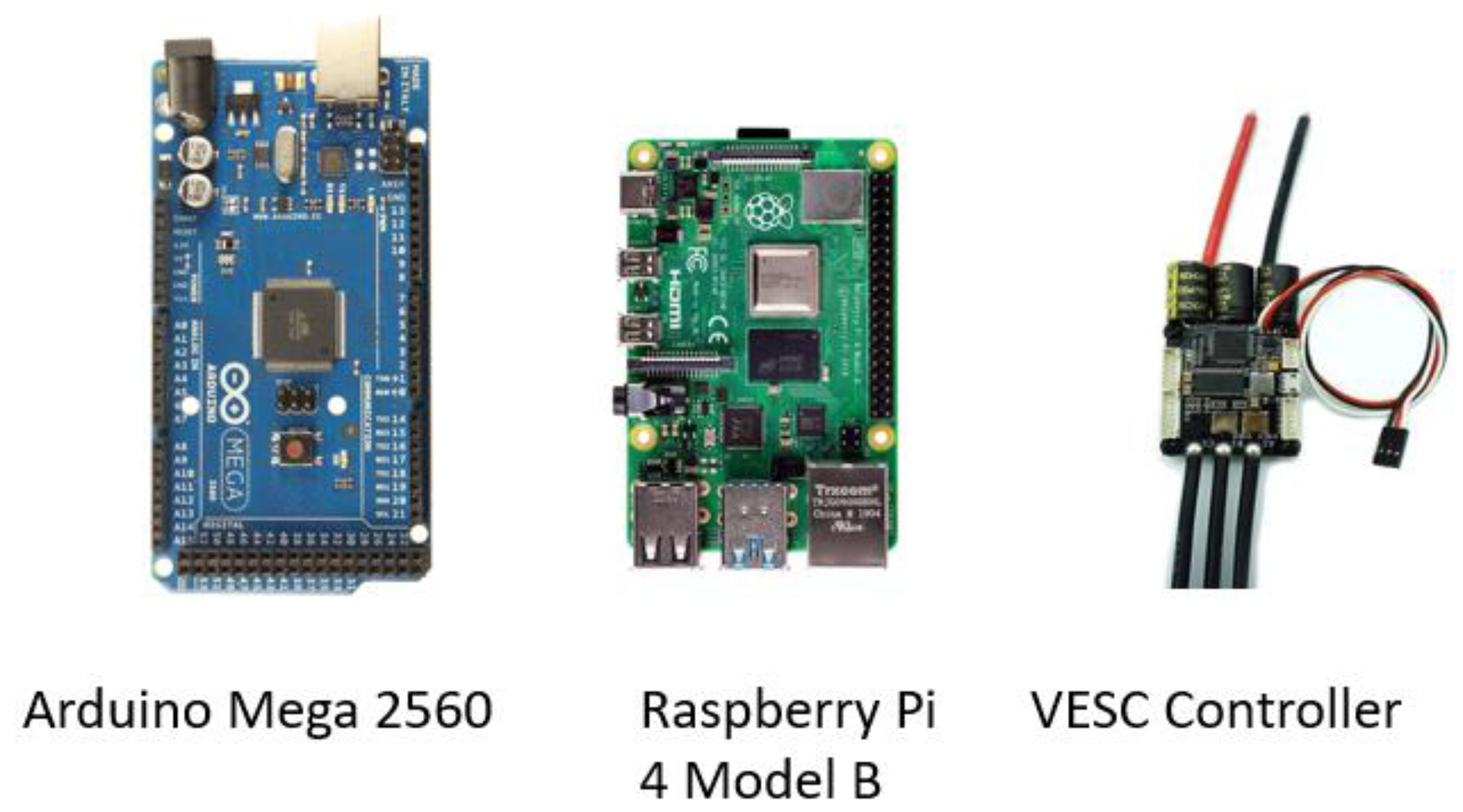

| VESC | Vedder Electronic Speed Controller |

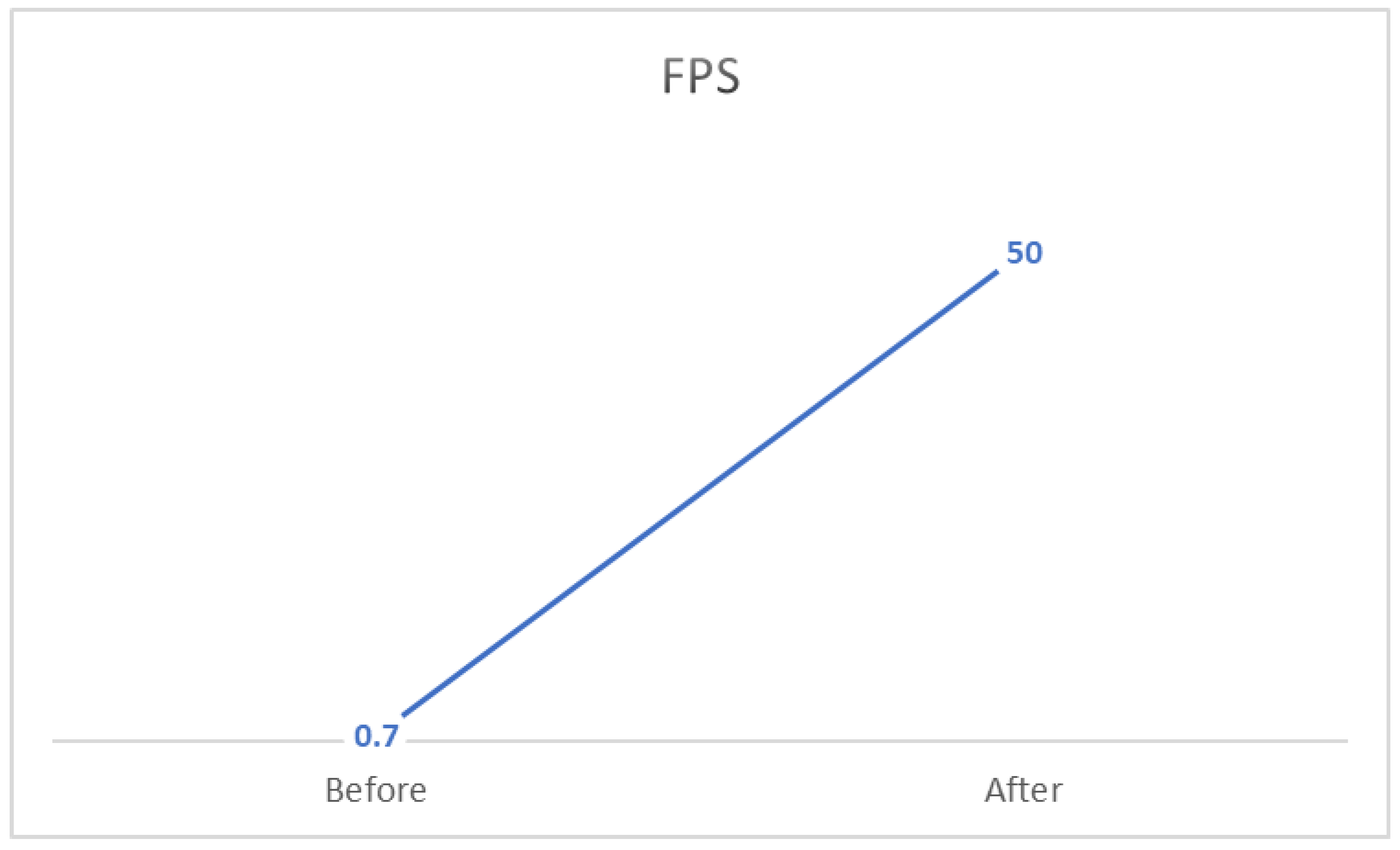

| FPS | Frames per second |

| Product | Operation / Specifications | Pros | Cons |

| TinyPreMarker [7] | The desired marking is drawn on a tablet. Localization uses a GNSS receiver. Completely autonomous. | High marking speed of 7 km/h. Relatively lightweight at 18 kg. Autonomous. | Small can size of 750 ml. No recognition of previously painted lines. |

| LineLazer V 3900 [21] | Manually controlled precision painting machinery. | Many use cases. Precise. Easy to adjust and use. | Manual. Huge size. |

| TinyLineMarker Pro [10] | Set on a soccer field, it autonomously paints the field markings. Weighs 35 kg. | Autonomous. Reliable: 1-2 cm precision. Battery life for a full day. Paint capacity of 10 L. Marking speed of 1 m/s. | Narrow use case (soccer fields only). |

| Kontur 600 [16] | A massive machine with a working station to control the machine. Weighs 4300 kg with a 61 hp engine. | Three types of paint, reflective glass spray. Huge capacity of 600 kg of paint and 170 kg of glass beads. | Manual. Huge size. |

| Shmelok HP Structure [18] | Manual trolley with basic operation. Weighs 100 kg. | Relatively low cost. | Manual. Paint prepared on the spot. Pushed manually. |

| TinyPreMarker Sport [8] | Set on a soccer field, it autonomously paints the field markings. Weighs 25 kg. | Lightweight. Easy to operate. Autonomous. Reliable: 1-2 cm precision. Battery life for a full day. | Smaller capacity (5 L) compared to [10]. Slower marking speed (0.7 m/s) compared to [10]. |

3. Problem Statement

4. System Design

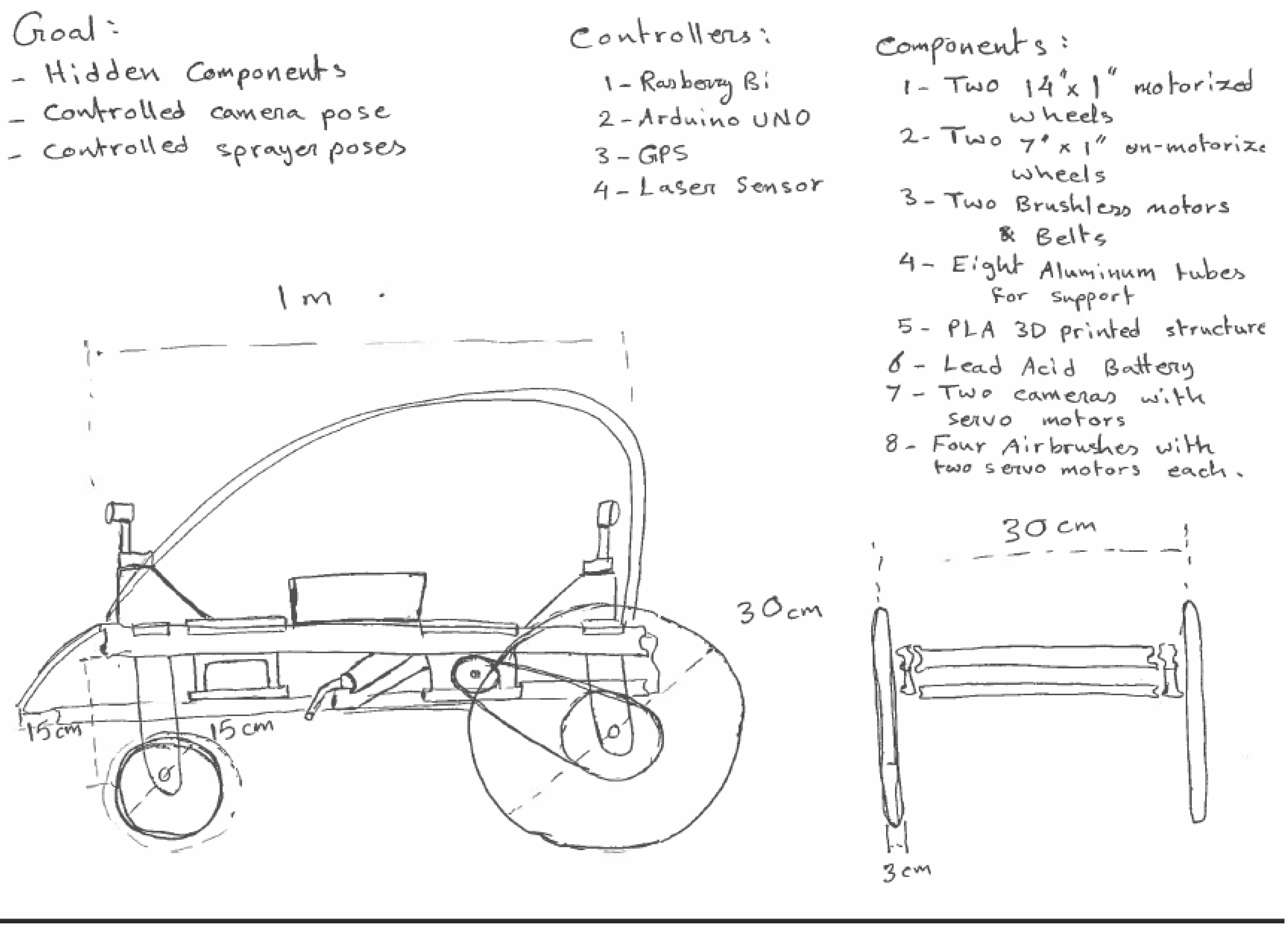

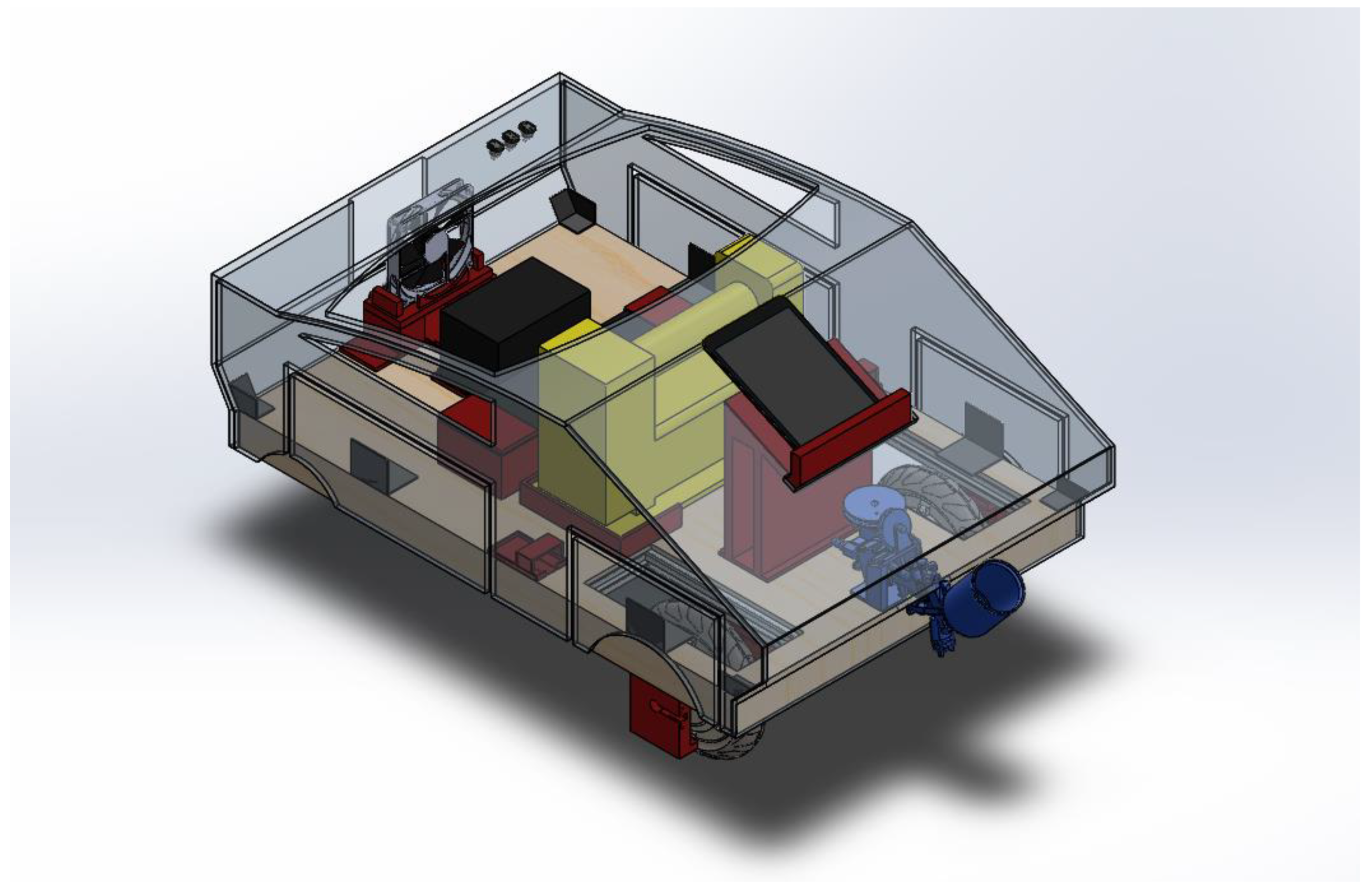

4.1. Hardware

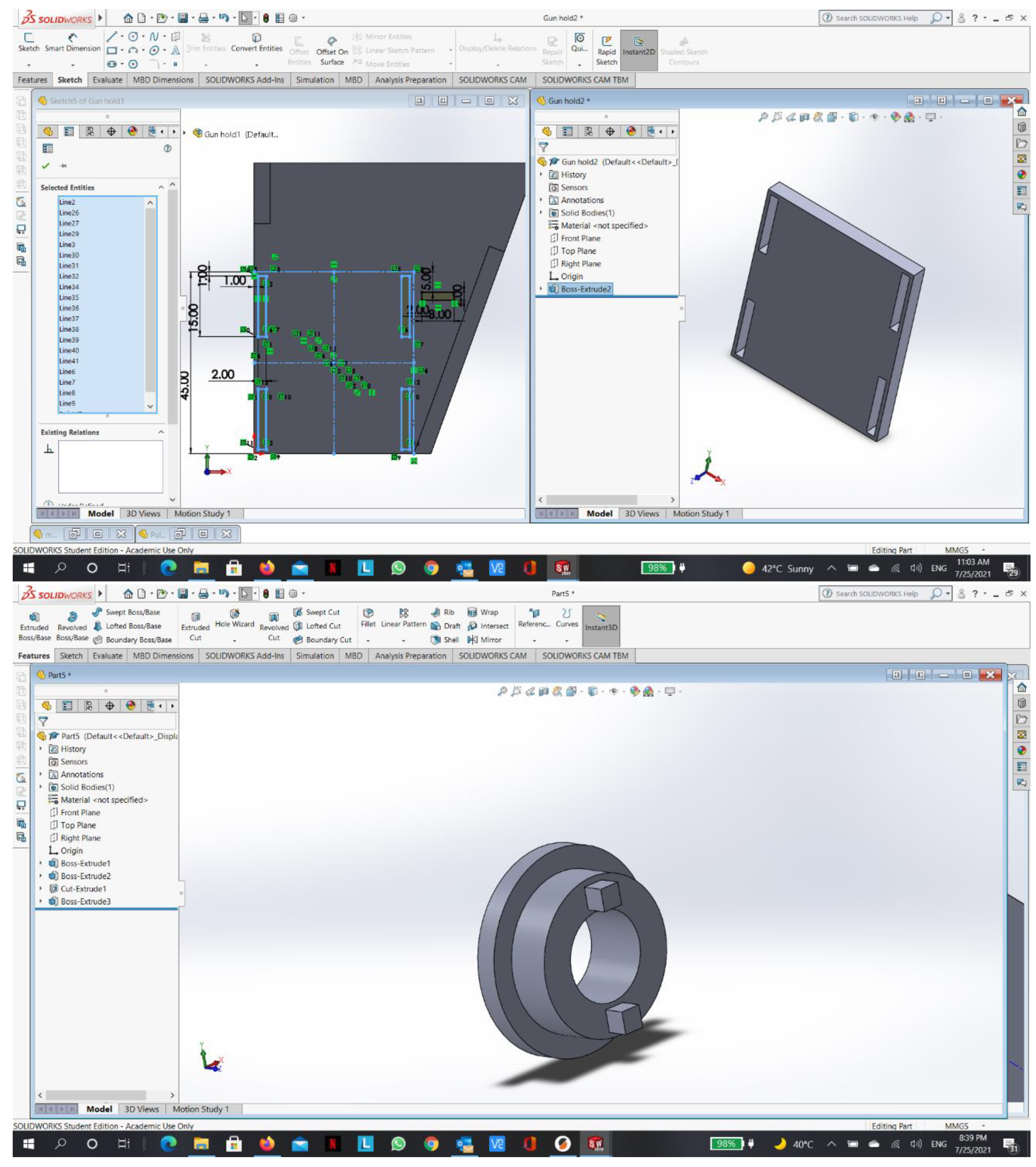

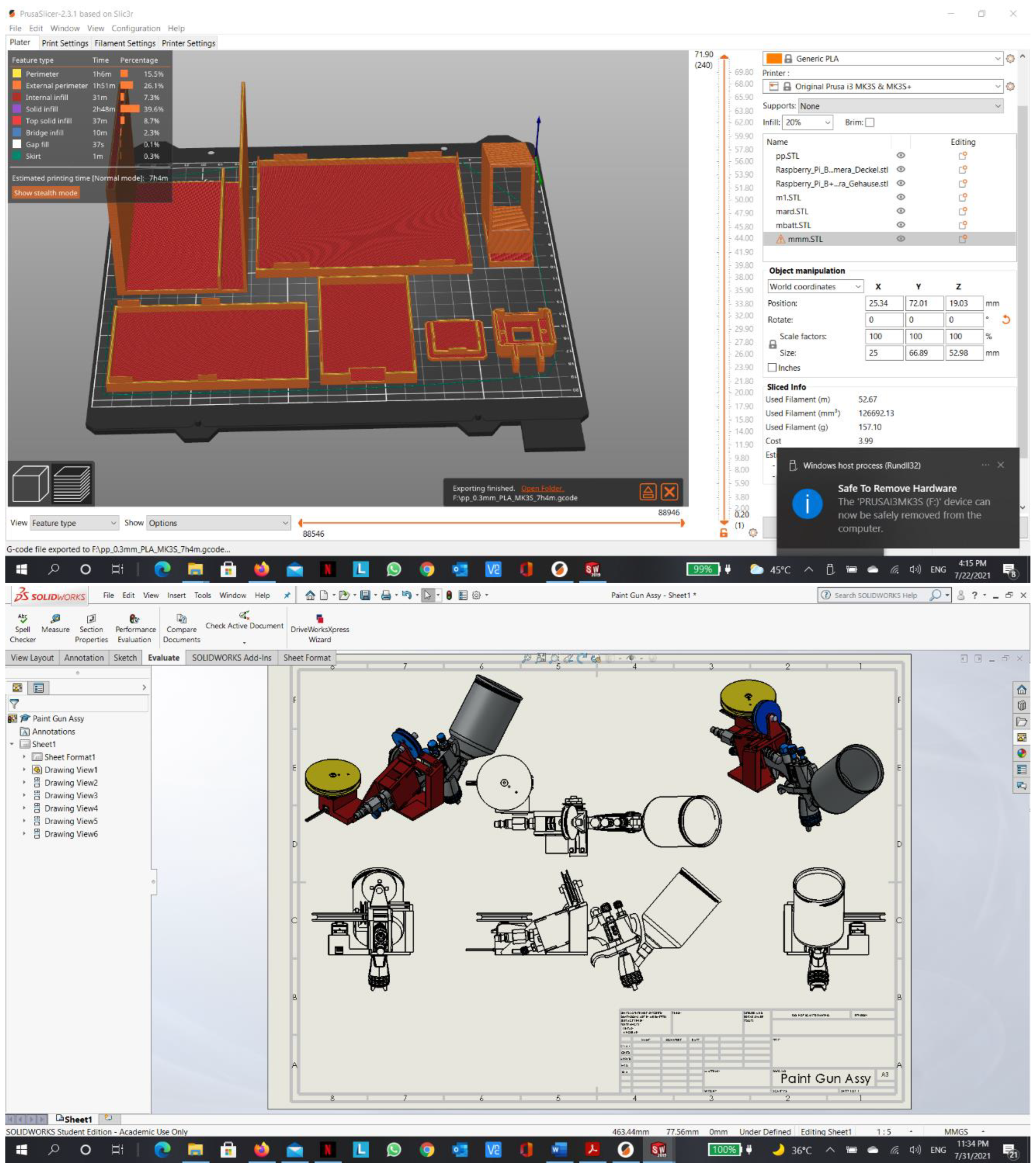

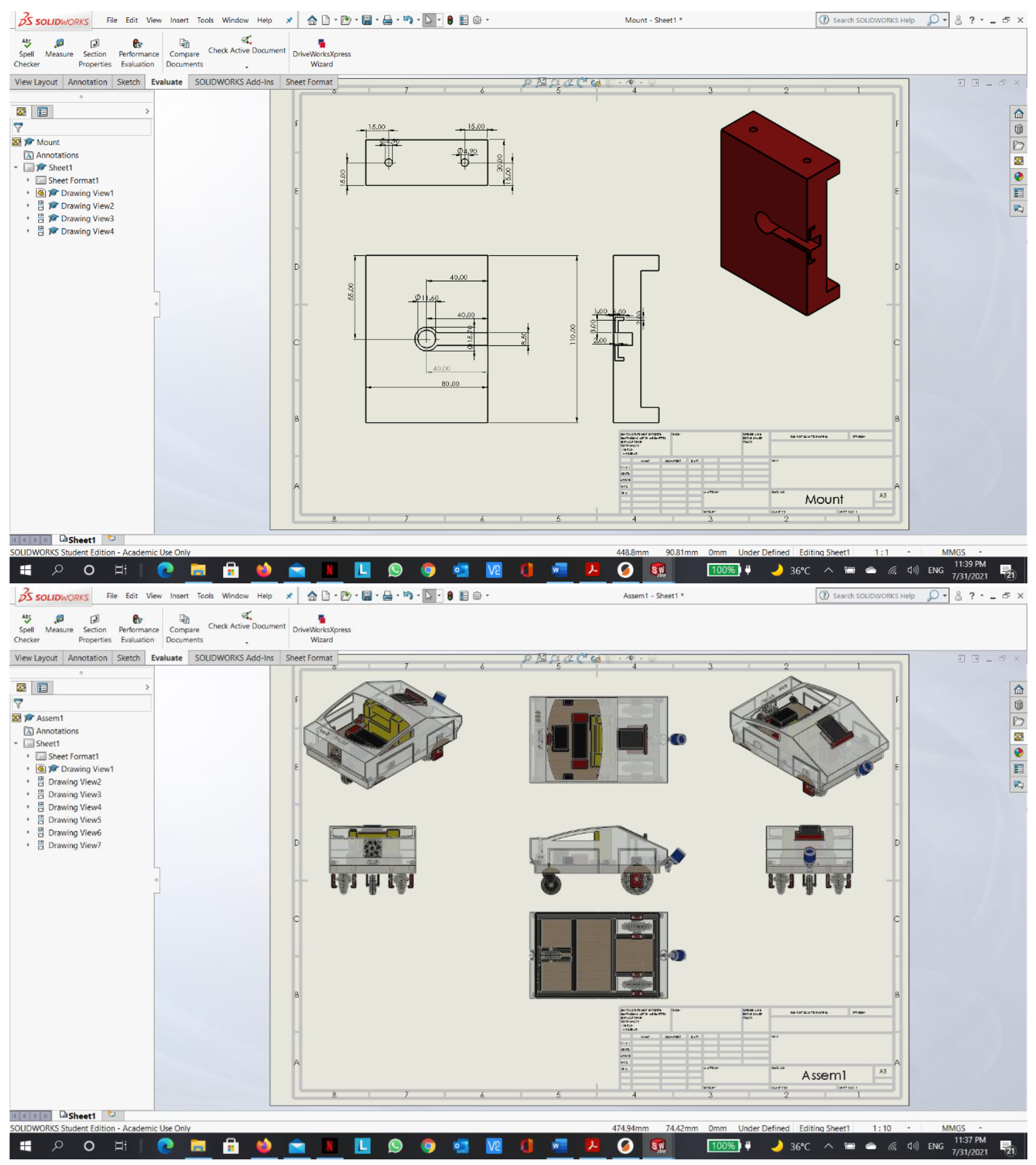

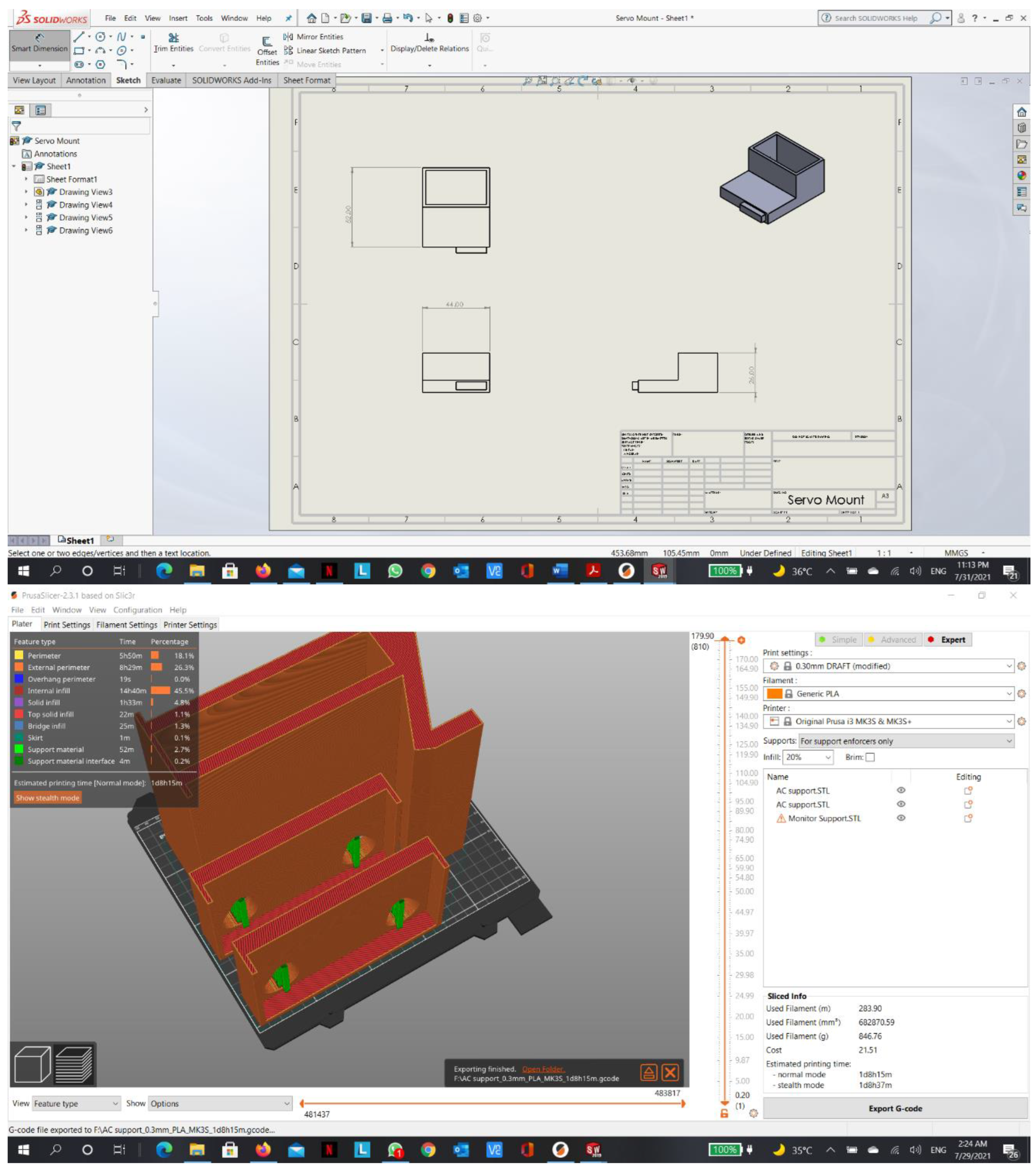

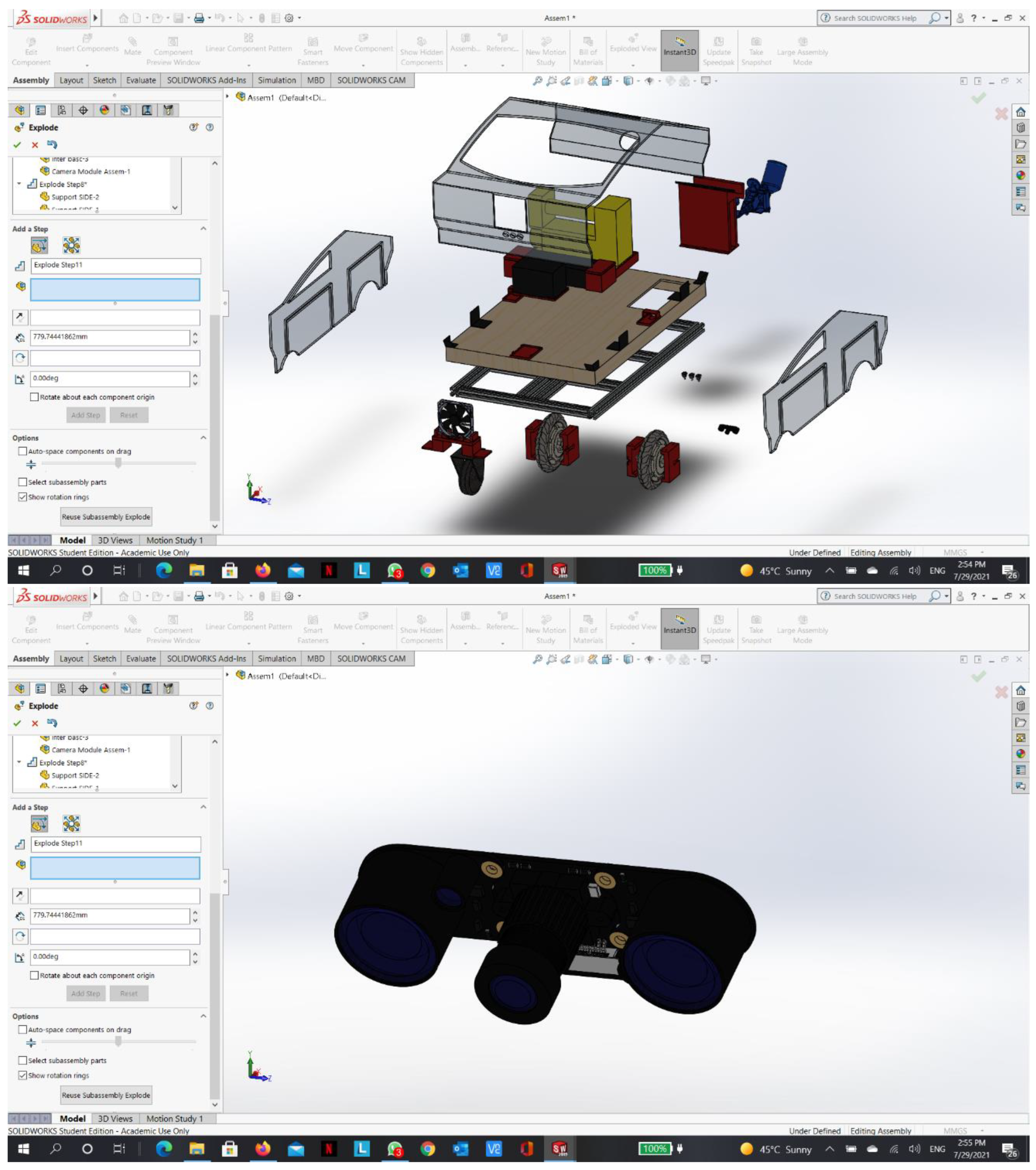

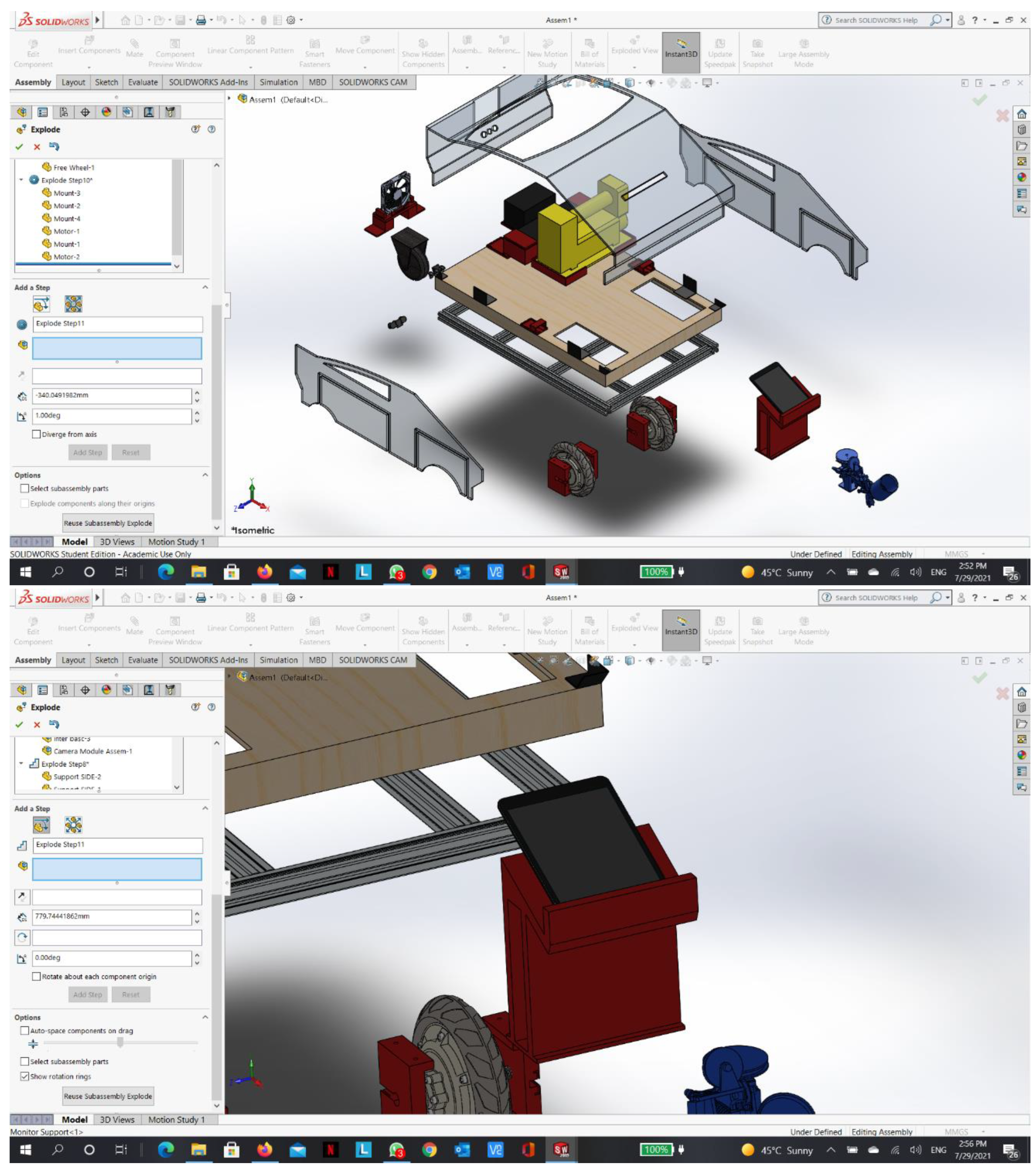

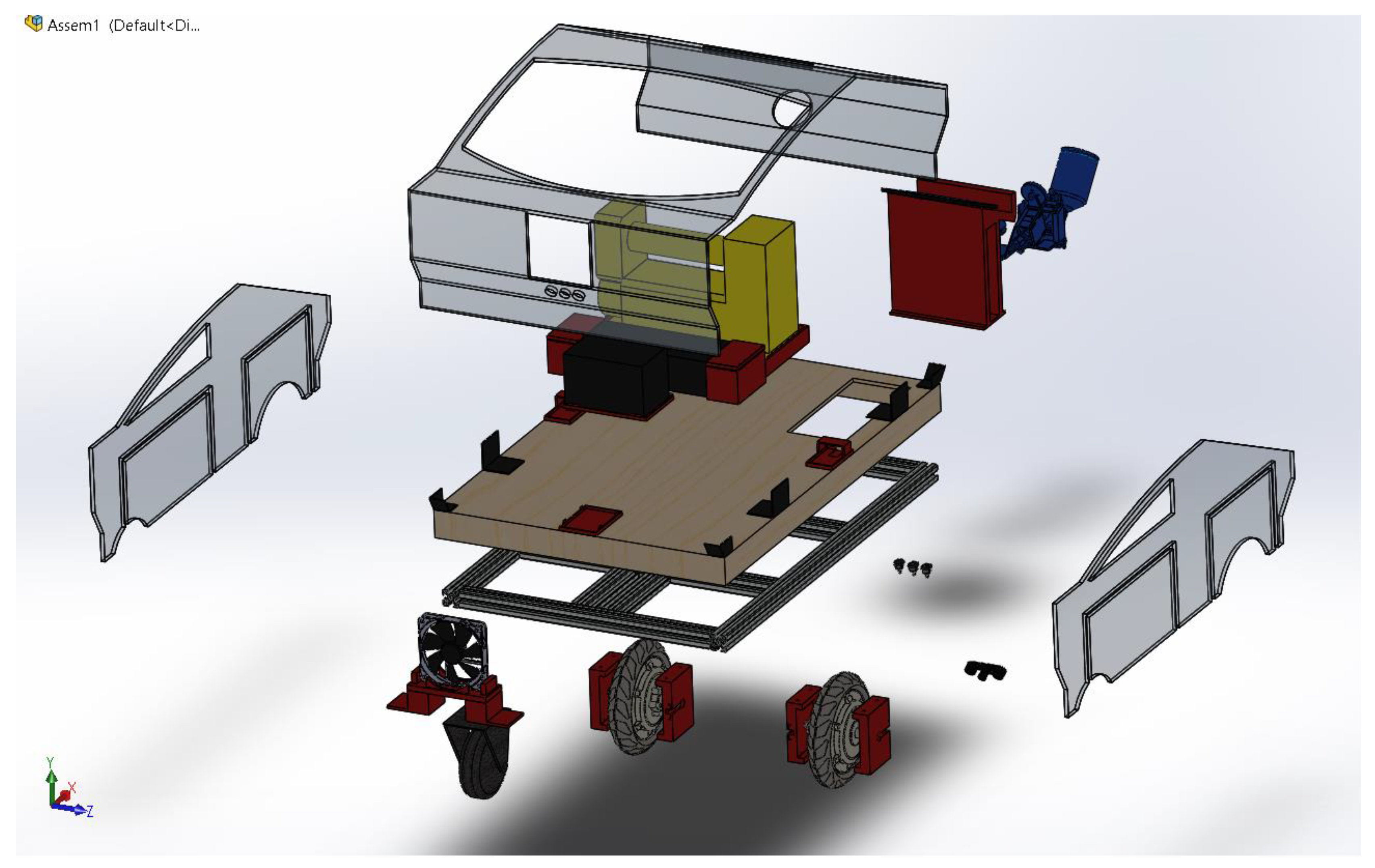

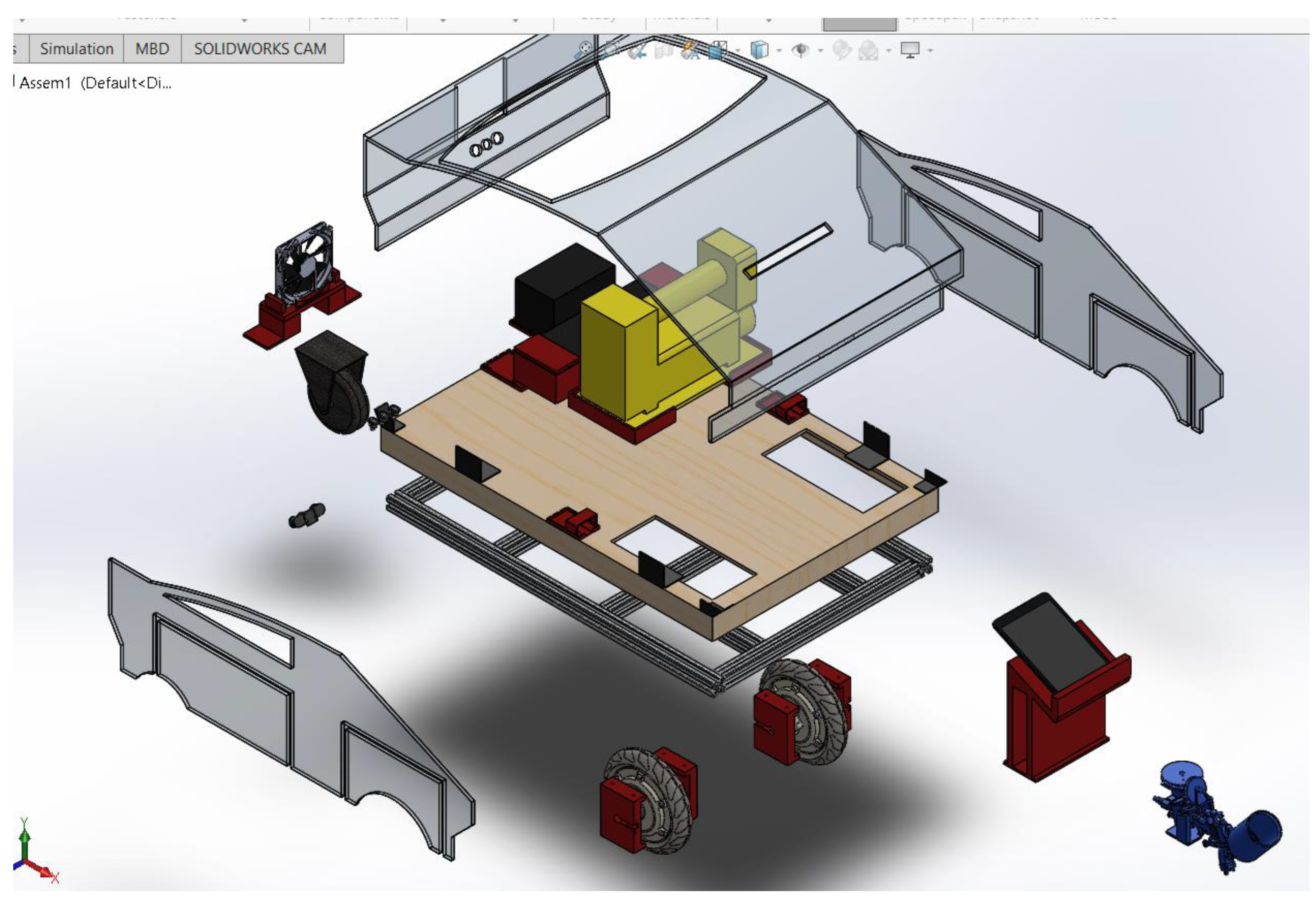

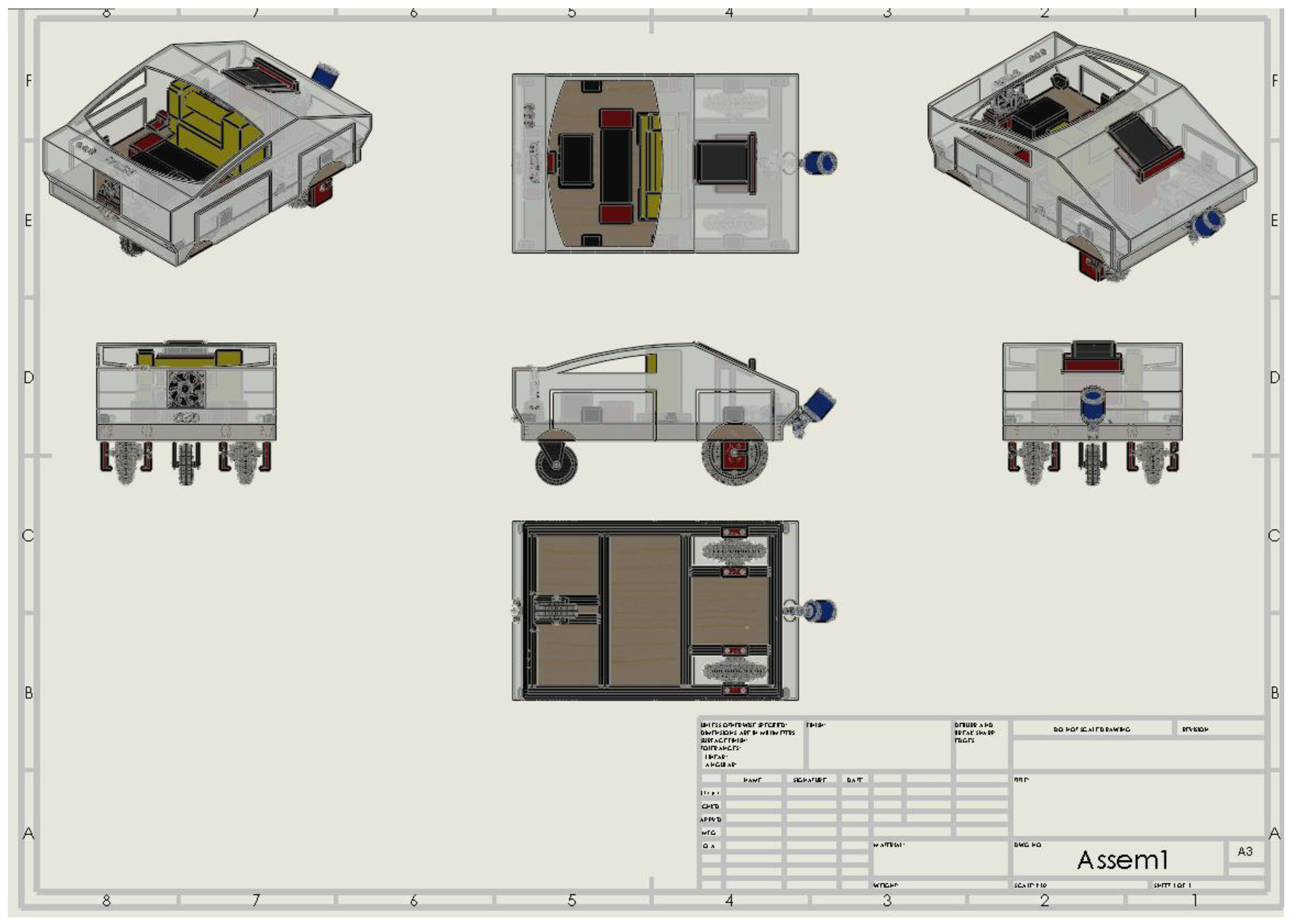

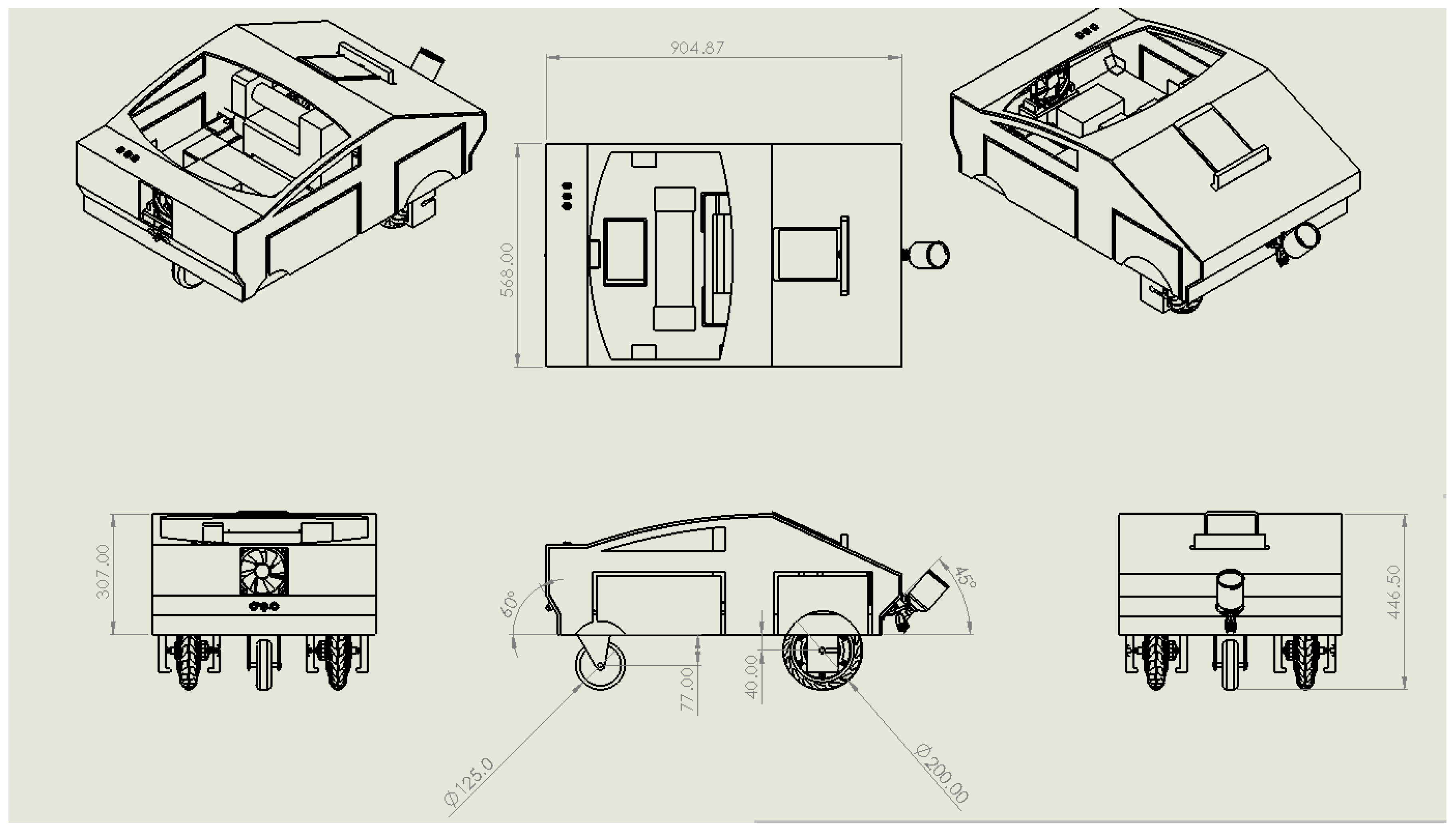

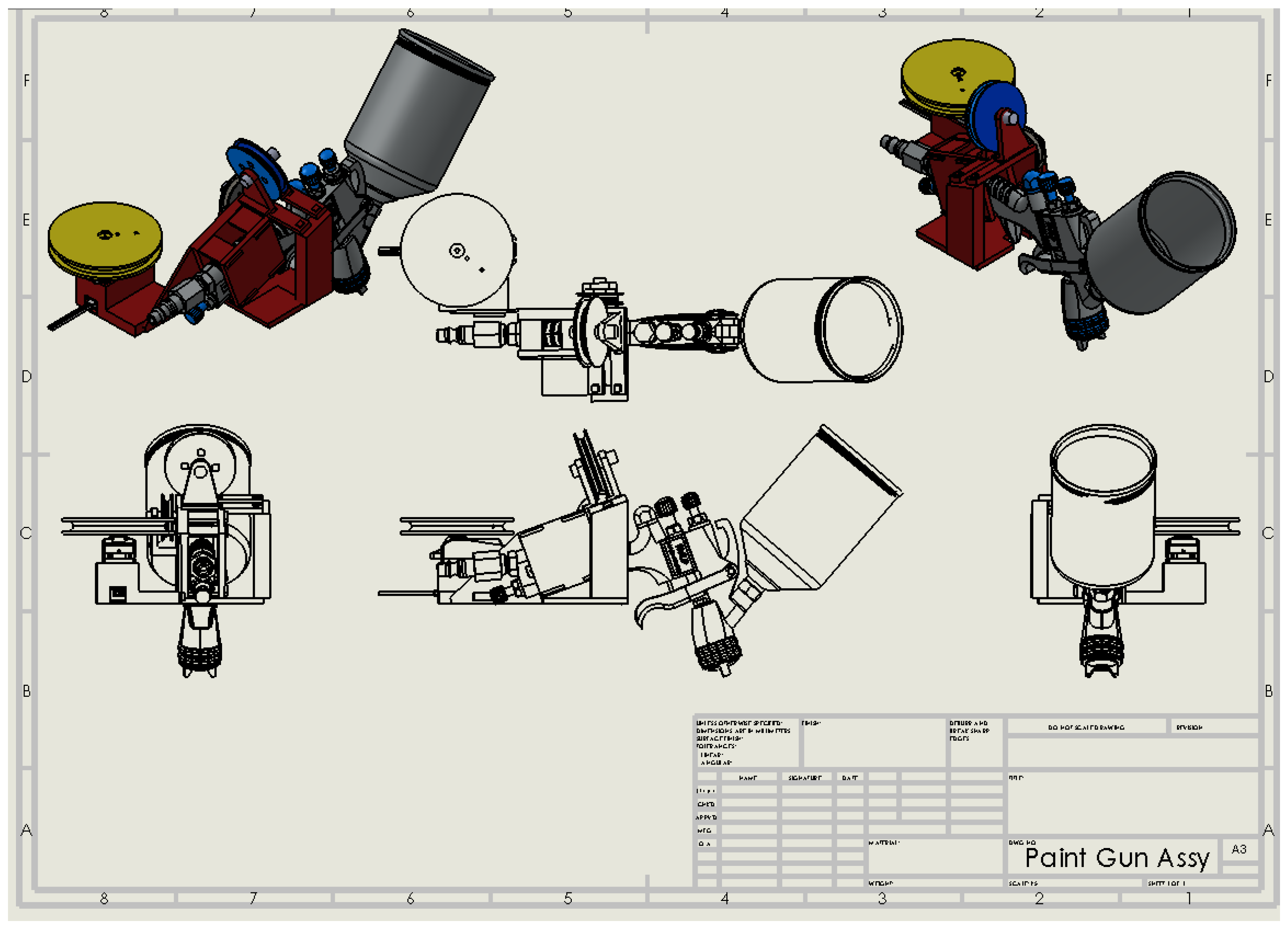

4.1.1. Mechanical System

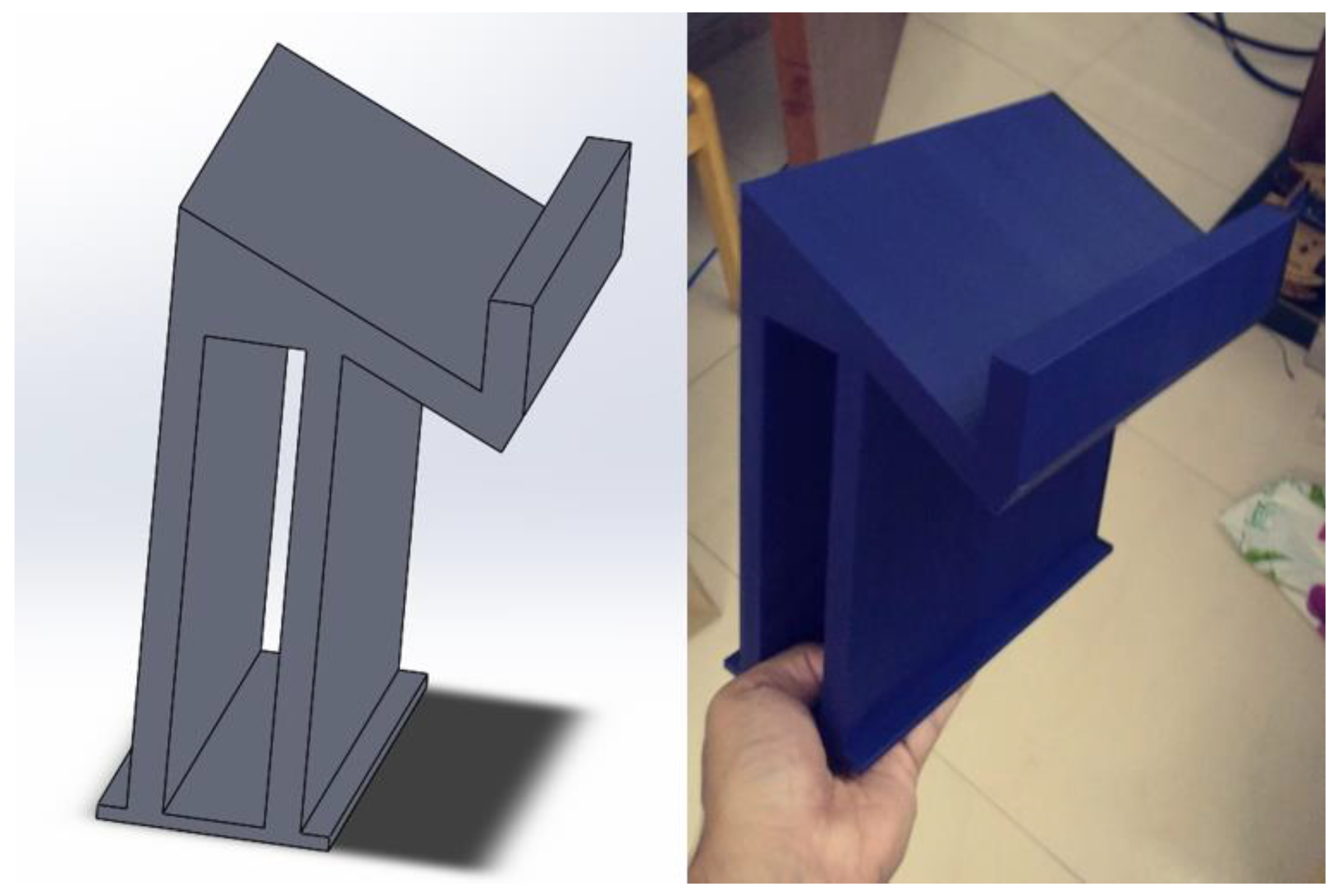

Chassis

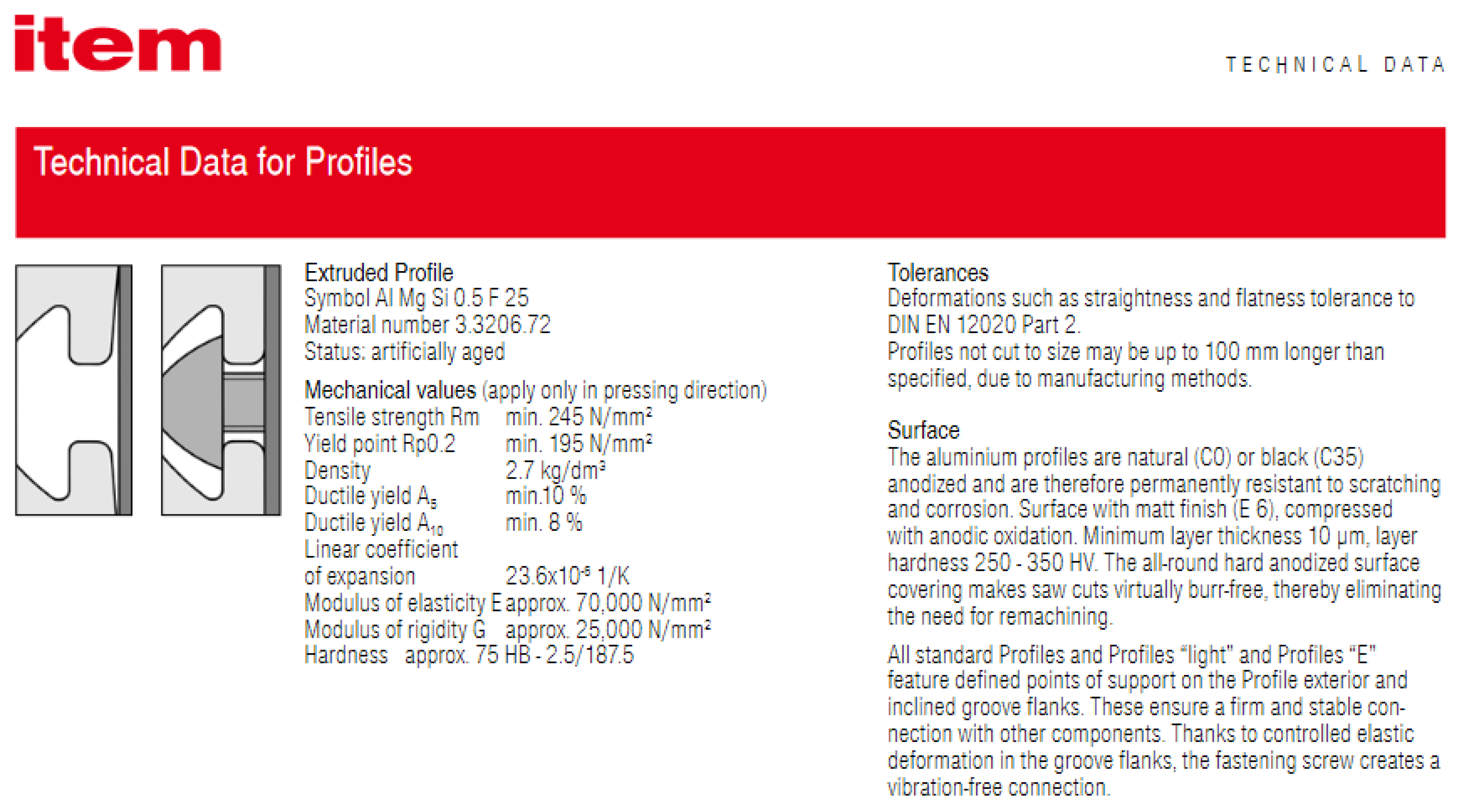

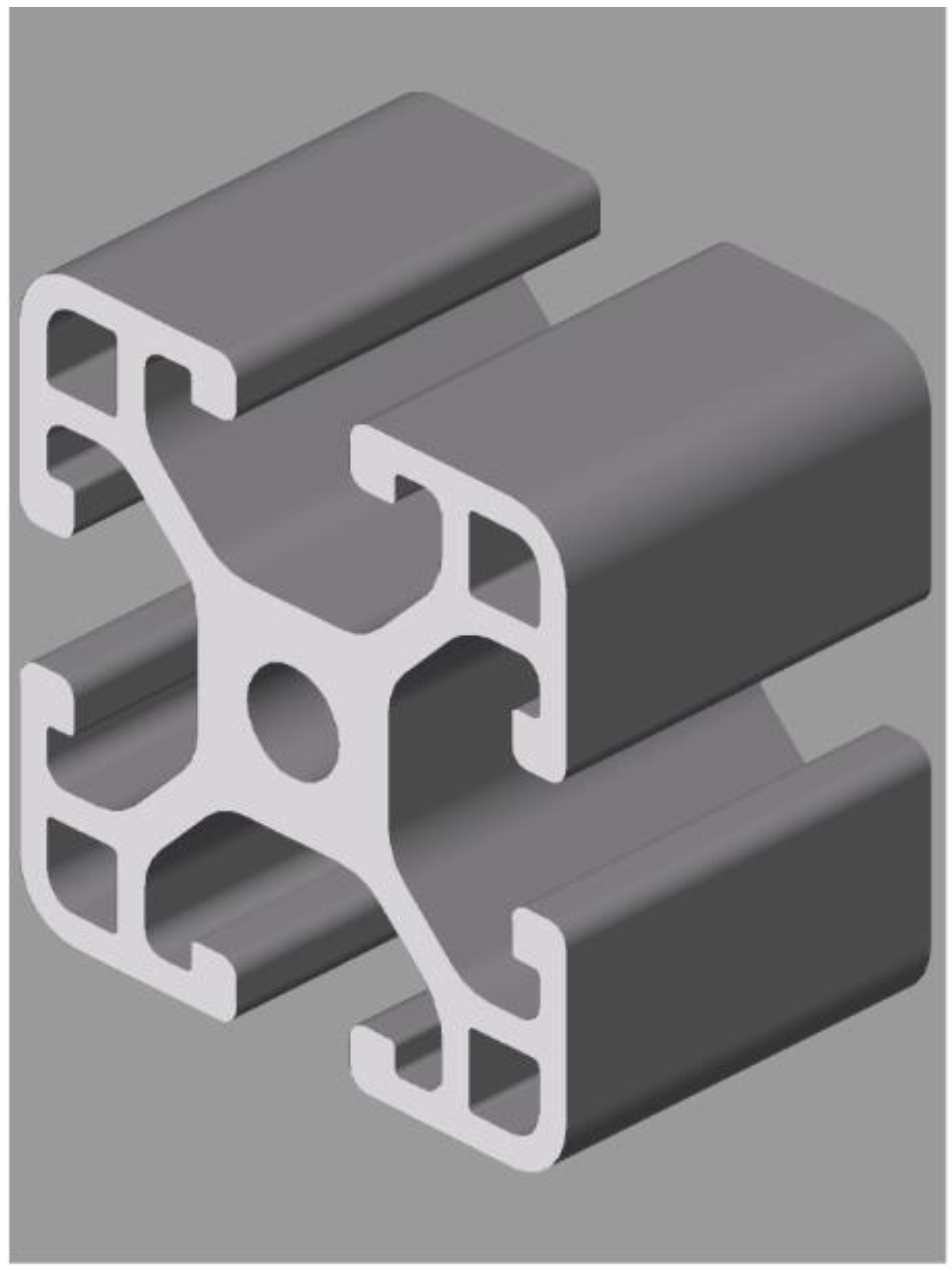

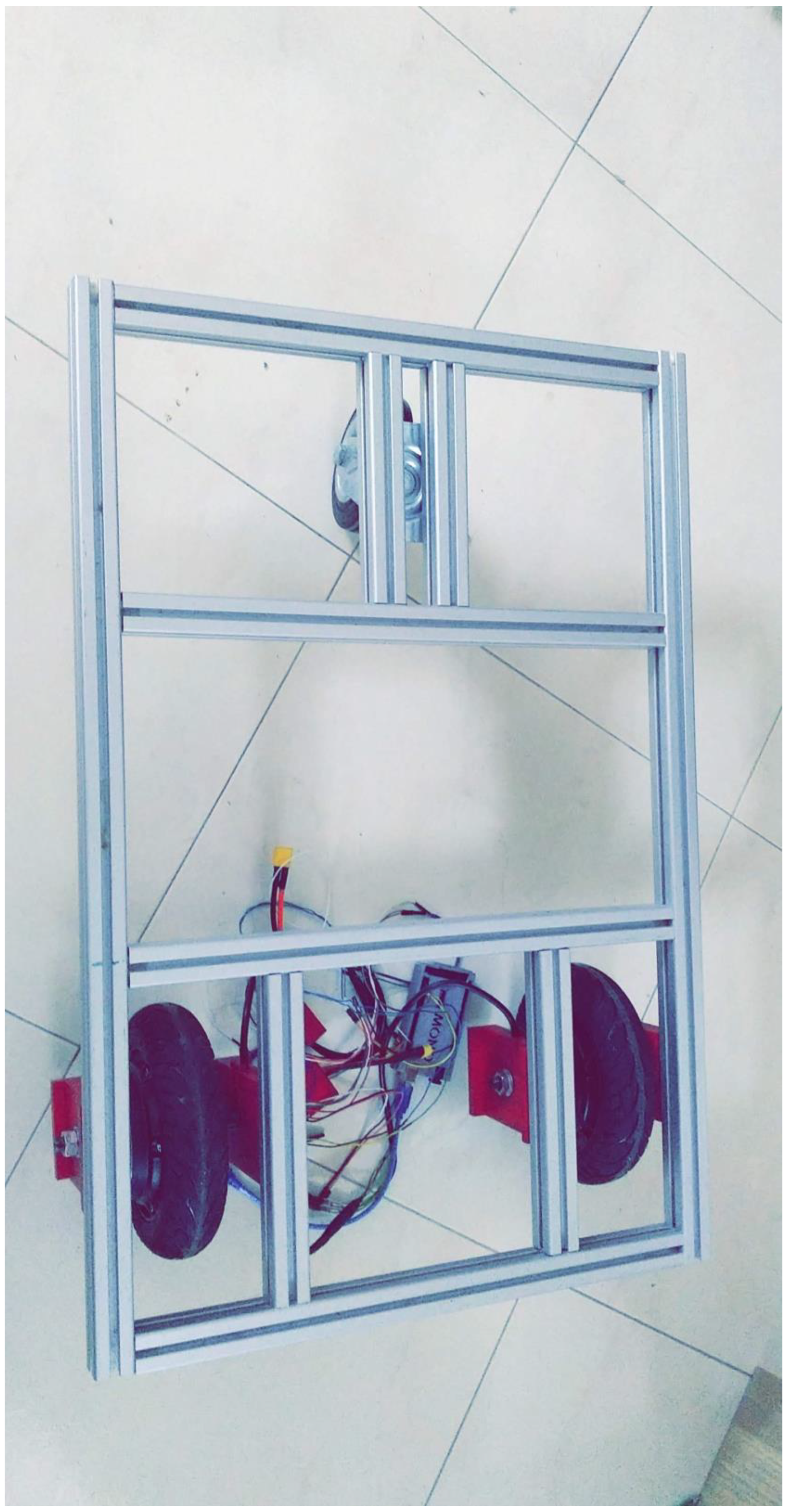

Frame

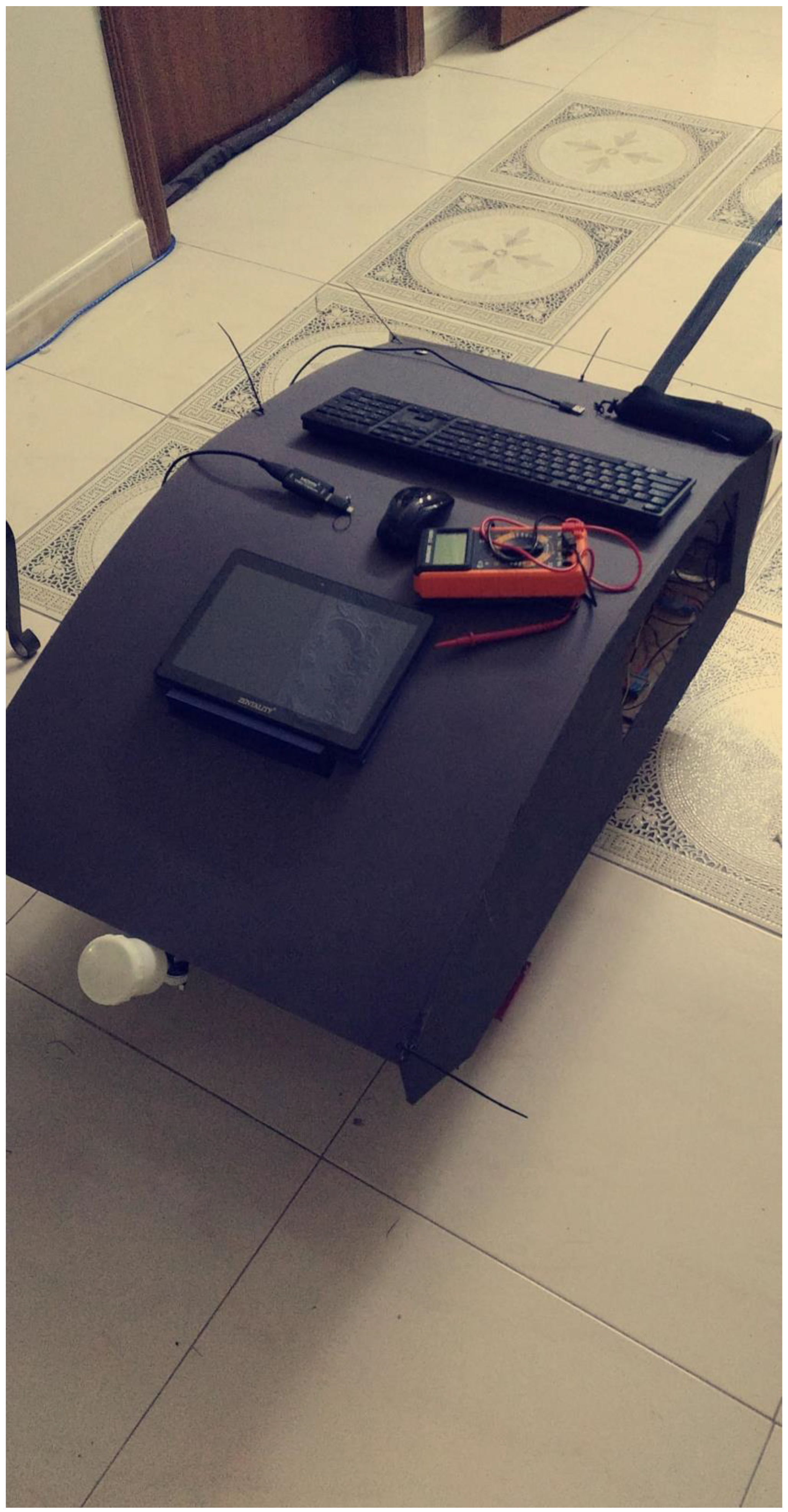

Cover

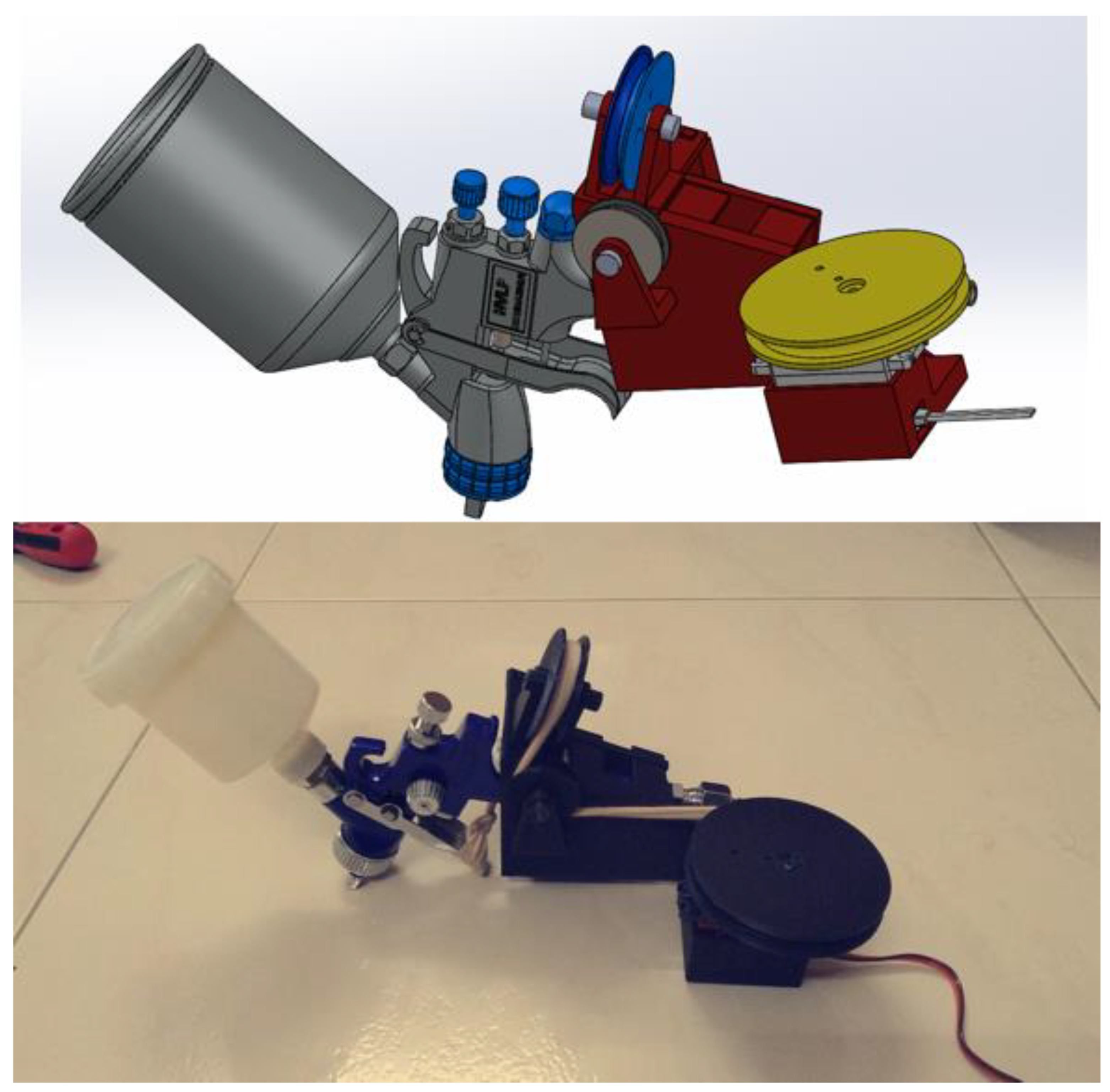

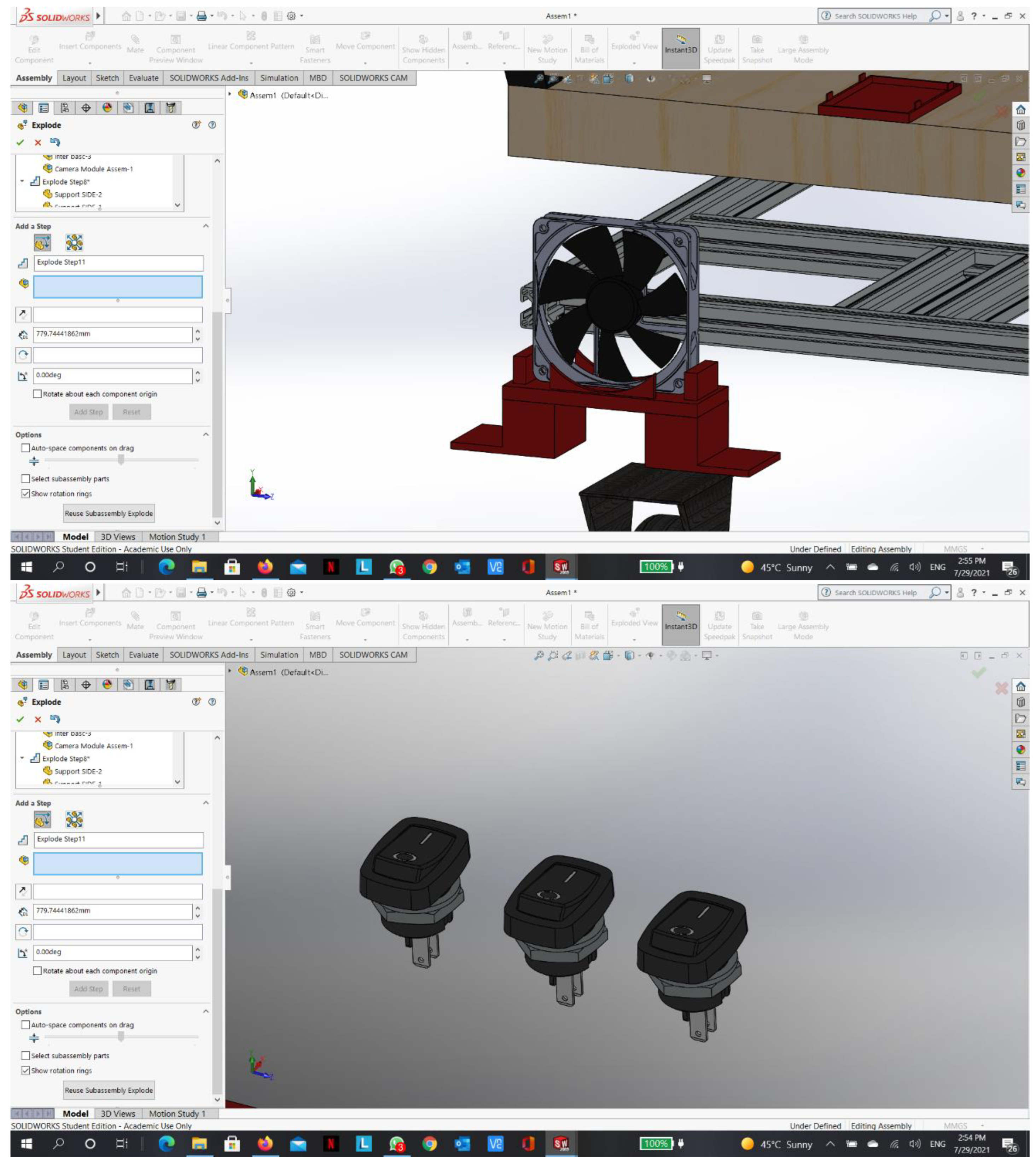

Painting Unit

Air Compressor

- Voltage: DC 12V-13.8V

- Amperage: 45A

- Max. Pressure: 150 PSI

- Airflow: 160 L/min

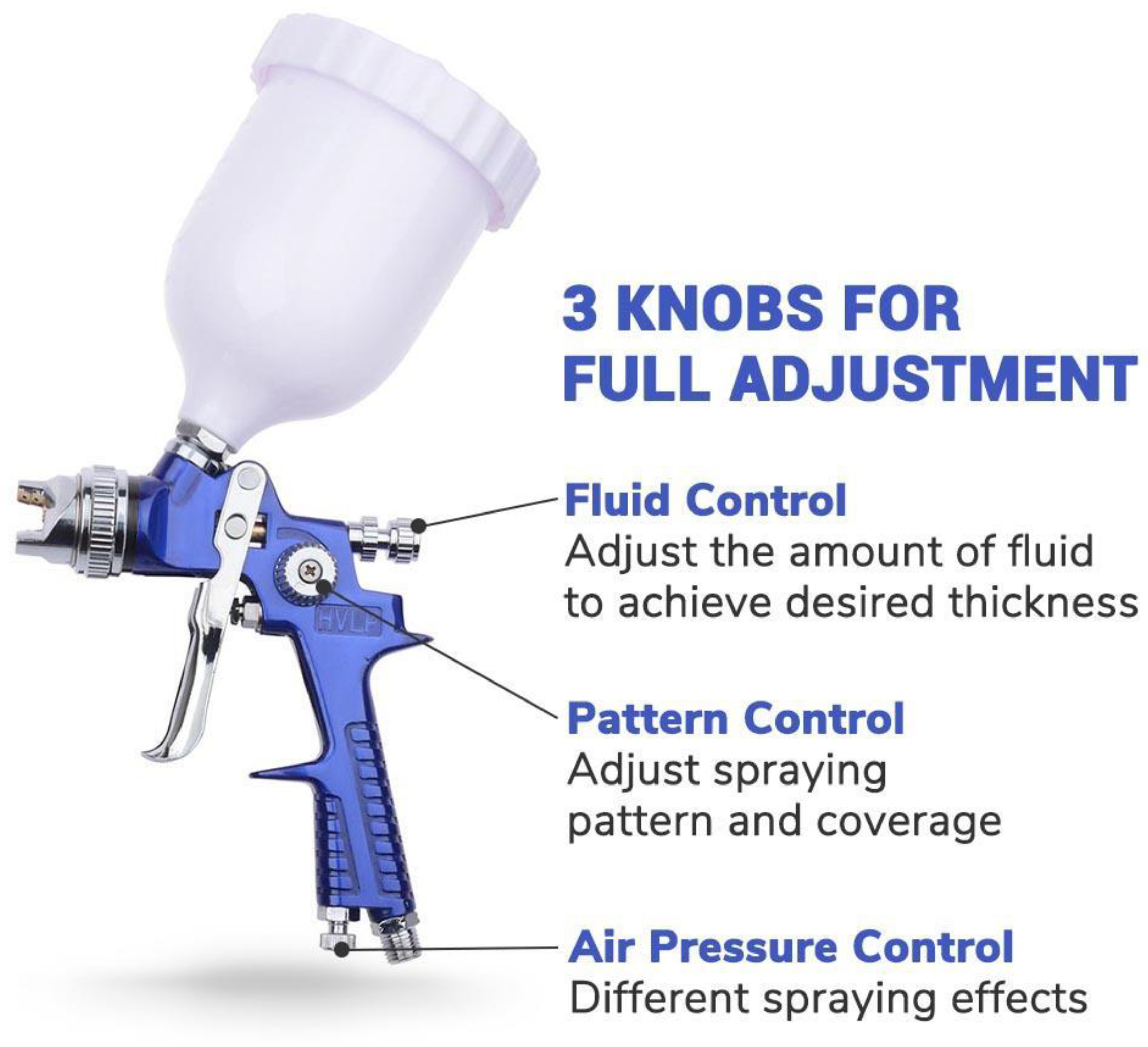

Paint Sprayer

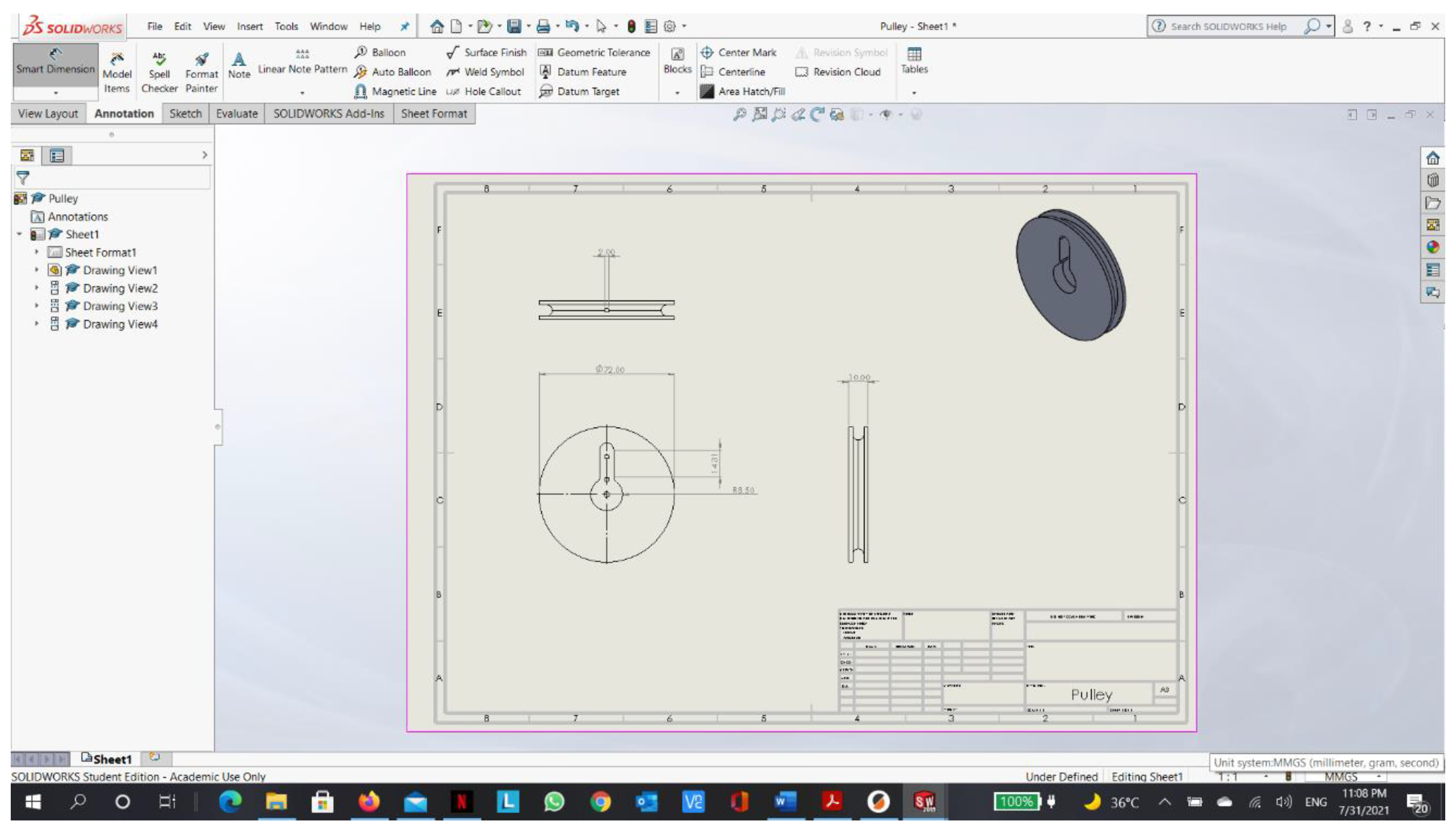

Pulley System

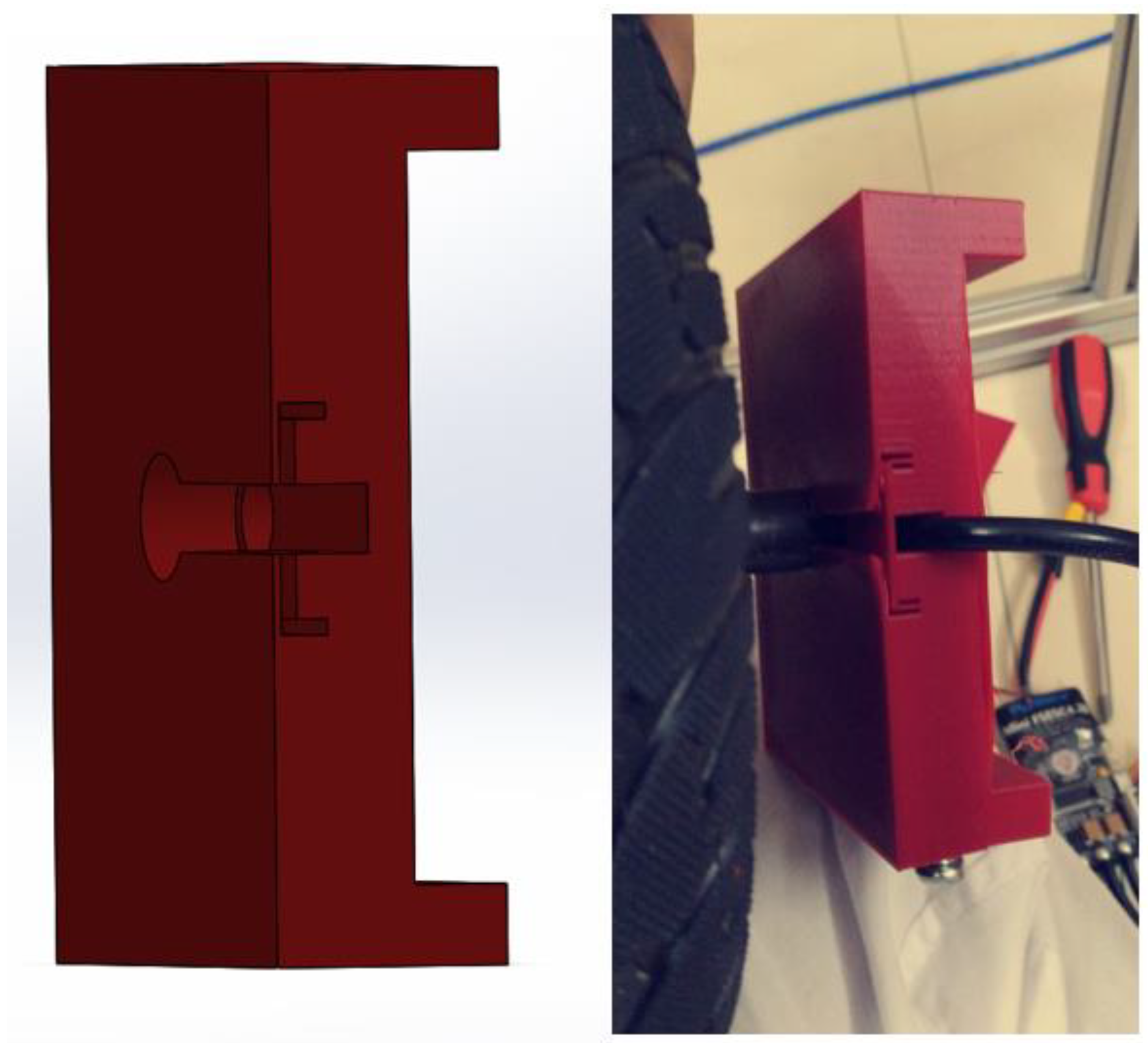

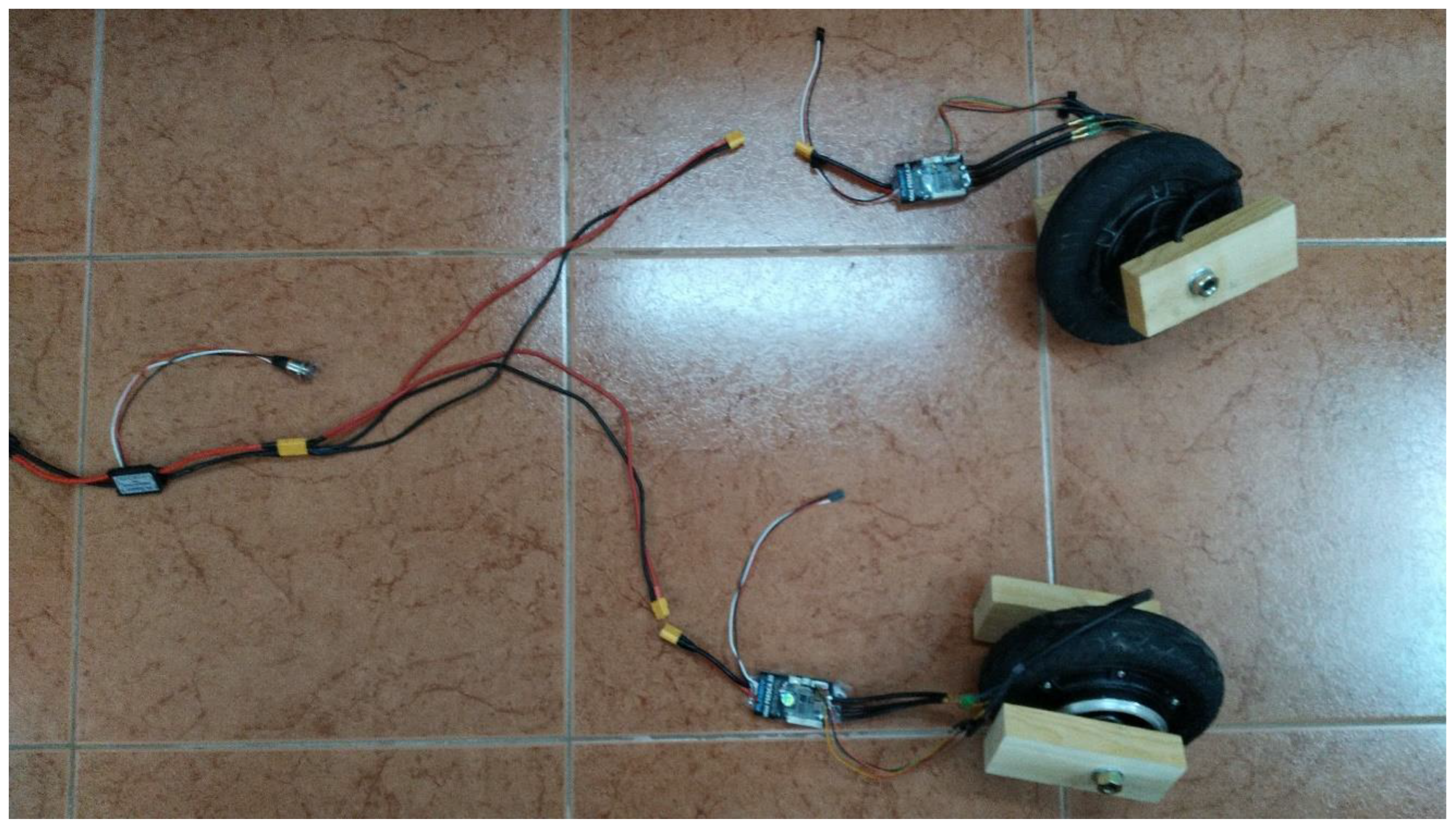

Driving Unit

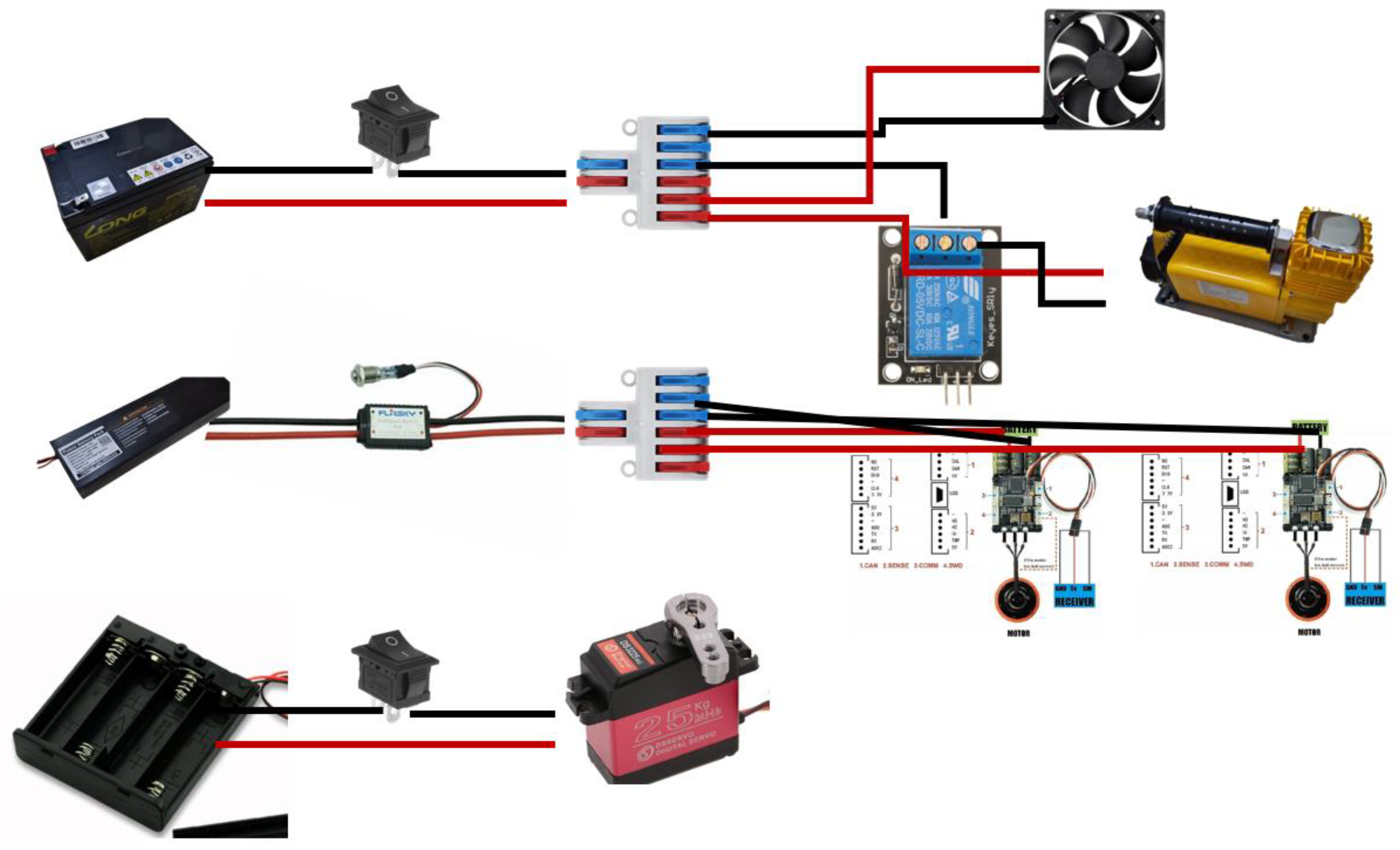

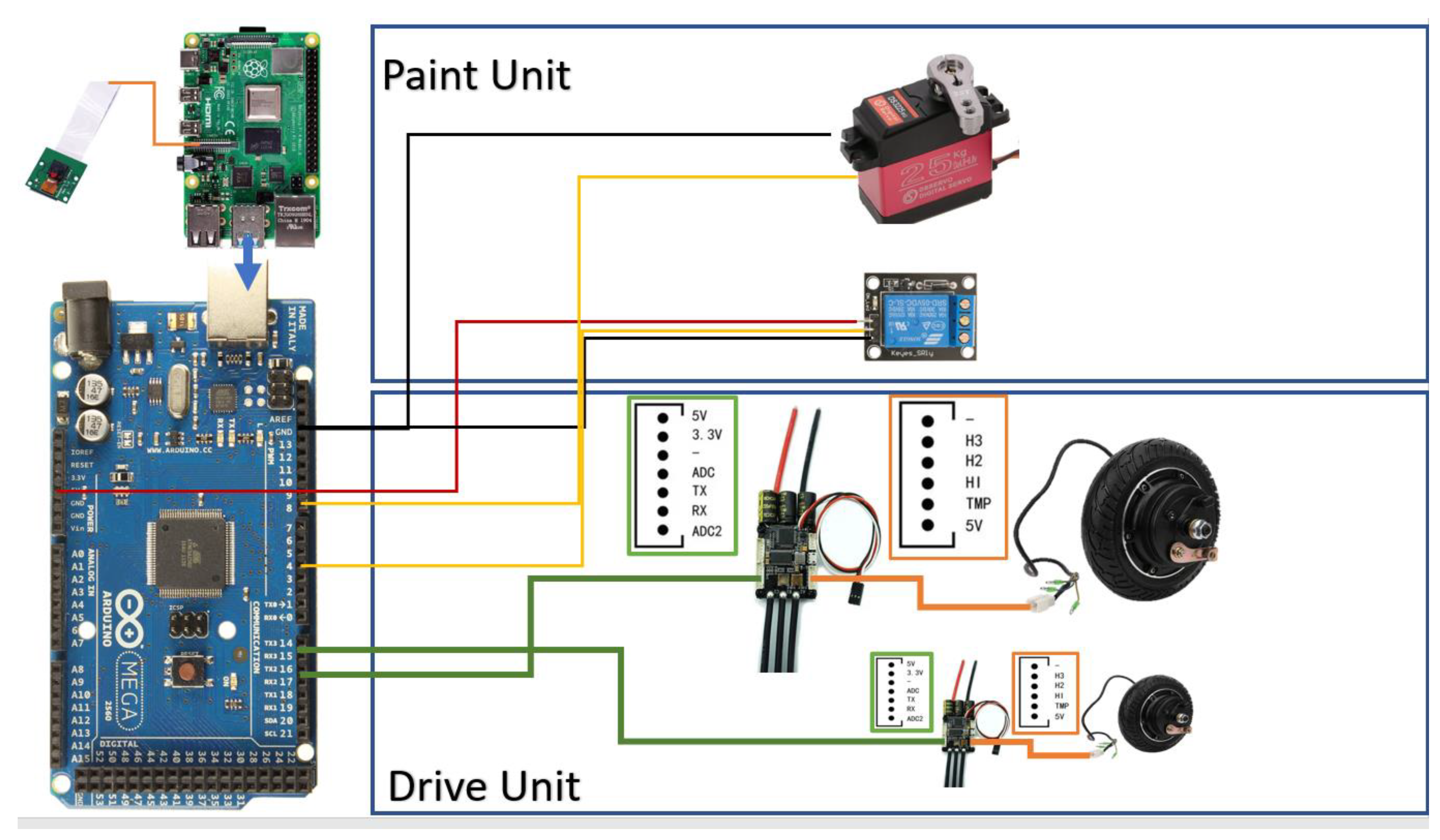

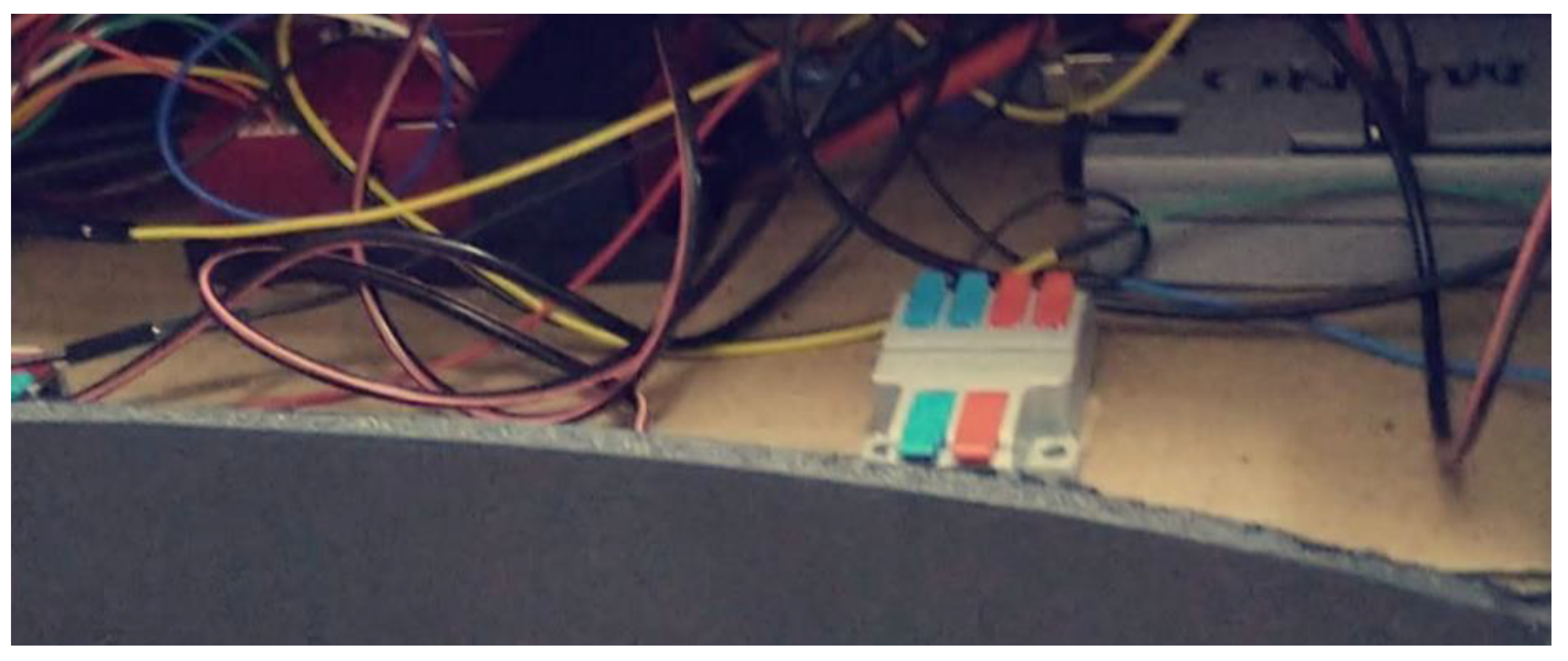

4.1.2. Electrical System

Power

Signal

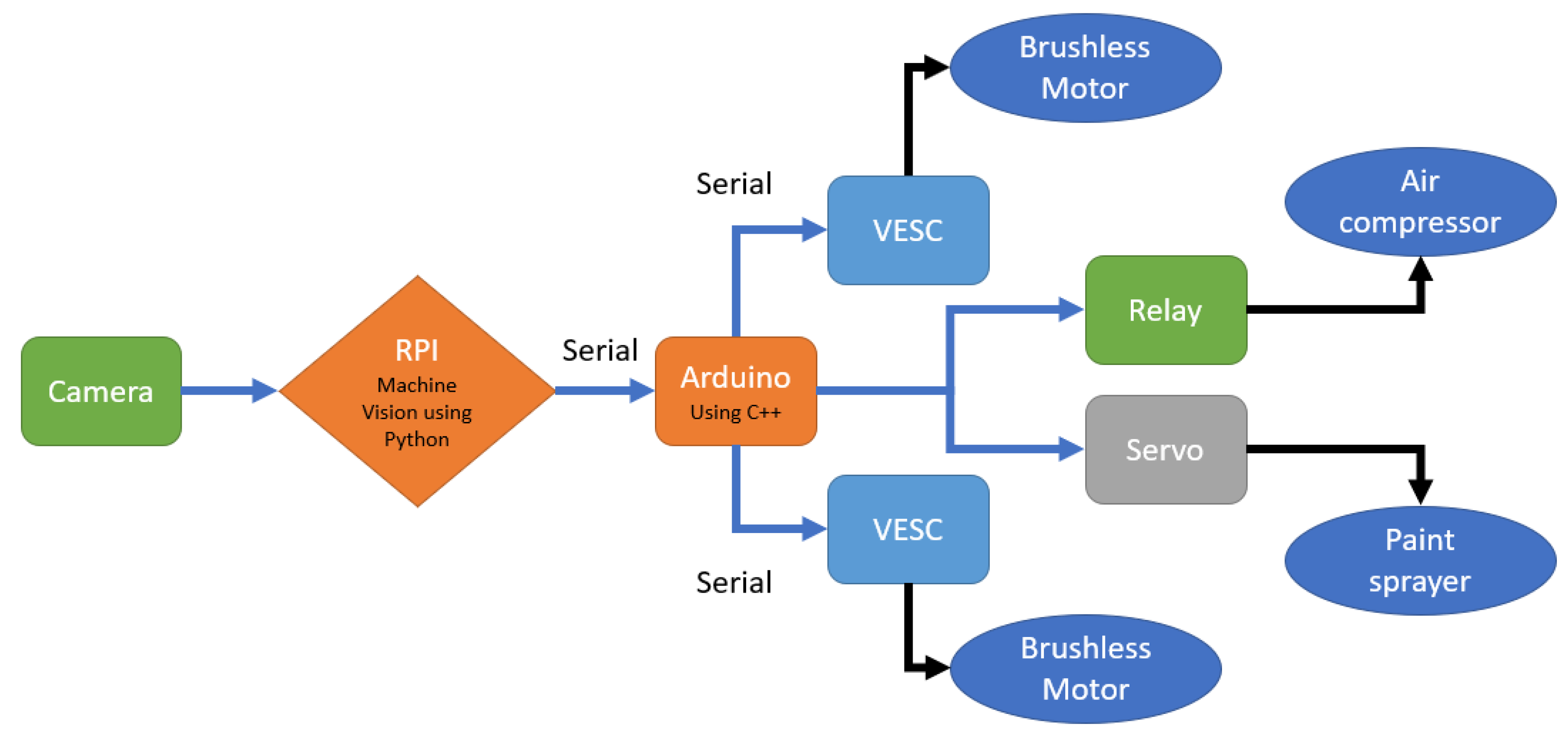

4.2. Software

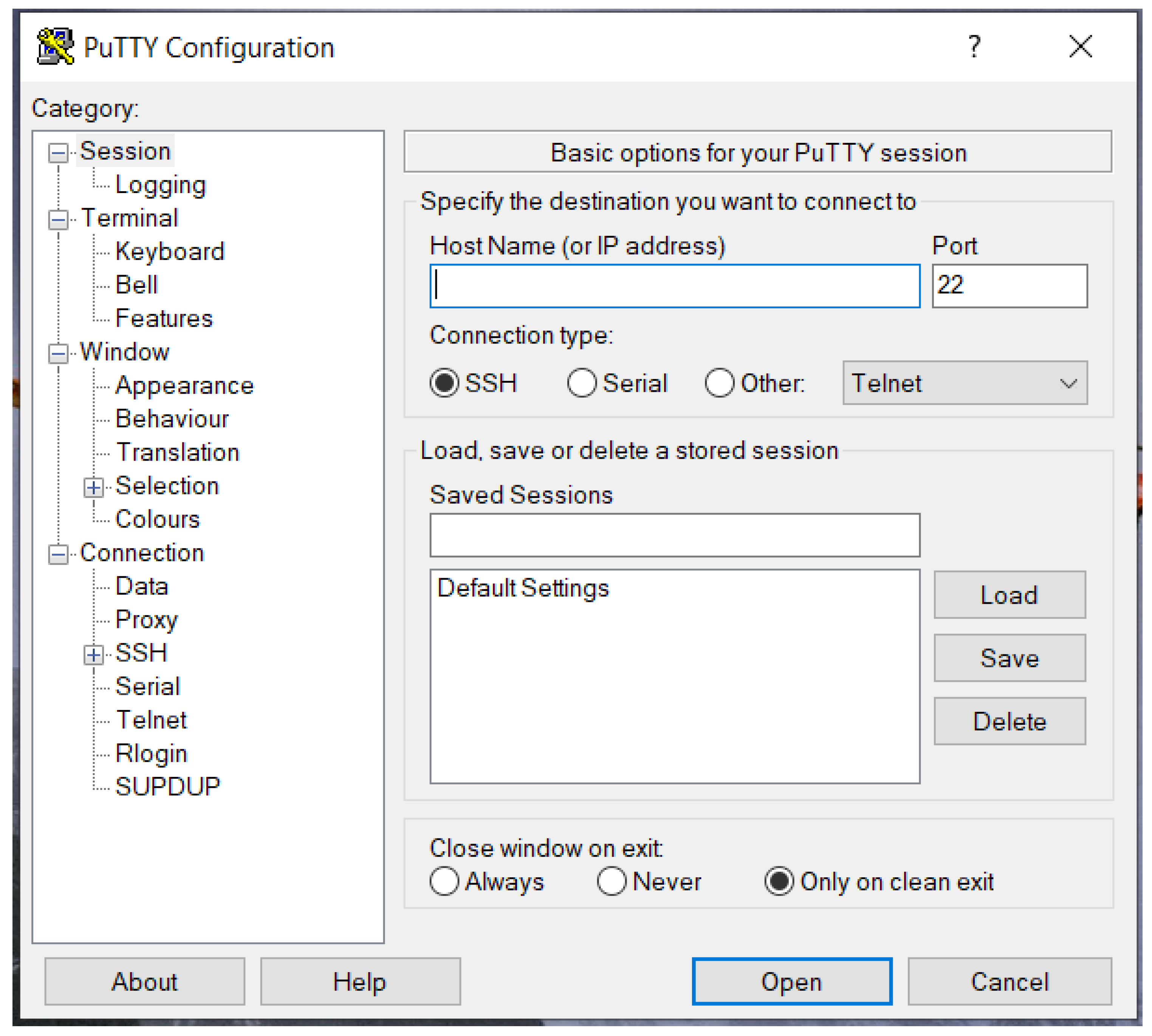

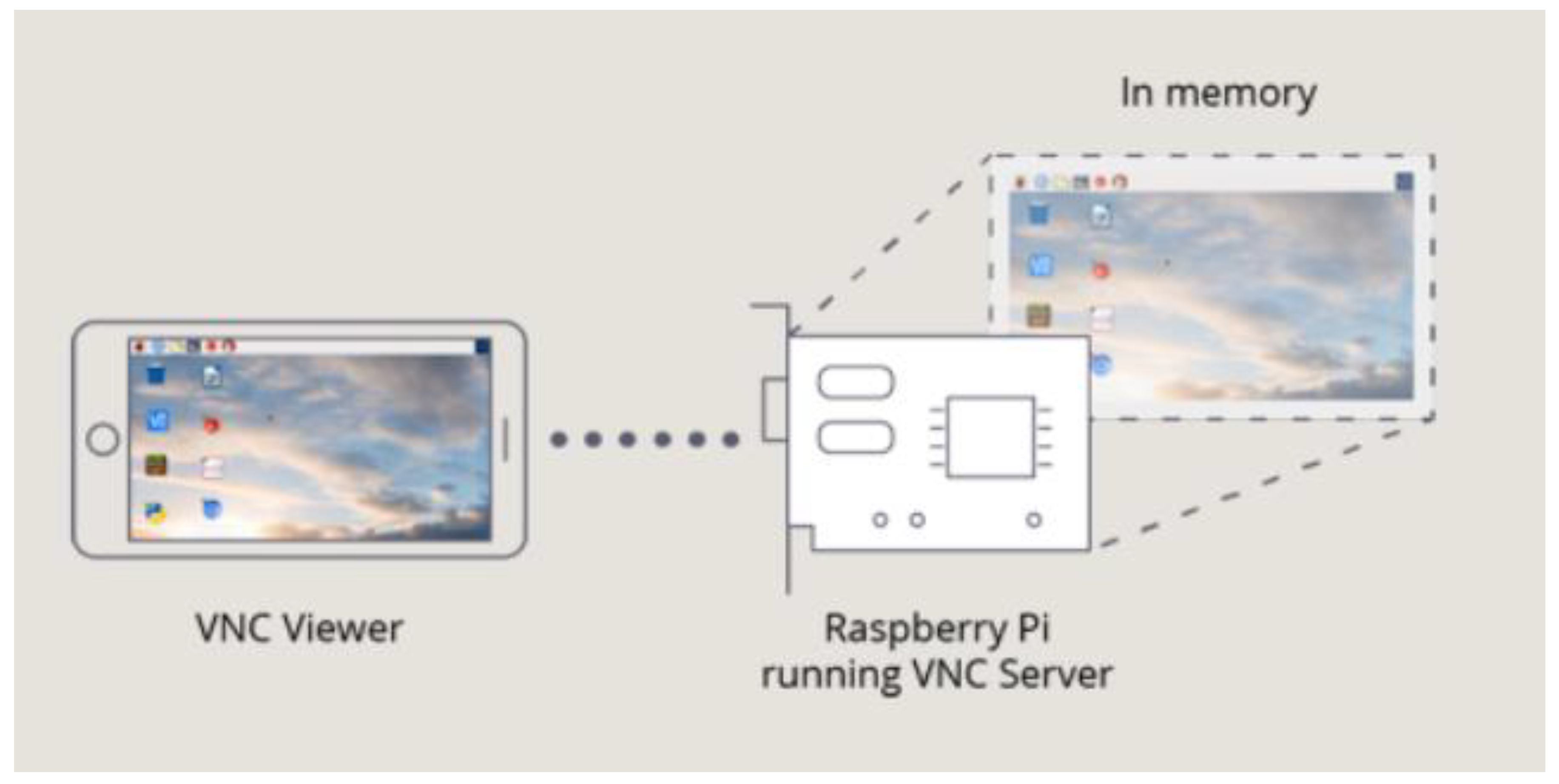

4.2.1. Communication Establishment between RPI and External Computer

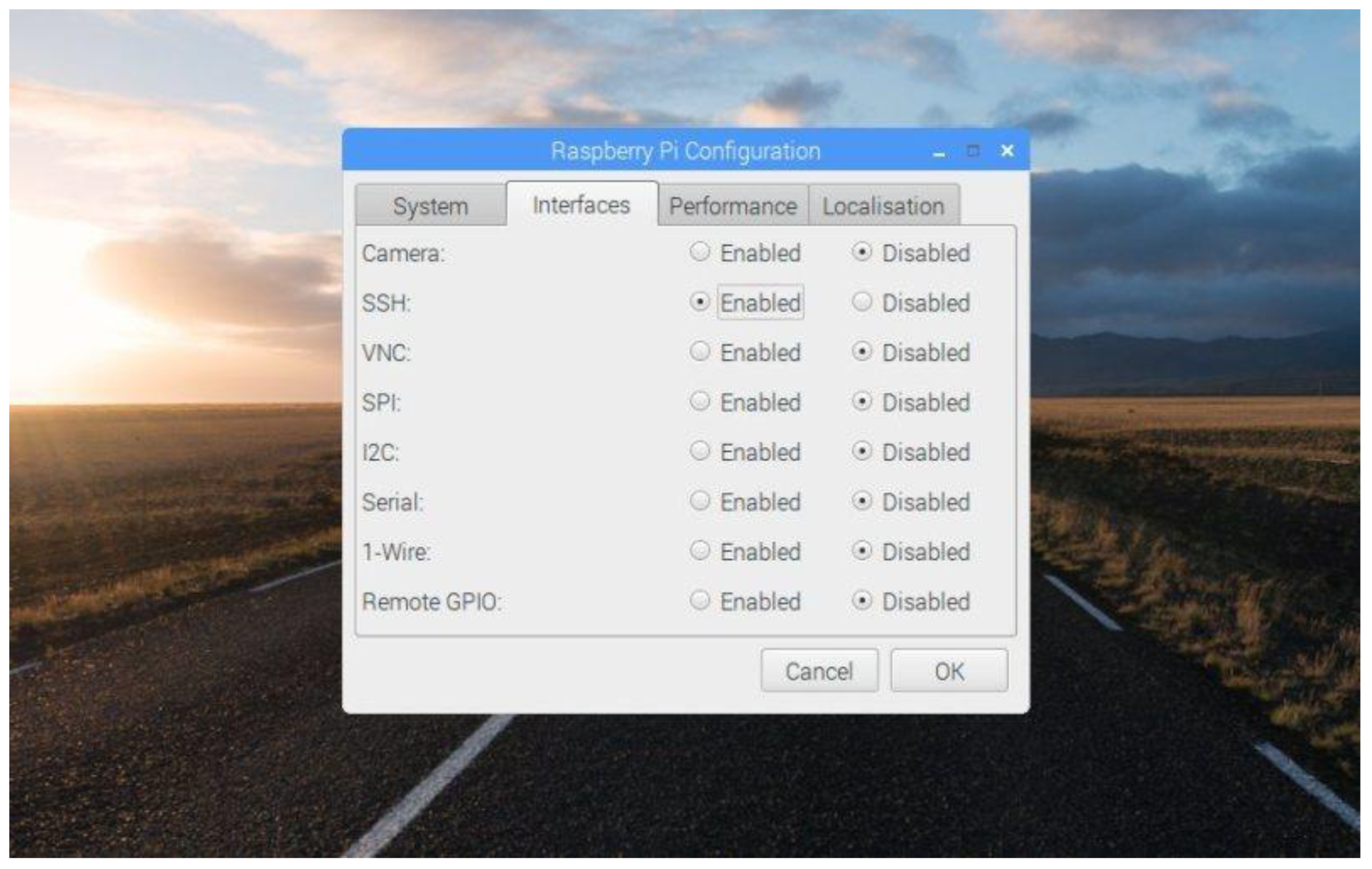

- Open the “Raspberry Pi Configuration” window from the “Preferences” menu.

- Click on the “Interfaces” tab.

- Select “Enable” next to the SSH row and VNC row.

- Click on the “OK” button for the changes to take effect.

- To connect to the Raspberry Pi from another machine using SSH or VNC, the Pi’s IP address is needed. Make sure the RPI is connected to the same network as the external computer, then type “hostname -I” in the terminal to display the IP address.
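The last step can also be scripted. The following is a minimal sketch, assuming it runs on the Raspberry Pi itself and that the login user is `pi` (an assumption, not stated in the text). The helper names `parse_hostname_output`, `first_ipv4`, `ssh_command`, and `read_hostname_output` are hypothetical and only illustrate how the output of `hostname -I` could be turned into the command typed on the external computer.

```python
import subprocess


def parse_hostname_output(output):
    """Split the space-separated output of `hostname -I` into a list of addresses."""
    return output.split()


def first_ipv4(addresses):
    """Return the first dotted-quad IPv4 address in the list, or None if there is none."""
    for addr in addresses:
        parts = addr.split(".")
        if len(parts) == 4 and all(p.isdigit() and int(p) <= 255 for p in parts):
            return addr
    return None


def ssh_command(ip, user="pi"):
    """Build the SSH command to run on the external computer (user name is assumed)."""
    return f"ssh {user}@{ip}"


def read_hostname_output():
    """Run `hostname -I` on the Pi and return its raw text output."""
    result = subprocess.run(
        ["hostname", "-I"], capture_output=True, text=True, check=True
    )
    return result.stdout
```

On the Pi, `ssh_command(first_ipv4(parse_hostname_output(read_hostname_output())))` would give the command to type on the external computer, provided an IPv4 address was assigned.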

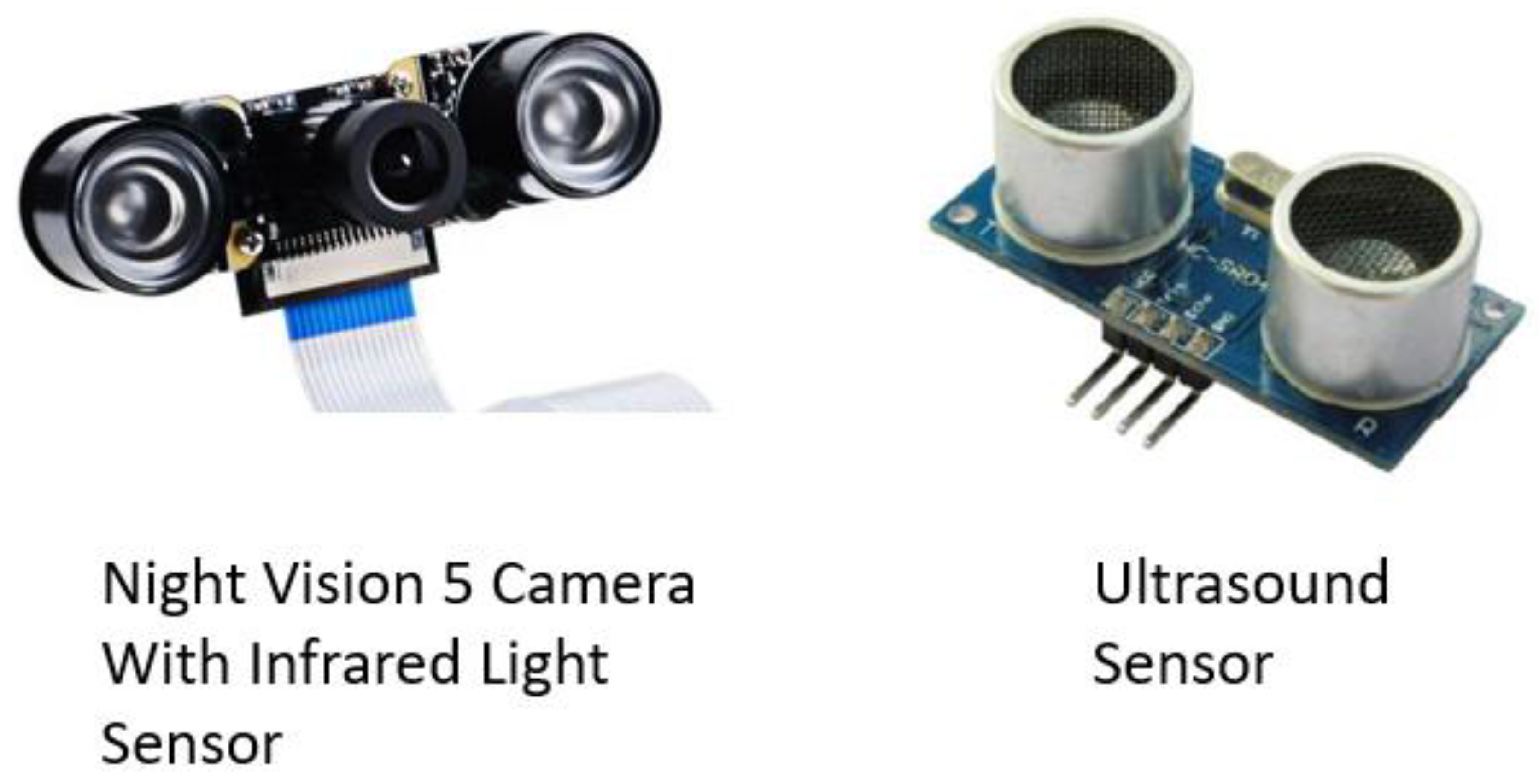

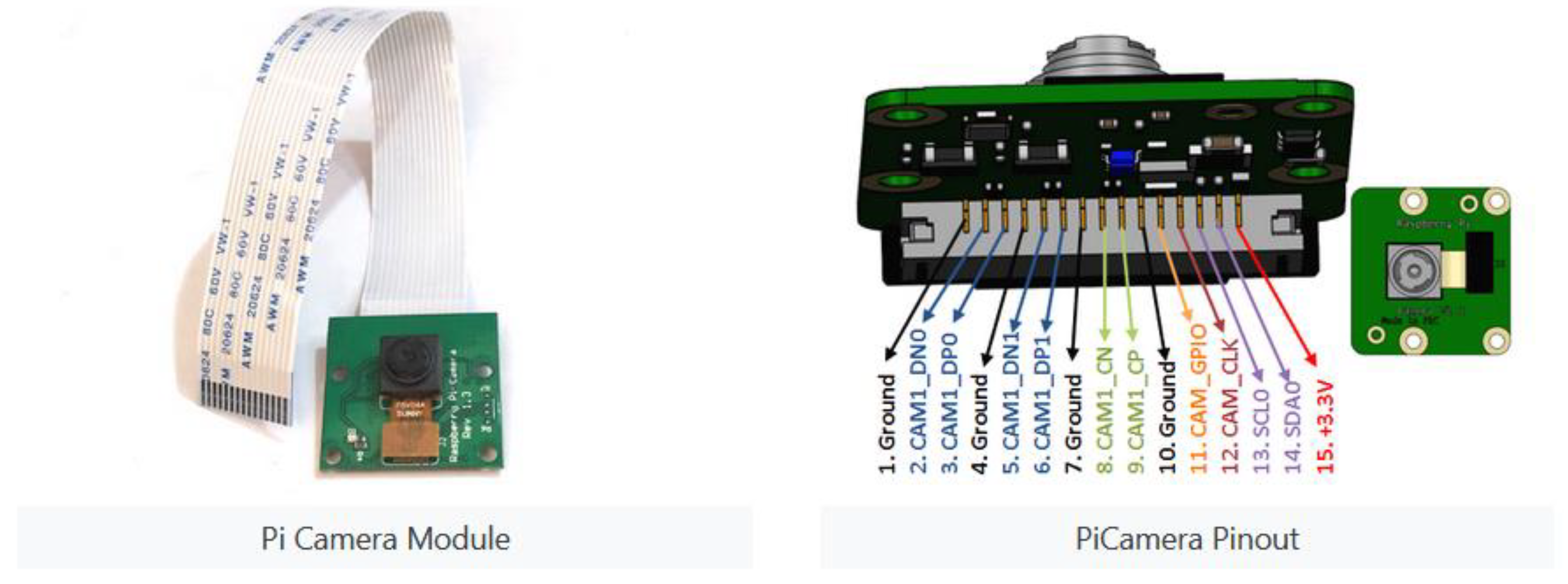

4.2.2. Communication Establishment between RPI and RPI Camera

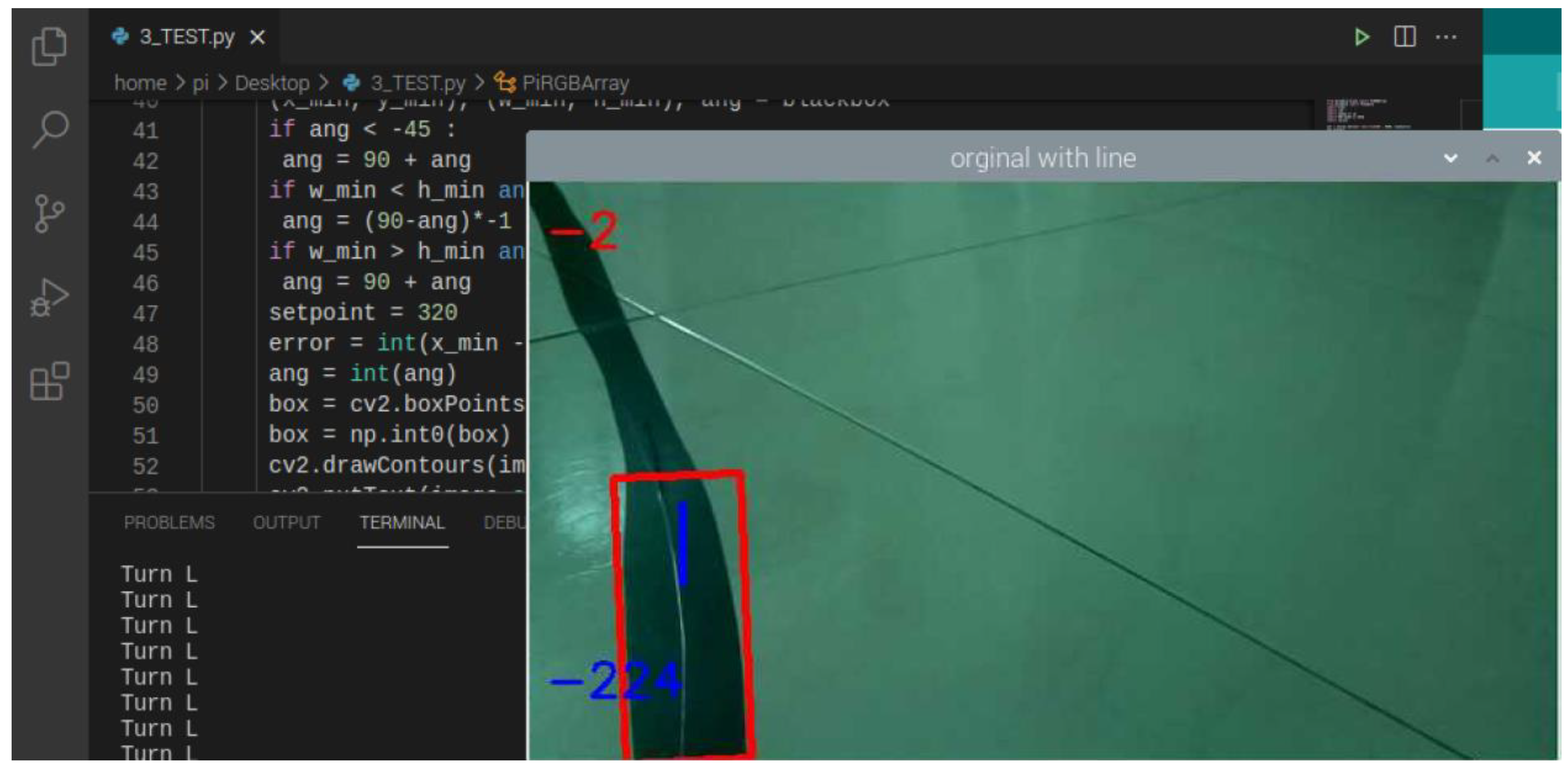

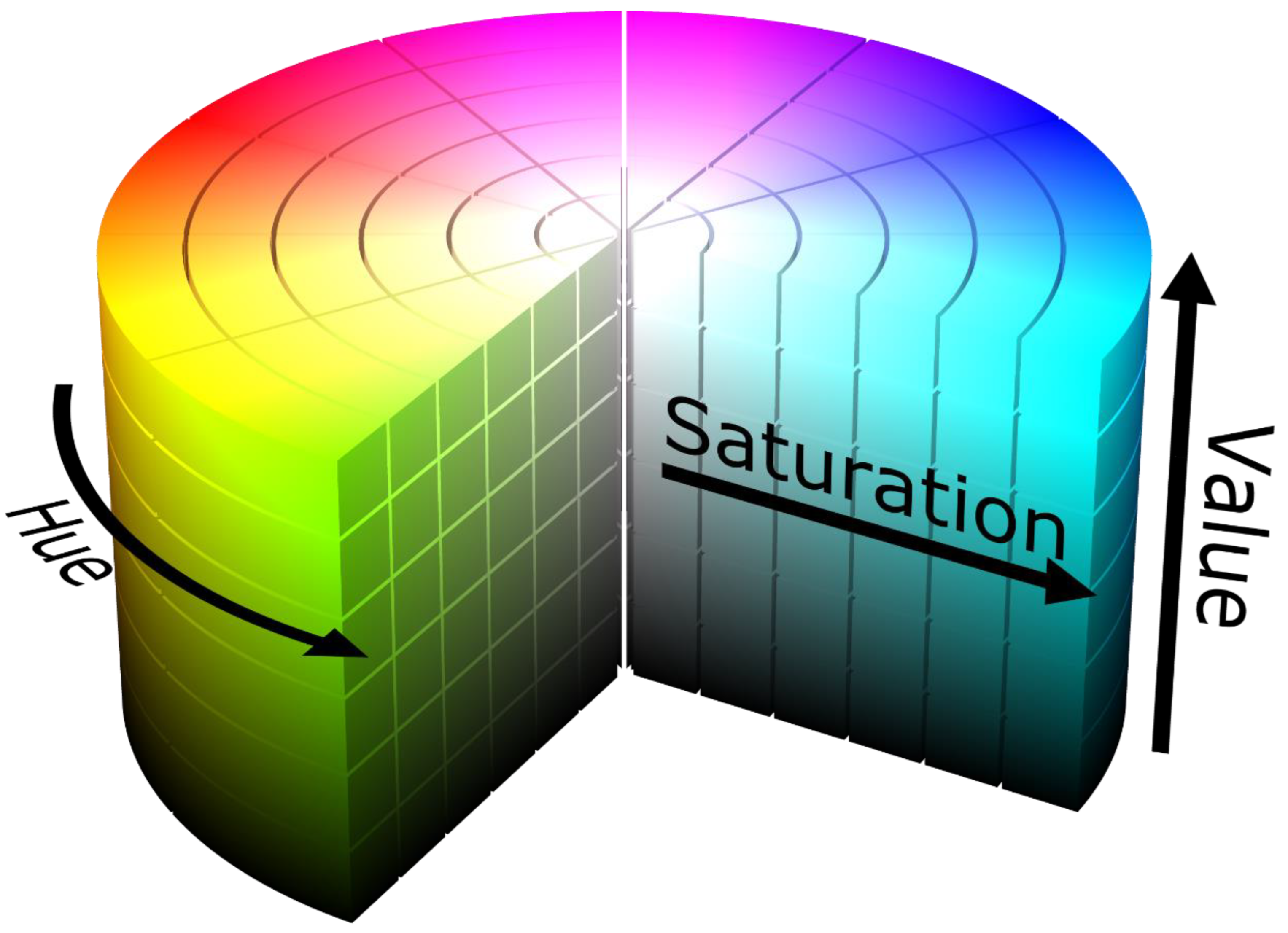

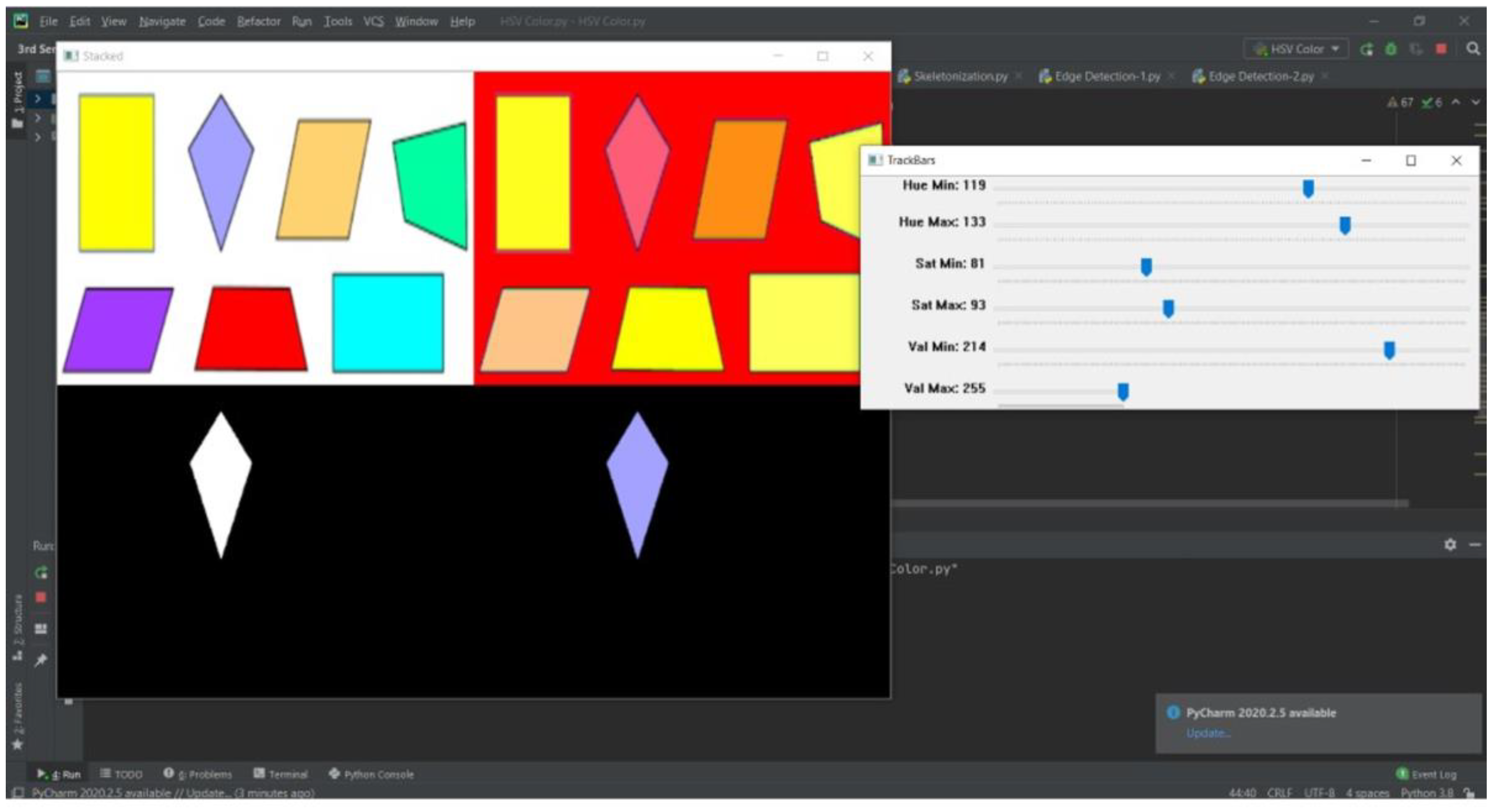

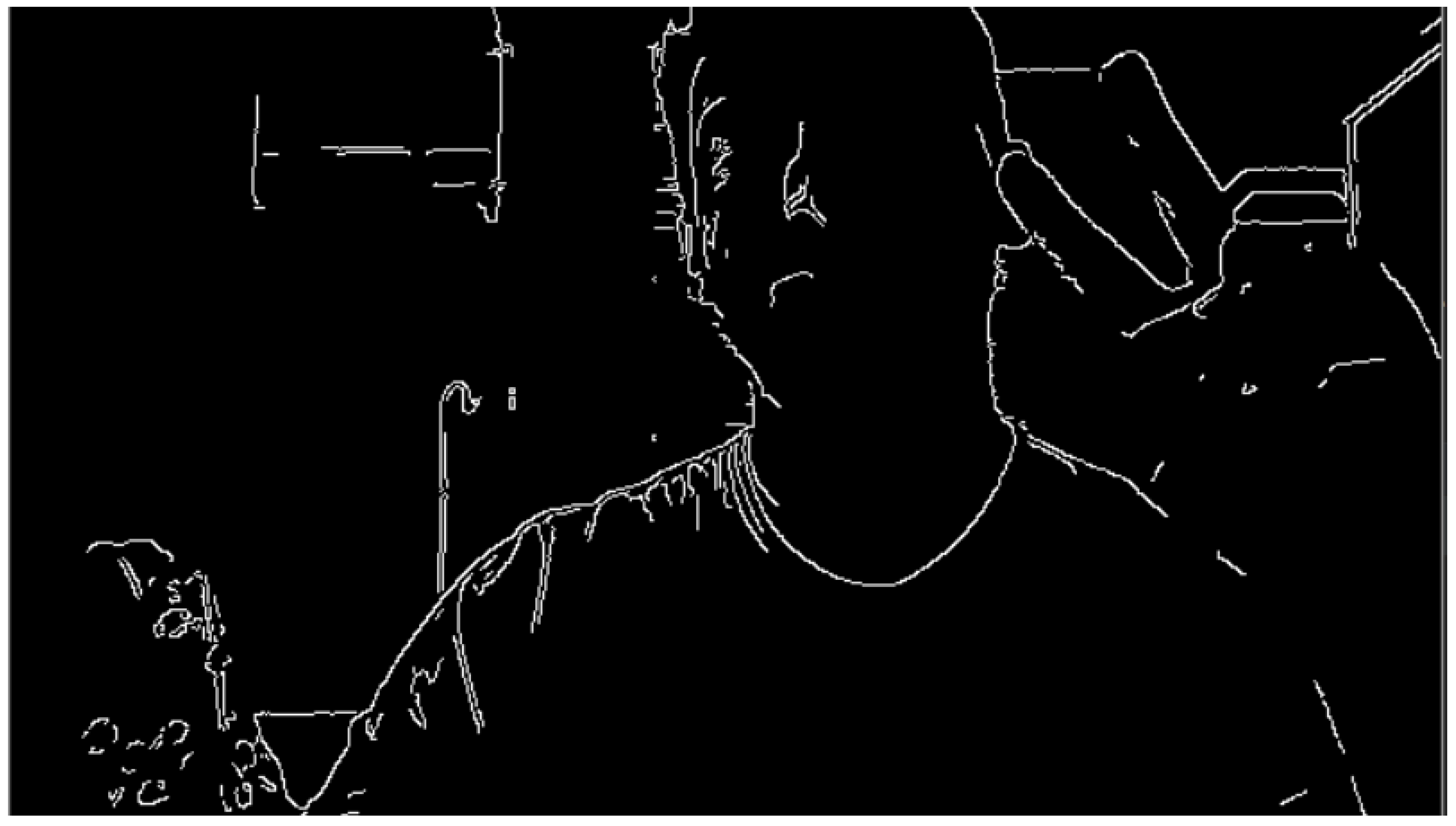

4.2.3. Machine Vision Code for Line Following and Line Degradation State Recognition

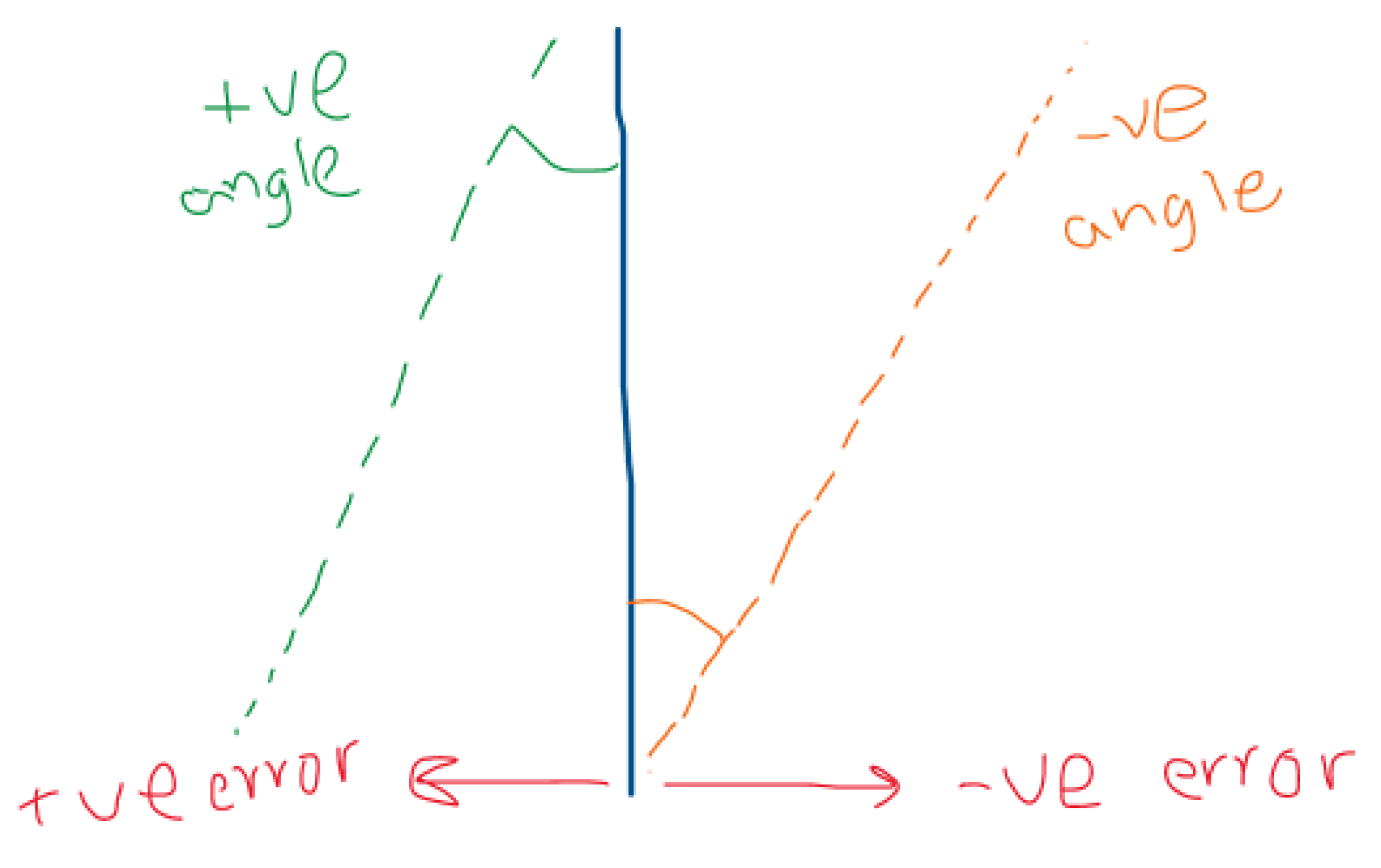

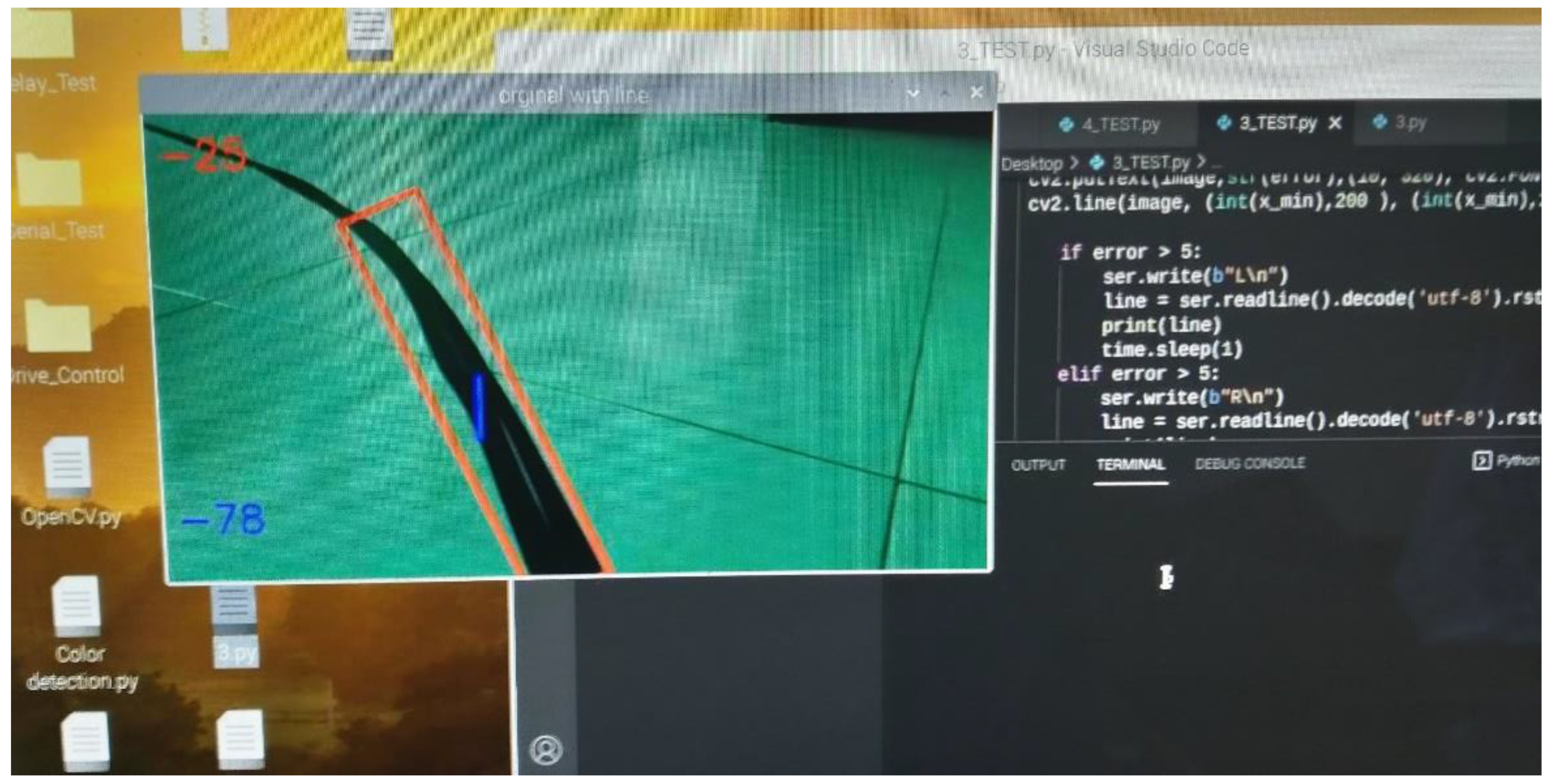

- Is the robot to the right or left of the line, and how far is it from the line?

- Is the robot moving in parallel to the line or angled towards/from the line?

- Is the line in good condition, or does it need repainting?

- Recognizing the line

- Drawing a contour around the line

- Warping the line

- Drawing a centerline along the line
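To make the three questions above concrete, here is a minimal pure-Python sketch of how a lateral offset, an angle, and a line-condition statistic could be derived once the line has been segmented. It is not the project's OpenCV code: the binary `mask` and grayscale `gray` grids, the least-squares centerline fit, and all function names are assumptions chosen for illustration.

```python
import math


def row_centers(mask):
    """Return (row, mean column) for every image row that contains line pixels."""
    centers = []
    for r, row in enumerate(mask):
        cols = [c for c, v in enumerate(row) if v]
        if cols:
            centers.append((r, sum(cols) / len(cols)))
    return centers


def fit_centerline(centers):
    """Least-squares fit of col = slope * row + intercept through the row centers."""
    n = len(centers)
    if n < 2:
        raise ValueError("need line pixels in at least two rows")
    mean_r = sum(r for r, _ in centers) / n
    mean_c = sum(c for _, c in centers) / n
    var_r = sum((r - mean_r) ** 2 for r, _ in centers)
    cov = sum((r - mean_r) * (c - mean_c) for r, c in centers)
    slope = cov / var_r
    return slope, mean_c - slope * mean_r


def line_errors(mask):
    """Return (offset in pixels, angle in degrees) of the fitted centerline.

    Offset is measured at the bottom image row relative to the image center;
    it is positive when the line lies to the right of center. The angle is
    measured from the image's vertical axis; zero means the line is parallel
    to the direction of view.
    """
    height, width = len(mask), len(mask[0])
    slope, intercept = fit_centerline(row_centers(mask))
    bottom_col = slope * (height - 1) + intercept
    offset = bottom_col - (width - 1) / 2
    angle = math.degrees(math.atan(slope))
    return offset, angle


def intensity_stats(gray, mask):
    """Mean and population standard deviation of gray values under the mask."""
    values = [gray[r][c] for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not values:
        raise ValueError("mask contains no line pixels")
    mean = sum(values) / len(values)
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return mean, sd
```

Under this sketch, a uniformly painted line would give a low standard deviation and a worn line a higher one. Any threshold separating the two cases would have to be calibrated experimentally and is not specified here.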

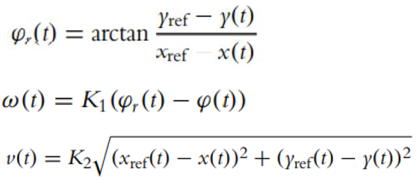

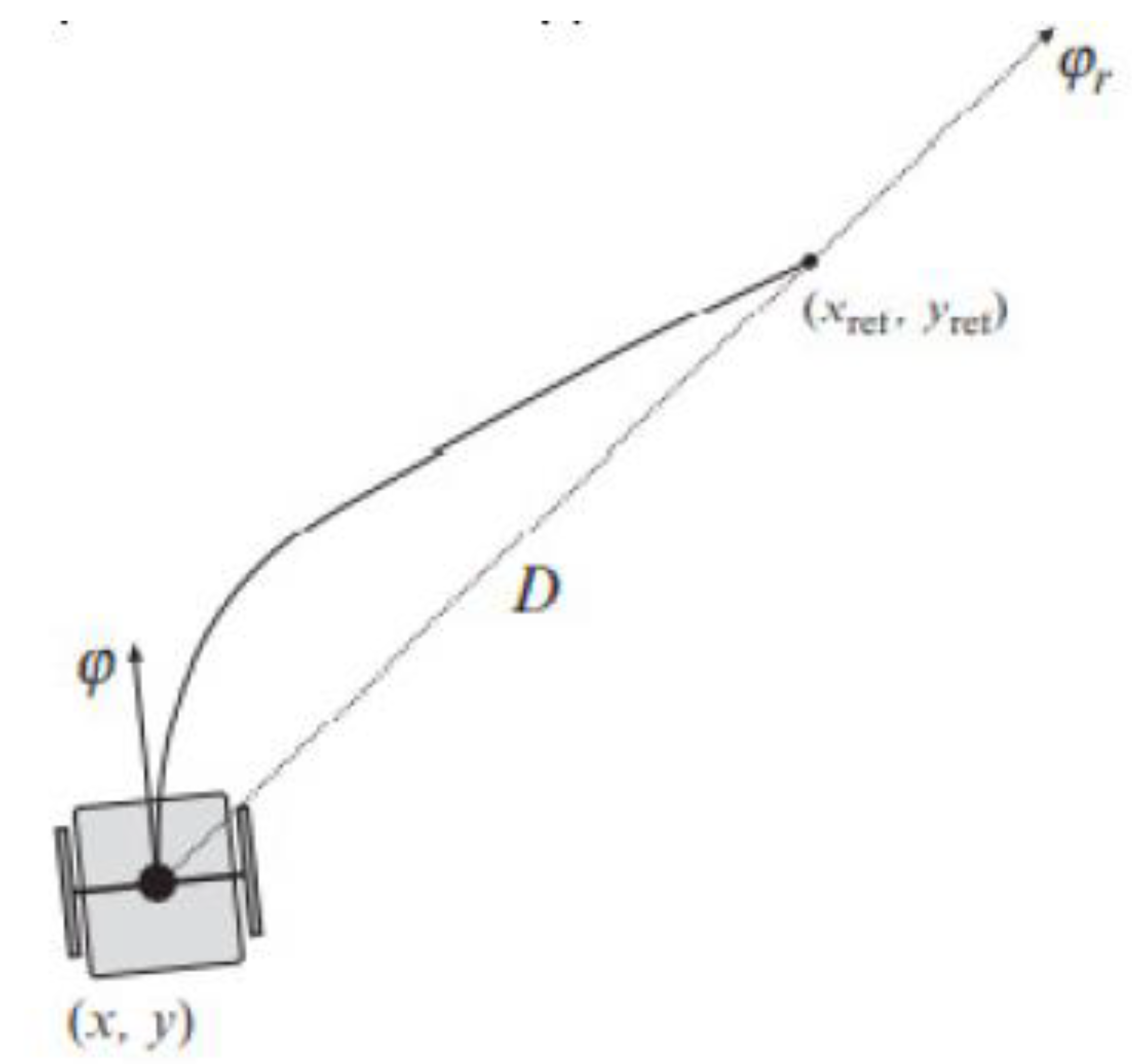

4.2.4. Machine Path Planning Code for Line Following Robot

- Stability

- Minimum steering and deviation

- Low speed to control the painting
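One simple way to meet these three goals on a differential-drive platform is a clamped proportional controller. The sketch below is an assumption for illustration, not the controller used in the project: the gains, the base speed, the speed limits, and the sign convention (positive error means the line is to the right) are all hypothetical values.

```python
def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def wheel_speeds(offset_error, angle_error,
                 base_speed=0.2, k_offset=0.002, k_angle=0.01, max_speed=0.4):
    """Return (left, right) wheel speed commands for a differential drive.

    A low base_speed keeps the painting controllable, and clamping the
    steering correction to +/- base_speed limits how sharply the robot turns.
    Positive errors speed up the left wheel, which steers the robot right.
    """
    correction = k_offset * offset_error + k_angle * angle_error
    correction = clamp(correction, -base_speed, base_speed)
    left = clamp(base_speed + correction, 0.0, max_speed)
    right = clamp(base_speed - correction, 0.0, max_speed)
    return left, right
```

With zero error both wheels run at the base speed. A large error saturates the correction, so one wheel stops and the other runs at twice the base speed rather than reversing.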

4.2.5. Communication Establishment between RPI and Arduino

- Sending a string type of message:

  - Error is X\n

  - Angle Error is Y\n

  - Lane SD is Z\n

  - Where X, Y, Z are arbitrary numbers.

- The format of the message is as follows:

  - ExAySz\n

- The Arduino will decode the message as follows:

  - Take the substring between E and A and store it in a variable called error

  - Take the substring between A and S and store it in a variable called Angle

  - Take the substring between S and the end of the message and store it in a variable called STD

- Converting those string variables into integer values

- Using the values in Arduino accordingly for the path planning algorithm.
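The message format can be illustrated with a short Python sketch of both ends of the link. It reads the three fields as the text following `E`, `A`, and `S` respectively, which is an interpretation of the `ExAySz\n` format. The function names are hypothetical, and the decoder only mirrors in Python the substring logic that would run on the Arduino; it is not the Arduino code itself.

```python
def encode_message(error, angle, sd):
    """Build the single-line message E<error>A<angle>S<sd> terminated by a newline."""
    return f"E{int(error)}A{int(angle)}S{int(sd)}\n"


def decode_message(message):
    """Split a message into (error, angle, sd) integers.

    Mirrors the substring steps: the text between E and A is the error,
    between A and S is the angle, and after S (up to the newline) is the SD.
    Raises ValueError if a marker is missing or out of order.
    """
    text = message.strip()
    e = text.index("E")
    a = text.index("A")
    s = text.index("S")
    if not (e == 0 and e < a < s):
        raise ValueError("markers E, A, S must appear once, in that order")
    return int(text[e + 1:a]), int(text[a + 1:s]), int(text[s + 1:])
```

For example, `encode_message(-12, 5, 30)` produces `"E-12A5S30\n"`, and decoding that string returns the three integers. Negative values are safe because a minus sign never collides with the letter markers.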

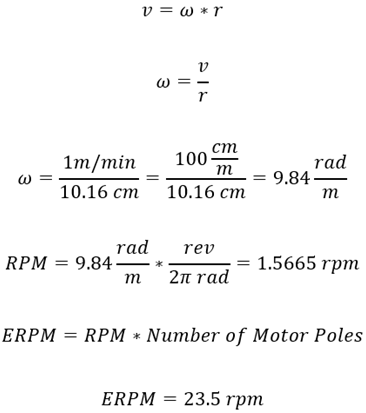

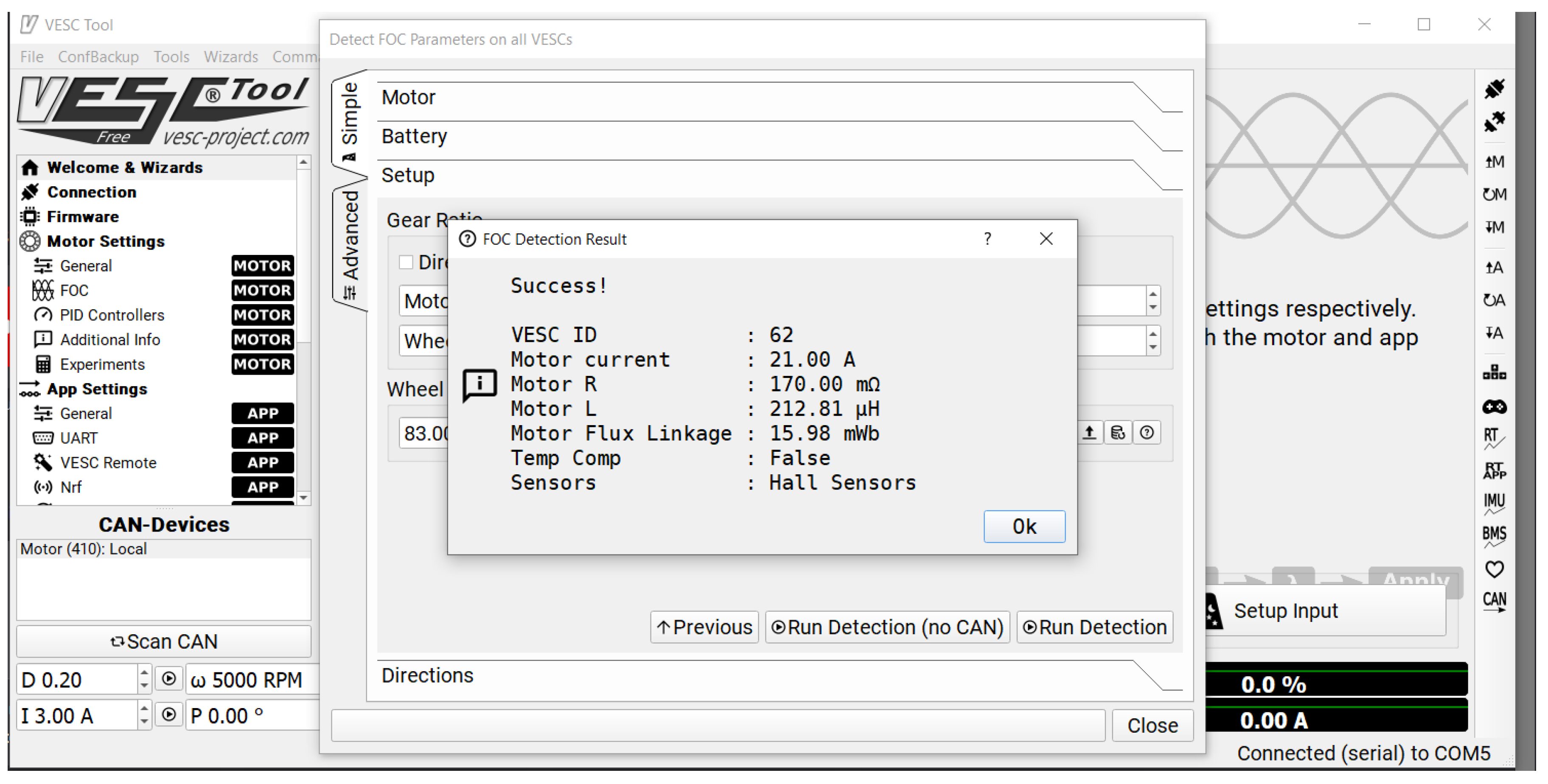

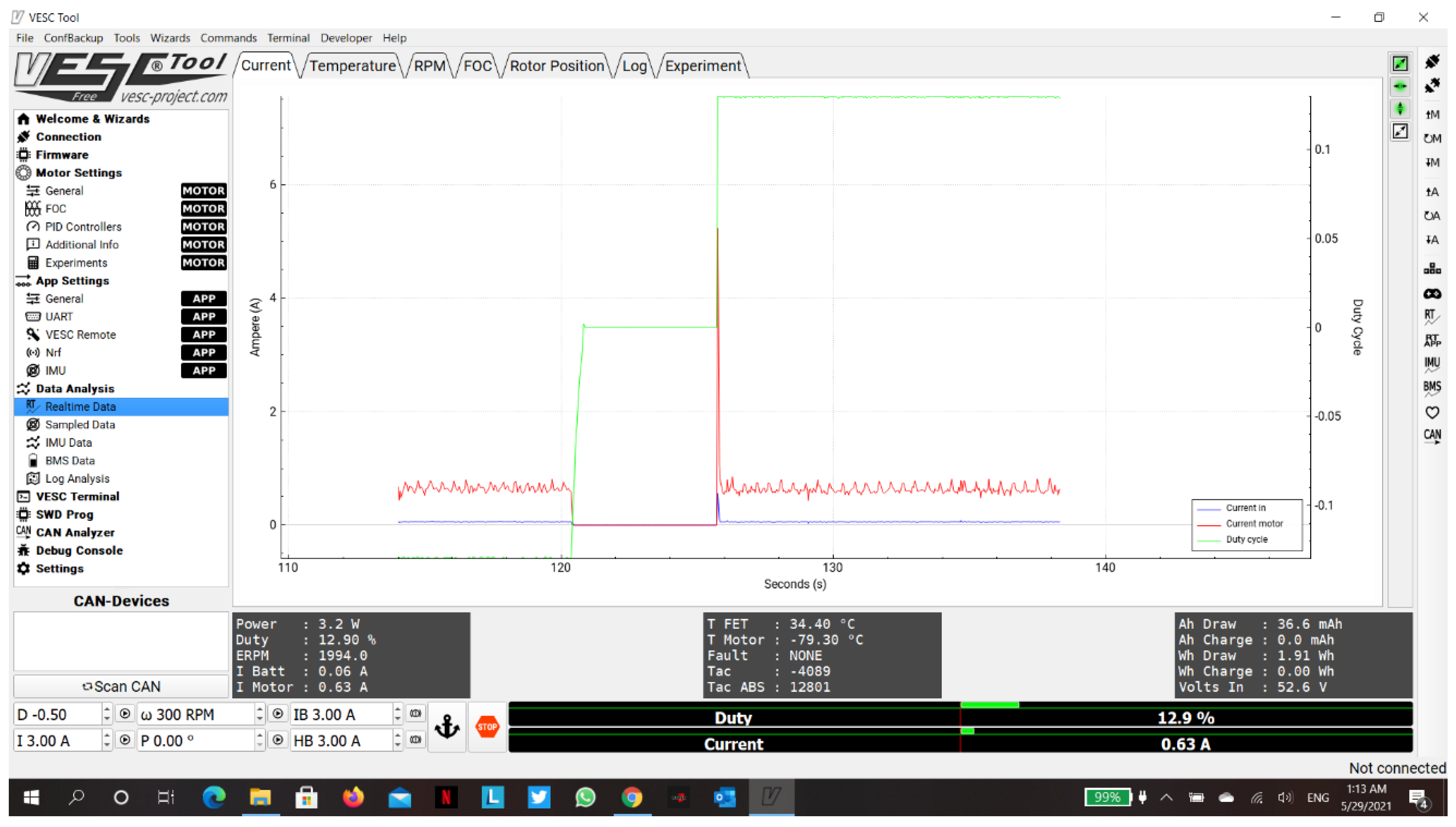

4.2.6. Communication Establishment between Arduino and VESC Controllers

4.2.7. Controlling Paint Unit Using Arduino

4.2.8. Localization

5. Performance Evaluation

5.1. Performance Measures

- Cognition part performance: measuring the precision of detection and the accuracy of line state recognition.

- Localization/Path planning part performance: measuring localization precision and the path’s deviation.

- Mechanical part performance: calculating the errors from design flaws and the efficiency of the system.

- Paint performance: measuring the enhancement to the existing line and the cost analysis of the paint.

5.2. Experimental/Simulation Design

5.2.1. Manufacturing Processes and Assembly

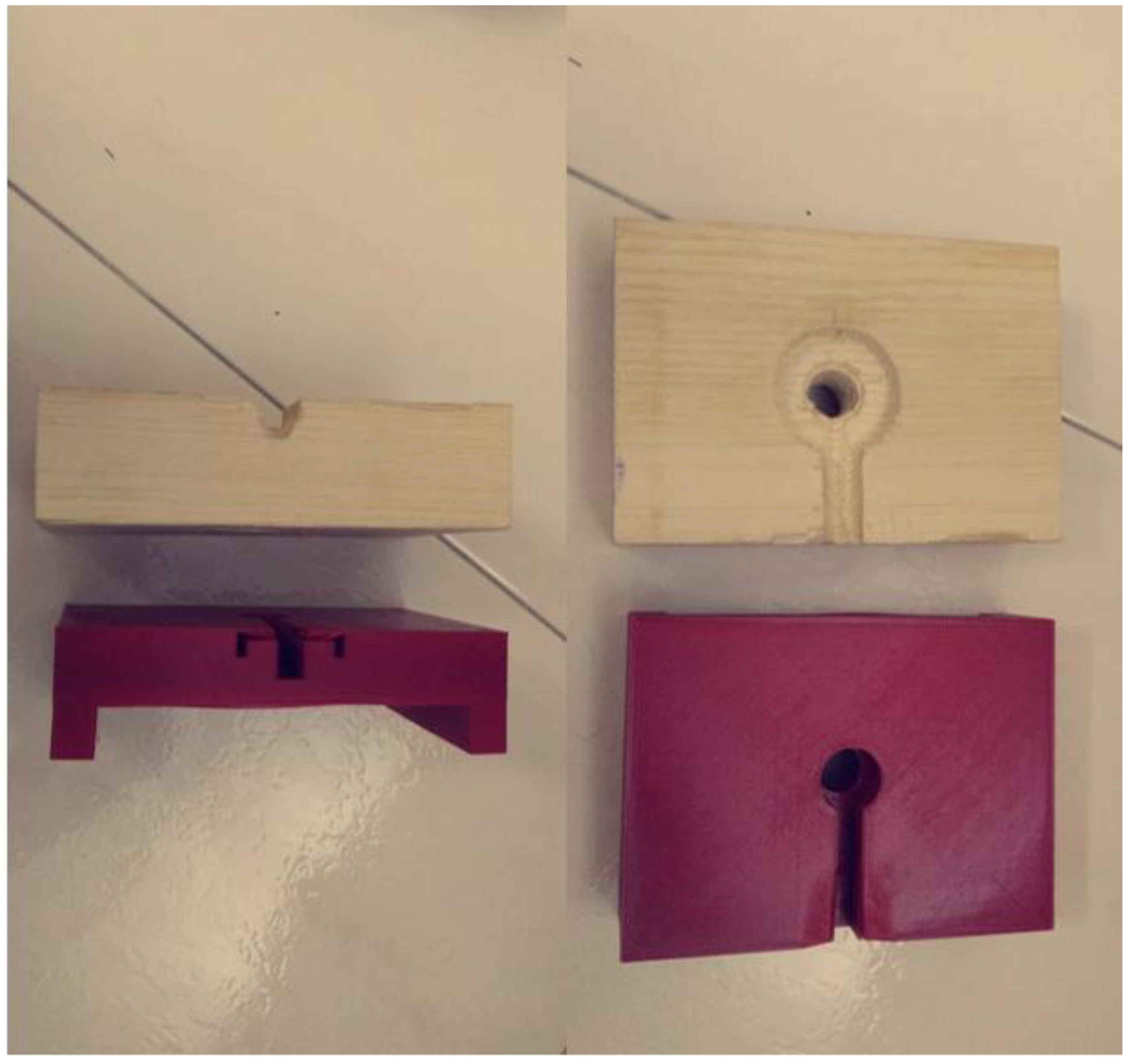

3D Printing

Wiring

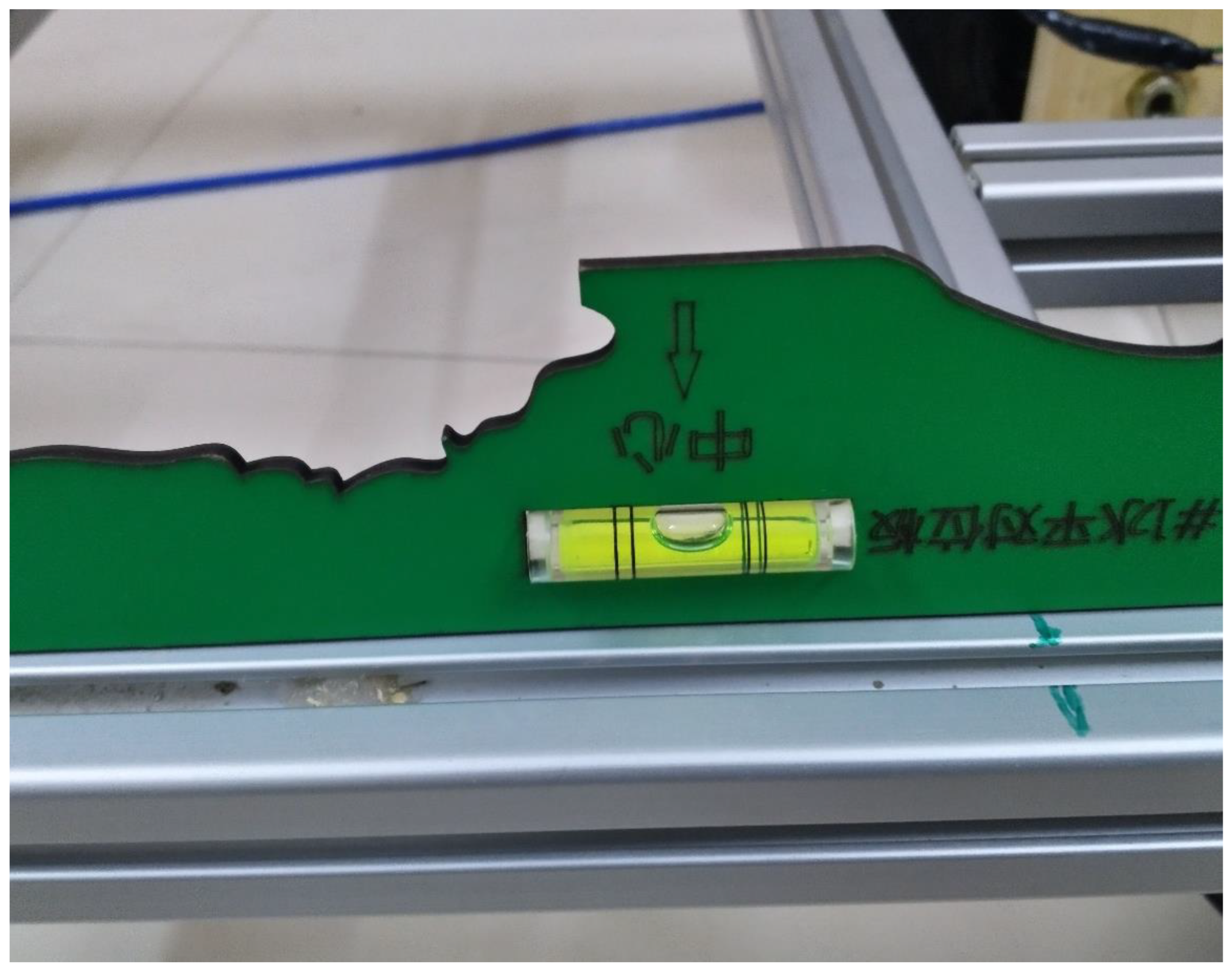

5.2.2. Calibration

5.2.3. Experiment Design

5.3. Results and Discussion

5.3.1. Test Results

Motor Tests

Painting Tests

Air Compressor and Servo Motor

Machine Vision Tests

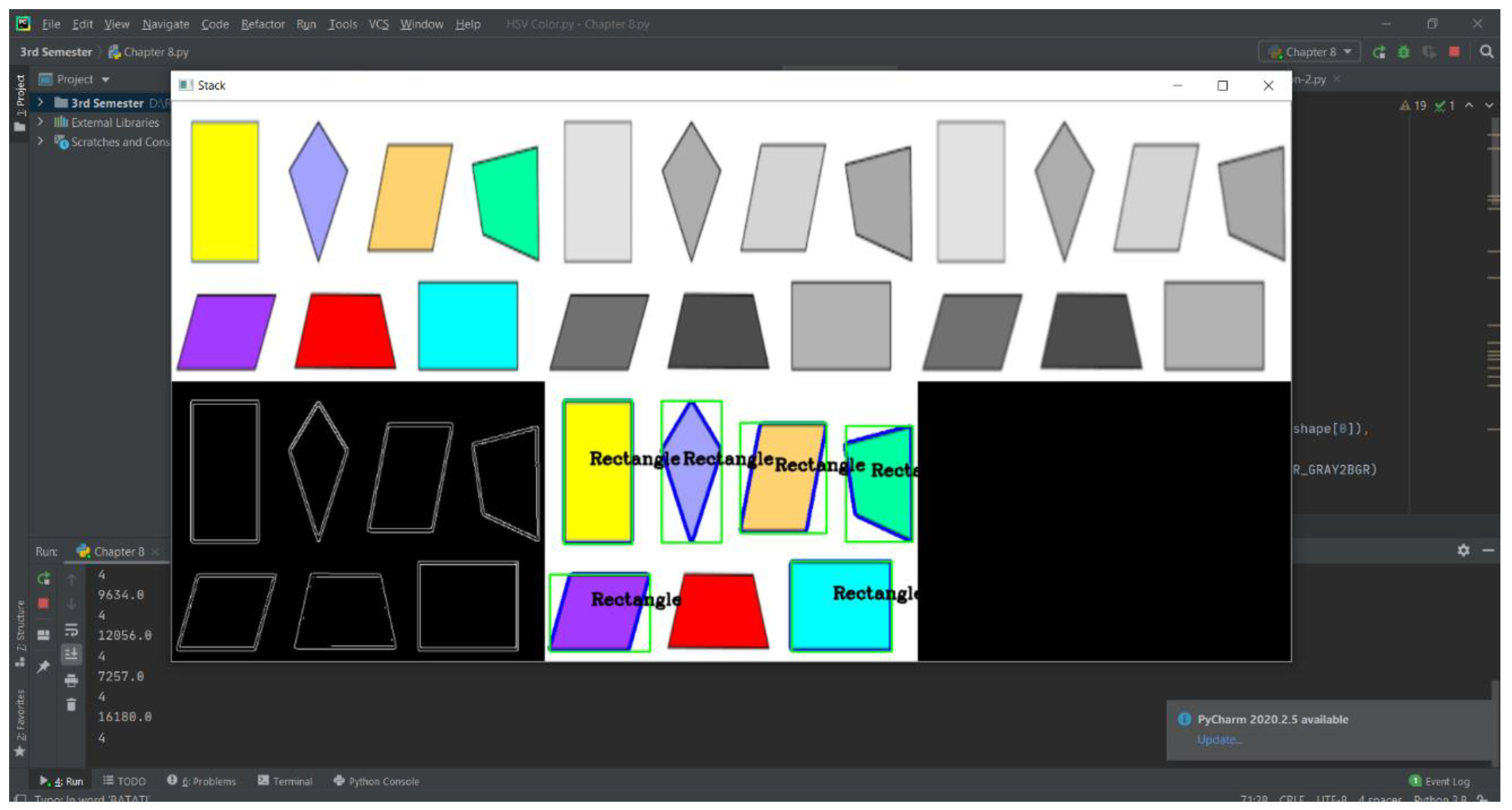

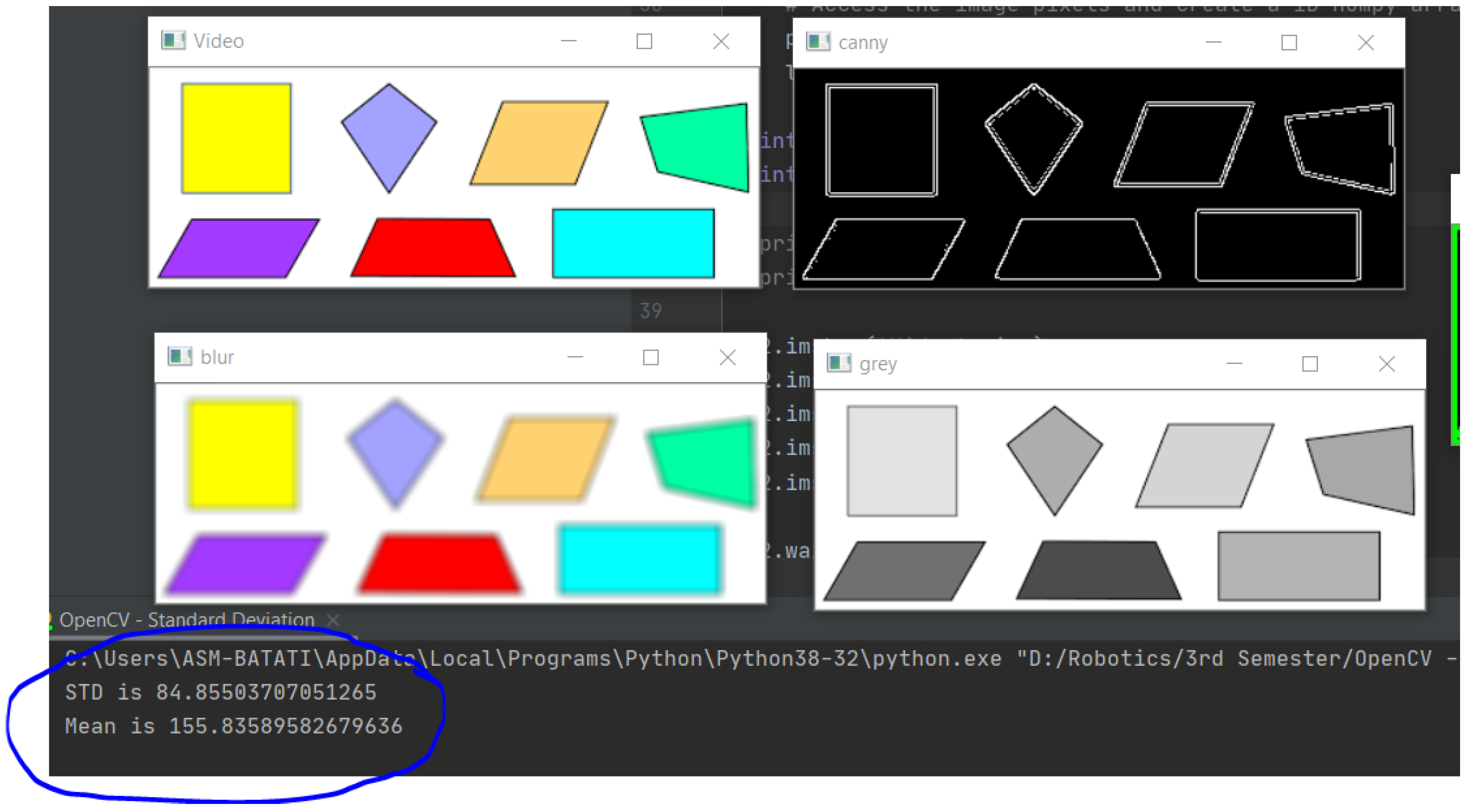

Color Detection

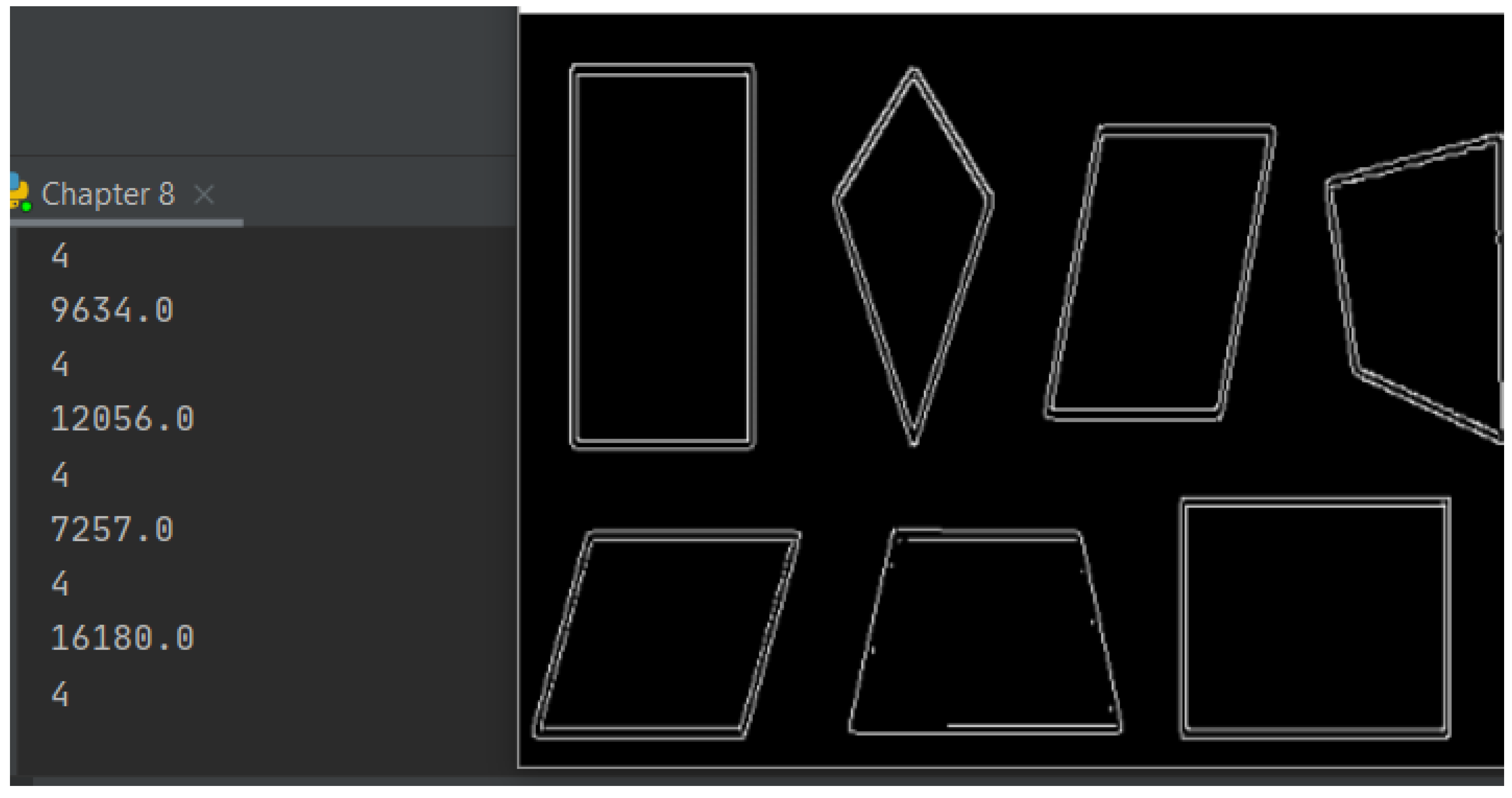

Contour Detection

Points Extraction

Shape Recognition

Color Mean and Standard Deviation

Line Following Algorithm

Communication Tests

Full Prototype Tests

5.3.2. Improvements and Modifications

Motor Mount Improvements

Painting Mechanism Modification

Machine Vision Improvements

Performance and UI Improvements

5.3.3. Discussion

- Cognition part performance: measuring the precision of detection and the accuracy of line state recognition.

- Localization/Path planning part performance: measuring localization precision and the path’s deviation.

- Mechanical part performance: calculating the errors from design flaws and the efficiency of the system.

- Paint performance: measuring the enhancement to the existing line and the cost analysis of the paint.

Cognition Part Performance

Localization/Path Planning Part Performance

Mechanical Part Performance

Painting Performance

5.4. Problems and Difficulties

5.4.1. Risk Assessment And Challenges

- Parts market research time.

- Finding an assembly of a particular specification or assembling one.

- Misleading parts specifications on the market.

- Weeks 12-15 had the lowest productivity due to term 202 final exams, assignment submissions, the last ten days of Ramadan, and Eid.

- Lead time for some parts was extreme (up to one month).

- Discrepancies in some of the delivered parts, which required extra time to fix.

6. Financial Analysis And Monetary Investments

6.1. Project’s Tangible Costs

6.2. Project’s Intangible Costs

| Risk | Probability | Mitigation Plan | Occurrence |

| Materials delay | Mid | Purchase from other vendors (higher cost) or fabricate (time consuming) | Yes. Valves, RPI accessories, the 3D printer, and the RPI camera were delayed, and the ordered batteries were not delivered completely. |

| The debugging phase will take more time | High | Ask for guidance or consult experienced people; plan for more debugging time | Yes. Many issues arose while installing Raspbian OS, along with debugging issues in communication. Multiple test failures also prolonged debugging and troubleshooting. |

| Risks that were not considered | Occurrence |

| Materials reliability issues | Multiple acquired parts had reliability issues, such as the motors from the local market. |

| Ordered parts specifications | Multiple parts were designed and ordered to meet certain specifications; however, some delivered parts had errors, such as a dimension error on the frame. |

| Components | Cost (SAR) | Tools | Cost (SAR) | Failed components | Cost (SAR) |

| Frame | 972 | 3D printer | 3767 | Failed motor | 210 |

| Cardboard | 140 | 3D printer filaments | 506 | Failed valves | 398 |

| Motors | 350 | Multimeter | 30 | Failed SD card | 73 |

| Castor wheel | 22 | Battery chargers | 50 | | |

| Arduino Mega | 158 | Soldering station | 573 | | |

| Raspberry Pi 4 | 549 | Fastening tools + tap | 192 | | |

| Raspberry Pi Camera | 79 | | | | |

| Raspberry Pi accessories | 410 | | | | |

| VESC | 1107 | | | | |

| Servo motor | 93 | | | | |

| Relay (on Arduino electronics kit) | 472 | | | | |

| Air compressor | 250 | | | | |

| Paint sprayer | 126 | | | | |

| Batteries | 1020 | | | | |

| Switches | 209 | | | | |

| Connectors | 543 | | | | |

| Cooling fan | 20 | | | | |

| Monitor | 470 | | | | |

| HDMI capture | 69 | | | | |

| Subtotal | 7014 | Subtotal | 5118 | Subtotal | 681 |

| Total | 12813 | | | | |

| Task | Time spent |

| Design | There are 197 fully designed drawings made in SolidWorks, with a minimum of 30 minutes per drawing. Approximating the average time per drawing as 2 hours, the total design time is approximately 400 hours. |

| Local market search | The local search was conducted in both Jeddah and Riyadh, for different components each time. On ten occasions the search took an entire day. Hence, the time is approximated as 60 hours. |

| Machining | Machining was done at the beginning on the aluminum frame and took around two days, or approximately 12 hours. |

| Soldering | Soldering was done twice: once at the beginning and again after the motor failure. The first session took much longer due to practicing, whereas the second took around 6 hours. The total approximated time is 24 hours. |

| Printing | Based on the log files of the 3D printer, the total printing time is 158.9 hours. |

| Assembly | Assembly was done approximately five times. Most assemblies took around half a day, whereas the final assembly took three full days. The approximated time is 40 hours. |

| Programming | Programming was the most time-consuming task, especially since the SD card failed at a late stage, which required repeating the entire RPI setup and programming. From week 13 to week 19, programming and troubleshooting took approximately half an hour daily, totaling 21 hours. From week 19 to week 23, programming and troubleshooting intensified to approximately 3 hours daily, totaling 105 hours. The approximated time spent is therefore 126 hours. |

| Testing & debugging | Testing started in week 13 with the motors and painting. The motors were tested first using the VESC tool, then Arduino, and finally RPI. Each test was conducted approximately three times, each taking around half an hour, totaling approximately 5 hours. Painting tests took approximately the same time. Prototype testing and debugging took two whole weeks. The estimated time spent is therefore approximately 122 hours. |

| Total | ~940 hours ( ~39 days) |
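The total in the last row can be checked by summing the per-task estimates from the table. The short sketch below only restates that arithmetic; the dictionary and variable names are illustrative.

```python
# Per-task time estimates in hours, copied from the table above.
task_hours = {
    "Design": 400,
    "Local market search": 60,
    "Machining": 12,
    "Soldering": 24,
    "Printing": 158.9,
    "Assembly": 40,
    "Programming": 126,
    "Testing & debugging": 122,
}

# Sum of all tasks, and the same duration expressed in 24-hour days.
total_hours = sum(task_hours.values())
total_days = total_hours / 24

print(f"Total: {total_hours:.1f} hours ({total_days:.1f} days)")
```

The sum is 942.9 hours, which is consistent with the rounded figures of about 940 hours and about 39 days.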

7. Conclusion and Future Directions

7.1. Limitations

7.2. Future Development

7.2.1. Fully Autonomous and Robust Road Maintenance Robot

7.2.2. Fully Autonomous and Robust Industrial Painting Robot

7.3. Conclusion

- Design drawing

- Physical operational product

- Programming codes

- A detailed document of the project

Acknowledgments

Appendix A - Materials characteristics

- Softening temperature: 50 °C

- Rockwell hardness: R70-R90

- Elongation at failure: 3.8%

- Flexural strength: 55.3 MPa

- Tensile breaking strength: 57.8 MPa

- Modulus of longitudinal elasticity: 3.3 GPa

Appendix B - Initial Design

Appendix C - Final 3D CAD drawings

Appendix D - RPI Python code

Appendix E - Arduino C++ code

Appendix F - Arduino C++ code (not used)

References

- Naidu, V., & Bhaiswar, V. (2020). Review on road marking paint machine and material. IOP Conference Series: Materials Science and Engineering, 954, 012016.

- Mathibela, B., Newman, P., & Posner, I. (2015). Reading the Road: Road Marking Classification and Interpretation. IEEE Transactions on Intelligent Transportation Systems, 16(4), 2072–2081.

- Chen, H., Fuhlbrigge, T., & Li, X. (2008). Automated industrial robot path planning for spray painting process: A review. 2008 IEEE International Conference on Automation Science and Engineering, 1.

- Kotani, S., Mori, H., Shigihara, S., & Matsumuro, Y. (2002). Development of a lane mark drawing robot. Proceedings of 1994 IEEE International Symposium on Industrial Electronics (ISIE’94), 1.

- Ali, M. A. H., Yusoff, W. (2016). Mechatronic design and development of an autonomous mobile robotics system for road marks painting. 2016 IEEE Industrial Electronics and Applications Conference (IEACon), 1.

- Woo, S., Hong, D., Lee, W.-C., Chung, J.-H., & Kim, T.-H. (2008). A robotic system for road lane painting. Automation in Construction, 17(2), 122–129.

- TinyMobileRobots®. TinyPreMarker. (2020).

- TinyMobileRobots®. TinyPreMarker Sport. (2020).

- Position Partners®. Tiny Surveyor. (2018).

- TinyMobileRobots®. TinyLineMarker Pro. (2020).

- Jung, B.-J., Park, J.-H., Kim, T.-Y., Kim, D.-Y., & Moon, H.-P. (2011). Lane Marking Detection of Mobile Robot with Single Laser Rangefinder. Journal of Institute of Control, Robotics and Systems, 17(6), 521–525.

- Guangzhou Top Way Road Machinary CO. Design of Road Marking Machine. http://www.topwaytraffic.com/en/news/2014-10-7/93.html.

- INTELLIGENT MACHINES®. Road marking robot.

- MOHAMMED AH ALI, M. MAILAH AND TANG HOWE HING. (2014). Autonomous Mobile Robotic System for On-the-Road Painting. Universiti Teknologi Malaysia.

- MAS OMAR BIN MAS ROSEMAL HAKIM. (2017). DEVELOPMENT OF A SEMI-AUTOMATED ON-THE-ROAD PAINTING MACHINE. Universiti Teknologi Malaysia.

- STiM®. Kontur 600.

- Vacek, S.; Schimmel, C.; Dillmann, R. Road-marking analysis for autonomous vehicle guidance. In Proceedings of the European Conference on Mobile Robots, Freiburg, Germany, 19–21 September 2007; pp. 1–6.

- STiM®. Shmelok HP Structure.

- Ignatiev, K. V., Serykh, E. V. (2017). Road marking recognition with computer vision system. 2017 IEEE II International Conference on Control in Technical Systems (CTS), 1.

- Brock, O. , Trinkle, J., & Ramos, F. (2009). Robotics: Science and Systems IV (The MIT Press) (Illustrated ed.). The MIT Press.

- The Story of Road Markings (2015). https://www.roadtraffic-technology.com/contractors/road_marking/deltaol/pressreleases/pressthe-story-of-road-markings.

- The Story of Road Markings (2015). https://www.roadtraffic-technology.com/contractors/road_marking/deltaol/pressreleases/pressthe-story-of-road-markings.

- https://roboticsbackend.com/raspberry-pi-arduino-serial-communication/#What_is_Serial_communication_with_UART.

- https://www.raspberrypi.org/documentation/remote-access/vnc/README.md.

- https://robu.in/installing-opencv-using-cmake-in-raspberry-pi/.

- https://www.asirt.org/safe-travel/road-safety-facts/.

| Chassis |

| Drive Unit |

| Paint Unit |

| Sensors |

| Control Unit |

| Power |

| Performance & UI |

| Option | Availability in JED | Assembling difficulty | Quality | Price | Total |

| Aluminum Extruded Profile | +2 | +2 | +2 | -1 | +5 |

| Carbon Fiber Tubes | -2 | -1 | +2 | -2 | -3 |

| Sheet Metal Fabrication | +2 | -2 | -1 | +2 | +1 |
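The totals in this selection matrix are plain sums of the per-criterion scores. As a hedged illustration, the sketch below recomputes them and picks the highest-scoring option. The optional `weights` parameter and the function names are assumptions added for illustration; equal weighting is what reproduces the table's totals.

```python
# Scores per option in the order: availability in JED, assembling difficulty,
# quality, price (values copied from the table above).
FRAME_OPTIONS = {
    "Aluminum Extruded Profile": (2, 2, 2, -1),
    "Carbon Fiber Tubes": (-2, -1, 2, -2),
    "Sheet Metal Fabrication": (2, -2, -1, 2),
}


def score_options(options, weights=None):
    """Return {option: total}, summing the scores with optional per-criterion weights."""
    totals = {}
    for name, scores in options.items():
        w = weights if weights is not None else (1,) * len(scores)
        totals[name] = sum(wi * si for wi, si in zip(w, scores))
    return totals


def best_option(totals):
    """Return the option name with the highest total score."""
    return max(totals, key=totals.get)


totals = score_options(FRAME_OPTIONS)
print(totals, "->", best_option(totals))
```

With equal weights, the aluminum extruded profile comes out ahead with +5, matching the table.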

| Option | Availability in JED | Energy consumption | Weight | Price | Total |

| Air compressor + Paint gun | +2 | +2 | +2 | +1 | +7 |

| Paint pump | -2 | -2 | -2 | -2 | -8 |

| Airless paint gun | -2 | -2 | -2 | -2 | -8 |

| Option | Assembling difficulty | Control difficulty | Maneuverability | Price | Total |

| Steerable front wheels and motorized rear wheels (Ackermann steering drive) | -1 | -2 | +2 | -1 | 0 |

| Front castor wheel and Motorized rear wheels (Differential drive) | +2 | +2 | +1 | +1 | +6 |

| Motor\Test | Using VESC tool | Using Arduino serial | Using Raspberry Pi serial to Arduino |

| Right | OK | OK | Failure (burnt the motor with the VESC) |

| Left | OK | OK | OK |

| Right (new) | OK | OK | OK |

| Sprayer\Test | Water – No valve | Water – air valve | Paint – air valve |

| Siphon HVLP | OK | OK | Low paint volume |

| Gravity feed HVLP (small) | OK | OK | OK |

| Gravity feed HVLP (large) | OK | OK | Failure (valve exploded) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).