Submitted: 11 October 2024

Posted: 17 October 2024

Abstract

Keywords:

1. Introduction

2. Literature Review

2.1. Research on China’s Image in the International Mainstream Media

2.2. Research on Platforms for Opinion Mining

3. Platform Construction and Operation

3.1. Construction Principles and Software and Hardware Operating Environment

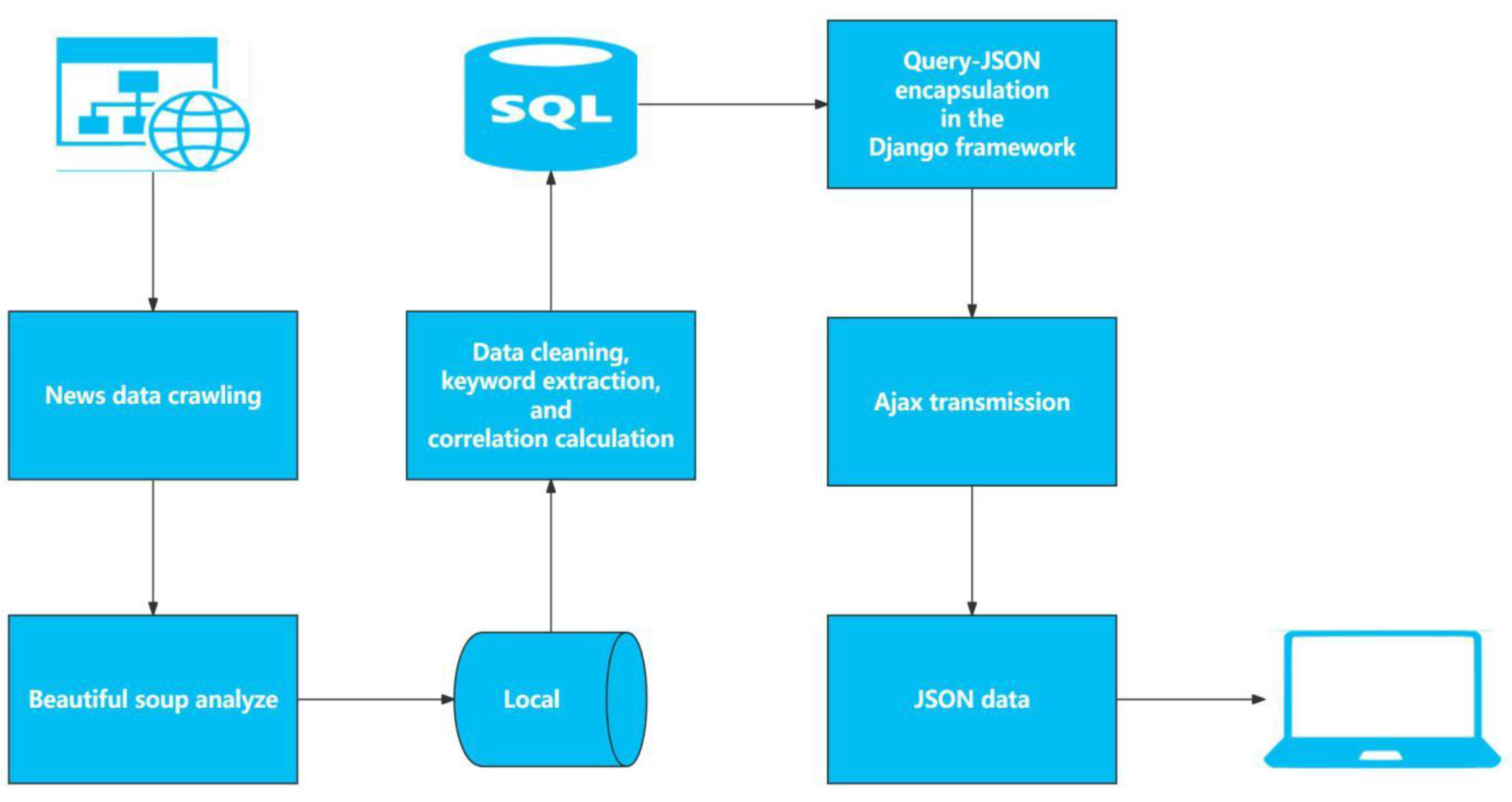

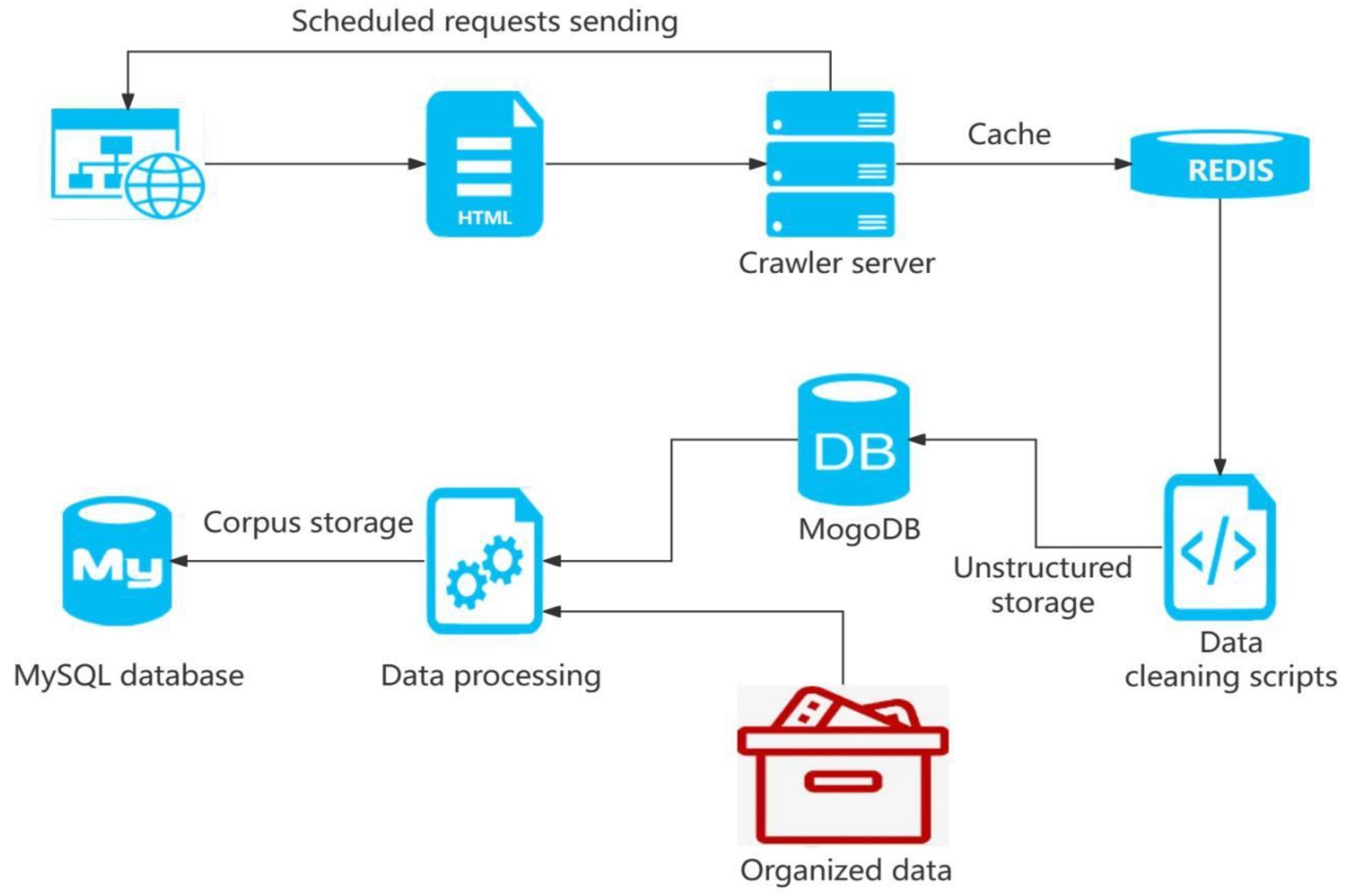

3.2. Principles and Methods of Corpus Crawling

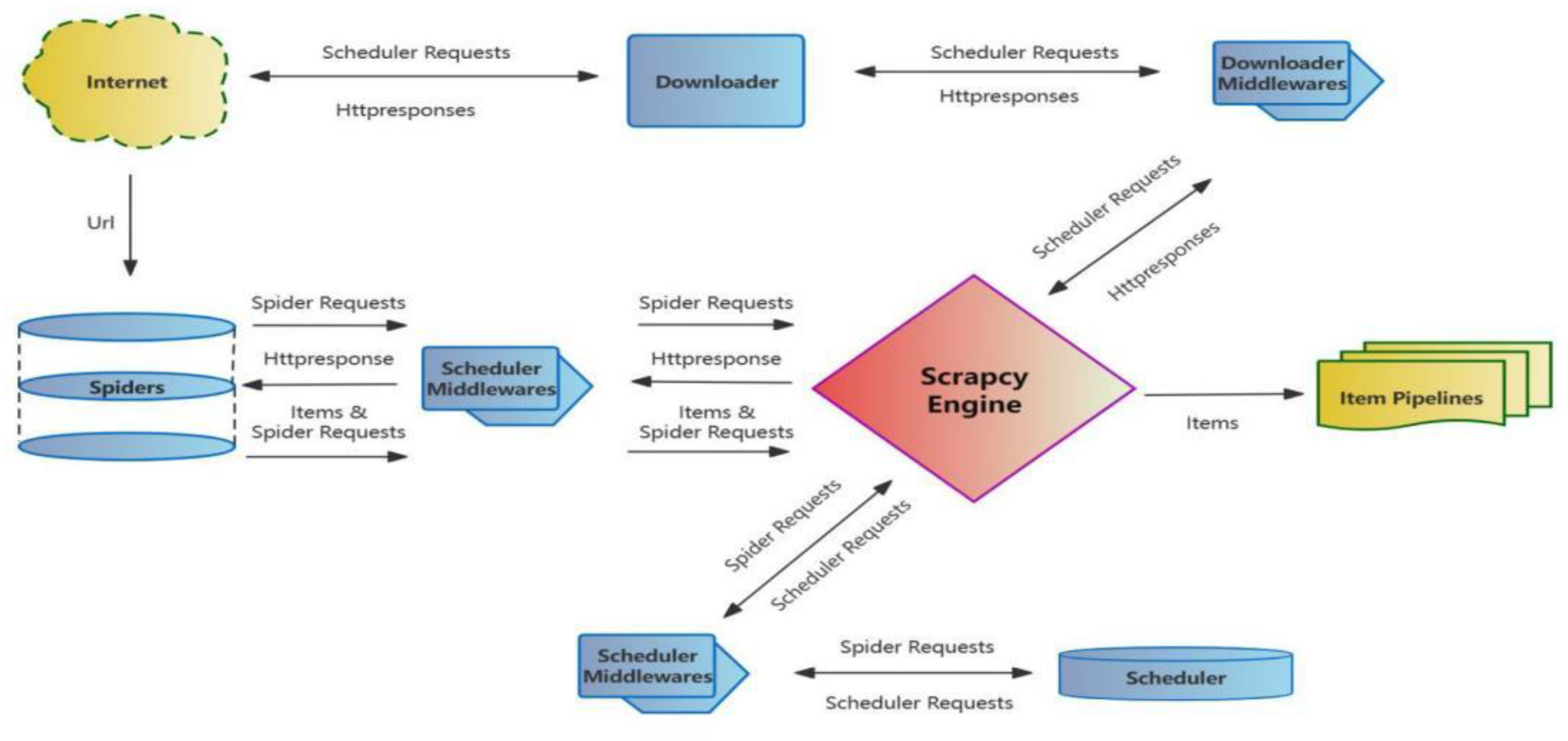

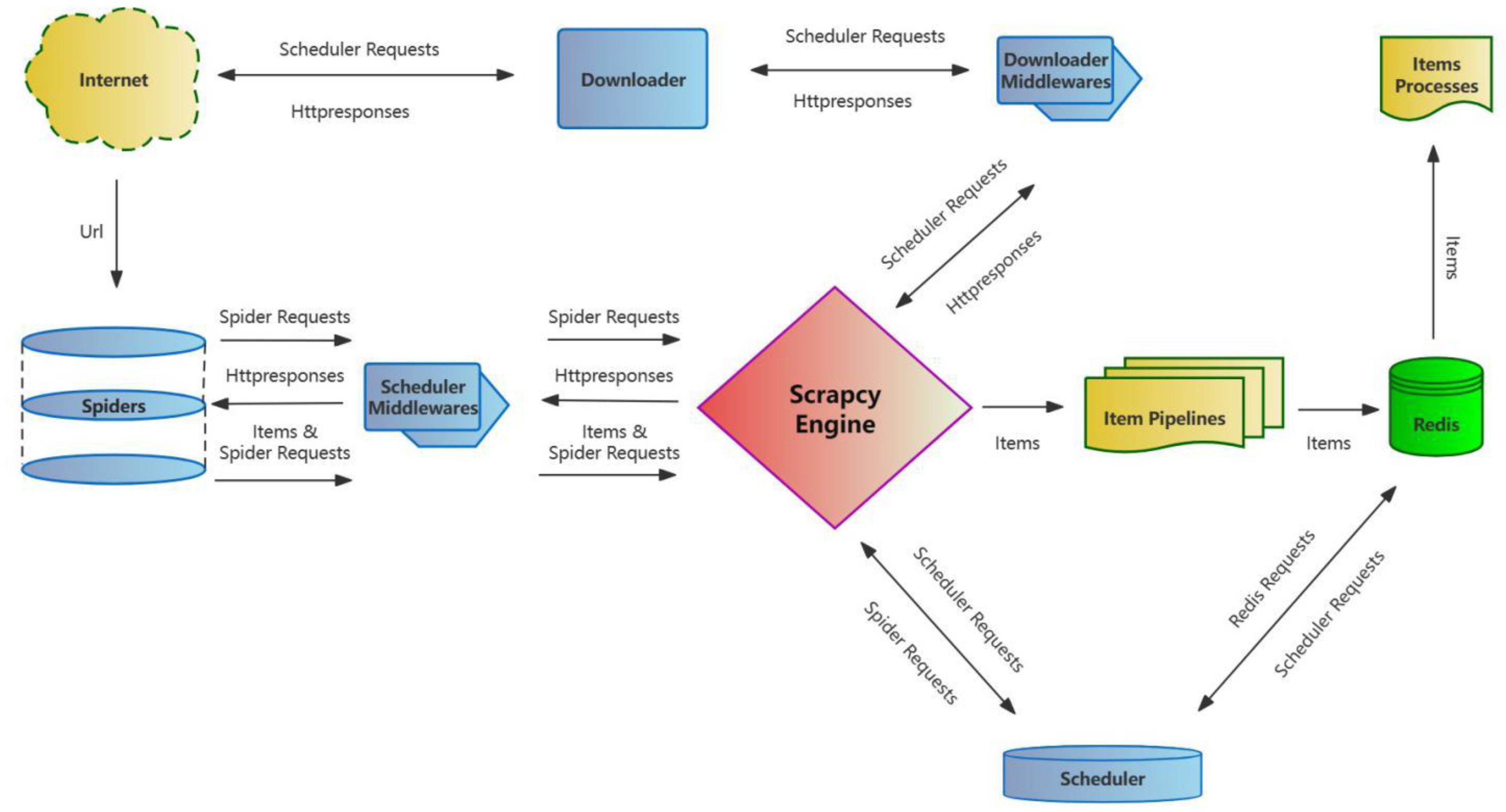

3.2.1. Principles of Corpus Data Crawling

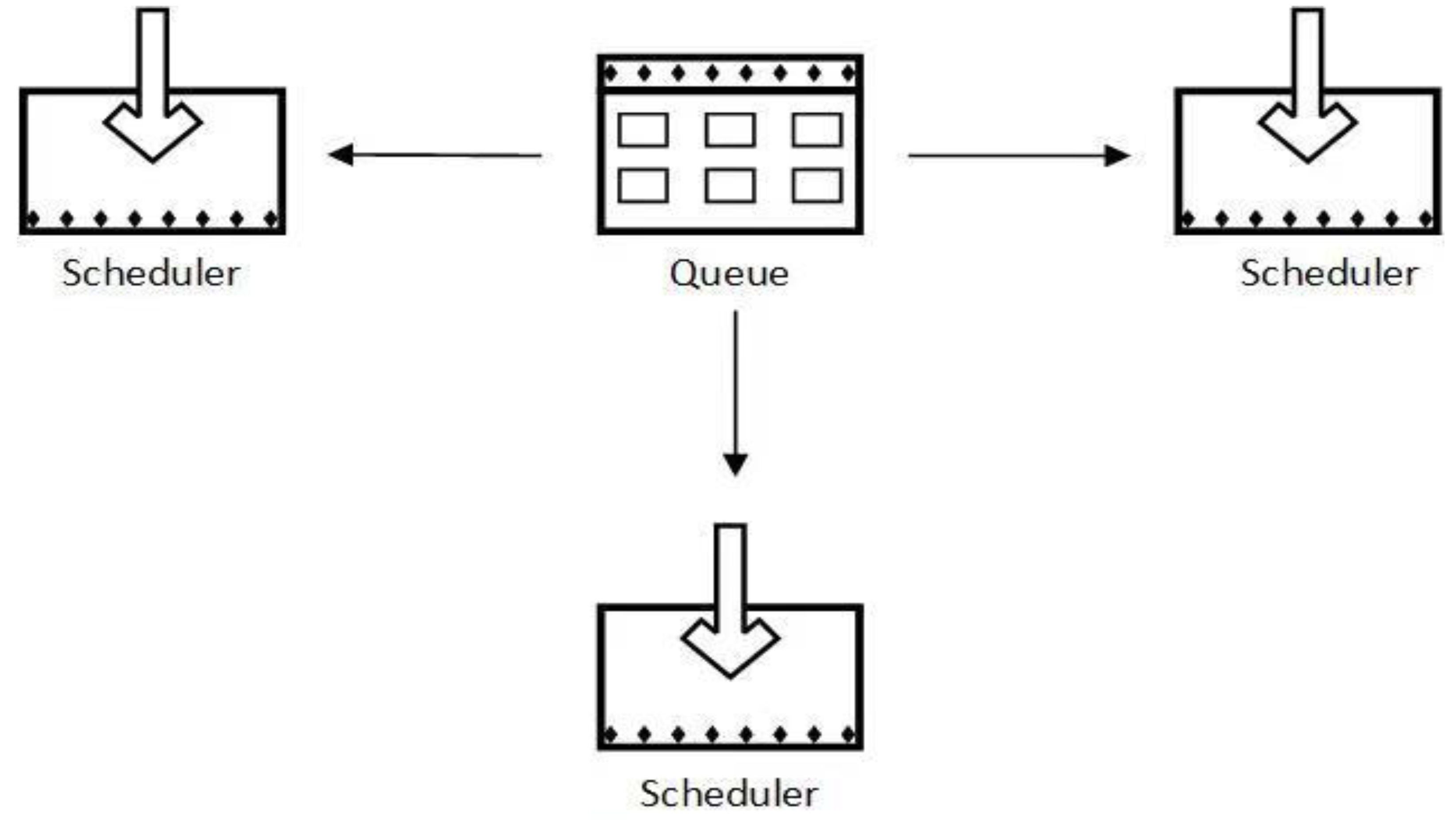

3.2.2. Acquisition and Deduplication of Corpus Data

3.3. Automatic Classification and Organization of Corpus

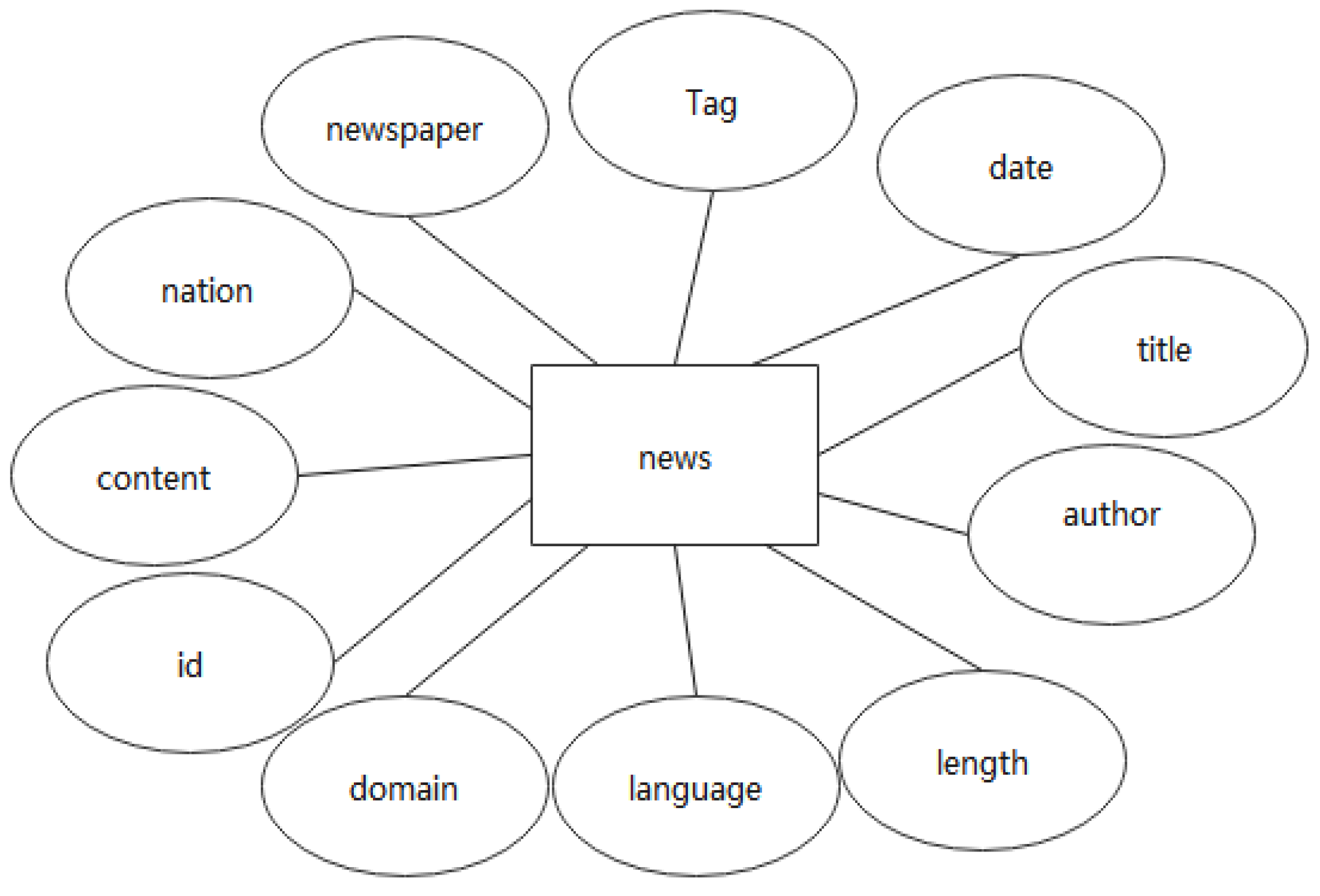

3.3.1. Design of Corpus Database

3.3.2. Classification Tag Generation

3.3.3. Corpus Organization

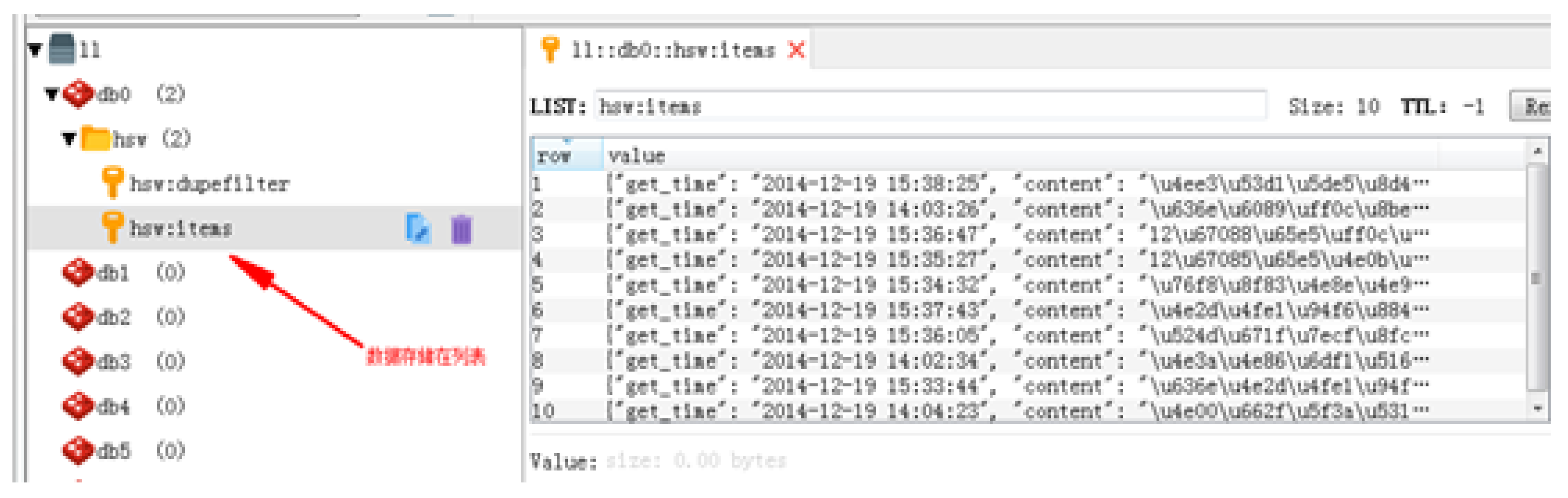

3.4. Cleaning and Storage of Corpus

3.4.1. Cleaning of Crawler Data

3.4.2. Calibration of Corpus Data

3.4.3. Input of Existing Corpus Data

3.5. Research and Development (R&D), Embedding and Operation of Corpus Analysis Software

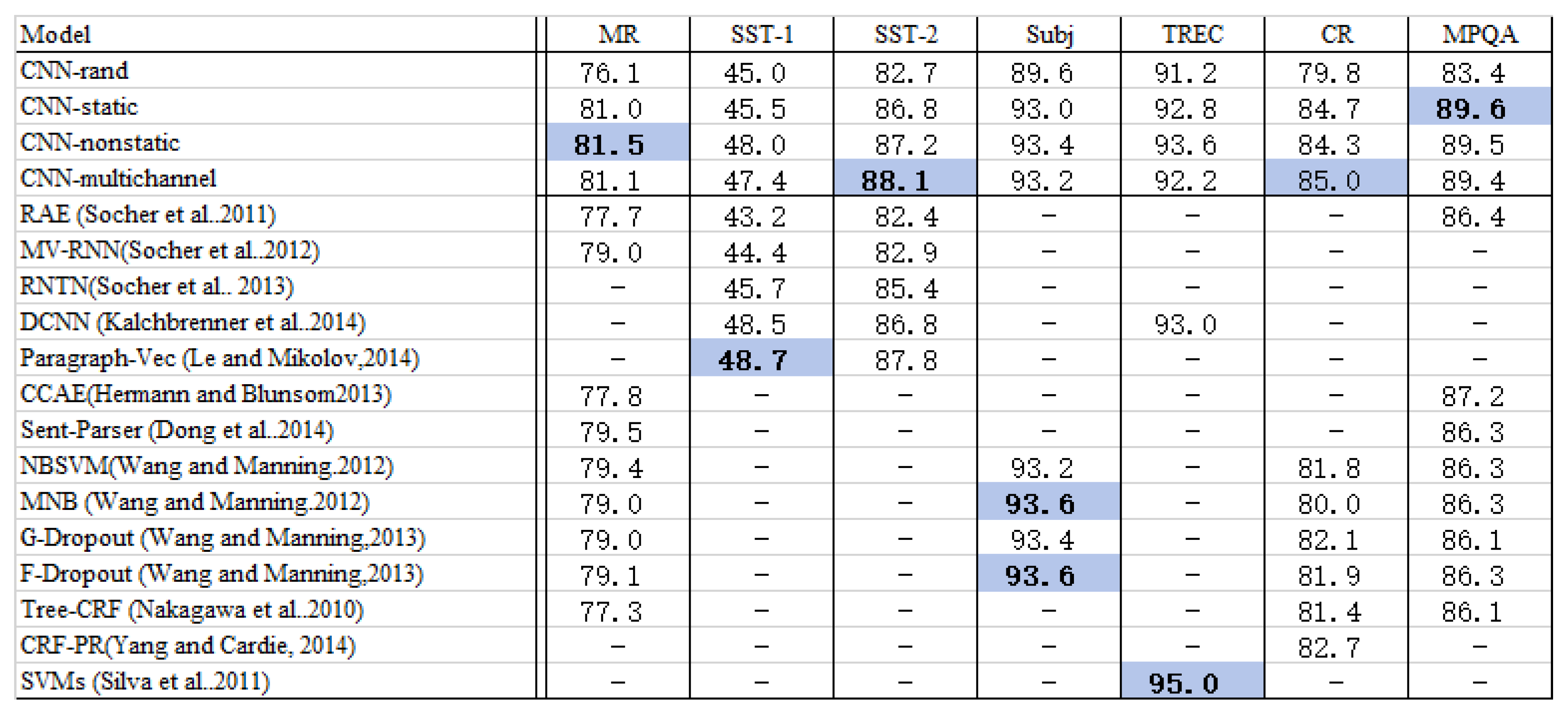

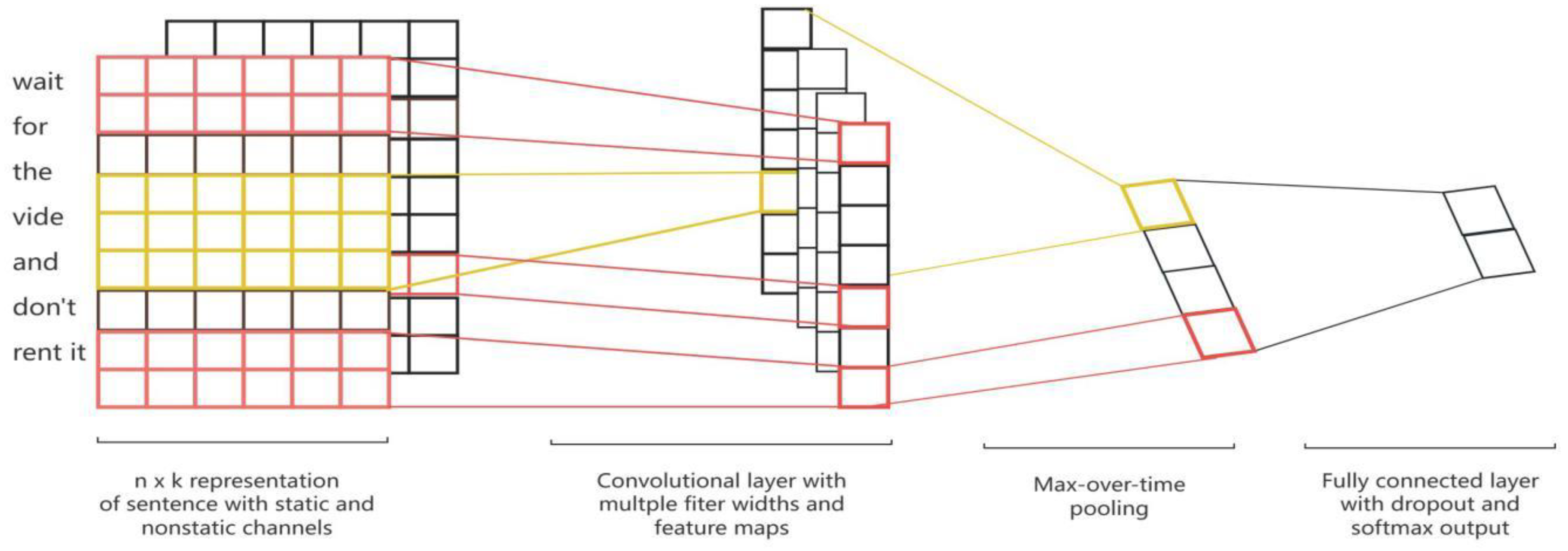

3.5.1. R&D of Corpus Analysis Software

3.5.2. Embedding of Corpus Analysis Software

3.5.3. Operation of Corpus Analysis Software

3.6. Detection and Statistics of Coverage Concentration of Dimensions of China’s Image and of Each Dimension

3.7. Extraction and Analysis of Statistical Results

4. Conclusion

Disclosure statement

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).