1. Introduction

Deep learning has emerged as a transformative force across a diverse array of domains, including image processing [1, 2, 3, 4, 5, 6, 7], natural language processing (NLP) [8, 9, 10, 11], and audio processing [12, 13, 14, 15]. In particular, question-answering (QA) systems have advanced remarkably in recent years. These systems efficiently extract pertinent information from large volumes of text, giving users precise and contextually relevant answers to their queries. Despite the strides made in end-to-end training, answering complex questions with a single machine-learning model remains challenging. This paper aims to bridge this gap by developing a question-answering system tailored specifically to PDF files in scientific, financial, and biomedical literature [16, 17, 18, 19]. The intricate nature of these documents often necessitates exhaustive analysis, making them difficult to comprehend. A robust question-answering system has the potential to alleviate this burden, allowing users to swiftly access the information they need. Previous studies have demonstrated that machine-learning models can successfully retrieve information from extensive documents, with their performance typically assessed using metrics such as exact match (EM) and F1 score. Among such models, Bidirectional Encoder Representations from Transformers (BERT) has shown exceptional performance on NLP tasks, including question answering and text summarization, and can be adapted to a new task by fine-tuning the pre-trained model with just one additional output layer. This capability could be particularly beneficial in medical contexts, assisting healthcare professionals in researching disease symptoms and treatment options. Notably, domain-specific pre-trained BERT models such as BioBERT and SciBERT have outperformed traditional models, aligning with findings in prior research on unsupervised pre-training for biomedical question answering.
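To make the two metrics concrete, the sketch below computes exact match and token-level F1 in the style commonly used for SQuAD-type extractive QA: answers are lowercased, stripped of punctuation and English articles, and compared as bags of tokens. The function names are illustrative and are not taken from this paper's implementation.

```python
import re
import string
from collections import Counter
from typing import Callable, List

_PUNCT = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and the articles a/an/the, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in _PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, ground_truth: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize_answer(prediction) == normalize_answer(ground_truth))


def f1_score(prediction: str, ground_truth: str) -> float:
    """Harmonic mean of token precision and recall after normalization."""
    pred_tokens = normalize_answer(prediction).split()
    gold_tokens = normalize_answer(ground_truth).split()
    common = Counter(pred_tokens) & Counter(gold_tokens)  # multiset intersection
    num_same = sum(common.values())
    if num_same == 0:
        return 0.0
    precision = num_same / len(pred_tokens)
    recall = num_same / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


def best_score(metric: Callable[[str, str], float], prediction: str, ground_truths: List[str]) -> float:
    """When several reference answers exist, score against the best-matching one."""
    return max(metric(prediction, gt) for gt in ground_truths)


print(exact_match("The BERT.", "bert"))    # 1.0
print(f1_score("the BERT model", "BERT"))  # precision 1/2, recall 1 -> F1 = 2/3
```

EM rewards only a perfect span, while F1 gives partial credit for overlapping tokens, which is why papers usually report both.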

This study aspires to enhance our understanding of the effective utilization of transformers in the development of question-answering software for the specified PDF documents. Ultimately, a well-designed QA system presents a more intelligent, efficient, and user-friendly approach to extracting information from extensive PDFs, such as annual reports, academic articles, and medical documents.

Research Question: The challenges outlined above lead us to the following research question:

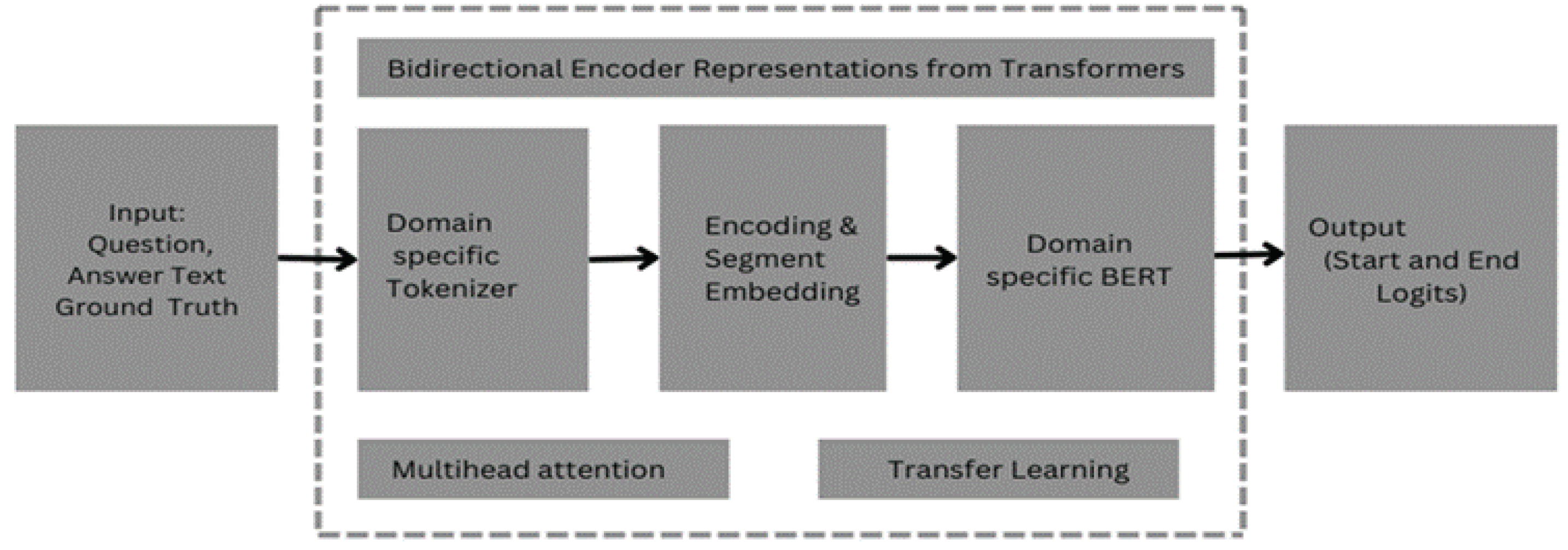

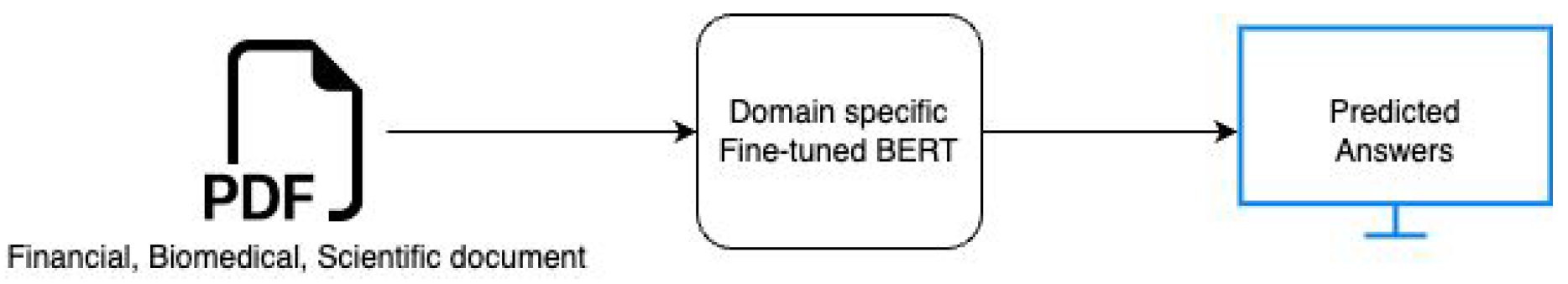

In the financial, biomedical, and scientific domains, how can BERT transformers be employed to effectively answer questions and retrieve knowledge from PDF files? This research aims to implement a QA system using pre-trained BERT models tailored for these domains and evaluate their performance to identify the most effective model. An overview of the question-answering system utilizing domain-specific pre-trained BERT on documents is depicted in

Figure 1.

The structure of this paper is organized as follows: Section 2 discusses related work, Section 3 outlines the research methodology, Section 4 elaborates on design specifications, Section 5 illustrates the implementation aspects, Section 6 examines evaluation results, and finally, Section 7 highlights conclusions and future work.

2. Related Work

BERT (Bidirectional Encoder Representations from Transformers) has emerged as a groundbreaking framework in Natural Language Processing (NLP), achieving remarkable results across a multitude of tasks. Its conceptual simplicity and empirical robustness make BERT a prime candidate for advancing question-answering systems. This research focuses on developing a BERT-based question-answering system tailored for PDF documents, addressing the challenges of extracting nuanced information from this ubiquitous format. PDF files are prevalent in both academic and professional contexts, yet their diverse structures and complex formatting often hinder effective information retrieval. By leveraging BERT’s capabilities, we seek to improve comprehension and accessibility in PDF-based question answering.

Our literature review aims to explore existing research that integrates BERT models for question-answering in PDFs, identifying gaps and opportunities for innovation. This examination is intended to propel the development of intelligent systems for document comprehension, providing critical insights into the design and implementation of a BERT-based QA system optimized for PDF files.

A seminal contribution to the foundation of this work is the Transformer architecture introduced by Vaswani et al. [20]. This architecture revolutionized sequence transduction models by relying on attention mechanisms instead of recurrence, overcoming limitations of conventional recurrent neural networks such as their inherently sequential computation, which hinders parallelization. The Transformer significantly outperforms earlier recurrent and convolutional encoder-decoder models in both training speed and quality, particularly in machine translation, where it surpasses even previously established ensemble models.
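The core operation behind this attention mechanism is scaled dot-product attention, softmax(QKᵀ / √d_k)·V. The dependency-free sketch below implements a single head without masking on plain Python lists; it is meant only to illustrate the formula, not the multi-head, batched form used in practice.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head attention: each query row receives a weighted average of the value rows."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # query-key similarities, shape (n_queries, n_keys)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)


queries = [[1.0, 0.0], [0.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0]]
print(scaled_dot_product_attention(queries, keys, values))
```

Because every query attends to every key in one matrix product, all positions can be processed in parallel, which is the property that lets the Transformer train faster than recurrent models.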

Further exploration into the capabilities of pre-trained language models was undertaken by Zhao et al. [21], who assessed their generalization across a spectrum of question-answering datasets. These datasets vary in complexity and require models to perform different levels of reasoning. The study trained and fine-tuned various pre-trained language models on diverse datasets, revealing that models such as RoBERTa and BART consistently outperform others. Notably, the BERT-BiLSTM architecture improved on the baseline BERT model, emphasizing the significance of bidirectionality in developing robust systems for nuanced reasoning.

In a distinct application, Müller et al. (2023) introduced COVID-Twitter-BERT (CT-BERT), which was pre-trained on Twitter data related to COVID-19. This study reinforces the effectiveness of domain-specific models, demonstrating how models pre-trained on in-domain text can better address specific tasks [23]. Another approach, presented by Huang et al. [24], extends the BERT architecture to account for inter-cell connections within tables. Using a large table corpus from Wikipedia, this method retrains parameters to better capture associations between tabular data and surrounding text, thereby improving the efficiency and accuracy of question answering over documents.

Despite BERT’s notable success in language comprehension, adapting it to language generation tasks remains a challenge. Zhang et al. [25] introduced a method termed C-MLM, which adapts BERT to target generation tasks. This approach employs BERT as a "teacher" model that guides conventional Sequence-to-Sequence (Seq2Seq) models, referred to as "students." This teacher-student setup substantially improves Seq2Seq models on various text generation tasks, outperforming strong Transformer baselines.
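The teacher-student idea can be illustrated with a generic knowledge-distillation loss: the student is trained both on the gold label and on the teacher's softened output distribution. The sketch below uses the classic temperature-scaled formulation (cross-entropy on the hard label plus T²·KL(teacher ‖ student)); the weighting `alpha`, the temperature, and all function names are illustrative assumptions, and this is not the exact C-MLM objective.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: List[float], q: List[float]) -> float:
    """KL(p || q) for discrete distributions; terms with p_i == 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    student_logits: List[float],
    teacher_logits: List[float],
    gold_index: int,
    alpha: float = 0.5,        # assumed weight between hard and soft targets
    temperature: float = 2.0,  # assumed softening temperature
) -> float:
    """alpha * CE(gold, student) + (1 - alpha) * T^2 * KL(teacher_T || student_T)."""
    hard_loss = -math.log(softmax(student_logits)[gold_index])
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    soft_loss = kl_divergence(teacher_soft, student_soft) * temperature ** 2
    return alpha * hard_loss + (1 - alpha) * soft_loss


# Hypothetical logits over a 3-word vocabulary for one target position.
print(distillation_loss(student_logits=[1.0, 0.5, 0.1], teacher_logits=[3.0, 0.2, 0.1], gold_index=0))
```

The soft term carries the teacher's relative preferences among non-gold outputs, which is the extra signal a student cannot obtain from one-hot labels alone.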

Further insight into BERT’s capabilities comes from Devlin et al. [26], who pre-train deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context in all layers, using a masked language modeling objective. By fine-tuning the pre-trained BERT model with minimal architectural modifications, state-of-the-art models for tasks such as question answering and language inference can be built, achieving an F1 score of 93 percent on the SQuAD v1.1 dataset.
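Bidirectional pre-training works because some input tokens are hidden and the model must recover them from context on both sides. The sketch below follows the masking recipe described for BERT: about 15% of positions are selected; of those, roughly 80% become `[MASK]`, 10% become a random vocabulary token, and 10% are left unchanged. The function name, the fixed-count selection, and the toy vocabulary are assumptions for illustration.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"


def mask_tokens(
    tokens: List[str],
    vocab: List[str],
    mask_prob: float = 0.15,
    seed: Optional[int] = None,
) -> Tuple[List[str], List[Optional[str]]]:
    """Return (corrupted input, labels); labels hold the original token at selected positions."""
    if not tokens:
        return [], []
    rng = random.Random(seed)
    n_to_mask = max(1, round(len(tokens) * mask_prob))
    positions = rng.sample(range(len(tokens)), n_to_mask)
    corrupted = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for pos in positions:
        labels[pos] = tokens[pos]  # the model is trained to predict this original token
        r = rng.random()
        if r < 0.8:
            corrupted[pos] = MASK               # 80%: replace with [MASK]
        elif r < 0.9:
            corrupted[pos] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, labels


sentence = "the model reads the whole sentence from both directions at once before predicting hidden words".split()
corrupted, labels = mask_tokens(sentence, vocab=["bank", "river", "loan"], seed=0)
print(corrupted)
print(labels)
```

Since the prediction at a masked position may draw on words both before and after it, the encoder learns representations conditioned on left and right context simultaneously.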

In the realm of disaster management, a pioneering automated approach utilizing BERT for extracting infrastructure damage information from textual data has been proposed [28]. This innovative method, trained on reports from the National Hurricane Center, demonstrates high accuracy in scenarios involving hurricanes and earthquakes, outperforming traditional methods. The approach involves two key steps: paragraph retrieval using Sentence-BERT, followed by information extraction with a BERT model. The model was trained on 533 question-answer pairs extracted from hurricane reports, achieving F1 scores of 90.5 percent and 83.6 percent for hurricane and earthquake scenarios, respectively.
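The two-step retrieve-then-extract pipeline can be sketched as follows. As a loud simplification, a bag-of-words vector stands in for the Sentence-BERT embedding, and the start/end scores that a BERT QA head would produce are supplied as hypothetical numbers; only the control flow (pick the most similar paragraph, then the highest-scoring valid answer span) is meant to carry over.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a hypothetical stand-in for a Sentence-BERT embedding."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, paragraphs: List[str]) -> str:
    """Step 1: return the paragraph most similar to the question."""
    q = embed(question)
    return max(paragraphs, key=lambda p: cosine(q, embed(p)))


def best_span(start_logits: List[float], end_logits: List[float], max_len: int = 30) -> Tuple[int, int]:
    """Step 2: token span (start, end) maximizing start + end score, with start <= end."""
    best, best_score = (0, 0), float("-inf")
    for s, s_score in enumerate(start_logits):
        for e in range(s, min(s + max_len, len(end_logits))):
            score = s_score + end_logits[e]
            if score > best_score:
                best, best_score = (s, e), score
    return best


paragraphs = [
    "The hurricane destroyed two bridges near the coast.",
    "The earthquake cracked several school buildings.",
]
context = retrieve("Which bridges did the hurricane destroy?", paragraphs)
tokens = context.split()
# Hypothetical per-token scores a fine-tuned BERT QA head might output for this context.
start = [0.0, 0.1, 0.2, 3.0, 0.5, 0.1, 0.0, 0.0]
end = [0.0, 0.0, 0.0, 0.4, 2.5, 0.3, 0.0, 0.1]
s, e = best_span(start, end)
print(context, "->", " ".join(tokens[s:e + 1]))  # "two bridges"
```

Restricting extraction to a retrieved paragraph keeps the reader model's input within its length limit and cuts the cost of scoring every span in a long report.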

In the domain of document classification, an extensive study [29] pioneered the application of BERT, achieving state-of-the-art results across four diverse datasets. To address concerns about BERT’s computational demands, the study uses knowledge distillation to transfer the fine-tuned model’s knowledge into much smaller bidirectional LSTMs, which achieve comparable performance with significantly fewer parameters.

Furthermore, BERTSUM, a simple variant of BERT designed specifically for extractive summarization, has been shown to improve summarization performance [30]. This research underscores the strength of BERT’s architecture and large-scale pre-training, providing compelling evidence of its effectiveness in extractive summarization, a task on which progress had recently plateaued.
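In extractive summarization, a BERT-based scorer assigns each sentence a relevance score, and the summary is assembled from the top-scoring sentences. The sketch below assumes the scores are already given (hypothetical values standing in for a BERTSUM-style classifier) and applies trigram blocking, a redundancy heuristic that skips any candidate sharing a word trigram with a sentence already selected. The function names and the whitespace tokenization are illustrative assumptions.

```python
from typing import List, Set, Tuple


def trigrams(sentence: str) -> Set[Tuple[str, ...]]:
    words = sentence.lower().split()
    return {tuple(words[i:i + 3]) for i in range(len(words) - 2)}


def select_summary(sentences: List[str], scores: List[float], k: int = 3) -> List[str]:
    """Pick up to k high-scoring sentences, skipping redundant ones, in document order."""
    ranked = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    chosen: List[int] = []
    seen: Set[Tuple[str, ...]] = set()
    for i in ranked:
        grams = trigrams(sentences[i])
        if grams & seen:
            continue  # shares a trigram with an already-selected sentence
        chosen.append(i)
        seen |= grams
        if len(chosen) == k:
            break
    return [sentences[i] for i in sorted(chosen)]  # restore original order


sentences = [
    "BERT reads text bidirectionally.",
    "BERT reads text bidirectionally in every layer.",
    "Summaries keep key facts.",
    "Scores come from a classifier.",
]
print(select_summary(sentences, scores=[0.9, 0.8, 0.7, 0.1], k=2))
```

Here the second sentence is skipped despite its high score because it repeats the trigram "bert reads text" from the first.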

In parallel, novel data augmentation techniques leveraging distant supervision have been explored to enhance BERT’s performance in open-domain question-answering tasks [31]. This approach addresses challenges related to noise and genre mismatch in distant supervision data, illustrating how sensitive models are to the choice of datasets and hyper-parameters.
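Distant supervision creates QA training data without manual span annotation: for a known question-answer pair, any retrieved passage that contains the answer string is treated as a positive example at the matched position, and the remaining passages become negatives. The sketch below shows only this labeling step; the `Example` type and `distant_label` function are hypothetical names, and real pipelines add tokenization and sampling on top.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Example:
    question: str
    passage: str
    answer_start: Optional[int]  # character offset of the answer, or None for a negative


def distant_label(question: str, answer: str, passages: List[str]) -> List[Example]:
    """Label each passage by case-insensitive string match against the known answer."""
    examples = []
    for passage in passages:
        idx = passage.lower().find(answer.lower())
        examples.append(Example(question, passage, idx if idx >= 0 else None))
    return examples


examples = distant_label(
    "Who proposed the Transformer?",
    "Vaswani",
    [
        "The Transformer was proposed by Vaswani et al.",
        "BERT uses masked language modeling.",
    ],
)
for ex in examples:
    print(ex.answer_start, ex.passage)
```

String matching is what makes this data noisy: a passage can mention the answer without actually supporting it, which is one source of the noise the cited work addresses.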

A comprehensive survey [32] analyzed various adaptations of BERT, including BioBERT for biomedical texts, Clinical BERT for clinical notes, and SciBERT for scientific literature. These models have showcased superior performance in their respective fields, with recommendations for future research focusing on their application in tasks such as summarization and question answering [33].

Moreover, research tackling the overload of biomedical literature has employed contextual embeddings from models such as XLNet, BERT, BioBERT, SciBERT, and RoBERTa for keyword extraction, yielding significant improvements in F1 scores [35]. Studies have also highlighted the efficacy of BioBERT and SciBERT in processing biomedical text [36], while another investigation noted that ALBERT outperforms BERT with fewer parameters and faster training times in natural language understanding benchmarks [38].
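One reason ALBERT needs fewer parameters is its factorized embedding: instead of a single V × H vocabulary table, it uses a small V × E table followed by an E × H projection (it also shares parameters across layers, which is not modeled here). The arithmetic below uses illustrative sizes close to a base-sized configuration (V = 30,000, H = 768, E = 128); these values are assumptions chosen for the example.

```python
from typing import Optional


def embedding_params(vocab_size: int, hidden_size: int, embedding_size: Optional[int] = None) -> int:
    """Parameters in the token-embedding layer (position/segment embeddings ignored)."""
    if embedding_size is None:
        return vocab_size * hidden_size  # BERT-style: one V x H table
    # ALBERT-style factorization: V x E lookup, then E x H projection
    return vocab_size * embedding_size + embedding_size * hidden_size


bert_style = embedding_params(30_000, 768)         # 23,040,000
albert_style = embedding_params(30_000, 768, 128)  # 3,840,000 + 98,304 = 3,938,304
print(bert_style, albert_style, round(bert_style / albert_style, 2))
```

With these sizes the embedding layer shrinks by roughly a factor of six, while the hidden size used by the Transformer layers is unchanged.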

The comprehensive literature review reveals that researchers have achieved high accuracy with BERT-based models across various NLP tasks, particularly in question answering [21, 28]. Notable studies have illustrated the efficacy of models like RoBERTa for processing complex PDF documents, as well as the importance of domain-specific adaptations such as BioBERT and SciBERT in the biomedical context. The findings underscore the versatility and robustness of BERT, while also illuminating ongoing challenges, including model sensitivity to varying datasets, scalability issues, and the complexities involved in applying BERT to generative tasks. These insights lay the groundwork for future research aimed at refining BERT-based models and enhancing their applicability across diverse domains.