1. Introduction

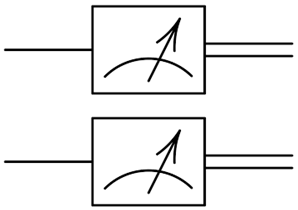

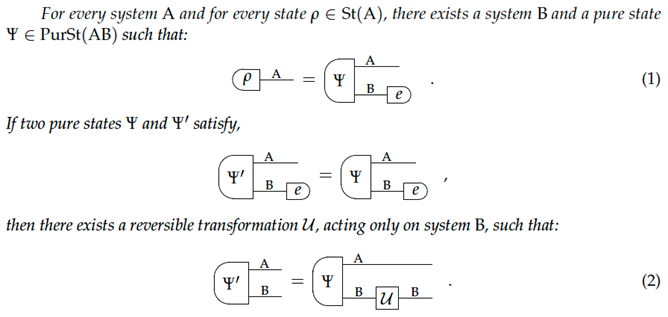

The argument of this article is threefold:

- (1) The article argues that, from its rise in the sixteenth century, in the work of such figures as Galileo Galilei, René Descartes, and Johannes Kepler, to our own time, the advancement of modern physics, as mathematical-experimental science, has been defined by the invention of new mathematical structures, possibly borrowing them from mathematics itself. Among the greatest such inventions (all using differential equations) are:

* Classical physics (CP), based on analytic geometry or calculus from Sir Isaac Newton on;

* Maxwell’s electromagnetic theory, based on the idea of (classical) field and its mathematization, as represented by Maxwell’s equations;

* Relativity, special (SR) and especially general (GR), based on Riemannian geometry;

* Quantum theory (QT), specifically, quantum mechanics (QM) and quantum field theory (QFT), based on the mathematics of Hilbert spaces over ℂ and the operator algebras. (I refer, for simplicity, to the standard version of quantum formalism introduced by John von Neumann, rather than the original ones of Werner Heisenberg’s matrix mechanics, developed by Max Born and Pascual Jordan, Erwin Schrödinger’s wave mechanics, and Paul Dirac’s q-number quantum mechanics, all of which are essentially equivalent mathematically.)

- (2) This article, then, argues that QM and QFT (to either of which the term QT will refer hereafter) gave this thesis a radically new meaning by virtue of the following two features:

- (a) On the one hand, quantum phenomena themselves are defined by purely physical features, as essentially different from all previous physics, beginning with the role of Planck’s constant h in them, features manifested in such paradigmatic experiments as the double-slit experiment or those dealing with quantum correlations.

- (b) On the other hand, QT qua theory, at least QM or QFT, is defined (including as different from CT and RT) by purely mathematical postulates, which connect it to quantum phenomena strictly in terms of probabilities, without representing how these phenomena physically come about.

These two features may appear discordant, if not inconsistent, especially given that previously, in CT and RT, the physical features of the corresponding phenomena and the mathematical postulates defining these theories were connected representationally, by the corresponding theory mathematically representing how the phenomena considered come about. I shall argue, however, that (a) and (b) are in accord with each other, at least in certain interpretations (including the one adopted here), designated as “reality without realism” (RWR) interpretations, introduced by this author previously (e.g., [1, 2, 3]). In Heisenberg’s invention of QM, moreover, and then in Dirac’s invention of both his version of QM and quantum electrodynamics (QED), physics itself emerges from mathematics rather than mathematics following physics, as had been the case in theoretical physics previously.

- (3) The argument outlined in (2) allows this article to offer a new perspective on a thorny problem of the relationships between continuity and discontinuity in quantum physics (QP). Indeed, QP was introduced, with this problem at the core, with Max Planck’s discovery of his black-body radiation law, in 1900, as a physics of discontinuity, specifically as discreteness, to which the term “quantum” originally referred. In particular, rather than being concerned only with the discreteness and continuity of quantum objects or phenomena (which are essentially different from quantum objects), QM and QFT relate their continuous mathematics to the irreducibly discrete quantum phenomena, in terms of probabilistic predictions. At the same time, QM and QFT, at least in RWR interpretations, preclude a representation or even conception of how these phenomena come about, in accord with (2) above. As a complex combination of geometry and algebra, this mathematics contains discrete structures as well, but so does the continuous mathematics of CT or RT. The point here is the fundamental, irreducible role of continuous mathematics in QM and QFT. This subject is rarely, if ever, discussed, apart from previous work by the present author [1, 2, 3, 4]. It will, however, be given new dimensions in this article in connection with QFT and renormalization. The fundamentally probabilistic nature of QT is fully in accord with the experimental evidence available thus far, because no predictions other than probabilistic ones are in general possible in quantum experiments.

These three lines of this article’s argumentation are interconnected and support one another; (2) and (3), especially, are even co-defining.

Modern physics emerged in the work of Descartes, Kepler, Galileo, and others, along with modernity itself. Physics became a mathematical-experimental science that deals with the constitution of matter and, in fundamental physics, such as classical mechanics, relativity (SR and GR), and QT, with the ultimate constitution of matter. Although this is not always sufficiently recognized, mathematics comes first in this conjunction, initially as geometry, which Newton was still compelled to use in presenting his new mechanics, discovered by him by means of calculus (not considered legitimate mathematically at the time), in Philosophiae Naturalis Principia Mathematica [5]. As stated above, this primacy of mathematics, reflected in the full title of Newton’s Principia, was, however, recognized by Heidegger, who, in reflecting on the rise of modern physics in these figures, observed: “Modern science is experimental because of its mathematical project” [6] (p. 93). While the case requires qualifications as concerns other natural sciences, such as biology, it is pretty much straightforwardly correct as concerns modern physics, which need not mean that modern physics does not contain aspects that are not mathematical.

This is the case not only or even primarily because of the role of measurement in it vs. Aristotle’s physics as a physics of qualitative observations, as is often argued, not entirely accurately, although the role of (quantitative) measurement in modern physics is essential, including vis-à-vis Aristotle’s physics. Ancient Greek geometry, geo-metry, was, however, a science [episteme] of physical space, such as that of surfaces of the ground, and measurements in space, which made geometry physical. Modern (and even some ancient Greek) scientists extended geometry to spaces beyond earth, ultimately to the cosmological scale, still defined, in the post-Big-Bang expanding universe, geometrically. Ancient physics, on the other hand, was, primarily, a qualitative theory of motions of entities, material or mental, in, correspondingly, physical or mental domains.

The geometrical view was restored to cosmology by Albert Einstein, with GR (1915), which, while still modern physics, made the physics of gravity a geometry, by using modern mathematics, Riemannian geometry, and which was quickly (around 1917) applied by Einstein to cosmology. By that time, modern geometry was separated, abstracted, from physics, a defining aspect of modern mathematics, with Einstein, accordingly, returning this abstracted mathematics to physics, a move repeated, epistemologically more radically (on RWR lines), by Heisenberg in his invention of QM. In the case of spatiality, this separation was amplified by the role of topology, a modern discipline, although it has an earlier genealogy, in particular, in the work of Leonhard Euler. Euler was one of the key figures, along with Jean-Baptiste le Rond d’Alembert and Pierre-Simon de Laplace, in the history of the relationships between mathematics and physics in the eighteenth century, before the emergence of modern mathematics defined by its separation from physics. As Euler’s work on topology indicates, the situation is more complex, in both directions, that of separating mathematics from physics and that of connecting, or reconnecting, it to physics. This complexity is also found in the work of such major figures of modern mathematics as Carl Friedrich Gauss, Riemann, and Henri Poincaré in the nineteenth century, and David Hilbert, Hermann Weyl, and John von Neumann in the twentieth century, with Poincaré also crossing into the twentieth century, a symbolic figure in this respect. Nevertheless, the move itself of returning modern mathematics, abstracted from physics, to physics was more pronounced in both RT and, epistemologically more radically, QT.

The most fundamental reason for Heidegger’s claim that modern physics is experimental because of its mathematical project was the following. From its emergence on, modern physics uses mathematics to relate to and especially to predict the quantitative data found in experimentally observed phenomena. This holds for CP, relativity (SR and GR), and QT, now the main types of fundamental theories (theories dealing with the ultimate constitution of matter), with several theories comprising each. (CP is not always seen as a fundamental theory, but for the reasons explained below and in detail in [7], it may be, and will be in the present article as well.) Indeed, René Thom argued that this is the case already in Aristotle, insofar as one sees his physics as in effect (qualitative) topology [8, 9, 10]. This argument would imply that, rather than only modern physics, all physics, at least from Aristotle on, is experimental because of its mathematical project. The main difference would, then, be that, unlike Aristotle’s qualitative topology of physics, the mathematics of modern physics is also quantitative, as is required in physics if it needs to relate to measurements of the quantitative data observed in phenomena, rather than only phenomena themselves. That, however, does not invalidate, only qualifies, the claim that, from Aristotle on, physics is defined by mathematics, initially geometry or even proto-topology, although the Pythagoreans defined their physics or at least their cosmology by both geometry and arithmetic. The Pythagorean harmony of the spheres was represented by proportions or, in our terms, rational numbers, a view shattered by the Pythagoreans’ discovery of incommensurable magnitudes, such as those of the diagonal and the side of a square.
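The incommensurability of the diagonal and the side of a square, mentioned above, can be sketched in modern notation. This is the standard textbook argument, not a reconstruction of the Pythagoreans’ own reasoning; the symbols d, s, p, q, and r are introduced here only for illustration, and commensurability is assumed to mean that d/s is a ratio of positive integers.

```latex
% Sketch: the diagonal d and the side s of a square have no common measure.
% Assumption (not in the text): commensurable means d/s = p/q for positive integers p, q.
\begin{align*}
d^2 &= s^2 + s^2 = 2s^2 && \text{(Pythagorean theorem)} \\
\frac{d}{s} &= \frac{p}{q}, \quad \gcd(p,q) = 1 && \text{(supposed ratio in lowest terms)} \\
p^2 &= 2q^2 && \Rightarrow\ p \text{ is even},\ p = 2r \\
4r^2 &= 2q^2 \ \Rightarrow\ q^2 = 2r^2 && \Rightarrow\ q \text{ is even}
\end{align*}
% Both p and q being even contradicts gcd(p,q) = 1, so no such ratio exists.
```

In these terms, the ratio d/s is not a rational number, which is why a cosmology built on proportions alone could not accommodate it.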

Mathematics, beginning with the mathematics emerging at the rise of modernity, roughly around 1600, restored the role of both geometry and (by then) algebra to physics and made physics modern. This is how we still see the universe now, by extending the representation of its geometry and topology, from the Riemannian spaces, accompanied by the tensor calculus on them, of GR, to such stratospheric objects as Calabi–Yau manifolds or Alexandre Grothendieck’s theory of motives. While the expanding macroscopic space of the universe appears to be on average flat, its origin in the Big Bang or what happened before it is a separate matter, especially given that this early history may be quantum in nature. If so, it may not be possible to speak or even conceive of its ultimate constitution, including as either continuous or discrete.

In dealing with the interpretation of QT, one cannot avoid the question of the nature of reality, material and, because of the mathematics of QT, mental, and of our capacity to deal with this reality, by representing, knowing, or conceiving of it, or the impossibility thereof. “Reality” is assumed here to be a primitive concept and is not given an analytical definition. By “reality” I refer to that which is assumed to exist, without making any claims concerning the character of this existence, claims that, as explained below, define what is called realism, or in the case of mathematics, Platonism (although “mathematical realism” is used as well). On the other hand, the absence of such claims allows one to place this character beyond representation or, which is the view assumed in this article, even beyond conception. This placement is considered here under the heading of reality without realism (RWR) following [1, 2]. I understand existence as a capacity to have effects on the world. The assumption that something is real, including of the RWR-type, is made, by inference, on the basis of such effects, as experiential phenomenal effects, rather than as something merely imagined. By the same token, RWR interpretations allow for and even require a representation of these effects but not a representation or even a conception of how they come about. As RWR, the ultimate reality that makes them possible cannot be experienced as such, but only makes possible the assumption of its existence through these effects.

I might note that it is more rigorous to see a different interpretation of a given theory as forming a different theory, because an interpretation may involve concepts not shared by other interpretations. For simplicity, however, I shall continue to speak of different interpretations of QM or QFT. On the other hand, as is common, in the case of CT, comprised of several theories, and relativity, SR or GR (which, too, contains different theories), I shall refer to theories themselves, because most interpretations of these theories, including the ones assumed here, are realist. Also, like QP or CP, relativity is not restricted to theories, SR and GR, and I shall, when necessary, qualify when I refer to relativistic phenomena rather than to SR or GR. I shall also use the term “view” to indicate a broader perspective grounded in a given interpretation, such as the realist view vs. the RWR view.

The concept of RWR, arguably, originated in the foundations of mathematics, where it was suggested by Henri Lebesgue, one of the founders of modern integration and measure theory, in the wake of the paradoxes of Georg Cantor’s set theory [11], although the idea has remained marginal and rarely, if ever, adopted in mathematics [2]. Indeed, its origin there has been barely noticed, if at all, at least in what it signals philosophically, as considered here. The term RWR was not used either. The idea has been better known, if debated, in fact always remaining a minority view, in physics, following QM, especially in Niels Bohr’s RWR interpretation of it, the type of interpretation adopted here, along with the designation itself following [1, 2]. (Bohr did not use this designation either.) RWR interpretations place the *ultimate* reality responsible for quantum phenomena beyond conception. At the same time (hence, my emphasis) these interpretations assume that such a conception or even representation is possible in considering quantum phenomena as observed phenomena, which are effects of this ultimate reality. The existence of this reality is inferred from these effects, predicted by the mathematics of QM. While it was fundamentally defined by new (noncommutative) algebra, QM also introduced a new form of geometry, that of Hilbert spaces over ℂ and the operator algebras, into physics. Just as the algebra of QM, this geometry had already existed in mathematics. It was one of Hilbert’s many contributions to geometry, although usually seen as one to functional analysis, as it was as well. Initially, QM did not use this concept, and it was only formalized, in fact axiomatized, in these terms by von Neumann [12], who also introduced the term Hilbert space in mathematics. The concept was invented, in a less abstract form, by Hilbert. In the case of CP and relativity, a mathematical, in fact (while involving algebra and analysis, in this case commutative) geometrical representation is possible at all levels of reality considered.

The assumption of the independent existence of nature or matter essentially amounts to the assumption that it existed before we existed and will continue to exist when we will no longer exist. This assumption has been challenged, even to the point of denying that there is any material reality vs. mental reality, with Plato as the most famous ancient case and Bishop Berkeley as the most famous modern case. Such views are useful in suggesting that any conception of how anything exists, or even that it exists, including as independent of human thought, belongs to thought. It need not follow, however, that something which such concepts represent, or to which they relate otherwise than by representing it, possibly placing it beyond representation or even conception, does not exist. Berkeley assumed that God does exist as this type of independent reality. Plato also saw the ultimate nature of ideal reality theologically, as divine, even if connected to human thought. In any event, that any conception of how anything exists, or even that it exists (including as beyond thought), still belongs to thought, need not imply that something beyond thought does not exist.

This was indeed Lebesgue’s point made in 1905. This was two decades before QM, which, introduced in 1925-1926 by Heisenberg and Schrödinger, brought the RWR view into the debate concerning fundamental physics. QT, discovered by Planck in 1900, was barely introduced by the time of Lebesgue’s comment, and whether Lebesgue knew about it or not, it could not have been his source, because it had not posed this type of possibility then. Bohr’s 1913 atomic theory used the RWR view in dealing with the “quantum jumps” (transitions between stationary, constant-energy, states of electrons in atoms), still nearly a decade after Lebesgue’s comment. Lebesgue’s reasons were mathematical. Lebesgue observed, in commenting on the paradoxes of set theory, shaking the foundations of mathematics then, that the fact that we cannot imagine or mathematically define objects, such as “sets,” that are neither finite nor infinite, does not mean that such objects do not exist [11] (pp. 261-273) [13] (p. 258). Lebesgue did not specify in what type of domain, material or mental, such entities might exist. His observation is, however, a profound reflection of the possible limit of our thought concerning the nature of reality, either material or mental. Similarly, the fact that we cannot conceive of entities that are neither continuous nor discontinuous does not mean that such entities do not exist, including in nature, a possibility brought about by QP. Indeed, Lebesgue’s insight was also a response to the problem of the continuum and the debates concerning it shaped by Cantor’s work and especially his continuum hypothesis, which was given a deeper and more radical understanding, defining our view now, by Gödel’s incompleteness theorems of 1931 and then Paul Cohen’s proof of the undecidability of the continuum hypothesis in the 1960s. The undecidability of Cantor’s continuum hypothesis suggests that the concept of continuum may not be realizable mathematically. Whether the continuum exists in nature, for example, as space, time, or motion, had been questioned long before then, even by the pre-Socratics.

It need not follow, however, that the ultimate constitution of physical reality, spatial or temporal, is discrete, although this view has been proposed. Instead, it may be beyond anything that we can conceive, and hence no more discrete than continuous, in short, RWR, which it is assumed to be in RWR interpretations. On the other hand, in RWR interpretations reality is assumed to exist in nature, in accord with Lebesgue’s observation that something that cannot be thought by us can, nevertheless, exist as real.

As Lebesgue appears to have understood as well, it is equally impossible to be certain that any such reality does exist. The possibility of its existence, however, reflects both possible limits of our thought and our thought’s capacity to conceive of this possibility. (While not originating in the RWR view, Gödel’s incompleteness theorems and the undecidability of the continuum hypothesis may be open to and may entail this view of mental reality in mathematics [2].)

Assuming this type of reality, even if only with a practical justification (no other justification is claimed in this article or is possible in the RWR view), is a philosophical wager. It was, however, this wager that led Heisenberg to QM, defined by a new mathematics combining both continuity and discreteness, and correlatively geometry and algebra, the mathematics enabling QM to predict quantum phenomena, irreducibly discrete relative to each other. Given the nature of the ultimate reality responsible for quantum phenomena as beyond representation or even conception, it is not surprising that, in accord with the second line of my argument, QM or QFT is defined (including as different from CT and RT) by purely mathematical postulates, which connect either theory to quantum phenomena strictly in terms of probabilities. Indeed, as explained below, it follows that under these conditions, these predictions could only be probabilistic, which is, as noted, strictly in accord with quantum experiments. By the same token, the nature of quantum probability is different from that of probability in CP, as in classical statistical physics or chaos theory. There the recourse to probability is a practical, epistemological matter, due to our lack of knowledge concerning the underlying behavior of the complex systems considered, while the behavior of their elementary constituents could in principle be predicted exactly, deterministically. In the case of quantum systems, in RWR interpretations, there is just no possible knowledge or even conception concerning the behavior of a quantum system, no matter how simple, which makes all quantum predictions in general probabilistic. Thus, while in CP some predictions are, ideally, exact, deterministic, and others are probabilistic, in QP all predictions are probabilistic.

These circumstances change the nature of causality in QT, compelling me to distinguish between “classical causality” and “quantum causality.” By “classical causality,” commonly called just “causality,” I refer to the claim that the state, X, of a physical system is determined, in accordance with a law, at all future moments of time once its state, A, is determined at a given moment of time, and state A is determined by the same law by any of the system’s previous states. This assumption implies a concept of reality, which defines this law, thus making this concept of causality ontological or realist. Some, beginning with P. S. Laplace, have used “determinism” to designate classical causality. I prefer to use “determinism” as an epistemological category referring to the possibility of predicting the outcomes of classically causal processes ideally exactly. In classical mechanics, when dealing with individual or small systems, both concepts are co-extensive. On the other hand, classical statistical mechanics or chaos theory are classically causal but not deterministic in view of the complexity of the systems considered, which limits us to probabilistic or statistical predictions concerning their behavior. The main reason for my choice of “classical causality,” rather than just causality, is that, in view of the nature of quantum probability in RWR interpretations, it is possible to introduce alternative, probabilistic, concepts of causality, applicable in QM, at least in RWR interpretations, where classical causality does not apply [1] (pp. 207-218). I shall properly define the concept of quantum causality in Section 2, only stating its main feature here: an actual quantum event, A, allows one to determine and predict which events may happen with one probability or another, but, in contrast to classical causality, without assuming that any of these events will necessarily happen, regardless of outside interference. (An outside interference can change what can happen when classical causality applies, while, however, restoring classical causality after this interference.) As Bohr stressed:

[I]t is most important to realize that the recourse to probability laws under such circumstances is essentially different in aim from the familiar application of statistical considerations as practical means of accounting for the properties of mechanical systems of great structural complexity. In fact, in quantum physics we are presented not with intricacies of this kind, but with the inability of the classical frame of concepts to comprise the peculiar feature of indivisibility, or “individuality,” characterizing the elementary processes. [14] (v. 2, p. 34)

The “indivisibility” refers to the indivisibility of phenomena in Bohr’s sense, defined by the impossibility of considering quantum objects independently of their interactions with measuring instruments, as explained in detail below. “Individuality” refers to the assumption that each phenomenon is individual and unrepeatable, is the outcome of a unique act of creation, as well as discrete relative to any other phenomenon, while also embodying the essential randomness of QP. This kind of randomness, quantum randomness, is not found in CP. This is because even when one must use probability in CP, at bottom one deals with individual processes that are classically causal and in fact are at bottom deterministic, and, in the first place, allow for a (realist) representation by means of classical mechanics. (There are situations, such as those of the Einstein-Podolsky-Rosen (EPR) type of experiments, where predictions concerning certain variables are, ideally, possible with probability equal to one, but it can be shown that, in view of the conditional nature of these predictions, they still do not entail classical causality [1] (pp. 207-218).)
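The distinction drawn above between classical causality and determinism can be put schematically. What follows is a minimal sketch in notation assumed here for illustration only (a state space M, an evolution map Φ given by the law, and a probability density ρ over states); none of these symbols are the article’s own, and the last line assumes an invertible, volume-preserving (Hamiltonian) evolution.

```latex
% Assumed notation (not in the source): \Phi_{t,s} maps the state at time s to the state at time t.
\begin{align*}
X(t) &= \Phi_{t,t_0}(A), \qquad t \ge t_0
  && \text{(state $A$ at $t_0$ fixes all later states)} \\
A &= \Phi_{t_0,s}\bigl(X(s)\bigr), \qquad s \le t_0
  && \text{($A$ is fixed by any earlier state, same law)} \\
\Phi_{t,s} \circ \Phi_{s,r} &= \Phi_{t,r}, \qquad \Phi_{t,t} = \mathrm{id}
  && \text{(consistency of the law, $r \le s \le t$)} \\
\rho_t(x) &= \rho_{t_0}\bigl(\Phi_{t,t_0}^{-1}(x)\bigr)
  && \text{(statistical case: only a density is known)}
\end{align*}
```

The first three lines express classical causality. Determinism, in the epistemological sense used here, is the further condition that A can be known well enough to compute X(t) ideally exactly. In the fourth line each underlying trajectory is still classically causal, but predictions are probabilistic because only ρ is accessible. In RWR interpretations of QT, by contrast, no underlying map of the first kind is assumed at all.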

It follows, in accord with the third line of my argument, that if the ultimate nature of the reality responsible for quantum phenomena cannot be assumed to be either continuous or discrete, there is no special reason to assume that the mathematics required to predict the data observed in quantum phenomena should be discrete or even finite, as some argue to be necessary, rather than continuous. The reasons for this argument are most commonly the residual problems of QFT, dealing with the appearance of mathematical infinities and divergent objects, such as certain integrals, related to renormalization. These infinities would disappear if the mathematics used is finite. In the present, RWR, view, this mathematics could be either, insofar as it makes correct predictions concerning the phenomena considered, which are always discrete relative to each other and are represented by CP. I shall argue, however, that the nature of quantum phenomena imposes requirements on this mathematics if it is discrete (including finite), specifically that it has to have a structural complexity analogous and related to that of the continuous mathematics that has been used in QT so far. The nature of this complexity and these relations is subtle and will be explained in Section 5. This view is one of the main contributions of this article, under the heading of the mathematical complexity principle.

The article will proceed as follows. The next section deals primarily with classical physics and relativity, as defined, as continuous physics, by their mathematical projects. It also revisits Aristotle’s physics, with which modern physics retains its connections, in particular in the case of CP and RT, by virtue of realism, continuity, and causality, as the physics of the continuum. Sections 3 and 4 consider the mathematical-experimental architecture of QT, specifically QM and QFT, focusing on its experimental side in Section 3 and mathematical side in Section 4, while, at the same time, assuming that both sides are mutually complicit, with mathematics governing this complicity. Section 5 deals with the relationships between continuity and discontinuity in QT and fundamental physics in general. My main point is, again, that, while experimentally defined by discontinuity, QT is mathematically defined by continuous mathematics, although it does contain discrete mathematical structures. (That, however, is also true about CT and RT.) I then consider the question of a possible discrete QT in high-energy regimes, now governed by QFT, in relation to renormalization. Section 6 is a philosophical postscript.

2. The Physics of Continuum, from Aristotle to Einstein, and Beyond

I begin with the definition of observation and measurement in physical theory, via Bohr’s remark concerning the subject. Bohr was commenting on quantum experiments. In effect, however, he was also establishing, along with distinguishing aspects of QP, a continuity between the concepts of observation and measurement in all modern physics (classical, relativistic, and quantum) and even, as concerns observation, Aristotelian physics. In Aristotle’s physics there was no measurement, at least not as the main ingredient of physics, although some elements of measurement could be found there. Modifying Bohr’s formulation to bring out this continuity (with the new features of QM considered below):

Any measurement in a physical theory refers either to a fixation of the initial state, or an initial preparation, at t0 or to the test of such predictions, predicted observations, at t1, and it is the combination of measurements of both kinds which constitutes a well-defined physical experiment. [15] (p. 101; paraphrased)

One must refer to a physical theory, because, while “ultimately, every observation can, of course, be reduced to our sense perceptions, … in interpreting observations use has always to be made of theoretical notions” [14] (v. 1, p. 54). In QM, these notions included those defining quantum phenomena, specifically the role of h in them, which leads to other new features of these phenomena vis-à-vis CT and RT. (On that earlier occasion, in 1927, Bohr expressly refers, under the heading of quantum postulate, to the role of h as “symbolizing” the nature of quantum phenomena, the subject considered in [1] (pp. 171-174) [16].) In fact, the above definition applies even in Aristotelian physics, with which classical physics retains more proximities than is often thought, although the fact that in Aristotle’s physics one deals only with observation rather than measurement remains crucial. (This difference, as noted, remains in place, even assuming that Aristotle’s key concepts are proto-topological and in this sense, qualitatively, mathematical.) It may be instructive to comment on Aristotle’s physics first. I shall use the following diagram, hereafter Diagram A:

E0 (q0) -------------------------------------------→ E1 (q1)

t0 t1

This diagram as such applies in all physics. What changes are the concepts involved and connections between them, which also indicates that diagrams are rarely sufficient and may, if their concepts are not properly considered, be misleading. In Aristotle’s physics, events E0 and E1 of observing positions q0 and q1 were assumed to be connected by a continuous, classically causal process represented qualitatively to our phenomenal intuition. This representation defines observations, in accordance with (in our language) the topology of continuity and, in the case of some motions, such as those of projectiles, geometry of straight lines. Thus, as noted, there is still some mathematics defining experimental physics.

CP changed, and in several key respects corrected, Aristotle’s physics. The nature of these corrections is well known and need not be rehearsed here. My concern is the conceptual (mathematical-experimental) transformation enacted by modern physics, which retains connections to Aristotle’s physics on account of continuity and causality. In the same diagram:

E0 (q0) -------------------------------------------→ E1 (q1)

t0 t1

events E0 and E1 of observing and quantitatively measuring coordinates q0 and q1, respectively, are still assumed to be connected by a continuous causal process, but now represented mathematically, specifically geometrically. This representation may be seen as a mathematical refinement of the general phenomenal representation of bodies and motions in space and time, the representation on which Aristotle’s physics is based, refining it only philosophically.
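As a hedged illustration of how Diagram A reads in CP, consider the simplest textbook case. The setting is an assumption made here for illustration, not an example from the article: a body of mass m moving in one dimension under a constant force F, with the initial velocity v0 measured at t0 together with q0.

```latex
% Assumptions (not in the source): one dimension, mass m, constant force F,
% initial velocity v_0 measured at t_0 along with the position q_0.
\begin{align*}
m\,\ddot q(t) &= F, \qquad q(t_0) = q_0, \quad \dot q(t_0) = v_0 \\
q(t) &= q_0 + v_0\,(t - t_0) + \frac{F}{2m}\,(t - t_0)^2, \qquad t_0 \le t \le t_1 \\
q_1 &= q(t_1) = q_0 + v_0\,(t_1 - t_0) + \frac{F}{2m}\,(t_1 - t_0)^2
\end{align*}
```

Here the arrow of the diagram corresponds to the continuous curve q(t) between t0 and t1, and the measurement at t1 tests the predicted value q1, ideally exactly.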

Galileo was, arguably, the most important figure in establishing modern physics as a mathematical-experimental science, while also giving geometry the main, including cosmological, significance in physics. This significance has not been diminished by calculus, which introduced new algebraic dimensions into physics. Algebra was far from absent in Galileo, or Descartes, but geometry, a heritage of ancient Greek mathematics, remained dominant, not least as the way of thinking about physics, including in Newton, his use and the very invention of calculus notwithstanding. That was only to change with Heisenberg and QM, compelling Einstein to speak, rather disparagingly and not entirely accurately, of Heisenberg’s method as “purely algebraic.” In fact, Heisenberg’s “method” also brought new geometry into physics, along with new algebra [1] (pp. 111-126).

Galileo, as Edmund Husserl observed, inherited geometry itself as an already established field, preceding his physics and thus modern physics: “The relatively advanced geometry known to Galileo, already broadly applied not only to the earth but also in astronomy, was for him, accordingly, already pregiven by tradition as a guide to his thinking, which [then] related empirical matters to the mathematical ideas of limit” [17] (p. 25; emphasis added). It is the latter aspect of his thinking that is crucial and distinguishes his thinking from that of his predecessors, such as Copernicus, Descartes, and Kepler (of whose work Galileo was not aware). Galileo also gave geometry, as the science of physical space, a cosmological meaning, and reciprocally the cosmos a mathematical, geometrical meaning, thus making the cosmos mathematical, or at least mathematically representable.

It is not only or primarily a matter of considering the solar system geometrically, which was already the case in his predecessors. Indeed, even apart from the fact that a version of the Copernican system was known to the ancient Greeks, the Ptolemaic system was cosmological, too. Galileo’s thinking was much more radical. Galileo replaced philosophy with mathematics, especially geometry (although he also used functions, and thus algebra), in physics, thus bracketing philosophy, or at least the phenomenological part of it, from physics. In Galileo and in all modern physics after him, the primordial grounding of physics was in mathematics, which was primordially grounded only in itself, rather than in philosophy. It was this break with philosophy (in both physics and mathematics) that worried Husserl and was even seen by him as a crisis, extending to our own time [17]. Galileo’s cosmos is a mathematical cosmos, which is written as “the book of nature” in the language of geometry. On the other hand, the idea of the book of nature, known to his precursors in physics, was borrowed by Galileo from theology. Thus, this new scientific cosmology emerged from two different trajectories or technologies of thought, theological and mathematical, akin to the way present-day smartphones have emerged from and merged two technologies, the telephone and the computer. Galileo’s thinking was more multiply shaped, given philosophical trajectories of his thinking, from Plato and before on, or literary and artistic ones, such as Dante’s poetry or Titian’s paintings, mentioned by Galileo. In 1588, at the invitation of the Florentine Academy, Galileo also gave two lectures “On the Shape, Location, and Size of Dante’s Inferno,” which secured him a position as a lecturer in mathematics at the University of Pisa. (There are also more trajectories to the genealogy of the smartphone.) In Galileo’s famous words:

Philosophy is written in that great book which ever is before our eyes—I mean the universe— but we cannot understand it if we do not first learn the language and grasp the symbols in which it is written. The book is written in mathematical language, and the symbols are triangles, circles and other geometrical figures, without whose help it is impossible to comprehend a single word of it; without which one wanders in vain through a dark labyrinth. [

18] (pp. 183–184)

Galileo, thus, departs from Aristotle’s physics both in making his physics that of nature alone (sometimes referred to as “the Galilean reduction”) and in assuming a fundamentally mathematical character of the philosophy written in the book of nature, which physics, as conceived by him, needed to read. In sum, more than anyone else before him, Galileo gave mathematics its defining role in modern physics, a role eventually codified by Newton in Principia [5], and made modern physics experimental by making it mathematical, as Husserl and, following him, Heidegger argue [6] (p. 93). As noted, a predominantly geometrical view was restored to cosmology by Einstein in GR, which made the physics of gravity a geometry by using modern mathematics (by then developed separately from physics), Riemannian geometry. Maxwell’s electromagnetism, Einstein’s main model, was a key precursor physically, but it was not as expressly geometrical as was Einstein’s GR, which Einstein tried to bring together with electromagnetism for the rest of his life without ever succeeding. While, however, similarly to QT, using, especially in GR, modern mathematics previously divorced from physics, RT remained a theory of the continuum, inheriting this aspect from classical physics, although RT also brought fundamental changes into physics. In the case of SR:

E0 (q0) -----------------------------------------------→E1 (q1)

t0 t1

Events E0 and E1 of observing positions q0 and q1, respectively, are still assumed to be connected by a causal process, but now represented by the equations of special relativity, including Lorentz’s equation for the addition of velocities. As such, SR represents a radical ontological and epistemological transformation of the concept of motion, reflected by the role of c, which is a measurable numerical constant. SR is grounded in two main postulates. Postulate 1 is that of Galilean relativity. Postulate 2 is a new physical postulate, represented mathematically.

- 1.

The laws of physics are invariant in all inertial frames of reference.

- 2.

The speed of light, c, in vacuum is the same for all observers, regardless of the motion of light source or observer.

The difference from classical physics is the second postulate. It becomes part of the first postulate if the laws of physics include Maxwell’s equations, while the laws of Newton’s mechanics no longer apply without modifications, such as Lorentz’s equation for addition of velocities, which mathematically represented the second postulate. This equation was part of the new mathematics of relativity. Relativity, thus, posed insurmountable difficulties for our general phenomenal intuition, because the relativistic law of addition of velocities (defined by the Lorentz transformation),

$$s = \frac{v + u}{1 + vu/c^{2}}$$

for collinear motion, runs contrary to any possible intuitive conception of motion. We cannot conceive of this kind of motion by our general phenomenal intuition. Unlike the concept of motion in classical physics, this concept of motion is thus no longer a mathematical refinement of a daily concept of motion. It is an independent physical concept that was, however, still represented mathematically, in contrast to QM or QFT in RWR interpretations. There, the ultimate reality considered (responsible for quantum phenomena) is beyond any conception we can form, including a mathematical one.
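As a minimal numerical sketch of why this law resists daily intuition, one may compare the classical sum of two collinear velocities with the standard relativistic form (u + v)/(1 + uv/c²). The function names and the sample speeds below are illustrative assumptions, not part of the argument of this article.

```python
C = 299_792_458.0  # speed of light in vacuum, in m/s


def galilean_sum(u: float, v: float) -> float:
    """Classical (Newtonian) addition of collinear velocities."""
    return u + v


def relativistic_sum(u: float, v: float, c: float = C) -> float:
    """Relativistic addition of collinear velocities: (u + v) / (1 + u*v/c^2)."""
    return (u + v) / (1.0 + u * v / c**2)


if __name__ == "__main__":
    # Hypothetical sample values: everyday speeds, then speeds close to c.
    for u, v in [(30.0, 20.0), (0.9 * C, 0.9 * C), (C, 0.5 * C)]:
        print(
            f"u = {u / C:.3e} c, v = {v / C:.3e} c: "
            f"classical = {galilean_sum(u, v) / C:.6f} c, "
            f"relativistic = {relativistic_sum(u, v) / C:.6f} c"
        )
```

At everyday speeds the two rules agree to within measurement error, which is why classical motion can be a refinement of daily intuition. Near c they diverge: the relativistic sum never exceeds c, and composing c with any velocity returns c, in accord with the second postulate.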

SR was, nevertheless, the first physical theory that defeated our ability to form a visualization of an individual physical process, although the concept of (classical) field in electromagnetism already posed complexities in this regard.

GR, in accord with my argument, was a remarkable example, arguably the greatest since Newton’s mechanics, of advancing physics by means of new mathematical structures, in this case expressly borrowing them from mathematics itself, namely from Riemannian geometry. It also retained and enhanced epistemological aspects of SR as concerns the difficulty and ultimately impossibility of grasping its physics by means of our general phenomenal intuitions, thus only allowing for a mathematical realism.

Bohr did not miss this point. He said: “I am glad to have the opportunity of emphasizing the great significance of Einstein’s theory of relativity in the recent development of physics with respect to our emancipation from the demands of [intuitive] visualization” [14] (v. 1, pp. 115-116). “Emancipation,” a word rarely found in Bohr’s writings, is not a casual choice. Nevertheless, SR or GR still offers a mathematically idealized representation of the reality considered, thus making this reality available to thought, even if not to our general phenomenal intuition. This is no longer possible in QP, in RWR interpretations, such as that of Bohr (in its ultimate version) or the one assumed here.

Bohr saw the existence, at least a possible existence, of a reality beyond the reach of representation or even thought itself, as ultimately responsible for what is available to thought and even to our immediate phenomenal perception in quantum phenomena, as “an epistemological lesson of quantum mechanics” [14] (v. 3, p. 12). At least, this was an epistemological lesson of his interpretation. Perhaps, however, physics cannot teach us its epistemological lessons otherwise than by an interpretation. There appears, however, to be more consensus as concerns the interpretation of CP and relativity as realist theories, although this consensus is not entirely unanimous either. It has been questioned, for example, whether the mathematical architecture of relativity corresponds to the architecture of nature, as opposed to serving as a mathematical model for correct predictions concerning relativistic phenomena (e.g., [19]). In this case, these predictions are deterministic, as opposed to the irreducibly probabilistic predictions of QT, even in dealing with the most elementary individual quantum phenomena. This is a fundamental difference due to the impossibility, in principle, of controlling the interference of observational instruments with the quantum object under investigation, regardless of interpretation. When it comes to QM or QFT, the proliferation of diverse (and sometimes incompatible) interpretations and of the debates concerning them has been uncontainable, and continues with undiminished intensity and no end in sight.

3. Quantum Discontinuum: “Interactions between Atoms and the External World”

QP brought the “emancipation” from the demands of visualization invoked by Bohr to the level of making the ultimate reality responsible for quantum phenomena “invisible to thought.” The meaning of this expression, introduced in [7], is essentially the same as that of reality without realism. At least in the corresponding, RWR interpretations, QT, specifically QM and QFT, placed this reality beyond the reach of thought, any thought, even mathematical thought, rather than only our general phenomenal perception or intuition. Similarly to the role of c in SR, this situation is reflected in the role of Planck’s constant, h, which is, just like c, numerical and experimentally observed. Indeed, as noted, quantum phenomena are defined by the fact that in considering them, h must be taken into account.

Speaking of the ultimate reality responsible for quantum phenomena as “invisible to thought” follows Bohr’s appeal to the impossibility of visualization of this reality. Bohr’s use of the term “visualization” in part owed to the German term for intuition, Anschaulichkeit, which etymologically relates to what is visualizable. Bohr, however, gave it a broader meaning of being available to our general phenomenal intuition. This availability is, as explained, no longer possible in relativity, which still allowed for a mathematical conception and representation of all levels of reality considered. In Bohr or the present view, this is no longer possible in the case of the ultimate reality responsible for quantum phenomena. It is, however, still possible in considering quantum phenomena, assumed to be represented by classical physics. We can “see” in our conscious phenomenal experience what is observed in quantum experiments. We can also consciously experience the mathematics used to predict what is observed. As a result, both can be communicated unambiguously, and in this sense, but in the present view, only in this sense, can be assumed to be objective.

At least in RWR interpretations, however, we cannot experience or represent, including mathematically, or even conceive of, or think, how quantum events that we see come about. In other words, we cannot perceive or even conceive of the reality ultimately responsible for what we can phenomenally experience, but we see the effects of this reality in our phenomenal experience. In fact, nobody has ever seen (no matter how good one’s instruments) a moving electron or photon. One can only see a trace, say, a spot on a silver bromide plate, left as an effect of the interaction between an electron or a photon and the instrument used, capable of this interaction and creating this trace. Quantum physics is a physics of traces, the origins of which can never be reached experimentally. This is the case regardless of interpretation. RWR interpretations, however, in principle preclude any representation or even conception, physical or mathematical, of how quantum phenomena come about. In fact, in the present interpretation, the concept of a quantum object, such as an electron or photon, is only applicable at the time of observation and not independently [1, 2]. As stated, we also cannot form a general phenomenal conception of the reality considered in relativity or the reality of the electromagnetic field but only of the effects of either reality. Either reality can, however, be mathematically represented and thus made “visible to thought,” in contrast to the ultimate reality responsible for quantum phenomena in RWR interpretations. This reality is not only invisible to our phenomenal perception but is also invisible to thought: it is beyond the reach of thought.

In order, however, to avoid the complexities involved in using the term visualization (or the impossibility thereof), which can be associated with different and sometimes divergent concepts, I shall henceforth, in considering RWR interpretations, mostly speak of the ultimate reality responsible for quantum phenomena as “beyond conception” or “beyond thought.” Bohr never expressly spoke in these terms, but his ultimate interpretation, introduced around 1937, assumes this view. The present interpretation, but not that of Bohr, also assumes that the concept of a quantum object, such as an electron or photon, is only applicable at the time of observation but not independently of observations [1, 2, 3]. This aspect of the present interpretation will, however, not be discussed here. In any event, RWR interpretations place the ultimate character of the reality responsible for quantum phenomena beyond representation or even beyond conception, in the strong form of these interpretations, such as the one assumed here. Hereafter, unless qualified, RWR interpretations will refer to strong versions of them. Realist or (another common term) ontological interpretations offer a representation or at least a conception of this reality, usually in terms of or connected to the mathematical formalism of a theory. (For a comprehensive discussion of RWR interpretations, see [1, 2].)

Shortly before his paper containing his discovery of QM was published, Heisenberg wrote to Ralph Kronig: “What I really like in this scheme [QM] is that one can really reduce all interactions between atoms and the external world to transition probabilities” (Letter to R. Kronig, 5 June 1925; cited in [20], v. 2, p. 242). By referring to the “interactions between atoms and the external world,” this statement suggests that QM was only predicting the effects of these interactions observed in quantum phenomena. Quantum phenomena are defined in observational instruments, and amenable, along with the observed parts of these instruments, to a treatment by classical physics. The latter circumstance became crucial to Bohr’s interpretation, eventually leading him to his concept of [quantum] phenomenon, discussed below. Establishing these effects required special instruments. Human bodies are sufficient in some cases and are good models of the instruments used in classical physics, but not so in quantum physics, which is irreducibly technological, or in relativity, which is also irreducibly technological, because its effects cannot be perceived by human bodies alone [3]. Their irreducibly technological nature, however, still allows SR and GR to be classically causal, in fact deterministic, and in the first place, realist theories. This is because the observational instruments used (rods and clocks) allowed one to observe the systems considered without disturbing them and (ideally) mathematize their independent behavior. This is no longer possible in considering quantum phenomena, a fact that poses major difficulties for realism, even if it does not preclude it. Heisenberg’s view just cited was adopted by Bohr as a defining feature of his interpretation in all its versions, with a special emphasis on the role of classical physics in describing quantum phenomena and data contained in them. (It should be kept in mind that Bohr adjusted his views, sometimes significantly, a few times, eventually arriving at his ultimate, RWR, interpretation around 1937, after a decade of debate with Einstein. This requires one to specify to which version of his interpretation one refers, which I shall do as necessary, while focusing on his ultimate interpretation, unavoidably in the present interpretation of his interpretation. Unless qualified, “Bohr’s interpretation” will refer to his ultimate interpretation. The designation “the Copenhagen interpretation” requires even more qualifications as concerns whose interpretation it is, say, that of Heisenberg, Dirac, or von Neumann, which compels me to avoid this designation entirely.)

The idea of placing the ultimate reality responsible for quantum phenomena beyond representation was adopted by Bohr, following Heisenberg, in the immediate wake of Heisenberg’s discovery of QM (before Schrödinger’s wave mechanics was introduced):

In contrast to ordinary mechanics, the new quantum mechanics does not deal with a space–time description of the motion of atomic particles. It operates with manifolds of quantities which replace the harmonic oscillating components of the motion and symbolize the possibilities of transitions between stationary states in conformity with the correspondence principle. These quantities satisfy certain relations which take the place of the mechanical equations of motion and the quantization rules [of the old quantum theory].

Bohr’s strong form of the RWR interpretation, which placed the reality ultimately responsible for quantum phenomena beyond conception, was introduced a decade later, shaped by Bohr’s debate with Einstein. In an article, arguably, introducing this interpretation, he referred to “our not being any longer in a position to speak of the autonomous behavior of a physical object, due to the unavoidable interaction between the object and the measuring instrument,” which by the same token, entails a “renunciation of the ideal of [classical] causality in atomic physics” [21] (p. 87). Obviously, if we could form a concept of this behavior, we would be able to say something about it. In reflecting on this interpretation in 1949, in response to Einstein’s criticism, Bohr amplified this view: “In quantum mechanics, we are not dealing with an arbitrary renunciation of a more detailed analysis of atomic phenomena, but with a recognition that such an analysis is in principle excluded.”

As noted, “the unavoidable interaction between the object and the measuring instrument” defined this new epistemological situation, always dealing, to return to Heisenberg’s formulation, with “the interactions between atoms and the external world” and “transition probabilities” between these interactions. As Bohr argued from the outset of his interpretation of QM, in CP and relativity “our … description of physical phenomena [is] based on the idea that the phenomena concerned may be observed without disturbing them appreciably,” which also enables one to identify these phenomena with the objects considered [14] (v. 1, p. 53; emphasis added). By contrast, “any observation of atomic phenomena will involve an interaction [of the object under investigation] with the agency of observation not to be neglected” [14] (v. 1, p. 54; emphasis added). This argument was retained in all versions of his interpretation, all grounded in the irreducible role of observational instruments, as a necessary part of any agency of observation, in the constitution of quantum phenomena. One should keep in mind the subtle nature of this contrast: the interaction between the object under investigation and the agency of observation gives rise to a quantum phenomenon, in fact in a unique act of creation, rather than disturbs it [14] (v. 2, p. 64). Relativity, again, represented a step in this direction, insofar as, in contrast to Newtonian mechanics, space and time were no longer seen as preexisting (absolute) entities then measured by rods and clocks but were instead defined by the latter in each reference frame. Still, the interference of observational instruments with the behavior of the objects considered could be disregarded, thus allowing, as in CP, the identification of these objects with observed phenomena for all practical purposes. As a result, the objects under investigation could be considered independently of their interactions with measuring instruments.

Disregarding this interference is no longer possible in considering quantum phenomena, empirically, and hence even in realist interpretations of QM, or in alternative theories, such as Bohmian mechanics. This impossibility eventually led Bohr to his concept of “phenomenon,” as applicable in QT, under the condition of an RWR interpretation, introduced by him at the same time (around 1937). Bohr adopted the term “phenomenon” to refer only to what is observed in measuring instruments:

I advocated the application of the word phenomenon exclusively to refer to the observations obtained under specified circumstances, including an account of the whole experimental arrangement. In such terminology, the observational problem is free of any special intricacy since, in actual experiments, all observations are expressed by unambiguous statements referring, for instance, to the registration of the point at which an electron arrives at a photographic plate. Moreover, speaking in such a way is just suited to emphasize that the appropriate physical interpretation of the symbolic quantum-mechanical formalism amounts only to predictions, of determinate or statistical character, pertaining to individual phenomena appearing under conditions defined by classical physical concepts [describing the observable parts of measuring instruments].

Technically, one can no longer speak of the electron having arrived at a photographic plate, which implies a classical-like motion, rather than that the spot on the plate registers, in the corresponding phenomenon, the electron, as the quantum object considered. This is, however, clearly what Bohr means, given what else he says in this article. The difference between phenomena and objects has its genealogy, in modern times (it had earlier precursors), in Kant’s distinction between objects as things-in-themselves in their independent existence and phenomena as representations created by our mind. As defined by strong RWR interpretations, however, Bohr’s or the present view is more radical than that of Kant. While Kant’s things-in-themselves are assumed to be beyond knowledge, they are not assumed to be beyond conception, at least a hypothetical conception, even if such a conception cannot be guaranteed to be correct and is only practically justified in its applications [22] (p. 115). By contrast, in (strong) RWR interpretations, what is practically justified is not any possible conception of the ultimate reality responsible for quantum phenomena, but the impossibility of such a conception.

Bohr came to see quantum phenomena as revealing “a novel feature of atomicity in the laws of nature,” “disclosed” by “Planck’s discovery of the quantum of action [h], supplementing in such unexpected manner the old [Democritean] doctrine of the limited divisibility of matter” [15] (p. 94). Atomicity and, thus, discreteness or discontinuity in QP initially emerged on this Democritean model, beginning with Planck’s discovery of the quantum nature of radiation in 1900, which led Planck to his concept of the quantum of action, h, physically defining this discontinuity, and Einstein’s concept of a photon, as a particle of light, in 1906. The situation, however, especially following the discovery of QM, revealed itself to be more complex. This complexity led Bohr to his concepts of phenomenon and atomicity, as part of his ultimate, RWR, interpretation of quantum phenomena and QM.

Bohr’s concept of atomicity is essentially equivalent to that of phenomenon (every instance of “atomicity” is a phenomenon, and vice versa) but highlights such features of quantum phenomena as their individual, even unique nature, and their discreteness relative to each other, as follows. First, Bohr’s concept of phenomena implies that nothing about quantum objects themselves could ever be extracted from phenomena. This impossibility defines what Bohr calls the wholeness or indivisibility of phenomena, which makes them “closed”: the ultimate constitution of reality that led to the emergence of any quantum phenomenon is sealed within this phenomenon and cannot be unsealed. As he says:

The essential wholeness of a proper quantum phenomenon finds indeed logical expression in the circumstance that any attempt at its well-defined subdivision would require a change in the experimental arrangement incompatible with the appearance of the phenomenon itself. … every atomic phenomenon is closed in the sense that its observation is based on registrations obtained by means of suitable amplification devices with irreversible functioning such as, for example, permanent marks on a photographic plate, caused by the penetration of electrons into the emulsion. … the quantum-mechanical formalism permits a well-defined application referring only to such closed phenomena.

[14] (v. 2, pp. 72–73; also p. 51)

In this way phenomena acquire the property of “atomicity” in the original Greek sense of an entity that is not divisible any further, which, however, now applies at the level of phenomena, rather than referring, along Democritean lines, to indivisible physical entities, “atoms,” of nature. Bohr’s scheme enables him to transfer to the level of observable configurations manifested in measuring instruments all the key features of quantum physics—discreteness, discontinuity, individuality, and atomicity (indivisibility)—previously associated with quantum objects themselves. As is Bohr’s concept of phenomenon, the concept of “atomicity” is defined in terms of individual effects of quantum objects on the classical world, as opposed to Democritean atoms of matter itself. Accordingly, “atomicity” in Bohr’s sense refers to physically complex and hence physically subdivisible entities, and no longer to single physical entities, whether quantum objects themselves or even point-like traces of physical events. In other words, these “atoms” are individual phenomena in Bohr’s sense, rather than indivisible atomic quantum objects, to which one can no longer ascribe atomic physical properties any more than any other properties. Any attempt to “open” or “cut through” a phenomenon (this would require a different experiment, and hence one is never really cutting through the same phenomenon, which confirms the uniqueness of each) can only produce yet another closed individual phenomenon, a different “atom” or set of such “atoms,” leaving quantum objects themselves inaccessibly inside phenomena.

Importantly, as defined by “the observations [already] obtained under specified circumstances,” phenomena refer to events that have already occurred and not to possible future events, such as those predicted by QM. This is the case even if these predictions are ideally exact, which they can be in certain circumstances, such as those of EPR type experiments. The reason that such a prediction cannot define a quantum phenomenon is that a prediction for variable Q (for example, that related to a coordinate, q) cannot, in general, be assumed to be confirmed by a future measurement, in the way it can be in CP or relativity, where all possible measurable quantities are always defined simultaneously. In QP, one can always, instead of the predicted measurement, perform a complementary measurement, that of p (the momentum), which will leave any value predicted by using Q entirely undetermined by the uncertainty relations. This measurement would in principle preclude associating a physical reality corresponding to a coordinate q when one measures p [1] (pp. 210-212). This is why classical causality does not apply in the way it does in CP, even when probability is used there, or relativity (which is a deterministic theory, in which all predictions are ideally exact). This point has major implications for understanding the EPR experiment and countering EPR’s arguments, along the lines of Bohr’s reply [1] (pp. 227-257). I use capital vs. small letters to differentiate Hilbert-space operators, Q and P, associated with predicting the values of measured quantities, from these quantities, q and p, observed on measuring instruments. One can never speak of both variables unambiguously, even if they are associated with measuring instruments, while any reference, even that to a single property of a quantum object, is not possible at all in RWR interpretations, even at the time of observation, let alone independently. In CP, this difficulty does not arise because one can, in principle, always define both variables simultaneously and unambiguously speak of the reality associated with both and assign them to the object itself. By contrast, in a quantum experiment one always deals with a system containing an object and an instrument. Thus, in considering quantum phenomena (strictly defined by observation), there is, on the one hand, always a discrimination between an object and an instrument, and, on the other, the impossibility of separating them. This impossibility compelled Bohr to speak of “the essential ambiguity involved in a reference to physical attributes of objects when dealing with phenomena where no sharp distinction can be made between the behavior of the objects themselves and their interaction with the measuring instruments” [14] (v. 2, p. 61). By contrast, a reference to what is observed can, as classical, be unambiguous and communicated as such.
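The quantitative content of the uncertainty relations invoked above can be illustrated, within the standard formalism only, by a numerical sketch. It assumes a real Gaussian wave packet of width sigma and natural units with ħ = 1. Both are choices made here for illustration, as is the function name, and the sketch says nothing about how the interpretation discussed in this article treats the formalism. It computes the spreads Δq and Δp and checks that their product stays at ħ/2 however the packet is squeezed.

```python
import math

HBAR = 1.0  # natural units; an assumption made for illustration


def gaussian_spreads(sigma: float, n: int = 4001, span: float = 12.0):
    """Return (delta_q, delta_p) for a real Gaussian wave packet of width sigma."""
    half = span * sigma
    dx = 2 * half / (n - 1)
    xs = [-half + i * dx for i in range(n)]
    norm = (2 * math.pi * sigma**2) ** -0.25
    psi = [norm * math.exp(-x * x / (4 * sigma**2)) for x in xs]
    # <q> = 0 by symmetry, so (delta q)^2 is the integral of x^2 * psi^2.
    var_q = sum(x * x * p * p for x, p in zip(xs, psi)) * dx
    # <p> = 0 for a real psi, so (delta p)^2 = hbar^2 * integral of (dpsi/dx)^2.
    dpsi = [(psi[i + 1] - psi[i - 1]) / (2 * dx) for i in range(1, n - 1)]
    var_p = HBAR**2 * sum(d * d for d in dpsi) * dx
    return math.sqrt(var_q), math.sqrt(var_p)


if __name__ == "__main__":
    for sigma in (0.5, 1.0, 2.0):
        dq, dp = gaussian_spreads(sigma)
        print(f"sigma = {sigma}: dq = {dq:.4f}, dp = {dp:.4f}, dq*dp = {dq * dp:.4f}")
```

Narrowing the packet in q widens it in p by the same factor, so no preparation makes both spreads arbitrarily small. The Gaussian is the limiting case in which the product equals ħ/2; other packets give larger products.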

This interpretation radically changes the meaning of all elements and relations between them in Diagram A, in the case of QM or QFT, in accord with Bohr’s statement, with which I started, modifying it to fit all physics, a modification that was, however, in accord with Bohr’s view. To cite Bohr’s actual statement defining this diagram in QT:

The essential lesson of the analysis of measurements in quantum theory is thus the emphasis on the necessity, in the account of the phenomena, of taking the whole experimental arrangement into consideration, in complete conformity with the fact that all unambiguous interpretation of the quantum mechanical formalism involves the fixation of the external conditions, defining the initial state of the atomic system concerned and the character of the possible predictions as regards subsequent observable properties of that system. Any measurement in quantum theory can in fact only refer either to a fixation of the initial state or to the test of such predictions, and it is first the combination of measurements of both kinds which constitutes a well-defined phenomenon.

Technically, in Bohr’s definition of the concept, one deals here with two phenomena corresponding to two events in Diagram A. This statement need not mean that Bohr’s concept of phenomenon applies to two measurements or, in particular, that it can refer to a prediction, which is why Bohr speaks of “the test of … predictions,” that is, of already performed experiments. The point is that one must specify two measurements and the instruments prepared accordingly: the first is the initial, actual measurement or phenomenon, and the second is a possible future measurement or phenomenon that would enable us to verify our probabilistic prediction, or our statistical predictions after repeating the experiment many times. As Bohr said in the same article: “It is certainly far more in accordance with the structure and interpretation of the quantum mechanical symbolism, as well as with elementary epistemological principles, to reserve the word phenomenon for the comprehension of the effects observed under given experimental conditions” [15] (p. 105). Thus, as his other statements confirm, a phenomenon is defined by an already performed measurement, as an effect of the interactions between quantum objects and the apparatus, but never by a prediction. The first description above is then contextualized as referring to the fact “that all unambiguous interpretation of the quantum mechanical formalism involves the fixation of the external conditions, defining the initial state of the atomic system concerned and the character of the possible predictions as regards subsequent observable properties of that system.” The second refers to the test of any such prediction. One needs both arrangements and both phenomena (defined when both measurements are performed) to test our predictions in QP, in repeated experiments, because our predictions are in general probabilistic or statistical.

Accordingly, observational instruments must now be added to Diagram A:

E0 (q0) E1 (q1)

t0 t1

Instrument 1 Instrument 2

The diagram now reflects the fundamental difference between CP or RP and QP. In CP or RP, the interference of measuring instruments could, at least in principle, be neglected or controlled, allowing one, in dealing with individual systems, to identify the observed phenomenon with the object considered. In QP, this interference cannot be controlled and, hence, cannot be neglected, which makes the phenomena observed in measuring instruments, the only observable phenomena, different from the quantum objects considered. As stated, nobody has ever seen an actual electron or photon, or any quantum object, as such. One can only observe traces of their interactions with measuring instruments. I indicate this in the diagram by leaving the space between E0 (q0) and E1 (q1) empty. In dealing with quantum phenomena, deterministic predictions are not possible even in considering the most elementary quantum systems. The repetition of identically prepared quantum experiments in general leads to different recorded data, and, unlike in CP, this difference cannot be diminished beyond the limit defined by h by improving the capacity of our measuring instruments. These data are both those recorded in the initial measurement, E0, enabling a prediction, and those of the second measurement, E1, which would verify this prediction. These recordings will in general be different either when one repeats the whole procedure in the same set of experimental arrangements or when one builds a copy of the apparatus and sets it up in the same way in order to verify experimental findings by others. This makes verification in QP in general statistical.
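The statistical character of verification described above can be illustrated with a toy simulation. The following is only a sketch under stated assumptions, not a model of any actual experiment: it assumes a hypothetical two-outcome measurement with an arbitrarily chosen predicted probability `P_PREDICTED`, and it uses a pseudorandom generator as a stand-in for the unpredictability of individual outcomes. The names `run_experiment` and `relative_frequency` are illustrative.

```python
import random

# Assumption: a hypothetical two-outcome measurement ("click" / "no click")
# with a predicted probability chosen arbitrarily for illustration.
P_PREDICTED = 0.5


def run_experiment(n_trials, rng):
    """Record the outcomes of n_trials identically prepared trials (1 = click)."""
    return [1 if rng.random() < P_PREDICTED else 0 for _ in range(n_trials)]


def relative_frequency(record):
    """Fraction of trials in which outcome 1 was recorded."""
    return sum(record) / len(record)


# Two "laboratories" repeat the same procedure with independent randomness.
lab_a = run_experiment(10_000, random.Random(1))
lab_b = run_experiment(10_000, random.Random(2))

# The individual records differ, although the preparation is the same,
records_differ = lab_a != lab_b
# while the relative frequencies can be compared with the prediction.
freq_a = relative_frequency(lab_a)
freq_b = relative_frequency(lab_b)
print(records_differ, round(freq_a, 3), round(freq_b, 3))
```

In this sketch no single record confirms or refutes the prediction. Only the relative frequencies over many trials can be compared with it, which is the sense in which verification is statistical.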

In RWR interpretations, QM or QFT only predicts the effects of these interactions observed in the instruments used, without representing how these effects come about. This procedure replaced measurement in the classical sense (of measuring preexisting properties of objects) with the establishment of each quantum phenomenon as each time a unique act of creation. Observed phenomena can be treated classically, without measuring the properties of quantum objects, at least in an RWR interpretation. In fact, RWR interpretations respond to this experimental situation, which arises, in these interpretations, because of the irreducible role of measuring instruments in the constitution of quantum phenomena. Returning to Diagram A in its quantum version: in RWR interpretations, no representation or even conception of how the transition between E0 (q0) and E1 (q1) happens is possible, but it is possible to find transition probabilities between these events. To do so one needs a theory, such as QM or, in high-energy regimes, QFT, which would predict these probabilities or statistics. (The difference between probability and statistics will be put aside, although it may affect an interpretation of QM or QFT.) Quantum phenomena and the data observed there are, in Bohr’s or the present view, represented by classical physics.

One can, for example, use Schrödinger’s equation (considering it in one dimension for simplicity)

$$i\hbar\,\frac{\partial \psi(q,t)}{\partial t} = \left[-\frac{\hbar^{2}}{2m}\frac{\partial^{2}}{\partial q^{2}} + V(q)\right]\psi(q,t)$$

for making a prediction concerning a future coordinate measurement associated with an electron on the basis of a position measurement, q0, previously performed at time t0. By Born’s rule, the wave function ψ is associated with the probability amplitude, providing the probability density as its square modulus, |ψ|², which is always non-negative and can be normalized so that the probability is always between zero and one. Then, to confirm such a prediction, one sets up a suitable observational device and makes a new observation at time t1, registering an outcome, q1, of the experiment thus predicted. The instrument needs to be prepared in accordance with this prediction for the coordinate measurement. This is because one can always perform a different type of measurement, that of the momentum, p, at t1, which will irrevocably disable verifying the prediction concerning q. This is the transition from the “quantum” to the “classical” reality in this experiment. One cannot associate any concept of “quantum” with the ultimate reality responsible for quantum phenomena in RWR interpretations, because one cannot associate any concept with this reality at all. I speak of this reality as “quantum” only because CP, which describes the data thus classically observed in two experiments, cannot predict them.
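The use of Born’s rule just described can be made concrete with a short numerical sketch. It is only an illustration under stated assumptions: it does not solve Schrödinger’s equation, but simply assumes a hypothetical Gaussian wave function at time t1, with an arbitrary center `Q_CENTER` and width `SIGMA`, and computes the probability of registering the outcome q1 within a given interval. All names in the code are illustrative.

```python
import math

# Assumptions (not from the article): a Gaussian wave function at time t1,
# centered at Q_CENTER with width SIGMA, in arbitrary length units.
Q_CENTER = 0.0
SIGMA = 1.0


def psi(q):
    """Hypothetical normalized wave function psi(q, t1) (a real Gaussian)."""
    norm = (2.0 * math.pi * SIGMA**2) ** -0.25
    return norm * math.exp(-((q - Q_CENTER) ** 2) / (4.0 * SIGMA**2))


def density(q):
    """Born's rule: the probability density is the square modulus of psi."""
    return abs(psi(q)) ** 2


def probability(a, b, steps=20_000):
    """Probability of registering q1 in [a, b], by the trapezoidal rule."""
    h = (b - a) / steps
    total = 0.5 * (density(a) + density(b))
    for k in range(1, steps):
        total += density(a + k * h)
    return total * h


# Normalization: the total probability should be close to one.
total_probability = probability(-10.0, 10.0)
# Probability of an outcome within one width of the center.
p_within_sigma = probability(Q_CENTER - SIGMA, Q_CENTER + SIGMA)
print(round(total_probability, 4), round(p_within_sigma, 4))
```

The computed number is a prediction only for a coordinate measurement at t1. It says nothing about an individual outcome and can be tested only against the frequencies found in repeated experiments with instruments prepared for that measurement.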

As Bohr emphasized, in spite or even because of their radical epistemological features, his and, by implication, RWR interpretations remain fully consistent with “the basic principles of science” [23] (p. 697), including “the unambiguous logical representation of relations between experiences” and hence the possibility of unambiguously communicating them [14] (v. 2, p. 68). Thus, his concept of complementarity (explained below) was expressly introduced to ensure the possibility of maintaining these principles in quantum physics, under an RWR interpretation [23] (p. 699). Indeed, one of Bohr’s aims was to argue that this principle and other basic principles of science are not threatened by the radical epistemological implications of QP, especially in RWR interpretations, as many worried at the time and still do. Of course, what constitutes the basic principles of science may change and has changed, and it could be, and has been, debated. At present, there is a nearly unanimous agreement on the logical consistency and unambiguity of communication in mathematics and physics and, in physics, on the consistency of physical theories with the experimental evidence available or their capacity, in modern physics by means of mathematics, for predicting the outcomes of the experiments considered by them. (In general, a physical theory must be consistent with all available experimental evidence or confirmed physical laws rather than only those within its purview.) For Bohr, that a theory is in accord with these requirements, as QT was and remains, was sufficient for seeing it as conforming to “the basic principles of science.” The present view is the same. On the other hand, many physicists and philosophers of science, Einstein famously among them, saw a representation of how physical phenomena, quantum phenomena included, come about, in other words, realism, as a basic principle of science.

It is not coincidental that in stating that “when speaking of conceptual framework, we refer merely to the unambiguous logical representation of relations between experiences,” Bohr refers to experiences rather than experiments, as one might expect in dealing with physics [14] (v. 2, p. 68; emphasis added). The reason is that Bohr makes a broader point, which equally applies in mathematics. Our experiences in mathematics and science combine individual and shared aspects, with the shared ones conforming to the requirement of unambiguous communication. “Individual” and “shared” are arguably better terms than “subjective” and “objective,” although this depends on how one defines subjectivity and objectivity. Science and especially mathematics must maximally reduce the ambiguity of defining and communicating their findings. This was the definition of “objectivity” in Bohr, as opposed to what it would be for realism: the “objective” representation of the ultimate reality responsible for quantum phenomena, a representation that is “in principle excluded” by Bohr’s RWR interpretation [14] (v. 2, p. 62). A representation by means of CP is, by contrast, possible and, in Bohr’s or the present interpretation, necessary in considering what is observed as quantum phenomena. (It is possible to have an RWR interpretation apart from this assumption.)

The uncertainty relations exemplify this situation. They are a defining feature of quantum phenomena, rather than of QM, the predictions of which are, however, fully in accord with the uncertainty relations. This difference is important. While this accord is a major testimony to the agreement between QM and the experimental data it considers, the uncertainty relations remain an experimentally established fact, a law of nature, independent of any theory, and could in principle be predicted by an alternative theory. An uncertainty relation is represented by a formula ΔqΔp ≅ h, where q is the coordinate and p is the momentum in the corresponding direction, both of which are measurable quantities observed in instruments, h is the Planck constant (strictly measurable as well), and Δ is the standard deviation, which measures the amount of variation of a set of values. There is nothing ambiguous about the formula itself. It represents an experimentally confirmed law independent of any theory, although a physical interpretation of the uncertainty relations is subtle, in part in view of their statistical nature. It suffices to note that the uncertainty relations are not a manifestation of the limited accuracy of measuring instruments, because they would be valid even if we had perfect measuring instruments. In classical and quantum physics alike, one can only measure or predict each variable within the capacity of our measuring instruments. In classical physics, however, one can in principle measure both variables simultaneously within the same experimental arrangement and improve the accuracy of this measurement by improving the capacity of our measuring instruments, in principle indefinitely. The uncertainty relations prevent us from doing so for both variables in quantum physics regardless of this capacity.
Accordingly, what the uncertainty relations state, unambiguously, is that the simultaneous exact measurement or prediction of both variables (always possible, at least ideally and in principle, in classical physics) is not possible. On the other hand, it is always possible to measure or predict each variable ideally exactly. It is also possible to measure both inexactly. For Bohr, however, and following him here, the uncertainty relations also mean that one cannot unambiguously consider or even define both variables simultaneously in QP, but can only define one at each moment in time and thus make unambiguous statements about it.
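That the predictions of QM are in accord with the uncertainty relations can be checked numerically in a simple case. The sketch below is an illustration under stated assumptions, not part of the article’s argument: it assumes a hypothetical Gaussian wave function of arbitrary width `SIGMA`, units in which the reduced Planck constant ħ = h/2π equals 1, and the standard formal statement of the relation in QM, ΔqΔp ≥ ħ/2, of which the order-of-magnitude formula ΔqΔp ≅ h is the informal counterpart. For a Gaussian, the product of the two standard deviations is expected to equal ħ/2.

```python
import math

HBAR = 1.0    # assumption: units in which the reduced Planck constant is 1
SIGMA = 0.7   # assumption: arbitrary width of the illustrative wave function


def psi(q):
    """Hypothetical real Gaussian wave function with <q> = 0 and <p> = 0."""
    norm = (2.0 * math.pi * SIGMA**2) ** -0.25
    return norm * math.exp(-(q**2) / (4.0 * SIGMA**2))


def integrate(f, a=-12.0, b=12.0, steps=40_000):
    """Trapezoidal rule on [a, b]."""
    h = (b - a) / steps
    total = 0.5 * (f(a) + f(b))
    for k in range(1, steps):
        total += f(a + k * h)
    return total * h


def dpsi(q, eps=1e-5):
    """Central-difference approximation of the derivative of psi."""
    return (psi(q + eps) - psi(q - eps)) / (2.0 * eps)


# For this real, centered psi:
#   (Delta q)^2 = integral of q^2 |psi|^2
#   (Delta p)^2 = hbar^2 * integral of |d psi / d q|^2
delta_q = math.sqrt(integrate(lambda q: q**2 * psi(q) ** 2))
delta_p = HBAR * math.sqrt(integrate(lambda q: dpsi(q) ** 2))
product = delta_q * delta_p
print(round(delta_q, 4), round(delta_p, 4), round(product, 4))
```

Changing `SIGMA` changes Δq and Δp in opposite directions while leaving their product fixed, so a sharper prediction for one variable comes at the cost of a broader one for the other, independently of any assumption about the accuracy of instruments.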

This situation is understood by Bohr in terms of complementarity, his most famous concept: the mutual exclusivity of certain measurements or predictions at any given point in time and yet the possibility, by a conscious decision, of measuring either one or the other exactly at any given point in time, which, however, in principle precludes doing the same for the other, complementary, variable. The role of this conscious decision is crucial in quantum physics and is one of the key differences between it and classical physics or relativity, which do not contain either the uncertainty relations or complementarity [1] (pp. 209-218). By the same token, one can only make, by means of QM, the corresponding prediction, which is in general probabilistic, concerning a future measurement of one variable, while being precluded from doing so for the other. Complementarity thus helps to establish the possibility of strictly unambiguous definition or communication of everything involved in considering quantum phenomena, including, again, the uncertainty relations, unambiguously predicted by QM.

The formalism of QM can also be unambiguously communicated, as can all mathematics used in quantum theory, or any mathematics as such. Indeed, after defining, in the passage cited above, “a conceptual framework” as “the unambiguous logical representation of relations between experiences,” Bohr expressly comments on mathematics:

A special role in physics is played by mathematics which has contributed so decisively to the development of logical thinking, and which by its well-defined abstractions offers invaluable help in expressing harmonious relationships. Still, in our discussion . . . we shall not consider pure mathematics as [an entirely] separate branch of knowledge, but rather a refinement of general language [and concepts], supplementing it with appropriate tools to represent relations for which ordinary verbal expression is imprecise and cumbersome. In this connection, it may be stressed that, just by avoiding the reference to conscious subject which infiltrates daily language, the use of mathematical symbols secures the unambiguity of definition required for objective description.

[14] (v. 2, p. 68; emphasis added)