Most studies of nanothermodynamics focus on evaluating measured behavior, especially in systems that are in (or near) equilibrium [

25,

26,

27,

57,

58]. In this case, the assumption of a homogeneous macroscopic system from standard thermodynamics (

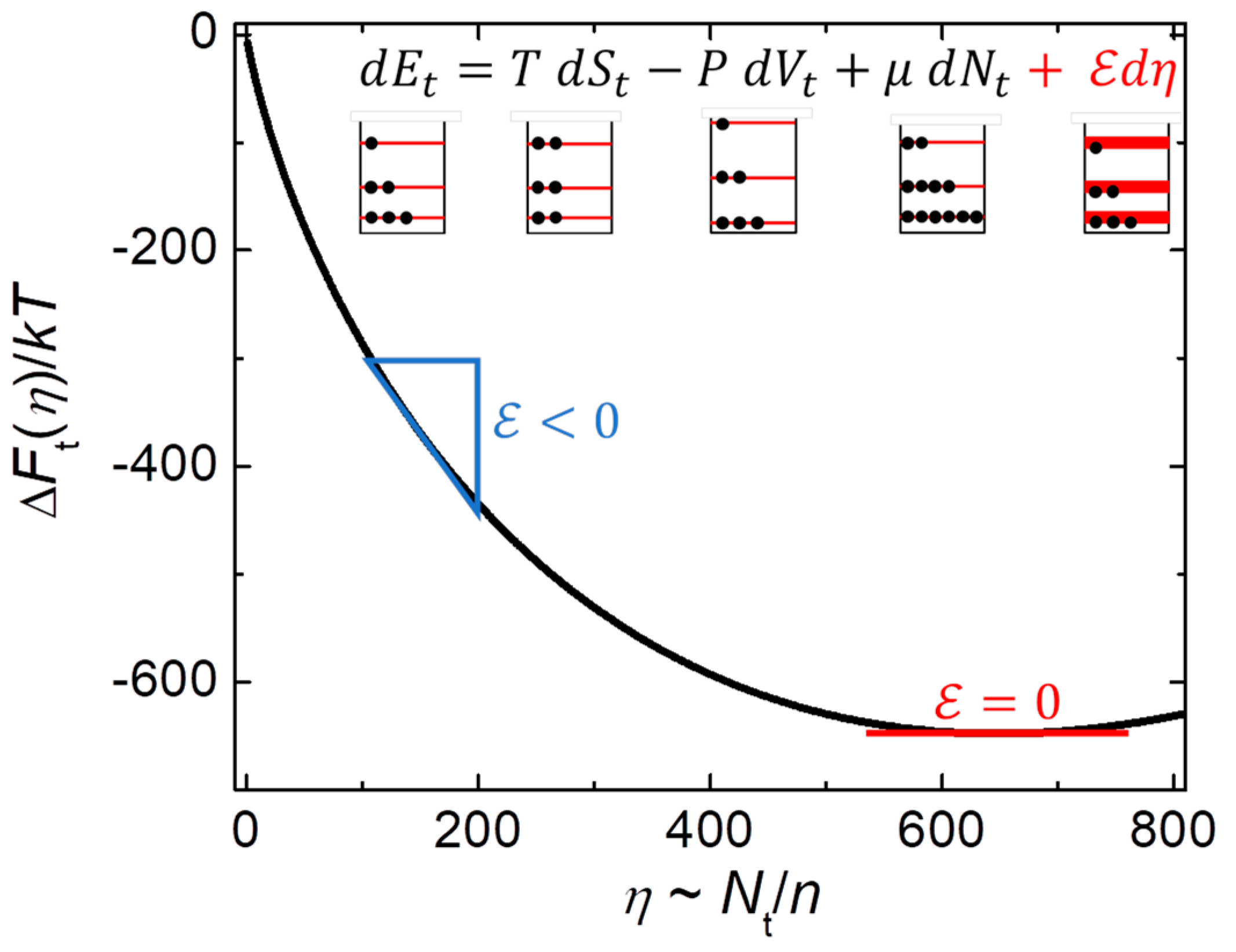

in

Figure 1) is replaced by stable nanothermodynamics (

), yielding the nanocanonical ensemble of independent internal subsystems consistent with measured thermal and dynamic heterogeneity [10-14,25-27,44-46]. In fact, quantitative agreement is found between models based on nanothermodynamics and the measured temperature dependence of the magnetic correlation range (

) in cobalt [

27], structural correlations in LaMnO

3 [

26], and the typical sizes of independently relaxing regions in glass-forming liquids [44-46,57]. Furthermore, a mean-field cluster model that includes finite-size effects from the nanocanonical ensemble yields improved agreement with measured corrections to scaling near ferromagnetic phase transitions by maintaining

as

[

27]. Moreover, essentially the same model yields a gradual glass transition that is smeared out by finite-size effects when the correlation range is limited by disorder [

32]. Finally, strict enforcement of the 2

nd law of thermodynamics in nanoscale subsystems provides improved agreement with measured 1/

f-like noise from qubits [

25], metal films, spin glasses, and nanopores [

58]. Here we review and refine six ways nanothermodynamics has been applied to simple models to yield improved agreement with measured behavior.

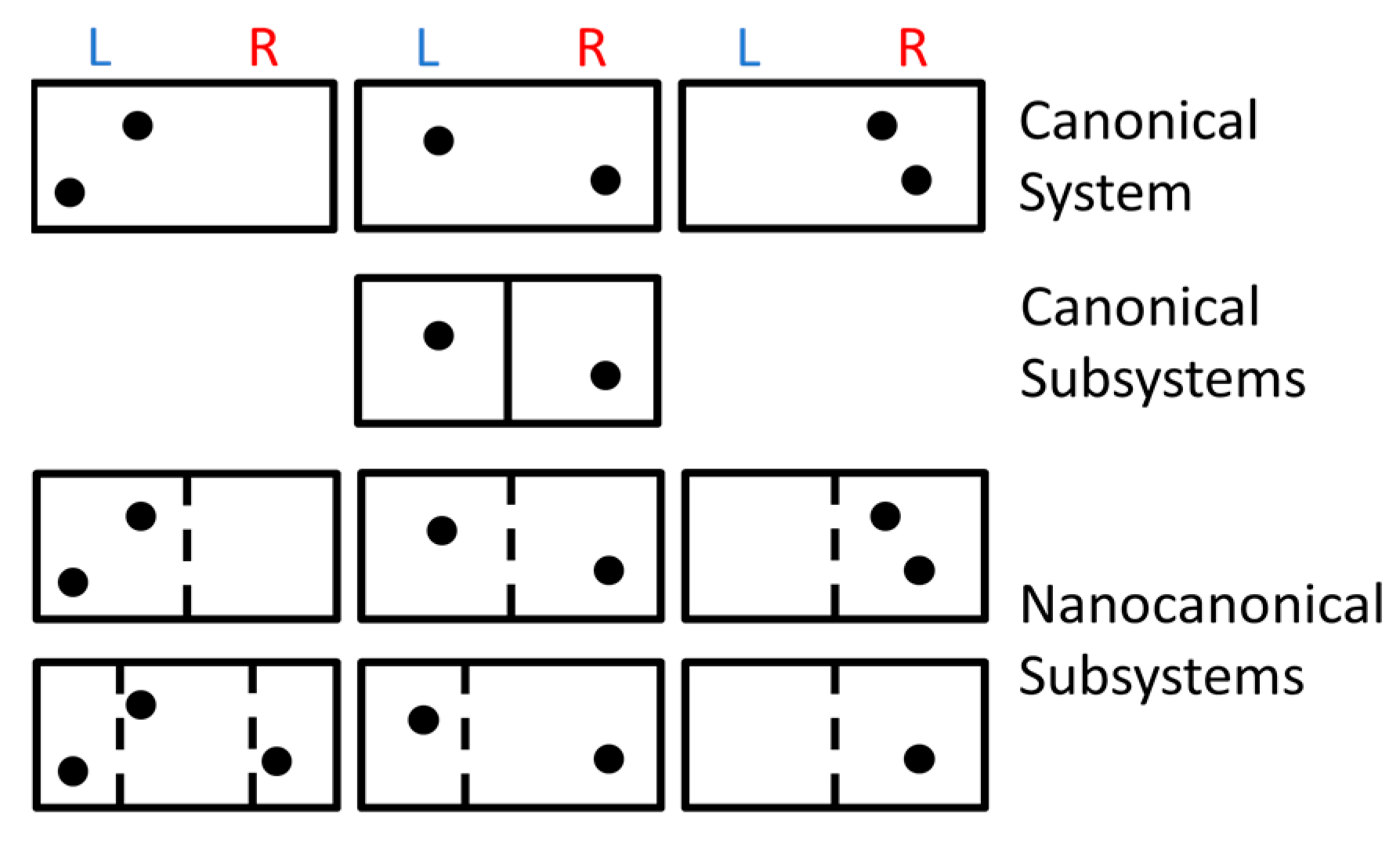

3.1. Novel Solution to Gibbs’ Paradox

Gibbs’ paradox refers to a prediction from classical statistical mechanics of ideal gas particles that violates the 2

nd law of thermodynamics, first recognized by Gibbs in 1876 [

59]. The violation occurs when an impenetrable barrier is moved into a box of distinguishable particles, subdividing the box into two halves, which reduces the volume that each particle can explore and reduces the total entropy. If the barrier is inserted reversibly (slowly and frictionlessly), this process lowers the entropy of a closed system, violating the 2

nd law. The usual solution to Gibbs’ paradox is to assume that all identical particles are statistically indistinguishable, as sketched in

Figure 3A–C [

25,

59,

60,

61,

62,

63,

64].

Figure 3 A shows a box that is initially subdivided into two sides, each having volume

V and

N particles. This initial state has type-X particles on the

L side (blue) and type-O particles on the

R side (red), with X’s and O’s indistinguishable particles (but they are distinguishable X’s from O’s). Each side of the box has the canonical ensemble (subscript

c) partition function

. Here

is the thermal de Broglie wavelength, with

Planck’s constant and

the mass of each particle, while the

removes overcounting of states assuming indistinguishable particles. In the canonical ensemble of small-system thermodynamics, the entropy of each side is given by the Sackur-Tetrode equation minus a non-extensive term that depends logarithmically (not linearly) on

due to finite-size effects:

. This non-extensive term comes from the subdivision potential,

, which cannot (and should not) be set to zero: mathematically because it comes from Stirling’s formula for

, and physically because it applies to the canonical ensemble of constrained (fixed size) subvolumes. This contribution to total entropy from small-system thermodynamics is negligible for large systems,

ppm for

, but non-negligible for small systems,

for

.

Figure 3B shows the box with the partition removed. The resulting increase in entropy is

, as expected because each X and O has twice as much volume to explore.

Figure 3C shows the barrier returned to its original position, dividing the homogeneous mixture of particles into two subvolumes. The change in entropy is

. Although this reduction in entropy depends logarithmically on the number of particles and is therefore relatively small for large systems, any violation of the 2

nd law of thermodynamics could be a concern [

60]. Furthermore, this entropy is super additive, failing a basic theorem of quantum mechanics [

20,

52]. Specifically, doubling the number of indistinguishable particles at constant density more than doubles the entropy:

,

D-F are sketches depicting a novel solution to Gibbs’ paradox based on nanothermodynamics [

25].

Figure 3 D shows the initial box with the same separation of particles as in

Figure 3A, but now identical particles are indistinguishable only if they are close enough for exchange symmetry, yielding distinguishable particles when they are in distinct subsystems. In the nanocanonical ensemble, each subsystem has fluctuations in the number of particles and volume, so that only their average values are well defined,

and

. The entropy of each subsystem is

, which includes a Sackur-Tetrode-like equation plus a non-extensive term from subtracting the subdivision potential,

. Assuming each side of the box in

Figure 3D contains

subsystems, the initial entropy of each side can be written as:

Equation (1) consists of the Sackur-Tetrode equation (in square brackets) plus a non-extensive (final) term. Maximizing this final term maximizes the total entropy, as favored by the 2

nd law of thermodynamics, and stabilizes the nanocanonical ensemble for internal subsystems. This final term also makes the entropy sub-additive, as needed for quantum mechanics [

20,

52]. Specifically, doubling the number of indistinguishable particles in an average subsystem at constant density reduces the entropy per particle:

.

Equation (1) reveals the reasonable result that maximum entropy requires all three Legendre transforms:

,

, and

. However, for large systems, the total increase in entropy from the first two Legendre transforms is negligible, with the final term in Eq. (1) coming entirely from the final transform into the nanocanonical ensemble. In fact, it is this final transform alone that causes significant deviations from the usual assumption that all ensembles yield equivalent results. Another issue in standard statistical mechanics that is solved by nanothermodynamics is the validity of ensembles having no extensive environmental variables. Indeed,

Figure 1 shows that thermal equilibrium requires the stability condition,

. From the final term in Eq. (1), stability for the semi-classical ideal gas has

, opposite to

for the usual thermodynamic limit of standard thermodynamics. However, both limits are taken in ways that maintain a constant density of particles,

. One way to ensure uniform density for small subsystems in

Figure 3D is to let the number of subdivisions in the ensemble diverge,

, while fixing the total number of each type of particle (

) and the total volume of each box (

). Alternatively, by defining an average distance between particles, e.g.

for each side of

Figure 3D, then the local particle density (

) may vary in space and time as a system evolves because nanothermodynamics remains valid on the nanoscale.

There are two interesting limits in the non-extensive contributions to entropy from the nanocanonical ensemble, final term in Eq (1). If all similar atoms are indistinguishable, as in the standard solution to Gibbs’ paradox, each side initially has a single homogeneous subsystem

, so that the usual thermodynamic limit (

) yields

. In this case, the final term in Eq. (1) is negligible, leaving only the Sackur-Tetrode equation. If instead the system is in the nanothermodynamic limit where most atoms are distinguishable,

yields

and

. Now the final term in Eq. (1) adds an extra entropy of

to the total, about 5.4% above the Sackur-Tetrode value for argon at standard temperature and pressure [

25]. Indeed, the nanocanonical ensemble yields a significant increase in entropy (Eq. 1) and distinct physical picture (

Figure 3D–F) for the semi-classical ideal gas, without changing the Hamiltonian. Thus, a full understanding of even the simplest models may require non-Hamiltonian contributions to energy contained only in Hill’s fundamental equation of small-system thermodynamics shown in

Figure 1.

To test Eq. (1) against Gibbs’ paradox we assume that

is vanishingly small but fixed by the stability condition, so that the starting value

in

Figure 3D remains constant with density changes coming only from changes in

. Upon removing the barrier,

Figure 3E, the change in entropy is

, matching the Sackur-Tetrode value. Now, if the barrier is returned to its original position,

Figure 3F with

, the entropy is precisely preserved for this reversible process:

. Thus, Gibbs’ paradox is solved with no violations of the 2

nd law on any level, and without requiring indistinguishable particles beyond the nanoscale. Although Boltzmann provided an early solution to Gibbs’ paradox by subdividing systems into cells of fixed volume [

59,

61], the nanocanonical ensemble justifies such subdivisions via increased entropy from variable volumes.

Ironically, experimental evidence for Eq. (1) may come from the accuracy of the Sackur-Tetrode equation for measured entropies. In [

65], quantitative entropies of four monatomic gases are determined by adding all measured changes (

) to a residual entropy that is assumed to be

at

. This

is deduced from specific-heat and latent-heat measurements that yield the total change in entropy from the solid phase at

to the gas phase above the temperature of vaporization. For krypton, the values given in [

65] (converted to units of

) are

and

, within 0.3% of the Sackur-Tetrode prediction,

. Similar measurements on neon, argon, and mercury yield values of

that are also within 0.07-1.4% of the Sackur-Tetrode predictions. However, the assumption of

in [

65] neglects known contributions to the residual entropy, such as the entropy of mixing from distinct isotopes that occur in natural abundances (

) [

66,

67]. Indeed, the stable isotopes of krypton and mercury should add extra residual entropies of at least

and

, respectively.

Appendix A provides an explanation for the missing entropy in measured values of

, due to nanothermodynamics where most atoms remain distinguishable in both the liquid and gas phases.

3.2. The Ising Model in Stable Equilibrium

The Ising model is the simplest microscopic model for a thermodynamic phase transition. As such, it remains among the most widely used models in statistical physics [

68], providing basic insight into the thermal and dynamic behavior of many systems, and a simple guide to more sophisticated models. A solution to the one-dimensional (1D) Ising model was published by Ernst Ising in 1925, and a solution to the 2D Ising model was published by Lars Onsager in 1944, but neither solution is in stable equilibrium if finite-size effects are included. Here, two methods are used to give the stable solution to Ising’s original model for a finite chain of interacting spins. Mathematical details are given in

Appendix B.

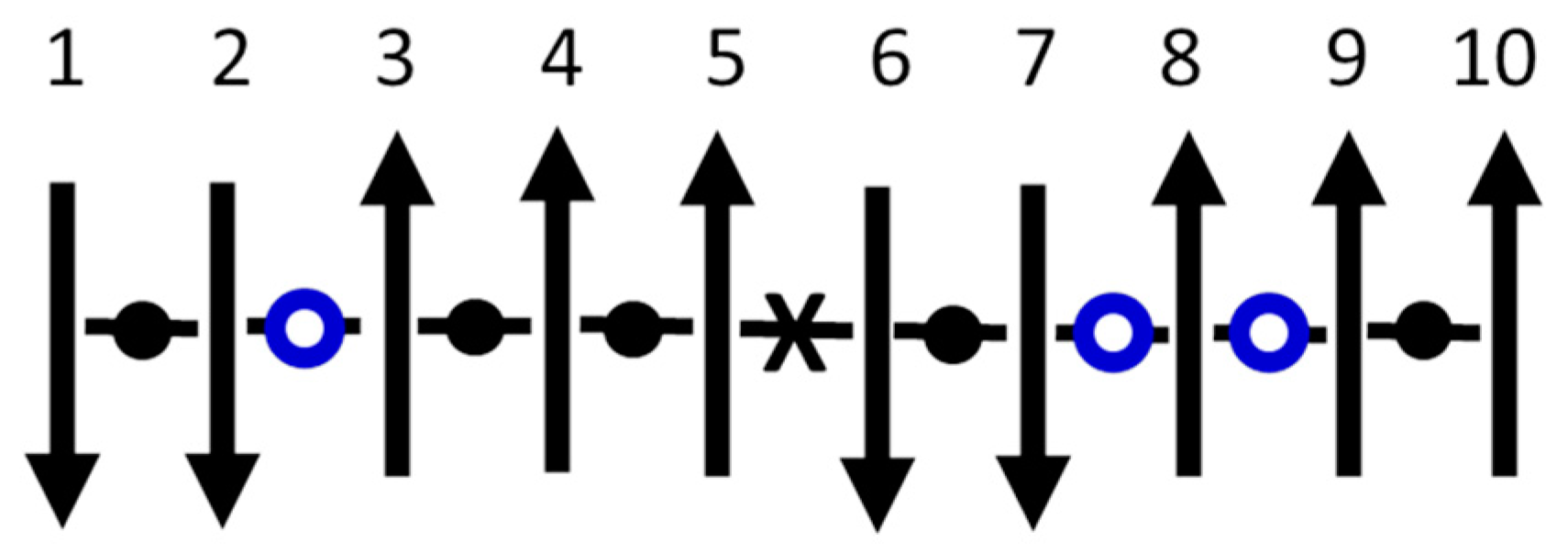

The Ising model is based on binary degrees of freedom (“spins”) with nearest-neighbor interactions that are usually attributed to quantum exchange.

Figure 4 shows a specific system of

interactions between

spins in a 1D chain. For simplicity, let there be no external field. This model is readily solved using the statistics of the three types of interactions: low energy (

), high energy (

X), and no energy (

O) (“breaks”). These breaks, which must intermittently replace interactions for the equilibrium nanocanonical ensemble inside large systems, serve as the interfaces between subsystems. Mechanisms that could cause such breaks in real materials include Anderson localization or many-body localization [

69,

70]. Using

as the ferromagnetic exchange constant, the net energy of interaction is

, where

and

are the number of high-energy interactions and breaks, respectively.

Stable equilibrium in the nanocanonical ensemble comes from

, yielding

in Eq. (B4) of

Appendix B. An identical answer comes from Eq. (B10) for the average number of spins between breaks in the canonical ensemble of an infinite system, consistent with

Figure 4:

There are multiple ways to confirm Eq. (2) for the thermal-average number of interactions in finite chains,

. First,

when

, as expected for an equal mix of interactions (● or

X) and breaks (

O) that maximizes the entropy of mixing when energy can be neglected. Next,

when

, as expected for an infinite homogeneous system that minimizes the energy when entropy can be neglected. Furthermore, as pictured in

Figure 4 and derived in

Appendix B, Eq. (2) comes from adding breaks (

O) to a large Ising system (

), where nanothermodynamics subdivides the system into a stable distribution of subsystems, each having an average of

spins. The same result comes from the generalized ensemble of small-system thermodynamics by treating finite chains of interacting (● or

X) spins in a bath of spins having chemical potential (

) and temperature (

) in stable equilibrium (

), which yields an average of

spins with breaks only at the endpoints of each chain. Thus, the nanocanonical ensemble of nanothermodynamics and the stable equilibrium of the generalized ensemble of small systems are equivalent, and both match the canonical ensemble of 1D spins with breaks.

Some additional conclusions come from the stable solutions of Ising’s model given in

Appendix B. A large system governed by a Hamiltonian with intermittent breaks (Eq. B6) solved in the canonical ensemble yields heterogeneous subsystems identical to Ising’s original Hamiltonian (Eq. B1) in the nanocanonical ensemble, but only if the subsystems obey Hill’s stability condition. Thus, distinct Hamiltonians can yield equivalent results when solved in different ensembles, again emphasizing the need to include all contributions to energy in Hill’s fundamental equation of small-system thermodynamics shown in

Figure 1. Sections 3.3–3.6 utilize Ising-like spins in stable distributions of independent subsystems to improve the agreement between simple models and measured behavior.

3.3. Mean-Field Cluster Model for Non-Classical Critical Scaling

Critical scaling is used to characterize divergent behavior near continuous phase transitions. One example is the divergence of magnetic susceptibility (

) in ferromagnetic materials near the Curie temperature,

, which can be written as

. Here we focus on the effective scaling exponent,

, especially its temperature dependence. The “classical” (i.e. not modern) value from standard mean-field theory introduced by Weiss in 1907 has no temperature dependence,

, yielding the Curie-Weiss law that is measured at

. At

, however,

is usually found. Although related non-classical critical exponents were routinely measured before 1900 [

71], such measurements were mostly ignored until after 1944 when Onsager found a theoretical value of

at

in his solution of the 2D Ising model. Results from MC simulations of the 3D Ising model show a monotonic increase [

72] from

at

to

at

, but like Onsager’s solution these simulations assume effectively infinite and homogeneous systems in the canonical ensemble, hence the simulations are not in the stable equilibrium of the nanocanonical ensemble. Consequently, many measurements fail to follow standard models, to the point where it has been said: “The critical exponents of iron and nickel are very similar to each other, while those for cobalt are clearly different. There is no theoretical understanding of these results” [

73]. And: “It is thus as if theorists and experimentalists in this field often behave like two trains passing in the night. Why is this?” [

74]. Indeed, it might be said that ferromagnetic transitions should be added to the

-transition in

4He [24,

75] as examples where measured critical exponents remain unexplained by standard theories based on infinite and homogeneous systems. At least for ferromagnetic materials, a viable solution comes from adding heterogeneity that yields nanoscale subsystems, improving the agreement between measured critical behavior and standard theories, including real-space renormalization and Landau theory [

76].

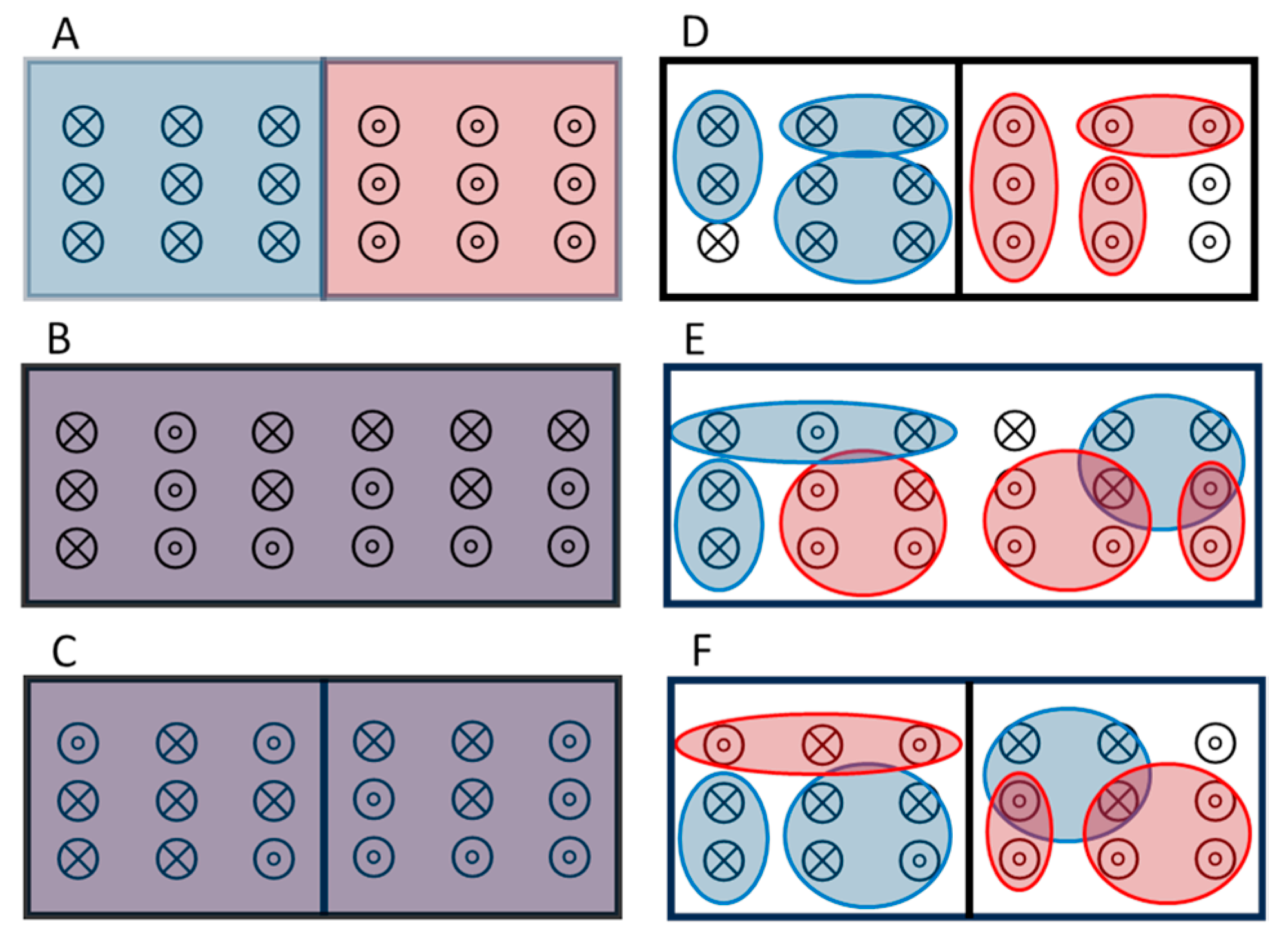

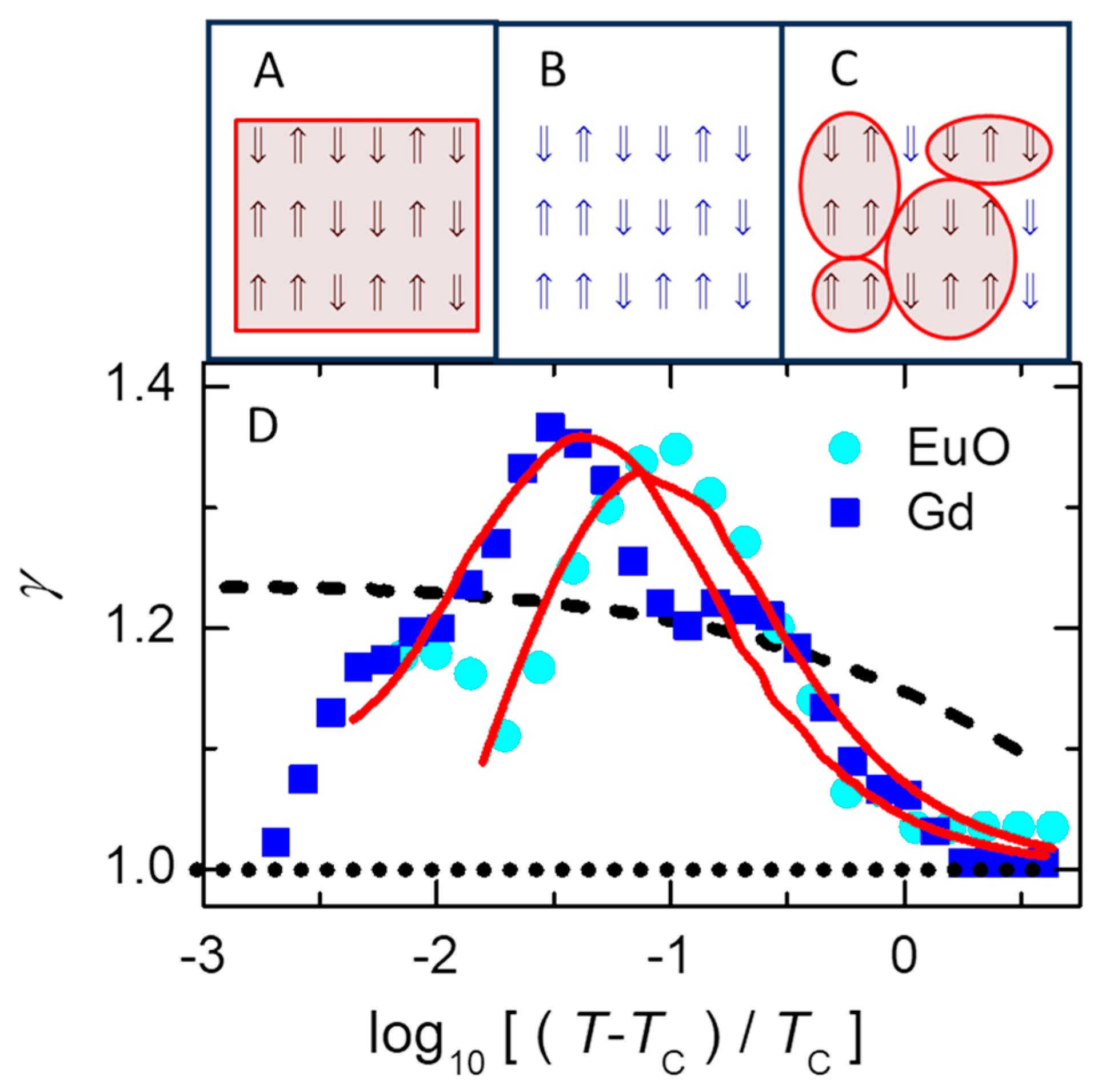

Here, we present a simplistic picture [

26,

27] for comparing three models of magnetic response, as sketched in

Figure 5A–C. The magnetic susceptibility of these models at

can be characterized by taking appropriate limits of:

The two sums in Eq. (3) are over the number of up spins () and the total number of spins () in the subsystems, starting at to ensure at least one interaction per subsystem (although starting at does not greatly alter the results). Note that () is proportional to the magnetic moment, which comes from the first derivative of the partition function () with respect to the magnetic field (that is set to zero and hence not included in Eq. (3)), while the susceptibility comes from the second derivative, yielding the factor of in the summand. The adjustable parameters in Eq. (3) used to fit data are an amplitude pre-factor (), energy scale (), and the chemical potential ().

The choice of model determines the behavior of Eq. (3). For the usual Ising model all spins are fully localized, with no spatial or temporal averaging, as sketched in

Figure 5B. In this case, the mean-field energy

and its multiplicity

are replaced by time-varying values having local correlations that preclude simple analysis in the 3D Ising model. At the other extreme, standard mean-field theory assumes averaging over a homogeneous and infinite system (i.e.

with no sum over

in Eq. (3)), yielding the same average behavior for every spin, as sketched in

Figure 5A. Fluctuations are infinitesimal for such large systems, yielding

for

so that the mean-field energy is negligible. Alternatively, the mean-field cluster model has mean-field behavior on nanoscale subsystems, as sketched in

Figure 5C, which facilitates nanoscale fluctuations. This model is essentially a mixture of the two extremes: mean-field theory on small subsystems where time- and/or space-averaged behavior between nearby spins can yield simple values for the net behavior of each subsystem (see last two paragraphs of

Section 3.5, below).

Figure 5 D shows the temperature dependence of

from measurements on two magnetic materials (symbols, given in the legend) and the predictions of three models (lines) that are represented by the sketches in

Figure 5A–C. The dotted (horizontal) line in

Figure 5D at

is from classical mean-field theory based on assuming average behavior of all spins at every site,

Figure 5A. The dashed line in

Figure 5D comes from simulations of the usual 3D Ising model based on assuming a homogeneous system in the canonical ensemble with uniform exchange interaction between every neighboring spin, despite the assumption that each spin is localized to its own site,

Figure 5B. Although a careful analysis of this model [

72] confirms the monotonic increase in

with decreasing

shown by the dashed line in

Figure 5D, the data from ferromagnetic materials have a prominent peak in

. A similar peak is also found in various critical fluids [

77]. The limit

as

is especially clear in detailed measurements on Gd [

78].

Solid lines in

Figure 5D that mimic the measurements come from a mean-field cluster model [

27].

Figure 5C is a sketch indicating how this model is essentially a mixture of the models in

Figure 5A,B. Specifically, mean-field energies are found for clusters of each size, then used as the effective energy of that cluster size. This cluster averaging allows local fluctuations (unlike averaging over the whole sample,

Figure 5A) and is consistent with the exchange interaction for spins that are delocalized or indistinguishable over the cluster (unlike spins localized to a single site,

Figure 5B). Equation (3) has two sums that transform the extensive environmental variables to two intensive variables,

and

. The stability condition (

) connects these variables, yielding

as a function of

. Often,

is relatively constant, e.g. for the ideal gas,

. The solid lines in

Figure 5D come from fitting Eq. 3 using the constant value of

that gives best agreement with each set of data, as shown by the solid lines. This

from

then gives the

dependence of other properties in the material, such as an average cluster size (

) that mimics the measured temperature dependence of the magnetic correlation range in cobalt [

27].

3.4. A Microscopic Model for Supercooled Liquids and the Glass Transition

Although amorphous materials comprise the oldest and most pervasive forms of synthetic substances, there is no widely accepted microscopic model for the mechanisms governing the glass transition. Thus, the glass transition is considered an “unsolved problem in physics” [24,

79,

80]; certainly, the standard Ising model is too simple to fully describe amorphous materials. However, by adding “orthogonal dynamics” to simulations of the Ising model on an equilibrium distribution of region sizes (similar to, but smaller than the clusters used for ferromagnetic materials in section

3.3), a microscopic model is found that mimics at least 25 features measured in supercooled liquids and the glass transition [

57]. It is called the orthogonal Ising model (OIM).

Orthogonal dynamics refers to time steps that separate changes in spin alignment (

from changes in spin energy (

), so that the two relevant conservation laws can be uncorrelated if favored by the system. Energy-conserving changes in

require spins that have no net interaction, e.g. spins 5, 6, or 8 in

Figure 4. Spin 8 (

) because it has no interactions, spins 5 (

) and 6 (

) because they have an equal number of low- and high-energy interactions so that inverting the spin trades the energies without changing the net energy. Meanwhile,

-conserving changes in

come from Kawasaki spin exchange (trading places of two interacting spins), which never changes the net

but often changes the net

, e.g. spins

in

Figure 4. Experimental evidence for orthogonal dynamics comes from observations that conservation of energy and conservation of (angular) momentum often occur on different time scales. One example is in magnetic resonance, where precession rates that change the spin alignment generally occur much faster than spin-lattice relaxation rates that change the energy. Other examples come from non-resonant spectral hole burning [

10,

12,

14], where energy induced by a large-amplitude low-frequency pump oscillation can persist in local degrees of freedom for minutes, or even hours, while changes in dipole alignment often occur much faster. Insight into such separation of time scales may come from analyzing MD simulations [

15], where equilibrium fluctuations often involve potential energy traded back-and-forth between neighboring spatial blocks for several atomic vibrations. Thus, conservation of local energy dominates over the relaxation of energy via slow coupling to distant parts of the sample that serve as the heat bath. Energy localization is found to require anharmonic interactions, presumably needed to scatter the harmonic modes (phonons) and cause the localized anharmonic modes to decouple from the phonons. In any case, orthogonal dynamics separates two main conservation laws allowing them to be independent if favored by the system, or to be correlated when appropriate. For a thermal transition that mimics liquid-glass behavior, the OIM is simulated utilizing the nanocanonical ensemble in 3D by adding Metropolis steps that make or break the interactions between neighboring spins [

57].

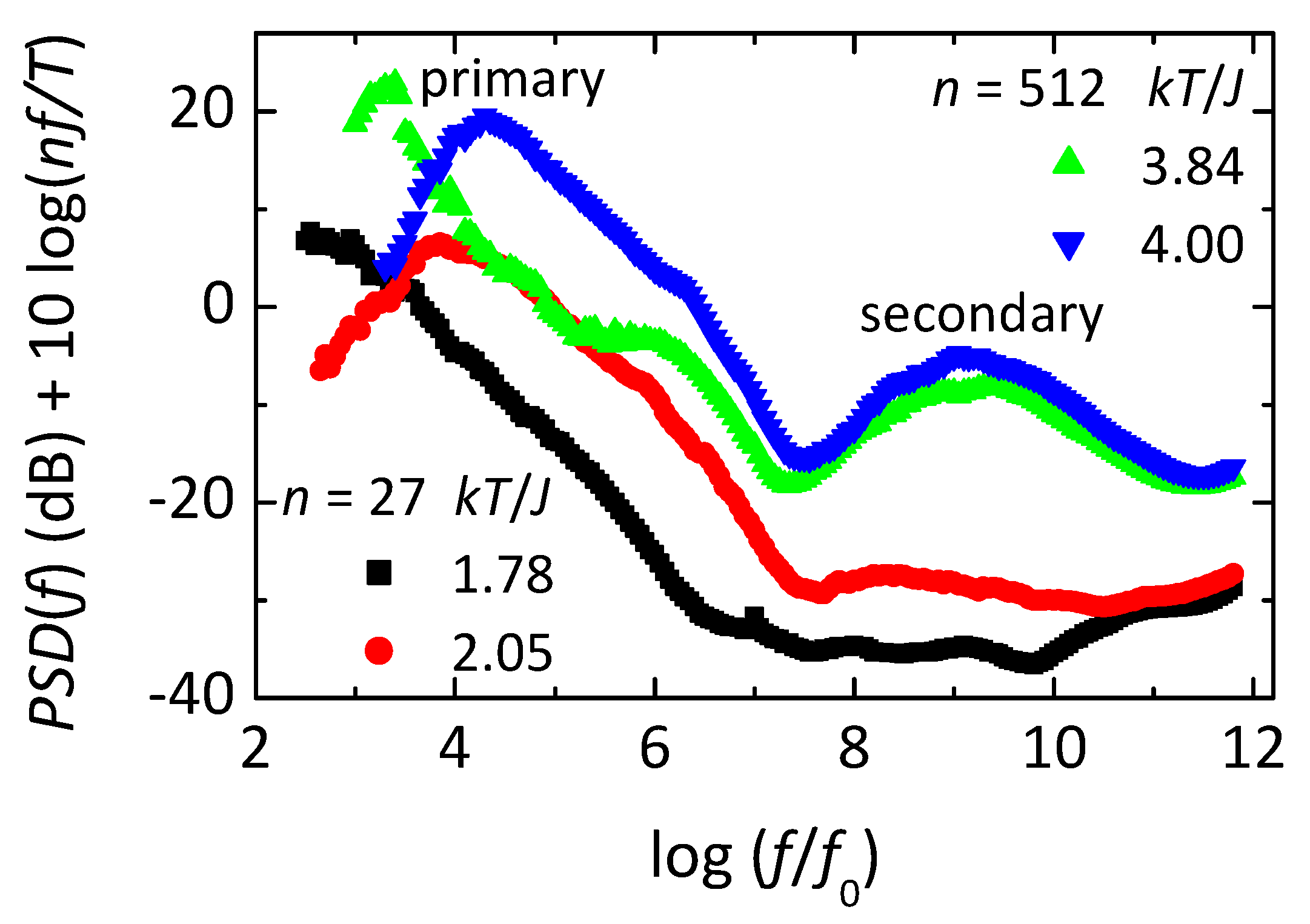

Figure 6 shows frequency-dependent losses deduced from simulations of the OIM. This loss is found from the power spectral density (

PSD, i.e. the magnitude squared of the Fourier transform of time-dependent fluctuations in

) using the fluctuation-dissipation theorem, with the normalization given in the label of the ordinate. The simulations are made on subsystems of two sizes, each at two temperatures, as given in the legends. Note that each loss spectrum from the larger subsystem (

, green at

or blue at

) shows three distinct maxima. The peak at lowest frequency is the primary response, the secondary response is at intermediate frequency, with the microscopic response at the highest frequency. The microscopic response involves inverting individual spins that have no net interaction with their neighbors, e.g. spins 5, 6, or 8 in

Figure 4, hence they invert with each attempt. The primary response comes from net inversions of the entire subsystem, yielding

to

, or vice versa (see inset of

Figure 7A), transiently connecting states that would have broken symmetry if not for finite-size effects. The secondary response comes from normal thermal fluctuations in

and

near one of the two free energy minima at

. Thus, time-dependent changes in a single quantity

in a microscopic Ising-like model yield all three main types of response found in supercooled liquids, but only if orthogonal dynamics is used to separate the three responses. As seen in

Figure 6, the simplicity of the model allows simulations over nearly ten orders of magnitude in frequency using a simple algorithm and relatively short computation times.

Response in the OIM involves changes in

that are orthogonal to, but still influenced by fluctuations in

. Specifically, if the energy of a finite-sized subsystem fluctuates to

from its average value

, using

, a second-order Taylor-series expansion yields the change in entropy:

Here, definitions of temperature and heat capacity are used to give

and

, respectively. The likelihood of such energy fluctuations is given by Boltzmann’s probability,

. Note that standard statistical mechanics is based on this probability applied to an infinite heat bath (subscript

H), with negligible quadratic term and a negative linear term (

) from conservation of energy with the subsystem. Mean-field theory on the Ising model to second order in inverse subsystem size yields an analytic expression for the average energy [

57]:

Notice the similarity between the pre-factor in Eq. (5) and the Curie-Weiss law for magnetism with

the Curie temperature, a consequence of mean-field theory on finite-sized subsystems that can fluctuate [

27]. Furthermore [

32], if this pre-factor is used as an activation energy in the Arrhenius law it yields the Vogel-Fulcher-Tammann (VFT) law, which is a common empirical formula for super-Arrhenius activation of the primary relaxation time (

) in supercooled liquids. However, when a subsystem is in thermal equilibrium, this lowest-order term (

in Eq. (4)) is balanced by the linear term of the heat bath (

). Thus, dynamics in the orthogonal Ising model is governed by energy fluctuations, not activation. Using Eq. (5) in Eq. (4), with

so that

, Boltzmann’s probability yields an expression for primary relaxation times that can be written as [

57]:

Key parameters in Eq. (6) are the curvature coefficient , and prefactor that gives the relaxation time of an infinitely large subsystem (). The temperature-dependent divergence of the exponential argument in Eq. (6) is similar to the VTF law squared, hence the term “VFT2 law” is used for the behavior of Eq. (6).

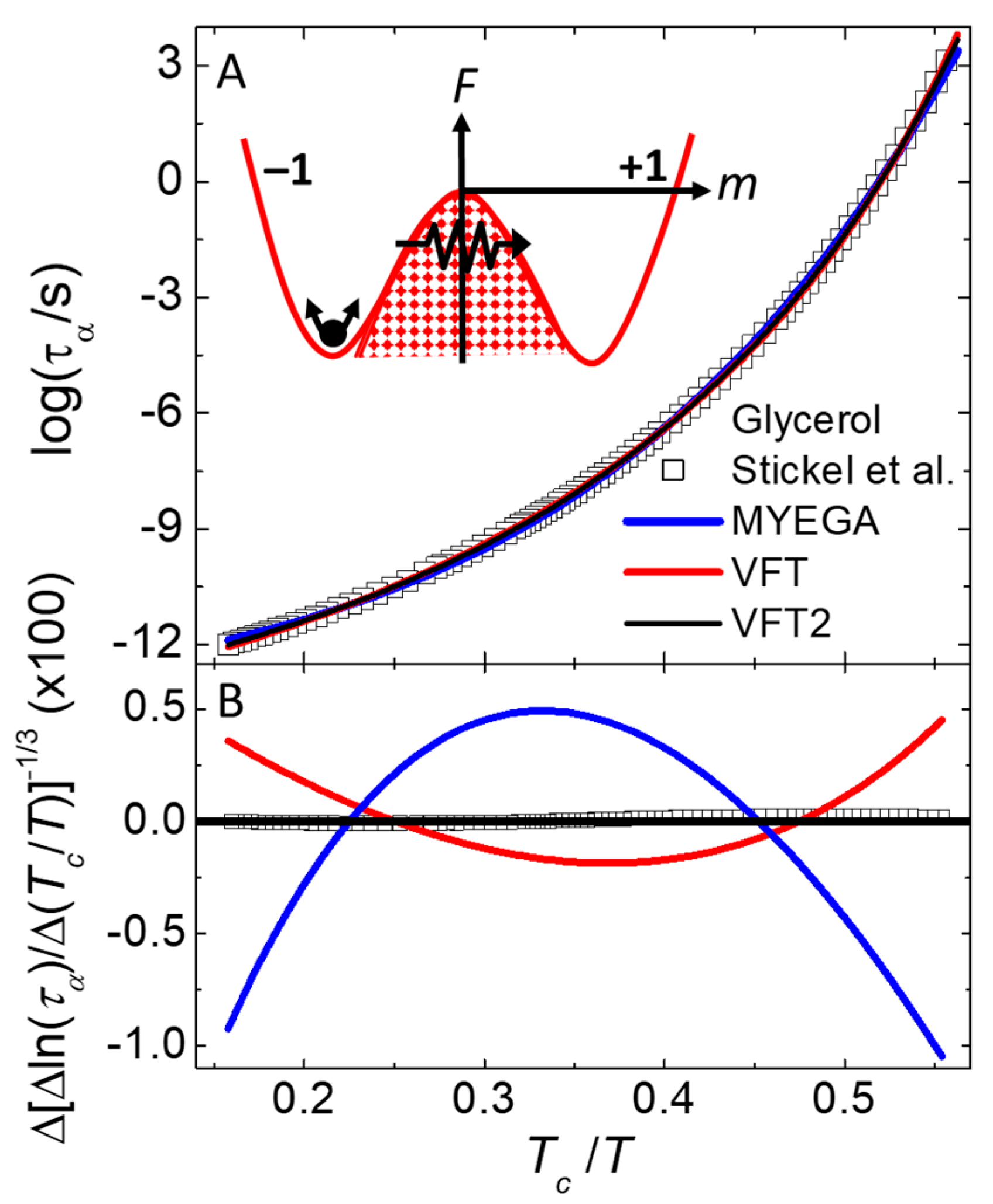

Figure 7 A shows the

dependence of

from models (lines) and measurements [

81,

82] (symbols) of glycerol, a glass-forming liquid. The inset of

Figure 7A shows an interpretation of the response mechanism, described in the next paragraph.

Figure 7B shows the difference between the VFT2 function (solid black line at origin), the measurements (symbols), and the VFT function (red line). Also shown is a fit to the MYEGA function (blue line), where slow dynamics is attributed to a double exponential instead of a finite transition temperature [

83].

Figure 7B is a type of Stickel plot [

81] that utilizes a differential of

as a function of

(given in the ordinate label) that removes

from Eq. (6), then takes the inverse

power to linearize the exponential argument with respect to

. The standard deviation between the measurements and VFT2 behavior of Eq. (6) shown in

Figure 7B is at least an order of magnitude smaller than the other functions that each have one extra adjustable parameter. The inverse size dependence of the effective activation energy (

in Eq. (6)) is similar to the size dependence known to give better agreement with the spectrum of response found in supercooled liquids [

32,

84]. Furthermore, values of

deduced from fitting Eq. (6) to measurements and simulations of

[

57] give good agreement with the sizes of dynamic heterogeneities measured directly by multi-dimensional nuclear magnetic resonance [44-46].

The inset of

Figure 7A is a sketch of a free-energy double-well potential depicting the OIM interpretation of slow responses in amorphous materials. Note that due to the finite size of the subsystems, their free energies can have normal fluctuations represented by the arrows near the bottom of the left-side well, identified as the secondary response in

Figure 6. A key to understanding primary response comes from orthogonality: changes in

never change

. Thus, inverting

from one well to the other cannot come from simple activation over a fixed energy barrier, as evidenced by the rates near the alignment inversion [

57]. Specifically,

increases slowly up the barrier and rapidly down as expected for activated energies, but

has opposite behavior: changing faster while

increases then slower when

decreases. Such non-activated slow response is attributed to energy fluctuations that open an entropy bottleneck, allowing the subsystem to find a pathway through the barrier, as represented by the zigzag path and arrow in the inset of

Figure 7A. Thus, the OIM has complexity even in the relaxation of a single subsystem, from internal degrees of freedom that can alter the height and/or porosity of each barrier, not from activation of a single point in a fixed energy landscape. Additional complexity comes from a stable distribution of subsystem sizes due to the nanocanonical ensemble. In any case, orthogonal dynamics allows the equilibrium fluctuations of a single parameter (

) to exhibit all three types of response typically found in amorphous materials,

Figure 6.

3.5. Maximum Entropy as a Mechanism for 1/f-Like Noise

A general mechanism for 1/

f-like noise comes from adding a local bath to internal subsystems, so that maximum entropy is maintained during reversible fluctuations by each subsystem plus its local bath. In the nanocanonical ensemble, the local bath may come from neighboring subsystems inside the larger system. Various models based on this mechanism exhibit not only 1/

f noise, but also deviations from pure 1/

f behavior that mimic measurements on thin metal films, nanopores, tunnel junctions and qubits [

58,

25]. Furthermore, when orthogonal dynamics is added, a 1D Ising model shows a crossover from 1/

f-like noise to Johnson-Nyquist (white) noise at higher frequencies. Thus, although measurements (by Johnson) and theory (by Nyquist) of white noise were published together in 1927, it has taken nearly 100 years to find a model that simultaneously yields white noise and the 1/

f noise that Johnson first reported in 1925.

Start by considering a system of two non-interacting and distinguishable binary degrees of freedom, e.g. two versions of spin 8 from

Figure 4 which can be either up or down. Let

be the relative alignment, where

and

are the number of up and down spins, respectively. The multiplicities of the spin configurations,

, are

for both spins up,

for both spins down, and

for the two ways that distinguishable spins can have one spin up and the other one down. Using the Boltzmann-Planck expression,

, the entropy of the two-spin system fluctuates up-and-down between

and

. If this system was isolated from its environment, a fluctuation

would violate the second law of thermodynamics. However, the system cannot be isolated because information about

is needed to realize this entropy change. Otherwise, if the spin alignment is not (or cannot) be known,

, the entropy of the system remains constant at

from the

distinct configurations of the two spins. Assume that the spin system and its local bath form a closed system, and that the alignment of the spins is (or can be) sensed by the local bath, e.g. from the magnetic field on the bath. To avoid violating the second law of thermodynamics during reversible fluctuations, entropy from the system must be transferred back-and-forth to the local bath. Specifically, as the entropy of the system fluctuates up-and-down, the entropy of the local bath (

) must fluctuate down-and-up, so that total entropy remains maximized. Models for 1/

f-like noise come from generalizing this idea of maintaining maximum entropy to interacting spins with

.

Figures 8 A-E show all possible configurations of a subsystem containing

spins in a 1D chain. The figures are arranged in order of decreasing relative alignment,

in

Figure 8A to

in

Figure 8E. An

exact expression for alignment entropy (preferrable to using Stirling’s

approximation, especially for small subsystems) comes from the binomial

coefficient for the multiplicity of each alignment:

Note that if the subsystem fluctuates from a configuration with zero net alignment (

Figure 8C) to all spins down (

Figure 8E), its alignment entropy decreases from

to

. Thus, the entropy of the environment must increase an equal amount if violations of the 2

nd law of thermodynamics are to be avoided. Assume that the environment consists of an ideal heat bath of entropy

(that accommodates changes in energy) plus a local bath of entropy

(that accommodates changes in alignment entropy of the subsystem). In nanothermodynamics these baths come from an ensemble of similar subsystems, so that together they form a self-consistent system with total entropy

. During equilibrium fluctuations the total entropy should be stationary with respect to all changes, which can be written as:

Here, the square brackets enclose terms for the entropy of subsystems, with from finite-size effects. Three conditions are implied by Eq. (8). The definition of temperature yields , conservation of energy requires , and maintaining maximum entropy during changes in alignment gives . Note that always involves the finite difference from because derivatives provide a poor approximation to the highly nonlinear changes in entropy during large fluctuations of small subsystems. Furthermore, the maximum entropy () remains the most-probable value for any subsystem that is above the critical temperature in zero external field.

Transition rates and probabilities for thermal fluctuations in energy and alignment are governed by Eq. (8) via the entropies of the baths. For example, the probability that the heat bath has an extra energy above its equilibrium value is . Thus, high-energy states are favored, opposite to the usual Boltzmann’s factor, but necessary for the heat-bath entropy to yield Boltzmann’s factor for subsystems using conservation of energy, . Indeed, it is this heat-bath entropy that is needed for Boltzmann’s factor to give the statistics of systems in known states having no entropy. Similarly, the probability that the local bath has an extra amount of entropy above its equilibrium value is , a term that is absent from standard statistical mechanics where contributions to the 2nd law from configurational entropy are usually neglected.

Transition rates come from applying detailed balance to each bath, , where gives the rate for changes from state to state in the bath. First, for transitions that increase the energy of the heat bath (): . The Metropolis algorithm for fast equilibration rates uses , leaving . Thus, energy of the heat bath tends to increase faster than it decreases, opposite to the usual Metropolis algorithm, but needed for detailed balance of the multiplicities in the heat bath. Indeed, this is the fundamental mechanism for Boltzmann’s factor and using yields the Metropolis algorithm. Similarly, for transitions that increase the entropy of the local bath (): . A Metropolis-like algorithm for fast equilibration rates uses , leaving . Using to convert to the alignment entropy of the subsystem gives , leaving . To reiterate, because of the highly nonlinear nature of the entropy difference of small subsystems, this in the exponent is the total entropy difference from the maximum-entropy configuration, not a differential.

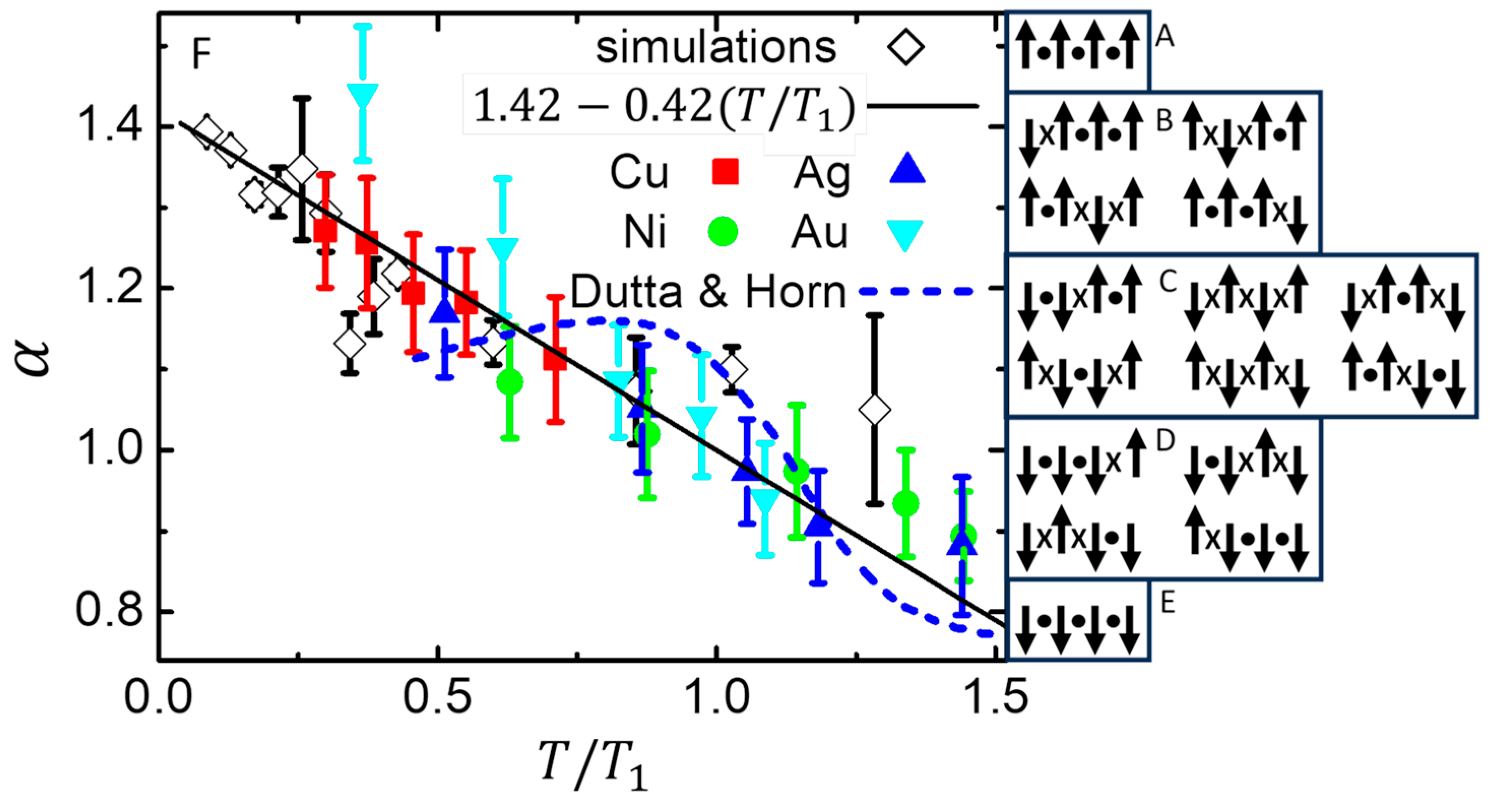

By combining the usual Metropolis algorithm with the analogous

, where

is a random number that is evenly distributed over the interval 0 to 1, simulations of the standard Ising model show 1/

f-like noise. In fact, using an exponent

to characterize the power-spectral density,

, yields 1/

f-like noise with

that is often temperature dependent.

Figure 8 F shows a comparison of

from measurements of noise from thin metal films [

85] (solid symbols), a random fluctuation model [

86] (dashed line), and MC simulations that maintain maximum entropy [

58] (open symbols with error bars) with their weighted linear regression (solid line). The simulations are of the standard 3D Ising model on a simple-cubic lattice, with subsystems containing

spins. The measurements and simulations shown in

Figure 8F are normalized by the temperature

that gives

, with no other adjustable parameters.

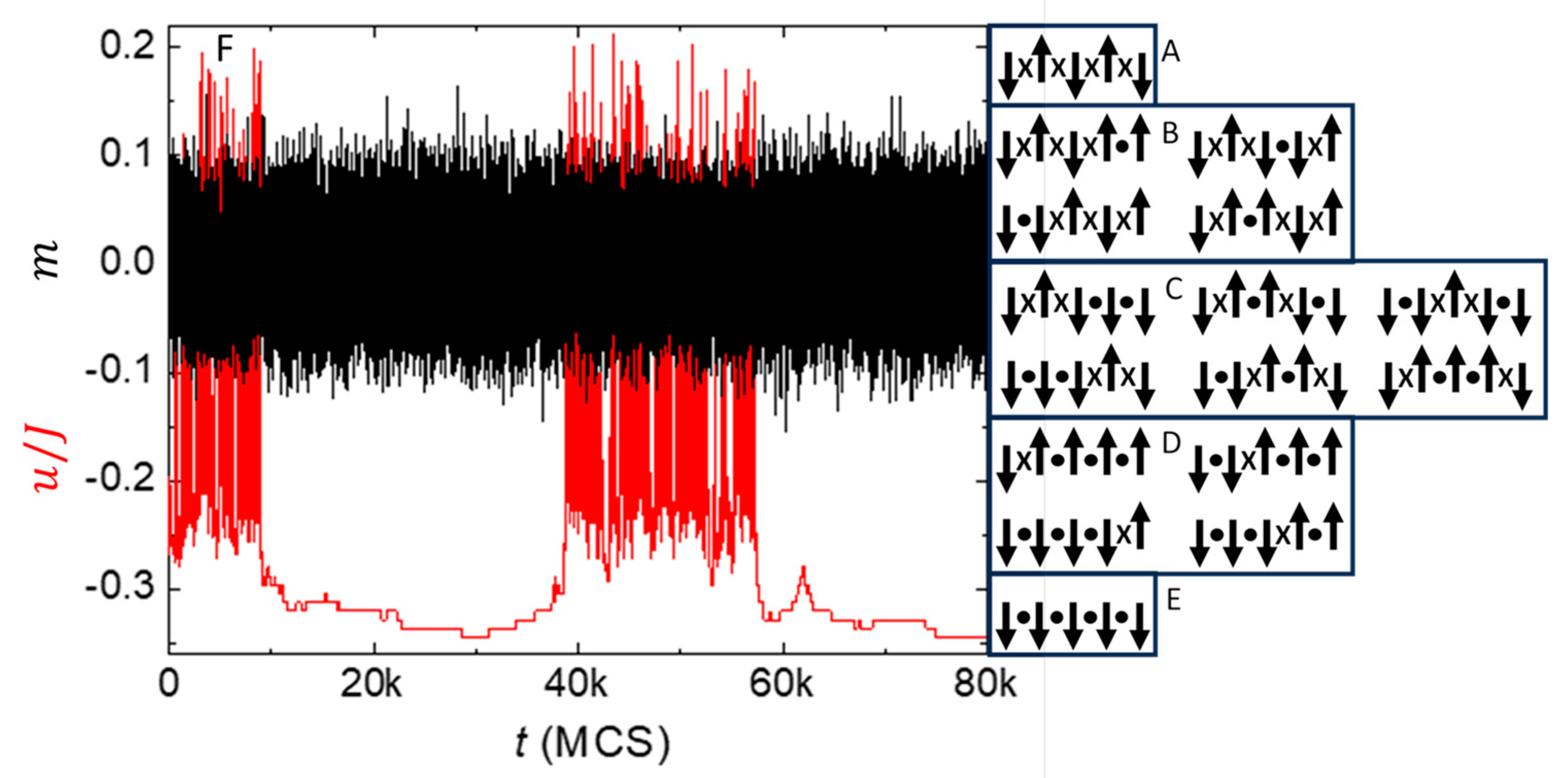

Figure 9A–E show sketches of a 1D chain of Ising spins, similar to those in

Figure 8A–E. However, now there are

interactions (5 spins) arranged in order of decreasing energy per interaction:

from

(A) to

(E). Here

is the number of high-energy interactions (

) and

is the number of low-energy interactions (

). The number of configurations in each figure represents the multiplicity of each energy (mirror-image configurations with the left-most spin up are not shown), yielding a multiplicity that is twice the argument of the logarithm in Eq. (7) with

replaced by

. Thus in Eq. (8), if changes in alignment entropy (

) are replaced by changes in energy multiplicity (

), maintaining maximum entropy gives 1/

f-like noise in the energy fluctuations. Then, using orthogonal dynamics to allow decorrelated conservation laws yields fluctuations in alignment with an amplitude that is modulated by the energy. Specifically,

Figure 9A (

) and 9B (

) have a narrow range of alignments,

(recall, configurations with the left-most spin up are not shown);

Figure 9C (

) and 9D (

) have an intermediate range of alignments,

; whereas

Figure 9E (

) has the broadest range of alignments,

.

Figure 9F shows a time series from a simulation of this model with

(one contribution to the PSD in

Figure 10) [

25]. Note how the fluctuations in alignment (black) tend to occur at a consistently fast rate, indicative of white noise, while the fluctuations in energy (red) exhibit jumps and steps over a wide range of time scales, indicative of 1/

f-like noise, also known as random telegraph noise or burst noise because if its appearance. Careful inspection reveals how the amplitude of fluctuations in

is modulated by the value of

. Specifically, fluctuations in

tend to increase when

, similar to how the range of alignments in

Figure 9A–E increases with decreasing energy. Thus, normal fluctuations in alignment are dominated by white noise, with 1/

f-like noise in their second spectrum, consistent with measurements of thermal noise in equilibrium (non-driven) samples [

87,

88]. All these complexities come from a simple system based on the Ising model, with physically reasonable assumptions about orthogonal dynamics plus strict adherence to the 2

nd law of thermodynamics to maintain maximum entropy. Thus, the single parameter (

) can exhibit both white noise and 1/

f-like noise, with no need for any separate distributions.

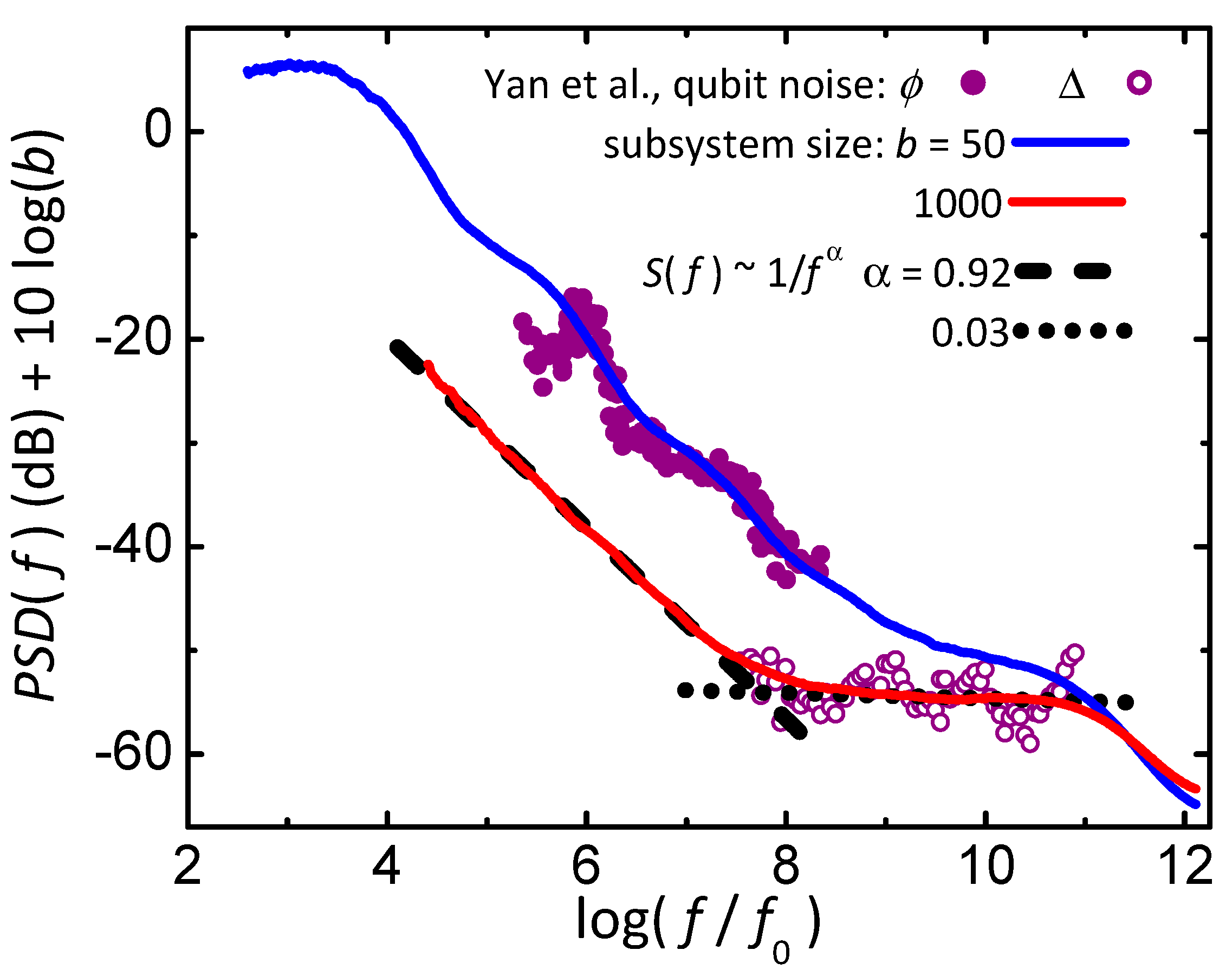

Figure 10 shows power spectral densities from simulations [

25] (lines) and measurements [

89] (symbols). The simulations are from the 1D Ising model with orthogonal dynamics and a local bath that maintains maximum entropy. Each PSD comes from

as a function of time (e.g. black line in

Figure 9F) by taking the magnitude squared of the Fourier transform of the time series. The PSD from simulations of smaller subsystems, e.g.

interactions (blue), show 1/

f-like behavior with bumps characteristic of individual Lorentzians, similar to the PSD from measurements of the flux noise (

) in a qubit (filled circles). The PSD of larger subsystems, e.g.

interactions (red), show white noise at higher frequencies (dotted line) that crosses over to 1/

f-like noise at lower frequencies with an exponent of

(dashed line). Most measurements exhibiting 1/

f-like noise show a similar crossover, including noise from the effective tunnel-coupling (

) of a qubit (open circles). Thus, this single model provides a general mechanism for several features found in the measured noise from many systems.

Another interesting aspect of orthogonal dynamics is that it can make mean-field theory exact for the 1D Ising model. In general, when averaged over all energies having a given alignment (

), the net energy of

spins in a 1D chain (

interactions) is:

. Specifically, for the subsystems having

spins shown in

Figure 8C–E: if

(

Figure 8C) the average energy of the six configurations is

; if

(

Figure 8D) the average energy of the four configurations is

; and if

(

Figure 8E) the energy of the single configuration is

. All agree exactly with the mean-field expression

. Indeed, if conservation of alignment in orthogonal dynamics persists long enough for the energy of a subsystem to exchange freely within its local environment before coupling to the heat bath, mean-field theory becomes exact in 1D due to averaging of all configurations having a given alignment. Thus, mean-field theory can be exact in 4+ dimensions due to spatial averaging, and in 1D due to time averaging. Although simple expressions have not been found for the Ising model in 2D and 3D, the accuracy of mean-field theory is generally improved when energies are time averaged using orthogonal dynamics. Such time averaging could also explain the good agreement between the mean-field cluster model and measured behavior of ferromagnetic materials shown in

Figure 5.

Time-averaging of 1D systems with orthogonal dynamics may also explain why mean-field theory mimics the measured properties of various linear-chain molecules, including biopolymers [

90,

91,

92]. Indeed, we speculate that the behavior of linear-chain systems can be more-easily (and perhaps more-accurately) modelled by mean-field theory on short-segment subsystems, with a distribution of breaks in the exchange interaction between subsystems. Then, simple linear chains will have a stable equilibrium distribution of breaks, given by Eq. (2), while more-complex linear chains such as proteins can be modelled by mean-field theory on segment lengths that are shifted away from the equilibrium distribution by specific sequences of amino acids.

3.6. The Arrow of Time in Simple Systems

All basic laws of physics encountered in our daily lives are reversible except the second law of thermodynamics [

93,

94,

95,

96,

97]. Indeed, it is this 2

nd law that complicates the reconstruction of a raw egg that has fallen onto a hard surface, and prevents us from going back in time to stop it from falling. Sometimes it is said that there can be violations of the 2

nd law [

98,

99,

100], especially in small and simple systems, implying that it is a statistical rule of thumb valid only for large and complex systems. Further, it is often said that the explanation for 2

nd-law behavior in complex systems comes from assuming that entropy increases for the vast majority of initial states, while entropy will decrease for some initial states. These assumptions have been tested and found to be false, at least for a simple (Creutz-like) model where the exact entropy can be calculated at every step for both the system and its heat bath [

16]. Related behavior is found in molecular dynamics (MD) simulations of various models, which are based on Newtonian dynamics and hence intrinsically reversible [

15]. Such deviations from standard statistical mechanics can be traced to the inherent reversibility and energy localization common to these simulations.

Simulations of the Creutz-like model and MD simulations corroborate a paradox in the theoretical properties of entropy [

20], where systems with reversible dynamics cannot obey the 2

nd law of thermodynamics. Specifically, entropy remains constant for classical systems due to Liouville’s theorem, and for quantum systems due to Schrödinger’s equation. According to [

20], the only way to resolve this paradox and yield 2

nd-law behavior in the dynamics is to utilize intrinsically irreversible (Markovian) steps, such as the master equation or Metropolis algorithm. Indeed, the simple Creutz-like model yields maximum entropy and 2

nd-law behavior only if there is an intrinsically irreversible step [

16].

The original goal of the Creutz model [

101,

102] was to utilize an explicit heat bath to avoid random numbers for efficient simulation of the 2D Ising model. For tests of the 2

nd law the Creutz-like model starts with a 1D ring of

Ising-like spins, similar to the 1D chain in

Figure 4 but with periodic boundary conditions (which facilitates calculations of entropy) and with

. The heat bath consists of

Einstein oscillators, one oscillator per spin, but the spins often couple to distant oscillators to more-closely mimic an ideal heat bath. Each oscillator has an infinite number of equally spaced energy levels,

, with

a non-negative integer (

) so that each oscillator acts as source of kinetic energy (“

ke source”). The crucial and novel ingredient for the model to show the arrow of time is to include a thermal distribution of intermittent breaks, consistent with Eq. (2) and

Figure 4, and needed for stable equilibrium of the 1D Ising model.

The Creutz-like model is a type of cellular automaton [

103,

104]. Simulations of the model can be thought of as a type of microcanonical Monte-Carlo (MC) algorithm, where total energy is exactly conserved at every step. Dynamics in the model comes from choosing a site, then attempting to either flip the spin or change the interaction break-state for that site by exchanging energy with a

ke source. If the change does not increase the potential energy (

pe), it happens every attempt with any excess energy transferred to the

ke source. If the change would increase the

pe, it happens if and only if the

ke source has sufficient energy, i.e. if the

ke source can supply the potential energy and remain nonnegative. The entropy of the spins is given by the logarithm of their multiplicity, essentially the logarithm of the pre-factor to the exponent in the summand of Eq. (B7) (or Eq. (1) in [

16]) comprised of the trinomial coefficient times

. The entropy of the

ke bath is given by the logarithm of a binomial coefficient for the number of ways that the total kinetic energy from all

ke sources can be shared among the

Einstein oscillators ([

48] or Eq. (3) in [

16]).

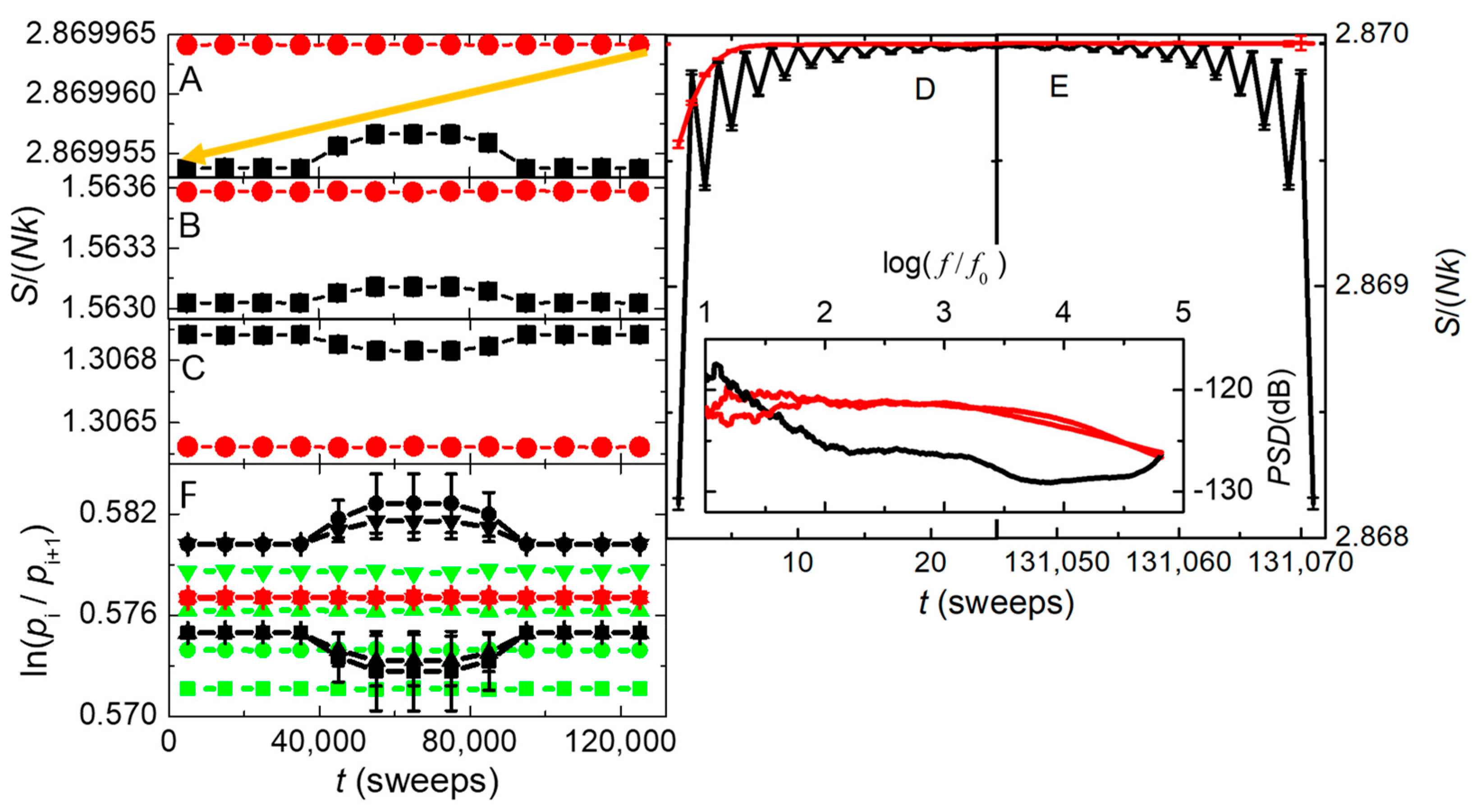

The Creutz-like model is simulated on systems of size

to

using various types of dynamics. Line color in

Figure 11 identifies dynamics that is reversible (black) or irreversible (red or green). All black and red lines in

Figure 11 come from simulations of large systems in the thermodynamic limit,

, where

marks the crossover between behaviors, whereas the green lines in

Figure 11F, from

, show significant finite-size effects. In all cases the simulations have a fixed total energy (

) shared among all spins and

ke sources. For reversible dynamics, each simulation sweep utilizes three randomly chosen (but fixed) sequences. One sequence gives the order of choosing each spin and its interaction. The second sequence gives the

ke source for each spin-flip attempt. The third sequence gives the

ke source for each break-state change attempt. Reversing the dynamics involves reversing every sequence. Such simulations are non-Markovian, having a long-term (but necessary) memory for the reversal. Irreversible dynamics is Markovian, utilizing a new random number for every choice of spin, interaction, and

ke source.

Figure 11A–E show the time dependence of the entropies per spin,

. Specifically:

Figure 11C

shows the entropies of the spin system,

Figure 11B the entropies of the

ke sources, and

Figure 11A the total entropies (their sum).

Even when the break-state attempt rate is reduced to 1/10 the spin-flip attempt

rate (middle third of each simulation), with error bars visible if larger than

the symbol size,

Figure 11A shows that

the total entropy from irreversible dynamics is several (> 4) standard

deviations higher than the entropy of reversible dynamics.

Figure 11B mimics this difference, while

Figure 11C mirrors the difference, showing that

the increase in entropy is dominated by the

ke bath. Thus, standard MC

simulations of the Ising model will never show this entropy increase with

intrinsic randomness, not only because the Metropolis algorithm is always

Markovian, but also because the algorithm assumes essentially instantaneous

coupling to an effectively infinite heat bath without explicit details about

the coupling and how energy is conserved.

Figure 11F shows the time dependence of the logarithm of the ratio of occupation probabilities from neighboring energy levels in the

ke bath,

. Symbol shape identifies the levels:

(squares),

(circles),

(up triangles), and

(down triangles). Therefore, if Boltzmann’s factor applies to the statistics of the Einstein oscillators,

Figure 11F gives the inverse of an effective temperature for neighboring energy levels,

. Symbol color identifies the system size and type of dynamics. Green symbols show four distinct values of

. Thus, even with irreversible dynamics, Boltzmann’s factor fails to describe the

ke bath of this system because it is not in the thermodynamic limit (

), attributable to insufficient total energy in the microcanonical simulation to thermally occupy the higher levels. Black symbols show two (or more) distinct values of

. Although this system is in the thermodynamic limit (

), again Boltzmann’s factor fails to describe the

ke bath, but now because the dynamics is reversible. In contrast, overlapping red symbols show that irreversible dynamics in the thermodynamic limit (

) yields a single temperature that is consistent with Boltzmann’s factor.

The right-hand panels in

Figure 11 show the total entropy per particle at the start (D) and end (E) of the simulations. The data are the same as in

Figure 11A, but on an expanded time scale without time averaging. The red line in (D), from irreversible dynamics, shows that the initial entropy rises rapidly to a maximum value, then stays at this maximum to the very end of the simulation shown in (E). By contrast, the black line in (D), from reversible dynamics, oscillates with every sweep while evolving towards an entropy that is visibly less than the irreversible maximum entropy. Then, in (E) the system returns to its low-entropy initial state, exactly reversing every step in the process, even after nearly

steps. This return to the initial state directly demonstrates Loschmidt’s paradox for entropy that will decrease if reversible dynamics is inverted at a midpoint in time. The inset gives the power spectral densities of these simulations. Note how irreversible dynamics (red) shows a broad overdamped spectrum, with white noise below a characteristic frequency of

. In contrast, reversible dynamics (black) has a sharp peak at the maximum frequency, corresponding to the oscillations seen in the main parts of these figures.

Insight into why systems having reversible dynamics deviate from standard statistical mechanics comes from details in the Creutz-like model. Recall that this model shows a difference between reversible and irreversible dynamics only if simulated with interactions that have intermittent breaks. Then, at least on short times with reversible dynamics, the breaks in the 1D system yield isolated segments of interacting spins that couple only to their own set of

ke sources. Thus, energy tends to be localized within these subsystems, oscillating back-and-forth between the spins and their

ke sources, causing the oscillations seen in

Figure 11D,E. In other words, due to intermittent interaction breaks, conservation of local energy overwhelms the slow transfer of energy to the rest of the system that serves as the large heat bath. However, when there is intrinsic randomness in the choice of

ke sources, energy disperses quickly, without oscillations. Oscillations in energy also appear in molecular dynamics (MD) simulations of various models [

15]. Like the reversible Creutz-like model with breaks, the oscillations can be attributed to inherently reversible Newtonian dynamics with anharmonic interactions that localize energy. Although these MD systems are too complex to yield entropy directly, their failure to follow standard statistical mechanics comes from excess fluctuations in energy that yield multiple local temperatures, similar to the black and green symbols in

Figure 11F,

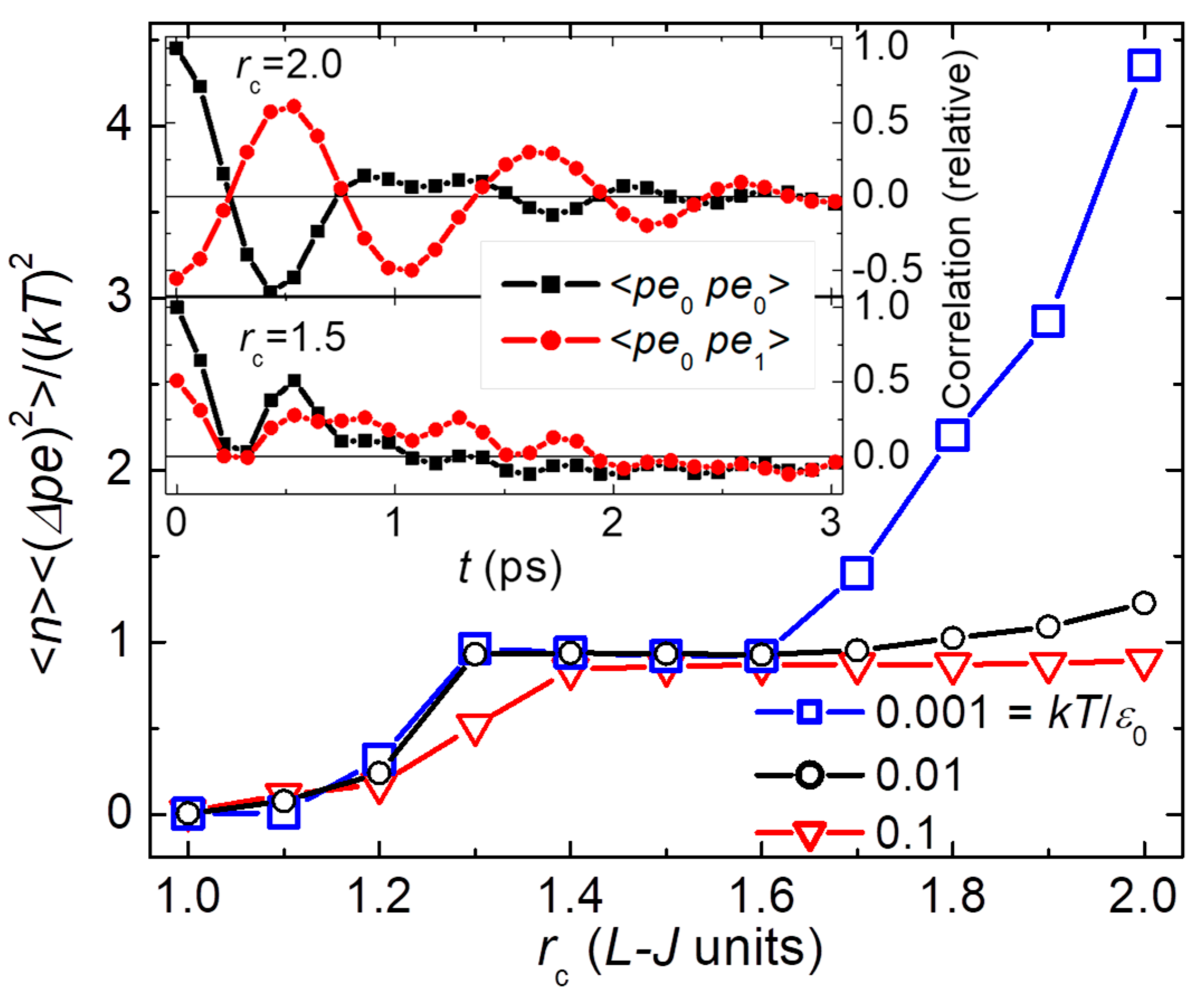

Figure 12 shows results from MD simulations of the Lennard-Jones (L-J) model in its crystalline phase at low

, adapted from Ref [

15]. Symbol shape and color identify the simulation

, as given in the legend. (L-J units can be converted to physical quantities using values for specific substances, e.g. argon has

K.) Simulations are made on a 3D system having 48 unit-cells on each side, yielding a total of

atoms. Thus, the system forms a large heat bath for small internal blocks having

atoms. (Blocks are subvolumes, without the interaction breaks needed for independently fluctuating subsystems. Indeed, neighboring blocks have correlated fluctuations as shown by the symbols in the insets.) The main part of

Figure 12 shows averaged and normalized values of the

pe fluctuations for blocks of

atoms as a function of the cutoff radius (

) for the L-J interaction. Using

as the potential energy per particle, standard statistical mechanics predicts

. Here,

is expected for the specific heat from the equipartition theorem at low

when the lattice is highly harmonic. Averaging the data from

with

yields

. The overlapping symbols show the expected constancy with

and

, but

due to harmonic modes that correlate the dynamics in neighboring blocks (see inset at

). In contrast,

becomes strongly

-dependent for

, diverging as

when

.

Quantitative agreement with

as

is obtained by removing the 2

nd-neighbor interaction from Boltzmann’s factor [

15]. These deviations from Boltzmann’s factor can be attributed to conservation of local energy overwhelming the weak and slow coupling to distant blocks, due to energy that is localized by the intrinsic anharmonicity between 2

nd-neighbor atoms that interact only when

. This interpretation is supported by the behavior of

pe correlations as a function of time shown in the two insets of

Figure 12. The insets show autocorrelations of

pe fluctuations within each block (black squares) and

pe correlations between each central block and its six nearest neighbors (red circles) for two different values of

. Note that in the purely harmonic crystal (

, lower inset)

pe fluctuations between nearest-neighbor blocks (red) are positively correlated. However, when there are second-neighbor interactions (

, upper inset) neighboring blocks are strongly anticorrelated. In other words, when interactions extend beyond nearest-neighbor particles (upper inset), most of the

pe in the fluctuation of each block (black squares) comes from a local bath comprised of nearby blocks (red circles), with negligible

coming from the large heat bath of distant blocks. No wonder Boltzmann’s factor fails to describe these fluctuations in

pe that are localized to nearby blocks, effectively isolated from the heat bath. By contrast, in the purely harmonic lattice (lower inset), positive correlations are due to the dominance of plane-wave excitations that are positively correlated between neighboring blocks. These plane waves provide a uniform heat bath for the localized fluctuations, but only if the interactions are robustly harmonic. Similar deviations from Boltzmann’s factor are found from MD simulations of other models having anharmonic interactions. In general, any system having realistic interactions and reversible dynamics will deviate from Boltzmann’s factor, at least when observed closely enough.

Figure 11 and

Figure 12 show that computer simulations of various systems deviate significantly from standard statistical mechanics, but only if the dynamics is inherently reversible with energy fluctuations that are localized. Simple, intrinsically irreversible steps are sufficient for the Creutz-like model to yield maximum entropy and 2

nd-law behavior. Common thermostats for MD simulations, such as the Nose-Hoover algorithm, are too weak to overcome conservation of local energy that causes deviations from Boltzmann’s factor [

15]. It is speculated [

16] that in real systems, intrinsically irreversible dynamics may come from the quantum measurement process. Applying this idea to the Creutz-like model, when the spin system couples to its heat bath the specific amount of energy in the randomly chosen

ke source is unknown until the coupling occurs, consistent with the calculation of entropy from the total energy that is shared among all

ke sources. Future work is needed to explore this speculation, and whether intrinsically irreversible dynamics and a stable distribution of heterogeneous interactions can be added to MD simulations to improve agreement with standard statistical mechanics, and to measured behavior.